Abstract

Tourism management plays an important role in the context of Smart Cities. In this work, we have used thermal cameras for the development of an Object Detection solution in pedestrian areas. The solution can classify people, bikes, strollers, and count people in Real-Time by using telephoto and wide-angle thermal cameras, in hot squares where there is a relevant number of people passing by. This work has improved FASTER-R-CNN and YOLOv5 architectures with new data sets and fine-tuning approaches to enhance mean average precision and flexibility whether compared to state of the art solutions. Both top-down and bottom-up training adaptation approaches have been assessed in order to demonstrate that the proposed bottom-up approach can provide better results. Results have overcome the state-of-the-art in terms of mean Average Precision in counting (i) for relevant number of people in the scene (removing the limitation of previous state-of-the-art solutions that were set to provide good precision up to 10 people) and (ii) in terms of flexibility with respect to different kinds of camera and resolutions. The resulting model can produce results also when executed on thermal camera and in Real-Time on industrial PC of mid-level. The proposed solution has been developed and validated in the framework of the Herit-Data EC project and it has exploited the Snap4City platform for the final collection of data results, monitoring and their publication on real time dashboards.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Undoubtedly tourism is a vital component for many cities and yet its management is a difficult task, with many problems, like overcrowded situations, getting in the way and bringing forth lesser appreciation of any touristic site experience. Thanks to the development of modern cities there are plenty of possible solutions in the context of Smart Cities based on the use of Big Data and IoT Devices (Internet of Things) to acquire useful information on city conditions/context, and tourists’ behaviour within the city. People detection and counting are the most interesting features for both security and touristic monitoring in hot destinations and sites of interest around the world, as in malls, stadiums, theatres, etc., to provide support to decision makers. People counting can be very useful for any detection of critical conditions (early warning), for security purposes and for support to decisions on cleaning activities. Detection and counting of people is a needed instrument for cultural sites’ management, and any adopted solution has to respect the GDPR (General Data Protection Regulation) [17] and more generally privacy issues.

Italy is one of the countries having a large number of hot destinations, such as: Florence, Venice, Rome, Milan, etc. In some cases, municipalities drew the line somewhere, to keep an acceptable quality of services and experiences for each and every city user, including tourists. This was performed by putting limits for instance on the number of people attending the main city squares and areas. Limitations are typically imposed by tripod gates, tickets, and other physical/invasive solutions reducing any free flow of and it may cause difficulties in evacuation cases. There are nonintrusive solutions based on different technologies, which allows to detect and count the number of people in specific areas of interest. For example, IoT sensors-based solutions as PaxCounters are widely used (Wi-Fi sniffers [3], Laser counting, infrared counters, etc. [41]) and yet in some scenarios, like the one of major squares, such means could be difficult to exploit, as most of them have limited range capabilities in counting in wide ranges of conditions. In any controlled conditions, such as fairs, festivals, museums, etc., using wearable tags, to be assigned to a significant number of attendees, can be a viable solution to understand how people move, how much time they stay in each room, etc. On the other hand, these solutions are invasive and quite expensive. Alternative solutions are based on video cameras, which allow to detect, classify, count and track people [29] by Computer Vision and artificial intelligence, AI. This is a field where AI analyzes visual data and provides support to decision-makers, with hints on scene understanding for both environment and situation [40]. Governments and companies are investing in security networks hundreds of millions: more and more surveillance cameras are watching the world, according to the report from the industry researcher IHS Markit (https://www.wsj.com/articles/a-billion-surveillance-cameras-forecast-to-be-watching-within-two-years-11575565402). Most of these solutions have strong applications in the context of security and surveillance where GDPR issues are not so binding. On the contrary, when it comes to on-street people counting, RGB cameras are not very much appreciated by municipalities for their difficulties in passing GDPR compliance assessment.

An emerging trade-off consists in using thermal cameras for both detection and counting purposes. Thermal cameras are much more acceptable in on-road counting, since they do not allow any face recognition, whereas RGB cameras do. Thermal cameras are more expensive than RGB cameras, but, as to people counting, they have the advantage to be (i) non-invasive privacy compliant, and (ii) capable to work well even without lighting. As to thermal cameras, there are object detection algorithms that could be used to elaborate video stream images, so as to detect the presence of particular types of objects and their position in the image [21, 27, 28]. These algorithms provide relevant limitations regarding when it comes to counting, as discussed in next subsection. This is the reason preventing their usage for the described purpose. Therefore, our focus has been on solving such pending problems about the usage of thermal cameras for people detection and counting in dense conditions.

1.1 Related works

In the framework of people flow analysis for tourism management, Multiclass Object Detection and People Counting are fundamental tasks to provide support to decision-makers. In most cases, the task is managed by using Computer Vision techniques using colour images. They could be a bit of a problem for privacy issues, and same for the procedure to get the GDPR approval. The related work discussed on this section is focused on thermal camera and it is summarized in Table 1.

Multiclass Object Detection aims to determine the bounding boxes of the elements in an image and their classification. For this problem, Computer Vision solutions at the state-of-the-art are primarily based on RGB images [4, 23, 25, 45], or on thermal images [21, 27, 28], and in some cases by using both colour and thermal images [36].

In more details, Jia, Zhu et al. [21], presented the LLVIP dataset including street images in RGB and thermal formats that can be used for different purposes. In [21], authors wrote a section relevant to the problem of Pedestrian Detection. To this end, they fine-tuned the pre-trained YOLOv5 model [22] on COCO dataset [31] by using the infrared data from LLVIP. The solution achieved a mean Average Precision (mAP) at the Intersection over Union (IoU) threshold of 0.5 (mAP_0.5) of 0.965 on the thermal images, compared to the 0.908 of the corresponding RGB images. Krišto et al., in [28], proposed a solution for person detection and surveillance by using thermal cameras and taking into account also the effects of weather conditions. In that context, a custom dataset has been created with videos acquired during winter in different weather conditions (clear weather, rain, fog), during night and at different distances from the camera (ranging from 30 m to 215 m, using YOLOv3). This solution achieved a mAP_0.5 of 0.87. In [37], a YOLOv3 model has been trained to detect both Human and Nonhuman objects (e.g., dogs) in thermal images. In this case, they achieved a mAP_0.5 score of 0.9798, thus confirming a possible exploitation of the solution for any automatic monitoring of protected objects and areas. Kowalsky et at., in [27], compared different state-of-the-art Object Detection models to detect people and inflatable boats from a distance of 50-200 m, thanks to thermal images. In terms of performance, the best model turned out to be Faster R-CNN (Region based Convolutional Neural Network) with ResNet101. On the other hand, in terms of processing time, YOLOv3 was significantly faster and achieved a Detection Rate (DR) with an IoU threshold set at 0.7 (DR_0.7) of 65%. Goel et al., focused on the problem of pedestrian detection [18]. The dataset used was the Thermal OSU Pedestrian Dataset from OTCBVS Benchmark Database [10]. Best results were obtained by using a Faster R-CNN, thus demonstrating the solution validity in multiple illumination conditions depending on weather (Dense Cloudy, Light Rain, Partly Cloudy, Haze, Sunny). Multiclass Object Detection has an important role in advanced driver assistance systems (ADAS) and autonomous driving applications. In [8], Dai et al., proposed TIRNet a deep neural network architecture based on convolutional layers to detect cars, pedestrians, cyclists, buses and trucks. Overall, the mAP_0.5 over all the classes for the proposed dataset achieved 0.7485 and, if considering only the pedestrian class, mAP_0.5 = 0.8047. On the KAIST dataset [7], the TIRNet achieved a mAP_0.5 = 0.5993. Other works on Multiclass Object Detection in autonomous driving are Kera et al., [24] and Munir et al., [35]. Here authors used a self-supervised technique to learn enhanced feature representation by using unlabelled data and a multi-scale encoder-decoder transformer network; the latter could exploit these enhanced features embedding to develop a robust thermal image object detector. In this latter case, the proposed approach achieved over all the classes on the KAIST dataset a mAP_0.5 = 0.7322 and on the FLIR-ADAS dataset [14] mAP_0.5 = 0.7757. Kera et al., in [24], proposed an EfficientNet solution with a weighted bidirectional feature pyramid network, achieving a mAP_0.5 = 0.773 on the FLIR-ADAS. Li et al., in [30], have based their solution on YOLOv5, improving the state-of-the-art performance as to the problem of Object Detection in the two datasets FLIR-ADAS and KAIST, achieving a mAP_0.5 of 0.835 and 0.983, respectively.

When the goal is just counting people in the scene, the state-of-the-art primarily presents detection-based approaches [13, 15, 33, 43]. These systems first detect people on images and then count them, such as in [38]. These systems are in most cases based on classifiers trained on the recognition of a whole body or a part of it (for example the head, which resulted to be in most cases less precise than body detection). An example, is based on YOLOv3 classifier as presented in [34], obtaining a classification accuracy of 96.1% on the INRIA dataset [9] (uncrowded urban contexts) and 82.1% on the Shanghai Tech dataset [46] (urban contexts with some crowded scenes). Detection-based approaches can be used for people counting and are also widely exploited in tracking systems as in [2], [5, 12, 39, 47]. Thermal cameras have been also used for the analysis of bio samples, where some elements may present specific thermal characteristics [1].

1.2 Article aims and contributions

In this paper, we have focused on problems related to people detection and counting in cultural heritage locations, which are crowded with tourists such as: Florence in Italy, Valencia in Spain, Pont du Gard in France, Dubrovnik in Croatia, etc. These places have specific city squares (located in strictly pedestrian areas) which attract high numbers of tourists, almost at any time of the day. Therefore, the proposed solution has dealt with the issue of people detection (classification) and counting; if compared with other solutions, it has overcome the state-of-the-art solutions on three main goals, and it could provide higher:

-

mean average precision for detection and counting in crowded conditions, detection to identify/count: people, bikes/motorbikes, strollers/carts;

-

flexibility in terms of counting range where the relevant mean average precision can be obtained over 10 people, which is a limitation of state-of-the-art solutions, as highlighted in the paper;

-

flexibility with respect to changing different kinds of thermal camera lenses: from telephoto to wide-angle, without losing in terms of mean average precision.

The proposed solution exploited the YOLOv5 [22] and LLVIP [21] with a set of tuning approaches (transfer learning) to improve the mean average precision and the flexibility of previous solutions in the state-of-the-art. To this end, we explored both top-down and bottom-up training adaptation approaches and could demonstrate that bottom-up approach can provide best results according to the above-mentioned objectives in terms of performance and flexibility.

In addition, the solution has been implemented to obtain Real-Time execution on (i) mid-level industrial PC capable to perform multiple Python stream processing (which allows to use the solution connected to any RTSP stream of thermal cameras), (ii) board of AXIS thermal cameras. The results of people detection processes can be used to track the number of objects of some specific classes of interest, and they can be integrated in monitoring dashboards to become a useful tool for decision-makers. The proposed solution has been tested and validated to detect people (pedestrians, bikes/motorbikes, strollers/carts) in strictly pedestrian areas, which is the typical case in cultural cities in Europe. And more in particular in Piazza Della Signoria in Florence, Italy, the city hall square of Florence, Italy, which is a city attracting about 15 million of tourists per year. Solutions have been developed and validated in the context of the Herit-Data Interreg European Commission project [20] which aims to identify innovative solutions to monitor and manage the impact of tourism on cultural and natural heritage sites, with the support of new Big Data technologies. The solution has been implemented by exploiting the Snap4City framework and platform which is a fully open-source solution [https://www.snap4city.org], [16, 19].

1.3 Article structure

The paper structure is as follows. Section 2 describes both problem and data according to the operating conditions, therefore data which can be obtained by thermal cameras of different kinds. On these grounds, an assessment of the state-of-the-art solutions (based on YOLO and Faster-R-CNN) is provided to stress the identified limitations which make them unsuitable in counting and classifying high number of people via thermal cameras. In Section 4, the identified solution to enforce flexibility and high mean average precision in counting high number of people is presented. Section 5 presents the usage of a bottom-up training adaptation approach (transfer learning) which additionally has improved the mean average precision and flexibility of the early YOLO with LLVIP training. In Section 6, the deployment architecture, which can be used to adopt the solution for Real-Time detection and counting of people, is described. Conclusions are drawn in Section 6.

2 Problem and data definition

As mentioned in the introduction, the main goal of this research was to detect and count people in real-time within tourism frameworks. And, in particular, the goal was set in terms of detecting and managing situations where we may have up to 60-70 people in a single image. The state-of-the-art of thermal camera datasets and solutions have not yet addressed such a condition. For example, considering the most widespread dataset of thermal images: LLVIP dataset [21] is limited to max 10 people, KAIST dataset provides a mean number of objects of 0.90 per image [19], CTIR dataset provides a mean number of objects of 2.59 per image [8], and FLIR-ADAS dataset a mean number of objects per image of 2.73 [14]. Critical tourism conditions may present much higher numbers, thus counting solutions have to work with relevant mean average precision in the range from 0 to 70.

For example, in Piazza della Signoria square in Florence, Italy, we manually counted hundreds of people in total and 70 under the view of each single camera. These conditions can be regarded as crowded. In the views reported in Fig. 1, two cameras’ views (CAM51 and CAM52) take the most relevant portions of the square, in which we may have reasonably up to 70 people in the square. Over that number of people, a critical condition may be warned. Squares need to be physically monitored for security reasons and for cleaning and assistance. In this specific case, large amount of people in the square may unexpectedly arrive from two main directions, that is from Ponte Vecchio (CAM51) and Uffizi (CAM52), respectively. In Fig. 2, sample raw images of CAM51 and CAM52 labelled in Fig. 1 are reported.

Views of CAM51 (a) and CAM52 (b) of Piazza Della Signoria, Florence, Italy, see Fig. 1

Our main goal has focussed on producing a solution which can: (i) perform people detection and counting with high mean average precision in the range from 0 to 70, (ii) be applied to different thermal cameras without retraining (which would increase cost), (iii) be adopted in real time on RTSP stream, as well as directly on board of the camera (to produce detections and counting, providing the bounding box via MQTT messages).

According to above requirements, our first experiment has been to assess best solutions from the state-of-the-art on CAM51 and CAM52 scenarios and data. To this end, we began from assessing:

-

(i)

YOLOv5 based solution pre-trained with COCO dataset and fine-tuned with LLVIP dataset implemented as in [21], which includes more than 12,000 images limited to max 10 people. Implementation has been based on the Ultralytics code [22] using the architecture YOLOv5s to balance detection capability, speed of execution and model weight in view of installing the solution on edge devices. The obtained model has been called YOLO-LLVIP.

-

(ii)

Faster R-CNN (in the following FRCNN) pre-trained with ImageNet dataset [11]. The FRCNN has been realized using the Detectron2 framework [42] specifically using the architecture X101-FPN. FRCNN has been fine-tuned with LLVIP dataset (never carried out in literature). This was performed for the first time by the authors in this research and presented in this paper (named as FRCNN-LLVIP model).

Therefore, the resulting models of this first phase (YOLO-LLVIP and FRCNN-LLVIP models) have been assessed with respect to the original LLVIP validation dataset and with respect to test datasets created from the videos acquired from CAM51 and CAM52 and by selecting images in the latter dataset with at most 10 people. Results are reported in Table 2. Please note that all the adopted datasets in this first case had a maximum of 10 people (since the LLVIP provides images of 10 people to the utmost, a selection has been performed for the comparison). More details on the standard metrics used for the comparison are reported in Section 5.A. From this early analysis, see Table 2, it turned out that there was a reduction of performance for both YOLO-LLVIP and FRCNN-LLVIP models in terms of mAP_0.5 and precision, passing from LLVIP validation to the two real cases of CAM51 and CAM52 (see Table 2). This assessment has provided evidence about a lack of flexibility as to the models under test, since they could not be successfully used on people detection on new cases, which may have different camera lens and contexts.

All solutions at the state-of-the-art are providing results for less than 10 people [18, 21, 27, 28], and this is also evident from data sets as described at the beginning of Section 4. Therefore, a second experiment was conducted to assess the quality of the above presented models with respect to images taken from CAM51 and CAM52 test data sets, in the event of higher number of people (see Table 3). When the number is greater than 10, more relevant errors are occurring. Both YOLO-LLVIP and FRCNN-LLVIP models provide a worst mAP_0.5, when the number per image is greater than 50 wrt to the “<=10 case”, of Table 1. Both models seem to work better for CAM51, rather than for CAM52 set (see their description in Section 2.A).

The above-presented experiments have proven the limited capabilities shown by YOLO-LLVIP and FRCNN-LLVIP models, when they are used in different cases, such as CAM51, CAM52. In fact, they present:

-

low and decreasing mean average precision in detection and counting people in crowded conditions, and in general when the number of people is greater than 10;

-

low flexibility, providing a relevant decrement of mean average precision when different kinds of thermal cameras from telephoto to wide-angle are adopted.

For this reason, the approach of direct transfer learning was not viable to solve the problem and we decided to perform an additional fine tuning and training. We created new data sets for training and validation to overcome the detected and verified problems, as described above.

2.1 Flexible people detection dataset of thermal images

In order to generate data for training some video sequences have been taken and manually classified by using Yolo_Label tool (https://github.com/developer0hye/Yolo_Label). It creates a .txt file for each image, where for each object in the image it specifies: <object-class> < x_center> < y_center> <width > <height>. The <object-class> for the object detection/ classification has been assigned as: 0-green for people, 1-blue for bikes/motorbikes and 2-red for strollers/carts (the sum of these classes makes the number of counted people in the image). See the example of Fig. 3 for CAM51, where 71 people, 1 bike and 2 strollers have been labeled. Regarding CAM52, frames were rectified by removing the wide-angle lens distortion. Subsequently, the images were labelled.

In order to build a new thermal image training data set and to evaluate machine learning models and solutions as a function of the number of detected/classified people, images have been labeled according to the number of people and their classification. Typically, the images of a data set have been grouped according to the number of people: <=10, >10 and < =25, >25 and < =50, >50 and < =75, >75. The highest number of people in the scene is 97 people for CAM51, and 79 people for CAM52. Table 4 shows the number of images and the number of people, bikes and strollers within the different datasets. Each and every image stands for a positive example, meaning that it includes at least one object. In addition, to train the multi-category detection “person”, “bike” and “stroller”, a minimum of 7% of images containing each category has been selected. As to test datasets, images from the two thermal cameras have been labeled considering only people in the scenes; wherever on the bike there was a person, it was considered and same for strollers.

2.2 Metrics

In order to evaluate the results of such trained models, the following metrics have been used. IoU metric in Object Detection evaluates the degree of overlap between the ground truth (gt) and prediction (pd):

Fixed α IoU threshold and defining: TP = True Positive, FP = False Positive, FN = False Negative, TN = True Negative. Other metrics are:

-

\(Precision=\frac{TP}{TP+ FP}\)

-

\(Recall=\frac{TP}{TP+ FN}\)

-

AP is the area under precision-recall curve (p(r)) evaluated by using α αIoU threshold.

$${AP}_{\alpha }={\int}_0^1p(r) dr$$ -

mAP, mean Average Precision is the average of AP values over all classes.

$${mAP}_{\alpha }=\frac{1}{\mid n\_ classes\mid}\sum_{i=1}^{n\_ classes}{AP}_{\alpha \kern0.5em i}$$

3 Enforcing flexibility in the model

This section describes the process to realize a model addressing the problems of people detection (classification) and counting satisfying requirements of:

-

R1) high mean average precision for people detection and counting, as well as bikes/motorbikes, strollers/carts detection and counting in crowded conditions;

-

R2) high flexibility in terms of counting range where a relevant mean average precision is obtained;

-

R3) high flexibility allowing the usage of different thermal cameras from telephoto to wide-angle without a relevant decrement of mean average precision in counting;

-

R4) real time computation capabilities on stream and on board of TV Cameras.

Based on the results at the state-of-the-art, the single-stage object detection YOLO architecture has been compared to the multi-stage object detection FRCNN with FPN architecture in terms of both detection capability and execution speed.

3.1 Case(i) for multiclass object detection

In this Case (i), the selected architectures YOLO pretrained on COCO dataset and FRCNN pretrained on ImageNet dataset [11], have been fine-tuned for the problem of multiclass object detection of people, bikes and strollers using the training datasets from CAM51 and CAM52 with the aim of choosing the best solution to satisfy requirements R1, R2, R3. CAM51 and CAM52 training sets are described in Section 2.A and they contain a large range of people in the scene, even more than 70.

The YOLO architecture, pretrained with COCO [21], has been fine-tuned with the training sets of CAM51 and CAM52 (see Table 4), respectively. This brought forth the so called: YOLO-CAM51 and YOLO-CAM52 models. Such training processes used an early stopping with patience set to 100 on the mAP_0.5 of the validation set. Moreover, also FRCNN pretrained with ImageNet [11] has been fine-tuned with training sets of CAM51 and CAM52 (see Table 4), respectively, thus obtaining the so called: FRCNN-CAM51 and FRCNN-CAM52 models. Both training processes used an early stopping with patience set to 500 on the mAP_0.5 of the validation set.

Results in terms of precision, recall, mAP_0.5, mAP_0.5:0.95 are reported in Table 5. Results show that the models based on YOLO achieved better results compared to those based on FRCNN and overall, the validation dataset CAM51 achieved better performance compared to the CAM52 in both tested architectures. Please note that CAM51 and CAM52 validation data sets include images for multiclass detection with a number of people in most cases higher than 10.

To better understand such results on the assessed models, the confusion matrixes for each class of interest (namely people, bikes and strollers and background) can be analyzed. Therefore, Figs. 4 and 5 show confusion matrixes for the validations of CAM51/CAM52, for YOLO and FRCNN respectively. In both validation cases, especially for the True Positives on the class Person, the YOLO based model architectures outperformed the FRCNN based models. Comparing results of Table 5 with those of Tables 2 and 3, it is self-evident that fine tuning of YOLO and FRCNN models could produce better results than those directly obtained on YOLO-LLVIP and FRCNN-LLVIP models, in terms of mAP_0.5.

3.2 Case (i) for MonoClass object detection

According to requirement R1, research focused on overcoming the performance of the newly developed models with respect to the number of people in the scenes. For this purpose, YOLO-CAM51, YOLO-CAM52, FRCNN-CAM51, FRCNN-CAM52 models have been compared to assess the mAP as a function of a number of people detected with respect to the test sets shown in Table 4 for CAM51 and CAM52. Results are reported in Table 6 for YOLO-CAM51, FRCNN-CAM51 models (fine-tuned with CAM51 training set), and in Table 7 for the YOLO-CAM52, FRCNN-CAM52 models, which were developed with CAM52 training set. As a result, such 4 fine-tuned models for specific cameras achieve better results, if compared with results obtained by the YOLO-LLVIP and FRCNN-LLVIP models of Table 3. Please note that, the LLVIP dataset (used in YOLO-LLVIP and FRCNN-LLVIP models of Table 3) contains images with fewer people (<=10) and in this category YOLO-LLVIP model achieved a mAP_0.5 of 0.908 for the CAM51 test <=10 dataset (see Table 3), to be compared with the value of both 0.970 as to YOLO-CAM51 model and 0.9232 as to FRCNN-CAM51 model, as reported in Table 4. As to CAM52 test set <=10, YOLO-LLVIP model achieved a mAP_0.5 = 0.737 (in Table 3), compared to the value of 0.975 of FRCNN-CAM52 model and 0.962 of YOLO-CAM52 model (in Table 7).

When considering scenes with more than 10 people (see Tables 6 and 7), performances of the newly fine-tuned models outperform YOLO-LLVIP values, see Table 3. Therefore, a relevant level of flexibility has been enforced into the fine-tuned models with respect to the formed YOLO-LLVIP and FRCNN-LLVIP models. In more details, according to Table 6, the most suitable architecture for flexible detection capabilities in terms of counting range as to CAM51 test set turned out to be YOLO-CAM51 model. On the other hand, as to CAM52 test set, no identified models can overcome the others in all the cases under analysis. YOLO-CAM52 model could be better ranked on 3 of the 5 ranges of people counts (Table 7), while FRCNN-CAM52 model on 2. As a final consideration, the YOLO-CAM51 model turned out to be the best balance, as it was the best model on 7 cases over 10 for both CAM51 and CAM52 test sets. A consideration can be performed on the mean value of mAP_0.5 over all cases (Table 6). As to YOLO-CAM51 model it resulted to be 0.9271, while for FRCNN-CAM51 model we recorded 0.8882, which confirm the detailed results above.

Moreover, according to requirement R4, solutions have been assessed in terms of their execution time (see Table 8). CPU computations have been performed on 8 cores XEON at 2.3 GHz, while deep learning solutions have been executed on GPU as NVIDIA Quadro GV100 with 32GByte Ram, which has 5120 CUDA Cores, FP64 perf as 7.4 TFLOPS. YOLO-CAM51 model can perform real-time detections up to 112 frames per second, using the GPU in the worst case evaluated (>75& < 97 detection test set) compared to the 8 frames of FRCNN-CAM51 model.

According to R1-R4declared goals and requirements, the most suitable architecture turned out to be YOLO-CAM51 (YOLO model with fine-tuned with the new data set of CAM51). For this reason, it has been further improved by (i) combining other newly training sets, (ii) using bottom-up layer wise domain adaptation, as reported in the following section, with a focus on object detection and counting.

3.3 Case (ii) for MonoClass object detection

According to the above results, with the aim of enforcing more flexibility to the YOLO-CAM51 model, two new approaches have been produced and discussed in the following. They have been named as: Case (ii)a, and Case (ii)b.

Case (ii)a has been obtained by starting from YOLO model performing a fine-tuning using both CAM51 and CAM52 training sets, as described in Table 4. The resulting model has been called YOLO-CAM51-52 model; results are reported in Table 9 which shows how the model has been assessed against test datasets of both cameras, in different people ranges. According to the mAP, combinations of training sets did not improve results obtained for CAM51 of Table 6, while it improved the ones obtained for CAM52. Especially mAP_05 could improve for all ranges, but not for the range of (50-75] people with a mAP_0.5 of 0.878, to be compared with CAM52 model of 0.879 in Table 7, thus improving its flexibility. The mean value of mAP_05 over all ranges turned out to be 0.91.

Case (ii)b has been created from YOLO model and by means of a fine-tuning using three training sets of Table 4: CAM51 Training, CAM52 Training, and LLVIP Training. Results are reported in Table 10, where the produced YOLO-CAM51-52-LLVIP model has been assessed against test datasets for each class of people counting. As a result, YOLO-CAM51-52-LLVIP model has provided a valid solution to the problem of people detection and has achieved comparable results, since this specific model was trained on the LLVIP, thus achieving/confirming a mAP_0.5 of 0.96, a non-reduction of performance on LLVIP case. The mean value of mAP_0.5 on all cases has been 0.9278, while it has been 0,9247 if we take into account only results of test set coming from the CAM51/52.

On test datasets of CAM51 and CAM52, results are comparable and, in some cases, also better than the specific model, as in the case with <=10 people on CAM52 where the combined model could achieve a mAP_0.5 of 0.974, while the specific one has a mAP_0.5 of 0.962. Results with crowded situations in our case study are also valid and especially when there are more than 75 people in the scene: in this occurrence the combined model achieves better mAP_0.5 scores forCAM52 (with a mAP_0.5 of 0.804 with respect to 0.786 of the specific model). Relevant improvements in the flexibility among different cameras have been obtained in wider range of cases (see Table 10) with a marginal reduction of the highest performance obtained for CAM51 case, in Table 6. The mean mAP_0.5 turned out to be slightly improved in this latter case (see Table 10). Therefore, the resulted YOLO-CAM51-52-LLVIP model outperforms the mean average precision of the former YOLO-LLVIP model for small number of people, and strongly outperform all YOLO-LLVIP and FRCNN-LLVIP models when larger ranges of people need to be addressed. This final model has demonstrated a high degree of flexibility and resilience with respect to people range and different kinds of cameras, thus satisfying requirements R1, R2, and R3.

4 Bottom-up layer-wise domain adaptation

While aiming at a solution that can preserve both flexibility in terms of range of people and camera kinds, an additional solution has been developed and is discussed in this section. Kiew et al., in [26], performed an extensive assessment comparing both top-down and bottom-up domain adaptation strategies on thermal images and proposed a bottom-up layer wise domain adaptation on YOLOv3 architecture, outperforming the best performing single-modality pedestrian detection results on the KAIST and the FLIR-ADAS datasets. In [26], domain adaptation attempted to exploit learned knowledge from a source domain (RGB images) in a new target related domain (thermal images). In our case, we performed a fine-tuning process starting from a pre-trained model on COCO dataset (RGB images) using thermal images.

The approach of fine-tuning adopted in the previous section has been a top-down domain adaptation on the thermal domain via back-propagation, where the supervision signal comes from the loss at the top of the network down to the new input distribution.

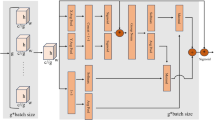

Both top-down and bottom-up domain adaptation strategies adopt the same flow chart, which is presented in terms of main steps in Fig. 6. New training data sets have been created by both collecting images from thermal cameras (CAM51 and CAM52) and annotating them manually, while dataset LLVIP has been just inherited. Images have been preprocessed to bring them to the same size. Then, on the basis of the new training set, YOLOv5s pre-trained on COCO has been fine-tuned with the Bottom-Up layer-wise domain adaptation. Validation and test sets have been used, respectively. Details regarding the Bottom-Up layer-wise domain adaptation are reported in the following.

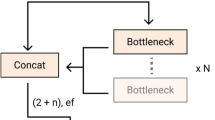

The bottom-up layer-wise domain adaptation is based on the hypothesis that slowly fine-tuning from bottom of the network should preserve more knowledge from the original domain. We applied this process on YOLOv5s architecture, which is made up of three main components: (i) the model backbone: CSPDarknet [44] extracting features from input image and being composed by Simplified Cross Stage Partial Bottleneck blocks C3 and a cascaded faster version of Spatial Pyramid Pooling Layer SPPF (https://github.com/ultralytics/yolov5/pull/4420/files); (ii) the model neck: PANet [32] elaborating feature pyramids to generalize objects in different scales; (iii) YOLO-head performing final detection. YOLOv5s is represented in Fig. 7, and it has been summarized with a representation of 27 blocks that are reported in the figure with both parenthesis and block id. Main details about type of blocks are also reported in terms of functionality and dimensionality. Similar blocks are grouped in terms of color. YOLOv5s is made up of a total of 177 (N) layers and a total of 7.2 M parameters.

Differently from [26], we applied the approach bottom-up layer-wise domain adaptation fully on thermal data and on YOLOv5 architecture: this process is graphically summarized in Fig. 8. Considering the epoch i during the training of the network, the process freezes the layers 3i + 1:N, where N is the total number of layers of the considered architecture (for YOLOv5s is 177). Therefore, at the starting epoch of a re-training process, base layers are also trainable, while the other upper part of the network is frozen. After every epoch, the 3i + 1 layers are unfrozen until the entire network is fine-tuned.

We applied the bottom-up layer-wise domain adaptation starting from YOLOv5s pre-trained on COCO dataset, at first using only single camera datasets, then using combination of both CAM51 and CAM52 training datasets: results did not improve the top-down fine-tuning reported in Section 5. On the other hand, using the bottom-up layer-wise domain adaptation on LLVIP, CAM51 and CAM52 training datasets could produce the new model YOLO-CAM51-52-LLVIP-BLDA, with results that improved those obtained by using the top-down strategy, as reported in Table 11. This new model achieved a mAP_0.5 on the validation dataset made up of the united version of LLVIP, CAM51, CAM52, test datasets of 0.966.

To compare these results with respect to those of the Multiclass Object Detection reported in Section 5, YOLO-CAM51-52-LLVIP-BLDA model has been validated on the united version of validation datasets CAM51 and CAM52. Results in terms of confusion matrix are reported in Fig. 9.

YOLO-CAM51-52-LLVIP-BLDA model for Multiclass Object Detection task could achieve, as to the person class category, a percentage of True Positive detected objects of 0.93; as to the bike class 0.89 and it could manage to detect every stroller presented in the validation sets. When comparing these results using the specifics CAM51 and CAM52 models on the respective validation datasets as reported in Section 5, the percentage of True Positive detected objects as to the person class has improved with respect to the value of 0.895 of the specific models; as to the stroller class it has achieved the same number of detection as in the validation datasets, and as to the bike class it has achieved a slightly less value of 0.89 compared to the value of 0.895 achieved with the specific camera models. On these grounds, this one bottom-up model could provide high flexibility for detection of people, bikes, strollers on different image types from telephoto to wide-angle cameras.

As to the analysis on datasets with number of people in the image, results in terms of precision, recall, mAP_0.5, mAP_0.5:0.95 are reported in Table 12. When considering the mAP_0.5 on the LLVIP validation dataset, YOLO-CAM51-52-LLVIP-BLDA model has achieved a value of 0.959, thus confirming results related to YOLO-LLVIP model. When considering test datasets of CAM51 in the categories with number of people <=10 and > 10& < =25, YOLO-CAM51-52-LLVIP-BLDA-Model has achieved the best results over all the proposed models, with mAP_0.5 values of 0.979 and 0.974. As to test datasets of CAM52 in all the categories from people <=10 up to 79, this model has achieved the best results over all the models under evaluation in this work.

Therefore, YOLO-CAM51-52-LLVIP-BLDA-Model could provide better results with respect to the YOLO-CAM51-52-LLVIP-Model of Table 12, showing more flexibility with respect to the different number of people in the scenes, as well as when changing camera resolution and lenses. The mean value of mAP_0.5 on all cases turned out to be 0.9456, while it has been 0.9443 if taking into account only results of test set coming from CAM51/52.

5 Deployment architecture

In compliance with GDPR rules, our system has used two thermal cameras CAM51 (which is an AXIS Q1951-E), and CAM52 (AXIS Q1952-E). The Q1951-E has a telephoto 35 mm camera lens with a horizontal field of view of 10.5° and F1.14 with 768 × 576 pixel images. The Q1952-E has a wide-angle 10 mm camera lens with a horizontal field of view of 63° and F1.17 with 640 × 480 pixel images but it has been positioned vertically. In order to process images from thermal camera Q1952-E, a wide-angle correction has been applied using the undistortImage function of the Fisheye camera model in the OpenCV library [6]. The deployment of the solution can be performed in two ways, as reported in Fig. 10.

In the first case, AXIS cameras are ARM7 architecture, and thus an Application Plugin in C++ to execute such trained models has been developed. Therefore, in this case, the model execution is performed on Edge into the Application Plugin. The Application Plugin (see Fig. 11) can directly show results (image and related bounding boxes, and data below the image with its bounding box and the classification) on the camera’s web interface and it can produce MQTT messages with bounding box of detected objects and their classifications. MQTT messages can be sent outside to some MQTT brokers, as well on local Node-RED (with an internal Aedes MQTT broker) installed in the camera; Snap4City Library can be installed on the local Node-RED to send data in protected way to some servers and create dashboards. In addition, via Node-RED it is possible to create dashboards or collect data coming from multiple cameras to perform data aggregation, reasoning and providing higher level results to be sent on cloud via MQTT or other protocols.

In the second case (reported in the bottom part of Fig. 10), a Python process receives the RTSP stream from a camera, it executes the above produced model for object detection and it saves the detected bounding boxes directly on some local database. It may also send the same information via some protocols to others for example via MQTT, NGSI V2, and rest calls. The execution is performed on Python, executing the training model on an NVIDIA T1000 4gb GPU. In this case, the model execution can process 8 frames per second, which is the number of frames produced by the thermal camera. On this appliance (industrial PC), a NODE-RED can be installed to get data from database and send them to Snap4City framework infrastructure via MQTT or NGSI V2 messages.

In both cases, data have to arrive to a Snap4City/IoT platform, where a given number of IoT Devices is used to receive data and visualize results in dashboards in Real-Time. Therefore, dashboards are used to show results as in Fig. 12, which reports trends on number of people for a week for both cameras, and on which the drill down/up on time trends of people counting can be performed.

5.1 Edge-device architecture and execution performance analysis

For the first case, the architecture of the Application Plugin is shown in Fig. 13. The process on ARM7 extracts a frame from the RTSP stream and performs wide-angle correction and image resize (to 640X640) according to the camera kind and settings. Then the above presented model is used for object detection generating the counting and bounding boxes for the detected classes. Bounding boxes are superimposed on the frame to show them to the web pages produced by the camera, and sent in MQTT to some brokers or other cameras (in this case Snap4City platform to collect real time data and further data aggregation and data analytics).

System architecture for the application plugin of Fig. 10

As to the Application Plugin installed on CAM51, execution time has been assessed and tuned. In fact, execution time also depends on the number of people detected. In Fig. 14, execution time as a function of the number of bounding boxes is reported. Our analysis has been performed by processing the image data acquired since 14/07/2022 until 19/07/2022, when the interval of boxes/people detected has been from 0 to 60. Based on these execution times starting from base scenario (0 boxes) with an execution time of 9.174 seconds, the time increment to detect a box is of about 0.077 seconds.

6 Conclusions

An important role for Smart Cities is played by Tourism management applications especially to study solutions able to let tourists enjoy the quality of their experience, even in crowded sites. In this work, we have proposed the usage of thermal cameras which are not invasive and cope well with privacy issues and GDPR. Both YOLO and Faster-R-CNN were fine-tuned using the LLVIP dataset to improve their flexibility in people detection. When the scene presented more than 10 people the results were not satisfactory. Therefore, in this paper an approach for multiclass object detection of people, bikes and strollers, has been proposed, with results used to create a monitoring dashboard. For multiclass object detection task, YOLO engine has been exploited, because it performs a single-shot object detection providing at the same time low computational time performances and good detection accuracy over all the considered classes. To this end, new specific thermal cameras training sets have been produced for fine tuning both YOLO and Faster-R-CNN. We have tested and compared a wide set of tuning approaches in order to improve the mean average precision and flexibility of state-of-the-art solutions. To this end, we have explored both top-down and bottom-up training adaptation approaches and we have demonstrated that bottom-up approach can provide best results according to the above-mentioned goals in terms of performance and flexibility. As a result, we have produced a solution providing a higher mean average precision for cameras with different lenses (from wide-angle to telephoto) and covering a large of number of people in the scenes. The solution has been derived from YOLOv5 architecture based on a bottom-up layer-wise domain adaptation that could fit for the need of a lower computational time. As to mean mAP_0.5, the solution has achieved a value of 0.986 on the object of the scene on the test datasets for scenarios with less than 10 objects, and a value of 0.9456 with mixed scenes with up to 97 objects. Moreover, this solution has been tested in two possible deployment configurations: (i) an industrial PC with GPU that could provide Real-Time processing results and, (ii) a specific implementation and deploy on thermal cameras which can produce good results over 2 frames per minute. Such solution has been massively tested on Piazza Della Signoria, Florence, Italy, sending data to Snap4City platform and Dashboards.

Data availability

The data supporting the findings of this study are available from Https://www.snap4city.org/, but some restrictions apply to the availability of original source data, wherever used under licence for this current study.

References

Alharthy KM et al (2023) Boron nitride nanocage as drug delivery systems for chloroquine, as an effective drug for treatment of coronavirus disease: a DFT study. Inorg Chem Commun 150:110482

Azhar MIH, Zaman FHK, Tahir NM, Hashim H (2020) People tracking system using DeepSORT. In: 2020 10th IEEE international conference on control system, computing and engineering (ICCSCE), pp 137–141

Badii C et al (2019) Microservices suite for Smart city applications. Sensors 19(21):4798

Barba Guamán LR, Naranjo J, Ortiz A, Pinzon Gonzalez J (2021) Object detection in rural roads through SSD and YOLO framework, pp 176–185. https://doi.org/10.1007/978-3-030-72657-7_17

Belmouhcine A, Simon J, Courtrai L, Lefèvre S (2021) Robust deep simple online real-time tracking. In: 2021 12th international symposium on image and signal processing and analysis (ISPA), pp 138–144. https://doi.org/10.1109/ISPA52656.2021.9552062

Bradski G. The openCV library. Dr. Dobb's Journal: Software Tools for the Professional Programmer. 2000;25(11):120-3

Choi Y, Kim N, Hwang S, Park K, Yoon JS, An K, Kweon IS. KAIST multi-spectral day/night data set for autonomous and assisted driving. IEEE Transactions on Intelligent Transportation Systems. 2018;19(3):934–48

Dai X, Yuan X, Wei X (2021) TIRNet: object detection in thermal infrared images for autonomous driving. Appl Intell 51:1244–1261

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: 2005 IEEE computer society conference on computer vision and pattern recognition (CVPR'05), vol 1, pp 886–893. https://doi.org/10.1109/CVPR.2005.177

Davis JW, Keck MA (2005) A two-stage template approach to person detection in thermal imagery. In: Proc. 7th IEEE workshops appl. comput. vis. (WACV/MOTION), vol. 1, pp 364–369

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, pp 248–255

Everingham M, Eslami SMA, Van Gool L, Williams CKI, Winn J, Zisserman A (2015) The Pascal visual object classes challenge: a retrospective. Int J Comput Vis 111(1):98–136

Fang Y, Zhan B, Cai W, Gao S, Hu B (2019) Locality-constrained spatial transformer network for video crowd counting. In: 2019 IEEE international conference on multimedia and expo (ICME), pp 814–819

FLIR Thermal Dataset. [Online] Available: https://www.flir.it/oem/adas/adas-dataset-form/. (last access 27-07-2023)

Fu J, Yang H, Liu P, Hu Y (2018) A CNN-RNN neural network join long short-term memory for crowd counting and density estimation. In: 2018 IEEE int. conf on advanced manufacturing (ICAM), pp 471–474

Garau C, Nesi P, Paoli I, Paolucci M, Zamperlin P (2020) A big data platform for smart and sustainable cities: environmental monitoring case studies in Europe lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), 12255 LNCS, pp 393–406.

GDPR: General Data Protection Regulation, https://gdpr.eu/. Last access 27 Jul 2023.

Goel R, Sharma A, Kapoor R (2021) Deep learning based thermal object recognition under different illumination conditions. In: 2021 second international conference on electronics and sustainable communication systems (ICESC), pp 1227–1233

Han Q., Nesi P., Pantaleo G., Paoli I., Smart city dashboards: design, development, and evaluation, (2020) proceedings of the 2020 IEEE international conference on human-machine systems, ICHMS 2020, art. no. 9209493, pp. 1–4

Herit-Data Interreg project. Innovative solutions to better manage tourism flow impact on cultural and natural heritage sites through technologie and big data, https://herit-data.interreg-med.eu/ (last access 27-07-2023)

Jia X, Zhu C, Li M, Tang W, Zhou W (2021) LLVIP: a visible-infrared paired dataset for low-light vision. arXiv. https://doi.org/10.48550/ARXIV.2108.10831

Jocher G et al (2020) Ultralytics/yolov5. Github Repository, YOLOv5

Karthi M, Muthulakshmi V, Priscilla R, Praveen P, Vanisri K (2021) Evolution of YOLO-V5 algorithm for object detection: automated detection of library books and performace validation of dataset. In: 2021 int. conf. on innovative computing, intelligent communication and Smart electrical systems (ICSES), pp 1–6

Kera SB, Tadepalli A, Ranjani JJ (2022) A paced multi-stage block-wise approach for object detection in thermal images. Vis Comput. pp.1–17. https://doi.org/10.1007/s00371-022-02445-x

Khalfaoui A, Badri A, Mourabit IE (2022) Comparative study of YOLOv3 and YOLOv5's performances for real-time person detection. In: 2022 2nd international conference on innovative research in applied science, engineering and technology (IRASET), pp 1–5. https://doi.org/10.1109/IRASET52964.2022.9737924

Kieu M, Bagdanov A, My K, Bertini M (2020) Bottom-up and layer-wise domain adaptation for pedestrian detection in thermal images. ACM Transaction Multimededia Computing Communication Application. https://doi.org/10.1145/3418213.

Kowalski MŁ et al (2021) Detection of inflatable boats and people in thermal infrared with deep learning methods. Sensors vol.21, n.16, pp: 5330, https://doi.org/10.3390/s21165330

Krišto M, Ivasic-Kos M, Pobar M (2020) Thermal object detection in difficult weather conditions using YOLO. IEEE Access 8:125459–125476. https://doi.org/10.1109/ACCESS.2020.3007481

Li S et al (2019) Supervised people counting using an overhead fisheye camera. In: 2019 16th IEEE international conference on advanced video and signal based surveillance (AVSS). IEEE

Li S, Li Y, Li Y, Li M, Xu X (2021) YOLO-FIRI: improved YOLOv5 for infrared image object detection. IEEE Access 9:141861–141875. https://doi.org/10.1109/ACCESS.2021.3120870

Lin T-Y et al (2014) Microsoft COCO: common objects in context. arXiv. https://doi.org/10.48550/ARXIV.1405.0312

Liu S, Qi L, Qin H, Shi J, Jia J (2018) Path aggregation network for instance segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8759–8768

Liu L, Chen J, Wu H, Li G, Li C, Lin L (2021) Cross-modal collaborative representation learning and a large-scale rgbt benchmark for crowd counting. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4823–4833

Menon A, Omman B, Asha S (2021) Pedestrian counting using yolo V3. In: 2021 int. conf. on innovative trends in information technology (ICITIIT), pp 1–9

Munir F, Azam S, Jeon M (2021) SSTN: self-supervised domain adaptation thermal object detection for autonomous driving. In: 2021 IEEE/RSJ international conference on intelligent robots and systems (IROS), pp 206–213

Kieu, M., Bagdanov, AD, Bertini, M., Del Bimbo, A. (2020). Task-conditioned domain adaptation for pedestrian detection in thermal imagery. In European Conference on Computer Vision. Cham: Springer International Publishing (pp. 546-562).

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. arXiv preprint arXiv:1804.02767. https://arxiv.org/abs/1804.02767

Sharath S, Biradar V, Prajwal M, Ashwini B (2021) Crowd counting in high dense images using deep convolutional neural network. In: 2021 IEEE int. conf. on distributed computing, VLSI, electrical circuits and robotics (DISCOVER), pp 30–34

Stovall J, Harris A, O’Grady A, Sartipi M (2019) Scalable object tracking in Smart cities. In: 2019 IEEE international conference on big data (Big Data), pp 3813–3819. https://doi.org/10.1109/BigData47090.2019.9005472

Udrea I, Alionte CG, Ionaşcu G, Apostolescu TC (2021) New research on people counting and human detection. In: 2021 13th international conference on electronics, computers and artificial intelligence (ECAI), pp 1–6

Wu H et al (2018) Multipoint infrared laser-based detection and tracking for people counting. Neural Comput & Applic 29(5):1405–1416

Wu Y, Kirillov A, Massa F, Lo W-Y, Girshick R (2019) Detectron2. https://ai.meta.com/tools/detectron2/

Xu M (2019) An efficient crowd estimation method using convolutional neural network with thermal images. In: 2019 IEEE int. conf. on signal, information and data processing (ICSIDP), pp 1–6

Yin Y, Li H, Fu W (2020) Faster-YOLO: an accurate and faster object detection method. Digital Signal Process 102:102756. https://doi.org/10.1016/j.dsp.2020.102756

Yin K et al (2021) Multi-scale object detection algorithm in Smart City based on mixed dilated convolution pyramid. In: 2021 IEEE SmartWorld, ubiquitous intelligence & computing, advanced & trusted computing, scalable computing & communications, internet of people and Smart city innovation (SmartWorld/SCALCOM/UIC/ATC/IOP/SCI), pp 590–597. https://doi.org/10.1109/SWC50871.2021.00088

Zhang Y, Zhou D, Chen S, Gao S, Ma Y (2016) Single-image crowd counting via multi-column convolutional neural network. In: IEEE conf. on computer vision and pattern recognition

Zhang Y, Chen Z, Wei B (2020) A sport athlete object tracking based on deep sort and Yolo V4 in case of camera movement. In: 2020 IEEE 6th international conference on computer and communications (ICCC), pp 1312–1316

Acknowledgements

Authors would like to thank the Herit-Data (https://herit-data.interreg-med.eu/) for partially funding this research, as well as AXIS for their support with thermal cameras. Km4City and Snap4City (https://www.snap4city.org) are open technologies and research areas of DISIT Lab.

Funding

Open access funding provided by Università degli Studi di Firenze within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Collini, E., Palesi, L.A.I., Nesi, P. et al. Flexible thermal camera solution for Smart city people detection and counting. Multimed Tools Appl 83, 20457–20485 (2024). https://doi.org/10.1007/s11042-023-16374-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-16374-x