Abstract

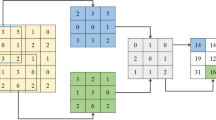

Infrared and visible image fusion aims to generate a single fused image that contains both rich texture details and thermal radiation information. Many unsupervised deep learning methods have been proposed for this purpose, but they largely ignore awareness of image content and image quality. To address this limitation, this paper presents a quality- and content-aware image fusion network, termed QCANet, which is designed to solve the similarity fusion optimization problems, such as the dependence of fusion results on the source images and the weighted average fusion effect. Specifically, QCANet consists of three modules: an Image Fusion Network (IFNet), a Quality-Aware Network (QANet), and a Content-Aware Network (CANet). QANet and CANet are designed to improve the quality awareness and the content semantic awareness of IFNet, respectively. In addition, a new quality-aware image fusion loss is introduced to avoid the weighted average effect caused by the traditional similarity-metric optimization mechanism. As a result, the similarity fusion optimization problems, which are major stumbling blocks for deep learning in image fusion, are significantly mitigated. Extensive experiments demonstrate that QCANet outperforms most state-of-the-art methods.
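The weighted average effect that the quality-aware loss is meant to avoid can be illustrated with a toy calculation. If the only training signal is similarity to both sources, for example L(F) = w‖F − I_ir‖² + (1 − w)‖F − I_vis‖², then setting the gradient to zero gives F = w·I_ir + (1 − w)·I_vis, a pixel-wise weighted average that flattens the contrast of each source. The Python sketch below is a minimal illustration under that assumed squared-error loss; the function names, the 1-D pixel values, and the hyperparameters are hypothetical, and it is not QCANet's loss, whose exact form the abstract does not give.

```python
from typing import List


def optimize_fused(ir: List[float], vis: List[float], w_ir: float = 0.5,
                   lr: float = 0.1, steps: int = 200) -> List[float]:
    """Minimize L(F) = w*||F - ir||^2 + (1 - w)*||F - vis||^2 by gradient descent.

    Hypothetical toy loss: a plain squared-error similarity to both sources,
    not the quality-aware loss proposed for QCANet.
    """
    fused = [0.0] * len(ir)  # start from a black image
    for _ in range(steps):
        # dL/dF = 2*w*(F - ir) + 2*(1 - w)*(F - vis), applied pixel by pixel
        fused = [f - lr * (2 * w_ir * (f - a) + 2 * (1 - w_ir) * (f - b))
                 for f, a, b in zip(fused, ir, vis)]
    return fused


def contrast(pixels: List[float]) -> float:
    """Simple global contrast: brightest pixel minus darkest pixel."""
    return max(pixels) - min(pixels)


if __name__ == "__main__":
    # Hypothetical 1-D "images": a hot target (index 2) that is bright in IR
    # but dark in VIS, on a background that is bright in VIS.
    ir = [0.1, 0.1, 0.9, 0.1]
    vis = [0.8, 0.2, 0.1, 0.8]
    fused = optimize_fused(ir, vis)
    print([round(f, 3) for f in fused])  # → [0.45, 0.15, 0.5, 0.45]
    print(round(contrast(ir), 2), round(contrast(vis), 2),
          round(contrast(fused), 2))  # → 0.8 0.7 0.35
```

In this toy case the target goes from 0.9 against a 0.1 background in the infrared row to 0.5 against 0.45 in the fused row, so optimizing similarity alone converges to the average and dilutes exactly the salient information that fusion is supposed to preserve.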

Acknowledgments

This work was supported by the following projects: (1) the National Science and Technology Projects of China, Grant No. D5120190078; and (2) the Key R&D Projects of Shaanxi Province, Grant No. D5140190006.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Raw data for the dataset is not publicly available to preserve individuals’ privacy under the Northwestern Polytechnical University Data Protection Regulation.

About this article

Cite this article

Li, W., Fang, A., Wu, J. et al. Quality and content-aware fusion optimization mechanism of infrared and visible images. Multimed Tools Appl 82, 47695–47717 (2023). https://doi.org/10.1007/s11042-023-15237-9

DOI: https://doi.org/10.1007/s11042-023-15237-9