Abstract

Image segmentation is a critical stage in the analysis and pre-processing of images. It divides the pixels into several segments according to threshold values on their intensity levels. Selecting the best threshold values is the most challenging task in segmentation. Because of their simplicity, robustness, reduced convergence time, and accuracy, standard multi-level thresholding (MT) approaches are more effective than bi-level thresholding methods. However, the computational complexity grows exponentially as the number of thresholds increases, and a considerable number of metaheuristics have been used to solve these optimization problems. One of the best-known image segmentation methods is Otsu’s between-class variance, which determines the threshold values of an image by maximizing the between-class variance. In this manuscript, a new modified Otsu function is proposed that hybridizes Otsu’s between-class variance and Kapur’s entropy. In Kapur’s entropy, the threshold value of an image is selected by maximizing the entropy of the object and background pixels. The proposed modified Otsu technique combines the ability of Kapur’s method to find an optimal threshold that maximizes the overall entropy with the maximum between-class variance of Otsu. The novelty of the proposal is the merging of the two methodologies: since entropy (Kapur) measures the uncertainty of a set of information, it can be used to improve Otsu’s variance. The proposed technique is applied to a set of images with diverse histograms taken from the Berkeley Segmentation Data Set 500 (BSDS500). To search for the thresholds, the Arithmetic Optimization Algorithm (AOA) and the Hybrid Dragonfly Algorithm and Firefly Algorithm (HDAFA) are employed. The proposed approach is compared with the existing state-of-the-art objective functions of Otsu and Kapur. Experimental outcomes demonstrate that the modified Otsu is highly efficient in terms of performance metrics such as PSNR, mean, threshold values, number of iterations taken to converge, and image segmentation quality.

1 Introduction

In the domain of computer vision and image processing, image segmentation is a key component. As the saying goes, a picture is worth a thousand words. Segmentation aims to extract features and track information in an image. Image segmentation divides the image into a set of non-overlapping regions based on certain properties like texture, color, homogeneity, and structure [37, 39]. Automatic image segmentation remains a very complex procedure in image processing, and it becomes more difficult when the test images are natural, realistic, and degraded. An algorithm may be called a good segmentation technique if it is able to differentiate among the different classes or boundaries in an image. The major issue in segmentation is successfully identifying the scene elements in an image. A further application of image thresholding is image recognition. Image thresholding can be categorized into single-level and multi-level thresholding methods. In single-level thresholding, only one threshold value is needed, while in multi-level thresholding, multiple thresholds are required. The main problem is to find the appropriate values for each picture based on its histogram [31]. Image segmentation is used in a wide variety of fields, including biomedical, satellite, infrared (IR), surveillance, and agricultural imaging. Each of these modalities carries unique information, and therefore it is difficult to build a generalized automatic thresholding technique.

In 1979, Otsu proposed a thresholding method that maximizes the between-class variance, and thus minimizes the intraclass variance, to obtain optimum threshold values [1]. To perform segmentation based on image thresholding, Otsu’s between-class variance and maximum entropy methods such as Kapur’s entropy [13], Renyi’s entropy [10], and Tsallis entropy [30] have been developed. The entropy-based approaches apply information theory successfully, but they depend primarily on the probability of occurrence of each gray level value. Another factor that affects the segmentation results is ignoring the gray level value of the pixels. The 1-D Otsu method is not affected by the brightness and contrast of an image. Furthermore, its computation cost is low for a small number of thresholds. Nevertheless, the algorithm considers only the gray level value; for that reason, it fails to produce optimum results in the case of noisy images.

Tsai in [2] suggested using the idea of moment-preserving to create a robust threshold approach for gray images. Kapur, Sahoo, and Wong utilized histogram entropy in a method termed Kapur’s entropy to find optimal thresholds [21]; the approach is commonly employed to address the problem of picture threshold segmentation. Minimum cross-entropy thresholding, which minimizes the cross-entropy between a picture and its segmented image [10, 26], is also utilized for detecting the ideal thresholds. Entropy-based methods are the most common among the methods listed above. Several other thresholding methods have been reported in the literature [48].

In addition, these approaches may be easily extended to multi-level thresholding. For many thresholds, however, the computational time increases exponentially, since the search for the threshold values that maximize the objective function becomes exhaustive. Separating objects from backgrounds is one of the hardest and most complex tasks in image processing [1]. Background and foreground can be differentiated using the segmentation process. To define multiple regions of an image, multi-level thresholding techniques have been developed, but the computational cost also increases with the number of thresholds. Given this, it is necessary to adopt algorithms that help to search for the optimal threshold values. The most preferred and cost-effective procedure for thresholding is using metaheuristic or optimization algorithms [13, 37].

Metaheuristic algorithms (MA) search for the optimal solution among the available solutions while considering different constraints [10, 30]. This property is used to find the optimal threshold values for image thresholding. Different objective functions such as Otsu, Kapur, Renyi, and Tsallis [2, 21, 26] are defined to calculate the optimal threshold values. Some recently proposed MA are the Hybrid Dragonfly Algorithm (DA) and Firefly Algorithm (FA) (HDAFA) [39], the Starling Murmuration Optimizer [48], the Conscious Neighborhood-based Crow Search Algorithm (CCSA) [46], the Quantum-based Avian Navigation Optimizer Algorithm (QANA) [47], the Opposition-based Moth Swarm Algorithm (OBMSA) [32], and the Archimedes Optimization Algorithm (AOA) [14], to mention a few.

In the related literature, metaheuristic algorithms and their improved versions are used for multi-level segmentation, such as artificial bee colony (ABC) [44], genetic algorithms (GA) [40], honey bee mating optimization (HBMO) [17], modified firefly algorithm (MFA) [15], particle swarm optimization (PSO) [30], bacterial foraging algorithm (BFA) [45], differential evolution (DE) algorithms [25], wind-driven optimization (WDO) [24], cuckoo search (CS) algorithms [31], ant colony algorithm (ACO) [11], grasshopper optimization algorithm (GOA) [27], self-adaptive parameter optimization (SAPO) [8], electromagnetism-like optimization (EMO) algorithm [16], and glowworm swarm optimization (GSO) algorithms [29]. Other interesting approaches that successfully segment digital images are based on modern MA, such as the Coronavirus Optimization Algorithm combined with the Harris Hawks Optimizer [18], the improved modified Differential Evolution (MDE) [35], the directional mutation and crossover boosted ant colony optimization (XMACO) [34], the Harris Hawks Optimizer (HHO) [36], the Mutated Electromagnetic Field Optimization (MEFO) [4], and the Altruistic Harris Hawks Optimizer [5]. These methods show that the use of MA for thresholding also benefits fields such as medicine, where the proper analysis of images is crucial for a good diagnosis.

This paper presents a methodology for image segmentation using the Arithmetic Optimization Algorithm (AOA) [3] and the Hybrid Dragonfly Algorithm (DA) and Firefly Algorithm (FA) (HDAFA) with a modified Otsu’s function. The results of the modified Otsu’s function are compared with the standard Otsu’s and Kapur’s methods. The suggested approach combines the local properties captured by Otsu with the entropy of Kapur. In this context, the maximum variance value from multiple Otsu classes is coupled with the maximum total entropy computed from Kapur’s entropy method. The novelty of the proposed hybrid objective function is the combination of the between-class variance with the entropy. Since entropy measures the uncertainty of a set of information, the hybrid function is expected to improve the segmentation by selecting thresholds that reduce such uncertainties while maximizing the entropy and the variance at the same time.

On the other hand, due to its simplicity and ease of application, AOA has been applied to various real-time problems, such as extending the lifetime of RFID networks, photovoltaic systems, and range-based wireless node localization [41]. In this paper, the application of AOA is extended to multi-level digital image segmentation by thresholding. Although randomization and static swarm behavior give AOA a strong global search capability, its local search capabilities are limited, which can lead to entrapment in local optima. The iteration-level hybridization method guarantees exploration capability in the initial phases and exploitation capability in the subsequent phases, and ensures an enhanced accuracy of the global optimum [41].

AOA uses common arithmetic operations, namely Division (D), Addition (A), Multiplication (M), and Subtraction (S), which are modeled and applied to perform optimization in a wide variety of search spaces. Population-based algorithms (PBA) [7] commonly start their improvement processes from several randomly selected candidate solutions. Each candidate solution is enhanced incrementally by a set of optimization rules and evaluated repeatedly by a particular objective function, which is the basis of optimization techniques. Because a PBA searches stochastically for the optimum, reaching it in a single run is not guaranteed. However, a large set of candidate solutions and a sufficient number of iterations improve the chance of finding the global optimum of the problem. The major contributions of this paper may be summarized as:

- Propose a modified Otsu method and use it as an objective function for image segmentation.
- The local properties of Otsu’s between-class variance, like the maximum variance value achieved from multiple Otsu classes, are combined with maximum total entropy calculated from the Kapur method.
- The effectiveness and quality of solutions generated by modified Otsu’s are evaluated using the arithmetic optimization algorithm (AOA) and the hybrid dragonfly algorithm and firefly algorithm (HDAFA).
- The performance of the modified Otsu has been investigated on images widely used in the image processing literature and compared with a basic Otsu method.
- Quantitative analysis is carried out using different threshold values, PSNR, mean, STD, and number of iterations.

The structure of this manuscript is as follows. Section 2 presents the basic concepts of image segmentation and the modified Otsu approach. Section 3 covers AOA, including its motivation and its exploration and exploitation stages. Section 4 provides the performance metrics, performance evaluation and comparison, experimental results, and the related comparative analysis against other state-of-the-art methods. Finally, the paper is concluded in Section 5.

2 Image segmentation

Each local region of a picture has a distinct threshold based on its features. Histogram-based thresholding is the most popular approach for segmenting digital pictures. The automated separation of objects from their context is one of the most active and intriguing fields of image processing and pattern recognition [37]. It can also provide initial estimates or pre-processing for more complicated stages.

Depending on the number of threshold values needed to segment the image, thresholding is classified as bi-level (BT) or multi-level (MT), as defined by Eq. 1. Bi-level thresholding divides an image into two distinct regions, while MT creates multiple regions in an image.

For a given image b(x, y) of size (m × n) with L intensity levels, a1 and a2 are two different classes determined by the threshold value T. In bi-level thresholding, extra care should be taken to find a suitable threshold value (T). To improve segmentation results, MT is used in many cases [7]. However, the traditional techniques are computationally costly when many thresholds are necessary, owing to the multimodality of the histogram. This is a difficult problem; thus, scholars have proposed several ways of finding the best gray-level thresholds [24].
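The class-assignment rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `apply_thresholds` is hypothetical, and the boundary convention (a pixel equal to a threshold falls in the lower class, matching the sums that run up to T in the next subsection) is an assumption.

```python
from bisect import bisect_left


def apply_thresholds(pixels, thresholds):
    """Return the class index (0..k) of every gray level for k thresholds.

    Assumed convention: a pixel v belongs to class c when
    thresholds[c-1] < v <= thresholds[c]; pixels above the last
    threshold fall in class k.
    """
    ts = sorted(thresholds)
    # bisect_left counts how many thresholds are strictly below v.
    return [bisect_left(ts, v) for v in pixels]


# Bi-level: one threshold T = 100 splits the pixels into classes a1 and a2.
print(apply_thresholds([0, 100, 101, 255], [100]))
# Multi-level: two thresholds create three regions.
print(apply_thresholds([10, 80, 81, 200], [80, 160]))
```

With one threshold the function reproduces bi-level thresholding; passing more thresholds gives the multi-level case without any change to the code.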

2.1 Otsu’s between class variance

The between-class variance proposed by Otsu is a nonparametric automatic method for image segmentation that employs threshold values [19]. In Otsu’s method, the variance between classes is calculated for each candidate threshold, and the thresholds that maximize it are taken as optimal. a1, a2, …, an are the different classes of the image, delimited by different threshold values. To employ Otsu’s between-class variance, it is necessary to compute the probability distribution pi, which is given as:

where ni is the number of pixels having grey level i, and N is the total number of pixels. The average grey level of an image I is given by

Where, \( {\omega}_a={\sum}_{i=0}^T{p}_i,\kern0.5em {\omega}_b={\sum}_{i=T+1}^{L-1}{p}_i \)

The average levels μa and μb of the two classes a1 and a2 are as follows:

If the mean intensity of the image is given by μI then

Between class variance \( {\sigma}_B^2 \) needs to be maximized to find the optimal thresholding value using the following equation:
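As a point of reference, the standard bi-level form of Otsu’s criterion can be written with the symbols used above as follows. This is the usual textbook formulation, given here as a sketch; it is assumed, not confirmed, that it matches the numbered equations of this section term by term.

\[
p_i = \frac{n_i}{N}, \qquad \sum_{i=0}^{L-1} p_i = 1
\]

\[
\mu_a = \sum_{i=0}^{T} \frac{i\,p_i}{\omega_a}, \qquad \mu_b = \sum_{i=T+1}^{L-1} \frac{i\,p_i}{\omega_b}, \qquad \mu_I = \omega_a\mu_a + \omega_b\mu_b
\]

\[
\sigma_B^2 = \omega_a\left(\mu_a - \mu_I\right)^2 + \omega_b\left(\mu_b - \mu_I\right)^2
\]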

Bi-level thresholding generates two separate regions based on a single threshold value. Otsu’s method may be further extended from bi-level to multi-level thresholding, which generates several regions [a1, a2, …, ai, …, an] based on the following rules:

where n defines the number of classes [a1, a2, …, ai, …, an], with i denoting a certain class, for a given image b(x, y) having L gray levels in the range [0, L − 1]. The extended objective f(k) is defined as the between-class variance.

Considering the above classes, the Otsu method can be easily extended to multi-level thresholding with M − 1 threshold levels, where ωi is the zeroth-order cumulative moment of the ith class and μT is the mean intensity of the whole image. In 2001, Liao proposed a simpler, less complex alternative given by the following equation for k thresholds [28].

In Eq. 11, μa and μb are the average levels of classes a1 and a2, as already explained. If the between-class variance has a maximum value, then the within-class variance will always have a minimum value. This methodology is described in Eq. 12.

where fOTSU represents the fitness function, and maximizing it yields the optimal intensity threshold levels. The fitness function considering i multi-level threshold values is given by Eq. 13.
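A minimal Python sketch of the multi-level between-class variance is given below. It assumes the standard definition (the sum over classes of ωi(μi − μT)²) and uses a plain list as the histogram; the function names are hypothetical, and the exhaustive search in `best_single_threshold` is included only to show why metaheuristics become attractive when the number of thresholds grows.

```python
def between_class_variance(hist, thresholds):
    """Sum over classes of w_i * (mu_i - mu_T)^2 for a gray-level histogram."""
    total = sum(hist)
    probs = [h / total for h in hist]
    mu_t = sum(i * p for i, p in enumerate(probs))  # mean of the whole image
    # Class c covers the gray levels edges[c] + 1 .. edges[c + 1] (inclusive).
    edges = [-1] + sorted(thresholds) + [len(hist) - 1]
    variance = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        levels = range(lo + 1, hi + 1)
        w = sum(probs[i] for i in levels)  # zeroth-order cumulative moment
        if w == 0:
            continue  # an empty class contributes nothing
        mu = sum(i * probs[i] for i in levels) / w  # class mean
        variance += w * (mu - mu_t) ** 2
    return variance


def best_single_threshold(hist):
    """Exhaustive bi-level search; the cost grows quickly with more thresholds."""
    return max(range(len(hist) - 1),
               key=lambda t: between_class_variance(hist, [t]))


hist = [4, 4, 0, 0, 4, 4]  # toy bimodal histogram with six gray levels
print(best_single_threshold(hist), between_class_variance(hist, [1]))
```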

2.2 Kapur’s entropy

The basic concept underlying Kapur’s entropy approach is Shannon entropy. Shannon proposed an entropy function based on the idea that the probability of occurrence is inversely proportional to information [20]. The Shannon entropy for a system H is defined in Eq. 14.

where H is the Shannon entropy, Pi is the probability of the ith gray level, and n is the total number of gray levels. Kapur’s entropy is computed from the probability distribution of the gray-level histogram, and the optimal threshold is the value at which Kapur’s entropy is maximal. To define it, let us consider pa, pb, …, ps as the probability distribution of the gray levels. Two distributions, one for the object and one for the background, are derived: the first over the discrete values from a to m and the second over the values from m + 1 to n. They are denoted by A and B and are defined as follows:

The following equations define the corresponding entropies for the probability distributions A and B:

To find the optimal threshold value, the sum of these two entropies must attain its maximum value; the final expression is defined in Eq. 19.

Notice that Max(φs) gives the optimal threshold value, with well-separated object and background details.

Multi-level thresholding using Kapur’s entropy

Entropy-based segmentation is most beneficial when multi-level segmentation is performed. Kapur’s entropy method can be extended from bi-level thresholding to MT. The entropy of an image is a measure of its compactness and indicates how well the different classes can be separated. An image may be divided into different segments using multiple threshold values, as explained in the next equations.

where pi is the probability that event i occurs, H denotes the entropy, m represents the dimension, and pa, pb, …, pm represent the gray-level probabilities of the different areas. The optimal threshold value is chosen analogously, such that the objective function is maximized [43]. Eq. 21 defines Kapur’s objective function.

To extend the above expression for multi-level thresholding Kapur’s objective function is defined as follows:

where the different thresholds are represented by TH = [th1, th2, th3, …, thk − 1] and i corresponds to a specific class.
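The multi-level Kapur objective can be sketched as the sum of the Shannon entropies of the classes, each computed on the class-normalized probabilities. The sketch below assumes natural logarithms and the same inclusive class boundaries as in the Otsu case; the function name is hypothetical.

```python
import math


def kapur_entropy(hist, thresholds):
    """Sum of the entropies of the classes delimited by the thresholds."""
    total = sum(hist)
    probs = [h / total for h in hist]
    # Class c covers the gray levels edges[c] + 1 .. edges[c + 1] (inclusive).
    edges = [-1] + sorted(thresholds) + [len(hist) - 1]
    entropy_sum = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        class_probs = probs[lo + 1:hi + 1]
        w = sum(class_probs)  # probability mass of the class
        if w == 0:
            continue  # an empty class has no entropy
        for p in class_probs:
            if p > 0:  # 0 * log(0) is taken as 0
                entropy_sum -= (p / w) * math.log(p / w)
    return entropy_sum


# Four equally likely gray levels split in the middle: each class has entropy ln 2.
print(kapur_entropy([1, 1, 1, 1], [1]))
```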

2.3 A modified Otsu’s between class variance

In Kapur’s entropy, if H(A) and H(B) are identical or similar, i.e., the variance between the classes is small, then both classes are effectively the same, as given below:

In general terms, the value of ∅k will be maximum at \( s=\frac{1}{2}n \) for a symmetrical distribution, where n is the total number of grey levels. However, this property is not useful for images having multiple objects superimposed on the same background. If an image has two different objects with the same histogram level, it can be segmented using the proposed multi-level thresholding method [6, 28]. In the proposed methodology, the local properties captured by Otsu’s variance are combined with Kapur’s entropy: the maximum variance value obtained from the different classes of Otsu is combined with the maximum overall entropy calculated from Kapur’s entropy. Eq. 25 presents the proposed modification of Otsu, in which Kapur’s entropy function is used as a weight function for Otsu.

Both Otsu’s and Kapur’s objective functions pose image segmentation as an optimization problem. Otsu maximizes the between-class variance, and Kapur maximizes the posterior entropy. As discussed in subsequent sections, the experimental results indicate that the proposed modified function can produce better segmentation results.
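One way to read "Kapur’s entropy used as a weight function for Otsu" is as a product of the two criteria. The sketch below makes exactly that assumption; the exact form of Eq. 25 is not reproduced here, so the combination rule, the function names, and the use of natural logarithms are all illustrative rather than the authors’ definition.

```python
import math


def class_stats(hist, thresholds):
    """Return a (weight, mean, entropy) tuple for every non-empty class."""
    total = sum(hist)
    probs = [h / total for h in hist]
    edges = [-1] + sorted(thresholds) + [len(hist) - 1]
    stats = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        levels = range(lo + 1, hi + 1)
        w = sum(probs[i] for i in levels)
        if w == 0:
            continue
        mu = sum(i * probs[i] for i in levels) / w
        h = -sum((probs[i] / w) * math.log(probs[i] / w)
                 for i in levels if probs[i] > 0)
        stats.append((w, mu, h))
    return stats


def modified_otsu(hist, thresholds):
    """ASSUMED combination: total Kapur entropy times between-class variance."""
    stats = class_stats(hist, thresholds)
    mu_t = sum(w * mu for w, mu, _ in stats)  # global mean intensity
    variance = sum(w * (mu - mu_t) ** 2 for w, mu, _ in stats)
    entropy = sum(h for _, _, h in stats)
    return entropy * variance  # hypothetical weighting, not taken from Eq. 25


print(modified_otsu([1, 1, 1, 1], [1]))
```

Under this reading, a threshold set scores highly only when the classes are both well separated (large variance) and informative (large entropy), which is the behaviour the section describes.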

3 Optimizing the proposed improved Otsu’s method

This section explains how different metaheuristic algorithms can be used to optimize the modified Otsu’s method proposed in the previous section. Two algorithms are discussed; they are used to generate the optimum multi-level threshold values using various objective functions, namely the modified Otsu, the standard Otsu, and Kapur’s method. In essence, the thresholding techniques are treated as optimization problems. To exemplify the use of metaheuristics to search for the best configuration of thresholds, the Arithmetic Optimization Algorithm (AOA) [3] and the Hybrid Dragonfly Algorithm and Firefly Algorithm (HDAFA) [39] are used. The basic concepts of these optimization techniques are explained in this section. Finally, their implementation for image thresholding is also described.

3.1 AOA to optimize the modified Otsu’s function

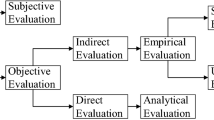

Considering the variations among population-based meta-heuristic methods, the optimization process comprises two phases: exploration and exploitation. The former is the extensive coverage of the search space by the search agents in order to avoid local solutions. The latter is the improvement of the accuracy of the solutions obtained during the exploration phase, as shown in Fig. 1.

AOA search phases [3]

The AOA algorithm searches for the position in the search space (the optimum threshold values) of the multi-level thresholding problem that maximizes the objective functions considered in Eqs. 13, 22, and 25. The input to this method is an image, and the output is the set of optimum thresholds. As discussed in Eq. 25, ψi represents the modified Otsu fitness function, and maximizing it yields the optimal intensity threshold levels.

The following equations give the fitness for multi-level thresholding.

The optimization method starts with a set of candidate solutions denoted by A, as in Eq. 28, where A denotes the whole population and N is the number of elements in the population. The candidate solutions are created randomly, and the best one found in each iteration is taken as the optimum threshold value so far. For a grayscale image with pixel values between 0 and 255, a threshold value lies within the same limits. Therefore, the lower and upper limits for the matrix element a1, j are 0 and 255, respectively. Before AOA starts working on the segmentation problem, it must select the search phase (exploration or exploitation). For this purpose, the Math Optimizer Accelerated (MOA) coefficient is defined in Eq. 29.

where MOA(Citer) is the function value at the current iteration, Miter is the maximum number of iterations, Max and Min are the maximum and minimum values of the accelerated function, and Citer is the current iteration (between 1 and Miter).
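In the usual AOA formulation, MOA grows linearly from Min to Max over the iterations. The sketch below assumes that linear form; the default values 0.2 and 1.0 are illustrative assumptions, not values stated in this section.

```python
def moa(c_iter, m_iter, moa_min=0.2, moa_max=1.0):
    """Math Optimizer Accelerated coefficient (assumed linear schedule).

    c_iter  : current iteration (1 .. m_iter)
    m_iter  : maximum number of iterations
    moa_min : assumed minimum of the accelerated function
    moa_max : assumed maximum of the accelerated function
    """
    return moa_min + c_iter * ((moa_max - moa_min) / m_iter)


# A random number r1 is compared with MOA: r1 > MOA selects exploration,
# otherwise exploitation, so exploitation dominates late in the run.
for it in (1, 75, 150):
    print(it, round(moa(it, 150), 4))
```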

Exploration stage

The exploratory behaviour of AOA is discussed here. According to the arithmetic operators, calculations using the Division (D) or Multiplication (M) operator produce highly dispersed values, which suits the exploration search mechanism. However, unlike the S and A operators, the D and M operators cannot easily approach the target because of this high dispersion. The AOA exploration operators explore the search space over several regions at random, based on the modified Otsu values, and seek a better solution (threshold values) using two main search strategies, the M and D strategies, as shown in Eq. 30.

where ai(Citer + 1) is the ith solution in the next iteration, ai, j(Citer + 1) is the jth position of that solution, and μ is a control parameter that must have a value lower than or equal to 0.5. LBj and UBj are the lower and upper bound limits of the jth position, ε is a small positive number that prevents division by zero, and bestaj is the jth position of the best solution (threshold value) obtained so far. From Eq. 28, the Math Optimizer Probability coefficient (MOP) is computed as follows:

where MOP(Citer) is the function value at the current iteration, Citer corresponds to the current iteration, and Miter is the maximum number of iterations.
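The exploration step can be sketched as follows, assuming the standard AOA update in which the best position is divided or multiplied by MOP-scaled terms. The sensitivity parameter `alpha`, the value of `eps`, and the final clamping to the bounds are assumptions introduced for the illustration.

```python
import random


def mop(c_iter, m_iter, alpha=5.0):
    """Math Optimizer Probability; alpha is an assumed sensitivity parameter."""
    return 1.0 - (c_iter ** (1.0 / alpha)) / (m_iter ** (1.0 / alpha))


def explore(best, lb, ub, c_iter, m_iter, mu=0.5, eps=1e-12, rng=random):
    """One exploration update of a threshold vector (sketch, not Eq. 30 verbatim)."""
    coeff = mop(c_iter, m_iter)
    new_position = []
    for b, lo, hi in zip(best, lb, ub):
        scale = (hi - lo) * mu + lo
        if rng.random() < 0.5:
            value = b / (coeff + eps) * scale  # Division (D) operator
        else:
            value = b * coeff * scale          # Multiplication (M) operator
        # Clamping keeps thresholds inside [lo, hi]; this step is an assumption.
        new_position.append(min(max(value, lo), hi))
    return new_position


print(explore([100, 180], [0, 0], [255, 255], c_iter=10, m_iter=150,
              rng=random.Random(0)))
```

Because D and M scale the best position by large factors, most raw values land far from it, which is the high dispersion the text attributes to these operators.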

Exploitation stage

The exploitation behaviour of AOA relies on the Addition (A) and Subtraction (S) operators, which produce high-density results. The AOA exploitation operators search deeply within several dense regions of the search space and seek a better threshold value using two main search strategies, the A and S strategies, as shown in Eq. 32.
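A matching sketch of the exploitation step is shown below. It assumes the standard AOA form in which an MOP-scaled step is subtracted from or added to the best position; `mop_value` is passed in directly, and the clamping to the bounds is again an assumption.

```python
import random


def exploit(best, lb, ub, mop_value, mu=0.5, rng=random):
    """One exploitation update of a threshold vector (sketch, not Eq. 32 verbatim)."""
    new_position = []
    for b, lo, hi in zip(best, lb, ub):
        step = mop_value * ((hi - lo) * mu + lo)
        if rng.random() < 0.5:
            value = b - step  # Subtraction (S) operator
        else:
            value = b + step  # Addition (A) operator
        # Clamping to the bounds is an assumption of this sketch.
        new_position.append(min(max(value, lo), hi))
    return new_position


# With a small MOP the candidate stays close to the best thresholds found so far.
print(exploit([100.0, 180.0], [0, 0], [255, 255], mop_value=0.05,
              rng=random.Random(1)))
```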

The description of AOA is presented in Algorithm 1, and the flow chart of the proposed technique implemented using AOA is shown in Fig. 2.

3.2 HDAFA to optimize the modified Otsu function

Dragonfly (DF) behavior follows the concepts of separation, alignment, cohesion, distraction from enemies, and attraction to food. Each dragonfly in the swarm represents a candidate solution in the search space. The swarm motion of the dragonflies is determined by five separate operators: separation, alignment, cohesion, attraction towards food, and distraction from enemy sources. Separation (Si) pertains to static collision avoidance between an individual and the other individuals in its neighbourhood. Alignment (Ai) is the matching of an individual’s velocity to that of the other individuals in the neighbourhood. Cohesion (Ci) is the tendency of individuals towards the centre of mass of the neighbourhood. Proper weights are assigned to each operator and adapted so that the DF converges to the best solution. The neighbourhood range of the DF also increases as the optimization progresses. The mathematical formulation of DA is explained below. Considering a population of N dragonflies, Eq. 33 gives the location of the ith dragonfly [39].

where i = 1, 2, 3, …, N, \( {x}_i^d \) is the position of the ith dragonfly in the dth dimension of the search space, and N is the number of search agents. The objective function is evaluated from the starting positions, which are initialized randomly between the lower and upper bounds of the variables. The weights for separation (s), alignment (a), cohesion (c), food (f), and enemy (e) are randomly initialized for every dragonfly. The separation, alignment, and cohesion factors are computed with Eqs. 34–36.

where Xi refers to the position and Vi to the velocity of an individual, X refers to the position of the current individual, and N refers to the number of neighbouring individuals. The food source attraction Fi and the enemy distraction Ei are computed with Eqs. 37 and 38, respectively.

Here X represents the position of the current individual, X+ denotes the food source, and X− denotes the enemy source. The neighbourhood is determined from the Euclidean distance between all the dragonflies, from which N neighbours are selected. The distance rij is calculated by Eq. 39.
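The five swarm factors can be sketched as below, assuming the standard dragonfly algorithm definitions (separation as the negated sum of position differences, alignment and cohesion as neighbourhood averages). The function name and the list-based data layout are hypothetical, and at least one neighbour is assumed.

```python
def swarm_factors(x, neighbour_pos, neighbour_vel, food, enemy):
    """Return the five DA factors for one dragonfly at position x (sketch)."""
    n = len(neighbour_pos)  # number of neighbouring individuals, n >= 1
    dims = range(len(x))
    return {
        # S: steer away from each neighbour.
        "separation": [-sum(x[d] - p[d] for p in neighbour_pos) for d in dims],
        # A: match the average velocity of the neighbours.
        "alignment": [sum(v[d] for v in neighbour_vel) / n for d in dims],
        # C: move towards the centre of mass of the neighbourhood.
        "cohesion": [sum(p[d] for p in neighbour_pos) / n - x[d] for d in dims],
        # F: attraction towards the food source X+.
        "food": [food[d] - x[d] for d in dims],
        # E: distraction from the enemy source X-.
        "enemy": [enemy[d] + x[d] for d in dims],
    }


factors = swarm_factors(x=[1.0], neighbour_pos=[[2.0], [4.0]],
                        neighbour_vel=[[1.0], [3.0]], food=[5.0], enemy=[0.0])
print(factors)
```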

If there is at least one DF in the neighbourhood, the velocity of the DF is updated following the velocity equation of Particle Swarm Optimization (PSO), Eq. 40 [23]. The location of the DF is updated using Eq. 41, which is comparable to PSO’s position equation.

If there is no DF within the neighbourhood radius, the location of the DF is updated using a Lévy flight [9], as described in Eq. 42. This improves the randomness, chaotic behaviour, and global search capacity of the DF.

This methodology fuses the Dragonfly algorithm with the Firefly algorithm (FA). In FA, fireflies emit flashes of light that attract their mates. The objective function is then evaluated based on the current position and speed. The position update procedure continues until the end condition is fulfilled.

The formulation of the attractiveness and the variation of the light intensity are the two major issues in FA. The luminosity of each firefly is determined by the landscape of the encoded cost function, or simply by the fitness (objective function) value. Here the problem is one of maximization, where the objective function is maximized to find the optimal solution. The aim is therefore to increase the light intensity emitted by the fireflies; the perceived light intensity decreases as the distance increases. Eq. 43 gives the light intensity as a function of distance:

where I is the light intensity at distance r from the firefly, I0 is the initial light intensity at r = 0, and γ is the light absorption coefficient, which governs the variation of the attractiveness, the speed of convergence, and the general behaviour of FA. γ typically varies from 0.1 to 10. As the attractiveness of a firefly is proportional to the light intensity perceived by adjacent fireflies, the attractiveness at a Cartesian distance r from the firefly may be expressed as follows:
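In the standard firefly formulation, which is assumed here to be the one intended, the light intensity and the attractiveness both decay exponentially with the squared distance:

\[
I(r) = I_0\, e^{-\gamma r^{2}}, \qquad \beta(r) = \beta_0\, e^{-\gamma r^{2}}
\]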

Here, β0 is the attractiveness at r = 0. The light intensity I and the attractiveness β are closely related: the intensity is an absolute measure of the light emitted, whereas the attractiveness is a relative measure of the light as perceived and judged by the other fireflies. Any two fireflies i and j, located at xi and xj, respectively, are separated by the Cartesian distance defined in Eq. 47.

Alternatively, firefly i can be attracted to another brighter firefly j as follows:

In Eq. 46, t is the iteration number. The first term in Eq. 46 is attributable to the attraction. The term α(Nrand − 0.5) is the randomization term, which widens the search space; α ∈ [0, 1] is the randomization coefficient, and Nrand is a vector of random numbers uniformly distributed in [0, 1]. The next move of firefly i is given by Eq. 47:
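The movement of a firefly towards a brighter one can be sketched as follows, assuming the standard FA update (attraction that decays with the squared distance plus a uniform random perturbation). The default parameter values are illustrative assumptions.

```python
import math
import random


def firefly_move(x_i, x_j, beta0=1.0, gamma=1.0, alpha=0.2, rng=random):
    """Move firefly i towards the brighter firefly j (standard FA form, sketch)."""
    # Squared Cartesian distance between the two fireflies.
    r2 = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    # Attractiveness decays exponentially with the squared distance.
    beta = beta0 * math.exp(-gamma * r2)
    # Attraction term plus the randomization term alpha * (rand - 0.5).
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(x_i, x_j)]


# With gamma = 0 and alpha = 0 the firefly jumps straight onto the brighter one.
print(firefly_move([0.0, 0.0], [2.0, 4.0], gamma=0.0, alpha=0.0))
```

A larger γ shrinks the attraction quickly with distance, so distant fireflies barely influence each other and the randomization term dominates.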

The initialization phase is followed by a sequence of iterations, which continue until the optimization is complete. Any optimization algorithm must balance exploring and exploiting the search space to arrive at the global optimum. A global search of the search space is called exploration. Exploitation, or intensification, is a local search around the best available solution. An imbalance between exploration and exploitation makes an algorithm inefficient and increases the chance of being trapped in local optima [12].

Dragonflies traverse the search space via Lévy flights, which increases the diversity of the candidate solutions and boosts the algorithm’s exploration capabilities. These factors may be adjusted with very few parameters, and adaptive tuning helps to balance the local and global search capabilities of the swarming algorithm.

The conventional DA method has several advantages; however, it also has some downsides, such as slow convergence. FA also appears to be somewhat limited in its convergence rate. As a result, both ideas are blended so that optimization problems are solved with better convergence, with the DA algorithm influencing FA. Eq. 40 will be used if the dragonfly does not have any neighbours. Algorithm HDAFA evaluates the FA position update given in Eq. 45. Since both DA and FA have poor convergence characteristics on their own, this change helps to overcome the disadvantage of slow convergence (Fig. 3).

4 Experimental results

4.1 Experimental setup

Ten widely used benchmark images (Bear, Building, Deer, Penguin, Dome, Gentleman, Horse, Tiger, Sea-star, and White bird) are selected to verify the presented approach. All the selected images are shown in Figs. 4 and 5. It can be seen from these figures that the histograms of the images have distinct patterns and shapes. Images with a wide variety of histograms are selected to test the presented approach under diverse conditions. The proposed algorithms are implemented in MATLAB 2021 on a machine with an Intel(R) Core(TM) i7-3520M CPU @ 2.90 GHz and 16 GB of RAM. The results produced by optimization algorithms vary from run to run because they are stochastic methods whose parameters depend on random numbers. To verify the consistency of the presented techniques, all the algorithms are tested 50 times for different numbers of thresholds Th = 2, 3, 4, and 5.

Peak signal-to-noise ratio (PSNR) indicates the amount of noise present in the resultant image as compared to the original image [38]. The PSNR between original or ground truth IG and the segmented image Ith is calculated as mentioned in Eq. 48.

where the image size is M × N. A higher PSNR value is desirable, since it indicates that less noise has been added during the processing [22].
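A minimal PSNR computation is sketched below for 8-bit images stored as flat lists of gray levels. It assumes the common definition based on the root mean squared error and a peak value of 255; both are assumptions about the form of Eq. 48.

```python
import math


def psnr(original, segmented, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    n = len(original)  # M x N pixels, flattened into one list
    mse = sum((a - b) ** 2 for a, b in zip(original, segmented)) / n
    if mse == 0:
        return math.inf  # identical images: no noise at all
    return 20.0 * math.log10(peak / math.sqrt(mse))


original = [10, 50, 120, 200]
segmented = [30, 30, 160, 160]  # each pixel replaced by its class level
print(round(psnr(original, segmented), 2))
```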

Another performance metric is the standard deviation (STD), whose ideal value is zero. It measures how far the results deviate from their mean value and is given by Eq. 50 [42]. A lower STD denotes higher stability, whereas a higher value signifies an unstable algorithm.

Here, k represents the maximum number of iterations, which is set to 150 in the presented approach, σi is the value of the objective function for the ith run, and μ is the mean value.
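As an illustration of how the mean and STD can be obtained from repeated runs, the sketch below takes the final objective value of each run and computes the population standard deviation around their mean. The normalization (dividing by the number of collected values) and the function name are assumptions, since the exact form of Eq. 50 is not reproduced here.

```python
import numpy as np

def run_statistics(objective_values):
    """Mean and standard deviation of objective values from repeated runs.

    Each entry is assumed to be the best objective value reached in one
    independent run of the optimizer.
    """
    values = np.asarray(objective_values, dtype=np.float64)
    mu = values.mean()
    # Population form: square root of the mean squared deviation from mu.
    std = np.sqrt(np.mean((values - mu) ** 2))
    return mu, std
```

An STD of zero means every run reached exactly the same objective value, which is the ideal case described above.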

4.2 Performance evaluation and comparison

To demonstrate that the modified Otsu’s method is an interesting alternative for multi-level thresholding, the proposed algorithm is compared with other similar implementations. For each image, the PSNR, STD, and the mean of the objective function values are computed. The entire test is performed using Otsu’s, Kapur’s, and modified Otsu’s objective functions. Two experiments are conducted to analyze the results of the proposed approach. The first compares modified Otsu’s, Otsu’s, and Kapur’s functions as criteria using the AOA technique. The second uses HDAFA as the optimization method with modified Otsu’s, Otsu’s, and Kapur’s as objective functions. Several performance measures, namely PSNR, mean, standard deviation, and the number of iterations, are selected to assess performance and computational effort. All algorithms are run 35 times on each benchmark image to ensure reliable results.
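For reference, the two baseline criteria can be sketched directly from a gray-level histogram. The code below implements the standard Otsu between-class variance and the standard Kapur entropy for an arbitrary set of thresholds; it does not implement the proposed modified Otsu function. The function names and the convention that a pixel with intensity below the first threshold belongs to the first class are assumptions.

```python
import numpy as np

def _normalized(hist):
    p = np.asarray(hist, dtype=np.float64)
    return p / p.sum()

def _class_edges(thresholds, n_levels):
    # Class k covers intensities in [edges[k], edges[k + 1]).
    return [0, *sorted(int(t) for t in thresholds), n_levels]

def otsu_between_class_variance(hist, thresholds):
    """Sum over classes of w_k * (mu_k - mu_total)**2 (to be maximized)."""
    p = _normalized(hist)
    levels = np.arange(p.size)
    mu_total = np.sum(levels * p)
    edges = _class_edges(thresholds, p.size)
    variance = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        w = p[lo:hi].sum()
        if w > 0:  # empty classes contribute nothing
            mu = np.sum(levels[lo:hi] * p[lo:hi]) / w
            variance += w * (mu - mu_total) ** 2
    return variance

def kapur_entropy(hist, thresholds):
    """Sum of the Shannon entropies of the per-class distributions."""
    p = _normalized(hist)
    edges = _class_edges(thresholds, p.size)
    total = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        w = p[lo:hi].sum()
        if w > 0:
            q = p[lo:hi] / w
            q = q[q > 0]  # 0 * log(0) is taken as 0
            total -= np.sum(q * np.log(q))
    return total
```

A metaheuristic such as AOA or HDAFA would treat the threshold vector as a candidate solution and either function as the fitness to maximize.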

Tables 1, 2, and 3 show the results of applying AOA with Otsu’s, Kapur’s, and modified Otsu’s methods as the objective function to the 10 selected benchmark images, for four different numbers of thresholds, Th = 2, 3, 4, and 5. The tables provide the segmented images, the histograms with the threshold values, and convergence graphs showing the number of iterations required to achieve stabilization. The segmented images show that the outcome is better with four and five thresholds; however, if the segmentation task does not need to be extremely accurate, three thresholds may be selected. The results show that, in most cases, modified Otsu’s as the objective function stabilizes or converges in under 50 iterations. Tables 1, 2, and 3 also gather the segmented images from each technique so that they can be contrasted visually for qualitative analysis. Overall, the performance of the algorithm using modified Otsu’s as the objective function for thresholding is closer to a human expert’s assessment of the findings in Table 6.
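Once a set of thresholds has been found, a segmented image can be produced along the following lines. This sketch labels each pixel by the interval its intensity falls into and then paints every class with its mean gray level; the mean-level rendering and the function name are assumptions, since the paper does not state here how its segmented images are rendered.

```python
import numpy as np

def apply_thresholds(image, thresholds):
    """Return (labels, segmented) for a grayscale image.

    labels[i, j] = k means thresholds[k - 1] <= pixel < thresholds[k],
    with the first and last classes open towards 0 and the maximum level.
    """
    img = np.asarray(image)
    t = np.sort(np.asarray(thresholds))
    labels = np.digitize(img, t)
    segmented = np.zeros(img.shape, dtype=np.float64)
    for k in range(t.size + 1):
        mask = labels == k
        if mask.any():
            # Represent the whole class by its mean intensity.
            segmented[mask] = img[mask].mean()
    return labels, segmented
```

With Th thresholds this yields Th + 1 classes, so Th = 2 produces three gray levels in the output and Th = 5 produces six.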

Tables 4, 5, 6, 7, 8, and 9 show the threshold values, PSNR, mean, STD, and iterations required to achieve convergence using Otsu’s, Kapur’s entropy, and modified Otsu’s methods with the AOA and HDAFA algorithms for the 10 test images. These tables support significant conclusions concerning the PSNR and mean values of modified Otsu’s method relative to the other objective functions. It can be noticed that many approaches find the same threshold values, especially when segmenting with a small number of thresholds. The goal of image thresholding is to provide high-quality images with a specific number of thresholds. As mentioned in the previous subsection, PSNR is a quality statistic frequently used to evaluate the quality of a processed signal relative to the original, and it has been extended to multi-dimensional signals, in this case images. In Tables 4, 5, 6, 7, 8, and 9, a higher mean PSNR value denotes better image segmentation for the threshold values of a specific algorithm.

In contrast to the mean value, a lower STD value is preferred because it indicates less spread in the outcomes produced by each approach. The STD value often rises as the number of thresholds increases. Most sample images demonstrate that modified Otsu’s method outperforms Otsu’s and Kapur’s entropy functions. During experimentation, it is found that the result for two-level thresholding is always better for all source images in AOA and HDAFA. Across the entire dataset, the modified Otsu’s method achieved the best metric values in the majority of cases.

The number of iterations in Table 10 provides evidence that AOA and HDAFA using modified Otsu’s stabilize in less time. Modified Otsu’s with both AOA and HDAFA optimization requires a low number of iterations, depending on the dimension of the problem. Under such circumstances, the computational cost of modified Otsu’s is lower than that of Otsu’s and Kapur’s entropy for multi-level thresholding problems. Out of the total of forty experiments, modified Otsu’s using AOA and HDAFA optimization achieved the best score twenty-four times. The evaluation of the metric scores therefore indicates that modified Otsu’s method outperforms the other objective functions in terms of the number of iterations required to reach the optimum value.
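One simple way to read an iteration count off a convergence curve is sketched below. It reports the first iteration after which the best-so-far objective value stays within a small tolerance of its final value. This stopping rule, the tolerance, and the function name are assumptions made for illustration; the paper does not specify here how its iteration counts were determined.

```python
import numpy as np

def iterations_to_converge(best_so_far, tol=1e-6):
    """First 1-based iteration from which the curve stays within `tol`
    of its final value.

    best_so_far[i] is assumed to be the best objective value found up to
    and including iteration i + 1.
    """
    curve = np.asarray(best_so_far, dtype=np.float64)
    # Indices of iterations that are still outside the tolerance band.
    outside = np.flatnonzero(np.abs(curve - curve[-1]) > tol)
    if outside.size == 0:
        return 1  # already settled at the first iteration
    # The iteration after the last outlier, converted to 1-based counting.
    return int(outside[-1]) + 2
```

For a run capped at 150 iterations, a returned value below 50 would correspond to the behaviour reported for modified Otsu’s in most cases.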

PSNR is a measure of peak error and is used to verify the similarity between the original and the segmented image. To compute the PSNR, the Root Mean Square Error (RMSE) must be evaluated pixel by pixel. The PSNR results in Table 11 provide evidence that the outcome is better with the 4th and the 5th level of thresholding. Similarly, a comparison of the mean among modified Otsu’s, Otsu’s, and Kapur’s using AOA and HDAFA is presented in Table 12. Each algorithm is run 35 independent times on each benchmark image, and the average value is reported to ensure reliable results.

These three objective functions are tested with two different metaheuristic approaches, and in most cases the proposed objective function produces images that are superior both quantitatively and qualitatively.

5 Conclusion

In this study, a modified Otsu’s method is proposed for multi-level thresholding in image segmentation using the metaheuristic algorithms AOA and HDAFA. Otsu’s objective function is modified by hybridizing the features of Otsu’s and Kapur’s entropy algorithms. The proposed modified Otsu’s function combines the capability to find the optimum threshold value that maximizes the overall entropy from Kapur’s method with the maximum variance value of the different classes from Otsu’s method. The efficiency of the proposed objective function is compared against the existing Otsu’s and Kapur’s entropy functions in finding the optimum multi-level threshold values required for image segmentation using the AOA and HDAFA optimization algorithms. The experiment uses four numbers of thresholds, i.e., 2, 3, 4, and 5, over ten standard benchmark images widely used for segmentation. The quantitative analysis shows that, for most sample images, modified Otsu’s method outperforms Otsu’s and Kapur’s entropy functions with both metaheuristic methods. During experimentation, it is found that the result for two-level thresholding is always better for all source images in both AOA and HDAFA. Moreover, with the proposed modified Otsu’s function, the results for higher numbers of thresholds are superior with AOA as compared to HDAFA. The modified Otsu’s algorithm may be applied in many image processing applications, for example, surveillance, biomedical imaging, industrial implementations, and pattern recognition. In future work, more complex entropy concepts may be explored to make the approach robust for color images. The algorithm may also be extended to segment biomedical images such as MRI and thermal images.

Data availability

The datasets generated and/or analysed during the current study are available in the Berkeley Segmentation Data Set 500 (BSDS500) repository, https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/resources.html

References

Abak AT, Baris U, Sankur B (1997) The performance evaluation of thresholding algorithms for optical character recognition. Proc Fourth Int Conf Doc Anal Recognit 2:10–13. https://doi.org/10.1109/ICDAR.1997.620597

Abdel-Khalek S, Ben Ishak A, Omer OA, Obada ASF (2017) A two-dimensional image segmentation method based on genetic algorithm and entropy. Optik (Stuttg) 131:414–422. https://doi.org/10.1016/j.ijleo.2016.11.039

Abualigah L, Diabat A, Mirjalili S, Abd Elaziz M, Gandomi AH (2021) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609. https://doi.org/10.1016/j.cma.2020.113609

Aranguren I, Valdivia A, Pérez-Cisneros M, Oliva D, Osuna-Enciso V (2022) Digital image thresholding by using a lateral inhibition 2D histogram and a mutated electromagnetic field optimization. Multimed Tools Appl 81:10023–10049. https://doi.org/10.1007/s11042-022-11959-4

Bandyopadhyay R, Kundu R, Oliva D, Sarkar R (2021) Segmentation of brain MRI using an altruistic Harris hawks’ optimization algorithm. Knowledge-Based Syst 232:107468. https://doi.org/10.1016/j.knosys.2021.107468

Bhandari AK, Kumar IV (2019) A context sensitive energy thresholding based 3D Otsu function for image segmentation using human learning optimization. Appl Soft Comput J 82:105570. https://doi.org/10.1016/j.asoc.2019.105570

Bohat VK, Arya KV (2019) A new heuristic for multilevel thresholding of images. Expert Syst Appl 117:176–203. https://doi.org/10.1016/j.eswa.2018.08.045

Brest J, Žumer V, Maučec MS (2006) Self-adaptive differential evolution algorithm in constrained real-parameter optimization. In: 2006 IEEE congress on evolutionary computation, CEC 2006. pp 215–222

Chaves AS (1998) A fractional diffusion equation to describe Lévy flights. Phys Lett Sect A Gen At Solid State Phys 239:13–16. https://doi.org/10.1016/S0375-9601(97)00947-X

Elaziz MA, Lu S (2019) Many-objectives multilevel thresholding image segmentation using knee evolutionary algorithm. Expert Syst Appl 125:305–316. https://doi.org/10.1016/j.eswa.2019.01.075

Feng Y, Wang Z (2014) Ant Colony optimization for image segmentation. https://doi.org/10.5772/14269

Gandomi AH, Yang XS, Talatahari S, Alavi AH (2013) Firefly algorithm with chaos. Commun Nonlinear Sci Numer Simul 18:89–98. https://doi.org/10.1016/j.cnsns.2012.06.009

Garg A, Mittal N, Singh S, Sharma N (2020) TLBO algorithm for global optimization: theory, variants and applications with possible modification. Int J Adv Sci Technol 29:1701–1728

Hashim FA, Hussain K, Houssein EH, Mabrouk MS, al-Atabany W (2021) Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl Intell 51:1531–1551. https://doi.org/10.1007/s10489-020-01893-z

He L, Huang S (2017) Modified firefly algorithm based multilevel thresholding for color image segmentation. Neurocomputing 240:152–174. https://doi.org/10.1016/j.neucom.2017.02.040

Hemeida A, Mansour R, Hussein ME (2019) Multilevel thresholding for image segmentation using an improved electromagnetism optimization algorithm. Int J Interact Multimed Artif Intell 5:102. https://doi.org/10.9781/ijimai.2018.09.001

Horng M-H (2010) A multilevel image thresholding using the honey bee mating optimization. Appl Math Comput 215:3302–3310

Hosny KM, Khalid AM, Hamza HM, Mirjalili S (2022) Multilevel segmentation of 2D and volumetric medical images using hybrid coronavirus optimization algorithm. Comput Biol Med 150:106003. https://doi.org/10.1016/j.compbiomed.2022.106003

Houssein EH, Helmy BE-d, Oliva D et al (2021) A novel black widow optimization algorithm for multilevel thresholding image segmentation. Expert Syst Appl 167:114159. https://doi.org/10.1016/j.eswa.2020.114159

Ji W, He X (2021) Kapur’s entropy for multilevel thresholding image segmentation based on moth-flame optimization. Math Biosci Eng 18:7110–7142. https://doi.org/10.3934/mbe.2021353

Kapur JN, Sahoo PK, Wong AKC (1985) A new method for gray-level picture thresholding using the entropy of the histogram. Comput Vision, Graph Image Process 29:273–285

Kaur R, Singh S (2017) An artificial neural network based approach to calculate BER in CDMA for multiuser detection using MEM. Proc 2016 2nd Int Conf next Gener Comput Technol NGCT 2016 450–455. https://doi.org/10.1109/NGCT.2016.7877458

Kennedy J, Eberhart RC (1995) Particle swarm optimization. Proc IEEE Int Conf Neural Networks 4:1942–1948. https://doi.org/10.1109/ICNN.1995.488968

Kumar A, Kumar V, Kumar A, Kumar G (2014) Cuckoo search algorithm and wind driven optimization based study of satellite image segmentation for multilevel thresholding using Kapur’s entropy. Expert Syst Appl 41:3538–3560. https://doi.org/10.1016/j.eswa.2013.10.059

Kumar A, Misra RK, Singh D, Das S (2019) Testing a multi-operator based differential evolution algorithm on the 100-digit challenge for single objective numerical optimization. 2019 IEEE Congr Evol Comput CEC 2019 - proc 34–40. https://doi.org/10.1109/CEC.2019.8789907

Lei B, Fan J, Fan J (2019) Adaptive Kaniadakis entropy thresholding segmentation algorithm based on particle swarm optimization. Soft Comput 24:7305–7318. https://doi.org/10.1007/s00500-019-04351-2

Liang H, Jia H, Xing Z, Ma J, Peng X (2019) Modified grasshopper algorithm-based multilevel thresholding for color image segmentation. IEEE Access 7:11258–11295. https://doi.org/10.1109/ACCESS.2019.2891673

Liao PS, Chen TS, Chung PC (2001) A fast algorithm for multilevel thresholding. J Inf Sci Eng 17:713–727. https://doi.org/10.6688/JISE.2001.17.5.1

Liao WH, Kao Y, Li YS (2011) A sensor deployment approach using glowworm swarm optimization algorithm in wireless sensor networks. Expert Syst Appl 38:12180–12188. https://doi.org/10.1016/j.eswa.2011.03.053

Nadimi-Shahraki MH, Taghian S, Mirjalili S, Zamani H, Bahreininejad A (2022) GGWO: gaze cues learning-based grey wolf optimizer and its applications for solving engineering problems. J Comput Sci 61:101636. https://doi.org/10.1016/j.jocs.2022.101636

Oliva D, Abd Elaziz M, Hinojosa S (2019) Multilevel thresholding for image segmentation based on metaheuristic algorithms. In: Studies in Computational Intelligence. pp 59–69

Oliva D, Esquivel-Torres S, Hinojosa S et al (2021) Opposition-based moth swarm algorithm. Expert Syst Appl 184:115481. https://doi.org/10.1016/j.eswa.2021.115481

Ouyang HB, Gao LQ, Kong XY, Li S, Zou DX (2016) Hybrid harmony search particle swarm optimization with global dimension selection. Inf Sci 346:318–337. https://doi.org/10.1016/j.ins.2016.02.007

Qi A, Zhao D, Yu F, Heidari AA, Wu Z, Cai Z, Alenezi F, Mansour RF, Chen H, Chen M (2022) Directional mutation and crossover boosted ant colony optimization with application to COVID-19 X-ray image segmentation. Comput Biol Med 148:105810. https://doi.org/10.1016/j.compbiomed.2022.105810

Ren L, Zhao D, Zhao X, Chen W, Li L, Wu TS, Liang G, Cai Z, Xu S (2022) Multi-level thresholding segmentation for pathological images: optimal performance design of a new modified differential evolution. Comput Biol Med 148:105910. https://doi.org/10.1016/j.compbiomed.2022.105910

Rodríguez-Esparza E, Zanella-Calzada LA, Oliva D et al (2020) An efficient Harris hawks-inspired image segmentation method. Expert Syst Appl 155:113428. https://doi.org/10.1016/j.eswa.2020.113428

Singh S, Mittal N, Singh H (2020) A multilevel thresholding algorithm using LebTLBO for image segmentation. Neural Comput Applic 32:16681–16706. https://doi.org/10.1007/s00521-020-04989-2

Singh S, Mittal N, Singh H (2020) Multifocus image fusion based on multiresolution pyramid and bilateral filter. IETE J Res 68:2476–2487. https://doi.org/10.1080/03772063.2019.1711205

Singh S, Mittal N, Singh H (2021) A multilevel thresholding algorithm using HDAFA for image segmentation. Soft Comput 25:10677–10708. https://doi.org/10.1007/s00500-021-05956-2

Singh S, Mittal N, Thakur D, Singh H, Oliva D, Demin A (2021) Nature and biologically inspired image segmentation techniques. Arch Comput Methods Eng 29:1415–1442. https://doi.org/10.1007/s11831-021-09619-1

Singh S, Singh H, Mittal N, Singh H, Hussien AG, Sroubek F (2022) A feature level image fusion for night-vision context enhancement using arithmetic optimization algorithm based image segmentation. Expert Syst Appl 209:118272. https://doi.org/10.1016/j.eswa.2022.118272

Singh S, Mittal N, Singh H (2022) A feature level image fusion for IR and visible image using mNMRA based segmentation. Neural Comput Applic 34:8137–8154. https://doi.org/10.1007/s00521-022-06900-7

Sreeja P, Hariharan S (2018) An improved feature based image fusion technique for enhancement of liver lesions. Biocybern Biomed Eng 38:611–623. https://doi.org/10.1016/j.bbe.2018.03.004

Sun X (2019) Multilevel image threshold segmentation using an improved Bloch quantum artificial bee colony algorithm. Multimed Tools Appl 79:2447–2471. https://doi.org/10.1007/s11042-019-08231-7

Verma OP, Parihar AS (2017) An optimal fuzzy system for edge detection in color images using bacterial foraging algorithm. IEEE Trans Fuzzy Syst 25:114–127. https://doi.org/10.1109/TFUZZ.2016.2551289

Zamani H, Nadimi-Shahraki MH, Gandomi AH (2019) CCSA: conscious neighborhood-based crow search algorithm for solving global optimization problems. Appl Soft Comput J 85:105583. https://doi.org/10.1016/j.asoc.2019.105583

Zamani H, Nadimi-Shahraki MH, Gandomi AH (2021) QANA: quantum-based avian navigation optimizer algorithm. Eng Appl Artif Intell 104:104314. https://doi.org/10.1016/j.engappai.2021.104314

Zamani H, Nadimi-Shahraki MH, Gandomi AH (2022) Starling murmuration optimizer: a novel bio-inspired algorithm for global and engineering optimization. Comput Methods Appl Mech Eng 392:114616. https://doi.org/10.1016/j.cma.2022.114616

Acknowledgments

This work was supported by DST, Government of India, for the Technology Innovation Hub at the IIT Ropar in the National Mission on Interdisciplinary Cyber-Physical Systems framework.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this manuscript.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Singh, S., Mittal, N., Singh, H. et al. Improving the segmentation of digital images by using a modified Otsu’s between-class variance. Multimed Tools Appl 82, 40701–40743 (2023). https://doi.org/10.1007/s11042-023-15129-y

DOI: https://doi.org/10.1007/s11042-023-15129-y