Abstract

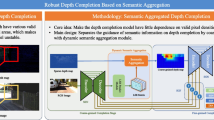

Depth completion aims to recover dense, reliable depth from sparse but accurate depth measurements. Relying on sparse depth alone usually does not achieve good performance, so most methods use RGB images, which carry rich semantic information, as guidance and achieve good results. However, in some areas the segmentation boundaries of the RGB features do not conform to the real depth distribution; for example, there should be no segmentation boundary in an area where the depth changes continuously. Common fusion operations, such as channel-wise concatenation and pixel-wise addition, promote the propagation of these wrong boundary features. Therefore, two novel modules based on dynamic convolution and the attention mechanism are proposed, one to prevent and one to correct the propagation of wrong information. The proposed network is divided into two independent branches, whose outputs are merged using the confidence predicted for each branch. In the guided convolution branch, dynamic convolution fuses the high-level features of the RGB image with the low-level features of the sparse depth map. In the bidirectional attention branch, the attention mechanism is used to build a bidirectional attention module that aims to correct the wrong segmentation boundaries in the RGB image and thereby achieve more effective feature fusion. Compared with state-of-the-art methods, the proposed method maintains excellent performance under different sparse input conditions, and it has a shorter inference time and a smaller model size while achieving competitive results.
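The confidence-based merging of the two branch outputs can be illustrated with a minimal sketch. The abstract does not give the fusion formula, so the per-pixel softmax weighting below is an assumption, as are the function name `fuse_by_confidence` and the toy arrays; it only shows one plausible way two dense depth predictions could be blended by predicted confidence.

```python
import numpy as np


def fuse_by_confidence(depth_a, conf_a, depth_b, conf_b):
    """Blend two dense depth maps with per-pixel softmax weights.

    Assumption: the confidences are unnormalized scores (logits) of the
    same shape as the depth maps. This is an illustrative choice, not the
    formula confirmed by the paper.
    """
    logits = np.stack([conf_a, conf_b], axis=0)
    # Subtract the per-pixel maximum for numerical stability.
    logits = logits - logits.max(axis=0, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)
    return weights[0] * depth_a + weights[1] * depth_b


# Hypothetical toy outputs of the two branches (values in metres).
depth_guided = np.full((2, 2), 10.0)      # guided convolution branch
depth_attention = np.full((2, 2), 20.0)   # bidirectional attention branch
conf_guided = np.array([[0.0, 5.0], [-5.0, 0.0]])
conf_attention = np.zeros((2, 2))

fused = fuse_by_confidence(depth_guided, conf_guided,
                           depth_attention, conf_attention)
```

Where both confidences are equal, the fused depth is the average of the two predictions; where one branch is much more confident, the fused value moves toward that branch's prediction.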

Funding

This study was funded by the Jiangsu Provincial Key R&D Program (No. BE2018066) and the National Natural Science Foundation of China (No. U1830105).

Ethics declarations

Conflict of Interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liu, K., Li, Q. & Zhou, Y. An adaptive converged depth completion network based on efficient RGB guidance. Multimed Tools Appl 81, 35915–35933 (2022). https://doi.org/10.1007/s11042-022-13341-w

DOI: https://doi.org/10.1007/s11042-022-13341-w