Abstract

Big data plays a major role in learning, manipulating, and forecasting information intelligence. Because the data distribution is often imbalanced, learning and retrieving information from such large datasets can lead to limited classification performance and wrong decisions. Although traditional machine learning classifiers can handle imbalanced datasets, they remain inadequate with respect to overfitting, training cost, and sample hardness. To achieve better classification, this work proposes a novel “Self-Boosted with Dynamic Semi-Supervised Clustering Method”. The data are first preprocessed by constructing sample blocks with Hybrid Associated Nearest Neighbor heuristic over-sampling, which replicates the minority samples and merges each copy with every subset of the majority samples; this addresses the overfitting issue and slightly reduces noise in the imbalanced data. After preprocessing, classifying massive data requires a large data space, which leads to high training cost. The approach therefore uses a Heterogeneous Weight Ensemble classifier, which calculates the data space of each sample from its nearest neighbors and applies adaptive weight adjustment to address the training cost and unstable-sample problems. Classification can still perform poorly because of sample hardness, so the work introduces a Self-Managed Ensample Optimizer, which separates the bulk of the samples into bins according to their hardness (rigidity) values and provides better classification results. As a result, the proposed work classifies the imbalanced dataset with a reported accuracy of 99%, obtaining balanced data with improved classification results.
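The block construction and hardness binning described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the Hybrid Associated Nearest Neighbor heuristic or how hardness is computed, so this example assumes a plain random partition of the majority class and takes hardness as a given score in [0, 1]. The function names `build_sample_blocks` and `bin_by_hardness` are hypothetical.

```python
import numpy as np

def build_sample_blocks(X_major, X_minor, n_blocks, seed=0):
    """Split the majority class into n_blocks subsets and merge each
    subset with a full copy of the minority class.
    Assumption: a random partition stands in for the paper's heuristic."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X_major))
    blocks = []
    for idx in np.array_split(order, n_blocks):
        X = np.vstack([X_major[idx], X_minor])
        # Label 0 = majority, label 1 = minority.
        y = np.concatenate([np.zeros(len(idx), dtype=int),
                            np.ones(len(X_minor), dtype=int)])
        blocks.append((X, y))
    return blocks

def bin_by_hardness(hardness, n_bins):
    """Assign each sample to an equal-width bin over [0, 1].
    Assumption: hardness is a precomputed score in [0, 1]."""
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Use interior edges only so indices fall in 0 .. n_bins - 1.
    return np.digitize(hardness, edges[1:-1])

# Toy data: 90 majority and 10 minority samples with 2 features.
rng = np.random.default_rng(42)
X_major = rng.normal(0.0, 1.0, size=(90, 2))
X_minor = rng.normal(2.0, 1.0, size=(10, 2))

blocks = build_sample_blocks(X_major, X_minor, n_blocks=9)
block_shapes = [X.shape for X, _ in blocks]

bins = bin_by_hardness(np.array([0.05, 0.5, 0.95, 1.0]), n_bins=4)
```

With this toy split, every block holds 10 majority and 10 minority samples, so a base classifier trained on one block sees a balanced class ratio. An ensemble could then train one classifier per block and resample within each hardness bin, though how the paper combines these steps is not stated in the abstract.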

References

Basgall MJ, Hasperué W, Naiouf M, Fernández A, Herrera F (2018) SMOTE-BD: an exact and scalable oversampling method for imbalanced classification in big data. In: VI Jornadas de Cloud Computing & big Data (JCC&BD) (La Plata)

Basgall MJ, Hasperué W, Naiouf M, Fernández A, Herrera F (2019) An analysis of local and global solutions to address big data imbalanced classification: a case study with SMOTE preprocessing. In: Conference on cloud computing and big data, pp 75–85

Chen G, Liu Y, Ge Z (2019) K-means Bayes algorithm for imbalanced fault classification and big data application. J Process Control 81:54–64

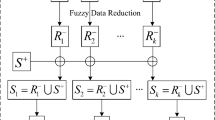

Elkano M, Galar M, Sanz J, Bustince H (2018) CHI-BD: a fuzzy rule-based classification system for big data classification problems. Fuzzy Sets Syst 348:75–101

Fernández A, Carmona CJ, Jose del Jesus M, Herrera F (2017) A Pareto-based ensemble with feature and instance selection for learning from multi-class imbalanced datasets. Int J Neural Syst 27(06):1750028

Fernández A, del Río S, Chawla NV, Herrera F (2017) An insight into imbalanced big data classification: outcomes and challenges. Complex & Intelligent Systems 3(2):105–120

Fernández A, García S, Galar M, Prati RC, Krawczyk B, Herrera F (2018) Imbalanced classification for big data. In: Learning from imbalanced data sets, pp 327–349

García S, Zhang ZL, Altalhi A, Alshomrani S, Herrera F (2018) Dynamic ensemble selection for multi-class imbalanced datasets. Inf Sci 445:22–37

Guo T, Zhu X, Wang Y, Chen F (2019) Discriminative sample generation for deep imbalanced learning. In: IJCAI, pp 2406–2412

Hassib EM, El-Desouky AI, El-Kenawy ESM, El-Ghamrawy SM (2019) An imbalanced big data mining framework for improving optimization algorithms performance. IEEE Access 7:170774–170795

Hassib EM, El-Desouky AI, Labib LM, El-kenawy ESM (2020) WOA+ BRNN: an imbalanced big data classification framework using whale optimization and deep neural network. Soft Comput 24(8):5573–5592

Komamizu T, Uehara R, Ogawa Y, Toyama K (2020) MUEnsemble: multi-ratio under sampling-based ensemble framework for imbalanced data. In: International conference on database and expert systems applications, pp 213–228

Koziarski M (2020) Radial-based Undersampling for imbalanced data classification. Pattern Recogn 102:107262

Leevy JL, Khoshgoftaar TM, Bauder RA, Seliya N (2018) A survey on addressing the high-class imbalance in big data. Journal of Big Data 5(1):42

Lin WC, Tsai CF, Hu YH, Jhang JS (2017) Clustering-based undersampling in class-imbalanced data. Inf Sci 409:17–26

Luengo J, García-Gil D, Ramírez-Gallego S, García S, Herrera F (2020) Imbalanced data preprocessing for big data. In: Big data preprocessing, pp 147–160

Maldonado S, López J (2018) Dealing with high-dimensional class-imbalanced datasets: embedded feature selection for SVM classification. Appl Soft Comput 67:94–105

Patil SS, Sonavane SP (2017) Enriched over_sampling techniques for improving classification of imbalanced big data. In: 2017 IEEE third international conference on big data computing service and applications (BigDataService), pp 1–10

Rendón E, Alejo R, Castorena C, Isidro-Ortega FJ, Granda-Gutiérrez EE (2020) Data sampling methods to Deal with the big data multi-class imbalance problem. Appl Sci 10(4):1276

Sáez JA, Krawczyk B, Woźniak M (2016) Analyzing the oversampling of different classes and types of examples in multi-class imbalanced datasets. Pattern Recogn 57:164–178

Triguero I, Galar M, Merino D, Maillo J, Bustince H, Herrera F (2016) Evolutionary undersampling for extremely imbalanced big data classification under apache spark. In: 2016 IEEE congress on evolutionary computation (CEC), pp 640–647

Vuttipittayamongkol P, Elyan E, Petrovski A, Jayne C (2018) Overlap-based undersampling for improving imbalanced data classification. In: International conference on intelligent data engineering and automated learning, pp 689–697

Wang Z, Xin J, Yang H, Tian S, Yu G, Xu C, Yao Y (2017) Distributed and weighted extreme learning machine for imbalanced big data learning. Tsinghua Sci Technol 22(2):160–173

Zhai J, Zhang S, Wang C (2017) The classification of imbalanced large data sets based on map-reduce and ensemble of elm classifiers. Int J Mach Learn Cybern 8(3):1009–1017

Zhai J, Zhang S, Zhang M, Liu X (2018) Fuzzy integral-based ELM ensemble for imbalanced big data classification. Soft Comput 22(11):3519–3531

Acknowledgements

This paper and the research behind it would not have been possible without the exceptional support of my supervisor, Dr Dhawaleswar Rao CH, Associate Professor, Centurion University of Technology and Management. I am grateful for his valuable and constructive suggestions during the planning and development of this paper. His enthusiasm, knowledge, and exacting attention to detail have been an inspiration and kept my work on track.

About this article

Cite this article

Abhilasha, A., Naidu, P.A. Self-boosted with dynamic semi-supervised clustering method for imbalanced big data classification. Multimed Tools Appl 81, 43083–43106 (2022). https://doi.org/10.1007/s11042-022-12038-4

DOI: https://doi.org/10.1007/s11042-022-12038-4