Abstract

A new non-stationary, high-order sequential simulation method is presented herein, aiming to accommodate complex curvilinear patterns when modelling non-Gaussian, spatially distributed and variant attributes of natural phenomena. The proposed approach employs spatial templates, training images and a set of sample data. At each step of a multi-grid approach, a template consisting of several data points and a simulation node located in the center of the grid is selected. To account for the non-stationarity exhibited in the samples, the data events decided by the conditioning data are utilized to calibrate the importance of the related replicates. Sliding the template over the training image generates a set of training patterns, and for each pattern a weight is calculated. The weight value of each training pattern is determined by a similarity measure defined herein, which is calculated between the data event of the training pattern and that of the simulation pattern. This results in a non-stationary spatial distribution of the weight values for the training patterns. The proposed new similarity measure is constructed from the high-order statistics of data events from the available data set, when compared to their corresponding training patterns. In addition, this new high-order statistics measure allows for the effective detection of similar patterns in different orientations, as these high-order statistics conform to the commutativity property. The proposed method is robust against the addition of more training images due to its non-stationary aspect; it only uses replicates from the pattern database with the most similar local high-order statistics to simulate each node. Examples demonstrate the key aspects of the method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The uncertainty of a spatially distributed and varying geological attribute can be quantified by analyzing the variation in this attribute within a set of geostatistical or stochastic simulations. Second-order spatial simulation methods (Journel and Huijbregts 1978; David 1988; Goovaerts 1997; Chiles and Delfiner 2012) have been used to quantify spatial uncertainty. Since the early 1990s, a new multiple-point statistics (MPS) spatial simulation framework has been developed to overcome the limitations of previous approaches (Guardiano and Srivastava 1993; Gómez-Hernández and Srivastava 2021; Strebelle 2002, 2021; Journel 2005; Liu et al. 2006; Arpat and Caers 2007; Hu and Chugunova 2008; de Vries et al., 2008; Mariethoz et al. 2010; Honarkhah and Caers 2010; De Iaco and Maggio 2011; Stien and Kolbjørnsen 2011; Chatterjee et al. 2012; Lochbühler et al. 2013; Rezaee et al. 2013; Toftaker and Tjelmeland 2013; Mustapha et al. 2014; Strebelle and Cavelius 2014; Zhang et al. 2017). MPS methods are able to capture and produce more complex spatial patterns than second-order spatial simulation methods by using multiple-point spatial templates that replace the well-established two-point spatial statistics. In the MPS framework, sample data are used as the conditioning data, while the multiple-point spatial relations of the simulations are captured from patterns extracted from a training image (TI) or analogue. As a result, MPS simulations exhibit the statistics of the TI instead of the data, which raises the issue of statistical conflicts between the samples and the TI in circumstances in which sample data are relatively abundant, such as in mining applications (Osterholt and Dimitrakopoulos 2007; Goodfellow et al. 2012). High-order spatial simulation methods (HOSIM) have been developed to address this issue and to provide a new geostatistical simulation framework that deals with complex spatial patterns. HOSIM does not require distributional assumptions and is mathematically consistent (Dimitrakopoulos et al. 2010; Mustapha and Dimitrakopoulos 2010a,b, 2011a; Mustapha et al. 2011, 2014; Tamayo-Mas et al. 2016; Minniakhmetov and Dimitrakopoulos 2016; Minniakhmetov et al. 2018; Yao et al. 2018). It should be noted that in HOSIM methods, the conditional probability distribution function (CPDF) is modelled by a set of orthogonal polynomials, Legendre polynomials in particular (Abramowitz 1974). Under the stationarity assumption, high-order spatial cumulants (Dimitrakopoulos et al. 2010) are inferred by averaging the same-order cumulants of the samples extracted from either the data set alone, if sufficient data are provided, or both data and TI in the case of sparse data. The high-order statistics enable the CPDF model to capture the complex spatial structures and connectivity of the high values within the data and to later reproduce them via the simulation process (Mustapha and Dimitrakopoulos 2011a, b; Minniakhmetov et al. 2018; de Carvalho et al. 2019). Stationarity is a major assumption made by the commonly employed spatial simulation methods and may not always be easily accommodated in applications. The traditional approach assumes that a mean function (drift) exists as a linear or polynomial trend. The so-called universal kriging (David 1977) considering the drift, however, may be difficult to apply to some cases (Cressie 1986). More recent research adopts the local probability distribution to model the non-stationary distribution, but only second-order statistics are considered (Machuca-Mory and Deutsch 2013). With respect to high-order simulations, the same assumption also results in the use of all patterns extracted from data and the TI for the estimation of spatial cumulants, leading to the slow convergence of the orthogonal polynomials used for modelling CPDFs. In practice, this calculation may result in numerical instabilities (Boyd and Ong 2011). Thus, a non-stationary approach is used herein to address these issues. For each simulation, a set of patterns are chosen from the data and TI based on their similarities to the data event of the simulation pattern. Then, only the chosen patterns are used for inferring the high-order statistics of the simulation pattern. Similar patterns could be generated from a simple CPDF model with faster convergence using a lower number of polynomial terms.

The high-order sequential simulation method introduced here first finds a data event of a fixed size \(n\) in the immediate neighbourhood of each simulation node, and a set of \(n+1\) high-order spatial statistics is then calculated from the data event. A novel similarity measure is then introduced and utilized to compare the extracted statistics from the simulation grid to those extracted from all of the patterns in the TI; the statistics with the highest similarity score are then used for the inference of the high-order statistics at that node. This is the non-stationary aspect of the method, which is the key difference between this method and previous high-order simulation methods (Mustapha and Dimitrakopoulos 2010b, 2011b; Minniakhmetov et al. 2018, Minniakhmetov and Dimitrakopoulos 2021; Yao et al. 2018, 2020, 2021). The proposed method avoids an excessive number of patterns extracted from the TI due to the selectivity of the method in terms of choosing the number of related patterns. In addition, the method is able to efficiently identify patterns with similar statistics in different orientations without reducing the computational efficiency of the algorithm.

In the following sections, the proposed method and corresponding algorithm are first detailed. Then, examples and comparisons are presented, and conclusions follow.

2 The Method

2.1 Spatial Random Field

Given a probability space \((\Omega ,\mathcal{F},P)\), a function \(Z\left(X\right):{\mathcal{D}\subset R}^{\mathcal{N}}\to R\) is a real-valued spatial random field given that for any integer number \(n\ge 0\) and \(\forall r\in {R}^{n+1},\) a subset \({A}_{r}=\{Z(\mathcal{X})\le r\}\in \mathcal{F}\) exists and its probability is defined by \(P\), where \(\mathcal{D}\) is the spatial domain in a two- or three-dimensional space \({R}^{\mathcal{N}}(\mathcal{N}=\mathrm{2,3})\) and \(\mathcal{X}\subset \mathcal{D}\) is a set of \(n+1\) points in this domain. Note that \(\mathcal{X}=\{x,{x}_{1},\dots ,{x}_{n}\}\) and \(Z(X)=\{Z(x),Z({x}_{1}),\dots ,Z({x}_{n})\}\) and thus the binary operation \(\le \) in \({A}_{r}=\{Z(\mathcal{X})\le r\}\) is true only if all elements on the left-hand side are less than or equal to the elements on the right hand side of the equation.

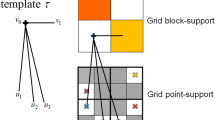

2.2 Template, Pattern, Data Event and Neighbourhood

Consider a random field \(Z\left(X\right)\) with a fixed structure in space and a spatial template \(T\) that is characterized by a set of lag vectors (Mustapha and Dimitrakopoulos 2011b), that is, \(T=\{{h}_{1},\dots ,{h}_{n}\}\). The template connects a specific location \(x\) to each of the positions in the neighbourhood \({N}_{x}=x+T=\{{x}_{1},\dots ,{x}_{n}\}\). Without loss of generality, hereafter the real-valued spatial random field associated to the location \(x\) and its neighbourhood \({N}_{x}\) is denoted by \(Z(x,{N}_{x}),\) and its realization is denoted by \(Z({x}_{i},{N}_{{x}_{i}})\). Furthermore, the data event vector \({d}_{{N}_{x}}=Z({N}_{x})=[Z({x}_{1}),\dots ,Z({x}_{n}){]}^{T}\) represents the realization of the field at the position of the neighbourhood nodes.

2.3 Invariant High-Order Statistics

Vieta's formula (Funkhouser 1930) presents a relation between the coefficients and the roots of a polynomial. For a polynomial of degree \(n,\)

with the roots \([{x}_{1},\dots ,{x}_{n}{]}^{\mathrm{T}},\) the coefficients are \(U=[{u}_{1},\dots ,{u}_{n}{]}^{\mathrm{T}},\) where

with \(m\in \{1,\dots ,n\}\) and the normalization factor \({c}_{m}=(\genfrac{}{}{0pt}{}{n}{m}){\sigma }^{m}\), where \(\sigma \) is the standard deviation of the \(x\) in the TI. Furthermore, a recurrence relation could present the coefficients of a polynomial \(q(X)=(X-{x}_{0})p(X)={X}^{n+1}+{c}_{1}{\overline{u}}_{1}{X}^{n}+\dots +{c}_{n+1}{\overline{u}}_{n+1}\), which has one extra root \({x}_{0}\), that is, \(\{{x}_{0},{x}_{1},\dots ,{x}_{n}\}\). Then, it follows that

Vieta's formula transforms the input vector of the roots of the polynomial \([{x}_{1},\dots ,{x}_{n}{]}^{\mathrm{T}}\) into the vector of the coefficients \([{u}_{1},\dots ,{u}_{n}{]}^{\mathrm{T}}\), with the advantage of being invariant to the ordering of the domain, given that the polynomial is invariant under a re-ordering of its roots. Herein, Eq. (2) is used to transform either the patterns or the data events of the simulation and TI, such that

2.4 High-Order Statistics Distance Vector

Given two sets of data events \(d_{{N_{{x_{s} }} }}\) and \(d_{{N_{{x_{s} }} }}\) of order n, in order to develop an L2-norm distance measure, one must compare all possible ordering of these two data events, which results in n × (n!) number of operations. Hence, for a pattern of order n = 5, the number of operations becomes 600 per pattern, which is computationally expensive and can only operate on small TIs. In a new approach (Abolhassani et al. 2017), the L2-norm distance is calculated for the high-order statistics vectors of the data events

where the high-order statistics of the data event \(U\) s are calculated from Eq. (2). It is worth mentioning that the number of operations for this new distance measure is dramatically reduced to \(n\times {2}^{n-1}\) per simulation node versus \(n\times \left(n\right)!\) in L2-norm. For \(n=5\), \(\#\mathrm{op}(L2-\mathrm{norm})=600\) versus \(\#\mathrm{op}(\mathrm{high}-\mathrm{ord})=80\).

2.5 Modelling the Spatial Non-Stationary Joint Cumulative Distribution Function (CDF) as a Finite Sum of Disjoint CDFs

Given a probability space, the real-valued spatial random field \(Z(x,{N}_{x})\) is fully characterized by its non-stationary joint cumulative probability distribution function \(F(x,Z(x,{N}_{x}))\). In this work, this function is modelled by the statistical ensemble (Landau and Lifshitz 1980) given by

which is a sum over \(m\) mutually exclusive stationary CDFs, \({F}_{i}\)s, with a set of non-stationary mixing coefficients, known as a state set with binary variables \({\phi }_{i}({x}_{0})\in \{\mathrm{0,1}\}\). At any position \({x}_{0}\), the value of only one of the coefficients is 1, and the rest are zero, that is, \({\phi }_{k}=1\) and \({\phi }_{i}=0\) for \(i\ne k\).

2.6 Mutually Exclusive CDFs

On the grid of an input image with a fixed template T, Eq. (6) is considered to have a mutually exclusive set of stationary CDFs only if it satisfies the condition

where D(.) is the high-order statistics distance vector calculated from Eq. (5) to compare two sets of high-order statistics vectors of two data events \({d}_{{N}_{{x}_{1}}}\) and \({d}_{{N}_{{x}_{2}}}\). In addition, \({U}_{Z({x}_{1},{N}_{{x}_{1}})}\) and \({U}_{Z({x}_{2},{N}_{{x}_{2}})}\) are the high-order statistics of two patterns, introduced in Sect. 2.3, with elements calculated from relation (2). According to Eq. (7), if the statistics of the data event of two patterns are close, then their CDFs are equal to an identical stationary CDF, defined as \({F}_{k}(Z(x,{N}_{x})), \mathrm{and}\), and further, that their high-order statistics are the same. This also implies that \({\phi }_{k}({x}_{1})={\phi }_{k}({x}_{2})=1\).

A set of mutually exclusive CDFs are used as the basis functions to span a more complex non-stationary CDF using Eq. (6). At each position on the grid, only one basis function is responsible for the simulation of the node; that is, each basis function \({F}_{k}(Z(x,{N}_{x}))\) simulates a set of nodes \({\mathcal{D}}_{k}\) on the grid. The \({\mathcal{D}}_{k}\) s are mutually disjoint, and their union is the entire grid \(\mathcal{D}={\cup }_{k=1}^{m}{\mathcal{D}}_{k}\). As a result, for each simulation node, the entire TI is searched for nodes with similar statistics. Considering Eq. (7), the resulting set of nodes are generated from one of the basis CDFs and used for estimating the high-order statistics of that basis CDF, and the simulation node value is then sampled from the CDF.

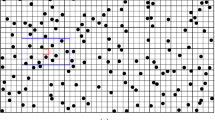

2.7 High-Order Transformation-Invariant Sequential Simulation

The goal is to simulate the non-stationary, real-valued random field \(\{Z({x}_{s},{N}_{{x}_{s}})|{x}_{s}\in {\Omega }_{s}\}\) at the position of all simulation nodes \({\Omega }_{s}\) with a fixed template T. A sequential multi-grid process is used when provided with a sparse set of data \(\{Z({x}_{h},{N}_{{x}_{h}})|{x}_{h}\in {\Omega }_{h}\}\) on a regular grid \({\Omega }_{h}\) and a fine-resolution TI \(\{Z({x}_{t},{N}_{{x}_{t}})|{x}_{t}\in {\Omega }_{t}\}\). In the hierarchy of the sequential multi-grid simulation, the coarse templates are firstly adopted to complete the first-stage simulation. As more nodes are simulated, finer-scale templates are used to search for the conditioning data. The order of the sequential simulation is shown in Fig. 1.

The hierarchy of the sequential multi-grid simulation. The order of the simulation starting from the blue nodes (sample data) and the red nodes (simulated first), followed by black nodes, orange nodes and eventually the green nodes. The simulation of each stage is conditioned on the previous simulated nodes

At each sequence, the path is chosen randomly and saved into a vector containing the indices of the visiting nodes \({I}_{s}\). Each successive random variable \(Z({x}_{s},{N}_{{x}_{s}})\) at node \(\{{x}_{s}|s\in {I}_{s}\}\) is conditioned to a data event \({d}_{{N}_{{x}_{s}}}\) and the neighbourhood nodes that are selected from the set of previously simulated nodes and the sample data (Goovaerts 1997). A template \(T=\{{h}_{1},\dots ,{h}_{n}\}\) is formed spatially by connecting each data event node to the simulation node. The first goal is to simulate the high-order statistics of the pattern, that is, \({U}_{Z({x}_{s},{N}_{{x}_{s}})}\), and then to sample the simulation node \(Z\left({x}_{s},{N}_{{x}_{s}}\right),\) given the CPDF \(P({U}_{Z({x}_{s},{N}_{{x}_{s}})}|{U}_{{d}_{{N}_{{x}_{s}}}},{U}_{Z({x}_{t},{N}_{{x}_{t}})})\). In this case, the \({U}_{(.)}\) function refers to the high-order statistics vector with the elements calculated from Eq. (2). This probability is complex and cannot be simulated directly unless it is represented by a model with a set of parameters \(\theta \in\Theta \) that are independent from the data event and TI. The parameter \(\theta \) is optimized to express the data event and TI. Thus, this probability can be decomposed using the total probability rule into

An estimation of the argument of the integral further simplifies Eq. (8). Figure 2 represents the term \(P(\theta |{U}_{{d}_{{N}_{{x}_{s}}}},{U}_{Z({x}_{t},{N}_{{x}_{t}})})\) as a function of \(\theta \). The contribution of this function is negligible except for a narrow band near an optimal value for the parameter’s maximum a posteriori estimation (MAP) \({\theta }_{\mathrm{MAP}}\). Consequently, Eq. (8) can be estimated as

In Eq. (9), \({C}_{\mathrm{MAP}}\) is the normalization factor to ensure the validity of the probability. Equation (9) implies that the probability distribution function of the node \({x}_{s}\) can be calculated if \({\theta }_{\mathrm{MAP}}\) is known. To estimate \({\theta }_{\mathrm{MAP}}\) based on (Fig. 2), \(\theta ={\theta }_{\mathrm{MAP}}\) when \(P(\theta |{U}_{{d}_{{N}_{{x}_{s}}}},{U}_{Z({x}_{t},{N}_{{x}_{t}})})\) is maximized for \(\theta \in\Theta \); that is,

Using Bayes’ rule, the right-hand side of the equation is extended into

In this case, one assumes a uniform prior in the parameter space \(\Theta \), with the marginal probability in TI \(P({U}_{Z({x}_{t},{N}_{{x}_{t}})})\) remaining independent from \(\theta \). Hence, the \({\theta }_{\mathrm{MAP}}\) is equivalent to the maximum likelihood estimation \({\theta }_{\mathrm{MLE}},\) and Eq. (11) simplifies into

Each node in TI is only conditioned on its neighbours \({N}_{{x}_{t}}\) and the parameter set \(\theta \). Hence, the joint distribution in Eq. (12) can be further decomposed into

A probability is maximized if the logarithm of that probability is maximized.

Furthermore, at the maximum, the derivative with respect to \(\theta \) should be zero; that is,

Equation (15) is solved for an optimal solution \({\theta }_{\mathrm{MLE}}\) by modelling the \(P({U}_{Z({x}_{t},{N}_{{x}_{t}})}|\theta ,{U}_{{d}_{{N}_{{x}_{s}}}},{U}_{{d}_{{N}_{{x}_{t}}}})\) with an exponential family in Sect. 2.8.

2.8 High-Order Simulation Model

The exponential family is used here to model the likelihood function in Eq. (15) with the parameter set \(\theta =[{\theta }_{1},\dots ,{\theta }_{n+1}{]}^{T}\) as

In Eq. (16), \(W\) is introduced as a weight function based on the similarity measure of the data event \({d}_{{N}_{{x}_{s}}}\) and \({d}_{{N}_{{x}_{t}}}\). It ensures that the TI patterns with more similar data events contribute more to the likelihood function. \(W\) has a probability distribution over \([0, 1]\); \({W}_{\mathrm{ML}}\) is the maximum likely similarity that is estimated by

where \(D(.,.)\) is given by Eq. (5) as the high-order statistics distance vector, a distance measure between two data events, and \(\Sigma \left({d}_{{N}_{{x}_{t}}}\right)\) is the mean square distance vector of the data event over the TI. Note that the weight \(W\) is defined to be a positive function that decreases as the distance between the two data events increases. \({{\sigma }_{0}}^{2}\) in Eq. (16) is to ensure that a proper probability is established for Eq. (16).

Substituting Eq. (16) into Eq. (15), the solution of the \({\theta }_{\mathrm{MLE}}\) is

the non-stationary expected value of the vector of the high-order statistics of the simulation pattern. Having calculated the \({\theta }_{\mathrm{MLE}}=[{\theta }_{1},\dots ,{\theta }_{n+1}]\) in Eq. (19) and considering Eq. (9), one gets

Applying the recurrence relation (3), Eq. (20) is rewritten in terms of the simulation data event \({U}_{{d}_{{N}_{{x}_{s}}}}\) and the simulation node \(Z({x}_{s})\) (the extra root in Vieta's formula (3)); that is,

where \({u}_{m}\) can be calculated with Eq. (2) and \({c}_{m}=(\genfrac{}{}{0pt}{}{n}{m}){\sigma }^{m}\), in which \(\sigma \) is the standard deviation of the \(x\) in the TI. Eventually, the simulation node \(Z({x}_{s})\) can be sampled from the distribution in Eq. (21).

2.9 Algorithm

-

1.

Define a random path \({\overline{\Omega }}_{s}\) to visit all positions on the simulation grid \({\Omega }_{s}\).

-

2.

Select the next unsampled position on the simulation grid \(x_{s} \in {\overline{\Omega }}_{s}\).

-

3.

Search the conditioning data \({\Omega }_{h}\) for \(n\) immediate neighbourhood positions \(N_{{x_{s} }} = \left\{ {x_{1} , \ldots ,x_{n} } \right\} \subset {\Omega }_{h}\) of the simulation node \(x_{s}\).

-

4.

Generate the data event vector \(d_{{N_{{x_{s} }} }} = Z\left( {N_{{x_{s} }} } \right) = [Z\left( {x_{1} } \right), \ldots ,Z\left( {x_{n} } \right)]^{T}\) with the node values at the neighbourhood positions \(N_{{x_{s} }}\).

-

5.

Compute the high-order statistics vector of the data event \(U_{{d_{{N_{{x_{s} }} }} }} = [u_{1} , \ldots ,u_{n} ]^{T}\) with elements \(u_{m}\) calculated from Eq. (2).

-

6.

Generate template \(T = \left\{ {h_{1} , \ldots ,h_{n} } \right\} = \left\{ {x_{1} - x_{s} , \ldots ,x_{n} - x_{s} } \right\}\).

-

7.

Search all positions in the TI and select the positions of the patterns \( \left( {{\Omega }_{t} ,N_{{{\Omega }_{t} }} } \right)\) matching the template T.

-

8.

Select the next unvisited TI pattern \(\left( {x_{t} ,N_{{x_{t} }} } \right) \in \left( {{\Omega }_{t} ,N_{{{\Omega }_{t} }} } \right)\).

-

9.

Generate the TI data event \(d_{{N_{{x_{t} }} }} = Z\left( {N_{{x_{t} }} } \right) = [Z\left( {y_{1} } \right), \ldots ,Z\left( {y_{n} } \right)]^{T}\) according to the node values in the TI.

-

10.

Calculate the high-order statistics vector \(U_{{d_{{N_{{x_{t} }} }} }} = [u_{1} , \ldots ,u_{n} ]^{T}\) of the data event \(d_{{N_{{x_{t} }} }}\) with elements \(u_{m}\) calculated from Eq. (2).

-

11.

Calculate the high-order distance \(D\left( {d_{{N_{{x_{s} }} }} ,d_{{N_{{x_{t} }} }} } \right)\) in Eq. (5).

-

12.

Calculate the similarity measure \({\Sigma }\left( {d_{{N_{{x_{t} }} }} } \right)\) from Eq. (17).

-

13.

Continue to Step (8) until all of the TI patterns are visited.

-

14.

Calculate \(\theta_{MLE} = [\theta_{1} , \ldots ,\theta_{n + 1} ]^{T}\) using Eq. (19).

-

15.

Draw a sample \(\overline{Z}\left( {x_{s} } \right)\) randomly from the distribution function in Eq. (21).

-

16.

Continue to Step (2) until all of the grid is simulated.

3 Examples and Comparisons

Applied aspects of the simulation method presented in the previous section are examined in this section using two-dimensional horizontal layers from the Stanford V fluvial reservoir (Mao and Journel 1999). Selected layers represent exhaustive images that are then sampled to generate data sets subsequently used to generate simulations with the proposed new non-stationary high-order spatial transformation-invariant simulation method, HOSTSIM. The statistics of the simulated realizations, namely, histogram, variogram map and third-order L-shaped cumulant map, are then compared to the ones calculated from the initial data. In addition, the simulations generated by the HOSTSIM method are compared to those generated by the well-known FILTERSIM multiple-point simulation method (Zhang et al. 2006) through its implementation available in the public domain (Remy et al. 2009).

Figure 3 shows two exhaustive images used for the simulations \( E_{1}\) and \(E_{2}\); each one is a horizontal section of the complete data with size of \(100 \times 100m^{2}\). Two sets of data are then selected from each section \(E_{1}\) and \(E_{2}\), one sampled at 625 and the other at 156 locations, respectively, resulting in four different data sets, namely \(DS_{1}\) (625 points from \(E_{1}\)), \(DS_{2}\) (156 points from \(E_{1}\)), \(DS_{3}\) (625 points from \(E_{2}\)) and \(DS_{4}\) (156 points from \(E_{2}\)), as shown in Fig. 4. The TI used for all simulations is a different section selected from the Stanford V fluvial reservoir and is shown in Fig. 5.

In each example, one of the data sets in Fig. 4 and the TI in Fig. 5 are used as input for simulations, and two realizations are generated by each method noted above. The exhaustive images, the input data and the realizations generated by HOSTSIM and FILTERSIM are illustrated in Figs. 6, 7, 8 and 9 for data samples \(DS_{1}\) through \(DS_{4}\), along with their respective validation graphs. The validation graphs are generated for both the input data and the HOSTSIM simulation and include the histogram, variogram map and third-order L-shaped spatial cumulant map. Note that the second-order variogram map of an image represents the variogram for each lag direction, that is, \(r \in \left[ {0,65} \right]\) and \(\theta \in \left\{ {0,\frac{\pi }{30}, \ldots ,\pi } \right\}\), in a polar coordinate system. The third-order L-shaped spatial cumulant of an image is generated by using an L-shaped template, which represents two orthogonal lag vectors from a common point. For all possible pairs of horizontal and vertical lags, the template is shifted over the image to extract the third-order cumulant by averaging the values calculated for each pattern within the image (Mustapha and Dimitrakopoulos 2010b, 2011a).

Example 1: The \(E_{1}\) exhaustive image is provided in the top row (left), and the input image \(DS_{1}\) is in the second row. Two HOSTSIM and FILTERSIM simulations are in the second and third columns (top section), followed by the histogram, variogram map and third-order spatial cumulant map below

Example 2: The \(E_{2}\) exhaustive image is shown in the top row (left), and the input image \(DS_{4}\) is provided in the second row. The two HOSTSIM and FILTERSIM simulations are in the second and third columns (above), followed by the histogram, variogram map and third-order spatial cumulant map (below)

3.1 Example 1

Figures 6 and 7 show the results generated from \(DS_{1}\) and \(DS_{3}\) with 625 data samples. There are low-contrast regions in the HOSTSIM realizations (light blue/green area seen toward the bottom of \(E_{1}\) in Fig. 6, and in the bottom-right and top-center of the \(E_{2}\) realization in Fig. 7) in both realizations. This behaviour is expected, as a denser set of data is available in these regions, and HOSTSIM can match similar patterns within the TI and better simulate the corresponding nodes. This is also the case in the high-value areas on the simulated realization (red-coloured regions, or channels). In general, both HOSTSIM and FILTERSIM perform well in reproducing the horizontal channels, which are along the preferential direction in both the exhaustive image and the denser data samples. However, HOSTSIM shows better performance than FILTERSIM in reproducing the connectivity along the vertical channels, as can be seen from the major vertical channels in the left part of the exhaustive image and the related realizations. In this situation, the concept of weighted high-order spatial statistics plays an important role; accordingly, HOSTSIM demonstrates an advantage because the samples along the vertical channels are given more weight according to their similarity to the sample data events even when they are less frequent. Although there are some cases where the connectivity of the channels is not well generated, for example in the top-right part of both realizations in Fig. 7, the narrow channel is not well represented in the data, and the HOSTSIM cannot accurately estimate the high-order statistic of the data event to match appropriate patterns from either the data or the TI. The histogram and variogram graphs of the HOSTSIM simulations are similar to those calculated for \(DS_{1}\) and \(DS_{3}\) in both Figs. 6 and 7. Similarity of the third-order spatial cumulants can also be observed from Figs. 6 and 7.

3.2 Example 2

The HOSTSIM realizations are presented in Figs. 8 and 9. As the number of input data are reduced to 156 samples, the quality of the results is reduced (relative to Example 1). The low-contrast regions are, however, simulated in these examples (light blue/green areas). Some parts of the channels are not generated in both realizations (the center-left part of the channel in Fig. 8 and the center-left and bottom-left part of the channels in Fig. 9) due to a lack of high-value samples in those regions, which are necessary in order to represent narrow channels. On the other hand, some channels are merged in the HOSTSIM realizations (center-right part of both Figs. 8 and 9), which is due to a lack of low-value samples between two channels in the input data samples. The validation graphs of the HOSTSIM simulations are similar to the ones generated for the input data samples in Figs. 8 and 9, although they are somewhat degraded due to the sparsity of the input data. While the HOSTSIM realizations generated for this example are somewhat degraded compared with the previous example, there is still better connectivity in the data than in the results from FILTERSIM; for example, the channel in the center-right part of Fig. 8 is partially generated in HOSTSIM realizations but not well presented in the FILTERSIM realizations. Low-contrast regions are better represented in the HOSTSIM realizations as well (see the bottom-left part of Fig. 8). Regarding the reproduction of high-order spatial statistics, the third-order L-shaped spatial cumulants are well generated for the lags < 50 m. Considering that replicates with lags larger than 50 m are relatively few because the samples are taken from an area of 100 \(\times\) 100 m2, given the sparsity of the samples, the inference of spatial cumulants within a short-scale range is more reliable in terms of comparisons.

To complete our analysis, two commonly used similarity measures in computer vision are applied here to compare the realizations generated by HOSTSIM and FILTERSIM for all cases. These measures are the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) (Salomon 2007). The PSNR is a point-to-point difference measure between two images in the logarithmic scale; in particular, the realization image is compared with the exhaustive image from point to point. Realizations that are more similar to the exhaustive image result in greater values, and where PSNR values approach infinity, the images are identical. Hence, this measure is useful for qualitatively comparing two realizations generated for an exhaustive image, as the PSNR values do not have an absolute interpretation. The SSIM, on the other hand, provides an absolute quantity comparing the similarity between a realization and an exhaustive image with a value between 0 and 1, where SSIM = 1 indicates identical images. Table 1 presents the PSNR and SSIM results calculated for HOSTSIM and FILTERSIM realizations generated for all four data sets (\(DS_{1}\), \(DS_{2}\), \(DS_{3}\) and \(DS_{4} )\). The reference exhaustive image for each data set is defined as the reference image in the calculation of PSNR and SSIM. HOSTSIM realizations are more similar to the exhaustive images in all cases. The SSIM values of the HOSTSIM realizations are \(\approx 10{\text{\% }}\) more accurate than the FILTERSIM realizations.

4 Conclusions

A new high-order, non-stationary stochastic simulation method was introduced in this paper. Given a spatially sparse set of data and a TI, this method simulates realizations of attributes of interest on a finer spatial grid. The method is designed to use only training patterns that respect the local high-order statistics of the simulation pattern by comparing the spatial high-order statistics of the data events of both the training and the target simulation patterns.

The high-order statistics of the data event of each simulation pattern is first calculated and compared with high-order statistics of the data event calculated for the training patterns with a similar spatial template. The contribution of each training pattern in simulating a pattern is determined by a weight calculated based on the similarity of the high-order statistics of the training and simulation patterns. The calculated weights are designed to be invariant against ordering the data event nodes. The high-order statistics of the simulation pattern is then estimated by calculating the non-stationary maximum likelihood estimate over the training patterns by giving more weight to the most similar patterns, after which a sample is drawn from the distribution function fitted to the estimated high-order statistics. The use of the high-order statistics in this simulation method ensures the ability to capture and reproduce complex patterns in the generated simulations. Meanwhile, using the non-stationarity similarity measure ensures that only training patterns that respect the local statistics of the simulation are used in the estimation of the high-order spatial statistics of the pattern. Notably, (i) non-stationary weight reduces the numerical instabilities that may be encountered in stationary high-order simulation methods, and (ii) the transformation-invariant quality of the measure ensures that different orientations of each TI are considered for the simulation of the pattern. The latter allows for data-driven simulations even without the use of a TI. Two set of examples have been used for testing the proposed method. The first contains 625 data points and the second contains a very sparse set of 156 data points. The simulation results are calculated on a 100 × 100 spatial grid. The training patterns are extracted from a 100 × 100 grid image. Despite the sparsity of the data in both examples, the method is able to simulate the channels in the initial exhaustive image. This is due to the utilization of a non-stationary rotation invariant weighting for the contribution of the patterns based on the similarity of the high-order statistics of their data events.

References

Abolhassani AAH, Dimitrakopoulos R, Ferrie FP (2017) A new high-order, nonstationary, and transformation invariant spatial simulation approach. In: Gómez-Hernández J, Rodrigo-Ilarri J, Rodrigo-Clavero M, Cassiraga E, Vargas-Guzmán J (eds) Geostatistics Valencia 2016. Springer, Dordrecht

Abramowitz M (1974) Handbook of mathematical functions. Dover Publications, New York

Arpat GB, Caers J (2007) Conditional simulation with patterns. Math Geol 39(2):177–203

Boyd JP, Ong JR (2011) Exponentially-convergent strategies for defeating the runge phenomenon for the approximation of non-periodic functions, part two: multi-interval polynomial schemes and multi-domain Chebyshev interpolation. Appl Numer Math 61(4):460–472

Chatterjee S, Dimitrakopoulos R, Mustapha H (2012) Dimensional reduction of pattern-based simulation using wavelet analysis. Math Geosci 44(3):343–374. https://doi.org/10.1007/s11004-012-9387-4

Chiles JP, Delfiner P (2012) Geostatistics: modeling spatial uncertainty, 2nd edn. Wiley, Hoboken

Cressie N (1986) Kriging nonstationary data. J Am Stat Assoc 81(395):625–634. https://doi.org/10.2307/2288990

David M (1977) Geostatistical ore reserve estimation. Elsevier, Amsterdam

David M (1988) Handbook of applied advanced geostatistical ore reserve estimation. Elsevier, Amsterdam

de Carvalho JP, Dimitrakopoulos R, Minniakhmetov I (2019) High-order block support spatial simulation method and its application at a gold deposit. Math Geosci 51(6):793–810. https://doi.org/10.1007/s11004-019-09784-x

de Vries LM, Carrera J, Falivene O, Gratacós O, Slooten LJ (2008) Application of multiple point geostatistics to non-stationary images. Math Geosci 41(1):29. https://doi.org/10.1007/s11004-008-9188-y

De Iaco S, Maggio S (2011) Validation techniques for geological patterns simulations based on variogram and multiple-point statistics. Math Geosci 43(4):483–500. https://doi.org/10.1007/s11004-011-9326-9

Dimitrakopoulos R, Mustapha H, Gloaguen E (2010) High-order statistics of spatial random fields: exploring spatial cumulants for modeling complex non-Gaussian and non-linear phenomena. Math Geosci 42(1):65–99. https://doi.org/10.1007/s11004-009-9258-9

Funkhouser HG (1930) A short account of the history of symmetric functions of roots of equations. Am Math Month 37(7):357–365

Gómez-Hernández JJ, Srivastava RM (2021) One step at a time: the origins of sequential simulation and beyond. Math Geosci 53(2):193–209. https://doi.org/10.1007/s11004-021-09926-0

Goodfellow R, Consuegra FA, Dimitrakopoulos R, Lloyd T (2012) Quantifying multi-element and volumetric uncertainty, Coleman McCreedy deposit, Ontario, Canada. Comput Geosci 42:71–78

Goovaerts P (1997) Geostatistics for natural resources evaluation. Oxford University Press, New York

Guardiano FB, Srivastava RM (1993) Multivariate geostatistics: beyond bivariate moments. In: Soares A (ed) Geostatistics Tróia ‘92. Springer, Dordrecht

Honarkhah M, Caers J (2010) Stochastic simulation of patterns using distance-based pattern modeling. Math Geosci 42(5):487–517. https://doi.org/10.1007/s11004-010-9276-7

Hu LY, Chugunova T (2008) Multiple-point geostatistics for modeling subsurface heterogeneity: a comprehensive review. Water Resour Res. https://doi.org/10.1029/2008WR006993

Journel AG (2005) Beyond covariance: the advent of multiple-point geostatistics. In: Leuangthong O, Deutsch CV (eds) Geostatistics Banff 2004. Springer, Dordrecht

Journel AG, Huijbregts CJ (1978) Mining geostatistics. Academic Press, London

Landau L, Lifshitz E (1980) Statistical physics, 3rd edn. Pergamon Press, Oxford

Liu D, Wang Z, Zhang B, Song K, Li X, Li J, Li F, Duan H (2006) Spatial distribution of soil organic carbon and analysis of related factors in croplands of the black soil region, northeast China. Agric Ecosyst Environ 113(1):73–81

Lochbühler T, Doetsch J, Brauchler R, Linde N (2013) Structure-coupled joint inversion of geophysical and hydrological data. Geophysics 78(3):1–14

Machuca-Mory DF, Deutsch CV (2013) Non-stationary geostatistical modeling based on distance weighted statistics and distributions. Math Geosci 45(1):31–48. https://doi.org/10.1007/s11004-012-9428-z

Mao S, Journel A (1999) Generation of a reference petrophysical/seismic data set: the Stanford V reservoir. In: Proceedings of the 12th annual report, Stanford center for reservoir forecasting. Stanford, CA, USA

Mariethoz G, Renard P, Straubhaar J (2010) The direct sampling method to perform multiple-point geostatistical simulations. Water Resour Res 46:W11536. https://doi.org/10.1029/2008WR007621

Minniakhmetov I, Dimitrakopoulos R (2016) Joint high-order simulation of spatially correlated variables using high-order spatial statistics. Math Geosci 49(1):39–66. https://doi.org/10.1007/s11004-016-9662-x

Minniakhmetov I, Dimitrakopoulos R (2021) High-order data-driven spatial simulation of categorical variables. Math Geosci. https://doi.org/10.1007/s11004-021-09943-z

Minniakhmetov I, Dimitrakopoulos R, Godoy M (2018) High-order spatial simulation using Legendre-like orthogonal splines. Math Geosci. https://doi.org/10.1007/s11004-018-9741-2

Mustapha H, Dimitrakopoulos R (2010a) Generalized Laguerre expansions of multivariate probability densities with moments. Comput Math Appl 60(7):2178–2189

Mustapha H, Dimitrakopoulos R (2010b) High-order stochastic simulation of complex spatially distributed natural phenomena. Math Geosci 42(5):457–485. https://doi.org/10.1007/s11004-010-9291-8

Mustapha H, Dimitrakopoulos R (2011a) Hosim: a high-order stochastic simulation algorithm for generating three-dimensional complex geological patterns. Comput Geosci 37(9):1242–1253

Mustapha H, Dimitrakopoulos R (2011b) A new approach for geological pattern recognition using high-order spatial cumulants. Comput Geosci 36(3):313–334

Mustapha H, Dimitrakopoulos R, Chatterjee S (2011) Geologic heterogeneity representation using high-order spatial cumulants for subsurface flow and transport simulations. Water Resour Res 47:W08536. https://doi.org/10.1029/2010WR009515

Mustapha H, Chatterjee S, Dimitrakopoulos R (2014) CDFSIM: efficient stochastic simulation through decomposition of cumulative distribution functions of transformed spatial patterns. Math Geosci 46(1):95–123. https://doi.org/10.1007/s11004-013-9490-1

Osterholt V, Dimitrakopoulos R (2007) Simulation of wireframes and geometric features with multiple-point techniques: application at Yandi iron ore deposit. In: Orebody modelling and strategic mine planning, 2nd ed., AusIMM, Spectrum Series vol 14. pp 95–124.

Remy N, Boucher A, Wu J (2009) Applied geostatistics with SGeMS : a user’s guide. Cambridge University Press, Cambridge, UK

Rezaee H, Mariethoz G, Koneshloo M, Asghari O (2013) Multiple-point geostatistical simulation using the bunch-pasting direct sampling method. Comput Geosci 54:293–308

Salomon D (2007) Data compression: the complete reference. Springer, New York. doi: https://doi.org/10.1007/978-1-84628-603-2

Stien M, Kolbjørnsen O (2011) Facies modeling using a Markov mesh model specification. Math Geosci 43(6):611–624

Strebelle S (2002) Conditional simulation of complex geological structures using multiple-point statistics. Math Geol 34(1):1–21

Strebelle S (2021) Multiple-point statistics simulation models: pretty pictures or decision-making tools? Math Geosci 53(2):267–278. https://doi.org/10.1007/s11004-020-09908-8

Strebelle S, Cavelius C (2014) Solving speed and memory issues in multiple-point statistics simulation program SNESIM. Math Geosci 46(2):171–186. https://doi.org/10.1007/s11004-013-9489-7

Tamayo-Mas E, Mustapha H, Dimitrakopoulos R (2016) Testing geological heterogeneity representations for enhanced oil recovery techniques. J Petrol Sci Eng 146:222–240

Toftaker H, Tjelmeland H (2013) Construction of binary multi-grid Markov random field prior models from training images. Math Geosci 45(4):383–409. https://doi.org/10.1007/s11004-013-9456-3

Yao L, Dimitrakopoulos R, Gamache M (2018) A new computational model of high-order stochastic simulation based on spatial Legendre moments. Math Geosci 50:929–960. https://doi.org/10.1007/s11004-018-9744-z

Yao L, Dimitrakopoulos R, Gamache M (2020) High-order sequential simulation via statistical learning in reproducing kernel Hilbert space. Math Geosci 52(5):693–723. https://doi.org/10.1007/s11004-019-09843-3

Yao L, Dimitrakopoulos R, Gamache M (2021) Training image free high-order stochastic simulation based on aggregated kernel statistics. Math Geosci 53(7):1469–1489. https://doi.org/10.1007/s11004-021-09923-3

Zhang T, Switzer P, Journel A (2006) Filter-based classification of training image patterns for spatial simulation. Math Geol 38(1):63–80

Zhang T, Gelman A, Laronga R (2017) Structure- and texture-based fullbore image reconstruction. Math Geosci 49(2):195–215. https://doi.org/10.1007/s11004-016-9649-7

Acknowledgements

This work was funded from Natural Science and Engineering Research Council of Canada (NSERC) Collaborative Research and Development Grant CRDPJ 500414-16, NSERC Discovery Grant 239019, and mining industry partners of the COSMO Stochastic Mine Planning Laboratory (AngloGold Ashanti, Barrick Gold, BHP, De Beers Canada, IAMGOLD Kinross Gold, Newmont and Vale).

Author information

Authors and Affiliations

Corresponding author

Appendix: Similarity Measures

Appendix: Similarity Measures

PSNR and SSIM (Salomon 2007) are two image similarity measures that are used in this paper to evaluate the simulation quality. PSNR (peak signal-to-noise ratio) is a measure calculated by comparing a reconstructed image to the original image. Assuming two \(m \times n\) images denoted \(I\) (reference) and \(K\) (reconstructed), first the mean-square error is calculated according to the following equation

The PSNR is then calculated as

This function returns a real value between zero and infinity. Greater values of PSNR imply better quality.

The structural similarity index (SSIM) is another quality measure used in this paper; it evaluates similarity according to a relative measure varying between 0 and 1 that compares an image to a reference image. Images that are more similar to the reference image result in SSIM values closer to 1. For the calculation of SSIM, two images \(I\) (reference) and \(K\) (reconstructed) of size \(m \times n\) are considered. Multiple smaller windows are generated from both images. Consider \(x\) and \(y\) representing two windows from \(I\) and \(K\). SSIM is calculated by

where \(\mu_{x}\) and \(\mu_{y}\) are the mean values over \(x\) and \(y\) windows. The parameters \(\sigma_{x}\), \(\sigma_{y}\) and \(\sigma_{xy}\) are the variance of \(x\) and \(y\) and the covariance between \(x\) and \(y\), respectively. The values \(c_{1}\) and \(c_{2}\) are constants.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Haji Abolhassani, A.A., Dimitrakopoulos, R., Ferrie, F.P. et al. A New Non-stationary High-order Spatial Sequential Simulation Method. Math Geosci 54, 1097–1119 (2022). https://doi.org/10.1007/s11004-022-10004-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11004-022-10004-2