Abstract

This paper investigates Kleinberg’s axioms (from both an intuitive and a formal standpoint) as they relate to the well-known k-means clustering method. The axioms, as well as novel variations thereof, are analyzed in Euclidean space. A few natural properties are proposed, resulting in k-means satisfying the intuition behind Kleinberg’s axioms (or, rather, a small and natural variation on that intuition). In particular, two variations of Kleinberg’s consistency property are proposed, called centric consistency and motion consistency. It is shown that these variations of consistency are satisfied by k-means.

1 Introduction

One of the important areas of machine learning is cluster analysis, or clustering. Its importance stems from countless application domains, such as agriculture, industry, business and healthcare. Cluster analysis seeks to split a set of items into subsets (usually disjoint, though not necessarily, and possibly forming a hierarchy) called clusters or groups, such that items are similar within a cluster and dissimilar between clusters. Additional criteria, such as group balancing or lower and upper limits on group size, may also be taken into account.

As the diversity of clustering methods grows, there is strong pressure to find a formal framework that gives a systematic overview of the expected properties of the partitions obtained. An axiomatic framework is needed, among others, for the following purposes: (1) a common understanding of the goals of clustering, (2) a common testing ground for comparison of various algorithms applied to the same data set, (3) predictability of algorithm behavior for similar clustering tasks, (4) predictability of partition properties of a population from the properties of the partition of a (sufficiently large) sample. The above-mentioned goals are already pursued for classification algorithms.

A number of axiomatic frameworks have been devised for methods of clustering, the most cited probably being Kleinberg’s system (Kleinberg, 2002) (Footnote 1). Kleinberg (2002, Sect. 2) defines clustering functions and the distance as follows.

Definition 1

“A clustering function is a function f that takes a distance function d on [set] S [of size \(n\ge 2\)] and returns a partition \(\Gamma\) of S. The sets in \(\Gamma\) will be called its clusters. We note that, as written, a clustering function is defined only on point sets of a particular size (n); however, all the specific clustering functions we consider here will be defined for all values of n larger than some small base value.”

Definition 2

“With the set \(S=\{1,2,\dots ,n\}\) [...] we define a distance function to be any function \(d : S \times S \rightarrow {\mathbb {R}}\) such that for distinct \(i,j\in S\) we have \(d(i,j)\ge 0, d(i,j)=0\) if and only if \(i=j\), and \(d(i,j)=d(j,i)\). One can optionally restrict attention to distance functions that are metrics by imposing the triangle inequality: \(d(i,k)\le d(i,j)+d(j,k)\), for all \(i,j,k\in S\). We will not require the triangle inequality [...] , but the results to follow both negative and positive still hold if one does require it.”

Kleinberg does not require the triangle inequality. But if we consider Euclidean space, the natural domain of the k-means algorithm, then the triangle inequality is implied. Euclidean space imposes even stronger constraints (especially in higher dimensions). For this reason it is not quite accurate to state that both the positive and the negative results of Kleinberg hold when such constraints on distance are imposed. In particular, we will show that Kleinberg’s claim that k-means is not consistent does not hold in one-dimensional Euclidean space (see our Theorem 3) and that Kleinberg’s proof of k-means inconsistency is not valid in low-dimensional Euclidean spaces (see our Lemma 12).

Embedding into Euclidean space raises the question of the behavior not only of the points observed in the data sample, but also of those not present, that is, of the actual shape of a cluster and of the clustering/partition in general, as well as of the continuity of any transformation of the data set. So issues will be discussed here that go beyond the framework considered by Kleinberg.

Kleinberg (2002) claims that a good partition may only be a result of a reasonable method of clustering. Hence he formulated axioms for clustering methods of distance-based cluster analysis. He postulates that some quite “natural” axioms need to be met when we manipulate the distances between objects. As the axioms proved not to be applicable to all clustering algorithms, some authors, e.g. Ackerman et al. (2010), recommend speaking of properties instead. We will use the terms “axiom” and “property” interchangeably.

Property 1

(richness property) “Let Range(f) denote the set of all partitions \(\Gamma\) such that \(f(d) = \Gamma\) for some distance function d. Range(f) is equal to the set of all partitions of S.”

Property 2

(scale-invariance property) “For any distance function d and any \(\alpha > 0\), we have \(f(d) = f(\alpha \cdot d)\).”

Property 3

(consistency property) “Let \(\Gamma\) be a partition of S, and d and \(d'\) two distance functions on S. We say that \(d'\) is a \(\Gamma\)-transformation of d if (a) for all \(i, j \in S\) belonging to the same cluster of \(\Gamma\), we have \(d'(i, j) \le d(i, j)\) and (b) for all \(i, j \in S\) belonging to different clusters of \(\Gamma\), we have \(d'(i, j) \ge d(i, j)\). Let d and \(d'\) be two distance functions. If \(f(d) =\Gamma\), and \(d'\) is a \(\Gamma\)-transformation of d, then \(f(d') = \Gamma\).”
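Conditions (a) and (b) of a \(\Gamma\)-transformation can be checked mechanically. The following is a minimal sketch only; the function names and the encoding of a distance function as a dictionary keyed by unordered pairs are assumptions made for this illustration, not part of Kleinberg's formulation.

```python
from itertools import combinations


def distances_on_line(coords):
    """Pairwise distances |x_i - x_j| for points given by 1-d coordinates."""
    return {frozenset((i, j)): abs(coords[i] - coords[j])
            for i, j in combinations(sorted(coords), 2)}


def is_gamma_transformation(d, d_prime, cluster_of):
    """True if d_prime is a Gamma-transformation of d.

    d, d_prime: dicts mapping frozenset({i, j}) to a distance (illustrative encoding).
    cluster_of: dict mapping each point to its cluster label in Gamma.
    """
    for i, j in combinations(sorted(cluster_of), 2):
        pair = frozenset((i, j))
        if cluster_of[i] == cluster_of[j]:
            if d_prime[pair] > d[pair]:   # condition (a) violated
                return False
        elif d_prime[pair] < d[pair]:     # condition (b) violated
            return False
    return True


cluster_of = {1: "A", 2: "A", 3: "B"}
d = distances_on_line({1: 0.0, 2: 1.0, 3: 3.0})
shrunk = distances_on_line({1: 0.0, 2: 0.5, 3: 3.0})   # points 1 and 2 move closer
pulled = distances_on_line({1: 0.0, 2: 1.0, 3: 2.5})   # point 3 approaches cluster A

print(is_gamma_transformation(d, shrunk, cluster_of))
print(is_gamma_transformation(d, pulled, cluster_of))
```

In the first case only an intra-cluster distance shrinks and no inter-cluster distance decreases, so the check succeeds; in the second case an inter-cluster distance decreases, so it fails.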

The (scale-)invariance and consistency properties assume a transform of the data set, more precisely of a concrete distance function, while richness does not. We shall call these transforms invariance transforms and consistency transforms. Furthermore, consistency and its variants are related to a concrete partition of a data set, while the others are not. The classes of both invariance transforms and consistency transforms include the identity transform on the set of distances as a special case. We will say subsequently that a transform is applicable to a given partition iff the data can be transformed in such a way that at least one distance between data points differs before and after the transform. There exist trivial cases when the transforms are not applicable to the data set. One such case is a data set consisting of a single data point. We will then say that an algorithm does not have the non-trivial consistency property if there exist non-trivial data sets, that is, ones with more than 2 data points in each cluster, to which no consistency transformation is applicable.

In this paper we concentrate on the mismatch and reconciliation between the very popular k-means algorithm and Kleinberg’s axiomatic system. k-means fulfils only one of Kleinberg’s three axioms, namely scale-invariance. The k-means clustering algorithm seeks, for a dataset \({\textbf{X}}\), to minimize the function (Footnote 2)

\[Q(\Gamma )=\sum _{i}\sum _{j=1}^{k} u_{ij}\Vert {{\textbf {x}}}_i-\varvec{\mu }_j\Vert ^2\]

under some partition \(\Gamma\) into k clusters, where i ranges over all data points of \({\textbf{X}}\) and \(u_{ij}\) is an indicator (\(u_{ij}\in \{0,1\}\)) of the membership of data point \({{\textbf {x}}}_i\) in the cluster \(C_j\) having the center at \(\varvec{\mu }_j\).

We will call k-means-ideal an algorithm that finds a \(\Gamma _{opt}\) attaining the minimum of the function \(Q(\Gamma )\). Finding such a partition is known to be a hard task.
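To make the quality function concrete, the sketch below evaluates the standard k-means cost of a given partition, that is, the sum of squared Euclidean distances of the points to the gravity center of their cluster. The function names and the toy data are assumptions of this illustration.

```python
def gravity_center(points):
    """Coordinate-wise mean of a non-empty list of points (tuples)."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def kmeans_cost(clusters):
    """Sum over clusters of squared Euclidean distances to the cluster's gravity center."""
    total = 0.0
    for points in clusters:
        mu = gravity_center(points)
        total += sum(sum((x - m) ** 2 for x, m in zip(p, mu)) for p in points)
    return total


# Four corners of a 10 x 2 rectangle, partitioned in two different ways.
left_right = [[(0.0, 0.0), (0.0, 2.0)], [(10.0, 0.0), (10.0, 2.0)]]
bottom_top = [[(0.0, 0.0), (10.0, 0.0)], [(0.0, 2.0), (10.0, 2.0)]]

print(kmeans_cost(left_right))  # 4.0
print(kmeans_cost(bottom_top))  # 100.0
```

An algorithm in the sense of k-means-ideal would have to return the first of these two partitions for \(k=2\), since it has the lower cost.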

There exists a whole stream of research papers that approximate k-means-ideal within a reasonable error bound (e.g. \(9+\epsilon\) by Kanungo et al. (2002)) via cleverly initialized k-means type algorithms, e.g. k-means++, like (Song & Rajasekaran, 2010), or by some other types of algorithms, like (robust) single link, or that approximate not so much the partition into k clusters as the quality function, by using a higher \(k'\) when clustering. Assumptions are possibly made about the structure of the data, e.g. about sufficiently large gaps between the clusters, or low variance compared to cluster center distances. These investigations aim at reducing the complexity of the clustering task. But in practice, when special constraints cannot be assumed, an algorithm with the following structure is used:

1. Initialize k cluster centers \(\varvec{\mu }_1,\dots ,\varvec{\mu }_k\).

2. Assign each data element \({\textbf{x}}_i\) to the cluster \(C_j\) identified by the closest \(\varvec{\mu }_j\).

3. Update \(\varvec{\mu }_j\) of each cluster \(C_j\) as the gravity center of data elements in \(C_j\).

4. Repeat steps 2 and 3 until reaching a stop criterion (no change of cluster membership, or no sufficient improvement of the objective function, or exceeding some maximum number of iterations, or some other criterion).
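The four steps can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than a reference implementation: the names are made up for this sketch, step 1 is left to the caller, the stop criterion is "no change of cluster membership", and an empty cluster simply keeps its old center.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def gravity_center(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def lloyd(points, centers, max_iter=100):
    """Steps 2-4 of the scheme; the initial centers (step 1) are supplied by the caller."""
    centers = list(centers)
    assignment = None
    for _ in range(max_iter):
        # Step 2: assign each point to the cluster of its closest center.
        new_assignment = [
            min(range(len(centers)), key=lambda j: squared_distance(p, centers[j]))
            for p in points
        ]
        # Step 4: stop when cluster membership no longer changes.
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Step 3: move each center to the gravity center of its cluster
        # (an empty cluster keeps its old center; a choice made for this sketch).
        for j in range(len(centers)):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                centers[j] = gravity_center(members)
    return assignment, centers


points = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)]
good_labels, _ = lloyd(points, [(0.0, 0.0), (5.0, 0.0)])
poor_labels, _ = lloyd(points, [(0.0, 0.0), (0.0, 1.0)])
print(good_labels)  # left pair versus right pair
print(poor_labels)  # bottom pair versus top pair
```

With the second initialization the iteration stops at a partition that is only a local minimum of the objective, which illustrates why the choice made in step 1 matters.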

If step 1 is performed as random uniform sampling from the set of data points (without replacement), then we will speak of the k-means-random algorithm. If step 1 is performed according to the k-means++ heuristic proposed by Arthur and Vassilvitskii (2007), then we will speak of the k-means++ algorithm. Both attain a local minimum at worst, though k-means++ has the advantage that the expected value of Q it attains is worse than that of k-means-ideal by a factor of at most \(O(\ln k)\), while k-means-random has no such guarantee.

The k-means++ algorithm makes the initial guess of cluster centers as follows. \(\varvec{\mu }_1\) is set to be a data point uniformly sampled from \({\textbf{X}}\). The subsequent cluster centers are data points picked from \({\textbf{X}}\) with probability proportional to the squared distance to the closest cluster center chosen so far. For details check (Arthur & Vassilvitskii, 2007). The algorithm proposed by Ostrovsky et al. (2013) differs from the k-means++ only by the non-uniform choice of the first cluster center (the first pair of cluster centers should be distant, and the choice of this pair is proportional in probability to the squared distances between data elements).
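The seeding rule described above can be sketched as follows. The function name, the explicit random generator argument and the toy data are assumptions of this illustration; the sketch also assumes that k does not exceed the number of distinct data points.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeanspp_seeds(points, k, rng):
    """Pick k initial centers from `points` by squared-distance sampling."""
    centers = [rng.choice(points)]  # first center: uniform over the data
    while len(centers) < k:
        # Weight of a point: squared distance to its closest center chosen so far.
        weights = [min(squared_distance(p, c) for c in centers) for p in points]
        centers.append(rng.choices(points, weights=weights, k=1)[0])
    return centers


points = [(0.0,), (0.1,), (5.0,), (5.1,), (10.0,)]
seeds = kmeanspp_seeds(points, 3, random.Random(0))
print(seeds)
```

A point that has already been chosen has weight zero, so it cannot be drawn again; distant points are favored, which is what spreads the initial centers over the data.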

We shall also discuss a variant of bisectional-k-means by Steinbach et al. (2000). Bisectional-k-means consists in the recursive application of 2-means, \(k-1\) times, to the cluster of highest cardinality. Our variant, bisectional-auto-means, differs in that the number of clusters is selected by the algorithm itself. It forbids clusters with cardinality below 2 and it stops if no cluster split leads to a relative decrease of Q of that cluster above some threshold. The algorithm is scale-invariant (relative threshold) and it is also rich “to a large extent” (cluster size \(\ge 2\), we call it the \(2++\)-near-richness property). We will discuss consistency (Theorem 26).

We will also touch upon, in Sect. 8.1, the incremental k-means discussed by Ackerman and Dasgupta (2014). This k-means version does not guarantee reaching a local minimum and has been used in Ackerman and Dasgupta (2014) purely for some theoretical investigations of clusterability. We will use it to demonstrate that enclosure in a ball is a vital issue if we want to discuss cluster separation.

In \({\mathbb {R}}^m\), one would expect that small increases in inter-cluster distances and small decreases in inner-cluster distances should keep the clustering intact. We discuss this in Sect. 3.2.

We demonstrate that, in fixed-dimensional Euclidean space, Kleinberg’s consistency axiom is counterintuitive, in particular for k-means, and that its replacement with centric consistency and motion consistency yields a reasonable axiomatic description of k-means behavior. Our contributions are:

- We show that Kleinberg’s proof of non-consistency of k-means is not applicable in fixed-dimensional space. k-means is consistent in 1d (see Sect. 3.1). For higher dimensions, non-consistency must be proven differently than Kleinberg did (Sect. 3.2).

- We show in Sect. 4.2 that the consistency property alone leads to contradictions in \({\mathbb {R}}^m\).

- We propose a reformulation of Kleinberg’s axioms in accordance with the intuitions and demonstrate that under this reformulation the axioms cease to be contradictory (Sect. 6). In particular, we introduce the notion of centric-consistency (Sect. 5). We provide a clustering function that fits the axioms of near-richness, scale-invariance and centric-consistency.

- We show that k-means is centric-consistent (Sect. 5). Hence a real-world algorithm like k-means conforms to an axiomatic system consisting of centric-consistency, scale-invariance and k-richness (Sect. 5).

- We demonstrate in Sect. 6 that a natural constraint imposed onto Kleinberg’s consistency leads directly to the requirement of linear scaling, so that centric consistency can be considered less restrictive than his.

- We demonstrate that there exists an algorithm (from the k-means family) that matches the axioms of constrained consistency, richness and invariance.

- As centric consistency imitates only the consistency inside a cluster, we introduce the notion of motion consistency to approximate the consistency property outside a cluster, and show that k-means is motion-consistent (Sect. 7) only if a gap between clusters is imposed.

- We show that appropriately designed gaps induce local minima (Sect. 8) for k-means and formulate conditions under which the gap leads to a global minimum for k-means (Sect. 9).

- We propose an alternative approach to reconcile Kleinberg’s axioms with k-means by either relaxing centric consistency to inner cluster consistency or k-richness to an approximation of richness (Sects. 8 and 9).

We start this paper with a review of the previous work on the development of an axiomatic system (Sect. 2) and round it off with a discussion of some open problems (Sect. 10).

2 Previous work

Axiomatic systems for clustering may be traced back to as early as 1973, when Wright (1973) proposed axioms for clustering functions creating unsharp partitions, similar to fuzzy systems. In his framework every domain object was assigned a positive real-valued weight that could be distributed among multiple clusters.

In general, as pointed out by van Laarhoven and Marchiori (2014) and Ben-David and Ackerman (2009), the clustering axiomatic frameworks address either: (1) required properties of clustering functions, or (2) required properties of the values of a clustering quality function, or (3) required properties of the relation between qualities of different partitions.

One prominent axiomatic set, that was later fiercely discussed, was that of Kleinberg, as already stated. From the point of view of the above classification, it imposes restrictions on the clustering function itself.

2.1 Contradictions and counterintuitiveness of Kleinberg’s axioms

Kleinberg demonstrated that his three axioms (Properties 1, 2, 3) cannot be met all at once. So Kleinberg’s work points at an important issue: we should first of all revise our expectations towards the obtained partition, as the seemingly obvious axiom set is not sound. He formulated the Impossibility Theorem.

Theorem 1

(Kleinberg 2002, Theorem 2.1) For each \(n\ge 2\), there is no clustering function f that satisfies Scale-Invariance, Richness, and Consistency.

An alternative proof to Kleinberg’s can be found in a paper by Ambroszkiewicz and Koronacki (2010), along with some discussion of Kleinberg’s concepts. Ackerman et al. (2010) prove slightly more general impossibility theorems (engaging inner-consistency and outer-consistency).

It has been observed by van Laarhoven and Marchiori (2014) that Kleinberg’s proof of the Impossibility Theorem ceases to be valid in the case of graph clustering.

Kleinberg also showed that his axioms can be met pair-wise. For the purpose of this demonstration he uses versions of the well-known statistical single-linkage procedure. The versions differ in the stopping condition:

- k-cluster stopping condition (which stops adding edges when the sub-graph first consists of k connected components) - not rich,

- Distance-r stopping condition (which adds edges of weight at most r only) - not scale-invariant,

- Scale-stopping condition (which adds edges of weight being at most some percentage of the largest distance between nodes) - not consistent.

Notice that, as demonstrated by Kleinberg in his paper, the popular k-median and k-means clustering algorithms also lack the consistency property. Ben-David and Ackerman (2009) drew attention, by an illustrative example (their Figure 2), to the fact that consistency is a problematic property by itself, as it may give rise to new clusters at micro or macro level.

2.2 Resolving contradictions by weakening richness

A number of relaxations of axioms related to clustering functions have been proposed in order to overcome Kleinberg’s impossibility result. We recall several of them here, based on an overview by Ackerman (2012) and a tutorial by Ben-David (2005). Kleinberg proposed a weakening of richness:

Property 4

The clustering method should allow obtaining any partition of the objects except the one into clusters each consisting of a single element (Kleinberg’s near-richness property).

However, he showed that this weakening does not resolve the basic contradiction in his axiomatic system, as the problem is more profound and related to so-called antichains. Kleinberg introduced the concept of a collection of partitions being an antichain.

Definition 3

We say that a partition \(\Gamma\) is a refinement of a partition \(\Gamma '\) if for every set \(C\in \Gamma\), there is a set \(C'\in \Gamma '\) such that \(C\subseteq C'\). We define a partial order on the set of all partitions by writing \(\Gamma \preceq \Gamma '\) if \(\Gamma\) is a refinement of \(\Gamma '\). Following the terminology of partially ordered sets, we say that a collection of partitions is an antichain if it does not contain two distinct partitions such that one is a refinement of the other.
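The refinement order and the antichain condition are easy to test on small examples. The sketch below encodes a partition as a set of sets; the function names and this encoding are assumptions of the illustration. It checks the collection of all partitions of a three-element set into exactly two clusters, and then the same collection extended by the one-cluster partition.

```python
from itertools import permutations


def partition(*clusters):
    """Hashable encoding of a partition as a frozenset of frozensets."""
    return frozenset(frozenset(c) for c in clusters)


def is_refinement(fine, coarse):
    """True if every cluster of `fine` is contained in some cluster of `coarse`."""
    return all(any(c <= big for big in coarse) for c in fine)


def is_antichain(collection):
    """True if no two distinct partitions in the collection are comparable."""
    return not any(is_refinement(p, q) for p, q in permutations(set(collection), 2))


two_clusters = [
    partition({1}, {2, 3}),
    partition({2}, {1, 3}),
    partition({3}, {1, 2}),
]
print(is_antichain(two_clusters))
print(is_antichain(two_clusters + [partition({1, 2, 3})]))
```

The first collection is an antichain, because partitions with the same number of clusters can only refine one another if they are identical. Adding the single-cluster partition destroys the property, since every partition refines it.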

Kleinberg showed that invariance and consistency imply the antichain property (his Theorem 3.1). The proof of this Theorem reveals, however, the mechanism behind creating the contradiction in his axiomatic system: the consistency operator creates new structures in the data.

As the set of partitions admitted by near-richness is not an antichain, restrictions on invariance or consistency obviously have to be introduced. It was also proposed to weaken Kleinberg’s richness (by Kleinberg himself) to k-richness:

Property 5

(Zadeh and Ben-David (2009)) For any partition \(\Gamma\) of the set \({\textbf{X}}\) consisting of exactly k clusters there exists such a distance function d that the clustering function f(d) returns this partition \(\Gamma\).

This relaxation (Footnote 3) allows some algorithms that split the data into a fixed number of clusters, like k-means, not to be immediately discarded as clustering algorithms, given that no cluster is allowed to be empty (Footnote 4). This is because the set of all partitions into k clusters for a fixed k is an antichain.

However, more strictly speaking, only k-means-ideal is k-rich. k-richness is problematic for randomized algorithms, like the k-means-random or k-means++, as their output is not deterministic. Therefore Ackerman et al. (2010) introduce the concept of probabilistic k-richness as:

Property 6

For any partition \(\Gamma\) of the set \({\textbf{X}}\) consisting of exactly k clusters and every \(\epsilon >0\) there exists such a distance function d that the clustering function returns this partition \(\Gamma\) with probability exceeding \(1-\epsilon\).

They postulate (omitting the proof) that the k-means-random algorithm possesses k-richness in this probabilistic sense.

Furthermore, weakening Kleinberg’s axioms via k-richness does not suffice to make k-means a clustering function, as it still violates the consistency axiom.

2.3 Resolving contradictions by weakening consistency

To overcome the problems with the consistency property, Ackerman et al. (2010) propose splitting it into the concepts of outer-consistency and inner-consistency.

Property 7

The clustering method is said to be outer-consistent if it delivers the same partition if one increases only distances between elements from different clusters and lets the distances within clusters remain unchanged.

Property 8

The clustering method is said to be inner-consistent if it delivers the same partition when one decreases only distances between elements from same cluster and lets the distances between elements of different clusters remain unchanged.

The k-means algorithm is said to be outer-consistent in this sense (Ackerman 2010, Section 5.2). However, the k-means algorithm is not inner-consistent, see (Ackerman 2010, Section 3.1). They prove that (1) no general clustering function can simultaneously satisfy outer-consistency, scale-invariance, and richness (Ackerman 2010, Theorem 1), and (2) no general clustering function can simultaneously satisfy inner-consistency, scale-invariance, and richness (Ackerman 2010, Lemma 1). So the split into inner- and outer-consistency inherits the problem of consistency and is in fact of no help. It only points out that k-means has a major problem with inner-consistency. They also claim that k-means-ideal has the properties of outer-consistency and locality (Footnote 5). Ackerman et al. (2010) show that these properties are satisfied neither by k-means-random nor by a k-means with furthest element initialization.

Still another relaxation of Kleinberg’s consistency is called Refinement Consistency. It is a modification of the consistency axiom by replacing the requirement that \(f(d)=f(d')\) with the requirement that one of \(f(d), f(d')\) is a refinement of the other. In particular, unidirectional coarsening consistency would mean f(d) is a refinement of \(f(d')\), and unidirectional refinement consistency would mean \(f(d')\) is a refinement of f(d). A partition \(\Gamma '\) is a refinement of a partition \(\Gamma\) if for each cluster \(c'\in \Gamma '\) there exists a cluster \(c\in \Gamma\) such that \(c'\subseteq c\). Obviously the replacement of the consistency requirement with refinement consistency breaks the impossibility proof of Kleinberg’s axiom system. The rationale behind this property is one of hierarchical clustering. A consistency transform may provide some substructure of a cluster so that recovering this cluster would make less sense than diving into the subclusters. On the other hand, the consistency transformation may lead to indiscernibility of the contents of one or more subclusters so that instead of recovering them a more general structure comprising these clusters would make sense. See for example the effect visible in Figs. 2 and 3. An impossibility theorem was demonstrated for unidirectional-refinement-consistency, scale invariance and richness, but refinement-consistency, scale invariance and near-richness (richness excluding partition into singletons) are consistent with one another (Kleinberg, 2002).

Zadeh and Ben-David (2009) propose instead order-consistency, so that some versions of the single-linkage algorithm can be classified as clustering algorithms: for any two distance functions d and \(d'\), if the orderings of edge lengths are the same then \(f(d)=f(d')\). k-means is not order-consistent.

2.4 Resolving contradictions by weakening scale-invariance

While the above discussion concentrated on relaxing the consistency and richness axioms, one may also ask about possibilities to overcome the axiom contradiction via a relaxation of the invariance axiom. In his tutorial, Ben-David (2005) suggests relaxing Scale-Invariance to Robustness, that is, “Small changes in distance function d should result in small changes of partition f(d)” (Ben-David 2005, slide 25). This property of robustness draws attention to the issue of cluster separation, or gaps between clusters.

2.5 Resolving contradictions by moving focus from the clustering function to cluster quality function

Ben-David and Ackerman (2009) propose another direction for resolving the problem of Kleinberg’s axiomatization impossibility. Instead of axiomatizing the clustering function, one should rather create axioms for a cluster quality function.

Definition 4

Let \({\mathfrak {C}}({\textbf{X}})\) be the set of all possible partitions over the set of objects \({\textbf{X}}\), and let \({\mathfrak {D}}({\textbf{X}})\) be the set of all possible distance functions over the set of objects \({\textbf{X}}\). A clustering-quality measure (CQM) \(J: {\textbf{X}} \times {\mathfrak {C}}({\textbf{X}}) \times {\mathfrak {D}}({\textbf{X}}) \rightarrow {\mathbb {R}}^+\cup \{0\}\) is a function that, given a data set (with a distance function) and its partition into clusters, returns a non-negative real number representing how strong or conclusive the clustering is.

Ackerman and Ben-David propose the following axioms:

Property 9

(CQM-Richness) A quality measure J satisfies richness if for each non-trivial partition \(\Gamma ^*\) of X, there exists a distance function d over X such that \(\Gamma ^* = \arg \max _\Gamma \{J(X,\Gamma , d)\}\).

Property 10

(CQM-scale-invariance) A quality measure J satisfies scale invariance if for every clustering \(\Gamma\) of (X, d) and every positive \(\beta\), \(J(X,\Gamma , d) = J( X,\Gamma , \beta d)\).

Property 11

(CQM-consistency) A quality measure J satisfies consistency if for every clustering \(\Gamma\) over (X, d), whenever \(d'\) is a consistency-transformation of d, then \(J(X,\Gamma , d') \ge J(X,\Gamma , d)\).

Ackerman and Ben-David claim that

Theorem 2

CQM-Consistency, CQM-scale-invariance, and CQM-richness for clustering-quality measures form a consistent set of requirements.

The problem with this axiomatic set is that the CQM-consistency does not tell anything about the (optimal) partition being the result of the consistency-transform, while Kleinberg’s axioms make a definitive statement: the partition before and after consistency-transform has to be the same. So k-means could be in particular rendered to become CQM-consistent, CQM-scale-invariant, and CQM-rich, if one applies a bi-sectional version (bisectional-auto-means).

A number of further characterizations of clustering functions have been proposed to overcome the problems of Kleinberg’s axioms, e.g. (Ackerman et al., 2010) for linkage algorithms, (Carlsson & Mémoli, August 2010) for hierarchical algorithms, (Carlsson & Mémoli, 2008) for multiscale clustering.

Note that beside Kleinberg’s axioms there exist other impossible characterizations of clustering functions. Meilǎ (2005) demonstrates that one can’t compare partitions in a manner that agrees with the lattice of partitions, is convexly additive and bounded.

3 Impact of Embedding on Kleinberg’s Results

3.1 Embedding into One-Dimensional Space

In this subsection we will recall that the embedding into the Euclidean space may change some properties of clustering algorithms (Klopotek & Klopotek, 2021).

Kleinberg showed (Kleinberg 2002, Theorem 4.1) that in the general case k-means is not consistent. We claim here in Theorem 3 that in one-dimensional Euclidean space k-means is consistent. Ackerman et al. (2010, Sect. 3.1) claim that the k-means algorithm is not inner-consistent. In Lemma 5 we state, however, that no clustering function is inner-consistent in one dimension in the proper sense. Ackerman et al. (2010) introduce the concept of probabilistic k-richness (Ackerman 2010, Definition 3 (k-Richness)) (see Property 6) and in their Fig. 2 classify the k-means-random algorithm as one possessing the property of probabilistic k-richness. Based on a one-dimensional example, we demonstrate that k-means-random does not have the probabilistic k-richness property, see Theorems 10, 11; it has only a weak probabilistic k-richness property. These results should convince the reader that embedding into Euclidean space is worth investigating when considering the axiomatization of clustering. We have proven in (Klopotek and Klopotek 2021, Theorem 4 therein):

Theorem 3

k-means is consistent in one dimensional Euclidean space.

So dimensionality matters when considering Kleinberg’s axioms. Kleinberg’s Theorem 4.1 (Kleinberg, 2002) ceases to be valid in one dimension.

Lemma 4

In one dimensional Euclidean space the inner-consistency transform is not applicable non-trivially, if we have a partition into more than two clusters.

Proof

On a line, the position of a point is uniquely defined by its distances from two distinct points with fixed positions. Consider 3 clusters \(C_1,C_2,C_3\) of the clustering. Assume that the inner-consistency transform moves points in cluster \(C_1\) closer together. Pick two points \(A\in C_2,B\in C_3\). The distance between these two points A, B is fixed. So let their positions, without loss of generality, be fixed. Now the distances of any point \(Z\in C_1\) to the selected points A, B cannot be changed. Hence the positions of the points of the first cluster are fixed and no inner-consistency transform applies. \(\square\)
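The first sentence of the proof can be made explicit by a short computation. The symbols a, b, z, \(r_A\), \(r_B\) are introduced here for illustration only: let A and B have coordinates \(a\ne b\), and let Z, at an unknown coordinate z, have distances \(r_A=|z-a|\) and \(r_B=|z-b|\). Then

\[r_A^2-r_B^2=(z-a)^2-(z-b)^2=(b-a)(2z-a-b), \quad \text{hence}\quad z=\frac{a+b}{2}+\frac{r_A^2-r_B^2}{2(b-a)}.\]

So z is determined by the two distances alone, which is why keeping A, B and all distances to them fixed leaves no freedom to move any point of \(C_1\).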

What is more, even with two clusters there are problems.

Lemma 5

In one dimensional Euclidean space the inner-consistency transform is not applicable non-trivially if we have at least two clusters with at least two (distinct) points each.

Proof

Let us denote the coordinates of these four points with a, b, c, d, where the cluster assignment is \(a,b\in C_1\), \(c,d\in C_2\). In order to have a non-identity inner-consistency transform, let the images \(a',b'\) of a, b get closer, that is \(|a-b|>|a'-b'|\). But the distances to the image \(c'\) of c need to be preserved, that is \(|c'-a'|=|c-a|\) and \(|c'-b'|=|c-b|\). This is possible only in case of the strange clustering in which c lies between a and b, whereas \(c'\) does not lie in the interval \((a',b')\). Assume \(a'<b'<c'\). Now \(d'\) cannot lie between \(a'\) and \(b'\), because then \(|d-a|+|d-b|=|d'-a'|+|d'-b'|=|a'-b'|<|a-b|\), which violates the triangle inequality. If d lay outside (a, b), then \(|a-b|=| |d-a|-|d-b| |=| |d'-a'|-|d'-b'| |=|a'-b'|\), which is a contradiction (a, b did not get closer). If d lies inside (a, b), then assume \(a<c<d<b\). We have \(|a'-b'|=|(c-a)-(b-c)|=|2c-a-b|\) and \(|a'-b'|=|(d-a)-(b-d)|=|2d-a-b|\). So either \(2d-a-b=2c-a-b\), in which case d and c have to be identical (contradiction), or \(2d-a-b=-2c+a+b\), so that \(2d+2c=2a+2b\), or \(d+c=a+b\). In the latter case we would have \(d'<a'<b'<c'\) and so \(c'-d'= (c'-b')+(b'-a')+(a'-d')> (b-c)+(d-a)>d-a>d-c\), which contradicts the idea of the inner-consistency transformation, as the distance between c and d, which belong to the same cluster, would increase. \(\square\)

Via an example we show that by consistency transformation followed by a scaling-invariance transformation we obtain an inconsistent effect - elements of different clusters get closer to one another. That is we demonstrate that application of (scaling-) invariance axiom leads to violation of the consistency axiom of Kleinberg. More precisely:

Theorem 6

For a clustering algorithm f, conforming to consistency and scaling invariance axioms, if distance \(d_2\) is derived from the distance \(d_1\) by consistency transformation, and \(d_3\) is obtained from \(d_2\) via scaling, then the \(d_3\) cannot always be obtained from \(d_1\) via consistency transformation.

Proof

We prove the Theorem by example. Let \(S=\{e_1,e_2,e_3,e_4\}\) and let a clustering function partition it into \(S_1=\{e_1\},S_2=\{e_2,e_3\},S_3=\{e_4\}\) under distance function \(d_1\). One can construct a distance function \(d_2\) being a consistency-transform of \(d_1\) such that \(d_2(e_2,e_3)=d_1(e_2,e_3)\) and \(d_2(e_1,e_2)+ d_2(e_2,e_3)=d_2(e_1,e_3)\) and \(d_2(e_2,e_3)+ d_2(e_3,e_4)=d_2(e_2,e_4)\) and \(d_2(e_1,e_2)+d_2(e_2,e_3)+ d_2(e_3,e_4)=d_2(e_1,e_4)\) which implies that these points under \(d_2\) lie on a straight line. Without restricting the generality assume that the coordinates of these points in this line are located at points \(e_1=(0), e_2=(0.4), e_3=(0.6), e_4=(1)\) resp. Let perform Kleinberg’s consistency-transformation yielding distnce \(d_3\) keeping points in \({\mathbb {R}}\) and moving \(\{e_2,e_3\}\) closer to each other so that \(e_1=(0), e_2=(0.5), e_3=(0.6)\). But then \(e_4\) has to be shifted at least to \(e_4=1.1\).

Apply rescaling into the original interval, that is, multiply the coordinates (and hence the distances, yielding \(d_4\)) by 1/1.1. Then \(e_1=(0), e_2=\left( \frac{5}{11}\right) , e_3=\left( \frac{6}{11}\right) , e_4= (1)\). \(e_3\) is now closer to \(e_1\) than before. We could have made things still more drastic by transforming \(d_2\) to \(d_3'\) in such a way that \(e_4=(2)\). In this case the rescaling would result in \(e_1= (0) , e_2= (0.25) , e_3= (0.3) , e_4=(1)\). So the distance between clusters \(S_1,S_2\) decreases instead of increasing, as would be expected if \(d_3\) could be obtained from \(d_1\) via a consistency transform. \(\square\)
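The numbers used in the proof of Theorem 6 can be checked mechanically. The following is a minimal Python sketch, not part of the original paper; the helper name `is_consistency_transform` and the tolerance `tol` are assumptions introduced here for illustration. Points are represented by their coordinates on the line, and the check is Kleinberg's condition: within-cluster distances must not grow and between-cluster distances must not shrink.

```python
from itertools import combinations


def is_consistency_transform(before, after, clusters, tol=1e-12):
    """Check Kleinberg's consistency condition for points on a line.

    `before` and `after` map element names to 1-d coordinates.
    `tol` (an assumption of this sketch) absorbs floating-point noise.
    """
    label = {e: idx for idx, cluster in enumerate(clusters) for e in cluster}
    for i, j in combinations(sorted(before), 2):
        old = abs(before[i] - before[j])
        new = abs(after[i] - after[j])
        if label[i] == label[j] and new > old + tol:
            return False  # a within-cluster distance grew
        if label[i] != label[j] and new < old - tol:
            return False  # a between-cluster distance shrank
    return True


clusters = [{"e1"}, {"e2", "e3"}, {"e4"}]
d2 = {"e1": 0.0, "e2": 0.4, "e3": 0.6, "e4": 1.0}  # embedding on the line
d3 = {"e1": 0.0, "e2": 0.5, "e3": 0.6, "e4": 1.1}  # after the consistency transform
d4 = {e: x / 1.1 for e, x in d3.items()}           # after rescaling by 1/1.1

print(is_consistency_transform(d2, d3, clusters))  # True
print(is_consistency_transform(d2, d4, clusters))  # False
```

The second check fails because the distance between \(e_1\) and \(e_3\), which belong to different clusters, drops from 0.6 to 6/11.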

One would expect that the consistency transform should have moved elements of a cluster closer together and further apart those from distinct clusters and rescaling should not disturb the proportions. It turned out to be the other way. The above result tells us that it is possible for consistency combined with scaling invariance to yield inconsistent results. This suggests that it may be reasonable to introduce a concept comprising scaling and consistency.

Property 12

(scaling-consistency) Let \(\Gamma\) be a partition of S, and d and \(d'\) two distance functions on S. We say that \(d'\) is a scaling-consistency-transformation of d if (a) for all \(i, j \in S\) belonging to the same cluster of \(\Gamma\), we have \(\frac{d'(i, j)}{\max _{u,v\in S}d'(u, v)} \le \frac{d(i, j)}{\max _{u,v\in S}d(u, v)}\) and (b) for all \(i, j \in S\) belonging to different clusters of \(\Gamma\), we have \(\frac{d'(i, j)}{\max _{u,v\in S}d'(u, v)} \ge \frac{d(i, j)}{\max _{u,v\in S}d(u, v)}\). If \(f(d) =\Gamma\), and \(d'\) is a scaling-consistency-transformation of d, then \(f(d') = \Gamma\).
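Property 12 compares distances only after dividing each distance function by its own maximum. A minimal Python sketch of that check follows; the function names `is_scaling_consistency_transform` and `line_distance`, as well as the tolerance `tol`, are assumptions introduced for illustration and do not come from the paper.

```python
from itertools import combinations


def is_scaling_consistency_transform(d_old, d_new, clusters, tol=1e-12):
    """Check conditions (a) and (b) of Property 12 on normalized distances."""
    label = {e: idx for idx, cluster in enumerate(clusters) for e in cluster}
    pairs = list(combinations(sorted(label), 2))
    max_old = max(d_old(i, j) for i, j in pairs)
    max_new = max(d_new(i, j) for i, j in pairs)
    for i, j in pairs:
        old = d_old(i, j) / max_old
        new = d_new(i, j) / max_new
        if label[i] == label[j] and new > old + tol:
            return False  # (a) violated: relative within-cluster distance grew
        if label[i] != label[j] and new < old - tol:
            return False  # (b) violated: relative between-cluster distance shrank
    return True


def line_distance(coords):
    """Distance function induced by 1-d coordinates (illustrative helper)."""
    return lambda i, j: abs(coords[i] - coords[j])


two_clusters = [{"e1", "e2"}, {"e3", "e4"}]
before = line_distance({"e1": 0.0, "e2": 0.2, "e3": 0.8, "e4": 1.0})
after = line_distance({"e1": 0.0, "e2": 0.1, "e3": 0.9, "e4": 1.0})
after_scaled = line_distance({"e1": 0.0, "e2": 0.7, "e3": 6.3, "e4": 7.0})

print(is_scaling_consistency_transform(before, after, two_clusters))
print(is_scaling_consistency_transform(before, after_scaled, two_clusters))
```

Because of the normalization, a subsequent rescaling (here by a factor of 7) cannot change the verdict, which is the point of combining scaling and consistency in one property.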

Theorem 6 would not be valid if consistency were replaced by scaling-consistency. Let us strengthen the statement of Theorem 6 by demonstrating that in one dimension it is impossible to perform a consistency transformation in such a way that the extreme points coincide before and after the transformation if the number of clusters differs from 2.

Lemma 7

In one dimension, if we have more than 2 clusters, no consistency transformation exists such that the extreme points initially belong to different clusters, the extreme points before and extreme points after the transformation agree, and the distances within more than two clusters change.

Proof

As the distances between elements of different clusters cannot decrease, the extreme points will remain the same (except for the possibility that they flip, but we preclude this without loss of generality). Consider two points from a third cluster that contains neither of the extreme points. If we want two points in this cluster to get closer to one another, at least one of them has to change its position. But it will then get closer to one of the extreme points of the data set, which belongs to a distinct cluster, and this would contradict the assumptions of the consistency transformation. So no distance within internal clusters of the data set can be changed. \(\square\)

Lemma 7 shows that in one dimension, Kleinberg’s consistency makes sense for two clusters only.

Let us turn to the issue of probabilistic k-richness of Ackerman et al. (2010, Definition 3 (k-Richness)). We claim below, contrary to Ackerman et al. (2010), that k-means-random can in no way be probabilistically k-rich.

But we can weaken the requirement that it returns the expected clustering with any predefined probability. Instead we impose a milder requirement that it is returned with a positive probability that depends on k only and is independent of the sample size. If we have such a function pr(k), then we can achieve any desired success probability \(1-\epsilon\) by repeating the k-means-random run a sufficient number of times, which (for a fixed \(\epsilon\)) again depends on k only.

Property 13

A clustering method is said to have the weak probabilistic k-richness property if there exists a function \(pr(k)>0\) (for each k), independent of the sample size and distance, such that for any partition \(\Gamma\) of the set \({\textbf{X}}\) consisting of exactly k clusters there exists a distance function d for which the clustering function returns this partition \(\Gamma\) with probability exceeding pr(k).
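The repetition argument that motivates Property 13 can be made concrete. If a single run succeeds with probability at least \(p=pr(k)\), then t independent runs all fail with probability at most \((1-p)^t\), so it suffices that \(t\ge \ln \epsilon /\ln (1-p)\). The sketch below is illustrative only; the function name `runs_needed` is an assumption, and it presumes independent runs and that a successful run can be recognized among the repetitions.

```python
import math


def runs_needed(p_success, epsilon):
    """Smallest t with (1 - p_success)**t <= epsilon.

    Assumes independent runs, each succeeding with probability p_success.
    """
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must lie in (0, 1]")
    if not 0.0 < epsilon < 1.0:
        raise ValueError("epsilon must lie in (0, 1)")
    if p_success == 1.0:
        return 1
    return max(1, math.ceil(math.log(epsilon) / math.log(1.0 - p_success)))


print(runs_needed(0.5, 0.01))  # 7, since 0.5**7 <= 0.01 < 0.5**6
```

The returned count depends on \(p\) and \(\epsilon\) only, not on the sample size, which is exactly what the weak variant of probabilistic k-richness is designed to guarantee.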

Theorem 8

(We proved it in (Klopotek 2019, Theorem 1)) k-means-ideal algorithm is k-rich. k-means-random algorithm is weakly probabilistically k-rich. k-means++ algorithm is probabilistically k-rich.

Theorem 9

In one dimension k-means-ideal can be considered as a k-clustering method as it is consistent (by Theorem 3), k-rich (by Theorem 8) and scale-invariant (by the property of quality function).

Theorem 10

(We proved it in (Klopotek 2019, Theorem 2)) Define the enclosing radius of a cluster as the distance from cluster center to the furthest point of the cluster. For \(k\ge 3\), when distances between cluster centers exceed 6 times the largest enclosing radius r, the k-means-random algorithm is not probabilistically k-rich.

This is not quite a denial of Ackerman’s claim (the distances between clusters can be smaller), but the fact that clusters with wide gaps between them cannot be detected is disturbing. We proved a denial of Ackerman’s claim in Klopotek (2019).

Theorem 11

(see Klopotek (2019)) k-means-random algorithm is not probabilistically k-rich for \(k\ge 4\).

3.2 More than one dimension

Kleinberg proved via a somewhat artificial example (with unbalanced samples and an awkward distance function) that the k-means algorithm with k=2 is not consistent. Kleinberg’s counter-example would require an embedding in a very high dimensional space, which is not typical for k-means applications. Also, k-means tends to produce rather balanced clusters, so Kleinberg’s example could be deemed eccentric. We have shown that k-means is consistent in one dimension (see Sect. 3.1). What about higher dimensions? We claim:

Lemma 12

In \({\mathbb {R}}^m\), for data sets of cardinality \(n>2(m+1)\), Kleinberg’s proof (Kleinberg 2002, Theorem 4.1) that k-means (\(k=2\)) is not consistent is not valid.

Proof

In terms of the concepts used in Kleinberg’s proof, either the set X or the set Y is of cardinality \(m+2\) or higher. Kleinberg requires that the distances between \(m+2\) points are all identical, which is impossible in \({\mathbb {R}}^m\) (only up to \(m+1\) points may be equidistant). \(\square\)

In (Klopotek 2021, Theorem 5) we proved, by a more realistic example (balanced, in Euclidean space) that inconsistency of k-means in \({\mathbb {R}}^m\) is a real problem:

Lemma 13

k-means in 3d is not consistent.

Not only a consistency violation is shown there, but also a refinement-consistency violation, and not only in 3d, but also in higher dimensions. So what about the case of 2 dimensions? We have proven (Klopotek 2021, Theorem 6) that

Lemma 14

k-means in 2d is not consistent.

Consider a consistency-transform on a single cluster of a partition. The consistency-transform shall provide distances compatible with the situation that only elements of a single cluster change position in the embedding space in a continuous way. By a continuous way we mean that for each point \({\textbf{p}}\) relocating to a new position \(\mathbf {p'}\) there exists a continuous function \(f_{{\textbf{p}}}(\beta )\) with \(\beta \in [0,1]\) such that \(f_{{\textbf{p}}}(0)= {\textbf{p}}\) and \(f_{{\textbf{p}}}(1)= \mathbf {p'}\) and, for each \(\beta\) in this range, the configuration \(f_{\Gamma }(\beta )\) of the clustering \(\Gamma\) is a consistency transform of \(f_{\Gamma }(\beta ')\) for each \(\beta '\) with \(0\le \beta '\le \beta\).

Define A as an internal cluster if any of its data points \({\textbf{p}}\) lies within the convex hull (but not on the border) of the points from outside of A.

Theorem 15

In a fixed dimensional Euclidean space \({\mathbb {R}}^m\) it is impossible to perform continuous consistency-transform relocating only points within a single internal cluster (or alternatively formulated, changing only the distances to elements of a single cluster).

Proof

If two points of a cluster A should get closer to one another, then at least one of them has to change its position. Let \({\textbf{p}}\in A\) be an internal point that we intend to move, in order to perform the consistency-transformation, to a new internal position \(\mathbf {p'}\) that is not further away from the hyperplane h mentioned below than any point from outside the cluster that is not contained in h. The hyperplane h, orthogonal to \(\mathbf {p'}-{\textbf{p}}\) and passing through \({\textbf{p}}\), will have at least one point of the other clusters on each of its sides (say \({\textbf{a}}\) on the same side as \(\mathbf {p'}\), and \({\textbf{b}}\) on the other), as \({\textbf{p}}\) is in an internal cluster. The squared distances of these points to \({\textbf{p}}\) are the squared distances to the hyperplane plus the squared distances, within this hyperplane, of the point projections to \({\textbf{p}}\). The squared distance of \({\textbf{a}}\) to \(\mathbf {p'}\) is the squared difference between the distance of \({\textbf{a}}\) to its projection on h and the distance of \(\mathbf {p'}\) to \({\textbf{p}}\), plus the squared distance, within this hyperplane, of the projection of \({\textbf{a}}\) to \({\textbf{p}}\). That means that the distance to this point will decrease, contrary to the consistency assumption. So the points of A that are internal cannot move. Now look at the points of A that are not internal. They have to keep their distance to the internal points or decrease it. But if they decrease it, they will get closer to the hyperplane orthogonal to the direction of motion and passing through \({\textbf{p}}\), and hence get closer to at least one point from outside of A, which contradicts the consistency transform. Hence no possibility of distance change exists. \(\square\)
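The core of this argument, namely that an internal point cannot move in any direction without approaching some point of another cluster, can be illustrated numerically in the plane. The following Python sketch is not taken from the paper; the function name, the step size and the sample configuration (four outside points at the corners of a square) are assumptions chosen for illustration.

```python
import math


def some_outside_point_gets_closer(p, direction, outside, step=1e-3):
    """True if a small move of p along `direction` shortens the distance
    to at least one of the `outside` points (which violates consistency)."""
    norm = math.hypot(*direction)
    q = tuple(pc + step * dc / norm for pc, dc in zip(p, direction))
    return any(math.dist(q, a) < math.dist(p, a) for a in outside)


# Points of other clusters; p lies strictly inside their convex hull.
outside = [(-1.0, -1.0), (1.0, -1.0), (1.0, 1.0), (-1.0, 1.0)]
p = (0.2, -0.1)
directions = [
    (math.cos(2 * math.pi * t / 360), math.sin(2 * math.pi * t / 360))
    for t in range(360)
]

# Every tested direction brings p closer to some outside point.
blocked = all(some_outside_point_gets_closer(p, u, outside) for u in directions)
print(blocked)

# A point outside the convex hull can still move away from all of them.
print(some_outside_point_gets_closer((3.0, 0.0), (1.0, 0.0), outside))
```

The sampled directions are only a finite check, not a proof, but they show the mechanism: for a point inside the convex hull, every direction has some outside point lying ahead of it.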

The continuous consistency-transform enforces either adding a new dimension and moving the affected single internal cluster along it, or changing the positions of elements in at least two clusters within the embedding space. Therefore, for many practical datasets, neither the non-trivial single-cluster continuous consistency transformation nor the single-cluster inner-consistency transformation can be applied. The same holds for multiple-cluster inner-consistency:

Lemma 16

In m-dimensional Euclidean space for a data set with no cohyperplanarity of any \(m+1\) data points the inner-consistency transform is not applicable non-trivially, if we have a partition into more than \(m+1\) clusters.

Proof

In \({\mathbb {R}}^m\), the position of a point is uniquely defined by its distances from \(m+1\) non-cohyperplanar distinct points with fixed positions. Assume that the inner consistency transform moves points in cluster \(C_0\) closer together. Pick \(m+1\) points \(A_1,\dots ,A_{m+1}\) from any \(m+1\) other, pairwise different clusters \(C_1,\dots ,C_{m+1}\). The distances between these \(m+1\) points are fixed. So let their positions, without loss of generality, be fixed in space. Now the distances of any point Z in the cluster \(C_0\) to any of these selected points cannot be changed. Hence the positions of the points of the cluster \(C_0\) are fixed and no inner-consistency transformation applies. \(\square\)
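The first sentence of the proof, that \(m+1\) non-cohyperplanar fixed points determine a position through the distances to them, can be illustrated for \(m=2\). The sketch below is an illustration only and not part of the paper; the function name `locate_2d` is an assumption. Subtracting the first circle equation from the other two yields a linear system with a unique solution whenever the three anchors are not collinear.

```python
import math


def locate_2d(anchors, dists):
    """Recover a point in the plane from its distances to three anchors.

    Raises ValueError if the anchors are (numerically) collinear.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = dists
    # Linear system obtained by subtracting circle 1 from circles 2 and 3.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear")
    # Cramer's rule for the 2x2 system.
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)


anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
z = (1.0, 2.0)
dists = [math.dist(z, a) for a in anchors]
print(locate_2d(anchors, dists))  # approximately (1.0, 2.0)
```

Since the solution is unique, a point whose distances to the three anchors must stay fixed cannot move at all, which is the step used in the proof of Lemma 16.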

As the data sets with properties mentioned in the premise of the lemma are always possible, no algorithm producing the mentioned number of clusters has non-trivially the inner consistency property for all sets.

Theorem 17

In a fixed dimensional Euclidean space \({\mathbb {R}}^m\) it is impossible to perform continuous outer-consistency-transform increasing distances to a single internal cluster only.

Proof

This theorem can be proven following the method of Theorem 15. \(\square\)

There exists always a data set for which continuous outer-consistency of a single cluster is not applicable.

This theorem trivializes the claim of Ackerman et al. (2010) that k-means possesses the property of outer-consistency: in the vast majority of situations the k-means algorithm is applied to data where clusters easily happen to be all internal. The key word in this theorem is, however, continuously, in strict conjunction with Euclidean distance and moving a single cluster. It is the embedding into the Euclidean space that causes the problem.

But what if we want to move several clusters?

Theorem 18

There exist infinitely many clusterings for which continuous Kleinberg’s consistency transform is always an identity transformation, whatever number of clusters is subject of the transform.

Proof

Let us consider the clustering into two clusters presented in Fig. 1 to the left. Assume that the points are sufficiently dense not to be distinguishable by the human eye. Recall the simple geometrical fact that for points B, C the points X, Y lie on the other side of the straight line BC and that the orthogonal projections of X, Y on BC lie on the line segment BC. Under these circumstances, if we keep the lengths of the line segments BX, BY, CX, CY and decrease the length of the line segment BC, then the distance between X, Y will increase. The same will happen if we increase the lengths of the line segments BX, BY, CX, CY slightly. Consider now Kleinberg’s consistency transform of the green and blue clusters. The line segments BX, BY, CX, CY cannot be shortened and the line segments BC, XY cannot be lengthened. So both line segments BC and XY will be of constant length under a continuous consistency transform. The same applies to the segments AB and AC. For this reason this applies to all interior points of the triangle A, B, C, as no inner point of a triangle can be moved closer to the three triangle corners at once. For any other green point D, at least two of the line segments DA, DB, DC have the property that two blue points lie on the opposite side of the segment and their orthogonal projections lie on the respective line segment. Therefore the consistency transform applied to both clusters will in no way change the green cluster.

By symmetry we see that we have the same with the blue cluster.

Under these conditions the consistency transform reduces to the outer consistency transform. But in whatever direction we try to shift the green cluster, the point B or C will get closer to X or Y. So the outer consistency transform is not applicable. It is easy to create similar constructs to infinitely many clusterings where clusters are not convex. \(\square\)

In the above proof we have deliberately used concave clusters. But what if we restrict ourselves to partitions into convex clusters?

Theorem 19

There exist infinitely many clusterings for which continuous outer-consistency transform is always an identity transformation, even if each cluster of the clustering is enclosed into a convex set not intersecting with those of the other clusters, whatever number of clusters is subject of the transform.

Proof

Fig. 1 to the right illustrates the problem. The differences between the speed vectors of clusters sharing an edge must be orthogonal to that edge (or approximately orthogonal, depending on the size of the gap). In this figure the direction angles of the lines AF, BG, CH were deliberately chosen in such a way that this is impossible. \(\square\)

Hence in the general case the continuous consistency transform is impossible, and in the case of convex clusters the outer consistency transform is impossible.

So these impossibility results apply to clustering methods, like k-single-link, for which a cluster may contain points from another cluster within its convex hull. In such cases the continuous outer-consistency transform is not applicable. So the k-single-link algorithm is neither continuously outer-consistent nor continuously consistent. This puts Kleinberg’s axiomatic system in a new light.

It is, however, worth noting that

Theorem 20

In a fixed dimensional Euclidean space \({\mathbb {R}}^m\) it is always possible to apply continuous outer-consistency-transform increasing distances between all clusters resulting from a k-means algorithm.

Proof

For the continuous move operation fix the position of one cluster and move the other clusters in the direction of the vector pointing from this cluster center to the other cluster center and with speed of motion proportional to the length of this vector. Then each cluster will move perpendicularly to the hyperplane separating it from some other cluster, so that the distances between each pair of points from two clusters will increase. \(\square\)
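The motion described in this proof can be sketched in a few lines of Python. The code is illustrative only; the function names `centroid` and `proportional_outer_shift`, the scaling parameter `s` and the sample data are assumptions, and the sample clusters are chosen so that every point is closest to its own cluster center, as in a k-means partition. Cluster 0 stays fixed and every other cluster j is translated by \(s\,(\mu_j-\mu_0)\).

```python
import math


def centroid(points):
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))


def proportional_outer_shift(clusters, s):
    """Translate cluster j by s * (mu_j - mu_0), with s >= 0.

    Cluster 0 is the fixed reference cluster; shapes of clusters are kept.
    """
    mu0 = centroid(clusters[0])
    moved = []
    for cluster in clusters:
        mu = centroid(cluster)
        shift = tuple(s * (m - m0) for m, m0 in zip(mu, mu0))
        moved.append([tuple(x + dx for x, dx in zip(p, shift)) for p in cluster])
    return moved


clusters = [
    [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)],
    [(5.0, 5.0), (6.0, 5.0), (5.0, 6.0)],
    [(10.0, 0.0), (11.0, 0.0), (10.0, 1.0)],
]
moved = proportional_outer_shift(clusters, s=0.5)

# Between-cluster distances grow; within-cluster distances are preserved.
for a in range(len(clusters)):
    for b in range(a + 1, len(clusters)):
        for i, x in enumerate(clusters[a]):
            for j, y in enumerate(clusters[b]):
                assert math.dist(moved[a][i], moved[b][j]) > math.dist(x, y)
print("all between-cluster distances increased")
```

Relative to one another, clusters i and j move apart along \(\mu_j-\mu_i\), the normal of the hyperplane separating them, which is why every between-cluster distance grows.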

So Kleinberg’s consistency transform is applicable continuously to k-means and still k-means is not consistent. It becomes obvious that Kleinberg’s consistency transform urgently requires a substitute in fixed-dimensional Euclidean space.

We have already shown (see Theorem 10) that in general k-means-random, contrary to the claims of Ackerman et al. (2010), is not probabilistically k-rich in one dimension. The same holds for more dimensions.

Theorem 21

(We proved it in (Klopotek 2019, Theorem 3)) For \(k\ge m V_{ball, m, R}/V_{simplex, m, R-4r}\), where \(V_{ball, m, R}= \frac{\pi ^\frac{m}{2}}{\Gamma \left( \frac{m}{2} + 1\right) }R^m\), \(V_{simplex, m, R-4r}= {\frac{\sqrt{m+1}}{m!\sqrt{2^m}}} (R-4r)^m\), m is the dimension of the space and \(S_{m}(R) = {\frac{2\pi ^{(m+1)/2}}{\Gamma (\frac{m+1}{2})}R^m}\), when distances between cluster centers exceed 10 times the largest enclosing radius r, and \(R=14r\), the k-means-random algorithm is not probabilistically k-rich.

4 Counter-intuitiveness of Kleinberg Axioms

4.1 What about invariance

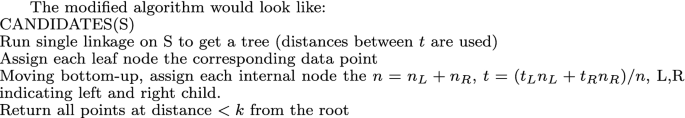

Data from Fig. 2 after Kleinberg’s consistency-transformation clustered by k-means algorithm into two groups

The (scaling) invariance may seem to be a natural property for clustering algorithms and it is so in some range. But real measurement devices have a finite resolution so that scaling the data down beyond some limit may make them indistinguishable. Also scaling up is not without problems as beyond some scale other measurement methods with different precision have to be applied. So the axiom is acceptable only within a reasonable range of scaling factors.

4.2 Counter-intuitiveness of Consistency Axiom

We have proven a number of formal limitations imposed by fixed dimensionality on Kleinberg’s consistency transform and its derivatives, such as the continuous-consistency transform, the inner-consistency transform and the outer-consistency transform. In one dimension k-means becomes consistent, contrary to the general result of Kleinberg (for a distance function that is not embedded). In 1d, the inner-consistency transformation ceases to be possible, a combination of a consistency transform and an invariance transformation produces a result that is not consistent (in the sense of Kleinberg’s consistency transformation), and Kleinberg’s consistency makes sense only for two clusters if invariance shall not lead to inconsistency. In higher dimensional spaces, the inner-consistency transform cannot be applied to a single internal cluster. Neither the continuous outer-consistency transform nor the continuous consistency transform can be applied to a single internal cluster. But in m-dimensional space, if you have more than \(m+1\) clusters, they all may turn out to be internal.

Data from Fig. 2 after Kleinberg’s consistency-transformation clustered by k-means algorithm into four groups

In this section let us point at practical problems with consistency. Ben-David and Ackerman (2009) already indicated problems consisting in the emergence of an impression of a different clustering of the data (with a different number of clusters), as they show in their Fig. 1. This may imply problems if we allow for the frequent practice of applying the k-means algorithm with k varying over a range of values in order to identify which value of k is most appropriate for a given data set. A "saturation" of the percentage of the variance explained may be considered as a criterion for a good choice of k, e.g. exceeding, say, 90% of explained variance, or an abrupt break in the increase of relative variance explained.
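The saturation criterion can be sketched as follows. This is an illustrative Python fragment and not the procedure used to produce the paper's tables; the function names `explained_variance` and `smallest_k_reaching`, the 90% default threshold and the toy data are assumptions. The explained variance of a partition is taken as one minus the ratio of the within-cluster sum of squares to the total sum of squares.

```python
def _sum_of_squares(points):
    """Sum of squared distances of the points from their gravity center."""
    n, dim = len(points), len(points[0])
    center = [sum(p[d] for p in points) / n for d in range(dim)]
    return sum((p[d] - center[d]) ** 2 for p in points for d in range(dim))


def explained_variance(points, labels):
    """Fraction of the total variance explained by the given partition."""
    total = _sum_of_squares(points)
    if total == 0:
        raise ValueError("all points coincide")
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    within = sum(_sum_of_squares(g) for g in groups.values())
    return 1.0 - within / total


def smallest_k_reaching(explained_by_k, threshold=0.9):
    """Smallest k whose explained variance reaches the threshold, or None."""
    for k in sorted(explained_by_k):
        if explained_by_k[k] >= threshold:
            return k
    return None


points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
print(explained_variance(points, [0, 0, 1, 1]))  # about 0.990 (1 - 1/101)
```

In practice `explained_by_k` would be filled by running k-means for each candidate k; the partitions themselves are supplied by the caller here, so the sketch stays independent of any particular k-means implementation.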

Let us apply this method of choosing k to the data illustrated in Fig. 2. This example is a mixture of data points sampled from 8 normal distributions. The k-means algorithm with \(k=8\), as expected, separates quite well the points from various distributions, as visible in that Figure. As visible from the second column of Table 1, in fact \(k=8\) does the best job in reducing the unexplained variance. At about 7-8 clusters we get saturation, 90% explained variance mark is crossed and with more than 8 clusters the relative increase of variance explained drops abruptly. The second column in Table 2 shows the relative gain in variance explained compared to uniform distribution. We see here that, compared to uniform distribution, the biggest advantage is for 7 clusters. However, there is a radical drop of advantage for more than 8 clusters.

Figure 3 illustrates a result of a \(\Gamma\)-transform on the results of the clustering from Fig. 2 (outer consistency transform was applied). Visually we would tell that now we have two clusters. We could also classify this data set as having four clusters, as indicated in Fig. 4. This renders Kleinberg’s consistency axiom counterintuitive, independently of the choice of the particular clustering algorithm (here k-means), and demonstrates the weakness of the outer-consistency concept. The third column of Table 2 shows a peak of gain for 2 and then for 6 clusters, with an abrupt decrease after 8 clusters.

Let us also make a remark about Fig. 5 and the 4\(^{th}\) columns of Tables 1 and 2. The data represent the proportional continuous outer-consistency transformation as explained in Theorem 20. We see that this kind of transformation does not violate the data structure: 8 clusters become the best choice with no competitor when we look at the saturation criterion.

Last but not least, let us draw attention to the fact that Kleinberg’s consistency transforms may create new structures.

Example 1

Let us consider a set of 98 points with coordinates equal to \((i,j)\cdot \sqrt{2}\), \(i,j=1,\dots ,10\) except for (1,1) and (10,10) as well as their mirrors with symmetry center (0,0). The result of clustering of this data into 2 to 10 clusters is shown in Table 3 in columns Original and Original (gain). The Original gain column indicates that the best clustering is for \(k=2\). Now let us perform the Kleinberg consistency transform when we consider the clustering into two clusters. One possibility is to have points with coordinates \(-(i,j)/6+10\sqrt{2}, (i,j)/6+10\sqrt{2}-1, (i,j)/6-10\sqrt{2}, -(i,j)/6-10\sqrt{2}+1\), with \(i,j=0,\dots ,6\). The result of clustering of this data into 2 to 10 clusters is shown in Table 3 in columns Kleinberg and Kleinberg (gain). The Kleinberg gain column indicates that the best clustering is for \(k=4\), though one for \(k=2\) is fairly good.

Data from Fig. 2 after continuous Kleinberg’s \(\Gamma\)-transformation clustered by k-means algorithm into 8 groups

Finally, do not overlook the effect presented in Fig. 10. The consistency transformation can generate new clusters. This is the reason why we encounter contradictions in the Kleinberg’s axiomatic system.

4.3 Problems of Richness Axiom

We have already shown some problems with the richness, when it comes to embedding into 1d. One can point at a k-means heuristics (k-means++) that is k-rich in probabilistic sense. But contrary to general Ackerman’s result, in 1d k-means-random is not k-rich in probabilistic sense.

While consistency transform turns out to be too restrictive in finite dimensional space, the richness is problematic the other way.

As already mentioned, richness or near-richness forces the introduction of refinement-consistency, which is too weak a concept. But even if we allow for such a resolution of the contradiction in Kleinberg’s framework, it still does not make it suitable for practical purposes. The most serious drawback of Kleinberg’s axioms is the richness requirement.

But we may ask whether or not it is possible to have richness, that is, for any partition there always exists a distance function such that the clustering function returns this partition, and yet, if we restrict ourselves to \({\mathbb {R}}^m\), the very same clustering function is not rich any more, or is not even chaining.

Consider the following clustering function f(). If it takes a distance function d() that takes on only two distinct values \(d_1\) and \(d_2\) such that \(d_1<0.5 d_2\) and for any three data points a, b, c if \(d(a,b)=d_1, d(b,c)=d_1\) then \(d(a,c)=d_1\), it creates clusters of points in such a way that a, b belong to the same cluster if and only if \(d(a,b)=d_1\), and otherwise they belong to distinct clusters. If on the other hand f() takes a distance function not exhibiting this property, it works like k-means. Obviously, function f() is rich, but at the same time, if confined to \({\mathbb {R}}^m\), if \(n>m+1\) and \(k\ll n\), then it is not rich – it is in fact k-rich, and hence not chaining.
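The construction of f() can be sketched in Python. This is an illustration under stated assumptions, not the paper's code: the function name `two_valued_or_fallback` is hypothetical, and `fallback` stands for whatever k-means procedure is used otherwise and is supplied by the caller. The function first tests whether the distance takes exactly two values with \(d_1<0.5\,d_2\) and whether "being at distance \(d_1\)" is transitive; only then does it cluster by that relation.

```python
from itertools import combinations


def two_valued_or_fallback(elements, d, fallback):
    """Cluster by the d1-relation if d is two-valued and transitive,
    otherwise delegate to `fallback` (e.g. a k-means routine)."""
    values = sorted({d(a, b) for a, b in combinations(elements, 2)})
    if len(values) == 2 and values[0] < 0.5 * values[1]:
        d1 = values[0]
        clusters = []
        for e in elements:
            for cluster in clusters:
                if d(e, cluster[0]) == d1:  # compare with the representative
                    cluster.append(e)
                    break
            else:
                clusters.append([e])
        label = {e: idx for idx, c in enumerate(clusters) for e in c}
        # Transitivity holds iff "same cluster" coincides with "distance d1".
        if all((d(a, b) == d1) == (label[a] == label[b])
               for a, b in combinations(elements, 2)):
            return [set(c) for c in clusters]
    return fallback(elements, d)


table = {frozenset("ab"): 1, frozenset("cd"): 1}
dist = lambda a, b: table.get(frozenset((a, b)), 3)
print(two_valued_or_fallback(list("abcd"), dist, lambda els, d: None))
```

For the sample distance the call returns the two clusters {a, b} and {c, d}. Any partition can be encoded by such a two-valued distance, which is why f() is rich on general distance functions even though its fallback branch is not.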

Can we get around the problems of all three Kleinberg’s axioms in a similar way in \({\mathbb {R}}^m\)? Regrettably,

Theorem 22

If \(\Gamma\) is a partition of \(n>2\) elements returned by a clustering function f under some distance function d, and f satisfies Consistency, then there exists a distance function \(d_E\) embedded in \({\mathbb {R}}^m\) for the same set of elements such that \(\Gamma\) is the partition of this set under \(d_E\).

Theorem 22 implies that the constructs of contradiction of Kleinberg axioms are simply transposed from the domain of any distance functions to distance functions in \({\mathbb {R}}^m\).

Proof

To show the validity of the theorem, we will construct the appropriate distance function \(d_E\) by embedding in \({\mathbb {R}}^m\). Let dmax be the maximum distance between the considered elements under d. Let \(C_1,\dots ,C_k\) be all the clusters contained in \(\Gamma\). For each cluster \(C_i\) we construct a ball \(B_i\) with radius \(r_i=\frac{1}{2} \min _{x,y\in C_i, x\ne y} d(x,y)\). The ball \(B_1\) will be located at the origin of the coordinate system. Let \(B_{1,\dots ,i}\) be the ball containing all the balls \(B_1,\dots ,B_i\), with center at \(c_{1,\dots ,i}\) and radius \(r_{1,\dots ,i}\). The ball \(B_{i}\) will be centered at a point on the surface of the ball with center at \(c_{1,\dots ,i-1}\) and radius \(r_{1,\dots ,i-1}+dmax+r_{i}\). For each \(i=1,\dots ,k\) select distinct locations for the elements of \(C_i\) within the ball \(B_i\). Define the distance function \(d_E\) as the Euclidean distance within \({\mathbb {R}}^m\) between these constructed locations.

Apparently, \(d_E\) is a consistency-transform of d, as distances between elements of \(C_i\) are smaller than or equal to \(2 r_{i}=\min _{x,y\in C_i, x\ne y} d(x,y)\), and the distances between elements of different balls exceed dmax. \(\square\)
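A simplified instance of this construction can be sketched in Python. As an assumption made for brevity, all balls are placed along a single coordinate axis (so the embedding already works for \(m=1\)), whereas the proof places them on sphere surfaces; the function name `embed_on_line` is likewise hypothetical. Each cluster occupies an interval whose length equals its smallest internal distance, and consecutive intervals are separated by a gap of dmax.

```python
from itertools import combinations


def embed_on_line(clusters, d):
    """Place the elements on a line so that the induced distance is a
    consistency transform of d for the given partition (sketch)."""
    elements = [e for cluster in clusters for e in cluster]
    dmax = max(d(a, b) for a, b in combinations(elements, 2))
    coords, cursor = {}, 0.0
    for cluster in clusters:
        # Interval length 2 * r_i = smallest distance inside the cluster.
        width = min((d(a, b) for a, b in combinations(cluster, 2)), default=0.0)
        step = width / (len(cluster) - 1) if len(cluster) > 1 else 0.0
        for idx, e in enumerate(cluster):
            coords[e] = cursor + idx * step  # distinct locations in the interval
        cursor += width + dmax  # gap of dmax before the next cluster
    return coords


table = {frozenset("ab"): 2, frozenset("ac"): 3, frozenset("bc"): 2,
         frozenset("xy"): 4}
d = lambda p, q: table.get(frozenset((p, q)), 5)  # between clusters: 5
clusters = [["a", "b", "c"], ["x", "y"]]
coords = embed_on_line(clusters, d)
print(coords)
```

Within each cluster the new distances are at most the smallest old one, and elements of different clusters end up at least dmax apart, so the two conditions of a consistency transform hold by construction.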

This means that if f is rich and consistent, it is also rich in \({\mathbb {R}}^m\).

But richness is not only a problem in conjunction with scale-invariance and consistency, but rather it is a problem by itself.

It has to be stated first that richness is easy to achieve. Imagine the following clustering function. You order nodes by average distance to other nodes, on ties by squared distance and so on, and if no sorting can be achieved, the unsortable points are put into one cluster. Then we create an enumeration of all clusterings and map it onto the unit line segment. Then we take the quotient of the lowest distance to the largest distance and state that this quotient mapped to that line segment identifies the optimal clustering of the points. Though the algorithm is simple in principle (and also useless), and meets the axioms of richness and scale-invariance, we have a practical problem: as no other limitations are imposed, one has to check up to \(\sum _{k=2}^n \frac{1}{k!}\sum _{j=1}^{k}(-1)^{k-j}\binom{k}{j}j^n\) possible partitions (the Bell number, less the single one-cluster partition) in order to verify which one of them is the best for a given distance function, because there must exist at least one distance function suitable for each of them. This cannot be done in reasonable time even if each check is polynomial (even linear) in the dimensions of the task (n).
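To give a feeling for how quickly this count grows, the sum can be evaluated directly. The Python sketch below is illustrative; the function names are assumptions. The inner sum divided by \(k!\) is the Stirling number of the second kind, and the result is cross-checked against Bell numbers computed independently with the Bell triangle.

```python
from math import comb, factorial


def stirling2(n, k):
    """Number of partitions of n elements into exactly k non-empty blocks."""
    total = sum((-1) ** (k - j) * comb(k, j) * j**n for j in range(1, k + 1))
    return total // factorial(k)


def partitions_to_check(n):
    """The sum from the text: partitions of n elements into 2..n clusters."""
    return sum(stirling2(n, k) for k in range(2, n + 1))


def bell(n):
    """n-th Bell number (n >= 1) via the Bell triangle."""
    row = [1]
    for _ in range(n - 1):
        new = [row[-1]]
        for x in row:
            new.append(new[-1] + x)
        row = new
    return row[-1]


for n in (4, 10, 20):
    print(n, partitions_to_check(n))
```

Already for \(n=10\) there are 115974 candidate partitions, and the count grows faster than exponentially in n.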

Furthermore, most algorithms of cluster analysis are constructed in an incremental way. But this can be useless if the clustering quality function is designed in a very unfriendly way. For example as an XOR function over logical functions of class member distances and non-class member distances (e.g. being true if the distance rounded to an integer is odd between class members and divisible by a prime number for distances between class members and non-class members, or the same with respect to class center or medoid).

Just have a look at the sample data from Table 4. A cluster quality function was invented along the above lines and the exact quality value was computed for partitioning the first n points from this data set, as illustrated in Table 5. It turns out that the best partition for n points does not give any hint for the best partition for \(n+1\) points; therefore each possible partition needs to be investigated in order to find the best one.Footnote 6

Summarizing these examples, the learnability theory points at two basic weaknesses of the richness or even near-richness axioms. On the one hand, the hypothesis space is too big for learning a clustering from a sample. On the other hand, an exhaustive search in this space is prohibitive, so that some theoretical clustering functions do not make practical sense.

There is one more problem. If the clustering function can fit any data, we are practically unable to learn any structure of data space from data (Kłopotek, 1991). And this learning capability is necessary at least in the cases: either when the data may be only representatives of a larger population or the distances are measured with some measurement error (either systematic or random) or both. Note that we speak here about a much broader aspect than cluster stability or cluster validity, pointed at by von Luxburg et al. (2011); von Luxburg (2009).

In the special case of k-means, the reliable estimation of cluster center position and of the variance in the cluster plays a significant role. But there is no reliability for cluster center if a cluster consists of fewer than 2 elements, and for variance the minimal cardinality is 3. Hence the \(3++\)-near-richness is a must for similar applications.

5 k-means and the centric-consistency axiom

Let us introduce yet another version of consistency transformation:

Definition 5

Let \(\Gamma\) be a partition embedded in \({\mathbb {R}}^m\). Let \(C\in \Gamma\) and let \(\varvec{\mu }_c\) be the center of the cluster C. We say that we execute the \(\Gamma ^*\) transform (or a centric consistency transformation) if for some \(0<\lambda \le 1\) we create a set \(C'\) with cardinality identical with C such that for each element \({{\textbf {x}}}\in C\) there exists \({\textbf {x'}}\in C'\) such that \({\textbf {x'}}=\varvec{\mu }_c+\lambda ({{\textbf {x}}}-\varvec{\mu }_c)\), and then substitute C in \(\Gamma\) with \(C'\).
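Definition 5 translates directly into code. The following minimal Python sketch is illustrative; the function name `centric_transform` is an assumption. Every element of the cluster is moved along the line joining it with the cluster center \(\varvec{\mu }_c\), so that its distance to the center is multiplied by \(\lambda\).

```python
def centric_transform(cluster, lam):
    """Apply x' = mu + lam * (x - mu) to every point of one cluster."""
    if not 0.0 < lam <= 1.0:
        raise ValueError("lambda must lie in (0, 1]")
    n, dim = len(cluster), len(cluster[0])
    mu = tuple(sum(x[d] for x in cluster) / n for d in range(dim))
    return [tuple(m + lam * (xc - m) for xc, m in zip(x, mu)) for x in cluster]


cluster = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]  # center (1, 1)
print(centric_transform(cluster, 0.5))
# [(0.5, 0.5), (1.5, 0.5), (1.0, 2.0)]
```

The cluster center stays where it was, and all distances within the cluster shrink by the same factor \(\lambda\), so the shape of the cluster is preserved.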

Note that the set of possible centric consistency transformations for a given partition is neither a subset nor a superset of the set of possible Kleinberg’s consistency transformations. Instead it is a k-means clustering model specific adaptation of the general idea of shrinking the cluster. The first differentiating feature of the centric consistency is that no new structures are introduced in the cluster at any scale. The second important feature is that the requirement of keeping the minimum distance to elements of other clusters is dropped and only cluster centers do not get closer to one another. Further justifications are explained in Figs. 6 and 7.

Getting all data points closer to the cluster center without changing the position of cluster center, if we do not ensure that they move along the line connecting each with the center. Left picture - data partition before moving data closer to the cluster center. Right picture - data partition thereafter.

Note also that the centric consistency does not suffer from the impossibility of transformation for clusters that turn out to be internal.

Property 14

A clustering method matches the condition of centric consistency if after a \(\Gamma ^*\) transform it returns the same partition.
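Property 14 can be probed empirically on a toy data set. The sketch below is only a sanity check under stated assumptions: it uses plain Lloyd iterations started from fixed centers (the function `lloyd`, the data and the initial centers are all hypothetical), and it assumes that no cluster becomes empty during the iterations.

```python
import math


def centroid(points):
    n = len(points)
    return tuple(sum(c) / n for c in zip(*points))


def lloyd(points, centers, max_iter=100):
    """Plain Lloyd iterations from given centers; returns (labels, centers).

    Assumes that no cluster becomes empty during the iterations.
    """
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(len(centers)),
                          key=lambda j: math.dist(p, centers[j]))
                      for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        centers = [centroid([p for p, l in zip(points, labels) if l == j])
                   for j in range(len(centers))]
    return labels, centers


points = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0),
          (8.0, 0.0), (10.0, 0.0), (9.0, 3.0)]
labels_before, centers = lloyd(points, [(0.0, 0.0), (10.0, 0.0)])

# Apply the centric transformation (lambda = 0.5) to cluster 0 only.
lam, mu = 0.5, centers[0]
moved = [tuple(m + lam * (x - m) for x, m in zip(p, mu)) if l == 0 else p
         for p, l in zip(points, labels_before)]
labels_after, _ = lloyd(moved, centers)

print(labels_before, labels_after)
```

In this example the partition returned after the transformation coincides with the one returned before it, as the property requires.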

Our proposal of centric-consistency has a practical background. Kleinberg proved that k-means does not fit his consistency axiom. As shown experimentally in Table 1, the k-means algorithm behaves properly under the \(\Gamma ^*\) transformation. Fig. 8 illustrates a two-fold application of the \(\Gamma ^*\) transform (the same clusters are affected as by the \(\Gamma\)-transform in the preceding figure). As can be recognized visually and by inspecting the fourth column of Table 1 and Table 2, here \(k=8\) is the best choice for the k-means algorithm, so the centric-consistency axiom is followed.

Data from Fig. 2 after a centralized \(\Gamma\)-transformation (\(\Gamma ^*\) transformation), clustered by k-means algorithm into 8 groups

Let us now demonstrate theoretically that the k-means algorithm really fits, in the limit, the centric-consistency axiom.

Theorem 23

k-means algorithm satisfies centric consistency in the following way: if the partition \(\Gamma\) is a local minimum of k-means, and the partition \(\Gamma\) has been subject to centric consistency yielding \(\Gamma '\), then \(\Gamma '\) is also a local minimum of k-means.

Proof

The k-means algorithm minimizes the sum Q (Footnote 7) from equation (1). Let \(V(C_j)\) be the sum of squares of distances of all objects of the cluster \(C_j\) from its gravity center. Hence \(Q(\Gamma )= \sum _{j=1}^k \frac{1}{n_j} V(C_j)\). Consider moving a data point \({{\textbf {x}}}^*\) from the cluster \(C_{j_0}\) to the cluster \(C_{j_l}\). As demonstrated by Duda et al. (2000), \(V( C_{j_0} -\{{{\textbf {x}}}^*\})= V(C_{j_0}) - \frac{n_{j_0}}{n_{j_0}-1}\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_0}\Vert ^2\) and \(V(C_{j_l}\cup \{{{\textbf {x}}}^*\})= V(C_{j_l}) + \frac{n_{j_l}}{n_{j_l}+1}\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_l}\Vert ^2\). So it pays off to move a point from one cluster to another if \(\frac{n_{j_0}}{n_{j_0}-1}\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_0}\Vert ^2 > \frac{n_{j_l}}{n_{j_l}+1}\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_l}\Vert ^2\). If we assume local optimality of \(\Gamma\), such a move obviously does not pay off. Now transform this data set to \(\mathbf {X'}\) by replacing the elements of cluster \(C_{j_0}\) with the elements \({{\textbf {x}}}_i'=\varvec{\mu }_{j_0}+\lambda ({{\textbf {x}}}_i - \varvec{\mu }_{j_0})\) for some \(0<\lambda <1\), see Fig. 9. Consider a partition \(\Gamma '\) of \(\mathbf {X'}\). All clusters are the same as in \(\Gamma\) except for the transformed elements, which now form a cluster \(C'_{j_0}\). Note that \(C'_{j_0}\) has the same cardinality and the same gravity center \(\varvec{\mu }_{j_0}\) as \(C_{j_0}\). The question is: does it pay off to move a data point \({\textbf {x'}}^*\in C'_{j_0}\) between the clusters? Consider the plane containing \({{\textbf {x}}}^*, \varvec{\mu }_{j_0}, \varvec{\mu }_{j_l}\). Project the point \({{\textbf {x}}}^*\) orthogonally onto the line \(\varvec{\mu }_{j_0}, \varvec{\mu }_{j_l}\), giving a point \({{\textbf {p}}}\). Either \({{\textbf {p}}}\) lies between \(\varvec{\mu }_{j_0}, \varvec{\mu }_{j_l}\) or \(\varvec{\mu }_{j_0}\) lies between \({{\textbf {p}}}, \varvec{\mu }_{j_l}\). Properties of k-means exclude other possibilities.
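Denote distances \(y=\Vert {{\textbf {x}}}^*- {{\textbf {p}}}\Vert\), \(x=\Vert \varvec{\mu }_{j_0}- {{\textbf {p}}}\Vert\), \(d=\Vert \varvec{\mu }_{j_0}- \varvec{\mu }_{j_l}\Vert\). In the second case we have \(\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_0}\Vert ^2=x^2+y^2\) and \(\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_l}\Vert ^2=(d+x)^2+y^2\), so the condition that moving the point does not pay off means:

\[\frac{n_{j_0}}{n_{j_0}-1}\left( x^2+y^2\right) \le \frac{n_{j_l}}{n_{j_l}+1}\left( (d+x)^2+y^2\right)\]

If we multiply both sides with \(\lambda ^2\), as \(\lambda <1\), we get:

\[\frac{n_{j_0}}{n_{j_0}-1}\left( (\lambda x)^2+(\lambda y)^2\right) \le \frac{n_{j_l}}{n_{j_l}+1}\left( (\lambda d+\lambda x)^2+(\lambda y)^2\right) < \frac{n_{j_l}}{n_{j_l}+1}\left( (d+\lambda x)^2+(\lambda y)^2\right)\]

which means that it does not pay off to move the point \({\textbf {x'}}^*\) between clusters either. Consider now the first case, in which \(\Vert {{\textbf {x}}}^*-\varvec{\mu }_{j_l}\Vert ^2=(d-x)^2+y^2\), and assume that it pays off to move \({\textbf {x'}}^*\). So we would have

\[\frac{n_{j_0}}{n_{j_0}-1}\left( \lambda ^2x^2+\lambda ^2y^2\right) > \frac{n_{j_l}}{n_{j_l}+1}\left( (d-\lambda x)^2+\lambda ^2y^2\right)\]

and at the same time

\[\frac{n_{j_0}}{n_{j_0}-1}\left( x^2+y^2\right) \le \frac{n_{j_l}}{n_{j_l}+1}\left( (d-x)^2+y^2\right)\]

Subtract now both sides (the former inequality from the latter):

\[\frac{n_{j_0}}{n_{j_0}-1}\left( 1-\lambda ^2\right) \left( x^2+y^2\right) < \frac{n_{j_l}}{n_{j_l}+1}\left( (d-x)^2-(d-\lambda x)^2+\left( 1-\lambda ^2\right) y^2\right)\]

This implies

\[\frac{n_{j_0}}{n_{j_0}-1}\left( 1-\lambda ^2\right) \left( x^2+y^2\right) < \frac{n_{j_l}}{n_{j_l}+1}\left( \left( 1-\lambda ^2\right) \left( x^2+y^2\right) -2dx(1-\lambda )\right)\]

It is a contradiction because

\[\frac{n_{j_0}}{n_{j_0}-1}>1>\frac{n_{j_l}}{n_{j_l}+1} \quad \text {and} \quad 2dx(1-\lambda )\ge 0\]

So it does not pay off to move \({\textbf {x'}}^*\), hence the partition \(\Gamma '\) remains locally optimal (Footnote 8) for the transformed data set. \(\square\)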
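The ingredients of the proof can be checked numerically on a small example. The sketch below is an illustration under assumptions: the function names (`V`, `pays_off`, `locally_optimal`, `centric_transform`) and the two clusters are hypothetical. It verifies the removal identity quoted from Duda et al. (2000) for one point and then tests the transfer criterion before and after contracting one cluster.

```python
def centroid(points):
    n = len(points)
    return tuple(sum(c) / n for c in zip(*points))


def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))


def V(cluster):
    """Sum of squared distances of the cluster's points to its gravity center."""
    mu = centroid(cluster)
    return sum(sq_dist(p, mu) for p in cluster)


def pays_off(x, source, target):
    """Transfer criterion from the proof: True if moving x is profitable."""
    n0, nl = len(source), len(target)
    if n0 < 2:
        return False  # a singleton cluster cannot give away its only point
    return (n0 / (n0 - 1) * sq_dist(x, centroid(source))
            > nl / (nl + 1) * sq_dist(x, centroid(target)))


def locally_optimal(clusters):
    """True if no single-point transfer between two clusters pays off."""
    return not any(pays_off(x, src, tgt)
                   for i, src in enumerate(clusters)
                   for j, tgt in enumerate(clusters) if i != j
                   for x in src)


def centric_transform(cluster, lam):
    mu = centroid(cluster)
    return [tuple(m + lam * (x - m) for x, m in zip(p, mu)) for p in cluster]


C0 = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]
C1 = [(8.0, 0.0), (10.0, 0.0), (9.0, 3.0)]

# Removal identity: V(C - {x}) = V(C) - n/(n-1) * ||x - mu||^2, here n = 3.
x = C0[2]
removal_gap = abs(V(C0[:2]) - (V(C0) - 3 / 2 * sq_dist(x, centroid(C0))))

optimal_before = locally_optimal([C0, C1])
optimal_after = all(locally_optimal([centric_transform(C0, lam), C1])
                    for lam in (0.9, 0.5, 0.1))
print(removal_gap, optimal_before, optimal_after)
```

A check of this kind covers only the chosen data and values of \(\lambda\); it complements the proof and does not replace it.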

If the data have only one stable optimum, as in the case of k well-separated, normally distributed real clusters, then both local optima turn out to be global optima.

Impact of contraction of the data point \({{\textbf {x}}}^*\) towards cluster center \(\varvec{\mu }_{j_0}\) by a factor \(\lambda\) to the new location \({{\textbf {x}}}^{*'}\) - local optimum maintained. The left image illustrates the situation when the point \({{\textbf {x}}}^*\) is closer to cluster center \(\varvec{\mu }_{j_0}\). The right image refers to the inverse situation. The point \({{\textbf {p}}}\) is the orthogonal projection of the point \({{\textbf {x}}}^*\) onto the line \(\varvec{\mu }_{j_0}, \varvec{\mu }_{j_l}\)

Moreover, it is possible to demonstrate that the newly defined transform also preserves the global optimum of k-means.

Theorem 24

k-means algorithm satisfies centric consistency in the following way: if the partition \(\Gamma\) is a global minimum of k-means, and the partition \(\Gamma\) has been subject to centric consistency yielding \(\Gamma '\), then \(\Gamma '\) is also a global minimum of k-means.

Proof

Note that the special case \(k=2\) of this theorem has been proven in (Klopotek 2020, Theorem 3). Hence let us turn to the general case of k-means (\(k\ge 2\)). Let the optimal clustering for a given set of objects X consist of k clusters: T and \(Z_1,\dots ,Z_{k-1}\). Let the origin of the coordinate system be placed at the gravity center of the subset T. The quality of this partition is \(Q(\{T,Z_1,\dots ,Z_{k-1}\}) =n_T Var(T) +\sum _{i=1}^{k-1}n_{Z_i} Var(Z_i)\), where \(n_T\) and \(n_{Z_i}\) are the cardinalities of the clusters T and \({Z_i}\). We will prove by contradiction that by applying our \(\Gamma ^*\) transform we get a partition that is still optimal for the transformed data points. Assume the contrary, that is, that we can transform the set T by some \(1>\lambda >0\) to \(T'\) in such a way that the optimum of k-means clustering is not the partition \(\{T',Z_1,\dots ,Z_{k-1}\}\) but another one, say \(\{T'_1\cup Z_{1,1} \cup \dots \cup Z_{k-1,1} , T'_2\cup Z_{1,2} \cup \dots \cup Z_{{k-1},2} , \dots , T'_k\cup Z_{1,k} \cup \dots \cup Z_{{k-1},k} \}\), where \(Z_i= \cup _{j=1}^{k} Z_{i,j}\) (the sets \(Z_{i,j}\) being pairwise disjoint) and \(T'_1,\dots ,T'_k\) are the transforms of disjoint sets \(T_1,\dots ,T_k\) for which in turn \(\cup _{j=1}^{k}T_j=T\). It may be easily verified that

while (denoting \(Z_{*,j}= \cup _{i=1}^{k-1}Z_{i,j}\))

whereas

while

The following must hold:

and

Additionally also

and ...and

These latter k inequalities imply that for \(l=1,\dots ,k\):

Consider now an extreme contraction (\(\lambda =0\)) yielding sets \(T''_j\) out of \(T_j\). Then we have

because the linear combination of numbers that are bigger than a third yields another number bigger than this. Let us define a function

It can be easily verified that g(x) is a quadratic polynomial with a positive coefficient at \(x^2\). Furthermore \(g(1)=Q(\{T,Z_1,\dots ,Z_{k-1}\}) - Q(\{T_1\cup Z_{*,1},\dots , T_k\cup Z_{*,k} \})<0\), \(g(\lambda )=Q(\{T',Z_1,\dots ,Z_{k-1}\}) - Q(\{T'_1\cup Z_{*,1},\dots , T'_k\cup Z_{*,k} \})>0\), \(g(0)=Q(\{T'',Z_1,\dots ,Z_{k-1}\}) - Q(\{T''_1\cup Z_{*,1},\dots , T''_k\cup Z_{*,k} \})<0\). But no quadratic polynomial with a positive coefficient at \(x^2\) can be negative at the ends of an interval and positive in the middle. So we have a contradiction. This proves the thesis that the (globally) optimal k-means clustering remains (globally) optimal after the transformation. \(\square\)
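For very small data sets the statement of the theorem can be checked by exhaustive search. The sketch below is a sanity check on one hypothetical data set, not a substitute for the proof: `best_partition` enumerates all labelings with exactly k non-empty clusters and evaluates the k-means cost as the sum of squared distances to the cluster gravity centers (which equals \(\sum n_j Var(C_j)\) as used above). All names and data are assumptions made for this illustration.

```python
from itertools import product


def sse(cluster):
    """Sum of squared distances to the gravity center, i.e. n * Var."""
    n = len(cluster)
    mu = tuple(sum(c) / n for c in zip(*cluster))
    return sum(sum((u - m) ** 2 for u, m in zip(p, mu)) for p in cluster)


def best_partition(points, k):
    """Exhaustive search over all labelings; feasible only for tiny inputs."""
    best_cost, best = float("inf"), None
    for labels in product(range(k), repeat=len(points)):
        if len(set(labels)) < k:
            continue  # require exactly k non-empty clusters
        groups = [[i for i, l in enumerate(labels) if l == j]
                  for j in range(k)]
        cost = sum(sse([points[i] for i in g]) for g in groups)
        if cost < best_cost - 1e-12:
            best_cost = cost
            best = frozenset(frozenset(g) for g in groups)
    return best, best_cost


points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0),   # indices 0-2
          (6.0, 0.0), (7.0, 0.0), (6.0, 1.0),   # indices 3-5
          (0.0, 6.0), (1.0, 7.0)]               # indices 6-7
best_before, cost_before = best_partition(points, 3)

# Contract the cluster {0, 1, 2} towards its gravity center with lambda = 0.5.
T = [points[i] for i in (0, 1, 2)]
mu = tuple(sum(c) / len(T) for c in zip(*T))
moved = [tuple(m + 0.5 * (x - m) for x, m in zip(p, mu)) if i < 3 else p
         for i, p in enumerate(points)]
best_after, cost_after = best_partition(moved, 3)

print(best_before == best_after, cost_before, cost_after)
```

The search visits \(k^n\) labelings, so it is only practical for a handful of points; here the optimal partition is the same before and after the contraction, while its cost decreases.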

To summarize, the new \(\Gamma ^*\) transformation preserves local and global optima of k-means for a fixed k. Therefore the k-means algorithm is consistent under this transformation. Hence

Theorem 25

k-means algorithm satisfies Scale-Invariance, k-Richness, and centric Consistency.

Note that (\(\Gamma ^*\) based) centric-consistency is not a specialization of Kleinberg's consistency, as increased distance between all elements of different clusters is not required in \(\Gamma ^*\) based consistency.

Theorem 26

Let a partition \(\{T,Z\}\) be an optimal partition under the 2-means algorithm. Let a subset P of T be subjected to a centric consistency transformation yielding \(P'\), and let \(T'=(T-P)\cup P'\). Then the partition \(\{T',Z\}\) is an optimal partition of \(T'\cup Z\) under 2-means.

Proof

(Outline) Let the optimal clustering for a given set of objects X consist of two clusters: T and Z. Let T consist of two disjoint subsets P and Y, \(T=P \cup Y\), and let us ask whether or not a centric consistency transformation of the set P affects the optimality of the clustering. That is, let \(T'=P' \cup Y\) with \(P'\) being the image of P under the centric-consistency transformation. We ask if \(\{T',Z\}\) is the optimal clustering of \(T'\cup Z\). Assume the contrary, that is, that there exists a clustering into sets \(K'=A'\cup B\cup C\), \(L'=D'\cup E\cup F\), where \(P'=A'\cup D', Y=B\cup E, Z=C\cup F\), that has a lower value of the clustering quality function \(Q(\{K',L'\},\{\varvec{\mu }_{K},\varvec{\mu }_L\})\), where \(\varvec{\mu }_{K},\varvec{\mu }_L\) are the assumed cluster centers of \(K'\) and \(L'\), not necessarily their gravity centers, but such that the elements of each cluster are closer to its own center than to the other one, for some \(\lambda =\lambda ^*\). Note that we do not assume changes of \(\varvec{\mu }_{K},\varvec{\mu }_{L}\) if \(\lambda\) is changed. Note that for \(\lambda =0\) either \(A'\) or \(D'\) would be empty. So assume \(A'=X'\). And assume \(\{K',L'\}\) is a better partition than \(\{T',Z\}\). That is

If \(K=X\cup B\cup C\), then note that

Hence

which is a contradiction because the partition \(\{T,Z\}\) was assumed to be optimal. So consider other values of \(\lambda\). It can be shown that \(h(\lambda )=Q(\{T',Z\})-Q(\{K',L'\},\{\varvec{\mu }_{K},\varvec{\mu }_L\})\) is a quadratic polynomial in \(\lambda\). It is easily seen that if \(\lambda\) goes to infinity, then from some point on \(Q(\{T'\})>Q(\{A',D'\},\{\varvec{\mu }_{K},\varvec{\mu }_L\})\) and in the limit the difference tends to \(+\infty\). As a consequence, \(Q(\{T',Z\})>Q(\{K',L'\},\{\varvec{\mu }_{K},\varvec{\mu }_L\})\) for all \(\lambda\) above some threshold. So the quadratic polynomial \(h(\lambda )\) must have a positive coefficient at \(\lambda ^2\), and by a similar argument as in the previous proof we obtain the contradiction. Hence the claim of the theorem. \(\square\)

Theorem 26 means that

Theorem 27

Bisectional-auto-means algorithm is centric-consistent, (scale)-invariant and \(2++\)-nearly-rich.

6 Consistency Axiom of Kleinberg Revisited

Kleinberg’s proof of the contradiction between his axioms relies to a large extent on the anti-chain property of clustering functions fitting the invariance and consistency axioms (his Theorem 3.1). As already mentioned, the mechanism behind the contradiction in his axiomatic system is based on the fact that the consistency operator creates new structures in the data. This is clearly visible in Fig. 10. In this example, if the clustering algorithm returned a single cluster, then by the consistency transform, combined with (scaling) invariance, one can obtain any clustering structure one wants. This is not something one expects from a cluster-preserving transformation. So what kind of constraints would one like to impose on such a transformation? First, one has to agree at least partially on what a cluster is. Usually we imagine a cluster as a set of points that are close to each other compared with the points of other clusters. So, in order for new clusters not to emerge, one would not allow cluster points to get relatively closer to other data points under a cluster-preserving transformation. This gives rise to the first requirement: the distances should preserve their order. The second is that the distances should not become more differentiated: longer and shorter distances should approach the same limit. Last but not least, no structural transitions of points should emerge: if a point was contained in a polyhedron of other points, it shall remain therein.

So if three data points A, C, B belong to the same cluster and upon the consistency transformation they are mapped to \(A',C',B'\), and \(|AC| < |AB|\), then we require that \(\frac{|A'C'|}{|AC|}\ge \frac{|A'B'|}{|AB|}\) and at the same time \(|A'B'| \ge |A'C'|\). Additionally, the same property has to hold in the convex hull of A, B, C, because usually we cluster a sample and not the universe when creating a partition of the data. We shall call this the inner convergent consistency condition.
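The two inequalities of the inner convergent consistency condition can be tested mechanically on a finite sample. The sketch below is illustrative only: the function name `inner_convergent` and the data are hypothetical, the points of the cluster are assumed to be pairwise distinct, and the additional requirement concerning the convex hull is not checked.

```python
import math
from itertools import permutations


def inner_convergent(before, after, eps=1e-12):
    """Check both inequalities for every ordered triple (A, C, B) of a cluster.

    `before` and `after` list the same points before and after the
    transformation; the points are assumed to be pairwise distinct.
    """
    for a, c, b in permutations(range(len(before)), 3):
        ac = math.dist(before[a], before[c])
        ab = math.dist(before[a], before[b])
        if not ac < ab:
            continue  # the condition only concerns triples with |AC| < |AB|
        ac2 = math.dist(after[a], after[c])
        ab2 = math.dist(after[a], after[b])
        if ac2 / ac < ab2 / ab - eps or ab2 < ac2 - eps:
            return False
    return True


# Uniform contraction by 0.5: all distance ratios are equal.
pts = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
scaled = [(0.5 * u, 0.5 * v) for u, v in pts]
ok_scaling = inner_convergent(pts, scaled)

# One-dimensional counterexample: the short distance shrinks much more
# strongly than the long one (1 -> 0.2 versus 3 -> 2).
line = [(0.0,), (1.0,), (3.0,)]
warped = [(0.0,), (0.2,), (2.0,)]
ok_warped = inner_convergent(line, warped)

print(ok_scaling, ok_warped)
```

The uniform contraction passes the check, whereas the warped one-dimensional map fails it.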

In one dimension, let C lie between A and B, so that \(|CA|,|BC| < |AB|\). Furthermore, \(C'\) would lie between \(A'\) and \(B'\) after the transformation. From the above condition we have that

\[|A'B'| = |A'C'|+|C'B'| \ge \frac{|A'B'|}{|AB|}|AC| + \frac{|A'B'|}{|AB|}|CB| = |A'B'|\]

This is only valid if actually \(\frac{|A'B'|}{|AB|}= \frac{|A'C'|}{|AC|}\), which means that in one dimension the consistency transformation has to take the form of a linear transformation. In two dimensions, the proof is as follows (see Fig. 11). Let AB be the longest edge of the triangle ABC. Then \(A'B'\) is the longest edge of the triangle \(A'B'C'\). Assume we have performed the invariance operation so that \(|AB|=|A'B'|\). We can superimpose both triangles as in Fig. 11, so that \(A=A'\) and \(B=B'\). Further, let H be the orthogonal projection of C onto AB and \(H'\) be the orthogonal projection of \(C'\) onto AB. Let P be a point on the line segment AB. The considerations of the 1d case indicate that under the convergent consistency transform P is identical with its image \(P'\). As PC is necessarily shorter than AC and BC, also \(P'C'/PC\ge A'C'/AC, B'C'/BC\). Let us introduce the notation: \(a=|AH|, b=|BH|, s=|PH|, d_1=|HH'|, d_2=||CH|-|C'H'||\).

So from \(P'C'/PC \ge A'C'/AC\) we get the requirement

This leads to the expression

If the distance \(s=a\), this simplifies to