Abstract

We introduce a probabilistic language and an efficient inference algorithm based on distributional clauses for static and dynamic inference in hybrid relational domains. Static inference is based on sampling, where the samples represent (partial) worlds (with discrete and continuous variables). Furthermore, we use backward reasoning to determine which facts should be included in the partial worlds. For filtering in dynamic models we combine the static inference algorithm with particle filters and guarantee that the previous partial samples can be safely forgotten, a condition that does not hold in most logical filtering frameworks. Experiments show that the proposed framework can outperform classic sampling methods for static and dynamic inference and that it is promising for robotics and vision applications. In addition, it provides the correct results in domains in which most probabilistic programming languages fail.

1 Introduction

Robotics research has made important achievements in problems such as state estimation, planning, and learning. However, the majority of probabilistic models used, such as Bayesian networks, cannot easily represent relational information, that is, objects, properties as well as the relations that hold between them. Relational representations allow one to encode more general models, to integrate background knowledge about the world, and to convert low-level information into human-readable form. Probabilistic programming languages (De Raedt et al. 2008) and statistical relational learning techniques (SRL) (Getoor and Taskar 2007) provide such relational representations and have been successful in many application areas ranging from natural language processing to bioinformatics.

This paper extends probabilistic logic programming techniques to deal with hybrid relational domains, involving both discrete and continuous random variables in two settings. The first setting is a static one, where we contribute a new inference algorithm for Distributional Clauses (DC) (Gutmann et al. 2011), a recent extension of Sato’s distribution semantics (Sato 1995) for dealing with continuous variables. The second setting is a dynamic one, where we extend the DC framework for coping with time. For the resulting Dynamic Distributional Clauses (DDC), we develop a particle filter (DCPF) that exploits the static inference algorithm for filtering. Particle filters (Doucet et al. 2000) are widely applied in domains such as probabilistic robotics (Thrun et al. 2005), and we adapt them here for use in hybrid relational domains, in which each state of the environment is represented as an interpretation, that is, a set of ground facts that defines a possible world. The statistical relational learning literature already contains several approaches to temporal models and to particle filters; see Sect. 8 for a detailed discussion. However, few frameworks are suited for tracking or other robotics applications: they are too slow for online applications or they only support discrete domains.

The distinguishing features of the proposed framework are that: (1) it provides an inference method that extends the applicability of likelihood weighting (LW) and works with zero probability evidence; (2) it exploits partial worlds as samples allowing for a potentially infinite state space; (3) it employs a relational representation to represent (context-specific) independence assumptions, and exploits these to speed up inference; (4) it is suited for tracking and robotics applications; and (5) it has a bounded space complexity for filtering by avoiding inference backward in time (backinstantiation).

The contributions of this paper are that (1) we propose an improved sampling algorithm for static inference; (2) we extend DC for dynamic domains (DDC); (3) we introduce an optimized particle filter for DDC; (4) we prove the theoretical correctness for DCPF and study its relation with Rao–Blackwellized particle filters; (5) we integrate online learning in the DCPF; and (6) we adopt the DCPF in some tracking scenarios. This paper is based on previous papers (Nitti et al. 2013, 2014c) (plus a position paper Nitti et al. 2014a and a summary paper Nitti et al. 2014b), but is extended with contributions (1) and (4), and additional experiments.

This paper is organized as follows: we first review DC (Sect. 2), introduce some extensions and present the static inference procedure (Sect. 3). We then introduce the Dynamic DC, the particle filters (Sect. 4), and propose the DCPF (Sect. 5). We then integrate learning in DCPF (Sect. 6), present some experiments (Sect. 7), discuss related work (Sect. 8), and conclude (Sect. 9).

2 Distributional clauses

We now introduce distributional clauses (DC) (Gutmann et al. 2011), an extension of the distribution semantics (Sato 1995), while assuming some familiarity with statistical relational learning and logic programming (De Raedt et al. 2008) (see “Logic programming” in the Appendix for a brief overview).

Formally, a distributional clause is a formula of the form \(\mathtt{{h}}\sim {\mathcal {D}} \leftarrow \mathtt{{b_1,\dots ,b_n}}\), where the \(\mathtt{{b_i}}\) are literals and \(\sim \) is a binary predicate written in infix notation.

The intended meaning of a distributional clause is that each ground instance of the clause \((\mathtt{{h}}\sim {\mathcal {D}} \leftarrow \mathtt{{b_1,\ldots ,b_n}})\theta \) defines the random variable \({\mathtt {h}}\theta \) with distribution \({\mathcal {D}}\theta \) whenever all the \(\mathtt{{b_i}} \theta \) hold, where \(\theta \) is a substitution. In other words, a distributional clause can be seen as a powerful template to define conditional probabilities: \(p(\mathtt{{h}}\theta |(\mathtt{{b_1,\ldots ,b_n}})\theta )={\mathcal {D}}\theta \). The term \({\mathcal {D}}\) can be nonground, i.e., values, probabilities, or distribution parameters can be related to conditions in the body. Furthermore, given a random variable r, the term \(\simeq \!\!(r)\) constructed from the reserved functor \(\simeq \!\!/1\) represents the value of r. Abusing notation, for brevity, we shall sometimes write \(r {\sim }=v\) instead of \(\simeq \!\!(r)=v\), which is true iff the value of the random variable \(r\) unifies with v.

Example 1

Consider the following clauses:

where ‘\(\mathtt{{is}}\)’ is the equality operator. Capitalized terms such as \(\mathtt{{P,A,}}\) and \(\mathtt{{B}}\) are logical variables, which can be substituted with any constant. Clause (1) states that the number of people \(\mathtt{{n}}\) is governed by a Poisson distribution with mean 6. Clause (2) models the position \(\mathtt{{pos(P)}}\) as a continuous random variable uniformly distributed from 0 to \(\mathtt{{M}}= 10 \mathtt{{N}}\) (that is, 10 times the number of people), for each person identifier \(\mathtt{{P}}\) such that \(\mathtt{{1\le P\le N}}\), where \(\mathtt{{P}}\) is integer and \(\mathtt{{ N}}\) is unified with the number of people \(\mathtt{{n}}\). For example, if the value of \(\mathtt{{n}}\) is 2, there will be 2 independent random variables \(\mathtt{{pos(1)}}\) and \(\mathtt{{pos(2)}}\) with distribution \(\mathtt{{uniform(0,20)}}\). Finally, clause (3) defines the binary relation \(\mathtt{{left}}\), comparing people positions. Note that the atom \(\mathtt{{left(a,b)}}\) is defined using a deterministic clause, but it is a random variable as it depends on other random variables.

DCs support continuous distributions (under reasonable conditions) and naturally cope with an unknown number of objects (Gutmann et al. 2011). In addition to distributional clauses, we shall also employ deterministic clauses as in Prolog. We shall often talk about clauses when the context is clear.

A distributional program \(\varvec{\mathbb {P}}\) is a set of distributional and/or deterministic clauses that defines a distribution p(x) over possible worlds x. The probability \(p(q)\) of a query \(q\) can be estimated using Monte-Carlo methods, that is, possible worlds are sampled from p(x), and p(q) is approximated as the ratio of samples in which the query q is true.

The procedure used to generate possible worlds defines the semantics and a basic inference algorithm. A possible world is generated starting from the empty partial world \(x=\emptyset \); then for each distributional clause \(\mathtt{{h}} \sim {\mathcal {D}} \leftarrow \mathtt{{b_1, \dots , b_n}}\), whenever the body \(\{\mathtt{{b_1}}\theta , \dots , \mathtt{{b_n}}\theta \}\) is true in the set x for the substitution \(\theta \), a value v for the random variable \(\mathtt{{h}}\theta \) is sampled from the distribution \({\mathcal {D}}\theta \) and \(\mathtt{{h}}\theta =v\) is added to the new partial world \(\hat{x}\). This is also performed for deterministic clauses, adding ground atoms to \(\hat{x}\) whenever the body is true. This process is then recursively repeated until a fixpoint is reached, that is, until no more variables can be sampled and added to the world. The final world is called complete or full, while the intermediate worlds are called partial. The process is based on sampling, thus ‘world’ is often replaced with sample or particle. Notice that a possible world may contain a countably infinite number of random variables (and atoms).

Example 2

Given the DC program \(\varvec{\mathbb {P}}\) defined by (1), (2), and (3), \(\mathtt{\{n=2, pos(1)=3.1, pos(2)=4.5, left(1,2)\}}\) is a possible (complete) world. This world is sampled in the following order: \(\emptyset \rightarrow \mathtt{\{n=2\}} \rightarrow \mathtt{\{n=2,pos(1)=3.1,pos(2)=4.5\}} \rightarrow \mathtt{\{n=2,pos(1)=3.1,pos(2)=4.5,left(1,2)\}}\).
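The generative process and Example 2 can be illustrated with a minimal Python sketch. It assumes the reading of clauses (1) to (3) given in the prose of Example 1. In particular, the direction of the comparison in \(\mathtt{left}\) is an assumption inferred from Example 2 (where \(\mathtt{pos(1)=3.1}\), \(\mathtt{pos(2)=4.5}\) and \(\mathtt{left(1,2)}\) holds). The names `sample_poisson`, `sample_world` and `estimate` are hypothetical helpers and do not belong to any DC implementation.

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for small means."""
    threshold = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def sample_world(rng):
    """Generate one complete world by applying the clauses in order."""
    world = {}
    # Clause (1): n follows a Poisson distribution with mean 6.
    world["n"] = sample_poisson(6, rng)
    n = world["n"]
    # Clause (2): one position per person 1..n, uniform on [0, 10*n].
    for p in range(1, n + 1):
        world[("pos", p)] = rng.uniform(0, 10 * n)
    # Clause (3): deterministic atoms left(A,B).
    # Assumption: left(A,B) holds when pos(A) < pos(B).
    for a in range(1, n + 1):
        for b in range(1, n + 1):
            if a != b and world[("pos", a)] < world[("pos", b)]:
                world[("left", a, b)] = True
    return world


def estimate(query, num_samples, seed=0):
    """Naive Monte-Carlo: fraction of sampled worlds in which query holds."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(num_samples) if query(sample_world(rng)))
    return hits / num_samples
```

For instance, `estimate(lambda w: ("left", 1, 2) in w, 20000)` approximates \(p(\mathtt{left(1,2)})\), which under these assumptions equals \(P(\mathtt{n}\ge 2)/2\). This sketch always builds the complete world, which is feasible here only because the program is finite.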

Gutmann et al. (2011) formally describe this generative process using the \(ST_P\) operator. To define a proper probability distribution \(p(x)\), \(\varvec{\mathbb {P}}\) needs to satisfy the validity conditions described in Gutmann et al. (2011). See “Distributional clauses” in the Appendix for details on the validity conditions and the \(ST_P\) operator. Throughout the paper the partial worlds will be written as \(x^{P} \), while \(x \supseteq x^{P} \) indicates a complete world consistent with \(x^{P} \), and \(x^a=x\setminus x^{P}\) represents the remaining part of the world.

We extended DC to allow for negated literals in the body of distributional clauses. To accommodate negation, we need to consider the case that a random variable is not defined in a full world x. Any comparison involving a non-defined variable will fail; therefore, its negation will succeed. In contrast, grounded atoms \(a\) are considered false in \(x\), if \(a\notin x\) (standard closed-world assumption). For further details on negation in DC see “Negation” in the Appendix.

3 Static inference for distributional clauses

Sampling full worlds is generally inefficient or may not even terminate as possible worlds can be infinitely large. Therefore, Gutmann et al. (2011) use magic sets (Bancilhon and Ramakrishnan 1986) to generate only those facts that are relevant for answering the query. Magic sets are a well-known logic programming technique for forward reasoning. In this paper, we propose a more efficient sampling algorithm based on backward reasoning and likelihood weighting.

3.1 Importance sampling

Given a program \(\varvec{\mathbb {P}}\), the probability of the query q is estimated by applying importance sampling to partial samples \(x^{P(i)}\), with \(i=1,\dots ,N\) where N is the number of samples. In importance sampling the proposal probability \(g(x)\) used to generate samples is not necessarily the target probability p(x).

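The Monte-Carlo approximation is the following (where \(\mathbb {1}_q(x)\) is the indicator function, equal to 1 if q is true in x and 0 otherwise):

$$\begin{aligned} p(q)=\int \mathbb {1}_q(x)\,p(x)\,dx=\int p(q|x^{P})\frac{p(x^{P})}{g(x^{P})}\,g(x^{P})\,dx^{P}\approx \frac{1}{N}\sum _{i=1}^{N}w_q^{(i)}, \qquad (4) \end{aligned}$$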

where the weight is \(w_q^{(i)}=p(q|x^{P(i)})\frac{p(x^{P(i)})}{g(x^{P(i)})}\). Formula (4) uses a fixed split \(x=x^{P}\cup x^a\). Following Milch (2006) we extend this idea to exploit context-specific independencies: we can have a different split in different samples.

There are two reasons to sample partial worlds instead of complete ones. First, the sampling process is faster and terminates (under some conditions) even when the complete world is a countably infinite set. Second, the estimator variance is generally lower with respect to a naive Monte-Carlo estimator that samples complete worlds. Indeed, sampling some variables \(x^{P(i)}\) and computing \(p(q|x^{P(i)})\) analytically is an instance of the conditional Monte-Carlo method (Lemieux 2009) (sometimes called Rao–Blackwellization), which has better performance with respect to naive Monte-Carlo.

The split of x has to guarantee that the probability \(p(q|x^{P(i)})\) is analytically computable. Let var(q) denote the set of all random variables in q. If \(var(q)\subseteq var( x^{P(i)})\), all the variables in q are instantiated in \(x^{P(i)}\), thus q can be evaluated deterministically: \(p(q|x^{P(i)})=\mathbb {1}_q(x^{P(i)})\), which is 1 if q is true in \(x^{P(i)}\) and 0 otherwise. In some cases it is possible to compute \(p(q|x^{P(i)})\) without sampling variables in q.

Example 3

Given the clauses (1), (2), (3),

while the marginalized variables \(\mathtt{{pos(2),pos(3), left(X,Y)}}\) are irrelevant. We could even do better, for example \(p(q|x^{P(i)})=p\mathtt{{(pos(1)>5|\{n=3\}}})\) is analytically computable without sampling \(var(q)=\mathtt{{\{pos(1)\}}}\).
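The variance argument for partial worlds can be checked numerically with a short Python sketch for the query \(\mathtt{pos(1)>5}\). It assumes the reading of clauses (1) and (2) given in Example 1, and it assumes that the comparison fails when \(\mathtt{pos(1)}\) is not defined (that is, when \(\mathtt{n}=0\)). All function names are hypothetical. The naive estimator samples both \(\mathtt{n}\) and \(\mathtt{pos(1)}\), while the conditional estimator samples only \(\mathtt{n}\) and computes \(p(\mathtt{pos(1)>5}\,|\,\mathtt{n})\) in closed form.

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for small means."""
    threshold = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def p_query_given_n(n):
    """p(pos(1) > 5 | n) with pos(1) ~ uniform(0, 10n), defined only if n >= 1."""
    if n < 1:
        return 0.0  # pos(1) does not exist, so the comparison fails
    return 1.0 - 5.0 / (10.0 * n)


def naive_term(rng):
    """Sample n and pos(1), then evaluate the query as an indicator."""
    n = sample_poisson(6, rng)
    if n < 1:
        return 0.0
    return 1.0 if rng.uniform(0, 10 * n) > 5 else 0.0


def conditional_term(rng):
    """Sample only n and compute p(q | n) analytically."""
    return p_query_given_n(sample_poisson(6, rng))


def mean_and_variance(term, num_samples, seed):
    """Sample mean and unbiased sample variance of the per-sample terms."""
    rng = random.Random(seed)
    values = [term(rng) for _ in range(num_samples)]
    mean = sum(values) / num_samples
    var = sum((v - mean) ** 2 for v in values) / (num_samples - 1)
    return mean, var
```

Both estimators target the same probability; comparing the second component returned by `mean_and_variance(naive_term, ...)` and `mean_and_variance(conditional_term, ...)` shows how much of the per-sample variance is removed by not sampling \(\mathtt{pos(1)}\).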

In general, it is impossible to sample only var(q) as the DC program does not directly define the distribution of these variables. For example, to sample \(\mathtt{{pos(1)}}\) defined by (2) we first need to sample \(\mathtt{{n}}\) defined by (1); thus we need to follow the generative sampling process (\(ST_P\) operator) until the variables of interest are sampled. Backward reasoning (or the magic set transformation) can help to focus the sampling.

The probability of a query given evidence p(q|e) can be estimated using formula (4) twice, once to estimate p(q, e) and once to estimate p(e); p(q|e) is then approximated as the ratio of the two quantities. As an alternative, formula (4) can be adapted to use the same partial samples to estimate the two quantities. In detail, each sample is split into \(x=x_e^{P} \cup x_q^{P} \cup x^a\), such that \(x_e^{P}\) is sufficient to compute \(p(e|x_e^{P})\) and \(x_q^{P} \cup x_e^{P}\) is sufficient to compute \(p(q|e,x_q^{P},x_e^{P})\). Formula (4) is applied to estimate p(e), then the same partial samples \(x_e^{P(i)}\) are expanded to estimate p(q, e):

$$\begin{aligned} p(q|e)=\frac{p(q,e)}{p(e)}\approx \frac{\sum _{i=1}^{N}w_q^{(i)}w_e^{(i)}}{\sum _{i=1}^{N}w_e^{(i)}}, \qquad (5) \end{aligned}$$

with \(w_e^{(i)}=p(e|x_e^{P(i)})\frac{p(x_e^{P(i)})}{g(x_e^{P(i)})}\) and \(w_q^{(i)}=p(q|e,x_q^{P(i)},x_e^{P(i)})\frac{p(x_q^{P(i)}|x_e^{P(i)})}{g(x_q^{P(i)}|x_e^{P(i)})}\), where the proposal \(g\) has the same factorization as the target distribution: \(g(x^P_q,x^P_e)=g(x_q^{P}|x_e^{P})g(x_e^{P})\).

3.2 Sampling partial possible worlds

We now present our approach to sampling possible worlds and computing p(q) following Equation (4) and p(q|e) following (5). Central is the algorithm with signature

EvalSampleQuery(q : query, \(x^{P(i)}\) : partial world) returns \((w^{(i)}_q,x_q^{P(i)})\)

that starts from a given query q and a partial world \(x^{P(i)}\) (which will be empty in case there is no evidence, cf. below), and generates an expanded partial world \(x_q^{P(i)}\) together with its weight \(w^{(i)}_q\) so that

1. \(x_q^{P(i)}\supseteq x^{P(i)}\), i.e., \(x_q^{P(i)}\) is an expansion of \(x^{P(i)}\) obtained using \(ST_P\),

2. \(var(q)\subseteq var(x_q^{P(i)})\), which ensures that we can evaluate q in \(x^{P(i)}_q\) and therefore \(p(q|x^{P(i)}_q)\),

3. \(w_{q}^{(i)}=p(q|x_q^{P(i)})\frac{p(x_{q}^{P(i)}|x^{P(i)})}{g(x_{q}^{P(i)}|x^{P(i)})}.\)

\(p(q)\) is estimated by calling \((w_{q}^{(i)},x_q^{P(i)})\leftarrow \) EvalSampleQuery \((q,\emptyset )\) \(N\) times and applying (4). The probability p(q|e) is estimated by calling, for each sample, \((w_{e}^{(i)},x_e^{P(i)})\leftarrow \) EvalSampleQuery(\(e,\emptyset )\) for the evidence, and \((w_{q}^{(i)},x_q^{P(i)}){\leftarrow }\) EvalSampleQuery(\(q, x_{e}^{P(i)})\) for the query given \(x_{e}^{P(i)}\), and then applying (5).

The key question is thus how to sample one such partial world \(x^{P(i)}_q\) for a generic call of EvalSampleQuery \((q, x^{P(i)})\). To realize this, we combine likelihood weighting (LW) (Fung and Chang 1989; Koller and Friedman 2009) with a variant of SLD-resolution in the EvalSampleQuery algorithm that we describe below.

SLD-resolution (Apt 1997; Lloyd 1987; Nilsson and Małiszyński 1995) is an inference procedure to prove a query q, used in logic programming, that focuses the proof on the relevant part of the program \(\varvec{\mathbb {P}}\). The basic idea is replacing an atom with its definition. Given \(q=(q_1,q_2,\dots ,q_n)\) and a rule \({head\leftarrow body} \in \varvec{\mathbb {P}}\) such that \(\theta =mgu(q_1,head)\) (i.e. \(q_1\theta =head\theta \)), then the new goal becomes \(q'=(body,q_2,\dots ,q_n)\theta \), where mgu indicates the most general unifier. If the empty goal is reached then the query is proved. If it is impossible to reach the empty goal, the query is assumed false under the closed-world assumption. There may be more than one rule that satisfies the mentioned conditions, resulting in the SLD-tree. In Prolog the tree is traversed using depth-first search with backtracking.
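The resolution step described above can be sketched in Python for the special case of ground (propositional) atoms, so that no unification is needed. This is a deliberate simplification and not the procedure used by Prolog or by EvalSampleQuery. The function `prove` and the toy program `PROGRAM` are hypothetical, and the sketch assumes an acyclic program (it has no tabling, so recursive definitions could loop).

```python
def prove(goal, program):
    """Depth-first SLD-style proof of a conjunction of ground atoms.

    `goal` is a tuple of atoms. `program` maps a head atom to a list of
    bodies; each body is a tuple of atoms and the empty tuple is a fact.
    """
    if not goal:
        return True  # empty goal reached: the query is proved
    first, rest = goal[0], goal[1:]
    for body in program.get(first, []):
        # Resolution step: replace the selected atom with a clause body.
        if prove(body + rest, program):
            return True
        # Otherwise backtrack and try the next clause for `first`.
    return False  # no clause leads to the empty goal (closed world)


# Hypothetical ground program:
#   smaller(1,2).
#   left(1,2)  <- smaller(1,2).
#   reachable  <- blocked.
#   reachable  <- left(1,2).
PROGRAM = {
    "smaller(1,2)": [()],
    "left(1,2)": [("smaller(1,2)",)],
    "reachable": [("blocked",), ("left(1,2)",)],
}
```

With this program, `prove(("reachable",), PROGRAM)` first tries the clause with body `blocked`, fails because `blocked` has no definition, backtracks, and succeeds through `left(1,2)`.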

Likelihood weighting is a type of importance sampling that forces variables to be consistent with the evidence by using an adapted proposal distribution \(g\). It has been shown (Fung and Chang 1989) that LW reduces the variance of the estimator with respect to the naive Monte-Carlo estimator. In this paper LW is also used to force variables to be consistent with the query. LW has connections with conditional Monte Carlo (for binary events). Indeed, computing \(p(r=v|x^{P(i)})\) is equivalent to imposing \(r=v\) and weighting the sample with \(w=p(r=v|x^{P(i)})\).
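As a small illustration of likelihood weighting, the following Python sketch estimates a conditional probability in a two-variable discrete model. The model and its numbers (`P_WOOD`, `P_BLACK_GIVEN`) are hypothetical and chosen only for the example; they are not taken from the programs in this paper. The evidence variable is never sampled: it is forced to its observed value and the sample is weighted by the probability of that value given the sampled parent.

```python
import random

# Hypothetical model: a material, and a color that depends on the material.
P_WOOD = 0.3
P_BLACK_GIVEN = {"wood": 0.5, "metal": 0.2}


def lw_sample(rng):
    """Sample the non-evidence variable and weight by the forced evidence."""
    material = "wood" if rng.random() < P_WOOD else "metal"
    # Evidence color=black is imposed, not sampled; its likelihood is the weight.
    weight = P_BLACK_GIVEN[material]
    return material, weight


def estimate_wood_given_black(num_samples, seed=0):
    """Self-normalized estimate of p(material=wood | color=black)."""
    rng = random.Random(seed)
    numerator = denominator = 0.0
    for _ in range(num_samples):
        material, weight = lw_sample(rng)
        denominator += weight
        if material == "wood":
            numerator += weight
    return numerator / denominator
```

For this toy model the exact value is \(0.3\cdot 0.5/(0.3\cdot 0.5+0.7\cdot 0.2)=0.15/0.29\), which the weighted estimate approaches as the number of samples grows.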

Adapting SLD-resolution. EvalSampleQuery employs an extension of SLD-resolution to determine which random variables to sample, until the query q can be evaluated. However, unlike traditional SLD-resolution, it keeps track of a number of global variables:

1. the weight \(w^{(i)}_{q}\), initialized to 1,

2. the initial query \(iq\), initialized to q, and

3. the partial sample \(x^{P(i)}\).

Starting from a goal \(G=q\), EvalSampleQuery applies inference rules until the goal G is empty (i.e., \(q\) has been proven) or no more rules can be applied (\(q\) fails). If the query succeeds, the algorithm returns the final weight and the expanded sample \((w^{(i)}_{q}, x_q^{P(i)})\). If the query fails it returns \((w^{(i)}_{q}=0,x_q^{P(i)})\).

Given a goal \(G\) and the global variables \(w^{(i)}_{q},iq,x^{P(i)}\), applying a rule produces a new goal \(G'\) and modifies the global variables:

1. \(G'\) is the new goal obtained from \(G\) using a kind of SLD-resolution step;

2. if a new variable \(r\) is sampled with value v,

   - set \(w^{(i)}_{q}\leftarrow w^{(i)}_{q}\frac{p(r=v|x^{P(i)})}{g(r=v|x^{P(i)})}\) (based on LW) and

   - \( x^{P(i)}\leftarrow x^{P(i)}\cup \{r=v\}\).

   In addition, if \(r{\sim }=Val \in iq\) and \((r{\sim }=v,iq)\Leftrightarrow iq\theta \) with \(r\) grounded and \(\theta =\{Val=v\}\) then:

   - \(iq\leftarrow iq\theta \)

3. if a new atom \(h\) is proved, set \( x^{P(i)}\leftarrow x^{P(i)}\cup \{h\}\).

The inference rules applied by EvalSampleQuery resemble SLD resolution applied to a query \(q\): they are applied with a backtracking strategy, negation as failure to prove negated literals (as in SLDNF) and tabling to improve performance. However, important differences are required to handle the stochastic nature of sampling and to exploit LW whenever possible. Note that the partial sample \(x^{P(i)}\) can only grow during the application of the inference rules, i.e., backtracking does not remove sampled values from the partial sample. This is necessary to guarantee the generation of a sample from the defined proposal distribution \(g\).

The algorithm needs the original query to apply LW. Since the inference rules change the current goal to prove, we distinguish the current goal \(G\) from the original query \(iq=q\); during sampling iq can be simplified (e.g., applying substitutions) as long as \(\varvec{\mathbb {P}}\models (x^{P(i)},iq)\Leftrightarrow (x^{P(i)},q)\), i.e. iq is logically equivalent to q given \(x^{P(i)}\) and the DC program \(\varvec{\mathbb {P}}\). For those reasons \(x^{P(i)}, w^{(i)}_{q},\) and \(iq\) are global variables.

Let us assume that the query \(q=(q_1,q_2,\dots ,q_n)\) contains only equality comparisons for random variables, e.g., \( r{\sim }=v\). Any other comparison operator \( r\odot v\) can be converted into \( r{\sim }=Val,Val\odot v\), where Val is a logical variable and \( Val\odot v\) is ground during evaluation. For example, \(\simeq \!\!(n)>5\) becomes \( n {\sim }=N,N>5\). The inference rules are the following:

1a. \({\text {If }} [\exists h\in x^{P(i)}:\theta =mgu(q_{1},h)]\) OR \([builtin(q_{1}),\exists \theta : q_{1}\theta ]\) then:

$$\begin{aligned} (q_1,q_2,\dots ,q_n) \vdash (q_2,\dots ,q_n)\theta \end{aligned}$$

i.e., if \(q_{1}\theta \) is true in \(x^{P(i)}\) for a substitution \(\theta \), remove \(q_{1}\) from the current goal and apply the substitution \(\theta \) to the current goal. \(q_{1}\) can also be a built-in predicate such as \(1<4\) that is trivially proved.

1b. \({\text {If }} \exists \theta {\text { s.t. }} h\leftarrow body \in \varvec{\mathbb {P}}, \theta =mgu(q_{1},h) \text { then:}\)

$$\begin{aligned} (q_1,q_2,\dots ,q_n)\vdash (body,add(h),q_2,\dots ,q_n)\theta \end{aligned}$$

i.e., if \(q_1\) unifies with the head of a deterministic clause, then add the body of the clause and \(add(h)\) to the current goal, and apply substitution \(\theta \). The special predicate \(add(h)\) indicates that \(h\) must be added to \(x^{P(i)}\) after the body has been proven.

2a. If \(\exists \theta \) s.t. \(h=v\in x^{P(i)},\theta =mgu(q_{1}, h{\sim }=v)\):

$$\begin{aligned} (q_1,q_2,\dots ,q_n)\vdash (q_2,\dots ,q_n)\theta \end{aligned}$$

i.e., if \(q_1\theta \) compares a sampled random variable \(h\) to a value and \(q_1\theta \) is true in \(x^{P(i)}\), then remove \(q_{1}\) from the current goal and apply substitution \(\theta \).

2b. If \(\exists \theta \) s.t. \(h\sim {\mathcal {D}} \leftarrow body \in \varvec{\mathbb {P}},\theta =mgu(q_{1},h{\sim }=Val),h\notin var(x^{P(i)})\):

$$\begin{aligned} (q_1,q_2,\dots ,q_n) \vdash (body,sample(h, {\mathcal {D}}),q_1,q_2,\dots ,q_n)\theta \end{aligned}$$

i.e., if \(q_1\) compares a (not yet sampled) variable \(h\) that unifies with the head of a DC clause, then add the body of the clause and \(sample(h, {\mathcal {D}})\) to the current goal and apply the substitution \(\theta \); \(sample(h, {\mathcal {D}})\) is a special predicate that indicates that we need to sample h from \({\mathcal {D}}\) and add \(h=val\) to \(x^{P(i)}\) after the body has been proven.

3a. If \((h{\sim }=v)\in iq, ground(h{\sim }=v), h\notin var(x^{P(i)}),[(h\ne v,x^{P(i)})\models \lnot iq ]\):

$$\begin{aligned} (sample(h, {\mathcal {D}}),q_2,\dots ,q_n)&\vdash \ (q_2,\dots ,q_n)\\ w^{(i)}_{q}&\leftarrow w^{(i)}_{q}\cdot likelihood_{{\mathcal {D}}}(h=v) \\ x^{P(i)}&\leftarrow x^{P(i)}\cup \{h=v\} \end{aligned}$$

i.e., if \(sample(h, {\mathcal {D}})\) is in the current goal, \(h{\sim }=v\) is ground in \(iq\), and \(h\ne v\) makes iq false (always true if iq is a conjunction of literals), and \(h\) is not sampled in \(x^{P(i)}\), then add \(h=v\) to \(x^{P(i)}\), weight accordingly (LW), and remove \(sample(h, {\mathcal {D}})\) from the current goal.

3b. If \(h\notin var(x^{P(i)}),ground(h)\), and rule 3a is not applicable:

$$\begin{aligned} (sample(h, {\mathcal {D}}),q_2,\dots ,q_n)&\vdash \ (q_2,\dots ,q_n) \\ x^{P(i)}&\leftarrow x^{P(i)}\cup \{h=v\}\\ {\text {if }} h{\sim }=Val\in iq,{((h{\sim }=v,iq)\Leftrightarrow iq\gamma )}{\text { then }} iq&\leftarrow iq\gamma \end{aligned}$$

with v sampled from \({\mathcal {D}}\), and \(\gamma =\{Val=v\}\). That is, if \(sample(h, {\mathcal {D}})\) is in the current goal, and rule 3a is not applicable, then sample h, add it to \(x^{P(i)}\), and remove \(sample(h, {\mathcal {D}})\) from the current goal. Finally, apply the substitution \(\gamma \) to \(iq\) iff \(iq\gamma \) is equivalent to \(iq\) with \(h{\sim }=v\) (always true if iq is a conjunction of literals).

3c. If ground(h):

$$\begin{aligned} (add(h),q_2,\dots ,q_n)&\vdash (q_2,\dots ,q_n)\\ x^{P(i)}&\leftarrow x^{P(i)}\cup \{h\} \end{aligned}$$

i.e., if \(add(h)\) is in the current goal and \(h\) is ground, then add \(h\) to \(x^{P(i)}\) and remove \(add(h)\) from the current goal.

EvalSampleQuery performs lazy instantiation exploiting context-specific independencies: only the random variables needed to answer the query are sampled; the values of the remaining random variables are irrelevant for determining the truth value of \(q\) for that specific partial instantiation.
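The idea of lazy instantiation can be sketched in Python for the query \(\mathtt{pos(1)>5}\) on the program of Example 1. This is not EvalSampleQuery itself: it hard-codes the dependency of \(\mathtt{pos(P)}\) on \(\mathtt{n}\) instead of performing resolution, it applies no likelihood weighting, and all function names are hypothetical. The partial world is a dictionary that only grows, and a variable is sampled the first time its value is requested.

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for small means."""
    threshold = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def value_of_n(world, rng):
    """Return n, sampling it only if it is not yet in the partial world."""
    if "n" not in world:
        world["n"] = sample_poisson(6, rng)
    return world["n"]


def value_of_pos(p, world, rng):
    """Return pos(p), sampling it (and its parent n) only when required.

    Returns None when pos(p) is not defined, i.e. when p is outside 1..n.
    """
    key = ("pos", p)
    if key in world:
        return world[key]
    n = value_of_n(world, rng)
    if not 1 <= p <= n:
        return None
    world[key] = rng.uniform(0, 10 * n)
    return world[key]


def query_pos1_gt_5(world, rng):
    """Evaluate pos(1) > 5; a comparison on an undefined variable fails."""
    x = value_of_pos(1, world, rng)
    return x is not None and x > 5
```

After calling `query_pos1_gt_5({}, rng)` on an empty partial world, the dictionary contains at most `n` and `pos(1)`; the positions of the other people are never generated.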

Theorem 1

For \(N \rightarrow \infty \) samples generated using EvalSampleQuery, the estimation \(\hat{p}(q)\) obtained using (4) converges with probability 1 to the correct probability p(q).

Proof

It is sufficient to prove that EvalSampleQuery satisfies the importance sampling requirement for which convergence guarantees are available (Robert and Casella 2004), that is \(\forall x: p(q|x)p(x)>0\Rightarrow g(x)>0 \) or equivalently: \(\forall x:g(x)=0\Rightarrow p(q|x)p(x)=0\). The algorithm samples random variables h using the target distribution when LW is not applied (rule 3b): \(g(h|x^{P(i)})=p(h|x^{P(i)})\). LW is applied with proposal \(g(h=val|x^{P(i)})=1\) for grounded equalities in the initial query \((h{\sim }=val) \in iq\) (rule 3a). Therefore, \(g(h\ne val,x^{P(i)})=0\) but also \(p(q|h\ne val,x^{P(i)})p(h\ne val,x^{P(i)})=0\), because the query q fails for \(h\ne val\). Indeed, \((h\ne val,x^{P(i)})\models \lnot iq\) as required in rule 3a, and it is easy to show that \(\varvec{\mathbb {P}}\models (x^{P(i)},iq)\Leftrightarrow (x^{P(i)},q)\), therefore \((\varvec{\mathbb {P}},h\ne val,x^{P(i)})\models \lnot q\). The requirement is thus satisfied (\(\forall x:g(x)=0\Rightarrow p(q|x)p(x)=0 \)). \(\square \)

Theorem 1 is extendable for conditional probabilities \(p(q|e)=p(q,e)/p(e)\), as long as \(p(e)>0\). The remainder of this section will consider \(p(e)=0\); it can be safely skipped by the reader less interested in technical details.

Zero probability evidence. Special considerations need to be made for queries with zero probability evidence, for example, when the evidence is \(h{\sim }=val\) with h a continuous random variable defined by a density. Such conditional distributions are not unambiguously defined, and a reformulation of the problem, e.g., a change of variables, can produce a different result (Borel–Kolmogorov paradox; Kadane 2011, Chap. 5.10). To avoid these issues we need to make some assumptions. In this section we make an explicit distinction between probabilities P, and densities p: \(\frac{d}{dx}P(X\le x)=p(x)\). Following Kadane (2011), we define the conditional probability for zero probability evidence as follows:

$$\begin{aligned} P(q|e=v)=\lim _{dv\rightarrow 0}\frac{P(q,e\in [v-dv/2,v+dv/2])}{P(e\in [v-dv/2,v+dv/2])}. \qquad (6) \end{aligned}$$

The conditional density is thus \(p(x|e=v)=\frac{p(x,e=v)}{p(e=v)}\). If the evidence is a disjunction, the limit might not exist, e.g.:

To avoid this issue, we assume \(da=db\). In this case we obtain

For example, the probability of nationality given a height of 180 cm or 160 cm is defined as \(P(nationality|height\in [180-da/2,180+da/2]\ OR\ height\in [160-db/2,160+db/2])\) for \(da\rightarrow 0,db\rightarrow 0\). The limit depends on how da and db relate to each other. Setting \(da=db\) seems a reasonable assumption when the intervals are comparable quantities, but other assumptions are possible. Definition (6) is extendable to more complex distributions that involve multiple variables and mixtures of discrete and continuous variables.
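The dependence of the limit on the relation between da and db can be seen numerically in a short Python sketch. The model is entirely hypothetical: two nationalities `a` and `b` with equal prior probability and Gaussian heights with the means and standard deviations listed in `HEIGHT`. The posterior is computed exactly from interval probabilities for small but finite widths.

```python
import math

# Hypothetical model: prior over nationality and Gaussian height per nationality.
PRIOR = {"a": 0.5, "b": 0.5}
HEIGHT = {"a": (180.0, 7.0), "b": (165.0, 7.0)}  # (mean, std) in cm


def normal_cdf(x, mean, std):
    """Cumulative distribution function of a Gaussian."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


def interval_prob(center, width, mean, std):
    """P(height in [center - width/2, center + width/2])."""
    upper = normal_cdf(center + width / 2, mean, std)
    lower = normal_cdf(center - width / 2, mean, std)
    return upper - lower


def posterior_a(da, db):
    """P(nationality=a | height near 180 (width da) OR height near 160 (width db))."""
    joint = {}
    for nationality, (mean, std) in HEIGHT.items():
        # The two intervals are disjoint for small widths, so probabilities add.
        evidence = (interval_prob(180.0, da, mean, std)
                    + interval_prob(160.0, db, mean, std))
        joint[nationality] = PRIOR[nationality] * evidence
    return joint["a"] / (joint["a"] + joint["b"])
```

Shrinking both widths together, for example `posterior_a(1e-3, 1e-3)` and `posterior_a(1e-4, 1e-4)`, gives nearly identical values, whereas keeping a fixed ratio `db = 10 * da` converges to a different value. This is why an assumption such as \(da=db\) is needed for the limit to be well defined.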

To apply importance sampling to definition (6), it is sufficient to estimate \(P(e\in [v-dv/2,v+dv/2])\) and \(P(q,e\in [v-dv/2,v+dv/2])\) for \(dv\rightarrow 0\). Knowing that \(dr\rightarrow 0 \Rightarrow P(h\in [r-dr/2,r+dr/2])\rightarrow p(h=r)dr\), every time we apply LW to a continuous variable, \(h=r\) is intended as \(h\in [r-dr/2,r+dr/2]\) with \(dr\rightarrow 0\), thus the incremental weight in rule 3a is \(p(h=r)dr\).

Formula (5) needs the sum of importance weights. This has to be carefully computed when there is a mix of densities and probability masses (Owen 2013, Chap. 9.8). Imagine that there is a sample weight \(w_{1}=P(x)\) obtained assigning a discrete variable to a value, and a second sample weight \(w_{2}=p(y)dy\) obtained assigning a continuous variable to a value, or more precisely to a range \([y-dy/2,y+dy/2]\), with \(dy\rightarrow 0\). The weight \(w_{1}\) trumps \(w_2\), because the latter goes to zero. Indeed, the second sample has a weight infinitely smaller than the first, and thus it is ignored in the weight sum: \(w_{1}+w_2=P(x)+p(y)dy = P(x)\) (for \(dy\rightarrow 0\)). Analogously, a weight \(w_{a}=p(x_{1},\dots ,x_n)dx_1,\dots ,dx_n\) that is the product of n (one-dimensional) densities trumps a weight \(w_{b}=p(x'_{1},\dots ,x'_n,x'_{n+1},\dots ,x'_{n+m})dx_1,\dots ,dx_{n+m}\) that is the product of n densities of the same variables and \(m>0\) other densities (i.e. \(w_a+w_b=w_a\)). If all the weights are n-dimensional densities (of the same variables), then the quantities are comparable and are trivially summed. However, if the weights refer to different variables, we need an assumption to ensure the existence of the limit, e.g., \(\forall {i}: dx_i=dx\), thus \({dx^{n+m}}/{dx^n}\rightarrow 0\), making again n-dimensional densities trump \((n+m)\)-dimensional densities. For \(m=0\) the densities are trivially summed, e.g., \(w_1=p(a,b,c)dadbdc\) and \(w_2=p(f,g,e)dfdgde\), assuming \(dadbdc=dfdgde=dx^{3}\), we obtain \(w_1+w_2=(p(a,b,c)+p(f,g,e))dx^{3}\). Finally, the ratio of weights sums in (5) is computed assuming again \(\forall {i}: dx_i=dx\), obtaining \(P(q|e)\approx \lim \limits _{dx\rightarrow 0}(k_n dx^v)/(k_d dx^l)\). If \(v>l\) then \(P(q|e)=0\), otherwise for \(v=l\) we have \(P(q|e)\approx {k_n}/{k_d}\). Those distinctions are automatically performed in EvalSampleQuery. 
For zero probability evidence we do not have convergence results for every DC program, query, and evidence; nonetheless, the inference algorithm produces the correct results in many domains, as shown in the next section and in the experiments.
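The bookkeeping for sums and ratios of mixed weights can be sketched in Python under the assumption \(\forall i: dx_i=dx\) made above. Each weight is represented as a pair `(coef, dim)` standing for \(coef\cdot dx^{dim}\), where `dim` is the number of continuous variables to which LW was applied. This representation and the function names are hypothetical and are not claimed to match the actual implementation of EvalSampleQuery.

```python
from collections import defaultdict


def sum_weights(weights):
    """Sum weights coef * dx**dim in the limit dx -> 0.

    Terms with a larger exponent vanish relative to those with the
    smallest exponent, so only the lowest-dimensional terms are kept.
    """
    totals = defaultdict(float)
    for coef, dim in weights:
        if coef > 0:
            totals[dim] += coef
    if not totals:
        return 0.0, 0
    dim = min(totals)
    return totals[dim], dim


def ratio(numerator, denominator):
    """Limit of (k_n * dx**v) / (k_d * dx**l) for dx -> 0."""
    k_n, v = numerator
    k_d, l = denominator
    if k_d == 0:
        raise ZeroDivisionError("the evidence has zero total weight")
    if k_n == 0 or v > l:
        return 0.0  # the numerator vanishes faster than the denominator
    if v == l:
        return k_n / k_d
    raise ValueError("numerator has lower dimension than denominator")
```

For example, `sum_weights([(0.2, 0), (1.5, 1)])` keeps only the probability mass `(0.2, 0)`, mirroring \(w_1+w_2=P(x)\) in the text, while `sum_weights([(0.3, 3), (0.4, 3)])` adds the two three-dimensional densities.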

3.3 Examples

We now illustrate EvalSampleQuery and the cases when LW can be applied with the following example.

Example 4

We have an urn, where the number of balls \(\mathtt{{n}}\) is a random variable and each ball \(\mathtt{{X}}\) has a color, material, and size with a known distribution. The i-th ball drawn with replacement from the urn is named \(\mathtt{{drawn(i)}}\). The special predicate \(\mathtt{{ findall(A,B,L)}}\) finds all \(\mathtt{{A}}\) that make \(\mathtt{{B}}\) true and puts them in a list \(\mathtt{{L}}\). In (11) \(\mathtt{{L}}\) is the list of integers from 1 to \(\mathtt{{N}}\), where \(\mathtt{{N}}\) is unified with the number of balls.

Let us consider the query \(\mathtt{{p(color(2){\sim }=black)}}\); the derivation is the following (omitting \(iq=q\)):

The algorithm starts by checking whether a rule is applicable to the current goal, which is initialized with the query. For example, rule 2a fails because \(\mathtt{{color(2)}}\) is not in the sample \(x^{P(i)}\). Rule 2b can be applied to clause (8), obtaining tuple 2. At this point \(\mathtt{{material(2)}}\) needs to be evaluated; it is not sampled and it unifies with the head of clause (10), thus applying rule 2b we obtain tuple 3. Now ‘\(\mathtt{{n}}\)’ is required, thus it is sampled with a value, e.g., 3 (rules 2b on (7) and 3b), for which ‘\(\mathtt{{n{\sim }=N,between(1,N,2)}}\)’ succeeds for \(\mathtt{{ N=3}}\) (rules 2a and 1a). At tuple 6 the body of (10) has been proven; therefore, \(\mathtt{{material(2)}}\) is sampled with a value, e.g., \(\mathtt{{wood}}\) (rule 3b). Now in tuple 7, the formula \(\mathtt{{ material(2){\sim }=metal}}\) fails because the sampled value is \(\mathtt{{ wood}}\) (and thus the body of clause (8) fails); the algorithm backtracks to tuple 1 and applies rule 2b on clause (9), obtaining tuple 9. This time \(\mathtt{{ material(2){\sim }=wood}}\) is true and can be removed from the current goal (rule 2a). At this point \(\mathtt{{color(2)}}\) needs to be sampled (tuple 10). LW is applied because \(\mathtt{{color(2)}}\) is in the original query \(iq=q\), thus \(\mathtt{{color(2)=black}}\) is added to the sample with weight 1/2 (rule 3a). The query in this sample is true with final weight 1/2.

3.3.1 Query expansion

Instead of asking for \(\mathtt{{color(2){\sim }=black}}\), let us add \(\mathtt{{ a \leftarrow color(2){\sim }=black}}\) to the DC program and ask \(p\mathtt{{( a)}}\); the query does not change. However, the described rules do not apply LW because \(iq=\mathtt{{a}}\), and \(\mathtt{{a}}\) is deterministic. It is clear that LW should be applicable also in this case. To solve this issue it is sufficient to expand iq, replacing each literal in iq by its definition; e.g., \(iq=\mathtt{{a}}\) becomes \(iq=\mathtt{{(color(2){\sim }=black)}}\). At this point the algorithm is able to apply LW as before. We can go even further, replacing \( iq=\mathtt{{(color(2){\sim }=black)}}\) with \( iq=\mathtt{{(color(2){\sim }=black,{(material(2){\sim }=metal\ OR\ material(2){\sim }=wood)})}}\), where the disjunction of the bodies that define \(\mathtt{{color(2)}}\) has been added. Note that for random variables we need to keep the literal in iq after the expansion (e.g., \(\mathtt{{color(2){\sim }=black}}\)). Indeed, the disjunction of the bodies guarantees only that the random variable exists, not that it takes the value black. This procedure is a type of partial evaluation (Lloyd and Shepherdson 1991) adapted for probabilistic DC programs. There are several ways to unfold (expand) a query; one possible way is the following. Before applying the inference rules, the initial query iq is set to the disjunction of all proofs of q without sampling: \( iq=(proof_1\ OR\ proof_2\ OR \dots OR\ proof_n)\). Each proof is determined using SLD-resolution (a number of unfolding operations); since random variables are not sampled, if the truth value of a literal cannot be determined because it is non-ground (e.g., \(\mathtt{{ N>0}}\), or \(\mathtt{{between(A,B,C)}}\)) it is left unchanged in the proof. Starting from \(proof=q\), a proof can be found as follows:

(e1) replace each deterministic atom \( h\in proof\) with \( body\theta \) where \( head \leftarrow body \in \varvec{\mathbb {P}}\) and \( \theta =mgu(h,head)\), the process is repeated recursively for \( body\theta \);

(e2) if the truth value of \( h\in proof\) cannot be determined it is left unchanged;

(e3) for each \( h{\sim }=value \in proof\) add \( body\theta \) where \(head\sim {\mathcal {D}} \leftarrow body \in \varvec{\mathbb {P}}\) and \( \theta =mgu(h,head)\), the process is repeated recursively for \( body\theta \).

A depth limit is necessary for recursive clauses. The obtained formula can be simplified, e.g., \((a,b)\ OR\ (a,d)\) becomes \(a,(b\ OR\ d)\); this is useful to know which variables can be forced to be true (in the example ‘a’).
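The simplification of the expanded query can be illustrated with a minimal sketch. It is not taken from the DC implementation: the representation of each proof as a set of literal names and the function name `factor_common` are assumptions made only for illustration.

```python
from typing import FrozenSet, List, Tuple

Proof = FrozenSet[str]  # one conjunction of literals, e.g. {"a", "b"} (assumed encoding)


def factor_common(proofs: List[Proof]) -> Tuple[Proof, List[Proof]]:
    """Split a disjunction of proofs into (common literals, residual disjunction).

    (a,b) OR (a,d) is returned as ({a}, [{b}, {d}]), i.e. a,(b OR d).
    An empty residual proof means that the residual disjunction is trivially true.
    """
    if not proofs:
        return frozenset(), []
    common = frozenset.intersection(*proofs)       # literals shared by every proof
    residual = [proof - common for proof in proofs]
    return common, residual


common, residual = factor_common([frozenset({"a", "b"}), frozenset({"a", "d"})])
```

The common part collects the literals that every proof requires, which are the ones that can be forced to be true.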

After the iq expansion the sampling algorithm can start using the same inference rules, which cover the case in which iq contains disjunctions. As described in rule 3a, we can apply LW setting \(h=val\) only when \((h\ne val,x^{P(i)})\models \lnot iq\). Thus, if \(iq=\mathtt{{((h{\sim }=val\ OR\ a),b)}}\), LW cannot be applied for \(\mathtt{{h{\sim }=val}}\). However, if \(\mathtt{{a}}\) becomes false, iq simplifies to \(\mathtt{{ (h{\sim }=val,b)}}\), and LW can be applied setting \(h=val\), because \(h\ne val\) makes iq false. To determine whether \((h\ne val,x^{P(i)})\models \lnot iq\) holds, it is convenient to simplify iq whenever a random variable is sampled. For example, after a random variable \(\mathtt{{h}}\) has been sampled with value \(\mathtt{{v}}\), \(\mathtt{{ h{\sim }=val}}\) is replaced with its truth value (true or false). Furthermore, \((\mathtt{{true\ OR\ a}})\) is simplified to \(\mathtt{{true}}\), \((\mathtt{{false\ OR\ a}})\) to \(\mathtt{{a}}\), and so on. The expansion of iq and the simplification guarantee that \(\varvec{\mathbb {P}}\models (x^{P(i)},iq)\Leftrightarrow (x^{P(i)},q)\) as required by Theorem 1. The iq expansion allows LW to be exploited in a broader set of cases. Indeed, it is basically a form of partial evaluation adapted for DCs, and it can exploit similar optimizations, e.g., constraint propagation, where the constraints that make the query true are propagated with the iq expansion and updated according to the sampled variables.
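The simplification of iq and the test for rule 3a can be sketched as follows. This is only an illustration under assumptions: the tuple encoding of formulas and the names `simplify` and `lw_applicable` are hypothetical, and the check is a syntactic sufficient condition (iq folds to false once the literal is set to false), not the entailment test of the actual implementation.

```python
from typing import Any, Dict

# Formula encoding (an assumption of this sketch):
#   True / False                          constants
#   ("eq", var, val)                      the literal var ~= val
#   ("atom", name)                        a literal whose truth value is undetermined
#   ("and", f1, f2, ...), ("or", f1, ...) conjunction and disjunction


def simplify(f: Any, known: Dict[Any, bool]) -> Any:
    """Replace literals whose truth value is known and fold the constants."""
    if isinstance(f, bool):
        return f
    if f[0] in ("eq", "atom"):
        return known.get(f, f)
    op, parts = f[0], [simplify(g, known) for g in f[1:]]
    absorbing = (op == "or")  # True absorbs OR, False absorbs AND
    if any(p is absorbing for p in parts):
        return absorbing
    parts = [p for p in parts if not isinstance(p, bool)]  # drop neutral constants
    if not parts:
        return not absorbing
    return parts[0] if len(parts) == 1 else (op, *parts)


def lw_applicable(iq: Any, literal: Any, known: Dict[Any, bool]) -> bool:
    """Sufficient check for rule 3a: iq becomes false once `literal` is false."""
    return simplify(iq, {**known, literal: False}) is False


h = ("eq", "h", "val")
a, b = ("atom", "a"), ("atom", "b")
iq = ("and", ("or", h, a), b)  # ((h ~= val OR a), b)
```

With this encoding, `lw_applicable(iq, h, {})` is false, whereas after `a` is known to be false the query reduces to `("and", h, b)` and the check succeeds, mirroring the example in the text.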

3.3.2 Complex queries

Example 5

A more complex query is \(\mathtt{{\simeq \!\!(color(2))=\ \simeq \!\!(color(1))}}\), which is converted to \(\mathtt{{color(2){\sim }=Y, color(1){\sim }=Y}}\) so that each subgoal refers to a single random variable. In this case, \(\mathtt{{color(2)}}\) is sampled (rule 3b: LW is not used), for example to \(\mathtt{{red}}\) (after sampling \({\mathtt{{n}}}\) and \({\mathtt{{material}}}\)). Assuming \(\mathtt{{n}}\ge 2\) the first subgoal \(\mathtt{{color(2){\sim }=Y}}\) succeeds with substitution \(\gamma =\{\mathtt{{Y= red}}\}\), thus the original query becomes \(iq=\mathtt{{ (color(2){\sim }=red, color(1){\sim }=red)}}\). The remaining subgoal will be \(\mathtt{{color(1){\sim }=red}}\) for which LW is used (rule 3a). Indeed, the original query \(iq\) becomes ground and LW can be applied.

The examples show that EvalSampleQuery exploits LW in complex queries (or evidence). This is also valid for continuous random variables for which MCMC or naive MC will return \(0\). The probability of such queries is \(0\), but \(p(e)=0\) is not a satisfactory answer to estimate p(q|e), and the limit (6) needs to be computed. For simple evidence or queries (e.g., \(\mathtt{{size(1){\sim }=v}}\)) classical LW is sufficient to solve the problem. For more complex evidence (e.g., \(\mathtt{{\simeq \!\!(size(1))=\ \simeq \!\!( size(2))}}\)) MCMC, naive MC or classical LW will fail to provide an answer (all samples are rejected). Many probabilistic languages cannot handle those queries (if we exclude explicit approximations such as discretization). In contrast, the proposed algorithm is able to provide a meaningful answer.
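The difference between rejection-style sampling and weighting for an equality between two continuous variables can be illustrated with a small sketch. It assumes, purely for illustration, that both sizes are independent standard normal variables; the distributions in the DC program may differ, and the function names are hypothetical.

```python
import math
import random


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Density of a Gaussian with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def naive_mc_hits(n: int, rng: random.Random) -> int:
    """Count samples in which two independent draws coincide exactly."""
    return sum(rng.gauss(0.0, 1.0) == rng.gauss(0.0, 1.0) for _ in range(n))


def lw_density_estimate(n: int, rng: random.Random) -> float:
    """Sample size(2), force size(1) to the same value, weight by its density."""
    total = 0.0
    for _ in range(n):
        y = rng.gauss(0.0, 1.0)   # size(2) sampled from its (assumed) prior
        total += normal_pdf(y)    # weight: density of size(1) at the forced value
    return total / n
```

Under these assumptions the naive estimator practically never produces a matching pair, while the weighted estimator converges to the density of the event, which for two standard normals is \(1/(2\sqrt{\pi })\approx 0.282\).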

Example 6

Let us consider the Indian GPA problem (Perov et al.) proposed by Stuart Russell. According to Perov et al. “Stuart Russell [...] pointed out that most probabilistic programming systems [...] produce the wrong answer to this problem”. The reason for this is that contemporary probabilistic programming languages do not adequately deal with mixtures of density and probability mass distributions. The proposed inference algorithm for DC does provide the correct results for the Indian GPA problem. The DC program that defines the domain is the following:

where \(\mathtt{{h \sim val(v)}}\) means that \(\mathtt{{h}}\) has value \(\mathtt{{v}}\) with probability 1. Briefly, the student GPA has a mixed distribution that depends on the student nationality. An interesting query is \(p(\mathtt{{nation{\sim }=america|studentGPA}})\). For example, for \(\mathtt{{studentGPA}}=4\) such probability is 1. The proposed inference algorithm provides the correct result for this query. This is due to the proper estimation of limit (6) and the relative importance weights. Moreover, the iq expansion and the generalized LW avoid rejection-sampling issues with continuous evidence.
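Why mixed distributions require care can be seen in a small exact computation. The sketch below is not the sampling algorithm and does not reproduce the DC program: the prior on nationality, the probabilities of a perfect grade, and all names are hypothetical values chosen for illustration. It only shows that a point mass dominates any density in the limit of a shrinking window around the observed value.

```python
from typing import Tuple

# Hypothetical parameters; the values in the DC program may differ.
P_AMERICA = 0.25
P_PERFECT = {"america": 0.15, "india": 0.10}   # point mass at the top grade
MAX_GPA = {"america": 4.0, "india": 10.0}      # otherwise uniform on (0, max)


def likelihood(nation: str, gpa: float) -> Tuple[float, float]:
    """Return (probability mass, density) that `nation` assigns to the value gpa."""
    top = MAX_GPA[nation]
    mass = P_PERFECT[nation] if gpa == top else 0.0
    density = (1.0 - P_PERFECT[nation]) / top if 0.0 < gpa < top else 0.0
    return mass, density


def posterior_america(gpa: float) -> float:
    """p(nation = america | studentGPA = gpa) in the limit of a shrinking window.

    If any hypothesis puts positive mass on gpa, the densities vanish in the
    limit and only the masses matter; otherwise the densities are compared.
    """
    prior = {"america": P_AMERICA, "india": 1.0 - P_AMERICA}
    terms = {n: likelihood(n, gpa) for n in prior}
    use_mass = any(m > 0.0 for m, _ in terms.values())
    score = {n: prior[n] * (terms[n][0] if use_mass else terms[n][1]) for n in prior}
    total = sum(score.values())
    if total == 0.0:
        raise ValueError("gpa has zero probability under the model")
    return score["america"] / total
```

With these assumed parameters `posterior_america(4.0)` is 1, in agreement with the value stated above, while for a value such as 3.0 the two densities are compared and both nationalities remain possible.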

Example 7

The last case to discuss is a query that contains random variables that are nonground terms, e.g., \(\mathtt{{color(X){\sim }=black}}\), which is interpreted as \(\mathtt{{\exists X\ color(X){\sim }=}} \mathtt{{black}}\). In this case LW is not applied because the goal is nonground. Applying LW would produce wrong results because we would force the value \(\mathtt{{black}}\) only for the first proof (e.g., \(\mathtt{{color(1){\sim }=black}}\)), ignoring the other possible proofs (e.g., \(\mathtt{{color(2){\sim }=black}}\)), and thus violating the importance sampling requirement. In some cases, query expansion can enumerate all possible grounded proofs, making LW applicable.

LW can also be applied for the query \(\mathtt{{\simeq \!\!(material(\simeq \!\!(drawn(1))))= wood}}\) (the first drawn ball is made of wood) which is converted to \(\mathtt{{(drawn(1){\sim }=X, material(X){\sim }=wood)}}\). Once \(\mathtt{{drawn(1)}}\) is sampled to a value \(\mathtt{{v}}\) (without LW), the substitution \(\theta =\{\mathtt{{X= v}}\}\) is applied to the current goal and to iq. At this point LW can be applied to \(\mathtt{{material(v){\sim }=wood}}\) because it is grounded in iq (rule 3a). In other words, for the partial world \(x^{P(i)}=\mathtt{\{drawn(1)=v\}}\) the only value of \(\mathtt{{material(v)}}\) that makes the query true is \(\mathtt{{wood}}\) for which LW is applicable. For this query one sample is sufficient to obtain the exact result.

4 Dynamic distributional clauses

We now extend Distributional Clauses to Dynamic Distributional Clauses (DDC) for modeling dynamic domains. We then discuss how inference by means of filtering is realized in the propositional case.

4.1 Dynamic distributional clauses

As in input-output HMMs and in planning, we shall explicitly distinguish between the hidden state \(x_t\), the evidence or observations \(z_t\), and the action \(u_t\) (input). Therefore, in DDCs, each predicate/variable is classified as state x, action u, or observation z, with a subscript that refers to time: 0 for the initial step, t for the current step, and \(t+1\) for the next step. The definition of a discrete-time stochastic process follows the same idea as a Dynamic Bayesian Network (DBN). We need sets of clauses that define:

1. the prior distribution: \(\mathtt{{h_0}}\sim {\mathcal {D}} \leftarrow \mathtt{{body_0}}\),

2. the state transition model: \(\mathtt{{h_{t+1}}}\sim {\mathcal {D}} \leftarrow \mathtt{{body_{t:t+1}}}\) (the body involves variables at time t and possibly at time \(t+1\)),

3. the measurement model: \(\mathtt{{z_{t+1}}}\sim {\mathcal {D}} \leftarrow \mathtt{{body_{t+1}}}\), and

4. clauses that define a random variable at time t from other variables at the same time (intra-time dependence): \(\mathtt{{h_{t}}}\sim {\mathcal {D}} \leftarrow \mathtt{{body_t}}\).

Obviously, deterministic clauses are allowed in the definition of the stochastic process. As these clauses are all essentially distributional clauses, the semantics remains unchanged.

Example 8

Let us consider a dynamic model for the position of 2 objects: a ball and a box. For the sake of clarity we consider one-dimensional positions.

The object positions have a uniform distribution at step 0 (14). The next position of each object is equal to the current position plus Gaussian noise (15). In addition, the observation model (16) for each object is a Gaussian distribution centered at the actual object position. As in Example 1, we can also define the number of objects as a random variable.
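The generative process described in this example can be simulated with a few lines of code. The sketch below is written under assumptions: the range of the uniform prior, the two standard deviations, and all function names are hypothetical, since the actual values are fixed by clauses (14) to (16).

```python
import random
from typing import Dict, List, Tuple

OBJECTS = ["ball", "box"]
# Hypothetical parameters; clauses (14)-(16) fix the actual values.
PRIOR_RANGE = (0.0, 1.0)
TRANSITION_STD = 0.1
OBSERVATION_STD = 0.05

State = Dict[str, float]


def initial_state(rng: random.Random) -> State:
    """Prior: each one-dimensional position is uniform at step 0."""
    return {obj: rng.uniform(*PRIOR_RANGE) for obj in OBJECTS}


def transition(state: State, rng: random.Random) -> State:
    """Next position = current position plus Gaussian noise."""
    return {obj: pos + rng.gauss(0.0, TRANSITION_STD) for obj, pos in state.items()}


def observe(state: State, rng: random.Random) -> State:
    """Observation = Gaussian centered at the actual position."""
    return {obj: rng.gauss(pos, OBSERVATION_STD) for obj, pos in state.items()}


def simulate(steps: int, seed: int = 0) -> Tuple[State, List[Tuple[State, State]]]:
    """Return the initial state and a list of (state, observation) pairs for t = 1..steps."""
    rng = random.Random(seed)
    state = initial_state(rng)
    first, trajectory = state, []
    for _ in range(steps):
        state = transition(state, rng)
        trajectory.append((state, observe(state, rng)))
    return first, trajectory
```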

4.2 Inference and filtering

If we consider the time step as an argument of the random variable, inference can be done as in the static case with no changes. However, the performance will degrade, while time and space complexity will grow linearly with the maximum time step considered in the query. This problem can be mitigated if we are interested in filtering, as we shall show in the next section.

The problem of filtering has been well studied in the propositional case (e.g., for DBNs). It is concerned with the estimation of the current state \(x_t\) when the state is only indirectly observable through observations \(z_{t}\). Formally, filtering is concerned with estimating the belief, that is, the probability density function \(bel(x_t)=p(x_t |z_{1:t}, u_{1:t})\). The Bayes filter recursively computes the belief at time \(t+1\), starting from the belief at t, the last observation \(z_{t+1}\), and the last action performed \(u_{t+1}\) through

$$\begin{aligned} bel(x_{t+1}) = \eta \, p(z_{t+1} | x_{t+1}) \int p(x_{t+1} | x_{t},u_{t+1})\, bel(x_{t})\, dx_{t} \end{aligned}$$

where \(\eta \) is a normalization constant. Even in the propositional case, the above integral is only tractable for specific combinations of distributions \(bel(x_{t})\), \(p(x_{t+1} | x_{t},u_{t+1})\), and \(p(z_{t+1} | x_{t+1})\) [e.g., the Kalman filter (Kalman 1960)], and one therefore has to resort to approximations. One solution used in the propositional case is to use Monte-Carlo techniques for approximating the integral, resulting in the particle filter (Doucet et al. 2000). The key idea of particle filtering is to represent the belief by a set of weighted samples (often called particles). Given N weighted samples \(\{(x_{t}^{(i)},w_{t}^{(i)})\}\) distributed as \(bel(x_{t})\), a new observation \(z_{t+1}\), and a new action \(u_{t+1}\), the particle filter generates a new weighted sample set that approximates \(bel(x_{t+1})\).

The Particle Filtering (PF) algorithm proceeds in three steps:

(a) Prediction step: sample a new set of samples \(x_{t+1}^{(i)}\), \(i=1, \ldots , N\), from a proposal distribution \(g(x_{t+1} | x_{t}^{(i)}, z_{t+1}, u_{t+1})\).

(b) Weighting step: assign to each sample \(x_{t+1}^{(i)}\) the weight:

$$\begin{aligned} w_{t+1}^{(i)} =w_{t}^{(i)} \frac{p(z_{t+1} | x_{t+1}^{(i)}) p(x_{t+1}^{(i)} | x_{t}^{(i)}, u_{t+1})}{g(x_{t+1}^{(i)} | x_{t}^{(i)}, z_{t+1}, u_{t+1})} \end{aligned}$$

(c) Resampling: if the variance of the sample weights exceeds a certain threshold, resample with replacement, from the sample set, with probability proportional to \(w_{t+1}^{(i)}\) and set the weights to 1.

A common simplification is the bootstrap filter, where the proposal distribution is the state transition model \(g(x_{t+1} | x_{t}^{(i)}, z_{t+1}, u_{t+1}) = p(x_{t+1} | x_{t}^{(i)}, u_{t+1})\) and the weight simplifies to \(w_{t+1}^{(i)} =w_{t}^{(i)} p(z_{t+1} | x_{t+1}^{(i)})\).
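The three steps, in their bootstrap form, can be sketched for a one-dimensional random-walk model. This is an illustration under assumptions rather than the implementation used in this paper: the noise levels, the function names, and the use of the effective sample size as a proxy for the weight-variance test are choices made for the sketch (the effective sample size decreases as the variance of the normalized weights increases).

```python
import math
import random
from typing import List, Tuple


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def effective_sample_size(weights: List[float]) -> float:
    """(sum w)^2 / sum w^2: equals N for uniform weights and drops as they spread."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)


def bootstrap_step(particles: List[float], weights: List[float], z: float,
                   rng: random.Random, trans_std: float = 0.1,
                   obs_std: float = 0.05, ess_ratio: float = 0.5
                   ) -> Tuple[List[float], List[float]]:
    # (a) prediction: the proposal is the state transition model
    predicted = [x + rng.gauss(0.0, trans_std) for x in particles]
    # (b) weighting: w_{t+1} = w_t * p(z_{t+1} | x_{t+1})
    new_weights = [w * gaussian_pdf(z, x, obs_std) for w, x in zip(weights, predicted)]
    if sum(new_weights) == 0.0:
        raise ValueError("all particles have zero weight")
    # (c) resampling when the weights are too uneven, then reset the weights to 1
    if effective_sample_size(new_weights) < ess_ratio * len(predicted):
        predicted = rng.choices(predicted, weights=new_weights, k=len(predicted))
        new_weights = [1.0] * len(predicted)
    return predicted, new_weights


def weighted_mean(particles: List[float], weights: List[float]) -> float:
    return sum(w * x for w, x in zip(weights, particles)) / sum(weights)
```

Calling `bootstrap_step` once per observation and reading `weighted_mean` afterwards gives a point estimate of the state under the assumed model.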

5 DCPF: a particle filter for dynamic distributional clauses

We now develop a particle filter for a set of dynamic distributional clauses that define the prior distribution, state transition model and observation model (cf. Sect. 4.1). Throughout this development, we only consider the bootstrap filter for simplicity, but other proposal distributions are possible.

5.1 Filtering algorithm

The basic relational particle filter applies the same steps as the classical particle filter sketched in Sect. 4.2 and employs the forward reasoning procedure for distributional clauses sketched in Sect. 2. Each sample \(x_{t}^{(i)}\) will be a complete possible world at time \(t\). Working with complete worlds is computationally expensive and may lead to bad performance. Therefore, we shall work with samples that are partial worlds as in the static case. The resulting framework, that we shall now introduce, is called the Distributional Clauses Particle Filter (DCPF).

Starting from a DDC program \(\varvec{\mathbb {P}}\), weighted partial samples \(\{(x_{t}^{P(i)},w_{t}^{(i)})\}\), a new observation list \(z_{t+1}=\{z^j_{t+1}=v_j\}\), and a new action \(u_{t+1}\), the DCPF filtering algorithm performs the weighting and prediction steps from time \(t\) to time \(t+1\) expanding the partial samples as shown in Fig. 1. The new set of samples is \(\{(\hat{x}_{t+1}^{P(i)},w_{t+1}^{(i)})\}\). The DCPF filtering algorithm is the following:

- Step (1): expand the partial sample to compute \(w_{t+1}^{(i)} = w_{t}^{(i)} p({z_{t+1}} |\hat{x}_{t+1}^{P(i)})\)

- Resampling (if necessary)

- Step (2): complete the prediction step (a)

Step (1) performs the weighting step (b) and implicitly (part of) the prediction step (a): it computes the weight \(w_{t+1}^{(i)} = w_{t}^{(i)} p({z_{t+1}} |\hat{x}_{t+1}^{P(i)})\) calling EvalSampleQuery \(({z_{t+1}},x_{t}^{P(i)})\). EvalSampleQuery will automatically sample relevant variables at time \(t+1\) and t until \(p({z_{t+1}} |\hat{x}_{t+1}^{P(i)})\) is computable. If there are no observations, Step (1) is skipped, and the weights remain unchanged. At this point each sample has a new weight \(w_{t+1}^{(i)}\), and resampling can be performed. For resampling we use a minimum variance sampling algorithm (Kitagawa 1996), but other methods are possible.
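As an illustration of a low-variance resampling scheme, the sketch below implements systematic resampling, in which a single random offset determines all picks. Whether this coincides with the exact variant used in the DCPF implementation is an assumption; the function name is hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def systematic_resample(samples: Sequence[T], weights: Sequence[float],
                        rng: random.Random) -> List[T]:
    """Draw len(samples) items; item i is picked about n * w_i / sum(w) times."""
    n = len(samples)
    total = float(sum(weights))
    if n == 0 or total <= 0.0:
        raise ValueError("need at least one sample with positive weight")
    step = total / n
    pointer = rng.uniform(0.0, step)  # single random offset shared by all picks
    resampled: List[T] = []
    i, cumulative = 0, weights[0]
    for _ in range(n):
        # advance to the sample whose cumulative weight interval contains the pointer
        while pointer > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(samples[i])
        pointer += step
    return resampled
```

After such a step the weights of the resampled particles are set to 1, as in the resampling step (c) of the classical particle filter.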

Fig. 1 Sample partition, before (left) and after (right) the filtering algorithm. Initially \(x_{t+1}\) is not sampled, therefore \({x}^a_{t+1}=x_{t+1}\) and \(x^{P}_{t+1}=\emptyset \). The inference algorithm samples variables \(x^m_t \subseteq x^a_t \), \(x^m_{t+1} \subseteq x^a_{t+1} \) and adds them respectively to \(x^{P}_{t}\) and \(x^{P}_{t+1}\). Indeed, \(\hat{x}^{P}_{t}={x}^{P}_{t} \cup x^m_t\), \(\hat{x}^a_{t}={x}^a_{t} \setminus x^m_t\), \(\hat{x}^{P}_{t+1}={x}^{P}_{t+1} \cup x^m_{t+1}=x^m_{t+1}\), \(\hat{x}^a_{t+1}={x}^a_{t+1} \setminus x^m_{t+1}\).

Step (2) performs the prediction step for variables that have not yet been sampled because they are not directly involved in the weighting step. The algorithm queries the head of any DC clause in the state transition model (intra-time clauses excluded), thus it evaluates the body recursively. Whenever the body is true for a substitution \(\theta \), the variable-distribution pair \(r_{t+1}\theta \sim {\mathcal {D}}\theta \) is added to the sample. Avoiding sampling is beneficial for performance, as discussed in Sect. 3.1. Step (2) is necessary to make sure that the partial sample at the previous time step t can be safely forgotten, as we shall discuss in the next section. After Step (2) the set of partial samples \(\hat{x}^{P(i)}_{t+1}\) with weights \(w_{t+1}^{(i)}\) approximates the new belief \(bel(x_{t+1})\).

In the classical particle filter resampling is the last step (c). In contrast, DCPF performs resampling before completing the prediction step (i.e., before Step (2)). This is loosely connected to auxiliary particle filters that perform resampling before the prediction step (Whiteley and Johansen 2010). Anticipating resampling is beneficial because it reduces the variance of the estimation.

To answer a query \(q_{t+1}\), it suffices to call \((w_{q}^{(i)},\hat{x}_{q_{t+1}}^{P(i)})\leftarrow \) EvalSampleQuery(\( q,\hat{x}_{t+1}^{P(i)}\)) for each sample and use formula (5) where \(w_e^{(i)}\) is replaced by \(w_{t+1}^{(i)}\). After querying, the partial samples \(\hat{x}_{q_{t+1}}^{P(i)}\supseteq \hat{x}_{t+1}^{P(i)}\) are discarded, i.e., the partial samples remain \(\hat{x}_{t+1}^{P(i)}\). This improves performance, since querying does not expand \(\hat{x}_{t+1}^{P(i)}\).

Example 9

Consider an extension of Example 8, where the next position of the object after a tap action is the current position plus a displacement and plus Gaussian noise. The latter two parameters depend on its type and the material of the object below it. We consider a single axis for simplicity.

We define \(\mathtt{{on(A,B)_t}}\) from the z position of \(\mathtt{{A}}\) and \(\mathtt{{ B}}\). \(\mathtt{{A}}\) is on \(\mathtt{{B}}\) when \(\mathtt{{A}}\) is above \(\mathtt{{B}}\) and the distance is lower than a threshold. We omit the clause for brevity.

Fig. 2 A partial sample for Example 9, before (left) and after (right) the filtering algorithm

To understand the filtering algorithm let us consider Step (1) for the observation \(\mathtt{{obsPos(1)_{t+1}{\sim }=2.5}}\) (there is no observation for object 2), and action \(\mathtt{{tap(1)_{t+1}}}\). Let us assume \(x_{t}^{P(i)}=\mathtt{{\{pos(1)_t=2,pos(2)_t=5\}}}\). The sample before and after the filtering step is shown in Fig. 2. The algorithm tries to prove \(\mathtt{{ {obsPos(1)_{t+1}}{\sim }=2.5}}\). Rule 2b applies for DC clause (21) that defines \(\mathtt{{obsPos(1)_{t+1}}}\) for \(\theta =\mathtt{{\{ID=1\}}}\). The algorithm tries to prove the body and the variables in the distribution recursively, that is \(\mathtt{{ pos(1)_{t+1}}}\). The latter is not in the sample and rule 2b applies for DC clause (17) with \(\theta =\mathtt{{\{ID=1\}}}\). The proof fails; therefore, the algorithm backtracks and applies rule 2b to (18). Its body is true assuming that \(\mathtt{{on(1,3)_t}}\) succeeds (and is added to the sample). Thus, \(\mathtt{{pos(1)_{t+1}}}\) will be sampled from \(\mathtt{{gaussian(2.3,0.04)}}\) and added to the sample (rule 3b). Deterministic facts in the background knowledge, such as \(\mathtt{{ type(1,cube)}}\), are common to all samples; therefore, they are not added to the sample. At this point \(p(\mathtt{{obsPos(1)_{t+1}}}{\sim }=2.5|\hat{x}^{P(i)}_{t+1})\) is given by (21). This is equivalent to applying rule 3a that imposes the query to be true and updates the weight.

Step (1) is complete; let us now consider Step (2). The algorithm queries all the variables in the head of a clause in the state transition model, in this case \(\mathtt{{pos(ID)_{t+1}}}\). This is necessary to propagate the belief for variables not involved in the weighting process, such as \(\mathtt{{pos(2)_{t+1}}}\). The query \(\mathtt{{ pos(ID)_{t+1}{\sim }=Val}}\) succeeds for \(\mathtt{{ID=2}}\) applying (17), and \(\mathtt{{pos(2)_{t+1}\sim gaussian(5,0.01)}}\) is added to the sample. The algorithm backtracks looking for alternative proofs of q; there are none, so the procedure ends. In the next step \(\mathtt{{ pos(2)_{t+1}}}\) is required for \(\mathtt{{pos(2)_{t+2}}}\), so \(\mathtt{{ pos(2)_{t+1}}}\) will be sampled from the distribution stored in the sample. Note that \(\mathtt{{on(A,B)_t}}\) is evaluated selectively. Any other relation or random variable possibly defined in the program remains marginalized. For example, any relation that involves object 2 is not required (e.g., \(\mathtt{{on(2,B)_t}}\)).
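The resulting partial sample, in which one variable holds a value and the other holds only its distribution, can be sketched as follows. The data structure and names are hypothetical, the second argument of the Gaussian is assumed to be a variance, and the observation variance is an assumed value because clause (21) is not reproduced here.

```python
import math
import random
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass(frozen=True)
class Gaussian:
    mean: float
    variance: float  # assumed (mean, variance) parametrization

    def sample(self, rng: random.Random) -> float:
        return rng.gauss(self.mean, math.sqrt(self.variance))

    def pdf(self, x: float) -> float:
        return math.exp(-0.5 * (x - self.mean) ** 2 / self.variance) / math.sqrt(
            2.0 * math.pi * self.variance)


Entry = Union[float, Gaussian]  # a sampled value or a stored distribution


def value_of(sample: Dict[str, Entry], name: str, rng: random.Random) -> float:
    """Return the value of a variable, sampling a stored distribution on first use."""
    entry = sample[name]
    if isinstance(entry, Gaussian):
        entry = entry.sample(rng)
        sample[name] = entry  # from now on the variable is part of the partial world
    return entry


def filter_step(rng: random.Random, obs_variance: float = 0.01
                ) -> Tuple[Dict[str, Entry], float]:
    sample: Dict[str, Entry] = {}
    # Step (1): pos(1)_{t+1} is needed to weight the observation, so it is sampled.
    sample["pos(1)"] = Gaussian(2.3, 0.04).sample(rng)
    weight = Gaussian(sample["pos(1)"], obs_variance).pdf(2.5)
    # Step (2): pos(2)_{t+1} is not needed yet; only its distribution is stored.
    sample["pos(2)"] = Gaussian(5.0, 0.01)
    return sample, weight
```

A later call such as `value_of(sample, "pos(2)", rng)` corresponds to the situation in which the variable is required at the next step and is sampled from the stored distribution.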

5.2 Avoiding backinstantiation

We showed that lazy instantiation is beneficial to reduce the number of variables to sample and to improve the precision of the estimation in the static case. However, to evaluate a query at time t in dynamic models, the algorithm might need to instantiate variables at previous steps, sometimes even at time 0. We call this backinstantiation. This requires one to store the entire sampled trajectory \(x^{P(i)}_{1:t}\), which may have a negative effect on performance. If we are interested in filtering, this is a waste of resources.

We shall now show that the described filtering algorithm performs lazy instantiation over time and avoids backinstantiation. We will first derive sufficient conditions for avoiding backinstantiation in DDC, and then prove that these conditions hold for the DCPF algorithm.

Rao-Blackwellization. Let us assume that the complete world at time t can be written as \(x_t = x_{t}^{P} \cup x_{t}^{a}\) (Fig. 1). Let us consider the following factorization:

In the particle filtering literature this is called Rao–Blackwellization (Doucet et al. 2000), where \( bel(x^{P}_{1:t})\) is approximated as a set of weighted samples \(\sum _i w^{(i)} \delta _{x^{P(i)}_{1:t}}(x^{P}_{1:t})\) (\(\delta _v(x)\) is the Dirac delta function centered in \(v\)), while the posterior distribution of \(x^a_t\) is available in closed form.

Rao–Blackwellized particle filters (RBPF) described in the literature adopt a fixed and manually defined split of \(x_t = x_{t}^{P} \cup x_{t}^{a}\). In contrast, our approach exploits the language and its inference algorithm to perform a dynamic split that may differ across samples, as described for the static case in Sect. 3.

Backinstantiation in the DCPF. One contribution in the DCPF is that it integrates RBPF and logic programming to avoid backinstantiation over variables \(r\in x_{1:t-1}\). For this reason we are interested in performing a filtering step determining the smallest partial samples that approximate the new belief \(bel(x_{t+1})\) and are d-separated from the past. To avoid backinstantiation we require that \(p(x^{a}_{t}| x^{P(i)}_{1:t},z_{1:t},u_{1:t} )\) is a known distribution for each sample i or at most parametrized by \( x^{P(i)}_{t}\): \(p(x^{a}_{t}| x^{P(i)}_{1:t},z_{1:t},u_{1:t} )=f^{(i)}_{t}( x^a_{t}; x^{P(i)}_{t} )\). Note that the latter equation does not make any independence assumptions: \(f^{(i)}_{t}\) is a probability distribution that incorporates the dependency of previous states and observations and can be different in each sample.

Formally, starting from a partial sample \(x^{P(i)}_{1:t}\) with weight \(w^{(i)}_{t}\) sampled from \(p(x^{P}_{1:t}|z_{1:t},u_{1:t})\), a new observation \(z_{t+1}\), a new action \(u_{t+1}\), and the distribution \(p(x^{a}_{t}|x^{P(i)}_{1:t},z_{1:t},u_{1:t} )\), we look for the smallest partial sample \(\hat{x}^{P(i)}_{1:t+1}=\{x^{P(i)}_{1:t-1},\hat{x}_{t}^{P(i)},\hat{x}_{t+1}^{P(i)}\}\) with \(x_{t}^{P(i)}\subseteq \hat{x}_{t}^{P(i)}\), such that \(\hat{x}^{P(i)}_{1:t+1}\) with weight \(w^{(i)}_{t+1}\) is distributed as \(p(\hat{x}^{P}_{1:t+1}|z_{1:t+1},u_{1:t+1})\) and \(p(x^{a}_{t+1}|\hat{x}^{P(i)}_{1:t+1},z_{1:t+1},u_{1:t+1} )\) is a probability distribution available in closed form. Even though the formulation considers the entire sequence \(x_{1:t+1}\), to estimate \(bel(x_{t+1})\) the previous samples \(\{x^{P(i)}_{1:t-1},\hat{x}_{t}^{P(i)}\}\) can be forgotten.

D-separation conditions. We will now describe sufficient conditions that guarantee d-separation and thus avoid backinstantiation; then we will show that these conditions hold for the DCPF filtering algorithm. The belief update is performed by adopting RBPF steps.

Starting from \(x_{t}^{P(i)}, w^{(i)}_{t},p(x^{a}_{t}|x^{P(i)}_{1:t},z_{1:t},u_{1:t} )=f^{(i)}_{t}(x^a_t;x^{P(i)}_t )\) and a new observation \(z_{t+1}\), let us expand \(x_{t}^{P(i)}\) sampling random variables \(r_t\in var(x^a_{t})\) from \(f^{(i)}_{t}(x^a_t;x^{P(i)}_t )\), and \(r_{t+1}\in var(x_{t+1})\) from the state transition model \(p(r_{t+1}|x^{P(i)}_{t})\) defined by DDC clauses, until the expanded sample \(\{\hat{x}_{t}^{P(i)},\hat{x}_{t+1}^{P(i)}\}\) and the remaining \(\hat{x}^a_{t},\hat{x}^a_{t+1}\) satisfy the following conditions:

1. the partial interpretation \(\hat{x}^{P(i)}_{t+1}\) does not depend on the marginalized variables \(x^a_{t}\): \(p(\hat{x}^{P(i)}_{t+1} |\hat{x}^{P(i)}_{t},\hat{x}^a_{t}, u_{t+1})=p(\hat{x}^{P(i)}_{t+1} |\hat{x}^{P(i)}_{t},u_{t+1})\);

2. the weighting function does not depend on the marginalized variables: \(p(z_{t+1} | x^{(i)}_{t+1})= p(z_{t+1}|\hat{x}^{P(i)}_{t+1})\);

3. \(p(\hat{x}^a_{t+1}|\hat{x}^{P(i)}_{1:t+1},z_{1:t+1},u_{1:t+1} )=f^{(i)}_{t+1}(\hat{x}^a_{t+1}; \hat{x}^{P(i)}_{t+1})\) is available in closed form.

Condition 1 is a common simplifying assumption in RBPF (Doucet et al. 2000), while condition 2 is not strictly required; however, it simplifies the weighting and the computation of \(f^{(i)}_{t+1}\). In some cases condition 2 can be removed, for example for discrete or linear Gaussian models (using Kalman filters). Condition 3 guarantees that the previous samples \(\{x^{P(i)}_{1:t-1},\hat{x}_{t}^{P(i)}\}\) can be forgotten to estimate \(bel(x_\tau )\) for \(\tau \ge t+1\).

Theorem 2

Under the d-separation conditions 1, 2, and 3 the samples \(\{\hat{x}_{t+1}^{P(i)},f^{(i)}_{t+1}(\hat{x}^a_{t+1}; \hat{x}^{P(i)}_{t+1})\}\) with weights \(\{w^{(i)}_{t+1}\}\) approximate \(bel(x_{t+1})\), with

If \(\hat{x}^a_{t+1}\) does not depend on \(\hat{x}^a_t\), then \(f^{(i)}_{t+1}(\hat{x}^a_{t+1}; \hat{x}^{P(i)}_{t+1})=p(\hat{x}^a_{t+1}|\hat{x}^{P(i)}_t, \hat{x}^{P(i)}_{t+1})\).

Proof

The formulas are derived from RBPF (for the bootstrap filter) for which:

where \(\eta =p(z_{t+1}|\hat{x}^{P(i)}_{0:t+1},z_{1:t})\). Condition 2 makes \(p(z_{t+1} | x^{(i)}_{t+1})=p(z_{t+1} |\hat{x}^{P(i)}_{t+1})\) and \(\eta =p(z_{t+1} |\hat{x}^{P(i)}_{t+1})\), simplifying the formulas. \(\square \)

Step (1) in the filtering algorithm (Sect. 5.1) guarantees conditions 1 and 2, while Step (2) guarantees condition 3. For a proof sketch see Theorems 5 and 6 in “Theorems” in the Appendix. Indeed, EvalSampleQuery used in Steps (1) and (2) will never need to sample variables at time \(t-1\) or before, because the belief distribution of the marginalized variables \(r_t\in \hat{x}^a_t\), \(f^{(i)}_{t}(x^a_t;\hat{x}^{P(i)}_t )\), is available in closed form and (possibly) parameterized by \(\hat{x}^{P(i)}_t\), while \(r_{t+1}\in \hat{x}^a_{t+1}\) are sampled from the state transition model. After Step (2) any \(r_{t+1}\in \hat{x}^a_{t+1}\) is derivable from \(\hat{x}_{t+1}^{P(i)}\) together with the DDC program. These conditions avoid backinstantiation during filtering or query evaluation, thus previous partial states \(x_{0:t}^{P(i)}\) can be forgotten.

Step (2) avoids computing the integral (23). The integral is approximated with a single sample, or equivalently the partial sample is expanded until \(\hat{x}^a_{t+1}\) does not depend on marginalized \(\hat{x}^a_t\), for which \(f^{(i)}_{t+1}(\hat{x}^a_{t+1}; \hat{x}^{P(i)}_{t+1})=p(\hat{x}^a_{t+1}|\hat{x}^{P(i)}_t, \hat{x}^{P(i)}_{t+1})\) is derivable from the DDC program. In detail, for each \(r_{t+1}\theta \in \hat{x}^a_{t+1}\) Step (2) stores \(r_{t+1}\theta \sim {\mathcal {D}}\theta \), where \({\mathcal {D}}\theta =p(r_{t+1}\theta |\hat{x}^{P(i)}_t, \hat{x}^{P(i)}_{t+1})\), e.g., \(\mathtt{{pos(2)_{t+1}}}\sim \mathtt{{Gaussian(5,0.01)}}=f^{(i)}_{t+1}\mathtt{{(pos(2)_{t+1})}}\) as shown in Fig. 2. Such a distribution is not parametrized and it is generally different in each sample. In contrast, \(p(\mathtt{{size(A)_{t}}}|x^{P(i)}_{1:t},z_{1:t},u_{1:t} )=Gaussian([{\text {if }} \mathtt{{type(A,ball)}} {\text { then }} \mu =1 ;{\text { else }} \mu =2],0.1)=f^{(i)}_{t}(\mathtt{{size(A)_{t}}};x^{P(i)}_{t} )\) is a distribution parametrized by other variables in \(x^{P(i)}_{t}\). It is sufficient to store this parametric function once, using DDC clauses, instead of storing each distribution separately for each sample. Similarly, the DDC clause that defines \(\mathtt{{on(A,B)_t}}\) from object positions at time t is sufficient to represent all marginalized facts \(\mathtt{{on(a,b)_t}}\) not in \(x^{P(i)}_t\). In general, intra-time DDC clauses can represent distributions of marginalized variables for an unspecified number of objects. Thus, Step (2) does not need to query variables defined by intra-time clauses, as proved in Theorem 6, “Theorems” in the Appendix.

Step (2) could be improved by applying (23) whenever possible. Moreover, if there is a set of variables that has the same prior and transition model, the belief update (23) can be performed once for the whole set. Whenever a variable is required, it will be sampled. This does not require bounding the number of such sets of variables, and it can be considered as a simple form of lifted belief update. In some cases the belief \(f^{(i)}_{t}(x^a_{t}; x^{P(i)}_{t})\) can be directly specified for any time \(t\); we call this precomputed belief. Like the lifted belief update, this is applicable to an unbounded set of variables and avoids unnecessary sampling.

Theorem 3

DCPF has a space complexity per step and sample bounded by the size of the largest partial state \(x^{P(i)}_{t}\), together with \(f^{(i)}_t(x_t^a; x^{P(i)}_{t})\).

Proof

(sketch) The filtering algorithm proposed for DCPF avoids backinstantiation, therefore the space complexity is bounded by the dimension of the state space at time \(t\). A tighter bound is the size of the largest partial state. \(\square \)

5.3 Limitations

We will now describe the limitations of the proposed algorithms.

Lazy instantiation is beneficial only when there are facts or random variables that are irrelevant during inference. For example, this is true when the model includes background knowledge that is not entirely required for a query. In fully-connected models or when the entire world is relevant for a query, lazy instantiation is useless. Nonetheless, the proposed method generalizes LW, thus it can be beneficial even in the described worst cases.

The above issues also apply to inference in dynamic models, but the latter raises additional issues for filtering in particular. One is the curse of dimensionality, which produces poor results for high-dimensional state spaces, or equivalently requires a huge number of samples to give acceptable results. There are some solutions in the literature (e.g., factorising the state space; Ng et al. 2002). In DCPF this problem can be alleviated using a suboptimal proposal distribution (see “Proposal distribution” in the Appendix for details).

6 Online parameter learning

So far we assumed that the model used to perform state estimation is known. In practice, it may be hard to determine or to tune the parameters manually, and therefore the question arises as to whether we can learn them. We will first review online parameter learning in classical particle filters, then we will show how to adapt those methods in DCPF.

6.1 Learning in particle filters

A simple solution to perform state estimation and parameter learning with particle filters consists of adding the static parameters to the state space: \(\bar{x}_t=\{x_t,\theta \}\). The posterior distribution \(p(\bar{x}_t|z_{1:t})\) is then described as a set of samples \(\{x^{(i)}_t,\theta ^{(i)}\}\). However, this solution produces poor results due to the following degeneracy problem. As the parameters are sampled in the first step and left unchanged (since they are static variables), after a few steps the parameter samples \(\theta ^{(i)}\) will degenerate to a single value due to resampling. This value will remain unchanged regardless of incoming new evidence. Limiting or removing resampling is not a good solution, because it will produce poor state estimation results. Better strategies have been proposed and are summarized in Kantas et al. (2009). We focus on two simple techniques with limited computational cost: artificial dynamics (Higuchi 2001) and resample-move (Gilks and Berzuini 2001). Both methods introduce diversity among the samples to solve the described degeneration problem.
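The degeneracy problem described above can be reproduced with a small simulation. The sketch below is a toy setup, not the paper's model: the uniform prior range, the Gaussian-shaped likelihood, and the true value 7.0 are all assumptions chosen for illustration. It counts how many distinct static-parameter values survive repeated resampling when the parameter is never perturbed.

```python
import math
import random

def resample(values, weights, rng):
    """Multinomial resampling: draw len(values) items with replacement."""
    return rng.choices(values, weights=weights, k=len(values))

def distinct_parameters_over_time(n_particles=200, n_steps=30, seed=0):
    """Track how many distinct static-parameter values survive resampling.

    Assumptions (illustrative only): theta is drawn once from uniform(0, 20)
    and never moved; observations are noisy readings of a true value 7.0.
    """
    rng = random.Random(seed)
    thetas = [rng.uniform(0.0, 20.0) for _ in range(n_particles)]
    history = [len(set(thetas))]
    for _ in range(n_steps):
        z = rng.gauss(7.0, 1.0)  # noisy observation of the true parameter
        weights = [math.exp(-0.5 * (z - th) ** 2) for th in thetas]
        thetas = resample(thetas, weights, rng)
        history.append(len(set(thetas)))
    return history
```

Because resampling can only copy existing values, the count of distinct parameters never increases, which is why the two techniques discussed next reintroduce diversity explicitly.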

The first method adds artificial dynamics to the parameter \(\theta \): \(\theta _{t+1}=\theta _t + \epsilon _{t+1},\) where \(\epsilon _{t+1}\) is artificial noise with a small variance that decreases over time. This strategy has the advantage of being simple and fast; nonetheless, it modifies the original problem and requires tuning (Kantas et al. 2009). We will show that this technique is suitable for the scenarios considered in this paper (for a limited number of parameters).

The second method, resample-move, adds a single MCMC step to rejuvenate the parameters in the samples. There are several variants of this technique; the most notable are Storvik’s filter (Storvik 2002) and Particle Learning by Carvalho et al. (2010). To understand these approaches, consider the following factorization of the joint distribution of interest:

In addition to the standard propagation and weighting steps, both algorithms perform a Gibbs sampling step that samples a new parameter value \(\theta _{t}^{(i)}\) from the distribution \(p(\theta | {x^{(i)}_{0:t}},{z_{1:t}})=p(\theta |s^{(i)}_{t}) \) where \(s^{(i)}_{t}\) captures the sufficient statistics of the distribution. \(p(\theta | {x}_{0:t},{z_{1:t}})\) is recursively updated as follows:

This leads to a deterministic sufficient statistics update \(s_{t} = S(s_{t-1},x_t,x_{t-1},z_t)\).
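In the notation of this section, the factorization and the recursive update used by resample-move methods are commonly written as follows. This is a sketch of the standard form for a state-space model with a static parameter \(\theta \), assuming the usual Markov and conditional-independence properties; it is not a verbatim reproduction of the paper's displays.

```latex
\[
p(x_{0:t},\theta \mid z_{1:t})
  = p(\theta \mid x_{0:t}, z_{1:t})\; p(x_{0:t} \mid z_{1:t}),
\]
\[
p(\theta \mid x_{0:t}, z_{1:t})
  \propto p(z_t \mid x_t,\theta)\; p(x_t \mid x_{t-1},\theta)\;
          p(\theta \mid x_{0:t-1}, z_{1:t-1}).
\]
```

When the prior on \(\theta \) is conjugate to the two likelihood terms, the right-hand side stays in the same family, which is what makes a fixed-size sufficient statistic \(s_t\) possible.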

Storvik’s filter algorithm goes as follows:

- propagate: \(x^{(i)}_t \sim g({x_t}|{x^{(i)}_{t-1}},\theta _{t-1}^{(i)},z_t)\),
- resample samples with weights: \(w^{(i)}_t=\frac{p({z_t}|{x^{(i)}_t},\theta _{t-1}^{(i)})p({x^{(i)}_t}|{x^{(i)}_{t-1}},\theta _{t-1}^{(i)})}{g({x^{(i)}_t}|{x^{(i)}_{t-1}},\theta _{t-1}^{(i)},z_t)}\),
- propagate sufficient statistics: \(s^{(i)}_{t} = S(s^{(i)}_{t-1},x^{(i)}_t,x^{(i)}_{t-1},z_t)\), and
- sample \(\theta _{t}^{(i)} \sim p(\theta |s^{(i)}_{t})\).
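The four steps above can be sketched for a toy model. Everything model-specific here is an assumption made for illustration: a one-dimensional random walk with unknown drift \(\theta \), Gaussian noise variances `q` and `r`, a Gaussian prior on \(\theta \), and the transition model used as proposal \(g\), so that the weight reduces to the observation likelihood.

```python
import math
import random

def storvik_filter(observations, n_particles=500, q=0.1, r=0.5,
                   prior_mean=0.0, prior_var=4.0, seed=0):
    """Storvik-style filter for x_t = x_{t-1} + theta + N(0, q), z_t = x_t + N(0, r).

    Returns the average posterior mean of theta over the final particles.
    The sufficient statistics are the mean and variance of the Gaussian
    posterior p(theta | s_t), updated conjugately from d = x_t - x_{t-1}.
    """
    rng = random.Random(seed)
    particles = []
    for _ in range(n_particles):
        theta = rng.gauss(prior_mean, math.sqrt(prior_var))
        particles.append({"x": 0.0, "mean": prior_mean,
                          "var": prior_var, "theta": theta})

    for z in observations:
        proposed, weights = [], []
        for p in particles:
            # 1. propagate, using the transition model as proposal g
            x_new = p["x"] + p["theta"] + rng.gauss(0.0, math.sqrt(q))
            # 2. weight: with g equal to the transition model, w = p(z_t | x_t)
            weights.append(math.exp(-0.5 * (z - x_new) ** 2 / r))
            proposed.append((p, x_new))
        if sum(weights) == 0.0:
            weights = [1.0] * n_particles  # guard against numerical underflow
        chosen = rng.choices(proposed, weights=weights, k=n_particles)

        particles = []
        for p, x_new in chosen:
            # 3. sufficient statistics update; in this toy model the
            #    observation does not depend on theta, so z is not used here
            d = x_new - p["x"]  # d ~ N(theta, q)
            var = 1.0 / (1.0 / p["var"] + 1.0 / q)
            mean = var * (p["mean"] / p["var"] + d / q)
            # 4. sample a fresh theta from p(theta | s_t)
            theta = rng.gauss(mean, math.sqrt(var))
            particles.append({"x": x_new, "mean": mean,
                              "var": var, "theta": theta})

    return sum(p["mean"] for p in particles) / n_particles
```

Storing the statistics per particle is what distinguishes this scheme from simply appending \(\theta \) to the state: each resampled particle draws a new \(\theta \), so diversity is not lost to resampling alone.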

Particle Learning, proposed by Carvalho et al. (2010), is an optimization of Storvik’s filter, since it adopts the auxiliary particle filter (Pitt and Shephard 1999) and an optimal proposal distribution g. Unlike artificial dynamics, resample-move strategies do not change the original problem, and they have proven successful for several classes of problems (Carvalho et al. 2010, 2010; Lopes et al. 2010). However, they suffer from the sufficient statistics degeneracy problem, which can produce an increasing error in the parameter posterior distribution (Andrieu et al. 2005).

6.2 Online parameter learning for DCPF

We now propose an integration of the mentioned learning methods in DCPF. The main contribution is to adapt artificial dynamics and Storvik’s filter for DCPF and to allow learning of a number of parameters defined at run-time. Indeed, the relational representation allows one to define an unbounded set of parameters to learn, e.g., the size of each object \(\mathtt{{size(ID)}}\). The number of objects, and thus of parameters to learn, is not necessarily known in advance.

Artificial dynamics in DCPF. To describe the integration of artificial dynamics in DCPF we consider an object tracking scenario called Learnsize (Sect. 7.4), in which the parameters to learn are the sizes of all objects. We defined a uniform prior: \(\mathtt{size(ID)_{0} \sim uniform(0,20)}.\) Since the number of objects is not defined in advance, we can directly define the size distribution at time t for any \(\mathtt{size(ID)_{t}}\) not yet sampled: \(\mathtt{size(ID)_{t} \sim uniform(0,20)}\) (i.e., \(f^{(i)}_{t}(x^a_{t}; x^{P(i)}_{t})\) is directly defined for any \(\mathtt{size(ID)_t}\) not yet sampled). Whenever the size of an object \(\mathtt{x}\) (not yet sampled) is needed for inference, \(\mathtt{size(x)_{t}}\) is sampled from the above rule with no need to perform backinstantiation. The transition model, in turn, defines the artificial dynamics: \(\mathtt{size(ID)_{t+1} \sim gaussian(\simeq \!\!(size(ID)_{t}),}\ \bar{\sigma }^2/\mathtt{T^{X})},\) where \(\mathtt{T}\) is the time step, \(\mathtt{X}\) is a fixed exponent (set to 1 in this paper) and \(\bar{\sigma }^2\) is a constant that represents the initial variance. Initially the variance is high, so the particle filter can “explore” the parameter space; after some steps the variance decreases in the hope that the parameter converges to the real value.
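A minimal sketch of this scheme for a single particle is given below. It is written in Python rather than in DC syntax, and the dictionary representation, the function names, and the value chosen for \(\bar{\sigma }^2\) are assumptions made for illustration only.

```python
import math
import random

SIGMA2_BAR = 4.0  # hypothetical initial variance (sigma-bar squared)
EXPONENT_X = 1.0  # fixed exponent X; the paper sets it to 1

def size_at(particle, obj_id, rng):
    """Return size(obj_id) in this particle, sampling it lazily on first use.

    Mirrors the rule size(ID)_t ~ uniform(0, 20) for sizes not yet sampled,
    so no earlier time step has to be revisited.
    """
    if obj_id not in particle:
        particle[obj_id] = rng.uniform(0.0, 20.0)
    return particle[obj_id]

def artificial_dynamics_step(particle, t, rng):
    """Perturb every already-sampled size with variance SIGMA2_BAR / t**EXPONENT_X.

    t is the time step and must be >= 1; sizes that were never requested
    stay uninstantiated and cost nothing.
    """
    std = math.sqrt(SIGMA2_BAR / (t ** EXPONENT_X))
    for obj_id in particle:
        particle[obj_id] = rng.gauss(particle[obj_id], std)
```

Only objects whose size was actually requested appear in the particle, so the number of learned parameters grows with the objects encountered rather than being fixed in advance.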

Storvik’s filter in DCPF. Equation (24) shows how to update the parameter posterior and then the sufficient statistics for each sample. However, this formulation needs a conjugate prior for the parameter likelihood. For a complex distribution such a prior may be hard to find. We developed a variant of Storvik’s filter to overcome this problem. In detail, we add \({\hat{\theta }_t}\) to the state; it represents the currently sampled “parameter” value, while \(\theta \) is the parameter to estimate, e.g., the mean of \({\hat{\theta }_t}\) (Fig. 3 on the right). We also assume that the state \(x_t\) and the observations depend only on \({\hat{\theta }_t}\): \(p(x_t|x_{t-1},{\hat{\theta }_t},\theta )=p(x_t|x_{t-1},{\hat{\theta }_t})\) and \( p({z_t}|{x_t},\hat{\theta }_t,\theta )= p({z_t}|{x_t},\hat{\theta }_t)\). Thus, the posterior becomes:

Knowing that \(p(\theta |\hat{\theta }_{0:t},{x_{0:t}},{z_{1:t}})\propto p({x_{0:t}},\theta ,\hat{\theta }_{0:t}|{z_{1:t}})\) we replace (24) with:

Thus \(p(\theta |\hat{\theta }_{0:t},{x_{0:t}},{z_{1:t}})=p(\theta |\hat{\theta }_{0:t})=p(\theta | s_t)\). At this point we can avoid sampling \(\theta \) as required by Storvik’s filter, and instead sample \({\hat{\theta }_t}\) from the marginal distribution: \(p({\hat{\theta }_t}|s_{t-1})=\int _\theta p({\hat{\theta }_t}|\theta )p(\theta |s_{t-1})d\theta .\)

For example, in the Learnsize scenario, for each object \(\mathtt{{ID}}\) we have \({\hat{\theta }_t}=\mathtt {cursize_{t}(ID)}\) and the parameter to learn is \(\theta =\mathtt {size(ID)}\). For each object \(p({\hat{\theta }_t}|\theta )\) is defined as \(\mathtt{{cursize_{t}(ID) \sim Gaussian(\simeq \!\!size(ID),}}\bar{\sigma }^2)\), where \(\bar{\sigma }^2\) is a fixed variance. The conjugate prior of \(\mathtt{{size(ID)}}\) is a Gaussian with hyperparameters \(\mathtt{{\mu _0(ID),\sigma _0^2(ID)}}\): \(\mathtt{{ size(ID) \sim Gaussian(\mu _0(ID),\sigma _0^2(ID))}}\). As explained, \(\theta =\mathtt {size(ID)}\) need not be sampled; instead, \({\hat{\theta }_t}=\mathtt {cursize_{t}(ID)}\) is directly sampled from \(p({\hat{\theta }_t}|s_{t-1})\), i.e., \(\mathtt{{cursize_{t}(ID)} \sim Gaussian(\mu _{t-1}(ID),\sigma _{t-1}^2(ID)+\bar{\sigma }^2)}\). For each \(\mathtt{{ ID}}\) the posterior \(p(\theta |s_{t})\) is Gaussian, like the prior, and the sufficient statistics \(s_{t}=\mathtt{{\mu _{t}(ID),\sigma _{t}^2(ID)}}\) are computed using Bayesian inference. The posterior distribution of the parameters can become peaked in a few steps, again causing a degeneracy problem. This issue is mitigated by reducing the influence of the evidence during the Bayesian update.
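The Gaussian case above can be written out as a short sketch. The function names are hypothetical, and the `discount` argument is only one possible way to reduce the influence of the evidence; the text does not specify the mechanism actually used, so it should be read as an assumption.

```python
import math
import random

def sample_cursize(mu_prev, var_prev, obs_var, rng):
    """Draw cursize_t from the marginal Gaussian(mu_{t-1}, var_{t-1} + obs_var).

    obs_var plays the role of the fixed variance sigma-bar squared.
    """
    return rng.gauss(mu_prev, math.sqrt(var_prev + obs_var))

def update_statistics(mu_prev, var_prev, cursize, obs_var, discount=1.0):
    """Conjugate Gaussian update of the sufficient statistics (mu, var).

    discount in (0, 1] is a hypothetical tempering factor: values below 1
    scale down the precision contributed by cursize, so the posterior
    narrows more slowly. discount=1.0 is the plain Bayesian update.
    """
    precision = 1.0 / var_prev + discount / obs_var
    var_new = 1.0 / precision
    mu_new = var_new * (mu_prev / var_prev + discount * cursize / obs_var)
    return mu_new, var_new
```

For instance, starting from \(\mu =0\), \(\sigma ^2=1\) and observing a current size of 2 with \(\bar{\sigma }^2=1\), the plain update gives mean 1 and variance 0.5, while a discount of 0.5 leaves a wider posterior with variance 2/3.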

7 Experiments

This section answers the following questions:

- (Q1) Does the EvalSampleQuery algorithm obtain the correct results?
- (Q2) How do the DCPF and the classical particle filter compare?
- (Q3) How do the DC and DCPF perform with respect to a representative state-of-the-art probabilistic programming language for static and dynamic domains?
- (Q4) Is the DCPF suitable for real-world applications?
- (Q5) How do the learning algorithms perform?

Among the several state-of-the-art probabilistic languages, BLOG (Milch et al. 2005) is a system that shares some similarities with DC and DCPF. For this reason, we compared the performance with BLOG for static domains and its dynamic extension DBLOG (de Salvo Braz et al. 2008) for temporal domains.

All algorithms were implemented in YAP Prolog and run on an Intel Core i7 3.3GHz for simulations and on a laptop Core i7 for real-world experiments. To measure the error between the predicted and the exact probability for a given query, we compute the empirical standard deviation (STD). The average used to compute the STD is the exact probability when available, or the empirical average otherwise. We report \(99\%\) confidence intervals for the STD. Notice that those intervals refer to the uncertainty of the STD estimation, not to the uncertainty of the probability. If the number of samples is not sufficient to give an answer (e.g., all samples are rejected), a value is randomly chosen from 0 to 1. The results are averaged over 500 runs. In all the experiments we measure the CPU time (“user time” in the Unix “time” command). This allows a fair comparison between DC and DCPF (not parallelized in the current implementation) and (D)BLOG, which often uses more than one CPU at a time. Time includes initialization: around 0.3s for (D)BLOG and 0.03s for DC and DCPF.

We first describe experiments in static domains, then in dynamic domains (synthetic and real-world scenarios).

7.1 Static domains

In the first experiment we tested the correctness of EvalSampleQuery for static inference with and without LW and query expansion (Q1) using Example 4 in Sect. 3.3. Figure 4 shows some results. The error (STD) converges to zero for all algorithms. EvalSampleQuery with LW (without query expansion) has a lower STD (Fig. 4b), but it is slower for the same number of samples (Fig. 4c). Nonetheless, the overhead is worthwhile because, for the same execution time, the STD of LW is lower (Fig. 4a). Adding query expansion (Sect. 3.3.1) to LW has a computational cost. This cost pays off for query q1 (as defined in the caption of Fig. 4), since expansion allows LW to be exploited in disjunctions. For query q2, however, the query expansion overhead is not compensated by an error reduction.

Fig. 4 Results for static inference with LW and without LW (naive). For LW we show results with (LWexp) and without query expansion. The queries are \(\mathtt{{q1=(drawn(3) {\sim }=10)}}\) with evidence \(\mathtt{{((drawn(1){\sim }=9,drawn(2){\sim }=9)\ OR\ (drawn(1){\sim }=10,drawn(2){\sim }=10))}}\) and \(\mathtt{{q2=(drawn(1) {\sim }=X,}}\) \(\mathtt{{drawn(2) {\sim }=X,color(X){\sim }=black)}}\). The axes in (a) and (b) are in logarithmic scale. q1LWexp and q2naive partially overlap in (a) and (b); q2LWexp and q2LW overlap in (b)

In the second experiment (Fig. 5) we considered an identity uncertainty domain (footnote 3) used in BLOG (Milch et al. 2005). The query considered is the probability that the second and third drawn balls are the same, given that the colors of the drawn balls are respectively black, white, and white. We compared DC with BLOG. We consider several settings for DC: naive (EvalSampleQuery without LW), LW (EvalSampleQuery with LW, using formula (5) to compute p(q|e)), and LW2, where EvalSampleQuery estimates p(q, e) and p(e) independently using half of the available samples each. LW and LW2 are tested with and without query expansion (respectively LWexp and LW2exp). The results show that the error (STD), for the same number of samples or time, is lower for DC with LW (Fig. 5a, b). In particular, the lowest error is obtained with LW2exp. Any DC setting is faster than BLOG (Fig. 5c). The latter has an unexpected logarithmic-like behavior for a small number of samples. In addition, it seems that BLOG does not use LW in this domain; indeed, its error is comparable with naive Monte Carlo for the same number of samples (Fig. 5b). In contrast, as described in Sect. 3.3.2, EvalSampleQuery exploits LW in complex queries such as equalities between random variables.
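The difference between the LW and LW2 settings can be illustrated on a toy model. The sketch below assumes that formula (5) is the usual self-normalized ratio of weighted sums, and it replaces the urn domain with a hypothetical two-variable model: a binary variable `c` with prior 0.3 and a Gaussian observation centred on `c`. It is not expected to reproduce the ranking reported in the figure.

```python
import math
import random

def weighted_samples(n, y_obs, rng):
    """Sample c ~ Bernoulli(0.3); weight each sample by the density of y_obs given c."""
    out = []
    for _ in range(n):
        c = 1 if rng.random() < 0.3 else 0
        w = math.exp(-0.5 * (y_obs - c) ** 2) / math.sqrt(2.0 * math.pi)
        out.append((c, w))
    return out

def lw_estimate(n, y_obs, rng):
    """LW: ratio of weighted sums computed on one shared set of n samples."""
    samples = weighted_samples(n, y_obs, rng)
    return sum(w for c, w in samples if c == 1) / sum(w for _, w in samples)

def lw2_estimate(n, y_obs, rng):
    """LW2: estimate p(q, e) and p(e) on two independent halves of the budget."""
    half = n // 2
    joint = weighted_samples(half, y_obs, rng)
    evidence = weighted_samples(half, y_obs, rng)
    p_qe = sum(w for c, w in joint if c == 1) / half
    p_e = sum(w for _, w in evidence) / half
    return p_qe / p_e

def exact_posterior(y_obs):
    """Closed-form p(c = 1 | y_obs) for the toy model, used as reference."""
    num = 0.3 * math.exp(-0.5 * (y_obs - 1) ** 2)
    return num / (num + 0.7 * math.exp(-0.5 * y_obs ** 2))
```

Both estimators target the same conditional probability; they differ only in whether numerator and denominator share samples, which changes the variance but not the limit.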

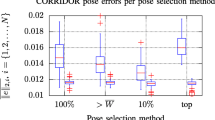

Fig. 5 Identity uncertainty domain used in Milch et al. (2005). The axes in (a) and (b) are in logarithmic scale. LW and LWexp overlap in (b); BLOG and naive overlap in (b); LW and LW2 overlap in (c)