Abstract

We show that Malitsky’s recent Golden Ratio Algorithm for solving convex mixed variational inequalities can be employed in a certain nonconvex framework as well, which makes it, to the best of our knowledge, the first iterative method in the literature for solving generalized convex mixed variational inequalities. We illustrate this result by numerical experiments.


1 Introduction

Mixed variational inequalities are general problems which encompass as special cases several problems from continuous optimization and variational analysis, such as minimization problems, linear complementarity problems, vector optimization problems or variational inequalities, and they have applications in economics, engineering, physics, mechanics and electronics (see [8, 9, 11, 12, 23, 32,33,34, 36] among others).

The first motivation behind this study comes from [19, 20], where existence results for mixed variational inequalities involving quasiconvex functions were provided, so the question of numerically solving such problems arose naturally. As far as we know, the only iterative methods in the literature for solving mixed variational inequalities without convexity assumptions are those in works like [32,33,34], where the involved functions are taken continuous. However, these algorithms are either implicitly formulated or merely conceptual schemes, and we are not aware of any implementations of them. Note moreover that these methods involve inner loops or two forward steps in each iteration, while the one considered in our work makes a single proximal step per iteration.

In order to provide algorithms for solving mixed variational inequalities involving nonconvex functions that are not necessarily continuous, we can envision at least two approaches: extending algorithms from the corresponding convex case, or combining methods of proximal point type for minimizing quasiconvex functions (cf. [24, 35]) with known algorithms for solving variational inequalities. In this note we contribute to the first direction, leaving the second for future research.

More precisely, we show that the recent Golden Ratio Algorithm due to Malitsky (cf. [27]) remains convergent for certain nonconvex mixed variational inequalities as well, which makes it, to the best of our knowledge, the first iterative method (of proximal point type) in the literature for solving nonsmooth mixed variational inequalities beyond convexity. The governing operators of the mixed variational inequalities that turn out to be solvable via the Golden Ratio Algorithm need to satisfy only a quite general monotonicity type hypothesis, while the involved functions can be prox-convex, a generalized convexity notion recently introduced in [13] whose definition is satisfied by several families of quasiconvex and weakly convex functions, among others. The theoretical advances are illustrated and confirmed by numerical experiments in which, even though only toy examples are considered, some interesting facts regarding the “conflict” between the operator and the function involved in the mixed variational inequalities can be noticed.

The structure of the paper is as follows. In Sect. 2, we set up notation and preliminaries, reviewing some standard facts on generalized convexity. In Sect. 3, we show that the Golden Ratio Algorithm converges for certain nonconvex mixed variational inequalities, while in Sect. 4 we computationally solve in MATLAB two mixed variational inequalities where the involved functions are prox-convex (but not convex) on the constraint set, one of them being an application in oligopolistic markets.

2 Preliminaries and Basic Definitions

We denote by \(\langle \cdot ,\cdot \rangle \) the inner product of \({\mathbb {R}}^{n}\) and by \(\Vert \cdot \Vert \) the Euclidean norm. The indicator function of a nonempty set \(K \subseteq {\mathbb {R}}^{n}\) is defined by \(\iota _{K} (x) := 0\) if \(x \in K\), and \(\iota _{K} (x) := + \infty \) elsewhere. By \({\mathbb {B}} (x, \delta )\) we mean the closed ball centered at \(x\in {\mathbb {R}}^{n}\) and with radius \(\delta > 0\). By convention, take \(0(+\infty )=+\infty \).

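Given any \(x, y, z \in {\mathbb {R}}^{n}\) and \(\beta \in {\mathbb {R}}\), we have

$$\begin{aligned} \langle x - z, y - x \rangle = \frac{1}{2} \Vert z - y \Vert ^{2} - \frac{1}{2} \Vert x - z \Vert ^{2} - \frac{1}{2} \Vert y - x \Vert ^{2}, \end{aligned}$$(2.1)

$$\begin{aligned} \Vert \beta x + (1-\beta ) y \Vert ^{2} = \beta \Vert x \Vert ^{2} + (1-\beta ) \Vert y \Vert ^{2} - \beta (1-\beta ) \Vert x - y \Vert ^{2}. \end{aligned}$$(2.2)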
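The two identities ⟨x − z, y − x⟩ = ½‖z − y‖² − ½‖x − z‖² − ½‖y − x‖² and ‖βx + (1 − β)y‖² = β‖x‖² + (1 − β)‖y‖² − β(1 − β)‖x − y‖² can be sanity-checked numerically. The Python sketch below (helper names are ours, not from the source) evaluates the gap between both sides on random vectors and on arbitrary real β; it is a floating-point check, not a proof.

```python
import random


def dot(u, v):
    """Euclidean inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def sqnorm(u):
    return dot(u, u)


def three_point_gap(x, y, z):
    """LHS minus RHS of <x - z, y - x> = (|z - y|^2 - |x - z|^2 - |y - x|^2) / 2."""
    lhs = dot(sub(x, z), sub(y, x))
    rhs = 0.5 * (sqnorm(sub(z, y)) - sqnorm(sub(x, z)) - sqnorm(sub(y, x)))
    return lhs - rhs


def convex_combination_gap(x, y, beta):
    """LHS minus RHS of |b x + (1-b) y|^2 = b|x|^2 + (1-b)|y|^2 - b(1-b)|x - y|^2."""
    w = [beta * a + (1 - beta) * b for a, b in zip(x, y)]
    rhs = beta * sqnorm(x) + (1 - beta) * sqnorm(y) - beta * (1 - beta) * sqnorm(sub(x, y))
    return sqnorm(w) - rhs


random.seed(0)
max_gap = 0.0
for _ in range(1000):
    n = random.randint(1, 5)
    x, y, z = ([random.uniform(-3, 3) for _ in range(n)] for _ in range(3))
    beta = random.uniform(-2, 3)  # the second identity holds for every real beta
    max_gap = max(max_gap, abs(three_point_gap(x, y, z)), abs(convex_combination_gap(x, y, beta)))
print(f"largest residual: {max_gap:.2e}")
```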

Given any function \(h:{\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}} := {\mathbb {R}} \cup \{\pm \infty \}\), the effective domain of h is defined by \({{\,\mathrm{dom}\,}}\,h := \{x \in {\mathbb {R}}^n: h(x) < + \infty \}\). We say that h is proper if \(h(x)>-\infty \) for every \(x \in {\mathbb {R}}^{n}\) and \({{\,\mathrm{dom}\,}}\,h\) is nonempty (clearly, \(h(x) = + \infty \) for every \(x \notin {{\,\mathrm{dom}\,}}\,h\)). By \({\mathrm{arg~min}}_{{\mathbb {R}}^{n}} h\) we mean the set of all minimizers of h.

A function h with a convex domain is said to be

- (a): convex if, given any \(x, y \in {{\,\mathrm{dom}\,}}\,h\), then

$$\begin{aligned} h(\lambda x + (1-\lambda )y) \le \lambda h(x) + (1 - \lambda ) h(y) ~ \forall ~ \lambda \in [0, 1]; \end{aligned}$$(2.3)

- (b): quasiconvex if, given any \(x, y \in {{\,\mathrm{dom}\,}}\,h\), then

$$\begin{aligned} h(\lambda x + (1-\lambda ) y) \le \max \{h(x), h(y)\} ~ \forall ~ \lambda \in [0,1]. \end{aligned}$$(2.4)

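Every convex function is quasiconvex. The function \(h: {\mathbb {R}} \rightarrow {\mathbb {R}}\) with \(h(x) = x^{3}\) is quasiconvex without being convex. Recall that

$$\begin{aligned} h \text{ is convex}&~ \Longleftrightarrow ~ {{{\,\mathrm{epi}\,}}}\,h \text{ is convex}, \\ h \text{ is quasiconvex}&~ \Longleftrightarrow ~ S_{\lambda } (h) \text{ is convex for all } \lambda \in {\mathbb {R}}, \end{aligned}$$

where \({{{\,\mathrm{epi}\,}}}\,h := \{(x,t) \in {\mathbb {R}}^{n} \times {\mathbb {R}}: h(x) \le t\}\) is the epigraph of h and \(S_{\lambda } (h) := \{x \in {\mathbb {R}}^{n}: h(x) \le \lambda \}\) is its sublevel set at height \(\lambda \in {\mathbb {R}}\).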
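The claim that h(x) = x³ is quasiconvex but not convex can be illustrated by sampling inequalities (2.3) and (2.4): the sublevel sets of x³ are the intervals ]−∞, ∛λ], while convexity already fails for x = −1, y = 0, λ = 1/2. The following Python sketch is only a sampling-based illustration (the sample sizes and tolerances are our own choices).

```python
import random


def cube(x):
    return x ** 3


def convexity_fails(h, x, y, lam, tol=1e-12):
    """True if inequality (2.3) is violated at (x, y, lam)."""
    return h(lam * x + (1 - lam) * y) > lam * h(x) + (1 - lam) * h(y) + tol


def quasiconvexity_fails(h, x, y, lam, tol=1e-9):
    """True if inequality (2.4) is violated at (x, y, lam); tol absorbs rounding."""
    return h(lam * x + (1 - lam) * y) > max(h(x), h(y)) + tol


random.seed(0)
samples = [(random.uniform(-2, 2), random.uniform(-2, 2), random.random()) for _ in range(10_000)]
convex_violations = sum(convexity_fails(cube, *s) for s in samples)
quasi_violations = sum(quasiconvexity_fails(cube, *s) for s in samples)
print(f"(2.3) violated {convex_violations} times, (2.4) violated {quasi_violations} times")
```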

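The proximity operator of parameter \(\gamma >0\) of a function \(h: {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\) is the set-valued map \({{{\,\mathrm{Prox}\,}}}_{{^\gamma }h}: {\mathbb {R}}^{n} \rightrightarrows {\mathbb {R}}^{n}\) defined at \(x \in {\mathbb {R}}^{n}\) by

$$\begin{aligned} {{{\,\mathrm{Prox}\,}}}_{{^\gamma }h} (x) := {\mathrm{arg~min}}_{u \in {\mathbb {R}}^{n}} \left\{ h(u) + \frac{1}{2\gamma } \Vert u - x \Vert ^{2} \right\} . \end{aligned}$$

When \(\gamma = 1\), we simply write \({{\,\mathrm{Prox}\,}}_{h}\).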
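Because the proximity operator is defined through an argmin, it can be empty or contain several points. The hypothetical helper `prox_on_grid` below approximates Prox of parameter γ of a scalar function by exhaustive search over an equispaced grid (an assumption made for illustration only, not a method from the source). For h(u) = −|u|, γ = 1 and x = 0, the objective −|u| + u²/2 has the two minimizers u = ±1, whereas for the convex h(u) = u² at x = 3 the single point u = 1 is returned.

```python
def prox_on_grid(h, x, gamma, lo=-3.0, hi=3.0, steps=6000, tol=1e-9):
    """Approximate Prox_{gamma h}(x) on [lo, hi] by exhaustive grid search.

    Returns every grid point whose objective h(u) + |u - x|^2 / (2 gamma) is within
    tol of the smallest grid value, so a set-valued proximity operator shows up as
    a list with several entries.
    """
    grid = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    values = [h(u) + (u - x) ** 2 / (2 * gamma) for u in grid]
    best = min(values)
    return [u for u, v in zip(grid, values) if v <= best + tol]


print(prox_on_grid(lambda u: -abs(u), x=0.0, gamma=1.0))  # nonconvex: two minimizers
print(prox_on_grid(lambda u: u * u, x=3.0, gamma=1.0))    # convex: a single point
```

A grid search only resolves minimizers up to the grid spacing; it is meant to visualize the definition, not to replace the closed formulae used later.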

Let K be a closed and convex set in \({\mathbb {R}}^n\), let \(h: K \rightarrow \overline{{\mathbb {R}}}\) be a proper function with \(K \cap {{\,\mathrm{dom}\,}}h \ne \emptyset \), and let \(f: K \times K \rightarrow {\mathbb {R}}\) be a real-valued bifunction. We say that f is

- (a): monotone on K, if for every \(x, y \in K\)

$$\begin{aligned} f(x, y) + f(y, x) \le 0, \end{aligned}$$(2.6)

- (b): h-pseudomonotone on K, if for every \(x, y \in K\)

$$\begin{aligned} f(x, y) + h(y) - h(x) \ge 0 ~ \Longrightarrow ~ f(y, x) + h(x) - h(y) \le 0. \end{aligned}$$(2.7)

Every monotone bifunction is h-pseudomonotone, but the converse statement is not true in general. Furthermore, if \(h \equiv 0\), then 0-pseudomonotonicity coincides with the usual pseudomonotonicity, see [16].
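Definitions (2.6) and (2.7) can be tested on sample points for bifunctions of the form f(x, y) = ⟨T(x), y − x⟩ on K = [0, 1]. The Python sketch below (our own illustration) checks that T(x) = x yields a monotone, hence h-pseudomonotone, bifunction for h(t) = −t² − t, and that the operator T(x) = 1/(1 + x), which is our own choice and not taken from the source, is pseudomonotone (h ≡ 0) without being monotone. This shows that the converse implication fails. Passing a sampled check is evidence, not a proof.

```python
def is_monotone(f, pts, tol=1e-12):
    """Sampled version of (2.6): f(x, y) + f(y, x) <= 0 for all sample pairs."""
    return all(f(x, y) + f(y, x) <= tol for x in pts for y in pts)


def is_h_pseudomonotone(f, h, pts, tol=1e-12):
    """Sampled version of (2.7): f(x, y) + h(y) - h(x) >= 0 implies f(y, x) + h(x) - h(y) <= 0."""
    return all(
        f(y, x) + h(x) - h(y) <= tol
        for x in pts
        for y in pts
        if f(x, y) + h(y) - h(x) >= 0
    )


def h(t):
    return -t * t - t  # nonconvex function also used in Sect. 4.1


def zero(t):
    return 0.0


def f_monotone(x, y):
    return x * (y - x)  # <T(x), y - x> with T(x) = x


def f_pseudo(x, y):
    return (y - x) / (1 + x)  # <T(x), y - x> with T(x) = 1 / (1 + x), positive but decreasing


K = [i / 50 for i in range(51)]  # sample points of K = [0, 1]
print(is_monotone(f_monotone, K), is_h_pseudomonotone(f_monotone, h, K))
print(is_monotone(f_pseudo, K), is_h_pseudomonotone(f_pseudo, zero, K))
```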

A new class of generalized convex functions, which includes some classes of quasiconvex functions and of weakly convex functions along with other functions, was introduced in [13].

Definition 2.1

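Let K be a closed set in \({\mathbb {R}}^{n}\) and \(h: {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\) be a proper function such that \(K \cap {{\,\mathrm{dom}\,}}h \ne \emptyset \). We say that h is prox-convex on K (with prox-convex value \(\alpha \)) if there exists \(\alpha > 0\) such that, for every \(z \in K\), \({{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z) \ne \emptyset \) and

$$\begin{aligned} {\overline{x}} \in {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z) ~ \Longrightarrow ~ h({\overline{x}}) - h(x) \le \alpha \langle {\overline{x}} - z, x - {\overline{x}} \rangle , ~ \forall ~ x \in K. \end{aligned}$$(2.8)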
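To make the prox-convexity inequality h(x̄) − h(x) ≤ α⟨x̄ − z, x − x̄⟩ (for x̄ the proximal point of h over K at z and all x ∈ K) concrete, the Python sketch below evaluates its slack on a grid of K = [0, 1] with α = 1. We test it for the convex h(u) = u², whose proximal point over K at z ∈ K is z/3, and for the nonconvex h(u) = −u² − u, for which the proximal objective is concave, so the proximal point is an endpoint of K (it turns out to be 1). The helper names and the restriction to α = 1 are our own choices. A nonnegative minimum slack on the samples is consistent with (but does not prove) prox-convexity.

```python
def prox_convex_slack(h, prox, alpha, K):
    """Minimum of alpha*<xbar - z, x - xbar> - (h(xbar) - h(x)) over sampled z, x in K,
    with xbar = prox(z); the prox-convexity inequality holds on the samples iff this is >= 0."""
    slack = float("inf")
    for z in K:
        xbar = prox(z)
        for x in K:
            slack = min(slack, alpha * (xbar - z) * (x - xbar) - (h(xbar) - h(x)))
    return slack


K = [i / 100 for i in range(101)]  # samples of K = [0, 1]


def h_convex(u):
    return u * u


def prox_convex(z):
    # argmin over [0, 1] of u^2 + (u - z)^2 / 2 is z / 3, which lies in [0, 1] for z in [0, 1]
    return z / 3


def h_nonconvex(u):
    return -u * u - u


def prox_nonconvex(z):
    # u -> -u^2 - u + (u - z)^2 / 2 is concave, so its minimum over [0, 1] is at an endpoint
    return min((0.0, 1.0), key=lambda u: h_nonconvex(u) + (u - z) ** 2 / 2)


print(prox_convex_slack(h_convex, prox_convex, 1.0, K))
print(prox_convex_slack(h_nonconvex, prox_nonconvex, 1.0, K))
```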

Some basic properties of prox-convex functions are recalled below.

Remark 2.1

- (i): Let K be a closed set in \({\mathbb {R}}^{n}\) and \(h: {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\) be a proper prox-convex function on K such that \(K \cap {{\,\mathrm{dom}\,}}\,h \ne \emptyset \). Then the map \(z \mapsto {{\,\mathrm{Prox}\,}}_{h+\iota _{K}} (z)\) is single-valued, i.e., the left-hand side of relation (2.8) holds as an equality (by a slight abuse of notation; see [13, Proposition 3.3]), and firmly nonexpansive on K, that is, given \(z_{1}, z_{2} \in K\), \({\overline{x}}_{1} \in {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z_{1})\) and \({\overline{x}}_{2} \in {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z_{2})\), one has

$$\begin{aligned} \Vert {\overline{x}}_{1} - {\overline{x}}_{2} \Vert ^{2} \le \langle z_{1} - z_{2}, {\overline{x}}_{1} - {\overline{x}}_{2} \rangle . \end{aligned}$$(2.9)

- (ii): If h is a proper, lower semicontinuous and convex function, then h is prox-convex with prox-convex value \(\alpha =1\) (see [13, Proposition 3.4]), but the converse statement does not hold in general. Indeed, the function \(h(x) = -x^{2}-x\) is prox-convex (with prox-convex value \(\alpha \) for every \(\alpha >0\)) without being convex on \(K=[0, 1]\). Furthermore, a class of quasiconvex functions which are prox-convex as well is analyzed in [13, Section 3.2].

- (iii): As was proved in [13, Theorem 4.1], the classical proximal point algorithm remains convergent for the minimization of prox-convex functions, too.

- (iv): Similar works in which the proximity operator of a function is taken with respect to a given set, as above, can be found in the literature, for instance [3, 14], where the employed functions are likewise not split from the corresponding sets.

- (v): We are not aware of any universal recipe for computing the proximity operator of a prox-convex function with respect to a given set. The most direct approach (used also for the numerical experiments presented in Sect. 4 as well as in [13]) is to determine it by hand, by means of basic calculus techniques for minimizing a function over a set. Alternatively, one could employ (or adapt, if necessary) the software packages from [40] (in Julia) or [26] (in Python) to computationally derive proximal points of functions over sets; closed formulae of the corresponding proximity operators are not really necessary, since the algorithms only use proximal points at specific points. Related to this issue is the computation of proximal points of a convex function over a nonconvex set, to which the recent work [15] contributes. Note also that [17] proposes a method for determining proximal points of certain classes of nonconvex functions by computing proximal points of their piecewise affine models, while in the convex case one finds a symbolic computation method in [25] and an interior-point one in [10]; however, possible extensions of these approaches to nonconvex frameworks or to functions restricted to sets require further investigation.

- (vi): To the best of our knowledge, besides the convex and the prox-convex functions, only the weakly convex ones have single-valued proximity operators (as noted in [17]). This feature is very relevant when constructing proximal point algorithms, as the new iterate is uniquely determined and does not have to be picked from a set (which needs to be found and can also be empty).

For a further study on generalized convexity we refer to [4, 16] among other works.

3 Nonconvex Mixed Variational Inequalities

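Let \(K\subseteq {\mathbb {R}}^{n}\) be nonempty, \(T: {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^{n}\) an operator and \(h: K \rightarrow {\mathbb {R}}\) a real-valued function. The mixed variational inequality problem, or variational inequality of the second kind, consists in

$$\begin{aligned} \text{finding } {\overline{x}} \in K \text{ such that } \langle T({\overline{x}}), y - {\overline{x}} \rangle + h(y) - h({\overline{x}}) \ge 0, ~ \forall ~ y \in K. \end{aligned}$$(3.1)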

We denote its solution set by S(T; h; K).
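For scalar problems, membership in S(T; h; K) can be tested on a sampled K by computing the smallest value of ⟨T(x̄), y − x̄⟩ + h(y) − h(x̄) over y ∈ K. The Python sketch below (the helper `mvi_residual` is hypothetical) uses the data K = [0, 1], T(x) = x and h(y) = −y² − y of the toy example in Sect. 4.1. Among the grid points, only x̄ = 1 passes, in line with the fact that for x̄ = 1 the expression equals 1 − y² ≥ 0, while for x̄ ∈ [0, 1[ the choice y = 1 gives 2x̄ − 2 < 0.

```python
def mvi_residual(T, h, xbar, K):
    """min over sampled y in K of <T(xbar), y - xbar> + h(y) - h(xbar) (scalar case).

    A sample point xbar solves the sampled problem exactly when this value is >= 0.
    """
    return min(T(xbar) * (y - xbar) + h(y) - h(xbar) for y in K)


def T(x):
    return x


def h(y):
    return -y * y - y


K = [i / 100 for i in range(101)]  # samples of K = [0, 1]
solutions = [x for x in K if mvi_residual(T, h, x, K) >= -1e-12]
print(solutions)
```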

Remark 3.1

Following [23, 31], problem (3.1) can be seen as a reformulation of a Walrasian equilibrium model or of an oligopolistic equilibrium model, where (after some transformations explained in [23, Section 2]) the (monotone) operator T represents the demand, while h represents the supply. While in [23] the function representing the supply is taken convex (although no economic reason for this choice is given), in [31] it is DC (difference of convex). Another concrete application that can be recast as a mixed variational inequality problem can be found in [12] (see also [11]), where electrical circuits involving devices like transistors are studied by means of mixed variational inequalities. There the involved (monotone) operator is linear, while the electrical superpotential of the practical diodes is represented by the involved function (taken convex in order to fit the theoretical model, again without any physical explanation). In [36] a dual variational formulation for the strain of an elastoplasticity model with hardening is reformulated as a mixed variational inequality, while in [28] the same is done for the frictional contact of an elastic cylinder with a rigid obstacle, in the antiplane framework.

Remark 3.2

If \(h \equiv 0\), then (3.1) reduces to the variational inequality problem (see [8, 9, 22]). On the other hand, if \(T \equiv 0\), then (3.1) reduces to the constrained optimization problem of minimizing h over K. When h is convex, (3.1) is a convex mixed variational inequality, for which one can find various (proximal point type) algorithms in the literature, for instance in [5, 27, 37, 41, 43,44,45]. However, mixed variational inequalities involving nonconvex functions are harder to approach. For instance, the solution sets of such problems may be empty even for a compact and convex set K, as was noted in [30, Example 3.1] (see also [20, page 127]). Indeed, take \(T = I\) (the identity map), \(K = {\mathbb {B}} (0, 1)\), and \(h: {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}\) given by \(h(x) = - \Vert x \Vert ^{2}\). Then (3.1) has no solutions. To see this, assume that \({\overline{x}} \in K\) solves (3.1). Taking \(y = -{\overline{x}} \in K\) in (3.1), one has

$$\begin{aligned} \langle {\overline{x}}, -2{\overline{x}} \rangle + h(-{\overline{x}}) - h({\overline{x}}) = -2 \Vert {\overline{x}} \Vert ^{2} \ge 0, \end{aligned}$$

i.e., \({\overline{x}} = 0\). However, setting \({\overline{x}} = 0\) in (3.1), we get \(\langle 0, y \rangle + h(y) - h(0) = - \Vert y \Vert ^{2} \ge 0\) for all \(y \in K\), which fails for every \(y \in K {\setminus } \{0\}\), a contradiction. Therefore, \(S(I; h; K) = \emptyset \).
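The empty solution set of this example can also be observed numerically in the one-dimensional case n = 1, where B(0, 1) = [−1, 1]. For T = I and h(x) = −x², the expression in (3.1) equals x̄y − y², whose minimum over y ∈ [−1, 1] is −1 − |x̄| < 0. The Python sketch below (grid resolution chosen by us) confirms that no sample point x̄ achieves a nonnegative value, the best one being x̄ = 0 with value −1.

```python
def mvi_residual(T, h, xbar, K):
    """min over sampled y in K of <T(xbar), y - xbar> + h(y) - h(xbar) (scalar case)."""
    return min(T(xbar) * (y - xbar) + h(y) - h(xbar) for y in K)


def identity(x):
    return x


def neg_square(y):
    return -y * y


K = [-1 + i / 100 for i in range(201)]  # samples of B(0, 1) = [-1, 1] for n = 1
best = max(mvi_residual(identity, neg_square, x, K) for x in K)
print(f"largest residual over all candidate points: {best}")
```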

The reason behind this situation is the “conflict” between the properties of the operator T and those of the function h. Since we are searching for a point where an inequality is satisfied for all the considered variables, if h goes downwards (for instance, when it is concave), then T needs to go upwards faster, and vice versa. This conflict is restricted to nonconvex functions, since if h is convex, then (3.1) has a solution on any compact and convex set K. Existence results for the nonconvex and noncoercive setting can be found in [19,20,21], where this conflict appears in a theoretical assumption called the mixed variational inequality property (see [42, Definition 3.1]).

For problem (3.1), let us consider the following additional assumptions:

- (A1): T is an L-Lipschitz-continuous operator on K, where \(L>0\);

- (A2): h is a lower semicontinuous prox-convex function on K with prox-convex value \(\alpha > 0\);

- (A3): T and h satisfy the following generalized monotonicity condition on K (cf. [27, 39])

$$\begin{aligned} \langle T(y), y - {\overline{x}} \rangle + h(y) - h({\overline{x}}) \ge 0, ~ \forall ~ y \in K, ~ \forall ~ {\overline{x}} \in S(T; h; K); \end{aligned}$$(3.2)

- (A4): S(T; h; K) is nonempty.

Remark 3.3

- (i): If T is h-pseudomonotone on K (that is, the bifunction \((x, y) \mapsto \langle T(x), y - x \rangle \) is h-pseudomonotone on K; in particular, if T is monotone), then T satisfies assumption (A3) (see [39]). Indeed, take \({\overline{x}} \in S(T; h; K)\). Since T is h-pseudomonotone, we have for every \(y\in K\)

$$\begin{aligned} \langle T({\overline{x}}), y - {\overline{x}} \rangle + h(y) - h({\overline{x}}) \ge 0&~ \Longrightarrow ~ \langle T(y), {\overline{x}} - y \rangle + h({\overline{x}}) - h(y) \le 0, \\&\Longleftrightarrow ~ \langle T(y), y - {\overline{x}} \rangle + h(y) - h({\overline{x}}) \ge 0, \end{aligned}$$

i.e., T satisfies assumption (A3).

Note also that the generalized monotonicity condition (A3) can be further weakened to [27, Equation (71)] without losing the convergence of the algorithm proposed below.

- (ii): Results guaranteeing the existence of solutions to mixed variational inequalities beyond convexity can be found in [19,20,21].

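The algorithm we consider for solving (3.1) was recently introduced by Malitsky in [27] for the case where the involved function is convex. Denoting by \(\phi := (1 + \sqrt{5})/2\) the golden ratio, it reads as follows.

Algorithm 1 (Golden Ratio Algorithm)

Choose \(x^{1}, z^{0} \in K\). For \(k = 1, 2, \ldots \), set

$$\begin{aligned} z^{k} = \frac{(\phi - 1) x^{k} + z^{k-1}}{\phi }, \end{aligned}$$(3.3)

$$\begin{aligned} x^{k+1} = {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} \left( z^{k} - \frac{1}{\alpha } T(x^{k}) \right) . \end{aligned}$$(3.4)

If \(x^{k+1} = x^{k} = z^{k}\), then stop.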
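For concreteness, here is a minimal Python sketch of the iteration z^k = ((φ − 1)x^k + z^{k−1})/φ, x^{k+1} = Prox_{h+ι_K}(z^k − (1/α)T(x^k)), with the exact stopping test x^{k+1} = x^k = z^k relaxed to a tolerance ε. The function name, its signature and the scalar setting are our assumptions; the proximity operator is passed in as a callable because, as discussed in Remark 2.1(v), it is usually determined by hand. The usage example is a convex instance of our own choosing (h ≡ 0, so the proximal step is the projection onto K = [0, 1], α = 1, and T(x) = 0.5(x − 0.3) with L = 0.5 ≤ φ/2), whose unique solution is 0.3.

```python
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio; PHI**2 == 1 + PHI


def golden_ratio_algorithm(T, prox, x1, z0, step, eps=1e-8, max_iter=100_000):
    """Sketch of the golden ratio iteration for a scalar problem.

    T    -- the operator of the mixed variational inequality
    prox -- callable w -> Prox_{h + iota_K}(w), assumed single-valued
    step -- factor in front of T(x^k) in the proximal step (1/alpha above)
    Returns the last iterates (x, z) and the number of iterations performed.
    """
    x, z_prev = x1, z0
    for k in range(1, max_iter + 1):
        z = ((PHI - 1) * x + z_prev) / PHI  # averaging step
        x_next = prox(z - step * T(x))      # single proximal (backward) step
        if abs(x_next - x) <= eps and abs(x_next - z) <= eps:
            return x_next, z, k             # x^{k+1} ~ x^k ~ z^k: stop
        x, z_prev = x_next, z
    return x, z_prev, max_iter


# Convex test instance: K = [0, 1], h = 0 (prox = projection onto K), alpha = 1.
x, z, iterations = golden_ratio_algorithm(
    T=lambda x: 0.5 * (x - 0.3),
    prox=lambda w: min(1.0, max(0.0, w)),
    x1=0.9,
    z0=0.9,
    step=1.0,
)
print(f"x = {x:.8f} after {iterations} iterations")
```

Keeping the averaging step and the proximal step as two separate lines mirrors the fact that only one proximal evaluation is needed per iteration.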

As a first result, we validate the stopping criterion of Algorithm 1 in our generalized convex framework. In the following statements the sequences \(\{x^k\}_k\) and \(\{z^k\}_k\) are the ones generated by Algorithm 1.

Proposition 3.1

If (A2) holds and \(x^{k+1} = x^{k} = z^{k}\) for some \(k\in {\mathbb {N}}\), then the stopping point of Algorithm 1 is a solution of (3.1), i.e. \(x^{k} \in S(T; h; K)\).

Proof

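If \(x^{k+1} = x^{k} = z^{k}\), then \(x^{k} = {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} \left( x^{k} - (1/\alpha ) T(x^{k})\right) \), so by (2.8), we have

$$\begin{aligned} h(x^{k}) - h(x) \le \alpha \left\langle x^{k} - x^{k} + \frac{1}{\alpha } T(x^{k}), x - x^{k} \right\rangle = \langle T(x^{k}), x - x^{k} \rangle , ~ \forall ~ x \in K, \end{aligned}$$

i.e., \(x^{k} \in S(T; h; K)\). \(\square \)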

In order to state the convergence of Algorithm 1, we first show the following general result, whose proof follows the steps of the proof of [27, Theorem 1].

Lemma 3.1

Suppose that assumptions (Ai) with \(i \in \{1,\ldots , 4\}\) hold. Let \(x^{1}, z^{0} \in K\) be arbitrary. Then for each \({\overline{x}} \in S(T; h; K)\), the sequences \(\{x^{k}\}_k\) and \(\{z^{k}\}_k\) satisfy

Proof

By applying relation (2.8) to Eq. (3.4) for \(k+1\) and k, we have

Take \(x = {\overline{x}} \in S(T; h; K)\) in the first inequality and \(x=x^{k+1}\) in the second one, and note that \(x^{k} - z^{k-1} = \phi (x^{k} - z^{k})\), which follows from (3.3). Thus,

By adding these inequalities, and since \({\overline{x}} - x^{k+1} = {\overline{x}} - x^{k} + x^{k} - x^{k+1}\) and \(\alpha > 0\), we have

Since T and h satisfy assumption (A3), we have

i.e., it follows from (3.8) that

By applying (2.1), we have

From step (3.3), we have \(x^{k+1} = (1 + \phi ) z^{k+1} - \phi z^{k}\), thus

By substituting (3.10) into (3.9), and since \(\phi ^{2} = 1 + \phi \) implies \(\phi - 1 = 1/\phi \), so that \((\phi - 1) \Vert x^{k+1} - z^{k} \Vert ^{2} - \frac{1}{\phi } \Vert x^{k+1} - z^{k} \Vert ^{2} = 0\), we have

Finally, since T is L-Lipschitz-continuous, it follows that

and the result follows by substituting this into (3.11). \(\square \)
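The proof above relies on three elementary facts about the averaging step z^k = ((φ − 1)x^k + z^{k−1})/φ: φ² = 1 + φ (equivalently φ − 1 = 1/φ), x^k − z^{k−1} = φ(x^k − z^k), and x^{k+1} = (1 + φ)z^{k+1} − φz^k. None of them depends on the proximal step, so the Python sketch below checks them along a sequence with arbitrary (random) iterates x^k; it is a floating-point sanity check under our own sampling choices, not part of the proof.

```python
import math
import random

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def averaging_step(x, z_prev):
    """z^k = ((phi - 1) x^k + z^{k-1}) / phi."""
    return ((PHI - 1) * x + z_prev) / PHI


random.seed(1)
max_err = 0.0
z_prev = random.uniform(-1.0, 1.0)  # z^0
x = random.uniform(-1.0, 1.0)       # x^1
for _ in range(1000):
    z = averaging_step(x, z_prev)         # z^k
    x_next = random.uniform(-1.0, 1.0)    # arbitrary x^{k+1}
    z_next = averaging_step(x_next, z)    # z^{k+1}
    max_err = max(
        max_err,
        abs((x - z_prev) - PHI * (x - z)),             # x^k - z^{k-1} = phi (x^k - z^k)
        abs(x_next - ((1 + PHI) * z_next - PHI * z)),  # x^{k+1} = (1 + phi) z^{k+1} - phi z^k
    )
    x, z_prev = x_next, z

print(f"phi^2 - (1 + phi) = {PHI ** 2 - (1 + PHI):.1e}, phi - 1 - 1/phi = {PHI - 1 - 1 / PHI:.1e}")
print(f"largest residual of the two recurrences: {max_err:.1e}")
```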

As a consequence, we have the following statement.

Corollary 3.1

Suppose that assumptions (Ai) with \(i \in \{1,\ldots , 4\}\) hold. Let \(x^{1}, z^{0} \in K\) be arbitrary and \({\overline{x}} \in S(T; h; K)\). If

then the sequences \(\{x^{k}\}_{k}\) and \(\{z^{k}\}_{k}\) satisfy that

Remark 3.4

Note that, in particular, if h is convex, then one can take \(\alpha = 1\). Hence, if \(L \le {\phi }/{2}\), then relation (3.13) holds, which is the usual assumption for the convex case. Moreover, due to the convexity of h, we have \(\langle {\overline{x}} - z, x - {\overline{x}} \rangle \ge 0\). Hence, \({\overline{x}} = {{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z)\) implies that

Then there exists \(\gamma \ge 1\) large enough such that condition (3.12) holds.

Now, we present the following convergence result of Algorithm 1.

Theorem 3.1

Suppose that assumptions (Ai) with \(i \in \{1,\ldots , 4\}\) hold. Let \(x^{1}, z^{0} \in K\) be arbitrary. If condition (3.12) holds, then the sequences \(\{x^{k}\}_k\) and \(\{z^{k}\}_k\) converge to a solution of problem (3.1).

Proof

Take an arbitrary \({\overline{x}} \in S(T; h; K)\). It follows from Lemma 3.1 that the sequence \(\left\{ (1 + \phi ) \Vert z^{k+1} - {\overline{x}} \Vert ^{2} + \frac{\phi }{2} \Vert x^{k+1} - x^{k} \Vert ^{2} \right\} _k\) is bounded. Then, \(\{ \Vert z^{k} - {\overline{x}} \Vert \}_k\) and \(\{z^{k}\}_k\) are bounded too. Furthermore, by (3.5),

hence \(\{x^{k}\}_k\) has at least one cluster point. Passing to subsequences if necessary, the same holds for \(\{z^{k}\}_k\). By using (3.3), we have

Therefore, it follows by Eqs. (3.14) and (3.15) that \(\Vert x^{k+1} - x^{k} \Vert \rightarrow 0\) when \(k\rightarrow +\infty \).

Now, let \({\widehat{x}}\) be an arbitrary cluster point of the sequence \(\{x^{k}\}_k\) generated by Algorithm 1, and recall Eq. (3.6):

Since h is lower semicontinuous and T is L-Lipschitz-continuous, by taking the limit in Eq. (3.6) (passing to subsequences if needed) and by using Eq. (3.15), we have

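i.e., \({\widehat{x}} \in S(T; h; K)\). Therefore, every cluster point of \(\{x^{k}\}_k\) is a solution of problem (3.1). Since, moreover, the sequence \(\{\Vert x^{k} - {\overline{x}} \Vert \}_k\) converges for every \({\overline{x}} \in S(T; h; K)\) (cf. Remark 3.5(iv)), taking \({\overline{x}} = {\widehat{x}}\) shows that \(\Vert x^{k} - {\widehat{x}} \Vert \rightarrow 0\), i.e., \(\{x^{k}\}_k\) converges to the solution \({\widehat{x}}\) of (3.1). \(\square \)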

Remark 3.5

- (i): As explained before, the conflict between the properties of the function and those of the operator in the nonconvex case (something similar happens in multiobjective optimization, where one has several conflicting objective functions) is controlled in Algorithm 1 by condition (3.12). Note, however, that if \(T=0\), then \(L=0\) and (3.12) holds automatically. On the other hand, if h is convex (in particular \(h = \iota _{K}\)), then \(\alpha = 1\), so condition (3.12) becomes “\(L \in \, ]0, {\phi }/{2}]\)”, which is usual for the convex case. Note also that various methods for determining the Lipschitz constants of the involved operators can be found in the literature, see for instance [38].

- (ii): In contrast to the methods proposed in [27, 37, 43] and the references therein, Algorithm 1 is not restricted to convex functions h. Other recent algorithms for solving mixed variational inequalities where the involved function is convex can also be found in [5, 41, 44, 45].

- (iii): If \(h \equiv 0\), then Algorithm 1 is a variant of [39, Algorithm 2.1] for operators T which satisfy the general monotonicity assumption (A3) with \(h \equiv 0\).

- (iv): Combining some steps in the proof of Theorem 3.1 (in particular Lemma 3.1 and (3.14)), one can conclude that for any solution \({\overline{x}} \in S(T; h; K)\) the sequences \(\{\Vert x^{k} - {\overline{x}} \Vert \}_k\) and \(\{\Vert z^{k} - {\overline{x}} \Vert \}_k\) are convergent.

Remark 3.6

A legitimate question concerns the possible modification of the algorithm considered in this section by employing (constant or variable) stepsizes in the proximal (backward) steps. This can be done when the function \(\lambda \alpha h\) is prox-convex with prox-convex value \(\alpha >0\) (where \(\lambda \in \, ]0, \phi / (2L)]\)) by replacing (3.4) with

$$\begin{aligned} x^{k+1} = {{\,\mathrm{Prox}\,}}_{\lambda \alpha h + \iota _{K}} \left( z^{k} - \lambda T(x^{k}) \right) . \end{aligned}$$

The convergence statement for this modified algorithm is similar to Theorem 3.1, and its proof follows analogously. However, when h is assumed to be prox-convex with prox-convex value \(\alpha >0\), it is not known whether \(\lambda h\) (for some \(\lambda >0\)) is prox-convex or not. While (2.8) implies a similar inequality for \(\lambda h\) with prox-convex value \(\lambda \alpha \), it is not clear whether \({{\,\mathrm{Prox}\,}}_{h + \iota _{K}} (z) \ne \emptyset \) yields \({{\,\mathrm{Prox}\,}}_{\lambda h + \iota _{K}} (z) \ne \emptyset \).

4 Numerical Experiments

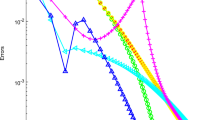

We implemented the modification of Algorithm 1 presented in Remark 3.6 in MATLAB 2019b-Win64 on a Lenovo Yoga 260 laptop with Windows 10 and an Intel Core i7 6500U CPU with 2.59 GHz and 16GB RAM. As stopping criterion we used the condition that the absolute values of both differences \(x^{k+1} - x^k\) and \(x^{k+1} - z^k\) are not larger than the considered error \(\varepsilon >0\). We first considered a toy example, where one can already notice the “conflict” between the properties of the involved operator and function, and then an application in oligopolistic markets.

4.1 Theoretical Example

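We first consider the following mixed variational inequality

$$\begin{aligned} \text{find } {\overline{x}} \in [0, 1] \text{ such that } {\overline{x}} (y - {\overline{x}}) - y^{2} - y + {\overline{x}}^{2} + {\overline{x}} \ge 0, ~ \forall ~ y \in [0, 1], \end{aligned}$$(4.1)

which is a special case of (3.1) for \(K=[0, 1] \subseteq {\mathbb {R}}\), \(h:{\mathbb {R}}\rightarrow {\mathbb {R}}\), \(h(y)= - y^2-y\), and \(T: {\mathbb {R}}\rightarrow {\mathbb {R}}\), \(Tx=x\). The function \(g: {\mathbb {R}}\rightarrow {\mathbb {R}}\) given by \(g(y) = \langle T(x), y\rangle + h(y)\) is prox-convex on K for any \(x\in K\) and any \(\alpha > 0\), and problem (4.1) has the unique solution \({\overline{x}}=1\in K\). Indeed, for \({\overline{x}} = 1\) the left-hand side of (4.1) equals \(1 - y^{2} \ge 0\), while for \({\overline{x}} \in [0, 1[\) the choice \(y = 1\) gives \(2{\overline{x}} - 2 < 0\). The fact that \(y \mapsto \langle T(x), y \rangle \) is increasing on K while h is decreasing on K further complicates problem (4.1).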

For fixed \(\alpha , \lambda >0\) with \(\alpha \lambda <1/2\) (the situation considered below), the value of the proximity operator of parameter \(\alpha \lambda \) of h over K at \(z-\lambda T(x)\), for \(x, z\in K\), is equal to \((z+\lambda (\alpha -x))/(1-2\lambda \alpha )\) when this lies in K; otherwise it is 0 when \(\lambda (x-\alpha ) \ge z\), and 1 when \(z + 3 \alpha \lambda - \lambda x >1\). In case \(\alpha \lambda \ge 1/2\) the analysis can be done in a similar manner. Hence, as \(\lambda \alpha h\) is prox-convex, we can employ for solving (4.1) the modification of Algorithm 1 mentioned in Remark 3.6 with constant stepsize \(\lambda \alpha \).
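The closed-form proximal step above can be cross-checked against brute-force minimization and then plugged into the modified iteration of Remark 3.6, i.e., z^k = ((φ − 1)x^k + z^{k−1})/φ followed by the proximal step of parameter αλ of h over K at z^k − λT(x^k). The Python sketch below does this for two parameter pairs of our own choosing (λ ≤ φ/(2L) ≈ 0.809 since L = 1, and αλ < 1/2). It is not the authors' MATLAB code and does not attempt to reproduce the iteration counts reported in Tables 1 and 2; it only illustrates that the iterates reach the solution x̄ = 1.

```python
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def h(y):
    return -y * y - y


def prox_closed_form(w, gamma):
    """Prox of parameter gamma of h over K = [0, 1] at w, for gamma < 1/2.

    For gamma < 1/2 the objective h(u) + (u - w)^2 / (2 gamma) is strictly convex,
    so its minimizer over K is the unconstrained one, (w + gamma) / (1 - 2 gamma),
    clipped to [0, 1]. With w = z - lam * x and gamma = alpha * lam this is the
    formula stated in the text.
    """
    if not gamma < 0.5:
        raise ValueError("closed form only valid for gamma < 1/2")
    return min(1.0, max(0.0, (w + gamma) / (1 - 2 * gamma)))


def prox_brute_force(w, gamma, steps=20_000):
    """Grid search over K = [0, 1]; used only to cross-check the closed form."""
    grid = (i / steps for i in range(steps + 1))
    return min(grid, key=lambda u: h(u) + (u - w) ** 2 / (2 * gamma))


def solve_41(lam, alpha, x1=0.0, z0=0.0, eps=1e-8, max_iter=100_000):
    """Modified iteration of Remark 3.6 applied to (4.1): T(x) = x, K = [0, 1]."""
    gamma = alpha * lam
    x, z_prev = x1, z0
    for k in range(1, max_iter + 1):
        z = ((PHI - 1) * x + z_prev) / PHI
        x_next = prox_closed_form(z - lam * x, gamma)  # proximal step with T(x^k) = x^k
        if abs(x_next - x) <= eps and abs(x_next - z) <= eps:
            return x_next, k
        x, z_prev = x_next, z
    return x, max_iter


for lam, alpha in [(0.5, 0.5), (0.3, 1.0)]:
    x, iterations = solve_41(lam, alpha)
    print(f"lambda={lam}, alpha={alpha}: x={x:.8f} after {iterations} iterations")
```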

In Tables 1 and 2 we present the computational results obtained by implementing this algorithm for various choices of the involved parameters and of the allowed error \(\varepsilon > 0\). In all the situations presented below we give the number of iterations needed by the program to reach the solution \({\overline{x}}=1\) of (4.1).

4.2 Application in Oligopolistic Equilibrium

The Nash-Cournot oligopolistic market equilibrium is a fundamental model in economics (see [23, 31] for more on this and related references). It assumes that there are n companies producing a common homogeneous commodity. For \(i\in \{1, \ldots , n\}\), company i has a strategy set \(D_i \subseteq {\mathbb {R}}_+\), a cost function \(\varphi _i\) defined on the strategy set \(D = \prod _{i=1}^{n} D_i\) of the model and a profit function \(f_i\) that is usually defined as price times production minus the costs of producing it. Each company is interested in maximizing its profit by choosing the corresponding production level, given its knowledge of the market demand and of the production of its competitors (seen as input parameters). A commonly used solution notion in this model is the celebrated Nash equilibrium. A point (strategy) \({{\bar{x}}} = ({{\bar{x}}}_1, \ldots , {{\bar{x}}}_n)^\top \in D\) is said to be a Nash equilibrium point of this Nash-Cournot oligopolistic market model if

$$\begin{aligned} f_{i} ({{\bar{x}}}) \ge f_{i} ({{\bar{x}}}[x_{i}]), ~ \forall ~ x_{i} \in D_{i}, ~ \forall ~ i \in \{1, \ldots , n\}, \end{aligned}$$

where the vector \({{\bar{x}}}[x_i]\) is obtained from \({{\bar{x}}}\) by replacing \({{\bar{x}}}_i\) with \(x_i\). Following some transformations described in detail in [23, 31], the problem of determining a Nash equilibrium of a Nash-Cournot oligopolistic market situation can be rewritten as a mixed variational inequality of type (3.1), where the involved operator captures various parameters and additional information and the function h is the sum of the cost functions of the considered companies, each of them depending on a different variable that represents the corresponding production.
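The Nash equilibrium condition says that no company can increase its profit by a unilateral deviation x̄ ↦ x̄[x_i]. The Python sketch below checks this on a grid for a hypothetical two-company market with linear inverse demand p(Q) = 10 − Q and linear costs q ↦ q on D_i = [0, 10]. These data are our own illustration and not the model of this section, whose cost functions are nonconvex. For these data the symmetric Cournot quantity is (10 − 1)/3 = 3, while at (2, 2) each company can gain by deviating to its best response 3.5.

```python
def profit(i, x, a=10.0, b=1.0, c=1.0):
    """Hypothetical profit of company i: (a - b * total production) * x_i - c * x_i."""
    price = a - b * sum(x)
    return price * x[i] - c * x[i]


def is_nash(x, grid, tol=1e-9):
    """Check f_i(xbar) >= f_i(xbar[x_i]) for every company i and every sampled x_i."""
    for i in range(len(x)):
        current = profit(i, x)
        for xi in grid:
            deviation = list(x)
            deviation[i] = xi  # the vector xbar[x_i]
            if profit(i, deviation) > current + tol:
                return False
    return True


grid = [j / 100 for j in range(1001)]  # samples of D_i = [0, 10]
print(is_nash([3.0, 3.0], grid))  # symmetric Cournot quantities
print(is_nash([2.0, 2.0], grid))  # each company gains by producing 3.5 instead
```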

Following the oligopolistic equilibrium model analyzed in [31], where some of the involved cost functions are convex and some DC, we consider the mixed variational inequality corresponding to a model with 5 companies, whose cost functions are defined as follows

The cost functions \(\varphi _1\), \(\varphi _3\) and \(\varphi _5\) are prox-convex (see [13]), while \(\varphi _2\) and \(\varphi _4\) are convex. Functions \(\varphi _1\) and \(\varphi _3\) were employed as (DC) cost functions for oligopolistic equilibrium problems in the similar application considered in [31] (following the more applied paper [2]), while \(\varphi _4\) is the Huber loss function (cf. [18]), which is used in various contexts in economics and machine learning for quantifying costs. This model also includes functions with negative values, such as \(\varphi _1\), which quantify the possibility of having negative costs, for instance in case of an energy surplus on the market (as discussed in the recent works [1, 7]). To make the problem more challenging from a mathematical point of view, we consider the involved convex cost functions to be defined over the whole space. Unlike (4.1), the present problem is unbounded in the second and fourth arguments (note that the corresponding constraint sets are not compact). Note moreover that, unlike in the literature on algorithms for DC optimization problems, where usually only critical points (and not optimal solutions) can be determined, for DC functions that are also prox-convex, proximal point methods are capable of delivering global minima (on the considered sets).

In our numerical experiments for solving (3.1) (denoted (OMP) in this case), where \(T(x) = Ax\), \(x\in {\mathbb {R}}^5\), with \(A\in {\mathbb {R}}^{5\times 5}\) a real symmetric positive semidefinite matrix of norm 1 (for simplicity) and \(h(x_1, \ldots , x_5) = \sum _{i=1}^{5}\varphi _i(x_i)\), we employed the modification of Algorithm 1 mentioned in Remark 3.6 with constant stepsize \(\lambda \alpha \), for fixed \(\alpha , \lambda >0\). In each experiment the matrix A is randomly generated and scaled in order to have \(L=1\). The proximity operator of the separable function h has as components those of the involved functions, which are known (cf. [6, 13, 29]). For instance, the proximity operator of parameter \(\gamma >0\) of \(\varphi _1\) over [0, 2] at \(z\in [0, 2]\) is, when \(\gamma < 1/2\), equal to \((z+\gamma )/(1-2\gamma )\) when this lies in [0, 2], otherwise it is 0 when \(z < -\gamma \) and 2 otherwise, while in case \(\gamma \ge 1/2\) the analysis can be done in a similar manner. In the following numerical implementations we apply these formulae for \(\gamma = \alpha \lambda < 1/2\).

In Tables 3 and 4 we present the computational results obtained by implementing the mentioned algorithm in MATLAB for various choices of the involved parameters and of the allowed error \(\varepsilon > 0\). In all the situations presented below we give the number of iterations and the CPU time needed by the program to reach a suitable approximation of the solution of the corresponding oligopolistic equilibrium problem. As one can notice, unlike in the experiments for solving (4.1), where the choice of \(\lambda \) and \(\alpha \) does not significantly influence the number of iterations, in the present case the number of iterations (like the CPU time) depends on the values of these two parameters.

5 Conclusions

We contribute to the study of mixed variational inequalities beyond convexity by showing that a recent proximal point type algorithm due to Malitsky remains convergent for a class of nonconvex mixed variational inequalities, too. The convergence statement holds when there is a certain relation between the Lipschitz constant of the governing operator of the mixed variational inequality and the prox-convex value of the involved function, which can be seen as a way to control the “conflict” between the properties of the mentioned operator and function. This conflict belongs to the nature of nonconvex mixed variational inequality problems, since it is no longer present when the operator vanishes and it reduces to a standard assumption when the function is convex. Since this is, as far as we know, the first proximal point type algorithm for iteratively determining a solution to a generalized convex mixed variational inequality, this relation has not been analyzed in the literature yet.

In a subsequent work, we plan to continue the study of nonconvex mixed variational inequalities. In particular, we intend to provide a deeper analysis of the mentioned relation, to extend beyond convexity other recent algorithms for solving convex mixed variational inequalities, and to adapt methods of proximal point type for minimizing quasiconvex functions to nonconvex mixed variational inequalities.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

[1] Aust, B., Horsch, A.: Negative market prices on power exchanges: evidence and policy implications from Germany. Electr. J. 33, article number: 106716 (2020)

[2] Bigi, G., Passacantando, M.: Differentiated oligopolistic markets with concave cost functions via Ky Fan inequalities. Decis. Econ. Finance 40, 63–79 (2017)

[3] Boţ, R.I., Csetnek, E.R.: Proximal-gradient algorithms for fractional programming. Optimization 66, 1383–1396 (2017)

[4] Cambini, A., Martein, L.: Generalized Convexity and Optimization. Springer, Berlin (2009)

[5] Chen, C., Ma, S., Yang, J.: A general inertial proximal point algorithm for mixed variational inequality problem. SIAM J. Optim. 25, 2120–2142 (2015)

[6] Combettes, P.L., Pesquet, J.-C.: Proximal thresholding algorithm for minimization over orthonormal bases. SIAM J. Optim. 18, 1351–1376 (2007)

[7] Corbet, S., Goodell, J.W., Günay, S.: Co-movements and spillovers of oil and renewable firms under extreme conditions: new evidence from negative WTI prices during COVID-19. Energy Econ. 92, article number: 104978 (2020)

[8] Facchinei, F., Pang, J.-S.: Finite-Dimensional Variational Inequalities and Complementarity Problems, vol. I. Springer, New York (2003)

[9] Facchinei, F., Pang, J.-S.: Finite-Dimensional Variational Inequalities and Complementarity Problems, vol. II. Springer, New York (2003)

[10] Friedlander, M.P., Goh, G.: Efficient evaluation of scaled proximal operators. arXiv:1603.05719 (2016)

[11] Goeleven, D.: Complementarity and Variational Inequalities in Electronics. Academic Press, London (2017)

[12] Goeleven, D.: Existence and uniqueness for a linear mixed variational inequality arising in electrical circuits with transistors. J. Optim. Theory Appl. 138, 397–406 (2008)

[13] Grad, S.-M., Lara, F.: An extension of proximal point algorithms beyond convexity. arXiv:2104.08822 (2021)

[14] Gribonval, R., Nikolova, M.: A characterization of proximity operators. J. Math. Imaging Vis. 62, 773–789 (2020)

[15] Gupta, S.D., Stellato, B., Van Parys, B.P.G.: Exterior-point operator splitting for nonconvex learning. arXiv:2011.04552 (2020)

[16] Hadjisavvas, N., Komlosi, S., Schaible, S.: Handbook of Generalized Convexity and Generalized Monotonicity. Springer, Boston (2005)

[17] Hare, W., Sagastizábal, C.: Computing proximal points of nonconvex functions. Math. Program. 116, 221–258 (2009)

[18] Huber, P.J.: Robust estimation of a location parameter. Ann. Math. Stat. 35, 73–101 (1964)

[19] Iusem, A., Lara, F.: Optimality conditions for vector equilibrium problems with applications. J. Optim. Theory Appl. 180, 187–206 (2019)

[20] Iusem, A., Lara, F.: Existence results for noncoercive mixed variational inequalities in finite dimensional spaces. J. Optim. Theory Appl. 183, 122–138 (2019)

[21] Iusem, A., Lara, F.: A note on “Existence results for noncoercive mixed variational inequalities in finite dimensional spaces”. J. Optim. Theory Appl. 187, 607–608 (2020)

[22] Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

[23] Konnov, I., Volotskaya, E.O.: Mixed variational inequalities and economic equilibrium problems. J. Appl. Math. 6, 289–314 (2002)

[24] Langenberg, N., Tichatschke, R.: Interior proximal methods for quasiconvex optimization. J. Glob. Optim. 52, 641–661 (2012)

[25] Lauster, F., Luke, D.R., Tam, M.K.: Symbolic computation with monotone operators. Set-Valued Var. Anal. 26, 353–368 (2018)

[26] Luke, D.R., Gretchko, S., Schulz, J., Jansen, M., Dornheim, A., Ziehe, S., Nahme, R., Tam, M.K., Mattson, P., Wilke, R., Hesse, R., Stalljahn, A.: ProxToolbox—a toolbox of algorithms and projection operators for implementing fixed point iterations based on the Prox operator. https://gitlab.gwdg.de/nam/ProxPython (2012)

[27] Malitsky, Y.: Golden ratio algorithms for variational inequalities. Math. Program. 184, 383–410 (2020)

[28] Matei, A., Sofonea, M.: Solvability and optimization for a class of mixed variational problems. Optimization (2019). https://doi.org/10.1080/02331934.2019.1676242

[29] Moreau, J.-J.: Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 93, 273–299 (1965)

[30] Muu, L.D., Nguyen, V.H., Quy, N.V.: On Nash–Cournot oligopolistic market equilibrium models with concave cost functions. J. Glob. Optim. 41, 351–364 (2008)

[31] Muu, L.D., Quy, N.V.: Global optimization from concave minimization to concave mixed variational inequality. Acta Math. Vietnam. 45, 449–462 (2020)

[32] Noor, M.A.: Proximal methods for mixed variational inequalities. J. Optim. Theory Appl. 115, 447–452 (2002)

[33] Noor, M.A., Huang, Z.: Some proximal methods for solving mixed variational inequalities. Appl. Anal. Article ID 610852 (2012)

[34] Noor, M.A., Noor, K.I., Zainab, S., Al-Said, E.: Proximal algorithms for solving mixed bifunction variational inequalities. Int. J. Phys. Sci. 6, 4203–4207 (2011)

[35] Papa Quiroz, E.A., Mallma Ramirez, L., Oliveira, P.R.: An inexact proximal method for quasiconvex minimization. Eur. J. Oper. Res. 246, 721–729 (2015)

[36] Prigozhin, L.: Variational inequalities in critical-state problems. Physica D 197, 197–210 (2004)

[37] Quoc, T.D., Muu, L.D., Hien, N.V.: Extragradient algorithms extended to equilibrium problems. Optimization 57, 749–766 (2008)

[38] Scaman, K., Virmaux, A.: Lipschitz regularity of deep neural networks: analysis and efficient estimation. In: Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Adv. Neural Inform. Process. Syst., pp. 3835–3844 (2018)

[39] Solodov, M., Svaiter, B.F.: A new projection method for variational inequality problems. SIAM J. Control. Optim. 37, 765–776 (1999)

[40] Stella, L., Antonello, N., Fält, M.: ProximalOperators.jl—proximal operators for nonsmooth optimization in Julia. https://github.com/kul-forbes/ProximalOperators.jl (2018)

[41] Thakur, B.S., Varghese, S.: Approximate solvability of general strongly mixed variational inequalities. Tbil. Math. J. 6, 13–20 (2013)

[42] Wang, M.: The existence results and Tikhonov regularization method for generalized mixed variational inequalities in Banach spaces. Ann. Math. Phys. 7, 151–163 (2017)

[43] Wang, Z., Chen, Z., Xiao, Y., Zhang, C.: A new projection-type method for solving multi-valued mixed variational inequalities without monotonicity. Appl. Anal. (2018). https://doi.org/10.1080/00036811.2018.1538499

[44] Xia, F.-Q., Huang, N.-J.: An inexact hybrid projection-proximal point algorithm for solving generalized mixed variational inequalities. Comput. Math. Appl. 62, 4596–4604 (2011)

[45] Xia, F.-Q., Zou, Y.-Z.: A projective splitting algorithm for solving generalized mixed variational inequalities. J. Inequal. Appl. 2011, 27 (2011)

Acknowledgements

This research was partially supported by FWF (Austrian Science Fund), project M-2045 (S.-M. Grad) and Conicyt–Chile under project Fondecyt Iniciación 11180320 (F. Lara). The authors are grateful to the handling Associate Editor and two anonymous reviewers for comments and suggestions that contributed to improving the quality of the manuscript.

Funding

Open access funding provided by University of Vienna.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grad, SM., Lara, F. Solving Mixed Variational Inequalities Beyond Convexity. J Optim Theory Appl 190, 565–580 (2021). https://doi.org/10.1007/s10957-021-01860-9

DOI: https://doi.org/10.1007/s10957-021-01860-9