Abstract

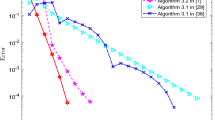

In this paper, we present Wolfe's reduced gradient method for multiobjective (multicriteria) optimization. Specifically, we deal with the problem of minimizing nonlinear objectives under linear constraints and propose a reduced Jacobian method, namely a reduced gradient-like method that does not scalarize such programs. As long as there are nondominated solutions, the principle is to determine a direction that decreases all objectives simultaneously in order to reach one of these solutions. Following the reduction strategy, only a reduced search direction needs to be found. We show that the latter can be obtained by solving a simple differentiable convex program at each iteration. Moreover, the method is designed to recover both the discontinuous and continuous schemes of Wolfe for single-objective programs. The resulting algorithm is proved to be (globally) convergent to a Pareto KKT-stationary (Pareto critical) point under classical hypotheses and a multiobjective Armijo line search condition. Finally, experimental results on test problems show a clear performance advantage of the proposed algorithm over a classical scalarization approach, both in the quality of the approximated Pareto front and in computational effort.
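To make the idea of a multiobjective Armijo line search concrete, the following is a minimal sketch of one common form of the condition (the componentwise rule used in steepest descent methods for multicriteria optimization); it is not necessarily the exact condition of the paper. The function names `armijo_step`, `F`, and `jac`, the parameters `beta` and `shrink`, and the two-objective test problem are illustrative assumptions. The example is unconstrained; under linear constraints the direction would also have to keep the iterate feasible.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def armijo_step(F, jac, x, d, beta=1e-4, shrink=0.5, max_backtracks=50):
    """Backtrack until every objective satisfies an Armijo-type decrease.

    F   : maps a point to the list of objective values
    jac : maps a point to the list of objective gradients (rows of the Jacobian)
    d   : direction that must be a descent direction for all objectives
    Accepts the first t with F(x + t d) <= F(x) + beta * t * J(x) d componentwise.
    """
    Fx = F(x)
    slopes = [dot(g, d) for g in jac(x)]  # directional derivatives J(x) d
    if not all(s < 0 for s in slopes):
        raise ValueError("d is not a common descent direction at x")
    t = 1.0
    for _ in range(max_backtracks):
        trial = [xi + t * di for xi, di in zip(x, d)]
        Ft = F(trial)
        if all(ft <= fx + beta * t * s for ft, fx, s in zip(Ft, Fx, slopes)):
            return t, trial
        t *= shrink  # step too long for at least one objective: shorten it
    raise RuntimeError("no acceptable step found")


# Hypothetical test problem: two convex quadratics with minimizers (1, 0) and (-1, 0).
def F(x):
    return [(x[0] - 1.0) ** 2 + x[1] ** 2, (x[0] + 1.0) ** 2 + x[1] ** 2]


def jac(x):
    return [[2.0 * (x[0] - 1.0), 2.0 * x[1]], [2.0 * (x[0] + 1.0), 2.0 * x[1]]]


x0 = [0.0, 2.0]
d0 = [0.0, -8.0]  # decreases both objectives at x0, but a full step overshoots
t, x1 = armijo_step(F, jac, x0, d0)
print(t, x1, F(x1))
```

In this run the full step and the half step are rejected because they fail the decrease test, and the quarter step is accepted; both objective values drop from 5 to 1.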

Change history

01 December 2018

The contents of the paper [1] were submitted for publication in J Optim Theory Appl (JOTA) on 22 December 2016, reviewed and approved by the Editors-in-Chief.

Notes

For \(y, y' \in {\mathbb {R}}^r\), \(y<y'\) (resp. \(y \le y'\)) means \(y_i<y'_i\) (resp. \(y_i \le y'_i\)) for all \(i \in \{1,\ldots ,r\}\); obviously, \(y \lneq y'\) means \(y \le y'\) and \(y\ne y'\).

Pointed means \(C\cap (-C) = \{0\}\); \(\mathrm{int}\,C\) stands for the topological interior of \(C\); and \(y \le _C y'\) means \(y-y'\in -C\).
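As a small illustration of the order relations in these notes, the sketch below implements the componentwise comparisons on vectors of \({\mathbb {R}}^r\). This is the special case in which the cone \(C\) is the nonnegative orthant, so that \(y \le _C y'\) reduces to \(y \le y'\). The helper names `lt`, `le`, and `lneq` are assumptions chosen for this example.

```python
def _same_length(y, z):
    if len(y) != len(z):
        raise ValueError("vectors must have the same dimension")


def lt(y, z):
    """y < z: strict inequality in every component."""
    _same_length(y, z)
    return all(a < b for a, b in zip(y, z))


def le(y, z):
    """y <= z: non-strict inequality in every component."""
    _same_length(y, z)
    return all(a <= b for a, b in zip(y, z))


def lneq(y, z):
    """y <= z and y != z: no component worse, at least one strictly better."""
    return le(y, z) and any(a != b for a, b in zip(y, z))


print(lt([1, 2], [2, 3]))    # every component strictly smaller
print(lneq([1, 2], [1, 3]))  # equal in one component, smaller in the other
print(lt([1, 2], [1, 3]))    # fails: the first components are equal
```

In minimization, `lneq(F(x), F(x_prime))` expresses that `x` dominates `x_prime`, while `lt` corresponds to strict (weak Pareto) dominance.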

References

Collette, Y., Siarry, P.: Multiobjective Optimization. Principles and Case Studies. Springer, Berlin (2004)

Figueira, J., Greco, S., Ehrgott, M. (eds.): Multiple Criteria Decision Analysis: State of the Art Surveys. Springer, New York (2005)

Ehrgott, M.: Multicriteria Optimization, 2nd edn. Springer, Berlin (2005)

Deb, K.: Multi-objective Optimization Using Evolutionary Algorithms. Wiley, Chichester (2001)

Miettinen, K.: Nonlinear Multiobjective Optimization. Kluwer, Boston (1999)

Fliege, J., Graña Drummond, L.M., Svaiter, B.F.: Newton’s method for multiobjective optimisation. SIAM J. Optim. 20, 602–626 (2009)

Fliege, J.: An efficient interior-point method for convex multicriteria optimization problems. Math. Oper. Res. 31, 825–845 (2006)

Eichfelder, G.: An adaptive scalarization method in multiobjective optimization. SIAM J. Optim. 19, 1694–1718 (2009)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Meth. Oper. Res. 51, 479–494 (2000)

Graña Drummond, L.M., Iusem, A.N.: A projected gradient method for vector optimization problems. Comput. Optim. Appl. 28, 5–29 (2004)

García-Palomares, U.M., Burguillo-Rial, J.C., González-Castaño, F.J.: Explicit gradient information in multiobjective optimization. Oper. Res. Lett. 36, 722–725 (2008)

Bonnel, H., Iusem, A.N., Svaiter, B.F.: Proximal methods in vector optimization. SIAM J. Optim. 15, 953–970 (2005)

Wolfe, P.: Methods of nonlinear programming. In: Graves, R.L., Wolfe, P. (eds.) Recent Advances in Mathematical Programming, pp. 67–86. McGraw–Hill, New York (1963)

Bazaraa, M.S., Sherali, H.D., Shetty, C.M.: Nonlinear Programming: Theory and Algorithms, 3rd edn. Wiley, New York (2006)

Luenberger, D.G., Ye, Y.: Linear and Nonlinear Programming, 3rd edn. Springer, New York (2008)

El Maghri, M.: A free reduced gradient scheme. Asian J. Math. Comput. Res. 9, 228–239 (2016)

Goh, C.J., Yang, X.Q.: Duality in Optimization and Variational Inequalities. Taylor and Francis, London (2002)

Ponstein, J.: Seven types of convexity. SIAM Rev. 9, 115–119 (1967)

Bento, G.C., Cruz Neto, J.X., Oliveira, P.R., Soubeyran, A.: The self regulation problem as an inexact steepest descent method for multicriteria optimization. Eur. J. Oper. Res. 235, 494–502 (2014)

Mangasarian, O.L.: Nonlinear Programming. SIAM, Philadelphia (1994)

Bento, G.C., Cruz Neto, J.X., Santos, P.S.M.: An inexact steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 159, 108–124 (2013)

Durea, M., Strugariu, R., Tammer, C.: Scalarization in geometric and functional vector optimization revisited. J. Optim. Theory Appl. 159, 635–655 (2013)

Huard, P.: Convergence of the reduced gradient method. In: Mangasarian, O.L., Meyer, R.R., Robinson, S.M. (eds.) Nonlinear Programming, pp. 29–59. Academic Press, New York (1975)

Armijo, L.: Minimization of functions having Lipschitz continuous first partial derivatives. Pac. J. Math. 16, 1–3 (1966)

Zitzler, E., Deb, K., Thiele, L.: Comparison of multiobjective evolutionary algorithms: empirical results. Evol. Comput. 8, 173–195 (2000)

Bolintinéanu, S. (new name: Bonnel, H.), El Maghri, M.: Pénalisation dans l’optimisation sur l’ensemble faiblement efficient. RAIRO Oper. Res. 31, 295–310 (1997)

Bolintinéanu, S., El Maghri, M.: Second-order efficiency conditions and sensitivity of efficient points. J. Optim. Theory Appl. 98, 569–592 (1998)

El Maghri, M., Bernoussi, B.: Pareto optimizing and Kuhn–Tucker stationary sequences. Numer. Funct. Anal. Optim. 28, 287–305 (2007)

Acknowledgements

The authors are thankful for the valuable suggestions of the editor Dr. Franco Giannessi and the reviewers that helped improve the quality of the paper.

About this article

Cite this article

Maghri, M.E., Elboulqe, Y. Reduced Jacobian Method. J Optim Theory Appl 179, 917–943 (2018). https://doi.org/10.1007/s10957-018-1362-x

DOI: https://doi.org/10.1007/s10957-018-1362-x

Keywords

- Multiobjective optimization

- Nonlinear programming

- Pareto optima

- KKT-stationarity

- Descent direction

- Reduced gradient method