Abstract

In recent years experiments have demonstrated that living cells can measure low chemical concentrations with high precision, and much progress has been made in understanding what sets the fundamental limit to the precision of chemical sensing. Chemical concentration measurements start with the binding of ligand molecules to receptor proteins, which is an inherently noisy process, especially at low concentrations. The signaling networks that transmit the information on the ligand concentration from the receptors into the cell have to filter this receptor input noise as much as possible. These networks, however, are also intrinsically stochastic in nature, which means that they will also add noise to the transmitted signal. In this review, we will first discuss how the diffusive transport and binding of ligand to the receptor sets the receptor correlation time, which is the timescale over which fluctuations in the state of the receptor, arising from the stochastic receptor-ligand binding, decay. We then describe how downstream signaling pathways integrate these receptor-state fluctuations, and how the number of receptors, the receptor correlation time, and the effective integration time set by the downstream network, together impose a fundamental limit on the precision of sensing. We then discuss how cells can remove the receptor input noise while simultaneously suppressing the intrinsic noise in the signaling network. We describe why this mechanism of time integration requires three classes (groups) of resources—receptors and their integration time, readout molecules, energy—and how each resource class sets a fundamental sensing limit. 
We also briefly discuss the scheme of maximum-likelihood estimation, the role of receptor cooperativity, and how cellular copy protocols differ from canonical copy protocols typically considered in the computational literature, explaining why cellular sensing systems can never reach the Landauer limit on the optimal trade-off between accuracy and energetic cost.


1 Introduction

Living cells can sense changes in their environment with extraordinary precision. Receptors in our visual system can detect single photons [66], some animals can smell single molecules [15], swimming bacteria can respond to the binding and unbinding of only a limited number of molecules [12, 72], and eukaryotic cells can respond to a difference in \(\sim \)10 molecules between the front and the back of the cell [80]. Recent experiments suggest that the precision of the embryonic development of the fruit fly Drosophila is close to the limit set by the available number of regulatory proteins [27, 32, 39, 78]. This raises the question of what sets the fundamental limit to the precision of chemical concentration measurements.

Living cells measure chemical concentrations via receptor proteins, which can either be at the cell surface or inside the cell. These measurements are inevitably corrupted by two sources of noise. One is the stochastic transport of the ligand molecules to the receptor via diffusion; the other is the binding of the ligand molecules to the receptor after they have arrived at its surface. Berg and Purcell pointed out in the seventies that cells can reduce the sensing error by increasing the number of measurements, and that they can do so in two principal ways [12]. The first is to simply increase the number of receptors. The other is to increase the number of measurements per receptor. In the latter scenario, the cell infers the ligand concentration not from the instantaneous ligand-binding state of the receptor, but rather from its average over some integration time T. This time integration has to be performed by the signaling network downstream of the receptor proteins.

In recent years, tremendous progress has been made in understanding how accurately cells can measure chemical concentrations [12, 13, 31, 36–38, 45, 46, 49, 51, 54, 64, 70, 71, 80, 85]. Most of these studies assume that the cell estimates the concentration via the mechanism of time integration as envisioned by Berg and Purcell [12, 13, 36–38, 45, 46, 51, 64, 70, 71, 80, 85], although Mora, Endres, Wingreen and others have shown that under certain conditions a better estimate of the concentration can be obtained via maximum likelihood estimation [31, 49, 54]. In this review, we will limit ourselves to sensing static concentrations, which do not change on the timescale of the response, and we will focus on the mechanism of time integration, although we will also briefly discuss the scheme of maximum likelihood estimation. This review will follow a series of papers written by the authors, but, in doing so, will also discuss other relevant papers.

Specifically, in this review we will address the following questions: if the downstream signaling network integrates the state of the receptor over some given integration time T, what is then the sensing error? This is the question that was first addressed by Berg and Purcell [12], and later followed up by many authors [13, 45, 46, 64, 70, 71, 80, 85]. The answer depends on the correlation time of the receptor, which is determined by the stochastic arrival of the ligand molecules at the receptor by diffusion and by the stochastic binding of the ligand molecules to the receptor. Recently, the correct expression for the correlation time and hence the sensing error has become the subject of debate [12, 13, 46], which we will review in Sect. 2. The next question is: How do signaling networks integrate the receptor state? Do they integrate it uniformly in time, as assumed by Berg and Purcell? If not, can cellular sensing systems then actually reach the sensing limit of Berg and Purcell? As we will see, signaling networks integrate the receptor state non-uniformly in time, and, as a result, cells can not only reach the Berg–Purcell limit, but, in some cases, even beat it by about 10% [36].

Importantly, the signaling network downstream of the receptor is inherently stochastic, because of the discrete character of the reactants and the probabilistic nature of their chemical and physical interactions. This means that while the network is removing the receptor input noise, which is extrinsic to the network, it will also add its own intrinsic noise to the transmitted signal [30, 61, 76]. Most studies have ignored this intrinsic noise in the signaling network, essentially assuming that it can be made arbitrarily small [3, 11–13, 31, 36, 45, 46, 49, 64, 70, 71, 80, 85]. However, can signaling networks remove the extrinsic noise in the input signal and simultaneously suppress the intrinsic noise of the signaling network [37, 38]? If so, what resources—receptors, time, readout molecules, energy—are required? Do these resources fundamentally limit sensing, like weak links in a chain? Or can they compensate each other, leading to trade-offs between them? We will see that equilibrium networks, which are not driven out of thermodynamic equilibrium, can sense—energy dissipation is not essential for sensing [37]. However, their sensing accuracy is limited by the number of receptors; adding a downstream network can never improve the precision of sensing. This is because equilibrium sensing systems face a fundamental trade-off between the removal of extrinsic and intrinsic noise [37]. Only non-equilibrium systems can lift this trade-off: they can integrate the receptor state over time while suppressing the intrinsic noise by using energy to store the receptor state into stable chemical modification states of the readout molecules [37, 38, 51]. Storing the state of the bound receptor over time using a canonical push-pull signaling network requires at least one readout molecule to store the state and at least \(4 k_BT\) of energy to store it reliably [38]. 
Non-equilibrium systems thus require three resource classes, each consisting of a resource or a combination of resources that is fundamentally required for sensing: receptors and their integration time, readout molecules, and energy. Each resource class sets a fundamental sensing limit, which means that the sensing precision is bounded by the limiting resource class and cannot be enhanced by increasing another class.

Last but not least, we will address the question of whether cellular sensing involves computations that can be understood using ideas from the thermodynamics of computation [10, 48]. Cells seem to copy the ligand-binding state of the receptor into chemical modification states of downstream readout molecules [37, 38, 51], but can this process be rigorously mapped onto computational protocols typically considered in the computational literature [59]? If so, how do these cellular copy protocols compare to thermodynamically optimal protocols? Can they reach the Landauer bound, which states that the fundamental limit on the energetic cost of an irreversible computation is \(k_B T \ln (2)\) per bit? We will see that cellular copy operations differ fundamentally in their design from thermodynamically optimal protocols, and that as a result they can never reach the Landauer limit, regardless of parameters [59].

2 The Berg–Purcell Limit

2.1 Set Up of the Problem

Berg and Purcell and subsequent authors [11–13, 45, 46, 64, 70, 71, 80, 85] considered the scenario in which the cell estimates the ligand concentration c, assumed to be constant on the timescale of the response, by monitoring the occupancy of the receptor to which the ligand molecules bind and unbind. The key idea is that the cell infers the concentration by estimating the true average receptor occupancy \(\overline{n}\) or probability p that a receptor is ligand bound, \(\overline{n}=p\), from the average occupancy \({n}_T\) over some integration time T, and by inverting the input-output relation p(c) [12]. We first consider a single receptor. The time average of its occupancy n(t) over the integration time T is \(n_T = (1/T) \int _0^T n(t^\prime ) dt^\prime \). The error in the estimate of the receptor occupancy, \(\delta n_T\), propagates to that in the estimate of the concentration c. Linearizing the input-output relation p(c), and using the rules of error propagation, the fractional error in the estimate of the concentration, \(\delta c / c\), is then given by

\[
\frac{\delta c}{c} = \frac{\sigma_{n_T}}{c\,(dp/dc)}, \tag{1}
\]

where \(\sigma _{n_T}^2\) is the variance in the time-averaged occupancy \(n_T\), and dp / dc is the gain, which determines how the error in the estimate of p propagates to that in the estimate of c. The gain can be obtained from the input-output relation \(p(c)=c / (c+K_D)\), where \(K_D\) is the receptor-ligand binding affinity: \(dp/dc=p(1-p)/c\). In the limit that the integration time T is much longer than the receptor correlation time \(\tau _c\), which is defined as the autocorrelation time of the signal n(t), the variance in the estimate \(n_T\) of the true mean occupancy \(p=\overline{n}\) is

\[
\sigma^2_{n_T} \simeq \frac{P_n(\omega=0)}{T} = \frac{2\,\widehat{C}_n(s=0)}{T} = \frac{2\sigma^2_n \tau_c}{T}, \tag{2}
\]

where the instantaneous variance \(\sigma ^{2}_{n}=\left\langle n^{2} \right\rangle -\left\langle n \right\rangle ^{2}=p(1-p)\), and \(P_n(\omega )\) and \(\widehat{C}_n(s)\) are respectively the power spectrum and the Laplace transform of the correlation function \(C_n(t)\) of n(t). The above expression shows that the variance in the average \(n_T\) is given by the instantaneous variance \(\sigma ^2_n\) divided by \(T/(2 \tau _c)\), which can be interpreted as the number of independent measurements of n(t). Inserting Eq. 2 into Eq. 1 yields

\[
\frac{\delta c}{c} = \sqrt{\frac{2\tau_c}{p(1-p)\,T}}. \tag{3}
\]

This is indeed the sensing error based on \(T/(2\tau _c)\) independent concentration measurements.
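
As a quick numerical check of the long-integration-time limit, the sketch below compares the approximation \(\sigma^2_{n_T} \simeq 2\sigma^2_n\tau_c/T\) with the exact variance of the time average for a receptor whose correlation function decays as a single exponential, \(C_n(t)=p(1-p)e^{-|t|/\tau_c}\). The exponential form and the function names are assumptions made for illustration; they are not taken from the studies reviewed here.

```python
import math

def exact_var_time_average(p, tau_c, T):
    """Variance of n_T when C_n(t) = p(1-p) exp(-|t|/tau_c) (assumed form).

    Closed form of (2/T^2) * integral_0^T (T - t) C_n(t) dt.
    """
    sigma2 = p * (1.0 - p)
    x = T / tau_c
    return 2.0 * sigma2 / x * (1.0 - (1.0 - math.exp(-x)) / x)

def long_time_var(p, tau_c, T):
    """Long-T limit: instantaneous variance divided by T / (2 tau_c)."""
    return 2.0 * p * (1.0 - p) * tau_c / T

def fractional_error(p, tau_c, T):
    """delta c / c via error propagation with gain dp/dc = p(1-p)/c."""
    return math.sqrt(long_time_var(p, tau_c, T)) / (p * (1.0 - p))

if __name__ == "__main__":
    p, tau_c = 0.5, 1.0
    for T in (1.0, 10.0, 100.0, 1000.0):
        ratio = exact_var_time_average(p, tau_c, T) / long_time_var(p, tau_c, T)
        print(f"T/tau_c = {T:7.1f}   exact/approx = {ratio:.4f}")
```

Under this assumption the relative correction to the long-time result is of order \(\tau_c/T\), so the approximation is already good once T exceeds a few tens of correlation times.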

Eq. 3 holds for any single receptor, be it a promoter on the DNA, a receptor on the cell membrane, or a receptor protein freely diffusing inside the cytoplasm [60]. All we need to do to get the sensing error, is to find the receptor correlation time \(\tau _c\) or the zero-frequency limit of the power spectrum, \(P_n (\omega =0)=2\sigma ^2_n \tau _c = 2 p(1-p) \tau _c\), which depends on the scenario by which the ligand finds the receptor.

Below, we describe different studies on the accuracy of sensing a concentration via a single, spherical receptor. All these studies start from Eqs. 1–3, and then proceed to derive the power spectrum or receptor correlation time, from which the sensing error can be obtained via Eqs. 2 or 3. However, as we will see, these studies use completely different approaches to arrive at the power spectrum or receptor correlation time.

2.2 Expression of Berg and Purcell

To obtain the receptor correlation time \(\tau _c\), Berg and Purcell assumed that the ligand binds the receptor in a Markovian fashion, which means that \(\tau _c\) is given by

\[
\tau_c = \frac{1}{k_f c + k_b}, \tag{4}
\]

where \(k_f\) is the ligand-receptor binding rate and \(k_b\) is the unbinding rate. Berg and Purcell described the binding site as a circular patch on the membrane, with patch radius s. To get the forward rate \(k_f\), they assumed \(k_f\) is given by the diffusion-limited binding rate \(k_D\), but with the cross section s renormalized by the sticking probability. For the binding of a ligand to a membrane patch, \(k_f = k_D = 4 D s\). We will consider the binding of ligand to a spherical receptor protein with ligand-receptor cross section \(\sigma \), in which case \(k_f = k_D = 4\pi \sigma D\). To get the backward rate \(k_b\), Berg and Purcell exploited the detailed-balance condition \(k_f c (1-p) = p k_b\), which states that in steady state the net rate of binding equals the net rate of unbinding.

Combining Eqs. 3 and 4 yields the following expression of Berg and Purcell for the sensing error

\[
\frac{\delta c}{c} = \frac{1}{\sqrt{2\pi \sigma D c\,(1-p)\,T}}. \tag{5}
\]

This expression can be understood intuitively: The factor \(4 \pi \sigma D c\) is the rate at which ligand molecules arrive at the receptor, \(1-p\) is the probability that the receptor is free, and hence \(4 \pi \sigma D c (1-p)\) is the count rate at which the receptor binds the ligand molecules; \(4 \pi \sigma D c (1-p)\) multiplied by T is thus the total number of counts in the integration time T. Indeed, this expression states that the fractional error \(\delta c / c\) decreases with the square root of the number of counts, as we would expect intuitively.
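
The counting picture can be made concrete with a few lines of Python. The sketch assumes that the Berg–Purcell error takes the form \((\delta c/c)^2 = 2/N\), with \(N = 4\pi\sigma D c(1-p)T\) the number of counts, which follows from \(\tau_c = p/(k_f c)\) under detailed balance. The parameter values in the example are illustrative choices, not values from the text.

```python
import math

AVOGADRO = 6.02214076e23  # molecules per mole

def berg_purcell_error(sigma_m, D_m2_s, c_molar, p, T_s):
    """Return (delta c / c, number of counts) for a diffusion-limited receptor.

    sigma_m : cross section in metres
    D_m2_s  : diffusion constant in m^2/s
    c_molar : ligand concentration in mol/L
    p       : mean receptor occupancy
    T_s     : integration time in seconds
    """
    c_number = c_molar * AVOGADRO * 1e3          # molecules per m^3
    count_rate = 4.0 * math.pi * sigma_m * D_m2_s * c_number * (1.0 - p)
    counts = count_rate * T_s
    return math.sqrt(2.0 / counts), counts

if __name__ == "__main__":
    # Illustrative (assumed) values: 5 nm, 10 um^2/s, 1 nM, half occupancy.
    for T in (10.0, 100.0, 1000.0):
        err, n = berg_purcell_error(5e-9, 1e-11, 1e-9, 0.5, T)
        print(f"T = {T:6.0f} s   counts = {n:8.1f}   delta c / c = {err:.3f}")
```

Quadrupling the integration time quadruples the number of counts and therefore halves the fractional error.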

While this expression makes sense intuitively, there are two problems. First, receptor-ligand binding is, in general, not Markovian. To illustrate this, imagine for the sake of the argument that a ligand-bound receptor is surrounded by a uniform, equilibrium distribution of ligand molecules. If the receptor-bound ligand dissociates, then the other ligand molecules will still have the equilibrium distribution. If it rebinds and then dissociates again, the other ligand molecules will again still have the equilibrium distribution. The problem arises when (a) the rebinding of the dissociated ligand molecule is pre-empted by the binding of another ligand molecule; and (b) if this second molecule dissociates from the receptor before the first has diffused into the bulk. If this happens, then the receptor and the dissociated ligand molecule at contact are no longer surrounded by a uniform equilibrium distribution of ligand molecules. Indeed, the process of binding generates non-trivial spatio-temporal correlations between the positions of the ligand molecules, which depend on the history of the association and dissociation events. This turns an association-dissociation reaction into a complicated non-Markovian, many-body problem, which can, in general, not be solved analytically.

The second problem of the analysis of Berg and Purcell is that not all ligand-receptor association reactions are diffusion limited. Berg and Purcell were fully aware of this, but they argued on p. 208 of Ref. [12] that if the ligand “doesn’t stick on its first contact, it may very soon bump into the site again—and again. If these encounters occur with a time interval short compared to \(\tau _b\) [the time a ligand is bound], their result is equivalent merely to a larger value of \(\alpha \) [the sticking probability]. As we have no independent definition of the patch radius s , we may as well absorb the effective \(\alpha \) into s.” This argument, however, does not take into account that when a ligand arrives at the receptor for the first time and does not stick immediately, it may also return to the bulk, after which another ligand molecule may bind. Moreover, a ligand molecule that has just dissociated from the receptor may either rapidly rebind the receptor, or diffuse away from it into the bulk. It thus remained unclear how accurate the expression of Berg and Purcell, Eq. 5, is.

2.3 Expression of Bialek and Setayeshgar

Bialek and Setayeshgar sought to generalize the result of Berg and Purcell by explicitly taking into account the receptor-ligand binding dynamics [13]. They considered a model in which the ligand molecules can diffuse, bind the receptor with a rate given by \(k_a\) multiplied by the local concentration of the ligand at the receptor surface, and unbind from the receptor with a rate \(k_d\). Here, \(k_a\) and \(k_d\) are the intrinsic rate constants, which are determined by the chemistry of the receptor-ligand interaction. This model is described by the following reaction-diffusion equations

\[
\frac{dn(t)}{dt} = k_a\, c(x_0,t)\,[1-n(t)] - k_d\, n(t), \tag{6}
\]

\[
\frac{\partial c(x,t)}{\partial t} = D \nabla^2 c(x,t) - \delta(x-x_0)\,\frac{dn(t)}{dt}, \tag{7}
\]

where c(x, t) is the concentration of ligand at position x at time t and \(x_0\) is the position of the receptor. To solve these equations, Bialek and Setayeshgar linearized Eqs. 6 and 7, and then invoked the fluctuation-dissipation relation, which, applied to this case, relates the spontaneous fluctuations in the receptor occupancy \(\delta n(t)=n(t) -\bar{n}\) to the linear response of the receptor occupancy \(\delta n(t)\) to changes in the binding free energy \(\delta F(t)\); in frequency space: \(P_n (\omega ) = (2 k_BT / \omega )\mathrm{Im} \left[ \delta n(\omega ) / \delta F(\omega ) \right] \), where \(\mathrm{Im}[\dots ]\) denotes the imaginary part [13].

This linear-response approach makes it possible to analytically obtain the power spectrum \(P_n(\omega )\) and hence the receptor correlation time (see Eq. 1):

\[
\tau_c = \frac{1}{k_a c + k_d} + \frac{p(1-p)}{2\pi \sigma D c}. \tag{8}
\]

Combining this expression with Eq. 3, and exploiting that \(p=k_a c / (k_a c + k_d)\), yields the following expression for the sensing error:

\[
\frac{\delta c}{c} = \sqrt{\frac{1}{\pi \sigma D c\,T} + \frac{2}{k_a c\,(1-p)\,T}}. \tag{9}
\]

The first term describes the contribution to the sensing error from the stochastic transport of the ligand molecules to the receptor by diffusion. The second term describes the contribution from the intrinsic stochasticity of the binding kinetics of the receptor: Even in the limit that \(D\rightarrow \infty \), such that the ligand concentration is uniform in space at all times, the ligand concentration can still not be measured with infinite precision because the receptor stochastically switches between the bound and unbound states, leading to noise in the estimate of the receptor occupancy. This term is absent in Eq. 5 since Berg and Purcell assume that the binding reaction is fully diffusion limited, which means that all arrivals at the receptor surface lead to binding; this is equivalent to taking the intrinsic rate constants \(k_a, k_d \rightarrow \infty \) at fixed \(k_a/k_d\), while keeping the diffusion-limited rate constant \(k_D = 4 \pi \sigma D\) finite.

Can biochemical systems actually reach the diffusion-limited regime where \(k_a \gg k_D\)? The maximal possible \(k_a\) is given by transition-state theory, which yields the rate constant \(k_\mathrm{TST}\) in the absence of any recrossings of the dividing surface that separates the bound from the unbound state [9, 18]. It is \(k_\mathrm{TST} = k_0 \exp [-\beta \Delta F]\), where \(\Delta F\) is the free-energy barrier separating the bound from the unbound state, and \(k_0\) is a kinetic prefactor. For spherical molecules that can bind in any orientation, the prefactor is given by the collision frequency of a hard-sphere fluid [86], \(k_0 = \pi \sigma ^2 \left\langle |v_{RL}| \right\rangle \), where \(\left\langle |v_{RL}| \right\rangle = \sqrt{8 k_B T/(\pi m_{RL})}\) is the mean relative velocity of ligand and receptor, with \(m_{RL}\) their reduced mass. For diffusion-limited reactions, we expect that \(\Delta F=0\), and \(k_a^\mathrm{max} = k_0\). For a reduced protein mass of about \(m_{RL}\simeq 100 \mathrm {\,kDa}\) and a cross section of \(\sigma \simeq 5\mathrm {\,nm}\), this yields \(k_a^\mathrm{max} \approx 10^{11}\mathrm {\,M}^{-1}\mathrm {\,s}^{-1}\). In contrast, with typical in vivo and in vitro diffusion constants of \(D\simeq 1-100\mathrm {\,\mu m}^2\mathrm {\,s}^{-1}\), the diffusion-limited rate \(k_D = 4 \pi \sigma D \approx 10^{7}-10^{9} \mathrm {\,M}^{-1}\mathrm {\,s}^{-1}\), which is indeed much smaller than \(k_a^\mathrm{max}\).
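
The orders of magnitude quoted above can be reproduced with a short unit-conversion script. This is a minimal sketch: the temperature of 298 K is an assumption (the text does not specify one), and the function names are illustrative.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K
AVOGADRO = 6.02214076e23    # molecules per mole
DALTON = 1.66053906660e-27  # kg

def to_per_molar_per_s(k_m3_per_s):
    """Convert a rate constant from m^3/s per molecule to M^-1 s^-1."""
    return k_m3_per_s * AVOGADRO * 1e3

def diffusion_limited_rate(sigma_m, D_m2_s):
    """k_D = 4 pi sigma D, returned in M^-1 s^-1."""
    return to_per_molar_per_s(4.0 * math.pi * sigma_m * D_m2_s)

def collision_prefactor(sigma_m, reduced_mass_kg, temperature_K=298.0):
    """k_0 = pi sigma^2 <|v_RL|>, returned in M^-1 s^-1 (T = 298 K assumed)."""
    mean_speed = math.sqrt(8.0 * K_B * temperature_K / (math.pi * reduced_mass_kg))
    return to_per_molar_per_s(math.pi * sigma_m ** 2 * mean_speed)

if __name__ == "__main__":
    sigma = 5e-9                                   # 5 nm
    k0 = collision_prefactor(sigma, 100e3 * DALTON)
    print(f"k_a^max = k_0 ~ {k0:.1e} M^-1 s^-1")
    for D_um2 in (1.0, 100.0):                     # um^2/s
        kD = diffusion_limited_rate(sigma, D_um2 * 1e-12)
        print(f"D = {D_um2:5.0f} um^2/s  ->  k_D ~ {kD:.1e} M^-1 s^-1")
```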

Although this calculation suggests that \(k_a\) can exceed \(k_D\) for biochemical systems, a few points are worth noting. First, binding proceeds via diffusion, which means that the transmission coefficient of the reaction is probably (much) lower than unity. Another way to estimate \(k_a^\mathrm{max}\) is to imagine that the receptor and ligand, in order to bind, have to diffuse over a microscopic distance \(\lambda \), yielding \(k_a^\mathrm{max} = 4\pi \sigma ^2 D / \lambda \) and \(k_a^\mathrm{max} / k_D = \sigma / \lambda \). Since \(\lambda \) is expected to be on the order of the size of a water molecule, this estimate also suggests that \(k_a^\mathrm{max}\) can be significantly larger than \(k_D\).

In fact, inside the crowded environment of the cell, \(\sigma /\lambda \) is probably an underestimate, because \(k_a\) is set by the diffusion constant at short length and timescales, corresponding to that of proteins in water, while \(k_D\) is set by the long-time diffusion constant of proteins inside crowded media, which is about fivefold lower [26]. On the other hand, proteins typically bind ligand via patches or binding pockets, and these orientational constraints can drastically lower the intrinsic binding rate. However, Northrup and Erickson showed that each receptor-ligand encounter involves many diffusive steps and collisions, during which the two molecules can reorient [58]. This process is aided by interaction forces, such as Coulombic forces or crowding-induced depletion forces, which keep reactant and ligand together, giving them time for rotational alignment. Indeed, protein–protein association reactions can be fast: association rates of \(10^8\)–\(10^9~\mathrm{M}^{-1}\mathrm{s}^{-1}\) are not uncommon [68]. These reactions are likely to be diffusion limited. For a review of protein association rates, we refer the reader to Ref. [68].

More generally, the first term on the right-hand side of Eq. 9 presents a noise floor that is solely due to the stochastic transport of the ligand to the receptor by diffusion, independent of the binding kinetics of the ligand after it has arrived at the receptor. The first term is thus considered to be the fundamental sensing limit set by the physics of diffusion [13], and it can be compared with the expression of Berg and Purcell, Eq. 5. It is clear that the expression of Bialek and Setayeshgar and that of Berg and Purcell differ by a factor \(1/(2(1-p))\). This difference can have marked implications. Whereas the Bialek–Setayeshgar expression predicts that the uncertainty due to diffusion remains bounded even in the limit that \(p\rightarrow 1\), the Berg–Purcell expression suggests that it diverges in this limit. Intuitively, we expect a dependence on p, because a higher receptor occupancy at fixed \(k_D\) should reduce the count rate: if the receptor is bound most of the time, because, e.g., the receptor-ligand dissociation rate is low, then it cannot bind new molecules at a high rate.

2.4 The Expression of Kaizu and Coworkers

To elucidate the discrepancy between Eqs. 5 and 9, Kaizu and coworkers rederived the expression for the sensing error [46]. They considered exactly the same model as that of Bialek and Setayeshgar [13], but analyzed it using the large body of work on reaction-diffusion systems, developed by Agmon, Szabo and coworkers [1]. The goal is to obtain the zero-frequency limit of the correlation function, \(\widehat{C}(s=0)\), from which the correlation time and hence the sensing error can be obtained, see Eq. 2. The correlation function of any binary switching process is given by

\[
C_n(\tau) = p\left[ p_{*|*}(\tau) - p \right], \tag{10}
\]

where \(p=\bar{n}\) is, as before, the equilibrium probability for the bound state and \(p_{*|*}(\tau )=\langle n(\tau )n(0)\rangle /\overline{n}\) is the probability the receptor is bound at \(t=\tau \) given it was bound at \(t=0\). To obtain the correlation function, we thus need \(p_{*|*}(\tau )=1-\mathscr {S}_\mathrm{rev}\left( t|*\right) \), where \(\mathscr {S}_\mathrm{rev}\left( t|*\right) \) is the probability that the receptor is free at time t given that it was occupied at time \(t=0\). It is given by the exact expression

\[
\mathscr{S}_\mathrm{rev}(t|*) = k_d \int_0^t \left[ 1-\mathscr{S}_\mathrm{rev}(t'|*) \right] \mathscr{S}_\mathrm{rad}(t-t'|\sigma)\, dt'. \tag{11}
\]

The subscript “rev” denotes that a reversible reaction is considered, meaning that in between \(t=0\) and t the receptor may bind and unbind ligand a number of times. The probability that a receptor-ligand pair dissociates between \(t'\) and \(t'+dt'\) to form an unbound pair at contact is \(k_d[1-\mathscr {S}_\mathrm{rev}(t'|*)]dt'\), while the probability that the free receptor with a ligand molecule at contact at time \(t'\) is still unbound at time \(t>t'\) is \(\mathscr {S}_\mathrm{rad}(t-t'|\sigma )\); the subscript “rad” means that we now consider an irreversible reaction (\(k_d=0\)), which can be obtained by solving the diffusion equation using a “radiation” boundary condition [1].

While Eq. 11 is exact, it cannot be solved analytically, because, as discussed above, an association-dissociation reaction is a non-Markovian, many-body problem. To solve Eq. 11, Kaizu and coworkers made the assumption that after each receptor-ligand dissociation event, the other ligand molecules have the uniform, equilibrium distribution. Mathematically, this assumption can be expressed as

\[
\mathscr{S}_\mathrm{rad}(t|\sigma) = \mathscr{S}_\mathrm{rad}(t|\mathrm{eq})\, S_\mathrm{rad}(t|\sigma), \tag{12}
\]

where \(\mathscr {S}_\mathrm{rad}(t|\mathrm{eq})\) is the probability that a receptor which initially is free and surrounded by an equilibrium distribution of ligand molecules remains free until at least a later time t, while \(S_\mathrm{rad}(t|\sigma )\) is the probability that a free receptor that is surrounded by only a single ligand molecule, which initially is at contact, is still unbound at a later time t. To solve Eqs. 11 and 12, a relation between \(\mathscr {S}_\mathrm{rad}\left( t|\mathrm{eq}\right) \) and \(S_\mathrm{rad}\left( t|\sigma \right) \) is needed, which can be obtained from \(\mathscr {S}_\mathrm{rad}\left( t|\mathrm{eq}\right) = e^{-c \int _0^t k_\mathrm{rad}\left( t'\right) dt'}\) [65] and the detailed-balance relation for the time-dependent bimolecular rate constant \(k_\mathrm{rad}(t) = k_a S_\mathrm{rad}(t|\sigma )\) [1].

With these relations, Eqs. 11 and 12 can be solved in Laplace space, which, together with Eq. 10, yields the following expression for the receptor correlation time \(\tau _c=(\sigma ^2_n)^{-1}\widehat{C}_n(s=0)\) [46]:

\[
\tau_c = \frac{1}{k_\mathrm{on} c + k_\mathrm{off}}. \tag{13}
\]

Here \(k_\mathrm{on}\) and \(k_\mathrm{off}\) are the renormalized association and dissociation rates

\[
k_\mathrm{on} = \left( \frac{1}{k_a} + \frac{1}{k_D} \right)^{-1} = \frac{k_a k_D}{k_a + k_D}, \tag{14}
\]

\[
k_\mathrm{off} = \left( \frac{1}{k_d} + \frac{K_\mathrm{eq}}{k_D} \right)^{-1} = \frac{k_d k_D}{k_a + k_D}, \tag{15}
\]

with \(K_\mathrm{eq}=k_a/k_d= k_\mathrm{on}/k_\mathrm{off}\) the equilibrium constant and \(k_D = 4\pi \sigma D\) the diffusion-limited rate constant—\(k_D = k_\mathrm{rad}(t\rightarrow \infty )\) for \(k_a\rightarrow \infty \).

As before, the sensing error is obtained by combining Eq. 13 with Eq. 3, and exploiting that \(p=k_\mathrm{on}c/(k_\mathrm{on}c+k_\mathrm{off})=k_\mathrm{on}c \tau _c\) [46]:

\[
\frac{\delta c}{c} = \sqrt{\frac{1}{2\pi \sigma D c\,(1-p)\,T} + \frac{2}{k_a c\,(1-p)\,T}}. \tag{16}
\]

The second term is identical to that of Bialek and Setayeshgar, Eq. 9. However, the first term, which constitutes the fundamental limit, disagrees with the expression of Bialek and Setayeshgar, but agrees with that of Berg and Purcell, Eq. 5. This suggests that the expression of Berg and Purcell is indeed the most accurate expression for the fundamental sensing limit.
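
The split into a diffusion term and a binding-kinetics term can be spelled out in a few steps. The sketch below assumes that Eq. 3 reads \((\delta c/c)^2 = 2\tau_c/(p(1-p)T)\), that the correlation time is the memoryless two-state result \(\tau_c = 1/(k_\mathrm{on}c + k_\mathrm{off})\), and that \(1/k_\mathrm{on} = 1/k_a + 1/k_D\), as the rebinding argument of Sect. 2.5 implies:

\[
\begin{aligned}
\left( \frac{\delta c}{c} \right)^2 &= \frac{2\tau_c}{p(1-p)\,T} = \frac{2}{k_\mathrm{on} c\,(1-p)\,T} \\
&= \frac{2}{k_D c\,(1-p)\,T} + \frac{2}{k_a c\,(1-p)\,T} = \frac{1}{2\pi \sigma D c\,(1-p)\,T} + \frac{2}{k_a c\,(1-p)\,T}.
\end{aligned}
\]

The second equality uses \(p = k_\mathrm{on} c\, \tau_c\), and the last one uses \(k_D = 4\pi \sigma D\). Because the two contributions add as inverse rates, the slower of the two processes, diffusive arrival or intrinsic binding, dominates the error.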

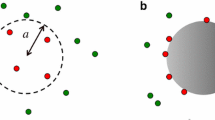

But it could of course be that both the analysis of Berg and Purcell and that of Kaizu et al. are inaccurate. To investigate this, Kaizu and coworkers performed particle-based simulations of the same model studied by Bialek and Setayeshgar and Kaizu et al.; to test the expression of Berg and Purcell, the system was chosen to be deep in the diffusion-limited regime. The simulations were performed using Green’s Function Reaction Dynamics, which is an exact algorithm to simulate reaction-diffusion systems at the particle level in time and space, and hence does not rely on the approximation used to derive the analytical result of Kaizu et al. [75, 82, 83]. Figure 1 shows the results for the zero-frequency limit of the power spectrum, \(P_n (\omega \rightarrow 0) = 2 \sigma ^2_n \tau _c\), which provides a test for the receptor correlation time \(\tau _c\) and hence the sensing error (see Eqs. 1 and 2), because \(\sigma ^2_n = p(1-p)\). It is seen that the prediction of Kaizu and coworkers agrees very well with the simulation results, in contrast to that of Bialek and Setayeshgar. This shows that the expression of Kaizu et al., and hence that of Berg and Purcell, is the most accurate expression for the sensing precision.

Fig. 1 The zero-frequency limit of the power spectrum, \(P_n (\omega \rightarrow 0) = 2 \sigma ^2_n \tau _c\) with \(\sigma ^2_n = p(1-p)\), as a function of the average receptor occupancy \(\overline{n}\) for \(c=0.4\mathrm {\,\mu M}\); \(\overline{n}\) is varied by changing \(k_d\). It is seen that the theoretical prediction of Kaizu et al. [46] (red line) agrees very well with the simulation results (red symbols), in contrast to that of Bialek and Setayeshgar [13] (black line). Parameters: \(D=1\mathrm {\,\mu m^2\,s^{-1}}\), \(\sigma =10\mathrm {\,nm}\), \(L=1\mathrm {\,\mu m}\), \(k_a=552\mathrm {\,\mu M^{-1}s^{-1}}\) (Color figure online)
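
The two theoretical curves can be tabulated from the figure parameters. This is a hedged sketch rather than the original analysis: it assumes \(\tau_c = p/(k_\mathrm{on}c)\) with \(1/k_\mathrm{on}=1/k_a+1/k_D\) for Kaizu et al., and for Bialek and Setayeshgar it uses a diffusion term inferred from the stated factor \(1/(2(1-p))\) between their result and that of Berg and Purcell. Function names are illustrative.

```python
import math

AVOGADRO = 6.02214076e23  # molecules per mole

def k_D_per_uM_s(sigma_nm, D_um2_s):
    """Diffusion-limited rate 4 pi sigma D in uM^-1 s^-1."""
    k_m3_s = 4.0 * math.pi * (sigma_nm * 1e-9) * (D_um2_s * 1e-12)
    return k_m3_s * AVOGADRO * 1e3 * 1e-6   # m^3/s -> M^-1 s^-1 -> uM^-1 s^-1

def power_kaizu(p, c, k_a, k_D):
    """P_n(0) = 2 p (1-p) tau_c with tau_c = p (1/k_a + 1/k_D) / c."""
    return 2.0 * p ** 2 * (1.0 - p) * (1.0 / k_a + 1.0 / k_D) / c

def power_bialek(p, c, k_a, k_D):
    """Same, but with the diffusion term scaled by 2(1-p) (inferred form)."""
    return 2.0 * p ** 2 * (1.0 - p) * (1.0 / k_a + 2.0 * (1.0 - p) / k_D) / c

if __name__ == "__main__":
    c, k_a = 0.4, 552.0                     # uM and uM^-1 s^-1, from the caption
    k_D = k_D_per_uM_s(10.0, 1.0)           # sigma = 10 nm, D = 1 um^2/s
    print(f"k_D = {k_D:.1f} uM^-1 s^-1, k_a / k_D = {k_a / k_D:.1f}")
    for p in (0.1, 0.3, 0.5, 0.7, 0.9):
        print(f"p = {p:.1f}  Kaizu: {power_kaizu(p, c, k_a, k_D):.2e} s"
              f"  Bialek-Setayeshgar: {power_bialek(p, c, k_a, k_D):.2e} s")
```

Under these assumptions the two predictions coincide at \(p=1/2\) and differ most at high occupancy, which is where the comparison with simulations is most discriminating.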

2.5 Role of Rebinding

The question remains why the analysis of Kaizu et al. is so accurate. The central assumption of Eq. 12 makes the propensity for binding the next ligand independent of the history of the previous binding events. In essence, it reduces the non-Markovian many-body problem to a Markovian two-body problem, which can be seen from the expression for the receptor correlation time, Eq. 13. This is indeed the expression for the correlation time of a receptor that switches in a memoryless fashion between the bound and unbound states with switching rates \(k_\mathrm{on}c\) and \(k_\mathrm{off}\).
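
To illustrate what memoryless switching implies for time averaging, the following sketch simulates a two-state receptor with constant switching rates and compares the variance of the time-averaged occupancy with \(2p(1-p)\tau_c/T\). It is a toy Gillespie-style simulation of the effective Markov model only, not of the reaction-diffusion system; the rate values and function names are assumptions chosen for illustration.

```python
import random
import statistics

def simulate_time_averages(k_bind, k_unbind, T, n_windows, seed=0):
    """Time-averaged occupancy over consecutive windows of length T.

    k_bind plays the role of k_on * c and k_unbind that of k_off.
    """
    rng = random.Random(seed)
    p = k_bind / (k_bind + k_unbind)
    state = 1 if rng.random() < p else 0        # start in the stationary state
    t = 0.0
    t_switch = rng.expovariate(k_unbind if state else k_bind)
    averages = []
    for w in range(1, n_windows + 1):
        window_end = w * T
        bound_time = 0.0
        while t_switch < window_end:
            if state:
                bound_time += t_switch - t      # bound until the switch
            t = t_switch
            state = 1 - state
            t_switch = t + rng.expovariate(k_unbind if state else k_bind)
        if state:
            bound_time += window_end - t        # bound until the window closes
        t = window_end
        averages.append(bound_time / T)
    return averages

def long_time_variance(k_bind, k_unbind, T):
    """2 p (1-p) tau_c / T with tau_c = 1 / (k_bind + k_unbind)."""
    p = k_bind / (k_bind + k_unbind)
    return 2.0 * p * (1.0 - p) / ((k_bind + k_unbind) * T)

if __name__ == "__main__":
    k_bind, k_unbind, T = 1.0, 1.0, 25.0
    n_T = simulate_time_averages(k_bind, k_unbind, T, 4000, seed=1)
    print("simulated variance:", statistics.pvariance(n_T))
    print("2 p (1-p) tau_c / T:", long_time_variance(k_bind, k_unbind, T))
```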

But why is Eq. 12 accurate? And what is the role of rebindings? Do they not generate an algebraic tail in the correlation function? As it turns out, these questions are intimately related. It is well known that in an unbounded system, the correlation function exhibits an algebraic tail because at long times the relaxation of the receptor state is dominated by the slow diffusive transport of the ligand over long distances [35, 62]. However, we typically expect the space to be bounded, both for the binding of ligand to a receptor inside the cell and to a receptor at the cell surface. In this case, the dissociated ligand particles lose memory on the timescale needed to cross the bounded volume. Indeed, the simulations of [46] were performed in a finite box of cellular dimensions, yielding exponential, not algebraic, decay at long times. Now, the correlation function of the theory of Kaizu et al. has an algebraic tail [46]. This comes from the particle that has just dissociated: in the theory, this particle returns to the receptor as if it were in an unbounded space, yielding a survival probability, \(S_\mathrm{rad}(t|\sigma )\), (Eq. 12), that decays algebraically at long times. However, the theory assumes that the other particles have, after each dissociation event, the uniform, equilibrium distribution, and their survival probability \(\mathscr {S}_\mathrm{rad}(t|\mathrm{eq})\) decays exponentially at long times. As a result, the amplitude of the algebraic tail of the correlation function is very small in the theory of Kaizu et al. [46].

Still, the question remains how accurate the central assumption, Eq. 12, is. To elucidate the key assumption underlying Eq. 12, it is instructive to imagine a scenario in which, after a dissociation event, the other particles do have the uniform distribution; Eq. 12 is then obeyed. If the dissociated particle then rebinds and unbinds again, Eq. 12 is still satisfied. However, Eq. 12 breaks down when (a) the rebinding of the dissociated particle is pre-empted by the binding of another particle from the bulk, and (b) this second particle dissociates from the receptor before the first has equilibrated by diffusing into the bulk. The first particle then no longer obeys the uniform equilibrium distribution, and the survival probability of the particles other than the one that just dissociated is no longer given by \(\mathscr {S}_\mathrm{rad}(t|\mathrm{eq})\). However, at biologically relevant concentrations, the time a ligand molecule spends near the receptor is typically much shorter than the time for molecules to arrive from the bulk, which means that the probability of rebinding interference is very small, and condition (a) is rarely met. Because biologically relevant concentrations are low, the dissociation rates are typically low as well, which means that even when rebinding interference does happen (and condition (a) is met), condition (b) is usually still not met: during the long time the second particle is bound to the receptor, the previously bound particle has had ample time to diffuse and equilibrate in the bulk. The likelihood that both conditions are met, as is necessary for the analysis to break down, is thus very small [46].

Because rebindings are so much faster than bulk arrivals, they can be integrated out [46, 56, 84]. Exploiting that rebinding interference can be neglected, the probability that a particle that has just dissociated from the receptor will rebind the receptor rather than diffuse away into the bulk is \(p_\mathrm{reb}=1-S_\mathrm{rad}(\infty |\sigma ) = k_a / (k_a+k_D)\). The mean number of rounds of rebinding and dissociation before it diffuses into the bulk is then \(N_\mathrm{reb} = k_a/k_D\), which rescales the effective dissociation rate: \(k_\mathrm{off} = k_d / (N_\mathrm{reb} + 1)=k_d k_D / (k_a+k_D)\); in this model, the molecule thus rebinds the receptor before it escapes into the bulk as often as it would if it were the only ligand molecule in the system. Similarly, a molecule that arrives at the receptor from the bulk may either bind the receptor or, with probability \(p_\mathrm{esc} = 1-p_\mathrm{reb}\), escape back into the bulk; the mean number of rounds of escape and arrival before binding is \(N_\mathrm{esc} = 1/N_\mathrm{reb}\), which rescales the effective association rate: \(k_\mathrm{on} = k_D / (1+N_\mathrm{esc}) = k_a k_D / (k_a+k_D)\). These are indeed precisely the rates of Eqs. 14 and 15.
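
The renormalization of the rates can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `effective_rates` and the numerical values are hypothetical, with \(k_a\), \(k_d\) and \(k_D\) the intrinsic association rate, the intrinsic dissociation rate and the diffusion-limited arrival rate used above.

```python
def effective_rates(k_a, k_d, k_D):
    """Integrate out rebindings and escapes for a single receptor.

    k_a: intrinsic association rate, k_d: intrinsic dissociation rate,
    k_D: diffusion-limited arrival rate (all in consistent units).
    """
    p_reb = k_a / (k_a + k_D)      # probability that a dissociated ligand rebinds
    p_esc = 1.0 - p_reb            # probability that it escapes into the bulk
    n_reb = p_reb / p_esc          # mean number of rebinding rounds, equals k_a / k_D
    n_esc = p_esc / p_reb          # mean number of escape rounds, equals 1 / n_reb
    k_off = k_d / (1.0 + n_reb)    # effective dissociation rate
    k_on = k_D / (1.0 + n_esc)     # effective association rate
    return {"p_reb": p_reb, "N_reb": n_reb, "N_esc": n_esc,
            "k_on": k_on, "k_off": k_off}


# Illustrative values only (arbitrary units)
rates = effective_rates(k_a=5.0, k_d=2.0, k_D=1.0)
print(rates)
```

Note that the ratio \(k_\mathrm{on}/k_\mathrm{off}\) equals \(k_a/k_d\): integrating out the rebindings slows both effective rates by the same factor and leaves the equilibrium occupancy unchanged.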

This analysis also elucidates the role of rebinding in sensing. The probability of rebinding does not depend on the concentration, and rebindings therefore do not provide information on the concentration. They merely increase the receptor correlation time by increasing the effective receptor on-time from \(k_d^{-1}\) to \(k_\mathrm{off}^{-1} = k_d^{-1}(1+N_\mathrm{reb})\). After \((1+N_\mathrm{reb})\) rounds of dissociation and rebinding, the molecule escapes into the bulk, and then another molecule will arrive at the receptor with rate \(k_Dc\); this molecule may return to the bulk or bind the receptor, such that a new molecule will bind after a time \((k_\mathrm{on}c)^{-1}\) on average. Importantly, this molecule will bind in a memoryless fashion and with a rate that depends on the concentration. This binding event thus provides an independent concentration measurement. The mean waiting time in between independent binding events is therefore \(\tau _w = 1/k_\mathrm{off}+1/(k_\mathrm{on}c)\), which allows us to rewrite Eq. 16 in a form that we would expect intuitively:

\[ \frac{\delta c}{c} = \sqrt{\frac{2}{T/\tau _w}}. \tag{17} \]

Indeed, the sensing error \(\delta c / c\) decreases with one over the square root of the number of independent measurements \(T/\tau _w\) during the integration time T.
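
That the waiting time \(\tau _w\) counts the independent measurements can be verified numerically. The sketch below assumes the standard two-state (random telegraph) expressions \(\tau _c = 1/(k_\mathrm{on}c+k_\mathrm{off})\) and \(p = k_\mathrm{on}c\,\tau _c\), and it assumes that the sensing error takes the form \((\delta c/c)^2 = 2\tau _c/(p(1-p)T)\); the function names and parameter values are illustrative.

```python
def two_state_receptor(k_on, k_off, c):
    """Correlation time, occupancy and mean waiting time of a memoryless two-state receptor."""
    tau_c = 1.0 / (k_on * c + k_off)
    p = k_on * c * tau_c
    tau_w = 1.0 / k_off + 1.0 / (k_on * c)
    return tau_c, p, tau_w


def sensing_error_sq(k_on, k_off, c, T):
    """(delta c / c)^2 = 2 tau_c / (p (1 - p) T), the form assumed in the lead-in."""
    tau_c, p, _ = two_state_receptor(k_on, k_off, c)
    return 2.0 * tau_c / (p * (1.0 - p) * T)


# Illustrative values only (arbitrary units)
k_on, k_off, c, T = 0.8, 0.3, 2.0, 100.0
tau_c, p, tau_w = two_state_receptor(k_on, k_off, c)
err_sq = sensing_error_sq(k_on, k_off, c, T)
n_independent = T / tau_w          # number of independent binding events during T
print(err_sq, 2.0 / n_independent)
```

The two printed numbers agree because \(\tau _c/(p(1-p)) = 1/k_\mathrm{off} + 1/(k_\mathrm{on}c) = \tau _w\) for a two-state receptor.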

Lastly, why does the expression of Bialek and Setayeshgar, Eq. 9, miss the factor \(1-p\) in the diffusion term, in contrast to the expressions of Berg and Purcell, and Kaizu and coworkers? All studies start from Eq. 1, which assumes that the input-output relation p(c) is linear over the range of the fluctuations in \(n_T\). However, these analyses differ in how they arrive at the zero-frequency limit of the power spectrum or, equivalently, the receptor correlation time (see Eq. 2). The work of Berg and Purcell starts from the assumption that the receptor dynamics is a random telegraph process; this approach naturally takes into account that when a receptor is bound to a ligand molecule, it cannot bind new ligand molecules. It thus recognizes that the net rate of ligand binding depends on the diffusive transport of the ligand to the receptor and on the receptor occupancy. The theory of Kaizu and coworkers is based on a stochastic, particle-based description of the receptor dynamics, which also captures the binary character of the receptor naturally. Why the analysis of Bialek and Setayeshgar misses the factor \(1-p\) in the diffusion term is not entirely clear, but we believe it results from the linearization of the reaction-diffusion equations, which misses correlations between the state of the receptor and the local ligand concentration: if a molecule arrives at a ligand-bound receptor, it cannot bind to the receptor. In essence, their analysis is a small-noise approximation, which is valid when there are many receptors in close proximity, since then fluctuations in the occupancy will be small relative to the mean. But for a single receptor, with a single ligand-binding site, the binary character of the receptor state needs to be taken into account.

3 Can Cells Reach the Berg–Purcell Limit?

The work of Berg and Purcell and subsequent studies like those discussed above [12, 13, 45, 46, 64, 70, 71, 80, 85] assume not only a given integration time T, but also that the downstream signaling network averages the state of the receptor uniformly in time over this integration time T. It remained unclear, however, how the signaling network determines the (effective) integration time T, whether the network averages the signal uniformly in time, and how this assumption affects the sensing precision [36]. It thus remained open whether signaling networks can actually reach the Berg–Purcell limit.

To address these questions, the authors of Ref. [36] considered linear, but otherwise arbitrary, signaling networks. For deterministic networks of this type, the output \(X(T_\mathrm{o})\) at time \(T_\mathrm{o}\) can be written as

\[ X(T_\mathrm{o}) = \int _{-\infty }^{T_\mathrm{o}} \chi (T_\mathrm{o}-t^\prime )\, RL(t^\prime )\, dt^\prime , \tag{18} \]

where \(\chi (t-t^\prime )\) is the response function of the network and RL(t) is the stochastic receptor signal, i.e., the number of receptors that are bound to ligand. To compare to previous results, the authors assumed that at \(t=0\) the environment changes instantaneously and that the receptors and hence RL(t) immediately adjust, so that RL(t) is stationary for \(0<t<T_\mathrm{o}\), with fluctuations that decay exponentially with correlation time \(\tau _c\). Here, in contrast to the studies discussed in the previous section, the authors thus assumed a given receptor correlation time \(\tau _c\); they did not ask how this correlation time is set by the receptor-ligand cross section, the number of receptors, and the concentration and diffusion constant of the ligand [46]. Moreover, the authors assumed that either: (1) \(\chi (T_\mathrm{o}-t)=0\) for \(t<0\), which corresponds to a scenario where the response time \(\tau _r\) of the network is shorter than \(T_\mathrm{o}\), or, equivalently, the network reaches steady state by the time \(T_\mathrm{o}\); or (2) \(RL(t)=0\) for \(t<0\), which corresponds to a scenario in which the cell is initially in a basal state. In both cases, \(X(T_\mathrm{o}) = \int _{0}^{T_\mathrm{o}} \chi (T_\mathrm{o}-t^\prime ) RL(t^\prime ) dt^\prime \). When neither \(\chi (T_\mathrm{o}-t)\) nor RL(t) is zero for \(t<0\), previous states of the environment influence the state of the network at \(T_\mathrm{o}\), which can either be a source of noise, or a source of information if the environments are correlated.

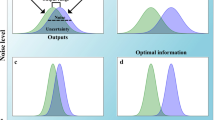

Fig. 2 Extracting information from noisy input signals with linear signaling networks. a, c, e, g The weighting functions corresponding to different signaling networks are not uniform. b, d, f, h The ability of a signaling network to measure ligand concentration depends on its weighting function. The typical error (variance) in the estimate of ligand concentration is plotted as a percentage increase over the error of an estimate based on uniform weighting, assumed in the Berg–Purcell limit (Eq. 1 with \(T=T_\mathrm{o}\)). a Reversible, one-level cascades selectively amplify late (\(t=T_\mathrm{o}\)) values of the signal, b leading to worse performance than the uniform average. c Irreversible, N-level cascades amplify early (\(t=0\)) values of the signal, d leading to worse performance than the uniform average. e The optimal weighting function, given in Ref. [36], averages the signal, selectively amplifying less correlated values. The delta functions are truncated for illustration. f The optimal weighting function outperforms the uniform average. g A signaling network consisting of two branches, which selectively amplify late (\(t=T_\mathrm{o}\)) (left branch) and early (\(t=0\)) (right branch) values of the signal, approximates the optimal weighting function (\(k_1 = 3.1\); \(k_2 = 10\); \(k_3 = 1\); \(k_4 = 0.35\); \(k_5 = 1\); \(k_6 \gg 1\); \(T_\mathrm{o}=2\)). h The network in g can outperform the uniform average (\(\tau _\mathrm{c}\) varies for fixed \(T_\mathrm{o}=2\)).

While in the studies discussed in the previous section the concentration is estimated from the average receptor occupancy, here the idea is that the cell infers the ligand concentration from the output \(X(T_\mathrm{o})\) by inverting the input–output relation \(\overline{X}(c)\). Using error propagation, the error in the estimate of the concentration is then given by

\[ \left( \frac{\delta c}{c}\right) ^2 = \frac{\sigma ^2_X}{\left( c\, d\overline{X}/dc\right) ^2}, \tag{19} \]

where \(\sigma ^2_X\) is the variance of the output.

The authors of Ref. [36] then studied different signaling architectures, shown in Fig. 2. Clearly, these networks do not, in general, average the receptor signal uniformly in time; instead, they have non-uniform weighting functions (Fig. 2a, c, e, g). They weigh receptor signals in the past with a response function that depends on both the lifetime of the signaling molecules and on the topology of the signaling network. One-layer networks consisting of a single reversible reaction give most weight to the most recent signal value (left-most column), while multi-level cascades consisting of irreversible reactions give more weight to signal values more in the distant past (second column). This concept can be generalized to arbitrarily large signaling networks. Multilevel reversible cascades have weighting functions that peak at some finite time in the past, balancing the down-weighting of the signal from the distant past due to the reverse reactions, with the down-weighting of the signal from the recent past resulting from the multilevel character of the network. Linear combinations of the weighting functions for reversible and irreversible cascades can be achieved with multiple cascades that are activated by the input in parallel and which independently activate the same effector molecule. Clearly, signaling networks allow for very diverse weighting functions.

This idea can be exploited to improve the accuracy of sensing, as shown in the right two columns of Fig. 2. A network with a feedforward topology that combines a fast reversible cascade with a slow irreversible cascade can not only reach the Berg–Purcell limit, but even beat it by about \(10 \%\), when the observation time \(T_\mathrm{o}\) is on the order of the receptor correlation time \(\tau _c\). The reason is that this network selectively amplifies the more recent signal values and those further back in the past. This is beneficial, because these signal values are less correlated. Interestingly, feedforward motifs are very common in cellular biochemical networks [2]. Canonical signal transduction pathways that employ these motifs are GPCR signaling [41] and MAPK signaling [20, 87].
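
The effect of the weighting function can be illustrated on a discretized signal. The sketch below is a stand-in, not the calculation of Ref. [36]: it assumes a unit-variance signal with exponentially decaying correlations sampled on a regular grid, and it replaces the analytical optimal weighting function by the standard minimum-variance weights \(w \propto C^{-1}\mathbf {1}\), with \(C\) the covariance matrix of the samples. All function names and parameter values are hypothetical, and a small dependency-free linear solver is included only to keep the example self-contained.

```python
import math


def exponential_covariance(times, tau_c):
    """Covariance matrix of a unit-variance signal with exponentially decaying correlations."""
    return [[math.exp(-abs(a - b) / tau_c) for b in times] for a in times]


def relative_variance(weights, cov):
    """Variance of the weighted average after normalizing the weights to sum to one."""
    total = sum(weights)
    w = [x / total for x in weights]
    n = len(w)
    return sum(w[i] * cov[i][j] * w[j] for i in range(n) for j in range(n))


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (inputs are not modified)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


tau_c = 1.0   # receptor correlation time (sets the unit of time)
T_o = 2.0     # observation time, comparable to tau_c
tau_r = 1.0   # output lifetime of the one-level reversible cascade (illustrative)
n = 101
times = [T_o * i / (n - 1) for i in range(n)]
cov = exponential_covariance(times, tau_c)

uniform = [1.0] * n                                          # uniform time average
reversible = [math.exp(-(T_o - t) / tau_r) for t in times]   # favors late signal values
optimal = solve_linear(cov, [1.0] * n)                       # proportional to C^{-1} 1

var_uniform = relative_variance(uniform, cov)
var_reversible = relative_variance(reversible, cov)
var_optimal = relative_variance(optimal, cov)
print(var_uniform, var_reversible, var_optimal)
```

With these illustrative parameters the exponential weighting of the reversible cascade gives a larger variance than the uniform average, while the minimum-variance weights give a smaller one. On this grid the minimum-variance weights are much larger at the first and last time points than in between, which mirrors the delta functions at the ends of the optimal weighting function in Fig. 2e.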

Our analysis also provides a clear perspective on the integration time. While in the previous section the downstream network integrates the input n(t) uniformly in time over a given integration time T, here the network integrates the input RL(t) via a non-uniform weighting function \(\chi (t-t^\prime )\). Clearly, \(T_\mathrm{o}\), the timescale on which the cell must respond, provides an upper bound on the integration time. Yet, the processing network weights the input signal by \(\chi (T_\mathrm{o}-t)\), which may become zero for \(t < T_\mathrm{o}\). In this case, the effective integration time \(T_\mathrm{eff}\) is limited by the range over which \(\chi (T_\mathrm{o}-t)\) is nonzero. For example, the weighting function of the one-level reversible cascade becomes zero on the time scale \(k_b^{-1}=\tau _r\), the lifetime of the output component, which sets the relaxation time \(\tau _r\) of the network. This can be (much) smaller than \(T_\mathrm{o}\), in which case \(T_\mathrm{eff}\) is limited by \(\tau _r\): \(T_\mathrm{eff}\sim \tau _r<T_\mathrm{o}\). As we will see in Sect. 5, degradation of the output erases memory of the input.

While the data processing inequality suggests that it is advantageous to limit the number of nodes in a signaling network to minimize the effect of intrinsic noise, this study shows that there can be a competing effect in favor of increasing the number of nodes: better removal of extrinsic noise. Additional nodes make it possible to sculpt the weighting function for averaging the incoming signal, allowing signaling networks to reach and even exceed the Berg–Purcell limit.

4 Fundamental Sensing Limit of Equilibrium Systems

Signaling networks are stochastic in nature, which means that while they may remove the extrinsic noise in the input signal, they will also add their own intrinsic noise to the transmitted signal. Most studies on the accuracy of sensing have ignored this intrinsic noise of the signaling network [3, 12, 13, 31, 36, 45, 46, 49, 64, 70, 71, 80, 85]. They essentially assume that the intrinsic noise can be made arbitrarily small and that the extrinsic noise in the receptor signal can be filtered with arbitrary precision by simply integrating the receptor signal for longer. However, the extrinsic and intrinsic noise are not generally independent: changing a parameter in the system tends to affect both sources of noise [76]. This raises the question whether the extrinsic and intrinsic noise can be lowered simultaneously, and if so, what resources would be required to achieve this.

To address these questions, the authors of [37] first studied equilibrium networks that are not driven out of thermodynamic equilibrium via the turnover of fuel. Inspired by one component signaling networks [81], they started with the simplest possible equilibrium network, where a free receptor \(\mathrm{R}\) can either bind a ligand molecule \(\mathrm{L}\) or a cytoplasmic readout molecule \(\mathrm{X}\) (but not both): \(\mathrm{R} + \mathrm{L} \rightleftharpoons \mathrm{RL}\), \(\mathrm{R} + \mathrm{X} \overset{k_\mathrm{f}}{\underset{k_\mathrm{r}}{\rightleftharpoons }} \mathrm{RX}\). The linearized deviation \(\delta x(t)=X(t)-\overline{X}\) of the copy number X(t) from its steady-state value \(\overline{X}\) is

\[ \delta x(t) = \int _{-\infty }^{t} \chi (t-t^\prime )\left[ \gamma \, \delta RL(t^\prime ) + \eta (t^\prime )\right] dt^\prime , \tag{20} \]

where \(\chi (t-t^\prime ) = e^{-(t-t^\prime ) / \tau _r}\) is the response function with \(\tau _r=1/(k_\mathrm{f} (\overline{X}+\overline{R})+k_\mathrm{r})\) the integration time, \(\gamma = k_\mathrm{f} \overline{X}\), RL(t) is the input signal, and \(\eta \) describes the intrinsic noise of the signaling network, set by the rate constants and copy numbers.
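
The expressions for \(\tau _r\) and \(\gamma \) follow from linearizing the mass-action kinetics of readout binding. As a sketch, assume that \(X\) denotes the number of free readout molecules and that receptors and readout molecules are conserved, \(R_T = R + RL + RX\) and \(X_T = X + RX\) (these conservation laws are assumptions made here for illustration). Then, neglecting the noise term,

\[
\begin{aligned}
\frac{dX}{dt} &= -k_\mathrm{f} X R + k_\mathrm{r} RX, \\
\delta RX &= -\delta x, \qquad \delta R = -\delta RL - \delta RX = -\delta RL + \delta x, \\
\frac{d\,\delta x}{dt} &= -k_\mathrm{f}\left( \overline{R}\,\delta x + \overline{X}\,\delta R\right) - k_\mathrm{r}\,\delta x
= -\left[ k_\mathrm{f}(\overline{X}+\overline{R}) + k_\mathrm{r}\right] \delta x + k_\mathrm{f}\overline{X}\,\delta RL .
\end{aligned}
\]

The decay rate of \(\delta x\) is \(1/\tau _r\) and the coefficient of \(\delta RL\) is \(\gamma \); integrating this linear equation yields the exponential response function \(\chi \).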

The sensing error for this system in steady state can be computed, as before, via Eq. 19. Here, the variance \(\sigma ^2_X= \langle \delta x^2 \rangle \), obtained from Eq. 20, can be decomposed into the sum of the extrinsic noise \(\sigma _{\mathrm{ex},x}^2 \equiv \gamma ^2 K_{\delta RL,\delta RL}\) and the intrinsic noise \(\sigma _{\mathrm{in},x}^2 \equiv \gamma K_{\delta RL,\eta }+ K_{\eta ,\eta }\), where \(K_{A,B} = \int _{-\infty }^{t} \int _{-\infty }^{t} e^{-(t-t_1')/\tau _r} C_{A,B}(t_1',t_2') e^{-(t-t_2')/\tau _r} dt_1' dt_2'\) with the correlation function \(C_{A,B}(t_1',t_2') = \langle A(t_1') B(t_2') \rangle \). This decomposition is not unique, but in this form the extrinsic noise term features a canonical temporal average of the input (receptor) fluctuations [61, 69, 76], which can be made arbitrarily small by increasing the effective integration time of the network. However, the authors of Ref. [37] found that, in a system with \(R_T\) receptors, whenever doing so would reduce the total sensing error below \(4/R_T\), the intrinsic noise inevitably rises. The network faces a fundamental trade-off between the removal of extrinsic and intrinsic noise: the two noise sources cannot be lowered simultaneously below a limit corresponding to a sensing error of \(4/R_T\).

Signaling networks are usually far more complicated than one consisting of a single readout species, and as discussed in the previous section, additional network layers can reduce the sensing error [36]. This raises the question whether a more complicated equilibrium network can overcome the limit set by the number of receptors. Searching over all possible network topologies to address this question is impossible. However, equilibrium systems are fundamentally bounded by the laws of equilibrium thermodynamics, regardless of their topology. Indeed, starting from the grand-canonical partition function, one can show that for any equilibrium network the gain \(d\overline{X}/d\mu \), with \(\mu =\mu ^0+kT \log (c)\) the chemical potential of the ligand, is given by the co-variance \(\sigma ^2_{X,RL}\) between X and RL, because RL (or, in general, the complex containing the ligand) is the species conjugate to the chemical potential. This means that these systems face a trade-off between gain (sensitivity) and noise: increasing the gain inevitably increases the noise. This has marked implications: using \(d\overline{X}/d\mu = \sigma ^2_{X,RL}\) and Eq. 19, we find that the sensing error based on the readout X is \(\left( \delta c / c\right) _X^2 = \sigma ^2_X / (\sigma ^2_{X,RL})^2\) , while if the receptors themselves are taken as the readout, the sensing error is \(\left( \delta c / c\right) _{RL}^2 = 1/\sigma ^2_{RL}\). From this it follows that

Here the first inequality on the right-hand side follows from the fact that \(|\sigma _{X,RL}^2|/\sqrt{\sigma _X^2 \sigma _{RL}^2}\) is a correlation coefficient, whose magnitude is at most 1. The second inequality follows from the observation that for any stochastic variable \(0<Y<a\), \(\sigma ^2_Y \le a^2 / 4\), meaning that \(\sigma ^2_{RL} < R_T^2 / 4\). Eq. 21 thus shows that in equilibrium systems a downstream signaling network can never improve the accuracy of sensing. The sensing precision is limited by the total number of receptors \(R_T\), regardless of how complicated the downstream network is, or how many protein copies are devoted to making it.
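
For the special case in which the receptors themselves serve as the readout and bind ligand independently, both ingredients of the argument can be checked directly. The sketch below assumes simple binding with occupancy \(p = c/(c+K_D)\) and binomial statistics, \(\sigma ^2_{RL} = R_T\,p(1-p)\); the dissociation constant \(K_D\), the function names, and the numbers are hypothetical and serve only as an illustration.

```python
import math


def occupancy(c, K_D):
    """Mean occupancy of a single binding site, p = c / (c + K_D)."""
    return c / (c + K_D)


def gain(c, K_D, R_T, h=1e-5):
    """d<RL>/d(mu) by central finite difference, with mu = mu0 + ln(c) in units of k_B T."""
    upper = R_T * occupancy(c * math.exp(h), K_D)
    lower = R_T * occupancy(c * math.exp(-h), K_D)
    return (upper - lower) / (2.0 * h)


def variance_RL(c, K_D, R_T):
    """Binomial variance of the number of bound receptors, R_T p (1 - p)."""
    p = occupancy(c, K_D)
    return R_T * p * (1.0 - p)


R_T, K_D = 100, 1.0   # illustrative values
results = []
for c in (0.1, 1.0, 10.0):
    g = gain(c, K_D, R_T)
    var = variance_RL(c, K_D, R_T)
    error_sq = var / g**2          # error propagation with the receptors as readout
    results.append((c, g, var, error_sq))
    print(c, g, var, error_sq, 4.0 / R_T)
```

In this example the gain equals the variance at every concentration, so the sensing error reduces to \(1/\sigma ^2_{RL}\). For independent receptors the variance is at most \(R_T/4\), reached at \(p=1/2\), so the error never drops below \(4/R_T\).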

What is the origin of the sensing limit in equilibrium sensing systems? Why do these systems face a fundamental trade-off between gain and noise, and between extrinsic and intrinsic noise? These systems transduce the signal by harvesting the energy of ligand binding: this energy is used to boot off the downstream signaling molecules from the receptor. However, detailed balance, by putting a constraint on the binding affinities of receptor-readout and receptor-ligand binding, then dictates that receptor-readout binding also influences receptor-ligand binding, thus perturbing the future signal. Indeed, the trade-offs faced by equilibrium networks are all different manifestations of their time-reversibility. The only way for a time-reversible system to “integrate” the past is for it to integrate and hence perturb the future. Concomitantly, in a time reversible system, there is no sense of “upstream” and “downstream”, concepts which rely on a direction of time [33]; RL is as much a readout of X, as the other way around. While in equilibrium systems the readout encodes the receptor state, the readout is not a stable memory that is decoupled from changes in the receptor state: a change in the state of the readout, induced by readout-receptor (un)binding, influences the future receptor state. This introduces cross-correlations between the intrinsic fluctuations in the activation of the readout, modeled by \(\eta (t)\) in Eq. 20, and the extrinsic fluctuations in the input RL(t): \(K_{RL,\eta }\ne 0\). It is these cross-correlations, which ultimately arise from time reversibility, that lead, in these equilibrium systems, to a fundamental tradeoff between the removal of extrinsic and intrinsic noise and between increasing the gain and suppressing the noise.

5 Sensing in Non-equilibrium Systems

To beat the sensing limit of equilibrium systems, energy and the receptor need to be employed differently. Rather than using the energy of ligand binding to change the state of the readout, the system should use fuel. This makes it possible to change the readout via chemical modification, with the receptor catalyzing the modification reaction: \(\mathrm{RL} + \mathrm{X} \rightarrow \mathrm{RL} + \mathrm{X}^*\). This decouples receptor-ligand binding from receptor-readout binding: the activation of the readout does not influence the future receptor signal, while, conversely, a change in the receptor state does not affect the stability of the readout. Each readout molecule that has interacted with the receptor provides a stable memory; collectively, the readout molecules encode the history of the receptor state. This enables the mechanism of time integration, in which the trade-off between noise and sensitivity is broken, and the extrinsic and intrinsic noise can be reduced simultaneously [37].

Catalysts cannot change the chemical equilibrium of two reactions that are the microscopic reverse of each other. To make the average state of the readout dependent on the average receptor occupancy, the activation reaction \(\mathrm{RL}+\mathrm{X} \rightarrow \mathrm{RL} + \mathrm{X}^*\) must therefore be coupled to a reaction that is not its microscopic reverse, and the system must be driven out of equilibrium. The simplest implementation is precisely the canonical signaling motif of a receptor driving a push-pull network. In such a network the receptor itself or the enzyme associated with it, like CheA in E. coli chemotaxis, catalyzes the activation of a readout protein X via chemical modification, i.e. the phosphorylation of the messenger protein CheY; active readout molecules \(\mathrm{X}^*\) can then decay spontaneously or be deactivated by an enzyme, like the phosphatase CheZ in E. coli, via a reaction that is not the microscopic reverse of the activation reaction. Typically, the activation via chemical modification is coupled to fuel turnover, while deactivation is not; in E. coli chemotaxis, for example, phosphorylation of CheY is fueled by ATP hydrolysis: \(\mathrm{CheA} + \mathrm{ATP} + \mathrm{CheY} \rightarrow \mathrm{CheA} + \mathrm{ADP} + \mathrm{CheY_p}\), while dephosphorylation is not: \(\mathrm{CheZ} + \mathrm{CheY_p} \rightarrow \mathrm{CheZ}+\mathrm{CheY}+\mathrm{Pi}\). Another classical example is MAPK signaling, where activation of MAPK is driven by ATP hydrolysis, while deactivation is not (even though it is typically catalyzed by a phosphatase). In all these systems, ATP hydrolysis is used to drive the readout molecule to a high energy state, the active phosphorylated state, which then relaxes back to the inactive dephosphorylated state via another pathway, setting up a cycle in state space leading to energy dissipation.

5.1 The Sensing Error

To derive the fundamental resources required for sensing, it is instructive to view the downstream system as a device that samples the state of the receptor discretely [38]. The activation reaction \(\mathrm{RL}+\mathrm{X}+\mathrm{ATP} \overset{k_\mathrm{f}}{\rightarrow }\mathrm{RL} + \mathrm{X}^*+\mathrm{ADP}\) (assumed to be fueled by ATP hydrolysis) generates samples of the ligand-binding state of the receptor by storing the receptor state in the stable modification states of the readout molecules. We expect that if there are N receptor-readout interactions, then the cell has N samples of the receptor state and the error in the concentration estimate, \(\delta c/c\), is reduced by a factor of \(\sqrt{N}\). However, to derive the effective number of samples, we have to consider not only the creation of samples, but also their decay and reliability. The decay reaction \(\mathrm{X}^* \overset{k_\mathrm{r}}{\rightarrow }\mathrm{X}\) is equivalent to discarding or erasing samples. The microscopically reverse reactions of these activation and deactivation reactions, namely the receptor-mediated deactivation \(\mathrm{X^*} + \mathrm{RL} +\mathrm{ADP} \overset{k_\mathrm{-f}}{\rightarrow }\mathrm{X} + \mathrm{RL} + \mathrm{ATP}\) and the spontaneous (or phosphatase catalyzed) activation \(\mathrm{X}\overset{k_\mathrm{-r}}{\rightarrow }\mathrm{X}^*\) independent of the receptor, generate incorrect samples of the receptor state. Energy is needed to break time-reversibility and to protect the coding.

How the receptor samples are generated, erased, and how they are stored in the readout, determine the number of samples, their independence, and their reliability, which together set the sensing precision [38]:

This expression is obtained from Eq. 20 with \(\sigma ^2_X\) computed via the linear-noise approximation [38, 76]. The quantity \(\bar{N}_I\), discussed below, is the average number of receptor samples that are independent out of a total of \(\bar{N}_\mathrm{eff}\) samples. The first term is the error on the concentration estimate that would be expected on the basis of \(\bar{N}_I\) perfect, independent samples of the receptor state that can be unambiguously identified (as in Eq. 3). A second correction term arises, however, because the cell cannot distinguish between those readout molecules that have collided with an unbound receptor since their last dephosphorylation event, and those that have not.
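
The first term can be illustrated with a small Monte Carlo experiment on perfect, independent samples. The sketch below assumes simple binding, \(p = c/(c+K_D)\), so that the concentration estimate follows from inverting the sampled occupancy, and it assumes that \(N\) independent samples give \((\delta c/c)^2 = 1/(p(1-p)N)\). The function name, the seed and the parameter values are hypothetical.

```python
import random


def estimate_error_sq(p, n_samples, n_trials, seed=0):
    """Monte Carlo estimate of (delta c / c)^2 from n_samples independent receptor samples."""
    rng = random.Random(seed)
    rel_estimates = []
    for _ in range(n_trials):
        # Each sample reports "bound" with probability p
        bound = sum(rng.random() < p for _ in range(n_samples))
        p_hat = bound / n_samples
        # Invert p = c / (c + K_D): c_hat / c = [p_hat / (1 - p_hat)] / [p / (1 - p)]
        # (p_hat = 1 is astronomically unlikely for the values used here)
        rel_estimates.append((p_hat / (1.0 - p_hat)) / (p / (1.0 - p)))
    mean = sum(rel_estimates) / n_trials
    return sum((x - mean) ** 2 for x in rel_estimates) / (n_trials - 1)


p, n_samples = 0.5, 400          # illustrative values
predicted = 1.0 / (p * (1.0 - p) * n_samples)
simulated = estimate_error_sq(p, n_samples, n_trials=4000)
print(predicted, simulated)
```

The simulated variance of the relative concentration estimate should lie close to the prediction; small deviations come from the finite number of trials and from the nonlinearity of the inversion.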

The number of independent measurements \(\bar{N}_I\) can be expressed in terms of collective variables that describe the resource limitations of the cell

This expression has a clear interpretation. The relaxation time \(\tau _r\) is the timescale on which receptor samples are created and decay. It thus sets the timescale of the memory and hence the effective integration time (see also Sect. 3). The quantity \(\dot{n}\) is the net flux of X across the cycle of activation by the receptor and deactivation. It equals the net rate at which X is modified by the receptor molecules that are bound to ligand. The ratio \(\dot{n}/p\) is thus the rate at which the receptor, bound or unbound, is sampled, and the quantity \(\dot{n} \tau _r/p\) is the total number of receptor samples taken during \(\tau _r\), \(\bar{N}\).

Not all of these samples are reliable. The effective number of samples taken during \(\tau _r\) is \(\bar{N}_\mathrm{eff} = q \bar{N}\), where \(0\le q\le 1\) measures the quality of each sample. Here, \(\Delta \mu _1\) and \(\Delta \mu _2\) are the average free-energy drops across the activation and deactivation pathway respectively, in units of \(k_ B T\); \(\Delta \mu =\Delta \mu _1+\Delta \mu _2\) is the total free-energy drop across the cycle, which is given by the free energy of the fuel turnover, such as that of ATP hydrolysis. When \(\Delta \mu =\Delta \mu _1=\Delta \mu _2=0\), an active read-out molecule is as likely to be created by the ligand-bound receptor as it is created spontaneously and there is no coding and no sensing; indeed, in this limit, \(q=0\) and \(\bar{N}_\mathrm{eff}=0\). In contrast, when \(\Delta \mu _1, \Delta \mu _2 \rightarrow \infty \), \(q \rightarrow 1\) and \(\bar{N}_\mathrm{eff} \rightarrow \bar{N}\).

The factor \(f_I\) denotes the fraction of samples that are independent. It depends on the correlation time \(\tau _c\) of receptor-ligand binding and on the time interval \(\Delta = 2 \tau _r/(\bar{N}_\mathrm{eff}/R_{T})\) between samples of the same receptor. Samples farther apart are more independent.

5.2 Fundamental Resources and Trade-Offs

Eqs. 22 and 23 can be used to find the resources that fundamentally limit sensing. A fundamental resource or combination of resources is a (collective) variable that when fixed, puts a lower bound on the sensing error, no matter how the other variables are varied. It can be found via constraint-based optimization, yielding [38]:

This expression identifies three fundamental resource classes, each yielding a fundamental sensing limit: \(R_T(1+\tau _r /\tau _c)\), which for the relevant regime of time integration \(\tau _r>\tau _c\) is \(R_T \tau _r / \tau _c\), \(X_T\), and \(\dot{w} \tau _r\). These classes cannot compensate each other in achieving a desired sensing precision, and hence do not trade off against each other. The sensing precision is, like the weakest link in a chain, bounded by the limiting resource, as illustrated in Fig. 3a–c and Fig. 4. However, within each class, trade-offs are possible. We now briefly discuss the fundamental resource classes and their associated sensing limits.

Fig. 3 Trade-offs in non-equilibrium sensing. a When two resources A and B compensate each other, one resource can always be decreased without affecting the sensing error, by increasing the other resource; concomitantly, increasing a resource will always reduce the sensing error. When both resources are instead fundamental, the sensing error is bounded by the limiting resource and cannot be reduced by increasing the other. b, c The three classes (time/receptor copies, copies of downstream molecules, and energy) are all required for sensing, with no trade-offs among them. The minimum sensing error obtained by minimizing Eq. 22 is plotted for different combinations of (b) \(X_T\) and w, and (c) \(R_T(1+\tau _r/\tau _c)\) and w. The curves track the bound for the limiting resource indicated by the grey lines, showing that the resources do not compensate each other. The plot for the minimum sensing error as a function of \(R_T(1+\tau _r/\tau _c)\) and \(X_T\) is identical to that of (c) with w replaced by \(X_T\). d The energy requirements for sensing. In the irreversible regime (\(\Delta \mu \rightarrow \infty \)), the work to take one sample of a ligand-bound receptor, \(w/(p\bar{N}_\mathrm{eff})\), equals \(\Delta \mu \), because each sample requires the turnover of one fuel molecule, consuming \(\Delta \mu \) of energy. In the quasi-equilibrium regime (\(\Delta \mu \rightarrow 0\)), each effective sample of the bound receptor requires \(4\,k_\mathrm{B}T\), which defines the fundamental lower bound on the energy requirement for taking a sample. When \(\Delta \mu = 0\), the network is in equilibrium and both w and \(\bar{N}\) are 0. ATP hydrolysis provides \(20\,k_\mathrm{B}T\), showing that phosphorylation of read-out molecules makes it possible to store the receptor state reliably. The results are obtained from Eq. 23 with \(\Delta \mu _1 = \Delta \mu _2 = \Delta \mu /2\). e Sampling more than once per correlation time requires more resources, while the benefit is marginal. As the sampling rate is increased by increasing the readout copy number \(X_T\), the number of independent measurements \(\bar{N}_I\) saturates at the Berg–Purcell limit \(R_T \tau _r/\tau _c\), but the energy and protein cost (\(\propto X_T\)) continue to rise.

Receptors and their integration time, \(R_T \tau _r / \tau _c\). The number of receptor samples increases with the number of readout molecules, \(X_T\). In fact, as \(X_T \rightarrow \infty \), the spacing between the samples \(\Delta \rightarrow 0\) and the effective number of receptor samples \(\overline{N}_\mathrm{eff} \rightarrow \infty \); this is indeed the Berg–Purcell mechanism of time integration. However, each receptor can take an independent concentration measurement only every \(2\tau _c\), meaning that the number of independent measurements taken during the integration time \(\tau _r\) is, per receptor, \(\tau _r / \tau _c\) (the disappearance of the factor two is due to the fact that the deactivation of X increases the effective spacing between the samples, see [38]). Assuming that the receptors bind independently (but see Sect. 6.2), the total number of independent concentration measurements, \(\overline{N}_I\), taken during \(\tau _r\), is then limited by \(R_T \tau _r / \tau _c\), no matter how large \(X_T\) is (Fig. 3e). This yields the sensing limit of Berg and Purcell, \(\left( \delta c / c\right) ^2 \ge 4 / (R_T \tau _r / \tau _c)\), recognizing that the receptors are assumed to bind independently, and \(p(1-p)\le 0.25\) (cf. Eq. 3). While the product \(R_T \tau _r /\tau _c\) is fundamental, \(R_T\) and \(\tau _r\) are not: the error is determined by the total number of independent concentration measurements, and it does not matter whether these measurements are performed by many receptors over a short integration time or by one receptor over a long integration time.

The number of readout molecules, \(X_T\). Each concentration measurement needs to be stored in the chemical modification state of a readout molecule, and \(X_T\) limits the maximum number of measurements that can be stored. Consequently, no matter how many receptors the cell has, or how much time it uses to integrate the receptor state, the sensing error is fundamentally limited by the pool of readout molecules, \(\left( \delta c / c\right) ^2 \ge 4 /X_T\).

Energy, \(\dot{w}\tau _r\), during the integration time. The power, the rate at which the fuel molecules do work, is \(\dot{w}=\dot{n}\Delta \mu \), and the total work performed during the integration time is \(w\equiv \dot{w} \tau _r\). This work is spent on taking samples of receptor molecules that are bound to ligand, because only they can modify X. The total number of effective samples of ligand-bound receptors obtained during \(\tau _r\) is \(p\overline{N}_\mathrm{eff}\). Hence, the work needed to take one effective sample of a ligand-bound receptor is \(w/(p\overline{N}_\mathrm{eff})= \Delta \mu / q\) (see Eq. 23). Figure 3d shows this quantity as a function of \(\Delta \mu \). In the limit that \(\Delta \mu \gg 4 k_BT\), \(w /(p\overline{N}_\mathrm{eff})=\Delta \mu \), because the quality factor \(q\rightarrow 1\); in this regime, each receptor state is reliably encoded in the chemical modification state of the readout, and increasing \(\Delta \mu \) further only increases the sampling cost with no reward in accuracy. In the opposite regime, \(\Delta \mu < 4 k_BT\), however, the quality of the samples, q, rapidly decreases with decreasing \(\Delta \mu \). In this regime, the system must take multiple noisy receptor samples to obtain the same information as one perfect sample. In the limit \(\Delta \mu \rightarrow 0\), the quality factor \(q \rightarrow \Delta \mu / (4 k_BT)\) and the work to take one effective sample of a ligand-bound receptor approaches its minimal value of \(w/(p\overline{N}_\mathrm{eff})=\Delta \mu / q = 4 k_BT\). Substituting this in Eq. 22 yields another bound on the sensing error: \(\left( \delta c/c \right) ^2 \ge 4/(\dot{w}\tau _r)\), with the work expressed in units of \(k_BT\). The bound can be reached when \(R_T \tau _r / \tau _c\) and \(X_T\) are not limiting, and \(\Delta \mu \rightarrow 0\).
This bound shows that while the total work \(w=\dot{w}\tau _r\) done during the integration time \(\tau _r\) is fundamental, the power \(\dot{w}\) and \(\tau _r\) are not, leading to a trade-off between accuracy, speed and power, as found in adaptation [47].
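The dependence of the cost per sample on \(\Delta \mu \) can be illustrated with a minimal numerical sketch. Eq. 23 is not reproduced in this section, so the sketch assumes the form \(q=\tanh (\Delta \mu /4)\) (with \(\Delta \mu \) in units of \(k_BT\)), chosen only because it reproduces both limits quoted above, \(q\rightarrow \Delta \mu /4\) and \(q\rightarrow 1\), for \(\Delta \mu _1 = \Delta \mu _2 = \Delta \mu /2\). The function names are illustrative.

```python
import math


def quality_factor(delta_mu):
    """Sample quality q for a total free-energy drop delta_mu (in k_B T).

    ASSUMPTION: q = tanh(delta_mu / 4). This form is not taken from the
    text; it is used because it matches the two quoted limits
    (q -> delta_mu / 4 for small delta_mu, q -> 1 for large delta_mu).
    """
    return math.tanh(delta_mu / 4.0)


def work_per_sample(delta_mu):
    """Work per effective sample, w / (p N_eff) = delta_mu / q, in k_B T."""
    if delta_mu <= 0:
        raise ValueError("delta_mu must be positive (no sampling at equilibrium)")
    return delta_mu / quality_factor(delta_mu)


# Cost per effective sample from the quasi-equilibrium to the irreversible regime.
for dmu in (0.01, 1.0, 4.0, 20.0, 40.0):
    print(f"delta_mu = {dmu:6.2f} k_BT -> work per sample = {work_per_sample(dmu):7.3f} k_BT")
```

Under this assumed form, the cost per effective sample never drops below \(4 k_BT\) and approaches \(\Delta \mu \) once \(\Delta \mu \) is well above \(4 k_BT\), in line with the two regimes described in the text.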

5.3 Design Principle of Optimal Resource Allocation

The observation that resources cannot compensate each other naturally yields the design principle of optimal resource allocation, which states that in an optimally designed system each resource is equally limiting, so that no resource is in excess and thus wasted. Quantitatively, Eq. 24 predicts that in an optimally designed system

\[R_T \tau _r / \tau _c \approx X_T \approx \dot{w}\tau _r / (k_BT). \qquad (25)\]

In an optimal sensing system, the number of independent concentration measurements \(R_T\tau _r / \tau _c\) equals the number of readout molecules \(X_T\) that store these measurements and equals the work (in units of \(k_BT\)) to create the samples. Interestingly, the authors of Ref. [38] found that the chemotaxis system of E. coli obeys the principle of optimal resource allocation, Eq. 25. This indicates that there is a selective pressure on the optimal allocation of resources in cellular sensing.
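As a rough illustration of how the three bounds of Sect. 5.2 combine, the following sketch evaluates each bound, reports which resource is limiting, and computes the allocation at which all three are equally limiting. It assumes that the overall error is set by the largest of the three individual bounds, as the text states for fundamental resources; the function names and the example numbers are hypothetical.

```python
def sensing_bounds(R_T, tau_r, tau_c, X_T, work_kT):
    """Lower bounds on (delta c / c)^2 set by each resource class.

    work_kT is the total work done during the integration time,
    expressed in units of k_B T.
    """
    n_independent = R_T * tau_r / tau_c  # independent concentration measurements
    return {
        "receptors_time": 4.0 / n_independent,
        "readouts": 4.0 / X_T,
        "energy": 4.0 / work_kT,
    }


def limiting_resource(bounds):
    """Return (name, bound) of the resource with the largest lower bound."""
    name = max(bounds, key=bounds.get)
    return name, bounds[name]


def balanced_allocation(R_T, tau_r, tau_c):
    """Readout copies and work (in k_B T) that make all three bounds equal."""
    n_independent = R_T * tau_r / tau_c
    return {"X_T": n_independent, "work_kT": n_independent}


# Hypothetical example: plenty of receptors and energy, too few readout molecules.
bounds = sensing_bounds(R_T=1000, tau_r=2.0, tau_c=0.5, X_T=1000, work_kT=8000)
print(bounds)
print(limiting_resource(bounds))
print(balanced_allocation(R_T=1000, tau_r=2.0, tau_c=0.5))
```

In this example the readout pool sets the error; adding receptors or energy would not help, whereas raising \(X_T\) to the balanced value would.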

6 Discussion

6.1 Different Sensing Strategies Encode and Decode Ligand Information Differently

Cells use different sensing strategies, which differ in how they process information about the ligand concentration. The data processing inequality [21] guarantees for any network that no readout X can have more information about the ligand concentration encoded in its time trace than the ligand-bound receptor RL has in its time trace [37]: \(I( X_{[0,T]}(t); \mu _L) \le I( RL_{[0,T]}(t); \mu _L)\), where I is the mutual information between the arguments, \(\mu _L\) is the chemical potential of the ligand, and \(y_{[0,T]}(t)\) indicates the time trace of \(y=X,RL\) from time 0 to time T. The accuracy of sensing for any network is thus bounded by the amount of information that is in the time trace of the receptor state. However, the different sensing strategies differ in how they encode the ligand concentration in the receptor dynamics and in how they decode the information that is in the receptor time trace.

For equilibrium networks, the data processing inequality guarantees that no readout has more information about the ligand than the receptors at any given time [37]: \(I(X(T); \mu _L) \le I(RL(T); \mu _L) \le \log _2 (R_\mathrm{T} + 1)\), and therefore the information in the instantaneous level of the readout is bounded by the total number of receptors \(R_T\). This statement is the information-theoretic analogue of Eq. 21. The history of receptor states does contain more information about the ligand concentration than the instantaneous receptor state, but an equilibrium signaling network cannot exploit this: its output contains no more information than the instantaneous receptor state.

Cells that use the mechanism of time integration can exploit the information that is in the time trace of the receptor, and for these networks \(I(X(T);\mu _L)\) can be larger than \(I(RL(T);\mu _L)\). These cells estimate the ligand concentration from the average receptor occupancy over an integration time, which, as we have seen in Sect. 3, is determined by the architecture of the readout system and the lifetime of the readout molecules. It is quite clear that cells employ this mechanism of time integration: the central motif of cell signaling in both prokaryotes and eukaryotes, the push-pull network, implements time averaging by storing the receptor state into stable chemical modification states of the readout molecules, which, collectively, encode the average receptor occupancy over the past integration time.

Another sensing strategy is maximum likelihood estimation [31, 49, 54]. It estimates the ligand concentration not from the average receptor occupancy over the integration time T, as in the mechanism of time integration, but rather from the mean duration of the unbound state of the receptor \(\tau _u\): \(\hat{c}_\mathrm{MLE}=1/(\tau _u k_\mathrm{on})\). The sensing error of this strategy for a single receptor is \(\left( \delta c / c \right) ^2_\mathrm{MLE} = 1 / (k_\mathrm{on} c (1-p) T)\) [31], which is half that of the mechanism of time integration, see Eq. 17. The reason why this sensing strategy is more accurate is that only the binding rate depends on the concentration, not the unbinding rate. Hence, only the unbound interval provides information on the concentration. In contrast, the mechanism of time integration infers the concentration from the mean receptor occupancy, which depends on both the unbound interval and the uninformative bound interval.
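The two estimators can be compared on simulated data with a minimal sketch. It assumes a single two-state receptor with exponentially distributed unbound and bound intervals, simulates a fixed number of binding cycles rather than a fixed time T, and inverts \(p = k_\mathrm{on}c/(k_\mathrm{on}c+k_\mathrm{off})\) for the occupancy-based estimate; the function names and parameter values are illustrative.

```python
import random


def simulate_receptor(c, k_on, k_off, n_cycles, seed=0):
    """Draw unbound and bound interval durations for n_cycles binding cycles."""
    rng = random.Random(seed)
    unbound = [rng.expovariate(k_on * c) for _ in range(n_cycles)]  # depends on c
    bound = [rng.expovariate(k_off) for _ in range(n_cycles)]       # independent of c
    return unbound, bound


def estimate_mle(unbound, k_on):
    """Maximum-likelihood estimate c = 1 / (tau_u * k_on) from unbound intervals only."""
    tau_u = sum(unbound) / len(unbound)
    return 1.0 / (tau_u * k_on)


def estimate_occupancy(unbound, bound, k_on, k_off):
    """Estimate c from the time-averaged occupancy p = k_on c / (k_on c + k_off)."""
    t_unbound, t_bound = sum(unbound), sum(bound)
    p = t_bound / (t_unbound + t_bound)
    return p * k_off / ((1.0 - p) * k_on)


unbound, bound = simulate_receptor(c=1.0, k_on=2.0, k_off=1.0, n_cycles=50000)
print("MLE estimate:      ", estimate_mle(unbound, k_on=2.0))
print("occupancy estimate:", estimate_occupancy(unbound, bound, k_on=2.0, k_off=1.0))
```

Repeating the simulation over many seeds and comparing the spread of the two estimates is one way to see that the occupancy-based estimate carries additional noise from the bound intervals, which the maximum-likelihood estimate never uses.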

How cells could actually implement the strategy of maximum-likelihood estimation remains an open question. One possibility is that receptors are internalized upon ligand binding, another that they bind ligand only briefly and signal only transiently, which could be achieved via receptor adaptation or desensitization following ligand binding [31]. Another intriguing possibility has recently been suggested by Lang et al. [49]. It is inspired by the observation that many receptors, such as receptor-tyrosine kinases and G-protein coupled receptors, are chemically modified via fuel turnover [49]. In this scheme, the cell estimates the ligand concentration from the average receptor occupancy over an integration time T, as in the canonical mechanism of time integration. However, upon ligand binding, the receptor is driven via fuel turnover through a non-equilibrium cycle of m chemical modification steps, before it can release and bind new ligand again. In the limit that the energy drop over the cycle \(\Delta \mu \rightarrow \infty \) and \(m\rightarrow \infty \), the sensing accuracy approaches the maximum-likelihood-estimation limit, even though the concentration is inferred from the average receptor occupancy. The reason is that in this limit the interval distribution of the active receptor state becomes a delta function instead of an exponential one as in the case of canonical time integration. This eliminates the noise from the uninformative bound interval in estimating the average receptor occupancy.
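Why a cycle of many driven modification steps sharpens the interval distribution can be seen in a small sketch. It assumes that each of the m steps is irreversible (the \(\Delta \mu \rightarrow \infty \) limit) and that all steps have the same rate, so that the duration of the active state is a sum of m exponential waiting times with a fixed mean; this is a simplification for illustration and not the full model of Ref. [49].

```python
import random
import statistics


def active_interval(m, mean_duration, rng):
    """Duration of the active state: m irreversible steps of equal rate.

    ASSUMPTION: each step is exponential with rate m / mean_duration,
    so the mean total duration does not depend on m.
    """
    rate = m / mean_duration
    return sum(rng.expovariate(rate) for _ in range(m))


def coefficient_of_variation(m, mean_duration=1.0, n_samples=20000, seed=1):
    """Relative width (std / mean) of the active-interval distribution."""
    rng = random.Random(seed)
    samples = [active_interval(m, mean_duration, rng) for _ in range(n_samples)]
    return statistics.pstdev(samples) / statistics.fmean(samples)


# The relative width should shrink roughly as 1 / sqrt(m).
for m in (1, 4, 25):
    print(f"m = {m:3d}: CV = {coefficient_of_variation(m):.3f}, 1/sqrt(m) = {m ** -0.5:.3f}")
```

For m = 1 the interval is exponential, as in canonical time integration; as m grows the distribution narrows towards a delta function, which is the limit in which the noise from the bound interval disappears.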

6.2 The Importance of Spatio-temporal Correlations

Ultimately, the precision of sensing via a mechanism that relies on integrating the receptor state, be it the canonical Berg–Purcell scheme with Markovian active receptor states or the maximum-likelihood scheme of Lang et al. with non-Markovian active states [49], is determined by the number of receptors, the receptor correlation time, and how the readout molecules sample the receptor molecules. The analysis of Ref. [38] ignores any spatio-temporal correlations of both the ligand molecules and the readout molecules. In this analysis, the different receptor molecules bind the ligand molecules independently, and the correlation time of the receptor cluster is that of a single receptor molecule \(\tau _c\). The total number of independent concentration measurements in the integration time T is then the number of receptors \(R_T\) times the number of independent measurements per receptor, \(T / \tau _c\), yielding the fundamental limit \(\left( \delta c/ c\right) ^2 \ge 2 \tau _c/ (p(1-p)R_T T)\). Importantly, because \(\tau _c\) is independent of the number of receptors, the sensing error decreases with the number of receptors. However, diffusion introduces spatio-temporal correlations between the different ligand-receptor binding events [11–13, 85]. Consequently, the correlation time \(\tau _N\) of \(R_T\) receptors on a spherical cell of radius \(\mathcal{R}\) is not that of a single receptor molecule, but is rather given by [11]

As pointed out by Wang et al. [85], the correlation time \(\tau _N\) increases with the number of receptors \(R_T\) (and even diverges for \(R_T\rightarrow \infty \)), which means that when \(R_T\) is large and/or the integration time T is short, the mechanism of time integration breaks down. In this regime the equilibrium sensing strategy is superior, because it relies on sensing the instantaneous receptor state [85]. Using receptors that bind ligand non-cooperatively as the readout, \(\left( \delta c / c\right) ^2_{RL} = 1/\sigma ^2_{RL}=1/(p(1-p) R_T)\), which indeed decreases with \(R_T\) [37, 85].

When the integration time T is longer than \(\tau _N\), the sensing error is given by [11]

For large \(R_T\) (but not so large that \(\tau _N > T\)), the sensing error reduces to

This, apart from the factor \(1-p\), is the classical result of Berg and Purcell [12, 13]. At sufficiently large \(R_T\), the sensing error is limited by diffusion, the size of the cell and the integration time. It becomes independent of \(R_T\), because the decrease of the instantaneous error with \(R_T\), \(1/(R_T p(1-p))\), is cancelled by the increase of the correlation time with \(R_T\). Another interpretation of the observation that the sensing error becomes independent of \(R_T\) and p in the large \(R_T\) limit is the following: receptor binding, given that ligand is at contact, is independent, and hence its contribution to the sensing error (second term) decreases with \(R_T\), going to zero as \(R_T\rightarrow \infty \). The contribution from diffusion (first term) becomes, in this limit, independent of \(R_T\) and p, because there are always enough free receptors available for binding each new ligand molecule that arrives at the cell surface; indeed, by replacing \(\mathcal{R}\) by \(\sigma \), Eq. 30 is identical to Eq. 5 for \(p\rightarrow 0\): the sensing error of a cell with many receptors (some of which are bound) is identical to that of a single receptor of the same size that is always free, and binds and unbinds ligand with intrinsic rates \(k_a, k_d \rightarrow \infty \).

Spatio-temporal correlations can become important not only in the encoding of the ligand concentration in the receptor dynamics, but also in the decoding of this information by the readout system. Receptor and readout molecules are often spatially partitioned, due, for example, to the underlying cytoskeletal network or lipid rafts. Even in a system that is spatially homogeneous on average, spatio-temporal partitioning would occur, because of the finite speed of diffusion. We have recently shown that this partitioning decreases the propagation of noise, essentially because the activation of the different readout molecules becomes less correlated [55]. Whether there exists an optimal diffusion constant of the readout molecules that matches the correlation length and time of the receptors, which are set by the ligand diffusion and binding dynamics, is an intriguing question for future work.

6.3 The Dimensionality of the System