Abstract

Depictions of sadness are commonplace, and here we aimed to discover and catalogue the complex and nuanced ways that people interpret sad facial expressions. We used a rigorous qualitative methodology to build a thematic framework from 3,243 open-ended responses from 41 people who participated in 2020 and described what they thought sad expressors in 80 images were thinking, feeling, and/or intending to do. Face images were sourced from a novel set of naturalistic expressions (ANU Real Facial Expression Database), as well as a traditional posed expression database (Radboud Faces Database). The resultant framework revealed clear themes around the expressors’ thoughts (e.g., acceptance, contemplation, disbelief), social needs (e.g., social support or withdrawal), social behaviours/intentions (e.g., mock or manipulate), and the precipitating events (e.g., social or romantic conflict). Expressions that were perceived as genuine were more frequently described as thinking deeply, reflecting, or feeling regretful, whereas those perceived as posed were more frequently described as exaggerated, overamplified, or dramatised. Overall, findings highlight that facial expressions — even with high levels of consensus about the emotion category they belong to — are interpreted in nuanced and complex ways that emphasise their role as other-oriented social tools, and convey semantically related emotion categories that share smooth gradients with one another. Our novel thematic framework also provides an important foundation for future work aimed at understanding variation in the social functions of sadness, including exploring potential differences in interpretations across cultural settings.

Introduction

Facial expressions are ubiquitous and communicate nuanced information about others’ internal states and outward intentions (Wang, 2009). Originally, the Basic Emotions Theory (BET) proposed that people universally experience and express a small number of discrete, basic emotions (Ekman & Friesen, 1969). The prototypical forms of each basic emotion were deemed to have one universal meaning (i.e., one meaning for sad, one for happy, etc.). However, contrary to BET’s original claims, facial expressions that convey the same emotion category can vary widely in physical form (e.g., activation of different facial muscles) and people appear to attribute different meanings to these different forms (Adams & Kleck, 2005; Balsters et al., 2013; Niedenthal et al., 2010). The basic emotion of happiness is a good example, in that smiles vary in physical form (Duchenne, 1990; Ekman, 1985), and at least three of these different forms have been linked to distinctive meanings (e.g., genuine positive feelings, affiliative intent, or dominance; Niedenthal et al., 2010).

Over time, BET was revised to account for these variations in physical form and attributed meaning, including the softening of discrete basic emotions to ‘emotion families’ (Ekman, 1992), and, more recently, the view that facial expressions sit within a continuous space of blended and semantically related emotions (Cowen et al., 2019; Cowen & Keltner, 2017, 2020). Additionally, a range of theoretical perspectives suggest that facial expressions and their meaning are socially or culturally constructed, leading to variations in their interpretation (Crivelli et al., 2016; Crivelli & Gendron, 2017; Jack et al., 2012, 2014; Lindquist et al., 2006). These newer conceptualisations allow for differences in the meanings attributed to each specific facial expression and align more closely with another influential theoretical perspective, the Behavioural Ecology View (BECV). BECV proposes that facial expressions are social tools, rather than direct readouts of emotion, and that people react to facial expressions as a function of the social contexts in which they occur (Crivelli & Fridlund, 2019).

Despite this theoretical acknowledgement that people attribute varied meanings to each category of facial expression, there has been less emphasis on exploring how interpretations vary for expressions other than smiles (but cf. Kret, 2015; Zickfeld et al., 2020). Here, we focus on sad facial expressions as they, like smiles, have a central role in social cohesion and attachment, vary in physical form, and are likely to communicate multiple distinct meanings (Adams & Kleck, 2005; Namba et al., 2017; Reed et al., 2015; Zickfeld et al., 2020). We used a novel set of naturalistic expressions, which depicted the varied repertoire of expressive behaviour encountered in real life, plus some traditional posed expression stimuli, both of which had high levels of labelling agreement. Thus, throughout this study, we refer to sad expressions as a group of facial expressions that vary in their physical appearance but are typically perceived as communicating sadness. Our investigation used a rigorous qualitative approach that captured the meanings participants freely attributed to these sad expressions.

Theoretical Potential for Varying Meanings

BET has been highly influential in facial expression research since the 1960s (e.g., Ekman & Friesen, 1969), leading many researchers to focus on a small number of basic emotions that were assumed to be universally displayed and understood. A foundational principle of BET is that when someone experiences a basic emotion, it spontaneously erupts on their face in the form of a prototypical facial expression (Ekman & Friesen, 1975). Any instances where the facial display differed from the prototypical expression were explained either by “display rules” causing people to regulate their facial display to meet social expectations (e.g., smiling to hide sadness at work) or by the concept of blends, whereby different emotions experienced at one time produce blended or compound facial expressions (Ekman, 1970, 1971). However, these concepts have been criticised as post-hoc explanations that enable BET to accommodate any evidence that contradicts its predictions (Crivelli & Fridlund, 2019).

The initial emphasis on basic emotions and expressions meant there was little impetus to explore whether expressions within an emotion category varied in their meanings; instead, interest focused on the differences between the basic emotion categories. Ekman and colleagues (2002) distinguished between these basic expressions by recording the visible facial activations, coded as Action Units (AUs), required to produce a high level of agreement about the display of each basic emotion on the face. For example, the prototypical sad expression was proposed to involve activation of the inner brow raiser (AU1), the brow lowerer (AU4), the lip corner depressor (AU15), and sometimes the chin raiser (AU17; Ekman et al., 2002), and this combination would not be evident in other basic emotions. Over time, BET softened the concept of basic emotions and expressions to represent prototypical exemplars of broader emotion families, rather than distinct prototypes (Ekman, 1992). These emotion families reflect a group of different, yet related, emotion states that share common characteristics, such as commonalities in expression, physiological activity, eliciting events, and appraisal processes (Ekman & Friesen, 1975). The core idea is that all expressions within an emotion family share some core physical features (e.g., all expressions within the ‘happy’ family involve smiling) but also show subtle variations in form that reflect the conditions under which they were produced, such as the eliciting event and social context, and whether the expression was spontaneously elicited or deliberately posed. Therefore, within each emotion family, the different physical forms are likely to signal a range of meanings centred around a unifying theme.

Current perspectives in BET suggest that emotions and expressions do not reflect discrete emotion categories but instead have smooth gradients that correspond to continuous variations in meaning (Cowen & Keltner, 2020). In this “semantic space approach” (Cowen et al., 2019; Cowen & Keltner, 2017, 2021), people rely on thousands of words to describe specific expressive behaviour (e.g., facial expressions) that results from emotional experiences, and these descriptions reflect the “semantic space” of that expressive behaviour. These spaces vary in the distinct types of emotion represented, their mapping onto different dimensions (e.g., valence, arousal, dominance), and the distribution of emotions within the space (i.e., their proximity to one another; Cowen et al., 2019; Cowen & Keltner, 2017, 2021). This approach captures the relationships, patterns, and gradients between emotions, rather than representing them as distinct entities (Cowen et al., 2019; Cowen & Keltner, 2017, 2021). According to this perspective, the emotional meanings conveyed by facial expressions are unique, highly varied, and depend on their relative position along the continuous gradient between categories (Cowen et al., 2019; Cowen & Keltner, 2017, 2021).

Similarly, BECV has argued from its inception (Fridlund, 1991) that all facial expressions serve different social functions, as their purpose is to signal nuanced information about expressors’ social behaviour across widely varying contexts (Crivelli & Fridlund, 2018, 2019). Unlike BET, which held that expressions primarily communicate expressors’ emotions, BECV proposes that expressions instead function to guide observers’ behaviour in social interactions (Crivelli & Fridlund, 2018). BECV also implies that, because social interactions vary widely across contexts and people, both the physical production of expressions and what observers understand from them are likely to vary (Crivelli & Fridlund, 2018, 2019).

Yet, despite the current perspectives in BET and the arguments of BECV, most research has retained a limited focus on basic emotions with single meanings (Dawel et al., 2021) and on differences between, rather than within, emotion categories. A notable exception is smiling: the existence of different smile types, many of which fall within the happy “family” although some do not, highlights how varied meanings can emerge from one type of expression (Duchenne, 1990; Ekman, 1985; Niedenthal et al., 2010). For example, Niedenthal and colleagues’ (2010) simulation-of-smiles model proposes three different smile types with distinct physical forms and meanings: reward smiles, affiliative smiles, and dominance smiles. Ekman and colleagues also proposed a range of different smile types (Ekman, 1985; Ekman & Friesen, 1982; Frank & Ekman, 1993), primarily distinguishing smiles that include a Duchenne marker (AU6), which are evaluated more favourably than non-Duchenne smiles (Gunnery & Ruben, 2016; Krumhuber & Kappas, 2022; Quadflieg et al., 2013; Wang et al., 2017). Thus, even when there is high consensus about the emotion category (e.g., happiness), variations in physical form (e.g., different smiles, AUs activated) can give rise to different meanings. While this past research focused on physical differences in one specific facial feature (i.e., smiling), we focused on expressions linked to the same emotion category label (i.e., sadness) that varied widely in physical form.

Variation in Sad Facial Expressions and Potential for Different Meanings

There is strong evidence both for physical variation across sad expressions (Adams & Kleck, 2003, 2005; Balsters et al., 2013; Namba et al., 2017) and for people associating the concept of sadness with a variety of different meanings (Ekman & Cordaro, 2011; Huron, 2018; Plaks et al., 2022; Reed & DeScioli, 2017). Physical variations in sad facial expressions can include differences in AU activation, which seem to differ particularly between spontaneous and posed expressions (Jakobs et al., 2001; Namba et al., 2017), eye gaze direction (Adams & Kleck, 2003, 2005), and the presence of tears (Balsters et al., 2013). Further, the eye and brow regions appear particularly important for the recognition of sadness, over and above other emotions (Beaudry et al., 2014), suggesting that variation in these facial regions is likely to have implications for the attributed meanings. The substantial variation in the physical form of sad expressions suggests people are likely to attribute a range of different meanings to sad expressions (Balsters et al., 2013; Gračanin et al., 2021; Ito et al., 2019; Miller et al., 2020; Zickfeld et al., 2020), although the full range of meanings is yet to be explored.

Evidence for people associating the concept of sadness with a variety of different meanings comes from studies using a range of different stimuli, including both faces and vignettes. These studies point to several potential functions of sad expressions, such as communicating loss (Ekman & Cordaro, 2011; Reed & DeScioli, 2017), soliciting helping behaviour or altruistic assistance (Huron, 2018; Marsh & Ambady, 2007; Reed & DeScioli, 2017), dampening aggression (Huron, 2018), and ‘softening’ the negative effect of anger expressed on the face (Plaks et al., 2021). Specific examples from sad facial expressions include increasing the credibility of uncertain losses in economic games (Reed & DeScioli, 2017), eliciting more positive ratings than angry expressions in some moral decision-making scenarios (Plaks et al., 2022), and eliciting higher intent-to-help ratings than both neutral and angry expressions (Marsh & Ambady, 2007). Tears on neutral faces have also been found to signal a greater need for social support, evoking greater empathic concern, feelings of connectedness, and intentions to provide social support (Zickfeld et al., 2020). There is also some preliminary evidence that perceived genuineness affects the meanings given to sad expressions, with observers reporting greater intent to help people displaying genuine than posed distress cues (sad, as well as fearful, expressions; Dawel, Wright et al., 2019). Given the clear evidence that sad expressions can serve different social functions, it is likely that people interpret them in a range of different ways. Thus, the present study aimed to catalogue the full range of meanings that people attribute to sad expressions. Establishing this catalogue is a critical first step towards future work seeking to link physical variations directly with distinct meanings.

Rationale for Using Naturalistic Expressions

Major reviews of emotion perception research have argued that naturalistic stimuli are needed to understand how people respond to facial expressions in real life (Barrett et al., 2019; Russell & Fernandez Dols, 2017). Traditionally, research has relied on a small number of posed expression databases that eliminate or systematically manipulate variation across stimuli to achieve a high level of experimental control (Dawel et al., 2021; Russell, 1994). For example, models/actors are often instructed to pose each of the basic expressions in research labs without feeling any real emotion (Ekman et al., 2002; Langner et al., 2010; Le Mau et al., 2021; Lundqvist et al., 2015). As a result, all the sad (or happy, angry, etc.) expressions in a database look very similar to one another—potentially restricting the meanings that people attribute to them. Further, these traditional expression stimuli are often perceived as faking emotion (Dawel et al., 2017).

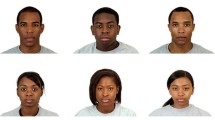

The real-life physical variation in sad expressions (Adams & Kleck, 2003, 2005; Balsters et al., 2013; Namba et al., 2017) and evidence that people attribute different meanings to expressions that are perceived as genuine versus posed (Dawel et al., 2019; Frank & Ekman, 1993; Gunnery & Ruben, 2016) motivated us to use naturalistic expressions in the present study (see Fig. 1). We selected naturalistic stimuli from an innovative new database of clips extracted from YouTube, the ANU Real Facial Expression Database (ANU RealFED), according to two key criteria: (1) the stimuli had been categorised as sad by consensus labelling in past research and (2) they were selected to vary as much as possible in their ratings of perceived genuineness, eye gaze directions, viewpoints, tearfulness, and combinations of visible facial activations. We also included a small number of posed images to ensure our selected stimuli captured the full spectrum of perceived genuineness, from highly genuine to highly posed or “faked”. Posed stimuli were selected from the Radboud Faces Database (RaFD) based on one key criterion: although homogeneous in visible facial muscle activation, they were selected to vary as much as possible in eye gaze direction and viewpoint. The present study thus addresses the heavy reliance of past emotion perception research on posed expression stimuli (Dawel et al., 2021; Russell, 1994), offering a unique perspective based on predominantly naturalistic facial expression stimuli.

Fig. 1 Prototypical expression of sadness proposed by Ekman et al. (2002) in comparison to the selected naturalistic and posed stimuli: (A) depicts the AUs involved in the prototypical expression of sadness according to Ekman et al. (2002) (example image sourced from the Radboud Faces Database; Langner et al., 2010); (B) depicts physically diverse, naturalistic stimuli selected from the ANU RealFED; (C) depicts physically similar, posed stimuli selected from the RaFD (Langner et al., 2010)

The Current Research

While there is evidence that the meanings people attribute to sad expressions vary (Ekman & Cordaro, 2011; Huron, 2018; Plaks et al., 2022; Reed & DeScioli, 2017; Zickfeld et al., 2020), there has been no systematic attempt to catalogue these meanings. A comprehensive framework that captures the range of interpretations of sad expressions is important for understanding the everyday variation and thematic nuances in sadness perception, and the likely impact of these perceptions on ensuing response behaviours. Such a framework would be valuable for future research seeking to explore differences based on culture, as well as individual differences in clinical and personality traits linked to unique social perspectives (e.g., autism).

Thus, the aim of the present study was to investigate how people freely interpreted a large set of physically varying sad expressions (N = 80 stimuli) from the ANU RealFED (naturalistic n = 65 stimuli) and RaFD (posed n = 15). Stimuli were selected on the basis that they were categorised as “sad” by the majority of participants (with an average consensus labelling percentage of 82.5%, see Method), varied in their perceived genuineness (as per previous pilot studies, see Method), and included as much physical variation as possible (e.g., activation of different muscles at different intensities, with differences in eye gaze direction, viewpoint, and tearfulness).

As our study was exploratory, we opted for an open-ended qualitative approach that asked participants to freely describe what they thought each facial expression communicated. To analyse our data, we applied thematic analysis, which generates a rich description of a dataset through a structured, systematic, and theoretically flexible process (Braun & Clarke, 2006, 2014). We grouped this process into three broad stages: (1) initially examining the response data and generating a preliminary thematic structure; (2) searching for, reviewing, and defining themes; and (3) reviewing and reporting the key findings. We expected that participants would interpret the sad expressions using a range of different meanings, given their widely varying physical forms, although we were open as to the range of interpretations that would arise.

Method

Participants

Analysed data were from 41 adults (15 women, 26 men; mean age = 30.6 years, SD = 5.8, range = 20–41) recruited from the Prolific online platform (https://www.prolific.co/) in 2020. Our sample exceeded the typical sample size for qualitative research (20 to 30 participants), which is usually smaller than that for quantitative methods (Levitt et al., 2018; Marshall et al., 2013; Mason, 2010). We required that all participants report normal or corrected-to-normal vision, speak English as a first language, and be the same ethnicity as the face stimuli (i.e., White European), to control for the impact of race and culture on expression perception (Elfenbein & Ambady, 2002; Matsumoto, 1992; Matsumoto & Ekman, 1989). We also required that participants not report a disorder known to impact face perception (e.g., autism, psychosis) and excluded one participant who reported autism. Data from an additional eight participants were excluded because they did not finish the study (n = 7) or sent a follow-up e-mail stating they had misunderstood the task (n = 1). Participants were paid £4.75 for the 45-minute study. The study was approved by the ANU Human Research Ethics Committee (protocol 2015/305).

Stimuli

Stimuli were 80 images of physically diverse sad facial expressions, comprising 65 images from the naturalistic ANU RealFED (Dawel et al., in prep) and 15 images from the posed RaFD (Langner et al., 2010). Stimuli were cropped around the face and set against a black background to avoid context effects (Barrett et al., 2011; Righart & De Gelder, 2008). Participants were asked to adjust their screen size to ensure stimuli were approximately 5 cm high.

Stimuli were selected according to three primary criteria. First, each expression must have been categorised as “sad” by the majority of participants in prior studies (see Supplement S1 for details). Average labelling agreement for the stimuli was 82.5%, with agreement greater than 80% for 50 of the 80 stimuli (and for 38 of the 65 naturalistic stimuli). This level of agreement exceeds that for the prototypical posed sad stimuli that the literature has relied on (e.g., 61% agreement for Ekman and Friesen’s (1976) Pictures of Facial Affect sad stimuli, based on Palermo and Coltheart (2004), and 80% agreement for Langner et al.’s (2010) RaFD sad stimuli). However, we note that as the stimuli were taken from the internet or posed by actors, we cannot confirm which emotion(s) the expressors were genuinely feeling. Second, the total set of expressions should include as much physical variation as possible (e.g., activation of different muscles at different intensities, with differences in eye gaze direction, viewpoint, and tearfulness). Third, the total set of expressions should vary in perceived genuineness ratings as much as possible, according to ratings from pilot research (also see Supplement S1 for details).

ANU Real Facial Expression Database

The ANU RealFED comprises still frames extracted from YouTube videos showing people in a wide range of settings (e.g., reality television clips, news clips, vlogs; see Supplement S2 for database details). Pilot data for the still-frame stimuli include emotion labelling and perceived genuineness ratings (N = 14–26 per stimulus). The image stimuli were selected on the basis that a prior set of participants had labelled the images as “sad” in a forced-choice emotion labelling task. As such, the selected stimuli were predetermined to look “sad” exactly as they were shown in the present study. Images were selected if they were clear and showed faces front-on with eyes open and features unobscured. In this emotion labelling task, participants selected the emotion shown on each face from nine emotion labels (anger, contempt, disgust, fear, happy, sad, surprise, other, no emotion; see Supplement S1). Using these data, 431 of 2,924 ANU RealFED stimuli met our criterion of being categorised as “sad” by the majority of participants. To identify the clearest expressions, we asked another five participants (1 woman, 4 men; mean age = 30 years, SD = 4.3, range = 24–34), recruited from Prolific, to rate the clarity of each face from 1 (terrible) through 3 (satisfactory) to 5 (excellent). We required stimuli to be rated as satisfactory or above by at least 4 of the 5 participants (n = 235 stimuli) to be considered further.
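The two rule-based screens described above (majority “sad” labelling, then clarity ratings) can be sketched as a simple filter. This is a minimal illustration, not the authors’ selection code: the `Stimulus` record, its field names, and the example data are hypothetical. It also assumes that “majority” means at least half of raters chose “sad”, because the selected ANU RealFED stimuli include agreement as low as 50%, which a strict more-than-half rule would exclude. The final choice of 65 stimuli from the screened pool was a judgement about physical and genuineness variation and is not modelled here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stimulus:
    # Hypothetical record for one candidate still frame; field names are illustrative.
    stimulus_id: str
    labels: list[str]   # forced-choice emotion labels, one per rater
    clarity: list[int]  # clarity ratings, 1 (terrible) to 5 (excellent), one per rater


def labelled_sad(stim: Stimulus, min_share: float = 0.5) -> bool:
    """True if at least `min_share` of raters chose 'sad' (assumed reading of 'majority')."""
    if not stim.labels:
        return False
    return stim.labels.count("sad") / len(stim.labels) >= min_share


def clear_enough(stim: Stimulus, min_rating: int = 3, min_raters: int = 4) -> bool:
    """True if at least `min_raters` raters rated clarity as satisfactory (3) or better."""
    return sum(rating >= min_rating for rating in stim.clarity) >= min_raters


def screen(stimuli: list[Stimulus]) -> list[Stimulus]:
    """Keep stimuli that pass both the labelling and the clarity screen, in input order."""
    return [s for s in stimuli if labelled_sad(s) and clear_enough(s)]


# Made-up example data for illustration only.
candidates = [
    Stimulus("a", ["sad", "sad", "fear", "sad"], [4, 3, 3, 5, 2]),
    Stimulus("b", ["sad", "anger", "fear", "no emotion"], [5, 5, 5, 5, 5]),
    Stimulus("c", ["sad", "sad", "sad", "other"], [3, 2, 2, 4, 5]),
]
print([s.stimulus_id for s in screen(candidates)])  # ['a']
```

In this toy data, stimulus “b” fails the labelling screen (only 1 of 4 raters chose “sad”) and stimulus “c” fails the clarity screen (only 3 of 5 ratings are 3 or above).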

We selected the final 65 stimuli (34 female and 31 male faces) from this pool to show as much physical variation as possible (e.g., variety of viewpoints, gaze directions, and levels of tearfulness), and to vary across the full spectrum of genuineness ratings, balanced across the female and male faces (genuineness ratings: n = 30 rated above +1, genuine; n = 20 rated below −1, posed; n = 15 rated between −1 and +1, ambiguous; see Supplement S1 for individual genuineness rating data). Roughly half the stimuli had glassy eyes or tears (n = 35). On average, the final selected ANU RealFED stimuli were categorised as “sad” 81% of the time (SD = 15.6%, range = 50–100%; see Supplement S1 for individual stimulus labelling data).
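The genuineness groupings rest on cut-offs of ±1 applied to each stimulus’s mean perceived-genuineness rating. The sketch below assumes a bipolar rating scale centred on 0 (the pilot scale itself is described in Supplement S1, not here) and treats means of exactly −1 or +1 as ambiguous, since the text does not say which group boundary values fall into. The stimulus IDs and ratings are invented for illustration.

```python
from collections import Counter


def genuineness_category(mean_rating: float) -> str:
    """Bin a mean genuineness rating using the +/-1 cut-offs.

    Assumption: values of exactly -1 or +1 are treated as 'ambiguous'.
    """
    if mean_rating > 1:
        return "genuine"
    if mean_rating < -1:
        return "posed"
    return "ambiguous"


# Hypothetical mean ratings, keyed by made-up stimulus IDs.
ratings = {"s01": 2.3, "s02": -1.4, "s03": 0.2, "s04": 1.0}
counts = Counter(genuineness_category(r) for r in ratings.values())
print(dict(counts))  # {'genuine': 1, 'posed': 1, 'ambiguous': 2}
```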

Radboud Faces Database (Langner et al., 2010)

The RaFD was developed using a modified version of the Directed Facial Action Task, which involves actors posing each of the basic expressions by activating the specific sets of AUs believed to be involved in each expression prototype (Ekman, 2007; Ekman et al., 1983). This database is one of several posed expression sets used extensively throughout the literature (Dawel et al., 2021), and includes 5,880 images with systematic differences in eye gaze direction and viewpoint (Langner et al., 2010). We included stimuli from this database to ensure our selected stimuli included some posed images in addition to the naturalistic images (genuineness ratings: n = 1 rated above +1, genuine; n = 4 rated below −1, posed; n = 10 rated between −1 and +1, ambiguous; see Supplement S1), and to include variation in head and eye gaze direction. To ensure a wide range of physical variation across the selected stimuli, we selected 15 sad expressions showing roughly equal numbers of women and men (7 women, 8 men) across three different viewpoints (9 frontal view, 3 left view, 3 right view), with roughly equal numbers of stimuli showing direct and indirect eye gaze (averted to the left or right; 6 direct, 9 indirect). On average, the final selected RaFD stimuli were categorised as “sad” 89% of the time (SD = 12.4%, range = 69–100%; see Supplement S1).
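The overall mean agreement of 82.5% reported for the full stimulus set is consistent with weighting the two subset means by their numbers of stimuli (using the rounded subset means reported above):

```latex
\bar{p}_{\text{all}}
  = \frac{n_{\text{ANU}}\,\bar{p}_{\text{ANU}} + n_{\text{RaFD}}\,\bar{p}_{\text{RaFD}}}{n_{\text{ANU}} + n_{\text{RaFD}}}
  = \frac{65 \times 81\% + 15 \times 89\%}{65 + 15}
  = \frac{5265 + 1335}{80}\,\%
  = 82.5\%
```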

Sad Expression Meaning Task

The 80 sad expression images were presented onscreen one at a time, in a different random order for each participant. Participants were asked “What does this facial expression tell you about what the person is thinking, feeling, and/or intending to do?”. Participants typed their responses into an open-ended text box. Each image remained onscreen until the participant finished responding, with no time limit. Prior to completing the task, participants were instructed to report all the different possible meanings for each expression and whether they knew anyone depicted in the stimuli. The full participant instructions are presented in Supplement S3. We gave participants example responses for smiling expressions, which demonstrated the appropriate response style and level of detail, without priming them on possible answers for the sad expressions (see Supplement S3 for further information).

The mean completion time for the experiment was 43 min, and participants typically provided 1–2 sentences per face. While there were no attention checks embedded within the task, review of the raw response data indicated no signs of disengagement or lack of attention (e.g., no instances of participants copying and pasting their responses across multiple stimuli), and pilot participants reported a high level of engagement. For these reasons, we do not believe fatigue effects impacted the data.

Data Cleaning and Screening

Of the 3,280 responses, 3,243 (98.8%) were retained for analysis after the process of data cleaning and screening. Responses were excluded if the participant reported knowing the person depicted (i.e., by writing “I know who this person is”; n = 37) because familiarity impacts expression perception (Ma-Kellams & Blascovich, 2012). We used the spell check function in Microsoft Excel to identify and correct incorrect spellings (e.g., the spelling error “Not bothered, ematoonelss” was identified and corrected to “Not bothered, emotionless”). Incorrect spellings that appeared intentional (i.e., that conveyed a deliberate meaning, e.g., “he gonna lol” instead of “he’s going to laugh”) were retained. Responses that could not be interpreted (e.g., “Maybe fake ceub”) were coded as junk.
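The response totals are consistent with every analysed participant responding to all 80 stimuli, minus the responses excluded for familiarity:

```latex
41 \text{ participants} \times 80 \text{ stimuli} = 3{,}280 \text{ responses},
\qquad
3{,}280 - 37 = 3{,}243 \text{ responses retained}
```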

Data Analysis and Intercoder Reliability

Braun and Clarke (2006) proposed a six-phase process of thematic analysis, which we grouped into three broad stages (see Supplement S4). We coded themes using a semantic approach, which identifies themes based on the explicit patterns of meaning in the response data (Braun & Clarke, 2006). Our approach was primarily data-driven (inductive). However, inspiration for the themes Social Loss/Conflict, Failure, and Social Support was drawn from the Situation-Symptom Congruence Hypothesis, which links different sadness precipitants to a range of depressive symptoms (Azungah, 2018; Keller & Nesse, 2006). Development of the thematic framework was led by EB, supported by fortnightly discussions with AD. Data are available at https://osf.io/yc697/. This study was not preregistered.

Results

To address our research aim, which was to catalogue the different ways people interpret sad facial expressions, we constructed a novel coding framework that organised and grouped the different meanings from the semantic content of responses. After introducing the overall framework, we report the reliability of the framework using estimates of cross-coder agreement and summarise the findings. We provide in-depth descriptions of each theme in the framework based on the patterns of meaning coded within them, including example quotes to support these descriptions where appropriate. We cover the content of each theme separately, to the extent this is possible. However, there are inevitably important links across the themes—primarily linking judgements about emotions, physical characteristics, and social intentions—which we explore using key examples.

The Newly Developed Coding Framework

The final coding framework included 12 overarching parent themes, which comprised 34 child themes and 12 grandchild themes, shown in Fig. 2. The parent themes, shown in the numbered and coloured boxes, reflect the core patterns of meaning found across all responses. The child themes, positioned outside the parent theme boxes, reflect the patterns of meaning within each parent theme, and the grandchild themes, dot-pointed below the child themes, reflect the patterns of meaning within each child theme. Overall, a total of 8,638 codes were applied to the 3,243 responses analysed. Each response was coded an average of 2.7 times. Of the total codes, 4,433 (51.3%) were coded into more than one theme. For example, the response “genuinely sad, like he’s at a funeral for a loved one” was coded three times: in the Target Emotion child theme (“sad”; Emotion Word parent theme), Genuine child theme (“genuinely sad”; Emotional Genuineness parent theme), and Grief child theme (“at a funeral for a loved one”; Precipitants parent theme).
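The per-response average and the multiple-coding percentage follow directly from these totals:

```latex
\frac{8{,}638 \text{ codes}}{3{,}243 \text{ responses}} \approx 2.66 \approx 2.7 \text{ codes per response},
\qquad
\frac{4{,}433}{8{,}638} \approx 0.513 = 51.3\%
```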

Intercoder Reliability

After the final coding stage was complete, an independent coder (SDLH), who was not involved in the framework development, cross-coded a random 25% of the responses to assess intercoder reliability using Cohen’s kappa coefficient (O’Connor & Joffe, 2020). The overarching framework demonstrated strong intercoder reliability (κ = .95; 2 raters; 880 observations). Kappa coefficients for each parent theme also exceeded the threshold for good reliability (κ ≥ .80; range = .81–.93; see Supplement S5; Landis & Koch, 1977; O’Connor & Joffe, 2020). These estimates are consistent with other qualitative studies using comparable designs (Al-Rawi et al., 2021; Chadwick et al., 2011; Deighton-Smith & Bell, 2018).
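For readers less familiar with the statistic, Cohen’s kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder’s label frequencies, p_e, as κ = (p_o − p_e) / (1 − p_e). The sketch below is a minimal implementation of that standard two-rater formula, not the authors’ analysis procedure; how the 880 observations were defined, and how the per-theme coefficients were computed, is not specified here. The example treats one theme as present (1) or absent (0) across ten hypothetical responses.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders assigning nominal labels to the same items."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same label by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: products of the coders' marginal proportions, summed over labels.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        # Both coders used one identical label for every item, so kappa is undefined;
        # this sketch reports perfect agreement by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical presence (1) / absence (0) codes for one theme across ten responses.
coder_1 = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
coder_2 = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(round(cohens_kappa(coder_1, coder_2), 2))  # 0.8
```

Here the coders agree on 9 of 10 responses (p_o = 0.9), chance agreement is p_e = (5 × 4 + 5 × 6) / 100 = 0.5, and so κ = 0.4 / 0.5 = 0.8. Library implementations such as scikit-learn’s `cohen_kappa_score` compute the same unweighted statistic.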

Fig. 2 The Novel Coding Framework. Note. Themes inside the coloured boxes reflect the overarching parent themes. The percentage in brackets is the percentage of total codes captured by each parent theme. The black text outside the boxes lists the associated child themes, alongside example quotes selected from responses. Grandchild themes are listed as dot-points.

Summary of Participants’ Responses Coded in the Novel Framework

Overall, participants’ responses were diverse and multifaceted, often going beyond simple emotion labels. While participants did use single emotion labels, they also provided a rich array of detailed, diverse, and nuanced interpretations that described the expressors’ complex experiences of emotion, including inferences about their emotions, thoughts, intentions, and behaviours. The expressions were often perceived as communicative tools used to achieve an underlying social purpose (e.g., to mock or manipulate). They were also perceived as social cues used to predict the expressor’s thoughts (e.g., acceptance, contemplation, disbelief), social needs (e.g., support or withdrawal), and current or intended behaviours. Participants also made meta-judgements about the expressions, most often about their level of genuineness and exaggeration, which they used as cues to the expressors’ underlying intentions. Likewise, participants made inferences about the events that precipitated the expression, including social or romantic conflict, physical pain, failure, and receiving either good or bad news. Responses mentioned emotion words, particularly “sad” and “upset”, as well as physical features of the faces, most notably “tears”. These findings suggest that people use emotion words as a shortcut for describing facial expressions but, when asked, can infer detailed information about the expressors’ thoughts, feelings, and intentions from the expressions. Altogether, responses highlighted the importance of facial expressions as intricate tools for social communication and influence, making them more than straightforward displays of discrete emotions.

Theme 1. Emotion Words

Responses were coded into the Emotion Words theme when an emotion label (e.g., “sad”, “upset”, “disapproval and possible disgust”) was used to describe the faces; five child themes captured the different emotion words (described in Table 1). As expected, “sad” and “upset” were the most commonly used labels. This is consistent with our stimulus selection criterion that expressions had previously been categorised primarily as “sad” in a forced-choice labelling task. However, many other emotion labels appeared in the responses, including anger and disappointment, as well as positive emotions such as happy, amused, and cheerful. Figure 3 shows a word cloud of the most frequently used emotion labels. The faces were most often labelled as sad or upset, and these key words were complemented by a range of alternatives at lower frequencies, including positive words such as joyful.

While participants labelled the facial expressions using emotion words, responses suggested their interpretations were not as simple as originally proposed by BET (Ekman & Friesen, 1969). The facial expressions were sometimes interpreted as reflecting mixed emotions (e.g., “combination of sadness and anger”) that transitioned over brief time periods (e.g., “was upset but is now getting angry”). These attributions of mixed or transitioning emotional states were sometimes linked to specific social needs. For example, one observer stated that an expressor looked “lonely and depressed like he needs help desperately”. Participants also described the sad expressions using both positively and negatively valenced emotion words (e.g., “they look sad but also sort of proud in a way”). This shows that facial expressions are not always perceived as read-outs of separate and distinct emotion categories. Overall, responses within this theme highlighted how perceivers often go beyond attributing single emotion words to facial expressions, inferring mixed and evolving emotion states that signalled distinct social needs.

Theme 2. Physical Descriptions of the Face

Responses under the Physical Descriptions of the Face theme referred to physical features of the faces, such as “big pout” and “smirking”. Some responses mentioned specific physical features (e.g., “her lower face looks sad”), but most described crying or the presence of tears on the faces. These were captured by one child theme (the Tearful child theme, with six grandchild themes described in Table 2). Building on Theme 1, where positive emotion words were used alongside negative ones, there were multiple references to “tears of joy”. This highlights how facial expressions that most people categorise as sad, even those with tears, may also be perceived as conveying positive emotions. Theme 1 also found that facial expressions were sometimes perceived as communicating mixed emotions (e.g., sadness and happiness combined). Echoing this, perceivers occasionally inferred different emotions from different physical characteristics, such as “his eyes suggest he is upset but the rest of his face looks like he is experiencing pleasure”. Certain forms of physical variation were also described in conjunction with precipitating events, such as “pouting because they didn’t get their way”. Others were described alongside judgements about emotional intensity, such as “looks very emotionally hurt her eyes scream extreme sadness”. In general, responses illustrated how people use physical features to infer information about expressors’ emotional experiences and the events that precipitated them.

Themes 3–6. Meta-Judgements: Suppression/Masking, Genuineness, Exaggeration, and Intensity

Four parent themes were grouped together under the umbrella of Meta-Judgements, capturing inferences about emotion suppression/masking, genuineness, exaggeration, and intensity (described in Table 3). Many responses referred to the expressors’ ability to deliberately manipulate the appearance of their expression for a social purpose, such as “deliberately making this face to show mock sadness”. Some meta-judgements referenced physical characteristics of the face. For instance, one observer wrote “overly flexed frown doesn’t really occur to this extent without doing it on purpose”. Participants often linked the meta-judgements to different social meanings. For example, one observer described an expression as “true sadness” and followed up by stating “maybe they lost their long-term partner”, whereas another described an expressor as “acting sad, being silly – perhaps playing with a child”. Across these themes, participants’ meta-judgements about the expressions were often used to infer the expressors’ underlying intentions.

Theme 7. Inferences about the Events that Precipitated the Sad Expressions

Responses under the Precipitants theme described the events or situations believed to have elicited the facial expressions (with five child themes, described in Table 4). The precipitants varied widely, from professional and academic failures to physical injuries, interpersonal conflict and violence, and the death of loved ones. Six grandchild themes sat under the Social Loss/Conflict child theme, including precipitants such as grief and bullying. Participants described sadness resulting from the death of a loved one (e.g., “grief, like a family member has died”), a romantic break-up (e.g., “her partner has left her”), or other forms of relationship loss (e.g., “just dropped a child off for college”). Likewise, consistent with the view that sadness can result from failed efforts (Keller & Nesse, 2006), other responses described expressors who had experienced academic and professional failures, such as “just been turned down for a promotion at work”. The preceding events were sometimes linked to the expressors’ underlying intentions. For example, one observer stated the expressor was “plotting his revenge after being wronged”. Responses under this theme suggested people assign meaning to facial expressions by situating them within a specific social context.

Theme 8. Inferences about the Thoughts of the Expressor

Responses under the Thoughts theme involved inferences that the expressors were thinking deeply or emotionally processing an experience (with six child themes, described in Table 5). Many responses referenced emotion-focused coping strategies used to deal with difficult circumstances, such as denial (e.g., Disbelief child theme) and acceptance (e.g., Acceptance child theme; Carver et al., 1989). Responses often linked expressors’ thoughts to precipitants, such as “they just can’t believe that something terrible has happened. Maybe they were in a car crash”, thereby situating those thoughts within specific external contexts. Responses also described expressors reflecting on past actions. For example, one expressor was described as “remorseful and sad…he may have caused harm to someone and is sorry for his actions”. Responses under this theme highlighted how people use facial expressions to infer expressors’ thoughts.

Themes 9–11. The Social Nature of the Sad Expressions

This group of themes relates to the social nature of sad facial expressions. Responses under these themes described facial expressions as tools for communicating different social messages, with each theme representing a distinct type of social message.

Theme 9. Inferences about the Social Needs of the Expressor

Responses under the Social Needs theme involved inferences about the expressors’ unique needs based on their facial expressions, with two child themes that reflected opposing needs: a desire for support or sympathy (e.g., “wants to be comforted”; Social Support child theme: 0.7% (60 codes)) and a desire for withdrawal (e.g., “needs some time to himself”; Social Withdrawal child theme: 0.7% (62 codes)). Social needs were often described alongside precipitants. For example, one observer stated an expressor was “offended by something, and just wants to be left alone”, while another expressor was described as “struggling through a situation they want to get out of”. Likewise, social needs were sometimes described alongside emotion words. For instance, one participant reported an expressor looked “sad but also frustrated” and followed up by stating “I would leave them alone”. Responses under this theme reflected how people use facial expressions to infer others’ social needs.

Theme 10. Social Interactions and Intentions Described to Contextualise the Sad Expressions

Responses under the Social Interactions and Intentions theme described the expressors’ current or intended social behaviours (with five child themes, described in Table 6). Many responses described facial expressions being displayed to “make someone laugh”, “mock someone”, or “manipulate someone”. These descriptions were often linked with meta-judgements about the expressions, particularly those under the Emotional Genuineness and Emotional Exaggeration parent themes, as well as with physical features of the face. For instance, responses included “a fake pout, making fun of someone or playing by making a face at them” and “a fake cry to try to convince people they are the victim of the situation”. Because these responses focused on expressors’ social intentions, perceivers might use such attributions to guide their own social behaviour. For example, an observer might respond differently to an expressor who is “talking about something traumatic that happened” than to one who is “making this face to manipulate someone”. Responses under this theme suggest how facial expressions may be used to guide social interactions.

Theme 11. Expressions as Tools for Social Communication

Responses under the Other-Directed Sentiments theme described the expressors’ ability to communicate their thoughts and feelings through their facial expressions (with 4 child themes, described in Table 7). Responses under this theme demonstrated how facial expressions may be used to communicate deliberate social messages. For example, one expressor was described as “full of anger and wants others to know it”, while another was “sarcastically making a sad face to mean ‘I don’t care’”. In some instances, perceivers adopted the position of the expressor to infer their thoughts and feelings. For example, one participant stated “I am disappointed in the decision you have made but can’t do anything about it”. Responses under this theme highlighted how facial expressions are perceived as communicative tools, intentionally displayed to convey distinct social messages.

Theme 12. Predictions About Current and Future Behaviours of the Expressor

Responses under the Current and Intended Behaviours theme predicted the present, ongoing, or future actions of the expressor. Two child themes captured the orientation of responses. The first covered the current or ongoing actions of the expressors (“she’s watching a sad movie”; Current Actions child theme: 3.5% (299 codes)). The second covered their future behaviours (“she’s about to scream in anger”; Intended Behaviour child theme: 2.9% (248 codes)). Many responses linked the expressors’ behaviours to social objectives and intentions. For example, one expressor was described as “sniggering like at someone else’s expense” and another as “listening intently as someone shares something personal”. Responses under this theme demonstrated how people infer information about others’ social behaviour from their facial expressions.

Thematic Correlations with Consensus Labelling

To explore whether the use of target words (e.g., “sad”, “sadness”) increased with labelling agreement, we calculated Spearman correlations across the 80 stimuli. Each correlation paired the percentage of “sad” labelling agreement in the prior sample used to select the stimuli with one of three measures:
(1) the use of target emotion labels (defined as sad, sadness, and upset);
(2) the use of non-target emotion labels (defined as positive, neutral, or any other emotion labels);
(3) the use of mixed or transitioning emotion labels (i.e., two or more emotion words, or emotions fluctuating over time).
The use of target labels was associated with significantly higher agreement, r(78) = .689, p < .001. The use of non-target labels and of mixed or transitioning labels was associated with significantly lower agreement (non-target: r(78) = −.640, p < .001; mixed/transitioning: r(78) = −.323, p = .003). These correlations suggest that the variety of emotion labels was strongly related to how consensually each stimulus signalled “sad”: more varied emotion labels were used at lower levels of “sad” consensus. The findings also show how labelling consensus decreases toward the edge of the emotion family as it blends into other emotion families, where labels other than sad, sadness, or upset are used more often.
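As an illustration of the statistic reported above, the following sketch computes Spearman’s ρ as the Pearson correlation of average ranks, where tied values share the mean of their ranks. It then converts ρ to a t statistic with n − 2 degrees of freedom, so 80 stimuli give df = 78. This is a hypothetical, standard-library-only sketch that assumes one agreement percentage and one label count per stimulus. The function names and data are invented for illustration. In practice, a library routine such as `scipy.stats.spearmanr`, which also returns a p-value, would normally be used instead.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length sequences of at least 3 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    ssx = sum((a - mx) ** 2 for a in rx)
    ssy = sum((b - my) ** 2 for b in ry)
    if ssx == 0 or ssy == 0:
        raise ValueError("rho is undefined when either variable is constant")
    return cov / math.sqrt(ssx * ssy)


def t_statistic(rho, n):
    """t for testing rho against 0, with df = n - 2 (undefined when |rho| = 1)."""
    return rho * math.sqrt((n - 2) / (1 - rho ** 2))


# Hypothetical per-stimulus data (5 stimuli rather than the study's 80).
sad_agreement = [55.0, 62.5, 70.0, 70.0, 90.0]  # % "sad" labels in a pilot sample
target_label_counts = [12, 20, 18, 25, 40]       # target labels used per stimulus
rho = spearman_rho(sad_agreement, target_label_counts)
print(round(rho, 3), round(t_statistic(rho, len(sad_agreement)), 3))
```

Ranking before correlating makes the test sensitive to any monotonic relationship rather than only a linear one. This suits percentage-agreement and count data that need not be normally distributed.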

We also ran exploratory correlations between the percentage of “sad” labelling agreement and the number of codes under each child or grandchild theme that had at least 50 coding references. These explored whether certain themes sit at the centre of the sadness prototype (and whether others appear only as “sad” consensus drops off). The following themes were associated with significantly higher “sad” agreement: need for social support (r(78) = .249, p = .026), social issues as a precipitant (r(78) = .307, p = .006), tearfulness (r(78) = .335, p = .002), and thoughts of struggling to cope (r(78) = .301, p = .007). This suggests that the sadness prototype is most readily associated with sadness arising from social issues, the need for social support, and feelings of struggling to cope. This pattern is broadly consistent with the view that sad expressions help communicate social loss (Ekman & Cordaro, 2011; Reed & DeScioli, 2017) and solicit helping behaviour or altruistic assistance (Huron, 2018; Marsh & Ambady, 2007; Reed & DeScioli, 2017).

In contrast, the following themes were associated with significantly lower “sad” agreement: conflict as a precipitant (r(78) = −.307, p = .006), descriptions of intended behaviours (r(78) = −.525, p < .001) and current actions (r(78) = −.297, p = .007), and thoughts of struggling to understand (r(78) = −.298, p = .007) and disbelief (r(78) = −.272, p = .014). This suggests that sadness arising from conflict, along with feelings of struggling to understand and disbelief, tends to emerge at the edge of the sadness emotion family as it blends into other families. One candidate is anger, which is often believed to communicate threat, conflict, and aggression (Krieglmeyer & Deutsch, 2013). It also suggests that people do not readily interpret sad expressions as communicating intended behaviours and current actions. This aligns with the view that facial expressions arise predominantly from emotional or internal experiences rather than serving as readouts of physical behaviour (Cowen & Keltner, 2020; Ekman & Friesen, 1975).

Comparisons between the Meanings Attributed to Stimuli Perceived as Genuine and Posed

Following the qualitative coding, we conducted additional exploratory analyses to investigate how perceiving the sad expressions as genuine or posed affected the way they were interpreted. Here, we compared the coding frequencies under each parent theme separately for stimuli that pilot participants rated as genuine (n = 31) and posed (n = 24). Supplement S6 describes the procedure used to split stimuli into groups based on perceived genuineness. We use the terms genuine and posed to refer to the groups of stimuli. Note, however, that these terms refer only to how the stimuli were perceived, as indicated by the pilot ratings, and not to how the stimuli were generated.

Chi-square tests with adjusted standardised residuals were used to test for significant differences in coding frequencies between stimuli rated as genuine and posed (see Fig. 4 and Supplement S7 for the findings). Differences in coding frequencies were expected for certain parent themes, such as Emotional Genuineness and Emotional Exaggeration. Others emerged from the data without being anticipated, such as the differences for the Thoughts and Social Needs parent themes.
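For readers unfamiliar with the technique, the adjusted standardised residual for each cell of a contingency table (here, parent themes × perceived genuineness) compares the observed count O with the count E expected under independence. It scales the difference by its estimated standard error: (O − E) / √(E · (1 − row total / N) · (1 − column total / N)). The sketch below is a minimal, hypothetical illustration assuming a table of raw coding counts with no empty rows or columns; the function names and counts are invented. It flags residuals beyond ±1.96, the conventional two-tailed cut-off at α = .05. It applies no correction for the number of cells tested, and this sketch does not show whether or how the present analysis corrected.

```python
import math


def adjusted_residuals(table):
    """Haberman's adjusted standardised residuals for an r x c table of counts.

    Assumes every row and column total is non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    residuals = []
    for i, row in enumerate(table):
        cells = []
        for j, observed in enumerate(row):
            # Count expected if theme and genuineness group were independent.
            expected = row_totals[i] * col_totals[j] / n
            # Estimated variance of (observed - expected) under independence.
            variance = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            cells.append((observed - expected) / math.sqrt(variance))
        residuals.append(cells)
    return residuals


def significant_cells(residuals, critical=1.96):
    """Return (row, column) indices whose |residual| exceeds the critical value."""
    return [(i, j) for i, row in enumerate(residuals)
            for j, z in enumerate(row) if abs(z) > critical]


# Hypothetical counts: rows are two parent themes, columns are genuine vs posed stimuli.
counts = [[10, 20],
          [20, 10]]
res = adjusted_residuals(counts)
print(significant_cells(res))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

Unlike the overall chi-square statistic, the residuals show which specific theme-by-group cells drive a difference. This is what allows statements such as "posed expressions were more often described as exaggerated".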

Overall, the exploratory genuineness analyses suggested that posed expressions were more likely to be described as exaggerated, overamplified, or dramatised, and participants were more likely to comment on the non-genuineness of these expressions (e.g., describing the expression as unreal or ‘faked’). In contrast, people were more likely to make inferences about the underlying cognitions of the expressor, including the thought processes involved in analysing or reflecting on past experiences, and feelings of shame, regret, and remorse, when responding to genuine expressions. In many instances, participants explicitly described whether the sad expressions were genuine or posed and commented on the level of exaggeration without being prompted. These responses suggested people make spontaneous inferences about genuineness when attributing meaning to sad expressions.

Discussion

Using a qualitative design, the present study provides compelling evidence that people interpret physically varying sad facial expressions as communicating a set of diverse meanings. While participants often used single emotion labels to describe the expressions, they also provided a rich array of detailed, diverse, and complex meanings that described the expressors’ nuanced experiences of emotion, thoughts, intentions, and behaviours. The interpretations went beyond simple, discrete meanings, and included descriptions of nuanced, mixed, and transitioning emotions that were semantically related to one another. Altogether, the current findings indicate facial expressions are perceived in nuanced and complex ways that reflect their role as other-oriented social tools.

People Interpret Sad Expressions as Nuanced Tools for Social Communication

Participants interpreted the sad expressions using a set of diverse meanings that predicted the expressor’s internal experience of emotion, their social needs and intentions, and the social context. Some responses aligned with the view that sad expressions facilitate social cohesion by signalling a need for support in times of difficulty or uncertainty (Ekman & Cordaro, 2011; Huron, 2018; Reed & DeScioli, 2017), but other responses framed the expressions as communicative tools used to achieve a social purpose (e.g., to mock or manipulate), and social cues used to predict the expressors’ thoughts (e.g., acceptance, contemplation, disbelief), unique social needs (e.g., support or withdrawal), and current or intended behaviours. The meanings were often linked to the physical features of the face (e.g., the presence of tears), as well as meta-judgements about the expressions, most frequently about their level of genuineness and exaggeration. Participants also made inferences about precipitating events, such as sadness from interpersonal loss or conflict, failure, physical pain, and receiving good or bad news. Altogether, findings suggest people attribute a range of unique and complex meanings to facial expressions associated with sadness, and these meanings reflect their role as highly varied other-oriented social tools.

Beyond Sadness: Facial Expressions Communicate Mixed and Transitioning Emotions

Participants perceived the facial expressions as social communication tools that conveyed detailed information about the expressors’ internal thoughts and experiences. They often described the expressions as conveying emotion categories that blend into one another (Cowen et al., 2019; Cowen & Keltner, 2017, 2021). While participants often used single emotion labels, they frequently provided nuanced descriptions involving multiple emotions that changed over time. This suggests people use emotion words as a shortcut for describing facial expressions, but can provide a rich array of detailed information when directly asked about expressors’ thoughts, feelings, and intentions. These findings also challenge the original assumption that basic emotions are discrete and are therefore always experienced and expressed in isolation from one another (Ekman & Friesen, 1969). In this way, participants’ responses provided qualitative support for compound expressions, in which multiple basic emotions are expressed on the face at one time, communicating unique compounded meanings (Du et al., 2014). For example, many responses described combinations of sadness and fear, and of sadness and anger, which are two of the four sad-compound expressions proposed by Du et al. (2014). Participants also described combinations of opposing emotion categories, such as sadness and happiness, suggesting an overlap between the perception of positively and negatively valenced emotions on the face. Interestingly, participants attributed transitioning internal states despite the use of static stimuli, suggesting they viewed the sad expressions as inherently dynamic and fluctuating. Overall, findings indicate that emotions are not perceived from the face in a simple, discrete way. Instead, perceptions reflect nuanced, blended emotion categories that are semantically related to one another (Cowen et al., 2019; Cowen & Keltner, 2017, 2021).

Implications and Future Directions: The Meanings Attributed to Facial Expressions are Highly Varied and Socially Bound

Current findings demonstrate how people attribute a wide range of varied and socially bound meanings to facial expressions, consistent with current views of BET and BECV (Crivelli & Fridlund, 2018, 2019; Ekman, 1993). The diverse meanings catalogued in the framework also support the “semantic space” perspective, in which the emotional meanings conveyed by facial expressions vary depending on their relative position between emotion categories (Cowen et al., 2019; Cowen & Keltner, 2017, 2021). Consistent with this idea, participants described a range of different meanings centred on sadness arising from loss, failure, conflict, and physical pain, situated within different social contexts. Similarly, many themes in the framework captured the social utility of facial expressions proposed by BECV (Crivelli & Fridlund, 2018, 2019). The expressions were frequently described as communicating the expressors’ thoughts, needs, and intentions toward others, including deliberate attempts to convey information to, and influence the behaviour of, others. These findings support the current perspectives of both BET and BECV, which position facial expressions as complex, other-oriented social tools that are used to influence others’ behaviour and that reflect semantically related emotions.

Overall, the finding that physically varying sad expressions are interpreted as communicating a range of different meanings raises the question of whether people use this physical variation to infer distinct meanings. In the present study, participants frequently referenced physical features of the face and linked them to specific emotions (e.g., sad, distressed), intensities, and the perceived genuineness of the expressions. These features included tearfulness, specific facial features (e.g., eyes, pout), and different regions of the face (e.g., upper or lower half). This broadly aligns with past research linking physical characteristics of the face to perceptual judgements about expressions. Examples include the Duchenne marker as a signal of emotion authenticity for happiness and the eye region as a cue for sadness (Duchenne, 1990; Frank et al., 1993; Gunnery & Ruben, 2016; Wegrzyn et al., 2017). People might therefore use physical variation to infer internal states. However, this study cannot directly test this relationship because physical variation was not systematically controlled. Future research that controls for variation, either by systematically manipulating it across facial expressions or by coding naturally occurring variation, is needed to explore the links between specific physical features and inferred meanings. Stimuli and data from the current study are available open access and could support an initial exploration of this question by coding the relevant features in the faces.

Interestingly, participants’ general descriptions align reasonably well with the features previous research has linked to sadness, such as the triggers of loss, failure, and helplessness (e.g., Lench et al., 2016). Beyond these, the responses also referenced a wide range of emotion components, such as other triggers (here, “precipitants”), physiology (“physical descriptions”), and sensations (“emotion words”). Current findings suggest that nuanced physical variations may be linked to different interpretations of the underlying triggers, appraisals, physiology, motivations, and so on. This possibility parallels findings for smiles, where different smiles have been linked with different aspects of the “happiness” construct (Gunnery & Ruben, 2016; Krumhuber & Kappas, 2022; Niedenthal et al., 2010). In the present study, for example, “sad” expressions were interpreted as both inviting and repelling approach from others. This suggests that different facial expressions associated with the same emotion label (“sad”) elicit a wide range of perceptions, which are likely intertwined with heterogeneity in the underlying emotion construct. Thus, an important avenue for future research will be to link physical variation in the face with different interpretations.

It is important to note that while the themes catalogued in the present framework may reflect interpretations of sad facial expressions, they may also reflect the psychological construct of sadness itself. Emotion concepts, evident in language, shape our perceptions of facial expressions, so the two are naturally intertwined (Barrett et al., 2007, 2011; Fugate et al., 2010; Lindquist et al., 2006). Future research could explore thematic consistency across other types of “sad” stimuli, such as vignettes, to assess whether these themes generalise to the overarching psychological construct of sadness or are specific to sad faces.

Another question worth exploring in future research is whether there are sex differences in the meanings participants attribute to the expressions, or differences based on the sex of the faces. Research suggests women are faster and more accurate at facial expression recognition than men (Hall, 1978; Kret & De Gelder, 2012; Thompson & Voyer, 2014). It also suggests that the perception of certain expressions (e.g., happiness, fear, anger) is biased towards either female or male faces (Hess et al., 2009; Zebrowitz et al., 2010). Our open-access data could also be used for an initial exploration of participant- and stimulus-level sex differences in the meanings attributed to sad expressions.

Limitations of the Naturalistic Stimuli and Qualitative Research Design

This study focused on cataloguing the diverse meanings inferred by participants, rather than the accuracy of their perceptions. We selected expressions that were labelled as sad by the majority of participants in the pilot stage. To capture the physical variation found across more nuanced, naturalistic sad expressions, we also included some stimuli with less consistent labelling consensus, provided it was above 50%. However, as the naturalistic stimuli were sourced from YouTube, we do not know what the expressors were actually thinking, feeling, or intending. There is therefore no way to determine whether the attributed meanings accurately captured their experiences. Likewise, the posed stimuli came from actors instructed to activate specific sets of facial action units (AUs) in the absence of real felt emotion. However, this study was interested in people’s perceptions of the expressions, which are meaningful regardless of whether they are accurate. Because people usually do not have access to expressors’ true thoughts and feelings, their social behaviours are largely driven by perception (Hendriks & Vingerhoets, 2006; Sassenrath et al., 2017; Zickfeld et al., 2020). For the same reason, however, we cannot comment on whether the catalogued meanings reflect the expressors’ true experiences.

We removed the background context from the expression images to ensure we captured interpretations based solely on the physical features of the faces. However, in real life, people’s interpretations of facial expressions are influenced by the context in which they occur (Calbi et al., 2017; Hassin et al., 2013; Le Mau et al., 2021). Future research may benefit from considering the role of context in qualitative emotion interpretations.

The qualitative research design allowed participants to freely describe their interpretations. Even so, their responses may have been influenced by the research question, which explicitly asked about thoughts, feelings, and intentions, and by the example responses provided (Agee, 2009). We provided this research question and example responses to set expectations about the level of detail required. Participants still sometimes used single emotion words to describe the sad expressions. However, they also provided nuanced and rich descriptions, indicating this response style came naturally and with relative ease. The example responses were also specific to smiles, which reduced the likelihood that participants were primed with possible meanings of sad expressions. Overall, findings highlighted that while people may use emotion words as a shortcut for describing expressions, they can also provide a rich array of novel, nuanced, and detailed information when directly asked about the expressors’ thoughts, feelings, and intentions. Future research could examine whether participants’ implicit interpretations of sad expressions align with the explicit interpretations catalogued in this study.

Given the large body of evidence that culture, race, and past experience with faces impact expression perception (Benitez-Garcia et al., 2018; Elfenbein, 2013; Elfenbein & Ambady, 2002; Jack, 2013; Jack et al., 2012; Matsumoto, 1992; Matsumoto & Ekman, 1989), we matched the ethnicity of the study sample to that of the expressors shown in the stimuli. An interesting question is whether the findings would differ if participants of other ethnicities, or with experience of different cultures, were asked about the thoughts, feelings, and intentions of the expressors in the current stimulus set. Future research using stimuli that depict expressors of other ethnicities (such as those being collected through efforts like https://debruine.github.io/project/manyfaces/) is needed to determine the limits of the generalisability of the current framework.

Conclusions

To the best of our knowledge, the present study is the first to qualitatively catalogue the varied meanings attributed to a large set of sad facial expressions. Findings highlighted that while people frequently use single emotion words to describe facial expressions, they also provide a range of detailed, diverse, and complex meanings when directly asked about expressors’ thoughts, feelings, and behaviours. Altogether, the attributed meanings involved nuanced experiences of emotion that were sometimes mixed and transitioning, and included inferences about social intentions, behaviours, and context, which emphasised the role of facial expressions as complex other-oriented social tools that reflect blended and semantically related emotions. The current study provides an important and nuanced framework that creates a strong foundation for future research that seeks to explore how these varying perceptions influence ensuing response behaviours. The framework may also be used to explore differences based on culture, and whether people with neurodiverse social perspectives, such as those with autism, interpret and respond to facial expressions in unique ways.

Data and stimuli are available at https://osf.io/yc697/. This study was not preregistered.

References

Adams, R. B., & Kleck, R. E. (2003). Perceived gaze direction and the processing of facial displays of emotion. Psychological Science, 14(6), 644–647. https://doi.org/10.1046/j.0956-7976.2003.psci_1479.x.

Adams, R. B., & Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5(1), 3–11. https://doi.org/10.1037/1528-3542.5.1.3.

Al-Rawi, A., Siddiqi, M., Li, X., Vandan, N., & Grepin, K. (2021). A thematic analysis of Instagram’s gendered memes on COVID-19. Journal of Visual Communication in Medicine, 44(4), 137–150. https://doi.org/10.1080/17453054.2021.1941808.

Azungah, T. (2018). Qualitative research: Deductive and inductive approaches to data analysis. Qualitative Research Journal, 18(4), 383–400. https://doi.org/10.1108/QRJ-D-18-00035.

Balsters, M. J., Krahmer, E. J., Swerts, M. G., & Vingerhoets, A. J. (2013). Emotional tears facilitate the recognition of sadness and the perceived need for social support. Evolutionary Psychology, 11(1), 148–158. https://doi.org/10.1177/147470491301100114.

Barrett, L. F., Lindquist, K. A., & Gendron, M. (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11(8), 327–332. https://doi.org/10.1016/j.tics.2007.06.003.

Barrett, L. F., Mesquita, B., & Gendron, M. (2011). Context in emotion perception. Current Directions in Psychological Science, 20(5), 286–290. https://doi.org/10.1177/0963721411422522.

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68. https://doi.org/10.1177/1529100619832930.

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., & Tapp, R. (2014). Featural processing in recognition of emotional facial expressions. Cognition and Emotion, 28(3), 416–432. https://doi.org/10.1080/02699931.2013.833500.

Benitez-Garcia, G., Nakamura, T., & Kaneko, M. (2018). Multicultural facial expression recognition based on differences of Western-Caucasian and East-Asian facial expressions of emotions. IEICE Transactions on Information and Systems, E101.D(5), 1317–1324. https://doi.org/10.1587/transinf.2017MVP0025.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa.

Braun, V., & Clarke, V. (2014). What can thematic analysis offer health and wellbeing researchers? International Journal of Qualitative Studies on Health and Well-Being, 9(1), 26152. https://doi.org/10.3402/qhw.v9.26152.

Calbi, M., Heimann, K., Barratt, D., Siri, F., Umiltà, M. A., & Gallese, V. (2017). How context influences our perception of emotional faces: A behavioral study on the Kuleshov effect. Frontiers in Psychology, 8, 1684. https://doi.org/10.3389/fpsyg.2017.01684.

Carver, C. S., Scheier, M. F., & Weintraub, J. K. (1989). Assessing coping strategies: A theoretically based approach. Journal of Personality and Social Psychology, 56(2), 267–283. https://doi.org/10.1037/0022-3514.56.2.267.

Chadwick, P., Kaur, H., Swelam, M., Ross, S., & Ellett, L. (2011). Experience of mindfulness in people with bipolar disorder: A qualitative study. Psychotherapy Research, 21(3), 277–285. https://doi.org/10.1080/10503307.2011.565487.

Cowen, A. S., & Keltner, D. (2017). Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proceedings of the National Academy of Sciences, 114(38), E7900–E7909. https://doi.org/10.1073/pnas.1702247114.

Cowen, A. S., & Keltner, D. (2020). What the face displays: Mapping 28 emotions conveyed by naturalistic expression. American Psychologist, 75(3), 349–364. https://doi.org/10.1037/amp0000488.

Cowen, A. S., & Keltner, D. (2021). Semantic space theory: A computational approach to emotion. Trends in Cognitive Sciences, 25(2), 124–136. https://doi.org/10.1016/j.tics.2020.11.004.

Cowen, A. S., Elfenbein, H. A., Laukka, P., & Keltner, D. (2019). Mapping 24 emotions conveyed by brief human vocalization. American Psychologist, 74(6), 698–712. https://doi.org/10.1037/amp0000399.

Crivelli, C., & Fridlund, A. J. (2018). Facial displays are tools for social influence. Trends in Cognitive Sciences, 22(5), 388–399. https://doi.org/10.1016/j.tics.2018.02.006.

Crivelli, C., & Fridlund, A. J. (2019). Inside-out: From basic emotions theory to the behavioral ecology view. Journal of Nonverbal Behavior, 43(2), 161–194. https://doi.org/10.1007/s10919-019-00294-2.

Crivelli, C., & Gendron, M. (2017). Facial Expressions and Emotions in Indigenous Societies (Vol. 1). Oxford University Press. https://doi.org/10.1093/acprof:oso/9780190613501.003.0026.

Crivelli, C., Jarillo, S., Russell, J. A., & Fernández-Dols, J. M. (2016). Reading emotions from faces in two indigenous societies. Journal of Experimental Psychology: General, 145(7), 830.

Dawel, A., Wright, L., Irons, J., Dumbleton, R., Palermo, R., O’Kearney, R., & McKone, E. (2017). Perceived emotion genuineness: Normative ratings for popular facial expression stimuli and the development of perceived-as-genuine and perceived-as-fake sets. Behavior Research Methods, 49(4), 1539–1562. https://doi.org/10.3758/s13428-016-0813-2.

Dawel, A., Dumbleton, R., O’Kearney, R., Wright, L., & McKone, E. (2019). Reduced willingness to approach genuine smilers in social anxiety explained by potential for social evaluation, not misperception of smile authenticity. Cognition and Emotion, 33(7), 1342–1355. https://doi.org/10.1080/02699931.2018.1561421.

Dawel, A., Miller, E. J., Horsburgh, A., & Ford, P. (2021). A systematic survey of face stimuli used in psychological research 2000–2020. Behavior Research Methods, 54(4), 1889–1901. https://doi.org/10.3758/s13428-021-01705-3.

Deighton-Smith, N., & Bell, B. T. (2018). Objectifying fitness: A content and thematic analysis of #fitspiration images on social media. Psychology of Popular Media Culture, 7(4), 467–483. https://doi.org/10.1037/ppm0000143.

Duchenne, G. B. (1990). The mechanism of human facial expression. Cambridge University Press.

Ekman, P. (1970). Universal facial expressions of emotions. California Mental Health Research Digest, 8(4), 151–158.

Ekman, P. (1971). Universals and cultural differences in facial expressions of emotion. Nebraska Symposium on Motivation.

Ekman, P. (1985). Telling lies. W. W. Norton.

Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6(3–4), 169–200. https://doi.org/10.1080/02699939208411068.

Ekman, P. (1993). Facial expression and emotion. American Psychologist, 48(4), 384–392. https://doi.org/10.1037/0003-066X.48.4.384.

Ekman, P., & Cordaro, D. (2011). What is meant by calling emotions basic. Emotion Review, 3(4), 364–370. https://doi.org/10.1177/1754073911410740.

Ekman, P., & Friesen, W. V. (1969). The repertoire of nonverbal behavior: Categories, origins, usage, and coding. Semiotica, 1(1), 49–98. https://doi.org/10.1515/semi.1969.1.1.49.

Ekman, P., & Friesen, W. V. (1975). Unmasking the face: A guide to recognizing emotions from facial clues. Prentice Hall.

Ekman, P., & Friesen, W. V. (1982). Felt, false, and miserable smiles. Journal of Nonverbal Behavior, 6(4), 238–252. https://doi.org/10.1007/BF00987191.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system (FACS): Investigator’s guide. Research Nexus.

Elfenbein, H. A. (2013). Nonverbal dialects and accents in facial expressions of emotion. Emotion Review, 5(1), 90–96. https://doi.org/10.1177/1754073912451332.

Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128(2), 203–235. https://doi.org/10.1037/0033-2909.128.2.203.

Frank, M. G., & Ekman, P. (1993). Not all smiles are created equal: The differences between enjoyment and nonenjoyment smiles. Humor, 6(1), 9–26. https://doi.org/10.1515/humr.1993.6.1.9.

Frank, M. G., Ekman, P., & Friesen, W. V. (1993). Behavioral markers and recognizability of the smile of enjoyment. Journal of Personality and Social Psychology, 64(1), 83. https://doi.org/10.1037//0022-3514.64.1.83.

Fridlund, A. J. (1991). Evolution and facial action in reflex, social motive, and paralanguage. Biological Psychology, 32(1), 3–100. https://doi.org/10.1016/0301-0511(91)90003-Y.

Fugate, J. M. B., Gouzoules, H., & Barrett, L. F. (2010). Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10(4), 544–554. https://doi.org/10.1037/a0019017.

Gračanin, A., Krahmer, E., Balsters, M., Küster, D., & Vingerhoets, A. J. J. M. (2021). How weeping influences the perception of facial expressions: The signal value of tears. Journal of Nonverbal Behavior, 45(1), 83–105. https://doi.org/10.1007/s10919-020-00347-x.

Gunnery, S. D., & Ruben, M. A. (2016). Perceptions of Duchenne and non-Duchenne smiles: A meta-analysis. Cognition and Emotion, 30(3), 501–515. https://doi.org/10.1080/02699931.2015.1018817.

Hall, J. A. (1978). Gender effects in decoding nonverbal cues. Psychological Bulletin, 85(4), 845–857. https://doi.org/10.1037/0033-2909.85.4.845.

Hassin, R. R., Aviezer, H., & Bentin, S. (2013). Inherently ambiguous: Facial expressions of emotions, in context. Emotion Review, 5(1), 60–65. https://doi.org/10.1177/1754073912451331.

Hess, U., Adams, R. B., Grammer, K., & Kleck, R. E. (2009). Face gender and emotion expression: Are angry women more like men? Journal of Vision, 9(12), 19. https://doi.org/10.1167/9.12.19.

Huron, D. (2018). On the functions of sadness and grief. In H. C. Lench (Ed.), The function of emotions (pp. 59–91). Springer.

Ito, K., Ong, C. W., & Kitada, R. (2019). Emotional tears communicate sadness but not excessive emotions without other contextual knowledge. Frontiers in Psychology, 10, 878. https://doi.org/10.3389/fpsyg.2019.00878.

Jack, R. E. (2013). Culture and facial expressions of emotion. Visual Cognition, 21(9–10), 1248–1286. https://doi.org/10.1080/13506285.2013.835367.

Jack, R. E., Garrod, O. G. B., Yu, H., Caldara, R., & Schyns, P. G. (2012). Facial expressions of emotion are not culturally universal. Proceedings of the National Academy of Sciences, 109(19), 7241–7244. https://doi.org/10.1073/pnas.1200155109.

Jack, R. E., Garrod, O. G. B., & Schyns, P. G. (2014). Dynamic facial expressions of emotion transmit an evolving hierarchy of signals over time. Current Biology, 24(2), 187–192. https://doi.org/10.1016/j.cub.2013.11.064.

Jakobs, E., Manstead, A. S., & Fischer, A. H. (2001). Social context effects on facial activity in a negative emotional setting. Emotion, 1(1), 51. https://doi.org/10.1037/1528-3542.1.1.51.

Keller, M. C., & Nesse, R. M. (2006). The evolutionary significance of depressive symptoms: Different adverse situations lead to different depressive symptom patterns. Journal of Personality and Social Psychology, 91(2), 316–330. https://doi.org/10.1037/0022-3514.91.2.316.

Kret, M. E. (2015). Emotional expressions beyond facial muscle actions. A call for studying autonomic signals and their impact on social perception. Frontiers in Psychology, 6, 711. https://doi.org/10.3389/fpsyg.2015.00711.

Kret, M. E., & De Gelder, B. (2012). A review on sex differences in processing emotional signals. Neuropsychologia, 50(7), 1211–1221. https://doi.org/10.1016/j.neuropsychologia.2011.12.022.

Krieglmeyer, R., & Deutsch, R. (2013). Approach does not equal approach: Angry facial expressions evoke approach only when it serves aggression. Social Psychological and Personality Science, 4(5), 607–614. https://doi.org/10.1177/1948550612471060.

Krumhuber, E. G., & Kappas, A. (2022). More what Duchenne smiles do, less what they express. Perspectives on Psychological Science, 17(6), 1566–1575. https://doi.org/10.1177/17456916211071083.

Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174. https://doi.org/10.2307/2529310.

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H., Hawk, S. T., & Van Knippenberg, A. D. (2010). Presentation and validation of the Radboud faces database. Cognition and Emotion, 24(8), 1377–1388. https://doi.org/10.1080/02699930903485076.

Le Mau, T., Hoemann, K., Lyons, S. H., Fugate, J. M. B., Brown, E. N., Gendron, M., & Barrett, L. F. (2021). Professional actors demonstrate variability, not stereotypical expressions, when portraying emotional states in photographs. Nature Communications, 12(1), 5037. https://doi.org/10.1038/s41467-021-25352-6.