Abstract

A solid object in \(\mathbb {R}^3\) can be represented by its smooth boundary surface, which can be equipped with an intrinsic metric to form a 2-Riemannian manifold. In this paper, we analyze such surfaces using multiple metrics that give rise to multiple spectra by which a given surface can be characterized. The metrics differ in their sensitivity to different types of local structures, so each one provides a distinct perspective on the shape. Extensive experiments show that the proposed multi-metric approach significantly improves important tasks in geometry processing, such as shape retrieval and finding similar and corresponding parts of non-rigid objects.

1 Introduction

One way to analyze and process objects in 3D is to treat their shapes as 2-Riemannian manifolds describing their boundary surfaces. The metric chosen for such a surface determines fundamental properties of the shapes by which similarity and other quantities can be evaluated. For example, while two spheres with different radii are non-isometric when equipped with a regular Riemannian metric induced from \(\mathbb {R}^3\), they become isometric when the 2-manifold is equipped with a scale-invariant metric. This means that two surfaces can be isometrically equivalent with respect to one metric and isometrically different with respect to another.

Here, we propose to process a single smooth surface using multiple metrics which offer alternative interpretations of the same shape, each sensitive to different types of geometric structures. Specifically, our multi-metric approach is achieved by considering the spectral decomposition of Laplace–Beltrami operators (LBOs) defined with various metric tensors. We employ the regular and the scale-invariant metrics to illustrate the benefits of the proposed methodology in numerous shape analysis applications. In essence, the spectral decomposition of the LBO defined with the regular metric captures the surface structure as a whole, limiting its ability to capture fine details associated with local geometric structures of regions with high Gaussian curvature. At the other end, the spectral decomposition of the scale-invariant LBO (SI-LBO) is influenced mainly by curved regions, which usually contain significant semantic structures such as joints and fingertips that are essential for shape analysis when considering articulated objects. The complementary structures captured by the spectra resulting from each of the two metrics are the core of the proposed methodology.

Here, we extend the multi-metric approach introduced in [6], which promoted the concatenation of the spectra of the LBO based on the regular metric and of its scale-invariant version to characterize surfaces and match them to their parts. Section 2 establishes the necessary background in geometry processing. Section 3 presents the scale-invariant metric introduced in [2] and displays its effectiveness in representing articulated objects. Section 4 showcases the multi-metric paradigm in shape retrieval and explores an alternative approach to the proposed one, called self-functional map [16]. In a nutshell, the self functional map is a compact representation of a surface given by the inner product of eigenfunctions of Laplace–Beltrami operators defined by two different metrics. Section 5 demonstrates the accuracy of the proposed framework for matching similar regions between shapes [25] and introduces a novel learning-based initialization for computational efficiency. When dealing with shape retrieval, the multi-metric approach leads to improved separation of similar shapes, such as dogs and wolves. While trying to find the location of a part in a given shape, the alignment of the two spectra allows capturing details that may be missed when matching a single spectrum.

2 Background

Shapes as Riemannian Manifolds Let a shape be modeled by a 2-Riemannian manifold \(\mathcal {M} = (S,g)\), where S is a smooth two-dimensional surface embedded in \(\mathbb {R}^3\) and g is a metric tensor, also referred to as the first fundamental form. The metric tensor defines geometric quantities on the surface, such as lengths of curves and angles between vectors. Note that the same surface S with a different metric \(\tilde{g}\) can be used to define a different manifold \(\tilde{\mathcal {M}}= (S,\tilde{g})\).

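Consider a parametric surface \(S(u,v): \varOmega \subseteq \mathbb {R}^2 \rightarrow \mathbb {R}^3\), equipped with the regular intrinsic metric g,

\[ g = \begin{pmatrix} \langle S_u, S_u \rangle & \langle S_u, S_v \rangle \\ \langle S_v, S_u \rangle & \langle S_v, S_v \rangle \end{pmatrix}, \]

where \(S_u\) and \(S_v\) denote the partial derivatives of S with respect to u and v.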

An infinitesimal length element ds on the surface S can then be defined by,

\[ ds^2 = g_{11}\, du^2 + 2 g_{12}\, du\, dv + g_{22}\, dv^2. \]

2.1 The Laplace–Beltrami operator

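The Laplace–Beltrami operator (LBO) \({\varDelta _{g}}\) is a ubiquitous operator in shape analysis. It generalizes the Laplacian operator to Riemannian manifolds,

\[ \varDelta_g f \equiv \frac{1}{\sqrt{|g|}}\, \mathrm{div}\left( \sqrt{|g|}\, g^{-1} \nabla f \right), \qquad f \in \mathcal{L}^2(\mathcal{M}), \]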

where |g| is the determinant of g and \({\mathcal {L}^2(\mathcal {M})}\) stands for the Hilbert space of square-integrable scalar functions defined on \(\mathcal {M}\).

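The LBO admits a spectral decomposition under homogeneous Dirichlet boundary conditions,

\[ -\varDelta_g \phi_i(x) = \lambda_i \phi_i(x), \quad x \in \mathcal{M} \setminus \partial \mathcal{M}; \qquad \phi_i(x) = 0, \quad x \in \partial \mathcal{M}, \]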

where \(\partial \mathcal {M}\) stands for the boundary of manifold \(\mathcal {M}\). When considering an intrinsic metric, the set \({\{ \phi _i\}_{i\ge 0}}\) constitutes a basis invariant to isometric deformations. It can be regarded as a generalization of the Fourier basis [30].

Discretization In a discrete setting, S can be approximated, for example, by a triangulated mesh with n vertices. The discrete LBO can then be approximated by,

\[ \textbf{L} = \textbf{A}^{-1} \textbf{W}, \]

where \({\textbf {A}} \in \mathbb {R}^{n \times n}\), known as the mass matrix, contains an area element about each vertex, while \({\textbf {W}} \in \mathbb {R}^{n \times n}\) is the cotangent weight matrix [24]. The spectral decomposition of the discrete LBO can be computed as a solution of the generalized eigenvalue problem,

\[ \textbf{W} \phi_i = \lambda_i \textbf{A} \phi_i, \]

with homogeneous Dirichlet boundary conditions.
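The discretization above can be illustrated on a very small mesh. The following is a minimal sketch, not the authors' implementation: it assumes dense NumPy matrices, a lumped (diagonal) mass matrix with one third of each triangle area per vertex, and a closed octahedron as a hypothetical test shape, so no boundary condition is needed. The helper names `cotangent_laplacian` and `lbo_spectrum` are illustrative.

```python
import numpy as np

def cotangent_laplacian(verts, faces):
    """Return the cotangent stiffness matrix W and the lumped vertex areas."""
    n = len(verts)
    W = np.zeros((n, n))
    area = np.zeros(n)
    for tri in faces:
        a, b, c = (verts[i] for i in tri)
        tri_area = 0.5 * np.linalg.norm(np.cross(b - a, c - a))
        for s in range(3):
            i, j, k = tri[s], tri[(s + 1) % 3], tri[(s + 2) % 3]
            # Cotangent of the angle at vertex k, opposite to edge (i, j).
            e1, e2 = verts[i] - verts[k], verts[j] - verts[k]
            cot = np.dot(e1, e2) / np.linalg.norm(np.cross(e1, e2))
            W[i, j] -= 0.5 * cot
            W[j, i] -= 0.5 * cot
            W[i, i] += 0.5 * cot
            W[j, j] += 0.5 * cot
            area[i] += tri_area / 3.0  # one third of the triangle per vertex
    return W, area

def lbo_spectrum(W, area, k):
    """Solve W phi = lambda A phi through the symmetric form A^{-1/2} W A^{-1/2}."""
    s = 1.0 / np.sqrt(area)
    evals, U = np.linalg.eigh(s[:, None] * W * s[None, :])
    return evals[:k], s[:, None] * U[:, :k]  # columns satisfy phi^T A phi = 1

# Hypothetical test shape: a regular octahedron (closed surface).
verts = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0],
                  [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
faces = [(0, 2, 4), (2, 1, 4), (1, 3, 4), (3, 0, 4),
         (2, 0, 5), (1, 2, 5), (3, 1, 5), (0, 3, 5)]
W, area = cotangent_laplacian(verts, faces)
evals, phi = lbo_spectrum(W, area, k=6)
```

For meshes of realistic size, sparse matrices and an iterative eigensolver would be the natural choice; the dense version is kept here only for readability.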

2.2 The Hamiltonian Operator in Shape Analysis

In [10] Choukroun et al. adapted the well-known Hamiltonian operator \(\mathcal{H}_{g}\) from quantum mechanics to shape analysis,

\[ \mathcal{H}_g f = -\varDelta_g f + v f, \]

with \(v: S \rightarrow \mathbb {R}^{+}\). \(\mathcal{H}_{g}\) is a positive semi-definite operator that admits a spectral decomposition under homogeneous Dirichlet boundary conditions.

Fig. 1 Top: First eigenvectors of the LBO of the partial shape \(\mathcal {N}\). Bottom: First eigenvectors of the Hamiltonian of the full shape \(\mathcal {M}\). The Hamiltonian is defined with a step potential v (in black) corresponding to the effective support of \(\mathcal {N}\). With the potential v, the eigenfunctions of the LBO and the Hamiltonian are similar up to a sign

The Hamiltonian operator was first introduced to shape analysis in [10], see also [17]. For our discussion, the most important property of the Hamiltonian is,

Property 1

Let \(\mathcal {M} =(S,g) \) be a Riemannian manifold and \({v: S \rightarrow \mathbb {R}^{+}}\) a potential function. The eigenfunctions \(\phi _i\) of the Hamiltonian decay exponentially at every \({\hat{s} \in S}\) for which \({v(\hat{s}) > \lambda _i}\).

A proof can be found in [15] (Chapter 2.6); for one-dimensional examples, see [10]. Figure 1 illustrates that the potential can be considered as a mask determining the domain at which the LBO embedded in the Hamiltonian is effective. A second key property of the Hamiltonian is the differentiability of its eigenvalues with respect to its potential function [1].

Property 2

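The eigenvalues \(\{ \lambda _i \}_{i\ge 0}\) of the discretized Hamiltonian operator \(\textbf{H}\) are differentiable with respect to the potential v. Namely, for eigenvectors normalized such that \(\phi_i^{\top} \textbf{A} \phi_i = 1\),

\[ \frac{\partial \lambda_i}{\partial v} = \textbf{A}\, (\phi_i \otimes \phi_i), \]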

where \({\otimes }\) denotes the element-wise multiplication, \({\phi _i}\) the eigenvector corresponding to \(\lambda _i\) and A the mass matrix.

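Using the above notations, a discrete version of the Hamiltonian \({\textbf {H}}\) and the related eigendecomposition problem are given by,

\[ \textbf{H} = \textbf{A}^{-1} \textbf{W} + \textbf{V}, \qquad (\textbf{W} + \textbf{A} \textbf{V})\, \phi_i = \lambda_i \textbf{A} \phi_i, \]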

where \({\textbf {V}} \in \mathbb {R}^{n \times n}_{+}\) is a diagonal matrix containing the positive scalar values of the potential v at each vertex of the discretized surface.
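Both properties can be checked numerically on a toy problem. The sketch below is an illustration under stated assumptions, not the authors' code: a one-dimensional chain of nodes stands in for a mesh, the diagonal mass values are hypothetical, and the derivative formula assumes a diagonal mass matrix with eigenvectors normalized so that \(\phi_i^{\top} \textbf{A} \phi_i = 1\). It first shows that a step potential confines the first eigenfunction to the region where the potential vanishes, then compares the analytic derivative of the eigenvalues with central finite differences.

```python
import numpy as np

def hamiltonian_eig(W, area, v, k):
    """k smallest eigenpairs of (W + A V) phi = lambda A phi, with phi^T A phi = 1."""
    s = 1.0 / np.sqrt(area)
    # A and V are diagonal, so A^{-1/2} (W + A V) A^{-1/2} = A^{-1/2} W A^{-1/2} + V.
    evals, U = np.linalg.eigh(s[:, None] * W * s[None, :] + np.diag(v))
    return evals[:k], s[:, None] * U[:, :k]

# Toy stand-in for a shape: a chain of n nodes with hypothetical vertex areas.
n = 40
W = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
W[0, 0] = W[-1, -1] = 1.0
area = np.linspace(0.5, 1.5, n)

# Property 1: a step potential acts as a mask on the low eigenfunctions.
v_step = np.where(np.arange(n) < 15, 0.0, 100.0)
_, phi_step = hamiltonian_eig(W, area, v_step, k=3)
mass_inside = np.sum(area[:15] * phi_step[:15, 0] ** 2)  # close to 1

# Property 2: d lambda_i / d v = A (phi_i * phi_i), checked by finite differences.
v = 1.0 + np.sin(np.linspace(0.0, 3.0, n))
lam, phi = hamiltonian_eig(W, area, v, k=3)
analytic = area[:, None] * phi ** 2          # column i holds d lambda_i / d v
h = 1e-6
numeric = np.zeros_like(analytic)
for j in range(n):
    dv = np.zeros(n)
    dv[j] = h
    lam_plus = hamiltonian_eig(W, area, v + dv, 3)[0]
    lam_minus = hamiltonian_eig(W, area, v - dv, 3)[0]
    numeric[j] = (lam_plus - lam_minus) / (2.0 * h)
```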

3 Scale Invariance as a Measure of Choice

3.1 Scale-Invariant Metric

One version of a scale-invariant metric for surfaces utilizes the Gaussian curvature K as a local scaling of the regular metric elements. A scale-invariant pseudo-metric \(\tilde{g}\) can then be defined by its elements to be,

\[ \tilde{g}_{ij} = |K|\, g_{ij}, \]

where \(g_{ij}\) are the elements of the regular metric tensor. Adding a small positive constant \(\epsilon \in \mathbb {R}^+\) to the Gaussian curvature, so that \( \tilde{g}_{ij} = \sqrt{\epsilon +K^2} \, g_{ij}\), defines a proper metric. The LBO of a Riemannian manifold \(\tilde{\mathcal {M}}\), defined by the surface S equipped with the scale-invariant metric \(\tilde{g}\), is called the scale-invariant Laplace–Beltrami operator (SI-LBO). Since \(\tilde{g}\) is a point-wise rescaling of g on a two-dimensional surface, it reads

\[ \varDelta_{\tilde{g}} f = \frac{1}{\sqrt{\epsilon + K^2}}\, \varDelta_g f. \]

See [2] for more details.
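As a worked example of this definition, consider the two spheres of different radii mentioned in the introduction. The spherical parametrization \((\theta, \varphi)\) below is an assumption made for illustration, and \(\epsilon\) is set to zero.

```latex
% Sphere of radius r, parametrized by (\theta, \varphi):
g = r^2 \begin{pmatrix} 1 & 0 \\ 0 & \sin^2\theta \end{pmatrix},
\qquad K = \frac{1}{r^2}
\quad \Longrightarrow \quad
\tilde{g} = |K|\, g = \begin{pmatrix} 1 & 0 \\ 0 & \sin^2\theta \end{pmatrix}.
```

The radius cancels, so under the scale-invariant metric every sphere carries the metric of the unit sphere, which is why the two spheres become isometric.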

3.2 Scale-Invariant Metric and Articulated Objects

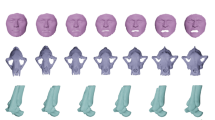

Fig. 2 Absolute value of the Gaussian curvature texture mapped to shapes from the FAUST [7] and TOSCA [9] datasets. Regions with large curvature magnitudes are darker and have a larger influence on the spectrum of the SI-LBO, according to Theorem 1. These regions contain important details that can be used to classify an object

The scale-invariant metric inflates semantically important regions in articulated objects. Figure 2 shows the curvature magnitude for some shapes from SHREC’16 [12]. In the human case, for instance, the scale-invariant metric accentuates the head, the hands and the feet, at the expense of flat regions such as the back and the legs. Intuitively, the scale-invariant metric shrinks intrinsically flat regions and inflates intrinsically curved ones (with effective Gaussian curvature). We formalize this intuition in the spectral domain with a direct generalization of the Weyl law for the SI-LBO.

Lemma 1

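Let \(\tilde{\mathcal {M}} = (S: \varOmega \subseteq \mathbb {R}^2 \rightarrow \mathbb {R}^3, \tilde{g})\) be a Riemannian manifold defined with the scale-invariant metric tensor \({\tilde{g}}\) and \(\{\tilde{\lambda }_i \}_{i\ge 1}\) be the spectrum of the scale-invariant LBO of \(\tilde{\mathcal {M}}\). It holds, \(\tilde{\lambda }_i \sim \frac{4\pi i}{\int _{\omega \in \varOmega } |K(\omega )| {\textrm{d}}a(\omega )}\), where \(\sim \) stands for asymptotic equality.

Proof

According to the Weyl law,

\[ \tilde{\lambda}_i \sim \frac{4\pi i}{\int_{\varOmega} {\textrm{d}}\tilde{a}} = \frac{4\pi i}{\int_{\varOmega} \sqrt{|\tilde{g}|}\, du\, dv} = \frac{4\pi i}{\int_{\varOmega} |K| \sqrt{|g|}\, du\, dv} = \frac{4\pi i}{\int_{\omega \in \varOmega} |K(\omega)|\, {\textrm{d}}a(\omega)}, \]

where \({\textrm{d}}a = \sqrt{|g|}\, du\, dv\) is an area element of the regular metric, and \(\sqrt{|\tilde{g}|} = |K| \sqrt{|g|}\) because \(\tilde{g}_{ij} = |K|\, g_{ij}\) is a \(2 \times 2\) tensor. \(\square \)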

Next, a simple perturbation analysis expresses the influence of a local change in the Gaussian curvature on the spectrum of the SI-LBO.

Theorem 1

Let \(p \in \tilde{\mathcal {M}}\) be a point with a positive Gaussian curvature and B(p) a neighborhood of p where the Gaussian curvature satisfies \(K(p')\ge 0\) for all \(p' \in B(p)\). Consider a local perturbation in the Gaussian curvature such that \(\int _{B(p) \subset \tilde{\mathcal {M}}'} |K(\omega )| {\textrm{d}}a(\omega )\) = \(\int _{B(p) \subset \tilde{\mathcal {M}}} |K(\omega )| {\textrm{d}}a(\omega ) + \epsilon \), where \(\tilde{\mathcal {M}}'\) stands for the perturbed manifold and \(\epsilon >0\). The relative perturbation of the Gaussian curvature, denoted as \(\delta K\), is defined by \( \frac{\epsilon }{ \int _{\varOmega } |K(\omega )| {\textrm{d}}a(\omega )}\), and the relative perturbation of the spectrum, denoted as \(\delta \tilde{\lambda }_{i}\), is given by \(\frac{\tilde{\lambda }_i - \tilde{\mu }_i}{\tilde{\lambda }_i}\), where \(\tilde{\mu }_i\) is the \(i^{th}\) eigenvalue of the perturbed manifold. It holds \(\delta \widetilde{\lambda }_i \sim \delta K \,\).

Proof

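Using Lemma 1, both \(\tilde{\lambda}_i\) and \(\tilde{\mu}_i\) are asymptotically inversely proportional to the total absolute curvature of their respective manifolds, with the same constant, so that

\[ \frac{\tilde{\mu}_i}{\tilde{\lambda}_i} \sim \frac{\int_{\varOmega} |K(\omega)|\, {\textrm{d}}a(\omega)}{\int_{\varOmega} |K(\omega)|\, {\textrm{d}}a(\omega) + \epsilon}. \]

Therefore,

\[ \delta \tilde{\lambda}_i = 1 - \frac{\tilde{\mu}_i}{\tilde{\lambda}_i} \sim \frac{\epsilon}{\int_{\varOmega} |K(\omega)|\, {\textrm{d}}a(\omega) + \epsilon}, \]

which agrees with \(\delta K\) to first order in \(\epsilon\). \(\square \)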

Theorem 1 implies that the SI-LBO spectrum is mostly determined by regions with effective Gaussian curvature. Empirical observations indicate that this property also extends to the modes of the SI-LBO, with phase transitions being notably concentrated, or compressed, in curved regions [16]. Consequently, modes associated with the leading (smallest) eigenvalues effectively capture intricate structures, such as fingertips.

Discretization The Gaussian curvature K is estimated using the Gauss–Bonnet formula, which is subject to discretization artifacts. To mitigate these effects, we smooth the result by computing the average of the approximated Gaussian curvatures at the first ring neighbors of each vertex. Following [2, 8, 16], we adopt an intermediate metric between the Euclidean and the scale-invariant one,

\[ \tilde{g}_{ij} = \left( \sqrt{\epsilon + K^2} \right)^{\alpha} g_{ij}, \]

where \(\alpha \in [0, 1]\), and \(\epsilon \in \mathbb {R}^+\) a small positive number that prevents the metric tensor from vanishing. Selecting \(\alpha =0\) yields the regular Riemannian metric while \(\alpha =1\) the scale-invariant one. This intermediate metric effectively trades off between sensitivity to global and local structures as defined by the Gaussian curvature. The interpolation factor \(\alpha \) maintains a balance between capturing fine high curvature details while ensuring robustness to discretization and geometric high-frequency noise.
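The discretization described above can be sketched as follows. This is a minimal illustration under several assumptions that are not confirmed by the text: the Gaussian curvature is estimated by the angle defect divided by the lumped vertex area on a closed mesh, the first-ring smoothing step is omitted, the interpolated metric is taken as \((\epsilon + K^2)^{\alpha /2}\, g\), and the scale-invariant operator is discretized by rescaling the mass matrix while keeping the cotangent weights. All function names are hypothetical.

```python
import numpy as np

def cotangent_laplacian(verts, faces):
    """Cotangent stiffness matrix W and lumped vertex areas of a triangle mesh."""
    n = len(verts)
    W, area = np.zeros((n, n)), np.zeros(n)
    for tri in faces:
        a, b, c = (verts[i] for i in tri)
        tri_area = 0.5 * np.linalg.norm(np.cross(b - a, c - a))
        for s in range(3):
            i, j, k = tri[s], tri[(s + 1) % 3], tri[(s + 2) % 3]
            e1, e2 = verts[i] - verts[k], verts[j] - verts[k]
            cot = np.dot(e1, e2) / np.linalg.norm(np.cross(e1, e2))
            W[i, j] -= 0.5 * cot
            W[j, i] -= 0.5 * cot
            W[i, i] += 0.5 * cot
            W[j, j] += 0.5 * cot
            area[i] += tri_area / 3.0
    return W, area

def angle_defect_curvature(verts, faces, area):
    """Discrete Gaussian curvature on a closed mesh: (2 pi - sum of angles) / area."""
    defect = np.full(len(verts), 2.0 * np.pi)
    for tri in faces:
        for s in range(3):
            k, i, j = tri[s], tri[(s + 1) % 3], tri[(s + 2) % 3]
            e1, e2 = verts[i] - verts[k], verts[j] - verts[k]
            cos_angle = np.dot(e1, e2) / (np.linalg.norm(e1) * np.linalg.norm(e2))
            defect[k] -= np.arccos(np.clip(cos_angle, -1.0, 1.0))
    return defect / area

def interpolated_spectrum(verts, faces, alpha, eps, k):
    """Spectrum for the metric (eps + K^2)^(alpha / 2) g (assumed discretization)."""
    W, area = cotangent_laplacian(verts, faces)
    K = angle_defect_curvature(verts, faces, area)
    area_si = (eps + K ** 2) ** (alpha / 2.0) * area   # rescaled mass matrix
    s = 1.0 / np.sqrt(area_si)
    return np.linalg.eigh(s[:, None] * W * s[None, :])[0][:k]

# Hypothetical test shape: a regular octahedron and a copy scaled by 3.
verts = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0],
                  [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
faces = [(0, 2, 4), (2, 1, 4), (1, 3, 4), (3, 0, 4),
         (2, 0, 5), (1, 2, 5), (3, 1, 5), (0, 3, 5)]
si_small = interpolated_spectrum(verts, faces, alpha=1.0, eps=0.0, k=6)
si_large = interpolated_spectrum(3.0 * verts, faces, alpha=1.0, eps=0.0, k=6)
reg_small = interpolated_spectrum(verts, faces, alpha=0.0, eps=0.0, k=6)
reg_large = interpolated_spectrum(3.0 * verts, faces, alpha=0.0, eps=0.0, k=6)
```

With \(\alpha = 1\) the two spectra coincide, whereas with \(\alpha = 0\) the spectrum of the scaled copy shrinks by the square of the scale factor.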

4 Multi-metric Approaches for Shape Retrieval

4.1 Dual Spectra for Shape Retrieval

Kac [18] published the seminal paper “Can one hear the shape of a drum?”. There, he formulated a central question in geometry processing: Is it possible to recover the exact geometry of a shape from its LBO spectrum? Two decades later, Gordon et al. [14] found a pair of non-isometric 2D polygons with the same spectra, see Fig. 3. While examples of different manifolds with identical spectra are known, they remain specific. Conversely, non-trivial classes of manifolds, such as analytic bi-axisymmetric plane domains [33], have been shown to be fully determined by their spectra. In 2005, Reuter et al. [26] proposed the eigenvalues of the LBO, named ShapeDNA, as a compact and intrinsic signature for 3D shapes. The spectrum recently regained a great deal of attention in the geometry processing community, and a line of recent papers using it demonstrated excellent empirical results on shapes encountered in real-world scenarios [4, 11, 20, 22, 23]. We extend this line of thought and consider the concatenation of the leading eigenvalues of the LBO and of the SI-LBO, denoted \({\{\lambda _{i}\}^{k}_{i=1}}\) and \({\{\tilde{\lambda }_i\}^{k}_{i=1}}\), respectively, as a compact shape descriptor.

As argued in Sect. 3, leading eigenvalues in the spectrum of the SI-LBO are influenced by regions with high Gaussian curvature, like joints and fingertips, which are essential in representing articulated shapes. The leading eigenvalues of the LBO, on the other hand, treat all surface points alike and are thus less sensitive to these geometric structures. We normalize each truncated spectrum by its \(L_2\) norm to ensure balance between them. As shown in Fig. 4, the incorporation of the SI-LBO results in a clear separation of shape classes from TOSCA [9], such as humans and quadrupeds like horses, cats, dogs, and wolves, that cannot be separated when only a single LBO spectrum is considered. Figure 5 shows a cluster separation metric as a function of \({\alpha }\) on TOSCA [9]. Based on Fig. 5, we set \(\alpha = 0.33\) and use \(\epsilon =10^{-8}\).
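The descriptor and the separation measure discussed above can be sketched in a few lines. This is a hedged illustration: the function names are hypothetical, the input eigenvalues are made-up numbers, and the separation ratio (mean inter-class distance over mean intra-class distance) is computed directly on the descriptors, whereas the article evaluates it in the space obtained by multidimensional scaling.

```python
import numpy as np

def dual_spectrum_descriptor(lbo_evals, si_evals, k):
    """Concatenate the k leading LBO and SI-LBO eigenvalues, each L2-normalized."""
    lam = np.asarray(lbo_evals, dtype=float)[:k]
    lam_si = np.asarray(si_evals, dtype=float)[:k]
    return np.concatenate([lam / np.linalg.norm(lam),
                           lam_si / np.linalg.norm(lam_si)])

def separation_ratio(descriptors, labels):
    """Mean inter-class distance divided by mean intra-class distance."""
    X = np.asarray(descriptors, dtype=float)
    y = np.asarray(labels)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    upper = np.triu_indices(len(y), k=1)          # each unordered pair once
    same_class = (y[:, None] == y[None, :])[upper]
    return D[upper][~same_class].mean() / D[upper][same_class].mean()

# Made-up eigenvalues and points, only to exercise the two functions.
desc = dual_spectrum_descriptor([1.0, 2.0, 5.0], [3.0, 4.0, 9.0], k=2)
ratio = separation_ratio([[0, 0], [0, 1], [10, 0], [10, 1]], [0, 0, 1, 1])
```

A ratio well above one indicates that shapes of the same class lie closer to each other than to shapes of other classes.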

Fig. 4 2D multidimensional scaling (MDS) of distances between truncated spectra of the LBO (top-left), of the SI-LBO (top-right) and of the LBO spectrum together with that of the SI-LBO (bottom) for shapes from TOSCA [9]. Points with the same colors represent almost isometric shapes, at different poses, of the same class

Fig. 5 Considering the space defined by the MDS of the spectra of TOSCA shapes (Fig. 4), we observe the variation of a cluster separation metric with respect to the \(\alpha \) parameter in the scale-invariant metric. The metric considered is the ratio of the mean inter-class distance to the mean intra-class distance. The graph has been smoothed for better visualization and normalized by the ratio obtained for \(\alpha =0\), when only the regular intrinsic metric is considered

4.2 Self Functional Maps

Halimi et al. [16] proposed the self functional map, an intrinsic shape signature building upon the functional map formulation. In a nutshell, a self functional map is a functional map between two Riemannian manifolds sharing the same underlying surface but with different intrinsic metrics. It can be efficiently computed as the inner product of eigenfunctions of Laplace–Beltrami operators induced by two different metrics,

\[ C_{ij} = \langle \phi_i, \tilde{\phi}_j \rangle, \]

where the corresponding eigenvectors are given by \(-\varDelta _g \phi _i = \lambda _i\phi _i\) and \(-\varDelta _{{\tilde{g}}} \tilde{\phi }_i = \tilde{\lambda }_i\tilde{\phi }_i\), respectively. This signature treats a single surface with multiple metrics and follows a similar line of thought as the one we promote in this paper. Indeed, using self functional maps with the regular and scale-invariant metrics allowed classification [16], and later on even matching [8], of non-rigid shapes, showcasing the effectiveness of the multi-metric paradigm.
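A discrete self functional map can be sketched as a small matrix of inner products. The code below is an illustration under assumptions that the text does not settle: both operators share the same stiffness matrix and differ only in their diagonal mass matrices, and the inner product is taken with respect to the area element of the regular metric. A chain of nodes with hypothetical masses stands in for a mesh.

```python
import numpy as np

def eigenbasis(W, area, k):
    """k leading eigenpairs of W phi = lambda A phi, normalized so phi^T A phi = 1."""
    s = 1.0 / np.sqrt(area)
    evals, U = np.linalg.eigh(s[:, None] * W * s[None, :])
    return evals[:k], s[:, None] * U[:, :k]

def self_functional_map(W, area, area_tilde, k):
    """C[i, j] = <phi_i, phi_tilde_j>, weighted by the regular area (an assumption)."""
    _, phi = eigenbasis(W, area, k)
    _, phi_tilde = eigenbasis(W, area_tilde, k)
    return phi.T @ (area[:, None] * phi_tilde)

# Toy stand-in for a surface: a chain of n nodes with two sets of vertex masses.
n = 30
W = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
W[0, 0] = W[-1, -1] = 1.0
area = np.ones(n)
area_tilde = np.linspace(0.5, 2.0, n)   # hypothetical curvature-scaled masses

C_same = self_functional_map(W, area, area, k=5)        # identical metrics
C = self_functional_map(W, area, area_tilde, k=5)       # two different metrics
```

When the two metrics coincide the map reduces to the identity, so its deviation from the identity encodes how the two metrics differ on the shape.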

Fig. 6 Region localization method with multi-metric and single metric approaches. Left frame: Whole non-rigid shape and its part used for the partial shape matching task. Next frame depicts the matching result with the proposed multi-metric approach (left red patch) compared to the single metric [25] method (right red patch). Light-green: Canonical embedding [29] of the tail and the foreleg (blue and red ellipses, respectively) under the regular metric and the SI one (left and right circles, respectively). A leg is similar to the tail when considering the regular metric. The SI metric emphasizes the fine geometric discrepancies between a leg and the tail. These fine details explain the correct matching obtained by the multi-metric approach

5 Region Localization and Partial Shape Matching

5.1 Dual Spectra Alignment for Region Localization

In this section, we introduce a framework to find the effective support of a part in a shape, as illustrated in Fig. 6. Using Property 1, we reduce the localization of a partial shape within a full shape to a search for a Hamiltonian’s potential v that represents the effective support of the partial shape. Within our framework, v is determined through the minimization of an alignment cost of the spectra of Hamiltonian operators defined on the full shape with the spectra of the Laplace–Beltrami operators defined on the partial shape.

Notations The full shape equipped with the regular metric is denoted by \({\mathcal {M}=(S_f, g)}\) and the spectrum of the Hamiltonian defined over \({\mathcal {M}}\) by \({\{\lambda _i\}^{k}_{i=1}}\) with \({\lambda _1 \le \cdots \le \lambda _k}\). \({\, \tilde{\mathcal {M}}=(S_f, \tilde{g})}\) stands for the full shape defined with the scale-invariant metric and \({\{\tilde{\lambda }_i\}^{k}_{i=1}}\), with \({\tilde{\lambda }_1 \le \cdots \le \tilde{\lambda }_k}\), for the spectrum of the scale-invariant Hamiltonian. We denote by \({\varPhi \in \mathbb {R}^{n \times k}}\) and \({\tilde{\varPhi } \in \mathbb {R}^{n \times k}}\) the k first eigenfunctions of the Hamiltonian and of the scale-invariant Hamiltonian, respectively. In the same way, the partial shape equipped with the regular and the scale-invariant metrics are referred to as \({\mathcal {N}=(S_p, g), \, \tilde{\mathcal {N}}=(S_p, \tilde{g})}\). The spectra of the LBO and of the SI-LBO are, respectively, denoted by \({\{\mu _i\}^{k}_{i=1}}\) and by \({\{\tilde{\mu }_i\}^{k}_{i=1}}\), with \({\mu _1 \le \cdots \le \mu _k}\) and \({\tilde{\mu }_1 \le \cdots \le \tilde{\mu }_k}\).

Optimization We consider a cost function that measures the alignment of the LBO spectrum of \(\mathcal {N}\) and the SI-LBO spectrum of \(\tilde{\mathcal {N}}\) with the spectra of the regular and the scale-invariant Hamiltonians of \(\mathcal {M}\) and \(\tilde{\mathcal {M}}\). Namely,

\[ f(v) = \Vert \lambda(v) - \mu \Vert_w^2 + \Vert \tilde{\lambda}(v) - \tilde{\mu} \Vert_w^2. \tag{18} \]

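Following [25], the weighted L2 norm \({\Vert .\Vert _w}\) is defined as,

\[ \Vert \lambda - \mu \Vert_w^2 = \sum_{i=1}^{k} \frac{1}{\mu_i^2} (\lambda_i - \mu_i)^2, \tag{19} \]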

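to mitigate the weight given to high frequencies. The cost function Eq. (18), denoted here by f(v), induces a constrained optimization problem,

\[ \min_{v \in \mathbb{R}^n} f(v) \quad \text{s.t.} \quad v \ge 0, \tag{20} \]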

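which is differentiable with respect to v according to Property 2. Denoting by \(\partial \lambda / \partial v\) and \(\partial \tilde{\lambda} / \partial v\) the \(n \times k\) matrices whose columns are the derivatives of the eigenvalues given by Property 2, and taking powers of vectors element-wise, the chain rule gives,

\[ \nabla_v f = 2\, \frac{\partial \lambda}{\partial v} \left( (\lambda - \mu) \oslash \mu^2 \right) + 2\, \frac{\partial \tilde{\lambda}}{\partial v} \left( (\tilde{\lambda} - \tilde{\mu}) \oslash \tilde{\mu}^2 \right), \]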

where \(\oslash \) stands for point-wise division. To simplify the optimization process, we minimize an unconstrained relaxation of Eq. (20), with f the cost function of Eq. (18),

\[ \min_{v \in \mathbb{R}^n} f(q(v)), \tag{22} \]

where \(q: \mathbb {R} \rightarrow \mathbb {R}^{+}\) is a smooth saturation function, applied element-wise and set as \(q(x) = 10 \, \mu _k\, (\text {tanh}(x) + 1)\). Equation (22) is then minimized with a trust-region procedure [32], a first-order optimization algorithm.
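The optimization described above can be sketched on a toy problem. The code below is an illustration and not the authors' implementation. It assumes the weighted norm \(\sum_i (\lambda_i - \mu_i)^2 / \mu_i^2\), diagonal mass matrices, and a saturation \(q(x) = c\,(\tanh (x) + 1)\) with c set to ten times the largest target eigenvalue of the regular metric. A plain normalized gradient descent with backtracking replaces the trust-region solver, and a chain of nodes with hypothetical masses replaces the mesh, the part being its first m nodes.

```python
import numpy as np

def hamiltonian_eig(W, area, v, k):
    """k smallest eigenpairs of (W + A V) phi = lambda A phi, with phi^T A phi = 1."""
    s = 1.0 / np.sqrt(area)
    evals, U = np.linalg.eigh(s[:, None] * W * s[None, :] + np.diag(v))
    return evals[:k], s[:, None] * U[:, :k]

def cost_and_grad(x, metrics, c):
    """Dual-spectra cost f(q(x)) and its gradient with respect to x."""
    t = np.tanh(x)
    v = c * (t + 1.0)                         # q(x) keeps the potential positive
    cost, grad_v = 0.0, np.zeros_like(x)
    for W, area, mu in metrics:               # one (W, A, target spectrum) per metric
        lam, phi = hamiltonian_eig(W, area, v, len(mu))
        r = (lam - mu) / mu ** 2              # (lambda - mu) divided point-wise by mu^2
        cost += np.sum((lam - mu) * r)
        grad_v += 2.0 * area * (phi ** 2 @ r) # Property 2 combined with the chain rule
    return cost, grad_v * c * (1.0 - t ** 2)  # dq/dx = c (1 - tanh^2 x)

def minimize(x, metrics, c, iters=100):
    """Normalized gradient descent with backtracking (stand-in for trust region)."""
    f, g = cost_and_grad(x, metrics, c)
    history = [f]
    for _ in range(iters):
        d = g / (np.linalg.norm(g) + 1e-300)
        step = 1.0
        while step > 1e-12:
            f_new, g_new = cost_and_grad(x - step * d, metrics, c)
            if f_new < f:                     # accept the first step that decreases f
                x, f, g = x - step * d, f_new, g_new
                break
            step *= 0.5
        history.append(f)
    return x, history

# Toy full shape: a chain of n nodes. Toy part: its first m nodes.
n, m, k = 24, 10, 4
W = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
W[0, 0] = W[-1, -1] = 1.0
area = np.ones(n)                             # regular metric (hypothetical masses)
area_si = np.linspace(0.5, 2.0, n)            # stand-in for curvature-scaled masses
no_potential = np.zeros(m)
# Target spectra of the part, with a Dirichlet condition at the cut.
mu = hamiltonian_eig(W[:m, :m], area[:m], no_potential, k)[0]
mu_si = hamiltonian_eig(W[:m, :m], area_si[:m], no_potential, k)[0]
c = 10.0 * mu[-1]
metrics = [(W, area, mu), (W, area_si, mu_si)]
x_opt, history = minimize(np.zeros(n), metrics, c)
v_opt = c * (np.tanh(x_opt) + 1.0)            # recovered potential
```

The analytic gradient makes each iteration cost only two small eigendecompositions, which is the practical benefit of Property 2 in this setting.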

Initialization Strategies We consider two initialization strategies for our iterative method. The first follows the initialization procedure described in [6, 25] where the proposed iterative method is performed over multiple initial potentials. The solution is selected by comparing the projections of the SHOT descriptors [28] onto the first eigenfunctions of the regular and the scale-invariant Hamiltonians of the full shape, with the projection of SHOT descriptors onto the first eigenfunctions of the LBO and the SI-LBO of the partial shape.

While this initialization strategy yields accurate results, it is computationally intensive due to the need to perform the iterative procedure multiple times. It also relies on the extrinsic SHOT descriptors, which may be sensitive to changes in shape discretization [3] and pose. To address these limitations, we explore an additional initialization scheme that relies on a learning method such as DPFM [3]. Deep neural networks for partial shape matching have the advantage of computationally efficient inference, high precision scores, and no reliance on extrinsic descriptors. The learning method is used to generate a single initial potential to which the proposed iterative dual-spectra alignment procedure is then applied. This initialization strategy, called learning initialization, also avoids the use of the extrinsic SHOT features altogether. In Sect. 5.2, we show that this alternative initialization effectively balances quality and computational cost when prior data is available for training a learning-based model.

Learning-based initialization. Illustration of the learning initialization policy on non-isometric shapes from PFARM [19] (a) and shapes from SHREC’16 [12] (b). Left: Partial shape. Middle: Prediction of DPFM [3]. Right: Prediction of the proposed framework initialized with the mask generated by DPFM [3]. IoU is reported behind each shape

5.2 Experiments

We assess the dual-spectra alignment method on SHREC’16 Partial Matching Benchmark (CUTS) [12]. SHREC’16 CUTS includes 120 partial shapes from 8 distinct classes which are obtained by cutting non-rigid shapes with random planes. We adopt the geometric intersection over union (IoU) as our primary evaluation metric and compare the proposed method to competing state of the art frameworks for partial shape similarity. The axiomatic methods we assess are the spectrum alignment procedure proposed by Rampini et al. [25], partial functional correspondences (PFC) [27], and a bag-of-words aggregation [31] of SHOT descriptors [28]. We also consider DPFM [3], a state-of-the-art learning-based method for partial shape matching. For DPFM [3], SHREC’16 CUTS [12] was split into three folds. Training was performed on two folds, keeping each time a third fold apart for evaluation.
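The evaluation metric can be sketched as follows. This is a hedged illustration: it assumes that the geometric IoU weights each vertex by its area element, so that the score measures overlapping surface area rather than vertex counts. The function name and the example values are hypothetical.

```python
import numpy as np

def geometric_iou(mask_pred, mask_true, area):
    """Area-weighted intersection over union of two binary vertex masks."""
    p = np.asarray(mask_pred, dtype=bool)
    t = np.asarray(mask_true, dtype=bool)
    a = np.asarray(area, dtype=float)
    union = a[p | t].sum()
    if union == 0.0:                 # both masks empty: treated as a perfect match
        return 1.0
    return a[p & t].sum() / union

# Made-up masks on four vertices, the second vertex having twice the area.
iou = geometric_iou([1, 1, 0, 0], [0, 1, 1, 0], [1.0, 2.0, 1.0, 1.0])
```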

As detailed in Table 1, our approach outperforms existing methods, advancing the current state of the art on SHREC’16 CUTS by 12.4%. Although DPFM [3] and PFC [27] reach commendable precision, their recall is compromised by the many-to-one correspondences that arise when deriving a point-to-point map from a functional map, as discussed in [5]. Figure 7 illustrates the advantage of the multi-metric methodology in capturing important parts, such as the head of the third object or the tail of the centaur, which are missed by other methods, including the single metric approach [25].

To fairly compare our approach to recent learning methods, we measure the zero-shot performance of DPFM trained on SHREC’16 CUTS on a challenging test set called PFARM [3, 19]. PFARM [3, 19] extends the FARM test set [19]. It encompasses 27 test pairs of approximately isometric human subjects, each presenting distinct connectivity and vertex density. Table 1 demonstrates that the proposed method has a significant advantage when processing unknown shapes in challenging setups that mirror real-world applications.

Consistency analysis To evaluate the consistency of the proposed method and its robustness with respect to changes in sampling density, we conduct an experiment on sub-sampled meshes. Each shape in SHREC’16 CUTS was re-sampled using a standard mesh decimation procedure [13] to obtain 50%, 25%, and 10% of the original number of vertices. Figure 8 shows the results of the proposed method applied to shapes with varying sampling density and mesh resolution. There is only a moderate decrease in performance when 90% of the vertices are removed.

Learning-Based Initialization Here, we evaluate the learning-based initialization strategy. Unlike the alternative multi-initialization procedure, which involves the optimization of 41 initial potentials, it utilizes a learning procedure to generate a single potential for optimization. This reduction in the number of potentials results in a significant reduction in computational cost while maintaining high performance on partial shape matching, as demonstrated by the IoU scores of 0.705 on SHREC’16 [12] and 0.526 on PFARM [3, 19].

The learning initialization can be considered as a refinement method. Table 2 compares its performance with that of ZoomOut [21], a standard refinement procedure for shape and partial shape matching used in [3]. Figure 9 displays the power of the proposed method in refining masks generated by DPFM [3].

5.3 Ablation Study: Multi-metric and Single Metric

Fig. 10 Multi-metric versus single metric approach. Comparison of the proposed multi-metric approach, which includes the spectra of both the LBO and the SI-LBO, with methods based only on the spectrum of the LBO or only on the spectrum of the SI-LBO. The plot shows the cumulative score of each setup tested on SHREC’16 [12]. The multi-metric approach reaches a mean IoU of 0.75. For the single metric approach involving only the LBO, the mean IoU is 0.71. When using only the SI-LBO, the mean IoU is 0.7

To quantify the benefits of the multi-metric approach proposed for region localization, we compare the alignment of single and multiple spectra while considering an equal number of eigenvalues for each. Figure 10 shows the cumulative IoU of the proposed framework applied to SHREC’16 [12] while using 20 eigenvalues of the LBO and 20 eigenvalues of the SI-LBO. It is compared to one setup with 40 eigenvalues of the LBO (without SI-LBO) and to a second setup with 40 eigenvalues of the SI-LBO (without LBO). The region alignment problems are thereby solved with the same number of constraints for each problem. Figure 10 shows that the multi-metric approach clearly outperforms single metric approaches.

6 Conclusion

We introduced a novel approach that marries the regular and scale-invariant metrics. The promoted multi-Riemannian metric approach of the same manifold was shown to improve geometry processing tasks. Future research directions include extending the proposed approach to flat domains with vanishing Gaussian curvature and learning metrics adapted to specific classes of shapes.

References

1. Abou-Moustafa, K.T.: Differentiating Eigenvalues and Eigenvectors. McGill Technical Report (2009)

2. Aflalo, Y., Kimmel, R., Raviv, D.: Scale invariant geometry for nonrigid shapes. SIAM J. Imaging Sci. 6(3), 1579–1597 (2013)

3. Attaiki, S., Pai, G., Ovsjanikov, M.: DPFM: deep partial functional maps. In: International Conference on 3D Vision, pp. 175–185. IEEE (2021)

4. Aumentado-Armstrong, T., Tsogkas, S., Dickinson, S., Jepson, A.: Disentangling geometric deformation spaces in generative latent shape models. Int. J. Comput. Vis. 131(7), 1611–1641 (2023)

5. Bensaïd, D., Bracha, A., Kimmel, R.: Partial shape similarity by multi-metric Hamiltonian spectra matching. In: International Conference on Scale Space and Variational Methods in Computer Vision, pp. 717–729. Springer (2023)

6. Bensaïd, D., Rotstein, N., Goldenstein, N., Kimmel, R.: Partial matching of nonrigid shapes by learning piecewise smooth functions. In: Computer Graphics Forum, vol. 42, pp. 14913. Wiley Online Library (2023)

7. Bogo, F., Romero, J., Loper, M., Black, M.J.: Faust: dataset and evaluation for 3D mesh registration. In: Conference on Computer Vision and Pattern Recognition, pp. 3794–3801 (2014)

8. Bracha, A., Halimi, O., Kimmel, R.: Shape correspondence by aligning scale-invariant LBO eigenfunctions. In: Eurographics Workshop on 3D Object Retrieval (2020)

9. Bronstein, A.M., Bronstein, M.M., Kimmel, R.: Numerical Geometry of Non-rigid Shapes. Springer, Berlin (2008)

10. Choukroun, Y., Shtern, A., Bronstein, A., Kimmel, R.: Hamiltonian operator for spectral shape analysis. IEEE Trans. Vis. Comput. Graph. 26(2), 1320–1331 (2018)

11. Cosmo, L., Panine, M., Rampini, A., Ovsjanikov, M., Bronstein, M.M., Rodola, E.: Isospectralization, or how to hear shape, style, and correspondence. In: Conference on Computer Vision and Pattern Recognition, pp. 7529–7538 (2019)

12. Cosmo, L., Rodola, E., Bronstein, M.M., Torsello, A., Cremers, D., Sahillioglu, Y., et al.: Shrec’16: partial matching of deformable shapes. In: Eurographics Workshop on 3D Object Retrieval, pp. 61–67. Eurographics Association (2016)

13. Garland, M., Heckbert, P.S.: Surface simplification using quadric error metrics. In: Annual Conference on Computer Graphics and Interactive Techniques, pp. 209–216 (1997)

14. Gordon, C.S.: You can’t hear the shape of a manifold. In: New Developments in Lie Theory and Their Applications, pp. 129–146. Springer (1992)

15. Griffiths, D., Schroeter, D.: Introduction to Quantum Mechanics. Cambridge University Press, Cambridge (2018)

16. Halimi, O., Kimmel, R.: Self functional maps. In: International Conference on 3D Vision, pp. 710–718. IEEE (2018)

17. Iglesias, J.A., Kimmel, R.: Schrödinger diffusion for shape analysis with texture. In: European Conference of Computer Vision Workshops, pp. 123–132. Springer (2012)

18. Kac, M.: Can one hear the shape of a drum? Am. Math. Mon. 73(4P2), 1–23 (1966)

19. Kirgo, M., Melzi, S., Patane, G., Rodola, E., Ovsjanikov, M.: Wavelet-based heat kernel derivatives: towards informative localized shape analysis. In: Computer Graphics Forum, vol. 40, pp. 165–179. Wiley Online Library (2021)

20. Marin, R., Rampini, A., Castellani, U., Rodola, E., Ovsjanikov, M., Melzi, S.: Instant recovery of shape from spectrum via latent space connections. In: 2020 International Conference on 3D Vision, pp. 120–129. IEEE (2020)

21. Melzi, S., Ren, J., Rodola, E., Sharma, A., Wonka, P., Ovsjanikov, M.: Zoomout: spectral upsampling for efficient shape correspondence. ACM Trans. Graph. (2019)

22. Moschella, L., Melzi, S., Cosmo, L., Maggioli, F., Litany, O., Ovsjanikov, M., Guibas, L., Rodolà, E.: Learning spectral unions of partial deformable 3D shapes. In: Computer Graphics Forum, vol. 41, pp. 407–417. Wiley Online Library (2022)

23. Pegoraro, M., Melzi, S., Castellani, U., Marin, R., Rodolà, E.: Localized shape modelling with global coherence: an inverse spectral approach. In: Computer Graphics Forum, vol. 41, pp. 13–24. Wiley Online Library (2022)

24. Pinkall, U., Polthier, K.: Computing discrete minimal surfaces and their conjugates. Exp. Math. 2(1), 15–36 (1993)

25. Rampini, A., Tallini, I., Ovsjanikov, M., Bronstein, A.M., Rodola, E.: Correspondence-free region localization for partial shape similarity via Hamiltonian spectrum alignment. In: 2019 International Conference on 3D Vision, pp. 37–46. IEEE (2019)

26. Reuter, M., Wolter, F.-E., Peinecke, N.: Laplace–Beltrami spectra as ‘shape-DNA’ of surfaces and solids. Comput. Aided Des. 38(4), 342–366 (2006)

27. Rodolà, E., Cosmo, L., Bronstein, M.M., Torsello, A., Cremers, D.: Partial functional correspondence. In: Computer Graphics Forum, vol. 36, pp. 222–236. Wiley Online Library (2017)

28. Salti, S., Tombari, F., Di Stefano, L.: SHOT: Unique signatures of histograms for surface and texture description. Comput. Vis. Image Underst. 125, 251–264 (2014)

29. Sela, M., Aflalo, Y., Kimmel, R.: Computational caricaturization of surfaces. Comput. Vis. Image Underst. 141, 1–17 (2015)

30. Taubin, G.: A signal processing approach to fair surface design. In: Annual Conference on Computer Graphics and Interactive Techniques, pp. 351–358 (1995)

31. Toldo, R., Castellani, U., Fusiello, A.: A bag of words approach for 3D object categorization. In: Computer Vision/Computer Graphics Collaboration Techniques, pp. 116–127. Springer (2009)

32. Yuan, Y.: A review of trust region algorithms for optimization. In: ICIAM, vol. 99, pp. 271–282 (2000)

33. Zelditch, S.: Spectral determination of analytic bi-axisymmetric plane domains. Geom. Funct. Anal. 10(3), 628–677 (2000)

Acknowledgements

We thank Alon Zvirin for stimulating discussions, and Oshri Halimi and Amit Bracha for their insightful comments about the manuscript. This research was partially supported by the Israel Innovation Authority (Grant No. 82537).

Funding

Open access funding provided by Technion - Israel Institute of Technology.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bensaïd, D., Kimmel, R. A Multi-spectral Geometric Approach for Shape Analysis. J Math Imaging Vis 66, 606–615 (2024). https://doi.org/10.1007/s10851-024-01192-z

DOI: https://doi.org/10.1007/s10851-024-01192-z