Abstract

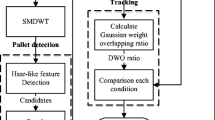

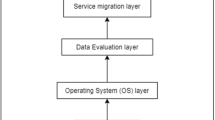

With the development of smart warehouses in Industry 4.0, scheduling a fleet of automated guided vehicles (AGVs) to transport and sort parcels has become a growing trend. In smart warehouses, AGVs receive paths from the multi-AGV scheduling system and independently sense the surrounding environment while reporting their poses back to the system. This navigation method relies heavily on on-board sensors and significantly increases the volume of information exchanged within the system. In this situation, a solution that locates multiple AGVs in global images of the warehouse captured by top-mounted cameras is expected to be effective. However, traditional tracking algorithms cannot output the heading angles required for AGV navigation, and their real-time performance and accuracy are insufficient for tracking large AGV fleets. Therefore, this paper proposes a multi-AGV tracking system that integrates a multi-AGV scheduling system, the AprilTag system, an improved YOLOv5 with oriented bounding boxes (OBB), extended Kalman filtering (EKF), and global vision to calculate the coordinates and heading angles of AGVs. Extensive experiments show that, in addition to having lower time complexity, the multi-AGV tracking system can efficiently track a fleet of AGVs with higher positioning accuracy than traditional navigation methods and other tracking algorithms based on various location patterns.
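As a rough illustration of what "coordinates and heading angle" mean for a vehicle seen from above, the sketch below derives both from the four corner points of a square marker in image pixels. It is not the method used in the paper: the function name `pose_from_corners` is hypothetical, and the corner ordering (the edge from the first to the second corner points along the vehicle's forward direction) is an assumption made only for this example.

```python
import math


def pose_from_corners(corners):
    """Return (cx, cy, heading_rad) from four marker corners in image pixels.

    Assumption (illustrative only): corners[0] -> corners[1] is the marker
    edge that points along the vehicle's forward direction.
    """
    if len(corners) != 4:
        raise ValueError("expected four (x, y) corners")

    # The marker centre is the mean of its four corners.
    cx = sum(x for x, _ in corners) / 4.0
    cy = sum(y for _, y in corners) / 4.0

    (x0, y0), (x1, y1) = corners[0], corners[1]
    # Image y grows downward, so dy is negated to obtain a
    # counter-clockwise angle measured from the image x-axis.
    heading = math.atan2(-(y1 - y0), x1 - x0)
    return cx, cy, heading


if __name__ == "__main__":
    # A 2x2 pixel square whose forward edge points "up" in the image.
    print(pose_from_corners([(0, 2), (0, 0), (2, 0), (2, 2)]))
```

Converting the pixel centre to warehouse coordinates would additionally require a calibrated camera model, which is outside the scope of this sketch.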

Data or code Availability

Data or code may only be provided with restrictions upon request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under grants 61973125 and 61803161, and by the YangFan Innovative & Entrepreneurial Research Team Project of Guangdong Province (2016YT03G125).

Author information

Contributions

Qifan Yang proposed the multi-AGV tracking system based on global vision, combined it with a multi-AGV scheduling system, and conducted the simulation and experimental validation. Yindong Lian implemented the localization and navigation of multiple AGVs using extended Kalman filtering (EKF). Yanru Liu implemented the improved YOLOv5 algorithm to locate the tray on the AGV. Wei Xie assisted with the experiments and contributed to writing the manuscript. Yibin Yang designed the AGV model in Gazebo. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval

This paper does not contain research that requires ethical approval.

Consent to participate

All authors have consented to participate in the research study.

Consent for publication

All authors have read and agreed to publish this paper.

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yang, Q., Lian, Y., Liu, Y. et al. Multi-AGV Tracking System Based on Global Vision and AprilTag in Smart Warehouse. J Intell Robot Syst 104, 42 (2022). https://doi.org/10.1007/s10846-021-01561-5

DOI: https://doi.org/10.1007/s10846-021-01561-5