Abstract

The complexity of industrial processes imposes a lot of challenges in building accurate and representative causal models for abnormal events diagnosis, control and maintenance of equipment and process units. This paper presents an innovative data-driven causality modeling approach using interpretable machine learning and process mining techniques, in addition to human expertise, to efficiently and automatically capture the complex dynamics of industrial systems. The approach tackles a significant challenge in the causality analysis community, which is the discovery of high-level causal models from low-level continuous observations. It is based on the exploitation of event data logs by analyzing the dependency relationships between events to generate accurate multi-level models that can take the form of various state-event diagrams. Highly accurate and trustworthy patterns are extracted from the original data using interpretable machine learning integrated with a model enhancement technique to construct event data logs. Afterward, the causal model is generated from the event log using the inductive miner technique, which is one of the most powerful process mining techniques. The causal model generated is a Petri net model, which is used to infer causality between important events as well as a visualization tool for real-time tracking of the system’s dynamics. The proposed causality modeling approach has been successfully tested based on a real industrial dataset acquired from complex equipment in a Kraft pulp mill located in eastern Canada. The generated causality model was validated by ensuring high model fitness scores, in addition to the process expert’s validation of the results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

One of the major contributing factors to the higher rates of Greenhouse Gas (GHG) emissions of large-scale industrial facilities is inefficient monitoring, which leads to imprecise control activities causing higher frequency of faults and maintenance activities, poor adaptability to system uncertainties, and excessive energy consumption. Among these complex processes are large final emitters (LFEs) found in process industries such as oil refineries, steel & iron production, the cement industry, chemical processes, and pulp & paper mills (Talbot & Boiral, 2013). Those facilities require massive amounts of energy and they emit an average of 50,000 tons or more of GHG, specifically carbon dioxide (CO2) per annum (Climate Change Connection, 2018). In these large-scale facilities, building precise and representative causal models that can handle the fast-evolving dynamics and the high level of interactions between controllers becomes significantly challenging, time-consuming, and economically expensive in addition to the detailed and extensive subjective knowledge required from process experts and operators (Sun et al. 2021) and (Ragab et al., 2014).

Accordingly, accurate monitoring and root cause analysis tools are essential to promote more efficient supervisory control in these processes, which in return can have a positive impact on energy consumption rates, non-eco-friendly emissions, maintenance costs, and overall system safety (Naghoosi et al. 2013; Lindner et al., 2017). More specifically, there is a need to develop a concrete and representative causality modeling approach that captures the system’s complex dynamics, states evolution, as well as the interactions between the controllers. The approach should also identify the different factors that cause failures, performance deterioration, and their root causes to enhance monitoring and control tasks and, finally, to allow energy-efficient operations.

Causality analysis has become a vital research topic in the industrial community with various applications including fault diagnosis and prognosis, and others (Chen et al., 2018; Chiang et al., 2015; Lindner et al., 2019). In general, several methods have been introduced in the literature for causality modeling, and they can be mainly divided into knowledge-based and data-based methods (Chiang & Braatz, 2003). The former depends on existing knowledge based on expertise and physical models of the system, and they usually require a deep understanding of the process in order to have an accurate model of the system (Ragab et al., Ragab, El Koujok, et al., 2019). However, as modern system architectures become more complex and the number of components significantly increases, the process of formulating an accurate model based on expertise and domain knowledge becomes very difficult, which deems most knowledge-based methods have some limitations when applied in large-scale industrial processes. The latter is based on the exploitation of available data to construct models by analyzing causality relationships between process variables. The data-driven causality analysis methods have attracted several industrial applications (Yang et al. 2014). However, based on an investigation of the literature, we found that existing data-driven causality analysis methods face challenges in complex industrial processes as follows.

-

Firstly, most of these methods are static and face difficulties in capturing the temporal relations between events when applied to systems with a large number of variables and interacting components, where the computational demand becomes excessive (Nauta et al. 2018). Moreover, most of these methods make simplifying assumptions (Lee & Chien, 2020), causing inaccurate results (Elhefnawy et al. 2021).

-

Most of the existing methods do not incorporate events’ sequential information and they mostly focus on finding the direct root cause for a phenomenon of interest such as a fault in a machine. However, in an industrial process, we are more interested in finding the causes for any performance deterioration and not necessarily faults only. This is when the analysis of the sequence of events becomes crucial, as it can reveal the effect of the order in which specific events occurred on the state evolution of the system, which is important to accurately represent the system’s dynamics and to infer the correct causality relationships (Kabir, 2017a). An example is the occurrence of an event, which might not cause deterioration, however, if that event occurs in a specific sequence with other events, then the overall performance of the process can be impacted.

-

Based on insights from industrial partners, existing methods can become inefficient because it is insufficient to infer causality between variables separately (change in variable X1 causes a change in X2). The reason is that in complex processes such as recovery boilers, there are hidden interactions between controllers and it is extremely important to identify those interactions and analyze their effect on the system’s KPIs (Ragab, El Koujok, et al., 2019; Ragab, Yacout, et al., 2019; Derigent et al. 2020).

-

Existing methods deal with abnormal global events without incorporating normal operations (Li et al., 2016), which can be important to guide the operator to actions that avoid performance deterioration or that return the system back to normality.

These challenges cause the existing data-driven causality analysis methods to perform inefficiently in complex equipment found in many process industries. The recovery boilers, heat exchanger, evaporators and bleaching equipment in pulp & paper mills are examples of these kinds of equipment. This type of complex equipment has several interacted variables and are identified as highly dynamical nonlinear systems.

Accordingly, there is a clear need to develop accurate and representative causal models for such complex industrial systems. This paper proposes an innovative data-driven dynamic causality analysis approach based on interpretable machine learning (IML) (Molnar, 2020), process mining (PM) (Van der Aalst, 2016), and human expertise, to fill the discussed research gaps. The approach exploits historical data collected from the process to automatically construct an accurate and representative dynamic causal model.

The proposed approach aims at overcoming the above-mentioned challenges that face existing methods in industrial applications. This work is also motivated by solving one of the big challenges in the Artificial Intelligence (AI) and causality analysis communities, which is “the discovery of high-level causal variables from low-level observations” (Schölkopf et al., 2021). This approach focuses on building a dynamic causal model between high-level events rather than between the low-level variables. In fact, this can be beneficial to several industrial applications, where insights and reasoning are sought on important events that occur in complex processes and their effect on the system’s key performance indicators (KPIs). We attempt to solve this challenge in an innovative way by adopting PM, to automatically build causal models, without heavily involving the process expert. This allows the expert to analyze the temporal relations and the dependencies between events from a higher level. PM links data science and process science to model processes through generating state-event graph models, analyzing bottlenecks, and suggesting process improvement. It is based on exploiting discrete event log data solely, without the need for domain knowledge by analyzing the dependency relationship between events.

PM has been applied widely and successfully in business processes. However, to the best of our knowledge it has not been yet applied to complex industrial processes. One main reason is that most of these industrial systems do not possess well-structured discrete event logs. Generally, data collected in these processes contains low-level observations acquired from sensors with continuous values of KPIs, control setpoints, and measured variables. Therefore, to build discrete event logs that are exploitable by PM, we integrate IML in this work to extract patterns from those low-level continuous datasets. The patterns comprise combinations of conditions on the system’s variables, including the controllable variables. Therefore, these patterns can model the interactions between the controllers and their effect on the system’s KPIs. More importantly, these patterns actually represent the discrete events that occur in the process. Thus, the patterns are used as representative events for these complex processes, which can be exploited by PM techniques to build high-level causal models in the form of state-event graphs. In this paper, we build these models in the form of Petri nets (PNs) which are one of the powerful tools for modeling and visualizing discrete event systems (DES). More significantly, building the causal model in the form of PNs does not only allow inferring causal relations between important events but can also support tracking the state evolution of complex systems in real-time. This is due to the dynamic modeling capabilities of PNs, which allow monitoring of the system trajectory and state transitions. In addition, the reachability graph of the PN causal model can be regarded as an event-driven process, thus facilitating a suitable environment for applying intelligent supervisory control approaches using machine learning (ML) techniques such as reinforcement learning (RL). This can add an essential element of robustness and reasoning to the control approach, where more insights can be realized from analyzing the consequence of actions (or events) through the causal model (Schölkopf et al., 2021).

It is worth mentioning that this approach is bi-directional, where the causal model built from the low-level variables comprises the high-level discrete events and their sequential relations. At the same time, each event in the model can be broken down to its corresponding pattern and back to the low-level variables that define the pattern.

The proposed approach comprises two phases. In the first phase the dataset with continuous variables and KPIs collected from an industrial process is transformed into an event log. The event log is created by labeling the data using a classification criterion based on the KPIs, and afterwards, patterns are extracted using decision trees (Quinlan, 2014), which is one of the top ten machine learning algorithms (Wu et al., 2007), as an IML technique. These patterns represent the different events that occur in the system in a sequential manner. In the second phase, PM techniques are applied to automatically construct a dynamic causal model from the created event log. The dependency relationships are analyzed between the events in the log to incorporate the temporal information and a state-event diagram is generated. A Quantitative analysis is performed on the model to ensure high accuracy and to allow for causality inference.

The contributions of this paper are summarized as follows.

-

IML and PM are integrated to generate high-level causal models for complex industrial processes in the form of state-event graphs (e.g. Petri nets). The causal model is built automatically from low-level sensory data without heavily involving the process expert going through difficult, labor-intensive and time-consuming tasks. The role of the expert is to supervise the modeling procedures, verify and validate the generated model.

-

By using IML to extract the patterns from the continuous observations, the approach is able to model the interactions between the controllers and identify the most important events (both normal and abnormal) that affect the performance of the system. These patterns are exploited as events to form the causal model, thus inferring causality on a higher level and not between low-level variables.

-

The exploitation of PM techniques allows generating a dynamic causal model in the form of PNs that incorporates temporal information, and the sequential relations between events, which are important in finding the causes for KPI-related changes. The PN model allows for tracking the system’s state (based on KPIs) in real-time, as well as the events that cause state transitions.

-

The generated model can be easily updated upon system changes and the acquisition of new data through the use of PM enhancement techniques, without depending on the process experts for tedious remodeling tasks. This allows for online real-time process simulation and fast remodeling tasks and also serves as a dashboard for operators. The approach is applied and validated on an industrial dataset collected from a recovery boiler in a pulp & paper mill.

The rest of the paper is organized into six sections as follows. Section 2 presents the related works for causality analysis in industrial processes. The preliminaries involving state-event graphs (Petri nets) and an illustration for the process mining approach are represented in Sect. 3. The proposed causality analysis methodology is detailed in Sect. 4. Section 5 comprises a case study of a recovery boiler system of a pulp & paper mill located in eastern Canada and presents the results obtained from the proposed methodology. Discussion and future work directions are presented in Sect. 6, followed by a conclusion in Sect. 7.

Related work: causality analysis methods in industrial processes

Causality analysis, which is a major monitoring activity, has become a vital research topic in the industrial community (Yang et al., 2014). Causality analysis can provide the operators with the knowledge and process insights to support decision-making in fault prevention. In general, the traditional causality analysis approach was based on planned or randomized experiments, where for example, an input variable would be manipulated in a process, while other variables are fixed and the outcome (variable of interest) would be observed to conclude if there is a causal relationship between the input variable and the outcome. However, this approach is time-consuming and sometimes infeasible in complex processes, due to the massive number of variables and also for safety measures where experimentation is not tolerated (Spirtes, 2010). Consequently, attention was shifted to searching for causal models based on background knowledge and validating them using statistical tests, as well as data-driven methods that can infer causality relationships between process variables by analyzing sensory data using machine learning techniques.

This section presents a background on causality analysis methods in the literature. As mentioned in the introduction section, causality modeling methods in the literature can be divided into knowledge-based and data-based methods (Chiang & Braatz, 2003). Maurya et al. have devolved knowledge-based modeling approaches based on the system’s mathematical equations (Maurya et al., 2003, 2004, 2006), as well as instrumentation diagrams (Thambirajah et al., 2007, 2009). Gil et al. (2011) have developed a topology-based method for process connectivity based on the physical description of systems. Several causal modeling techniques exist for constructing fault trees (FT) from process knowledge, which are named Model-Based Dependability Analysis (MBDA) techniques, and they depend on discovering dependency relationships from an existing system model (Aizpurua & Muxika, 2013; Sharvia et al., 2016; Kabir, 2017b). Another approach was developed by Leitner-Fischer and Leue (2013) to generate an FT expressing causality. The approach extracts counterexamples from the system’s model, where some test conditions are utilized to discover events combinations that lead to different system states. Although knowledge-based methods have experienced some success in various applications, they are deemed ineffective when applied to large-scale systems and complex processes. This is more specifically due to the dependence of these methods on the existence of precise and representative models, which are extremely difficult to obtain in these large-scale systems and would require extensive effort by the experts and a very deep understanding of the process (Ge et al., 2017).

Therefore, it is appealing to learn causality models using data-driven techniques from historical data. Different techniques have been introduced to exploit historical data in the form of time-series observations to discover causality relations between the variables. Among the well-known data-driven techniques is the Granger causality (Granger, 1969). The Granger causality is based on the vector autoregressive model (VAR), which is a class of linear regressive models. A statistical significance is determined based on a G-causality index, which imposes a causality relation between two time-series if the cause can reduce the prediction error of the effect. One of the main applications of Granger causality was to establish root causes for plant-wide oscillations, which can propagate easily through the system due to the high level of interactions between components (Yuan & Qin, 2014). Several variants of the Granger causality have been developed. Landman et al. developed an approach that utilizes the Granger causality in combination with topology-based methods (Landman et al., 2014), where a causality matrix is initially constructed from the Granger causality, and afterward, each element in that matrix is validated for directness analysis using a connectivity matrix, which is captured from piping and instrumentation diagrams (P&IDs). Zhong et al., (2020) exploit data-driven Granger causality followed by further diagnosis using a topology-based model, which incorporates domain knowledge. The approach was tested to investigate solid oxide fuel cell system oscillations. Another method was proposed by Liu et al., (2020), which builds a simplified Granger causality map by determining its maximum spanning tree to overcome the complications of traditional causal maps such as process loops. Other variants were developed combining Granger causality with kernel entropy component analysis (KECA) for monitoring batch process (Fei et al. 2019), principal component analysis (PCA) for feature space reduction and early detection (Yuan & Qin, 2014), and with singular value decomposition (SVD) to amplify the contributing variables (Pyun et al., 2020). A topological causality approach has been introduced by Zhai and Yang, (2020), which builds a cross-map to infer causality and is validated using the Lorenz system and transfer entropy through causality index.

Various approaches were developed to exploit data and generate graphical causal models. One of the most common is causal Bayesian networks (CBNs), which have been applied widely (Neapolitan, 2004; Pearl, 2009). Li and Shi (2007) introduced an algorithm to generate casual Bayesian networks in rolling processes based on the integration of domain knowledge for parameter learning, and a data-driven approach based on the Peter-Clark (PC) algorithm (Spirtes et al. 2000). The PC algorithm initially constructs a fully connected, undirected causal graph from the historical data as a first step and then determines the adjacencies between the variables and the directions. Peng et al. (2014) have proposed a novel approach based on multilogic probabilistic signed directed graphs (MPSDG) in the TEP, which consists of two modeling stages; an offline and online stage. In the former, the MPSDG is designed using historical frequency probabilities and logic gates describing the causality between variables. In the latter, the initial fault causes are discovered using a backtracking algorithm, and then the Bayesian inference is used to calculate the conditional probabilities of the causes. Moreover, Bauer and Thornhill (2008) have used a cross-correlation (CC) function to detect peaks and to estimate time delays between the system’s variables in a large plant at the Eastman Chemical Company. The approach exploits historical data and the time delays to create a data-driven causality model verified by a statistical inference procedure to validate the peaks discovered from the CC function. Zhang and Geng (2015) have proposed an approach named dynamic uncertain causality graphs (DUCG), which deals with novel faults and represents uncertain causalities, by assessing an efficient probabilistic hypothesis. The approach is based on the decomposition of dynamic evidence into discrete time units and tests a global probabilistic hypothesis that is a combination of all-time units. Li and Yue, (2020) have modified DUCG to overcome difficulties in inferring the causality between events based on crisp numbers. The authors propose an intuitionistic fuzzy set based on dynamic uncertain causality graph (IFDUCG), which uses intuitionistic fuzzy sets to handle the uncertainty of events by integrating experienced knowledge into the traditional model. Jiuyong Li et al., (2016) proposed an approach based on causal decision trees (CDT). The approach exploits the advantages of decision trees to discover causality based on the “divide and conquer” strategy; however, the emphasis was on the distinction between the decision trees’ correlation and causation. Causality is inferred in the algorithm based on the Mantel–Haenszel partial association test (Mantel & Haenszel, 1959). The output of the algorithm is in the form of causal decision trees, where non-leaf nodes are contributors to the outcome variable. It has also been concluded from the literature that fault trees are exploited significantly for extracting causality information (Kabir, 2017a; Malika et al., 2017; Waghen & Ouali, 2019; Zhou & Zhang, 2017). The hierarchy of events in a fault tree is used for interpretation of the dependency relations between basic and intermediate events up to the top-level event (fault).

An interesting approach has been developed by Bozorgi and et al., (2020), which gives recommendations for applying specific solutions based on uplift trees, which is a causal machine learning technique. The approach proposed in that work was applied to a financial business process. It was tested on loan applications problems, where the event logs are analyzed to increase the probability of the desired outcome. In that work, the authors assume an event log already exists, as well as candidate solutions to form action rules that solve a specific problem.

Nauta et al. (2018) have proposed the approach LIFT (learning fault trees from observational data) to generate data-driven static fault trees. Based on the Mantel–Haenszel statistical test, a system top event is analyzed to discover the sequence of contributing basic events by checking different combinations of AND/OR gates. The algorithm discovers causality between cause and effect and emphasizes data stratification to exclude the effect of other variables. Ragab et al., (2018) proposed a causality analysis methodology that combines human expertise, in the form of fault tree analysis (FTA), with additional knowledge extracted by a machine learning method. The proposed methodology was demonstrated using fault trees constructed for a reboiler system in a thermomechanical pulp mill.

Research has been conducted to use the state event graphs as powerful dynamic causality analysis tools, particularly by converting fault trees into state event graphs such as PNs, since these networks have more powerful mathematical modeling capacity than FTA, thus supporting more accurate quantitative analysis (Zhang et al. 2009; Steiner et al, 2012; Kabir et al., 2015). PNs are powerful visualization tools that can model the sequential and concurrency behaviors and allow for process simulation (David & Alla, 2010; Murata, 1989). These networks are convenient and efficient for building causal models for DES to analyze normal and faulty events temporal dependencies (Zhang et al., 2009). Therefore, we are exploiting PNs as the modeling framework in our proposed methodology. In what follows, the basic concepts and characteristics of PNs are presented.

State-event graphs and process mining: preliminaries

This section introduces the preliminaries of the state-event graphs and the process mining techniques. It presents the Petri nets models that illustrate these graphs and their building techniques. The objective is to give an overview to the readers who are not familiar with these concepts and help them understand how they are used in the proposed approach.

State event graphs: the petri net modeling approach

PN is a mathematical and graphical tool used extensively for the modeling and control of DES (David & Alla, 2010). These networks were first introduced by Carl Adam to model chemical processes (Petri, 1966). PNs serve as a promising tool for describing and analyzing event-driven processes that are characterized as non-deterministic, stochastic, concurrent, and/or time-dependent (Murata, 1989). Several extensions to PNs have been developed including timed PNs, hybrid continuous PNs, colored PNs, stochastic PNs, fuzzy PNs, etc. (David & Alla, 2010). Similar to flow charts, PNs can be utilized as a promising visual tool to simulate the dynamical evolution of interacting events in the system. Furthermore, PNs allow developing state and algebraic equations to serve as a mathematical analysis tool to model and analyze the system’s behavior. In what follows, we present the PN background material necessary to follow the ideas presented in the paper. It can be read on a need-to-know basis. For further explanation of PNs concepts, elements, definitions, and applications, the interested reader is referred to (Murata, 1989; David & Alla, 2010; Mansour et al., 2013).

Graphically, PN is a directed bipartite diagram, which consists of nodes describing system states represented by circles called Places, and rectangle-shaped bars called transitions. The transitions represent the events that occur in a system or a specific process, e.g. turning a machine on and off, or changing the set points of some controllers, which as a result can change the state of the system. The elements of the PNs are shown in Fig. 1. The nodes inside the PNs are connected by arcs, which can be weighted to describe the evolution of the system between the different states triggered by events that would occur as a result of specific conditions. The number of Places and transitions inside a PN are finite and non-zero. The state of the system is determined by the number and distribution of tokens (dots) inside the Places of the PN model, which is called marking. A marking is represented mathematically as a vector, where each entry indicates how many tokens are located in each Place. Changing the marking or the distribution of tokens will reflect a change in the state of the system. Transition nodes, which control the token dynamics, are enabled or ready to fire if and only if the number of tokens at each of its input Places is greater than or equal to the weight of the directed arc connecting the Place to the transition. The firing of one transition moves a number of tokens determined by the weight of the input and output arcs, from the transition’s input Places to its output Places. Unweighted arcs have weights equal to one. A PN model, if built accurately, can be very helpful in explaining observational data collected from an industrial process. The transitions in the petri net can represent patterns in the data resembling changes in measured and controllable variables, which can be regarded as events occurring in the process. Moreover, the firing of the transitions would result in a change in the marking of the PN, which represents the current state of the system. Since the state of the system in reality can be determined by the KPIs, the marking can represent different ranges for those KPIs in the data. Mathematically, a PN is represented as \(Q=\left(P , T, {p}^{+},{p}^{-},{M}_{0}\right)\), where P and T donate the sets of Places and transitions, respectively. The notions p+ and p− represent the arc weights from Places to transitions, and from transitions to Places, respectively and \({M}_{0}\) represents the initial marking. The incidence matrix [nxm] is denoted by \(S={p}^{+}- {p}^{-}\), while the firing vector σk [mx1] refers to the enabled transitions at epoch \(k\). Finally, the evolution of states is given by \(M\left(k+1\right)=M\left(k\right)+S{\sigma }_{k}\).

There are important properties that characterize PNs such as concurrency, conflict, liveness, boundedness, reachability, reversibility, and deadlock. Concurrency represents the occurrence of multiple events simultaneously, which signifies one of the essential aspects of PNs as a modeling tool. Conflict is a property of PNs that represents the non-determinism of events, where the firing of an event disables another event from firing due to the limitation of available resources as an example. Liveness refers to events that are continuously enabled or that could occur at any time during the process. Boundedness refers to the maximum number of tokens that any Place can hold in the network. A PN is considered “safe” if all Places are bounded by only holding one token. Reachability refers to the possibility of reaching a specific marking from any other marking through a finite sequence of fired transitions or events. Reversibility is a property where the initial marking is reachable from any other marking. Finally, deadlock is a condition that occurs when no transitions are enabled to fire after reaching a particular marking; particularly, deadlock avoidance is one of the main challenges of PNs supervision.

The analysis of DES modeled as a PN can be conducted by multiple methods. The most common is the coverability graph analysis. It consists of a tree-like structure, which has a start node that represents the initial marking. The growth of the tree branches represents other markings that are reached from a preceding marking, and the arcs connecting the nodes represent the specific transition that caused the marking change and state evolution. An important note is that in the case where the PN is bounded, the coverability tree can be transformed into the simpler reachability graph, where the difference lies in the infinite markings, which only the coverability tree can represent (Murata, 1989).

Given the properties and modeling capabilities of PNs, these networks are exploited in this paper to model the causality relations between interacting events in industrial processes. Information about the sequence of events and their dependency relationships are realized through the analysis of coverability graphs to understand how the occurrence of a specific event could trigger other events. However, the construction of PNs models is a tedious task that requires the involvement of human experts with deep domain knowledge about the system to be modeled. This becomes a significant challenge when modeling large-scale complex systems. However recently, the process mining approach (PM) (Van der Aalst, 2016) has emerged and has proven to be a promising tool in building PN models solely from data without the heavy dependence on domain knowledge and human effort. Therefore, we will exploit PM to build dynamic causal models in the form of PNs from the historical data that are collected from the monitored industrial systems.

Process mining

PM is an emerging approach that has gained a lot of interest. The availability of big data in most organizations’ information systems represents an important asset that is not being fully exploited. PM supports model discovery from data, process enhancement as well as providing deep structural insights for business processes (BP), where a BP is a collection of sequences of events performed by equipment or individuals to provide a particular service or product for customers (Grisold et al. 2020; Weske, 2012). PM comprises a set of data-driven tools that discover, analyze and enhance process models to support decision-making, gain insights, apply verification of process models to locate errors in systems’ procedures, and provide simulation techniques to conduct performance analysis and assistance in process redesign (Reinkemeyer, 2020; Van der Aalst, 2016). The essence of PM lies is in the exploitation of event data without the need for domain knowledge or expertise, as well as to uncover bottlenecks and non-compliance patterns that arise when event data and existing process models are compared and do not reflect the same behavior (Diba et al., 2020; Van der Aalst, 2016). The PM framework is mainly categorized into three tasks; process discovery, conformance checking, and process enhancement, which are depicted in Fig. 2. (Van der Aalst, 2016). Each of these tasks is dependent on the problem at hand. In the discovery task, process models are generated automatically from event logs using data-driven discovery techniques. The conformance task validates the generated models and measures their accuracy by comparing the behavior they allow with the event logs. The third task, edits and enhances the models upon the arrival of new event logs. In what follows, these tasks are briefly explained.

Process discovery

Process discovery constructs and discovers a model from event data logs by analyzing the dependency relationships between events that occur in particular sequences during executing different procedures in an organization. Examples of these dependency relations are; directly follows; never follow; parallelism; and loops. An event log, which is the input to process discovery techniques consists of a number of events, each assigned a Case id, and a timestamp, where a Case represents a specific time period where the system has been operating, such as an hour, day, or week depending on the problem at hand. An example of an event log for a machine operation is shown in Table 1. A Case here represents a day of operation. This event log is named \({E}_{1}\) and has five different events {P1, P2, P3, P4, P5} and three Cases corresponding to three days of operation.

The first PM discovery algorithm introduced was the alpha algorithm (Van Der Aalst et al., 2004). The alpha algorithm takes an event log and generates a workflow net (a class of Petri nets) that can probably replay the sequences found in the event logs correctly. Further improvements have been developed to the alpha miner algorithm by De Medeiros et al. (2003) such as the alpha + algorithm where pre- and pro-processing stages are added to deal with event loops. The heuristic miner (Weijters & Ribeiro, 2011), another process discovery algorithm, utilizes casual nets as the representation of the discovered models. The heuristic miner’s framework is formulated by creating a dependency graph where the frequency of an event x followed by an event y is calculated and afterwards, the dependency graph is transformed into state-event graph after applying noise filtering based on specified thresholds. Another algorithm is the \(\beta \)-algorithm (Wen et al., 2009), which takes into consideration different event types such as start and completion of tasks to build PN models with richer information. For more about PM techniques, the interested reader is referred to (Van der Aalst, 2016).

In this paper, we utilize one of the most exploited process mining discovery algorithms: the inductive miner (IM). This algorithm can deal with huge event data logs, interactive events, and noise filtering while preserving the correctness of the model and rediscoverability (Van der Aalst, 2016). The Inductive Miner generates what is called process trees, which can be easily converted to other notations such as PNs. The Inductive Miner is the one of the few process discovery techniques guaranteeing soundness (total execution of process phases, while reaching a defined end-state), high fitness (accuracy criterion of process models) in finite time (Leemans et al., 2013a). In what follows we explain how the IM algorithm works (Van der Aalst, 2016).

The inductive miner algorithm starts by creating what is called a directly follows graph, which represents the follows relations between the events in an event log. The algorithm comprises two steps, described in what follows.

Steps 1: {Creation of Directly follows graph}.

Let \(E\) be an event log, the directly follows graph of \(E\) is represented as \(D(E)\) =\(({S}_{E} , {\mapsto }_{E} , {S}_{E}^{start} , {S}_{E}^{end})\), where:

-

\({S}_{E}\) is the set of all events in \(E\)

-

\({\mapsto }_{E}\) is the directly follows relations

-

\({S}_{E}^{start}\) is the set of start events

-

\({S}_{E}^{end}\) is the set of end events

The algorithm recursively divides the original event log into sub logs, and for each sub log a directly follows graph \(D(E)\) is created using directly follows relations such as \(x{\mapsto }_{E}y\), if \(x\) is followed directly by \(y\) anywhere in \(E\). Also, \(x{\mapsto }_{E}^{+} y\), if there exists a path from \(x\) to \(y\) that is non-empty. To give an example, for the event log \({E}_{1}\) = \([ \left\{P1, P2, P4,P5\right\}, \left\{P1, P4,P2, P5\right\}, \left\{P1, P3, P5\right\} ]\) shown in Table 1, \({E}_{1}\) consists of three cases and five different events. Therefore, \({S}_{E1}=\{P1, P2, P3, P4, P5\}\), \({S}_{E1}^{start}=\left\{P1\right\}\), which is shown in Fig. 3 by the incoming arc and \({S}_{E1}^{end}=\{P5\}\), which is shown in the figure by the outgoing arc. And the directly follows relations are:

Creating a directly follows graph for event log \({E}_{1}\) and finding cuts that represent the dependency relationships using the IM algorithm (Adopted from (Van der Aalst, 2016))

\(P1{\mapsto }_{E}P2, P1{\mapsto }_{E}P4, P1{\mapsto }_{E}P3, P2{\mapsto }_{E}P4, P4{\mapsto }_{E}P2, P2{\mapsto }_{E}P5, P4{\mapsto }_{E}P5, P3{\mapsto }_{E}P5, P1{\mapsto }_{E}^{+} P5\). Based on these four elements, the directly follows graph is constructed as shown in the first box on the left side in Fig. 3.

The inductive miner algorithm will divide \({E}_{1}\) iteratively until reaching sub logs that contain only one event, which are called base event sub logs. In order to execute these divisions, the algorithm applies cuts in the directly follows graph of the log or sub logs. In this work, we consider three kinds of cuts namely, sequence cuts, exclusive choice cuts, and parallel cuts, represented in process trees notation as \(\to , \times , \mathrm{and}\wedge \), respectively.

Step 2: {Determination of Dependency Relation Cuts}.

As mentioned, the directly follows graph of an event log \(E\) is represented as \(D(E)\) =\(({S}_{E} , {\mapsto }_{E} , {S}_{E}^{start} , {S}_{E}^{end})\), where \({S}_{E}\) is the set of events in \(E\). A cut will divide \({S}_{E}\) into pairwise disjoint sets \({S}_{1}, {S}_{2}, \dots , {S}_{n}\), where \({S}_{i}\cap {S}_{j}=\varnothing \) for \(i\ne j\)

-

Sequence cut of \(D\left(E\right)\) takes the form \((\to , {S}_{1 }, {S}_{2} , \dots ,{S}_{n})\), where \({\forall }_{i,j \in \left\{1,\dots ,n\right\}} {\forall }_{x \in {S}_{i}}{\forall }_{y \in {S}_{j}}\,i{<}j \Longrightarrow x{\mapsto }_{E}^{+}y\) and the opposite is false.

-

Exclusive choice cut of \(D(E)\) is represented as\((\times , {S}_{1 }, {S}_{2} , \dots ,{S}_{n})\), where \({\forall }_{i,j \in \left\{1,\dots ,n\right\}}{\forall }_{x \in {S}_{i}}{\forall }_{y \in {S}_{j}}\, i{\ne}j \Longrightarrow x{\mapsto }_{E}y\) is false.

-

Parallel cut of \(D(E)\) takes the form \((\wedge , {S}_{1 }, {S}_{2} , \dots ,{S}_{n})\), where \({\forall }_{i \in \left\{1,\dots ,n\right\}}{ S}_{i}\cap {S}_{E}^{start}\ne \varnothing \,AND \,{ S}_{i}\cap {S}_{E}^{end}\ne \varnothing \) \(AND\, {\forall }_{i,j \in \left\{1,\dots ,n\right\}}{\forall }_{x \in {S}_{i}}{\forall }_{y \in {S}_{j} } \,i\ne j\Longrightarrow \) \(x{\mapsto }_{E}y\)

Returning to the example, after creating the directly follows graph \({D}_{1}\), the algorithm will cut it into three smaller graphs namely, \({D}_{1a} , {D}_{1b} , and {D}_{1c}\) as shown in the figure, based on the sequence cut\(( \to , \left\{P1\right\}, \left\{P2, P4, P3\right\}, \left\{P5\right\} )\). This divides the set of events into three subsets where arcs connecting those subsets only go from left to right. Therefore, due to this sequence cut, three sub logs are created from the original event log \({E}_{1}\), namely \({E}_{1a }=\left[\left\{P1\right\}\right], {E}_{1b}=\left[\left\{P2, P4\right\}, \left\{P4, P2\right\}, \left\{P3\right\}\right], {E}_{1c}=[\{P5\}]\) and correspondingly, we have.

\({\varvec{I}}{\varvec{M}}\left({E}_{1}\right)= \to ({\varvec{I}}{\varvec{M}}\left({E}_{1a}\right), {\varvec{I}}{\varvec{M}}\left({E}_{1b}\right), {\varvec{I}}{\varvec{M}}\left({E}_{1c}\right))\), where \({\varvec{I}}{\varvec{M}}\left({E}_{1a}\right)=P1, {\varvec{I}}{\varvec{M}}\left({E}_{1c}\right)=P5\), and therefore.

\({\varvec{I}}{\varvec{M}}\left({E}_{1}\right)= \to (P1, {\varvec{I}}{\varvec{M}}\left({E}_{1b}\right), P5)\).

It is worth mentioning that an event cannot appear in more than one sub log. Now since \({E}_{1a}\) and \({E}_{1c}\) contain only one event, therefore, no cutting is further needed. Afterwards, \({D}_{1b}\) is cut into two smaller directly follows graphs namely \({D}_{1d} , {D}_{1e}\) as shown in the figure, based on an exclusive choice cut \((\times , \left\{P2, P4\right\}, \left\{P3\right\} )\). This divides the set of events into two subsets where there are no arcs connecting the two subsets together. Due to this exclusive choice cut, two sub logs are created from \({E}_{1b}\) namely\({E}_{1d}= \left[\left\{P2, P4\right\}, \left\{P4, P2\right\}\right], { E}_{1e}=\left[\left\{P3\right\}\right]\), and we have.

\({\varvec{I}}{\varvec{M}}\left({E}_{1b}\right)= \times ({\varvec{I}}{\varvec{M}}\left({E}_{1d}\right), {\varvec{I}}{\varvec{M}}\left({E}_{1e}\right))\), where \({\varvec{I}}{\varvec{M}}\left({E}_{1e}\right)=P3\), and therefore \({\varvec{I}}{\varvec{M}}\left({E}_{1b}\right)= \times ({\varvec{I}}{\varvec{M}}\left({E}_{1d}\right), P3)\).

The sub log \({E}_{1e}\) is a base case sub log, however, the sub log \({E}_{1d}\) still needs more cuts. Therefore, \({D}_{1d}\) is cut into two sub logs, namely \({D}_{1f}, {D}_{1g}\) as shown in the figure, by the parallel cut \((\wedge , \left\{P2\right\}, \left\{P4\right\} )\), which divides the events of into two subsets where every event in each subset is connected to all events in the other subsets. Due to this parallel cut, two sub logs are created from \({E}_{1d}\), namely\({E}_{1f}= \left[\left\{P2\right\}\right], { E}_{1g}=\left[\left\{P4\right\}\right]\), and we now have.

\({\varvec{I}}{\varvec{M}}\left({E}_{1d}\right)= \wedge ({\varvec{I}}{\varvec{M}}\left({E}_{1f}\right), {\varvec{I}}{\varvec{M}}\left({E}_{1g}\right))\), where \({\varvec{I}}{\varvec{M}}\left({E}_{1f}\right)=P2\) and \({\varvec{I}}{\varvec{M}}\left({E}_{1g}\right)=P4\).

Finally, by substituting, we get \({\varvec{I}}{\varvec{M}}\left({E}_{1d}\right)= \wedge (P2, P4)\), then \({\varvec{I}}{\varvec{M}}\left({E}_{1b}\right)= \times (\wedge (P2, P4), P3 )\), and then.

\({\varvec{I}}{\varvec{M}}\left({E}_{1}\right)= \to (P1, \times \left(\wedge \left(P2, P4\right), P3 \right), P5)\). The equivalent process tree is depicted in Fig. 4. The conversion from process trees to Petri nets is based on the rules in Fig. 5.

Other variants exist for the framework to deal with noise and infrequent behavior such as the Inductive Miner Infrequent Behavior (IMf) (Leemans et al., 2013b). The authors show that the IMf is able to discover sound, deadlock-free PN models that can accurately display the observed behavior in the event log, while filtering infrequent behavior and noise as it analyzes the frequency of events, while maintaining high fitness scores, in addition to preserving an appropriate balance between model simplicity, precision, and generalization.

Conformance checking

Conformance checking is the analysis of an existing or discovered model in terms of accuracy, simplicity and generalization. Particularly, by comparing the event log with the model, the goal is to mark the similarities and key differences between both to analyze how accurate the model is in representing the observed behavior in the log. The main criterion for conformance checking is the fitness value, which is a model accuracy measure representing the percentage of Cases in the event log that could be modeled correctly by the discovered process model. Another conformance criterion is precision, which examines if the model allows for too much behavior that is not observed in the log. A number of techniques have been designed to calculate the fitness value. Token replay (Van der Aalst, 2016) is one of the methods for evaluating the accuracy of the PN models in representing the event log. For each Case in the log, the model is run to simulate those Cases, by placing a token in the start Place. A simulation for each Case begins and as the tokens progress, the behavior of the model is compared to the Case in the log. The comparison is conducted by mapping the event log behavior on top of the model for each Case and then calculating four variables related to the number of tokens; the number of produced tokens by the transitions; the number of consumed tokens by the transitions; the number of missing tokens due to an occurrence of an event in the event log, while there are not enough tokens for the corresponding transition to fire in the model; the number of remaining tokens in the PN if there are tokens in any Place in the PNs other than the end Place after the all events in a specific Case are completely executed. Normally, a perfect Case modeling (100% Case fitness) by the model would mean that; produced = consumed; missing = remaining = 0. In the situation of different values for the four mentioned variables; they are substituted into a formula to calculate the fitness of that Case. This step is repeated for all Cases in the event log and then averaged to calculate the total fitness of the model. Another conformance checking approach is the alignment method (Van der Aalst et al., 2012), where each Case in the log is simulated by the model and a corresponding alignment table is created. This approach can provide a detailed diagnostic procedure for each given Case and can be applied to any modeling process notation.

Process enhancement

The third task of Process Mining is process enhancement, which is the modification of an existing discovered model X that doesn’t align with a certain event log to a new model Y that conforms with the event log while still capturing most of the structure of model X. This can be significantly beneficial for a smooth and automatic model update when a new event log is collected, resembling changes that occurred in the process. An approach was developed in (Fahland & Van Der Aalst, 2015), which applies the repair actions based on the fitness criteria calculated by conformance checking. By applying a threshold on the model’s fitness value, one of three repair actions can be executed; (1) No repair due to perfect alignment; (2) Total event log-based rediscovery due to very low fitness and extreme divergence; or (3) Partial rediscovery only to the non-fitting parts to incorporate the new behavior found the updated event data, while preserving most of the original model’s structure. Werner-Stark et al. (2011) applied the method for exploiting the process enhancement and conformance checking techniques to update a model of a discrete event parking system. A normal behavior model was initially built representing the system’s reference model, and after acquiring new event data log which contained faults that occurred in the system, the logs were replayed on the reference model to detect and pinpoint the divergencies, which were used to modify the reference model to include the new abnormal events in the model.

Proposed methodology

This paper proposes a data-driven dynamic causality analysis approach based on AI and structure learning from low-level observational data. The methodology combines interpretable machine learning (decision trees in this paper), process mining, specifically the process discovery task for model generation and the conformance checking task for model validation and fitness calculation, and human expertise for defining the process’ operating regions and verification to construct accurate and representative dynamic causal graphs for complex industrial processes. It can serve as a building block for different tools in process industries such as systems’ trajectory visualization and supervisory control. The methodology can be applied in industrial plants to avoid abnormal events and to maintain the desired process behavior that achieves energy-efficient operations. The schematic diagram of the methodology is depicted in Fig. 6.

The detailed steps of the proposed methodology are explained in two main phases, namely, (1) operating region definition based on experienced KPI thresholding and pattern extraction using IML, (2) event data log preparation and causal model generation using process mining techniques.

Phase 1. Operating regions definition based on KPIs thresholding and pattern extraction using IML

In this phase, low-level observational data is collected by a data management system from an industrial plant, which comprises three types of time-sampled information: measured variables, controllable variables (variables that operators have direct control on), and KPIs (measured). The KPIs demonstrate how effectively an industrial facility is achieving its objectives. The KPIs can represent ratios and quantities of key production elements, the efficiency of energy consumption, as well as measurements of wastes and non-eco-friendly emissions.

Following the acquisition of data, the first step is to define the operating regions of the process based on the KPIs. Specifically, the aim of this step is to transform the KPIs in the data from continuous values to discrete classes representing how efficient the process is operating in each timestep. The process experts, who are deeply familiar with the plant, would construct a classification criterion particularly based on the KPIs to partition the state space of the system’s operation into several operating regions representing normal and abnormal levels of operations. The definition of operating regions is mainly dependant on human experts and domain knowledge repositories, and in some situations, experts can rely on different data analytics. The process expert defines specific thresholds to be applied to the KPIs in order to define different operating regions. Each defined threshold is a cut point that would determine if a KPI value is normal, slightly abnormal, or severely abnormal, etc. As a result, the KPI values for each observation in the data would satisfy one of the defined operational classes. Examples of these classes are shown in the “KPI Class” column in the table of Fig. 9. The class labels of observations are assigned automatically by projecting them on a set of deductive IF–THEN rules using the thresholds defined on KPIs based on the human expert. Thus, we can exploit those labels as target variables to apply supervised learning techniques, particularly IML, in order to gain some insights on the reasons for KPI class change, which would be the following step in this phase. It is important to note that the human expert is mainly involved to define and confirm those thresholds, as he/she is deeply familiar with the process and its KPIs’ related targets or objectives. In fact, this task is important to extract accurate patterns from the data and this thresholding procedure is done with minimal effort from the expert who will be only involved in the validation of the resulting causal models.

In the second step, we utilize the DT, an inductive rule-based classifier, as the IML method. It is commonly used as a supervised learning method for extracting rules from data. DTs represent a flowchart structure, such that each node in the tree is an evaluation on a specific variable or feature and the result of that evaluation is represented by the output branches. These branches would link the tree nodes until reaching the leaf nodes. Each leaf node corresponds to the classification or decision label. Thus, the classification rules are represented by the paths from the top node to the leaf nodes. The quality of each split in the tree is evaluated by different criterions. Two of the most common are the Gini Index and Information Gain. The Gini Index will measure the frequency at which any observation in the dataset will be misclassified with random classification. While Information Gain measures the reduction in entropy after splitting a dataset is split based on variable.

In this approach, the input variables to the DT method are the measured and controllable variables in the data, while the target classes are the operating regions defined from step one. The main goal of using DTs is to exploit its interpretability to extract meaningful patterns that would explain the relation between each observation and its assigned class/label. Thus, acquiring information about the specific combinations of variables with different values that would lead to different KPI classes (e.g. normal KPI, slightly abnormal KPI, severely abnormal KPI, etc.). Each extracted pattern from the classification DT model is a combination of cut points on the measured variables, controllable variables, or both, with different value ranges, as illustrated in Fig. 7. The objective of the pattern extraction step is two folds (1) to identify the important events that occur in the process and affect the overall performance and their effect on the KPI, (2) to transform the original data, which comprises KPIs and continuous variables (measured and controllable) into discrete event logs, primarily because event logs are the appropriate input for PM discovery techniques.

To ensure highly accurate DT classification and the extraction of strong representative patterns, we apply an IML model enhancement approach based on the work done in (Dhurandhar et al., 2018) to integrate the accuracy of powerful models (such as ensemble of weak learners) and the interpretability of simple models. The main concept is to train a teacher model (powerful model), which has low interpretability; however, can achieve a high test accuracy on a specific dataset (Lee et al, 2021) and use this trained model to assign weights to each observation in the dataset, so that observations that are easy to classify would be assigned higher weights, while the ones that are difficult to classify are given lower weights. Specifically, for each observation in the dataset, the weight is calculated as the probability of assigning the correct (true) label by the trained teacher model. These probabilities can be easily acquired from the prediction probabilities of the trained teacher model for each and every observation. Afterwards, we train the student model (simple model having high interpretability but low test accuracy on the original dataset), using the weighted dataset. The observation weighting forces the simple classification model to focus on predicting the correct class of observations with high weights and now the model can learn while ignoring difficult observations (they are considered outliers) that will probably cause the simple model to have low accuracy. The student model can eventually obtain higher generalized accuracies while still maintaining its interpretability. The paper (Dhurandhar et al., 2018) uses a deep neural network as the teacher model in order to enhance the performance of a CART based on DTs. It starts by adding logistic classifiers to the intermediate layers of an accurate pre-trained deep neural network. Each classifier provides the prediction from the layer it is attached to. A confidence profile curve is generated which contains the confidence scores based on the logistic classifiers output and the true labels of the input. The observations in the original dataset are weighted after by using the area under curve (AUC) as a function of the confidence scores. In this paper, we adapt this method to use Random Forests as the teacher model instead of a deep neural network, and we use the prediction probabilities of the Random Forest to assign weights to each observation.

The technique is powerful since there is no trade-off between the interpretability and the accuracy of the simple model, i.e., the high interpretability of the simple model will not be affected and only its accuracy could be improved (Dhurandhar et al., 2018). The interpretability of the DT model (student) lies in its ability to extract patterns that are represented by the paths from the top node to the leaf nodes where each leaf node is assigned one of the target classes. After applying the IML enhancement method, the observations that are misclassified by the teacher model are assigned lower weights hence can be ignored by the student model. Consequently, after the student is trained based on the new weighted dataset, the output would still be an interpretable tree-like structure comprising more accurate patterns. Furthermore, there is no fixed boundary for accuracy improvement, since the efficiency of the technique depends on the performance of the teacher model and the complexity of the data in terms of data cleanliness, noise, and outliers. An illustration of the DTs model enhancement step is shown in Fig. 8.

By mapping the extracted patterns to the original dataset, sets of observations would be assigned to each pattern if they satisfy the pattern’s rules i.e. satisfy the variables’ ranges defined by that pattern. At the same time, each pattern explains a specific operational class based on the DTs leaf nodes (one of the operating classes defined in step 1), which is the target label used in the training of the DTs classifier. The mapping is performed in the next phase.

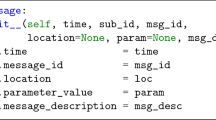

Phase 2: Event data log preparation and causal model generation based on Process Mining

As the first step in this phase, the patterns that were extracted in the form of tree-like structure in Phase 1 using classification DTs are transformed into IF–THEN rules consisting of a set of cut points on the values of the measured or the controllable variables, or both. These rules are mapped to the dataset to assign each extracted pattern to a set of observations that satisfy this pattern. Figure 9. shows this step with an illustrative example of a small dataset consisting of four input variables (X1, X2, X3 and X4) and one KPI. There is one threshold on the KPI set by the expert to define two operating regions (Normal, or Abnormal) which are shown in the “KPI Class” column. The data listed in the table is used to train a classification DT model with the four variables as inputs and the KPI class as the target variable. After training the DT model, a tree structure is generated as shown on the right side of Fig. 9. Each path in the tree (from the top node to a leaf node) represents a pattern, which is translated to a set of nested IF–THEN rules. The pattern P3 is shown as an example. Afterward, each observation in data is run through the sets of IF–THEN rules to determine which pattern covers the observation then the covering patterns are added to the “Event/Pattern” column. Each pattern is an event that occurs during the process. We illustrate the relation between the event and the pattern by this example: by manipulating some of the controllable variables in a real process, the system can transition to a new state and, therefore, this would be considered an event. Also, satisfying the pattern’s values regarding its controllable variables can be similarly considered an event that occurs in the real process, which would also transition the system to a new state.

Therefore, the original continuous dataset is now transformed into an event log, comprising discrete events (which are the extracted patterns), timestamps, and Case ids which would mark the start to the end of a process operation period, and all events that happened in between, such as all events that occurred during in one day of operation. In this paper, all events that happened in one day are assigned the same Case id. Therefore, the output of this step is an event log containing sequentially ordered events (the generated patterns mapped on the observations), which occurred during the process describing the system’s evolution through its operational states. The event log, which is built based on the example in Fig. 9 is shown on the left side of Fig. 10.

Causal model discovered from the event log in Table 1

In the second step, the generated event log is fed as input to the PM platform for preprocessing and causal model generation. Information about the log such as min, max, and the mean number of events per Case, the frequency of occurrence of each event, and frequency of Cases are inspected. Based on the cleanliness and complexity of the original data, some filtering might be needed after the analysis, where some events could be filtered to remove low-frequency events, which are considered as outliers or sensor errors. Afterwards, the event logs are exploited to build an accurate and representative causal model for the process. For this task, we utilize the IMf as the PM discovery technique to process the event log data, analyze the dependency relationships between events, and generate state-event graphs models. The output would be a PN with states representing the operational classes and transitions representing the events in the log. The steps for building the Petri Net model from the event log using the IM algorithm are explained in Sect. 3.2.1. The output Petri net model for the event log listed in Table 1 is shown in the right side of Fig. 10.

In what follows, we explain how the three cases in the event log in Fig. 10 are properly modeled using the IM algorithm. From the discovered PN model shown in the figure, it can be noticed that the event P1 is a start event and P5 is an end event, which indicates that the IM algorithm is able to properly represent the event log, since all Cases start with P1 and end with P5 in the event log. Furthermore, in the event log, P1 can be followed by P2 or P3, or P4, which is represented properly in the PN since the firing of the transition P1 will remove a token from the place S1 and add a token in places S2 and S3, thus only transitions P2, P3, and P4 are enabled to fire afterwards (see Sect. 3.1 for the enabling conditions of transition firing). If P3 is fired then the tokens in places S2 and S3 will be removed and a token will be added to each of the places S4 and S5, thus, P2, and P4 are not enabled to fire while P5 is the only enabled transition. This indicates that the PN model represents Case 3 properly. In the situation where P2 is fired instead of P3, then only the token in S2 will be removed and a token will be added in S4, thus P4 will be the only enabled transition. Afterwards, P4 is fired and the token in S3 will be removed, and a token is added in S5 and therefore P5 is enabled afterwards. This represents Case 1 correctly. The same can actually happen if P4 is fired instead of P2, which will only enable P2 afterwards and then P5, which indicates the model represents Case 2 properly.

The dynamics of the system and its trajectory through time can be tracked by observing the tokens’ movements inside the discovered PN model. Causality relationships could be inferred by observing the event sequences and their temporal dependencies in the Petri net’s coverability graph. The coverability graph is created during quantitative analysis, which shows all possible state changes and the transitions responsible in a sequential manner, due to the occurrence of an event or a group of events. By analyzing the graph, operators could gain insights into how certain events could eventually trigger other events causing state transitions. Following the process model discovery, we proceed to the second task of process mining, which is conformance checking. As mentioned previously, conformance checking measures the accuracy and precision of the models by comparing the behavior or event sequences that the model allows with the event log. More specifically, we are interested in measuring the fitness of the model, which is a measure of how many Cases in the event log could be modeled correctly by the discovered model. By ensuring a high fitness score, a representative and trustable model of the system can be possessed.

In a situation where new data is collected, the third task of process mining, which is process enhancement, is used to update the discovered PNs model by only editing to incorporate the novel event sequences observed in the new event log, while preserving most of the structure of the existing discovered model. This is significantly advantageous, as we are able to update the PNs model in an automated fashion whenever new data is collected, which would save time and effort rather than the traditional way of totally reconstructing the model from scratch. Finally, quantitative analysis is performed on the generated Petri Nets model to ensure the correctness of the model in terms of boundness, liveness, and being deadlock-free.

Case study: A black liquor recovery boiler in a Kraft pulp & paper mill

We implemented the proposed methodology on an industrial case study introduced in (Vakkilainen & others, 2005), involving a black liquor recovery boiler (BLRB) in a pulp & paper mill located in Eastern Canada. The BLRB is one of the major equipment in Kraft P&P mills, made up of several units and it is very important to maintain the BLRB process at a high level of efficiency and availability. The BLRB restores the used chemicals in the process, removes wastes and disposable by-products, and combusts the organic components from the black liquor for steam production. A schematic of the BLRB is depicted in Fig. 11.

The proposed causality analysis methodology exploits the sensory data collected from the BLRB in order to generate an event log comprising sequentially ordered events, and afterwards build a process model that represents the evolution of the system and causality relations. The data consists of 75,694 observations including 72 variables: 16 controllable and 56 measured. The observations were collected every five minutes for almost one year with an average of 275 observations per day. The dataset contains two KPIs: Vapeur vs Solide (m3/Kg) (KPI-1), and STR corrigé à 8% cheminée (KPI-2). The KPI-1 is an economical indicator that indicates the ratio of the steam production to the black liquor consumption. The KPI-2 is an environmental indicator and measures the amount of total reduced Sulphide (TRS). The dataset was prepared and pre-processed (including removing noise and outliers) by the process expert and by using the EXPLORE software (NRCan, 2015).

Phase 1: definition of operating regions and pattern extraction from the BLRB dataset

In the initial phase, thresholds for the KPIs are set by the process expert to define the operating regions (classes). For KPI-1 the threshold was chosen to be 3.5 t vapeur/ t solides and 8 pmm for KPI-2. Therefore, 4 operating classes are defined namely, Normal, Abnormal_1, Abnormal_2, Abnormal_3, which are used to label each of the 75,694 observations. The Normal class refers to both KPIs having acceptable values; Abnormal 1 refers to only KPI-1 being acceptable; Abnormal_2 indicates that only KPI-2 is acceptable; and Abnormal_3 refers to neither KPIs having acceptable values. A graphical illustration of this step is shown in Fig. 12, considering the KPIs and the four operating classes defined. As mentioned, the output of this step is labeling the dataset where each observation is assigned one of the operating classes based on each observation’s KPI values. The labels are distributed in the dataset as 35,322 observations for Normal class, 19,458 for Abnormal_1 class, 10,854 for Abnormal_2 class, and 10,060 for Abnormal_3 class.

In the next step, the dataset is imported in Python for pattern generation using DT classifiers with the measured and controllable variables as the input variables, and the operating classes defined from the first step as the target variable. The DTs algorithm was applied on the dataset using the holdout strategy with 80% for training, and 20% for testing using the scikit-learn package (Pedregosa et al., 2011), scoring a test accuracy of 84.25%. We applied the IML enhancement method found in (Dhurandhar et al., 2018) in order to enhance the performance of the DTs classifier (the student) while maintaining its interpretability characteristic to generate meaningful patterns. The method is explained in Phase 1 of the proposed methodology and illustrated in Fig. 8. For the teacher model, we utilized the Random Forests classifier as a powerful model (teacher) to obtain a higher testing accuracy, scoring 92.71% on the testing data. The prediction probabilities from the Random Forest model were used to assign weights to each observation in the dataset (Pedregosa et al., 2011). The DTs model (the student) is trained again with the weighted dataset, to avoid difficult to classify observations, while focusing on the relatively easier observations that would contribute to better generalization performance and higher testing accuracy.

Afterwards, we perform hyperparameter optimization with GridSearch and post-pruning to obtain high-quality patterns that can represent the data and the different operating states of the system. Four hyperparameters were optimized with Gridsearch:

-

min_samples_leaf ranging from (1–10);

-

max_depth ranging from (1–14);

-

min_samples_split ranging from (2–10);

-

criterion = [gini, entropy]

The DT with the highest accuracy was found from the Gridsearch with the following hyperparameters: {min_samples_leaf = 5; max_depth = 5; min_samples_split = 2; criterion = gini}. Post-pruning was performed using the cost_complexity_pruning_path function to avoid overfitting to the training set and to improve the model generalization. The final model obtained after applying the IML enhancement method in (Dhurandhar et al., 2018), hyperparameter optimization, and post-pruning scored 89.95% on the test set. The values of precision recall and F1-Score for each class are shown in the confusion matrix given in Fig. 13. These values are calculated as follows: \(precsion= \frac{TP}{TP + FP}, recall= \frac{TP}{TP + FN}\). The F1-score is calculated based on the average of both precision and recall, defined as: \(F1=2\cdot \frac{precsion \cdot recall}{precsion+recall}=\frac{2TP}{2TP+FP+FN}\), where TP (true positives), FP (false positives), TN (true negatives) and FN (false negative). The readers may be referred to (Witten et al., 2016) for more details.

Furthermore, we used the k-fold cross-validation method which splits the data into k smaller independent sets (folds) to avoid overfitting (Witten et al., 2016). In that method, the classification model is trained k times, each time with k-1 folds for training and the remaining fold is used for testing. The result is the average of the computed accuracies for all testing folds. In this work, we set k = 5 and the obtained testing accuracies were 90.4%, 88.8%, 91.1%, 89.7%, and 88.7%, with a mean of 89.7%. The model generated 32 patterns including 8 ‘Normal’ patterns, 9 ‘Abnormal_1’ patterns, 9 ‘Abnormal_2’ patterns, and 6 ‘Abnormal_3’ patterns. Some of the extracted patterns are represented in Table 2. As shown in the table, each pattern is a combination of IF–THEN rules representing the paths from the top node of the DT classifier to each leaf node.

Phase 2: generating the event log and discovering the causal model for the Reboiler case study

The main objective of using the DTs was for interpretation, i.e., to extract the rules/patterns governing the classification, which is a significant strength of DTs. By applying an algorithm coded in Python, the patterns of the DTs are converted into IF–THEN rules to be mapped to the original dataset. The mapping would allow assigning each observation to one of the 32 extracted patterns, which satisfies its value ranges. The original dataset is now transformed into an event log, which comprises events (the extracted patterns), each with a timestamp and a Case id. In this event log, the Case id represents the events that occurred in one day of operation.

The constructed event log is imported into the ProM 6.9 software (Dongen et al., 2005) for analysis and process discovery. The ProM 6.9 software is one of the most famous open-source process mining tools. The software offers a wide variety of techniques for process discovery. Furthermore, the tool comprises plugins that execute conformance checking and process enhancement by providing new event logs to the existing model extracted in the discovery phase. By analyzing the event log, it was found that some noise exists especially for the Case start events, e.g., some Cases started with an event only one time through the whole event log and this can be considered as noise. Therefore, the ProM package, namely, “Filter Log Based on Heuristics,” was utilized in order to remove noise from the starting events. The preprocessing step can be important, due to the significance of cleaning the event log and filtering the outliers in discovering accurate and representative models. The filtering threshold was adjusted to the default value of 0.8 based on the occurrence frequency. The frequencies of all event occurrence, start events, and end events are shown in Table 3. These frequencies can help the operators identify the persistent as well as the rare events.

The next step is the model/process discovery, where the event log is processed by PM discovery techniques to generate causal models that allow conducting visual and mathematical analysis of the process. Therefore, we ran the IMf technique on the event log with a threshold parameter of 0.3. The Petri net model generated after running the IMf algorithm is depicted in Fig. 14.

As shown in the figure, the start Place is the circle with the blue color, which contains one token (black circle) to start the process. The end Place is the circle with the grey color. When a token reaches the end Place, it corresponds to the ending of a Case, thus a token in the end Place will leave all other Places empty. The discovered model comprises the different patterns (transitions in Petri net notations) represented by the colored rectangles. Each color represents the operating class of the pattern (Normal, Abnormal _1, Abnormal_2, Abnormal_3). The black transitions are silent transitions. These silent transitions do not represent events in the real process or the event log, however, they are important to allow the model to represent some complex sequences in the real process. Event loops and event skipping are examples of such sequences. Undetermenism can be observed in the model where there can be a choice or conflict between two or more events and only one can occur, such as the conflict between P23 and P12. The firing of a transition corresponds to the occurrence of an event in the real process and will cause a change in the distribution of tokens. By simulating the model with a Petri net simulator software like Workflow Petri Net Designer (WoPeD), the dynamics of the system could be observed and the operator can track the system’s trajectory in real-time through the tokens’ movements (marking change). Since each event is classified with one of the operating classes, operators can gain insights into avoiding specific events that would lead to abnormal operation and, thus, maintaining normal KPI values.

Following the model discovery, we conducted conformance checking to calculate the fitness and precision of the generated model. Specifically, we utilized the ‘Replay a log on Petri Net for Conformance Checking’ package in ProM 6.9 in order to compare the Cases found in the log with the discovered Petri net model. The model scored a total Case fitness of 98.66% and a precision score of 100%, where the former measures the percentage of the event log Cases that aligns with the discovered model, while the latter measures how precise the model is in not allowing for extra behavior that does not exist in the event log.

An important trade-off can be found between the accuracy of the DT classifier and the number of extracted patterns. Ultimately, we aim to find a DT model with the highest accuracy, without generating a large or excessive number of patterns. Defining a large number of patterns is subjective to the processes being modeled, however, too many patterns can result in the causal model discovered from process mining being too complex, difficult to visualize and track the system’s trajectory, and in some cases having a low model fitness (accuracy) score. Thus, a minimal number of meaningful and representative patterns is desired, while maintaining a high accuracy from the classification DT model. However, most importantly, these patterns have to accurately reflect the events that happen in the process.