Abstract

In response to concerns about the additional costs and time-to-degree associated with traditional developmental education programs, several states and postsecondary systems have implemented corequisite reform where academically underprepared students take both a developmental education course and college-level course in the same subject area within a single semester. Texas is one of the first and most diverse states to require all public institutions to scale-up corequisite developmental education. In this study, we use longitudinal survey data from the population of public two-year and four-year colleges and universities in Texas to examine heterogeneity in institutional responses to implementation of a statewide corequisite developmental education reform throughout the 4-year scale-up timeline. We provide insight into how challenges, costs, and data-informed efforts differ for postsecondary institutions that were compliant versus non-compliant with the annual statewide targeted participation rates for corequisite enrollment. We conclude with implications for policy and practice to better support statewide corequisite developmental education reform.

Academically underprepared students typically face additional costs and longer time-to-degree due to requirements to take developmental education courses that do not count for college credit. Some students never even begin taking developmental courses even though they are assigned to them, which may be attributed to issues of stigma or discouragement (Bailey & Jaggars, 2016). Other students may fail or drop out of developmental education courses, which are often taught by adjunct instructors with weaker qualifications and less on-campus availability to answer questions relative to full-time instructors. Even among students who begin making progress toward their developmental education requirements, academic momentum may be hindered as the more breaks that occur between courses, the more likely students are to drop out due to external circumstances such as family commitments and financial difficulties.

Several states and postsecondary education systems have sought to reform the developmental education process by offering corequisites where students enroll in a college-level course and a developmental education course in the same subject area during the same semester (Complete College America, 2016). The corequisite model is intended to improve student success through several mechanisms. First, it promotes academic acceleration by allowing students to immediately begin making progress toward their degree requirements, which may set them on a trajectory for future success (Logue et al., 2016, 2019; Miller et al., 2020; Ran & Lin, 2019). It may also reduce stigma by allowing students to take college-level courses and begin earning college credit in their first semester. Second, it provides greater access to college-level courses for students who may be likely to succeed even though they scored below college-ready on a placement test. These tests are not always an accurate predictor of students’ true abilities, so removing the barrier of a stand-alone full-semester course can help these students to progress more quickly (Jaggars et al., 2015; Logue et al., 2016; Ran & Lin, 2019). Third, corequisite reforms usually involve instructional changes to improve the alignment of content between the developmental and college-level courses, which may help students to be more successful in these courses (Jaggars et al., 2015; Ran & Lin, 2019). In 2017, Texas passed House Bill 2223, which made corequisites the primary model of developmental education in math and integrated reading and writing (IRW) (H.B. 2223, 2017). The state set targeted corequisite participation rates ranging from 25% of developmental education enrollments in 2018 to 100% in 2021.
Many of the decisions about how to implement corequisites were left to individual colleges, which required college leaders to make many choices in a short amount of time to meet the state mandates for corequisite scale-up. Our overarching research question asks how challenges, costs, and data-informed efforts differ for postsecondary institutions that were compliant versus non-compliant with the annual statewide targeted participation rates for corequisite enrollment.

In this paper, we explore heterogeneity in institutional implementation across the full scale-up period from 2018 to 2021 of the mandate for corequisite developmental education. We use longitudinal data from a statewide survey of college administrators at nearly all public postsecondary institutions in Texas. We use descriptive statistics to examine how challenges, costs, and data-informed efforts to improve corequisite implementation changed over time as the reform was scaled-up. We also examine differences in implementation among institutions that were compliant versus non-compliant with the annual statewide targeted participation rates for corequisite enrollment by using z-tests to identify statistically significant differences in the proportion of institutions incurring various costs and implementing data-driven efforts.

This study makes a scholarly contribution by demonstrating how sensemaking can be used to understand how stakeholders respond in a new and unknown policy environment. It also makes a contribution to practice by identifying the diversity of challenges that are faced in the implementation and scale-up of corequisite reform along with recommendations to address these challenges. In the following section, we describe prior literature on the implementation of corequisites and use a conceptual framework of sensemaking. Next, we describe our data and methods using longitudinal survey responses from the population of public colleges and universities in Texas from 2018 to 2021. Then we present the results showing how implementation and scale-up differed across the state, as well as variation among institutions that were compliant versus non-compliant with the statewide targets for corequisite participation. We conclude with implications for policy and practice to better support statewide corequisite developmental education reform.

Literature Review and Conceptual Framework

To understand variation across institutions in the implementation and scale-up of corequisite reform, our work is guided by a sensemaking framework that has been utilized in other policy implementation studies in educational settings (e.g., Doten-Snitker et al., 2021; Ehrenfeld, 2022; Klein, 2017; Mokher et al., 2020). Sensemaking is broadly defined as the process that occurs when individuals facing unfamiliar situations are required to make sense of their environment and identify how to respond. This framework provides insight into how individuals take action individually and in relation to others during times of change within the local context (Diehl & Golann, 2023). In educational settings, key stakeholders that influence the sensemaking process include both upper-level administrators and frontline workers such as teachers. Given that each organization has its own unique history, leadership, and personnel, there tends to be considerable heterogeneity in local responses to the same type of education reform.

When new educational policies are initiated, messages regarding the purpose and intended changes from higher-level officials are often misconstrued or reinterpreted at the local level (Coburn, 2001; Diehl & Golann, 2023). These messages may also diverge from educators’ own understandings and beliefs, which can create a sense of conflict (Ehrenfeld, 2022). If educators are able to successfully reconcile tensions in complex policy environments, they may develop a sense of professional achievement; otherwise, they may feel defeated or disappointed (Rom & Eyal, 2019). A common barrier to sensemaking is feelings of threat and fear, which can lead to “rigidity” (Ancona, 2012). In response to these feelings, some individuals may want to continue with business as usual instead of making the changes that are needed to succeed in a new environment. This type of response was seen in a pilot study of the implementation of corequisites at a select group of colleges in Texas prior to the statewide reform, where the majority of participating colleges noted a lack of buy-in from faculty, advisers, and students (Daugherty et al., 2018). Faculty were concerned about job security if they were not fully credentialed, and advisers struggled to deviate from traditional course sequencing options. Additionally, some English faculty preferred to work solely with college-ready students, while students at some colleges were not interested in the offered corequisite course. In order to develop more collaborative responses to organizational change, individuals must understand organizational cultural differences and develop a belief in a shared mission (Doten-Snitker et al., 2021; Klein, 2017). As Klein (2017) notes, “Sensemaking, associated with the awareness of others creates better-informed boundary spanners, who are able to craft more effective strategies for collaborative success” (p. 263).

A “divergent event” occurs when a new change conflicts with the normative expectations of stakeholders (Brown, 2021). For example, Brown (2021) provided a case where a private religious college president struggled to promote a new online education program because it was perceived as the type of practice undertaken by for-profit institutions (market logic) which conflicted with the values associated with non-profit institutions to maximize educational quality (professional logic). The president addressed this divergence by focusing on how online education could enhance prestige and access, rather than using market logic to motivate the decision. Thus, an important role of leaders is developing ways to make changes “fit” within the institution’s existing norms through the expansion of the logic’s boundary.

When implementing changes such as those that occur in a new reform effort, there is typically no single “right” way to adapt to an unknown situation (Ancona, 2012). Instead, responses emerge over time and become clearer upon collecting many different sources of data, including feedback from various stakeholders (Mandinach & Schildkamp, 2021). It is also important for leaders to create opportunities for engagement among various people across the organization to get different perspectives and ideas that may be tested and integrated into the planning process. Both formal and informal interactions with others involved in the same changes can help educators to make sense of these messages (Coburn, 2001; Diehl & Golann, 2023). They may provide opportunities for educators to challenge their assumptions and provide ideas for how to improve practice. During the implementation of another developmental education reform in Florida, this type of engagement occurred through collaborative leadership with key groups of stakeholders, ongoing conversations regarding implementation across campus, and the discussion of new ideas through national or regional professional conferences (Mokher et al., 2020).

In a review of efforts to scale-up changes in higher education, Kezar (2011) found that traditional approaches to scaling up innovation tend to be overly simplistic and evade the depth needed for true change. She identified three elements to improve existing and future educational scale-up projects in the U.S. which consist of deliberation and discussion, networks, and external supports and incentives. The element of deliberation and discussion is rooted in the exchange of ideas through different venues which may include white papers, journals, and other resource materials. Networks refer to the need to build a community to connect and prop-up innovation within education, both on- and off-campus. These networks can be built in either an online community or physically with individuals using existing resources and infrastructure to share ideas and serve a larger purpose of creating a coalition of change and support. External supports and incentives are centered around obtaining existing support from a variety of external stakeholders to facilitate change. These external stakeholders may also assist in influencing internal stakeholders in a positive manner to make changes to support reform efforts.

Another important part of sensemaking is learning from smaller experiments before implementing broader change (Ancona, 2012). After seeing what works well and what doesn’t, adaptations can be made before expanding changes more broadly. In evaluations of developmental math reforms in North Carolina and Virginia (Bickerstaff et al., 2016; Edgecombe, 2011), institutional leaders experimented with different changes to modularized developmental math courses, where students complete personalized learning assignments through a modularized computer program (this is similar in format to NCBOs in Texas). Some of the courses were offered as “shell courses” that were self-paced, while others had an instructor that dictated the course pace for the entire class. Course transcript data indicated that most students made slower progress than intended in the shell course, so only some students completed the self-paced format more quickly. In response to this finding, a few of the colleges began asking questions during advising sessions about students’ abilities to learn in a self-paced environment to determine which students may benefit from this option. Some colleges also experimented with different ways to set pacing benchmarks and provide incentives for students to improve student progressions through the shell courses.

In the context of the statewide scale-up of Texas’ corequisite reform, we anticipate that institutions may respond differently in their approach to implementation and their perceptions of the challenges faced based on how feasible it is for them to comply with the state mandates. We also examine ways in which institutions engage in data-informed efforts as part of the sensemaking process to assess their progress and develop further modifications to support implementation and scale-up.

Methodology

Context

Texas House Bill 2223 (2017) required all public postsecondary institutions to scale up implementation over time so that the percentage of underprepared students enrolled in corequisites was 25% by Fall 2018, 50% by Fall 2019, and 75% by Fall 2020. Additional changes to the administrative code further scaled up corequisite implementation to 100% for Fall 2021. We note, though, that while this policy applied to the vast majority of students, there were exemptions from these corequisite participation requirements for students from the lowest levels of academic preparation in adult basic education programs, as well as students classified as English for Speakers of Other Languages (ESOL).

Corequisites could be offered in a standard classroom format or using an intervention model such as tutoring or supplemental instruction. Within the standard classroom format, courses could be offered concurrently, where students take both the developmental and college-level components at the same time for the full duration of the term, or sequentially, where students take the developmental component in the first half of the term followed by the college-level component in the second half. The intervention models could be offered either in a group format or a self-paced format. Additionally, colleges could decide on the intensity of the developmental component of the corequisite course, ranging from less than one credit hour to four credit hours.

This study seeks to examine how challenges, costs, and data-informed efforts differ for postsecondary institutions that were compliant versus non-compliant with the annual statewide targeted participation rates for corequisite enrollment. Specifically, we address the following research questions:

1. How did institutional self-reported participation rates in corequisites compare to the statewide targets for corequisite participation in each year of scaling up the reform?

2. How did challenges to implementation faced by institutions change over time during the scaling up of the corequisite reform?

3. What types of costs did institutions incur during the implementation and scale-up of the corequisite reform?

4. What types of data-informed efforts to improve corequisites did institutions engage in during the implementation and scale-up of the corequisite reform?

Data

The Texas Higher Education Coordinating Board (THECB) has been administering a Developmental Education Program Survey (DEPS) to all public colleges annually since 2010. The survey is completed by college staff familiar with developmental programs, such as provosts, developmental department chairs, and vice presidents of academic affairs. The survey has a high response rate since it is administered by state officials, with 93–100% of colleges completing the survey in each year of our analysis. There were 101 responses each in 2018 and 2019, 93 responses in 2020, and 100 responses in 2021, for a total sample size of 395 institutional responses over four years. This includes public institutions in both the two-year and four-year sectors throughout the state.

Since the implementation of HB 2223, the DEPS has focused on the implementation of corequisites and included questions on topics such as the availability of resources to implement corequisite courses, challenges to implementation, course formats, costs incurred due to corequisite implementation, and adoption of data-informed efforts to improve implementation. We use data from Fall 2018 to Fall 2021 which represents the full scale-up timeline for the reform with targeted corequisite participation rates from 25 to 100% of developmental education enrollments.

Analyses

To address research question 1, we collected responses from survey questions where institutions were asked to report the total number of students enrolled in developmental education courses and the number of students enrolled in corequisite developmental education courses during each year of the reform’s implementation. We used this information to calculate institution-level corequisite participation rates for each year. For each subject area (math and IRW), we categorized the institutions as either compliant or non-compliant with the statewide targets for corequisite participation for the year.
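The classification step can be illustrated with a short Python sketch. This is not the study's own code: the function names and any enrollment counts passed to them are hypothetical, the targets are the ones listed in the Context section, and the sketch assumes that an institution is treated as compliant when its rate meets or exceeds the target for that year. How exempt students were handled in the survey counts is not specified here, so the sketch ignores exemptions.

```python
# Statewide corequisite participation targets by fall term
# (values taken from the Context section of this paper).
TARGETS = {2018: 0.25, 2019: 0.50, 2020: 0.75, 2021: 1.00}


def participation_rate(coreq_enrolled, de_enrolled):
    """Share of developmental education (DE) students enrolled in corequisites.

    Returns None when no DE enrollments are reported, because the rate
    is undefined in that case.
    """
    if de_enrolled <= 0:
        return None
    return coreq_enrolled / de_enrolled


def is_compliant(coreq_enrolled, de_enrolled, year):
    """Assumed rule: compliant if the rate meets or exceeds that year's target."""
    rate = participation_rate(coreq_enrolled, de_enrolled)
    if rate is None:
        return None
    return rate >= TARGETS[year]
```

The rule would be applied separately for math and IRW, since compliance is categorized by subject area.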

For research question 2, we used descriptive statistics to examine changes over time as institutions scaled up corequisite enrollments. Survey questions about challenges to the implementation of corequisites were on a five-point scale ranging from “not challenging” (1) to “very challenging” (5). This component of the analysis uses repeated measures where the same group of institutions is measured twice. The first measurement occurs during year 1 of corequisite implementation (2018) and the second occurs during year 4 (2021), the final year of the scale-up timeline. Following other similar studies using pre- and post-survey data (Liechty et al., 2022; Petersen et al., 2020; Prevost et al., 2018), we use a Wilcoxon signed rank test as a non-parametric procedure to compare ordinal values among two dependent samples (repeated measures). This test compares the distribution of values to determine whether one measurement has systematically larger values than the other (Abbott, 2014). The null hypothesis is that both samples are from the same population, indicating no difference. The hypothesis is tested by computing the Wilcoxon signed rank statistic, W, which is based on the sum of the ranks of the paired differences between the two measurements. For this research question, we also compare the level of challenge associated with the implementation of corequisites in compliant versus non-compliant institutions. These analyses are conducted using a Wilcoxon rank-sum test to compare the two independent samples.
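As an illustration of the paired comparison, the following Python sketch computes the two rank sums that underlie a signed rank test. It is a simplified example under stated assumptions, not the procedure used to produce the results: the function name and the ratings in the usage lines are hypothetical, zero differences are dropped, and tied absolute differences receive the average of their ranks. In practice a statistics package (for example, `scipy.stats.wilcoxon`) would be used to obtain the p-value.

```python
def signed_rank_sums(first, second):
    """Return (W_plus, W_minus) for paired ordinal ratings.

    Differences are second minus first. Zero differences are dropped and
    tied absolute differences share the average of their ranks.
    """
    diffs = [b - a for a, b in zip(first, second) if b != a]
    # Indices of the differences, ordered by absolute size.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next absolute difference is tied.
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1  # mean of 1-based ranks in the block
        for position in range(start, end + 1):
            ranks[order[position]] = average_rank
        start = end + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical challenge ratings (1-5) for five institutions in the
# first and last survey years; these are not values from the DEPS.
ratings_first_year = [4, 3, 5, 2, 4]
ratings_last_year = [2, 3, 4, 3, 1]
w_plus, w_minus = signed_rank_sums(ratings_first_year, ratings_last_year)
```

A much larger sum for negative differences than for positive ones would point toward ratings declining between the two years.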

For research question 3, we used responses to questions about cost investments and data-informed efforts to improve corequisite implementation, where respondents were asked to “check all that apply” among a list of potential options. The responses to these questions were tabulated across institutions to indicate whether each of these items was ever selected during the scale-up period. For research question 4, we examined the percent of institutions that reported using different types of data-informed efforts to support corequisite implementation and scale-up, such as making policy or program changes and establishing student success measures. For both research questions 3 and 4, we used z-tests to determine whether there were statistically significant differences in the proportions for institutions that were in compliance with the statewide targets for corequisite participation relative to institutions that were not in compliance. This approach has been used in other similar studies that have compared the equivalency of proportions for two groups (e.g., McCauley, 2022; Park et al., 2016). The null hypothesis is that the proportions of the two populations are the same using a two-sided test (Moore & McCabe, 2003). To test this hypothesis, a z statistic is computed with a pooled standard error that uses information from both samples.
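The pooled two-proportion z-test described above can be sketched as follows. The function name is hypothetical and the sketch is a generic textbook version of the test, not the study's own code; it takes the number of institutions selecting an item and the group size for each of the two groups.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for equality of two proportions with a pooled SE.

    x1, x2: number of institutions in each group selecting an item.
    n1, n2: number of institutions in each group.
    Returns (z, p_value).
    """
    p1 = x1 / n1
    p2 = x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # proportion under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; z is undefined")
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution:
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

The pooled standard error reflects the null hypothesis that both groups share a single underlying proportion, which is why information from both samples enters the denominator.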

Findings

Scaling Up Corequisite Enrollments Over Time

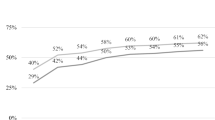

Research question 1 examines how institutional self-reported participation rates in corequisites compared to the statewide targets for corequisite participation in each year of scaling up the reform. We find that Texas institutions quickly scaled up the implementation of corequisites, exceeding the statewide targets for years 1 through 3 (Fig. 1). In the first year, the IRW corequisite participation rate of 62% was more than double the statewide target of 25%. The first-year math corequisite rate of 44% was also well above this statewide target. Yet as the targets increased each year, fewer institutions exceeded these targets. Approximately 9 out of 10 institutions in each subject area had scaled up to 100% participation among eligible students by the year 4 target, which indicates that nearly all institutions achieved full implementation.

Challenges to Implementation

Research question 2 examines how challenges to implementation faced by institutions changed over time during the scale-up of the corequisite reform. Each year respondents were asked to rate the level of challenge faced with potential barriers to implementation on a scale of 1 = “not challenging” to 5 = “very challenging” (Table 1). The challenges where there was a statistically significant decline from 2018 to 2021 include: scheduling corequisite courses (M=-0.56), determining the structure of the corequisite model (M=-0.55), challenges in communicating between faculty and advisors about corequisite options (M=-0.54), challenges in communicating between college-level and DE support faculty and/or staff (M=-0.48), advising students into corequisites (M=-0.46), aligning curriculum of DE course with the college-level course (M=-0.46), and educating students on the structure of corequisites (M=-0.37). Yet there were other challenges with no statistically significant differences from 2018 to 2021. These areas include accessing adequate content, curricula, and resources for corequisite instructors; providing a sufficient number of qualified faculty to teach in a corequisite model; funding corequisite models; having access to sufficient professional development; determining corequisite placement policies; and utilizing Texas Success Initiative Assessment (TSIA) scores for placement decisions.

In addition, we examined whether the types of challenges differed among institutions that were in compliance with the statewide targets for annual corequisite enrollment rates relative to those institutions not in compliance (Table 2). There were no statistically significant differences between the two groups, suggesting that the types of challenges faced were similar regardless of the level of compliance with the statewide targets.

Costs to Corequisite Implementation & Scale-Up

Research question 3 examines the types of costs institutions incurred during the implementation and scale-up of corequisite reform. We examined the percentage of institutions that ever reported each type of cost and found that the most common response (91%) was costs associated with enhancing academic support services such as supplemental instruction and tutoring (Table 3). Other common costs encountered by at least two-thirds of institutions included professional development for instructors of new developmental education courses (78%), compensation to faculty for curriculum development or course redesign work (67%), and development of new online or print materials to explain developmental education and gateway course options (66%).

Among institutions that provided written responses to the types of “other” costs incurred, personnel expenses were most common including hiring additional support personnel, providing overload pay for faculty of corequisite courses, and covering administrative costs associated with reporting. Other non-personnel costs included things such as memberships to organizations like NROC (Network | Resources | Open | College & Career), support for student information systems, and the purchase of an online peer editing program. There were also several respondents who noted that they did not have any costs associated with corequisite reform.

We examined whether there were differences in the types of costs incurred among institutions that were in compliance with 100% participation in corequisites by 2021 relative to non-compliant institutions (Table 3). The three statistically significant differences were that non-compliant institutions were less likely to have reported costs due to the development of early alert systems to identify at-risk students (51% for non-compliant institutions versus 74% for compliant institutions), professional development for instructors of new developmental education courses (68% versus 88%), and the purchase of new technology such as smartboards or document cameras (21% versus 40%).

Data-Informed Efforts to Improve Corequisites

Research question 4 examines the types of data-informed efforts that institutions engaged in to improve corequisite implementation and scale-up. Respondents were asked to report the types of data-informed activities their institutions engaged in to support corequisite implementation. We examined the percent of institutions that ever reported engaging in these activities in any year, and also whether the responses differed for institutions that were in compliance with the 100% corequisite participation target in 2021 versus non-compliant institutions (Table 4). Overall, nearly all institutions reported that they monitored student success (99%), shared data and engaged key stakeholders in conversations around improvements to instruction (91%) and advising (87%), and made policy or program changes (87%).

In addition, we examined whether there were differences in the types of data-informed efforts undertaken by institutions that were compliant versus non-compliant with the 100% corequisite target for 2021. There were no statistically significant differences between compliant and non-compliant institutions, which indicates that they used similar data-informed efforts.

Discussion and Conclusion

In Texas’ statewide corequisite reform, institutions were able to adapt their responses over a four-year scale-up period rather than fully adopting corequisite courses immediately for all students. The majority of institutions were able to meet or exceed the statewide annual targets for scaling up corequisite enrollments, with an average of 9 out of 10 institutions reaching 100% participation by Fall 2021 as intended. During the initial implementation efforts, the most commonly reported challenges related to scheduling, as well as advising and placement of students. These types of challenges tended to be similar for both compliant and non-compliant institutions. Yet many issues became less challenging over time including scheduling corequisite courses, determining the structure of the corequisite model, challenges in communicating between faculty and advisors about corequisite options, challenges in communicating between college-level and DE support faculty and/or staff, advising students into corequisites, aligning curriculum of DE course with the college-level course, and educating students on the structure of corequisites. This is consistent with prior research showing that organizations tend to learn from their early efforts and use these experiences to make adaptations before expanding changes more broadly (Ancona, 2012; Mokher et al., 2020). Institutions in Texas may also have needed to experiment with processes for advising and placing students before finding an approach that worked best in their own contexts.

Not surprisingly, the most common costs encountered by institutions were associated with enhancing academic support services such as supplemental instruction and tutoring. Institutions not in compliance with statewide targets were less likely than compliant institutions to have incurred costs for voluntary additional supports such as early alert systems. We also found that nearly all institutions, regardless of their compliance status, engaged in data-informed efforts to support implementation including monitoring student success, sharing data and engaging key stakeholders in conversations around improvements to instruction and advising, and making policy or program changes.

The prevalence of Texas institutions’ use of practices including monitoring student success, sharing data with key stakeholders, and making changes based on data supports the sensemaking process of engaging in data-informed efforts to assess progress and develop further modifications to support implementation and scale-up. Engaging stakeholders in data use can also provide opportunities to make sense of reform efforts and develop ideas for improving practice (Coburn, 2001; Kezar, 2011; Mokher et al., 2020). Collective engagement in interpreting data is a critical part of the sensemaking process because it may lead the group to identify solutions and develop an action plan that may not be evident in individual efforts to analyze data (Mandinach & Schildkamp, 2021).

In order for large-scale reforms to be successful, policymakers need to ensure that the unique responses from institutions are addressed to overcome the diversity of challenges that are faced. The THECB has been active in supporting institutions through activities such as holding conferences that allow universities to send teams to engage in the identification of a specific problem of practice in implementing the corequisite reform and providing guided support for the development of their institution’s continuous improvement plan. This is important because engaging in a variety of interconnected professional development initiatives can help educators to make sense of new reform efforts in collaboration with their peers rather than working in isolation (Ehrenfeld, 2022). The THECB also provided college readiness and success grants to postsecondary institutions during the early years of implementation to support the scale-up of corequisites. Institutions could determine for themselves the types of resources they needed most, including a wide variety of allowable expenses such as stipends for faculty development of new corequisite courses, instructional materials, information technology to incorporate best practices, and professional development for a wide range of stakeholders. These types of supports are important for helping to ensure that institutions are able to achieve the goals of new reform efforts, and may incentivize internal stakeholders to be supportive of reform efforts (Kezar, 2011). Policymakers in other states interested in corequisite reform should consider the various types of challenges faced by different institutions in Texas, and lessons learned from the response by the THECB.

References

Abbott, M. L. (2014). Understanding educational statistics using Microsoft Excel and SPSS. Wiley.

Ancona, D. (2012). Sensemaking: Framing and acting in the unknown. In S. Snook, N. Nohria, & R. Khurana (Eds.), The handbook for teaching leadership: Knowing, doing, and being (pp. 3–19). Sage.

Bailey, T., & Jaggars, S. S. (2016). Developmental education: When college students start behind. The Century Foundation. https://ccrc.tc.columbia.edu/publications/when-college-students-start-behind.html. Accessed 13 Jul 2020.

Bickerstaff, S., Fay, M. P., & Trimble, M. J. (2016). Modularization in developmental mathematics in two states: Implementation and early outcomes. Community College Research Center. Columbia University. https://ccrc.tc.columbia.edu/media/k2/attachments/modularization-developmental-mathematics-two-states.pdf. Accessed 31 Aug 2021.

Brown, J. T. (2021). The language of leaders: Executive sensegiving strategies in higher education. American Journal of Education, 127(2), 265–302.

Coburn, C. E. (2001). Collective sensemaking about reading: How teachers mediate reading policy in their professional communities. Educational Evaluation and Policy Analysis, 23(2), 145–170. https://doi.org/10.3102/01623737023002145

Complete College America. (2016). Corequisite remediation: Spanning the completion divide: Breakthrough results fulfilling the promise of college access for underprepared students. http://completecollege.org/spanningthedivide/. Accessed 12 Jun 2020.

Daugherty, L., Gomez, C. J., Gehlhaus, D., Mendoza-Graf, A., & Miller, T. (2018). Designing and implementing corequisite models of developmental education: Findings from Texas community colleges. RAND Corporation. https://www.rand.org/content/dam/rand/pubs/research_reports/RR2300/RR2337/RAND_RR2337.pdf. Accessed 13 Aug 2021.

Diehl, D. K., & Golann, J. W. (2023). An integrated framework for studying how schools respond to external pressures. Educational Researcher. https://doi.org/10.3102/0013189X231159599

Doten-Snitker, K., Margherio, C., Litzler, E., Ingram, E., & Williams, J. (2021). Developing a shared vision for change: Moving toward inclusive empowerment. Research in Higher Education, 62, 206–229. https://doi.org/10.1007/s11162-020-09594-9

Edgecombe, N. (2011). Accelerating the academic achievement of students referred to developmental education. CCRC Working Paper No. 30. Community College Research Center, Columbia University. https://files.eric.ed.gov/fulltext/ED516782.pdf. Accessed 13 Aug 2021.

Ehrenfeld, N. (2022). Framing an ecological perspective on teacher professional development. Educational Researcher, 51(7), 489–495. https://doi.org/10.3102/0013189X221112113

H. B. 2223. (2017). Leg., 85th Reg. Sess. (Tex. 2017) (enacted). https://capitol.texas.gov/tlodocs/85r/billtext/html/hb02223i.htm. Accessed 20 Apr 2022.

Jaggars, S. S., Hodara, M., Cho, S. W., & Xu, D. (2015). Three accelerated developmental education programs: Features, student outcomes, and implications. Community College Review, 43(1), 3–26.

Kezar, A. (2011). What is the best way to achieve broader reach of improved practices in higher education? Innovative Higher Education, 36, 235–247. https://doi.org/10.1007/s10755-011-9174-z

Klein, C. (2017). Negotiating cultural boundaries through collaboration: The roles of motivation, advocacy and process. Innovative Higher Education, 42, 253–267. https://doi.org/10.1007/s10755-016-9382-7

Liechty, J. M., Keck, A. S., Sloane, S., Donovan, S. M., & Fiese, B. H. (2022). Assessing transdisciplinary scholarly development: A longitudinal mixed method graduate program evaluation. Innovative Higher Education, 47(4), 661–681. https://doi.org/10.1007/s10755-022-09593-x

Logue, A. W., Watanabe-Rose, M., & Douglas, D. (2016). Should students assessed as needing remedial mathematics take college-level quantitative courses instead? A randomized controlled trial. Educational Evaluation and Policy Analysis, 38(3), 578–598.

Logue, A. W., Douglas, D., & Watanabe-Rose, M. (2019). Corequisite mathematics remediation: Results over time and in different contexts. Educational Evaluation and Policy Analysis, 41(3), 294–315.

Mandinach, E. B., & Schildkamp, K. (2021). Misconceptions about data-based decision making in education: An exploration of the literature. Studies in Educational Evaluation, 69, 1–10. https://doi.org/10.1016/j.stueduc.2020.100842

McCauley, D. (2022). A quantitative analysis of rural and urban student outcomes based on location of institution of attendance. Journal of College Student Retention: Research, Theory & Practice. https://doi.org/10.1177/15210251221145007

Miller, T., Daugherty, L., Martorell, P., Gerber, R., LiCalsi, C., Tanenbaum, C., & Medway, R. (2020). Assessing the effect of corequisite English instruction using a randomized controlled trial. American Institutes for Research. https://collegecompletionnetwork.org/sites/default/files/2020-05/ExpermntlEvidncCoreqRemed-508.pdf. Accessed 5 Nov 2022.

Mokher, C. G., Park-Gaghan, T. J., Spencer, H., Hu, X., & Hu, S. (2020). Institutional transformation reflected: Engagement in sensemaking and organizational learning in Florida’s developmental education reform. Innovative Higher Education, 45, 81–97. https://doi.org/10.1007/s10755-019-09487-5

Moore, D. S., & McCabe, G. P. (2003). Introduction to the practice of statistics. W. H. Freeman and Company.

Park, T., Woods, C. S., Richard, K., Tandberg, D., Hu, S., & Jones, T. B. (2016). When developmental education is optional, what will students do? A preliminary analysis of survey data on student course enrollment decisions in an environment of increased choice. Innovative Higher Education, 41, 221–236. https://doi.org/10.1007/s10755-015-9343-6

Petersen, S., Pearson, B. Z., & Moriarty, M. A. (2020). Amplifying voices: Investigating a cross-institutional, mutual mentoring program for URM women in STEM. Innovative Higher Education, 45, 317–332. https://doi.org/10.1007/s10755-020-09506-w

Prevost, L. B., Vergara, C. E., Urban-Lurain, M., & Campa, H. (2018). Evaluation of a high-engagement teaching program for STEM graduate students: Outcomes of the future academic scholars in teaching (FAST) fellowship program. Innovative Higher Education, 43, 41–55. https://doi.org/10.1007/s10755-017-9407-x

Ran, F. X., & Lin, Y. (2019). The effects of corequisite remediation: Evidence from a statewide reform in Tennessee. Columbia University, Teachers College, Community College Research Center. https://files.eric.ed.gov/fulltext/ED600570.pdf

Rom, N., & Eyal, O. (2019). Sensemaking, sense-breaking, sense-giving, and sense-taking: How educators construct meaning in complex policy environments. Teaching and Teacher Education, 78, 62–74.

Funding

The research reported here was supported by the Institute of Education Sciences, U.S. Department of Education, through Grant R305A210319 to Florida State University. The opinions expressed are those of the authors and do not necessarily represent the views of the Institute or the U.S. Department of Education.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Christine Mokher. The first draft of the manuscript was written by Christine Mokher and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing Interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The conclusions of this research do not necessarily reflect the opinions or official position of the Texas Education Agency, the Texas Higher Education Coordinating Board, the Texas Workforce Commission, or the State of Texas.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mokher, C.G., Park-Gaghan, T.J. Taking Developmental Education Reform to Scale: How Texas Institutions Responded to Statewide Corequisite Implementation. Innov High Educ 48, 861–878 (2023). https://doi.org/10.1007/s10755-023-09656-7

DOI: https://doi.org/10.1007/s10755-023-09656-7