Abstract

The paper presents a fast and stable solver algorithm for structural problems. The key idea is the distance between the eigenvectors of the constrained stiffness matrix and the kernel of the unconstrained stiffness matrix: the coarse motions are close to this kernel. Using lower-frequency deformation modes, we construct an iterative solver that combines domain decomposition expressing near-rigid-body motions, a deflation algorithm, and a two-level algorithm. We remove the coarse space from the solution space and hand the iteration over to the fine space. Because the solver is parallelized, it uses two sets of domain decomposition: one generates the coarse space, and the other is used for parallelization. The basic framework of the solver is the parallel conjugate gradient (CG) method on the fine space, which we use instead of the (simplest) domain decomposition method. We conducted benchmark tests using elastic static analysis of thin plate models. A comparison with a standard CG solver shows the high speed and remarkable stability of the new solver.


1 Introduction

Three-dimensional analysis is a standard technique in structural mechanics. However, it has not yet been widely used in the design, safety assessment, and maintenance of large-scale structures. Instead, frame and lumped-mass models have long been used owing to historical background and to insufficient computer power and computational techniques. In recent years, however, three-dimensional analyses have gradually come into wide use for nuclear power plants (NPPs) [1], high-rise buildings [2], and bridges [3]. For example, Japan’s practice standard for the seismic probabilistic risk assessment (PRA) of NPPs requires a detailed evaluation of the damage limits of buildings and structures using three-dimensional seismic response analysis [4]. In a study of high-rise buildings [2], a three-dimensional elastoplastic seismic response damage analysis was conducted with as many as 74 million degrees of freedom (DOFs), evaluating damage at a level of detail and complexity that frame and lumped-mass analyses cannot capture.

Three-dimensional analyses therefore require both short calculation times and stable convergence. The present study considers these two properties in the development of our solver.

It is widely known that small eigenvalues close to zero hamper the convergence of iterative methods, mainly because the condition number increases with a decrease in the smallest eigenvalue. Many studies have focused on avoiding eigenvalues close to zero [5,6,7,8]. The deflation algorithm mainly aims to eliminate eigenvectors corresponding to eigenvalues close to zero [7,8,9,10,11,12,13,14].
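The link between small eigenvalues and slow convergence can be made explicit with the classical energy-norm error bound for CG on a symmetric positive definite matrix. This is a textbook result, not a contribution of this paper; \(\kappa\) denotes the spectral condition number and \(\boldsymbol{x}_k\) the k-th CG iterate:

```latex
\[
  \kappa(\boldsymbol{A})=\frac{\lambda_{\max}(\boldsymbol{A})}{\lambda_{\min}(\boldsymbol{A})},
  \qquad
  \Vert \boldsymbol{x}-\boldsymbol{x}_k\Vert_{\boldsymbol{A}}
  \le 2\left(\frac{\sqrt{\kappa}-1}{\sqrt{\kappa}+1}\right)^{k}
  \Vert \boldsymbol{x}-\boldsymbol{x}_0\Vert_{\boldsymbol{A}} .
\]
```

For example, if \(\lambda_{\min}\) drops by a factor of 100 while \(\lambda_{\max}\) is unchanged, \(\kappa\) grows by a factor of 100, and the iteration count predicted by this bound grows by roughly \(\sqrt{100}=10\). Deflation targets exactly these smallest eigenvalues.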

In structural problems discretized by finite element analysis (FEA), the stiffness matrix \(\varvec{A}\) is symmetric positive semi-definite. There are six rigid-body motions, namely three translations and three infinitesimal rotations, so zero is an eigenvalue of \(\varvec{A}\) with multiplicity six. We must therefore impose at least six constraint conditions to eliminate the zero eigenvalues. Let \(\varvec{\bar{A}}\) be the stiffness matrix after imposing the constraint conditions, as discussed in Sect. 3. The eigenvalues of \(\varvec{A}\) and \(\varvec{\bar{A}}\) then differ from each other, but they satisfy the so-called interlacing property [15]. Rigid-body modes are widely used in structural analysis for various purposes [10, 16,17,18,19,20, 22, 23].

We assume that the analysis models are constructed with solid elements. Owing to the interlacing property, the rigid-body motions, which form a basis of \(\textrm{Ker}\varvec{A}\) (the kernel of \(\varvec{A}\)), are related to the lower or lowest eigenvalues of \(\varvec{\bar{A}}\). We can construct near-rigid-body modes using a domain decomposition in which each subdomain has six rigid-body modes, and we can set a basis of a coarse space by aggregating the subdomains through a partition of unity. Following [22, 24], we use these six rigid-body motions to simulate low-frequency motions.

This paper discusses the distance between the eigenvectors of \(\varvec{\bar{A}}\) and \(\textrm{Ker}\varvec{A}\). This distance indicates how well the lower-frequency modes are separated from the higher modes.

In our analysis, we use the parallelized conjugate gradient (CG) method on the entire domain as our basic framework instead of the simplest domain decomposition method (DDM) [25]; that is, we do not apply a direct method to the decomposed subdomains. The performances of the DDM and the CG method are compared in the next section, which explains why we chose the CG method.

Our solver uses two sets of domain decomposition. One decomposition is for parallelizing the CG method, and the other is for generating the coarse space. The former corresponds to the subdomains of the DDM, and the latter generates the projection in the deflation algorithm.

Near-rigid-body motions generate a projection from the entire space onto the coarse space. The coarse space is small enough that the reduced equation can be solved using a direct method. We then apply the CG method to the large complementary space, which is much easier to solve than the entire space, because the entire space contains “rough” components generated by lower-frequency modes. Because the solution space is decomposed into a direct sum, our method is a two-level method [13, 26, 27].

Our projection is defined from the coarse motions, which differs from the definitions adopted in many studies (e.g., [7,8,9,10,11,12,13,14]); the difference is described in Sect. 5.2.

The deflated domain decomposition method (DDDM) algorithm described in this paper shares similarities with the algorithm in [7], which expands the Krylov subspace by adding approximate eigenvectors corresponding to eigenvalues close to zero and uses the orthogonality of the residuals; we describe this in Sect. 6.

The DDDM solver outperforms a symmetric successive overrelaxation (SSOR) preconditioned solver, which we regard as a standard solver, as will be discussed in Sect. 6.3. In addition, in [28], an elastic seismic response analysis of an NPP building installed on the ground, modeled with one million DOFs using hexahedral and plate elements and involving 2700 steps for 54 s, took 1.1 h with the DDDM solver, whereas the SSOR solver took 13.8 h. Sixteen parallel processes were used in both cases. That article also discussed the stability of the DDDM solver compared with the SSOR solver with respect to the tolerance range for convergence.

2 Basic framework of the linear solver

2.1 Displacement-based finite element equations

We outline the FEA method used in this paper. Only a static equilibrium state is assumed, and we start from the principle of virtual work. We omit the commonly used isoparametric discretization here; see [21] for a detailed discussion.

(a) Discretization

We consider only solid elements. We approximate the target body as an assembly of a finite number of discrete finite elements, e.g., tetrahedral, hexahedral, or other elements. The nodal points of each element m are described by coordinates x, y, z in a local coordinate system. Let n be the number of DOFs.

We use indicial notation and the summation convention. \(x_i\), \(i = 1, 2, 3\), denotes the coordinate axes of the three-dimensional analysis given at each nodal point, and \(u_i\), \(i = 1, 2, 3\), denotes the displacement components.

(b) Principle of Virtual Work

We take virtual displacements \(\bar{u}_i\) and the corresponding virtual strains \(\bar{\epsilon }_{ij}\), obtained by differentiating \(\bar{u}_i\). We then have the following principle of virtual displacements, which is the fundamental equation for the equilibrium of a general three-dimensional body:

where \(X_i\) are the components of the body force \(\varvec{X}\), \(Y_i\) are the components of the surface traction \(\varvec{Y}\) on the surface S of the body, and \(u_i^{(S)}\) are the displacements on the surface S. The displacement (essential) boundary conditions are given by

The natural boundary condition is given by

on the surface S, where \(\varvec{n}_j\) is the unit normal vector to the surface S. The left side of (1) corresponds to the internal virtual work, and the right side represents the external virtual work.

(c) Finite element equations

Let \(\hat{\varvec{u}}\) be the unknown vector of all the global displacements, where the unknown displacements \(u_i, i = 1, 2, 3\) of the nodal points are globally aligned.

Let \(\varvec{N}^{(m)}\) be a displacement interpolation matrix and \(\varvec{B}^{(m)}\) be the strain–displacement matrix for element m. We write

We assume the virtual displacements and strains are

We further need the constitutive relation between the strain \(\varvec{\epsilon }\) and the stress \(\varvec{\tau }\):

where \(\varvec{D}^{(m)}\) is the elasticity matrix of element m. Substituting \(\varvec{u}^{(m)}\), \(\varvec{\epsilon }^{(m)}\), \(\bar{\varvec{u}}^{(m)}\), \(\bar{\varvec{\epsilon }}^{(m)}\), and \(\varvec{\tau }^{(m)}\) into (1), we have a summation form of the virtual equation for the unknown displacements:

This equation leads to the finite element equation for the displacements \(\hat{\varvec{u}}\) of DOFs of the discretized entire body:

The second term on the right side of (8) corresponds to the constraint conditions of the linear equation, which we describe in the following sections and which make this equation solvable.

As noted above, the explanation here describes the static equilibrium state. However, dynamic and nonlinear dynamic states can be described similarly. The corresponding dynamic equation is:

where \(\varvec{C}\) is a damping matrix. In FEA, the dynamic equation is often solved using, e.g., Newmark’s \(\beta \) method, which converts it into a static equation of the form (9). Nonlinear equations are linearized by, e.g., Newton’s method, which reduces them to linear equations.

We use the symbol \(\varvec{A}\) for the stiffness matrix corresponding to \(\varvec{K}\) in (9), and we focus on the linear equation in this paper:

2.2 Comparison of the DDM and CG method

As noted in Sect. 1, in our DDDM algorithm we use the CG method on almost the entire solution space instead of the DDM. Here, the DDM refers to the simplest iterative substructuring method, using a domain decomposition in which neighboring subdomains overlap only on their boundaries. Direct methods are applied to the interior of each subdomain with displacement boundary conditions on the boundaries. It is not easy to compare the performance of the DDM with that of the CG method, although the two methods were compared in previous work [29], where the advantages of the CG method were demonstrated under some conditions. The performance depends on the models, elements, boundary conditions, multi-point constraints (MPCs), materials, and especially the sparsity of the stiffness matrix. We compare the two methods through a simple static analysis of simple models.

Note that the results in this section are not general. The objective of this section is to show that, for our restricted but simple and standard examples, the CG method performs better than the DDM, which is why we apply the CG method to the entire space. Importantly, the simple structure of the CG method benefits the DDDM algorithm.

We used the open-source structural analysis code Adventure [30] to compare the DDM and CG methods. Adventure provides (a) the simplest DDM with diagonal scaling, (b) the balancing domain decomposition (BDD) method, and (c) the parallel CG method in its simplest form with diagonal scaling. Here, we used options (a) and (c). The CG method is parallelized, but only a single process was used. The CG method shares its code with the DDM and can thus be compared fairly with it. The computer was a cluster with Intel Xeon Platinum 9242 processors operating at 2.3 GHz, six nodes with 96 cores per node, 384 GB of RAM (16 GB DDR4-2933 \(\times \) 24), and InfiniBand EDR networking (100 Gbps). We assume a single thread in all the following cases.

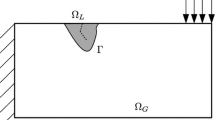

Fig. 1 Thin-plate model with dimensions of \(6\textrm{mm}\times 1\textrm{mm}\times 6\textrm{mm}\). We refer to it as the “\(6\times 1\times 6\)” model; it is used to compare the performance of the CG method and the DDM in a cantilever-type dead-weight analysis. This model is used with various dimensions in later sections.

We use a plate model built by arranging linear hexahedral elements of \(1\textrm{mm}\times 1\textrm{mm}\times 1\textrm{mm}\), as shown in Fig. 1, and conducted cantilever-type analyses as follows. The plate has x, y, and z elements along the x, y, and z axes, respectively, and we refer to it as “\(x\times y\times z\).” The material is standard steel with Young’s modulus of 200 GPa and Poisson’s ratio of 0.3. The plate was rotated \(90^\circ \) around the x axis in the direction of \(-y\). Its xy surface (\(z=0\)) was then fully constrained, and a dead weight was applied in the \(-y\) direction. Here, y corresponds to the thickness of the plate, whereas z corresponds to its length.

The eight models had dimensions from \(50 \times 5 \times 50\) up to \(200 \times 10 \times 200\), as shown in Table 1.
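For orientation, the number of unknowns in these plate models follows directly from the grid. The sketch below assumes a structured grid of 8-node (linear) hexahedra with three displacement DOFs per node, and counts the DOFs removed by fully constraining the \(z=0\) face; `hexa_plate_dofs` is a hypothetical helper, and its values are not taken from Table 1.

```python
def hexa_plate_dofs(nx: int, ny: int, nz: int) -> tuple[int, int]:
    """Return (total DOFs, DOFs left after fully constraining the z = 0 face)
    for an nx x ny x nz structured grid of 8-node (linear) hexahedral elements.
    Assumes three displacement DOFs (u_1, u_2, u_3) per nodal point."""
    nodes = (nx + 1) * (ny + 1) * (nz + 1)   # nodal points of the structured grid
    total = 3 * nodes                         # three displacement DOFs per node
    fixed = 3 * (nx + 1) * (ny + 1)           # every DOF on the xy face (z = 0)
    return total, total - fixed


print(hexa_plate_dofs(50, 5, 50))
```

For instance, `hexa_plate_dofs(50, 5, 50)` gives `(46818, 45900)`, and the largest model, \(200\times 10\times 200\), has 1,333,233 DOFs before the constraints are imposed.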

We used up to 16 cores in the calculations. For each model, we ran the DDM with 2, 4, 8, and 16 processes on the same number of processor cores and compared the results with those of the CG method without parallelization, giving five cases per model. Table 1 compares the elapsed wall-clock times until convergence. The tolerance of the residual error for convergence was set to \(1.0\times 10^{-7}\).
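Option (c), CG with diagonal scaling, amounts to Jacobi-preconditioned CG. The serial sketch below assumes a dense NumPy matrix and a relative-residual stopping test \(\Vert \varvec{r}_k\Vert _2/\Vert \varvec{b}\Vert _2<10^{-7}\); Adventure’s actual stopping rule and data structures may differ, and `diag_scaled_cg` is a hypothetical name.

```python
import numpy as np


def diag_scaled_cg(A, b, tol=1.0e-7, max_iter=None):
    """Jacobi (diagonal-scaling) preconditioned CG for a symmetric positive
    definite A.  Stops when ||r_k||_2 / ||b||_2 < tol; returns (x, iterations)."""
    n = b.shape[0]
    max_iter = 10 * n if max_iter is None else max_iter
    x = np.zeros(n)
    b_norm = np.linalg.norm(b)
    if b_norm == 0.0:
        return x, 0
    d_inv = 1.0 / np.diag(A)                # preconditioner M^{-1} = diag(A)^{-1}
    r = np.array(b, dtype=float)            # r_0 = b - A x_0 with x_0 = 0
    z = d_inv * r
    p = z.copy()
    rz = r @ z
    for k in range(1, max_iter + 1):
        q = A @ p
        alpha = rz / (p @ q)                # step length along p
        x += alpha * p
        r -= alpha * q
        if np.linalg.norm(r) / b_norm < tol:
            return x, k
        z = d_inv * r                       # apply the diagonal scaling
        rz_new = r @ z
        p = z + (rz_new / rz) * p           # new A-conjugate search direction
        rz = rz_new
    return x, max_iter
```

Usage: `x, its = diag_scaled_cg(A, b)` with an assembled, constrained stiffness matrix `A`; in a parallel code, the matrix-vector product and the two inner products are the only steps that need communication.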

Table 1 shows irregularities in the DDM results. For example, the calculation time increases from two to four processes for the \(50\times 5\times 50\) and \(100\times 5\times 100\) models. Fig. 2 illustrates this irregularity for the \(100\times 5\times 100\) model.

2.3 Basic framework of the linear solver

The previous section showed that the CG method can serve as the basic framework. The well-known solver algorithms FETI (Finite Element Tearing and Interconnecting) [17, 18] and BDD [19, 20] both depend on the DDM in the sense that the entire domain is decomposed into non-overlapping subdomains, a direct method is applied to each subdomain, and the solution is divided into the rigid-body motion and the motion that includes strain. The rigid-body motions of the subdomains are solved globally, and the subdomains are treated as structural objects. The FETI method takes the surface tractions on the inner boundaries between neighboring subdomains as the unknowns, whereas the BDD method takes the displacements on these boundaries. The CG iterations then remove the gaps in the displacements across the boundaries in the FETI method and the gaps in the surface tractions across the boundaries in the BDD method.

As noted in Sect. 1, our approach applies the CG method to the entire space, in contrast to the DDM. Our solver uses two classes of domain decomposition: one parallelizes the CG method, and the other generates the coarse space based on the rigid-body motions. The number of subdomains for the parallel CG method equals the number of parallel processes. In our experience, the number of subdomains used in the deflation algorithm should be much greater than the number used in the parallel CG method.

In the FETI and BDD methods, the rigid-body motions are used to build the approximate motions of the subdomains during the iterations, whereas in our method, we use the rigid-body motions to construct lower-frequency modes.

2.4 DDDM: deflated domain decomposition method

The strategy of our algorithm is summarized as follows.

(a) We apply the parallel CG method to the entire domain as the basic framework and decompose the entire domain into subdomains corresponding to the parallel processes. This is a “nodal-point-based” decomposition.

(b) Separately and independently of the domain decomposition in (a), the entire domain is decomposed into non-overlapping subdomains, which approximate lower-frequency modes (i.e., the coarse-grid modes) using the rigid-body motions. This is an “element-based” decomposition.

(c) The deflation algorithm removes the lower-frequency components from the solution based on the domain decomposition in (b). As will be explained in Sect. 4, some lower-frequency eigenvectors are close to the kernel of the unconstrained stiffness matrix, and we construct the lower-frequency modes using a basis of this kernel. Removing the lower-frequency modes gives the solver its high speed and stability.

(d) In the parallel CG method, we distribute the generation of the coarse-grid motions among the parallel processes of the CG method.

3 Rewriting of the stiffness matrix

Let \(\varvec{A} \in \textbf{R}^{n\times n}\) be a stiffness matrix without constraint conditions, i.e., a symmetric positive semi-definite matrix. \(\varvec{A}\) has six zero eigenvalues, and the six corresponding eigenvectors are the rigid-body motions. Our problem is to solve

by imposing the necessary constraint conditions. Let \(r\ge 6\) be the number of constraint conditions. Renumbering the DOFs so that the r constrained DOFs come first, in ascending order, we rewrite \(\varvec{A}\) as:

where \(\varvec{\bar{A}}\) is a symmetric positive definite matrix. We rewrite (12) in the form:

where

We further rewrite the components \(x_{r+1},\dots ,x_n\) as \(x_1,\dots ,x_{n-r}\) and rewrite \(b_{r+1},\dots ,b_n\) as \(b_1,\dots ,b_{n-r}\). We thus write

The original constrained components \(x_1,\dots ,x_r\) enter \(\bar{\varvec{b}}\) through their product sums with the corresponding entries of \(\varvec{A}\) in (12), which are moved to the right-hand side. To evaluate the distance between \(\varvec{\bar{x}}\) and the kernel of \(\varvec{A}\) in the following sections, we identify \(\varvec{\bar{x}}\in \textbf{R}^{n-r}\) with \(\varvec{x}'\in \textbf{R}^n\) given above. Our problem (12) is then rewritten as

This identification embeds \(\textbf{R}^{n-r}\) in \(\textbf{R}^n\) as a subspace.
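The elimination of the constrained DOFs and the identification of \(\varvec{\bar{x}}\) with \(\varvec{x}'\) can be sketched as follows. Instead of physically moving the constrained DOFs to the top as in (12), the sketch works with index sets, which is equivalent up to a permutation; the helper names `eliminate_constraints` and `embed` are hypothetical, and dense NumPy arrays are assumed.

```python
import numpy as np


def eliminate_constraints(A, b, fixed, x_fixed):
    """Reduce A x = b by prescribing x[fixed] = x_fixed.
    Returns (A_bar, b_bar, free), where A_bar = A[free, free] and
    b_bar = b[free] - A[free, fixed] @ x_fixed (prescribed values moved to the RHS)."""
    n = A.shape[0]
    fixed = np.asarray(fixed)
    free = np.setdiff1d(np.arange(n), fixed)        # remaining n - r DOFs, ascending
    A_bar = A[np.ix_(free, free)]
    b_bar = b[free] - A[np.ix_(free, fixed)] @ x_fixed
    return A_bar, b_bar, free


def embed(x_bar, free, n, fixed=None, x_fixed=None):
    """Identify x_bar in R^{n-r} with x' in R^n.  Constrained entries are zero
    unless prescribed values are supplied."""
    x = np.zeros(n)
    x[free] = x_bar
    if fixed is not None:
        x[fixed] = x_fixed
    return x
```

With homogeneous constraints (`x_fixed` all zero), `embed(x_bar, free, n)` is exactly the zero-padded vector \(\varvec{x}'\) used in Sect. 4.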

Let

be the eigenvalues of \(\varvec{A}\), and let

be the eigenvalues of \(\varvec{\bar{A}}\).

The relationship between \(\lambda _i,~(1\le i< n)\) and \(\bar{\lambda }_i~(1\le i< n-r)\) is given by the so-called interlacing property [15]:

where \(\lambda _1^{(0)},\cdots ,\lambda _n^{(0)}\) are the eigenvalues of \(\varvec{A}\), i.e., aliases of \(\lambda _1,\cdots ,\lambda _n\) in (18), and \(\lambda _1^{(r)},\cdots ,\lambda _{n-r}^{(r)}\) are those of \(\varvec{\bar{A}}\), i.e., aliases of the eigenvalues in (19). From this relation, we obtain the following relationship between the intervals spanned by the minimum and maximum eigenvalues as the number of constraint conditions increases:

Relation (21) shows that the intervals containing the eigenvalues shrink monotonically as constraints are added: each interval is contained in the interval for a smaller number of constraints. In particular, the range of the eigenvalues (19) is contained in the range of the eigenvalues (18).
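The interlacing property can be checked numerically. The sketch below uses the standard Cauchy interlacing form for deleting the first r rows and columns of a symmetric matrix, with eigenvalues in ascending order, \(\lambda _i(\varvec{A})\le \lambda _i(\varvec{\bar{A}})\le \lambda _{i+r}(\varvec{A})\); we assume this matches the indexing of (20). The helper name and the random test matrix are illustrative only.

```python
import numpy as np


def check_interlacing(A, r, tol=1e-10):
    """Cauchy interlacing after deleting the first r rows/columns of symmetric A:
    lam_i(A) <= lam_i(A_bar) <= lam_{i+r}(A), all eigenvalues in ascending order."""
    lam = np.linalg.eigvalsh(A)                    # n eigenvalues, ascending
    lam_bar = np.linalg.eigvalsh(A[r:, r:])        # n - r eigenvalues, ascending
    m = lam_bar.size
    lower_ok = np.all(lam[:m] <= lam_bar + tol)
    upper_ok = np.all(lam_bar <= lam[r:r + m] + tol)
    return bool(lower_ok and upper_ok)


# A positive semi-definite matrix with a six-dimensional kernel,
# mimicking an unconstrained stiffness matrix.
rng = np.random.default_rng(0)
B = rng.standard_normal((30, 24))
A = B @ B.T
print([check_interlacing(A, r) for r in (6, 7, 9)])
```

The nested intervals of (21) follow directly: the smallest eigenvalue of \(\varvec{\bar{A}}\) cannot fall below that of \(\varvec{A}\), and its largest eigenvalue cannot exceed that of \(\varvec{A}\).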

We let the eigenvectors corresponding to the eigenvalues (19) be

The eigenvalues of \(\varvec{A}\) and \(\varvec{\bar{A}}\) are close in the sense of (21), which motivates considering the distance between the eigenvectors of \(\varvec{\bar{A}}\) and the kernel of \(\varvec{A}\).

4 Distance between the eigenvector and the kernel of the unconstrained stiffness matrix

4.1 Coarse grid matrix

Let \(\varvec{A}\in \textbf{R}^{n\times n}\) be the unconstrained stiffness matrix, as noted above. In this section, we discuss the distances between \(\textrm{Ker}\varvec{A}\) and the eigenvectors of \(\varvec{\bar{A}}\).

Let \(\Omega \) be the target analysis model and \((x_i,y_i,z_i)\), \(1\le i\le n/3\), be the nodal points of \(\Omega \). All the elements are assumed to be solid elements. Following the descriptions given in [22, 24], let

where \((x_i,y_i,z_i)\) denotes the coordinates of nodal point i. Each column of \(\varvec{\Phi }\) is normalized. We refer to \(\varvec{\Phi }\) as a coarse-grid matrix.

The first three columns correspond to the translations of the structure, and the last three correspond to infinitesimal rotations about the coordinate axes. These six columns are linearly independent and form a basis of \(\textrm{Ker}\varvec{A}\), i.e., a basis of the rigid-body motions of \(\Omega \). We write these as \(\varvec{f}_1,\,\dots ,\,\varvec{f}_6\):

We set the first r rows of these vectors, which correspond to the constraint conditions, to zero and write the results as \(\varvec{\bar{f}}_1,\,\dots ,\,\varvec{\bar{f}}_6\). According to the rule described in Sect. 3, we identify \(\varvec{\bar{f}}_i\in \textbf{R}^{n-r}\) with \(\varvec{f}_i'\in \textbf{R}^n\):

\(\varvec{\Phi }\) is rewritten as \(\varvec{\Phi }'\in \textbf{R}^{n\times 6}\) or \(\varvec{\bar{\Phi }}\in \textbf{R}^{(n-r)\times 6}\) corresponding to (14) or (17):

where [r/3] denotes the integer part of r/3. In the expression for \(\varvec{\Phi }'\), the first r rows form the zero matrix in \(\textbf{R}^{r\times 6}\), whereas in \(\varvec{\bar{\Phi }}\) these r rows are removed. For simplicity, we assume that the active rows in both (26) and (27) start from the row \((1~~ 0~~ 0~~ 0~~ z_1~~-y_1)\); in general, the form of the first three active rows depends on whether r is a multiple of 3, because n is a multiple of 3 but r need not be. We also refer to \(\varvec{\Phi }'\) and \(\varvec{\bar{\Phi }}\) as coarse-grid matrices, like \(\varvec{\Phi }\) defined by (23).

In the following, we assume the form of the equation for our problem to be (17), and accordingly, the form of the coarse grid matrix is taken to be (27); i.e., \(\varvec{\bar{\Phi }}\).
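The coarse-grid matrix (23) can be built directly from the nodal coordinates. The sketch assumes DOFs ordered node by node as \((u_x,u_y,u_z)\), so that the x-row of a node is \((1~0~0~0~z~-y)\) as in (26) and (27). To check that the columns lie in the kernel without assembling solid elements, it uses a hypothetical stand-in: a 3D truss in which every pair of nodes is joined by an axial spring. Any unconstrained linear-elastic stiffness matrix of a rigid structure has the same six-dimensional kernel.

```python
import numpy as np


def rigid_body_modes(coords):
    """Coarse-grid matrix Phi (3m x 6) for m nodes with coordinates (x, y, z).
    Columns: translations in x, y, z, then infinitesimal rotations about x, y, z;
    each column is normalized.  DOFs are ordered (u_x, u_y, u_z) node by node."""
    m = coords.shape[0]
    x, y, z = coords[:, 0], coords[:, 1], coords[:, 2]
    Phi = np.zeros((3 * m, 6))
    Phi[0::3, 0] = 1.0                       # translation in x
    Phi[1::3, 1] = 1.0                       # translation in y
    Phi[2::3, 2] = 1.0                       # translation in z
    Phi[1::3, 3], Phi[2::3, 3] = -z, y       # rotation about x: (0, -z, y)
    Phi[0::3, 4], Phi[2::3, 4] = z, -x       # rotation about y: (z, 0, -x)
    Phi[0::3, 5], Phi[1::3, 5] = -y, x       # rotation about z: (-y, x, 0)
    return Phi / np.linalg.norm(Phi, axis=0)


def truss_stiffness(coords, k=1.0):
    """Hypothetical stand-in for an unconstrained stiffness matrix:
    every pair of nodes is joined by an axial spring of stiffness k."""
    m = coords.shape[0]
    K = np.zeros((3 * m, 3 * m))
    for i in range(m):
        for j in range(i + 1, m):
            e = coords[j] - coords[i]
            e = e / np.linalg.norm(e)        # unit bar direction
            k_e = k * np.outer(e, e)
            I, J = slice(3 * i, 3 * i + 3), slice(3 * j, 3 * j + 3)
            K[I, I] += k_e
            K[J, J] += k_e
            K[I, J] -= k_e
            K[J, I] -= k_e
    return K


rng = np.random.default_rng(1)
coords = rng.random((8, 3))
K = truss_stiffness(coords)
Phi = rigid_body_modes(coords)
print(np.abs(K @ Phi).max())                 # close to machine precision
```

Given the index array `free` of unconstrained DOFs, \(\varvec{\bar{\Phi }}\) in (27) corresponds to `Phi[free]`, and \(\varvec{\Phi }'\) in (26) to `Phi` with the constrained rows set to zero.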

4.2 Distance between the eigenvector and the kernel of the unconstrained stiffness matrix

In this section, we consider the \(L^2\) distance between the eigenvector \(\bar{\varvec{x}}\) of \(\varvec{\bar{A}}\), which we identify with \(\varvec{x}'\), and \(\textrm{Ker}\varvec{A}\).

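Let U be a subspace of \(\textbf{R}^n\). The distance between a point \(\varvec{x}\in \textbf{R}^n\) and U is the distance between \(\varvec{x}\) and the closest point of U. We denote this distance as

$$\begin{aligned} \textrm{dist}\,(\varvec{x},\,U)=\min _{\varvec{u}\in U}\Vert \varvec{x}-\varvec{u}\Vert _2. \end{aligned}\tag{28}$$

Let \(\varvec{P}\) be the orthogonal projection from \(\textbf{R}^n\) onto U. This distance is then equal to

$$\begin{aligned} \textrm{dist}\,(\varvec{x},\,U)=\Vert \varvec{x}-\varvec{P}\varvec{x}\Vert _2. \end{aligned}\tag{29}$$

In particular, if \(\Vert \varvec{\varvec{x}}\Vert _2=1\), then

$$\begin{aligned} \textrm{dist}\,(\varvec{x},\,U)=\sqrt{1-\Vert \varvec{P}\varvec{x}\Vert _2^2}. \end{aligned}\tag{30}$$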

The projection from \(\textbf{R}^n\) to \(\textrm{Ker}\varvec{A}\) is given by

where \(\Phi \) is defined by (23).

In the following, we evaluate the distance between the eigenvector \(\bar{\varvec{x}}\in \textbf{R}^{n-r}\) of \(\varvec{\bar{A}}\) and \(\textrm{Ker} \varvec{\varvec{A}}\). According to the rule described in Sect. 3, we identify \(\bar{\varvec{x}}\in \textbf{R}^{n-r}\) and \(\varvec{x}'\in \textbf{R}^n\). We consider the distance to be

Additionally, we discuss, in the following theorem, the angle (minimal angle) between a point \(\varvec{x}\in \textbf{R}^n\) with the unit norm and a subspace U, which is defined as in [31]

Theorem 1

(Distance between the eigenvector and the kernel of the unconstrained stiffness matrix)

Let the eigenvalues and the corresponding eigenvectors of \(\varvec{\bar{A}}\) be given by (19) and (22), respectively. We then have the following properties.

(1) For arbitrary \(1\le k\le n-r\), \(\varvec{x}_k'\notin \textrm{Ker}\varvec{A}\), and accordingly,

$$\begin{aligned} \textrm{dist}\,(\varvec{x}_k',\,\textrm{Ker}\varvec{A})>0. \end{aligned}\tag{34}$$

(2) If \(k<n-r\) is large enough, then \(\varvec{x}_k'\) is nearly orthogonal to \(\textrm{Ker}\varvec{A}\), and accordingly,

$$\begin{aligned} \textrm{dist}\,(\varvec{x}_k',\,\textrm{Ker}\, \varvec{A})\approx 1. \end{aligned}\tag{35}$$

(3) If \(k\ge 1\) is small enough, then

$$\begin{aligned} \textrm{dist}\,(\varvec{x}_k',\,\textrm{Ker}\varvec{A})\approx 0. \end{aligned}\tag{36}$$

In particular, if \(\varvec{x}_k'\) is the fundamental mode \(\varvec{x}_1'\), then \(\textrm{dist}\,(\varvec{x}_1',\,\textrm{Ker} \varvec{A})\) takes the smallest value.

Proof

As noted previously, imposing the r constraint conditions leaves \(n-r\) eigenvalues and eigenvectors, indexed by \(1\le k\le n-r\).

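We prove (1). The first r components of \(\varvec{x}_k'\) are zero, and its remaining components form \(\bar{\varvec{x}}_k\ne \varvec{0}\). Hence \({\varvec{x}_k'}^T\varvec{A}\varvec{x}_k'=\bar{\varvec{x}}_k^T\varvec{\bar{A}}\bar{\varvec{x}}_k>0\), because \(\varvec{\bar{A}}\) is positive definite. Therefore \(\varvec{A}\varvec{x}_k'\ne \varvec{0}\); in other words, a vector with r zeros on the constrained DOFs cannot be represented by the rigid-body motions. This proves (34).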

We prove (2) and (3). It is known from modal analysis that, in the lower frequency range, the modal displacements of a structural body are large and the whole body vibrates substantially, whereas in the higher frequency range, the vibrations become minute in proportion to the frequency, depending on the shape of the body.

Take an arbitrary \(1\le k\le n-r\). Referring to (33), the angle (minimal angle) between \(\varvec{x}_k'\) and \(\textrm{Ker}\varvec{A}\) is given by

where \(\varvec{g}_1,\cdots ,\varvec{g}_6\) form an appropriate basis of \(\textrm{Ker}\varvec{A}\).

We write the components of the i-th nodal point of \(\varvec{x}_k'\) as \(\{x_{ki}'~y_{ki}'~z_{ki}'\}\). The first r components of \(\varvec{x}_k'\) are zeros, and \(\varvec{x}_k'\) can thus be written as

Although \(\varvec{g}_i\) in (37) does not coincide with \(\varvec{f}_i\) in general, by representing appropriate \(\varvec{g}_i\) as a linear combination of \(\varvec{f}_i\), we can take \(\varvec{f}_i\) instead of \(\varvec{g}_i\).

The representations of \(\varvec{f}_1,\varvec{f}_2,\varvec{f}_3\) are easily obtained according to their forms. \(\varvec{f}_4,\varvec{f}_5,\varvec{f}_6\) are given as

Assuming that all the vectors are normalized, the inner products of \(\varvec{x}_k'\) and \(\varvec{f}_1,\,\dots ,\,\varvec{f}_6\) are

Using these representations, we can evaluate how the six inner products \({\varvec{x}_k'}^T \varvec{f}_i\) vary with k. The first three equations involve only the displacements, whereas in the last three, the displacements are multiplied by the nodal coordinates, which are constants. If \(1\le k\le n-r\) is sufficiently small, the mode shape of the target model deviates substantially from the static state. In particular, the deformation is largest for the fundamental mode \(k=1\), which gives the maximum of the six values in (40), and \(\textrm{dist}\,(\varvec{x}_1',\,\textrm{Ker}\varvec{A})\) is accordingly the smallest. This proves (36) and the last statement of property (3).

Meanwhile, if k becomes large, the vibration state of the model becomes minute, and the variations of the components of \(\varvec{x}_k'\) decrease. As a result, fixing \(1\le l \le n-r\) and taking sufficiently large \(k>l\), we have

and a larger k results in \(\varvec{x}_k'\) being closer to the direction orthogonal to \(\textrm{Ker} \varvec{A}\), which means \(\textrm{dist}(\varvec{x}_k',\,\textrm{Ker}\, \varvec{A})\) approaches 1. This proves (35).
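The quantities in Theorem 1 can be computed directly for small models. The sketch evaluates (29) with the orthogonal projection onto \(\textrm{span}(\varvec{\Phi })\), obtained from a QR factorization, for every eigenvector of \(\varvec{\bar{A}}\) padded with zeros as in Sect. 3. For a unit vector, the minimal angle \(\theta \) to the subspace satisfies \(\cos \theta =\Vert \varvec{P}\varvec{x}\Vert _2\), so the distance equals \(\sin \theta \); we assume this matches the definition in [31]. Function names are hypothetical, and dense matrices are assumed.

```python
import numpy as np


def dist_to_span(x, Phi):
    """dist(x, U) = ||x - P x||_2 for U = span(Phi), with the orthogonal projection
    P = Phi (Phi^T Phi)^{-1} Phi^T applied through a QR factorization of Phi."""
    Q, _ = np.linalg.qr(Phi)                 # orthonormal basis of U (full column rank)
    return np.linalg.norm(x - Q @ (Q.T @ x))


def eigvec_kernel_distances(A_bar, free, n, Phi):
    """Distances between the eigenvectors of A_bar (ascending eigenvalues),
    zero-padded to x_k' in R^n, and span(Phi) = Ker A."""
    lam, X = np.linalg.eigh(A_bar)
    dists = []
    for k in range(X.shape[1]):
        x_full = np.zeros(n)
        x_full[free] = X[:, k]               # zero padding keeps the unit norm
        dists.append(dist_to_span(x_full, Phi))
    return lam, np.array(dists)
```

As a scalar analogue, a free-free spring chain has the constant vector as its kernel; fixing one end and computing `eigvec_kernel_distances(A[1:, 1:], np.arange(1, n), n, np.ones((n, 1)))` reproduces the qualitative behavior of Theorem 1, with the smallest distance at the fundamental mode.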

4.3 Example of the distance between eigenvectors and the kernel of the unconstrained matrix

In this section, we present computed distances between the eigenvectors and the kernel of the unconstrained matrix for simple examples.

Fig. 4 shows three cuboid models. On the left and in the center are the \(1\times 1\times 6\) and \(4\times 3\times 12\) models with linear hexahedral elements, respectively. On the right is a model of the same size as the \(4\times 3\times 12\) model but with tetrahedral elements, either linear or quadratic. Additionally, we include a \(2\times 2\times 12\) model with linear hexahedral elements in our tests. The constraint conditions are assumed to have 9, 12, and 18 DOFs for the \(1\times 1\times 6\), \(2\times 2\times 12\), and \(4\times 3\times 12\) models, respectively. Table 2 summarizes the cuboid models.

Moreover, we use long and short perforated plates and a pipe model, presented in Fig. 5 and Table 3. We assume linear and quadratic hexahedral elements for these models.

We assume the physical properties to be those of standard steel. Young’s modulus and Poisson ratio are 200 GPa and 0.3, respectively.

Figs. 6, 7, 8, and 9 show the curves of the distance between the eigenvectors and the kernel for the cuboid models, the short perforated plate, the long perforated plate, and the pipe model, respectively.

Table 4 lists, as examples, the eigenvalues and the distances up to \(k=15\): (a) corresponds to the cuboid \(1\times 1\times 6\) model with linear hexahedral elements, (b) to the cuboid \(4\times 3\times 12\) model with quadratic tetrahedral elements, (c) to the long plate model with quadratic tetrahedral elements, and (d) to the pipe model with quadratic tetrahedral elements.

All the results agree with properties (1), (2), and (3) of Theorem 1.

Fig. 7 Distance between the eigenvectors and the kernel for the short perforated plate. The same tendency is seen in Fig. 6. A finer mesh reduces the distance between the first eigenvector and the kernel.

5 Introduction to the DDDM

We use solid elements for all the elements given in this section.

5.1 Expression of the coarse grid by domain decomposition

We decompose \(\Omega \) into N subdomains \(\Omega _J,1\le J\le N\), which do not overlap except on the boundaries they share. Each \(\Omega _J\) is a closed domain that includes its boundary surfaces. We write \(\Omega \) as

The domain decomposition here is an “element-based” decomposition, as opposed to the “nodal-point-based” decomposition used for parallelization, which we describe in Sect. 7.1.

The domain decomposition is represented by diagonal matrices \(\varvec{\bar{D}}_J\in \textbf{R}^{(n-r)\times (n-r)}\) comprising \(d_{Ji}\):

where \(\Omega _J^\circ \) is the interior of the subdomain \(\Omega _J\). Because writing out the index i of each diagonal entry of \(\varvec{\bar{D}}_J\) is cumbersome, (43) uses the abbreviated notation \(d_{J*}\). Note that the nonzero entries in (43) are not necessarily contiguous; they may skip positions.

From the construction of \(\varvec{\bar{D}}_J\), the domain decomposition (42) corresponds to

This means that \(\{\varvec{\bar{D}}_J\}\) is a partition of unity on the space \(\textbf{R}^{n-r}\). We then have

Let

We arrange the matrices in (47) side by side and let

We refer to this matrix as the extension matrix. We write \(W\equiv \textbf{R}^{6N}\) and \(V\equiv \textbf{R}^{n-r}\); W is the coarse space, and V is the global solution space. \(\varvec{\bar{F}}\) embeds W into V: although W is not a subspace of V, \(\varvec{\bar{F}}W\) is, and the column vectors of \(\varvec{\bar{F}}_1,\cdots ,\varvec{\bar{F}}_N\) constitute a basis of \(\varvec{\bar{F}}W\). Since \(\varvec{\bar{F}}^T\) is of full rank, \(\textrm{Im}\varvec{\bar{F}}^T\) is isomorphic to \(W: \varvec{\bar{F}}^T V\cong W\).
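The partition of unity and the extension matrix can be sketched as below. Two details are assumptions because the corresponding equations are not reproduced here: a nodal point shared by m subdomains receives the weight 1/m in each of them, and the blocks are \(\varvec{\bar{F}}_J=\varvec{\bar{D}}_J\varvec{\bar{\Phi }}\). The sketch works on all n DOFs; restricting the rows to the unconstrained DOFs (`F[free]`) gives \(\varvec{\bar{F}}\). The helper names are hypothetical.

```python
import numpy as np


def partition_of_unity(node_sets, n_nodes):
    """Per-DOF diagonals d_J of D_J for subdomains given as sets of node indices.
    Assumption: a node shared by m subdomains gets the weight 1/m in each of them."""
    mult = np.zeros(n_nodes)
    for nodes in node_sets:
        mult[list(nodes)] += 1.0                 # number of subdomains containing each node
    weights = []
    for nodes in node_sets:
        w = np.zeros(n_nodes)
        idx = list(nodes)
        w[idx] = 1.0 / mult[idx]
        weights.append(np.repeat(w, 3))          # same weight for u_x, u_y, u_z of a node
    return weights


def extension_matrix(weights, Phi):
    """F = [D_1 Phi, ..., D_N Phi] (n x 6N) with D_J = diag(weights[J])."""
    return np.hstack([w[:, None] * Phi for w in weights])
```

Summing the returned diagonals reproduces the identity, which is the partition-of-unity property (45); full column rank of `F` can be checked with `np.linalg.matrix_rank(F)`.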

5.2 Framework of the DDDM algorithm

We describe the DDDM algorithm as follows. Construct a coarse grid using the rigid-body modes \(\varvec{f}_1,\,\dots ,\,\varvec{f}_6\) of the original problem without a constraint condition; remove the corresponding low-frequency modes from the entire space V using the deflation algorithm; and apply the CG method to the segregated complementary space.
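The three steps can be sketched as a deflated CG iteration. This is the standard deflation formulation, not the authors’ complete DDDM of Sect. 6: the coarse part \(\varvec{Q}\varvec{b}\) with \(\varvec{Q}=\varvec{F}\varvec{E}^{-1}\varvec{F}^T\) and \(\varvec{E}=\varvec{F}^T\varvec{A}\varvec{F}\) is solved directly, CG is applied to \(\varvec{P}\varvec{A}\varvec{y}=\varvec{P}\varvec{b}\) with \(\varvec{P}=\varvec{I}-\varvec{A}\varvec{Q}\), and the two parts are combined. We assume that \(\varvec{E}\) is the contraction matrix introduced below, dense NumPy arrays, and a single process; `deflated_cg` is a hypothetical name.

```python
import numpy as np


def deflated_cg(A, b, F, tol=1.0e-10, max_iter=None):
    """Deflated CG with coarse basis F (columns span the coarse space).
    Coarse part: Q b with Q = F E^{-1} F^T and E = F^T A F (solved directly).
    Fine part:   CG on (P A) y = P b with P = I - A Q; then x = Q b + P^T y."""
    n = b.shape[0]
    max_iter = 10 * n if max_iter is None else max_iter
    E = F.T @ A @ F                              # small coarse matrix

    def Q(v):                                    # Q v = F E^{-1} F^T v
        return F @ np.linalg.solve(E, F.T @ v)

    def P(v):                                    # P v = v - A Q v
        return v - A @ Q(v)

    y = np.zeros(n)
    r = P(b)                                     # residual of (P A) y = P b at y = 0
    p = r.copy()
    rr = r @ r
    b_norm = np.linalg.norm(b)
    its = 0
    while its < max_iter and np.sqrt(rr) > tol * b_norm:
        w = P(A @ p)                             # deflated operator applied to p
        alpha = rr / (p @ w)
        y += alpha * p
        r -= alpha * w
        rr_new = r @ r
        p = r + (rr_new / rr) * p
        rr = rr_new
        its += 1
    x = Q(b) + y - Q(A @ y)                      # x = Q b + (I - Q A) y
    return x, its
```

In the structural setting, `F` would be the extension matrix \(\varvec{\bar{F}}\) with six columns per subdomain. For a quick experiment on a scalar problem, piecewise-constant columns (e.g. `np.kron(np.eye(N), np.ones((n // N, 1)))` for a 1D Laplacian) play the role of the translation modes, and comparing `its` with an undeflated CG run shows the effect of deflation.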

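Let

$$\begin{aligned} \varvec{\bar{A}}_{\varvec{\bar{F}}}=\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}}\in \textbf{R}^{6N\times 6N}. \end{aligned}$$(49)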

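We refer to this matrix as a contraction matrix. Because this matrix is small, its inverse \(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1}\) can be obtained using a direct method such as LU (lower-upper) decomposition. Moreover, we extend \(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1}\) onto V and express \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) as

$$\begin{aligned} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*=\varvec{\bar{F}}\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1}\varvec{\bar{F}}^T=\varvec{\bar{F}}(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\varvec{\bar{F}}^T. \end{aligned}$$(50)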

We refer to this matrix as a pullback of \(\varvec{\bar{A}}\) under \(\varvec{\bar{F}}\). Figure 10 is a diagram of \(\varvec{\bar{A}}_{\varvec{\bar{F}}}\) and \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\).
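As a sketch of how the contraction matrix and the pullback might be applied in code, the following NumPy fragment never forms the dense \((n-r)\times (n-r)\) pullback; it only inverts the small \(6N\times 6N\) matrix. Random matrices stand in for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\), k plays the role of 6N, and np.linalg.inv is used for brevity where a production code would keep an LU or Cholesky factorization.

```python
import numpy as np

def make_pullback(A, F):
    """Return the operator v -> F (F^T A F)^{-1} F^T v without forming an n x n matrix."""
    A_F = F.T @ A @ F                  # contraction matrix, size 6N x 6N
    A_F_inv = np.linalg.inv(A_F)       # small, so a direct inverse is affordable
    def apply(v):
        return F @ (A_F_inv @ (F.T @ v))
    return apply

rng = np.random.default_rng(1)
n, k = 40, 12                          # k plays the role of 6N
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)            # SPD stand-in for the constrained stiffness matrix
F = rng.standard_normal((n, k))        # stand-in for the extension matrix
pullback = make_pullback(A, F)

# On the range of F the pullback acts as the inverse of A.
print(np.allclose(pullback(A @ F), F))
```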

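Furthermore, let \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\) be the matrix obtained by multiplying the pullback by \(\varvec{\bar{A}}\) from the right:

$$\begin{aligned} \varvec{\bar{P}}_{\varvec{\bar{A}}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}. \end{aligned}$$(51)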

Proposition 2

(Contraction projection)

The following properties hold:

Therefore, \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\) is a projection.

Proof

Easily shown.

We refer to \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\) as a contraction projection obtained from the pullback of \(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1}\), or simply a contraction projection. The image of V obtained by the contraction projection is called a contraction projection space, or simply a contraction space.
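Proposition 2 can be checked numerically on a toy problem. The sketch below, with random stand-ins for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\), verifies idempotency and also that \(\varvec{\bar{A}}\varvec{\bar{P}}_{\varvec{\bar{A}}}\) is symmetric, i.e., that the contraction projection is \(\varvec{\bar{A}}\)-orthogonal; the latter is not part of Proposition 2 as stated but follows from the symmetry of \(\varvec{\bar{A}}\) and of the pullback.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k = 40, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)                        # SPD stand-in for A-bar
F = rng.standard_normal((n, k))                    # stand-in for F-bar (full column rank)

pullback = F @ np.linalg.solve(F.T @ A @ F, F.T)   # dense pullback, fine at toy size
P = pullback @ A                                   # contraction projection (51)

idempotent = np.allclose(P @ P, P)                 # P is a projection
a_self_adjoint = np.allclose(A @ P, (A @ P).T)     # A P symmetric, i.e. P is A-orthogonal
print(idempotent, a_self_adjoint)
```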

Remark 1

(Definition of the deflation projection)

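In many articles (e.g., [7,8,9,10,11,12,13,14]), the deflation projection \(\varvec{\bar{P}}\) is defined, in our notation, as

$$\begin{aligned} \varvec{\bar{P}}=\varvec{I}-\varvec{\bar{A}}\varvec{\bar{F}}(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\varvec{\bar{F}}^T. \end{aligned}$$(53)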

-

(a)

The second term on the right side of (53) corresponds to (51), but in (51), the matrix \(\varvec{\bar{A}}\) is multiplied from the right side, whereas in (53), \(\varvec{\bar{A}}\) is multiplied from the left.

-

(b)

Additionally, the projection \(\varvec{\bar{P}}\) here is defined as the complementary projection of (the transpose of) the contraction projection in our sense: \(\varvec{\bar{P}}=(\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})^T\).

-

(c)

As described in Remark 2 in Sect. 6.2, our definition (51) is what allows the calculation cost of each CG step to be reduced.
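The relation between the two definitions can be checked numerically. The sketch assumes that (53) has the standard form \(\varvec{\bar{P}}=\varvec{I}-\varvec{\bar{A}}\varvec{\bar{F}}(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\varvec{\bar{F}}^T\) used in the deflation literature and uses random stand-ins for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\). It confirms that \(\varvec{\bar{P}}=(\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})^T\) and that both projections produce the same deflated operator.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k = 40, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)               # SPD stand-in for A-bar
F = rng.standard_normal((n, k))           # stand-in for F-bar
I = np.eye(n)

pullback = F @ np.linalg.solve(F.T @ A @ F, F.T)
P_contr = pullback @ A                    # contraction projection: A multiplied from the right
P_defl = I - A @ pullback                 # deflation projection in the form assumed for (53)

transpose_of_complement = np.allclose(P_defl, (I - P_contr).T)
same_deflated_operator = np.allclose(P_defl @ A, A @ (I - P_contr))
print(transpose_of_complement, same_deflated_operator)
```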

Proposition 3

(Contraction space and the image of the extension matrix)

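The image of the extension matrix coincides with the contraction projection space:

$$\begin{aligned} \textrm{Im}\varvec{\bar{F}}=\varvec{\bar{F}}W=\varvec{\bar{P}}_{\varvec{\bar{A}}}V. \end{aligned}$$(54)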

Proof

Easily shown.

Because \(\varvec{\bar{F}} \in \textbf{R}^{(n-r)\times 6N}\) is of full rank and hence one-to-one, Proposition 3 shows that \(\varvec{\bar{F}}\) is an isomorphism from the coarse space W onto the contraction space. We therefore also refer to \(\varvec{\bar{F}}W\equiv \varvec{\bar{P}}_{\varvec{\bar{A}}}V\) as the coarse space, as we do for W.

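Proposition 2 leads to the direct sum decomposition of V:

$$\begin{aligned} V=\varvec{\bar{P}}_{\varvec{\bar{A}}}V\oplus (\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})V. \end{aligned}$$(55)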

Here, \(\varvec{\bar{P}}_{\varvec{\bar{A}}}V=\textrm{Im}\varvec{\bar{P}}_{\varvec{\bar{A}}}\) approximates the space spanned by the eigenvectors with the smallest eigenvalues, including the lowest one. Meanwhile, \((\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})V=\textrm{Ker}\varvec{\bar{P}}_{\varvec{\bar{A}}}\) is the space from which those eigenvectors with small eigenvalues have been (approximately) eliminated. In other words, \(\varvec{\bar{P}}_{\varvec{\bar{A}}}V\) is a coarse space and \((\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})V\) is a fine space.
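A toy check of Proposition 3 and of the splitting (55) follows, again with random stand-ins for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\). Since the trace of a projection equals its rank, \(\textrm{tr}\,\varvec{\bar{P}}_{\varvec{\bar{A}}}=6N\) confirms that the contraction space has the dimension of W. The last line shows that the coarse and fine parts of a vector are \(\varvec{\bar{A}}\)-orthogonal, a standard property of \(\varvec{\bar{A}}\)-orthogonal projections that we add for illustration.

```python
import numpy as np

rng = np.random.default_rng(4)
n, k = 40, 12                                     # k plays the role of 6N
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)                       # SPD stand-in for A-bar
F = rng.standard_normal((n, k))                   # stand-in for F-bar

P = F @ np.linalg.solve(F.T @ A @ F, F.T @ A)     # contraction projection

# Proposition 3: P fixes the columns of F, and rank P = trace P = 6N.
print(np.allclose(P @ F, F), np.isclose(np.trace(P), k))

# Direct sum (55): split a vector into its coarse and fine parts.
v = rng.standard_normal(n)
v_coarse = P @ v
v_fine = v - v_coarse
print(np.allclose(v_coarse + v_fine, v))
print(abs(v_coarse @ A @ v_fine) / (v @ A @ v))   # A-orthogonality: rounding level only
```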

Theorem 4

(Restriction of the stiffness matrix to the contraction space)

The following three properties hold.

-

(i)

The restriction of \(\varvec{\bar{A}}^{-1}\) onto \(\varvec{\bar{P}}_{\varvec{\bar{A}}}V\) by the contraction projection \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\) coincides with the pullback of \(\varvec{\bar{A}}\) under \(\varvec{\bar{F}}\):

$$\begin{aligned} \varvec{\bar{P}}_{\varvec{\bar{A}}}\varvec{\bar{A}}^{-1}\varvec{\bar{P}}_{\varvec{\bar{A}}}^T=\varvec{\bar{F}}(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\varvec{\bar{F}}^T=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*. \end{aligned}$$(56)

-

(ii)

The image of the pullback coincides with the contraction space:

$$\begin{aligned} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*V=\varvec{\bar{P}}_{\varvec{\bar{A}}}V. \end{aligned}$$(57)

-

(iii)

The restriction of the stiffness matrix \(\varvec{\bar{A}}\) onto the contraction space \(\varvec{\bar{P}}_{\varvec{\bar{A}}}V\) coincides with the pullback:

$$\begin{aligned} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*. \end{aligned}$$(58)

Proof

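(i) and (ii) are easily verified. (58) is a rewriting of (56): because \(\varvec{\bar{A}}\) and \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) are symmetric, \(\varvec{\bar{P}}_{\varvec{\bar{A}}}^T=\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), so the left side of (56) equals \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}\varvec{\bar{A}}^{-1}\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\).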

Equation (56) expresses the restriction of \(\varvec{\bar{A}}^{-1}\) onto the contraction space by \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\), and (58) expresses the restriction of \(\varvec{\bar{A}}\) onto the contraction space by the pullback \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\). Both restrictions yield the same matrix \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), which gives rise to the reciprocal relation shown in Fig. 11.

Reciprocal relation between the restrictions of \(\varvec{\bar{A}}^{-1}\) and \(\varvec{\bar{A}}\). Panel (a) shows that the pullback \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) on the coarse space \(\varvec{\bar{P}}_{\varvec{\bar{A}}}V\) hides the inverse \(\varvec{\bar{A}}^{-1}\) on the global space V
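The three identities of Theorem 4 can be verified on a small example. The sketch below uses random stand-ins for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\) and checks (57) through \(\varvec{\bar{P}}_{\varvec{\bar{A}}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), which, together with \(\varvec{\bar{P}}_{\varvec{\bar{A}}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}\), shows that the two matrices have the same image.

```python
import numpy as np

rng = np.random.default_rng(5)
n, k = 40, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)                       # SPD stand-in for A-bar
F = rng.standard_normal((n, k))                   # stand-in for F-bar

pullback = F @ np.linalg.solve(F.T @ A @ F, F.T)
P = pullback @ A                                  # contraction projection

lhs_56 = P @ np.linalg.inv(A) @ P.T               # restriction of A^{-1} by the projection
lhs_58 = pullback @ A @ pullback                  # restriction of A by the pullback
print(np.allclose(lhs_56, pullback), np.allclose(lhs_58, pullback))

# (57): P fixes the pullback's columns, and P's columns are pullback @ A,
# so both matrices have the same image.
print(np.allclose(P @ pullback, pullback))
```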

The condition number of the problem (17) is

In the DDDM algorithm, we remove the m lowest modes, and the condition number changes to

which means that the DDDM algorithm reduces the condition number to
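The effect on the condition number can be illustrated in an idealized setting. Write the eigenvalues of \(\varvec{\bar{A}}\) as \(0<\lambda _1\le \cdots \le \lambda _{n-r}\) (our notation for this example). Deflating the exact eigenvectors of the m lowest modes leaves an operator with m zero eigenvalues and the remaining spectrum \(\lambda _{m+1},\dots ,\lambda _{n-r}\), so the effective condition number drops from \(\lambda _{n-r}/\lambda _1\) to \(\lambda _{n-r}/\lambda _{m+1}\). The DDDM uses subdomain rigid-body modes, which only approximate these eigenvectors, so the sketch below, with a synthetic spectrum, shows the best case.

```python
import numpy as np

rng = np.random.default_rng(6)
n, m = 50, 6
# Synthetic spectrum: m near-zero eigenvalues (near-rigid-body modes) and a well-separated rest.
lam = np.concatenate([np.geomspace(1e-4, 1e-3, m), np.linspace(1.0, 10.0, n - m)])
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))   # random orthonormal eigenvectors
A = (Q * lam) @ Q.T                                 # A = Q diag(lam) Q^T

F = Q[:, :m]                                        # idealized coarse space: exact lowest eigenvectors
pullback = F @ np.linalg.solve(F.T @ A @ F, F.T)
deflated = (np.eye(n) - A @ pullback) @ A           # = A - A pullback A, symmetric in exact arithmetic

mu = np.linalg.eigvalsh(0.5 * (deflated + deflated.T))   # ascending; the first m are ~0
kappa_before = lam.max() / lam.min()                # lambda_{n-r} / lambda_1
kappa_after = mu[-1] / mu[m]                        # lambda_{n-r} / lambda_{m+1}
print(f"condition number: {kappa_before:.3g} -> {kappa_after:.3g}")
```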

5.3 Examples of deformation modes obtained using the DDDM

In the coarse space, the deformation of the target object \(\Omega \) is represented by the coarse motions of the subdomains \(\Omega _J,1\le J\le N\). The coarse motion of \(\Omega \) is close to its rigid-body motion, although the imposed constraint conditions fix part of the deformation of \(\Omega \). Each \(\Omega _J\) has its own rigid-body modes, and the boundaries shared with neighboring subdomains and the constrained boundaries restrict the deformation of \(\Omega \).

We present an example of how the target object deforms in the coarse problem, whose stiffness matrix is given by (49). We use the \(1\times 1 \times 6\) model shown in Fig. 4 in Sect. 4.3, made of standard steel. We impose the constraint conditions on nodal points 1, 2, and 3, i.e., on DOFs 1 to 9, and eliminate these DOFs. Thus, \(n=84\), \(r=9\), and \(n-r=75\), as in Table 2 in Sect. 4.3.

Our problem is to solve the following equation for the displacement \(\varvec{\bar{x}}\) given an external force \(\varvec{\bar{b}}\):

The results are shown in Fig. 12, where the nodal point numbers 25 and 28 are indicated in Case (a) and the element numbers 1 to 6 in Case (c). Case (a) has no decomposition (fine analysis), Case (b) has \(N=3\) subdomains, and Case (c) has \(N=2\) subdomains. In Case (b), we decomposed the model between elements 2 and 3 and between elements 4 and 5; in Case (c), we decomposed it between elements 3 and 4.

Deformation of the \(1\times 1 \times 6\) model in the coarse problem setting. Case (a) shows the deformation in the fine space. There are three and two subdomains in Cases (b) and (c), respectively. In Case (c), the model body is decomposed only between elements 3 and 4, whereas in Case (b), the body is decomposed between elements 2 and 3 and between 4 and 5. In Case (c), elements 3 and 4 are dragged by the joining surface of elements 3 and 4, and both elements are distorted. The result of Case (b) is close to the fine result (a) because we give a finer decomposition than Case (c)

We apply external forces to the first DOF (the x component) of nodal points 25 and 28 in the negative and positive x directions, respectively, which twists the model clockwise. The force magnitude is set as high as 3000 N, which creates an artificially large displacement. The swelling seen in the upper part of the figures, especially in Cases (a) and (b), is presumably caused by the violation of the infinitesimal-deformation assumption in the FEA. In Case (c), elements 3 and 4 are dragged by the joining surface between them, and both elements are distorted. In Case (b), elements 2, 3, 4, and 5 are distorted. In particular, the deformation in Case (c) is close to a rigid-body motion of the model. The result of Case (b), which has a finer decomposition than Case (c), is close to the fine result of Case (a).

6 DDDM algorithm

The DDDM algorithm is similar to the algorithm in [7], which augments the Krylov subspace with approximate eigenvectors corresponding to eigenvalues close to zero and exploits the orthogonality of the residuals. Instead of adding approximate eigenvectors to the Krylov subspace, our method chooses an appropriate domain decomposition (Sect. 5.1), which determines the contraction projection space and thereby eliminates the eigenvectors corresponding to eigenvalues close to zero; see Sect. 5.2.

We describe the DDDM algorithm below. It follows the standard deflation algorithm and is defined within the standard preconditioned CG algorithm. Owing to the form of the contraction projection matrix (51), the two-level algorithm described below has a much shorter calculation time.

6.1 Preconditioned CG method

For use in Theorem 6 below, we recall the general preconditioned CG algorithm. Let \(\varvec{\bar{M}}\in \textbf{R}^{(n-r)\times (n-r)}\) be an arbitrary symmetric positive definite precondition matrix, and let \(\varvec{\bar{x}}^0\in \textbf{R}^{n-r}\) be an arbitrary initial guess of the solution vector. Let the residual vector \(\varvec{\bar{r}}^0\in \textbf{R}^{n-r}\) and the gradient vector \(\varvec{\bar{p}}^0\in \textbf{R}^{n-r}\) be

Then, repeat for \(k=1,2,\cdots \)
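For reference, a standard textbook form of the preconditioned CG method is sketched below in NumPy, where apply_M(r) returns \(\varvec{\bar{M}}\varvec{\bar{r}}\). The function name, the stopping rule \(\Vert \varvec{\bar{r}}\Vert \le \textrm{tol}\,\Vert \varvec{\bar{b}}\Vert \), and the default values (chosen to mirror the tolerance and iteration limit of Sect. 6.3) are our assumptions; the sketch does not reproduce the step numbering of the text.

```python
import numpy as np

def pcg(A, b, apply_M, x0, tol=1e-7, max_iter=10_000):
    """Preconditioned CG for SPD A; apply_M(r) returns M r with M approximating A^{-1}.

    Stops when ||r|| <= tol * ||b|| and returns (x, number of iterations).
    """
    x = np.array(x0, dtype=float)
    r = b - A @ x                  # initial residual
    z = apply_M(r)
    p = z.copy()                   # initial gradient (search) vector
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for k in range(max_iter):
        if np.linalg.norm(r) <= tol * b_norm:
            return x, k
        Ap = A @ p                 # the product with A in each iteration
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        z = apply_M(r)             # the preconditioning product
        rz_new = r @ z
        p = z + (rz_new / rz) * p  # beta = (r_new, M r_new) / (r, M r)
        rz = rz_new
    return x, max_iter

# Usage: 1-D Laplacian with a Jacobi (diagonal) preconditioner.
n = 100
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)
diag = np.diag(A).copy()
x, iters = pcg(A, b, lambda r: r / diag, np.zeros(n), tol=1e-10)
print(iters, np.linalg.norm(A @ x - b) / np.linalg.norm(b))
```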

6.2 Definition of the precondition matrix and the DDDM algorithm

Our problem is (17), which we obtained by imposing the constraint condition on (12). Let the precondition matrix be \(\varvec{\bar{M}}\). We multiply both sides of (17) by \(\varvec{\bar{M}}\) to obtain

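In accordance with the direct sum decomposition (55), we define \(\varvec{\bar{M}}\) as

$$\begin{aligned} \varvec{\bar{M}}=\varvec{\bar{P}}_{\varvec{\bar{A}}}\varvec{\bar{A}}^{-1}\varvec{\bar{P}}_{\varvec{\bar{A}}}^T+(\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})\varvec{\bar{R}}(\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})^T, \end{aligned}$$(71)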

where \(\varvec{\bar{R}}\in \textbf{R}^{(n-r)\times (n-r)}\) is a symmetric positive definite matrix that plays the role of a precondition matrix in the fine space. The relationship between the decomposition (55) and the precondition matrix (71) is shown in Fig. 13.

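The second term of (71) acts as a precondition on the fine space. The first term on the right side of (71), which eliminates the residual components in the lower modes, coincides with the pullback \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) by (56) in Theorem 4; hence

$$\begin{aligned} \varvec{\bar{M}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*+(\varvec{I}-(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}})\varvec{\bar{R}}(\varvec{I}-\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*). \end{aligned}$$(72)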

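The first term on the right side of (71) or (72), which we have already seen in (50), is

$$\begin{aligned} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*=\varvec{\bar{F}}(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\varvec{\bar{F}}^T. \end{aligned}$$(73)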

This expression hides \(\varvec{\bar{A}}^{-1}\) behind the pullback; see Fig. 11. The matrix \(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}}\in \textbf{R}^{6N\times 6N}\) is symmetric positive definite on \(W\equiv \textbf{R}^{6N}\) and is so small that \((\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\) can be obtained using a direct method such as LU decomposition.

Proposition 5

(Product of the projection and the precondition matrix)

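It holds that

$$\begin{aligned} \varvec{\bar{P}}_{\varvec{\bar{A}}}\varvec{\bar{M}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*. \end{aligned}$$(74)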

Proof

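Multiplying both sides of (72) from the left by \(\varvec{\bar{P}}_{\varvec{\bar{A}}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}\) yields

$$\begin{aligned} \varvec{\bar{P}}_{\varvec{\bar{A}}}\varvec{\bar{M}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*+\varvec{\bar{P}}_{\varvec{\bar{A}}}(\varvec{I}-(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}})\varvec{\bar{R}}(\varvec{I}-\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*). \end{aligned}$$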

From (58) in Theorem 4, the first term on the right side of this equation is \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), and the second term becomes zero because \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\) is a projection. Thus, (74) is obtained.
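The precondition matrix (72) and Proposition 5 can be checked on a toy problem. In the sketch, random matrices stand in for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\), and a Jacobi (inverse-diagonal) matrix is a hypothetical stand-in for \(\varvec{\bar{R}}\). The sketch also confirms that \(\varvec{\bar{M}}\) is symmetric positive definite, as a precondition matrix for CG should be.

```python
import numpy as np

rng = np.random.default_rng(7)
n, k = 40, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)                       # SPD stand-in for A-bar
F = rng.standard_normal((n, k))                   # stand-in for F-bar
I = np.eye(n)

pullback = F @ np.linalg.solve(F.T @ A @ F, F.T)
P = pullback @ A                                  # contraction projection
R = np.diag(1.0 / np.diag(A))                     # hypothetical fine-space preconditioner (Jacobi)

M = pullback + (I - P) @ R @ (I - A @ pullback)   # precondition matrix, cf. (72)

print(np.allclose(P @ M, pullback))               # Proposition 5
print(np.allclose(M, M.T), np.linalg.eigvalsh(0.5 * (M + M.T)).min() > 0)
```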

Theorem 6

(Range of the pullback and the gradient vector)

In the CG iteration steps, (63) to (69), let

Using this vector, we define

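Then, for every \(k\ge 0\), the residual vector \(\varvec{\bar{r}}^k\) belongs to the kernel of \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), and the gradient vector \(\varvec{\bar{p}}^k\) belongs to the kernel of \(\varvec{\bar{P}}_{\varvec{\bar{A}}}\), which equals the fine space \((\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})V\); see (55). That is, the following two equations hold for all \(k\ge 0\):

$$\begin{aligned} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^k=\varvec{0}, \end{aligned}$$(79)

$$\begin{aligned} \varvec{\bar{P}}_{\varvec{\bar{A}}}\varvec{\bar{p}}^k=\varvec{0}. \end{aligned}$$(80)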

Proof

We show (79) and (80) simultaneously by induction. First, we have

Multiplying both sides of this equation from the left by \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) and using (58) in Theorem 4, we have

This result shows that

We then have

(82) and (84) show (79) and (80) for \(k=0\).

We then assume (79) and (80) for some \(k\ge 0\). Multiplying both sides of (67) of the CG iteration steps from the left by \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\) and using (79) and (80) for k, we have

This shows (79) for \(k+1\). Multiplying both sides of (69) of the CG iteration steps by \(\varvec{\bar{P}}_{\varvec{\bar{A}}}=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}}\), we obtain from (80) for k,

From (74) in Proposition 5, we obtain

Using (79) for \(k+1\), which we have already proved, the right side of this equation becomes zero, which means (80) for \(k+1\).

Theorem 6 shows that the residual vectors \(\varvec{\bar{r}}^k,~k\ge 0\), that appear in the iteration steps stay in the kernel of \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\), and the gradient vectors \(\varvec{\bar{p}}^k,~k\ge 0\), stay in the fine space \((\varvec{I}-\varvec{\bar{P}}_{\varvec{\bar{A}}})V\); that is, neither ever crosses over into the complementary space.
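The invariants of Theorem 6 can be observed by running the iteration with the precondition matrix (72). The sketch below is a minimal illustration, not the FrontISTR implementation: random matrices stand in for \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\), a Jacobi matrix is a hypothetical stand-in for \(\varvec{\bar{R}}\), and, because (76) is not reproduced here, we assume the initial guess \(\varvec{\bar{x}}^0=(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{b}}\), for which \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^0=\varvec{0}\) by (58). It records the largest observed violations of (79) and (80).

```python
import numpy as np

rng = np.random.default_rng(8)
n, k = 60, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)           # SPD stand-in for A-bar
F = rng.standard_normal((n, k))       # stand-in for F-bar
b = rng.standard_normal(n)

A_F_inv = np.linalg.inv(F.T @ A @ F)

def pull(v):
    """Pullback (A_F^{-1})^* v = F (F^T A F)^{-1} F^T v."""
    return F @ (A_F_inv @ (F.T @ v))

d_inv = 1.0 / np.diag(A)              # hypothetical Jacobi stand-in for R-bar

def apply_M(r):
    """M r with M = pullback + (I - pullback A) R (I - A pullback), cf. (72)."""
    w = pull(r)
    y = d_inv * (r - A @ w)
    return w + y - pull(A @ y)

x = pull(b)                           # assumed initial guess: the coarse solution
r = b - A @ x
z = apply_M(r)
p = z.copy()
rz = r @ z
b_norm, p0_norm = np.linalg.norm(b), np.linalg.norm(p)
res_drift = dir_drift = 0.0
for it in range(500):
    res_drift = max(res_drift, np.linalg.norm(pull(r)) / b_norm)       # checks (79)
    dir_drift = max(dir_drift, np.linalg.norm(pull(A @ p)) / p0_norm)  # checks (80): P p = pull(A p)
    if np.linalg.norm(r) <= 1e-10 * b_norm:
        break
    Ap = A @ p
    alpha = rz / (p @ Ap)
    x = x + alpha * p
    r = r - alpha * Ap
    z = apply_M(r)
    rz_new = r @ z
    p = z + (rz_new / rz) * p
    rz = rz_new

print(it, res_drift, dir_drift)
```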

Remark 2

(Advantageous effect of the definition of the deflation projection; see also Remark 1 in Sect. 5.2)

-

a)

In the CG iteration from (65) to (69), the matrix–vector products necessary for updating the steps from k to \(k+1\) are \(\varvec{\bar{A}}\varvec{\bar{p}}^k\) and \(\varvec{\bar{M}}\varvec{\bar{r}}^k\), and \(\varvec{\bar{p}}^k\) is determined by \(\varvec{\bar{M}}\varvec{\bar{r}}^k\). Theorem 6 shows that \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^k=\varvec{0}\) for all \(k\ge 0\); therefore, we have

$$\begin{aligned} {\varvec{\bar{M}}}\varvec{\bar{r}}^k= & {} (\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^k +(\varvec{I}-(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}})\varvec{\bar{R}}(\varvec{I}-\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*)\varvec{\bar{r}}^k\nonumber \\= & {} (\varvec{I}-(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{A}})\varvec{\bar{R}}\varvec{\bar{r}}^k. \end{aligned}$$(88)

Thus, the property \((\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^k=\varvec{0}\) eliminates one matrix–vector product with \(\varvec{\bar{A}}\), namely \(\varvec{\bar{A}}(\varvec{\bar{A}}_{\varvec{\bar{F}}}^{-1})^*\varvec{\bar{r}}^k\), in each CG iteration step.

-

b)

The reduction of the calculation in (88) is made possible by the definition of the contraction projection (51) together with the complementary projection used in the precondition matrix (71).
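The saving described in a) can be made concrete by counting products with \(\varvec{\bar{A}}\). In the sketch below, the counting wrapper, the Jacobi stand-in for \(\varvec{\bar{R}}\), and the random test matrices are hypothetical; the residual is taken in the kernel of the pullback, as Theorem 6 guarantees for the iterates.

```python
import numpy as np

class CountingMatrix:
    """Wraps a dense matrix and counts the products computed with it (illustrative helper)."""
    def __init__(self, A):
        self.A, self.count = A, 0

    def __matmul__(self, v):
        self.count += 1
        return self.A @ v

rng = np.random.default_rng(9)
n, k = 40, 12
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)          # SPD stand-in for A-bar
F = rng.standard_normal((n, k))      # stand-in for F-bar
A_F_inv = np.linalg.inv(F.T @ A @ F)

def pull(v):
    """Pullback F (F^T A F)^{-1} F^T v."""
    return F @ (A_F_inv @ (F.T @ v))

def R(v):
    """Hypothetical Jacobi stand-in for R-bar."""
    return v / np.diag(A)

b = rng.standard_normal(n)
r = b - A @ pull(b)                  # a residual in the kernel of the pullback

def M_full(Aop, r):
    """First line of (88): needs A (pullback r) and A y."""
    w = pull(r)
    y = R(r - Aop @ w)
    return w + y - pull(Aop @ y)

def M_reduced(Aop, r):
    """Second line of (88): valid when pullback r = 0; needs only A y."""
    y = R(r)
    return y - pull(Aop @ y)

A_full, A_red = CountingMatrix(A), CountingMatrix(A)
out_full, out_red = M_full(A_full, r), M_reduced(A_red, r)
print(np.allclose(out_full, out_red), A_full.count, A_red.count)
```

The two forms agree to rounding error, while the reduced form needs one product with \(\varvec{\bar{A}}\) instead of two.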

6.3 Performance of the DDDM algorithm

This section discusses the performance of the DDDM. Although the DDDM is parallelized, all calculations in this section use a single process.

Iterative methods generally have difficulty converging for thin-plate models, and the difficulty depends on the plate thickness. We therefore use thin-plate models for our benchmarks.

Remark 3

(Precondition matrix for the fine space in the DDDM)

We give the precondition matrix for the fine space in the DDDM as \(\varvec{\bar{R}}\) in the definition of \(\varvec{\bar{M}}\) in (71) or (72). Although \(\varvec{\bar{R}}\) can be any symmetric positive definite precondition matrix, we take \(\varvec{\bar{R}}\) to be the symmetric successive over-relaxation (SSOR) matrix for the following reason.

The DDDM solver for our benchmarks is incorporated into FrontISTR [32], an open-source structural analysis code, which provides the SSOR-preconditioned CG solver as a standard solver. Choosing the SSOR precondition for the DDDM therefore enables a fair comparison between the SSOR-preconditioned CG solver on the entire space and the DDDM solver with SSOR preconditioning on the fine space. In the following benchmarks, we switch between these two solvers; “SSOR” refers to the SSOR-preconditioned CG method, and “DDDM” refers to the DDDM with SSOR preconditioning on the fine space.
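For illustration, the textbook SSOR precondition matrix can be applied by one forward and one backward triangular solve. The sketch below assumes the splitting \(\varvec{\bar{A}}=D+L+U\) into diagonal, strictly lower, and strictly upper parts and a relaxation factor \(\omega =1.2\); both the factor and the scaling \(1/(\omega (2-\omega ))\) are our assumptions, and FrontISTR's own SSOR implementation may differ in these details. Dense solves are used for brevity where a practical code would perform sparse triangular sweeps.

```python
import numpy as np

def ssor_apply(A, r, omega=1.2):
    """Return R r with R = M_SSOR^{-1}, where
    M_SSOR = (D + omega L) D^{-1} (D + omega U) / (omega (2 - omega))
    and D, L, U are the diagonal, strictly lower, and strictly upper parts of A.
    """
    D = np.diag(np.diag(A))
    L = np.tril(A, k=-1)
    U = np.triu(A, k=1)
    y = np.linalg.solve(D + omega * L, r)   # forward sweep (lower-triangular system)
    y = np.diag(A) * y                      # multiply by D
    z = np.linalg.solve(D + omega * U, y)   # backward sweep (upper-triangular system)
    return omega * (2.0 - omega) * z

# Usage: assemble R column by column for a small SPD matrix and inspect it.
n = 20
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
R_dense = np.column_stack([ssor_apply(A, e) for e in np.eye(n)])
print(np.allclose(R_dense, R_dense.T), np.linalg.eigvalsh(0.5 * (R_dense + R_dense.T)).min() > 0)
```

For symmetric \(\varvec{\bar{A}}\) and \(0<\omega <2\), this R is symmetric positive definite, which is what (71) requires of \(\varvec{\bar{R}}\).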

The computations in this section were run on a cluster computer with Intel Xeon E5-2670 processors (2.6 GHz), 16 cores \(\times \) 13 nodes, and 128 GB of RAM per node. We used neither multiple processes nor multiple threads. We used METIS [33] for the domain decomposition in the DDDM solver.

We built the plate model by arranging \(1\,\textrm{mm}\times 1\,\textrm{mm} \times 1\,\textrm{mm}\) linear hexahedral elements in the same way as in Sect. 2.2: x, y, and z elements were aligned along the x-, y-, and z-axes, respectively, except for some models noted below. As in Sect. 2.2, we refer to such a model as the “\(x\times y\times z\)” plate. The plate orientation, constraint conditions, and loading conditions were also the same as in Sect. 2.2; see Fig. 1.

The analysis is the same cantilever-type dead-weight analysis as in Sect. 2.2. We started with the \(300\times 10\times 300\) model and then reduced the thickness (i.e., the number of hexahedral elements in the y-axis direction) to 9, 8, 7, 6, 5, 4, 3, 2, and 1. Because the DDDM still converged with \(y=1\), we further reduced y to 0.5, 0.2, and 0.1.

We set the tolerance to \(1.0\times 10^{-7}\) in all cases and the maximum number of iterations to 10,000 for both the SSOR and the DDDM. We judged that an iteration did not converge when it reached the maximum number of iterations and the relative residual error showed no tendency to decrease.

We summarize the benchmark results in Table 5.

Stability of the DDDM solver. Table 5 demonstrates the stability of the DDDM solver. The SSOR converged with the \(300\times 10\times 300\) model but failed to converge with the \(300\times 9\times 300\) model, which illustrates the difficulty of handling thin plates with the SSOR-preconditioned CG method. In contrast, the DDDM converged for all models from \(300\times 10\times 300\) to \(300\times 0.2\times 300\) and failed only for the \(300\times 0.1\times 300\) model. The number of iterations and the calculation time of the DDDM decreased from the \(300\times 10\times 300\) model to the \(300\times 1\times 300\) model, presumably because the number of DOFs decreased. However, the trend reversed at the \(300\times 0.5\times 300\) model, which shows that convergence becomes more difficult as the thickness decreases, even with the DDDM.

Performance of the DDDM solver. Table 6 compares the performance of the DDDM and SSOR solvers for \(y=10\), taken from the first row of Table 5. The DDDM solver is 85.9 times as fast as the SSOR solver in terms of the number of iterations and 17.2 times as fast in terms of calculation time.

We show the analysis results of the \(300\times 10\times 300\), \(300\times 5\times 300\), \(300\times 2\times 300\), \(300\times 1\times 300\), \(300\times 0.5\times 300\), and \(300\times 0.2\times 300\) models in Fig. 14. The color represents the displacement norms, and we enlarged the deformations by a factor of 10 in all cases.

Results of the thin model benchmark. Six cases from Table 5 are shown. We enlarged the deformations by a factor of 10 in all cases. The color contours show the displacement norms with the same range

7 Parallelization

As stated in Sect. 1 and Sect. 2.3, our solver uses two sets of domain decomposition. One is for the generation of the coarse grid, which corresponds to the structure of the DDDM, and we use the other for parallelizing the CG method in the DDDM.

7.1 Overview of the parallelization

We decompose the entire body \(\Omega \) into non-overlapping subdomains:

as in Fig. 15, where M is the number of parallel processes and each \(\Omega _I\) corresponds to one of the parallel processes. This domain decomposition is “nodal-point-based,” as opposed to the “element-based” decomposition used for generating the coarse grid. \(\Omega _{IJ}\) is the region consisting of the points adjacent to both \(\Omega _I\) and \(\Omega _J\). The points in the figure show the nodal points \(\varvec{\bar{x}}\). We fix I and J \((I\ne J)\); although both \(\Omega _I\) and \(\Omega _J\) have other neighboring subdomains, we omit them for simplicity. We apply the CG method to the extended subdomains \(\Omega _I\cup \Omega _{IJ}\) and \(\Omega _J\cup \Omega _{IJ}\) in parallel. The CG iteration refers to the nodal values in \(\Omega _I\cup \Omega _{IJ}\) and in \(\Omega _J\cup \Omega _{IJ}\), and the nodal values are updated during the iteration through communication between the parallel processes I and J. During the CG process, the points in \(\Omega _{IJ}\) may have different values in processes I and J. After a sufficient number of CG iterations, all nodal values, including those of the points in \(\Omega _{IJ}\), converge within the given residual tolerance.

In the DDDM algorithm, the coarse-grid generation is also parallelized within the parallel CG method. As noted in Sect. 5.2, we use the contraction matrix \(\varvec{\bar{A}}_{\varvec{\bar{F}}}=\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}}\in \textbf{R}^{6N\times 6N}\) defined by (49) on the whole space. The components of \(\varvec{\bar{A}}\) and \(\varvec{\bar{F}}\) are distributed among the parallel processes, and each process I computes its contribution \((\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})_I\) independently. These contributions are gathered on the parent MPI process, where \(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}}\) is LU-decomposed. The resulting \((\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}})^{-1}\) is then distributed to every parallel process and applied in the parallel CG process for each I. Note that the data of process I include not only the interior points of \(\Omega _I\) but also the points of \(\Omega _{IJ}\) outside \(\Omega _I\).
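The assembly of \(\varvec{\bar{F}}^T\varvec{\bar{A}}\varvec{\bar{F}}\) from per-process contributions can be sketched serially. We assume, hypothetically, that process I owns a contiguous block of rows of \(\varvec{\bar{A}}\) and that the parent process combines the gathered contributions by summation; the columns coupled to the owned rows play the role of the points of \(\Omega _{IJ}\) held by process I. No MPI calls are used, and the banded matrix and random \(\varvec{\bar{F}}\) are stand-ins.

```python
import numpy as np

rng = np.random.default_rng(10)
n, k, n_proc = 48, 12, 4
# Banded SPD stand-in for A-bar: each DOF couples only to nearby DOFs.
A = 5.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1) - np.eye(n, k=2) - np.eye(n, k=-2)
F = rng.standard_normal((n, k))                  # stand-in for F-bar

owned = np.array_split(np.arange(n), n_proc)     # rows owned by each (simulated) process
partial = []
for rows in owned:
    # Columns coupled to the owned rows: the owned DOFs plus a halo (cf. Omega_IJ).
    cols = np.flatnonzero(np.any(A[rows] != 0.0, axis=0))
    A_local = A[np.ix_(rows, cols)]              # the part of A stored by this process
    partial.append(F[rows].T @ (A_local @ F[cols]))   # local contribution (F^T A F)_I

A_F = sum(partial)                               # "gather" on the parent process, modeled as a sum
A_F_inv = np.linalg.inv(A_F)                     # small direct solve; the result would be broadcast
print(np.allclose(A_F, F.T @ A @ F))
```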

7.2 Parallel performance

We present here the cantilever-type dead-weight analysis of a cuboid model, the same analysis as in Sect. 6.3 but with a larger model of \(500\times 30\times 500\), and compare the DDDM with the SSOR. We chose this configuration based on Sect. 6.3: a thickness of 30 is almost the lower bound for the SSOR to converge in this configuration, i.e., the SSOR does not converge if the thickness is set below 30. The computer used was the Oakbridge-CX system [34] installed at the University of Tokyo.

The numbers of elements, DOFs, and constraints were 7,500,000, \(n=23,343,093\), and \(r=15,531\), respectively. We conducted the analyses using 1, 2, 4, 8, 16, 24, and 32 processes. Based on preliminary benchmarks, the number of subdomains N for the DDDM (see Sect. 5.1) was set to 2500 for all of these process counts.
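The reported model sizes can be cross-checked with a short calculation, assuming linear 8-node hexahedra on a structured grid with three displacement DOFs per node. The helper below is illustrative; it reproduces n for the \(500\times 30\times 500\) model and for the \(1\times 1\times 6\) model of Sect. 5.3.

```python
def structured_hex_counts(nx, ny, nz, dofs_per_node=3):
    """Elements, nodes, and DOFs of an nx x ny x nz grid of 8-node hexahedral elements."""
    nodes = (nx + 1) * (ny + 1) * (nz + 1)
    return nx * ny * nz, nodes, dofs_per_node * nodes

print(structured_hex_counts(500, 30, 500))   # (7500000, 7781031, 23343093)
print(structured_hex_counts(1, 1, 6))        # (6, 28, 84)
```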

The performance results are given in Table 7. Although the DDDM clearly outperforms the SSOR, the parallel performance of the DDDM decreases as the number of processes increases, in contrast with that of the SSOR. We attribute this tendency to the increase in communication traffic with a larger number of parallel processes.

8 Conclusions

Static structural problems discretized by the FEA without constraint conditions have singular stiffness matrices whose kernel is spanned by six eigenvectors with a sixfold zero eigenvalue. The static equations become solvable by imposing at least six forced displacement conditions (i.e., constraint conditions) that suppress all rigid-body motions.

The starting point of the DDDM algorithm is that we apply the (parallel) CG method to the entire domain as the basic framework rather than using the direct method as in the DDM. We decompose the entire domain in two ways. One decomposition parallelizes the CG method, and the other generates the coarse space based on the rigid-body modes.

We discussed the distance between the eigenvectors of the constrained stiffness matrix and the kernel of the unconstrained one, whose basis represents the rigid-body motions. Because the eigenvectors with small eigenvalues lie close to this kernel, the rigid-body modes, combined with the domain decomposition, generate the space of the coarse motions.

We constructed the solver algorithm so that the coarse space generated by the coarse motions is removed from the solution space and the iteration is handed over to the fine space by the deflation and two-level algorithms. The two-level method contributes to the high-speed performance.

We conducted benchmark tests of the elastic static analysis for thin plate models, and the results showed the high-speed performance and stability of the DDDM. We also presented a brief overview of the parallelization and its performance.

Code Availability

All the data used for the current study are available from the corresponding author upon reasonable request. However, the DDDM solver source code cannot be made available because it has been jointly developed with the Central Research Institute of Electric Power Industry.

Notes

We can also define

$$\begin{aligned} \varvec{A}'=\left( \begin{array}{cc} \varvec{\bar{A}}&{}\varvec{0}\\ \varvec{0}&{}\varvec{0}\\ \end{array} \right) ,\quad \varvec{x}'=\left( \begin{array}{c} x_1\\ \vdots \\ x_{n-r}\\ \varvec{0}\\ \end{array} \right) ,\quad \varvec{b}'=\left( \begin{array}{c} b_1\\ \vdots \\ b_{n-r}\\ \varvec{0}\\ \end{array} \right) . \end{aligned}$$

References

Miyamura T, Yamada T (2019) Feasibility study of full-scale elastic-plastic seismic response analysis of nuclear power plant. Mech Eng J 6(6):19-00281. https://doi.org/10.1299/mej.19-00281

Miyamura T, Hori M (2015) Large-scale seismic response analysis of super-high-rise steel building considering soil-structure interaction using K computer. Int J High-Rise Build 4(1):75–83

Wu YS, Yang YB, Yau JD (2001) Three-dimensional analysis of train-rail-bridge interaction problems. Veh Syst Dyn 36(1):1–35

Yamada H, Miura H, Ebisawa K (2019) Improvement of fragility evaluation on seismic PRA—an evaluation method on realistic response of component considering dynamic nonlinear characteristics of building and enhancement of sub-response factor regarding input seismic motion. Research Report of Central Research Institute of Electric Power Industry, O18010, in Japanese

Morgan RB (1995) A restarted GMRES method augmented with eigenvectors. SIAM J Matrix Anal Appl 16(4):1154–1171

Chapman A, Saad Y (1996) Deflated and augmented Krylov subspace techniques. Numer Linear Algebra Appl 4(1):43–66

Saad Y, Yeung M, Erhel J, Guyomarc’h F (2000) A deflated version of the conjugate gradient algorithm. SIAM J Sci Comput 21(5):1909–1926

Nabben R, Vuik C (2006) A comparison of deflation and the balancing preconditioner. SIAM J Sci Comput 27(5):1742–1759

Frank J, Vuik C (2001) On the construction of deflation-based preconditioners. SIAM J Sci Comput 23(2):442–462

Jönsthövel TB, van Gijzen MB, Vuik C (2012) Comparison of the deflated preconditioned conjugate gradient method and algebraic multigrid for composite materials. Comput Mech 50:321–333

Nabben R, Vuik C (2004) A comparison of deflation and coarse grid correction applied to porous media flow. SIAM J Numer Anal 42(4):1631–1647

Nabben R, Vuik C (2008) A comparison of abstract versions of deflation, balancing and additive coarse grid correction preconditioners. Numer Linear Algebra Appl 15:355–372

Tang JM, Nabben R, Vuik C, Erlangga YA (2009) Comparison of two-level preconditioners derived from deflation, domain decomposition and multigrid methods. J Sci Comput 39(3):340–370

Nicolaides RA (1987) Deflation of conjugate gradients with applications to boundary value problems. SIAM J Numer Anal 24(2):355–365

Golub GH, Van Loan CF (2013) Matrix computations, 4th edn. The Johns Hopkins University Press, Baltimore, p 443

Jönsthövel TB, van Gijzen MB, Scarpas A (2013) On the use of rigid body modes in the deflated preconditioned conjugate gradient method. SIAM J Sci Comput 35(1):207–225

Farhat C, Roux FX (1991) A method of finite element tearing and interconnecting and its parallel solution algorithm. Int J Numer Methods Eng 32(6):1205–1227

Farhat C, Lesoinne M, LeTallec P, Pierson K, Rixen D (2001) FETI-DP: A dual-primal unified FETI method-part I: a faster alternative to the two-level FETI method. Int J Numer Methods Eng 50:1523–1544

Mandel J (1993) Balancing domain decomposition. Commun Numer Methods Eng 9:233–241

Mandel J, Dohrmann CR (2003) Convergence of a balancing domain decomposition by constraints and energy minimization. Numer Linear Algebra Appl 10:639–659

Bathe KJ (2014) Finite element procedures, 2nd edn. Prentice Hall, Inc., Hoboken

Miyamura T, Takaya T, Yoshimura S, Hori M (2014) Improvement of balancing domain decomposition method for problem with multi-point constraints. In: 11th World Congress on Computational Mechanics (WCCM XI), Barcelona, Spain

Baggio R, Franceschini A, Spiezia N, Janna C (2017) Rigid body modes deflation of the preconditioned conjugate gradient in the solution of discretized structural problems. Comput Struct 185:15–26

Shioya R, Kanayama R, Tagami H, Ogino M (2000) 3D large scale structural analysis using balancing domain decomposition method. Trans JSCES 2:139–144 (in Japanese)

Smith B, Bjørstad P, Gropp W (1996) Domain decomposition: parallel multilevel methods for elliptic partial differential equations. Cambridge University Press, Cambridge

Falgout RD, Vassilevski PS, Zikatanov LT (2005) On two-grid convergence estimates. Numer Linear Algebra Appl 12:471–494

Ciaramella G, Vanzan T (2022) Substructured two-grid and multi-grid domain decomposition methods. Numer Algorithms 91:413–448

Motoyama H, Sawada M, Hotta W, Ohtsuka Y, Akiba H, Hori M (2021) Development of a general-purpose parallel finite element method for analyzing earthquake engineering problems. Earthq Eng Struct Dyn 50(15):4180–4189

Garatani K, Okuda H, Yagawa G (1999) A study on solution method for large-scaled parallel FEM (performance evaluation of two methods based on DDM). In: Transactions of JSCES, 19990009 (in Japanese)

Adventure Project. https://adventure.sys.t.u-tokyo.ac.jp/ (2021)

Meyer CD (2000) Matrix analysis and applied linear algebra. SIAM, Philadelphia

FrontISTR Commons. https://manual.frontistr.com/en/ (2021)

METIS. http://glaros.dtc.umn.edu/gkhome/metis/metis/overview/

Oakbridge-CX (2023) https://www.cc.u-tokyo.ac.jp/en/supercomputer/obcx/service/

Acknowledgements

The author expresses great appreciation to Prof. Hiroshi Okuda (The University of Tokyo), Dr. Masataka Sawada (Central Research Institute of Electric Power Industry), Dr. Muneo Hori (Japan Agency for Marine-Earth Science and Technology), Prof. Ichiro Hagiwara (Meiji University), and Mr. Kuniaki Koike (Advanced Simulation Technology of Mechanics R&D, Co., Ltd) for their support of this work.

Funding

This work was partly supported through projects commissioned by the Agency for Natural Resources and Energy (ANRE), Ministry of Economy, Trade and Industry (METI), Japan.


Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Akiba, H. Deflated domain decomposition method for structural problems. J Eng Math 144, 21 (2024). https://doi.org/10.1007/s10665-023-10322-2


DOI: https://doi.org/10.1007/s10665-023-10322-2

Keywords

- Domain decomposition

- Deflation algorithm

- Distance between eigenvectors of stiffness matrix and kernel of unconstrained one

- Fast stable solver

- Rigid-body motion