Abstract

Background

In the pragmatic, open-label, randomised controlled non-inferiority LADI trial, we showed that increasing adalimumab (ADA) dose intervals was non-inferior to conventional dosing for persistent flares in patients with Crohn’s disease (CD) in clinical and biochemical remission.

Aims

To develop a prediction model to identify patients who can successfully increase their ADA dose interval based on secondary analysis of trial data.

Methods

Patients in the intervention group of the LADI trial increased ADA intervals to 3 and then to 4 weeks. The dose interval increase was defined as successful when patients had no persistent flare (> 8 weeks), no intervention-related severe adverse events, no rescue medication use during the study, and were on an increased dose interval while in clinical and biochemical remission at week 48. Prediction models were based on logistic regression with relaxed LASSO. Models were internally validated using bootstrap optimism correction.

Results

We included 109 patients, of whom 60.6% successfully increased their dose interval. Patients who were active smokers (odds ratio [OR] 0.90), had previous CD-related intra-abdominal surgery (OR 0.85) or proximal small bowel disease (OR 0.92), or had a higher Harvey–Bradshaw Index (OR 0.99 per point) or faecal calprotectin (OR 0.997 per µg/g) were less likely to successfully increase their dose interval. The model had fair discriminative ability (optimism-corrected AUC = 0.63), and net benefit analysis showed that it could be used to select patients who could increase their dose interval.

Conclusion

The final prediction model seems promising for selecting patients who could successfully increase their ADA dose interval. The model should be validated externally before it is applied in clinical practice.

Clinical Trial Registration Number

ClinicalTrials.gov, number NCT03172377.

Introduction

Adalimumab (ADA), a subcutaneous anti-tumour necrosis factor (TNF) agent, is an effective therapy to induce and maintain steroid-free remission in Crohn’s disease (CD). The recommended induction doses are 160 mg and 80 mg at week 0 and week 2, followed by a maintenance dose of 40 mg every two weeks [1]. However, ADA can lead to adverse events (AEs) including injection site reactions and increased incidence of infections [2]. Moreover, biologicals contribute to high costs of inflammatory bowel disease (IBD) treatment for healthcare systems worldwide [3]. We conducted the open-label, non-inferiority, multicentre randomised controlled LADI trial between May 2017 and July 2020 to assess if adalimumab dose intervals could be increased [4].

The LADI trial showed that increasing ADA dose intervals was non-inferior to conventional dosing for persistent flares in patients with CD in stable remission, while healthcare costs and infection-related AEs were reduced. However, patients in the intervention group had lower rates of clinical remission and experienced more gastro-intestinal AEs after 48 weeks [4]. In that publication, we also reported a model predicting the likelihood of successfully increasing ADA dose intervals, to assess which subgroups of patients might benefit most from increased ADA dose intervals [4].

That model showed that active smoking, longer remission duration, previous IBD-related intra-abdominal surgery, proximal small bowel disease, and higher Harvey–Bradshaw Index (HBI) and faecal calprotectin were risk factors for intervention failure, while previous exposure to ADA was predictive of intervention success. Patients with ADA concentrations between 10 and 11 μg/mL were more likely to successfully increase the adalimumab interval than patients with lower or higher drug concentrations [4]. This model was based on backward selection, as specified in the study protocol. However, backward selection might lead to reduced predictive performance [5]. Moreover, the model showed signs of overfitting, meaning that it included too many variables for the available sample size [4]. Together, these issues reduce the generalisability of the model, and it is unlikely to be suitable for determining in clinical practice which patients can successfully increase their ADA dose interval. Therefore, we conducted the current study, aiming to develop a prediction model that can identify patients who could successfully increase their ADA dose interval, using more advanced statistical methods on the original data from the LADI trial.

Methods

Study Design and Subjects

This is a secondary analysis of the pragmatic, open-label, randomised controlled non-inferiority LADI trial. The LADI trial was conducted in 14 general and six academic hospitals in the Netherlands between May 2017 and July 2020. In summary, we included patients with CD on ADA 40 mg every two weeks who had been in steroid-free clinical and biochemical remission for ≥ 9 months (Harvey–Bradshaw Index [HBI] score < 5, C-reactive protein [CRP] < 10 mg/L and faecal calprotectin [FCP] < 150 µg/g). The intervention group increased the ADA interval to 3 weeks at baseline and to 4 weeks at week 24 if they remained in clinical and biochemical remission. Patients were followed for 48 weeks. Clinical disease activity and medication use were assessed every six weeks, CRP and FCP every twelve weeks, and additional evaluation was planned when a flare was suspected. For more details, we refer to previously published papers [4, 6]. Only data from patients in the intervention group were used in this study, as the outcome to be predicted was the success of increasing the dose interval.

Definitions and Outcomes

A successful dose interval increase was defined in a study group consensus meeting as follows: no persistent flare [4], intervention-related severe AE or IBD escape medication use during the study, and a dose interval of 3 or 4 weeks while in clinical and biochemical remission at week 48. Budesonide use and transient flares were allowed if patients recaptured remission before week 48 and continued an increased dose interval.

A flare was defined as fulfilling at least two of the following three criteria: HBI ≥ 5, CRP ≥ 10 mg/L and FCP > 250 µg/g, with concurrent use of escape medication or a decrease in the ADA dose interval. A persistent flare was defined as a flare lasting longer than 8 weeks, and a transient flare as one lasting between 2 and 8 weeks. Patients were in clinical and biochemical remission at week 48 when they had an HBI score < 5, CRP < 10 mg/L and FCP < 150 µg/g [4]. As this was a pragmatic trial, patients, healthcare providers and outcome assessors were not blinded.
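The outcome definitions above are essentially rules over visit-level data. The following is a minimal Python sketch, assuming each patient's values are already available per visit; all function and parameter names are hypothetical, only the thresholds come from the text, and flares shorter than 2 weeks are left unclassified because the text does not define them.

```python
# Minimal sketch of the LADI outcome definitions. Thresholds are from the text;
# all function and parameter names are hypothetical.

def meets_flare_criteria(hbi, crp, fcp, escape_medication, interval_decreased):
    """Flare: at least two of HBI >= 5, CRP >= 10 mg/L and FCP > 250 ug/g,
    with concurrent escape medication or a decrease in the ADA dose interval."""
    n_criteria = sum([hbi >= 5, crp >= 10.0, fcp > 250.0])
    return n_criteria >= 2 and (escape_medication or interval_decreased)


def classify_flare(duration_weeks):
    """Persistent if longer than 8 weeks, transient if 2 to 8 weeks."""
    if duration_weeks > 8:
        return "persistent"
    if duration_weeks >= 2:
        return "transient"
    return "unclassified"  # shorter flares are not defined in the text


def in_remission(hbi, crp, fcp):
    """Clinical and biochemical remission: HBI < 5, CRP < 10 mg/L, FCP < 150 ug/g."""
    return hbi < 5 and crp < 10.0 and fcp < 150.0


def successful_increase(persistent_flare, related_severe_ae, escape_medication_used,
                        interval_weeks_at_48, hbi_48, crp_48, fcp_48):
    """Success at week 48. Budesonide and transient flares are allowed, so
    escape_medication_used is assumed to exclude budesonide."""
    return (
        not persistent_flare
        and not related_severe_ae
        and not escape_medication_used
        and interval_weeks_at_48 in (3, 4)
        and in_remission(hbi_48, crp_48, fcp_48)
    )


# Example: two activity criteria met plus escape medication counts as a flare
print(meets_flare_criteria(hbi=6, crp=12.0, fcp=100.0,
                           escape_medication=True, interval_decreased=False))
```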

Model Development

For the current secondary analysis, baseline predictors were the same as in the initial model. Predictors were selected in a study group consensus meeting before study results were available, to reduce the risk of bias. Selected predictors included HBI score, CRP, FCP, active smoking, concomitant immunosuppressant use, disease duration, remission duration, time on ADA, previous therapy with infliximab or ADA, surgical history for IBD, Montreal classification, and ADA drug levels as a continuous variable with a possible non-linear (quadratic) relationship with the outcome. ADA drug levels were centred around their mean to reduce multicollinearity between the linear and quadratic terms. Prediction models were based on logistic regression regularised with relaxed LASSO. LASSO shrinks coefficients towards zero, a procedure called shrinkage, which can improve generalisability, and it can exclude variables from the model by setting their coefficients to exactly zero. A downside of shrinkage is that it can worsen calibration. Relaxed LASSO can correct this by reducing shrinkage after variable selection [7, 8]. Hyperparameters that control shrinkage (lambda) and relaxation were selected using tenfold cross-validation. We used both the minimal lambda and the stricter ‘one standard error rule’. The minimal lambda applies the shrinkage that performs best in cross-validation. The ‘one standard error rule’ selects the strongest shrinkage whose cross-validated performance is within one standard error of the best, which excludes more variables in exchange for a slight decrease in performance [8].
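To make the two lambda choices and the relaxation step concrete, here is a minimal sketch. It assumes the cross-validated error and its standard error are already available for each candidate lambda (in practice a package such as glmnet computes these), and it uses the relaxed LASSO parameterisation of Hastie et al. [7], in which a mixing parameter gamma blends the LASSO coefficients with an unpenalised refit on the selected variables. Whether the study used exactly this parameterisation is an assumption; function names and numbers are illustrative.

```python
# Minimal sketch of lambda selection and the relaxed LASSO blend.
# Illustrative only; names and values are hypothetical.

def select_lambdas(lambdas, cv_error, cv_se):
    """Return (lambda_min, lambda_1se) from cross-validation results.

    lambda_min minimises the mean cross-validated error. lambda_1se is the
    largest lambda (strongest shrinkage) whose mean error lies within one
    standard error of that minimum.
    """
    i_min = min(range(len(lambdas)), key=lambda i: cv_error[i])
    limit = cv_error[i_min] + cv_se[i_min]
    lambda_1se = max(lam for lam, err in zip(lambdas, cv_error) if err <= limit)
    return lambdas[i_min], lambda_1se


def relax(beta_lasso, beta_refit, gamma):
    """Relaxed coefficients: gamma * LASSO + (1 - gamma) * unpenalised refit.

    beta_refit is an unpenalised fit on the variables LASSO selected, with 0
    for dropped variables. gamma = 1 keeps full shrinkage, gamma = 0 removes it.
    """
    return [gamma * b_l + (1 - gamma) * b_r for b_l, b_r in zip(beta_lasso, beta_refit)]


lambdas = [1.0, 0.5, 0.25, 0.1, 0.05]
cv_error = [0.70, 0.62, 0.60, 0.59, 0.615]  # hypothetical mean CV deviance
cv_se = [0.02] * 5                           # hypothetical standard errors
print(select_lambdas(lambdas, cv_error, cv_se))  # (0.1, 0.25)
```

Here the minimal lambda is 0.1, while the one standard error rule picks the stronger shrinkage of 0.25, whose error (0.60) lies within one standard error of the minimum (0.59 + 0.02).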

Missing data on ADA use for dropouts (n = 3) were handled using last observation carried forward. We assumed that other data were missing at random and handled them by multiple imputation, creating ten imputed datasets in long format [9]. Linear mixed models were used to impute normally distributed variables, while other variables were imputed with type 2 predictive mean matching. All predictors and outcomes were included in the imputation models to ensure congeniality. We also added auxiliary variables that had little missing data and were likely to be related to the outcomes. The final models were created by averaging the parameter estimates of each variable across the imputed datasets, with a variable that was dropped from a model counted as zero [8]. Confidence intervals could not be calculated because variables were selected separately in each imputed dataset, so the models differed between datasets; confidence intervals can only be calculated when each model contains identical variables.
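The two data-handling steps that are simple rules, last observation carried forward and pooling of coefficients across imputed datasets with dropped variables counted as zero, can be sketched as follows. The imputation itself (linear mixed models and predictive mean matching) is not reproduced; the variable names and coefficient values are hypothetical.

```python
# Minimal sketch: last observation carried forward and pooling of LASSO
# coefficients over imputed datasets. Names and values are hypothetical.

def locf(values):
    """Replace missing values (None) with the most recent observed value."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def pool_coefficients(models, variables):
    """Average each coefficient over the imputed-data models; a variable that
    LASSO dropped from a model contributes 0 for that dataset."""
    m = len(models)
    return {v: sum(model.get(v, 0.0) for model in models) / m for v in variables}


# ADA interval (weeks) for a hypothetical dropout: the last known interval is kept
print(locf([3, 3, None, None]))  # [3, 3, 3, 3]

# Three imputed datasets (the study used ten); 'fcp' was dropped in one of them
models = [
    {"smoking": -0.3, "fcp": -0.003},
    {"smoking": -0.6},
    {"smoking": -0.3, "fcp": -0.006},
]
print(pool_coefficients(models, ["smoking", "fcp"]))
```

Counting dropped variables as zero means that a predictor selected in only a few imputed datasets ends up with a small pooled coefficient.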

The sample size of the original RCT was based on non-inferiority with regard to the primary outcome of persistent flares. The required sample size was 174 patients, randomised 2:1 to the intervention and control groups, respectively, to increase the number of patients available for developing a prediction model in the intervention group.

Model Evaluation and Analysis

Performance of the final models was evaluated in terms of discrimination with the area under the receiver operating characteristic curve (AUC), calibration with the calibration intercept and slope, and clinical benefit with decision curve analysis [10]. We used decision curve analysis instead of reporting test characteristics such as sensitivity and specificity at a single cut-off, so that different cut-offs can be used in clinical practice. Some patients or physicians might be risk averse, requiring, for example, a probability of successful dose interval increase of at least 80%. Others might have a higher tolerance for risk, or suffer major side effects from ADA, and would be willing to try increasing dose intervals with a predicted success probability of 50%.
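A minimal sketch of the discrimination and calibration measures, assuming binary outcomes (1 = successful increase) and predicted probabilities strictly between 0 and 1. The AUC is computed as the concordance statistic; the calibration slope is the coefficient of a logistic regression of the outcome on the logit of the predicted probability, and the calibration intercept is estimated with that logit as an offset (slope fixed at 1). Data and function names are hypothetical; a validated statistics package would normally be used instead.

```python
import math


def auc(y, p):
    """AUC as the concordance statistic: the share of (success, failure) pairs
    in which the success has the higher predicted probability; ties count 1/2."""
    pos = [pi for yi, pi in zip(y, p) if yi == 1]
    neg = [pi for yi, pi in zip(y, p) if yi == 0]
    pairs = 0.0
    for a in pos:
        for b in neg:
            pairs += 1.0 if a > b else 0.5 if a == b else 0.0
    return pairs / (len(pos) * len(neg))


def calibration(y, p, iters=50):
    """Return (calibration intercept, calibration slope).

    Slope: logistic regression of y on logit(p), fitted by Newton-Raphson.
    Intercept: logistic regression of y with logit(p) as offset (slope fixed at 1).
    """
    lp = [math.log(pi / (1 - pi)) for pi in p]
    a, b = 0.0, 1.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for yi, x in zip(y, lp):
            q = 1 / (1 + math.exp(-(a + b * x)))
            w = q * (1 - q)
            g0 += yi - q              # score for the intercept
            g1 += (yi - q) * x        # score for the slope
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01   # solve the 2x2 Newton system
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    c = 0.0
    for _ in range(iters):
        q = [1 / (1 + math.exp(-(c + x))) for x in lp]
        c += sum(yi - qi for yi, qi in zip(y, q)) / sum(qi * (1 - qi) for qi in q)
    return c, b


y = [1, 0, 1, 0, 1, 1, 0, 0]                  # hypothetical outcomes
p = [0.8, 0.3, 0.6, 0.5, 0.7, 0.4, 0.2, 0.6]  # hypothetical predictions
print(auc(y, p))  # 0.84375
print(calibration(y, p))
```

An ideal model has a calibration intercept of 0 and a slope of 1; a slope above 1 indicates predictions that are too moderate, and a slope below 1 predictions that are too extreme.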

Decision curve analysis shows the net benefit of using a model to select patients compared with two default strategies (increasing the interval for all patients and continuing the conventional dose interval for all patients). The net benefit is the number of selected patients who successfully increased their interval minus the weighted number of selected patients who failed, divided by the total number of patients. The weighting factor reflects the risk preferences of patient and provider and is derived from the probability of success needed to accept the intervention, called the threshold probability (pt); it equals the odds pt/(1 − pt). For example, when at least seven out of ten patients are required to be successful on an increased dose interval, the threshold probability is 70%. The risk averse and risk tolerant patients in the previous paragraph would have threshold probabilities of 80% and 50%, respectively. Models were internally validated using bootstrap optimism correction with 400 bootstrap samples, in which the entire model building procedure was repeated; optimism-corrected estimates for discrimination, calibration and net benefit are reported [8].
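The net benefit calculation can be written in a few lines. This is a minimal sketch of the standard decision curve formula [10], assuming 1 = successful interval increase and that a patient is selected when the predicted probability is at least the threshold; the outcomes and predictions are hypothetical.

```python
def net_benefit(y, p, pt):
    """Net benefit of attempting an interval increase in patients whose
    predicted probability of success is at least the threshold pt.

    NB = successes_selected / n - failures_selected / n * pt / (1 - pt)
    """
    n = len(y)
    selected = [yi for yi, pi in zip(y, p) if pi >= pt]
    successes = sum(selected)
    failures = len(selected) - successes
    return successes / n - failures / n * pt / (1 - pt)


def net_benefit_all(y, pt):
    """Default strategy: increase the interval for every patient."""
    return net_benefit(y, [1.0] * len(y), pt)


# Continuing the conventional interval for everyone selects nobody, so NB = 0.
y = [1, 1, 1, 0, 0]            # hypothetical outcomes (1 = success)
p = [0.9, 0.8, 0.4, 0.7, 0.3]  # hypothetical predicted probabilities
for pt in (0.5, 0.75):
    print(pt, net_benefit(y, p, pt), net_benefit_all(y, pt))
```

At a threshold of 0.5 the weighting factor is 1 (one failure cancels one success), and the model and 'increase for all' tie in this toy example; at 0.75 each failure weighs as much as 3 successes, and the model beats both default strategies. Computing these values over a range of thresholds gives the decision curve.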

Results

Cohort and Baseline Characteristics

We included all 109 enrolled patients from the intervention group for analysis [4], of whom 60.6% successfully increased their ADA dose interval (Table 1). For the distribution of predictors stratified by outcome, see Supplementary Table 1. Most variables had no or few (< 10%) missing data; missing data were most frequent for the HBI score (23.5% of observations).

Model Development

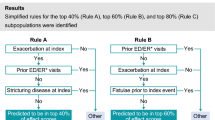

During model development, applying the ‘one standard error rule’ excluded more variables from the model, as expected; for the selected variables and their odds ratios (OR), see Table 2. These ORs can be used to calculate a predicted probability of successful dose interval increase. Apparent discrimination of the models was moderate, with AUCs between 0.70 and 0.73 (Fig. 1). Apparent calibration curves showed that risk predictions were too moderate: the predicted probability of success was too low for patients who were likely to succeed and too high for patients who were likely to fail (Fig. 2).
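As an illustration of how the reported ORs translate into a predicted probability, the sketch below sums the log ORs on the logit scale. The intercept is a hypothetical placeholder because it is not given in this text (it would come from Table 2), binary predictors are assumed to be coded 0/1, and HBI and FCP are entered per point and per µg/g; the resulting probabilities are therefore illustrative only and must not be used clinically.

```python
import math

# Odds ratios as reported in the abstract (per point for HBI, per ug/g for FCP).
ODDS_RATIOS = {
    "active_smoker": 0.90,
    "previous_intra_abdominal_surgery": 0.85,
    "proximal_small_bowel_disease": 0.92,
    "hbi": 0.99,
    "fcp": 0.997,
}

# HYPOTHETICAL intercept: the real value is in Table 2, not reproduced here.
HYPOTHETICAL_INTERCEPT = 0.5


def predicted_success(patient, intercept=HYPOTHETICAL_INTERCEPT):
    """Logistic model: log-odds = intercept + sum(ln(OR) * predictor value)."""
    log_odds = intercept + sum(
        math.log(odds_ratio) * patient[name] for name, odds_ratio in ODDS_RATIOS.items()
    )
    return 1 / (1 + math.exp(-log_odds))


non_smoker = {"active_smoker": 0, "previous_intra_abdominal_surgery": 0,
              "proximal_small_bowel_disease": 0, "hbi": 2, "fcp": 50}
smoker = dict(non_smoker, active_smoker=1)
print(round(predicted_success(non_smoker), 3), round(predicted_success(smoker), 3))
```

Whatever the intercept, the ratio of the two patients' odds of success equals the smoking OR of 0.90, because they differ only in smoking status.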

Model Validation

Internal validation showed an optimism-corrected AUC of 0.63 for both models, indicating that the apparent performance had overestimated model performance. Optimism-corrected calibration estimates were fair, meaning that the predicted probability of success was close to the observed probability of success (Supplementary Table 2). Decision curve analysis showed that the prediction models could provide a benefit over the default strategies at threshold probabilities between 50% and 65%. Below this range, the net benefit was equal to that of simply increasing the interval for all patients (Fig. 3). Above a threshold probability of 65%, it was better to continue the conventional interval for all patients.
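A minimal sketch of bootstrap optimism correction, assuming `fit()` repeats the whole model-building procedure and `metric()` returns a performance measure where higher is better. The study additionally combined this with multiple imputation [8], which the sketch omits; the toy model is a deliberately extreme overfitter chosen only to make the optimism visible, and all names are hypothetical.

```python
import random
from statistics import mean


def optimism_corrected(data, fit, metric, n_boot=400, seed=2024):
    """Bootstrap optimism correction.

    fit(data) must repeat the entire model-building procedure (including
    variable selection); metric(model, data) returns a performance measure
    such as the AUC, where higher is better.
    """
    rng = random.Random(seed)
    apparent = metric(fit(data), data)
    optimism = []
    for _ in range(n_boot):
        boot = [rng.choice(data) for _ in data]  # n rows sampled with replacement
        model = fit(boot)
        # Optimism: performance on the bootstrap sample minus on the original data
        optimism.append(metric(model, boot) - metric(model, data))
    return apparent - mean(optimism)


# Toy overfitter: the "model" memorises its training rows and the metric is
# the share of rows it has already seen.
rows = list(range(50))


def memorise(d):
    return set(d)


def share_seen(model, d):
    return sum(r in model for r in d) / len(d)


print(share_seen(memorise(rows), rows))  # apparent: 1.0
print(round(optimism_corrected(rows, memorise, share_seen), 2))
```

The memorising model looks perfect on its own training data, but the correction brings it down to roughly the share of distinct rows in a bootstrap sample (about 0.63), analogous to how the apparent AUCs of 0.70 to 0.73 shrank to 0.63 after correction.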

Discussion

The previously conducted LADI trial showed that an increased ADA dose interval was non-inferior to conventional dosing for the occurrence of persistent flares. Patients in both groups had low and similar rates of transient flares, but more patients on conventional dosing were in remission at the end of follow-up. After 48 weeks, about 61% of patients had successfully increased their ADA dose interval. In the current secondary analysis of the original trial data, we developed and internally validated a prediction model. Evaluation showed that discrimination and calibration of the models were adequate. The prediction models could provide clinical benefit over the default strategies at threshold probabilities of 50% to 65%, while performing equally or worse at other threshold probabilities. Overall, the model using the ‘one standard error rule’ seems to be the most promising tool for selecting patients for an increased ADA dose interval. It showed better performance than the backward selection model from the primary analysis [4], performance comparable to the minimal lambda model, and contains the fewest predictors. These characteristics likely increase its generalisability.

Risk factors for failing a dose interval increase in the ‘one standard error rule’ model are active smoking, previous IBD-related intra-abdominal surgery, proximal small bowel disease, and a higher HBI score and FCP at baseline. This model resembles the backward selection model but contains fewer predictors [4]. ADA drug levels, remission duration and previous therapy with ADA were not predictive of successful dose interval increase in the new model. In previously published studies, most of the variables in the new model were also predictive of relapse after cessation (rather than de-escalation) of anti-TNF therapy in patients with CD [11]. Although all patients in our study had relatively low inflammatory parameters at baseline (HBI score < 5, CRP < 10 mg/L and FCP < 150 µg/g), higher HBI (OR 0.99 per 1-point increase) and FCP (OR 0.997 per 1 µg/g increase) at baseline were still predictive of unsuccessful dose interval increase. Patients with higher HBI and FCP at baseline, while still within the ‘remission range’, were less likely to successfully increase their dose interval, indicating that these patients might have had residual disease activity at baseline.
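Because the HBI and FCP odds ratios are expressed per single unit, they look close to 1. A worked rescaling, using only the ORs reported above, shows their size over clinically meaningful differences (under the logistic model, the OR for a difference of k units is the per-unit OR raised to the power k):

$$
\mathrm{OR}_{k\ \text{units}} = \left(\mathrm{OR}_{1\ \text{unit}}\right)^{k}
$$

$$
\text{FCP},\ +100\ \mu\text{g/g}: \quad 0.997^{100} = e^{100 \ln 0.997} \approx e^{-0.300} \approx 0.74
$$

$$
\text{HBI},\ +4\ \text{points}: \quad 0.99^{4} \approx 0.96
$$

Under the model, and with the other predictors fixed, an FCP 100 µg/g higher therefore corresponds to roughly 26% lower odds of success, a difference that still fits within the remission range of < 150 µg/g. Because the reported ORs are rounded, these figures are approximate.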

In studies of anti-TNF cessation, longer disease duration and immunosuppressant use protected against relapse, whereas second-line anti-TNF therapy and younger age at diagnosis were risk factors for relapse [11]; however, these factors were not included in our final model for increasing dose intervals. These factors may not be relevant for predicting successful dose interval increase, or our sample size may have been too small to identify all relevant predictors. There might be clinically important subgroups of patients, for example those with concomitant immunosuppressant use or a younger age at diagnosis, who are less likely to successfully increase their dose interval but who could not be identified in our data.

Using decision curve analysis, we found a range of threshold probabilities in which the model can be used to select patients for increasing the dose interval. The main advantage of this analysis is that it assesses the value of the prediction model in different settings and for patients and providers with different risk and medication preferences. If patient and provider require less than a 50% probability that a dose interval increase will be successful, the best strategy is simply to attempt to increase the dose interval. If they require a probability of success between 50% and 65%, the prediction model can be used to guide clinical decision making. However, if one is risk averse and prefers more than 65% certainty that a dose interval increase will be successful, continuing the conventional dose interval is favourable. In this range, the harm of the false positives from the prediction model outweighs the benefit of the true positives. In clinical practice, the decision to extend the ADA interval will rely on a careful discussion between physician and patient about the anticipated risks and benefits, and the interpretation of this balance may be affected by several factors, including risk perception.

This study has some limitations, even though advanced methods were used to develop and internally validate our prediction models and data from this pragmatic trial closely resemble clinical practice. The most important limitation is the relatively small sample size, which reduces the number of predictors that can be tested and can lead to overfitting. This became clear in the internal validation procedure and is the reason we applied regularisation and reported optimism-corrected estimates. However, even the model using the ‘one standard error rule’, which had the lowest number of predictors, showed considerable optimism. Thus, before the model can be used in clinical practice, it should be validated externally in additional cohorts with well-defined interval extension and comparable outcome measures. Second, we did not mandate endoscopic confirmation of remission before participation in the trial. As such, the effect of endoscopic remission on the likelihood of successfully increasing ADA dose intervals could not be evaluated, although endoscopic remission is known to predict successful anti-TNF withdrawal [12]. Third, ADA drug concentrations at baseline were not always true trough concentrations and might therefore overestimate drug concentrations. However, a previous study observed no correlation between time since the last adalimumab administration and adalimumab drug concentration [13].

In conclusion, patients with proximal small bowel CD or a history of intestinal resection, and those who are active smokers or have higher FCP or HBI, are less likely to successfully increase their ADA dose interval. Selecting patients with our model may provide a clinical benefit when patients and physicians require a probability of between 50% and 65% that increasing the dose interval has no major or minor negative clinical consequences. When one is more risk averse, continuing the conventional dose interval is recommended.

Data availability

Requests from third parties for sharing of de-identified data will be considered after written request to the corresponding author. If the request is approved and a data access agreement is signed, only de-identified data will be shared.

Abbreviations

- ADA: Adalimumab
- AE: Adverse event
- AUC: Area under the receiver operating characteristic curve
- CD: Crohn’s disease
- CRP: C-reactive protein
- IBD: Inflammatory bowel disease
- FCP: Faecal calprotectin
- HBI: Harvey–Bradshaw Index
- LASSO: Least Absolute Shrinkage and Selection Operator
- OR: Odds ratio
- RCT: Randomised controlled trial

References

1. Sandborn WJ, Hanauer SB, Rutgeerts P, Fedorak RN et al. Adalimumab for maintenance treatment of Crohn’s disease: results of the CLASSIC II trial. Gut. 2007;56:1232–1239.

2. Singh S, Facciorusso A, Dulai PS, Jairath V, Sandborn WJ. Comparative Risk of Serious Infections With Biologic and/or Immunosuppressive Therapy in Patients With Inflammatory Bowel Diseases: A Systematic Review and Meta-Analysis. Clin Gastroenterol Hepatol. 2020;18:69-81.e3.

3. van Linschoten RCA, Visser E, Niehot CD, van der Woude CJ et al. Systematic review: societal cost of illness of inflammatory bowel disease is increasing due to biologics and varies between continents. Aliment Pharmacol Ther. 2021;54:234–248.

4. van Linschoten RCA, Jansen FM, Pauwels RWM, Smits LJT et al. Increased versus conventional adalimumab dose interval for patients with Crohn’s disease in stable remission (LADI): a pragmatic, open-label, non-inferiority, randomised controlled trial. The Lancet Gastroenterology & Hepatology. 2023. https://doi.org/10.1016/S2468-1253(22)00434-4.

5. Steyerberg EW, Eijkemans MJ, Harrell FE, Habbema DF. Prognostic Modeling with Logistic Regression Analysis: In Search of a Sensible Strategy in Small Data Sets. Med Decis Making. 2001;21:45–56.

6. Smits LJT, Pauwels RWM, Kievit W, de Jong DJ et al. Lengthening adalimumab dosing interval in quiescent Crohn’s disease patients: protocol for the pragmatic randomised non-inferiority LADI study. BMJ Open. 2020;10:e035326.

7. Hastie T, Tibshirani R, Tibshirani R. Best Subset, Forward Stepwise or Lasso? Analysis and Recommendations Based on Extensive Comparisons. Statistical Science. 2020. https://doi.org/10.1214/19-STS733.

8. Musoro JZ, Zwinderman AH, Puhan MA, ter Riet G, Geskus RB. Validation of prediction models based on lasso regression with multiply imputed data. BMC Med Res Methodol. 2014. https://doi.org/10.1186/1471-2288-14-116.

9. van Buuren S, Groothuis-Oudshoorn K. mice: Multivariate Imputation by Chained Equations in R. Journal of Statistical Software. 2011;45:1–67.

10. Vickers AJ, Elkin EB. Decision curve analysis: a novel method for evaluating prediction models. Med Decis Making. 2006;26:565–574.

11. Pauwels RWM, van der Woude CJ, Nieboer D, Steyerberg EW et al. Prediction of relapse after anti-tumor necrosis factor cessation in Crohn’s disease: individual participant data meta-analysis of 1317 patients from 14 studies. Clin Gastroenterol Hepatol. 2022;20:1671–86.e16.

12. Mahmoud R, Savelkoul EHJ, Mares W, Goetgebuer R, et al. Complete endoscopic healing is associated with a lower relapse risk after anti-TNF withdrawal in inflammatory bowel disease. Clin Gastroenterol Hepatol. 2022.

13. Lie MRKL, Peppelenbosch MP, West RL, Zelinkova Z, van der Woude CJ. Adalimumab in Crohn’s disease patients: pharmacokinetics in the first 6 months of treatment. Aliment Pharmacol Ther. 2014;40:1202–1208.

Funding

This work was supported by the Netherlands Organisation for Health Research and Development [ZonMw, Healthcare Efficiency programme, (Grant No. 848015002)]. ZonMw is part of the Netherlands Organisation for Scientific Research (NWO). Sponsor: Radboud University Medical Centre P.O. Box 9101, 6500 HB Nijmegen, The Netherlands.

Author information

Contributions

RCAvL, CJvdW, FH, FMJ, FA, RWMP, LJTS, WK contributed to the conception and design of the study. RCAvL, FMJ, RWMP, LJTS, DJdJ, ACdV, PJB, RLW, AGLB, IAMG, FHJW, TEHR, MWMDL, AAvB, BO, MP, MGVMR, NKdB, RCMH, PCJtB, AEvdMdJ, JMJ, SVJ, ACITLT, CJvdW, FH contributed to patient inclusion and data collection. RCAvL, FMJ, RWMP and LJTS were responsible for project administration. CJvdW and FH were responsible for project supervision. RCAvL and FMJ had direct access and verified the underlying data. RCAvL was responsible for the statistical analysis with supervision from FA. All authors contributed to interpreting the data. RCAvL, FMJ, CJvdW, FH wrote the first draft of the manuscript and all authors reviewed and approved the manuscript for submission. All authors had access to all data and shared final responsibility for the decision to submit for publication.

Ethics declarations

Competing interests

FMJ has received a research grant from ZonMW. DJdJ has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Galapagos and held leadership roles in the Dutch Initiative on Crohn and Colitis and the IBD workgroup of the Dutch Gastroenterology Society. ACdV has received research grants from Takeda, Janssen and Pfizer. RLW has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Ferring, Pfizer, Galapagos, AbbVie and Janssen. TEHR has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from AbbVie and has participated in the advisory board for Galapagos. MWMDL has received a grant for podcasts from Pfizer, payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Janssen-Cilag and Galapagos, participated in advisory boards of BMS and Galapagos, and held leadership roles in the Elisabeth Twee Steden Ziekenhuis. AAvB has received research grants from Pfizer, Teva and ZonMW, consulting fees from Ferring, Galapagos, AbbVie and BMS, payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Ferring, Galapagos and Janssen, support for attending meetings from Janssen, and held leadership roles in committees of the Dutch Gastroenterology Society and National Federation of Medical Specialists. BO has received research grants from Galapagos, Takeda, Ferring and Celltrion, has received consulting fees from AbbVie, Galapagos, Pfizer, Ferring, Takeda and Janssen, payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Takeda, Galapagos, AbbVie and Ferring and was chairman of the IBD committee of the Dutch Association of Gastroenterology (NVMDL).
MJP has received consulting fees from Takeda, Janssen, Galapagos and AbbVie and payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Janssen. NKdB has served as a speaker for AbbVie, Takeda and MSD. He has served as consultant and principal investigator for Takeda and TEVA. He has received research grants from Dr. Falk, Takeda, TEVA and MLDS. All outside the submitted work. RMH has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from ITS and is a member of the national IBD committee of the Dutch Association for Gastroenterology and Hepatology. AEvdMdJ has received research grants from Galapagos, Nestlé, Cablon and Norgine, has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Galapagos, Tramedico and Janssen-Cilag, and has participated in an advisory board for Ferring. CJvdW has received funding for the present study from ZonMW, has received research grants from ZonMW, Falk and Pfizer, has received consulting fees from Janssen, Galapagos, and Pfizer, has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from Ferring and AbbVie and had leadership roles in the European Crohn’s & Colitis organisation, United European Gastroenterology council and the Dutch Association for Gastroenterology (NVGE). FH has received funding for the present study from ZonMW, has received research grants from Janssen, AbbVie, Pfizer and Takeda, and has received payment or honoraria for lectures, presentations, speakers bureaus, manuscript writing or educational events from AbbVie, Janssen, Takeda and Pfizer. RCAvL, RWMP, LJTS, FA, WK, PJB, AGLB, IAMG, FHJW, MGVMR, PCJtB, JMJ, SVJ, ACIATLT have nothing to disclose.

Ethical approval

The study protocol was approved by the Medical Ethical Review Committee of the Radboud University Medical Centre (registration number NL58948.091.16). Written informed consent was obtained from each patient who participated in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

van Linschoten, R.C.A., Jansen, F.M., Pauwels, R.W.M. et al. A Prediction Model for Successful Increase of Adalimumab Dose Intervals in Patients with Crohn’s Disease: Secondary Analysis of the Pragmatic Open-Label Randomised Controlled Non-inferiority LADI Trial. Dig Dis Sci 69, 2165–2174 (2024). https://doi.org/10.1007/s10620-024-08410-z

DOI: https://doi.org/10.1007/s10620-024-08410-z