Abstract

The statistical comparison of machine learning classifiers is frequently underpinned by null hypothesis significance testing. Here, we provide a survey and analysis of underrated problems that significance testing entails for classification benchmark studies. The p-value has become deeply entrenched in machine learning, but it is substantially less objective and less informative than commonly assumed. Even very small p-values can drastically overstate the evidence against the null hypothesis. Moreover, the p-value depends on the experimenter’s intentions, irrespective of whether these were actually realized or not. We show how such intentions can lead to experimental designs with more than one stage, and how to calculate a valid p-value for such designs. We discuss two widely used statistical tests for the comparison of classifiers, the Friedman test and the Wilcoxon signed rank test. Some improvements to the use of p-values, such as the calibration with the Bayes factor bound, and alternative methods for the evaluation of benchmark studies are discussed as well.

Similar content being viewed by others

Notes

This assumption is clearly violated: if A is better than B and B is better that C, then A must also be better than C.

Notice that for \(p = 0.0034\), the BFB is approximately 19; and with a false positive risk of 5%, the prior probability of the null hypothesis is \({\mathbb {P}}(H_0) = 0.5\).

The R function hdiBeta(0.95, a, b) from the the package nclbayes calculates the 95%-HDI of the beta distribution with parameters a and b.

It is of course assumed that nothing is known yet about this new data set.

References

Abelson R (2016) A retrospective on the significance test ban of 1999 (if there were no significance tests, they would need to be invented). In: Harlow L, Mulaik S, Steiger J (eds) What if there were no significance tests?. Routledge Classic Editions, pp 107–128

Althouse A (2016) Adjust for multiple comparisons? It’s not that simple. Ann Thorac Surg 101:1644–1645

Amrhein V, Greenland S (2018) Remove, rather than redefine, statistical significance. Nat Hum Behav 2(4):4

Amrhein V, Korner-Nievergelt F, Roth T (2017) The earth is flat (\(p > 0.05\)): significance thresholds and the crisis of unreplicable research. PeerJ 5:e3544

Bayarri M, Berger J (2000) P values for composite null models. J Am Stat Assoc 95(452):1127–1142

Bayarri M, Benjamin D, Berger J, Sellke T (2016) Rejection odds and rejection ratios: a proposal for statistical practice in testing hypotheses. J Math Psychol 72:90–103

Benavoli A, Corani G, Mangili F (2016) Should we really use post-hoc tests based on mean-ranks? J Mach Learn Res 17(5):1–10

Benavoli A, Corani G, Demšar J, Zaffalon M (2017) Time for a change: a tutorial for comparing multiple classifiers through Bayesian analysis. J Mach Learn Res 18(77):1–36

Benjamin D, Berger J (2016) Comment: a simple alternative to \(p\)-values. Am Stat (Online Discussion: ASA Statement on Statistical Significance and \(P\)-values) 70:1–2

Benjamin D, Berger J (2019) Three recommendations for improving the use of \(p\)-values. Am Stat 73(sup1):186–191

Benjamin D, Berger J, Johannesson M, Nosek B, Wagenmakers E, Berk R, Bollen K, Brembs B, Brown L, Camerer C, Cesarini D, Chambers C, Clyde M, Cook T, De Boeck P, Dienes Z, Dreber A, Easwaran K, Efferson C, Fehr E, Fidler F, Field A, Forster M, George E, Gonzalez R, Goodman S, Green E, Green D, Greenwald A, Hadfield J, Hedges L, Held L, Hua Ho T, Hoijtink H, Hruschka D, Imai K, Imbens G, Ioannidis J, Jeon M, Jones J, Kirchler M, Laibson D, List J, Little R, Lupia A, Machery E, Maxwell S, McCarthy M, Moore D, Morgan S, Munafó M, Nakagawa S, Nyhan B, Parker T, Pericchi L, Perugini M, Rouder J, Rousseau J, Savalei V, Schönbrodt F, Sellke T, Sinclair B, Tingley D, Van Zandt T, Vazire S, Watts D, Winship C, Wolpert R, Xie Y, Young C, Zinman J, Johnson V (2018) Redefine statistical significance. Nat Hum Behav 2(1):6–10

Berger J, Berry D (1988) Statistical analysis and the illusion of objectivity. Am Sci 76:159–165

Berger J, Delampady M (1987) Testing precise hypotheses. Stat Sci 2(3):317–352

Berger J, Sellke T (1987) Testing a point null hypothesis: the irreconcilability of \(p\) values and evidence. J Am Stat Assoc 82:112–122

Berger J, Wolpert R (1988) The Likelihood Principle, 2nd edn. Institute of Mathematical Statistics, Hayward, California

Berrar D (2017) Confidence curves: an alternative to null hypothesis significance testing for the comparison of classifiers. Mach Learn 106(6):911–949

Berrar D, Dubitzky W (2019) Should significance testing be abandoned in machine learning? Int J Data Sci Anal 7(4):247–257

Berrar D, Lozano J (2013) Significance tests or confidence intervals: which are preferable for the comparison of classifiers? J Exp Theor Artif Intell 25(2):189–206

Berrar D, Lopes P, Dubitzky W (2017) Caveats and pitfalls in crowdsourcing research: the case of soccer referee bias. Int J Data Sci Anal 4(2):143–151

Berry D (2017) A \(p\)-value to die for. J Am Stat Assoc 112:895–897

Birnbaum A (1961) A unified theory of estimation, I. Ann Math Stat 32:112–135

Carrasco J, García S, Rueda M, Das S, Herrera F (2020) Recent trends in the use of statistical tests for comparing swarm and evolutionary computing algorithms: practical guidelines and a critical review. Swarm Evol Comput 54:100665

Carver R (1978) The case against statistical significance testing. Harv Educ Rev 48(3):378–399

Christensen R (2005) Testing Fisher, Neyman, Pearson, and Bayes. Am Stat 59(2):121–126

Cockburn A, Dragicevic P, Besançon L, Gutwin C (2020) Threats of a replication crisis in empirical computer science. Commun ACM 63(8):70–79

Cohen J (1990) Things I have learned (so far). Am Psychol 45(12):1304–1312

Cohen J (1994) The earth is round (\(p <\) .05). Am Psychol 49(12):997–1003

Cole P (1979) The evolving case-control study. J Chronic Dis 32:15–27

Colquhoun D (2017) The reproducibility of research and the misinterpretation of \(p\)-values. R Soc Open Sci 4:171085

Cumming G (2012) Understanding the new statistics: effect sizes, confidence intervals, and meta-analysis. Routledge, Taylor & Francis Group, New York/London

Dau HA, Bagnall AJ, Kamgar K, Yeh CM, Zhu Y, Gharghabi S, Ratanamahatana CA, Keogh EJ (2019) The UCR time series archive. CoRR. arXiv:1810.07758

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Dietterich T (1998) Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput 10:31–36

Drummond C (2006) Machine learning as an experimental science, revisited. In: Proceedings of the 21st national conference on artificial intelligence: workshop on evaluation methods for machine learning. AAAI Press, pp 1–5

Drummond C, Japkowicz N (2010) Warning: statistical benchmarking is addictive. Kicking the habit in machine learning. J Exp Theor Artif Intell 2:67–80

Dua D, Graff C (2019) UCI machine learning repository. http://archive.ics.uci.edu/ml

Dudoit S, van der Laan M (2008) Multiple testing procedures with applications to genomics, 1st edn. Springer, New York

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32:675–701

García S, Herrera F (2008) An extension on statistical comparisons of classifiers over multiple data sets for all pairwise comparisons. J Mach Learn Res 9(89):2677–2694

Gelman A (2016) The problems with \(p\)-values are not just with \(p\)-values. The American Statistician, Online Discussion, pp 1–2

Gibson E (2020) The role of \(p\)-values in judging the strength of evidence and realistic replication expectations. Stat Biopharm Res 0(0):1–13

Gigerenzer G (1998) We need statistical thinking, not statistical rituals. Behav Brain Sci 21:199–200

Gigerenzer G (2004) Mindless statistics. J Socio-Econ 33:587–606

Gigerenzer G, Krauss S, Vitouch O (2004) The Null Ritual-What you always wanted to know about significance testing but were afraid to ask. In: Kaplan D (ed) The Sage handbook of quantitative methodology for the social sciences. Sage, Thousand Oaks, pp 391–408

Goodman S (1992) A comment on replication, \(p\)-values and evidence. Stat Med 11:875–879

Goodman S (1993) P values, hypothesis tests, and likelihood: implications for epidemiology of a neglected historical debate. Am J Epidemiol 137(5):485–496

Goodman S (1999) Toward evidence-based medical statistics 1: the P value fallacy. Ann Intern Med 130(12):995–1004

Goodman S (2008) A dirty dozen: twelve P-value misconceptions. Semin Hematol 45(3):135–140

Goodman S, Royall R (1988) Evidence and scientific research. Am J Public Health 78(12):1568–1574

Greenland S, Senn S, Rothman K, Carlin J, Poole C, Goodman S, Altman D (2016) Statistical tests, \(p\) values, confidence intervals, and power: a guide to misinterpretations. Eur J Epidemiol 31(4):337–350

Gundersen OE, Kjensmo S (2018) State of the art: reproducibility in artificial intelligence. In: McIlraith SA, Weinberger KQ (eds) Proceedings of the 32nd AAAI conference on artificial intelligence. AAAI Press, pp 1644–1651

Hagen R (1997) In praise of the null hypothesis significance test. Am Psychol 52(1):15–23

Hays W (1963) Statistics. Holt, Rinehart and Winston, New York

Hoekstra R, Morey R, Rouder J, Wagenmakers E-J (2014) Robust misinterpretation of confidence intervals. Psychon Bull Rev 21(5):1157–1164

Holm S (1979) A simple sequentially rejective multiple test procedure. Scand J Stat 6(2):65–70

Hubbard R (2004) Alphabet soup—blurring the distinctions between \(p\)’s and \(\alpha \)’s in psychological research. Theory Psychol 14(3):295–327

Hubbard R (2019) Will the ASA’s efforts to improve statistical practice be successful? Some evidence to the contrary. Am Stat 73(sup1: Statistical Inference in the 21st Century: A World Beyond \(p < 0.05\)):31–35

Hubbard R, Bayarri M (2003) P values are not error probabilities. Technical Report University of Valencia. http://www.uv.es/sestio/TechRep/tr14-03.pdf. Accessed 8 February 2021

Iman R, Davenport J (1980) Approximations of the critical region of the Friedman statistic. Commun Stat 9(6):571–595

Infanger D, Schmidt-Trucksäss A (2019) P value functions: an underused method to present research results and to promote quantitative reasoning. Stat Med 38(21):4189–4197

Isaksson A, Wallmana M, Göransson H, Gustafsson M (2008) Cross-validation and bootstrapping are unreliable in small sample classification. Pattern Recogn Lett 29(14):1960–1965

Japkowicz N, Shah M (2011) Evaluating learning algorithms: a classification perspective. Cambridge University Press, New York

Kass R, Raftery A (1995) Bayes factors. J Am Stat Assoc 90(430):773–795

Kruschke J (2010) Bayesian data analysis. WIREs Cogn Sci 1(5):658–676

Kruschke J (2013) Bayesian estimation supersedes the \(t\) test. J Exp Psychol Gen 142(2):573–603

Kruschke J (2015) Doing Bayesian data analysis, 2nd edn. Elsevier Academic Press, Amsterdam. http://doingbayesiandataanalysis.blogspot.com/

Kruschke J (2018) Rejecting or accepting parameter values in Bayesian estimation. Adv Methods Pract Psychol Sci 1(2):270–280

Kruschke J, Liddell T (2018) Bayesian data analysis for newcomers. Psychon Bull Rev 25:155–177

Lakens D (2021) The practical alternative to the \(p\) value is the correctly used \(p\) value. Perspect Psychol Sci 16(3):639–648

Lindley D (1957) A statistical paradox. Biometrika 44:187–192

McNemar Q (1947) Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 12:153–157

McShane BB, Gal D, Gelman A, Robert C, Tackett JL (2019) Abandon statistical significance. Am Stat 73(sup1: Statistical Inference in the 21st Century: A World Beyond \(p < 0.05\)):235–245

Meehl P (1967) Theory-testing in psychology and physics: a methodological paradox. Philos Sci 34(2):103–115

Miller J, Ulrich R (2014) Interpreting confidence intervals: a comment on Hoekstra, Morey, and Wagenmakers (2014). Psychon Bull Rev 23(1):124–130

Mulaik S, Raju N, R.A H (2016) There is a time and a place for significance testing. In: Harlow L, Mulaik S, Steiger J (eds) What if there were no significance tests? Routledge Classic Editions

Nadeau C, Bengio Y (2003) Inference for the generalization error. Mach Learn 52:239–281

Nosek B, Ebersole C, DeHaven A, Mellor D (2018) The preregistration revolution. Proc Natl Acad Sci USA 115(11):2600–2606

Nuzzo R (2014) Statistical errors. Nature 506:150–152

Perneger T (1998) What’s wrong with Bonferroni adjustments. BMJ 316:1236–1238

Poole C (1987) Beyond the confidence interval. Am J Public Health 2(77):195–199

Raschka S (2018) Model evaluation, model selection, and algorithm selection in machine learning. CoRR. arXiv:1811.12808

Rothman K (1990) No adjustments are needed for multiple comparisons. Epidemiology 1(1):43–46

Rothman K (1998) Writing for epidemiology. Epidemiology 9(3):333–337

Rothman K, Greenland S, Lash T (2008) Modern epidemiology, 3rd edn. Wolters Kluwer

Rozeboom W (1960) The fallacy of the null hypothesis significance test. Psychol Bull 57:416–428

Salzberg S (1997) On comparing classifiers: pitfalls to avoid and a recommended approach. Data Min Knowl Disc 1:317–327

Schmidt F (1996) Statistical significance testing and cumulative knowledge in psychology: implications for training of researchers. Psychol Methods 1(2):115–129

Schmidt F, Hunter J (2016) Eight common but false objections to the discontinuation of significance testing in the analysis of research data. In: Harlow L, Mulaik S, Steiger J (eds) What if there were no significance tests? Routledge, pp 35–60

Schneider J (2015) Null hypothesis significance tests: a mix-up of two different theories-the basis for widespread confusion and numerous misinterpretations. Scientometrics 102(1):411–432

Sellke T, Bayarri M, Berger J (2001) Calibration of \(p\) values for testing precise null hypotheses. Am Stat 55(1):62–71

Serlin R, Lapsley D (1985) Rationality in psychological research: the good-enough principle. Am Psychol 40(1):73–83

Sheskin D (2007) Handbook of parametric and nonparametric statistical procedures, 4th edn. Chapman and Hall, CRC

Simon R (1989) Optimal two-stage designs for stage II clinical trials. Control Clin Trials 10:1–10

Stang A, Poole C, Kuss O (2010) The ongoing tyranny of statistical significance testing in biomedical research. Eur J Epidemiol 25:225–230

Tukey J (1991) The philosophy of multiple comparisons. Stat Sci 6(1):100–116

Vovk V (1993) A logic of probability, with application to the foundations of statistics. J Roy Stat Soc B 55:317–351

Wagenmakers E-J (2007) A practical solution to the pervasive problems of \(p\) values. Psychon Bull Rev 14(5):779–804

Wagenmakers E-J, Ly A (2021) History and nature of the Jeffreys–Lindley Paradox. https://arxiv.org/abs/2111.10191

Wagenmakers E-J, Gronau Q, Vandekerckhove J (2019) Five Bayesian intuitions for the stopping rule principle. PsyArXiv 1–13. https://doi.org/10.31234/osf.io/5ntkd

Wasserstein R, Lazar N (2016) The ASA’s statement on \(p\)-values: context, process, and purpose (editorial). Am Stat 70(2):129–133

Wasserstein R, Schirm A, Lazar N (2019) Moving to a world beyond “\(p < 0.05\)". Am Stat 73(sup1: Statistical Inference in the 21st Century: A World Beyond \(p < 0.05\)):1–19

Wilcoxon F (1945) Individual comparisons by ranking methods. Biom Bull 1(6):80–83

Wolpert D (1996) The lack of a priori distinctions between learning algorithms. Neural Comput 8(7):1341–1390

Acknowledgements

I am very grateful to James O. Berger for our discussion of the p-value under optional stopping. I also thank the three reviewers and the editor very much for their constructive comments.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editor: Johannes Fürnkranz.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Bayes factor bound

Appendix: Bayes factor bound

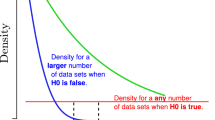

Under the null hypothesis, the p-value is known to be a random variable that is uniformly distributed over [0, 1]. Under the alternative hypothesis \(H_1\), the p-value has density \(f(p\,|\,\xi )\), with \(\xi \) being an unknown parameter (Sellke et al. 2001; Benjamin and Berger 2019),

In significance testing, larger absolute values of the test statistic (cf. Eq. 1) are taken as casting more doubt on the null hypothesis and thereby providing more evidence in favor of the alternative hypothesis. Under \(H_1\), the density should therefore be decreasing for increasing values of p. Sellke et al. (2001) propose the class of Beta(\(\xi ,1\)) densities, with \(0 < \xi \le 1\), for the distribution of the p-value,

For \(\xi = 1\), we obtain the uniform distribution under the null hypothesis, \(f(p\,|\,\xi = 1) = 1\). For a given prior density \(\pi (\xi )\) under the alternative hypothesis, the odds of \(H_1\) to \(H_0\) (i.e., the Bayes factor) are

The upper bound of \(\mathrm {BF}_{\pi }(p)\) is

Thus,

Hence,

The second derivative w.r.t. \(\xi \) is

and for \(\xi = - \frac{1}{\ln (p)}\),

Thus, \([-\ln (p)\,e\,p]^{-1}\) is an upper bound on the odds of the alternative to the null hypothesis for \(p \le e^{-1}\), and this bound holds for any reasonable prior distribution on \(\xi \) (Sellke et al. 2001).

Rights and permissions

About this article

Cite this article

Berrar, D. Using p-values for the comparison of classifiers: pitfalls and alternatives. Data Min Knowl Disc 36, 1102–1139 (2022). https://doi.org/10.1007/s10618-022-00828-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-022-00828-1