Abstract

Contagion arising from clustering of multiple time series like those in the stock market indicators can further complicate the nature of volatility, rendering a parametric test (relying on asymptotic distribution) to suffer from issues on size and power. We propose a test on volatility based on the bootstrap method for multiple time series, intended to account for possible presence of contagion effect. While the test is fairly robust to distributional assumptions, it depends on the nature of volatility. The test is correctly sized even in cases where the time series are almost nonstationary (i.e., autocorrelation coefficient \(\approx 1\)). The test is also powerful specially when the time series are stationary in mean and that volatility are contained only in fewer clusters. We illustrate the method in global stock prices data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

With increasing storage space availability, real-time stock prices can now be recorded, including short-run spikes triggered by random shocks, e.g., news on a revamp of the management of a company, policy adjustment. This may not be an issue if stock prices are recorded on lower frequencies (e.g., weekly or monthly) since there will be a natural smoothing of irregular movements resulting from aggregation or averaging from higher to lower frequencies. As a result, low frequency measurements can lead to losses in valuable information about prices hence, these are typically recorded at the real-time level or at least at some intra-day levels. This could trigger high frequency time series data to manifest such shocks as conditional heteroskedasticity (volatility). Furthermore, individual securities may behave independently, but contagion within a sector, a market, a region, or even globally can force a group or all securities to exhibit similar price movement patterns.

Public information shared among the players in the financial market along with the impact of regulatory agencies may drive behavior of securities in the market. Different stakeholder may utilize the public information differently from others, resulting to a stylized behavior of stock prices. It is then imperative for both the regulatory agencies and stock market players to assess the spillover effects of certain news in a company or on the sector as whole as certain policy options or investment strategies can be developed depending on how the market reacts to this information. Given high frequency time series data, this phenomenon is viewed as volatility in clustered multiple time series data, i.e., while each time series (e.g., prices of different securities) behave independently, the events associated with public information available drives the time series into different paths, but a common pattern of the movement is taken as the common volatility pattern among multiple time series data.

Modeling procedures are available for multiple and multivariate time series data, but these are anchored on distributional assumptions about the random shocks and are greatly affected by irregularities or stylized facts about the data like volatility. Volatility causes perturbation in the dynamic behavior of the process, resulting to more complex data generating process causing difficulty in estimation and produces chaotic forecasts. Volatility has the potential to divert forecasts away from the direction of the time series even after the effect of localized perturbation vanished.

The paper is organized as follows: Sect. 2 summarizes previous literature on multiple time series and volatility; Sect. 3 presents the estimation algorithm and the proposed test for volatility in clustered time series; Sect. 4 discusses results of simulation studies to illustrate the size and power of the proposed test; Sect. 5 presents the application of the test to actual data; Sect. 6 summarizes the conclusions.

2 Multiple Time Series, Volatility and Contagion

Arellano and Bond (1991) considered multiple time series data as panel data whose common autoregressive parameter and random effect of individual time series were estimated using generalized method of moments (GMM). Although the method usually fails to converge when the length of time series is larger than the number of time series in the panel, Veron Cruz and Barrios (2014) proposed an estimation procedure that incorporates maximum likelihood estimation (MLE) and best linear unbiased predictors (BLUP) into the backfitting algorithm. Veron Cruz and Barrios (2014) noted that the advantages of the method are affected by the variance of the error term (possibly by heteroskedasticity), and to address this problem, Ramos et al (2016) proposed an estimation procedure that is robust to conditional heteroskedasticity of the multiple time series. Even in the presence of volatility, Ramos et al (2016) observed improvement in parameter estimates as well as the predictive ability of the fitted model. This is however still affected by localized non-stationarity induced by the block bootstrap method even if there is really no global heteroskedasticity in the time series. Veron Cruz and Barrios (2014) and Ramos et al (2016) are the basis for the postulated multiple time series presented in Sect. 3.

Volatility in time series has been typically assessed by incorporating models for conditional heteroscedasticity into the model structure, e.g., autoregressive conditional heteroskedastic (ARCH) model Engle (1982), generalized autoregressive conditional heteroskedastic (GARCH), Bollerslev (1986). Other volatility models which address issues regarding ARCH- and GARCH-like violation of the non-negative constraints for the variances are also proposed, e.g., exponential generalized autoregressive conditional heteroskedastic model (EGARCH), Nelson (1991). But these more general models also encounter issues such as in estimation (due to complex likelihood functions) and in forecasting (since volatility drive forecast errors to explode). While volatility can be generalized to any of these models, ARCH (1) was used in Sect. 3. The algorithm presented in Sect. 3 can be modified minimally to consider a more general volatility model.

The literature on multiple time series and volatility (separately) has been extensive, but a test for the presence of volatility in multiple time series is still open. This viewpoint of volatility in a clustered setting can help explain the dynamics of contagion in phenomena governed by certain policies or regulations like the financial markets.

Contagion is a phenomenon that is closely intertwined into multiple time series and volatility. Contagion in multiple time series results from availability of high frequency data (e.g., intra-day) since latent volatility characteristics can actually be inferred from realized volatility (see for example McAleen and Medeiros, 2008). Financial contagion representing the spillover effect of shocks that fuels systemic risk in an economy. Vodenska and Becker (2019) emphasized the need to understand the network structure of global financial markets, this will provide the stakeholders some insights on the repercussions of shock into the economy and subsequent mitigation strategies for the risks can be identified. Extreme events (tail risk) contagion in the financial markets as a result of the COVID-19 pandemic was studied by Guo et al. (2021) using some indices, these are then used in characterizing the contagion channels in the financial markets.

3 Test for Volatility in Multiple Time Series

Knowledge on whether volatility is present or not in the time series offers an opportunity to better understand the dynamic behavior of the data, thus facilitating modeling and forecasting. Campano and Barrios (2011) proposed a robust estimation procedure for time series data that exhibit structural change. Furthermore, Campano (2012) proposed a test for volatility in a time series data. Predictive ability of estimated models during tranquil period can be enhanced resulting from robust estimation of the model, noted Campano and Barrios (2011). The aim of this paper is to develop a nonparametric test of volatility in a possibly clustered multiple time series data. Clustering in multiple time series occurs from the simultaneous jumps (co-jumps) in prices associated with major news affecting a particular sector, see for example Caporin et al. (2017).

Given \(N\) time series each with \(T\) observations, Veron Cruz and Barrios (2014) considered the following model:

for \(i=\mathrm{1,2},\dots ,N\) and \(t=\mathrm{1,2},\dots ,T\). In a telecommunication setting, \({Y}_{i,t}\) can be the data usage of the \({i}^{th}\) customer for time t, and \({\lambda }_{i}\) can be the person-specific variability in the data usage while \({u}_{i,t}\) can represent the random shock that exhibit similar distributional properties among all subscribers over time. Suppose that the N time series is grouped into the m clusters, each with \({n}_{j}\) elements, \(N={n}_{1}+\dots +{n}_{m}\). Model (1) is modified to account for clustered conditional heteroscedasticity in the error term \({u}_{i,t}\) as follows:

where \({\sigma }_{kt}^{2}\) accounts for conditional heteroscedasticity present in cluster \(k\), \(k=1,\dots ,m\) and \({v}_{t}\) is a white noise process. This implies that the time series within each cluster exhibit similar volatility behavior, and volatility models may possibly vary across different clusters.

Now, assume that the volatility model for each cluster is ARCH(1), and that the time series within the cluster \(\left({n}_{k}\right)\) share the same set of parameters. Also, suppose the common dependence structure of the series is captured by the same parameter \(\phi \) in the dynamic model while the series-specific variation is expressed through the random effects (\({\lambda }_{i}\)) for each time series. Clearly, there is a need to estimate the parameters shared globally across all the time series, the parameters of the volatility model shared within the cluster, and series-specific random effects.

3.1 Estimation Phase

Here, model (2) is estimated in an iterative algorithm based on the backfitting framework. The backfitting algorithm estimates each component sequentially through the partial residuals obtained whenever a component has been estimated. Even though initially ignoring some components introduces bias in the estimates, such bias decreases in the iteration of implementing the algorithm. For model (2), we assume three components to be estimated separately, namely, the common autoregressive parameter \(\phi \), the random effects \({\lambda }_{i}\) and the ARCH(1) parameters per cluster. The initial estimates for \({\lambda }_{i}\) are obtained by ignoring the autoregressive and error terms from the model. On the other hand, the parameter \(\phi \) is initialized by fitting the residuals after removing the first estimated component (\({\widehat{\lambda }}_{i}\)). Meanwhile, the volatility parameters are initialized with the residuals obtained after removing the first and second estimated components (\({\widehat{\lambda }}_{i}\) and \(\widehat{\phi }\)). Specifically, for the \({b}^{th}\) iteration:

-

1.

Given previous estimates \({\widehat{\phi }}^{(b-1)}\) and computed residuals \({r}_{i,t}^{**\left(b-1\right)}={Y}_{i,t}-{\widehat{\phi }}^{\left(b-1\right)}{Y}_{i,t-1}\), estimate the series-specific random effects from the residuals \({r}_{i,t}^{**\left(b-1\right)}\) using the BLUP method, i.e., \({\widehat{\lambda }}_{i}^{(b)}={\widehat{\mu }}_{i}\) since \(E\left({\lambda }_{i}\right)={\mu }_{i}\).

-

2.

Compute new residuals:\({r}_{i,t}^{*\left(b\right)}={Y}_{i,t}-{\widehat{\lambda }}_{i}^{(b)}\).

Rescaling of residuals \({r}_{it}^{*\left(b\right)}\) by the estimated volatility component \({\widehat{\sigma }}_{it}^{2}\) is not necessary since the backfitting algorithm is fairly optimal with additivity of the model, see for example Opsomer (2000).

-

3.

Estimate \(\phi \) by \({\widehat{\phi }}^{(b)}\) from the following bootstrap method sub-steps:

-

a.

For each of the N time series of residuals \({r}_{it}^{*\left(b\right)}\), estimate \(\phi \) as the autoregressive parameter and intercept of the residuals using conditional least squares to obtain \({\widehat{\phi }}_{i}\).

-

b.

Resample from \({\widehat{\phi }}_{i}, i=1,\dots ,N\), to obtain \({\widehat{\phi }}^{(b)}= {\widehat{\phi }}^{BS(b)}\) (simple random sample with replacement of size \(N\), for \(R\) replicates). This is an ordinary bootstrap since each time series \(i\) provided one estimate for the autoregressive parameter \(\left({\widehat{\phi }}_{i}\right)\).

-

a.

-

4.

Compute two forms of new residuals:

$$ Y_{i,t} - \hat{\phi }^{\left( b \right)} Y_{i,t - 1} \;\;{\text{and}}\;\;r_{it}^{***\left( b \right)} = Y_{i,t} - \hat{\lambda }_{i}^{\left( b \right)} - \hat{\phi }^{\left( b \right)} Y_{i,t - 1} $$The first form of residuals \({r}_{it}^{**\left(b\right)}\) will be used to estimate the series-specific effects \({\lambda }_{i}\) in the next iteration while the second form of residuals \({r}_{it}^{***\left(b\right)}\) will be used to estimate the volatility model. Note that the bootstrap intercept is also subtracted from the original observations \({Y}_{i,t}\) in the second form of residuals \({r}_{i,t}^{***\left(b\right)}\). Presence of volatility in the model affects the level of the residuals, and by subtracting the bootstrap intercept, stabilization in the levels of the random component is achieved.

-

5.

For each time point \((t)\), the second form of residuals \({r}_{i,t}^{***(b)}\) mimics the behavior of the random shocks \({u}_{i,t}\). Thus, we use the square of these residuals, \({\widehat{\sigma }}_{i,t}^{2}= {\widehat{u}}_{i,t}^{2}={\left({r}_{i,t}^{***(b)}\right)}^{2}\), as an unbiased estimator of the heteroscedastic variance \({\sigma }_{i,t}^{2}\) and the squared shocks \({u}_{i,t}^{2}\). Estimate the variance model, e.g., \(\left({\sigma }_{i,t}^{2}\right)={\alpha }_{k,0}+{\alpha }_{k,1}{u}_{i,t-1}^{2}\) (for ARCH (1)) using \(\left({\widehat{u}}_{i,t}^{2}, {\widehat{u}}_{i,t-1}^{2}\right)\) through ordinary least squares (OLS) estimation to obtain \({\widehat{\alpha }}_{0i}^{(b)}\) and \({\widehat{\alpha }}_{1i}^{(b)}\) for each series.

-

6.

For cluster \(k\), estimate the volatility parameters \({\alpha }_{k,0}\) by \({\widehat{\alpha }}_{k,0}^{(b)}\) (the mean of \({\widehat{\alpha }}_{0i}^{(b)}\), \(i = 1,\dots , {n}_{k}\)) and \({\alpha }_{k,1}\) by \({\widehat{\alpha }}_{k,1}^{(b)}\) (the mean of \({\widehat{\alpha }}_{1i}^{(b)}\) \(i = 1,\dots , {n}_{k}\)). Here, \({\widehat{\alpha }}_{0i}^{(0)}\) and \({\widehat{\alpha }}_{1i}^{(b)}\) are the individual ARCH (1) parameter estimates of \({i}^{th}\) time series. This implies that different ARCH(1) parameters are estimated for each cluster.

Then, we iterate from Step 1 until convergence, e.g., when parameter changes in-between iteration by less than the tolerance level \(\varepsilon \).

3.2 Testing for Volatility

Given parameter estimates from the Estimation Phase,

-

1.

Reconstruct variance components for each resample through

$$ \left( {\hat{\sigma }_{i,t}^{2} } \right) = \hat{\alpha }_{k,0} + \hat{\alpha }_{k,1} \hat{u}_{i,t - 1}^{2} . $$ -

2.

Generate \({u}_{i,t}^{*}\) from \(N\left(0,{\widehat{\sigma }}_{i,t}^{2}\right)\).

-

3.

Compute replicates of \({Y}_{it}\) as \({Y}_{i,t}^{*}=\widehat{\phi }{Y}_{i,t-1}^{*}+{\widehat{\lambda }}_{i}+{u}_{i,t}^{*}\)

-

4.

Estimate parameters from each replicate of the data using the Estimation Phase presented above.

The proposed algorithm is based on the sieve bootstrap, see for example, Buhlmann (1997). The algorithm derives the empirical distribution of the parameters in the model through a sieve bootstrap method, the distribution is then used in making decisions pertaining to the hypothesis being tested.

Multiple clusters are tested simultaneously. To control the familywise error rate (FWER), size \(\alpha \) of the test is adjusted to \(\alpha /m\) (Bonferroni correction) where \(m\) is the number of clusters, see for example, Wright (1992). Given the bootstrap replicates, \({\left(\frac{\alpha }{2m}\right)}^{th}\) and \({\left(1-\frac{\alpha }{2m}\right)}^{th}\) percentiles of \({\widehat{\alpha }}_{k,1}\) s is computed and are used to test the significance of the parameter estimate for each cluster. Non-inclusion of zero in the interval provides enough empirical evidence against the null hypothesis (i.e., no significant volatility) while inclusion of zero indicates no evidence against the null hypothesis. For the variance model, \({\alpha }_{k,1}=0\) indicates no volatility (assuming ARCH (1) model). Hence, the test is equivalent to the null which is absence of volatility of specific model, e.g., ARCH (1) again the alternative that volatility of specific model exists.

The method discussed above assumes that clusters are identified. Existence of clusters (number of clusters and membership of time series to a cluster) can be postulated by the analyst, e.g., stocks that are more likely involved in a possible contagion. Alternatively, number and cluster membership can be determined statistically through time series clustering, see for example, Aghabozorgi et al (2015).

4 Simulation Study

We designed a simulation study to investigate the computational optimality of the test. Some conditions about the data generating process are controlled, and this includes: number of time series (N = 50), length of each time series (T = 50); autoregressive parameter (\(\phi \)=0.6, 0.95 to represent stationary and near nonstationary time series, respectively); mean of random effect (\({\mu }_{i}=0\)); constant standard deviation of random effect across all time series; number of clusters (1 or 5, absence or presence of clustering, respectively); ARCH parameters [\(\left({\alpha }_{k0}=1, {\alpha }_{k1}=1\right)\)-presence of volatility, \(\left({\alpha }_{k0}=1, {\alpha }_{k1}=0\right)\)-absence of volatility]; and when there are 5 clusters, 1 or 3 of the clusters are set to exhibit an ARCH(1) type of volatility. In all cases, level of significance is set at \(\left(\alpha =0.05\right).\)

The data was simulated with Eq. (2) as the data generating process. Random variables are first generated from the corresponding distribution. The white noise process \({v}_{t}\) was generated from the standard normal distribution. After initialization of the time series, repetitive substitution of previous values, assumed parameters, current and past values of random components to Eq. (2) is done until 2 T time points are generated. The first half of the simulated time series are dropped as this might have been influenced by initial values.

The nonparametric test is compared to a parametric test based on ARCH (1) model where each time series is treated in a univariate context. The parametric test for volatility is based on the likelihood ratio test, see for example, Engle (1982). The goal of the comparison is to assess whether knowledge of clustering can contribute in detecting group volatility. Power and size comparisons between parametric and nonparametric tests for various scenarios are summarized in Table 1.

4.1 Single Cluster, No Volatility

If all time series forms a single cluster, the nonparametric test is correctly-sized regardless on whether the time series are stationary (in mean) or nearly non-stationary. The parametric test is also correctly-sized when the time series is stationary in mean. However, size of the parametric test is distorted when the time series approaches nonstationarity in mean. This is not the case in the nonparametric test since all replicates under near nonstationarity failed to reject the null hypothesis of no volatility.

4.2 Single Cluster, Volatility (ARCH) is Present

ARCH-type volatility model is induced to the simulated time series in cases where there is only a single cluster. The nonparametric test that considers all time series to provide evidence against the null hypothesis of no volatility yield very high power compared to the parametric counterpart that considers each time series individually, regardless of the state of stationarity in mean. In cases where the time series are stationary in mean, the nonparametric test was able to provide evidence against the null hypothesis for all replicates of the simulated data, while very low power was observed in the parametric test. As the time series approaches nonstationarity, both the nonparametric and parametric tests suffer a decline in power, but the decline in power of the parametric test is much larger than the decline in power of the nonparametric test (still exhibiting a reasonable power).

4.3 5 Clusters, Volatility (ARCH) is Present in 1 Cluster

Assuming 5 clusters, without inducing volatility in the simulated time series, both parametric and nonparametric test are correctly-sized. However, when the time series approaches nonstationarity, the parametric test already suffers from size distortion since the procedure relies heavily on the stationarity in mean assumption. This is not the case for the nonparametric test that is still correctly-sized even if the time series approaches near-nonstationarity. When volatility is induced in simulated time series in one cluster (time series in four other clusters do not contain volatility), the nonparametric test exhibit over 20% advantage in power compared to the parametric test in time series that are stationary in mean. When the time series approaches nonstationarity, both the parametric and nonparametric tests have lower power, the nonparametric test though still have relative advantage over the parametric test.

4.4 5 Clusters, Volatility (ARCH) is Present in 3 of the Clusters

Both the parametric and nonparametric tests are consistently correctly-sized when all the time series in 5 clusters exhibit stationarity in mean. The parametric test however, exhibit distortion in size when the time series in all clusters approaches nonstationarity in mean, this is not the case for the nonparametric test which is still correctly sized even when the time series approaches nonstationarity. As volatility is induced in three of the five clusters, the nonparametric test still has over 20% advantage in terms of power over the parametric test. Power of both parametric and nonparametric tests suffer as the time series across all clusters approaches nonstationarity.

4.5 Misclassified Time Series

To verify robustness of the test to possible misclassification of time series into a cluster, a cluster of 50 time series with volatility is deliberately contaminated with some time series that does not exhibit volatility. Furthermore, similar cluster of 50 time series without volatility contaminated with some time series that actually exhibit volatility.

With 50 time series simulated to exhibit volatility, one time series (2%) or five (10%) time series that does not exhibit volatility were included. Provided that the time series are stationary (autoregressive parameter of 0.60), the test is able to identify volatility for all replicates. Relatively lower power (80%) is obtained when autoregressive parameter is 0.95.

The test is still able to detect even with only one (2%) or five (10%) time series with volatility are induced in a cluster of 50 time series. The chance of detecting volatility increases with more time series that actually exhibit volatilities in a cluster. Thus, regardless of the actual number of time series that exhibits volatility, the test is capable of detection of such. See Table 2 for details.

5 Application in Stock Market Price Indices

Contagion is a common event in stock markets usually resulting from interdependence among securities and among stock brokers. Volatility is another stylized fact among indicators that characterizes behavior of the market, often monitored at very high frequencies by various stakeholders. Lyocsa and Horvath (2018) noted that there is evidence of contagion from the US stock market to Japan, United Kingdom, France, Germany, Hong Kong, and Canada. They further noted that contagion is not just a crisis-specific event, but is present in the market all the time. Dewandaru et al. (2018) further observed that during the major crisis in European equity markets, contagion effects generated short-term shocks, also noted that there is evidence that the most recent US subprime crisis is brought about by contagion effect. These short-term shocks can easily drive volatility of key market indicators like prices.

We used prices of stocks traded in the European and US markets to investigate presence of volatility associated with contagion effect. Regional contagion can cause volatility among stock market prices in the region. In understanding the dynamic behavior of stock prices, time series data of prices of 30 stocks are postulated to cluster into European (19 stocks) and US (11 stocks) regions. Daily prices during 2011–2016 period are used in the analysis. The European and US markets and the period 2011–2016 were selected because of the contagion reported in the literature in these regions within the period. All stocks prices with available data from Yahoo Finance (https://finance.yahoo.com/quote/DATA.L/history?p=DATA.L) are include for the illustration discussed in this section.

5.1 Original Time Series Data

Six of nineteen European stocks are plotted in Fig. 1, while six of the eleven stocks in the US market are plotted in Fig. 2. While there are some periods where volatility seems to exists, this can potentially be masked by overall nonstationarity. From Table 3, The original time series data both from the European and US markets exhibit nonstationarity, most of the estimated autoregressive parameters are 0.99 or 0.98, smallest value was in a stock in the US market where autoregressive parameter is 0. 937.With the original time series data, nonstationarity in mean is dominating, so that the parametric test for volatility failed to reject the null hypothesis of no volatility for all time series, see Table 3 for details.

Using the estimation procedure for clustered time series data described in Sect. 3, parameters of the mean and variance models are estimated per cluster and presented in Table 4. In the multiple time series framework, we assumed similar model for the mean of the time series. The common autoregressive parameter is estimated at 0.9863, which is within the values of estimated autoregressive parameters (univariate) for the individual time series in Table 3.

The Bonferroni corrected CI for the European market do not include 0, indicating that as a cluster, the European market exhibits volatility. Note that consistent with results of simulation studies, the parametric test failed to provide empirical evidence on the existence of volatility, while the nonparametric test was able to recognize empirical evidence of joint volatility (possibly caused by contagion) among the stocks in the European market. The Bonferroni corrected CI for the US market includes 0, hence, even the nonparametric test failed to recognize empirical evidence of the existence of group volatility among the stocks in the US market. Power of the nonparametric test diminish when the time series are nearly nonstationary.

5.2 First Differenced Time Series

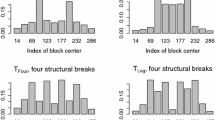

The parametric test for volatility suffers from size distortion when the time series approaches nonstationarity, which is not the case in the nonparametric test. Also, power is reduced even in the nonparametric test as the time series approaches nonstationarity, but with greater reduction in power for the parametric test. First differences of the time series are obtained to mitigate presence of nonstationarity. Time plots of six stocks in the European market are given in Fig. 3 and the time plots of six stocks in the US market are given in Fig. 4. Both clusters now exhibit stationary behavior and volatility has become more visually evident.

Univariate analysis was done with the individual (first-differenced) time series, estimates and results of parametric tests for volatility are summarized in Table 5. All first differenced time series are now stationary. In fact, many of the time series are actually random walk since no dependence structure is evident from the first differenced time series. Only four stocks in the European market and two stocks in the US market still exhibit dependencies after first differencing. The parametric test for volatility identifies only one time series in the European and one in the US market to exhibit volatility.

We also used the estimation procedure for clustered data described in Sect. 3 for the first differenced time series. Parameters of the mean and variance models are estimated per cluster and presented in Table 6. From the multiple time series assumption, the common autoregressive parameter is estimated to be − 0.0809, within the range of values of the autoregressive coefficients from the univariate analysis in Table 5.

From Table 6, the Bonferroni corrected CI for the European market do not include 0, indicating that as a cluster, the European market exhibits volatility. Similar is true for the US market, the Bonferroni corrected CI also precludes zero, indicating presence of volatility among the clustered time series. Recall that the simulation study indicates higher power for the nonparametric test when the individual time series are stationary. While the parametric test for volatility in Table 5 identifies only one time series to exhibit volatility, the nonparametric test in Table 6 provides empirical evidence that clustered volatility is present in both the European and US markets.

6 Conclusions

Given clustered time series data, a nonparametric test for volatility is proposed, this accounts for the possible contagion effect among time series in the same cluster. The simulation study illustrate that the test is correctly-sized even when the multiple time series approaches nonstationarity. The test is powerful if volatility is contained in fewer clusters only, a resemblance of localized contagion effect. As contagion causing volatility become global in nature, i.e., as more clusters are affected by volatility, even the nonparametric test exhibits low power. Note however that widespread volatility, i.e., practically all time series manifest volatility behavior, is also the case where volatility often becomes more obvious even visually. The nonparametric test offers a method of testing volatility in multiple time series that exhibit clustering, and that volatility spillover is contained only in few clusters. In the presence of contagion, whether local or global, the nonparametric test can benefit from the simultaneous evidence that all time series can provide against absence of volatility. A clear understanding of presence of volatility will facilitate identification and estimation of models that can generate reliable forecast of indicators involved, hence, better risk management in sectors that manifest such volatile behavior like the financial markets.

A more general abstraction of volatility in clustered multiple time exhibiting a generalized behavior can further enhance tools that could better understand features of some complicated phenomenon.

Code Availability

References

Aghabozorgi, S., Shirkhorshidi, A., & Wah, T. (2015). Time-series clustering-a decade review. Information Systems, 53, 16–38.

Arellano, M., & Bond, S. (1991). Some tests of specification for panel data: Monte Carlo evidence and an application to employment equations. The Review of Economic Studies, 58(2), 277–297.

Bollerslev, T. (1986). Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics, 31(3), 307–327.

Buhlmann, P. (1997). Sieve bootstrap for time series. Bernoulli, 3(2), 123–148.

Campano, W. (2012). Robust methods in time series models with volatility. The Philippine Statistician, 61(2), 83–101.

Campano, W., & Barrios, E. (2011). Robust estimation of a time series model with structural change. Journal of Statistical Computation and Simulation, 81(7), 909–927.

Caporin, M., Kolokolov, A., & Reno, R. (2017). Systemic co-jumps. Journal of Financial Economics, 126, 563–591.

Dewandaru, G., Masih, R., & Masih, M. (2018). Unraveling the financial contagion in European stock markets during financial crises: Multi-timescale analysis. Emerging Markets Finance & Trade, 54, 859–880.

Engle, R. F. (1982). Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom Inflation. Econometrica, 50(4), 987–1008.

Guo, Y., Li, P., & Li, A. (2021). Tail risk contagion between international financial markets during COVID-19 pandemic. International Review of Financial Markets, 73, 101649.

Lyocsa, S., & Horvath, R. (2018). Stock market contagion: A new approach. Open Economic Review, 29, 547–577.

McAleen, M., & Medeiros, M. (2008). Realized volatility: A review. Econometric Reviews, 27(1–3), 10–45.

Nelson, D. (1991). Conditional heteroskedasticity in asset returns: A new approach. Econometrica, 59(2), 347–370.

Opsomer, J. (2000). Asymptotic properties of backfitting estimators. Journal of Multivariate Analysis, 73, 166–179.

Ramos, M., Barrios, E. B., & Lansangan, J. G. (2016). Estimation of multiple time series with volatility. School of Statistics Working Paper Series 2016, 3 (October 2016), p. 13.

Veron Cruz, R. K., & Barrios, E. B. (2014). Estimation procedure for a multiple time series model. Communications in Statistics - Simulation and Computation, 43(10), 2415–2431.

Vodenska, I., & Becker, A. (2019). Interdependence, vulnerability and contagion in financial and economic networks. In A. Abergel, B. Chakraborti, A. Chakraborti, N. Deo, & K. Sharma (Eds.), New Perspectives and Challenges in Econophysics and Sociophysics. Switzerland: Springer Nature.

Wright, S. (1992). Adjusted p-values for Simultaneous Inference. Biometrics, 48, 1005–1013.

Acknowledgement

The first version of this paper was presented during the 4th African International Conference on Statistics.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

This research has no potential conflict of interest and did not involve human participants and/or animal in the course of its implementation.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barrios, E.B., Redondo, P.V.T. Nonparametric Test for Volatility in Clustered Multiple Time Series. Comput Econ 63, 861–876 (2024). https://doi.org/10.1007/s10614-023-10362-x

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10614-023-10362-x