Abstract

Shape optimization methods have proven useful for identifying interfaces in models governed by partial differential equations. Here we consider a class of shape optimization problems constrained by nonlocal equations which involve interface–dependent kernels. We derive a novel shape derivative associated with the nonlocal system model and solve the problem with established numerical techniques. The code for obtaining the results in this paper is published at (https://github.com/schustermatthias/nlshape).


1 Introduction

Many physical relations and data-driven dependencies cannot be satisfactorily described by classical differential equations. Often they inherently possess features that are not purely local. In this regard, mathematical models governed by nonlocal operators enrich our modeling spectrum and present useful alternatives as well as supplemental approaches. That is why they appear in a large variety of applications including, among others, anomalous or fractional diffusion [10, 11, 19], peridynamics [25, 27, 54, 64], image processing [31, 38, 42], cardiology [14], machine learning [44], as well as finance and jump processes [5, 6, 26, 37, 59]. Nonlocal operators are integral operators allowing for interactions between two distinct points in space. The nonlocal models investigated in this paper involve kernels that are not necessarily symmetric and which are assumed to have a finite range of nonlocal interactions; see, e.g., [23, 24, 26, 60] and the references therein.

Not only the nonlocal problem itself but also various optimization problems involving nonlocal models of this type are treated in the literature. For example, matching-type problems are treated in [18, 20, 21] to identify system parameters such as the forcing term or a scalar diffusion parameter. The control variable is typically modeled as an element of a suitable function space. Moreover, nonlocal interface problems have become popular in recent years [13, 17, 29, 32, 43]. However, shape optimization techniques applied to nonlocal models can hardly be found in the literature. For instance, the articles [9, 41, 55] deal with minimizing (functions of) eigenvalues of the fractional Laplacian with respect to the domain of interest. Also, in [8, 15] the energy functional related to fractional equations is minimized. In [12] a functional involving a more general kernel is considered. All of the aforementioned papers are purely theoretical. To the best of our knowledge, shape optimization problems involving nonlocal constraint equations with truncated kernels, and numerical methods for solving such problems, cannot yet be found in the literature.

In contrast, shape optimization problems constrained by partial differential equations appear in many fields of application [34, 46, 52, 53], particularly in inverse problems where the parameter to be estimated, e.g., the diffusivity in a heat equation model, is assumed to be defined piecewise on certain subdomains. Given a rough picture of the configuration, shape optimization techniques can be successfully applied to identify the detailed shape of these subdomains [48, 49, 50, 62].

In this paper we transfer the problem of parameter identification into a nonlocal regime. Here, the parameter of interest is the kernel which describes the nonlocal model. We assume that this kernel is defined piecewise with respect to a given partition \(\{\Omega _i\}_{i}\) of the domain of interest \(\Omega \). Consequently, the state of such a nonlocal model depends on the interfaces between the respective subdomains \(\Omega _i\). Assuming that we know the rough setting but lack the details, we can apply the techniques developed in the aforementioned shape optimization papers to identify these interfaces from a given measured state.

For this purpose we formulate a shape optimization problem which is constrained by an interface–dependent nonlocal convection–diffusion model. Here, we do not aim to investigate conceptual improvements of existing shape optimization algorithms. Rather, we want to study the applicability of established methods to problems of this type.

Realizing this plan requires two ingredients, both of which are worked out here. First, we define a reasonable interface–dependent nonlocal model and provide a finite element code which discretizes a variational formulation thereof. Second, we derive the shape derivative of the corresponding nonlocal bilinear form, which is then implemented into an overall shape optimization algorithm.

This leads to the following organization of the present paper. In Sect. 2 we formulate the shape optimization problem including an interface–dependent nonlocal model. We then briefly recall basic concepts from shape optimization in Sect. 3. Section 4 is devoted to computing the shape derivative of the nonlocal bilinear form and of the reduced objective functional. Finally, we present numerical illustrations in Sect. 5 which corroborate the theoretical findings.

2 Problem formulation

The system model to be considered is the homogeneous steady-state nonlocal Dirichlet problem with volume constraints, given by

posed on a bounded domain \(\Omega \subset \mathbb {R}^d\), \(d \in \mathbb {N}\), and its nonlocal interaction domain \({\Omega _{I}}\); see, e.g., [4, 23, 24, 26, 60] and the references therein. Here, we assume that this domain is partitioned into a simply connected interior subdomain \(\Omega _1 \subset \Omega \) with boundary \(\Gamma :=\partial \Omega _1\) and the domain \(\Omega _2 :=\Omega \backslash {\overline{\Omega }}_1\). Thus we have \(\Omega = \Omega (\Gamma ) = \Omega _1 {\dot{\cup }} \Gamma {\dot{\cup }} \Omega _2\), where \({\dot{\cup }}\) denotes the disjoint union. In the following, the boundary \(\Gamma \) of the interior domain \(\Omega _1\) is called the interface and is assumed to be an element of an appropriate shape space; see also Sect. 3 for a related discussion. The governing operator \(\mathcal {L}_\Gamma \) is an interface–dependent, nonlocal convection–diffusion operator of the form

which is determined by a nonnegative, interface–dependent (interaction) kernel \(\gamma _\Gamma :\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\). The second equation in (1) is called the Dirichlet volume constraint. It specifies the values of u on the interaction domain

which consists of all points in the complement of \(\Omega \) that interact with points in \(\Omega \). For ease of exposition, we set \(u=0\) on \(\Omega _I\), but generally we can use the constraint \(u = g\) on \(\Omega _I\), if g satisfies some appropriate regularity assumptions.

Furthermore, we assume that the kernel depends on the interface as follows:

where \(\chi _{\Omega _i \times \Omega _j}\) denotes the indicator of the set \(\Omega _i \times \Omega _j\). For instance, in [51] the authors refer to \(\gamma _{ij}\) and \(\gamma _{iI}\) as inter– and intra–material coefficients. Notice that we do not need kernels \(\gamma _{Ii}\), since \(u=0\) on \(\Omega _I\). Furthermore, for \(i=1,2\) let \(\{{S}_i(\textbf{x})\}_{\textbf{x}\in \Omega }\), with \({S}_i(\textbf{x}) \subset {\mathbb {R}^d}\) for \(\textbf{x}\in \Omega \), be a family of sets such that the symmetry \(\textbf{y}\in {S}_i(\textbf{x}) \Leftrightarrow \textbf{x}\in {S}_i(\textbf{y})\) holds for \(\textbf{x},\textbf{y}\in \Omega \). We additionally assume for \(i \in \{1,2\}\) that there exist two radii \(0<\varepsilon _{i}^1 \le \varepsilon _{i}^2<\infty \) such that \(B_{\varepsilon _{i}^1}(\textbf{x}) \subset {S}_{i}(\textbf{x})\subset B_{\varepsilon _{i}^2}(\textbf{x})\) for all \(\textbf{x}\in \Omega \), where \(B_{\varepsilon _{i}^k}(\textbf{x})\) denotes the Euclidean ball of radius \(\varepsilon _{i}^k\) centered at \(\textbf{x}\).

Throughout this work we consider truncated interaction kernels, which can be written as

for appropriate positive functions \({\phi }_{ij} :\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\) and \({\phi }_{iI} :\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\), which we refer to as kernel functions. In this paper we distinguish between square integrable kernels and singular symmetric kernels. For square integrable kernels we require \(\gamma _{ij}\in L^2(\Omega \times \Omega )\) and \(\gamma _{iI}\in L^2(\Omega \times \Omega _I)\), which also implies \(\gamma _\Gamma \in L^2((\Omega \cup \Omega _I) \times (\Omega \cup \Omega _I))\). We do not assume that (3) is symmetric for this type of kernel.
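To make the piecewise structure concrete, the following Python sketch evaluates an interface–dependent, truncated kernel at a pair of points. It is not the code from the repository cited in the abstract. The disk-shaped \(\Omega _1\), the choice of \(S_i(\textbf{x})\) as a ball of radius \(\varepsilon _i\) (Euclidean or \(||\cdot ||_\infty \), the latter as in Fig. 1), the truncation by \(S_i(\textbf{x})\) for \(\textbf{x}\in \Omega _i\), and the constant kernel functions in `phi` are all illustrative assumptions.

```python
import numpy as np

# Hypothetical geometry: Omega = (0,1)^2, Omega_1 = open disk, Omega_2 = Omega minus the closed disk,
# Omega_I = everything outside Omega (only points within the horizon are ever queried).
CENTER = np.array([0.5, 0.5])
RADIUS = 0.25


def region(p):
    """Return 1 (Omega_1), 2 (Omega_2) or "I" (interaction domain) for a point p."""
    p = np.asarray(p, dtype=float)
    if np.any(p <= 0.0) or np.any(p >= 1.0):
        return "I"
    # points exactly on Gamma form a null set; they are assigned to Omega_2 here
    return 1 if np.linalg.norm(p - CENTER) < RADIUS else 2


def gamma_Gamma(x, y, phi, eps, norm_ord=2):
    """Piecewise kernel phi[(i, j)](x, y) * chi_{S_i(x)}(y) for x in Omega_i, y in Omega_j.

    S_i(x) is assumed to be the ball of radius eps[i] in the norm given by norm_ord
    (2 = Euclidean, np.inf = max-norm); both satisfy the two-ball condition."""
    i, j = region(x), region(y)
    if i == "I":
        return 0.0  # gamma_{Ii} is never needed because u = 0 on Omega_I
    dist = np.linalg.norm(np.asarray(y, float) - np.asarray(x, float), ord=norm_ord)
    if dist > eps[i]:
        return 0.0  # y lies outside the interaction set S_i(x)
    return phi[(i, j)](x, y)


# Illustrative constant kernel functions (assumed values, not taken from the paper)
phi = {(1, 1): lambda x, y: 10.0, (1, 2): lambda x, y: 5.0,
       (2, 1): lambda x, y: 5.0, (2, 2): lambda x, y: 1.0,
       (1, "I"): lambda x, y: 10.0, (2, "I"): lambda x, y: 1.0}
eps = {1: 0.1, 2: 0.1}

print(gamma_Gamma([0.5, 0.5], [0.55, 0.5], phi, eps))  # both points in Omega_1 -> 10.0
```

Switching `norm_ord` to `np.inf` enlarges the interaction set to a square, so pairs that are cut off by the Euclidean ball can still interact.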

In the case of singular symmetric kernels we require the existence of constants \(0<\gamma _* \le \gamma ^* < \infty \) and an exponent \(s \in (0,1)\) such that

for \(\textbf{x}\in \Omega \text { and } \textbf{y}\in S_{1}(\textbf{x}) \cup S_{2}(\textbf{x})\). Also, since the singular kernel is required to be symmetric, the conditions \(\gamma (\textbf{x},\textbf{y})=\gamma (\textbf{y},\textbf{x})\) and, respectively, \(\phi _{12}(\textbf{x},\textbf{y})=\phi _{21}(\textbf{y},\textbf{x})\), \(\phi _{ii}(\textbf{x},\textbf{y})=\phi _{ii}(\textbf{y},\textbf{x})\) have to hold. Since we do not need to define \(\gamma _{Ii}\), as described above, no further symmetry condition is required for \(\gamma _{iI}\).

Example 2.1

One example of such a singular symmetric kernel is given by

where \(s \in (0,1)\), \(0<\varepsilon <\infty \) and the functions \(\sigma _{ij},\sigma _{iI}:\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\) are bounded from below and above by some positive constants, say \(\gamma _*\) and \(\gamma ^*\). Additionally, the \(\sigma _{ii}\) are assumed to be symmetric on \(\Omega \times \Omega \) and \(\sigma _{12}(\textbf{x},\textbf{y}) = \sigma _{21}(\textbf{y},\textbf{x})\) holds for \({\textbf{x},\textbf{y}\in \Omega \cup \Omega _I}\).
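A pointwise evaluation of such a singular kernel can be sketched as follows. We assume here that the kernel takes the form \(\sigma _{ij}(\textbf{x},\textbf{y})\,||\textbf{x}-\textbf{y}||_2^{-(d+2s)}\chi _{B_\varepsilon (\textbf{x})}(\textbf{y})\), which is consistent with the bounds required above; the coefficient `sigma` is a hypothetical symmetric choice with \(\gamma _* = 1\) and \(\gamma ^* = 1.5\), and the value 0 on the diagonal is merely a convention for a null set.

```python
import numpy as np


def singular_kernel(x, y, sigma, s, eps):
    """gamma(x, y) = sigma(x, y) / ||x - y||_2^(d + 2s) for y in B_eps(x), else 0.

    The value on the diagonal x == y (a null set) is set to 0 for convenience."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    d = x.size
    r = np.linalg.norm(x - y)
    if r == 0.0 or r > eps:
        return 0.0
    return sigma(x, y) / r ** (d + 2 * s)


def sigma(x, y):
    """Hypothetical symmetric coefficient with 1 < sigma <= 1.5."""
    return 1.0 + 0.5 * np.exp(-np.sum((x + y) ** 2))


x, y, s, eps = [0.2, 0.3], [0.25, 0.32], 0.5, 0.1
r = np.linalg.norm(np.subtract(x, y))
g_xy = singular_kernel(x, y, sigma, s, eps)
g_yx = singular_kernel(y, x, sigma, s, eps)
print(g_xy, g_yx)  # equal, since sigma is symmetric and ||x - y|| = ||y - x||
```

With \(d = 2\) and \(s = 0.5\) the singularity behaves like \(r^{-3}\), and the two-sided bound \(\gamma _* r^{-(d+2s)} \le \gamma \le \gamma ^* r^{-(d+2s)}\) can be checked directly.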

For the forcing term \(f_\Gamma \) in (1) we assume the following dependence on the interface:

where we assume that \(f_i \in H^1(\Omega )\), \(i=1,2\), since the weak differentiability of the forcing term is needed in Sect. 4. Figure 1 illustrates our setting.

Fig. 1 One example configuration: the domain \(\Omega \) is divided into \(\Omega _1\) and \(\Omega _2\) with \(\Gamma = \partial \Omega _1\), and \(\Omega _I\) is the nonlocal interaction domain. The support of \(\gamma _{11}(\textbf{x},\cdot )\) for a point \(\textbf{x}\in \Omega _1\) is depicted in blue, and the support of \(\gamma _{22}(\textbf{y},\cdot )\) for a point \(\textbf{y}\in \Omega _{2}\) is colored in red; the latter is described by a \(||\cdot ||_\infty \)-ball in \(\mathbb {R}^2\).

Next, we introduce a variational formulation of problem (1). For this purpose we define the corresponding forms

for some functions \(u,v:\Omega \cup \Omega _I\rightarrow \mathbb {R}\), where \(v= 0\) on \(\Omega _I\). By inserting the definitions of the nonlocal operator (2) with the kernel given in (4) and the definition of the forcing term (5), we obtain the nonlocal bilinear form

and the linear functional

To obtain the second representation (8) of the bilinear form, we used Fubini’s theorem. We employ both representations (7) and (8) of the nonlocal bilinear form in the proofs of Sect. 4. For singular symmetric kernels we also use another equivalent representation of the nonlocal bilinear form, given by

where we again used Fubini’s theorem and applied that \(u,v= 0\) on \(\Omega _I\). Next, we employ the nonlocal bilinear form to define a seminorm

With this seminorm, we further define the energy spaces

The variational formulation corresponding to problem (1) reads as follows:

Additionally, for \(s \in (0,1)\) we define the seminorm

and the fractional Sobolev space as

Moreover, we denote the volume-constrained spaces by

Then, for square integrable kernels one can show the equivalence between \(\left( V(\Omega \cup \Omega _I), ||\cdot ||_{V(\Omega \cup \Omega _I)}\right) \) and \(\left( L^2(\Omega \cup \Omega _I), ||\cdot ||_{L^2(\Omega \cup \Omega _I)}\right) \), i.e., there exist constants \(C_1,C_2 > 0\) such that

and consequently \( u \in V(\Omega \cup \Omega _I) \Leftrightarrow u \in L^2(\Omega \cup \Omega _I).\) Additionally, one can prove the equivalence of \(\left( V_c(\Omega \cup \Omega _I), |||\cdot |||\right) \) and \(\left( L_c^2(\Omega \cup \Omega _I), ||\cdot ||_{L^2(\Omega \cup \Omega _I)}\right) \); see related results in [24, 28, 61]. Moreover, the well-posedness of problem (11) for symmetric (square integrable) kernels is proven in [24], and in [28] the well-posedness for some nonsymmetric cases is also covered (again under certain conditions on the kernel and the forcing term f). For singular symmetric kernels, the well-posedness of problem (11), the equivalence between \(\left( V(\Omega \cup \Omega _I),||\cdot ||_{V(\Omega \cup \Omega _I)}\right) \) and the fractional Sobolev space \(\left( H^s(\Omega \cup \Omega _I),||\cdot ||_{H^s(\Omega \cup \Omega _I)}\right) \), and the equivalence between \(\left( V_c(\Omega \cup \Omega _I),|||\cdot |||\right) \) and \(\left( H_c^s(\Omega \cup \Omega _I), |\cdot |_{H^s(\Omega \cup \Omega _I)}\right) \) are shown in [24].
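The Fubini argument behind the different representations of the bilinear form can be checked numerically. The following one-dimensional sketch assumes a symmetric, square integrable kernel, the operator form \(\int _\Omega v(\textbf{x})\int (u(\textbf{x})-u(\textbf{y}))\gamma (\textbf{x},\textbf{y})\,d\textbf{y}\,d\textbf{x}\), the symmetric form \(\frac{1}{2}\iint (u(\textbf{x})-u(\textbf{y}))(v(\textbf{x})-v(\textbf{y}))\gamma (\textbf{x},\textbf{y})\,d\textbf{y}\,d\textbf{x}\), and the seminorm \(|||u||| = A(u,u)^{1/2}\); the 1D geometry, horizon and kernel values are hypothetical. Because \(v = 0\) on \(\Omega _I\) and the kernel matrix is symmetric, both forms agree even at the discrete level.

```python
import numpy as np

# Hypothetical 1D setting: Omega = (0, 1), Omega_I = (-delta, 0] and [1, 1 + delta),
# interior subdomain Omega_1 = (0.3, 0.6), midpoint-rule grid with spacing h.
delta, h = 0.1, 0.005
x = np.arange(-delta + h / 2, 1 + delta, h)
in_omega = (x > 0.0) & (x < 1.0)
in_omega1 = (x > 0.3) & (x < 0.6)


def kernel_matrix(x, in_omega1, delta):
    """Symmetric, truncated, piecewise-constant kernel values gamma(x_i, x_j)."""
    both_in_1 = in_omega1[:, None] & in_omega1[None, :]
    values = np.where(both_in_1, 10.0, 1.0)  # assumed: gamma_11 = 10, all other pairs = 1
    return values * (np.abs(x[:, None] - x[None, :]) <= delta)


G = kernel_matrix(x, in_omega1, delta)
u = np.where(in_omega, np.sin(np.pi * x), 0.0)  # u = 0 on Omega_I
v = np.where(in_omega, x * (1.0 - x), 0.0)      # v = 0 on Omega_I
du = u[:, None] - u[None, :]
dv = v[:, None] - v[None, :]

# Operator form: sum_x v(x) sum_y (u(x) - u(y)) gamma(x, y) h^2
A_op = h * h * np.sum(v[:, None] * du * G)
# Symmetric form: 1/2 sum_x sum_y (u(x) - u(y)) (v(x) - v(y)) gamma(x, y) h^2
A_sym = 0.5 * h * h * np.sum(du * dv * G)
# Energy seminorm |||u||| = sqrt(A(u, u)) (assumed definition)
seminorm = np.sqrt(0.5 * h * h * np.sum(du ** 2 * G))
print(A_op, A_sym, seminorm)
```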

Finally, let us suppose we are given measurements \({\bar{u}}:\Omega \rightarrow \mathbb {R}\) on the domain \(\Omega \), which we assume to follow the nonlocal model (11) with the interface–dependent kernel \(\gamma _\Gamma \) and the forcing term \(f_\Gamma \) defined in (3) and (5), respectively. In order to formulate the shape derivative in Sect. 4 we need \({\bar{u}} \in H^1(\Omega )\). Then, given the data \({\bar{u}}\), we aim at identifying the interface \(\Gamma \) for which the corresponding nonlocal solution \(u(\Gamma )\) is the “best approximation” to these measurements. Mathematically speaking, we formulate an optimal control problem with a tracking-type objective functional, where the interface \(\Gamma \) represents the control variable and is modeled as an element of a shape space \(\mathcal {A}\), which will be specified in Sect. 3.1. We now set \(\Omega := (0,1)^2\) and introduce the following nonlocally constrained shape optimization problem

The objective functional is given by

The first term \(j(u,\Gamma )\) is a standard \(L^2\) tracking-type functional, whereas the second term \(j_{reg}(\Gamma )\) is known as the perimeter regularization, which is commonly used in the related literature to overcome possible ill-posedness of optimization problems [3].
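A discrete evaluation of such an objective might look as follows. We assume \(j(u,\Gamma ) = \frac{1}{2}\int _\Omega (u-{\bar{u}})^2\,d\textbf{x}\) and a perimeter regularization \(j_{reg}(\Gamma ) = \nu \int _\Gamma 1\,ds\) with a hypothetical weight \(\nu > 0\) (our notation); u and \({\bar{u}}\) are sampled on a uniform grid and \(\Gamma \) is a closed polygon.

```python
import numpy as np


def tracking(u, u_bar, h):
    """Midpoint-rule value of 1/2 * ||u - u_bar||^2_{L^2(Omega)} on a uniform grid of cell size h."""
    return 0.5 * h * h * np.sum((u - u_bar) ** 2)


def perimeter(vertices):
    """Length of the closed polygon Gamma_h given by its vertices (one row per vertex)."""
    edges = np.roll(vertices, -1, axis=0) - vertices
    return np.sum(np.linalg.norm(edges, axis=1))


def objective(u, u_bar, h, vertices, nu):
    """J(u, Gamma) = j(u, Gamma) + j_reg(Gamma) with j_reg(Gamma) = nu * |Gamma| (assumed form)."""
    return tracking(u, u_bar, h) + nu * perimeter(vertices)


theta = np.linspace(0.0, 2.0 * np.pi, 2000, endpoint=False)
circle = np.stack([0.5 + 0.25 * np.cos(theta), 0.5 + 0.25 * np.sin(theta)], axis=1)
print(perimeter(circle))  # close to 2 * pi * 0.25
```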

3 Basic concepts in shape optimization

For solving the constrained shape optimization problem (12) we want to use the same shape optimization algorithms as those developed in [47, 48, 50] for problem classes that are comparable in structure. Thus, in this section we briefly introduce the basic concepts and ideas of the shape formalism applied therein. For a rigorous introduction to shape spaces, shape derivatives and shape calculus in general, we refer to the monographs [16, 56, 62]. For the remainder of this paper we restrict ourselves to the cases \(d \in \{2,3\}\), since shape optimization problems are typically formulated in a two- or three-dimensional setting.

3.1 Notations and definitions

Based on our perception of the interface, we now refer to the image of a simple closed and smooth sphere as a shape, i.e., the spaces of interest are subsets of

where \(S^{d-1}\) is the unit sphere in \({\mathbb {R}^d}\). By the Jordan-Brouwer separation theorem [33] such a shape \(\Gamma \in \mathcal {A}\) divides the space into two (simply) connected components with common boundary \(\Gamma \). One of them is the bounded interior, which in our situation can then be identified with \(\Omega _1\).

Functionals \(J :\mathcal {A}\rightarrow \mathbb {R}\) which assign a real number to a shape are called shape functionals. Since this paper deals with minimizing such shape functionals, i.e., with so-called shape optimization problems, we need to introduce the notion of an appropriate shape derivative. To this end we consider a family of mappings \(\textbf{F}_{\textbf{t}}:{\overline{\Omega }} \rightarrow \mathbb {R}^d\) with \(\textbf{F}_{\textbf{0}}= \textbf{id}\), where \(t\in [0,T]\) and T \(\in (0,\infty )\) sufficiently small, which transform a shape \(\Gamma \) into a family of perturbed shapes \(\left\{ \Gamma ^t\right\} _{t \in [0,T]}\), where \( \Gamma ^t:=\textbf{F}_{\textbf{t}}(\Gamma ) \) with \(\Gamma ^0 = \Gamma \). Here the family of mappings \(\left\{ \textbf{F}_{\textbf{t}}\right\} _{t\in [0,T]}\) is described by the perturbation of identity, which for a smooth vector field \(\textbf{V}\in C_0^k(\Omega , \mathbb {R}^d)\), \(k \in {\mathbb {N}}\), is defined by

We note that for sufficiently small \(t\in [0,T]\) the function \(\textbf{F}_{\textbf{t}}\) is injective, and thus \(\Gamma ^t\in \mathcal {A}\). Then the Eulerian or directional derivative of a shape functional J at a shape \(\Gamma \) in direction of a vector field \(\textbf{V}\in C_0^k(\Omega , \mathbb {R}^d)\), \(k \in {\mathbb {N}}\), is defined by

If \(D_\Gamma J(\Gamma )[\textbf{V}] \) exists for all \(\textbf{V}\in C_0^k(\Omega , \mathbb {R}^d)\) and the mapping \(\textbf{V}\mapsto D_\Gamma J(\Gamma )[\textbf{V}] \) is linear and continuous, i.e., an element of the dual space \(\left( C_0^k(\Omega , \mathbb {R}^d)\right) ^*\), then \(D_\Gamma J(\Gamma )[\textbf{V}] \) is called the shape derivative of J [62, Definition 4.6].
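The Eulerian derivative can be approximated by a difference quotient of \(t \mapsto J(\textbf{F}_{\textbf{t}}(\Gamma ))\), which is a useful sanity check for analytic shape derivatives. The sketch below applies the perturbation of identity to the vertices of a polygonal interface and uses the perimeter as a hypothetical shape functional. For a circle of radius r centered at c and the radial field \(\textbf{V}(\textbf{x}) = \textbf{x}- c\), the perturbed shape is a circle of radius \((1+t)r\), so the derivative is \(2\pi r\). This field is not compactly supported in \(\Omega \), but only its values on \(\Gamma \) matter, so it can be multiplied by a smooth cutoff equal to one near \(\Gamma \) without changing the result.

```python
import numpy as np


def perimeter(vertices):
    """Length of the closed polygon given by its vertices (one row per vertex)."""
    edges = np.roll(vertices, -1, axis=0) - vertices
    return np.sum(np.linalg.norm(edges, axis=1))


def perturb(vertices, V, t):
    """Perturbation of identity F_t = id + t V applied to the interface vertices."""
    return vertices + t * V(vertices)


def eulerian_derivative_fd(J, vertices, V, t=1e-6):
    """Difference quotient (J(F_t(Gamma)) - J(Gamma)) / t approximating D_Gamma J(Gamma)[V]."""
    return (J(perturb(vertices, V, t)) - J(vertices)) / t


c, r = np.array([0.5, 0.5]), 0.25
theta = np.linspace(0.0, 2.0 * np.pi, 400, endpoint=False)
Gamma = c + r * np.stack([np.cos(theta), np.sin(theta)], axis=1)
V = lambda p: p - c  # radial field; only its values on Gamma matter here
print(eulerian_derivative_fd(perimeter, Gamma, V))  # approx. 2 * pi * r
```

A purely tangential field, such as an infinitesimal rotation about c, yields a derivative close to zero, since it does not move the interface to first order.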

At this point, let us also define the material derivative of a family of functions \({ \{v^t:\Omega \rightarrow \mathbb {R}: t \in [ 0, T ] \} }\) in direction \(\textbf{V}\) by

For functions \(v\) that do not explicitly depend on the shape, i.e., \(v^t = v~\text {for all } t\in [0,T]\), we find

For more details on shape optimization we refer to the literature, e.g., [16] or [56].
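For a function that does not depend on the shape, the material derivative reduces to the directional derivative \(\nabla v(\textbf{x})^\top \textbf{V}(\textbf{x})\). The following sketch confirms this numerically with a central difference in t; the function v and the field V are hypothetical examples.

```python
import numpy as np


def v(p):
    """A shape-independent scalar function v(x) = sin(pi x_1) x_2^2."""
    return np.sin(np.pi * p[..., 0]) * p[..., 1] ** 2


def grad_v(p):
    return np.stack([np.pi * np.cos(np.pi * p[..., 0]) * p[..., 1] ** 2,
                     2.0 * np.sin(np.pi * p[..., 0]) * p[..., 1]], axis=-1)


def V(p):
    """Hypothetical smooth vector field V(x) = (x_2, -x_1)."""
    return np.stack([p[..., 1], -p[..., 0]], axis=-1)


def material_derivative_fd(v, V, p, t=1e-5):
    """Central difference of t -> v(F_t(x)) at t = 0, where F_t = id + t V."""
    return (v(p + t * V(p)) - v(p - t * V(p))) / (2.0 * t)


pts = np.random.default_rng(0).uniform(0.0, 1.0, size=(50, 2))
exact = np.sum(grad_v(pts) * V(pts), axis=-1)  # grad v(x)^T V(x)
approx = material_derivative_fd(v, V, pts)
print(np.max(np.abs(approx - exact)))
```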

Remark 3.1

In the case of the nonlocal problem (12) we extend the vector field \(\textbf{V}\) to \(\Omega \cup \Omega _I\) by zero, i.e., \(\textbf{V}\in C_0^k(\Omega \cup \Omega _I, {\mathbb {R}^d}) :=\{\textbf{V}:\Omega \cup \Omega _I\rightarrow {\mathbb {R}^d}: \textbf{V}|_{\Omega } \in C_0^k(\Omega ,{\mathbb {R}^d}) \text { and } \textbf{V}= 0 \text { on } \Omega _I \}\). Accordingly, the shape of the interaction domain \(\Omega _I\) does not change. Moreover, in this work \(\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^d)\) is sufficient for all computations.

3.2 Optimization approach: averaged adjoint method

Let us assume that for each admissible shape \(\Gamma \) there exists a unique solution \(u(\Gamma )\) of the constraint equation, i.e., \(u(\Gamma )\) satisfies \({A}_\Gamma (u(\Gamma ), v) = F_{\Gamma }( v)\) for all \(v\in V_c(\Omega \cup \Omega _I)\). Then we can consider the reduced problem

In order to employ derivative based minimization algorithms, we need to derive the shape derivative of the reduced objective functional \(J^{red}\). By formally applying the chain rule, we obtain

where \(D_uJ\) and \(D_\Gamma J\) denote the partial derivatives of the objective J with respect to the state variable u and the control \(\Gamma \), respectively. In applications we typically do not have an explicit formula for the control-to-state mapping \(u(\Gamma )\), so that we cannot analytically quantify the sensitivity of the unique solution \(u(\Gamma )\) with respect to the interface \(\Gamma \). Thus, a formula for the shape derivative \(D_\Gamma u(\Gamma )[\textbf{V}] \) is unattainable. One possible approach to circumvent \(D_\Gamma u(\Gamma )[\textbf{V}] \) and access the shape derivative \(D_\Gamma J^{red}(\Gamma )[\textbf{V}]\) is the averaged adjoint method (AAM) developed in [36, 57, 58], which is a Lagrangian method, where the so-called Lagrangian functional is defined as

The basic idea behind Lagrangian methods is that we can express the reduced functional as

Now let \(\Gamma \) be fixed and denote by \(\Gamma ^t:=\textbf{F}_{\textbf{t}}(\Gamma )\) and \(\Omega _i^t :=\textbf{F}_{\textbf{t}}(\Omega _i)\) the deformed interior boundary and the deformed domains, respectively. Furthermore we indicate by writing \(\Omega (\Gamma ^t)\) that we use the decomposition \(\Omega (\Gamma ^t) = \Omega _1^t \cup \Gamma ^t \cup \Omega _2^t \left( = \Omega \right) \), where \(\Gamma ^t = \partial \Omega _1^t\). Consequently, the norm \(||\cdot ||_{V(\Omega (\Gamma ^t) \cup \Omega _I)}\) of the space \(V(\Omega (\Gamma ^t) \cup \Omega _I)\) differs from the norm \(||\cdot ||_{V(\Omega \cup \Omega _I)}\) of the space \(V(\Omega \cup \Omega _I)\) due to the interface-sensitivity of the kernel, see (10). Then we consider the reduced objective functional regarding \(\Gamma ^t\), i.e.,

where \(u(\Gamma ^t) \in V_c(\Omega (\Gamma ^t) \cup \Omega _I) \). If we now try to differentiate L with respect to t in order to derive the shape derivative, we would have to compute the derivative for \(u(\Gamma ^t) \circ \textbf{F}_{\textbf{t}}\) and \(v\circ \textbf{F}_{\textbf{t}}\), where \(u(\Gamma ^t),v\in V_c(\Omega (\Gamma ^t) \cup \Omega _I) \) may not be differentiable. Additionally the norm \(||\cdot ||_{V(\Omega (\Gamma ^t) \cup \Omega _I) }\), and therefore the space \(V_c(\Omega (\Gamma ^t) \cup \Omega _I)\), is also dependent on t. Instead, since \(\textbf{F}_{\textbf{t}}\) is a homeomorphism, we can use that for \(u,v\in V_c(\Omega (\Gamma ^t) \cup \Omega _I) \), there exist functions \({\tilde{u}},\tilde{v} \in V_c(\Omega \cup \Omega _I)\), such that

Moreover, let \(T \in (0, \infty )\) be sufficiently small. Then we define

Then we can reformulate (16) as

where \(u^t \in V_c(\Omega \cup \Omega _I)\) is the unique solution of the nonlocal equation corresponding to \(\Gamma ^t\)

Furthermore, \({A}(t,u,v) - F(t,v)\) is linear in \(v\) for all \((t,u) \in [0,T] \times V_c(\Omega \cup \Omega _I)\), which is one prerequisite of the AAM. In order to use the AAM to compute the shape derivative, the following additional assumptions have to be met:

- Assumption (H0): For every \((t,v) \in [0,T] \times V_c(\Omega \cup \Omega _I)\)

    1. \([0,1] \ni s \mapsto G(t,su^t+(1-s)u^0,v)\) is absolutely continuous and
    2. \([0,1] \ni s \mapsto d_uG(t,su^t + (1-s)u^0,v)[{\tilde{u}}] \in L^1((0,1))\) for all \({\tilde{u}} \in V_c(\Omega \cup \Omega _I)\).

- For every \(t \in [0,T]\) there exists a unique solution \(v^t \in L^2(\Omega )\) such that \(v^t\) solves the averaged adjoint equation

  $$\begin{aligned} \int _0^1 d_u G(t,su^t + (1-s)u^0,v^t)[{\tilde{u}}]ds = 0 \quad \text {for all } {\tilde{u}} \in V_c(\Omega \cup \Omega _I). \end{aligned}\tag{18}$$

- Assumption (H1): The following equation holds:

  $$\begin{aligned} \lim _{t \searrow 0} \frac{G(t,u^0,v^t)- G(0,u^0,v^t)}{t}= \partial _t G(0,u^0,v^0). \end{aligned}$$

In our case, due to the linearity of \({A}(t, {\tilde{u}}, v^t)\) in the second argument, the left-hand side of the averaged adjoint equation (18) can be formulated as

where \(\xi ^t(\textbf{x}) :=\det D \textbf{F}_{\textbf{t}}(\textbf{x})\) and \({\bar{u}}^t(\textbf{x}) :={\bar{u}}(\textbf{F}_{\textbf{t}}(\textbf{x}))\). As a consequence, (18) is equivalent to

For \(t=0\) we get

In this case we call (19) the adjoint equation, and the solution \(v^0\) is referred to as the adjoint solution. Moreover, the nonlocal problem (11) for \(t=0\) is also called the state equation, and its solution \(u^0\) is called the state solution.
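The Jacobian determinant \(\xi ^t = \det D\textbf{F}_{\textbf{t}} = \det (I + t D\textbf{V})\) enters the averaged adjoint equation through the transformed tracking term. Its derivative at \(t=0\) is \(\operatorname{div}\textbf{V}\); in two dimensions \(\det (I + tA) = 1 + t\,\operatorname{tr}A + t^2 \det A\), so a central difference recovers \(\operatorname{tr}A\) up to rounding. The field V used here is a hypothetical example.

```python
import numpy as np


def DV(p):
    """Jacobian of the hypothetical field V(x) = (sin(x_2), x_1 x_2)."""
    x1, x2 = p
    return np.array([[0.0, np.cos(x2)],
                     [x2, x1]])


def xi(t, p):
    """xi^t(x) = det D F_t(x) = det(I + t DV(x)) for the perturbation of identity."""
    return np.linalg.det(np.eye(2) + t * DV(p))


p, t = np.array([0.3, 0.7]), 1e-4
xi_prime = (xi(t, p) - xi(-t, p)) / (2.0 * t)  # central difference at t = 0
div_V = np.trace(DV(p))                         # div V(x) = x_1 = 0.3 here
print(xi_prime, div_V)
```

For small t the determinant stays positive, which reflects that \(\textbf{F}_{\textbf{t}}\) is orientation preserving.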

Finally, the next theorem yields a practical formula for deriving the shape derivative.

Theorem 3.2

([36, Theorem 3.1]) Let the assumptions (H0) and (H1) be satisfied and suppose there exists a unique solution \(v^t\) to the averaged adjoint equation (18). Then for \(v\in V_c(\Omega \cup \Omega _I)\) we obtain

Proof

See proof of [36, Theorem 3.1]. \(\square \)

Remark 3.3

Under the assumption that the material derivatives of u and \(v\) exist and that \(D_m u, D_m v\in V_c(\Omega \cup \Omega _I)\), one can also use the material derivative approach of [7] to derive the shape derivative of the reduced functional (15).

3.3 Optimization algorithm

Let us assume for a moment that we have an explicit formula for the shape derivative of the reduced objective functional. We now briefly recall the techniques developed in [50] and describe how to exploit this derivative for implementing gradient-based optimization methods or even quasi-Newton methods, such as L-BFGS, to solve the constrained shape optimization problem (12).

In order to identify gradients we require the notion of an inner product, or more generally a Riemannian metric. Unfortunately, shape spaces typically do not admit the structure of a linear space. However, in particular situations it is possible to define appropriate quotient spaces, which can be equipped with a Riemannian structure. For instance, consider the set \(\mathcal {A}\) introduced in (13). Since we are only interested in the image of the defining embedding, a re-parametrization thereof does not lead to a different shape. Consequently, two embedded spheres that are equal modulo (diffeomorphic) re-parametrizations define the same shape. This conception naturally leads to the quotient space \({{\,\textrm{Emb}\,}}(S^{d-1}, \mathbb {R}^d) / {{\,\textrm{Diff}\,}}(S^{d-1}, S^{d-1}) \), which can be considered an infinite-dimensional Riemannian manifold [39, 62]. This example already hints at the difficulty of translating abstract shape derivatives into discrete optimization methods; see, e.g., the thesis [63] on this topic. A detailed discussion of these issues is beyond the scope of this work; instead, we now outline Algorithm 1.

The basic idea can be intuitively explained in the following way. Starting with an initial guess \(\Gamma _0\), we aim to iterate in a steepest-descent fashion over interfaces \(\Gamma _k\) until we reach a “stationary point” of the reduced objective functional \(J^{red}\). The interface \(\Gamma _k\) is encoded in the finite element mesh and transformations thereof are realized by adding vector fields \(\textbf{U}:\Omega \rightarrow \mathbb {R}^d\) (which can be interpreted as tangent vectors at a fixed interface) to the finite element nodes which we denote by \(\Omega _k\).

Thus, the essential part is to update the finite element mesh after each iteration by adding an appropriate transformation vector field. For this purpose, we use the solution \(\textbf{U}(\Gamma ):\Omega (\Gamma ) \rightarrow \mathbb {R}^d\) of the so-called deformation equation

The right-hand side of this equation is given by the shape derivative of the reduced objective functional (20), and the left-hand side denotes an inner product on the vector field space \(H^1_0(\Omega ,{\mathbb {R}}^{d})\). In view of the manifold interpretation, we can consider \(a_{\Gamma }\) as an inner product on the tangent space at \(\Gamma \), so that \(\textbf{U}(\Gamma )\) can be interpreted as the gradient of the shape functional \(J^{red}\) at \(\Gamma \). The solution \(\textbf{U}(\Gamma ):\Omega \rightarrow {\mathbb {R}}^{d}\) of (21) is then added in a scaled version to the coordinates \(\Omega _k\) of the finite element nodes.

A common choice for \(a_\Gamma \) is the bilinear form associated with the linear elasticity equation given by

for \(\textbf{U},\textbf{V}\in H^1_0(\Omega ,{\mathbb {R}}^{d})\) and the identity function \({{\,\mathrm{\textbf{Id}}\,}}:{\mathbb {R}^d}\rightarrow {\mathbb {R}^d}\), where

and

are the strain and stress tensors, respectively. Deformation vector fields \(\textbf{V}\) that do not change the interface have no impact on the reduced objective functional, so that

Therefore, the right-hand side \(D_\Gamma J^{red}(\Gamma )[\textbf{V}] \) is only assembled for test vector fields whose support intersects the interface \(\Gamma \) and is set to zero for all other basis vector fields. This prevents spurious mesh deformations resulting from discretization errors, as outlined and illustrated in [49]. Furthermore, \(\lambda \) and \(\mu \) in (22) denote the Lamé parameters, which do not need to have a physical meaning here. It is more important to understand their effect on the mesh deformation. They enable us to control the stiffness of the material and can thus be interpreted as a kind of step size. In [47], it is observed that locally varying Lamé parameters have a stabilizing effect on the mesh. A good strategy is to choose \(\lambda =0\) and \(\mu \) as the solution of the following Laplace equation

Thus, \(\mu _\text {min},\mu _\text {max}\in {\mathbb {R}}\) influence the step size of the optimization algorithm: choosing a large \(\mu _\text {max}\) results in a small step. Note that \(a_\Gamma \) then depends on the interface \(\Gamma \) through the parameter \(\mu = \mu (\Gamma ) :\Omega (\Gamma ) \rightarrow \mathbb {R}\).
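A finite-difference sketch of this Lamé parameter is given below. It assumes that the Laplace problem prescribes \(\mu = \mu _\text {max}\) on \(\Gamma \) and \(\mu = \mu _\text {min}\) on the outer boundary \(\partial \Omega \); the grid, the circular interface, the numerical values and the plain Jacobi iteration are illustrative choices, not the finite element solver used in the paper.

```python
import numpy as np


def lame_mu(n=41, mu_min=10.0, mu_max=100.0, center=(0.5, 0.5), radius=0.25, iters=5000):
    """Jacobi iteration for -Laplace(mu) = 0 on a uniform grid of (0,1)^2 with
    mu = mu_min on the outer boundary and mu = mu_max on grid nodes near the circle Gamma."""
    h = 1.0 / (n - 1)
    X, Y = np.meshgrid(np.linspace(0.0, 1.0, n), np.linspace(0.0, 1.0, n), indexing="ij")
    on_gamma = np.abs(np.hypot(X - center[0], Y - center[1]) - radius) <= h / 2
    fixed = on_gamma.copy()
    fixed[0, :] = fixed[-1, :] = fixed[:, 0] = fixed[:, -1] = True
    mu = np.where(on_gamma, mu_max, mu_min)
    for _ in range(iters):
        # average of the four neighbours; np.roll wraps around, but wrapped values only
        # reach boundary nodes, which are fixed and therefore never updated
        avg = 0.25 * (np.roll(mu, 1, 0) + np.roll(mu, -1, 0) + np.roll(mu, 1, 1) + np.roll(mu, -1, 1))
        mu = np.where(fixed, mu, avg)
    return mu, on_gamma


mu, on_gamma = lame_mu()
print(mu.min(), mu.max())
```

The resulting \(\mu \) is largest at the interface and decays towards \(\partial \Omega \), so the material is stiffest where the deformation is driven.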

How to perform the L-BFGS update in Line 13 of Algorithm 1 within the shape formalism is investigated in [49, Section 4]. Here, we only mention that the vector transport examined therein is approximated by the identity operator, so that we finally treat the gradients \(\textbf{U}_k:\Omega _k \rightarrow \mathbb {R}^d\) as vectors in \(\mathbb {R}^{d|\Omega _k|}\) and implement the standard L-BFGS update [47, Section 5]. For the sufficient decrease condition in Line 18, a small value for c, e.g., \(c= 10^{-4}\), is suggested in [40].
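The following sketch shows the standard L-BFGS two-loop recursion combined with a backtracking line search that enforces the sufficient decrease condition with \(c = 10^{-4}\). In line with the identity vector transport, iterates and gradients are treated as flat vectors; in the shape setting they would correspond to node coordinates in \(\mathbb {R}^{d|\Omega _k|}\) and the gradients \(\textbf{U}_k\). The memory m = 5, the halving rule, the curvature safeguard and the toy quadratic are assumptions; this is not the implementation of [47] or [49].

```python
import numpy as np


def two_loop(g, s_hist, y_hist):
    """L-BFGS two-loop recursion: returns H_k g for the limited-memory inverse Hessian H_k."""
    q = g.copy()
    coeffs = []
    for s, y in zip(reversed(s_hist), reversed(y_hist)):  # newest pair first
        rho = 1.0 / np.dot(y, s)
        a = rho * np.dot(s, q)
        q -= a * y
        coeffs.append((rho, a))
    if s_hist:  # initial scaling H_0 = (s^T y / y^T y) I
        q *= np.dot(s_hist[-1], y_hist[-1]) / np.dot(y_hist[-1], y_hist[-1])
    for (s, y), (rho, a) in zip(zip(s_hist, y_hist), reversed(coeffs)):  # oldest pair first
        b = rho * np.dot(y, q)
        q += (a - b) * s
    return q


def lbfgs(J, grad_J, x0, m=5, c=1e-4, tol=1e-6, max_iter=200):
    """Minimize J over flattened coordinates; vector transport = identity."""
    x, g = x0.copy(), grad_J(x0)
    s_hist, y_hist = [], []
    for _ in range(max_iter):
        if np.linalg.norm(g) < tol:
            break
        d = -two_loop(g, s_hist, y_hist)
        alpha, Jx, slope = 1.0, J(x), np.dot(g, d)
        # backtracking until the sufficient decrease (Armijo) condition holds
        while J(x + alpha * d) > Jx + c * alpha * slope and alpha > 1e-10:
            alpha *= 0.5
        x_new = x + alpha * d
        g_new = grad_J(x_new)
        s, y = x_new - x, g_new - g  # plain differences: identity vector transport
        if np.dot(s, y) > 1e-12:     # store the pair only if the curvature condition holds
            s_hist.append(s)
            y_hist.append(y)
            if len(s_hist) > m:
                s_hist.pop(0)
                y_hist.pop(0)
        x, g = x_new, g_new
    return x


# Usage on a toy quadratic in R^6 (e.g., d = 2 coordinates of 3 "nodes")
rng = np.random.default_rng(1)
M = rng.standard_normal((6, 6))
A, b = M @ M.T + np.eye(6), rng.standard_normal(6)
J = lambda z: 0.5 * z @ A @ z - b @ z
grad_J = lambda z: A @ z - b
x_opt = lbfgs(J, grad_J, np.zeros(6))
```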

4 Shape derivative of the reduced objective functional

In Sect. 3 we described the optimization methodology that we follow in this work to numerically solve the constrained shape optimization problem (12). First, we need the following consequence of [56, Proposition 2.32].

Lemma 4.1

If \(\gamma \in W^{1,1}({\mathbb {R}^d}\times {\mathbb {R}^d},\mathbb {R})\) and \(\tilde{\textbf{V}} \in C_0^1({\mathbb {R}^d}\times {\mathbb {R}^d}, {\mathbb {R}^d}\times {\mathbb {R}^d})\), then \(t \mapsto \gamma \circ \tilde{\textbf{F}}_{\textbf{t}}\), where \(\tilde{\textbf{F}}_{\textbf{t}}:=(\textbf{x},\textbf{y}) + t\tilde{\textbf{V}}(\textbf{x},\textbf{y})\), is differentiable in \(L^1({\mathbb {R}^d}\times {\mathbb {R}^d},\mathbb {R})\) and its derivative is given by

Proof

See proof of [56, Proposition 2.32]. \(\square \)

Remark 4.2

Given a subset \(D \subset {\mathbb {R}^d}\times {\mathbb {R}^d}\) of nonzero measure, the statement of Lemma 4.1 still holds if \({\mathbb {R}^d}\times {\mathbb {R}^d}\) is replaced by D. This can be proven by extending functions \(\gamma \in W^{1,1}(D,\mathbb {R})\) and \(\tilde{\textbf{V}} \in C_0^1(D, D)\) by zero to functions \(\hat{\gamma } \in W^{1,1}({\mathbb {R}^d}\times {\mathbb {R}^d}, \mathbb {R})\) and \(\hat{\textbf{V}} \in C_0^1({\mathbb {R}^d}\times {\mathbb {R}^d}, {\mathbb {R}^d}\times {\mathbb {R}^d})\).

In our case, we set \(\tilde{\textbf{V}}(\textbf{x},\textbf{y}) :=\left( \textbf{V}(\textbf{x}),\textbf{V}(\textbf{y}) \right) \) in order to use Lemma 4.1 to derive several derivatives in this section.
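With \(\tilde{\textbf{V}}(\textbf{x},\textbf{y}) = (\textbf{V}(\textbf{x}),\textbf{V}(\textbf{y}))\), the derivative of \(t \mapsto \gamma \circ \tilde{\textbf{F}}_{\textbf{t}}\) at \(t=0\) becomes \(\nabla _\textbf{x}\gamma (\textbf{x},\textbf{y})^\top \textbf{V}(\textbf{x}) + \nabla _\textbf{y}\gamma (\textbf{x},\textbf{y})^\top \textbf{V}(\textbf{y})\), the same expression that appears in Assumption (P1). The sketch below checks this pointwise with a central difference for a smooth, nonsymmetric kernel function and a vector field that are both hypothetical; Lemma 4.1 itself asserts differentiability in \(L^1\), which a pointwise check does not replace.

```python
import numpy as np


def gamma(x, y):
    """Hypothetical smooth, nonsymmetric kernel function."""
    return np.exp(-np.sum((x - y) ** 2)) * (1.0 + x[0])


def grad_gamma(x, y):
    """Returns (grad_x gamma, grad_y gamma)."""
    e = np.exp(-np.sum((x - y) ** 2))
    gx = -2.0 * (x - y) * e * (1.0 + x[0])
    gx[0] += e  # derivative of the factor (1 + x_1)
    gy = 2.0 * (x - y) * e * (1.0 + x[0])
    return gx, gy


def V(p):
    """Hypothetical smooth vector field."""
    return np.array([p[1] * (1.0 - p[1]), np.sin(np.pi * p[0])])


x, y, t = np.array([0.2, 0.4]), np.array([0.35, 0.1]), 1e-5
# central difference of t -> gamma(x + t V(x), y + t V(y)) at t = 0
fd = (gamma(x + t * V(x), y + t * V(y)) - gamma(x - t * V(x), y - t * V(y))) / (2.0 * t)
gx, gy = grad_gamma(x, y)
exact = gx @ V(x) + gy @ V(y)
print(fd, exact)
```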

To verify the requirements of the AAM, we need some additional assumptions.

Assumption (P0):

- For every \(t \in [0,T]\), there exist unique solutions \(u^t,v^t \in V_c(\Omega \cup \Omega _I)\) such that

  $$\begin{aligned} A(t,u^t,v)&= F(t,v) \text { for all } v\in V_c(\Omega \cup \Omega _I) \text { and } \\ A(t,u,v^t)&= \left( -(\frac{1}{2}(u^t + u^0) - {\bar{u}}^t)\xi ^t,u \right) _{L^2(\Omega \cup \Omega _I)} \text { for all } u \in V_c(\Omega \cup \Omega _I), \end{aligned}\tag{24}$$

  where \(A(t,u,v)\) and \(F(t,v)\) are defined as in (17), \({\bar{u}}^t(\textbf{x}) :={\bar{u}}(\textbf{F}_{\textbf{t}}(\textbf{x}))\) and \(\xi ^t(\textbf{x}) :=\det D\textbf{F}_{\textbf{t}}(\textbf{x})\).

- Additionally, assume that there exists a constant \(0< C_0 < \infty \) such that

  $$\begin{aligned} A(t,u,u) \ge C_0 ||u||^2_{L^2(\Omega )} \text { for all } t \in [0,T] \text { and } u \in V_c(\Omega \cup \Omega _I), \end{aligned}$$

  where A is defined as in (17).

Assumption (P1):

For each class of kernels additional requirements have to hold:

- Define the sets \(D_n :=\{(\textbf{x},\textbf{y}) \in (\Omega \cup \Omega _I)^2: ||\textbf{x}- \textbf{y}||_2 > \frac{1}{n} \} \text { for } n \in \mathbb {N}.\) Then, singular kernels are assumed to have weak derivatives \(\nabla _x \gamma , \nabla _y \gamma \in L^{1}(D_n,{\mathbb {R}^d})\) for all \(n \in \mathbb {N}\) with

$$\begin{aligned}&\left| \nabla _\textbf{x}\gamma _{ij}(\textbf{x},\textbf{y})^\top \textbf{V}(\textbf{x}) + \nabla _{\textbf{y}}\gamma _{ij}(\textbf{x},\textbf{y})^\top \textbf{V}(\textbf{y})\right| \, ||\textbf{x}- \textbf{y}||_2^{d+2s} \in L^{\infty }(\Omega \times \Omega ) \text { and} \\&\left| \nabla _\textbf{x}\gamma _{iI}(\textbf{x},\textbf{y})^\top \textbf{V}(\textbf{x})\right| \, ||\textbf{x}- \textbf{y}||_2^{d+2s} \in L^{\infty }(\Omega \times \Omega _I). \end{aligned}$$

- Square integrable kernels have to meet the following conditions:

$$\begin{aligned}&\gamma _{ij}, \nabla \gamma _{ij}\in L^\infty (\Omega \times \Omega ) \text { and} \\&\gamma _{iI}, \nabla \gamma _{iI}\in L^\infty (\Omega \times \Omega _I). \end{aligned}$$

Remark 4.3

We recall that there exists a Lipschitz constant \(L > 0\) such that

if \(T>0\) is chosen small enough. Consequently, we derive

Therefore, \(\gamma ^t (\textbf{x}, \textbf{y})\le \frac{L \gamma ^*}{||\textbf{x}- \textbf{y}||_2^{d+2\,s}} < L \gamma ^* n^{d+2\,s}\) for \((\textbf{x},\textbf{y}) \in D_n\) and \(t \in [0,T]\), and we obtain \(\gamma ^t \in W^{1,1}(D_n, \mathbb {R})\) for singular symmetric kernels if Assumption (P1) is fulfilled.

Singular kernels already satisfy the first condition of Assumption (P0), since the corresponding nonlocal equations are well-posed, which can readily be shown using the theory of [24, 61]. Additionally, singular kernels also fulfill the second requirement of (P0), as shown in the following lemma:

Lemma 4.4

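In the case of a singular kernel, there exists a constant \(0< C_0 < \infty \) such that

$$\begin{aligned} A(t,u,u) \ge C_0 ||u||^2_{L^2(\Omega )} \text { for all } t \in [0,T] \text { and } u \in V_c(\Omega \cup \Omega _I). \end{aligned}$$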

Proof

Let \(\varepsilon :=\min \{\varepsilon ^1_1,\varepsilon ^1_2\}\). By [24, Lemma 4.3], there exists a constant \(C_* > 0\) for the kernel \(\frac{\gamma _*}{||\textbf{x}- \textbf{y}||^{d+2\,s}}\chi _{B_\varepsilon (\textbf{x})}(\textbf{y})\) such that

So we conclude

Since \([0,T] \times {\bar{\Omega }}\) is compact, \(\xi ^t\) is continuous on \([0,T] \times {\bar{\Omega }}\), and \(\xi ^t\) is positive there if T is chosen small enough, there exists \(\xi _* > 0\) such that \(\xi ^t(\textbf{x}) \ge \xi _*\) for every \(t \in [0,T]\) and \(\textbf{x}\in {\bar{\Omega }}\). Therefore, using that \(\textbf{F}_{\textbf{t}}(\Omega )=\Omega \), we derive

\(\square \)
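The lower bound \(\xi^t \ge \xi_*\) in this proof depends on T being small. A rough numerical illustration, assuming the hypothetical field \(\textbf{V}(\textbf{x}) = (\sin(\pi x_1)\sin(\pi x_2),\, x_1(1-x_1)x_2(1-x_2))\) on \([0,1]^2\), evaluates \(\xi^t(\textbf{x}) = \det(\textbf{I} + tD\textbf{V}(\textbf{x}))\) on a grid. The grid minimum is only an estimate of \(\xi_*\), not a rigorous bound.

```python
import numpy as np


def jacobian_V(x0, x1):
    """Entries of DV for the hypothetical field
    V(x) = (sin(pi x0) sin(pi x1), x0 (1 - x0) x1 (1 - x1)); x0, x1 are the two coordinates."""
    j00 = np.pi * np.cos(np.pi * x0) * np.sin(np.pi * x1)
    j01 = np.pi * np.sin(np.pi * x0) * np.cos(np.pi * x1)
    j10 = (1 - 2 * x0) * x1 * (1 - x1)
    j11 = x0 * (1 - x0) * (1 - 2 * x1)
    return j00, j01, j10, j11


def xi_lower_bound(T, n_t=21, n_x=101):
    """Grid estimate of min over [0, T] x [0, 1]^2 of xi^t(x) = det(I + t DV(x))."""
    x0, x1 = np.meshgrid(np.linspace(0, 1, n_x), np.linspace(0, 1, n_x))
    j00, j01, j10, j11 = jacobian_V(x0, x1)
    xi_min = np.inf
    for t in np.linspace(0.0, T, n_t):
        # 2x2 determinant of I + t DV, evaluated on the whole grid at once
        xi = (1 + t * j00) * (1 + t * j11) - t**2 * j01 * j10
        xi_min = min(xi_min, xi.min())
    return xi_min


print(xi_lower_bound(0.05), xi_lower_bound(1.0))
```

For \(T = 0.05\) the estimate stays well away from zero, whereas for \(T = 1\) the determinant becomes negative at some grid points, so \(\textbf{F}_{\textbf{t}}\) would no longer preserve orientation.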

In the following, we show that Assumption (P1) also holds for a standard example of a singular symmetric kernel.

Example 4.5

For \(\gamma (\textbf{x},\textbf{y})= \frac{\sigma (\textbf{x},\textbf{y})}{||\textbf{x}-\textbf{y}||^{d+2\,s}}\chi _{B_\varepsilon (\textbf{x})}(\textbf{y})\) of Example 2.1, where additionally there exists a constant \(\sigma ^* \in (0,\infty )\) with \({|\nabla _\textbf{x}\sigma |,|\nabla _\textbf{y}\sigma | \le \sigma ^*}\), Assumption (P1) holds, since \(\Omega \cup \Omega _I\) is a bounded domain and

where we used that \(\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^d)\) is Lipschitz continuous for some Lipschitz constant \(L > 0\) and that there exists a \(\textbf{V}^*>0\) with \(|\textbf{V}(\textbf{x})| \le \textbf{V}^* \) for \(\textbf{x}\in \Omega \cup \Omega _I\).
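To make the role of the sets \(D_n\) concrete, the following sketch evaluates a truncated kernel of the form in Example 4.5 for \(d = 2\) and checks that it stays below \(\gamma^* n^{d+2s}\) on \(D_n\), i.e. the case \(t = 0\) (\(L = 1\)) of Remark 4.3. The choices \(\sigma(\textbf{x},\textbf{y}) = 1 + \tfrac12\sin(x_1+y_1)\), \(s = 0.5\), \(\varepsilon = 0.1\) and \(n = 50\) are hypothetical.

```python
import numpy as np


def singular_kernel(x, y, s=0.5, eps=0.1):
    """sigma(x, y) / ||x - y||^(d + 2s) * chi_{B_eps(x)}(y) for arrays of point pairs of shape (N, d).

    sigma(x, y) = 1 + 0.5 sin(x_1 + y_1) is a hypothetical choice with 0.5 <= sigma <= 1.5."""
    d = x.shape[-1]
    r = np.linalg.norm(x - y, axis=-1)
    sigma = 1.0 + 0.5 * np.sin(x[..., 0] + y[..., 0])
    values = np.zeros_like(r)
    inside = (r > 0.0) & (r < eps)  # truncation to the ball B_eps(x)
    values[inside] = sigma[inside] / r[inside] ** (d + 2 * s)
    return values


rng = np.random.default_rng(0)
N, n, s, d = 100_000, 50, 0.5, 2
gamma_star = 1.5                                # upper bound of sigma
x = rng.random((N, d))
y = x + rng.uniform(-0.15, 0.15, size=(N, d))   # many pairs fall inside the ball
r = np.linalg.norm(x - y, axis=1)
vals = singular_kernel(x, y, s=s)
in_Dn = r > 1.0 / n                             # pairs belonging to D_n
bound = gamma_star * n ** (d + 2 * s)
print(vals[in_Dn].max(), bound)
```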

We can now show that the additional requirements of the AAM are satisfied by problem (12):

Lemma 4.6

Let G be defined as in (17) and let the assumptions (P0) and (P1) be fulfilled. Then the assumptions (H0) and (H1) are satisfied and for every \(t \in [0,T]\) there exists a solution \({v^t \in V_c(\Omega \cup \Omega _I)}\) that solves the averaged adjoint equation (18).

Proof

Due to its length, the proof is deferred to Appendix A. \(\square \)

As a direct consequence of Theorem 3.2 and Lemma 4.6, we obtain the following corollary.

Corollary 4.7

Let G be defined as in (17) and let (P0) and (P1) be fulfilled. Then the reduced cost functional of (12) is shape differentiable, and its shape derivative can be expressed as

where \(u^0\) is the solution to the state equation (11) and \(v^0\) the solution to the adjoint equation (19).

The remaining ingredient for implementing the algorithm presented in Section 3.3 is the shape derivative of the reduced objective functional, which is used in Line 7 of Algorithm 1 and is given by

As a first step, we formulate the shape derivative of the objective functional J and the linear functional F, which can also be found in the standard literature.

Theorem 4.8

(Shape derivative of the reduced objective functional) Let the assumptions (P0) and (P1) be satisfied. Further let \(\Gamma \) be a shape with corresponding state variable \(u^0\) and adjoint variable \(v^0\). Then, for a vector field \(\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^d)\) we find

Proof

To prove this theorem, we only have to compute the shape derivatives of the objective functional \(J(u^0,\Gamma )\) and of the linear functional \(F_{\Gamma }(v^0)\). To this end, let \(\xi ^t(\textbf{x}) :=\det D\textbf{F}_{\textbf{t}}(\textbf{x})\).

Then, we have \(\xi ^0(\textbf{x})= \det D\textbf{F}_{\textbf{0}}(\textbf{x})= \det (\textbf{I})=1\) and \(\left. \frac{d}{dt}\right| _{t = 0^+} \xi ^t= {{\,\textrm{div}\,}}\textbf{V}\) (see, e.g., [45]), so that the shape derivative of the right-hand side \(F_\Gamma \) follows from [56, Proposition 2.32] and the product rule for Fréchet derivatives:

Moreover, the shape derivative of the objective functional can be written as

Here the shape derivative of the regularization term is an immediate consequence of [62, Theorem 4.13] and is given by

where \(\textbf{n}\) denotes the outer normal of \(\Omega _1\). Additionally, we obtain for the shape derivative of the tracking-type functional

Putting the above terms into equation (25) yields the formula of Theorem 4.8. \(\square \)
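As a plausibility check for the regularization term, one can use the standard shape-calculus formula for the derivative of the perimeter, \(\left.\frac{d}{dt}\right|_{t=0}|\textbf{F}_{\textbf{t}}(\Gamma)| = \int_\Gamma {{\,\textrm{div}\,}}\textbf{V} - \textbf{n}^\top D\textbf{V}\,\textbf{n}\, ds\), i.e. the integral of the tangential divergence. The sketch below compares it with a finite difference of the length of a finely discretized circle. It uses the target circle of Sect. 5 (radius 0.25, center (0.5, 0.5)) and the hypothetical field \(\textbf{V}(\textbf{x}) = (x_1^2, 0)\), for which the exact value is \(0.25\pi\).

```python
import numpy as np

R, C = 0.25, np.array([0.5, 0.5])  # target circle from Sect. 5


def V(p):
    """Hypothetical smooth field V(x) = (x_1^2, 0), evaluated at points p of shape (n, 2)."""
    return np.stack([p[:, 0] ** 2, np.zeros(len(p))], axis=1)


def circle_points(n):
    """Angles and n equispaced points on the circle."""
    theta = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return theta, C + R * np.stack([np.cos(theta), np.sin(theta)], axis=1)


def perimeter(p):
    """Length of the closed polygon through the points p."""
    return np.sum(np.linalg.norm(np.roll(p, -1, axis=0) - p, axis=1))


def fd_perimeter_derivative(n=20000, h=1e-5):
    """Central difference of t -> |F_t(Gamma)| at t = 0 with F_t = Id + tV."""
    _, p = circle_points(n)
    return (perimeter(p + h * V(p)) - perimeter(p - h * V(p))) / (2.0 * h)


def tangential_divergence_integral(n=20000):
    """Trapezoidal rule for int_Gamma (div V - n^T DV n) ds on the circle."""
    theta, p = circle_points(n)
    px = p[:, 0]                    # first coordinate x_1
    n_first = np.cos(theta)         # first component of the outer normal
    div_V = 2.0 * px                # DV = [[2 x_1, 0], [0, 0]]
    n_DV_n = 2.0 * px * n_first**2
    ds = R * 2.0 * np.pi / n
    return np.sum((div_V - n_DV_n) * ds)


print(fd_perimeter_derivative(), tangential_divergence_integral(), np.pi * R)
```

Multiplying by the regularization weight \(\nu\) gives the corresponding contribution to the shape derivative.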

The last step to derive the shape derivative of the reduced objective functional (25) is to compute the shape derivative of the nonlocal bilinear form \({A}_\Gamma \).

Lemma 4.9

(Shape derivative of the nonlocal bilinear form) Let the assumptions (P0) and (P1) be satisfied. Further let \(\Gamma \) be a shape with corresponding state variable \(u^0\) and adjoint variable \(v^0\). Then for a vector field \(\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^d)\) we find for a square integrable kernel \(\gamma \) that

and for a singular kernel \(\gamma \) that

Proof

Define \(\xi ^t(\textbf{x}):=\det D\textbf{F}_{\textbf{t}}(\textbf{x})\) and \(\gamma _{ij}^t(\textbf{x},\textbf{y}) :=\gamma _{ij}(\textbf{F}_{\textbf{t}}(\textbf{x}), \textbf{F}_{\textbf{t}}(\textbf{y}))\).

Case 1: Square integrable kernels

Using representation (8) of the nonlocal bilinear form \({A}\), we can write

First, we recall that the function \(\xi ^t\) is continuously differentiable and \(\left. \frac{d}{dt}\right| _{t=0} \xi ^t(\textbf{x}) = {{\,\textrm{div}\,}}\textbf{V}(\textbf{x})\); see, e.g., [45].

We then derive the shape derivative of the nonlocal bilinear form by applying Lemma 4.1, as described in Remark 4.2, to \(\gamma _{ij}\in W^{1,1}(\Omega \times \Omega ,\mathbb {R})\) and \(\gamma _{iI}\in W^{1,1}(\Omega \times \Omega _I,\mathbb {R})\), and by using the product rule for Fréchet derivatives:

For the second equality, we use the following identities, which follow from Fubini’s theorem by swapping \(\textbf{x}\) and \(\textbf{y}\):

Case 2: Singular kernels

Since \(\xi ^t(\textbf{x}) = \det D\textbf{F}_{\textbf{t}}(\textbf{x})\) is continuous and therefore bounded on \(\Omega \cup \Omega _I\), the double integral

is well-defined (see also Remark 4.3). Moreover, since \(\gamma ^t \in W^{1,1}(D_n, \mathbb {R})\), we can conclude, as outlined in Remark 4.2, that the function \(\gamma ^t\) is differentiable in \(L^1(D_n,\mathbb {R})\) with

Therefore, we obtain

for the singular symmetric kernel

where the integrals (28)–(30) are also well-defined, since \({{\,\textrm{div}\,}}\textbf{V}\) is bounded on \(\Omega \) and Assumption (P1) yields the existence of derivatives \(\nabla \gamma _{ij}\) and \(\nabla \gamma _{iI}\) and of constants \(0 \le C_1,C_2 < \infty \) with

\(\square \)
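Both proofs above use \(\left.\frac{d}{dt}\right|_{t=0^+}\xi^t = {{\,\textrm{div}\,}}\textbf{V}\). This is Jacobi's formula \(\left.\frac{d}{dt}\right|_{t=0}\det(\textbf{I}+t\textbf{M}) = \operatorname{tr}\textbf{M}\) applied pointwise with \(\textbf{M} = D\textbf{V}(\textbf{x})\), since \(\operatorname{tr} D\textbf{V} = {{\,\textrm{div}\,}}\textbf{V}\). A quick numerical check, with random matrices standing in for \(D\textbf{V}(\textbf{x})\) (a hypothetical test setup), compares a forward difference quotient with the trace:

```python
import numpy as np


def xi(t, DV):
    """xi^t = det(I + t DV) at a single point with Jacobian DV."""
    return np.linalg.det(np.eye(DV.shape[0]) + t * DV)


def forward_quotient(DV, h=1e-6):
    """Forward difference approximating d/dt xi^t at t = 0^+."""
    return (xi(h, DV) - xi(0.0, DV)) / h


rng = np.random.default_rng(1)
for dim in (2, 3):
    DV = rng.normal(size=(dim, dim))  # random stand-in for DV(x)
    print(dim, forward_quotient(DV), np.trace(DV))  # trace(DV) = div V
```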

5 Numerical experiments

In this section, we put formula (26) for the shape derivative of the reduced objective functional, derived above, into numerical practice. In the following numerical examples, we test one singular symmetric kernel and one nonsymmetric square integrable kernel. Specifically,

where

with scaling constants \(d_{\delta }:=\frac{2-2\,s}{\pi \delta ^{2-2\,s}}\) and

where

with scaling constants \(c_\delta :=\frac{1}{\delta ^4}\). We truncate all kernel functions by \(\Vert \cdot \Vert _2\)-balls of radius \(\delta = 0.1\) so that \(\Omega \cup \Omega _I\subset [-\delta ,1+\delta ]^2\). As a right-hand side we choose a piecewise constant function

i.e., \(f_1 = 100\) and \(f_2 = -10\). We note that the nonsymmetric kernel \(\gamma ^{nonsym}\) satisfies the conditions for the class of integrable kernels considered in [61], so that the corresponding nonlocal problem is well-posed, and assumptions (P0) and (P1) can easily be verified in this case. The symmetric kernel \(\gamma ^{sym}\) is a special case of Example 2.1, and therefore assumptions (P0) and (P1) are met. The well-posedness of the nonlocal problem for the singular kernel is shown in [24]. For the perimeter regularization, we choose \(\nu = 0.001\). Moreover, since we only use vector fields \(\textbf{V}\) with \({\text {supp}(\textbf{V}) \cap \Gamma _k \ne \emptyset }\), where \(\Gamma _k\) is the current interface in iteration k of Algorithm 1, we additionally assume that the nonlocal boundary has no direct influence on the shape derivative of the nonlocal bilinear form \(D_\Gamma {A}_\Gamma \). Hence, for all \(\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^2)\) with \({\text {supp}(\textbf{V}) \cap \Gamma _k \ne \emptyset }\), we have for the square integrable kernel

and for the singular symmetric kernel

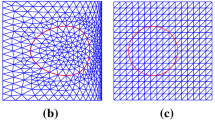
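The scaling constants and the ball truncation described above can be written down directly; the sketch below uses \(\delta = 0.1\) from the text. The fractional parameter s is not fixed in this passage, so `s` is a free argument here (s = 0.5 in the usage lines is a hypothetical value), and treating the ball as open (\(\Vert\textbf{x}-\textbf{y}\Vert_2 < \delta\)) is an assumption.

```python
import math

DELTA = 0.1  # interaction horizon used in the experiments


def d_delta(s, delta=DELTA):
    """Scaling constant (2 - 2s) / (pi * delta^(2 - 2s)) of the singular symmetric kernel."""
    return (2.0 - 2.0 * s) / (math.pi * delta ** (2.0 - 2.0 * s))


def c_delta(delta=DELTA):
    """Scaling constant 1 / delta^4 of the nonsymmetric integrable kernel."""
    return 1.0 / delta ** 4


def in_interaction_ball(x, y, delta=DELTA):
    """Truncation: y interacts with x iff ||x - y||_2 < delta (open ball assumed)."""
    return math.dist(x, y) < delta


print(c_delta())     # approximately 1e4
print(d_delta(0.5))  # 1 / (0.1 * pi), about 3.183
```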

To solve problem (12), we apply a finite element method with continuous piecewise linear basis functions on triangular grids for the discretization of the nonlocal constraint equation. In particular, we use the free meshing software Gmsh [30] to construct the meshes and a customized version of the Python package nlfem [35] to assemble the stiffness matrices of the nonlocal state and adjoint equations as well as the load vector of the shape derivative \(D_\Gamma {A}_\Gamma \). Moreover, to assemble the load vectors of the state and adjoint equations and the shape derivatives \(D_\Gamma J\) and \(D_\Gamma F_\Gamma \), we employ the open-source finite element software FEniCS [1, 2]. For a detailed discussion of the assembly of the nonlocal stiffness matrix, we refer to [22, 35]. Here, we only highlight how to implement a subdomain-dependent kernel of type (3). During mesh generation, each triangle is labeled according to its subdomain. Thus, whenever we integrate over a pair of triangles, we can read out their labels (i, j) and choose the corresponding kernel \(\gamma _{ij}\).
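The label-based kernel selection can be sketched as a lookup table keyed by the subdomain labels of the two triangles. This is a hypothetical illustration, not the nlfem interface: the constant kernel values, the label convention (1 for \(\Omega_1\), 2 for \(\Omega_2\)) and the one-point centroid rule are assumptions, and an actual assembly uses proper quadrature and handles the ball truncation more carefully [22, 35].

```python
import numpy as np

DELTA = 0.1  # truncation radius used in the experiments


def truncated_constant(value, delta=DELTA):
    """Hypothetical kernel gamma_ij(x, y) = value if ||x - y||_2 < delta, else 0."""
    def gamma(x, y):
        return value if np.linalg.norm(x - y) < delta else 0.0
    return gamma


# Hypothetical kernel table keyed by the labels (i, j); gamma_12 and gamma_21 may differ.
KERNELS = {
    (1, 1): truncated_constant(3.0),
    (1, 2): truncated_constant(1.0),
    (2, 1): truncated_constant(1.0),
    (2, 2): truncated_constant(0.5),
}


def pair_contribution(a, b, labels, centroids, areas, kernels=KERNELS):
    """Centroid-rule approximation of the kernel integral over the triangle pair (T_a, T_b).

    The kernel gamma_ij is selected from the subdomain labels i = labels[a], j = labels[b]."""
    gamma_ij = kernels[(int(labels[a]), int(labels[b]))]
    return gamma_ij(centroids[a], centroids[b]) * areas[a] * areas[b]


labels = [1, 2, 2]  # label of each triangle, as assigned during mesh generation
centroids = np.array([[0.50, 0.50], [0.55, 0.50], [0.90, 0.90]])
areas = np.array([0.01, 0.02, 0.03])
print(pair_contribution(0, 1, labels, centroids, areas))  # uses gamma_12
```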

The data \({\bar{u}}\) is generated as the solution \(u({\overline{\Gamma }})\) of the constraint equation associated with a target shape \({\overline{\Gamma }}\). Thus, the data is represented as a linear combination of functions of the finite element basis. For the interpolation task in Line 3 of Algorithm 1, we therefore only need to transfer functions between (non-matching) finite element grids, which we do with the project function of FEniCS. In all examples below, the target shape \({\overline{\Gamma }}\) is chosen to be a circle of radius 0.25 centered at (0.5, 0.5).
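A minimal sketch of the subdomain labeling for the target configuration and of the piecewise constant right-hand side (\(f_1 = 100\), \(f_2 = -10\)) might look as follows. It assumes, as the figures suggest, that \(\Omega_1\) is the region enclosed by the circular interface; points of \(\Omega_I\) are not treated separately here.

```python
import numpy as np

CENTER = np.array([0.5, 0.5])  # target circle from the text
RADIUS = 0.25
F1, F2 = 100.0, -10.0          # f_1 on Omega_1, f_2 on Omega_2


def subdomain_label(points, center=CENTER, radius=RADIUS):
    """Label 1 for points enclosed by the circle (assumed to be Omega_1), label 2 otherwise."""
    dist = np.linalg.norm(points - center, axis=1)
    return np.where(dist < radius, 1, 2)


def rhs(points):
    """Piecewise constant right-hand side f evaluated at the given points."""
    return np.where(subdomain_label(points) == 1, F1, F2)


print(rhs(np.array([[0.5, 0.5], [0.9, 0.9]])))
```

Applied to triangle centroids, the same label function would reproduce the kind of per-triangle labels used for the kernel selection.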

We now present two non-trivial examples which differ in the choice of the initial guess \(\Gamma _0\). They are shown in Figs. 2 and 4 for the singular symmetric kernel and in Figs. 3 and 5 for the nonsymmetric integrable kernel. In each plot of these figures, the black line represents the target interface \({\overline{\Gamma }}\). Moreover, the blue area depicts \(\Omega _1\), the grey area \(\Omega _2\) and the red area the nonlocal interaction domain \(\Omega _I\).

Since the initial shapes are smaller than the target shape, the shape needs to expand in the first few iterations. As a result, the mesh nodes are pushed towards the boundary, the mesh quality decreases, and the algorithm stagnates, because nodes are not allowed to be pushed outside of \(\Omega \). Therefore, we apply a remeshing technique and remesh after the fifth and the tenth iteration. To remesh, we save the points of our current shape as a spline in a dummy.geo file, which also contains the information of the nonlocal boundary, and then compute a new mesh with Gmsh. In the new mesh, the nodes are sufficiently far away from the boundary, so that subsequent mesh deformations can further improve the objective function value.

It is important to mention that computation times and the performance of Algorithm 1 in general are very sensitive to the choice of parameters and may vary strongly, which is why reporting exact computation times is not very meaningful at this stage. Particularly delicate are the choices of the system parameters, namely the kernel (diffusion and convection) and the forcing term, which both determine the identifiability of the model. The choice of the Lamé parameters that control the step size, specifically \(\mu _{max}\), is also delicate (we set \(\mu _{min} = 0\) in all experiments). The convergence history of each experiment is shown in Fig. 6.

Moreover, especially for system parameters with high interface sensitivity combined with an inconveniently small \(\mu _{max}\), mesh deformations may be large in the early phase of the algorithm. Such mesh deformations \(\tilde{\textbf{U}}_k\) of high magnitude can destroy the mesh, so that evaluating the reduced objective functional \(J^{red}(({{\,\mathrm{\textbf{Id}}\,}}+ \alpha \tilde{\textbf{U}}_k)(\Omega _k))\), which requires the assembly of the nonlocal stiffness matrix, becomes a pointless computation. To avoid such computations, we first perform a line search based on one simple mesh quality criterion. More precisely, we downscale the step size, i.e., \(\alpha \leftarrow \tau \alpha \), until all finite element nodes of the resulting mesh \(({{\,\mathrm{\textbf{Id}}\,}}+\alpha \tilde{\textbf{U}}_k)(\Omega _k)\) lie in \(\Omega \). After that, we continue with the backtracking line search in Line 18 of Algorithm 1.
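The remeshing step can be sketched with a small helper that writes the interface points as a closed Gmsh spline. The helper name, the mesh size `lc` and the tag numbering are hypothetical, and the outer and nonlocal boundaries, curve loops and physical groups that the dummy.geo file would also contain are omitted. In Gmsh's .geo syntax, repeating the first point tag at the end of a `Spline` closes the curve.

```python
def interface_geo(points, lc=0.02, first_tag=1):
    """Return Gmsh .geo commands for a closed spline through the interface points.

    points: sequence of (x, y) pairs ordered along the interface.
    lc: hypothetical target mesh size at the points.
    first_tag: first point/curve tag, to avoid clashes with tags of a template file."""
    lines = []
    tags = []
    for k, (x, y) in enumerate(points):
        tag = first_tag + k
        lines.append(f"Point({tag}) = {{{x:.6f}, {y:.6f}, 0, {lc}}};")
        tags.append(tag)
    # Repeating the first point tag closes the spline.
    closed = ", ".join(str(t) for t in tags + [tags[0]])
    lines.append(f"Spline({first_tag}) = {{{closed}}};")
    return "\n".join(lines)


print(interface_geo([(0.75, 0.5), (0.5, 0.75), (0.25, 0.5), (0.5, 0.25)]))
```

The returned text could then be combined with a template describing the remaining geometry and meshed, e.g., with `gmsh dummy.geo -2`.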
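The two-stage step size control described above can be sketched as follows. The feasibility test assumes \(\Omega = (0,1)^2\) (consistent with \(\Omega \cup \Omega_I \subset [-\delta, 1+\delta]^2\), but still an assumption) and is applied to the nodes of \(\Omega\) only. The second stage is an Armijo-type backtracking rule chosen for illustration; the exact acceptance criterion in Line 18 of Algorithm 1 may differ, and `J` is a hypothetical callable evaluating the reduced objective on the deformed nodes.

```python
import numpy as np


def feasible_step(nodes, U, alpha=1.0, tau=0.5, lower=0.0, upper=1.0, max_iter=60):
    """Shrink alpha <- tau * alpha until every displaced node lies in the box [lower, upper]^2.

    nodes, U: arrays of shape (n_nodes, 2) with node coordinates in Omega and the deformation.
    The closed box is a stand-in for Omega = (0, 1)^2 (assumption)."""
    for _ in range(max_iter):
        moved = nodes + alpha * U
        if np.all((moved >= lower) & (moved <= upper)):
            return alpha
        alpha *= tau
    raise RuntimeError("no feasible step size found")


def backtracking(J, nodes, U, slope, alpha=1.0, tau=0.5, c=1e-4, max_iter=30):
    """Armijo-type backtracking: accept alpha once J(nodes + alpha U) <= J(nodes) + c * alpha * slope.

    J: hypothetical callable returning the objective for given node coordinates.
    slope: directional derivative of J along U, negative for a descent direction."""
    J0 = J(nodes)
    for _ in range(max_iter):
        if J(nodes + alpha * U) <= J0 + c * alpha * slope:
            return alpha
        alpha *= tau
    return alpha  # fallback: last (tiny) step, which did not satisfy the Armijo condition
```

In use, the cheap geometric test runs first, and only its result is passed on as the starting step of the expensive search: `alpha = feasible_step(nodes, U)` followed by `alpha = backtracking(J, nodes, U, slope, alpha=alpha)`.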

6 Concluding remarks and future work

We have conducted a numerical investigation of shape optimization problems constrained by nonlocal system models. Our numerical experiments demonstrate that established shape optimization techniques are applicable in this setting, with the shape derivative of the nonlocal bilinear form as the novel ingredient. All in all, this work is only a first step towards exploring the interesting field of nonlocally constrained shape optimization problems.

Data availability

The code to get the results in Sect. 5 and the corresponding raw data from these experiments can be freely downloaded from https://github.com/schustermatthias/nlshape.

References

Mardal, K.-A., Logg, A., Wells, G.N., et al.: Automated Solution of Differential Equations by the Finite Element Method. Springer, Berlin (2012)

Alnæs, M., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The FEniCS project version 1.5. Arch. Numer. Softw. 3(100), 9–23 (2015)

Ameur, H.B., Burger, M., Hackl, B.: Level set methods for geometric inverse problems in linear elasticity. Inverse Prob. 20(3), 673–696 (2004)

Andreu, F., Mazon, J.M., Rossi, J.D., Toledo, J.: Nonlocal Diffusion Problems. Mathematical Surveys and Monographs, vol. 165. AMS, Providence (2010)

Barlow, M.T., Bass, R.F., Chen, Z.-Q., Kassmann, M.: Non-local Dirichlet forms and symmetric jump processes. Trans. Am. Math. Soc. 361(4), 1963–1999 (2009)

Bass, R.F., Kassmann, M., Kumagai, T.: Symmetric jump processes: localization, heat kernels and convergence. Ann. Inst. H. Poincaré Probab. Stat. 46(1), 59–71 (2010)

Berggren, M.: A unified discrete-continuous sensitivity analysis method for shape optimization. In: Applied and Numerical Partial Differential Equations: Scientific Computing in Simulation, Optimization and Control in a Multidisciplinary Context, pp. 25–39. Springer (2009)

Fernández Bonder, J., Ritorto, A., Salort, A.: A class of shape optimization problems for some nonlocal operators. Adv. Calc. Var. 11(4), 373–386 (2017)

Fernández Bonder, J., Spedaletti, J.F.: Some nonlocal optimal design problems. J. Math. Anal. Appl. 459(2), 906–931 (2018)

Brockmann, D.: Anomalous diffusion and the structure of human transportation networks. Eur. Phys. J. Spec. Top. 157(1), 173–189 (2008)

Brockmann, D., Theis, F.: Money circulation, trackable items, and the emergence of universal human mobility patterns. IEEE Pervasive Comput. 7(4), 28–35 (2008)

Burchard, A., Choksi, R., Topaloglu, I.: Nonlocal shape optimization via interactions of attractive and repulsive potentials (2018). arXiv:1512.07282

Capodaglio, G., D’Elia, M., Bochev, P., Gunzburger, M.: An energy-based coupling approach to nonlocal interface problems. Comput. Fluids 207, 104593 (2020)

Cusimano, N., Bueno-Orovio, A., Turner, I., Burrage, K.: On the order of the fractional Laplacian in determining the spatio-temporal evolution of a space-fractional model of cardiac electrophysiology. PLoS ONE 10(12), 1–16 (2015)

Dalibard, A.-L., Gérard-Varet, D.: On shape optimization problems involving the fractional Laplacian. ESAIM Control Optim. Calc. Var. 19(4), 976–1013 (2013)

Delfour, M.C., Zolésio, J.-P.: Shapes and Geometries: Metrics, Analysis, Differential Calculus, and Optimization. Advances in Design and Control. SIAM, Philadelphia (2011)

D’Elia, M., Glusa, C.: A fractional model for anomalous diffusion with increased variability. Analysis, algorithms and applications to interface problems. arXiv preprint arXiv:2101.11765 (2021)

D’Elia, M., Glusa, C., Otárola, E.: A priori error estimates for the optimal control of the integral fractional Laplacian (2018). arXiv:1810.04262

D’Elia, M., Gunzburger, M.: The fractional Laplacian operator on bounded domains as a special case of the nonlocal diffusion operator. Comput. Math. Appl. 66(7), 1245–1260 (2013)

D’Elia, M., Gunzburger, M.: Optimal distributed control of nonlocal steady diffusion problems. SIAM J. Control. Optim. 52(1), 243–273 (2014)

D’Elia, M., Gunzburger, M.: Identification of the diffusion parameter in nonlocal steady diffusion problems. Appl. Math. Optim. 73(2), 227–249 (2016)

D’Elia, M., Gunzburger, M., Vollmann, C.: A cookbook for finite element methods for nonlocal problems, including quadrature rules and approximate Euclidean balls. arXiv preprint arXiv:2005.10775 (2020)

Du, Q.: Nonlocal Modeling, Analysis, and Computation. Society for Industrial and Applied Mathematics, Philadelphia (2019)

Du, Q., Gunzburger, M., Lehoucq, R.B., Zhou, K.: Analysis and approximation of nonlocal diffusion problems with volume constraints. SIAM Rev. 54(4), 667–696 (2012)

Du, Q., Zhou, K.: Mathematical analysis for the peridynamic nonlocal continuum theory. ESAIM: M2AN 45(2), 217–234 (2011)

D’Elia, M., Du, Q., Gunzburger, M., Lehoucq, R.B.: Nonlocal convection–diffusion problems on bounded domains and finite-range jump processes. Comput. Methods Appl. Math. 17(4), 707–722 (2017)

D’Elia, M., Perego, M., Bochev, P., Littlewood, D.: A coupling strategy for nonlocal and local diffusion models with mixed volume constraints and boundary conditions. Comput. Math. Appl. 71(11), 2218–2230 (2016). Proceedings of the conference on Advances in Scientific Computing and Applied Mathematics. A special issue in honor of Max Gunzburger’s 70th birthday

Felsinger, M., Kassmann, M., Voigt, P.: The Dirichlet problem for nonlocal operators. Math. Z. 279(3), 779–809 (2015)

Gal, C.G., Warma, M.: Nonlocal transmission problems with fractional diffusion and boundary conditions on non-smooth interfaces. Commun. Partial Differ. Equ. 42(4), 579–625 (2017)

Geuzaine, C., Remacle, J.-F.: Gmsh: a 3-d finite element mesh generator with built-in pre- and post-processing facilities. Int. J. Numer. Methods Eng. 79(11), 1309–1331 (2009)

Gilboa, G., Osher, S.: Nonlocal operators with applications to image processing. Multiscale Model. Simul. 7(3), 1005–1028 (2009)

Glusa, C., D’Elia, M., Capodaglio, G., Gunzburger, M., Bochev, P.: An asymptotically compatible coupling formulation for nonlocal interface problems with jumps. arXiv preprint arXiv:2203.07565 (2022)

Guillemin, V., Pollack, A.: Differential Topology, vol. 370. American Mathematical Soc., Providence (2010)

Hintermüller, M., Ring, W.: A second order shape optimization approach for image segmentation. SIAM J. Appl. Math. 64(2), 442–467 (2003)

Klar, M., Vollmann, C., Schulz, V.: A flexible 2d fem code for nonlocal convection-diffusion and mechanics (2022). arXiv:2207.03921

Laurain, A., Sturm, K.: Distributed shape derivative via averaged adjoint method and applications. ESAIM Math. Model. Numer. Anal. 50(4), 1241–1267 (2016)

Levendorskiǐ, S.Z.: Pricing of the American put under Lévy processes. Int. J. Theor. Appl. Finance 07(03), 303–335 (2004)

Lou, Y., Zhang, X., Osher, S., Bertozzi, A.: Image recovery via nonlocal operators. J. Sci. Comput. 42(2), 185–197 (2010)

Michor, P.W., Mumford, D.: Vanishing geodesic distance on spaces of submanifolds and diffeomorphisms. Doc. Math. 10, 217–245 (2005)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Parini, E., Salort, A.: Compactness and dichotomy in nonlocal shape optimization (2018). arXiv:1806.01165

Peyré, G., Bougleux, S., Cohen, L.: Non-local Regularization of Inverse Problems, pp. 57–68. Springer, Berlin (2008)

Rodriguez, G.E., Gimperlein, H., Stocek, J.: Nonlocal interface problems: modeling, regularity, finite element approximation, Preprint (2020). http://www.macs.hw.ac.uk/hg94/interface.pdf

Rosasco, L., Belkin, M., De Vito, E.: On learning with integral operators. J. Mach. Learn. Res. 11, 905–934 (2010)

Schmidt, S.: Efficient large scale aerodynamic design based on shape calculus. Ph.D. thesis, Universität Trier (2010)

Schmidt, S., Ilic, C., Schulz, V., Gauger, N.: Three dimensional large scale aerodynamic shape optimization based on the shape calculus. AIAA J. 51(11), 2615–2627 (2013)

Schulz, V., Siebenborn, M.: Computational comparison of surface metrics for PDE constrained shape optimization. Comput. Methods Appl. Math. 16(3), 485–496 (2016)

Schulz, V., Siebenborn, M., Welker, K.: Structured inverse modeling in parabolic diffusion problems. SIAM J. Control. Optim. 53(6), 3319–3338 (2015)

Schulz, V., Siebenborn, M., Welker, K.: Efficient PDE constrained shape optimization based on Steklov–Poincaré-type metrics. SIAM J. Optim. 26(4), 2800–2819 (2016)

Schulz, V., Siebenborn, M., Welker, K.: A novel Steklov–Poincaré type metric for efficient PDE constrained optimization in shape spaces. SIAM J. Optim. 26(4), 2800–2819 (2016)

Seleson, P., Gunzburger, M., Parks, M.L.: Interface problems in nonlocal diffusion and sharp transitions between local and nonlocal domains. Comput. Methods Appl. Mech. Eng. 266, 185–204 (2013)

Siebenborn, M.: A shape optimization algorithm for interface identification allowing topological changes. J. Optim. Theory Appl. 177(2), 306–328 (2018)

Siebenborn, M., Vogel, A.: A shape optimization algorithm for cellular composites (2019). arXiv:1904.03860

Silling, S.A.: Reformulation of elasticity theory for discontinuities and long-range forces. J. Mech. Phys. Solids 48(1), 175–209 (2000)

Sire, Y., Vázquez, J.L., Volzone, B.: Symmetrization for fractional elliptic and parabolic equations and an isoperimetric application. Chin. Ann. Math. Ser. B 38(2), 661–686 (2017)

Sokolowski, J., Zolésio, J.-P.: Introduction to Shape Optimization. Springer, Berlin (1992)

Sturm, K.: Minimax Lagrangian approach to the differentiability of nonlinear pde constrained shape functions without saddle point assumption. SIAM J. Control Optim. 53(4), 2017–2039 (2015)

Sturm, K.: On shape optimization with non-linear partial differential equations. Ph.D. thesis, Technische Universität Berlin (2015)

Tankov, P.: Financial Modelling with Jump Processes. CRC Press, Cambridge (2003)

Tian, X.: Nonlocal models with a finite range of nonlocal interactions. Ph.D. thesis, Columbia University (2017). https://doi.org/10.7916/D8ZG6XWN

Vollmann, C.: Nonlocal models with truncated interaction kernels—analysis, finite element methods and shape optimization. Ph.D. thesis, Universität Trier (2019). https://ubt.opus.hbz-nrw.de/frontdoor/index/index/docId/1225

Welker, K.: Efficient PDE constrained shape optimization in shape spaces. Ph.D. thesis, Universität Trier (2016). http://ubt.opus.hbz-nrw.de/volltexte/2017/1024/

Welker, K.: Suitable spaces for shape optimization (2017). arXiv:1702.07579

Zhou, K., Du, Q.: Mathematical and numerical analysis of linear peridynamic models with nonlocal boundary conditions. SIAM J. Numer. Anal. 48(5), 1759–1780 (2010)

Acknowledgements

This work has been partly supported by the German Research Foundation (DFG) within the Research Training Group 2126: ‘Algorithmic Optimization’.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Proof of Lemma 4.6

Proof

Define \({\bar{u}}^t(\textbf{x}) :={\bar{u}}(\textbf{F}_{\textbf{t}}(\textbf{x}))\), \(\xi ^t(\textbf{x}) :=\det D\textbf{F}_{\textbf{t}}(\textbf{x}), \gamma ^t_\Gamma (\textbf{x},\textbf{y}) :=\gamma _\Gamma (\textbf{F}_{\textbf{t}}(\textbf{x}),\textbf{F}_{\textbf{t}}(\textbf{y})),\ f_\Gamma ^t(\textbf{x}) :=f_\Gamma (\textbf{F}_{\textbf{t}}(\textbf{x}))\) and \({\nabla f_\Gamma ^t(\textbf{x}) :=\nabla f_\Gamma (\textbf{F}_{\textbf{t}}(\textbf{x}))}\).

Assumption (H0):

Set \({\tilde{G}}:[0,1] \rightarrow \mathbb {R}\), \({\tilde{G}}(s) = G(t,su^t + (1-s)u^0,v)\). We show that \({\tilde{G}}\) is continuously differentiable and therefore also absolutely continuous. Using the linearity of \({A}\) in its first argument, we compute

which is obviously continuous and therefore the first condition of Assumption (H0) holds.

Furthermore the second criterion of (H0) is also satisfied:

As mentioned in Sect. 3.2 the averaged adjoint equation (18) can be reformulated as

Since the right-hand side of (31) is a linear and continuous functional with respect to \({\tilde{u}}\), Eq. (31) is a well-defined nonlocal problem, which has a unique solution \(v^t\) due to Assumption (P0). Using Assumption (P0) once more, we conclude for \(u^t\) that there exists a \(C_2 > 0\) such that

where in the last step we used that \(||f_\Gamma ^t\xi ^t||_{L^2(\Omega )} \rightarrow ||f_\Gamma ||_{L^2(\Omega )}\) [58, Lemma 2.16]. Since \({\xi ^t(\textbf{x}) = \det (\textbf{I} + tD\textbf{V}(\textbf{x}))}\) is continuous on \([0,T]\times {\bar{\Omega }}\), there exists a \({\bar{\xi }}\) such that \(|\xi ^t(\textbf{x})|\le {\bar{\xi }}\) for all \((t,\textbf{x}) \in [0,T]\times {\bar{\Omega }}\). Because \({\bar{u}}^t \rightarrow {\bar{u}}\) in \(L^2(\Omega )\), there exists a \(C_3 > 0\) with \(||{\bar{u}}^t||_2 < C_3\) for \(t \in [0,T]\). Then we derive for \(v^t\) that

Assumption (H1):

Since \(u^t\) and \(v^t\) are bounded for \(t \in [0,T]\), for every sequence \(\{t_n\}_{n \in \mathbb {N}}\) with \(t_n \rightarrow 0\) there exist subsequences \(\{t_{n_k}\}_{k \in \mathbb {N}}\) and \(\{t_{n_l}\}_{l \in \mathbb {N}}\) and functions \(q_1, q_2 \in L^2(\Omega \cup \Omega _I)\) such that \(u^{t_{n_l}} \rightharpoonup q_1\) and \(v^{t_{n_k}} \rightharpoonup q_2\) in \(L^2(\Omega \cup \Omega _I)\).

In the next part of the proof, we will make use of the following observation: Let \(\{t_k\}_{k \in \mathbb {N}} \in [0,T]^{\mathbb {N}}\) be a sequence with \(t_k \rightarrow 0\) for \(k \rightarrow \infty \) and \(g^t, h^{t_k}, h^0 \in L^2(\Omega \cup \Omega _I)\) for \(t \in [0,T]\) and \(k \in \mathbb {N}\). Additionally, assume that \(g^t \rightarrow g^0\) and \(h^{t_k} \rightharpoonup h^0\) in \(L^2(\Omega \cup \Omega _I)\) holds. Then, we can conclude

Case 1: Proof of (H1) for square integrable kernels

Since \(\phi _{ij}\) is essentially bounded on \(\Omega \times \Omega \), \(\phi _{iI}\) is essentially bounded on \(\Omega \times \Omega _I\) and \(\xi ^t\) is continuous and therefore bounded on \({\bar{\Omega }}\), we can conclude that

Then, applying [58, Lemma 2.16] yields \(\gamma ^{t} \rightarrow \gamma \) in \(L^2(\Omega \times (\Omega \cup \Omega _I))\) and therefore, along a subsequence, \(\gamma ^t (\textbf{x},\textbf{y}) \rightarrow \gamma (\textbf{x},\textbf{y})\) for a.e. \((\textbf{x},\textbf{y}) \in \Omega \times (\Omega \cup \Omega _I)\). As a consequence, the dominated convergence theorem yields

Thus, by (32) we derive

Due to the continuity of parameter integrals, we have

Analogously, we can show

So we can conclude \(\lim _{l \rightarrow \infty } {A}(t_{n_l},u^{t_{n_l}},v) = A(0,q_1,v)\). Because \(f_\Gamma ^t \xi ^t\rightarrow f_\Gamma \) in \(L^2(\Omega )\) according to [58, Lemma 2.16], we can compute for all \(v\in L_c^2(\Omega \cup \Omega _I)\)

Since the solution is unique, we derive \(q_1 = u^0\) and \(u^t \rightharpoonup u^0\). Similarly, we have for \(q_2\) and for all \({\tilde{u}} \in L^2_c(\Omega \cup \Omega _I)\)

So we conclude \(q_2=v^0\) and \(v^t \rightharpoonup v^0\) as \(t \searrow 0\). By the mean value theorem, there exist \(s_t \in (0,t)\) with \(s_t \rightarrow 0\) as \(t \searrow 0\) such that

Therefore, we prove Assumption (H1) by showing

Computing the derivative with respect to t yields

First, we show

By applying [58, Lemma 2.16], we obtain \(\nabla f_\Gamma ^t \xi ^t\rightarrow \nabla f_\Gamma \) in \(L^2(\Omega ,{\mathbb {R}^d})\) and \(f_\Gamma ^t \rightarrow f_\Gamma \) in \(L^2(\Omega )\). Since every \({\textbf{V}\in C_0^1(\Omega \cup \Omega _I,\mathbb {R}^d)}\) is bounded, we can conclude \((\nabla f_\Gamma ^t)^\top \textbf{V}\xi ^t\rightarrow \nabla f_\Gamma ^\top \textbf{V}\) in \(L^2(\Omega )\). Moreover, for every \(t \in [0,T)\) the derivative \({\left. \frac{d}{dr}\right| _{r=t^+}\xi ^r = \left. \frac{d}{dr}\right| _{r=t^+} \det (I + r D\textbf{V})}\) is continuous in r and \({\left. \frac{d}{dr}\right| _{r=0^+}\xi ^r = {{\,\textrm{div}\,}}\textbf{V}}\) (see, e.g., [45]), so we derive \(f_\Gamma ^t \left. \frac{d}{dr}\right| _{r=t^+}\xi ^r \rightarrow f_\Gamma {{\,\textrm{div}\,}}\textbf{V}\) in \(L^2(\Omega )\). Again by using (32), we obtain the convergence in (33).

Analogously, the convergence of \(\lim _{t \searrow 0 } \partial _t J(t, u^0) = \partial _t J(0, u^0)\) can be shown.

Furthermore, we now employ representation (7) of the nonlocal bilinear form \({A}\) to compute the partial derivative of \({A}\) with respect to t

Since \(\left. \frac{d}{dr}\right| _{r=t^+} \xi ^r(\textbf{x})\) and \(\xi ^t(\textbf{x})\) are continuous in \({\textbf{x}\in {\bar{\Omega }}}\), \({\phi _{ij},\nabla \phi _{ij}}\) are essentially bounded for \({(\textbf{x},\textbf{y}) \in {\bar{\Omega }} \times {\bar{\Omega }}}\) and \(\phi _{iI},\nabla \phi _{iI}\) are essentially bounded for \({(\textbf{x},\textbf{y}) \in {\bar{\Omega }} \times {\bar{\Omega }}_I}\), we can conclude in the same manner as above that \(A_i(t,u^0)(\textbf{x},\cdot ) \in L^2(\Omega \cup \Omega _I)\) for all \(\textbf{x}\in \Omega \setminus \Gamma \) and therefore

As a consequence, we derive \(\lim _{s,t \searrow 0} \partial _t {A}(t,u^0,v^s) = \partial _t {A}(0,u^0,v^0)\).

All in all, we obtain

Case 2: Proof of (H1) for singular kernels

Define \(D_n:= \{(\textbf{x},\textbf{y}) \in (\Omega \cup \Omega _I)^2: \Vert \textbf{x}- \textbf{y}\Vert _2 > \frac{1}{n} \}\) for \(n \in \mathbb {N}\). Since, as shown in Remark 4.3, \(\gamma ^{t}(\textbf{x},\textbf{y}) < L\gamma ^* n^{d+2s} \) for all \(t \in [0,T]\) and \((\textbf{x},\textbf{y}) \in D_n\), and since \(\xi ^t\) is continuous on \({\bar{\Omega }} \cup {\bar{\Omega }}_I\), we can conclude that

and by using [58, Lemma 2.16] that
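The bound on \(D_n\) rests on an elementary inequality, sketched below. For illustration only, it assumes a kernel dominated pointwise by \(\gamma ^*\Vert \textbf{x}-\textbf{y}\Vert _2^{-(d+2s)}\) with \(d+2s>0\); the constant \(L\) and the effect of the transformation are as in Remark 4.3 and are not reproduced here.

```latex
(\mathbf{x},\mathbf{y}) \in D_n
\;\Longrightarrow\; \|\mathbf{x}-\mathbf{y}\|_2 > \tfrac{1}{n}
\;\Longrightarrow\; \|\mathbf{x}-\mathbf{y}\|_2^{-(d+2s)} < n^{d+2s}
\;\Longrightarrow\; \gamma^*\,\|\mathbf{x}-\mathbf{y}\|_2^{-(d+2s)} < \gamma^*\, n^{d+2s},
```

The middle step uses that \(r \mapsto r^{-(d+2s)}\) is strictly decreasing on \((0,\infty )\). On each \(D_n\) the kernel is therefore bounded, which is what allows bounded-kernel arguments to be applied there before letting \(n \rightarrow \infty \).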

With this convergence and (32), we derive the second step, and the dominated convergence theorem yields the first and third steps of the following computation:

Thus, \(q_1 = u^0\) and \(u^t \rightharpoonup u^0\). Analogously, we can show

and therefore derive \(q_2 = v^0\) and \(v^t \rightharpoonup v^0\). As in Case 1, the next step is to prove

Applying (32) and the dominated convergence theorem again, we conclude

Analogously to Case 1, we obtain

\(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schuster, M., Vollmann, C. & Schulz, V. Shape optimization for interface identification in nonlocal models. Comput Optim Appl (2024). https://doi.org/10.1007/s10589-024-00575-7


DOI: https://doi.org/10.1007/s10589-024-00575-7