Abstract

We derive novel algorithms for optimization problems constrained by partial differential equations describing multiscale particle dynamics, including non-local integral terms representing interactions between particles. In particular, we investigate problems where the control acts as an advection ‘flow’ vector or a source term of the partial differential equation, and the constraint is equipped with boundary conditions of Dirichlet or no-flux type. After deriving continuous first-order optimality conditions for such problems, we solve the resulting systems by developing a link with computational methods for statistical mechanics, deriving pseudospectral methods in space and time variables, and utilizing variants of existing fixed-point methods as well as a recently developed Newton–Krylov scheme. Numerical experiments indicate the effectiveness of our approach for a range of problem set-ups, boundary conditions, as well as regularization and model parameters, in both two and three dimensions. A key contribution is the provision of software which allows the discretization and solution of a range of optimization problems constrained by differential equations describing particle dynamics.

1 Introduction

In this work we describe a novel approach for tackling partial differential equation (PDE)-constrained optimization problems for systems in which the underlying dynamics are described by multiscale, interacting particle systems. Our methods are widely applicable to the optimization of many systems described by non-local, non-linear PDEs, some cases of which have recently received significant attention in the literature [1, 3, 4, 6, 13, 15, 30]. The optimal control of particle systems described by stochastic differential equations (SDEs) [66] is also an active field of research with applications in consensus formation [64], crowd control through external agents [80], pedestrian modelling [17], and molecular dynamics [73], to which our approach could be extended.

Here, we aim to provide a link between such PDE-constrained optimization problems and state-of-the-art methods in statistical mechanics (known as Dynamic Density Functional Theory, or DDFT) [27, 34, 52, 55, 68, 79, 86, 87], before devising numerical methods for such problems using a pseudospectral method in space and time, allowing highly efficient and accurate solution of both the forward and optimization problems [14, 40, 60, 81]. Having derived first-order optimality conditions using the formal Lagrange method, we modify existing ‘sweeping’, or fixed-point, algorithms [4, 17] to reliably solve such systems, and apply a recently developed Newton–Krylov method [41, 42] to tackle non-linear optimization problems with a higher-order (Newton-type) scheme. To our knowledge, this combination of approaches has not previously been applied to particle dynamics problems; it enables the accurate resolution of complicated optimization problems in up to three dimensions. We demonstrate how to efficiently implement such methods for problems with both Dirichlet and Robin (no-flux) boundary conditions, provide validation test cases, and accompany the paper with an open-source software implementation [2], based on 2DChebClass [40, 60].

Our use of pseudospectral methods has three main advantages over existing implementations: (i) due to a novel implementation of spatial convolutions, we are not restricted to periodic domains or the use of Fourier grids and can also tackle convolutions with bounded support; (ii) for problems of the types studied here, in particular when the solutions are expected to be smooth and we require accurate solutions, pseudospectral methods provide significant computational gains over finite difference, finite element, or finite volume methods; (iii) using pseudospectral interpolation in time allows us to move beyond fixed time-stepping methods, employing more accurate and efficient ordinary differential equation (ODE) and differential–algebraic equation (DAE) solvers, and a spectral-in-time Newton–Krylov method.

This paper is structured as follows. In Sect. 2 we provide relevant background to multiscale particle dynamics, pseudospectral methods, and PDE-constrained optimization. In Sect. 3 we give the associated first-order optimality conditions, followed by a description of the numerical methods in Sect. 4. The results of our numerical experiments are reported in Sect. 5, followed by some concluding remarks in Sect. 6.

2 Background

In this section we detail the necessary background for the development of our algorithms. In Sect. 2.1 we describe relevant material on multiscale particle dynamics, in Sect. 2.2 we outline pseudospectral methods, in Sect. 2.3 we state the PDE-constrained optimization problems for particle dynamics that we will consider, and in Sect. 2.4 we survey related work in the area of mean-field optimal control.

2.1 Multiscale particle dynamics

The dynamics of many systems can be accurately described by interacting particles or agents. Examples range in scale from electrons in atoms and molecules [77], through biological cells in tissues [9], up to planets and stars in galaxies [12]. Other individual-based models include animals undergoing flocking and swarming [88], pedestrians walking [31], or people who interact and thus change their opinions [51].

In principle, such situations can be modelled by differential equations for the ‘state’ (e.g., position, momentum, opinion) of each individual. However, physical systems typically contain huge numbers of particles (e.g., \(\sim 10^{25}\) molecules in a litre of water) and, as such, are beyond the reach of standard numerical methods, both in terms of storage and processor time. For N particles, typical algorithms scale as \(N^2\) or \(N^3\), which prevents direct computation for more than, say, \({\mathcal {O}}(10^4)\) particles. Given the vast separation of scales between computationally tractable and physically relevant problems, this issue cannot be overcome through incremental improvements in computer hardware.

An additional complication of directly solving the dynamics of such systems, e.g., through Newtonian dynamics, is the sensitive dependence on initial conditions [49]. For many physical systems, it is unreasonable to assume that one knows the exact initial conditions for each particle. As such, one is interested not in a particular realization of the dynamics, but rather in an ‘average’ behaviour, which is typical for the system. Both of these challenges suggest that it would be prudent to instead study the dynamics through a statistical mechanics approach, for which one is interested in the macroscopic quantities, rather than individual realizations [48]. However, this approach comes with its own challenges and drawbacks.

The first challenge is that, at least without additional simplifying approximations, the resulting equations are no easier to solve than the underlying particle dynamics. For example, instead of treating the Langevin stochastic differential equation, which formally scales computationally as \(N^2\), one may treat the corresponding Fokker–Planck (forward Kolmogorov/Smoluchowski) equation, which is a partial differential equation in dN dimensions, where d is the number of degrees of freedom of the one-particle phase space (typically 6 when including momentum, and 3 when only considering the particle positions). A standard approach would then be to discretize each degree of freedom, reducing the PDE to a system of coupled ODEs, which may then, in principle, be solved numerically. The issue here lies with the curse of dimensionality: for M points in each degree of freedom, one requires a total of \(M^{dN}\) points. Taking, for the sake of argument, \(M=10\) points and \(N=10\) particles in three dimensions, the total number of points required is \(10^{30}\), which is far too many for a reasonable computation, and far too few for an accurate solution.
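The grid-size arithmetic above can be reproduced in a few lines. The Python sketch below counts tensor-product grid points for the dN-dimensional Fokker–Planck equation and, for comparison, for a 1-body density posed in d spatial dimensions only; the function names and the assumption of 8 bytes per stored value (double precision) are ours, purely for illustration.

```python
def fokker_planck_points(M: int, d: int, N: int) -> int:
    """Tensor-product grid size for a PDE in d*N dimensions with M points per degree of freedom."""
    return M ** (d * N)


def ddft_points(M: int, d: int) -> int:
    """Grid size for a 1-body density in d dimensions; independent of the particle number N."""
    return M ** d


M, d, N = 10, 3, 10          # the values used in the text
fp = fokker_planck_points(M, d, N)
dd = ddft_points(M, d)
bytes_per_value = 8          # assumption: one double-precision number per grid point

print(f"Fokker-Planck grid: {fp:.1e} points (~{fp * bytes_per_value / 1e18:.1e} exabytes)")
print(f"1-body density grid: {dd} points")
```

With \(M=10\), \(d=3\) and \(N=10\) this reports \(10^{30}\) grid points for the Fokker–Planck equation against \(10^3\) for the 1-body density, which is the gap that the coarse-grained approaches discussed next aim to exploit.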

A common approach to overcome this is to use ‘coarse-graining’, which reduces the dimensionality of the system, generally at the cost of a loss of accuracy or physical effects, and the introduction of uncontrolled approximations [83]. This links to the second challenge, which concerns the multiscale nature of the problem. In many systems of interest, physically crucial effects manifest themselves on scales ranging from the particle size all the way up to the macroscale. Examples include volume exclusion of hard particles [16], biological cellular alignment [11], and nucleation of clusters and clouds [53]. A standard coarse-graining approach would be to ignore effects such as volume exclusion, treat the whole system as a bulk, and hence determine quantities such as average densities and orientations [48]. Whilst this is viable in homogeneous systems close to equilibrium, it completely fails to capture heterogeneous systems, symmetry breaking, and many dynamical effects.

However, an extremely efficient and accurate example of coarse-graining which captures such effects is Dynamic Density Functional Theory (DDFT) [27, 55]. The crucial observation here is that the full N-body information in a system is a functional of the 1-body density, \(\rho (\mathbf {x},t)\) (i.e., the probability density of finding any one particle at a given position at a given time). This is an extension of classical density functional theory (DFT) (see, e.g., the early works [34, 68] and later reviews [52, 86, 87]), which considers the equilibrium case, and is linked to the celebrated quantum version [44]. The main challenge here is that the proof of this result is non-constructive; it is unknown how to map from \(\rho \) to the full information in the system. However, in many practical applications, it is \(\rho \) itself that is the quantity of interest. Hence it is desirable to derive closed equations of motion for the 1-body density, which is a function on \({\mathbb {R}}^d\) (at each time t), irrespective of N.

The simplest example is the diffusion equation, which corresponds to Brownian motion, and concerns non-interacting particles; here the reduction to the 1-body density is trivial. We are instead concerned with systems in which the particles interact, e.g., through electrostatic forces, volume exclusion, or exchange of information. Typical DDFTs can be thought of as generalized diffusion equations of the form

\[ \frac{\partial \rho }{\partial t} = \nabla \cdot \left( \rho \, \nabla \frac{\delta {\mathcal {F}}[\rho ]}{\delta \rho } \right) = -\nabla \cdot \mathbf {j}. \tag{2.1} \]

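Here \({\mathcal {F}}\) is the Helmholtz free energy of the system. For the non-interacting case, at equilibrium, it is

\[ {\mathcal {F}}_{\mathrm{id}}[\rho ] = \int \rho (\mathbf {x}) \left( \log \rho (\mathbf {x}) - 1 \right) \mathrm{d}\mathbf {x}, \]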

from which it follows that \(\nabla \frac{\delta {\mathcal {F}}_{\mathrm{id}} [\rho ]}{\delta \rho } = \frac{\nabla \rho }{\rho }\), resulting in the diffusion equation.

For more general systems, the exact free energy is unknown (except in the special case of hard rods in one dimension [78]). As such, much effort has been devoted to determining accurate approximations of the free energy for a wide range of systems, with particular focus on hard spheres [69] and particles with soft interactions [43]; these cases may be combined in a perturbative manner [35]. Here we will focus on a relatively simple DDFT, which closes the equation for \(\rho \) by assuming that the particles are, on average, uncorrelated. For particles which interact through an even pairwise potential \(V_2\), in an external potential field \(V_1\), the (approximate) free energy is modelled by

\[ {\mathcal {F}}[\rho ] = {\mathcal {F}}_{\mathrm{id}}[\rho ] + \int V_1(\mathbf {x}) \rho (\mathbf {x}) \, \mathrm{d}\mathbf {x} + \frac{1}{2} \iint V_2(\mathbf {x}-\mathbf {x}') \rho (\mathbf {x}) \rho (\mathbf {x}') \, \mathrm{d}\mathbf {x} \, \mathrm{d}\mathbf {x}'. \]

This is known as the mean-field approximation, which has been shown to be surprisingly accurate for a range of systems [10], and is known to be exact in the limit of dense systems of particles with soft interactions [58]. It should be regarded as a first step towards treating PDE-constrained optimal control systems for general DDFTs. Such systems are highly challenging, not only due to the non-local, non-linear nature of the PDEs, but also due to the complexity of the free energy functionals. For example, Fundamental Measure Theory, which describes the interactions of systems of hard particles, requires the computation of weighted densities through convolution integrals, followed by a further integral of a complicated function of these weighted densities [69]. We therefore postpone these challenges to future work.

A final challenge we will address here is the implementation of (spatial) boundary conditions. Most physical systems are constrained in some way, often in a ‘box’ with impassable walls, such that the number of particles is conserved. For DDFTs, the corresponding boundary condition is \(\mathbf {j}\cdot \mathbf {n}=0\) on the boundary, where \(\mathbf {j}\) is the flux, as in (2.1), and \(\mathbf {n}\) is the unit normal to the boundary. Whilst this is a standard Robin boundary condition, we note that the difficulty lies in the form of \(\mathbf {j}\); for interacting problems, \(\mathbf {j}\) is non-local and, as such, so is the corresponding boundary condition. This results in an equation which is challenging to solve numerically; see Sect. 4.
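To make the non-locality of the no-flux condition concrete, the following Python sketch (NumPy only) computes a one-dimensional mean-field equilibrium on \([-1,1]\) with Chebyshev collocation and evaluates the flux at the two boundary nodes. It assumes \(\mathbf{j} = -\rho\nabla\frac{\delta\mathcal{F}}{\delta\rho}\) with \(\frac{\delta\mathcal{F}}{\delta\rho} = \log\rho + V_1 + V_2*\rho\) (mean-field free energy in units with \(k_BT=1\)), a hypothetical external potential \(V_1(x)=x^2\), and a hypothetical Gaussian pair potential scaled by a strength `kappa`; the convolution is approximated with Clenshaw–Curtis quadrature and the equilibrium by a damped Picard iteration. It is an illustration only, not the solver of the accompanying software. Note that every entry of the computed flux, including the boundary values, depends on the density throughout the domain.

```python
import numpy as np


def cheb(N):
    """Chebyshev points x_j = cos(j*pi/N) and differentiation matrix (after Trefethen's cheb.m)."""
    x = np.cos(np.pi * np.arange(N + 1) / N)
    c = np.hstack([2.0, np.ones(N - 1), 2.0]) * (-1.0) ** np.arange(N + 1)
    dX = x[:, None] - x[None, :]
    D = np.outer(c, 1.0 / c) / (dX + np.eye(N + 1))
    D -= np.diag(D.sum(axis=1))                  # diagonal chosen so that each row sums to zero
    return D, x


def clencurt(N):
    """Clenshaw-Curtis weights on the same Chebyshev points (this sketch assumes even N)."""
    assert N % 2 == 0
    theta = np.pi * np.arange(N + 1) / N
    v = np.ones(N - 1)
    for k in range(1, N // 2):
        v -= 2.0 * np.cos(2 * k * theta[1:-1]) / (4 * k**2 - 1)
    v -= np.cos(N * theta[1:-1]) / (N**2 - 1)
    w = np.empty(N + 1)
    w[0] = w[-1] = 1.0 / (N**2 - 1)
    w[1:-1] = 2.0 * v / N
    return w


N, kappa, mass = 40, 0.5, 1.0                    # hypothetical parameters
D, x = cheb(N)
w = clencurt(N)
V1 = x**2                                        # hypothetical external potential
C = kappa * np.exp(-(x[:, None] - x[None, :]) ** 2) * w[None, :]   # (C @ rho)_i ~ kappa (V2 * rho)(x_i)

rho = np.full(N + 1, mass / 2.0)                 # uniform initial guess on [-1, 1]
for _ in range(1000):
    new = np.exp(-(V1 + C @ rho))                # Boltzmann factor of external + mean-field potential
    new *= mass / (w @ new)                      # enforce the prescribed mass
    if np.max(np.abs(new - rho)) < 1e-13:
        break
    rho = 0.5 * rho + 0.5 * new                  # damped Picard update
rho = new

# Non-local flux j = -(rho' + rho * (V1 + kappa V2 * rho)'), evaluated at all nodes.
flux = -(D @ rho + rho * (D @ (V1 + C @ rho)))
print("boundary flux:", flux[0], flux[-1])
print("mass:", w @ rho)
```

At the converged equilibrium the flux vanishes (up to rounding) at every node, so in particular \(j\,n = 0\) holds at \(x=\pm 1\); away from equilibrium the same boundary expression couples the boundary values to the whole density through the matrix `C`.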

2.2 Pseudospectral methods

As discussed in Sect. 2.1, many approaches to modelling particle dynamics processes, such as Langevin SDEs or the Fokker–Planck equation, suffer from the curse of dimensionality. This prohibits their computational solution for even a relatively moderate number of particles. Approaches from statistical mechanics can be used to partially mitigate these issues. Here we focus on DDFT models, which typically depend only on \(d\le 3\) spatial dimensions, and not directly on the number of particles N. This makes DDFT computationally feasible for physically relevant systems, with essentially arbitrarily large numbers of particles, while also capturing crucial features of the system which arise on the particle scale. However, these models are non-local, non-linear PDEs in up to three dimensions, and still pose a number of numerical challenges, which tend to prohibit the direct use of ‘standard’ solvers. In particular, a feasible numerical method must be able to compute non-linear, non-local convolution integrals, as well as to impose non-local no-flux boundary conditions. It should furthermore provide sufficient accuracy, in both space and time, to resolve particle-level phenomena, while still being computationally tractable. There are a number of methods for solving DDFT-like problems, the two most common of which are the finite element method (FEM) and pseudospectral methods. Recently, the finite volume method has also been applied to a range of DDFT problems, see [21, 22, 25, 57, 70, 71]. Here we focus on pseudospectral methods, but note that the algorithm presented below (see Sect. 4) may be easily adapted to other numerical methods. The main challenge in using FEM for DDFT problems lies in their non-locality.
Heuristically, the principal benefits of FEM are that it (i) produces large, but sparse matrices, leading to systems which may be efficiently solved, for example through the implementation of standard time-stepping schemes and carefully-chosen preconditioners (see e.g., [56, 61, 62, 67, 75, 89] for PDE-constrained optimization problems); and (ii) may be applied to complicated domains through standard triangulation/meshing routines. In contrast, for non-local problems such as DDFT the corresponding matrices are not only large, but also dense. Similar challenges arise with the use of finite volume methods. This prevents the use of standard numerical schemes and significantly increases the computational cost.

Recently, accurate and efficient pseudospectral methods have been developed to tackle these non-local, non-linear DDFTs [60]. Some details of the implementation will be discussed in Sect. 4; here we highlight the benefits and challenges. As is widely known [14, 81], pseudospectral methods are extremely accurate for problems with smooth solutions on ‘nice’ domains; here ‘nice’ roughly corresponds to domains which may be mapped to the unit square in a simple (e.g., conformal) manner. They are more challenging to apply on complicated domains (although spectral elements can be seen as a compromise between FEM and pseudospectral methods [14]), and lose much of their accuracy advantage when the solutions are not smooth (the accuracy is of order \((1/N)^p\), with N here denoting the number of collocation points, when the solution has p sufficiently nice derivatives [81], and this still comes at the cost of dense matrices).
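The contrast between smooth and non-smooth solutions can be seen with a standard Chebyshev differentiation matrix. The Python sketch below (NumPy only) follows the well-known construction from Trefethen's *Spectral Methods in MATLAB* and measures the maximum differentiation error at the collocation points for a smooth function, \(e^x\sin(5x)\), and for \(|x|^3\), which has limited regularity at \(x=0\); the test functions and grid sizes are our own choices for illustration. Here N is the polynomial degree (N+1 collocation points), not a particle number.

```python
import numpy as np


def cheb(N):
    """Chebyshev points x_j = cos(j*pi/N), j = 0..N, and the (N+1)x(N+1) differentiation matrix."""
    x = np.cos(np.pi * np.arange(N + 1) / N)
    c = np.hstack([2.0, np.ones(N - 1), 2.0]) * (-1.0) ** np.arange(N + 1)
    dX = x[:, None] - x[None, :]
    D = np.outer(c, 1.0 / c) / (dX + np.eye(N + 1))   # off-diagonal entries c_i / (c_j (x_i - x_j))
    D -= np.diag(D.sum(axis=1))                        # diagonal: rows of D sum to zero
    return D, x


def max_derivative_error(f, df, N):
    """Max error of the collocation derivative D f(x) against the exact derivative at the nodes."""
    D, x = cheb(N)
    return np.max(np.abs(D @ f(x) - df(x)))


smooth = (lambda x: np.exp(x) * np.sin(5 * x),
          lambda x: np.exp(x) * (np.sin(5 * x) + 5 * np.cos(5 * x)))
rough = (lambda x: np.abs(x) ** 3,
         lambda x: 3 * x * np.abs(x))

for N in (8, 16, 32, 64):
    print(N, max_derivative_error(*smooth, N), max_derivative_error(*rough, N))
```

For the smooth function the error reaches rounding level within a few dozen points, while for \(|x|^3\) it decreases only algebraically; the matrix `D` is dense in both cases.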

Their use to treat DDFT problems stems from three main observations: (i) at least in principle, the diffusion term present in all DDFTs should lead to smoothing of solutions for sufficiently smooth particle interactions; (ii) the pseudospectral matrices are always dense and, as such, treating non-local terms does not formally affect the numerical cost; (iii) non-local boundary conditions may be treated via standard differential–algebraic equation solvers, thus removing the need for bespoke treatments of different boundary conditions. Furthermore, as demonstrated in Sect. 5.2, it is possible to solve three-dimensional interacting problems using pseudospectral methods. Regardless of the particular numerical method chosen, the spatial non-localities in such applications will generally introduce dense matrices. In these cases, the low number of points required in pseudospectral approaches provides a significant benefit compared to, e.g., FEMs, allowing us to partially overcome the curse of dimensionality.
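Observation (ii) can be illustrated with a toy one-dimensional convolution. The Python sketch below assumes a smooth, hypothetical Gaussian kernel \(K(r)=e^{-r^2}\) on \([-1,1]\) and uses Clenshaw–Curtis quadrature weights on the Chebyshev points, so that the convolution becomes a dense matrix acting on the nodal values of \(\rho\). It is not taken from [60] or from the accompanying software, and it does not handle kernels with bounded support, whose lack of smoothness requires more care.

```python
import numpy as np
from math import erf, pi, sqrt


def clencurt(N):
    """Chebyshev points and Clenshaw-Curtis weights on [-1, 1] (even N), after Trefethen's clencurt.m."""
    if N % 2:
        raise ValueError("this sketch assumes even N")
    theta = np.pi * np.arange(N + 1) / N
    x = np.cos(theta)
    v = np.ones(N - 1)
    for k in range(1, N // 2):
        v -= 2.0 * np.cos(2 * k * theta[1:-1]) / (4 * k**2 - 1)
    v -= np.cos(N * theta[1:-1]) / (N**2 - 1)
    w = np.empty(N + 1)
    w[0] = w[-1] = 1.0 / (N**2 - 1)
    w[1:-1] = 2.0 * v / N
    return x, w


def convolution_matrix(x, w, kernel):
    """Dense matrix C with (C @ rho)[i] ~ integral over [-1, 1] of kernel(x_i - y) rho(y) dy."""
    return kernel(x[:, None] - x[None, :]) * w[None, :]


N = 30
x, w = clencurt(N)
C = convolution_matrix(x, w, lambda r: np.exp(-r**2))

rho = np.ones_like(x)          # test density with a closed-form convolution
exact = np.array([sqrt(pi) / 2 * (erf(1 - xi) + erf(1 + xi)) for xi in x])
print("max error:", np.max(np.abs(C @ rho - exact)))
print("nonzeros:", np.count_nonzero(C), "of", C.size)
```

Every entry of `C` is nonzero, so applying it costs the same as applying the (also dense) differentiation matrix on the same grid; with only 31 points the quadrature is already accurate to near rounding level for this smooth kernel.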

2.3 PDE-constrained optimization

In this section we introduce the two main PDE-constrained optimization problem structures that we consider within a multiscale particle dynamics setting. A significant complication compared to a standard PDE-constrained optimization problem is the presence of an integral interaction term. In the following, the terms ‘flow control’ and ‘source control’ refer to the application of the control in the PDE constraint either non-linearly, as a vector field within an advection operator, or linearly, as a scalar source term in the PDE.

2.3.1 Flow control problem

We commence with the following problem involving minimizing a cost functional containing a sum of \(L^2\)-norm terms over the entire space–time domain \(\Omega \times (0,T)\), constrained by a non-linear time-dependent advection–diffusion equation with an additional non-local integral term. The control is applied non-linearly in the form of a vector ‘flow’ term:

where

Here, \(\Omega \subset {\mathbb {R}}^{d}\), \(d\in \{1,2,3\}\), is some given domain with boundary \(\partial \Omega \), and T is a prescribed ‘final time’ up to which the process is modelled. The scalar function \(\rho \) and the vector-valued function \(\mathbf {w}\) are the state and control variables, respectively, \(\beta >0\) is a given regularization parameter, and \({\widehat{\rho }}(\mathbf {x},t)\), \(V_{\text {ext}}(\mathbf {x},t)\), \(f(\mathbf {x},t)\), \(\rho _{0}(\mathbf {x})\) are prescribed functions corresponding to the desired state, external potential, PDE source term, and initial condition, respectively. We highlight that frequently \(f(\mathbf {x},t)=0\), which with suitable boundary conditions results in conservation of mass; one reason we allow the case \(f(\mathbf {x},t)\ne 0\) is to enable us to more readily construct analytic test problems for (2.2). Additionally, the non-local integral term models interactions between individual particles, where \(\mathbf {K}\) denotes some vector function. We are particularly interested in the case where \(\mathbf {K}\) is odd, i.e., \(\mathbf {K}(\mathbf {x},\mathbf {x}\,') = -\mathbf {K}(\mathbf {x}\,',\mathbf {x}\,)\); this is the case when \(\mathbf {K}(\mathbf {x},\mathbf {x}\,') = \nabla _{\mathbf {x}} V_2(\mathbf {x}-\mathbf {x}\,')\) with \(V_2(\mathbf {x}) = V_2(\Vert \mathbf {x}\Vert )\) an even potential. However, for now we present the results for a general \(\mathbf {K}\). For \(V_2(\Vert \mathbf {x}\Vert )\) decreasing as \(\Vert \mathbf {x}\Vert \rightarrow \infty \), the integral term models repulsive (attractive) interactions when \(\kappa \) is positive (negative). Of course, much more general choices of \(V_2\) are possible. The parameter \(\kappa \) models the particle interaction strength. If \(\kappa \) is set to zero, the model reduces to a standard non-linear advection–diffusion equation control problem.
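The oddness of \(\mathbf{K}\) for radial pair potentials can be checked numerically. The Python sketch below assumes a hypothetical Gaussian potential \(V_2(r)=e^{-r^2}\) in \(d=2\), for which \(\mathbf{K}(\mathbf{x},\mathbf{x}') = \nabla_{\mathbf{x}} V_2(\Vert\mathbf{x}-\mathbf{x}'\Vert) = -2(\mathbf{x}-\mathbf{x}')e^{-\Vert\mathbf{x}-\mathbf{x}'\Vert^2}\). It compares this closed form with central differences, checks \(\mathbf{K}(\mathbf{x},\mathbf{x}') = -\mathbf{K}(\mathbf{x}',\mathbf{x})\), and verifies one consequence of oddness: for arbitrary weights \(w_i\) and density values \(\rho_i\), the discrete total interaction \(\sum_{i,j} w_i w_j \rho_i \rho_j \mathbf{K}(\mathbf{x}_i,\mathbf{x}_j)\) vanishes, a discrete analogue of Newton's third law.

```python
import numpy as np


def V2(r):
    """Hypothetical even pair potential V2(||x||) = exp(-||x||^2)."""
    return np.exp(-r**2)


def K(x, xp):
    """K(x, x') = grad_x V2(||x - x'||), in closed form for the Gaussian V2."""
    diff = np.asarray(x, float) - np.asarray(xp, float)
    return -2.0 * diff * np.exp(-np.dot(diff, diff))


def K_fd(x, xp, h=1e-6):
    """Central-difference approximation of grad_x V2(||x - x'||), one component at a time."""
    x = np.asarray(x, float)
    g = np.zeros_like(x)
    for k in range(x.size):
        e = np.zeros_like(x)
        e[k] = h
        g[k] = (V2(np.linalg.norm(x + e - xp)) - V2(np.linalg.norm(x - e - xp))) / (2 * h)
    return g


rng = np.random.default_rng(1)
pts = rng.uniform(-1, 1, size=(50, 2))     # sample points in d = 2
w = rng.uniform(0, 1, size=50)             # arbitrary positive quadrature-like weights
rho = rng.uniform(0, 1, size=50)           # arbitrary density values

fd_err = max(np.max(np.abs(K(p, q) - K_fd(p, q))) for p in pts[:5] for q in pts[5:10])
odd_err = max(np.max(np.abs(K(p, q) + K(q, p))) for p in pts for q in pts)
total_force = sum(w[i] * w[j] * rho[i] * rho[j] * K(pts[i], pts[j])
                  for i in range(50) for j in range(50))
print(fd_err, odd_err, np.abs(total_force).max())
```

The vanishing total interaction is the discrete counterpart of the fact that, for odd \(\mathbf{K}\), the interaction term redistributes particles without exerting a net force on the system as a whole.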

We consider two types of boundary conditions imposed on \(\rho \), specifically the Dirichlet condition:

for a given constant \(c\in {\mathbb {R}}\), and the ‘no-flux type’ condition:

Here,

with \(\frac{\partial }{\partial {}n}\) denoting the derivative with respect to the normal \(\mathbf {n}\). The latter is a no-flux boundary condition in the classical sense if \(f(\mathbf {x},t)=0\).

2.3.2 Source control problem

We also consider the following problem, with an analogous cost functional to the flow control problem, but now with a scalar function for the control variable, which is applied linearly in the form of a PDE source term. In order to distinguish the PDE operators below from those in the flow control problem in Sect. 2.3.1, we add a subscript, s, for source. This is again minimized subject to a non-linear time-dependent advection–diffusion equation with an additional integral term:

where \({\mathcal {I}}(\rho )\) is defined as in Sect. 2.3.1 and

This is posed along with the Dirichlet boundary condition (2.3), or the ‘no-flux type’ condition:

where

We highlight that this paper is focused on fast and effective numerical methods for solving problems of the form (2.2) and (2.5), as opposed to theoretical questions such as existence, uniqueness, and regularity. We refer to [1, 4, 13, 15] for discussion of the first two questions for optimization problems of similar structure, such as those arising from mean-field optimal control. We also note that proving such results even for the forward problem is highly challenging. As far as we are aware, current progress extends only to the case of periodic boundary conditions, see, e.g., [24, 26] and references therein; no-flux boundary conditions, such as those included here, introduce further challenges. One interesting, and potentially relevant, result is that the forward problem itself may not have a unique equilibrium, depending on the relative strengths of diffusion and interaction [24]; we suspect that such results propagate to the constrained optimization problems. For PDE-constrained optimization problems of the structure examined here, it is typical to demand at least \(H^1\) regularity in space for the state variable, and at least \(L^2\) regularity for the control variable [82]. We note that if ‘only’ this degree of regularity is to be expected, for example if functions such as \({\widehat{\rho }}\), f, \(V_{\text {ext}}\), and \(\mathbf {K}\) themselves have low regularity, the pseudospectral methods examined in this work may not perform substantially better than, for instance, a finite difference method or a FEM. However, a key feature of the pseudospectral discretization is that it exploits whatever regularity the solution does possess, yielding much faster convergence than these alternative methods when the solution is more regular.
The particle interaction term within the PDE constraints generally introduces dense matrices under discretization, removing one typical advantage of FEMs or finite difference methods over pseudospectral methods, specifically the presence of sparse matrices for local differential operators. Additionally, due to the potentially poor scaling of optimization methods with respect to the number of grid points, it is highly beneficial to use a method which requires fewer discretized points in space and time, motivating the novel methodology presented in the forthcoming sections. This is particularly important when solving optimization problems in three dimensions, for which the PDE model itself already requires a large number of points. We highlight that the software accompanying this work [2] is designed such that the pseudospectral discretization may readily be replaced with matrices arising from alternative spatial discretizations; we base the work on the pseudospectral method specifically as it is a relatively unexplored class of techniques for PDE-constrained optimization problems, including those arising from particle dynamics, which possesses the significant advantages outlined above.

2.4 Mean-field optimal control

The main challenge when solving the optimal control problems considered in the present work, as opposed to more standard PDE-constrained optimization problems, arises from the additional non-linear, non-local interaction term. Therefore, standard results in optimal control theory cannot readily be applied, and new approaches have to be developed to address theoretical and numerical challenges.

The most commonly studied controls are through the flow, e.g., [4]; interaction term, e.g., [38]; or external agents, e.g., [18]. A common assumption is that the particle distribution has compact support [18, 19, 37], which eliminates the need for boundary conditions. No-flux boundary conditions, which are a principal focus of our work, have been considered in limited settings [4, 23].

The two main avenues of research focus on Vlasov-type PDEs arising from the mean-field limit of Cucker–Smale-like [32, 33] models of flocking, and Fokker–Planck equations from the same limit of Langevin dynamics. For the former, Fornasier et al. provided theoretical results on the convergence of the microscopic sparse optimal control problem to a corresponding macroscopic problem, using methods of optimal transport and a \(\Gamma \)-limit argument, proving existence of optimal controls in the mean-field setting, see [37,38,39]. Additional work on sparse control strategies can be found in [63], as well as in the review paper [36]. In [19], convergence results are proved for systems in which the control is applied through interacting, external agents. For the Fokker–Planck case, analytical research has focused on the derivation of first-order optimality conditions [4], existence and regularity of optimal controls [26], and convergence of the microscopic optimal control problem to the mean-field limit [23, 64].

In terms of numerical implementations, Strang splitting schemes [28, 76] are commonly used, in particular for control strategies which employ external agents [18, 20, 64], in which the numerical results are used to verify convergence in the mean-field limit. In [7], different selective control strategies were considered, and an iterative numerical method was chosen, where the interaction term is approximated stochastically. Other approaches involve combining a Chang–Cooper scheme for the forward equation, finite differences for the adjoint equation, and Monte-Carlo integration [4] to solve the PDEs. The optimization step was performed with a sweeping algorithm, with updates through the gradient equation, which is similar to the gradient descent method in [17]. Other related numerical work applies to porous media Fokker–Planck equations [23], as well as the determination of steady state solutions [5, 8].

As described in Sect. 4, one of our recommended approaches is an optimization scheme that is inspired by existing sweeping or gradient methods [4, 17], but with a novel coupling to pseudospectral methods used to discretize the space and time domains. This combination of methods offers an efficient and accurate solver for a wide class of problems. To our knowledge, this is the first time that pseudospectral methods have been applied to non-local optimal control problems of this form.
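The basic structure of a sweeping (fixed-point) iteration can be illustrated on a scalar toy problem. The Python sketch below is not the scheme of [4, 17] or of this paper; it assumes a hypothetical linear ODE constraint \(y' = ay + u\), \(y(0)=y_0\), discretized by forward Euler, a tracking-type cost \(\frac12\int_0^T (y-\hat y)^2\,\mathrm{d}t + \frac{\beta}{2}\int_0^T u^2\,\mathrm{d}t\), and a fixed relaxation parameter `lam`. Each sweep solves the state forward in time, solves the discrete adjoint backward in time from its terminal condition, and then relaxes the control towards the value given by the gradient equation \(\beta u = p\).

```python
import numpy as np

a, beta, T, n, y0 = -1.0, 0.1, 1.0, 200, 1.0   # hypothetical toy parameters
h = T / n
t = np.linspace(0.0, T, n + 1)
yhat = np.sin(np.pi * t)                       # hypothetical desired state


def solve_state(u):
    """Forward Euler for y' = a*y + u, y(0) = y0 (forward in time)."""
    y = np.empty(n + 1)
    y[0] = y0
    for k in range(n):
        y[k + 1] = y[k] + h * (a * y[k] + u[k])
    return y


def solve_adjoint(y):
    """Discrete adjoint of the Euler scheme, solved backward in time."""
    p = np.zeros(n + 1)
    p[n] = -h * (y[n] - yhat[n])               # terminal value is O(h), i.e. tends to zero as h -> 0
    for k in range(n - 1, 0, -1):
        p[k] = (1 + h * a) * p[k + 1] - h * (y[k] - yhat[k])
    return p


def cost(u):
    y = solve_state(u)
    return 0.5 * h * np.sum((y[1:] - yhat[1:]) ** 2) + 0.5 * beta * h * np.sum(u ** 2)


u = np.zeros(n)                                # initial guess for the control
lam = 0.3                                      # fixed relaxation parameter (assumption)
for sweep in range(500):
    p = solve_adjoint(solve_state(u))
    u_new = p[1:] / beta                       # control from the gradient equation beta*u = p
    if np.max(np.abs(u_new - u)) < 1e-12:
        break
    u = (1 - lam) * u + lam * u_new            # relaxed update

residual = np.max(np.abs(beta * u - solve_adjoint(solve_state(u))[1:]))
print(sweep, residual, cost(u), cost(np.zeros(n)))
```

Without relaxation (`lam = 1`) the plain fixed-point map can fail to converge for small \(\beta\); choosing the relaxation adaptively is one of the design decisions that distinguishes practical sweeping schemes.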

3 First-order optimality conditions for particle dynamics models

In this section we derive the system of PDEs that we need to solve in order to tackle the models (2.2) and (2.5). To obtain first-order optimality conditions for (2.2) and (2.5), we apply an optimize-then-discretize approach, meaning that we derive appropriate conditions at the continuous level and then consider suitable discretization strategies. The alternative is the discretize-then-optimize approach; we select the former in order to obtain numerical solutions that better reflect the solutions of the continuous first-order optimality conditions. We highlight that an area of active interest in the PDE-constrained optimization community is to construct discretization schemes such that the two approaches coincide (see [29] for a fundamental example of a problem for which the two approaches give different results). Below we briefly describe how the expected first-order optimality conditions are formed using the formal Lagrange method, for both flow control and source control problems with different boundary conditions, and refer to [82], for instance, for a rigorous justification of how such conditions are formed. In particular, [82] considers a range of elliptic and parabolic PDE constraints and the analytical properties of the associated control problems. Due to the non-linear, non-local structure of our problems, extending these results may be significantly more involved, giving rise to many interesting theoretical questions. We refer to [4] for a rigorous as well as formal derivation of optimality conditions for a problem sharing a number of structural features with our systems. A key assumption which we make below is the existence of a control-to-state mapping, allowing the expression of a reduced cost functional in terms of the control variable.

3.1 Flow control with Dirichlet boundary condition

We first consider the advection–diffusion constrained optimization problem (2.2) with the Dirichlet boundary condition (2.3). This leads to the continuous Lagrangian:

where \(q_1\) and \(q_2\) correspond to the portions of the adjoint variable \(q\) arising in the interior of the spatial domain \(\Omega \) and its boundary \(\partial \Omega \), respectively.

To obtain first-order optimality conditions, we first follow the formal Lagrange method for deriving the adjoint equation for time-dependent PDE-constrained optimization, see [82, Chapter 3] for instance. We obtain that the Fréchet derivative of \({\mathcal {L}}\) in the direction of \(\rho \) must satisfy \(D_{\rho }{\mathcal {L}}({\bar{\rho }},{\bar{w}},q_1,q_2)\rho =0\) for all appropriate functions \(\rho \). Integrating the relevant terms of (3.1) by parts and applying the Divergence Theorem, any sufficiently smooth \(\rho \) such that \(\rho (\mathbf {x},0)=0\) satisfies

where

Noting first that (3.2) must hold for all \(\rho \in {}C_0^{\infty }(\Omega \times (0,T))\) (i.e., where \(\rho (\mathbf {x},T)\), \(\rho (\mathbf {x},0)\) vanish on \(\Omega \), and \(\rho \), \(\frac{\partial \rho }{\partial {}n}\) vanish on \(\partial \Omega \)), and observing that \(C_0^{\infty }(\Omega \times (0,T))\) is dense in \(L^2(\Omega \times (0,T))\), we obtain the adjoint PDE:

Removing the restriction that \(\rho (\mathbf {x},T)\) vanishes on \(\Omega \), and arguing similarly, leads to the adjoint boundary condition \(q_1(\mathbf {x},T)=0\). From here, we may similarly remove the condition that \(\frac{\partial \rho }{\partial {}n}\) vanishes on \(\partial \Omega \) to conclude that \(q_1=0\) on \(\partial \Omega \times (0,T)\). Setting the final integral term in (3.2) to zero then gives the relation between \(q_1\) and \(q_2\). Putting all the pieces together, and relabelling \(q_1\) as \(q\), we obtain the complete adjoint problem:

We now search for the stationary point upon differentiation with respect to \(\mathbf {w}\), using that \(\mathbf {w}\) is contained in some Hilbert space, which in turn allows us to represent the gradient. Using similar working as above gives

whereupon applying the Divergence Theorem leads to the control or gradient equation:

To summarize, the complete first-order optimality system for the problem (2.2) with the Dirichlet boundary condition \(\rho =c\) includes the PDE constraint itself (often referred to as the state equation), the adjoint problem (3.3), and the gradient equation (3.4).
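The role of the adjoint in the gradient equation can be checked on a discretized analogue. The Python sketch below uses a hypothetical scalar toy problem (forward-Euler discretization of \(y' = ay + u\), tracking cost, regularization parameter \(\beta\)) and, for simplicity, takes a discretize-then-optimize route rather than the optimize-then-discretize route followed in this section. From the discrete Lagrangian it computes the reduced gradient \(h(\beta u_k - p_{k+1})\) with one forward state solve and one backward adjoint solve, and compares it with central finite differences of the cost.

```python
import numpy as np

a, beta, T, n, y0 = -1.0, 0.1, 1.0, 200, 1.0   # hypothetical toy parameters
h = T / n
t = np.linspace(0.0, T, n + 1)
yhat = np.sin(np.pi * t)                       # hypothetical desired state


def state(u):
    """Forward Euler: y_{k+1} = y_k + h (a y_k + u_k), y_0 = y0."""
    y = np.empty(n + 1)
    y[0] = y0
    for k in range(n):
        y[k + 1] = y[k] + h * (a * y[k] + u[k])
    return y


def cost(u):
    """J = h/2 sum_{k>=1} (y_k - yhat_k)^2 + beta h/2 sum_k u_k^2."""
    y = state(u)
    return 0.5 * h * np.sum((y[1:] - yhat[1:]) ** 2) + 0.5 * beta * h * np.sum(u ** 2)


def adjoint(y):
    """Stationarity of L = J + sum_k p_{k+1} (y_{k+1} - y_k - h (a y_k + u_k)) w.r.t. y_1..y_n."""
    p = np.zeros(n + 1)                        # p[0] is unused because y_0 is fixed
    p[n] = -h * (y[n] - yhat[n])
    for k in range(n - 1, 0, -1):              # backward in time
        p[k] = (1 + h * a) * p[k + 1] - h * (y[k] - yhat[k])
    return p


def gradient(u):
    """Reduced gradient dJ/du_k = h (beta u_k - p_{k+1}): one state and one adjoint solve."""
    return h * (beta * u - adjoint(state(u))[1:])


rng = np.random.default_rng(0)
u = rng.standard_normal(n)
g = gradient(u)
eps = 1e-6
fd = np.array([(cost(u + eps * e) - cost(u - eps * e)) / (2 * eps) for e in np.eye(n)[:10]])
rel_err = np.max(np.abs(fd - g[:10])) / np.max(np.abs(g[:10]))
print("relative error of adjoint gradient:", rel_err)
```

The full gradient costs two time-marching solves regardless of the number of control unknowns, whereas the finite-difference check needs two cost evaluations per component, which is why adjoint-based gradients are used throughout.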

Note that the adjoint terms arising from the particle interactions agree with the representation of the interaction term in [4], where \(\kappa \mathbf {K}(\mathbf {x},\mathbf {x}\,') = P(\mathbf {x},\mathbf {x}\,')(\mathbf {x}\,'-\mathbf {x})\). For the special case when \(\mathbf {K}(\mathbf {x}, \mathbf {x} \,') = \nabla _{\mathbf {x}} V_2(\Vert \mathbf {x}- \mathbf {x}\,'\Vert )\), we have that \(\mathbf {K}\) is an odd function in the sense that \(\mathbf {K}(\mathbf {x}, \mathbf {x}\,') = - \mathbf {K}(\mathbf {x}\,',\mathbf {x})\) and

3.2 Flow control with no-flux type boundary condition

To provide an illustration of how the same working may be applied to problem (2.2) with the no-flux boundary condition (2.4), we briefly consider the Lagrangian given by:

Requiring that \(D_{\rho }{\mathcal {L}}({\bar{\rho }},{\bar{w}},q_1,q_2)\rho =0\) for all \(\rho \) such that \(\rho (\mathbf {x},0)=0\), and applying the same reasoning as above, leads to the adjoint problem:

along with the state equation as in (2.2), and the gradient equation (3.4).

3.3 Source control with Dirichlet boundary condition

We next consider the problem (2.5) with the Dirichlet boundary condition (2.3). This leads to the continuous Lagrangian:

Requiring that \(D_{\rho }{\mathcal {L}}({\bar{\rho }},{\bar{w}},q_1,q_2)\rho =0\) for all \(\rho \) such that \(\rho (\mathbf {x},0)=0\) yields an identity containing further boundary terms, which are eliminated by relating \(q_1\) and \(q_2\). This leads to the adjoint problem:

where

Searching for the stationary point upon differentiation with respect to \(w\), using similar arguments as above, gives:

leading to the gradient equation:

To summarize, the complete first-order optimality system for the problem (2.5), with the Dirichlet boundary condition \(\rho =c\), includes the PDE constraint itself, the adjoint problem (3.5), and the gradient equation (3.6).

3.4 Source control with no-flux type boundary condition

Applying the same working to problem (2.5) with no-flux boundary condition (2.6), the Lagrangian is given by:

Applying the same reasoning as above then leads to the adjoint problem:

along with the state equation as in (2.5), and the gradient equation (3.6).

4 Numerical method for the optimization model

In this section we describe the structure of our algorithm for the PDE-constrained optimization models under consideration. After describing a pseudospectral method for the PDE constraints (the forward problem), and the adjoint equations, we outline the optimization solvers to be applied numerically, and detail the measures of accuracy that we will employ in our numerical tests. We emphasize that the structure of our algorithm is independent of the choice of solvers in each step, for example, the pseudospectral method in space may be replaced by finite differences or finite elements for problems with non-smooth solutions. To highlight this we will describe two different choices of solver for the optimization stage. Additionally, through the combination of 2DChebClass [40] and a fixed-point or (spectral-in-time) Newton–Krylov solver, one may enforce essentially arbitrary boundary conditions, such as non-local Robin type, with no additional cost to the user. This contrasts with traditional ‘boundary bordering’ approaches [14], for which significant analytical work is often required to derive the correct matrices to impose the boundary conditions (see below). These properties make the numerical method introduced in the present work highly versatile.

4.1 Pseudospectral method for the forward problem

As described in Sect. 2.2, we solve the forward problem using Chebyshev pseudospectral methods, in particular implemented in matlab using 2DChebClass [40, 60]. The principal novelties of the method concern the computation of convolution integrals and the implementation of spatial boundary conditions; the boundary conditions in time will be discussed in the following section. This makes the method particularly well-suited to problems on finite, non-periodic domains in which the interaction term involves a convolution on a region with finite support. Such applications arise in diverse fields such as hard-sphere DDFT using Fundamental Measure Theory [60, 69, 79], and opinion dynamics [51].

As described in [60], the convolution integrals are computed in real space, in contrast to many implementations in which they are computed via Fourier transforms. The principal advantage of Fourier methods is that they are computationally cheap, requiring only fast Fourier transforms and multiplication of functions. The main disadvantage is that for finite, non-periodic domains, one needs to pad the domain, which both increases computational cost for no accuracy gain and introduces difficulties when applying boundary conditions. In our real-space approach, convolution integrals, including those with bounded support, can be implemented by a single matrix–vector multiplication, with the matrix precomputed for all time steps. Use of the physical domain allows efficient implementation of the boundary conditions.
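To illustrate the real-space idea, the Python/NumPy sketch below (not the 2DChebClass implementation) precomputes a dense matrix C whose product with the vector of nodal values approximates \(\int_{-1}^{1} K(x-y)\rho (y)\,dy\) at every Chebyshev point, using Clenshaw–Curtis quadrature. The helper names and the smooth test kernel \(K(z)=z^2\) are hypothetical, and kernels with bounded support, which 2DChebClass treats with dedicated quadrature, are not handled here.

```python
import numpy as np


def clenshaw_curtis(N):
    """Chebyshev points x_j = cos(pi*j/N) and Clenshaw-Curtis weights on [-1, 1].

    Translated from Trefethen's clencurt.m (Spectral Methods in MATLAB)."""
    theta = np.pi * np.arange(N + 1) / N
    x = np.cos(theta)
    w = np.zeros(N + 1)
    v = np.ones(N - 1)
    inner = theta[1:N]
    if N % 2 == 0:
        w[0] = w[N] = 1.0 / (N**2 - 1)
        for k in range(1, N // 2):
            v -= 2.0 * np.cos(2 * k * inner) / (4 * k**2 - 1)
        v -= np.cos(N * inner) / (N**2 - 1)
    else:
        w[0] = w[N] = 1.0 / N**2
        for k in range(1, (N - 1) // 2 + 1):
            v -= 2.0 * np.cos(2 * k * inner) / (4 * k**2 - 1)
    w[1:N] = 2.0 * v / N
    return x, w


def convolution_matrix(x, w, K):
    """Dense matrix C with (C @ rho)[i] ~ integral_{-1}^{1} K(x_i - y) rho(y) dy.

    Assembled once and reused at every time step, so each evaluation of the
    interaction term is a single matrix-vector product."""
    return K(x[:, None] - x[None, :]) * w[None, :]


# Usage with a hypothetical smooth kernel K(z) = z**2 and N = 20.
x, w = clenshaw_curtis(20)
C = convolution_matrix(x, w, lambda z: z**2)
conv_of_one = C @ np.ones_like(x)   # should equal 2*x**2 + 2/3 up to rounding
```

Because the quadrature acts directly on the nodal values, no padding of the non-periodic domain is needed, and the same nodal vector can be used when imposing boundary conditions.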

As is standard, after discretization, in this case through the use of (mapped) Chebyshev pseudospectral points, the forward PDE(s) are converted into a system of ODEs. For example, the diffusion equation becomes

$$\begin{aligned} \frac{d\varvec{\rho }}{dt} = D_2\,\varvec{\rho }, \end{aligned} \tag{4.1}$$

where \(\varvec{\rho }\) is a vector of values of the solution at each of the Chebyshev points, and \(D_2\) is the Chebyshev second-order differentiation matrix. In the interior of the domain, this system can be solved using standard time-stepping solvers for ODEs; the challenge lies in imposing the correct spatial boundary conditions. One standard approach is to modify the matrix on the right-hand side of (4.1) so that the boundary conditions are automatically satisfied. This is known as ‘boundary-bordering’ [14]. For simple boundary conditions, such as homogeneous Dirichlet or (local) Neumann, such an approach is relatively straightforward. For example, for homogeneous Dirichlet conditions, assuming that the initial condition satisfies the boundary conditions, it is sufficient to set to zero the rows and columns of \(D_2\) that correspond to points on the boundary of the domain. For homogeneous Neumann conditions there is a similar approach (see [81]), which becomes more involved for more complicated right-hand sides of the PDE. Another approach is to restrict the computation to interpolants (solutions) which satisfy the boundary conditions; we do not discuss this here, as it is highly non-trivial for the non-linear, non-local problems that we are interested in.
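As a minimal illustration of boundary bordering, the sketch below builds the Chebyshev differentiation matrix (following Trefethen's cheb.m), forms \(D_2 = D D\), zeros the boundary rows and columns for homogeneous Dirichlet data, and integrates the resulting system (4.1) on \([-1,1]\) with backward Euler. The grid size, time step, initial condition and helper names are illustrative assumptions; this is not the solver used in the paper.

```python
import numpy as np


def cheb(N):
    """Chebyshev differentiation matrix D and points x_j = cos(pi*j/N), j = 0..N.

    Follows Trefethen's cheb.m (Spectral Methods in MATLAB)."""
    x = np.cos(np.pi * np.arange(N + 1) / N)
    c = np.hstack([2.0, np.ones(N - 1), 2.0]) * (-1.0) ** np.arange(N + 1)
    dX = x[:, None] - x[None, :]                    # dX[i, j] = x_i - x_j
    D = np.outer(c, 1.0 / c) / (dX + np.eye(N + 1))
    D -= np.diag(D.sum(axis=1))                     # diagonal from row sums
    return D, x


def backward_euler(A, rho0, dt, steps):
    """Integrate the linear ODE system d(rho)/dt = A @ rho with backward Euler."""
    M = np.eye(len(rho0)) - dt * A
    rho = rho0.copy()
    for _ in range(steps):
        rho = np.linalg.solve(M, rho)
    return rho


N = 16
D, x = cheb(N)
D2 = D @ D

# Boundary bordering for homogeneous Dirichlet data: zero the rows and columns
# of D2 that belong to the boundary points x = 1 (index 0) and x = -1 (index N).
boundary = [0, N]
D2_dir = D2.copy()
D2_dir[boundary, :] = 0.0
D2_dir[:, boundary] = 0.0

rho0 = np.cos(np.pi * x / 2)        # initial condition that vanishes at x = +-1
t_end, steps = 0.1, 100
rho = backward_euler(D2_dir, rho0, t_end / steps, steps)
exact = np.exp(-np.pi**2 * t_end / 4) * rho0    # exact solution at t_end
```

Zeroing the boundary rows freezes the boundary values at their (zero) initial values, while zeroing the columns decouples the interior from them; a Neumann condition cannot be imposed by such a simple edit, which is the limitation the text describes.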

Here we take a more general approach. The imposition of spatial boundary conditions can be seen as extending the discretized system of ODEs to a system of differential–algebraic equations, where the discretized PDE is solved on the interior of the domain, and the boundary conditions correspond to algebraic equations. There are various numerical methods for solving such differential–algebraic equations; see, e.g., [74] for a Runge–Kutta scheme with algebraic constraints, or [42] for a Newton–Krylov scheme which allows the inclusion of algebraic constraints alongside the PDE. The main advantage here is that the numerical method does not have to be explicitly adapted when one changes the boundary conditions; one simply has to specify different algebraic constraints that correspond to the boundary conditions. In fact, the 2DChebClass code automatically identifies the boundary of various geometries, allowing a simple implementation of this approach. This is illustrated in Listing 1 for the PDE model (2.2) with no-flux boundary conditions (2.4). Moreover, the notation of the code is chosen so that it mirrors the mathematical notation of the PDE model.
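The differential–algebraic viewpoint can be sketched in a one-dimensional heat-equation setting: interior rows carry the discretized PDE, while the boundary rows of a singular mass matrix \(M\) are zero and the corresponding rows of \(A\) hold algebraic constraints. In the hypothetical helper dae_heat below, switching from Dirichlet to Neumann conditions changes only those constraint rows, not the time stepper. Backward Euler for \(M\,d\varvec{\rho }/dt = A\varvec{\rho }\) stands in for a production DAE solver such as matlab's ode15s, which also accepts a singular mass matrix; the grid and time step are assumptions.

```python
import numpy as np


def cheb(N):
    """Chebyshev differentiation matrix and points (after Trefethen's cheb.m)."""
    x = np.cos(np.pi * np.arange(N + 1) / N)
    c = np.hstack([2.0, np.ones(N - 1), 2.0]) * (-1.0) ** np.arange(N + 1)
    dX = x[:, None] - x[None, :]
    D = np.outer(c, 1.0 / c) / (dX + np.eye(N + 1))
    D -= np.diag(D.sum(axis=1))
    return D, x


def dae_heat(rho0, D, bc, dt, steps):
    """Backward Euler for the DAE  M d(rho)/dt = A rho  (1D heat equation).

    Interior rows: the discretized PDE d(rho)/dt = D^2 rho.
    Boundary rows: M is zero there and A holds an algebraic constraint,
    'dirichlet' -> rho = 0  or  'neumann' -> d(rho)/dx = 0.
    Only these constraint rows change when the boundary condition changes."""
    n = len(rho0)
    bnd = [0, n - 1]
    M = np.eye(n)
    M[bnd, :] = 0.0
    A = D @ D
    constraints = {"dirichlet": np.eye(n), "neumann": D}
    A[bnd, :] = constraints[bc][bnd, :]
    lhs = M - dt * A                    # (M - dt A) rho_{k+1} = M rho_k
    rho = rho0.copy()
    for _ in range(steps):
        rho = np.linalg.solve(lhs, M @ rho)
    return rho


D, x = cheb(20)
t_end, steps = 0.05, 500
dt = t_end / steps
rho_dir = dae_heat(np.cos(np.pi * x / 2), D, "dirichlet", dt, steps)
rho_neu = dae_heat(2 + np.cos(np.pi * x), D, "neumann", dt, steps)
```

Adding, say, a Robin condition would amount to one more entry in the constraints dictionary, which is the flexibility the DAE formulation is meant to provide.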

4.2 Additional considerations for the adjoint equation

For the optimization problem, we have a pair of coupled PDEs: the forward PDE with an initial time condition, and the adjoint equation with a final time condition. Due to the inclusion of Laplacians of opposite sign in the two equations, one must be careful when using a standard time-stepping scheme, since one of the equations will be of backward parabolic form, leading to a possible lack of well-posedness and to numerical instability. For example, the optimality system presented in (2.2), (3.3), and (3.4) results in the adjoint equation being unstable ‘forward in time’. Moreover, as described for the forward PDE in Sect. 4.1, the adjoint PDE needs to satisfy boundary conditions in space as well as in time. One possible approach is to apply a backward Euler method for the time derivative in the state equation, with the adjoint operator applied to the adjoint equation, whereupon a huge-scale coupled system of equations is obtained from matrices arising at each time-step. These may be tackled using a preconditioned iterative method, following the methodology in, e.g., [61, 62, 75], although the systems considered there were sparse, whereas ours are dense. In contrast, in order to utilize our efficient and accurate forward solver, for our fixed-point approach we reverse time in the adjoint problem, resulting in a set of well-posed equations with initial conditions. For this approach, the forward and adjoint equations are coupled non-locally in time; the adjoint equation requires the value of the state variable at later times, so the two equations cannot be solved simultaneously. By contrast, the Newton–Krylov approach allows us to tackle state and adjoint equations simultaneously. The fixed-point and Newton–Krylov algorithms are discussed in detail in Sects. 4.4 and 4.5, and are applied to example problems in Sect. 5.

4.3 Optimization solver

Now that we have presented an accurate and efficient numerical scheme for the solution of a set of PDEs which includes the forward and adjoint equations, the remaining challenges are to: (i) determine a suitable time discretization for the optimality system; (ii) choose a suitable optimization scheme. For (i), we again choose a Chebyshev pseudospectral scheme (1D in time), which, assuming that the solutions are smooth in time, leads to exponentially accurate interpolation; it is also the foundation of the spectral-in-time Newton–Krylov scheme presented in [42]. For (ii), we note that the choice of optimization solver depends strongly on the nature of the solution, and the amount of information available. We consider: (a) a general fixed-point or sweeping method [4, 17], with an adaptive line search framework to determine a mixing rate [54], which solves the system of equations iteratively, does not require the analytic computation of the Jacobian, and is also applicable to problems with additional inequality constraints, as well as to other systems whose solutions are not regular enough to benefit from the spectral-in-time nature of the Newton–Krylov approach; (b) a higher-order, more efficient Newton–Krylov scheme, which does require the computation of the Jacobian, and could potentially be more challenging to adapt to more general problems. For the fixed-point method, after applying the pseudospectral discretization, we require the solution of a system of differential–algebraic equations. As in Sect. 4.1, these can be solved using a standard DAE solver. In this paper, the matlab inbuilt ODE solver ode15s is used. However, our approach is highly modular: it is straightforward to replace our chosen solvers with any other optimization routine, or to change the spatial or temporal discretization used within the Newton–Krylov approach.

In the following, we denote the discretized versions of the variables \(\rho \), \(q\), and \(\mathbf {w}\) by P, Q, and W, respectively. Each of these matrices is of the form \(A = [\varvec{a_0}, \varvec{a_1},\ldots ,\varvec{a_n}]\), where the vectors \(\varvec{a_k}\) represent the solutions at the discretized times \(k \in \{0,1,\ldots ,n\}\), where n is the number of time steps. In particular, the first column of P, denoted by \(\varvec{\rho _0}\), corresponds to the initial condition \(\rho (\mathbf {x},0)\). If the spatial domain is one-dimensional, P, Q, and W are of size \(N \times (n+1)\), where N is the number of spatial points. In the two-dimensional case, P and Q are of size \((N_1N_2) \times (n+1)\), where \(N_j\) is the number of spatial points in the direction of \(x_j\). The discretized control W for linear (source) control problems is also \((N_1N_2) \times (n+1)\) dimensional, while it is \((2N_1N_2) \times (n+1)\) dimensional for non-linear (flow) control problems.
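Because the columns of P, Q and W are values at Chebyshev times, any column can be evaluated at an intermediate time by barycentric interpolation, which is what an adaptive solver needs when it integrates the time-reversed adjoint equation and requests the state between nodes. The sketch below assumes Chebyshev–Gauss–Lobatto times on \([0,T]\) ordered from \(t_0=0\); the helper names and the five stand-in spatial points are hypothetical.

```python
import numpy as np


def cheb_times(n, T):
    """n+1 Chebyshev-Gauss-Lobatto times on [0, T], ordered t_0 = 0 < ... < t_n = T."""
    return T * (1 - np.cos(np.pi * np.arange(n + 1) / n)) / 2


def interp_in_time(A, t_nodes, t):
    """Barycentric interpolation of the columns of A = [a_0, ..., a_n],
    sampled at the Chebyshev times t_nodes, to a single time t."""
    n = len(t_nodes) - 1
    bw = (-1.0) ** np.arange(n + 1)          # barycentric weights for
    bw[0] *= 0.5                             # Chebyshev-Lobatto points
    bw[n] *= 0.5
    diff = t - t_nodes
    hit = np.isclose(diff, 0.0, atol=1e-14)
    if hit.any():                            # t coincides with a node
        return A[:, np.argmax(hit)].copy()
    c = bw / diff
    return (A @ c) / c.sum()


# Hypothetical data: rho(x, t) = x * t**3 on 5 stand-in spatial points, n = 11.
n, T = 11, 1.0
t_nodes = cheb_times(n, T)
x = np.linspace(-1.0, 1.0, 5)
P = np.outer(x, t_nodes**3)                  # shape (N, n + 1), one column per time
rho_mid = interp_in_time(P, t_nodes, 0.37)   # state at a time between nodes
```

Since the interpolant is a polynomial of degree n in time, it reproduces the cubic-in-time test data exactly, and for smooth data it inherits the exponential accuracy mentioned above.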

4.4 Fixed-point, sweeping method

Sweeping or gradient methods are a common technique used to tackle mean-field optimal control problems [4, 17]. As such, we first demonstrate our approach by using a first-order fixed-point method, modified to include a mixing rate which is standard in density functional theory problems of the type considered here [69]. We emphasize that this method is included for its simplicity and generality, as well as due to its use in the literature; we have also implemented a higher-order Newton–Krylov method – see Sect. 4.5.

The optimization algorithm, which can solve the flow control problem (or the source control problem, respectively) introduced in Sect. 2.3, is initialized with a guess for the control, \(W^{(0)}\). Then, in each iteration, denoted by i, the following steps are computed:

1. Given the current guess for the control, \(W^{(i)}\), the corresponding state \(P^{(i)}\) is found by solving the state equation (2.2) (or (2.5), respectively).

2. The adjoint, \(Q^{(i)}\), is obtained as the solution of the (reversed in time) adjoint equation (3.3) (or (3.5), respectively), using \(W^{(i)}\) and \(P^{(i)}\) as inputs. Since \(P^{(i)}\) contains the solution for all discretized times \(k \in \{0,1,\ldots ,n\}\), pseudospectral interpolation circumvents issues resulting from the non-local coupling in time, mentioned in Sect. 4.2.

3. The gradient equation (3.4) (or (3.6), respectively) is solved for the updated control, \(W^{(i)}_g\), using the computed \(P^{(i)}\), \(Q^{(i)}\).

4. The convergence of the optimization scheme is measured by computing the error, \({\mathcal {E}}\), between \(W^{(i)}\) and \(W^{(i)}_{g}\); see Sect. 4.6. If \({\mathcal {E}}\) is smaller than a set tolerance, the algorithm terminates; otherwise we proceed to Step 5.

5. We update \(W^{(i+1)}\) as a linear combination of the current guess \(W^{(i)}\) and the value obtained in Step 3, \(W^{(i)}_{g}\), employing a mixing rate \(\lambda \in [0,1]\):

$$\begin{aligned} W^{(i+1)} = (1-\lambda )W^{(i)} + \lambda W^{(i)}_{g}. \end{aligned} \tag{4.2}$$

A typical value of \(\lambda \), which provides stable convergence in the cases we study here, lies between 0.001 and 0.1, and is chosen depending on the problem. Note that, while the solutions \(P^{(i)}\) and \(Q^{(i)}\) change in each iteration, the initial condition \(\varvec{\rho _0}\) and final time condition \(\varvec{q_n}\) remain unchanged throughout the process; the updates are induced by changing \(W^{(i)}\).
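To make the steps above concrete, the sketch below applies the sweeping iteration (4.2) to a scalar toy problem that is not one of the paper's models: minimize \(\frac{1}{2}\int _0^T(\rho -{\widehat{\rho }})^2\,dt + \frac{\beta }{2}\int _0^T w^2\,dt\) subject to \(d\rho /dt = -\rho + w\), \(\rho (0)=1\). Its adjoint, \(dq/dt = q + (\rho - {\widehat{\rho }})\) with \(q(T)=0\), is stepped backward from \(T\) (equivalently, forward in reversed time), and the gradient equation \(\beta w - q = 0\) gives \(W_g = Q/\beta \). Forward Euler, the grid, \(\beta = 0.1\), \({\widehat{\rho }} = 2\), the fixed mixing rate \(\lambda = 0.1\) and all function names are assumptions for illustration.

```python
import numpy as np


def solve_state(w, dt, rho0=1.0):
    """Step 1: forward Euler for d(rho)/dt = -rho + w, rho(0) = rho0."""
    rho = np.empty_like(w)
    rho[0] = rho0
    for k in range(len(w) - 1):
        rho[k + 1] = rho[k] + dt * (-rho[k] + w[k])
    return rho


def solve_adjoint(rho, rho_hat, dt):
    """Step 2: adjoint dq/dt = q + (rho - rho_hat), q(T) = 0, stepped from t = T
    back to t = 0 (i.e. forward in the reversed time tau = T - t)."""
    q = np.empty_like(rho)
    q[-1] = 0.0
    for k in range(len(rho) - 1, 0, -1):
        q[k - 1] = q[k] - dt * (q[k] + rho[k] - rho_hat)
    return q


def cost(rho, w, rho_hat, beta, dt):
    """Trapezoidal approximation of J = 1/2 int (rho - rho_hat)^2 + beta/2 int w^2."""
    f = 0.5 * (rho - rho_hat) ** 2 + 0.5 * beta * w**2
    return dt * (f.sum() - 0.5 * (f[0] + f[-1]))


def sweep(beta=0.1, rho_hat=2.0, T=1.0, n=200, lam=0.1, tol=1e-8, max_iter=5000):
    dt = T / n
    w = np.zeros(n + 1)                       # initial guess W^(0) = 0
    for i in range(max_iter):
        rho = solve_state(w, dt)              # step 1: state
        q = solve_adjoint(rho, rho_hat, dt)   # step 2: adjoint
        w_g = q / beta                        # step 3: gradient equation beta*w - q = 0
        if np.max(np.abs(w_g - w)) < tol:     # step 4: convergence test
            return w, rho, i
        w = (1 - lam) * w + lam * w_g         # step 5: mixing update (4.2)
    raise RuntimeError("fixed-point iteration did not converge")


w, rho, iterations = sweep()
```

Increasing \(\lambda \) speeds this toy iteration up only to a point: beyond a problem-dependent threshold, which shrinks with \(\beta \), the undamped update overshoots and the iteration diverges.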

It is also feasible to vary the mixing rate \(\lambda \), based on viewing \(W^{(i)}\) as approximate solutions of a fixed-point problem: an unchanged numerical solution for the control variable \(\mathbf {w}\) at successive iterates indicates that a solution of the PDE-constrained optimization problem has been found. Work in [54] proposes an adaptive line search framework that determines a value of \(\lambda \) satisfying an Armijo-type condition, and thereby converges to a fixed point of the system faster than a constant mixing rate does. Based on this, [45] uses a potential function \(E^{(i)}\), defined as follows for an iterative scheme:

For the fixed-point scheme (4.2), we have that \(d^{(i)} := W^{(i)}_g - W^{(i)}\). In this notation, \(W^{(i)}(\lambda )\) coincides with the subsequent fixed-point iterate, \(W^{(i+1)}\). As in [45], we consider an adaptive mixing rate which, at each iteration, solves the minimization problem for \(E^{(i)}\) over [0, 1]:

Since the solution of (4.3) cannot be found easily in general, we may seek an approximate minimum which satisfies the Wolfe-type (or Armijo–Wolfe-type) conditions based on those of [84, 85]. Specifically, for some \(\delta , \sigma \in (0,1)\) with \(\delta < \sigma \), we wish that

If only the Armijo-type condition (4.4) is enforced, the fixed-point algorithm may not converge reliably. Hence the condition (4.5), based on the curvature condition discussed in [59, Sect. 3.1], is additionally imposed to ensure that \(\lambda ^{(i)}\) is not too small, so that unacceptably short steps are ruled out. Note that for the iterative scheme being applied:

Based on discussion in [50], we select \(\delta = 0.3\) and \(\sigma = 0.5\) for our tests. The selection of mixing rate \(\lambda \) at each fixed-point iteration is then based on Algorithm 1, whilst also ensuring that \(\lambda \ge 0.01\). We set \(\lambda _0 = 0.2\). We note that it would also be possible to test the classical (Armijo–Wolfe) conditions using the value of \({\mathcal {J}}(\rho ,\mathbf {w})\) (see [84, 85] and [59, Chapter 3]), but the fixed-point approach described here is computationally cheaper and is found to be effective for our problems.
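The selection of \(\lambda \) can be sketched as a standard bisection search for a step satisfying weak Wolfe-type conditions on a merit function \(\phi (\lambda ) = E(W^{(i)} + \lambda d^{(i)})\). Since the precise form of \(E^{(i)}\) and of Algorithm 1 is not reproduced here, \(\phi \) and its derivative are supplied by the caller, and the bracketing logic below is a generic assumption rather than the paper's Algorithm 1; only the values \(\delta = 0.3\), \(\sigma = 0.5\), \(\lambda _0 = 0.2\) and the lower bound \(\lambda \ge 0.01\) are taken from the text.

```python
def mixing_rate(phi, dphi, lam0=0.2, delta=0.3, sigma=0.5,
                lam_min=0.01, lam_max=1.0, max_iter=50):
    """Bisection search for lam in (0, lam_max] satisfying weak Wolfe conditions
        phi(lam) <= phi(0) + delta * lam * dphi(0)   (sufficient decrease)
        dphi(lam) >= sigma * dphi(0)                 (curvature: no tiny steps)
    for a merit function phi(lam) = E(W + lam * d) supplied by the caller.
    The result is clipped below at lam_min, as in the text."""
    phi0, dphi0 = phi(0.0), dphi(0.0)
    lo, hi, lam = 0.0, lam_max, lam0
    for _ in range(max_iter):
        if phi(lam) > phi0 + delta * lam * dphi0:
            hi = lam                      # too long: insufficient decrease
        elif dphi(lam) < sigma * dphi0:
            lo = lam                      # too short: slope still too negative
        else:
            break                         # both conditions hold
        lam = 0.5 * (lo + hi)
    return max(lam, lam_min)


# Usage with a hypothetical quadratic merit function.
lam = mixing_rate(lambda l: (l - 0.5) ** 2, lambda l: 2 * (l - 0.5))
```

For this quadratic, the initial trial \(\lambda _0 = 0.2\) gives sufficient decrease but fails the curvature test, so the search moves to the midpoint of \([0.2, 1]\) and returns \(\lambda = 0.6\), which satisfies both conditions.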

4.5 Newton–Krylov method

In addition to the first-order fixed-point method, we also wish to consider a higher-order, Newton-type method, with the aim of achieving satisfactory convergence in many fewer iterations than the fixed-point method. The usual disadvantage of such a method is that one typically needs to solve a number of very large linear systems of equations, unless we design a highly efficient discretization procedure. Further, the linear systems are necessarily dense for the particle dynamics problems considered, due to the integral particle interaction terms in the problem.

To circumvent this key difficulty, and exploit the faster convergence achieved by higher-order optimization methods, we employ a recently devised Newton–Krylov method for PDE-constrained optimization problems [42] (see also [46, 47] for more general descriptions of such methods), and tailor this to the problem at hand by efficiently describing the PDEs and the associated Jacobian on the discrete level, as well as solving the Newton system efficiently. We highlight that such a method has not previously been applied to PDE-constrained optimization problems which involve integral terms, or problems in which the control variable is applied non-linearly. We now briefly describe how the Newton–Krylov method may be applied to both flow control and source control problems. For both problems the state and adjoint equations may be described in the following general form (see [42]), by separating the spatial and temporal derivatives in each case:

with the vector-valued functions \({\mathbf {u}},{\mathbf {v}}: [0,T] \to {\mathbb {R}}^N\) denoting the state and adjoint variables \(\rho \) and q evaluated at each spatial Chebyshev point, as functions of time, and \({\mathbf {u}}_0\) corresponding to the initial condition \(\rho _0(\mathbf {x})\). The vector functions \({\mathbf {F}}\) and \({\mathbf {G}}\) arise from a method of lines discretization of the state and adjoint PDEs at each time-step, and correspond to the following spatial (derivative and linear) terms:

Note that the gradient equation (3.4) (for the flow control problem) or (3.6) (for the source control problem) has been substituted into the state and adjoint equations where applicable. We note that this formulation makes use of the explicit expression for the control variable, available through the first-order optimality system, in turn due to the presence of quadratic cost functionals and the form of the PDE constraints. We believe it would be possible to extend the implementation to other cases, by incorporating additional algebraic constraints (in terms of the time variable) arising from the gradient equation within the Newton system.

Following the working in [42], we may then consider approximations \(\widetilde{{\mathbf {u}}}_k\), \(\widetilde{{\mathbf {v}}}_k\) to \({\mathbf {u}}\), \({\mathbf {v}}\) at the kth time-step \(t_k\), \(k\in \{0,1,\ldots ,n\}\), and define Chebyshev interpolants \(\widetilde{{\mathbf {u}}}(t)\), \(\widetilde{{\mathbf {v}}}(t)\) based on these approximations. The residual functions:

can then be approximated at each time-step, along with the exact imposition of initial/final-time conditions, to obtain the expressions:

Here, \({\mathbf {r}}_{u,k}\) and \({\mathbf {r}}_{v,k}\) approximate \({\mathbf {r}}_u(t_k)\) and \({\mathbf {r}}_v(t_k)\), \({\mathbf {F}}_k\) and \({\mathbf {G}}_k\) denote the functions \({\mathbf {F}}\) and \({\mathbf {G}}\) evaluated at time \(t_k\), and \(Q=[q_{i,j}]_{i,j=1,\ldots ,n+1}\) is an \((n+1)\times (n+1)\) collocation matrix arising from cumulative integration. The system that we then wish to (approximately) solve is given by

Applying Newton iteration for this problem leads to an iterative procedure of the form \({\mathbf {x}}^{(k+1)} = {\mathbf {x}}^{(k)} - [{\mathbf {J}}({\mathbf {x}}^{(k)})]^{-1}{\mathbf {R}}({\mathbf {x}}^{(k)})\), with \({\mathbf {J}}\) denoting the Jacobian matrix of the residual function \({\mathbf {R}}\). Although Jacobian-free Newton–Krylov methods have been studied [47], we elect to form the blocks of the Jacobian matrix explicitly due to the availability of this information for the PDE systems under consideration, in order to achieve rapid convergence of the Newton scheme. This requires us to accurately form the functions \({\mathbf {F}}\) and \({\mathbf {G}}\), as well as the derivatives of these functions in the directions \({\mathbf {u}}\) and \({\mathbf {v}}\). In the code below, for the two-dimensional flow control problem with Dirichlet boundary conditions, these quantities are denoted JFu, JFv, JGu, JGv, and our software [2] allows us to compute these quantities to spectral accuracy:

In more detail, Dx1 and Dx2 are matrices applying spatial derivatives in each direction, with grad corresponding to the gradient function, and L the spectral discretization of the Laplacian operator. The function Conv applies a convolution integral with the function \(\mathbf {K}\), and gradVextDotGrad applies an operator of the form \(\nabla {}V_{\text {ext}}\cdot \nabla \), with LapVext evaluating \(\nabla ^{2}V_{\text {ext}}\) to spectral accuracy. The function scalarOperator forms a scalar function, dotVectors takes an inner product of two vectors, and dotVectorOperator similarly takes an inner product of the first argument with the second argument applied to a subsequent term. Finally, f and g encode, respectively, the source term f of the state equation and the desired state \({\widehat{\rho }}\) within the PDE operators.

For no-flux type boundary conditions the interior and boundary nodes need to be separated within the code, with the Jacobians defined separately for the boundary conditions. We refer to the open-source software [2] (which also makes use of [41]) for the full implementation with different boundary conditions, as well as for source control problems. By devising routines to compute all derivatives and integration terms to spectral accuracy for particle dynamics systems, we are able to achieve rapid Newton convergence for a range of problems. Having formed the appropriate terms of the Newton system at each iteration, these are solved inexactly using an inner Krylov method, specifically the Generalized Minimal Residual (GMRES) algorithm [72]. Column operations may be applied to \({\mathbf {J}}\) so that the leading \(2N \times 2N\) block of the Jacobian matrix is invertible, at which point the Kronecker-product based preconditioner described in [42, Sect. 2.3] may be applied.
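The spectral-in-time residual and Newton update can be illustrated on a scalar ODE \(du/dt = F(u)\), \(u(0)=u_0\), with no spatial dimension and no adjoint. The sketch builds a cumulative integration matrix Q at Chebyshev times from NumPy's numpy.polynomial.chebyshev utilities, assumed here to play the role of the collocation matrix described above, forms the residual \(R(U) = U - u_0 - Q F(U)\) (an assumed form, since the residual expressions above are not reproduced), and applies Newton's method with the dense Jacobian \(I - Q\,\mathrm {diag}(F'(U))\). A direct solve replaces the preconditioned GMRES iteration, which only pays off for the large systems of the paper. As in Sect. 5, the initial guess is the initial condition at all time points; function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb_poly


def cumulative_integration_matrix(n, T):
    """Chebyshev-Lobatto times t_0 = 0 < ... < t_n = T and the (n+1)x(n+1)
    matrix Q with (Q @ f)[k] ~ integral_0^{t_k} f(s) ds for samples f of f(t)."""
    x = -np.cos(np.pi * np.arange(n + 1) / n)          # ascending, x_0 = -1
    V = cheb_poly.chebvander(x, n)                      # V[k, m] = T_m(x_k)
    coeffs = np.linalg.inv(V)                           # column j: Lagrange basis l_j
    integ = cheb_poly.chebint(coeffs, lbnd=-1, axis=0)  # antiderivatives, zero at -1
    Q = cheb_poly.chebval(x, integ).T * (T / 2)         # Q[k, j] = int_0^{t_k} l_j
    return T * (x + 1) / 2, Q


def newton_spectral_in_time(F, dF, u0, n=16, T=1.0, tol=1e-12, max_iter=20):
    """Newton's method for the collocation residual R(U) = U - u0 - Q F(U) of the
    scalar ODE du/dt = F(u), u(0) = u0, with dense Jacobian J = I - Q diag(F'(U))."""
    t, Q = cumulative_integration_matrix(n, T)
    U = np.full(n + 1, float(u0))            # initial guess: u0 at every time point
    for it in range(max_iter):
        R = U - u0 - Q @ F(U)
        if np.max(np.abs(R)) < tol:
            return t, U, it
        J = np.eye(n + 1) - Q * dF(U)[None, :]  # scales column j of Q by F'(U_j)
        U = U - np.linalg.solve(J, R)            # direct solve in place of GMRES
    raise RuntimeError("Newton iteration did not converge")


# Usage: du/dt = -u^2, u(0) = 1, whose exact solution is u(t) = 1 / (1 + t).
t, U, newton_its = newton_spectral_in_time(lambda u: -u**2, lambda u: -2 * u, 1.0)
```

With 17 Chebyshev times, the computed solution should agree with \(1/(1+t)\) to near machine precision after a handful of Newton steps, reflecting both the quadratic convergence of Newton's method and the spectral accuracy in time.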

We believe there are advantages to both the fixed-point and Newton–Krylov methods we have described above. For a range of problems the higher-order Newton–Krylov method is expected to yield more rapid convergence, due to the inclusion of Jacobian information, and the spectral-in-time representation of the residual along with a pseudospectral discretization in space leads to an efficient solver. By contrast, the fixed-point method does not require the Jacobian matrix (or an approximation to it), and is likely to be applicable to more general problems such as those with additional inequality constraints, which may have lower regularity and therefore may not be amenable to the spectral-in-time approximation. In Sect. 5 we carry out a number of experiments using both fixed-point and Newton–Krylov methods, to demonstrate and compare their effectiveness.

4.6 Measures of accuracy

All errors presented in Sect. 5 are calculated as a measure of the difference between a variable of interest, y, and a reference value \(y_R\), e.g., a previous value of \(W^{(i)}\), or an analytic solution to a test problem. The error measure \({\mathcal {E}}\) is composed of an \(L^2\) error in space and an \(L^\infty \) error in time. We define absolute and relative \(L^2\) spatial errors

where the small additional term in the denominator prevents division by zero. These spatial errors are combined into the full error measure:

The minimum between absolute and relative spatial error is taken to avoid choosing an erroneously large relative error, caused by division of one numerically very small term by another.
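A sketch of this error measure, with time snapshots stored as columns as in Sect. 4.3, is given below. The spatial \(L^2\) norm is approximated with user-supplied quadrature weights (for Chebyshev points, Clenshaw–Curtis weights would be a natural choice), and the value of the small denominator term eps is a hypothetical placeholder, since the exact definitions are not reproduced here.

```python
import numpy as np


def error_measure(Y, Y_ref, weights, eps=1e-10):
    """Max over time of min(absolute, relative) spatial L2 error.

    Y, Y_ref : arrays of shape (N, n + 1); column k is the snapshot at time t_k.
    weights  : length-N quadrature weights, so weights @ f**2 ~ ||f||_{L2}^2.
    eps      : small term in the relative error's denominator (hypothetical value)."""
    def l2(F):
        return np.sqrt(weights @ F**2)           # one L2 norm per time column
    abs_err = l2(Y - Y_ref)
    rel_err = abs_err / (l2(Y_ref) + eps)
    return np.max(np.minimum(abs_err, rel_err))  # L-infinity over time


# Usage with hypothetical data: a 10% perturbation of one snapshot.
weights = np.full(5, 0.5)
Y_ref = np.ones((5, 3))
Y = Y_ref.copy()
Y[:, 1] += 0.1
err = error_measure(Y, Y_ref, weights)     # the relative error 0.1 wins the min
```

Here the absolute error of the perturbed snapshot is about 0.158 while its relative error is 0.1, so the minimum picks the relative value; for snapshots with very small norms, the absolute error would be selected instead.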

We have benchmarked the fixed-point scheme against matlab’s inbuilt fsolve function. The latter uses the trust-region-dogleg algorithm, see [65], to solve the optimality system of interest. While it is very robust, it is also much slower than the fixed-point method, which works reliably for the types of problems considered in this paper.

5 Numerical experiments

The optimal control problems (2.2) and (2.5) require inputs in terms of the desired state \({{\widehat{\rho }}}\), the PDE source term f, and the external potential \(V_{\text {ext}}\), alongside initial and final time conditions for \(\rho \) and \(q\), respectively. Additionally, an initial guess for the control \(\mathbf {w}\) is needed when using the fixed-point method. These are given for each of the examples below. We also require an interaction kernel, which here we fix as

We note that the quality of our results is robust with respect to the precise choice of the interaction kernel; the example here is chosen for illustration. Interest lies in how the solution to the optimization problems changes upon varying the interaction strength, \(\kappa \). Here we consider three representative values: \(\kappa = 0\) (no interaction), \(\kappa = -1\) (attraction), and \(\kappa = 1\) (repulsion). The value \(\kappa = 0\) is chosen as a baseline, since in this case the PDE constraint reduces to an advection–diffusion equation. The attraction and repulsion strengths are chosen to showcase interesting differences between the solutions. If \(|\kappa |\) is too small, diffusion dominates the solution, and no significant differences can be observed from the non-interacting case. Furthermore, if \(|\kappa |\) is too large, steep gradients form, which are difficult to resolve numerically.

As a baseline for the cost, we solve the forward PDE using \(\mathbf {w}=\mathbf {0}\) and evaluate the associated cost functional \({\mathcal {J}}\), whose value is denoted by \({\mathcal {J}}_{uc}\). We then minimize the cost functional by optimizing \(\mathbf {w}\), resulting in a cost \({\mathcal {J}}_c\), which we expect to be lower than \({\mathcal {J}}_{uc}\). This cost depends on the value of the regularization parameter \(\beta \), and we expect the norm of the optimal control to increase as \(\beta \) decreases. When an initial guess for the control is required, i.e., in the fixed-point method, we take \(\mathbf {w} = \mathbf {0}\), corresponding to the reference system. The Newton–Krylov solver requires an initial guess for the state and adjoint variables at all times. In the examples below, it suffices to use the initial and final time conditions for the state and adjoint, respectively, as the initial guess at all time points.

In the following examples, the domain considered is \(\Omega \times (0,T) = (-1,1)^d \times (0,1)\); our results are robust to changes in the domain. The numbers of spatial (Chebyshev) points are \(N_1 = N_2 = 20\) for the two-dimensional examples, and \(N_1 = N_2 = N_3 = 20\) for the three-dimensional example. The number of time points is \(n=11\), unless stated otherwise. The absolute and relative tolerances of the ODE solver (matlab’s ode15s [74]) for the forward problems are set to \(10^{-9}\). The tolerances for the Newton–Krylov and fixed-point solvers are \(10^{-16}\) and \(10^{-4}\), respectively. The mixing parameter \(\lambda \) for each iteration of the fixed-point method is determined using Algorithm 1, with \(\delta = 0.3\) and \(\sigma = 0.5\).

All tests are carried out on a Dell PowerEdge R430 running Scientific Linux 7 (four Intel Xeon E5-2680 v3, 2.5 GHz, 30 MB cache, 9.6 GT/s QPI) with 192 GB RAM.

5.1 Two-dimensional examples

We now present four examples, applying no-flux and Dirichlet boundary conditions to both flow and source control problems. The precise initial condition, external potential, and target chosen in each case are given below. We recall that the two-body interaction is given by (5.1). For our first example (flow control with no-flux boundary conditions, Sect. 5.1.1), we show results using both Newton–Krylov and fixed-point solvers. However, since they produce very similar results, as further validated in Appendix A, for the remaining examples we restrict our results to the more efficient Newton–Krylov scheme.

5.1.1 Non-linear (flow) control problem with no-flux boundary conditions

Flow Control, No-Flux: Snapshots of the optimal control for different interaction strengths, \(\kappa = -1\), \(\kappa = 0\), and \(\kappa = 1\) (top to bottom), with \(\beta = 10^{-3}\). The lengths of the arrows are proportional to \(\Vert \mathbf {w}\Vert \). A contour plot of the external potential \(V_{\text {ext}}\) is superimposed for reference, with a corresponding colorbar on the right-hand side

Here we consider (2.2) with no-flux boundary conditions (2.4). The inputs are

where \(Z \approx 1.3791\) is a normalization constant.

In Table 1, the value of the cost functional for the initial configuration (\({\mathcal {J}}_{uc}\)), where \(\mathbf {w} = \mathbf {0}\), is compared with the optimized case (\({\mathcal {J}}_{c}\)) for different values of \(\beta \) and for each of the interaction strengths. As expected, in all cases \({\mathcal {J}}_{c} \le {\mathcal {J}}_{uc}\) and the lowest values of \({\mathcal {J}}_{c}\) occur for the smallest \(\beta \) values. For large values of \(\beta \), applying control is heavily penalized and the optimal control approaches zero, which coincides with the uncontrolled case. The numerical solution takes between 120 and 220 seconds for the Newton–Krylov solver, and between 16 seconds and 30 minutes for the fixed-point solver. While the Newton–Krylov solver always converges in fewer than 10 iterations for these tests, the fixed-point solver requires between 1 and 53 iterations, depending on the problem considered (fewer iterations when \(\beta \) is large and more when \(\beta \) is small). There is an outlier of 303 iterations (in 3 hours) taken for the problem with \(\kappa = -1\) and \(\beta = 10^{-3}\), which we believe is due to attractive interactions being harder to resolve numerically. Note that timings and numbers of iterations are provided for completeness, and should not be used as a tool for direct comparison of the two methods.

The results (from the Newton–Krylov scheme) for \(\beta = 10^{-3}\) and various interaction strengths, \(\kappa \), are shown in Figs. 1 and 2, which display the optimal states and controls, respectively. At earlier times, the density accumulates in regions with potential wells and the areas where the potential is large are avoided. It is clear that the control acts to drive the particle distribution towards the desired state. However, it does not act uniformly around the peak of the desired state, but rather acts strongly in the area between the location of the desired peak and the point \((-1,-1)\). This is due to the external potential being large in this area, which requires more control to overcome. Since the desired state requires the particles to accumulate in one part of the domain, more control has to be applied to the repulsive particles, which oppose the desired clustering. This is evident in Fig. 2, when comparing the magnitude of the control, symbolised by differently sized arrows, for \(\kappa = 1\) (repulsion) with the other two cases. In the attractive configuration, the effect of the attraction supports the control action, so less control is needed to reach the desired state.

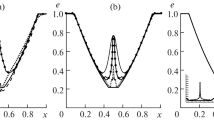

Figure 3 shows the convergence of the residuals of the Newton–Krylov scheme for different values of \(\beta \). For all values of \(\beta \), the residual reaches \(10^{-13}\) for both the state and adjoint variables within 8 iterations, with slightly faster convergence for larger values of \(\beta \).
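The rapid decay of the residual is characteristic of Newton-type methods close to a solution. The following is a minimal sketch of that behaviour, not the authors' Newton–Krylov scheme. It applies Newton's method to the first-order optimality system of a hypothetical scalar toy problem: minimize \(\tfrac12 (y-\hat y)^2 + \tfrac{\beta}{2}u^2\) subject to the state equation \(y + y^3 = u\), with the control eliminated through the gradient equation \(u = p/\beta\). Because this system has only two unknowns, the Krylov inner solve is replaced by an exact \(2 \times 2\) solve. Convergence is measured by the maximum norm of the residual relative to the initial residual. The function names and the choice \(\hat y = 1\) are assumptions made for illustration.

```python
def optimality_residual(y, p, beta, y_hat):
    """Residual of the reduced first-order optimality system of the toy problem.

    Toy problem (hypothetical): minimize 0.5*(y - y_hat)**2 + 0.5*beta*u**2
    subject to y + y**3 = u. The gradient equation beta*u = p is used to
    eliminate u, leaving the state and adjoint equations in (y, p).
    """
    state = y + y**3 - p / beta                     # y + y^3 - u = 0 with u = p/beta
    adjoint = (y - y_hat) + p * (1.0 + 3.0 * y**2)  # derivative of Lagrangian in y
    return state, adjoint


def newton_solve(beta, y_hat=1.0, tol=1e-13, max_iter=50):
    """Newton's method with a relative max-norm stopping test.

    Returns (y, p, history); history holds the relative residual after each step.
    """
    y, p = 0.0, 0.0
    r0 = max(abs(v) for v in optimality_residual(y, p, beta, y_hat)) or 1.0
    history = []
    for _ in range(max_iter):
        f_state, f_adj = optimality_residual(y, p, beta, y_hat)
        # Jacobian [[a, b], [c, d]] of (state, adjoint) with respect to (y, p)
        a, b = 1.0 + 3.0 * y**2, -1.0 / beta
        c, d = 1.0 + 6.0 * y * p, 1.0 + 3.0 * y**2
        det = a * d - b * c
        # Exact 2x2 solve of J [dy, dp] = -F by Cramer's rule; for a large
        # discretized system, a Krylov method would be used here instead.
        dy = (-f_state * d + b * f_adj) / det
        dp = (-a * f_adj + c * f_state) / det
        y, p = y + dy, p + dp
        rel = max(abs(v) for v in optimality_residual(y, p, beta, y_hat)) / r0
        history.append(rel)
        if rel < tol:
            return y, p, history
    raise RuntimeError("Newton iteration did not reach the tolerance")


for beta in (1e-3, 1e-1, 1.0):
    y, p, history = newton_solve(beta)
    print(f"beta = {beta:g}: {len(history)} Newton steps, control u = {p / beta:.6f}")
```

Printing `history` for any of these values shows the relative residual shrinking very rapidly once the iterate is near the solution, in line with the local quadratic convergence of Newton's method.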

Table 2 shows the difference \({\mathcal {E}}\), as defined in Sect. 4.6, between the solutions computed by the Newton–Krylov and fixed-point methods. Here we use \(N_1 = N_2 = 30\) and \(n = 21\) to ensure accurate solutions with the fixed-point method. As expected, the resulting plots of the density and control for the fixed-point method are very similar to those for the Newton–Krylov solver, and hence we do not show them here. The difference between the two solvers is generally of the order of the tolerance of the fixed-point scheme or lower, indicating that both methods converge to the same numerical solution. Note that convergence of the fixed-point method is measured in the norm discussed in Sect. 4.6, while the Newton–Krylov solver uses a relative \(L^\infty \) norm of the residual, as defined in Sect. 4.5.
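The agreement between two solvers is limited by the looser of their stopping criteria, here that of the fixed-point scheme. A small hypothetical example shows this, though it is not the paper's model or its fixed-point variant. We again use the scalar toy problem: minimize \(\tfrac12(y-\hat y)^2 + \tfrac{\beta}{2}u^2\) subject to \(y + y^3 = u\). The sketch solves it with a damped (mixing) fixed-point iteration, which alternates a state solve, an adjoint solve and a relaxed control update. It then compares the result with a reference solution obtained by bisection on the reduced optimality condition. The mixing parameter `lam`, the tolerance and the value \(\beta = 0.1\) are assumptions chosen so that the toy iteration converges.

```python
def solve_state(u, tol=1e-15, max_iter=100):
    """Solve the toy state equation y + y**3 = u for its unique real root."""
    y = u  # residual at y = u is u**3, same sign as u: Newton converges monotonically
    for _ in range(max_iter):
        step = (y + y**3 - u) / (1.0 + 3.0 * y**2)
        y -= step
        if abs(step) <= tol * max(1.0, abs(y)):
            break
    return y


def fixed_point_solve(beta, y_hat=1.0, lam=0.3, tol=1e-8, max_iter=1000):
    """Damped fixed-point iteration: state solve, adjoint solve, mixed control update."""
    u = 0.0
    for it in range(1, max_iter + 1):
        y = solve_state(u)                           # state equation
        p = -(y - y_hat) / (1.0 + 3.0 * y**2)        # adjoint equation
        u_new = (1.0 - lam) * u + lam * (p / beta)   # relaxed gradient equation u = p/beta
        if abs(u_new - u) <= tol * max(1.0, abs(u_new)):
            return u_new, it
        u = u_new
    raise RuntimeError("fixed-point iteration did not converge")


def reference_control(beta, y_hat=1.0, n_bisect=200):
    """Reference control from bisection on the reduced optimality condition
    h(y) = (y - y_hat) + beta*(y + y**3)*(1 + 3*y**2) = 0, which is increasing in y."""
    lo, hi = 0.0, y_hat  # h(0) < 0 < h(y_hat) for y_hat > 0
    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if (mid - y_hat) + beta * (mid + mid**3) * (1.0 + 3.0 * mid**2) < 0.0:
            lo = mid
        else:
            hi = mid
    y = 0.5 * (lo + hi)
    return y + y**3


beta = 0.1
u_fp, iterations = fixed_point_solve(beta)
u_ref = reference_control(beta)
rel_diff = abs(u_fp - u_ref) / abs(u_ref)
print(f"{iterations} fixed-point iterations, relative difference {rel_diff:.1e}")
```

In this toy setting, the relative difference stays at or below the fixed-point tolerance `tol`, which mirrors the behaviour reported in Table 2.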

5.1.2 Non-linear (flow) control problem with Dirichlet boundary conditions

Our next example is a control problem of type (2.2), with Dirichlet boundary conditions (2.3), and

The resulting costs are given in Table 3. Each example takes between 50 and 100 seconds to solve.

The desired state prescribes that the density should move from one uniform bump in the middle of the domain to a steeper shape elongated along the \(x_1\)-axis. In this example, the effect of the different interaction strengths and of the external potential on the control is clearly visible; see Fig. 5. The external potential is large on the left side of the domain, which naturally drives the density away from this region, so most of the control effort is concentrated on the right side of the domain. However, for attractive particles, additional control must be applied on the right side of the domain, since the attraction opposes the spreading of the density along the \(x_1\)-axis; for these particles, the initially clumped density is a more ‘natural’ state (see Fig. 4). In contrast, for repulsive particles, most of the work of the control is done to push the particles together.

Fig. 4 Flow Control, Dirichlet: Snapshots of the optimal \(\rho \) for \(\kappa = -1\) and \(\beta = 10^{-3}\). The optimal states for \(\kappa = 0\) and \(\kappa = 1\) look almost identical to this case, because this choice of \(\beta \) allows the control to drive the state close to the desired state \({{\widehat{\rho }}}\), and are hence not shown here.

Fig. 5 Flow Control, Dirichlet: Snapshots of the optimal control for \(\kappa = -1\), \(\kappa = 0\), and \(\kappa = 1\) (top to bottom), for \(\beta = 10^{-3}\). See Fig. 2 for further details.

5.1.3 Linear (source) control problem with no-flux boundary conditions

We consider problem (2.5) with no-flux boundary conditions (2.6). The chosen inputs for this example are

The resulting costs for different \(\beta \) and \(\kappa \) are given in Table 4. Each example takes between 100 and 200 seconds to solve, apart from the \(\beta = 10^{-5}\) case, which takes around 25 seconds.

In Fig. 6 we show the optimal states for \(\beta = 10^{-3}\) and different interaction strengths. Since \(\beta \) is small, the optimal state is very close to the desired state \({{\widehat{\rho }}}\) (not shown). The external potential \(V_{\text {ext}}\) has a clear effect on both the optimal state and the control. Since \(V_{\text {ext}}\) is large around \(x_2 = 1\), more control has to be applied in this area to force the density towards \({\widehat{\rho }}\). Because of this effect, the state is also slightly asymmetric, even though \({{\widehat{\rho }}}\) is symmetric.

The effect of the different interaction strengths on the state can be seen in Fig. 6 from the shape of the particle distribution. The desired state \({{\widehat{\rho }}}\) prescribes a higher density near the two corners \((-1,-1)\) and \((1,1)\). Without control or an external potential, repulsive particles accumulate at the boundary of the domain, whilst attractive particles favour its centre. Hence, since the target density in this example is higher near the boundary, less control needs to be applied for repulsive particles. Attractive particles accumulate in a rounder shape, while repulsive particles are more spread out, as would be expected from their interactions.

5.1.4 Linear (source) control problem with Dirichlet boundary conditions

We consider problem (2.5) with Dirichlet boundary conditions (2.3). The chosen inputs are

Note, in particular, that the external potential is time dependent; since it decays over time, its effect is strongest at earlier times. The resulting costs for different \(\beta \) and \(\kappa \) are given in Table 5. Each example takes between 20 and 90 seconds to solve, apart from the \(\beta = 10^{-5}\) case, which takes around 25 seconds.

Fig. 7 shows the optimal state for \(\beta = 10^{-3}\) and varying \(\kappa \). Irrespective of the interaction strength, the state is once again close to the target state \({{\widehat{\rho }}}\). The corresponding optimal controls are shown in Fig. 8. Since the external potential is large in the bottom half of the domain, the density is not centred in the middle of the domain but shifted slightly upwards. At \(t = 0.1\), the control is applied where the external potential is steep. At later times, the control is mostly applied in the left half of the domain, where the desired state \({{\widehat{\rho }}}\) prescribes the density to accumulate. While the qualitative behaviour of the control is similar in each case, less control has to be applied for attractive particles than for repulsive ones. This is because the attraction causes the particles to clump together, which supports the shape of the desired state \({{\widehat{\rho }}}\).

Fig. 8 Source Control, Dirichlet: Snapshots of the optimal control for \(\kappa = -1\), \(\kappa = 0\), and \(\kappa = 1\) (top to bottom), with \(\beta = 10^{-3}\). A contour plot of the external potential \(V_{\text {ext}}\) is superimposed on the control plots for reference, with a corresponding colorbar on the right-hand side.

5.2 Three-dimensional example

Our final example is three-dimensional, featuring the non-linear flow control problem (2.2) with no-flux boundary conditions (2.4). We chose this combination as the three-dimensional illustration because it is both the most challenging combination of control type and boundary conditions and the most physically relevant for our applications. The chosen inputs are

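This example is run only for \(\beta = 10^{-3}\), because each problem takes between 30 and 38 hours to solve. This is a direct consequence of the ‘curse of dimensionality’. On a tensor-product grid with \(N\) points in each spatial direction, the number of spatial unknowns grows from \(N^2\) in two dimensions to \(N^3\) in three.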
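A rough count shows how quickly the problem size grows with dimension. The sketch below counts two things: the collocation values of a single space-time variable on a tensor-product grid with \(N\) points per spatial direction and \(n\) points in time, and the storage for a dense \(N^d \times N^d\) matrix on the spatial grid, such as might be used to represent a non-local interaction term at one time point. The 2D values \(N = 30\), \(n = 21\) are those used for the comparison in Table 2. Reusing \(N = 30\) in three dimensions is an assumption made purely for comparison, since the 3D inputs are not reproduced here. The dense-matrix representation of the non-local term is likewise an assumption.

```python
def grid_unknowns(N, d, n):
    """Collocation values of one space-time field: N points per spatial direction, n in time."""
    return N**d * n


def dense_matrix_bytes(N, d, bytes_per_entry=8):
    """Storage for a dense (N**d x N**d) double-precision matrix on the spatial grid."""
    return (N**d) ** 2 * bytes_per_entry


N, n = 30, 21  # 2D values from Table 2; reused in 3D only for comparison
unknowns_2d = grid_unknowns(N, 2, n)
unknowns_3d = grid_unknowns(N, 3, n)
mem_2d = dense_matrix_bytes(N, 2)
mem_3d = dense_matrix_bytes(N, 3)
print(f"unknowns per field: 2D {unknowns_2d}, 3D {unknowns_3d} (x{unknowns_3d // unknowns_2d})")
print(f"dense spatial matrix: 2D {mem_2d / 1e6:.2f} MB, 3D {mem_3d / 1e9:.2f} GB")
```

Moving from two to three dimensions multiplies the number of unknowns per field by \(N\) (here 30) and the storage of such a dense matrix by \(N^2\) (here 900). This growth is before accounting for the larger linear systems that each solver iteration must handle.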

The effect of the different interaction strengths is clearly displayed in Fig. 9 and is particularly pronounced at earlier times of the particle evolution. Attractive interactions evidently assist the control in pushing the density into a cluster in the middle of the domain, as prescribed by the desired state \({{\widehat{\rho }}}\), while more control is needed to achieve a similar effect in the repulsive case. For \(\kappa = 0\) we obtain \({\mathcal {J}}_c = 0.0078\), compared with \({\mathcal {J}}_{uc} = 0.0185\) from the forward problem with \(\mathbf {w} = \mathbf {0}\). For \(\kappa = 1\) we obtain \({\mathcal {J}}_c = 0.0099\), compared with \({\mathcal {J}}_{uc} = 0.0222\) in the uncontrolled case, and for \(\kappa = -1\) we obtain \({\mathcal {J}}_c = 0.0059\), compared with \({\mathcal {J}}_{uc} = 0.0149\). As expected, the optimal control yields a significantly lower cost than the uncontrolled case.
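The size of the reduction can be quantified from the values just quoted. The short calculation below uses only the reported three-dimensional costs for \(\beta = 10^{-3}\) and computes the relative decrease \(1 - \mathcal{J}_c/\mathcal{J}_{uc}\) for each interaction strength. The variable names are illustrative.

```python
# Reported 3D costs for beta = 1e-3: kappa -> (J_c, J_uc)
costs = {-1: (0.0059, 0.0149), 0: (0.0078, 0.0185), 1: (0.0099, 0.0222)}


def relative_reduction(j_c, j_uc):
    """Fraction of the uncontrolled cost removed by the optimal control."""
    return 1.0 - j_c / j_uc


reductions = {kappa: relative_reduction(*pair) for kappa, pair in costs.items()}
for kappa, r in sorted(reductions.items()):
    print(f"kappa = {kappa:+d}: cost reduced by {100 * r:.1f}%")
```

By this measure, the optimal control lowers the cost by roughly 55 to 60% in each case. The largest relative reduction occurs for attractive particles (\(\kappa = -1\), about 60.4%) and the smallest for repulsive ones (\(\kappa = 1\), about 55.4%).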

6 Concluding remarks

We have derived an accurate and efficient algorithmic strategy for solving the first-order optimality conditions of PDE-constrained optimization problems with additional non-local integral terms, which describe multiscale particle dynamics. Our approach is linked to the DDFT approach applied to (non-optimized) systems in statistical mechanics. It uses a pseudospectral method in space and time and employs fixed-point and Newton–Krylov schemes within the optimization solver. This novel methodology is more general in scope than existing numerical implementations for similar problems, and demonstrates the substantial computational benefits of applying such methods to non-local, non-linear systems of PDEs. Numerical tests indicate the effectiveness of our approach for a range of examples, boundary conditions, and problem parameters. An open-source software implementation of our methodology is available at [2]. There are many possible extensions of our approach. For instance, one may apply our methodology to problems where the misfit between the state and the desired state is measured only at the final time, to models with different cost functionals, and to boundary control problems. Furthermore, methods of this type may be tailored to specific particle dynamics applications in fields such as opinion dynamics, flocking, swarming, and optimal control problems in robotics; such applications will be tackled in future work.

References

Achdou, Y., Capuzzo-Dolcetta, I.: Mean field games: Numerical methods. SIAM J. Numer. Anal. 48(3), 1136–1162 (2010)

Aduamoah, M., Goddard, B.D., Pearson, J.W., Roden, J.: 2DChebClassPDECO [Software]. https://bitbucket.org/bdgoddard/2dchebclasspdecopublic/ (2022)

Albi, G., Bongini, M., Cristiani, E., Kalise, D.: Invisible control of self-organizing agents leaving unknown environments. SIAM J. Appl. Math. 76(4), 1683–1710 (2016)

Albi, G., Choi, Y.-P., Fornasier, M., Kalise, D.: Mean field control hierarchy. Appl. Math. Optim. 76, 93–135 (2017)

Albi, G., Herty, M., Pareschi, L.: Kinetic description of optimal control problems and applications to opinion consensus. Commun. Math. Sci. 13(6), 1407–1429 (2015)

Albi, G., Kalise, D.: (Sub)Optimal feedback control of mean field multi-population dynamics. IFAC-PapersOnLine 51(3), 86–91 (2018)

Albi, G., Pareschi, L.: Selective model-predictive control for flocking systems. Commun. Appl. Ind. Math. 9(2), 4–21 (2018)

Albi, G., Pareschi, L., Zanella, M.: Boltzmann-type control of opinion consensus through leaders. Philos. Trans. Roy. Soc. A 372(2028), 20140138 (2014)

Alt, W., Chaplain, M., Griebel, M., Lenz, J. (eds.): Polymer and Cell Dynamics: Multiscale Modelling and Numerical Simulations. Birkhäuser (2012)

Archer, A.J., Chacko, B., Evans, R.: The standard mean-field treatment of inter-particle attraction in classical DFT is better than one might expect. J. Chem. Phys. 147(3), 034501 (2017)

Aubin, H., Nichol, J.W., Hutson, C.B., Bae, H., Sieminski, A.L., Cropek, D.M., Akhyari, P., Khademhosseini, A.: Directed 3D cell alignment and elongation in microengineered hydrogels. Biomaterials 31(27), 6941–6951 (2010)

Binney, J., Tremaine, S.: Galactic Dynamics. Princeton University Press, Princeton, NJ (2011)

Bongini, M., Buttazzo, G.: Optimal control problems in transport dynamics. Math. Models Methods Appl. Sci. 27(3), 427–451 (2017)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Dover Publications, Mineola, NY (2001)

Briceño-Arias, L.M., Kalise, D., Silva, F.J.: Proximal methods for stationary mean field games with local couplings. SIAM J. Control Optim. 56(2), 801–836 (2018)

Bruna, M., Chapman, S.J.: Excluded-volume effects in the diffusion of hard spheres. Phys. Rev. E 85(1), 011103 (2012)

Burger, M., Di Francesco, M., Markowich, P.A., Wolfram, M.-T.: Mean field games with nonlinear mobilities in pedestrian dynamics. Discrete Contin. Dyn. Syst. Ser. B 19(5), 1311–1333 (2014)

Burger, M., Pinnau, R., Roth, A., Totzeck, C., Tse, O.: Controlling a self-organizing system of individuals guided by a few external agents – Particle description and mean-field limit. arXiv e-prints, arXiv:1610.01325 (2016)

Burger, M., Pinnau, R., Totzeck, C., Tse, O.: Mean-field optimal control and optimality conditions in the space of probability measures. SIAM J. Control Optim. 59(2), 977–1006 (2021)

Burger, M., Pinnau, R., Totzeck, C., Tse, O., Roth, A.: Instantaneous control of interacting particle systems in the mean-field limit. J. Comput. Phys. 405, 109181 (2020)

Carrillo, J.A., Castro, M.J., Kalliadasis, S., Perez, S.P.: High-order well-balanced finite-volume schemes for hydrodynamic equations with nonlocal free energy. SIAM J. Sci. Comput. 43(2), A828–A858 (2021)

Carrillo, J.A., Chertock, A., Huang, Y.: A finite-volume method for nonlinear nonlocal equations with a gradient flow structure. Commun. Comput. Phys. 17(1), 233–258 (2015)

Carrillo, J.A., Choi, Y.-P., Totzeck, C., Tse, O.: An analytical framework for consensus-based global optimization method. Math. Models Methods Appl. Sci. 28(6), 1037–1066 (2018)

Carrillo, J.A., Gvalani, R.S., Pavliotis, G.A., Schlichting, A.: Long-time behaviour and phase transitions for the McKean-Vlasov equation on the torus. Arch. Ration. Mech. Anal. 235(1), 635–690 (2020)

Carrillo, J.A., Kalliadasis, S., Perez, S.P., Shu, C.-W.: Well-balanced finite-volume schemes for hydrodynamic equations with general free energy. Multiscale Model. Simul. 18(1), 502–541 (2020)

Carrillo, J.A., Pimentel, E.A., Voskanyan, V.K.: On a mean field optimal control problem. Nonlinear Anal. 199, 112039 (2020)

Chan, G.K.-L., Finken, R.: Time-dependent density functional theory of classical fluids. Phys. Rev. Lett. 94(18), 183001 (2005)

Cheng, C.Z., Knorr, G.: The integration of the Vlasov equation in configuration space. J. Comput. Phys. 22(3), 330–351 (1976)