Abstract

Although quantile regression to calculate risk measures is widely established in the financial literature, when considering data observed at mixed-frequency, an extension is needed. In this paper, a model is built on a mixed-frequency quantile regressions to directly estimate the Value-at-Risk (VaR) and the Expected Shortfall (ES) measures. In particular, the low-frequency component incorporates information coming from variables observed at, typically, monthly or lower frequencies, while the high-frequency component can include a variety of daily variables, like market indices or realized volatility measures. The conditions for the weak stationarity of the daily return process are derived and the finite sample properties are investigated in an extensive Monte Carlo exercise. The validity of the proposed model is then explored through a real data application using two energy commodities, namely, Crude Oil and Gasoline futures. Results show that our model outperforms other competing specifications, on the basis of some popular VaR and ES backtesting test procedures.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Risk management has spurred a vast literature in financial econometrics to meet the challenges imposed by the Basel-II and Basel-III agreements and develop model-based approaches to calculate regulatory capital requirements (Kinateder, 2016) in a forecasting perspective. For tail market risk, special attention was devoted to the Value-at-Risk (VaR) measure at a given confidence level \(\tau \), VaR(\(\tau \)), defined as the worst portfolio value movement (return) to be expected at \(1-\tau \) probability over a specific horizon (Jorion, 1997). The VaR measure is complemented by another tail risk measure called Expected Shortfall (ES), defined as the conditional expectation of returns in excess of the VaR (see Acerbi & Tasche, 2002a, Rockafellar & Uryasev, 2002, among others). Unlike VaR, ES is a coherent risk measure (Artzner et al., 1999; Acerbi & Tasche, 2002b) and provides deeper information on the shape and the heaviness of the tail in the loss distribution. Together, such measures represent the most popular benchmark in the risk management practice (Christoffersen & Gonçalves, 2005; Sarykalin et al., 2008).

Being the \(\tau \)-quantile of a portfolio return distribution, the VaR(\(\tau \)) can be predicted as the product of the portfolio volatility forecast times the quantile of the hypothesized distribution. For the first component, volatility clustering, modeled by conditionally autoregressive models (such as the ARCH/GARCH - Engle, 1982; Bollerslev, 1986), produces good forecasts capable of reproducing well known stylized facts of financial time series, including skewed behavior and fat tails (Cont, 2001, Engle & Patton, 2001, among others). Further improvements were made possible by the direct predictability of realized measures of financial volatility (Andersen et al., 2006b). While a choice of a specific parametric distribution for the innovation term may be uninfluential for model parameter estimation (Bollerslev & Wooldridge, 1992), unless a few extreme events (e.g. the Flash Crash of May 2005 or the presence of outliers, Carnero et al., 2012) occur, a wrong choice of distribution for the innovation term delivers inaccurate quantiles and hence an inadequate VaR(\(\tau \)) forecasting: see for example Manganelli and Engle (2001) and El Ghourabi et al. (2016).

As an alternative, the VaR(\(\tau \)) can be directly derived through quantile regression methods (Koenker & Bassett, 1978; Engle & Manganelli, 2004) where no distributional hypothesis is required. A first suggestion in this direction comes from Koenker and Zhao (1996) who use quantile regression for a particular class of ARCH models, i.e., the Linear ARCH models (Taylor, 1986), chosen for its ease of tractability in deriving theoretical properties. Subsequent refinements are, for instance, Xiao and Koenker (2009), Lee and Noh (2013), Zheng et al. (2018) for GARCH models, Noh and Lee (2016) who consider asymmetry, Chen et al. (2012) who consider nonlinear regression quantile approach with intra-day price, Bayer (2018) who combines VaR forecasts via penalized quantile regressions, Taylor (2019) who considers the Asymmetric Laplace distribution to jointly estimate VaR and ES and the multivariate generalization of Merlo et al. (2021).

A relatively recent stream of literature investigates the value of information provided by data available at both high- and low-frequency incorporated into the same model in assessing the dynamics of financial market activity: this is the case of the GARCH-MIDAS model proposed by Engle et al. (2013) (building on the MI(xed)-DA(ta) Sampling approach by Ghysels et al., 2007), the regime switching GARCH-MIDAS of Pan et al. (2017), the recent paper by Xu et al. (2021) who consider a MIDAS component in the Conditional Autoregressive Value-at-Risk (CAViaR) of Engle and Manganelli (2004), the work of Pan et al. (2021) where the parameters of the GARCH-MIDAS models for jointly calculating VaR and ES are obtained through the loss function of Fissler and Ziegel (2016), and the contribution of Xu et al. (2022) who calculate the weekly tail risks of three market indices using information from daily variables.

The main contribution of this paper is a novel Mixed-Frequency Quantile Regression model (MF-QR, extending Koenker & Zhao, 1996): we show how the constant term in the quantile regression can be written as a function of data sampled at lower frequencies (and hence becomes a low-frequency component), while the high-frequency component is regulated by the daily data. As a result, with the aim of capturing dependence on the business cycle, we benefit from the information contained in low-frequency variables (cf. Mo et al., 2018, Conrad & Loch, 2015, among others), and we achieve a rather flexible representation of volatility dynamics. Since both components enter additively, our model can be seen as a quantile model version of the Component GARCH by Engle and Lee (1999).

In the proposed model, we also include a predetermined variable observed daily, typically a realized measure: this adds the “–X” component in the resulting MF-QR-X model. This variable can capture extra information useful in modeling and forecasting future volatility and may improve the accuracy of tail risk forecasts. Such a use in the quantile regression framework is not new in itself: the paper by Gerlach and Wang (2020) jointly forecasts VaR and ES and Zhu et al. (2021) predict VaR by adopting a GARCH-X model for the volatility term. Also the work of Žikeš and Baruník (2016) uses the realized measures in the context of quantile regressions to investigate the features of conditional quantiles of realized volatility and asset returns.

The proposed MF-QR-X specification and its nested alternatives (including the QR version of Koenker and Zhao 1996) belong to the class of semi-parametric models, without resorting to restrictive assumptions about the error term distribution and are able to calculate the VaR directly. Such a model can also jointly forecast the VaR and ES via the Asymmetric Laplace distribution as proposed by Taylor (2019).

From a theoretical point of view, we provide the conditions for the weak stationarity of the daily return process suggested. The finite sample properties are investigated through an extensive Monte Carlo exercise. The empirical application is carried out on the VaR and ES predictive capability for two energy commodities, the West Texas Intermediate (WTI) Crude OilFootnote 1 and the Reformulated Blendstock for Oxygenate Blending (RBOB) Gasoline futures, both observed daily. The period under investigation starts on January 2010 and ends on July 2022, covering both the Covid-19 pandemic and some consequences of the Russian aggression of Ukraine. The competing models consist of many common parametric, semi-parametric and non-parametric choices. Some parametric models like the GARCH-MIDAS use the same low-frequency variable employed in the proposed MF-QR-X specification. Given our empirical interest in evaluating risks related to energy commodities, a relevant choice for such a variable is the geopolitical risk (GPR) index proposed by Caldara and Iacoviello (2022), observed monthly.Footnote 2 The resulting VaR and ES predictions are evaluated in- and out-of-sample, according to the customary backtesting procedures: our out-of-sample period starts on January 2017 and ends on July 2022, and the VaR and ES forecasts are obtained using a rolling window that updates the parameter estimates every five, ten and twenty days. The results show that our MF-QR-X outperforms all the other competing models considered, proving the merits of resorting to a mixed-frequency source of information. The useful contribution of a low-frequency variable in a risk management perspective thus lies in its capability of capturing secular movements in the conditional distributions related to risk factors slowly shifting through time.

The rest of the paper is organized as follows. In Sect. 2 we introduce the notation and the basis for a dynamic model for the VaR and ES and we provide details of the conditional quantile regression approach. Section 3 presents our MF-QR-X model. Section 4 is devoted to the Monte Carlo experiment. Section 5 details the backtesting procedures. Section 6 illustrates the empirical application. Conclusions follow.

2 Approaches to VaR and ES estimation

For the purposes of this paper we will adopt a double time index, i, t, where \(t=1,\ldots ,T\) scans a low frequency time scale (i.e., monthly) and \(i=1,\ldots ,N_t\) identifies the day of the month, with a varying number of days \(N_t\) in the month t, and an overall number N of daily observations \(N=\sum _{t=1}^T N_t\). Let the daily returns \(r_{i,t}\) be, as customarily defined, the log-first differences of prices of an asset or a market index, and let the information available at time i, t be \(\mathcal {F}_{i,t}\). In what follows, we are interested in the conditional distribution of returns, with the assumption:

where \(z_{i,t} \overset{iid}{\sim }(0,1)\) have a cumulative distribution function denoted by \(F(\cdot )\). The zero conditional mean assumption in Eq. (1) is not restrictive; in fact, when explicitly modeled, such a conditional mean is very close to zero, consistently with the market efficiency hypothesis.

Based on this setup, the conditional (one-step-ahead) VaR for day i, t at \(\tau \) level (\(VaR_{i,t}(\tau )\)) for \(r_{i,t}\) is defined as

i.e., the \(\tau \)-th conditional quantile of the series \(r_{i,t}\), given \(\mathcal {F}_{i-1,t}\); consequently, we can write

where \({F^{-1}(\tau )}= \inf \left\{ z_{i,t}: F(z_{i,t}) \ge \tau \right\} \). For a given \(\tau \), the traditional volatility–quantile approach to estimate the \(VaR_{i,t}(\tau )\) is thus based on modeling \(\sigma _{i,t}\) from a dynamic model of either the conditional variance of returns (following Engle, 1982, Bollerslev, 1986) or as a conditional expectation of a realized measure (Andersen et al., 2006a) and retrieving the constant \(F^{-1}(\tau )\) either parametrically or nonparametrically. In either case, from an empirical point of view, it turns out that distribution tests mostly reject specific parametric choices, and that using the empirical distributions is prone to bias/variance problems and lack of stability through time.

Alternatively, we can estimate \(Q_{r_{i,t}}\left( \tau |\mathcal {F}_{i-1,t}\right) \) directly using a quantile regression approach (Koenker & Bassett, 1978; Engle & Manganelli, 2004) which has become a widely used technique in many theoretical problems and empirical applications. While classical regression aims at estimating the mean of a variable of interest conditioned to regressors, quantile regression provides a way to model the conditional quantiles of a response variable with respect to a set of covariates in order to have a more robust and complete picture of the entire conditional distribution. This approach is quite suitable to be used in all the situations where specific features, like skewness, fat-tails, outliers, truncation, censoring and heteroskedasticity are present. The basic idea behind the quantile regression approach, as shown by Koenker and Bassett (1978), is that the \(\tau \)-th quantile of a variable of interest (in our case \(r_{i,t}\)), conditional on the information set \(\mathcal {F}_{i-1,t}\), can be directly expressed as a linear combination of a \(q+1\) vector of variables \({x}_{i-1,t}\) (including a constant term), with parameters \({\Theta }_\tau \), that is:

An estimator for the \((q+1)\) vector of coefficients \({{\Theta }}_\tau \) is obtained minimizing a suitable loss function (also known as check function):

with \(\rho _\tau (u)=u\left( \tau - \mathbbm {1}\left( u<0\right) \right) \), where \(\mathbbm {1}\left( \cdot \right) \) denotes an indicator function. In our context, the advantage of such an approach is to avoid the need to specify the distribution of \(z_{i,t}\) in Eq. (1), either parametrically or nonparametrically.

Following the approach by Koenker and Zhao (1996), we assume a dependence of \(\sigma _{i,t}\) on past absolute values of returns:

with \(0<\beta _0<\infty \), \(\beta _1,\ldots ,\beta _q\ge 0\). Thus, substituting the generic term \({x}_{i-1,t}\) in Eq. (3) with the specific vector in Eq. (5), we have

Such an approach turns out to be convenient, since it allows for a direct comparability of the two setups to estimate the VaR(\(\tau \)) in Eq. (2):

which establishes the equivalence \(\Theta \, F^{-1}(\tau )=\Theta _\tau \) which will prove useful later in our Monte Carlo simulations. Moreover, as also pointed out by Koenker and Zhao (1996), what we estimate in the conditional quantile regression framework are the parameters in \(\Theta _{\tau }\), which are different from the parameters included in \(\Theta \) of the volatility–quantile context. While the parameters in \(\Theta \) are constrained to be non-negative, the parameters in \(\Theta _{\tau }\) may be negative depending on the value of \(\tau \). The volatility–quantile and conditional quantile regression options in Eq. (7) give rise to the so-called parametric and semi-parametric models for the VaR, respectively. Alternatively, the most prominent example of a non-parametric approach to derive the VaR is the Historical Simulation (HS - Hendricks, 1996). The HS model calculates this risk measure as the empirical quantile over a window of returns with length w, that is:

where \({\varvec{r}}_{i,t}^{w} = (r_{i-w,t},r_{i-w+1,t},\dots ,r_{i-1,t})\).

The linear representation in (5) can be further justified by noting that the term \(\sigma _{i,t}\) defining the volatility of returns can also be seen as the conditional expectation of absolute returns in the Multiplicative Error Model representation used by Engle and Gallo (2006):

The term \(\eta _{i,t}\) is an i.i.d. innovation with non-negative support and unit expectation, and the Eq. (9) can be used to derive an estimate of the VaR. The representation in (5) can also be seen as a simple and convenient nonlinear autoregressive model for \(|r_{i,t}|\) with multiplicative errors, which we hold as the maintained base specification to explore the merits of our proposal. Moreover, this lays the grounds for extending the approach, using other specifications for \(\sigma _{i,t}\) in Eq. (5) as functions of past volatility-related observable variables. For example, as an alternative, we can consider:

with \(rv_{i,t}\) the daily realized volatility.

A similar framework can be adopted to calculate the ES, following, again, the same parametric, non-parametric and semi-parametric approaches as before. The parametric models with Gaussian error distribution calculate the ES through:

where \(h_{i,t}\) is the conditional variance, \(\phi (\cdot )\) and \(\Phi ^{-1}(\tau )\) are the probability density function (PDF) and quantile function of the standard Gaussian distribution, respectively. The parametric models with Student’s t error distribution calculate the ES via:

where \(g_{\nu }\) and \(G_{\nu }^{-1}(\tau )\) are the PDF and quantile function of the Student’s t with \(\nu \) degrees of freedom, respectively.

The HS calculates the ES as follows:

where \({VaR}_{i,t}(\tau )\) is the VaR obtained through Eq. (8).

Following Taylor (2019), the quantile regression framework allows to jointly estimate the VaR and ES by maximizing the following Asymmetric Laplace density (ALD), that is:

where the ES in (13) is calculated as:

We now move to the introduction of our MIDAS extension to the model in (5) in a quantile regression framework, taking advantage of the well-known predictive power of low-frequency variables for the volatility observed at a daily frequency (e.g. Conrad & Kleen, 2020). We also add an “–X” term to the proposed specification. This additional high-frequency variable could be a lagged realized measure of volatility (see also Gerlach & Wang, 2020, within a CAViAR context), in order to add the informational content of a more accurate measure to the volatility dynamics, or a volatility index, like the VIX, or even accommodate asymmetric effects associated to negative returns.

3 The MF-QR-X model

3.1 Model specification and properties

In order to take advantage of the information coming from variable(s) observed at different frequency, we introduce a low-frequency component in model (5). This low-frequency term represents a one-sided filter of K lagged realizations of a given variable \(MV_t\) (any low-frequency variable), through a weighting function \(\delta (\omega )\), where \(\omega =(\omega _1, \omega _2)\). Our resulting Mixed-Frequency Quantile Regression (MF-QR) model becomes:

where the parameter \(\theta \) represents the impact of the weighted summation of the K past realizations of \(MV_t\), observed at each period t, that is, \(WS_{t-1}= \sum _{k=1}^K \delta _k(\omega )MV_{t-k}\). The importance of each lagged realization of \(MV_t\) depends on \(\delta (\omega )\), which can be assumed as a Beta or Exponential Almon lag function (see, for instance, Ghysels & Qian, 2019). Here we use the former function, that is:

Equation (17) is a rather flexible function able to accommodate various weighting schemes. Here we follow the literature and give a larger weight to the most recent observations, that is, we set \(\omega _1=1\) and \(\omega _2 \ge 1\). The resulting weights \(\delta _k(\omega )\) are at least zero and at most one, and their sum equals one, so that \( \sum _{k=1}^K \delta _k(\omega )MV_{t-k}\) is an affine combination of \(\left( MV_{t-1},\cdots ,MV_{t-K}\right) \).

In order to refine the VaR dynamics in our model, we include a predetermined variable \(X_{i,t}\), so that we can explore the empirical merits of such an extended specification, already present in the GARCH and MEM literature (Han & Kristensen, 2015; Engle & Gallo, 2006). Such a variable may be the realized volatility of the asset or a market volatility index (see the use of the VIX in Amendola et al., 2021, among others). The resulting eXtended Mixed-Frequency Quantile Regression model, labelled MF-QR-X, becomes:

In either Eqs. (16) or (18), the first component (including the constant) depends only on the low-frequency term (changing at every t, according to the term \(WS_{t-1}\)), while the second comprises variables changing daily (i.e., every i, t) and include lagged returns and the high-frequency term. In such a representation, the two components enter additively, in the spirit of the component model of Engle and Lee (1999):

which, for the MF-QR-X model, becomes

In the following theorem we show that, under mild conditions, the process in (20) is weakly stationary:

Theorem 1

Let \(MV_{t}\) and \(X_{i,t}\) be weakly stationary processes. Assume that \(\beta _0 >0\), \(\beta _1,\cdots ,\beta _q,\beta _x \ge 0\) and \(\theta \ge 0\). Let \(z^*\equiv \left( E|z_{i,t}|^{p} \right) ^{1/p}<\infty \), for \(p=\left\{ 1,2 \right\} \) and the polynomial

has all roots \(\lambda \) inside the unit circle. Then the process \(r_{i,t}\) in (20) is weakly stationary.

Proof: see “Appendix A”.

3.2 Inference on the MF-QR-X Model

In order to make inference on the MF-QR-X model, we need to solve Eq. (4) where

The estimation of the vector \({\Theta }_\tau \) is encumbered by the fact that the mixed-frequency term \(WS_{t-1}\) is not observable, as it depends on the unknown \(\omega _2\) parameter of the weighting function \(\delta _k(\omega )\), also to be estimated. To make estimation feasible, we resort to the expedient of profiling outFootnote 3 the parameter \(\omega _2\), through a two-step procedure: we first fix \(\omega _2\) at an initial arbitrary value, say \(\omega _2^{(b)}\), which turns the vector \(x_{i-1,t}\) into a completely observable counterpart, in short \({x}_{i-1,t}^{(b)}\). This gives a solution to the minimization of the loss function, which is dependent on \(\omega _2^{(b)}\), that is,

This procedure is repeated over a grid of B values for \(\omega _2\), so that we have \(\left\{ \widehat{\Theta }^{(b)}_\tau \right\} _{b=1}^B\), and the chosen overall estimator is \(\left( \hat{\omega }_2^*,\widehat{\Theta }^{(*)}_\tau \right) \), corresponding to the smallest overall value of the loss function.

Accordingly, the MF-QR-X estimator of the VaR is

Summarizing, the proposed MF-QR-X is thus a flexible VaR model not requiring any distributional assumptions for the error term and accommodating both low-frequency and high-frequency additional variables. In Sect. 6, we will elaborate on its capability to jointly estimate the VaR and ES, adopting the approach proposed by Taylor (2019).

To obtain reliable VaR and ES estimates in our model (25), an important issue is the choice of the optimal number of lags q for the daily absolute returns in Eq. (5). To that end, we select the lag order suggested by a sequential likelihood ratio (LR) test on individual lagged coefficients (see also Koenker & Machado, 1999). In particular, for a given \(\tau \), at each step j of the testing sequence over a range of J values, we compare the unrestricted model where the number of lags is set equal to j (labelled U, with an associated loss function \({V}_{U,\tau }^{(j)}\)), against a restricted model where the number of lags is \(j-1\) (labelled R, with an associated loss function \({V}_{R,\tau }^{(j-1)}\)). In this setup, the null hypothesis of interest is

i.e., the coefficient on the most remote lag is zero. The procedure starts contrasting a lag-1 model against a model with just a constant, then a lag-2 against a lag-1, and so on.

For a given \(\tau \), at each step j, we calculate the test statistic

where \(s(\tau )\) is the so-called sparsity function estimated accordingly to Siddiqui (1960) and Koenker and Zhao (1996). Under the adopted configuration, \(LR_\tau ^{(j)}\) is asymptotically distributed as a \(\chi _1^{2}\), so that we select q to be the last value of j in the sequence, for which we reject the null hypothesis.

4 Monte Carlo simulation

The finite sample properties of the sequential test and of the estimator of the MF-QR modelFootnote 4 can be investigated by means of a Monte Carlo experiment. In what follows we consider \(R=5000\) replications of the data generating process (DGP):

where we assume a \(\mathcal {N}(0,1)\) distribution for \(z_{i,t}\) and we set to zero the relevant initial values for \(r_{i,t}\). Moreover, the stationary variable \(MV_t\) entering the weighted sum \(WS_{t-1}\) is assumed to be drawn from an autoregressive AR(1) process \(MV_t = \varphi MV_{t-1} + e_t\), with \(\varphi =0.7\) and the error term \(e_t\) following a Skewed t-distribution (Hansen, 1994), with degrees of freedom \(df=7\) and skewing parameter \(sp=-6\). The frequency of \(MV_{t}\) is monthly and \(K=24\). The values of the parameters (collected in a vector \(\Theta \)) are detailed in the first column of the Tables 2, 3 and 4. For the simulation exercise we consider \(N=1250\), \(N=2500\) and \(N=5000\) observations, to mimic realistic daily samples. Having fixed \(K=24\) (that is, two years of monthly data), the number of daily observations should be large enough to allows for model estimation. In our case, we set this limit to 1250 daily observations. Finally, three different levels of the VaR coverage level \(\tau \) are chosen: 0.01, 0.05, and 0.10.

In the Monte Carlo experiment, we start by evaluating the features of the LR test for the lag selection in Eq. (27). To that end, we test sequentially \(H_0:\beta _j=0\) over J steps at a significance level \(\alpha \). Since the DGP is a fourth-order process, we expect to have a high rejection rate when the null involves a zero restriction on coefficients \(\beta _j, \ j=1,\ldots ,4\). In order to confirm the expected low rate of rejections, we extend the sequence of testing of further \(\beta _j\)’s, up to \(J=6\).

Looking at the Table 1, where we report the percentages of rejections for different VaR coverage levels \(\tau =0.01, 0.05, 0.1\) at the nominal significance level of \(\alpha =5\%\) across replications, we validate the good behavior of the test. Overall, the sequential test procedure satisfactorily identifies the number of lags to be included in the MF-QR model, with the performance improving with the number of observations, especially for \(H_0:\beta _4=0\); for the latter case, the percentage of rejections of the null increases considerably across coverage levels when \(N=5000\).

Turning to the small sample properties of our estimator, the evaluation is done in terms of the original coefficients in the DGP, collected in the vector \(\Theta = \left( \beta _0,\theta ,\beta _1,\ldots ,\beta _q\right) \), using the relationship with the quantile regression parameters \(\Theta _\tau \), i.e., \(\Theta =\Theta _\tau / F^{-1}(\tau )\).Footnote 5 In Tables 2, 3 and 4 we report the Monte Carlo averages of the parameters (\(\hat{\Theta }\)) across replications for three levels of \(\tau \), and the estimated Mean Squared Errors relative to the true values.

Overall, the proposed model presents good finite sample properties: independently of the \(\tau \) level chosen, for small sample sizes, the estimates appear, in general, slightly biased, although, reassuringly, the MSE of the estimates relative to the true values always decreases as the sample period increases.

5 Model evaluation

In order to evaluate the quality of the tail risk estimates we can resort to a set of tests suitable to the needs of risk management. Above all, the backtesting procedure is very popular in evaluating risk measure performance (see the reviews of Campbell, 2006, Nieto & Ruiz, 2016, among others). For our model we use the Actual over Expected (AE) exceedance ratio and five other tests in this class: the Unconditional Coverage (UC, Kupiec, 1995), the Conditional Coverage (CC, Christoffersen, 1998), and the Dynamic Quantile (DQ, Engle & Manganelli, 2004) tests for the VaR and the UC and CC tests for the ES (Acerbi & Szekely, 2014).

The AE exceedance ratio is the number of times that the VaR measures have been violated over the expected VaR violations. The closer to one the ratio, the better is the model to forecast VaRs. The UC test is a LR-based test, where the null hypothesis assesses whether the actual frequency of VaR violations is equal to the chosen \(\tau \) level. Formally, the null hypothesis of the UC test is

where \(\pi =\mathbb {E}[L_{i,t}(\tau )]\), with \(L_{i,t}(\tau )=\mathbbm {1}_{\left( r_{i,t}<VaR_{i,t}(\tau )\right) }\) representing the series of VaR violations. The UC test statistic is asymptotically \(\chi ^2\) distributed, with one degree of freedom, assuming independence of the \(L_{i,t}(\tau )\) series.

Another critical aspect to test for is the independence of VaR violations over time. The main idea is to discard models whose VaR forecasts are violated in subsequent days. Moreover, if the assumption of independence is not satisfied by the violations, the asymptotic results on the distribution of the UC test can fail to hold. The independence test used in this context is that of Christoffersen (1998), where the null hypothesis consists of independence of \(L_{i,t}(\tau )\), while the alternative hypothesis is that \(L_{i,t}(\tau )\) follows a first-order Markov Chain. Under \(H_0\), the LR-based test is asymptotically \(\chi ^2\) distributed, with one degree of freedom.

An overall assessment of the VaR measures is given by the CC test conducted on both null hypotheses of the UC and of the independence tests jointly (asymptotically the test statistic is \(\chi ^2\) distributed, with two degrees of freedom).

The DQ test also applies to the independence of the VaR violations jointly with the correctness of the number of violations as the CC test, but it was shown (Berkowitz et al., 2011) to have more power over it. In particular, the DQ test consists of running a linear regression where the dependent variable is the sequence of VaR violations and the covariates are the past violations and possibly any other explanatory variables. More in detail, let \(Hit_{i,t}(\tau )=L_{i,t}(\tau )-\tau \) be the so-called series of the hit variable. This series, under correct specification, should have zero mean, be serially uncorrelated and, moreover, uncorrelated with any other past observed variables. The DQ test can be carried via the following OLS regression:

where \(u_{i,t}\) is the error term and \(Z_{i,t}(\tau )\)’s include potentially relevant variables belonging to the available information set, like, for instance, previous Hits, lagged VaR or past returns. In matrix notation, the OLS regression in (28) becomes:

where the vector \({\varvec{Hit}}\) has dimension N (with N indicating the total number of observations), the matrix of predictors \({\varvec{Z}}\) has dimension \(N \times (K_1+K_2+1)\), the vector \({\varvec{\psi }}=\left( \beta _0,\beta _1,\ldots ,\beta _{K_1},\gamma _1,\ldots ,\gamma _{K_2} \right) \) has dimension \((K_1+K_2+1)\), and the error vector \({\varvec{u}}\) has dimension N. Under correct specification we test the null \({\varvec{\psi }}={\varvec{0}}\) with a test statistic:

where \(\hat{{\varvec{\psi }}}\) is the estimated vector of coefficients obtained from the OLS regression in (29).

For the expected shortfall ES, the UC test of Acerbi and Szekely (2014) is based on the following statistic:

If the distributional assumptions are correct, the expected value of \(Z_{UC}\) is zero, that is \(\mathbb {E}\left( Z_{UC}\right) =0\). The CC test of Acerbi and Szekely (2014) has the following statistic:

where \(NumFail=\sum _{i=1}^{N_t}\sum _{t=1}^{T}L_{i,t}(\tau )\). If the distributional assumptions are correct, the expected value of \(Z_{CC}\), given that there is at least one VaR violation, is zero, i.e. \(\mathbb {E}\left( Z_{CC}|NumFail>0\right) =0\). The UC and CC tests are one-sided and reject the null when the model underestimates the risk (significantly negative test statistic).

6 Empirical analysis

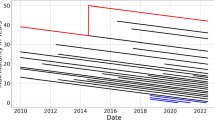

In this section, we apply the MF-QR-X model to estimateFootnote 6 VaR and ES for the daily log-returns of two energy commodities: the WTI Crude Oil and the RBOB Gasoline futures.Footnote 7 The low-frequency variable is the monthly GPR index, which enters our mixed-frequency models as the first difference divided by one lagged realization. The “–X” variable is the VIX index.Footnote 8 The period of investigation covers almost 13 years, from January 2010 to July 2022 on a daily basis, split between in- (from January 2010 to December 2016) and out-of-sample periods (from January 2017 to July 2022). The data are summarized in Table 5, and plotted in Fig. 1.

We compare the estimated VaR and ES with several well-known competitive specifications belonging to the class of parametric (GARCH, GJR (Glosten et al., 1993), and GARCH-MIDAS, with Gaussian and Student’s t error distributions), non-parametric (HS) and semi-parametric models (the Symmetric Absolute Value (SAV), Asymmetric Slope (AS) and Indirect GARCH (IG) specifications of the CAViaR (Engle & Manganelli, 2004)). As per the mixed-frequency specifications, the same low-frequency variable (GPR index) is inserted as the low-frequency variable in the GARCH-MIDAS specifications as well as our proposed MF-QR and MF-QR-X models. All the functional forms of these models are reported in Table 6.

6.1 In-sample analysis

Tables 7 reports the p-values of the LR test (Eq. (27)) using \(\tau =0.05\), on the period from 2010 to 2016, for the two commodities under investigation, which suggests the inclusion of up to six, respectively, five lagged daily log-returns in the models for the Crude Oil and Gasoline futures.

As regards the number of lagged realizations entering the low-frequency component, we choose \(K=36\), for all mixed frequency models. The in-sample estimated parameters for the parametric (with Quasi Maximum Likelihood standard errors, cf. Bollerslev & Wooldridge, 1992) and semi-parametric models (with bootstrap-based standard errors, as done also by Xu et al., 2021) are reported in Tables 8 (Crude Oil) and 9 (Gasoline). The algorithm used to obtain the bootstrap standard errors is sketched in “Appendix B”. Note that for the proposed MF-QR-X model, the low-frequency parameters as well as the parameters associated to the “–X” variable are generally significant.

The in-sample backtesting evaluations are reported in Tables 10 (Crude Oil) and 11 (Gasoline). All models pass the chosen backtesting procedures (p-values in columns 3–7), with a strong preference for the longer windows in the HS non-parametric model.

6.2 Out-of-sample evaluation

The empirical analysis is completed by the out-of-sample analysis. In line with Lazar and Xue (2020), the one-step-ahead VaR and ES forecasts of the parametric and semi-parametric models are obtained with parameters estimated every five days, using a rolling window of size 1500 observations. For our main MF-QR-X model, the VaR and ES forecasts are graphically reported in Fig. 2.

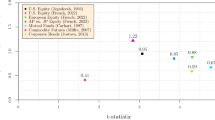

The results of the out-of-sample evaluations are synthesized in Tables 12 (Crude Oil) and 13 (Gasoline), respectively. While the AE ratios closest to one are seen for model GM-N for Crude Oil in Table 12, and for model QR for Gasoline (Table 13), a more formal statistical evaluation of the VaR and ES performances by different models is given by backtesting procedures. Contrary to the in-sample period where almost all the models passed the backtesting procedures, going out-of-sample, the proposed MF-QR-X is the only one that fails to reject the null for all the VaR and ES tests for both the Crude Oil and the Gasoline log-returns (while the QR model passes all tests only for the latter), with more scattered and less systematic evidence for the other models, but with a consistent failure of all the tests by GM-t, short window HS and SAV, AS and IG. In “Appendix C”, we also report the results of the backtesting evaluations using a slower frequency (ten/twenty days) of parameter updates. The results are quite robust to different frequency updating schemes, as it can be seen in Tables from 14, 15, 16 and 17.

7 Concluding remarks

This paper suggested the inclusion of mixed-frequency (MF) components in a quantile regression (QR) approach to VaR and ES estimations, within a dynamic model of volatility with the original introduction of a low- and a high-frequency (“–X”) components: the outcome was labelled MF-QR-X model. Given its nature of quantile regression, no explicit distribution for the returns is necessary and robustness to outliers in the data is guaranteed.

Starting from the assessment of the weak stationarity conditions of our semi-parametric MF-QR-X process, we suggested an estimation procedure the performance of which was investigated through an extensive Monte Carlo exercise in finite samples. Overall, we have satisfactory properties of the estimates and the resulting VaR forecasts are robust to some misspecification in the weighting parameter entering the mixed-frequency component.

Energy commodities—Crude Oil and Gasoline futures—take the center stage in the illustration of the empirical performance, both in- and out-of-sample, of the proposed MF-QR-X model, contrasting it against several popular parametric, non-parametric and semi-parametric alternatives. The results are encouraging since our model is the only model consistently passing all the VaR and ES backtesting procedures out-of-sample for the Crude Oil log-returns (together with the QR model for the Gasoline log-returns). The empirical results support the use of MF-QR-X models to exploit the information content of mixed-frequency data in a risk management framework.

Further research may focus on the multivariate extension of the tail risk forecasts, as done by Torres et al. (2015), Di Bernardino et al. (2015), Bernardi et al. (2017), and Petrella and Raponi (2019), among others. Another interesting point would be the investigation of the performance of the MF-QR-X with an asymmetric term, both for what concerns the daily returns and the low-frequency component, as done by Amendola et al. (2019), for instance.

Notes

The VaR and ES of this commodity have been recently investigated by Kuang (2022)

The monthly GPR index we use is built through an automated text-search on the articles of ten newspapers in relationship to eight risk categories. Such an index has been extensively used in many recent contributions concerning oil volatility (see, for instance, Liu et al., 2019, Mei et al., 2020, Qin et al., 2020, among others).

A profiling out strategy was used by Engle et al. (2013) for the parameter K in the GARCH-MIDAS model.

For simplicity, we have focused here on the case without the “–X” component.

As per the parameter \(\omega _2\), the grid search is done over 100 values and the applied rescaling factor is equal to 1, as its value is unaffected by \(\tau \).

In terms of computational efforts, it is worth noting that the proposed MF-QR-X model is not excessively demanding. For instance, VaR and ES (via maximization of the ALD) are obtained in 4 s, considering five years of data, with the –X variable, on the following PC: HP EliteDesk 800 G8 Desktop, Intel i7-11700, 32 GB of RAM.

Both the WTI and RBOB futures have been downloaded from the Yahoo Finance site (with, respectively, ticks “CL=F” and “RBOB=F”).

Taken from the Yahoo finance site and transformed by dividing it by \(\sqrt{252} \cdot 100\), in order to express it as daily volatility.

References

Acerbi, C., & Szekely, B. (2014). Back-testing expected shortfall. Risk, 27(11), 76–81.

Acerbi, C., & Tasche, D. (2002). Expected shortfall: A natural coherent alternative to value at risk. Economic notes, 31(2), 379–388.

Acerbi, C., & Tasche, D. (2002). On the coherence of expected shortfall. Journal of Banking & Finance, 26(7), 1487–1503.

Amendola, A., Candila, V., & Gallo, G. M. (2019). On the asymmetric impact of macro-variables on volatility. Economic Modelling, 76, 135–152.

Amendola, A., Candila, V., & Gallo, G. M. (2021). Choosing the frequency of volatility components within the Double Asymmetric GARCH-MIDAS-X model. Econometrics and Statistics, 20, 12–28.

Andersen, T. G., Bollerslev, T., Christoffersen, P. F., & Diebold, F. X. (2006). Practical volatility and correlation modeling for financial market risk management. In M. Carey & R. Stultz (Eds.), Risks of Financial Institutions. University of Chicago Press for NBER.

Andersen, T. G., Bollerslev, T., Christoffersen, P. F., & Diebold, F. X. (2006). Volatility and correlation forecasting. In G. Elliott, C. W. J. Granger, & A. Timmermann (Eds.), Handbook of economic forecasting. North Holland.

Artzner, P., Delbaen, F., Eber, J.-M., & Heath, D. (1999). Coherent measures of risk. Mathematical finance, 9(3), 203–228.

Bayer, S. (2018). Combining Value-at-Risk forecasts using penalized quantile regressions. Econometrics and Statistics, 8, 56–77.

Berkowitz, J., Christoffersen, P., & Pelletier, D. (2011). Evaluating value-at-risk models with desk-level data. Management Science, 57(12), 2213–2227.

Bernardi, M., Maruotti, A., & Petrella, L. (2017). Multiple risk measures for multivariate dynamic heavy-tailed models. Journal of Empirical Finance, 43, 1–32.

Bollerslev, T. (1986). Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics, 31(3), 307–327.

Bollerslev, T., & Wooldridge, J. M. (1992). Quasi-maximum likelihood estimation and inference in dynamic models with time-varying covariances. Econometric Reviews, 11(2), 143–172.

Caldara, D., & Iacoviello, M. (2022). Measuring geopolitical risk. American Economic Review, 112(4), 1194–1225.

Campbell, S. D. (2006). A review of backtesting and backtesting procedures. Journal of Risk, 9(2), 1.

Carnero, M. A., Peña, D., & Ruiz, E. (2012). Estimating GARCH volatility in the presence of outliers. Economics Letters, 114(1), 86–90.

Chen, C. W. S., Gerlach, R., Hwang, B. B. K., & McAleer, M. (2012). Forecasting value-at-risk using nonlinear regression quantiles and the intra-day range. International Journal of Forecasting, 28(3), 557–574.

Christoffersen, P. F. (1998). Evaluating interval forecasts. International Economic Review, 39(4), 841–862.

Christoffersen, P., & Gonçalves, S. (2005). Estimation risk in financial risk management. Journal of Risk, 7(3), 1.

Conrad, C., & Kleen, O. (2020). Two are better than one: Volatility forecasting using multiplicative component GARCH-MIDAS models. Journal of Applied Econometrics, 35(1), 19–45.

Conrad, C., & Loch, K. (2015). Anticipating long-term stock market volatility. Journal of Applied Econometrics, 30(7), 1090–1114.

Cont, R. (2001). Empirical properties of asset returns: Stylized facts and statistical issues. Quantitative Finance, 1(2), 223–236.

Di Bernardino, E., Fernández-Ponce, J., Palacios-Rodríguez, F., & Rodríguez-Griñolo, M. (2015). On multivariate extensions of the conditional value-at-risk measure. Insurance: Mathematics and Economics, 61, 1–16.

El Ghourabi, M., Francq, C., & Telmoudi, F. (2016). Consistent estimation of the value at risk when the error distribution of the volatility model is misspecified. Journal of Time Series Analysis, 37(1), 46–76.

Engle, R. F. (1982). Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica, 50(4), 987–1007.

Engle, R. F., & Gallo, G. M. (2006). A multiple indicators model for volatility using intra-daily data. Journal of Econometrics, 131, 3–27.

Engle, R. F., Ghysels, E., & Sohn, B. (2013). Stock market volatility and macroeconomic fundamentals. Review of Economics and Statistics, 95(3), 776–797.

Engle, R. F., & Lee, G. J. (1999). A long-run and short-run component model of stock return volatility. In R. F. Engle & H. White (Eds.), Cointegration, causality, and forecasting: A festschrift in honor of Clive W. J. Granger (pp. 475–497). Oxford University Press.

Engle, R. F., & Manganelli, S. (2004). CAViaR: Conditional autoregressive value at risk by regression quantiles. Journal of Business & Economic Statistics, 22(4), 367–381.

Engle, R. F., & Patton, A. J. (2001). What good is a volatility model? Quantitative Finance, 1(2), 237–245.

Fissler, T., & Ziegel, J. F. (2016). Higher order elicitability and Osband’s principle. The Annals of Statistics, 44(4), 1680–1707.

Gerlach, R., & Wang, C. (2020). Semi-parametric dynamic asymmetric Laplace models for tail risk forecasting, incorporating realized measures. International Journal of Forecasting, 36(2), 489–506.

Ghysels, E., & Qian, H. (2019). Estimating MIDAS regressions via OLS with polynomial parameter profiling. Econometrics and Statistics, 9, 1–16.

Ghysels, E., Sinko, A., & Valkanov, R. (2007). MIDAS regressions: Further results and new directions. Econometric Reviews, 26(1), 53–90.

Glosten, L. R., Jagannanthan, R., & Runkle, D. E. (1993). On the relation between the expected value and the volatility of the nominal excess return on stocks. The Journal of Finance, 48(5), 1779–1801.

Han, H. & Kristensen, D. (2015). Semiparametric multiplicative GARCH-X model: Adopting economic variables to explain volatility. Technical report, Working Paper.

Hansen, B. E. (1994). Autoregressive conditional density estimation. International Economic Review, 35, 705–730.

Hendricks, D. (1996). Evaluation of value-at-risk models using historical data. Economic policy review, 2(1), 39–69.

Jorion, P. (1997). Value at Risk. Irwin.

Kinateder, H. (2016). Basel II versus III: A comparative assessment of minimum capital requirements for internal model approaches. Journal of Risk, 18(3), 25–45.

Koenker, R., & Bassett, G. (1978). Regression quantiles. Econometrica, 46(1), 33–50.

Koenker, R., & Machado, J. A. (1999). Goodness of fit and related inference processes for quantile regression. Journal of the American Statistical Association, 94(448), 1296–1310.

Koenker, R., & Zhao, Q. (1996). Conditional quantile estimation and inference for ARCH models. Econometric Theory, 12(5), 793–813.

Kuang, W. (2022). Oil tail-risk forecasts: From financial crisis to COVID-19. Risk Management, 24, 420–460.

Kupiec, P. H. (1995). Techniques for verifying the accuracy of risk measurement models. The Journal of Derivatives, 3(2), 73–84.

Lazar, E., & Xue, X. (2020). Forecasting risk measures using intraday data in a generalized autoregressive score framework. International Journal of Forecasting, 36(3), 1057–1072.

Lee, S., & Noh, J. (2013). Quantile regression estimator for GARCH models. Scandinavian Journal of Statistics, 40(1), 2–20.

Liu, J., Ma, F., Tang, Y., & Zhang, Y. (2019). Geopolitical risk and oil volatility: A new insight. Energy Economics, 84, 104548.

Manganelli, S. & Engle, R. F. (2001). Value at risk models in finance. Technical report, ECB working paper.

Mei, D., Ma, F., Liao, Y., & Wang, L. (2020). Geopolitical risk uncertainty and oil future volatility: Evidence from MIDAS models. Energy Economics, 86, 104624.

Merlo, L., Petrella, L., & Raponi, V. (2021). Forecasting VaR and ES using a joint quantile regression and its implications in portfolio allocation. Journal of Banking & Finance, 133, 106248.

Mo, D., Gupta, R., Li, B., & Singh, T. (2018). The macroeconomic determinants of commodity futures volatility: Evidence from Chinese and Indian markets. Economic Modelling, 70, 543–560.

Nieto, M. R., & Ruiz, E. (2016). Frontiers in VaR forecasting and backtesting. International Journal of Forecasting, 32(2), 475–501.

Noh, J., & Lee, S. (2016). Quantile regression for location-scale time series models with conditional heteroscedasticity. Scandinavian Journal of Statistics, 43(3), 700–720.

Pan, Z., Wang, Y., & Liu, L. (2021). Macroeconomic uncertainty and expected shortfall (and value at risk): A new dynamic semiparametric model. Quantitative Finance, 21(11), 1791–1805.

Pan, Z., Wang, Y., Wu, C., & Yin, L. (2017). Oil price volatility and macroeconomic fundamentals: A regime switching GARCH-MIDAS model. Journal of Empirical Finance, 43, 130–142.

Petrella, L., & Raponi, V. (2019). Joint estimation of conditional quantiles in multivariate linear regression models with an application to financial distress. Journal of Multivariate Analysis, 173, 70–84.

Qin, Y., Hong, K., Chen, J., & Zhang, Z. (2020). Asymmetric effects of geopolitical risks on energy returns and volatility under different market conditions. Energy Economics, 90, 104851.

Rockafellar, R. T., & Uryasev, S. (2002). Conditional value-at-risk for general loss distributions. Journal of Banking & Finance, 26(7), 1443–1471.

Sarykalin, S., Serraino, G. & Uryasev, S. (2008). Value-at-risk versus conditional value-at-risk in risk management and optimization. Chapter 13, pp. 270–294. Informs.

Siddiqui, M. (1960). Distribution of quantiles in samples from a bivariate population. Journal of Research of the National Bureau of Standards, 64(B)(3), 145–150.

Taylor, S. J. (1986). Modeling financial time series. New York: Wiley.

Taylor, J. W. (2019). Forecasting value at risk and expected shortfall using a semiparametric approach based on the asymmetric Laplace distribution. Journal of Business & Economic Statistics, 37(1), 121–133.

Torres, R., Lillo, R. E., & Laniado, H. (2015). A directional multivariate value at risk. Insurance: Mathematics and Economics, 65, 111–123.

Xiao, Z., & Koenker, R. (2009). Conditional quantile estimation for generalized autoregressive conditional heteroscedasticity models. Journal of the American Statistical Association, 104(488), 1696–1712.

Xu, Q., Chen, L., Jiang, C., & Liu, Y. (2022). Forecasting expected shortfall and value at risk with a joint elicitable mixed data sampling model. Journal of Forecasting, 41(3), 407–421.

Xu, Y., Wang, X., & Liu, H. (2021). Quantile-based GARCH-MIDAS: Estimating value-at-risk using mixed-frequency information. Finance Research Letters, 43, 101965.

Zheng, Y., Zhu, Q., Li, G., & Xiao, Z. (2018). Hybrid quantile regression estimation for time series models with conditional heteroscedasticity. Journal of the Royal Statistical Society Series B, 80(5), 975–993.

Zhu, Q., Li, G., & Xiao, Z. (2021). Quantile estimation of regression models with GARCH-X errors. Statistica Sinica, 31, 1261–1284.

Žikeš, F., & Baruník, J. (2016). Semi-parametric conditional quantile models for financial returns and realized volatility. Journal of Financial Econometrics, 14(1), 185–226.

Acknowledgements

We thank participants in the 16th International Conference on Computational and Financial Econometrics (CFE 2022), held in London, UK. We are grateful to two anonymous referees for constructive comments that helped us in improving our contribution. Financial support from the Italian Ministry of Education, University and Research (MIUR) under Grant PRIN2017: Econometric Methods and Models for the Analysis of Big Data: Improving Forecasting Ability by Understanding and Modelling Complexity [Grant number 201742PW2W_002] is gratefully acknowledged.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

1.1 Proof of Theorem 1

Proof

Let \(\Vert x \Vert _{p}= \left( E|x|^p \right) ^{1/p}\), and recall that \(MV_{t}\) and \(X_{i,t}\) are assumed to be weakly stationary processes. Let s be the compact time notation in lieu of i, t, that is,

Moreover, let \(\sigma _{s}=(\beta _0 + \beta _1 |r_{s-1}| + \cdots + \beta _q |r_{s-q}| + \theta |WS_{s-1}| + \beta _X |X_{s-1}|)\). Note that \(WS_{t}\), obtained as an affine combination of \(\left( MV_{t-1},\cdots ,MV_{t-K}\right) \), is weakly stationary.

From the model in (20), we can write:

given the independence between \(\sigma _{s}\) and \(z_{s}\). For \(p=1\), the right hand side (RHS) of (A.1) is zero, because \(z_{s} \overset{i.i.d.}{\sim }\ (0,1)\).

Let us now focus on \(p=2\); let us replace the second term of the RHS of (A.1), having assumed that \(\Vert z_{s}\Vert _{2} = z^* < \infty \):

Let us now translate this expression in matrix notation. Therefore, let us collect terms in a vector indexed by s, that is,

and let the \((q+2)\times (q+2)\) dimensional companion matrix A, the vectors b and c

where we have made us of the fact that, because of the stationarity of \(WS_{s}\) and \(X_{s}\), the vector c does not depend on time. Thus, we have:

Substituting recursively \(\xi _{s-1}\) backwards, and letting \(I_{q+2}\) be the identity matrix of size \(\left( q+2\right) \),

Recall the characteristic polynomial of A is \(\phi (\lambda )\), defined by Eq. (21), namely,

which has all eigenvalues \(\lambda \) lie inside the unit circle. When \(m \rightarrow \infty \), for the eigen-decomposition theorem, this implies that

and that

Putting terms together, therefore, as \(m\rightarrow \infty \) we can say that

that is the RHS converges to a finite expression not depending on time, establishing the result. \(\square \)

Appendix B

In what follows, we illustrate the bootstrap procedure used to calculate the standard errors. For simplicity, we focus on the QR model with just only one lag, being the procedure easily extensible to the other semi-parametric models. Let \(\widehat{\Theta }_\tau =\left( \hat{\beta }_{0,\tau },\hat{\beta }_{1,\tau }\right) \) be the estimated vector of parameters for the QR model. The resulting VaR is then \(\widehat{Q}_{r_{i,t}}\left( \tau \right) \). Letting \(r_{i,t}^{(boot)}\) be the bootstrap returns, we assume that \(r_{1,1}^{(boot)}=r_{1,1}\). The step-by-step procedure to obtain the bootstrap standard errors is as follows:

-

1.

Obtain the standardized residuals as \(\hat{z}_{i,t}=r_{i,t}/|\widehat{Q}_{r_{i,t}}\left( \tau \right) |\), for all i and t.

-

2.

Sample with replacement from \(\hat{z}_{i,t}\), obtaining the bootstrap residuals \(\hat{z}_{i,t}^{(boot)}\).

-

3.

Obtain the bootstrap series of VaR as \(\widehat{Q}_{r_{i,t}^{(boot)}}=\hat{\beta }_{0,\tau }+\hat{\beta }_{1,\tau }r_{i-1,t}^{(boot)}\).

-

4.

Obtain the bootstrap series of returns as \(r_{i,t}^{(boot)}=|\widehat{Q}_{r_{i,t}^{(boot)}}|\hat{z}_{i,t}^{(boot)}\).

-

5.

Repeat 2–4 for all i and t to get one complete bootstrap series of \(r_{i,t}^{(boot)}\).

-

6.

Estimate the VaR using \(r_{i,t}^{(boot)}\), obtaining \(\hat{\beta }_{0,\tau }^{(boot)}\) and \(\hat{\beta }_{1,\tau }^{(boot)}\).

-

7.

Repeat steps 2-6 BOOT number of times, obtaining the bootstrap series \(\left\{ \hat{\beta }_{0,\tau }\right\} _{boot=1}^{BOOT}\) and \(\left\{ \hat{\beta }_{1,\tau }\right\} _{boot=1}^{BOOT}\).

The bootstrap standard errors for \(\hat{\beta }_{0,\tau }\) and \(\hat{\beta }_{1,\tau }\) are then obtained as sample standard deviations of the series \(\left\{ \hat{\beta }_{0,\tau }\right\} _{boot=1}^{BOOT}\) and \(\left\{ \hat{\beta }_{1,\tau }\right\} _{boot=1}^{BOOT}\), respectively. It is worth noting that the previous procedure can be naturally extended to the models dedicated to the joint estimation of VaR and ES measures.

Appendix C

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Candila, V., Gallo, G.M. & Petrella, L. Mixed-frequency quantile regressions to forecast value-at-risk and expected shortfall. Ann Oper Res (2023). https://doi.org/10.1007/s10479-023-05370-x

Accepted:

Published:

DOI: https://doi.org/10.1007/s10479-023-05370-x