Abstract

In this paper, we propose a new hybrid model based on a deep learning network to predict the prices of financial assets. The study addresses two key limitations in existing research: (1) the lack of standardized datasets, time scales, and evaluation metrics, and (2) the focus on prediction return. The proposed model employs a two-stage preprocessing approach utilizing Principal Component Analysis (PCA) for dimensionality reduction and de-noising, followed by Independent Component Analysis (ICA) for feature extraction. A Long Short-Term Memory (LSTM) network with five layers is fed with this preprocessed data to predict the price of the next day using a 5 day time horizon. To ensure comparability with existing literature, experiments employ an 18 year dataset of the Standard & Poor's 500 (S&P500) index and include over 40 technical indicators. Performance evaluation encompasses six metrics, highlighting the model's superiority in accuracy and return rates. Comparative analyses demonstrate the superiority of the proposed PCA-ICA-LSTM model over single-stage statistical methods and other deep learning architectures, achieving notable improvements in evaluation metrics. Evaluation against previous studies using similar datasets corroborates the model's superior performance. Moreover, extensions to the study include adjustments to dataset parameters to account for the COVID-19 pandemic, resulting in improved return rates surpassing traditional trading strategies. PCA-ICA-LSTM achieves a 220% higher return compared to the “hold and wait” strategy in the extended S&P500 dataset, along with a 260% higher return than its closest competitor in the comparison. Furthermore, it outperformed other models in additional case studies.

Graphical Abstract

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Data that changes over time is called time-series data. Time series data analysis is critical in the time-dependent application domains, such as health (Chialvo, 1987; Goldberger et al., 1988), finance (Hsieh, 1991; Peters, 1991), meteorology (Celik et al., 2014; Fraedrich, 1986; Nicolis & Nicolis, 1984; Özdoğan-Sarıkoç et al., 2023; Shekhar et al., 2008), industry (Huang et al., 2019; Mehdiyev et al., 2017), etc. Financial markets are one of the most challenging application areas to model and predict (Teixeira & De Oliveira, 2010). The complex nature of this field has led people to develop mathematical and statistical models that make the underlying structure clear, thereby making trade more efficient (Fayyad et al., 1996; Gandhmal & Kumar, 2019). Therefore, time series analysis and forecasting methods are frequently used in finance and economics (Abu-Mostafa & Atiya, 1996; Atsalakis & Valavanis, 2009; Kauffman et al., 2015; Kim, 2003; Pai & Lin, 2005; Rounaghi & Zadeh, 2016; Tay & Cao, 2001; Zhang, 2003). Recently, there has been remarkable progress in the development of deep learning models, which is a sub-branch of machine learning and has been shown to achieve successful results in various studies (Abu-Mostafa & Atiya, 1996; Nosratabadi et al., 2020; Ozbayoglu et al., 2020; Sezer et al., 2020).

The primary purpose of analyzing financial data is to predict stock market characteristics' effects and future directions for decision mechanisms based on market behavior (Cavalcante et al., 2016). In particular, financial time series forecasting constitutes the core of future decisions and transactions in the financial asset market. These forecasts help investors’ decisions and reduce potential risks. Investors always try to determine and predict the probable value of the stock or asset before deciding on their transactions (Gandhmal & Kumar, 2019; Teixeira & De Oliveira, 2010). Therefore, when the studies on financial data are analyzed, the stock market stands out as the most researched area (Nosratabadi et al., 2020). The stock market's primary target in the stock market research area is stock price or trend prediction (Cavalcante et al., 2016).

However, the large, complex, and variable structure of financial data makes it challenging to analyze and predict such data. In the reviews on financial markets, studies are generally classified according to the model used and the types of inputs (Bustos & Pomares-Quimbaya, 2020). The prediction models that can be used are divided into traditional prediction models and machine learning prediction models (Cavalcante et al., 2016; Liu et al., 2021; Nosratabadi et al., 2020). Some of the known traditional prediction models are autoregressive moving average (ARMA) and the autoregressive conditional heteroskedasticity (ARCH), while we can list some machine learning prediction models as support vector machine (SVM), naive Bayes (NB), artificial neural network (ANN). However, some authors state that traditional forecasting models are not as efficient as models based on artificial intelligence because they treat financial time series as linear systems (Atsalakis & Valavanis, 2009; Cavalcante et al., 2016; Li & Bastos, 2020). Studies in the literature are classified not only based on the mentioned model types but also according to input types. Figure 1 presents the input types to be used in the prediction models. The input type chosen for the forecasting model affects the forecasting model’s performance. For this reason, a dataset previously used in the literature was preferred in our study (Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). At the same time, the authors emphasize that the dataset used is balanced (with a structure that includes both bullish (bull market) and bearish (bear market) movements) (Thakkar & Chaudhari, 2021). The data set used is of a structured input-type, consisting of market informations and technical indicators (Bustos & Pomares-Quimbaya, 2020). Our purpose in choosing a dataset with this input type is that the technical analysis approach is widely preferred in the literature (Atsalakis & Valavanis, 2009; Berradi & Lazaar, 2019; Gao & Chai, 2018; Gao et al., 2021; Kakade et al., 2023; Kwon & Moon, 2007; Li & Bastos, 2020; Sethia & Raut, 2019; Teixeira & De Oliveira, 2010; Thakkar & Chaudhari, 2021; Wei & Ouyang, 2024; Wen et al., 2020; Zheng & He, 2021). For this reason, machine learning techniques and deep learning models are very much recommended in the literature to analyze such data. In addition, in recent years, hybrid models have been proposed by combining both machine learning algorithms and deep learning models with different approaches instead of single models that cannot produce good results for every situation to improve the prediction performance of machine learning models (Nosratabadi et al., 2020; Ozbayoglu et al., 2020; Thakkar & Chaudhari, 2021).

Some of our motivations for preparing our research were as follows: (1) During our literature review, we observed that most deep learning-based asset price prediction models use different datasets and performance evaluation metrics. Although this situation limits the effectiveness or measurability of new technology prediction models, it can be considered a gap in the literature (Atsalakis & Valavanis, 2009; Bustos & Pomares-Quimbaya, 2020; Thakkar & Chaudhari, 2021). (2) Additionally, we noticed that most researchers in the existing literature focus on accuracy and low error rates in stock price prediction. However, the sole purpose of financial market participants is to reduce potential risks and achieve high returns in market volatility (Deng et al., 2023, 2024; Sethia & Raut, 2019; Zhang et al., 2020). Our research not only focuses on prediction accuracy but also calculates the return rate by establishing a simple trading strategy. Thus, the performance of the proposed method is presented to researchers as a simple decision support system. (3) Lastly, it is well-known that prediction models encounter challenges of dimensionality and overfitting due to large datasets and noisy data. In the literature, research aiming to address these structural issues and enhance the performance of prediction models is increasingly prevalent (Berradi & Lazaar, 2019; Chen et al., 2022; Guo et al., 2022; He & Dai, 2022; Huang et al., 2022; Jianwei et al., 2019; Kakade et al., 2023; Li et al., 2022; Ma et al., 2019; Sethia & Raut, 2019; Srijiranon et al., 2022; Wang et al., 2023; Wei & Ouyang, 2024; Zheng & He, 2021). To tackle these problems and improve the performance of prediction models, we aim to leverage the advantages of hybrid models to combine different methods and models. For all these reasons, we chose a dataset and model used in the literature in our study in addition to making efforts to keep the evaluation criteria of our study as broad as possible. Thus, we aimed to make our study comparable with other studies (Sethia & Raut, 2019; Thakkar & Chaudhari, 2021).

The novelty and contributions of the research to the literature are as follows: (1) We propose a hybrid PCA-ICA-LSTM model for predicting asset prices. While studies exist in the literature that combine PCA or ICA with different methods, to our knowledge, ours is the first study to combine these two statistical methods for a two-stage preprocessing and integrate them with a recurrent deep learning network to create a hybrid model. The proposed model introduces a new framework that combines PCA and ICA statistical methods to provide input to an LSTM deep learning network used for prediction. We combine PCA for dimensionality reduction and noise removal, and ICA for feature extraction from processed data, leveraging the advantages of both methods to significantly enhance prediction performance. We support this claim with various experiments. (2) Many studies in the literature use different datasets, time scales, and evaluation metrics, making it challenging to compare studies fairly. Therefore, we divide our experiments into two stages initially. In the first stage, we use a well-established dataset and time scale from the literature (Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). Additionally, we prefer commonly used evaluation metrics for a fair comparison. Thus, our goal is to achieve a directly comparable study in the literature concerning dataset, time scale, and evaluation metrics. (3) The second phase of experiments expands the utilized dataset to include the turbulent period experienced in financial markets during the COVID-19 pandemic, subsequently re-evaluating the model's effectiveness. Furthermore, two additional case studies are incorporated into our work to establish a new benchmark for validating the model's efficacy. While conducting these experiments, we emphasize that the primary objective of predicting a financial asset is to achieve high returns and mitigate risks. Therefore, we go beyond focusing solely on high prediction accuracy and low error rates by incorporating the return rate metric into our study, aiming to highlight this gap in the existing literature. (4) Our experiments yielded promising results when compared to existing models that do not utilize dimensionality reduction for predicting asset prices. Our findings suggest that our model has the potential to offer researchers higher accuracy and lower error rates when working with high-dimensional datasets, while also achieving competitive return rates when compared to state-of-the-art approaches.

The outline of this article is organized as follows: Sect. 2 reviews studies on various methods that use technical indicators and dimensionality reduction techniques to analyze financial data. Section 3 presents the Study Methodology. This section presents information about the operation of the study, baseline settings, the dataset used, the cross-validation method, machine learning models used in the study, statistical methods, and the latest proposed hybrid PCA-ICA-LSTM model. The experimental results of the proposed PCA-ICA-LSTM approach in Sect. 4 are analyzed in four parts. First, the proposed model is compared with models from the same family using single-stage statistical methods. The second part presents comparisons with state-of-the-art models in the literature. The third part compares the results of the proposed model with similar studies in the literature. Finally, in the last part, we repeat our experiments by expanding our dataset from 2000–2017 to 2000–2024 to include the COVID-19 pandemic. We also include two additional case studies in our research as a benchmark. The conclusions are summarized in Sect. 5.

2 Research Review

Studies on financial markets have been analyzed according to various classification methods. We believe that the two most important of these classifications are the classification according to the type of dataset input and the classification according to the type of forecasting model. We form our research analysis on this basis and try to justify our choices based on these two classifications.

2.1 Dataset Input Types

In their study, Bustos et al. grouped the input types under two main headings: structured and unstructured data (Bustos & Pomares-Quimbaya, 2020). The authors classified structured inputs as (1) market information, (2) technical indicators, and (3) economic indicators. Unstructured inputs are categorized as (1) news, (2) social networks, and (3) blogs. The classification of studies according to input types is shown in Fig. 1 (Bustos & Pomares-Quimbaya, 2020).

In the literature, studies on structured inputs are in the majority, and two approaches to structured inputs come to the fore (Bustos & Pomares-Quimbaya, 2020; Cavalcante et al., 2016). These approaches are called technical analysis and fundamental analysis. Technical analysis information is the approach that uses stock prices and indicators derived from this price information (Atsalakis & Valavanis, 2009). Researchers who adopt this approach argue that the effect of fundamental analysis indicators and news already exists in the price of financial assets (Bustos & Pomares-Quimbaya, 2020). According to this approach, it is sufficient to analyze price movements when forecasting asset prices. On the other hand, the fundamental analysis approach uses macroeconomic and financial situation information and consists of time series information that tries to understand the reasons for price movements (Bustos & Pomares-Quimbaya, 2020; Cavalcante et al., 2016). Fundamental analysis information is challenging to obtain and may require expertise to interpret. For this reason, it is not as widely used as the technical analysis approach. In addition to these approaches, studies utilizing data from social media as input and performing price prediction using sentiment analysis have also emerged in recent years(Deng et al., 2023, 2024; Srijiranon et al., 2022).

2.2 Prediction Model Types

In the literature, other than the estimation input, another approach to classifying time series forecasting models is based on the forecasting model itself (Atsalakis & Valavanis, 2009; Bustos & Pomares-Quimbaya, 2020; Cavalcante et al., 2016; Liu et al., 2021; Nosratabadi et al., 2020). According to the forecasting model used, two forecasting models come to the fore: traditional forecasting models and machine learning forecasting models.

Traditional forecasting methods are based on mathematical and statistical foundations. It is possible to examine traditional forecasting models in linear and nonlinear classes. Famous traditional linear forecasting models are named as autoregressive (AR) model, moving average (MA) model, autoregressive moving average (ARMA) model, and autoregressive integrated moving average (ARIMA) models (Liu et al., 2021). Besides, well-known traditional nonlinear forecasting models are the Threshold Autoregressive (TAR) model, the Autoregressive Conditional Heteroskedasticity (ARCH) model, and the Constant Conditional Correlation (CCC) model, which can be listed (Cavalcante et al., 2016; Liu et al., 2021). Traditional forecasting methods assume the studied time series is produced after a linear process. They usually try to model the underlying process according to this assumption. However, financial time series are complex, noisy, and uncertain. Therefore, it exhibits non-linear behavior and makes it difficult to predict such data. This private nature of financial time series causes traditional statistical methods not to be applied effectively in the financial context (Cavalcante et al., 2016). For all these reasons, traditional forecasting models are not as reliable as necessary to predict the price of a financial asset (Nosratabadi et al., 2020).

Another predictive model classified according to the model used is the machine learning prediction model. Machine learning models provide the ability to learn from data and provide in-depth insight into problems (Nosratabadi et al., 2020). Some authors state that traditional forecasting models are not efficient because they treat financial time series as linear systems, and they get lower results than models based on artificial intelligence (Atsalakis & Valavanis, 2009; Cavalcante et al., 2016; Li & Bastos, 2020). The unpredictable dynamic nature of financial markets and the advantages as mentioned above of machine learning models have encouraged many researchers to work in this direction (Berradi & Lazaar, 2019; Chowdhury et al., 2018; Gao et al., 2021; Gudelek et al., 2017; Huang et al., 2019; Jianwei et al., 2019; Kao et al., 2013; Kauffman et al., 2015; Kim, 2003; Kwon & Moon, 2007; Long et al., 2019; Pai & Lin, 2005; Sarıkoç & Çelik, 2022; Sethia & Raut, 2019; Tay & Cao, 2001; Teixeira & De Oliveira, 2010; Thakkar & Chaudhari, 2021; Wen et al., 2020; Zhang, 2003). The deep learning models, a sub-branch of machine learning methods in recent years, have attracted attention with their successful results (LeCun et al., 2015; Schmidhuber, 2015). The advantage of deep learning models compared to other machine learning models is that deep learning models can effectively identify highly qualified features and outputs from a wide range of inputs (Nosratabadi et al., 2020). For this reason, studies focusing on deep learning models by keeping them separate from machine learning models are frequently seen in the literature (Bustos & Pomares-Quimbaya, 2020; Cavalcante et al., 2016; Li & Bastos, 2020; Nosratabadi et al., 2020; Ozbayoglu et al., 2020; Sezer et al., 2020; Thakkar & Chaudhari, 2021).

2.3 Related Work

Researchers on financial markets generally adopted a technical analysis approach and used market or technical analysis datasets(Berradi & Lazaar, 2019; Gao & Chai, 2018; Gao et al., 2021; Kakade et al., 2023; Kwon & Moon, 2007; Sethia & Raut, 2019; Thakkar & Chaudhari, 2021; Wei & Ouyang, 2024; Wen et al., 2020; Zheng & He, 2021). Due to its widespread use, ease of calculation, and ability to show changes in price movements, we adopted the technical analysis approach in our study. In addition, as a prediction model, we focus on deep learning models, which have attracted attention with their successful results in recent years and have become popular compared to other machine learning methods. Accordingly, some of the studies using technical analysis information and machine learning models on the dataset are summarized in Table 1.

Kwon and Moon attempted to optimize an iterative neural network-based prediction model on a dataset consisting of a set of technical indicators by using a genetic algorithm. This study is one of the first to use technical indicators to forecast financial time series (Kwon & Moon, 2007). Lu et al. used the SVR model to forecast financial time series (Lu et al., 2009). The authors developed a forecasting model that uses the ICA method to remove noise from the data, which they call ICA-SVR. The Nikkei 225 Index and TAIEX Index data are used to evaluate the performance of the proposed model. The ICA-SVR model outperformed the SVR-only forecasting model and the random walk model. In another study, Liu and Wang built a prediction model by integrating dimension reduction methods into a back-propagation neural network (Liu & Wang, 2011). PCA and ICA methods are preferred as dimensionality reduction methods. The prediction models are trained and assessed using Shanghai Composite (SHCI) data for two datasets. It has been reported that the ICA-BPNN model proposed by the authors outperforms the PCA-BPNN and BPNN models. Kao et al. suggested connecting nonlinear independent component analysis to support vector regression (SVR) to examine the effect of feature extraction methods in predicting stock prices and obtained successful results in their experimental studies (Kao et al., 2013). Gao et al. used a dataset with a very similar scale to the dataset in our study (Gao et al., 2017). Accordingly, they tried to predict the next day's movement of the S&P 500 index using the LSTM deep learning network. Their model showed that compared to other systems (i.e., moving average (MA), exponential moving average (EMA), and support vector machine (SVM)), the proposed model yields a higher prediction accuracy for the next day's closing price of the stock. In another study, Chowdhury et al. proposed a new model that combines PCA and ICA, which are dimension reduction and feature extraction mechanisms, for stock price prediction with SVR and successfully applied (Chowdhury et al., 2018). Gao et al. conducted case studies on Standard & Poor's 500, NASDAQ, and Apple (AAPL), utilizing dimensionality reduction techniques and the LSTM model for stock price prediction. The authors recommend principal component analysis (PCA) as the dimensionality reduction method for removing unnecessary information in the technical indicators used in the dataset and extracting highly correlated features (Gao & Chai, 2018). Long et al. stated in their studies that deep learning methods may be more suitable for asset price prediction models since statistical methods depend on initial assumptions and machine learning techniques have performance and overfitting problems due to manual feature selection (Long et al., 2019). Berradi and Lazaar used a recurrent neural network (RNN) deep learning model for stock price prediction. In this study, they proposed the PCA-RNN model, which applies the PCA technique to reduce the dimension of the dataset consisting of 90-day historical data and technical indicators of a stock, and emphasized that the prediction accuracy of the proposed model achieves better results than does the RNN model (Berradi & Lazaar, 2019). Jianwei et al. proposed a new model (ICA-GRU) combining the ICA approach and gated recurrent unit (GRU) deep learning network to predict gold prices. It has been reported that the proposed model outperforms the integrated autoregressive moving average (ARIMA), radial basis function neural network (RBFNN), LSTM, and ICA-LSTM models (Jianwei et al., 2019). Sethia and Raut (2019) proposed a model that predicts prices after five days by establishing a simple trading strategy on a dataset consisting of 18 years of historical data and technical indicators of the S&P 500 index. Within the scope of the study, deep learning models such as LSTM and GRU were compared with models such as SVM and artificial neural networks (ANNs) using the ICA technique in data preprocessing steps, and it was emphasized that the performance of the LSTM deep learning price prediction model was superior to that of other models (Sethia & Raut, 2019). Ma et al. proposed a model based on an LSTM deep learning network and preprocessing with PCA to forecast the closing price of the Shanghai Composite Index. The experimental results highlight the success of the PCA method in removing noise from the data and improving the prediction accuracy (Ma et al., 2019). In their experimental study to develop a price prediction model, Wen et al. used the PCA approach with the LSTM deep learning network to reduce dependencies and reduce the data dimension in a dataset consisting of two years of financial data and several technical indicators of a stock (Wen et al., 2020). The PCA-LSTM model can predict asset prices more successfully than traditional price-prediction models. By adopting the concept of decomposition-reconstruction-synthesis to forecast complex financial time series, Zhang and colleagues proposed a new forecasting model based on deep learning called CEEMD-PCA-LSTM. Initially, the model decomposes the time series into intrinsic mode functions (IMFs) using the complementary ensemble empirical mode decomposition (CEEMD) method to identify trends. Then, principal component analysis (PCA) is applied for dimensionality reduction to extract high-level features from the data. The new features feed the long short-term memory (LSTM) network to predict the closing price of the next trading day. The authors emphasize the high prediction accuracy and directional symmetry of the proposed model while also performing trading simulations to evaluate its profitability performance (Zhang et al., 2020). In their study focusing on the stock prices of two aviation companies, Zheng et al. proposed the PCA + RNN model. This study aims to demonstrate the impact of technical indicators and the PCA method on the prediction performance of RNNs in forecasting stock prices (Zheng & He, 2021). In another study, Gao et al. studied a dataset consisting of financial data, technical indicators, and investor sentiment indicators to improve stock forecasting. Accordingly, researchers have used approaches such as least absolute shrinkage and selection operator (LASSO) and principal component analysis (PCA) in dimension reduction processes and examined the effects of these approaches on the performance of long short-term memory (LSTM) and gated recurrent units (GRUs) deep learning prediction models (Gao et al., 2021). Building on the study by Sethia and Raut (2019), Thakkar and Chaudhari (2021) conducted a comparative analysis of deep neural networks for stock price trend prediction. They compared deep learning models by applying the same parameters and dataset across the models examined in their study. (Thakkar & Chaudhari, 2021). Huang et al. proposed a novel scaled PCA (sPCA) method that assigns higher weights to components with strong predictive power. The authors aim to address the weak aspect of the PCA method that does not consider target information. Experiments conducted on 123 macroeconomic variables from the FRED-MD database indicate that the sPCA method outperforms PCA (Huang et al., 2022). Guo et al. introduced the scaled PCA method for forecasting oil volatility in their study. This study compares the introduced s-PCA method with two other dimensionality reduction methods, PCA and PLS. Additionally, a series of experiments were conducted for hybrid models by integrating these methods with AR models for various variants. The proposed AR-sPCA model demonstrated robust performance in robustness tests, such as different window and lag selections (Guo et al., 2022). In another study demonstrating the effectiveness of hybrid models, Srijiranon et al. proposed the PCA-EMD-LSTM model to forecast the closing price of the Thai stock market. The model performs feature engineering using PCA and empirical mode decomposition (EMD) methods while utilizing LSTM for prediction tasks. The authors emphasized that the application of PCA to the EMD-LSTM model reduces prediction errors. Additionally, the model incorporates sentiment analysis of economic news to enhance performance based on news sensitivity (Srijiranon et al., 2022). In their study, Chen and Hu conducted a series of experiments on various models based on ANN and LSTM for predicting volatility in stock index futures trading in China and the United States utilizing feature extraction methods such as AE and PCA. The findings suggest that PCA outperforms AE in terms of prediction efficacy. In comparison, the LSTM (PCA) model achieved the most successful results (Chen & Hu, 2022). He and Dai conducted experiments on models combining ICA and LSTM to predict the prices of 5 stocks selected from the CSI 300 stock exchange. While the ICA method was used to eliminate noise, the predictive effect of the LSTM model was examined. The experiments confirm that the proposed ICA-Multi-LSTM model outperforms non-ICA-LSTM models in most cases, particularly from the perspective of individual stocks (He & Dai, 2022). Chen et al. proposed a new model that integrates various methods, such as CEEMD, sample entropy (SE), ICA, particle swarm optimization (PSO), and LSTM, to predict stock prices. The experiments utilize data from the Shanghai Stock Exchange (SSE) involving 4 selected stocks. The ICA technique is responsible for extracting the primary features of stock price data by separating the IMFs created with CEEMD. The proposed model achieves successful results compared to seven other models that do not include ICA (Chen et al., 2022). Li et al. proposed an optimized PCA-LSTM hybrid model for price prediction tasks. The experimental results emphasize the use of PCA to reduce noise in the dataset, improve sample quality, and eliminate input set correlations. The authors concluded that the PCA-LSTM model outperforms the original LSTM model in the prediction task (Li et al., 2022). Mendoza et al. aimed to enhance the prediction performance in time series of S&P 500, DAX, AEX, and SMI indices by leveraging fractal and self-similarity behaviors using simple recurrent neural networks, multilayer perceptron, and long short-term memory architectures. The authors noted that their proposed self-similarity approach improved the predictive capacity of deep neural network models, resulting in significant improvements of 23%, 11.26%, 21%, and 12% for the S&P 500, DAX, AEX, and SMI indices, respectively (Mendoza et al., 2023). Li and colleagues tested the performance of 12 models based on LSTM and GRU to predict volatility in energy sector indices. The authors employed PCA and SPCA methods for feature extraction, with the SPCA-MLSTM model providing the best predictions in comparison. Additionally, while LSTM models generally outperform GRU models in terms of prediction performance, the SPCA method is superior to PCA in terms of prediction efficacy (Li et al., 2023). Wang et al. proposed a PCA-IGRU-based model for predicting the Shanghai Composite Index (SCI) closing price. The PCA method is utilized to reduce the high dimensionality of the data while minimizing information loss. To prevent overfitting, an anti-overfitting conversion module (ACM) is incorporated into the GRU, resulting in an enhanced gated recurrent unit (IGRU). The experimental results demonstrate that the PCA-IGRU model outperforms seven other models in terms of prediction accuracy and shorter training time (Wang et al., 2023). Kakade et al. suggested that including explanatory fundamental and technical variables as inputs to prediction models helps enhance their predictive ability. They proposed a hybrid LSTM + PCA + ARIMA model for forecasting crude oil prices. The PCA method is utilized to reduce the input dimensionality of the LSTM network, mitigating the impact of multicollinearity. The proposed LSTM + PCA + ARIMA model outperforms the LSTM model across all dimensions, with an average improvement of 41% in prediction accuracy (Kakade et al., 2023). Wei and Ouyang employed a scaled principal component analysis (s-PCA) method on a multidimensional dataset to improve carbon price prediction accuracy. The authors utilize factors such as technical indicators, financial indicators, and commodity indicators to characterize carbon prices. This study employed the s-PCA method to reduce the dimensionality of these factors and integrated it with the linear regression method and the LSTM model. The proposed model can achieve higher average returns than other benchmark strategies in terms of market timing (Wei & Ouyang, 2024).

As mentioned, ICA, PCA, and similar techniques are used for dimension reduction and feature extraction in data preprocessing stages with machine learning algorithms. Dimension reduction techniques simplify the dataset and can eliminate the dimensionality problem by selecting the most relevant attributes (Zhong & Enke, 2017). At the same time, it provides an additional contribution by increasing the prediction accuracy of machine learning prediction models with which it is used (Bustos & Pomares-Quimbaya, 2020; Singh & Srivastava, 2017). In this way, hybrid forecasting models with successful results have been developed (Cavalcante et al., 2016; Nosratabadi et al., 2020; Ozbayoglu et al., 2020; Thakkar & Chaudhari, 2021).

However, when the studies conducted to date are examined, many different approaches and different datasets are used for these models in financial time series forecasting. Model performance depends on the data characteristics used. Accordingly, the selection of inappropriate model parameters, feature sets, and training-test intervals reduces the comparability of the studies. For these reasons, our proposed PCA-ICA-LSTM model, which we use for financial time series forecasting, is prepared by considering the work of Thakkar and Chaudhari (2021), who wanted to perform a comparative experimental study between deep neural networks (Thakkar & Chaudhari, 2021).

3 Methodology

The first goal of our work is to predict the price of a financial asset using a deep learning network. Accordingly, this section consists of six subsections: the basic settings of the study, the dataset, the method/models, the functioning of the prediction model, our proposed model, and the evaluation criteria. The first subsection provides information about basic settings and operations. The second subsection provides detailed information about data collection and datasets. The following subsection explains the statistical methods and deep learning models used. The fourth subsection provides information about the operation of the prediction model. The fifth subsection introduces the proposed model. The last subsection includes the evaluation metrics used to compare the performance of the proposed model with other models.

3.1 Baseline Settings

In the previous sections, we emphasized that we selected the LSTM network used in our study from the literature. Optimizing the selected LSTM model to improve it may be desirable in future studies, but we have not made any changes. Thus, we maintain our aim to directly compare our proposed hybrid deep learning model with previous similar studies. Thakkar and Chaudhari (2021), who has a similar goal to ours, used the same deep learning model and hyperparameters as Sethia and Raut in his work to make the comparison fair (Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). We have applied the same basic settings as Thakkar and Chaudhari (2021) & Sethia and Raut (2019) in their work to our study. Table 2 provides a summary of the baseline settings of our study.

Accordingly, the prediction model architecture we have created in this study has a structure consisting of five consecutive layers. Dropout layers are added between the layers to prevent over-fitting. The model consists of two LSTM layers of 64 and 128 nodes in the first stage and provides long-term dependency handling. The subsequent two layers consist of dense layers consisting of 256 and 512 nodes. All the nodes in this layer are connected to the nodes of the previous layer and show a fully connected structure. In the last layer of the model, there is the output layer that will give the prediction value and consists of a single node. For the estimation model, the epoch of the training process was determined as 125, the heap size was 50, and the dropout value was 0.3. While the target attribute is determined as the adjusted closing price, the linear activation function, and Adam optimization algorithm are used. Work is carried out with the mean square error (MSE) loss function to update the parameters.

In addition, Thakkar and Chaudhari & Sethia and Raut used the holdout method to parse the dataset in their study (Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). The holdout method is the simplest type of cross-validation. In its simplest form, the dataset is divided into two sets, called the training set (the validation set optional) and the test set. The main advantage of this method is that it takes a brief time to compute. However, the evaluation can have high variance. We apply the method precisely to our study. Figure 2 illustrates the partitioning of the dataset into periods using the holdout method within the scope of the study. Accordingly, the whole dataset consists of 4425 records: 3053 records for the training set (~ 69%), 525 records for the validation set (~ 11%), and 847 records for the test set (~ 20%). The visual for this is presented in Fig. 3.

In addition, there are statistical methods used in the pre-processing phase of the study. We include these methods in the pre-processing part because a new dataset is created after applying each statistical method. Therefore, after these statistical methods, the final dataset given as input to the prediction model will be quite different from the initial dataset at the beginning of the study. The most critical parameter we use in the basic settings of the statistical methods is the number of components. We apply this adjustment through PCA. The number of components for PCA determines the principal components found in a dataset. By using this parameter, we both reduce the size of our dataset and control the number of components of the ICA to be used afterward. Sethia and Raut (2019), who first used the dataset we used in our study, used the dimensionality reduction method for different numbers of attributes, such as 7, 12, 18, 25, 32, and 45 for the dataset. The authors reported reducing the number of features to 12 for the final dataset after the experiments (Sethia & Raut, 2019). Thakkar and Chaudhari followed the same path as Sethia and Raut (2019) in his study and performed similar tests (Thakkar & Chaudhari, 2021). Unlike Sethia and Raut (2019), the authors also conduct experiments for cases where the number of features is 5 and 48 without dimensionality reduction, but they compare the models based on a single metric. The evaluation metric used by the authors is different from Sethia and Raut’s (2019) study. However, Sethia and Raut (2019) conducted his experiments with increasingly common metrics. Therefore, we follow Sethia and Raut’s (2019) study because one of the goals of our work was to have a comparable study. For this purpose, before the experiments, we checked whether the number of 12 components was sufficient to represent our dataset. Accordingly, when we reduced the number of components by 12 by applying the PCA method to our dataset with 48 features, we found that the total variance explained was 95.539%. In other words, we can represent 95% of the dataset with 12 principal components. Since this ratio is a sufficient total variance value, we use the value of the number of components parameter exactly. The effect of each component on the total variance is shown in Fig. 4.

3.2 Dataset

This study aims to develop a price forecasting model for financial time series forecasting. Therefore, we are conducting a study aiming to predict the price after 5 days in the Standard & Poor's 500 (S&P500) dataset, relying on the work of Thakkar and Chaudhari (Thakkar & Chaudhari, 2021). This study used the dataset that Sethia and Raut prepared (Sethia & Raut, 2019). Thakkar and Chaudhari state that the dataset used in their study is balanced for up and downtrends (Thakkar & Chaudhari, 2021). The dataset covers 18 years, including daily data between 01.01.2000 and 23.10.2017, and is obtained from the Yahoo Finance website (SPY, 2024) (Fig. 3).

The dataset obtained from Yahoo Finance consists of the opening, closing, highest, lowest, and adjusted closing prices for each trading day in the S&P500 index, as well as volume information (SPY, 2024). An example set of the Yahoo Finance dataset is shown in Table 3. All of this information obtained on the Yahoo Finance web page regarding a financial asset is called market information. However, market information alone is often insufficient to determine the financial asset's future price trend. For this reason, researchers aim to increase forecast performance in many studies by adding technical indicators calculated using market information to datasets. Sethia and Raut conducted their studies on a dataset of 4425 records and 48 attributes, created using market information and technical indicators (Sethia & Raut, 2019). The list of attributes in the S&P500 dataset used in this study is presented in Table 4.

Each attribute in the dataset can be expressed with different ranges. This can result in a feature set of extremely high or low values. Sethia and Raut, in their study, first standardized the data attributes with Z-score standardization (Sethia & Raut, 2019). For this, Eq. (1) uses the formula (Furey, 2023). In the equation, x̄ represents the population mean, E[X] is the population mean of a known sample, σ(X) is the population standard deviation of a known sample, and n is the sample size. Then, it is normalized with the Min–Max scaling method to scale each data feature in the range of [0,1]. In our study, we apply the same method for our dataset. The sample representation of our dataset, which is formed as the result of these processes, is shown in Table 5.

Because of the multidimensional nature of the dataset, the noise in it is minimized by using the dimension reduction method, and then efficient features are extracted. In the last step, the price is estimated using a deep learning model for forecasting.

3.3 Methods and Models

3.3.1 Principal Component Analysis (PCA) for Dimension Reduction and Noise Removal

Principal Component Analysis (PCA) is a statistical technique introduced by Karl Pearson and is frequently used in areas such as image compression, face recognition, and classification (Pearson, 1901). The purpose of the use is to eliminate the low-efficiency features that will occur due to high input sizes when working with large datasets in experimental studies, to increase interpretability by reducing the data dimension, and to minimize information loss (Gao & Chai, 2018; Huang et al., 2022; Kakade et al., 2023; Li et al., 2022; Ma et al., 2019; Srijiranon et al., 2022; Wang et al., 2023; Wei & Ouyang, 2024; Zheng & He, 2021). The basic idea of this technique is to reduce the dimension of the dataset while preserving the diversity in the dataset. For this reason, PCA forms a new variable set that provides the greatest possible diversity in the dataset and is equal to or less than the original number of variables called the principal components.

A financial time series is a multi-dimensional particular time series formed due to complex interactions of many factors. The dataset we will use is a multidimensional dataset that is formed by adding over 40 technical analysis information to the S&P500 Index information, which is the financial time series. In our study, the PCA method allows us to eliminate the existing noise and low-efficiency features by reducing the dimension of this dataset (Bustos & Pomares-Quimbaya, 2020; Li et al., 2022; Ma et al., 2019; Singh & Srivastava, 2017; Zheng & He, 2021; Zhong & Enke, 2017). However, it allows us to create a new dataset by minimizing the loss of information in the dataset used while doing this.

3.3.2 Independent Component Analysis (ICA) for Feature Extraction

Independent Component Analysis (ICA) is a linear feature extraction technique that produces new statistically independent features by aiming to reduce first- and second-order dependencies in a dataset (Anowar et al., 2021). Applicable to many different datasets, ICA can generally analyze digital images, audio streams, radio signals, and biofeedback (brain wave, etc.) information and time series (Tharwat, 2021). ICA is a technique that can separate and recover unknown source signals from a complex signal without having sufficient prior knowledge of source signals and mixing mechanisms, especially in signal processing such as blind source separation (Tharwat, 2021) and cocktail party problems.

ICA's investigation of non-normally distributed and statistically independent features are the two most prominent features distinguishing it from other feature extraction mechanisms. For example, PCA searches for aspects representative of the data, while ICA searches for aspects independent of each other (Anowar et al., 2021). Based on these features of the ICA method, Sethia and Raut state that the financial dataset they use in their studies consists of different individual components and exhibits a non-normal distribution (Sethia & Raut, 2019). ICA is a valuable method as a dimension-preserving transformation because it produces statistically independent components in pattern recognition (Chen et al., 2022; Draper et al., 2003; Jianwei et al., 2019; Kao et al., 2013; Liu & Wang, 2011; Lu et al., 2009; Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). Studies in the literature emphasize that ICA is a better feature extraction method than other statistical methods (Chen et al., 2022; Draper et al., 2003; Kao et al., 2013; Kwak, 2008; Liu & Wang, 2011; Reza & Ma, 2016). In our study, the ICA method was used to extract features because the dataset used had appropriate features, as stated in Sethia and Raut's study (Sethia & Raut, 2019), and it was a successful feature extraction technique.

3.3.3 Deep Learning Using Long Short-Term Memory (LSTM) Network

Long Short-Term Memory (LSTM), which is essentially an improvement of Recurrent Neural Networks (RNN), was introduced by Hochreiter and Schmidhuber (Hochreiter & Schmidhuber, 1997). What makes LSTM networks different is the ability to use historical information on time series efficiently. It can also solve the disappearing gradient problem when processing long-term dependencies in RNNs. LSTM network consists of neural network cells that repeat each other just like RNN networks, but unlike RNNs, it can remember and forget past information with mechanisms called gates in the memory cell (Hochreiter & Schmidhuber, 1997; Nosratabadi et al., 2020). This way, desired historical information can be discarded or stored in the LSTM network. Figure 5 presents the internal structure of a memory cell belonging to the LSTM network (Ozkok & Celik, 2022).

In an LSTM memory cell, three gate mechanisms, input, output, and forgetting, control the storage state. The functions and calculations of the gates are as follows:

-

The first structure of an LSTM cell is the forget gate. Forget gate performs the function of determining the information to be discarded. \(\sigma \) represents the activation function, w weight, and b offset in Eq.(2). The sigmoid function produces values in the range of 0 ~ 1. Accordingly, 0 information allows the information of the previous cell to be forgotten, and 1 allows it to be transferred to the next cell completely. Thus, \({f}_{t}\) determines how much of the previous cell's information should be remembered.

$${f}_{t}=\sigma ({w}_{f}\times \left[{h}_{t-1},{x}_{t}\right]+{b}_{f})$$(2) -

The following structure in the LSTM cell is the entry gate responsible for determining the information to be updated. First, in Eq. (3), with the help of a sigmoid function, as in the forget gate, \({i}_{t}\) decides which values to update. Then, the vector \(\overline{{c }_{t}}\) of the new candidate values that can be added to the situation with the tanh function in Eq. (4) is obtained.

$${i}_{t}=\sigma ({w}_{i}\times \left[{h}_{t-1},{x}_{t}\right]+{b}_{i})$$(3)$$\overline{{c }_{t}}=tanh({w}_{c}\times \left[{h}_{t-1},{x}_{t}\right]+{b}_{c}$$(4) -

Output Gate keeps the output information of the cell module. Entry and exit gates often use tanh or logistic sigmoid functions to perform their tasks. The information from the forget and entrance gates is combined in the first stage. Thus, the old cell state \({c}_{t-1}\) is updated, and the new cell state \({c}_{t}\) is obtained with Eq. (5). \({o}_{t}\), which decides which parts of the previous cell state to output, is calculated with the help of the sigmoid function in Eq. (6). The new cell state (\({c}_{t}\)) is subjected to the \(tanh\) function and multiplied by \({o}_{t}\) to output the parts decided in Eq. (7). This resulting output (\({h}_{t}\)) is based on the cell state but in a filtered state.

$${c}_{t}={f}_{t}*{c}_{t-1}+{i}_{t}*\overline{{c }_{t}}$$(5)$${o}_{t}=\sigma ({w}_{o}\times \left[{h}_{t-1},{x}_{t}\right]+{b}_{o})$$(6)$${h}_{t}={o}_{t}*{tanh(c}_{t})$$(7)

3.4 Prediction Model Steps

The models used in our study aim to predict the price of a financial asset. To this end, we conduct a series of experiments to evaluate the effects of dimensionality reduction and feature extraction mechanisms on prediction performance in the data preprocessing phase. In the first part of our experiments, we compare our proposed model with the plain LSTM, PCA-LSTM, and ICA-LSTM prediction models derived from the same deep learning network family. In this way, we aim to show the effectiveness of our proposed model, which uses a two-stage statistical method, against prediction models that do not use statistical methods or use a single statistical method. In the second part of our experiments, we evaluate the performance of our proposed model against different deep learning networks in the literature, namely RNN, LSTM, GRU, and CNN. In the last part of our experiments, we expand the time scale of our dataset and aim to show the effectiveness of our proposed model for two new additional cases. We conduct all these experiments by following the same procedure steps below. Figure 6 presents the pseudocode we used for our experiments.

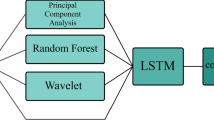

3.5 Proposed Hybrid PCA-ICA-LSTM Model

In this section, we introduce one of the main goals of our work, a hybrid deep learning model, which we call PCA-ICA-LSTM. The proposed model is built upon the LSTM neural network due to its capabilities among RNNs. RNNs, a type of deep learning model, have drawn attention for their success in analyzing and predicting datasets based on sequential data such as time series. Recurrent neural networks (RNNs), which can remember past time series and make decisions accordingly, have some disadvantages when dealing with large datasets. Next-generation recurrent neural networks like LSTM and GRU have become famous for mitigating these drawbacks. One of the most well-known disadvantages is the vanishing gradient problem when dealing with long-term dependencies in RNNs. This feature of LSTM networks has enabled them to be used in many fields, including finance. Sethia and Raut (2019) compared LSTM and GRU models and demonstrated that the LSTM model, an improved version of RNNs, achieved more successful results than GRU. Following this study, in our work, we opted for the classic LSTM deep learning network and used the same model architecture settings.

However, there are still challenges that the LSTM model has not yet overcome, such as the curse of dimensionality and issues like overfitting. To address these challenges, various methods and mechanisms are being developed. One of these methods is the hybrid model approach.

Recently, to enhance the prediction performance of machine learning models, hybrid models have been proposed by integrating both machine learning algorithms and deep learning models with different approaches, instead of relying solely on single models that may not yield satisfactory results in every scenario (Chen et al., 2022; He & Dai, 2022; Kakade et al., 2023; Li et al., 2022, 2023; Nosratabadi et al., 2020; Ozbayoglu et al., 2020; Srijiranon et al., 2022; Thakkar & Chaudhari, 2021; Wei & Ouyang, 2024). While certain solutions have been partially provided for specific issues such as exploding gradients with advanced recurrent neural network variants like LSTM and GRU in the analysis of time series data, these models still face unresolved challenges. While LSTMs, due to their advanced design, can regularly eliminate invalid information and preserve crucial information when ingesting time series data, experimental findings have indicated that direct use of raw data is not beneficial for training, and hence, subjecting them to a series of statistical methods beforehand is advantageous (Berradi & Lazaar, 2019). The literature suggests that combining dimensionality reduction techniques with various machine learning methods positively impacts the performance of machine learning methods (Chen et al., 2022; Gao & Chai, 2018; He & Dai, 2022; Jianwei et al., 2019; Kakade et al., 2023; Kao et al., 2013; Li et al., 2022, 2023; Liu & Wang, 2011; Lu et al., 2009; Ma et al., 2019; Srijiranon et al., 2022; Wei & Ouyang, 2024; Zhang et al., 2020; Zheng & He, 2021; Zhong & Enke, 2017).

Deep learning models capable of working with big data often utilize dimensionality reduction techniques to mitigate the dimensionality problem. Researchers continue to investigate a multitude of novel approaches to address the aforementioned structural limitations of advanced recurrent neural network variants such as LSTM and GRU. The novelty of our work is to address the structural problems faced by the LSTM deep learning network, such as overfitting and the curse of dimensionality when working with multidimensional data, by combining them with dimensionality reduction methods through our PCA-ICA preprocessing mechanism. Leveraging the advantages of both statistical methods, our proposed hybrid PCA-ICA-LSTM approach aims to overcome these issues and improve prediction performance.

PCA and ICA methods have been widely used by the research community due to their ability to achieve effective results in many past and current studies. Their capacity to identify crucial components across diverse data types, including time series, speech and image data, and medical signals, among others, is particularly notable (Jianwei et al., 2019). While both methodologies are grounded in elementary statistical techniques, they employ distinct strategies for problem resolution. PCA generates a new feature set, equal to or fewer than the original number of variables, aimed at maximizing the variance within the dataset, known as principal components. Conversely, ICA is a linear feature extraction technique that endeavors to minimize first- and second-order dependencies within a dataset, thereby generating statistically independent new features. While PCA endeavors to identify representative data directions, ICA seeks out independent directions (Anowar et al., 2021). Upon scrutinizing studies focused on the financial domain, PCA typically finds favor in dimensionality reduction and feature selection tasks (Bustos & Pomares-Quimbaya, 2020; Gao & Chai, 2018; Huang et al., 2022; Kakade et al., 2023; Li et al., 2022; Ma et al., 2019; Singh & Srivastava, 2017; Srijiranon et al., 2022; Wang et al., 2023; Wei & Ouyang, 2024; Zheng & He, 2021; Zhong & Enke, 2017); whereas ICA is employed for feature selection and noise reduction mechanisms (Chen et al., 2022; Draper et al., 2003; Jianwei et al., 2019; Kao et al., 2013; Kwak, 2008; Liu & Wang, 2011; Lu et al., 2009; Reza & Ma, 2016; Sethia & Raut, 2019; Thakkar & Chaudhari, 2021).

The S&P 500 dataset we are working on has transformed into a multidimensional dataset as a result of adding more than 40 technical indicators, as presented in Table 4. The use of technical indicators in the dataset contributes significantly to the predictive performance of the models (Gao & Chai, 2018; Kakade et al., 2023). However, the use of technical indicators also leads to certain disadvantages. One of these disadvantages is the expansion of the feature set due to the use of numerous technical indicators, resulting in the dimensionality problem. The dimensionality problem is also known as the curse of dimensionality and implies that as the number of features increases, the risk of overfitting also increases (Srijiranon et al., 2022; Zheng & He, 2021). The second disadvantage is the proliferation of redundant information due to the similar calculation techniques used by technical indicators, ultimately leading to noise in the data. Principal Component Analysis (PCA) is a dimensionality reduction technique that utilizes statistical methods to represent the entirety of a dataset using a minimal number of fundamental components while minimizing data loss. PCA is the oldest and most well-known statistical method employed for dimensionality reduction (Kakade et al., 2023; Wang et al., 2023; Wei & Ouyang, 2024). PCA not only reduces the dimensionality of the dataset but also decreases the dimensionality of noise in the data, making it commonly used for reducing noise in the data (Li et al., 2022; Ma et al., 2019; Zheng & He, 2021). For these reasons, PCA was employed in the initial stage of the proposed model to address the high dimensionality of the dataset and eliminate noise.

While the PCA method has been successfully applied for feature extraction in numerous studies, it has some drawbacks. The most notable disadvantage is that PCA assigns equal weights to all components, potentially disregarding the prediction target (Guo et al., 2022; Huang et al., 2022). For instance, in extreme cases, assigning equal weights to all components by PCA may overlook strong components, or conversely, it may assign excessive weight to irrelevant or weak components for the prediction target, leading to the generation of noisy information. This aspect could hinder PCA from achieving stable prediction results (Wei & Ouyang, 2024). Furthermore, PCA has limitations in extracting higher-order statistical information due to its utilization of second-order statistical properties (Zare et al., 2018). Therefore, exploring complex and multifaceted data like financial time series directly with the PCA method is challenging. In our study, we aimed to address this limitation of PCA by utilizing another statistical method. To this end, we preferred the ICA method for feature extraction due to its ability to utilize higher-level statistical properties and positive effects on the performance of using ICA after PCA (Draper et al., 2003).

As a result, in the second preprocessing stage of our proposed prediction model, the new feature set reduced in size and denoised by PCA is directly transferred to the ICA method for feature extraction. Several benefits of applying PCA before ICA have been emphasized in the literature (Draper et al., 2003). Firstly, the PCA method allows us to control the number of independent components obtained from ICA by reducing the data dimensionality. Secondly, using PCA for dimension reduction before whitening helps eliminate low-variance features. Another advantage of using the PCA method is that it enhances computational efficiency by reducing computational complexity by minimizing pairwise dependencies. All these benefits encourage the use of ICA after PCA and improve ICA performance (Draper et al., 2003). There are many studies in the literature demonstrating the effectiveness of the ICA method in feature extraction (Chen et al., 2022; Jianwei et al., 2019; Kao et al., 2013; Liu & Wang, 2011; Lu et al., 2009; Sethia & Raut, 2019; Thakkar & Chaudhari, 2021). The ICA method generates a new input set with a different number of features and a high-importance explanatory feature set after the initial preprocessing (refer to Table 6 and Table 7). The new input set, which cannot be processed by PCA due to its second-order feature processing capability, undergoes statistically higher-order feature processing with ICA preprocessing and is applied to the LSTM deep learning network forming the prediction system. Thus, the ability to combine the advantages of two different dimensionality reduction methods makes the hybrid PCA-ICA-LSTM prediction model remarkably effective in the conducted experimental studies.

While studies exist in the literature that combine PCA or ICA with different methods, to our knowledge, ours is the first study to combine these two statistical methods for a two-stage preprocessing and integrate them with a recurrent deep learning network to create a hybrid model. This highlights the novelty and theoretical contribution of our study. The proposed model is straightforward and presents a new framework that combines PCA and ICA statistical methods to provide input to an LSTM deep learning network used for prediction. The PCA-ICA-LSTM prediction model is constructed per the operation of the prediction model and basic adjustments described in the previous sections. Figure 7 shows the prediction framework of the proposed PCA-ICA-LSTM model.

If we briefly summarize the operation of the proposed model, it is first made into the original dataset by adding the calculated technical indicator information to the raw dataset consisting of market information. After the dataset is normalized, it is input to statistical methods for two-stage pre-treatment. In the first stage, the dataset, whose dimension is reduced by the PCA method, is purified from noise. The resulting essential components (PCs) are current information compressed by removing unnecessary information and noise. In the second stage, before the PCs are used as input to the LSTM network, they are subjected to a decomposition process to find the practical features. Therefore, they are decomposed into their independent components (ICs) by the ICA method, and ICs are obtained. This results in a feature set that contains noise-free, efficient information for the prediction system. This new feature set consists of essential and independent features that can represent 95% of the total variance of our original dataset. Example representations of the PCs and ICs obtained during the two-stage preprocessing of our dataset are given in Tables 6 and 7, respectively.

In the last stage, the obtained dataset is used for training and evaluating the LSTM deep learning network to predict prices five days after the determined date.

3.6 Evaluation Criteria

Evaluation criteria are needed to measure the predictability of the forecast model and the accuracy of the forecasts found. Error estimation methods such as root mean square error (RMSE), mean square error (MSE), mean absolute error (MAE), and Mean Absolute Percent Error (MAPE) are frequently used in the literature to evaluate the performance of deep learning models. As evaluation metrics within the scope of the study, coefficient of determination (R2), MSE, MAE, MAPE, Max Error, and Return Ratio are aimed to be compared with other studies (Chowdhury et al., 2018; Gao et al., 2021; Jianwei et al., 2019; Sethia & Raut, 2019; Wen et al., 2020). We present Eqs. (8–13) for the calculation of evaluation criteria below. Accordingly, \({\widehat{y}}_{i}\), i. is the predicted value of the sample, and \({y}_{i}\) represents the corresponding actual value.

Accordingly, the study uses two well-known evaluation metrics, such as R2 score and MSE, to see the model performance. The coefficient of determination measures how successful our prediction model is, and the higher its value, the higher the model's performance will be. The MSE metric, on the other hand, is a positive measure that shows that the prediction model's performance increases as its value approaches zero. In addition to R2 and MSE metrics, evaluation metrics used to measure regression performance, such as MAE, MAPE, and Max Error, were examined to compare with other studies in the literature. MAE performs the same task as MSE and is interpreted similarly. However, MAE looks at the absolute difference between the data and the model's predictions, and outlier residuals do not contribute as much to the total error as the MSE. MAPE is the percent equivalent of MAE. MAPE can eliminate the disadvantages when comparing models with different unit values. In addition, MAPE, since it expresses the estimation errors as a percentage, makes sense and can be interpreted on its own, making it different from other metrics. The Max Error metric calculates the maximum residual error, capturing the worst-case error between the predicted and actual values. So, the Max Error shows the extent of the model's error when fitted.

We add a different evaluation metric to our study for use in the second part of the experimental work outside of these evaluation metrics. In the second part of the experiments, we will compare our proposed model with state-of-the-art models and examine the return rates of the models through a simple trading strategy. This offers researchers the opportunity to evaluate models from a unique perspective. Equation (14) below demonstrates the success rate of models in trading strategy compared to the “hold and wait” strategy through the return ratio metric.

4 Experimental Results and Discussion

In the experiments, we test the success of the proposed PCA-ICA-LSTM model in predicting the price of a financial asset against models that use single-stage statistical methods and state-of-the-art models in the literature. At this point, we divide our experiments into four parts in line with the goals stated. In the first part, we prepare plain (LSTM) and hybrid (PCA-LSTM, ICA-LSTM, and PCA-ICA-LSTM) price prediction models and compare the proposed model against models derived from the same family. In this way, we aim to demonstrate the superiority of our PCA-ICA-LSTM model with two-stage preprocessing capability over prediction models that perform single-stage preprocessing. In the second part of our experiments, we compare our proposed model with commonly used deep learning models in the literature, including RNN, GRU, LSTM, and CNN. We also create a simple trading strategy for this comparison and evaluate the performance of the models in terms of return rates. In the third part of our experiments, we compare our proposed model with previous studies that utilized the same dataset in the literature. In the fourth part of our experiments, we make several changes. We extend the time scale of our dataset, selected from the literature for comparability, to update it. We set the time scale of our dataset to 2000–2024 to include the COVID-19 Pandemic. We investigate the most suitable dimensionality reduction component number for the changing time scale in our dataset and repeat our experiments for the found component number value. We also add two additional case studies to our research as a benchmark. All models used in the experiments follow the basic settings presented in Sect. 3.2. To ensure a fair comparison, we ran the experiments ten times and calculated the averages of the metrics. The experiments were performed on a system with Intel Core i7—2.5 GHz CPU and 32 GB RAM, using Python programming language and Keras library in a Google-Collaboratory environment.

4.1 Comparison of Our Proposed Model and Prediction Models Derived from the Same Family

The performance of the PCA-ICA-LSTM model, which we propose within the scope of experimental studies, was compared with the performances of LSTM, PCA-LSTM, and ICA-LSTM deep learning price-prediction models used in the literature to predict the price of a financial asset. The experimental results obtained for three different deep learning price prediction models and the proposed hybrid deep learning prediction model are shown in Table 8. PCA-ICA-LSTM price prediction model, the two procedures for dimension reduction and feature extraction, which we introduced and proposed in Sect. 3, sequentially link each other and the deep learning network. Models were run 10 times during the experiments, and the results of the evaluation criteria were analyzed under four headings: average, best, worst, and standard deviation. The best results for the evaluation metrics are shown in bold in Tables 8, 9, 10, 11.

When Table 8 is examined, it is possible to evaluate the forecasting performances of the models. Accordingly, we can see that the proposed hybrid PCA-ICA-LSTM price prediction model for all categories realizes the highest R2 and lowest MSE values, so much so that the hybrid PCA-ICA-LSTM model managed to reduce the average MSE value obtained by the LSTM price prediction model from 0.001457 to 0.000381 by improving it by 73.85%. An analogous situation is observed in the R2 score. The proposed hybrid model increased the average R2 value obtained by the LSTM price prediction model from 0.859670 by 12.05% to 0.963296.

When we compare the results obtained by the PCA-ICA-LSTM model with those of the PCA-LSTM model, the effect of using the ICA method for feature extraction will be more pronounced. According to the results, the hybrid PCA-ICA-LSTM model increased the average R2 value obtained with the PCA-LSTM price prediction model from 0.956055 to 0.963297 by improving it by 0.75%. At the same time, the proposed hybrid PCA-ICA-LSTM model reduced the average MSE value obtained with the PCA-LSTM price prediction model by 16.45% from 0.000456 to 0.000381.

The graphical representations of the experimental results are presented in Fig. 8. The blue solid line represents the actual value of the financial asset used in the test set, and the red dotted line represents the value estimated by the relevant deep-learning model. Accordingly, when the graphs in Fig. 8 are examined, it is seen that the most successful model is the proposed PCA-ICA-LSTM model shown in Fig. 8d, and the most unsuccessful model is the LSTM model shown in Fig. 8a. In the graphical representation presented in Fig. 8a, it is noteworthy that there are vast differences between the estimated values obtained by the LSTM model and the actual values. In addition, in the graphical representation of the proposed PCA-ICA-LSTM model presented in Fig. 8d, it is seen that the values estimated by the model are remarkably close to the actual values.

We can say that the proposed PCA-ICA-LSTM model is more stable than other models in sudden price changes. We understand this situation because when error metrics such as MSE, RMSE, MAE, and MAPE are examined in Table 8, the best and worst results of the proposed model are remarkably close to each other compared to other models. When Fig.8a–d are scrutinized, it will be seen that the success of the proposed PCA-ICA-LSTM hybrid deep learning model is more noticeable graphically around the 800th record.

The estimated price values obtained by the actual and deep learning prediction models of the S&P500 index were compared during the experimental study. In addition, the estimated price values and residuals obtained by the models were also compared. The results show that the proposed hybrid PCA-ICA-LSTM model outperforms other models. The proposed hybrid PCA-ICA-LSTM model's actual and estimated prices often match well and center around the diagonal. The results of this situation are presented in Fig. 9d. Accordingly, the graphics on the left side of the page show the actual and estimated prices of the relevant models, and the graphs on the right show the remnants of the respective models.

Each deep learning price prediction model compared in the experimental study achieves acceptable, successful results. Apart from R2 and MSE, values less than 5% obtained by our evaluation metric, MAPE, also support this situation (Montaño Moreno et al., 2013). Since the results obtained are remarkably close to each other and it is not easy to distinguish the results on the graphs (Fig. 9), we present new charts showing the density of the residues in Fig. 10.

When the distribution of residues in Figs. 9a and 10a is examined, it is seen that the plain LSTM model mostly has a scattered residue density in the range of −0.10 ~ 0. However, in a successful model, the residuals are expected to be in the 0-line as much as possible, for example, in Figs. 9d and 10d. So, when Fig. 10b–d, are examined, deep learning models that include dimension reduction and feature extraction methods in the distribution of residuals show a normal distribution compared to the plain LSTM deep learning model. When Fig. 10d is carefully examined, it can be seen that the proposed hybrid PCA-ICA-LSTM deep learning model shows a more balanced and dense distribution around the 0-line in terms of the distribution of residuals compared to the PCA-LSTM model in Fig. 10a and the ICA-LSTM model in Fig. 10c. This result demonstrates the success of our proposed PCA-ICA-LSTM hybrid deep learning model against other models in experimental studies.

These results reveal that deep learning price prediction models created with the addition of dimension reduction and feature extraction techniques are more effective than plain deep learning price prediction models (LSTM) in making the prediction values close to the actual price of the financial asset. In comparing the four models, the plain LSTM model obtained the worst rates in the evaluation metrics. In addition, it is observed that the proposed hybrid PCA-ICA-LSTM model reaches the highest R2 and lowest MSE, MAE, MAPE, and Max Error values even if the models obtain remarkably close results during the comparison.

4.2 Comparison of Our Proposed PCA-ICA-LSTM Model with Widely Used State-of-the-Art Models

The importance of prediction models in financial markets lies in the fact that they guide investors and assist in assessing new opportunities while mitigating risks in a dynamic market. The stock market can present a lucrative investment environment for investors, but there is a clear correlation between profitability and risk. As a result, investors seek to maximize returns while minimizing risk by predicting the probable value of financial assets. In this segment of our study, we will attempt to calculate the return ratio of models.

First, we develop a straightforward trading strategy to compute the returns of our models. Based on this strategy, the model issues a buy signal if it predicts a higher price for the financial asset on day t + 5 than on day t. Therefore, the model purchases the financial asset on day t and sells it on day t + 5. Conversely, if the model anticipates a lower price, it generates a sell signal and short-sells the financial asset. The difference between the two prices represents the model's profit or loss for that particular trade. Ultimately, the model's rate of return is calculated as the ratio between the total value earned at the end of each trade and the value obtained through the “hold and wait” approach. The equation for this calculation is provided in Sect. 3.6, Eq. (14).

This section will compare our proposed PCA-ICA-LSTM model with state-of-the-art models commonly used in financial data studies. Specifically, we created RNN, LSTM, GRU, and CNN models based on the "baseline settings" subsection from Sect. 2. We used 1D convolutional networks for the CNN model while adhering to these baseline settings. Since there are drawbacks to using dropout operations in CNN networks, we applied max-pooling operations instead of dropout operations. As a result, we created RNN, LSTM, GRU, and CNN models with a 5-layer architecture without any statistical preprocessing.

When we examine Fig. 11, which shows the S&P 500 Index's actual value and the prediction values of all models for comparison, the region between the 250th and 650th records becomes crucial as it displays sudden changes in the S&P 500 Index and allows us to observe the models' performance more clearly. According to this, we can observe that our proposed PCA-ICA-LSTM model closely mimics the actual price movements. Additionally, we can conclude that the CNN model also performs quite well. This is evident from the R2, MSE, and MAPE values obtained by the models and our observations in Fig. 11. Figure 12a–c display the R2, MSE, and MAPE values obtained by the models, respectively. When assessing model performance, a high R2 value indicates better performance. On the other hand, low MSE and MAPE values indicate successful model predictions. Out of the models we tested, the RNN model performed the worst, followed by the GRU model. Specifically, the RNN model tended to make pessimistic predictions and deviate from actual values.

Based on the rate of return metric results shown in Fig. 12d, it can be observed that the RNN model, which had pessimistic forecasts compared to the actual values of the S&P 500 Index, faced significant losses against the “hold and wait” strategy with the simple trading strategy that was designed. Similarly, the GRU model did not provide better returns than the hold-and-wait strategy. On the other hand, the other three models achieved much more profitable returns than the “hold and wait” strategy. Among these models, the LSTM and CNN models were particularly successful, with returns exceeding 200%. We believe this outcome is because the LSTM and CNN models produced overly optimistic results, especially after the 700th record in Fig. 11, but were still able to make profitable trades due to the significant upward trend. The predictions made by these models were far from the actual values.

Our analysis shows that while our proposed PCA-ICA-LSTM model may not be as effective as the LSTM and CNN models in this aspect, it has generated returns that are 165% higher compared to the “hold and wait” strategy. This signifies that our model is highly competitive regarding both rate of return and error rate metrics. However, it is essential to note that successful predictions with low error rates alone are not enough to calculate the rate of return. In our experiment, all models had R2 values above 0.90 and very low MSE values, but one model had negative returns compared to the “hold and wait” strategy, as illustrated in Fig. 12d. Developing a well-thought-out trading strategy is paramount in creating a model with high returns. We believe that with a well-developed trading strategy, our proposed PCA-ICA-LSTM model can achieve even more competitive results.

4.3 Comparison of the Recommended PCA-ICA-LSTM Model Similar Studies from the Literature

Under the study's first objective, we compared the PCA and ICA techniques used in the data preprocessing stage and the combined deep learning price prediction models with each other. In the first section, our PCA-ICA-LSTM hybrid model, distinguished as the most successful model, will be compared with similar studies in the literature in this section.