Abstract

To profit from price oscillations, investors frequently use threshold-type strategies where changes in the portfolio position are triggered by some indicators reaching prescribed levels. In this paper we investigate threshold-type strategies in the context of ergodic control. We make the first steps towards their optimization by proving ergodic properties of related functionals. Assuming Markovian price increments satisfying a minorization condition and (one-sided) boundedness we show, in particular, that for given thresholds, the distribution of the gains converges in the long run. We also extend recent results on the stability of overshoots of random walks from the i.i.d. increment case to Markovian increments, under suitable conditions.


1 Introduction

Perhaps the most naive approach to speculative trading is trying to “buy low and sell high” a given financial asset. More refined versions of such strategies are actually widely used by practitioners, see (What is mean reversion, 2021; Mean reversion trading, 2021; Mean reversion trading strategy, 2021). Their various aspects have been analysed in several papers, see e.g. (Dai et al., 2010; Zervos et al., 2013; Zhang & Zhang, 2008; Zhang, 2001) and our literature review in Sect. 3.

We intend to study such strategies in a different setting: that of ergodic control. A rigorous mathematical formulation turns out to pose thorny questions about the ergodicity of certain processes, as we shall point out below.

The present article starts to build a reasonable and mathematically sound framework for investigating such problems. We establish that key functionals converge to an invariant distribution and obey a law of large numbers. We are unaware of any previous study that would tackle these questions. Our results may serve as a basis for further related investigations, using techniques of ergodic and adaptive control.

We also investigate a closely related object, studied in Mijatović and Vysotsky (2020a, 2020b): the so-called overshoot process. We extend certain results from Mijatović and Vysotsky (2020a) from i.i.d. to Markovian summands.

The paper is organized as follows. In Sect. 2, we state our main results on the stability of level crossings and related quantities of random walks with Markovian martingale differences satisfying minorization and (one-sided) boundedness. Section 3 presents our results about overshoots. Section 4 is concerned with the financial setting and the significance of our results for optimal trading with threshold strategies. In Sect. 5, we simulate a vanilla buying low and selling high (BLSH) trading strategy in a suitably modified discrete-time stochastic volatility model which fits into our framework. We study how the clustering of volatility affects the expected daily return realized during each trading cycle. Additionally, we investigate whether the threshold strategy is indeed suitable for realizing profits from price fluctuations. Section 6 contains the proofs of the main results. Section 7 discusses future directions of research.

2 Stability of level crossings

Let \(M>0\) and let \((X_n)_{n\in \mathbb {N}}\) be a time-homogeneous Markov chain on the probability space \((\Omega ,\mathscr {F},\mathbb {P})\) with state space \(\mathscr {X}:=(-\infty ,M]\). Its transition kernel is denoted \(P:\mathscr {X}\times \mathscr {B}(\mathscr {X})\rightarrow [0,1]\). We consider the random walk

\[
S_{n}:=S_{0}+\sum _{k=1}^{n}X_{k},\quad n\in \mathbb {N}, \tag{1}
\]

where \(S_0\) is a random variable independent of \(\sigma (X_k:\,k\in \mathbb {N})\).

The next minorization condition ensures that the chain jumps, with positive probability, in one step to a small neighborhood of zero independently of the initial state. Moreover, the random movements of S have an absolutely continuous component.

Assumption 2.1

There exist \(\alpha ,h>0\) such that, for all \(x\in \mathscr {X}\) and \(A\in \mathscr {B}(\mathscr {X})\),

holds where

is the normalized Lebesgue measure on \([-h,h]\).

Lemma 2.2

Under Assumption 2.1, there is a unique probability \(\pi _{*}\) on \(\mathscr {B}(\mathscr {X})\) such that \(\textrm{Law}(X_{n})\rightarrow \pi _{*}\) in total variation as \(n\rightarrow \infty \), at a geometric speed.

Proof

The minorization condition (2) implies that the state space \({\mathscr {X}}\) is a small set. Hence the chain is uniformly ergodic by Theorem 16.2.2 of Meyn and Tweedie (1993). \(\square \)
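For orientation, the geometric rate in Lemma 2.2 can be made explicit. The display below is the classical Doeblin-type bound for a chain whose whole state space is small in one step. It is a standard fact added here for convenience and is not quoted from the results above. It assumes that the total variation distance is normalized as a supremum over Borel sets.

\[
\sup _{x\in \mathscr {X}}\,\sup _{A\in \mathscr {B}(\mathscr {X})}\left| P^{n}(x,A)-\pi _{*}(A)\right| \le (1-\alpha )^{n},\quad n\in \mathbb {N}.
\]

Under this normalization the rate depends only on the constant \(\alpha \) of Assumption 2.1, and not on \(h\) or on the initial state.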

Assumption 2.3

For each \(z\in {\mathscr {X}}\),

and

Remark 2.4

Clearly, Assumption 2.3 guarantees that \(X_n\), \(n\in \mathbb {N}\), if integrable, is a martingale difference sequence and the limit distribution of Lemma 2.2 has zero mean, that is,

Let us fix thresholds \({\underline{\theta }},{\overline{\theta }}\in \mathbb {R}\), satisfying \({\underline{\theta }}<0<{\overline{\theta }}\). Furthermore, we define the sequence of crossing times corresponding to \({\underline{\theta }}\) and \({\overline{\theta }}\) by the recursion \(L_{0}:=0\) and for \(n\in \mathbb {N}\),

\[
T_{n+1}:=\inf \{k>L_{n}:\,S_{k}<{\underline{\theta }}\},\quad L_{n+1}:=\inf \{k>T_{n+1}:\,S_{k}>{\overline{\theta }}\}. \tag{3}
\]

Lemma 2.5

Let \(EX_{n}^{2}<\infty \) hold for all \(n\ge 1\). (This is the case, in particular, if the \(X_{n}\) are bounded.) Under Assumptions 2.1 and 2.3, the random variables \(T_{n},L_{n}\) are well-defined and almost surely finite.

Proof

We prove the statement by induction, the first step being trivial since \(L_{0}<\infty \). Assume that the statement has been shown for \(L_{0},T_{1},L_{1},\ldots ,L_{n}\); we now show it for \(T_{n+1}\) and \(L_{n+1}\).

In the induction step, we work on the events \(B_{k}:=\{L_{n}=k\}\), \(k\in \mathbb {N}\) separately. Fixing k, the process \(M_{j}:=\sum _{l=0}^{j}\mathbbm {1}_{B_{k}}X_{k+l}\), \(j\in \mathbb {N}\) is a square-integrable martingale (remember Remark 2.4) with conditional quadratic variation

using Assumption 2.1. Hence \(\sum _{j=1}^{\infty }Q_{j}=\infty \) almost surely on \(B_{k}\). Proposition VII-3-9. of Neveu (1975) implies that \(\liminf _{j\rightarrow \infty }M_{j}=-\infty \) on \(B_{k}\). It follows that, on \(B_{k}\), almost surely

which implies, in particular, \(T_{n+1}<\infty \) on \(B_{k}\). A similar argument establishes that also \(P(L_{n+1}<\infty )=1\). \(\square \)

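Although \(X_{T_1}\) can be positive when \(S_0<{\underline{\theta }}\), for \(n\ge 2\), \(X_{T_n}\) is always negative. Moreover, it is straightforward to verify that the process

\[
U_{n}:=(X_{T_{n}},S_{T_{n}},X_{L_{n}},S_{L_{n}},L_{n}-T_{n}),\quad n\ge 1, \tag{4}
\]

is a time-homogeneous Markov chain on the state space

\[
\mathscr {U}:=(-\infty ,0)\times (-\infty ,{\underline{\theta }})\times (0,M]\times ({\overline{\theta }},{\overline{\theta }}+M)\times (\mathbb {N}{\setminus } \{0\}).
\]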

The next theorem states that, under our standing assumptions, the law of \(U_n\) converges to a unique limiting law as \(n\rightarrow \infty \); moreover, bounded functionals of \(U_n\) obey a law of large numbers.

Theorem 2.6

Let \(EX_{n}^{2}<\infty \) hold for all \(n\ge 1\). Under Assumptions 2.1 and 2.3, there exists a probability \(\upsilon \) on \(\mathscr {B}(\mathscr {U})\) such that \(\textrm{Law}(U_n)\rightarrow \upsilon \) at a geometric speed in total variation as \(n\rightarrow \infty \). Furthermore, for any bounded and measurable function \(\phi :\mathscr {U}\rightarrow \mathbb {R}\),

\[
\frac{1}{n}\sum _{k=1}^{n}\phi (U_{k})\rightarrow \int _{\mathscr {U}}\phi (u)\,\upsilon (\textrm{d}u),\quad n\rightarrow \infty , \tag{7}
\]

almost surely.

Proof

See Sect. 6. \(\square \)
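The crossing times and the chain \(U_n\) can be traced along a simulated or recorded path. The following Python sketch is illustrative only: the function names `crossing_cycles` and `ergodic_average`, and the convention that `x[k-1]` holds \(X_k\), are assumptions of this example and not part of the paper's accompanying code. It reads \(T_n\) as the first time after \(L_{n-1}\) at which the walk is strictly below \({\underline{\theta }}\), and \(L_n\) as the first later time at which it is strictly above \({\overline{\theta }}\).

```python
def crossing_cycles(x, s0, theta_lo, theta_hi):
    """Collect U_n = (X_{T_n}, S_{T_n}, X_{L_n}, S_{L_n}, L_n - T_n) for each completed cycle.

    x[k-1] is the increment X_k and s0 is S_0 (illustrative conventions).
    """
    cycles = []
    s = s0
    buy = None  # (T_n, X_{T_n}, S_{T_n}) while waiting for the up-crossing
    for k, xk in enumerate(x, start=1):
        s += xk  # s now equals S_k
        if buy is None:
            if s < theta_lo:      # first time below the lower threshold: T_n = k
                buy = (k, xk, s)
        elif s > theta_hi:        # first time above the upper threshold: L_n = k
            t, x_t, s_t = buy
            cycles.append((x_t, s_t, xk, s, k - t))
            buy = None
    return cycles


def ergodic_average(phi, cycles):
    """Empirical average (1/n) * sum_k phi(U_k) over the completed cycles."""
    return sum(phi(u) for u in cycles) / len(cycles)
```

For instance, `ergodic_average(lambda u: u[3] - u[1], cycles)` averages the realized fluctuation gains \(S_{L_k}-S_{T_k}\). For a bounded \(\phi \), Theorem 2.6 says that such averages settle down as the number of cycles grows.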

A “mirror image” of the proof of Theorem 2.6 establishes the following result, the “symmetric pair” of Theorem 2.6.

Theorem 2.7

Let \({\tilde{X}}_{t}\), \(t\in \mathbb {N}\) be a Markov chain on the state space \([-M,\infty )\) for some \(M>0\) and define \({\tilde{S}}_{n}:=S_{0}+\sum _{k=1}^{n}{\tilde{X}}_{k}\). Let \(E{\tilde{X}}_{n}^{2}<\infty \) hold for all \(n\ge 1\). Let Assumptions 2.1 and 2.3 hold for \({\tilde{X}}_{t}\). Then the recursively defined quantities \({\tilde{T}}_{0}:=0\) and

are well-defined and finite. Furthermore, there exists a probability \(\upsilon \) on \(\mathscr {B}({\tilde{\mathscr {U}}})\) such that \(\textrm{Law}({\tilde{U}}_n)\rightarrow \upsilon \) at a geometric speed in total variation as \(n\rightarrow \infty \), where

is a homogeneous Markov chain on the state space

For any bounded and measurable function \(\phi :\tilde{{\mathscr {U}}}\rightarrow \mathbb {R}\),

almost surely. \(\square \)

3 Stability of overshoots

In Mijatović and Vysotsky (2020a) the authors consider a zero-mean i.i.d. sequence \(X_{n}\), \(n\ge 1\) and a random variable \(S_{0}\) independent of the \(X_{t}\). They determine the (stationary) limiting law \(\mu _{*}\) for the Markov process of overshoots defined by \(O_{0}:=\max (S_{0},0)\),

where \(L_{n}, T_{n}\) are defined as in (3) but with the choice \({\underline{\theta }}={\overline{\theta }}=0\). They also establish the convergence of \(\textrm{Law}(O_{n})\) to \(\mu _{*}\) under suitable conditions. Generalizations to entrance Markov chains on more general state spaces have been obtained in Mijatović and Vysotsky (2020b).

Using the methods of the present paper, we may obtain generalizations in another direction: we may relax the independence assumption on the \(X_{t}\).

Theorem 3.1

Under Assumptions 2.1 and 2.3, there exists a probability \(\upsilon \) on \(\mathscr {B}((0,\infty ))\) such that \(\textrm{Law}(O_n)\rightarrow \upsilon \) at a geometric speed in total variation as \(n\rightarrow \infty \). Furthermore, for any bounded and measurable function \(\phi :(0,\infty )\rightarrow \mathbb {R}\),

\[
\frac{1}{n}\sum _{k=1}^{n}\phi (O_{k})\rightarrow \int _{(0,\infty )}\phi (z)\,\upsilon (\textrm{d}z),\quad n\rightarrow \infty ,
\]

almost surely.

Proof

See Sect. 6. \(\square \)

Remark 3.2

In the i.i.d. case, Theorem 3.1 applies if \(X_{t}\) is square-integrable, bounded from above and the law of \(X_{t}\) dominates a constant multiple of the Lebesgue measure in a neighborhood of 0. In Mijatović and Vysotsky (2020a) a much larger class of i.i.d. random variables is treated. On the other hand, we can handle Markovian summands, unlike Mijatović and Vysotsky (2020a).

4 Trading with threshold strategies

Let the observed price of an asset be denoted by \(A_{t}\) at time \(t\in \mathbb {N}\). We may think of the price of a futures contract, for instance. Positive prices can also be handled, see Remark 4.4 below. We assume a simple dynamics:

where \(\mu \in \mathbb {R}\), \(S_{0}\in \mathbb {R}\) are constants and \(S_{t}:=S_{0}+\sum _{j=1}^{t}X_{j}\) for a Markov process X with values in \([-M,M]\) for some \(M>0\) and satisfying Assumptions 2.1 and 2.3.

In this price model, \(\mu t\) represents the drift (or “trend”) and \(S_t\) performs fluctuations around the trend (the martingale part). The minorization condition (2) is easy to interpret: whatever the current increment \(x\in [-M,M]\) of the fluctuations S is, with a positive probability the next increment will be small (that is, the price change will be close to 0) and the distribution of the movements of S has no atom, more precisely, it has an absolutely continuous component.

The practical situation we have in mind is an algorithm that tries to “buy low and sell high” an asset at high (but not ultra-high) frequencies, revising the portfolio, say, once every second or every minute. Such an algorithm is run continuously during the trading day which can be considered a “stationary” environment as economic fundamentals do not change significantly on such timescales. It seems that ergodic stochastic control is the right setting for such investment problems: the algorithm obeys the same rules prescribed by its programmer for a very long time (a horizon of one day is very long when the portfolio is revised every second) and its average performance should be optimized by choosing the best parameters (in our case the thresholds), possibly in an adaptive manner. We remark that \(\mu \) in such a setting is negligible and can safely be assumed 0, as often done in papers on high-frequency trading. Our results work nevertheless for arbitrary \(\mu \) which is of interest for trading on different timescales (e.g. daily revision of a portfolio for several months).

Now we set up the elements of our trading mechanism. Let the thresholds \({\underline{\theta }},{\overline{\theta }}\in \mathbb {R}\) be fixed, satisfying \({\underline{\theta }}<0<{\overline{\theta }}\). We interpret \({\underline{\theta }}\) as a level for \(S_{t}\) under which it is advisable to buy the asset. Analogously, it is recommended to sell the asset if \(S_{t}\) exceeds \({\overline{\theta }}\). Thus, to realize a “buying low, selling high”-type strategy, the asset should be bought at the times \(T_{n}\), \(n\ge 1\) and sold at the times \(L_{n}\), \(n\ge 1\), realizing the profit

We now explain the significance of Theorem 2.6 in studying optimal trading with threshold strategies. An investor aims to maximize in \({\underline{\theta }},{\overline{\theta }}\) the long-term average utility from wealth, that is,

where \(u:\mathbb {R}\rightarrow \mathbb {R}\) is a utility function and \(L_{k}(\theta ),T_{k}(\theta )\) refer to the respective stopping times defined in terms of the parameter \(\theta =({\underline{\theta }},{\overline{\theta }})\). The function \(p:\mathbb {R}_{+}\rightarrow \mathbb {R}_{+}\) serves to penalize long waiting times.

Remark 4.1

If the price is modelled by processes with continuous trajectories, as in Cartea et al. (2014), Dai et al. (2010), Zervos et al. (2013) and Zhang (2001) then the thresholds are hit precisely and the profit realized between \(T_{n}\) and \(L_{n}\) is exactly \({\overline{\theta }}-{\underline{\theta }}\). In the present setting (just like in the case of continuous-time processes with jumps), the profit realized may be significantly different due to the overshoot (resp. undershoot) of the level \({\overline{\theta }}\) (resp. \({\underline{\theta }}\)). From the point of view of ergodic control, it is crucial to establish that these overshoots/undershoots tend to a limiting law, which is the central concern of our present paper.

According to Theorem 2.6, the limsup in the above expression is, in fact, a limit, for a large class of u, p. One could easily incorporate various types of transaction costs in the model but we refrain from that.

Example 4.2

Let u, p be non-decreasing functions that are bounded from above (e.g. u can be the exponential utility, expressing high risk-aversion), and assume \(\mu \ge 0\). Since \(S_{L_{k}}-S_{T_{k}}\) is necessarily bounded from below, (7) holds with the choice \(\phi (U_{k}):= u(S_{L_k}-S_{T_k}+\mu (L_k-T_k))-p(L_{k}-T_{k})\) and the limsup is a limit in (13) above.
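The empirical counterpart of the objective in this example is a plain average over completed trading cycles. The sketch below is a hypothetical illustration: the function name, the input format (triples of buy level, sell level and duration) and the specific choices \(u(x)=-e^{-\gamma x}\) and \(p(d)=\min (cd,p_{\max })\) are assumptions made for this example, chosen so that u is non-decreasing and bounded from above and p is non-decreasing and bounded.

```python
import math


def long_run_objective(cycles, mu=0.0, risk_aversion=1.0,
                       penalty_rate=0.01, penalty_cap=1.0):
    """Average of u(gain_k) - p(duration_k) over completed cycles.

    cycles: iterable of (S_T, S_L, duration) triples (hypothetical input format).
    u(x) = -exp(-risk_aversion * x): non-decreasing, bounded from above by 0.
    p(d) = min(penalty_rate * d, penalty_cap): non-decreasing and bounded.
    """
    total, n = 0.0, 0
    for s_buy, s_sell, duration in cycles:
        gain = s_sell - s_buy + mu * duration   # S_L - S_T + mu * (L - T)
        utility = -math.exp(-risk_aversion * gain)
        penalty = min(penalty_rate * duration, penalty_cap)
        total += utility - penalty
        n += 1
    return total / n
```

Evaluating this average on a grid of threshold pairs would give a crude picture of how the objective depends on \(\theta \), without any claim of optimality.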

Remark 4.3

In the alternative setting of Theorem 2.7 above (with \({\tilde{X}}_{n}=X_{n}\)), the trader sells one unit of the financial asset at \({\tilde{L}}_{n}\) (shortselling) and then closes the position at \({\tilde{T}}_{n}\) thus realizing a profit

This is the analogue (with short positions) of the long-position strategy realizing (10). Theorem 2.7 implies that

is a limit in this case, too.

In future work, we intend to optimize \({\underline{\theta }},{\overline{\theta }}\) by means of adaptive control, using recursive schemes such as the Kiefer–Wolfowitz algorithm, see (Zhuang, 2008) and Section 6 of Rásonyi and Tikosi (2022). To prove the convergence of such procedures, it is a prerequisite that the process \({S_{L_k}-S_{T_k}+\mu (L_k-T_k)}\), \(k\in \mathbb {N}\) has favorable ergodic properties. This is precisely the content of Theorem 2.6 above.

Remark 4.4

In an alternative setting, \(A_{t}\) may model the logprice of an asset. In that case investing one dollar between \(T_{n}\) and \(L_{n}\) yields \(\exp \left( S_{L_{n}}-S_{T_{n}}+\mu (L_{n}-T_{n})\right) \) dollars. Let \(u:(0,\infty )\rightarrow \mathbb {R}\), \(p:\mathbb {R}_{+}\rightarrow \mathbb {R}_{+}\) be non-decreasing functions, p bounded and u bounded from above (such as a negative power utility function). In this setting the optimization

corresponds to maximizing the utility of the long-term investment of one dollar (minus an impatience penalty), using threshold strategies controlled by \(\theta \). When \(\mu \ge 0\), the limsup is a limit, again by Theorem 2.6.

Example 4.5

Let us look at a concrete case now, a very simple stochastic volatility model where the asset’s current volatility may depend on the previous price movement. Such models may display the important “leverage effect” observed in market data: that price movements are negatively correlated with volatility, see (Cont, 2001).

Let the dynamics be determined by \(X_{t+1}=\sigma (X_t)\varepsilon _{t+1}\) with a zero-mean i.i.d. sequence \(\varepsilon _t\) and with a measurable function \(\sigma :\mathbb {R}\rightarrow (0,\infty )\). Assume that \(\sigma \), \(\varepsilon _0\) are bounded, \(\varepsilon _0\) has an absolutely continuous law (with respect to the Lebesgue measure) which is bounded away from 0 in a neighborhood of 0. It is clear that both Assumptions 2.1 and 2.3 hold true and thus both Theorems 2.6 and 2.7 apply.
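A minimal simulation of such a model may help fix ideas. In the sketch below, the two-valued volatility function and the choice of \(\varepsilon _t\) uniform on \([-1,1]\) are assumptions made purely for illustration. They are one instance of the bounded, zero-mean, absolutely continuous noise described above, not the specification used in Sect. 5.

```python
import numpy as np


def sigma(x, low=0.5, high=1.0):
    """Illustrative volatility function: larger after a negative move (leverage effect)."""
    return high if x < 0 else low


def simulate_increments(n, x0=0.0, seed=0):
    """Simulate X_{t+1} = sigma(X_t) * eps_{t+1} with eps i.i.d. uniform on [-1, 1]."""
    rng = np.random.default_rng(seed)
    eps = rng.uniform(-1.0, 1.0, size=n)
    x = np.empty(n)
    prev = x0
    for t in range(n):
        prev = sigma(prev) * eps[t]   # bounded by `high`, conditional mean zero
        x[t] = prev
    return x
```

With these illustrative choices the increments take values in \([-1,1]\), and the conditional law of \(X_{t+1}\) given \(X_t=x\) is uniform on \([-\sigma (x),\sigma (x)]\), so it dominates one half of the normalized Lebesgue measure on \([-1/2,1/2]\).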

It is an intriguing question how realistic such a view of trading is in real-life situations. See (Gál & Lovas, 2022) where evidence on the law of large numbers is presented for both simulated autoregressive \(X_t\) and for empirical data (S&P 500 index).

We briefly compare our approach to existing ones. We do not survey the large literature on switching problems, see Chapter 5 of Pham (2008), only some of the directly related papers. Formulations as optimal stopping problems with discounting appear e.g. in Shiryaev et al. (2008), Dai et al. (2010) and Zhang (2001). Sequential buying and selling decisions are considered for mean-reverting assets in Zhang and Zhang (2008) and Song et al. (2009). Mean-reversion trading is also analysed in Leung and Li (2015). Zervos et al. (2013) treat a general diffusion setting, again using discounting.

In our setting of intraday trading discounting is not an appealing option: on such timescales the decrease of the value of future money is not manifested. Here the ergodic control of averages seems more natural an objective to us. Recall also (Cartea et al., 2014) exploring high-frequency perspectives maximizing expectation on a finite horizon (without discounting).

All the above-mentioned papers are about diffusion models where the phenomena of “overshooting” and “undershooting” do not appear. These phenomena are, on the contrary, the main focus of the present work. Similar problems seem to come up in an ergodic control setting for continuous-time price processes with jumps. We are unaware of any related studies.

5 Buying low and selling high in a stochastic volatility model: a numerical experiment

Market risk and volatility are closely related concepts in financial markets. Market risk, also known as systematic risk, refers to the uncertainty that results from changes in the overall market or the economy. Volatility, on the other hand, refers to the degree of variation of a trading price over time. In other words, it is a measure of how much the price of a stock or other asset fluctuates in a given period of time. In general, increased market risk leads to increased volatility. For example, when there is a recession, investors become more fearful and may sell their assets, which causes the market to drop and the prices of assets to become more volatile. This can cause the prices of several assets to rise or fall at the same time, resulting in increased volatility. However, increased market risk does not always mean increased volatility, as there could be specific market conditions that could lead to the opposite, see (Risk vs. Volatility, 2023).

Volatility is not always a bad thing, as it can sometimes provide entry points from which investors can take advantage. On one hand, it can create opportunities for investors who are able to tolerate risk and have a long-term investment horizon. When stock prices are volatile, there are more opportunities to buy low and sell high. On the other hand, volatility can be a threat to investors who are strongly risk-averse or have a short-term investment horizon, because it can lead to significant losses in a short period of time, see (Market Volatility, 2023; Is there opportunity, 2023; If volatility is risk, 2023; Stock Market Volatility, 2023).

Out of the various possibilities for exploiting price fluctuations (option trading, etc.), buying low and selling high (BLSH) stands out as probably the simplest strategy that investors use to profit from stock market volatility. This can be difficult to do in practice, as it requires being able to predict market movements accurately, but many investors use technical analysis, fundamental analysis or both to help them time the market.

We consider a slightly modified version of a widespread standard stochastic volatility (SV) model. (It can even be used for energy prices, see (Chan & Grant, 2016)). As it was outlined in Remark 4.4, let \(A_t\) stand for the logarithmic price of the underlying asset. We intend to study the sequence of daily logarithmic returns \((r_n)_{n\in \mathbb {N}}\) over investment periods i.e. between \(T_n\) and \(L_n\),

where \(U_n\) is given by (4). Since \(\mu \) is a deterministic additive constant whose value is supposed to be known (estimated), from now on, we may and will assume that \(\mu =0\).

We model the time evolution of the daily log-returns of the underlying asset using the truncated stochastic volatility model

where for some fixed constants \(M_1, M_2>0\),

Furthermore, \((\varepsilon _t^X)_{t\in \mathbb {N}}\) and \((\varepsilon _t^\sigma )_{t\in \mathbb {N}}\) are i.i.d. sequences of standard Gaussian random variables, independent of each other, and the autocorrelation factor \(\rho \) can be chosen between \(-1\) and 1.

It is also straightforward to check that \(\mathbb {E}(X_t^2)<\infty \), and by construction, \(\mathbb {E}(X_t\mid \sigma _t)=0\) hence Assumption 2.3 is clearly satisfied. (We remark that, for \(M_1,M_2\) large, the term \(\mathbb {E}({\tilde{X}}_t\mid \sigma _t)\) is practically 0.) Note that, for large \(M_1\) and \(M_2\), \(X_{t}\approx \sigma _t\varepsilon _t^X\), moreover, the state space of \((X_t)_{t\in \mathbb {N}}\) is bounded.

Remark 5.1

Theorem 2.6 does not apply to this model directly since \(X_t\) is not Markovian. However, \((\sigma _t,X_t)\) is Markovian. Thus, instead of (4), we have to consider \(U_{n}:=(\sigma _{T_n},X_{T_{n}},S_{T_{n}},X_{L_{n}},S_{L_{n}},L_n-T_n)\). Our arguments can be extended to this setting, too, but this is out of the scope of the present paper.

By observing the process \((r_n)_{n\ge 0}\) for a sufficiently long period of time, we can draw conclusions about the dependence of

on model parameters, where in the above expression \(\upsilon \) denotes the limiting law of \(U_n\) as \(n\rightarrow \infty \). This opens the door to the statistical analysis of such models.

We also wrote a numerical simulation in Python whose source code has been uploaded to the public GitHub repository https://github.com/mathlov89/Trading-With-Threshold-Strategies.git. In our experiments, we set \(M_1=10^8\), \(M_2=1\), and simulated one hundred trading cycles (buying and selling periods).

The simulated paths of the processes \(\sigma _t\), \(X_t\), and \(S_t\), \(0\le t\le 5000\), are shown in Fig. 1.

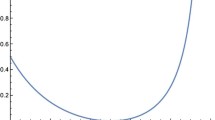

The graph in Fig. 2 shows the average of the daily logarithmic returns achieved over 100 trading periods under different autocorrelation parameter settings. We conjecture that the BLSH strategy can be profitable only when \(\rho \) is far from zero, that is, when volatility shows clustering behavior.

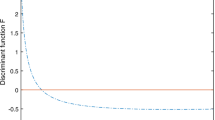

The left side of Fig. 3 shows the empirical histogram of the time elapsed between buying and selling. The right side shows the ergodic average of the daily logarithmic returns realized on each trading cycle. In most cases, the investor can sell the stock shortly after entering the market, and thus close the position. However, with a small probability, it can happen that the price reaches the selling point only about 1.5 million steps after buying. This means, in practice, that an investor following the threshold trading strategy might have to wait an unreasonably long time in certain cases. Adding a penalty term depending on \(L_n-T_n\) thus seems a reasonable idea.

Based on these experiments, we conclude that although the BLSH strategy can help investors profit from price oscillations, the liquidity of the investment may decrease significantly. Hence, from an investor’s perspective, it is advisable to use this strategy in combination with other strategies rather than on its own.

6 Proofs

Proof of Theorem 2.6

Iterated random function representation of Markov chains on standard Borel spaces is a commonly used construction, see e.g. (Bhattacharya & Waymire, 1990; Bhattacharya & Majumdar, 1999). A similar representation for \((X_n)_{n\in \mathbb {N}}\) is shown in Lemma 6.1 below which will play a crucial role in the proof. Although the proof is quite standard, we present it for the reader’s convenience.

Lemma 6.1

Let \((\xi _n)_{n\in \mathbb {N}}\) and \((\eta _n)_{n\in \mathbb {N}}\) be i.i.d. sequences, independent of each other, and also independent of \(\sigma (X_0, S_0)\), moreover let \(\xi _0,\eta _0\) be uniform on [0, 1]. Then there exists a map \(\Phi :\mathscr {X}\times [0,1]\times [0,1]\rightarrow \mathscr {X}\) such that for all \(x\in \mathscr {X}\), and \(u\in [0,1]\), we have

where \(h,\alpha >0\) are as in Assumption 2.1. Furthermore, the process \((X_n')_{n\in \mathbb {N}}\) given by the recursion \(X_0'= X_0\), \(X_{n+1}' = \Phi (X_n', \xi _{n+1}, \eta _{n+1})\), \(n\in \mathbb {N}\) is a version of \((X_n)_{n\in \mathbb {N}}\).

Proof

For \(x\in \mathscr {X}\) and \(A\in \mathscr {B}(\mathscr {X})\), let us consider the decomposition

where by Assumption 2.1,

is a probability kernel. For \(x\in \mathscr {X}\) and \(u,v\in [0,1]\), we define

where \(q^{-1}(x,u):=\inf \{r\in \mathbb {Q}\mid q(x, (-\infty ,r])\ge u\}\), \(u\in [0,1]\) is the pseudoinverse of the cumulative distribution function \(r\mapsto q(x,(-\infty ,r])\), \(x\in \mathscr {X}=(-\infty ,M]\).

Obviously, (18) holds true, and thus for any fixed \(u\in [0,1]\), the random map \(x\mapsto \Phi (x, u, \eta _{n+1})\) is constant on \(\mathscr {X}\) with probability \(\alpha \) showing that \(X_n'\) forgets its previous state with positive probability. This observation will play a central role later.

On the other hand, by the definition of the pseudoinverse, \(\textrm{Law}(q^{-1}(x,\xi _0))=q(x,\cdot )\), and thus we can write

To sum up, the chains \((X_n)_{n\in \mathbb {N}}\) and \((X_n')_{n\in \mathbb {N}}\) have the same transition kernel, and their initial states also coincide showing that these processes are versions of each other. \(\square \)
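To make the construction concrete, here is a hedged Python sketch of a map of the type \(\Phi \) for one specific kernel. Everything in it is an assumption made for illustration: the kernel \(P(x,\cdot )\) is taken to be uniform on \([-\sigma (x),\sigma (x)]\) with \(\sigma (x)\in \{1/2,1\}\), which gives \(h=1/2\) and \(\alpha =1/2\). The branch rule (use \(h(2u-1)\) when \(v<\alpha \), and the residual quantile \(q^{-1}(x,u)\) otherwise) is our reading of the lemma rather than a quotation of it.

```python
import random

H, ALPHA = 0.5, 0.5   # h = sigma_min, alpha = sigma_min / sigma_max (this example only)


def sigma(x):
    """Illustrative volatility: 1.0 after a negative move, 0.5 otherwise."""
    return 1.0 if x < 0 else 0.5


def q_inv(x, u):
    """Quantile function of the residual kernel q(x, .) = (P(x, .) - ALPHA * ell) / (1 - ALPHA).

    P(x, .) is uniform on [-sigma(x), sigma(x)], so q(x, .) has the piecewise constant
    density `inner` on [-H, H] and `outer` on H < |y| <= sigma(x).
    """
    s = sigma(x)
    inner = (1.0 / (2.0 * s) - ALPHA / (2.0 * H)) / (1.0 - ALPHA)
    outer = 1.0 / (2.0 * s * (1.0 - ALPHA))
    tail = outer * (s - H)            # mass of each outer piece
    if u < tail:
        return -s + u / outer
    if u > 1.0 - tail:
        return s - (1.0 - u) / outer
    if inner <= 0.0:                  # no mass on [-H, H]; only reached when u == tail
        return -H
    return -H + (u - tail) / inner


def phi(x, u, v):
    """Phi(x, u, v): when v < ALPHA the state x is forgotten and the draw is uniform on [-H, H]."""
    return H * (2.0 * u - 1.0) if v < ALPHA else q_inv(x, u)


def next_state(x, rng):
    """One step X'_{n+1} = Phi(X'_n, xi_{n+1}, eta_{n+1}) with independent uniforms."""
    return phi(x, rng.random(), rng.random())
```

In this sketch, `phi(x, u, v)` does not depend on `x` whenever `v < ALPHA`, which is the forgetting property used later in the proof. Mixing the two branches reproduces the uniform law on \([-\sigma (x),\sigma (x)]\).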

Since we are interested in the distribution of \(U_n\), from now on we may and will assume that the random walk \((S_n)_{n\in \mathbb {N}}\) is driven by \((X_n')_{n\in \mathbb {N}}\), whereby for every \(n\in \mathbb {N}\), each of \(X_{T_n}\), \(S_{T_n}\), \(X_{L_n}\), \(S_{L_n}\), \(L_n\) and \(T_n\) is a function of \(X_0, S_0\), \((\xi _n)_{n\in \mathbb {N}}\) and \((\eta _n)_{n\in \mathbb {N}}\).

In what follows, we are going to prove that the minorization property of \((X_n)_{n\ge 1}\) is inherited by \((U_n)_{n\ge 1}\). Let us denote the transition kernel of U by \(Q: \mathscr {U}\times \mathscr {B}(\mathscr {U})\rightarrow [0,1]\), that is for all \(y\in \mathscr {U}\) and \(B\in \mathscr {B}(\mathscr {U})\)

holds. We aim to show that there exists a non-zero Borel measure \({\tilde{\kappa }}:\mathscr {B}(\mathscr {U})\rightarrow [0,\infty )\) such that for all \(y\in \mathscr {U}\) and \(B\in \mathscr {B}(\mathscr {U})\),

For \(n\in \mathbb {N}_+\), we define \(\tau _n = \sup \{t\in \mathbb {N}\mid \eta _{L_n+k}<\alpha ,\,k=1,\ldots ,t \}\). Clearly, \(\tau _n\) is independent of \(U_n\), moreover it follows a \(\textrm{Geo} (1-\alpha )\) distribution counting the number of failures until the first success i.e. \(\mathbb {P}(\tau _n=j)=\alpha ^j (1-\alpha )\), \(j\in \mathbb {N}\).
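As a quick check of the stated law of \(\tau _n\), the following one-line computation may be helpful. It is added for convenience and uses only that the variables \(\eta _{L_n+k}\), \(k\ge 1\), are i.i.d. uniform on [0, 1] and independent of \(U_n\):

\[
\mathbb {P}(\tau _n=j)=\mathbb {P}\left( \eta _{L_n+1}<\alpha ,\ldots ,\eta _{L_n+j}<\alpha ,\ \eta _{L_n+j+1}\ge \alpha \right) =\alpha ^{j}(1-\alpha ),\qquad \mathbb {P}(\tau _n\ge k)=\alpha ^{k}.
\]

In words, with probability \(\alpha ^{k}\) the first k increments after \(L_n\) are all drawn from the minorizing uniform component.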

Now, let \(y=({\underline{x}},{\underline{s}},{\overline{x}},{\overline{s}},r)\in \mathscr {U}\) and \(B\in \mathscr {B}(\mathscr {U})\) be arbitrary and fixed. By the tower rule, we have

where we used that the sigma algebras \(\sigma (\xi _{L_n+k},\,\eta _{L_n+k},\,k\ge 1)\) and \(\sigma (U_n)\) are independent, moreover on sets \(\{S_{L_{n}}={\bar{s}}, \tau _{n}=k\}\), we have

implying that \(U_{n+1}\) and \((X_{T_{n}},S_{T_{n}},X_{L_{n}},L_n-T_n)\) are conditionally independent given \(\{S_{L_{n}}={\bar{s}}, \tau _{n}=k\}\) whenever \(k\ge 1\).

Furthermore, we can write

Let us introduce the auxiliary random walk \(W_n = {\overline{s}}+h\sum _{i=1}^{n} (2\xi _i-1)\), and we introduce the associated quantities \(L_{0}^W:=0\) and for \(n\in \mathbb {N}\),

Similarly, we define \(U_n^W=\left( h(2\xi _{T_n^W}-1),W_{T_n^W},h(2\xi _{L_n^W}-1),W_{L_n^W}, L_n^W-T_n^W\right) \), \(n\in \mathbb {N}\).

Obviously, for fixed \(1\le j<l\le k\), we have

We estimate this probability from below by taking into account only trajectories that consist of just one decreasing and one increasing segment (see Fig. 4 for an illustration).

Note that the conditional distribution of \((W_1,\ldots ,W_l)\) given \(\bigcap _{i=1}^{j} \{\xi _i<1/2\}\) and \(\bigcap _{i=j+1}^{l} \{\xi _i\ge 1/2\}\) coincides with the distribution of \((W'_1,\ldots ,W'_l)\), where

with the convention that empty sums are defined to be zero.

Using this, and that \(B\subset \mathscr {U}=(-\infty ,0)\times (-\infty ,{\underline{\theta }})\times (0,M]\times ({\overline{\theta }},{\overline{\theta }}+M)\times (\mathbb {N}{\setminus } \{0\})\), we can write

where for \(m\in \mathbb {N}_+\), \(f_m:[0,\infty )\rightarrow [0,\infty )\) stands for the probability density function of the sum of m independent random variables each having a uniform distribution on [0, 1]. Now, we can evaluate the quadruple integral using the substitution \(x_1=-hw\), \(x_2={\overline{s}}-hu-hw\), \(x_3=hz\), \(x_4={\overline{s}}-hu-hw+hv+hz\), and thus we have

where \({\textbf{x}}\) is a shorthand notation for \((x_1,x_2,x_3,x_4)\), \(\lambda \) is the Lebesgue measure on \(\mathbb {R}^4\), and \({\mathscr {V}}\) is used for \((-\infty ,0)\times (-\infty ,{\underline{\theta }})\times (0,M]\times ({\overline{\theta }},{\overline{\theta }}+M)\), moreover for \(j,m>1\)

Substituting this back into (22) and reindexing by \(m=l-j\) yields

and thus by (20), we arrive at

where \(\delta \) is the usual counting measure on \(\mathbb {N}\).

Let us observe that on \(\textrm{Supp}(g)\subseteq \mathscr {U}\), \({\underline{\theta }}\le x_2-x_1\le {\underline{\theta }}+h\). Now, we fix \(0<{\tilde{\gamma }}<\min \left( ({\overline{\theta }}-{\underline{\theta }})/h,1\right) \) and consider only \(x_1\) and \(x_2\) satisfying \({\underline{\theta }}\le x_2-x_1\le {\underline{\theta }}+{\tilde{\gamma }}h\). Furthermore, since the jumps of \((S_n)_{n\in \mathbb {N}}\) are bounded from above by M, we have \({\overline{\theta }}<{\overline{s}}<{\overline{\theta }}+ M\) hence for the argument of \(f_{j-1}\) in (25), we get

provided that \({\underline{\theta }}\le x_2-x_1\le {\underline{\theta }}+{\tilde{\gamma }}h\).

Introducing \(\omega _j=\min \{f_{j-1}(t)\mid ({\overline{\theta }}-{\underline{\theta }})/h-{\tilde{\gamma }}\le t\le ({\overline{\theta }}-{\underline{\theta }})/h+M/h\}\), we arrive at the estimate

which is uniform in \({\overline{s}}\), and \(\omega _j>0\) whenever \(j>({\overline{\theta }}-{\underline{\theta }})/h+M/h+1\).
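The positivity condition on \(\omega _j\) can be checked numerically, since \(f_m\) is the classical Irwin–Hall density. The sketch below is an illustration under stated assumptions: the function names are hypothetical, the closed-form alternating sum for \(f_m\) is the standard textbook formula, and the minimum is approximated on a finite grid that contains both endpoints of the interval.

```python
import math


def irwin_hall_pdf(m, t):
    """Density f_m of the sum of m independent Uniform[0, 1] variables (Irwin-Hall)."""
    if t <= 0.0 or t >= m:
        return 0.0
    total = sum((-1) ** k * math.comb(m, k) * (t - k) ** (m - 1)
                for k in range(int(math.floor(t)) + 1))
    return total / math.factorial(m - 1)


def omega(j, theta_lo, theta_hi, h, M, gamma, grid=201):
    """Grid approximation of omega_j = min of f_{j-1} over the interval used in the proof (j >= 2)."""
    a = (theta_hi - theta_lo) / h - gamma
    b = (theta_hi - theta_lo) / h + M / h
    return min(irwin_hall_pdf(j - 1, a + (b - a) * i / (grid - 1))
               for i in range(grid))
```

For example, with \({\underline{\theta }}=-1\), \({\overline{\theta }}=1\), \(h=1/2\), \(M=1\) and \({\tilde{\gamma }}=1/2\), the interval is \([3.5,6]\). The grid minimum then vanishes for \(j=7\) and is positive for \(j=8\), in line with the condition \(j>({\overline{\theta }}-{\underline{\theta }})/h+M/h+1=7\).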

If we put all together, for \(C_h = \{({\textbf{x}},m)\in \mathscr {U}\mid x_1\in [-h,0];\,{\underline{\theta }}\le x_2-x_1 \le {\underline{\theta }}+{\tilde{\gamma }} h;\,x_3\in [0,h];\,x_4-x_3\le {\overline{\theta }};\,m\ge 2\}\), we obtain

where the right hand-side depends only on \(\alpha , M, h, {\underline{\theta }}, {\overline{\theta }}\), but not on \({\overline{s}}\) hence (19) holds with

which is obviously a non-zero Borel measure on \(\mathscr {B}(\mathscr {U})\).

To sum up, we showed that the chain \((U_n)_{n\ge 1}\) satisfies the uniform minorization condition (19), and thus by Theorem 16.2.2 in Meyn and Tweedie (1993), there exists a probability measure \(\upsilon \), independent of \((S_0,X_0)\), such that \(\textrm{Law}(U_n)\rightarrow \upsilon \) in total variation. Moreover, by Theorem 17.0.1 in Meyn and Tweedie (1993), for bounded measurable functionals of \(U_n\) the law of large numbers holds as it is stated in Theorem 2.6. (Actually, even a central limit theorem could be established.) This completes the proof. \(\square \)

Proof of Theorem 3.1

The idea of the proof is similar to that of Theorem 2.6, but the details are somewhat simpler. We only sketch the main steps.

We consider the process \(Z_n = (X_{L_n}, S_{L_n})\), \(n>1\), which is obviously a time-homogeneous Markov chain on the state space \(\triangle :=\{(x,s)\in (0,M]^2\mid x\ge s\}\). In what follows, we prove that the chain \((Z_n)_{n>1}\) satisfies a minorization condition similar to (19) in the proof of Theorem 2.6. More precisely, we aim to show that there exists a non-zero Borel measure \(\beta :\mathscr {B}(\triangle )\rightarrow [0,\infty )\) such that for all \(z\in \triangle \) and \(A\in \mathscr {B}(\triangle )\),

\[Q(z,A)\ge \beta (A), \tag{28}\]

where \(Q:\triangle \times \mathscr {B}(\triangle )\rightarrow [0,1]\) is the transition kernel of the chain \((Z_n)_{n\in \mathbb {N}}\).

Let \((\xi _n)_{n\in \mathbb {N}}\), \((\eta _n)_{n\in \mathbb {N}}\), and \((\tau _n)_{n\in \mathbb {N}}\) be as in the proof of Theorem 2.6. For \(z=({\overline{x}},{\overline{s}})\in \triangle \) and \(A\in \mathscr {B}(\triangle )\) arbitrary and fixed, by the tower rule, we have

where we applied the same principles as in the derivation of (20).

Again by introducing the auxiliary random walk \(W_n = {\overline{s}}+h\sum _{j=1}^{n} (2\xi _j-1)\), and the associated quantities \(L_n^W\), \(Z_n^W=(h(2\xi _{L_n^W}-1),W_{L_n^W})\), \(n\in \mathbb {N}\), where \(L_0^W:=0\) and for \(n\in \mathbb {N}\), \(L_{n+1}^W:=\inf \{k>L_n^W\mid W_{k-1}\le 0<W_k\}\), for fixed \(2\le l\le k\), we can write

where similarly to (24), we have taken into account trajectories decreasing in \(l-1>0\) steps and increasing only in the l-th step. For the conditional probability, we have

where \(f_m:[0,\infty )\rightarrow [0,\infty )\) is the probability density function of the sum of \(m\ge 1\) independent random variables each having a uniform distribution on [0, 1], \({\textbf{x}}=(x_1,x_2)\), and \(\lambda \) denotes the Lebesgue measure on \(\mathbb {R}^2\).
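The auxiliary walk \(W_n\) and its crossing times \(L_n^W\) can be traced on a concrete path. The Python sketch below builds \(W_n={\overline{s}}+h\sum _{j\le n}(2\xi _j-1)\) from a given list of values \(\xi _j\) and extracts the indices \(k\) with \(W_{k-1}\le 0<W_k\), together with the pairs \(Z_n^W\). The function names are hypothetical, and the \(\xi _j\) are simply assumed to be numbers in [0, 1] supplied by the caller; their actual law is fixed in the proof of Theorem 2.6 and is not reproduced here.

```python
from typing import List, Sequence, Tuple


def auxiliary_walk(s_bar: float, h: float, xis: Sequence[float]) -> List[float]:
    """Path W_0, ..., W_n with W_n = s_bar + h * sum_{j<=n} (2*xi_j - 1)."""
    path = [s_bar]
    for xi in xis:
        path.append(path[-1] + h * (2.0 * xi - 1.0))
    return path


def upcrossing_times(path: Sequence[float]) -> List[int]:
    """Indices k >= 1 with W_{k-1} <= 0 < W_k, i.e. L_1^W, L_2^W, ..."""
    return [k for k in range(1, len(path)) if path[k - 1] <= 0.0 < path[k]]


def overshoot_states(s_bar: float, h: float,
                     xis: Sequence[float]) -> List[Tuple[float, float]]:
    """Pairs Z_n^W = (h * (2*xi_L - 1), W_L) at the crossing times L = L_n^W."""
    path = auxiliary_walk(s_bar, h, xis)
    # xis[k - 1] is xi_k because Python lists are 0-indexed.
    return [(h * (2.0 * xis[k - 1] - 1.0), path[k]) for k in upcrossing_times(path)]


if __name__ == "__main__":
    # Hypothetical input: two decreasing steps, then one increasing step, repeated.
    xis = [0.0, 0.25, 1.0, 0.0, 1.0]
    print(auxiliary_walk(0.75, 1.0, xis))
    print(upcrossing_times(auxiliary_walk(0.75, 1.0, xis)))
    print(overshoot_states(0.75, 1.0, xis))
```

In this toy path the first crossing occurs at step 3, after two decreasing steps and a single increasing one, which is the type of trajectory singled out in the estimate above with \(l=3\).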

Notice that if \((x_1,x_2)\in (0,h]^2\cap \triangle \), then \(0\le x_1-x_2\le \min (M,h)\); moreover, \(0<{\overline{s}}\le M\), hence \(0\le ({\overline{s}}-(x_2-x_1))/h\le M/h+\min (M/h,1)\), and thus we obtain

where \({\tilde{\gamma }}'\) can be any fixed number in (0, 1), and \(\omega _l' = \inf \{f_{l-1}(t)\mid {\tilde{\gamma }}'\min (M/h,1)\le t\le M/h+\min (M/h,1)\}\), which is a positive number not depending on \({\overline{s}}\) whenever \(l>M/h+1\).

Putting everything together, we obtain the following lower estimate

where the right-hand side depends only on \(\alpha ,M,h\) and the choice of \({\tilde{\gamma }}'\in (0,1)\), but does not depend on z; moreover,

defines a non-zero Borel measure on \(\mathscr {B}(\triangle )\), and thus (28) holds with this \(\beta \).

To sum up, we proved that the chain \((Z_n)_{n\in \mathbb {N}}\) satisfies the uniform minorization condition, and thus it admits a unique invariant probability measure \(\pi \) such that \(\textrm{Law}(Z_n)\rightarrow \pi \) at a geometric rate in total variation as \(n\rightarrow \infty \) (see, for example, Lemma 18.2.7 and Theorem 18.2.4 in Douc et al. (2018)). This completes the proof of Theorem 3.1. \(\square \)
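The mechanism behind the geometric rate can be seen on a toy example. The Python sketch below takes a hypothetical three-state transition matrix (not the kernel \(Q\) of the chain \(Z_n\)), computes the total mass \(\varepsilon \) of the minorizing measure \(\beta (j)=\min _i P(i,j)\), and compares the total variation distance between the laws started from two different states with the bound \((1-\varepsilon )^n\). All names and numerical values are assumptions made for illustration.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def step(law: Sequence[float], P: Matrix) -> List[float]:
    """One step of the chain: returns the row vector law * P."""
    n = len(P)
    return [sum(law[i] * P[i][j] for i in range(n)) for j in range(n)]


def tv(mu: Sequence[float], nu: Sequence[float]) -> float:
    """Total variation distance sup_A |mu(A) - nu(A)| on a finite state space."""
    return 0.5 * sum(abs(a - b) for a, b in zip(mu, nu))


def minorizing_mass(P: Matrix) -> float:
    """Total mass of beta(j) = min_i P[i][j], so that P(i, A) >= beta(A)."""
    return sum(min(row[j] for row in P) for j in range(len(P)))


# Hypothetical transition matrix; every entry is positive, so beta is non-zero.
P = [[0.5, 0.3, 0.2],
     [0.2, 0.5, 0.3],
     [0.3, 0.2, 0.5]]

if __name__ == "__main__":
    eps = minorizing_mass(P)
    mu, nu = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]  # two different initial states
    for n in range(1, 8):
        mu, nu = step(mu, P), step(nu, P)
        print(n, tv(mu, nu), (1.0 - eps) ** n)
```

The printed distances stay below \((1-\varepsilon )^n\) at every step, which is the finite-state analogue of the uniform geometric convergence invoked in the proof.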

Remark 6.2

We explain a seemingly innocuous but actually powerful extension of some of the arguments above. Let \(X_{t}\) be a time-inhomogeneous Markov chain with kernels \(P_{n}\), \(n\ge 1\), such that

Let Assumption 2.1 hold for each \(P_{n}\), \(n\in \mathbb {N}\) (with the same \(\alpha ,h\)) and let Assumption 2.3 hold for each \(P_{n}\). In this case, \(U_{t}\), \(t\ge 2\), will be a time-inhomogeneous Markov chain, and repeating the argument of the proof of Theorem 2.6 establishes the existence of a non-zero measure \({\tilde{\kappa }}\) such that \(Q_{n}(x,A)\ge {\tilde{\kappa }}(A)\), \(x\in {\mathscr {U}}\), \(A\in {\mathscr {B}}({\mathscr {U}})\), \(n\ge 3\), where \(Q_{n+1}(x,A)=P(U_{n+1}\in A|U_{n}=x)\) is the transition kernel of U.
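One standard consequence of such a uniform bound, not spelled out in the remark, is a contraction in total variation that survives the loss of time-homogeneity. The following display is a sketch under the assumption that \(Q_n(x,A)\ge {\tilde{\kappa }}(A)\) holds for all \(x\), \(A\) and \(n\ge 3\); here \(\mu ,\nu \) denote arbitrary probability measures on \({\mathscr {U}}\), and \(\varepsilon \) is notation introduced only for this illustration.

```latex
% Assumption: Q_n(x, A) >= \tilde{\kappa}(A) for all x, A and all n >= 3.
\[
  \varepsilon := \tilde{\kappa}(\mathscr{U}) > 0, \qquad
  \| \mu Q_n - \nu Q_n \|_{TV} \le (1-\varepsilon)\, \| \mu - \nu \|_{TV},
  \quad n \ge 3,
\]
% Iterating over the n - 2 kernels Q_3, ..., Q_n:
\[
  \| \mu Q_3 Q_4 \cdots Q_n - \nu Q_3 Q_4 \cdots Q_n \|_{TV}
  \le (1-\varepsilon)^{n-2}\, \| \mu - \nu \|_{TV}.
\]
```

Thus the influence of the initial law is forgotten at a geometric rate even though the kernels change with \(n\), which gives one way to read the word "powerful" in the remark.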

Remark 6.3

One could treat certain stochastic volatility-type models where \(X_{t}=\sigma _{t}\varepsilon _{t}\) with \(\varepsilon _{t}\) i.i.d. and \(\sigma _{t}\) a Markov process. In this case \(X_{t}\) is not Markovian but the pair \((X_{t},\sigma _{t})\) is. An extension to even more general non-Markovian \(X_{t}\) also seems possible. We do not pursue these generalizations here.

7 Conclusions

It would be desirable to remove the (one-sided) boundedness assumption on the state space of \(X_{t}\) and relax the minorization condition (2) to some kind of local minorization. Due to the rather complicated dynamics of \(U_{t}\) such extensions do not appear to be straightforward at all.

Removing the boundedness hypothesis on u, p in Sect. 4 would also be desirable but looks challenging.

Replacing the constant drift \(\mu \) by a functional of \(X_{t}\) would also significantly extend the family of models in consideration.

An adaptive optimization of the thresholds \({\underline{\theta }},{\overline{\theta }}\) could be performed using the Kiefer–Wolfowitz algorithm, as proposed in Section 6 of Rásonyi and Tikosi (2022). A number of technical conditions (e.g. mixing properties, smoothness of the laws) need to be checked before the results of Rásonyi and Tikosi (2022) can be applied, but the ergodic properties established in this article strongly suggest that this programme can indeed be carried out.
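For orientation, a generic Kiefer–Wolfowitz recursion can be sketched in a few lines of Python. This is a minimal illustration under strong assumptions, not the procedure of Rásonyi and Tikosi (2022): the objective `toy_gain` is a hypothetical smooth stand-in for the long-run average gain as a function of the two thresholds, and the step sizes \(a_n=a/(n+1)\), \(c_n=c/(n+1)^{1/3}\) are one classical choice. None of the technical conditions mentioned above is verified here.

```python
import random
from typing import Callable, List, Sequence


def kiefer_wolfowitz(noisy_gain: Callable[[Sequence[float]], float],
                     theta0: Sequence[float], n_iter: int,
                     a: float = 0.5, c: float = 0.5) -> List[float]:
    """Stochastic gradient ascent using central finite differences."""
    theta = list(theta0)
    for n in range(1, n_iter + 1):
        a_n = a / (n + 1)                    # step size, sum a_n = infinity
        c_n = c / (n + 1) ** (1.0 / 3.0)     # perturbation size, c_n -> 0
        grad = []
        for i in range(len(theta)):
            up, down = list(theta), list(theta)
            up[i] += c_n
            down[i] -= c_n
            grad.append((noisy_gain(up) - noisy_gain(down)) / (2.0 * c_n))
        theta = [t + a_n * g for t, g in zip(theta, grad)]
    return theta


def make_toy_gain(noise_sd: float, seed: int = 0) -> Callable[[Sequence[float]], float]:
    """Hypothetical objective with maximum at thresholds (0.2, 1.0), plus noise."""
    rng = random.Random(seed)

    def toy_gain(theta: Sequence[float]) -> float:
        value = -(theta[0] - 0.2) ** 2 - (theta[1] - 1.0) ** 2
        return value + rng.gauss(0.0, noise_sd)

    return toy_gain


if __name__ == "__main__":
    print(kiefer_wolfowitz(make_toy_gain(noise_sd=0.0), [0.0, 0.0], 999))
    print(kiefer_wolfowitz(make_toy_gain(noise_sd=0.1), [0.0, 0.0], 999))
```

In an actual application `noisy_gain` would be replaced by an observed average gain of the threshold strategy over a trading window, and the constraint \({\underline{\theta }}<{\overline{\theta }}\) would have to be enforced, for example by projection.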

Extensions to non-Markovian stochastic volatility models (see Comte & Renault, 1998; Gatheral et al., 2018) seem feasible but require further technicalities.

Notes

Strictly speaking, in the definition of \(L_{n+1}\), see (3), they have \(\ge \) instead of >.

References

Risk vs. Volatility: What’s The Difference? Retrieved on 11th January 2023, from https://www.forbes.com/advisor/investing/risk-vs-volatility/.

Market Volatility—Concern or Opportunity? Retrieved on 11th January 2023, from https://hudsonfinancialplanning.com.au/market-volatility-concern-or-opportunity/

Is there opportunity in this stock market volatility? Retrieved on 11th January 2023, from https://www.capitalgroup.com/advisor/insights/articles/market-volatility-long-term-opportunity.html

If volatility is risk, why is a volatile market called an investor’s friend? Retrieved on 11th January 2023, from https://economictimes.indiatimes.com/markets/stocks/news/if-volatility-is-risk-why-is-a-volatile-market-called-an-investors-friend/articleshow/75804512.cms

Stock Market Volatility: an Opportunity or a threat? Retrieved on 11th January 2023, from https://www.wilseyassetmanagement.com/04-10-18-Stock-Market-Volatility-An-Opportunity-or-a-Threat

Mean reversion trading: Is it a profitable strategy? Retrieved on 24th November 2021, from https://www.warriortrading.com/mean-reversion/

Mean reversion trading strategy with a sneaky secret. Retrieved on 24th November 2021, from https://tradingstrategyguides.com/mean-reversion-trading-strategy/

What is mean reversion trading strategy. Retrieved on 24th November 2021, from https://tradergav.com/what-is-mean-reversion-trading-strategy/

Bhattacharya, R., & Majumdar, M. (1999). On a theorem of Dubins and Freedman. Journal of Theoretical Probability, 12, 1067–1087.

Bhattacharya, R., & Waymire, E. (1990). Stochastic processes with applications. New York: Wiley.

Cartea, Á., Jaimungal, S., & Ricci, J. (2014). Buy low sell high: A high frequency trading perspective. SIAM Journal on Financial Mathematics, 5, 415–444.

Chan, J. C., & Grant, A. L. (2016). Modeling energy price dynamics: GARCH versus stochastic volatility. Energy Economics, 54, 182–189.

Cont, R. (2001). Empirical properties of asset returns: Stylized facts and statistical issues. Quantitative Finance, 1, 223–236.

Comte, F., & Renault, É. (1998). Long memory in continuous-time stochastic volatility models. Mathematical Finance, 8, 291–323.

Dai, M., Jin, H., Zhong, Y., & Zhou, X. Y. (2010). Buy low and sell high. In C. Chiarella & A. Novikov (Eds.), Contemporary quantitative finance: Essays in honour of Eckhard Platen (pp. 317–333). Berlin: Springer.

Douc, R., Moulines, E., Priouret, P., & Soulier, Ph. (2018). Markov chains. Springer Series in Operations Research and Financial Engineering. New York: Springer.

Gál, H., & Lovas, A. (2022). Ergodic behavior of returns in a buy low and sell high type trading strategy. In M. Corazza, C. Perna, C. Pizzi, & M. Sibillo (Eds.), Mathematical and statistical methods for actuarial sciences and finance. MAF 2022 (pp. 278–283). Cham: Springer.

Gatheral, J., Jaisson, T., & Rosenbaum, M. (2018). Volatility is rough. Quantitative Finance, 18, 933–949.

Leung, T. S., & Li, X. (2015). Optimal mean reversion trading: Mathematical analysis and practical applications. Singapore: World Scientific.

Meyn, S. P., & Tweedie, R. L. (1993). Markov chains and stochastic stability. Berlin: Springer.

Mijatović, A., & Vysotsky, V. (2020a). Stability of overshoots of zero mean random walks. Electronic Journal of Probability, 25, 1–22.

Mijatović, A., & Vysotsky, V. (2020b). Stationary entrance Markov chains, inducing, and level-crossings of random walks. Preprint, arXiv:1808.05010.

Neveu, J. (1975). Discrete-parameter martingales. Amsterdam: North-Holland.

Pham, H. (2008). Continuous-time stochastic control and optimization with financial applications. Berlin: Springer.

Rásonyi, M., & Tikosi, K. (2022). Convergence of the Kiefer-Wolfowitz algorithm in the presence of discontinuities. Published online by Advances in Applied Probability. arXiv:2002.04832

Shiryaev, A., Xu, Z., & Zhou, X. Y. (2008). Thou Shalt Buy and Hold. Quantitative Finance, 8, 765–776.

Song, Q. S., Yin, G., & Zhang, Q. (2009). Stochastic optimization methods for buying-low-and-selling-high strategies. Stochastic Analysis and Applications, 27, 523–542.

Zervos, M., Johnson, T. C., & Alazemi, F. (2013). Buy-low and sell-high investment strategies. Mathematical Finance, 23, 560–578.

Zhang, H., & Zhang, Q. (2008). Trading a mean-reverting asset: Buy low and sell high. Automatica, 44, 1511–1518.

Zhang, Q. (2001). Stock trading: An optimal selling rule. SIAM Journal on Control and Optimization, 40, 64–87.

Zhuang, C. (2008). Stochastic approximation methods and applications in financial optimization problems. PhD thesis, University of Georgia.

Funding

Open access funding provided by ELKH Alfréd Rényi Institute of Mathematics. Both authors were supported by the NKFIH (National Research, Development and Innovation Office, Hungary) grant K 143529 and the “Lendület” Grant LP 2015-6 of the Hungarian Academy of Sciences.

Ethics declarations

Conflict of interests

The authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest (such as honoraria; educational grants; participation in speakers’ bureaus; membership, employment, consultancies, stock ownership, or other equity interest; and expert testimony or patent-licensing arrangements), or non-financial interest (such as personal or professional relationships, affiliations, knowledge or beliefs) in the subject matter or materials discussed in this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Both authors benefitted from the support of the “Lendület” Grant LP 2015-6 of the Hungarian Academy of Sciences.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lovas, A., Rásonyi, M. Ergodic aspects of trading with threshold strategies. Ann Oper Res 336, 691–709 (2024). https://doi.org/10.1007/s10479-023-05233-5
