Abstract

Real-world information often has several properties at once, such as fuzziness, randomness, and approximation. Existing linguistic representation methods attempt to capture these properties, but as decision problems face more and more constraints and challenges, these methods can no longer fully express the available information, which increases the demand for methods that can accommodate multiple kinds of uncertain information. In this paper, we comprehensively consider several characteristics of information, including membership degree, credibility, and rough-set-based approximation, and propose the concept of \(\chi\)-linguistic sets (\(\chi\)LSs), which depend on the original data rather than on prior knowledge and thus address the problem of incomplete information representation. We also propose the corresponding theory, such as a comparison method and operational rules. Subsequently, we construct a new \(\chi\)-linguistic VIKOR (\(\chi\)LVIKOR) method for multi-attribute group decision making (MAGDM) problems with \(\chi\)LSs and apply it to the risk assessment of COVID-19. Through a comparative analysis, we discuss the effectiveness and superiority of \(\chi\)LSs.


1 Introduction

Owing to the complexity and uncertainty of the environment, real-world information, such as that concerning COVID-19, cannot be described accurately. Handling uncertain information is a widely studied problem in decision making. Language is a tool for human communication and expression. Zadeh (1975) proposed the concept of linguistic variables to represent uncertain information. Compared with traditional numerical values, linguistic variables such as “good” and “high” are closer to the cognition and intuition of decision makers (DMs), and they can express natural linguistic information as computable mathematical symbols (Herrera et al. 1996; Gou 2017; Zhang et al. 2023; Krishankumar et al. 2022).

Subsequently, other linguistic representation methods have been proposed and widely used in decision making. Wang and Li (2010) added a linguistic evaluation to intuitionistic fuzzy sets (IFSs) (Atanassov 1986) and proposed intuitionistic linguistic sets, which contain three parts: a linguistic evaluation, a membership degree, and a non-membership degree. Du and Zuo (2011) and Yu et al. (2018) proposed extended TOPSIS and TODIM methods, respectively, based on intuitionistic linguistic numbers (ILNs) and applied them to decision making. Liu (2013) also proposed some generalized dependent aggregation operators. Considering that DMs may hesitate among several linguistic terms, Rodriguez et al. (2012) put forward the hesitant fuzzy linguistic term set (HFLTS). This concept builds on the hesitant fuzzy set (HFS) (Torra 2010) and the linguistic term set (LTS) (Zadeh 1975) and better expresses the preferences of DMs. Beg and Rashid (2013) extended the TOPSIS method to HFLTSs, and Wei et al. (2013) proposed some operators and comparison methods for HFLTSs. HFLTSs have also been applied to practical decision making problems, such as the strategic management of liquor brands (Liao et al. 2020), the selection of eco-friendly cities (Boyaci 2020), and the selection of specific medicines for COVID-19 (Ren et al. 2020). However, HFLTSs treat all linguistic terms as equally important, whereas in practice the terms may carry different weights. To express such preferences, Pang et al. (2016) proposed probabilistic linguistic term sets (PLTSs), which add probabilistic information to the linguistic terms. Their operational rules (Gou and Xu 2016; Liao et al. 2019) and comparison methods (Xian et al. 2019; Bai et al. 2017) were further discussed. PLTSs (Pang et al. 2016; Wu et al. 2018; Liu and Li 2019; Luo et al. 2020; Lin et al. 2021; Gou et al. 2021), their extensions (Chai et al. 2021; Wei et al. 2020; Krishankumar et al. 2020; Zhang et al. 2020; Jin et al. 2019), and probabilistic linguistic preference relations (PLPRs) (Liu et al. 2019; Gao et al. 2019, 2019; Song and Hu 2019; Li et al. 2020; Opricovic 1998) have performed well in group decision making (GDM).

When decision making information is expressed, its credibility is often ignored. Therefore, Zadeh (2011) took credibility into account and proposed the Z-number. Wang et al. (2017) and Xian et al. (2019) combined linguistic terms with Z-numbers and proposed linguistic Z-numbers and Z-linguistic variables, respectively. Because they represent information more completely, they are widely used in decision making (Krohling et al. 2019; Peng et al. 2019; Dai et al. 2008; Tao et al. 2020; Xian et al. 2021).

However, existing linguistic representation methods are limited to relatively simple forms of representation. In practical problems, DMs’ cognition of information may have some or all of the characteristics of fuzziness, ambiguity, and randomness. Moreover, although most decision making problems we encounter are one-dimensional, some are multi-dimensional.

Example 1

An expert evaluates the development potential of a project from two perspectives, the domestic market and the foreign market, so this is a two-dimensional problem. For the domestic market potential, the degree to which the project belongs to “very good” is 0.8, the degree to which it does not belong to “very good” is 0.1, and the credibility of the evaluation is “90% possible”. For the foreign market, the degree to which it belongs to “good” is 0.6, the degree to which it does not belong to “good” is 0.2, and the credibility of the evaluation is “70% possible”. Then, the above contents can be expressed as

Therefore, linguistic variables, ambiguity (including the membership and non-membership degrees), and randomness (probability or credibility) appear simultaneously in the evaluation. However, existing linguistic representation methods cannot completely represent such information, so a new linguistic representation method is needed.

In addition, the decision information in existing methods consists of DMs’ subjective evaluations, which makes the decision results less objective and accurate. In rough sets (RSs) (Pawlak 1982), the upper and lower approximations are characterized from the original data alone, without relying on prior knowledge. Following this idea, the data can be aggregated into objective and consistent group judgments, yielding an evaluation close to the real ranking.

Example 2

Using the information in Example 1 and inspired by the approximations in rough sets, the lower and upper approximations A, B and \(A', B'\) can be obtained by calculation. With these approximations, the information in Example 1 can be expressed as

In MAGDM, Saaty (1980) proposed the well-known Analytic Hierarchy Process (AHP), which structures a decision problem as a hierarchy. Hwang and Yoon (1981) proposed the TOPSIS method based on the ideal point principle: it first determines an ideal point and then selects the solution closest to it as the optimal one. Opricovic (1998) proposed the VIKOR method, a compromise ranking method that ranks a finite set of alternatives by balancing maximum group utility against minimum individual regret. Compared with TOPSIS, VIKOR has an additional decision mechanism coefficient that allows DMs to make more aggressive or more conservative decisions, so the VIKOR model better reflects the DMs’ own needs. With the development of fuzzy mathematics, research on uncertain multi-attribute decision making has been renewed, and a series of results have been obtained. In recent years, Khan et al. (2020) proposed a Pythagorean cubic fuzzy (PCF) multi-criteria decision making technique based on TOPSIS and, on this basis, an MCDM method that uses PCF information. Meniz (2023) introduced fuzzy AHP-VIKOR to calculate the “ideal vaccination”, and Tazzit et al. (2023) proposed an evaluation method based on mixed weight determination and an extended VIKOR model.
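To make the VIKOR mechanism described above concrete, the following minimal Python sketch computes the classic crisp quantities: the group utility S, the individual regret R, and the compromise index Q, where the parameter `v` plays the role of the decision mechanism coefficient. It assumes a plain numeric decision matrix with known criterion weights, omits the acceptable-advantage and acceptable-stability checks of the full method, and is not the \(\chi\)LVIKOR method of Sect. 4; the function name `vikor` and the data are hypothetical.

```python
from typing import List, Sequence, Tuple


def vikor(matrix: Sequence[Sequence[float]],
          weights: Sequence[float],
          benefit: Sequence[bool],
          v: float = 0.5) -> Tuple[List[float], List[float], List[float]]:
    """Return (S, R, Q) for a crisp decision matrix.

    matrix[i][j]: score of alternative i on criterion j.
    benefit[j]:   True if larger values are better on criterion j.
    v:            weight of the group-utility strategy (decision mechanism
                  coefficient); 1 - v weights the individual-regret strategy.
    """
    m = len(matrix)
    S = [0.0] * m  # group utility: weighted sum of normalized gaps
    R = [0.0] * m  # individual regret: largest single weighted gap
    for j, w in enumerate(weights):
        column = [row[j] for row in matrix]
        best = max(column) if benefit[j] else min(column)
        worst = min(column) if benefit[j] else max(column)
        for i in range(m):
            # Weighted normalized distance from the best value; 0 when the
            # criterion does not discriminate between alternatives.
            gap = 0.0 if best == worst else w * (best - matrix[i][j]) / (best - worst)
            S[i] += gap
            R[i] = max(R[i], gap)

    def scaled(x: float, lo: float, hi: float) -> float:
        return 0.0 if hi == lo else (x - lo) / (hi - lo)

    s_lo, s_hi, r_lo, r_hi = min(S), max(S), min(R), max(R)
    Q = [v * scaled(S[i], s_lo, s_hi) + (1 - v) * scaled(R[i], r_lo, r_hi)
         for i in range(m)]
    return S, R, Q


if __name__ == "__main__":
    # Hypothetical data: 3 alternatives, 2 benefit criteria, equal weights.
    S, R, Q = vikor([[5, 8], [7, 7], [9, 4]], [0.5, 0.5], [True, True])
    ranking = sorted(range(len(Q)), key=lambda i: Q[i])  # smaller Q is better
    print(S, R, Q, ranking)
```

Raising `v` toward 1 favours alternatives that perform well on most criteria (group utility), while lowering it toward 0 favours alternatives whose worst criterion is least bad (individual regret); this is the aggressive/conservative trade-off mentioned above.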

COVID-19 is a major public health crisis that has posed a great threat to the lives of people around the world and has had a major impact on the world economy. Since its outbreak, scholars have conducted extensive and in-depth research on it. From a spatial perspective, Melin et al. (2020a) used self-organizing maps to analyze the global COVID-19 pandemic. A multiple ensemble neural network model with fuzzy response aggregation (Melin et al. 2020b) was proposed to predict the COVID-19 time series in Mexico. Berekaa (2021) published insights on the COVID-19 pandemic covering its origin, pathogenesis, diagnosis, and therapeutic intervention. Mustafa (2021) also conducted research and statistics on COVID-19. Pejic-Bach (2021) pointed out that electronic commerce met the communication needs of individuals and businesses during the epidemic. Owing to risks such as imported confirmed cases and risks related to medical resources, the epidemic has recurred in some countries, and sporadic outbreaks still occur even in countries where it is well controlled. As things stand, COVID-19 will persist for some time. Therefore, assessing the COVID-19 risk of cities can help local governments prevent and control the epidemic precisely.

Based on the above four motivations, the contributions of this paper are as follows:

First, taking the fuzziness, randomness, and approximation of information into consideration, this paper proposes the concept of \(\chi\)LSs. It overcomes the incomplete information representation of previous methods and is more flexible in terms of dimension.

Second, the comparison method, normalization, operational rules and distance measure of \(\chi\)LSs are also further studied.

Finally, we propose a \(\chi\)LVIKOR method based on \(\chi\)LSs for MAGDM problem. The subsequent use of this method to address COVID-19 risk assessment in cities demonstrates the effectiveness of the method.

The rest of the paper is organized as follows. Sect. 2 reviews existing linguistic representation methods. Sect. 3 puts forward the concept of \(\chi\)LSs and the related basic theory. Sect. 4 constructs a new \(\chi\)LVIKOR method for MAGDM problems with \(\chi\)LSs. In Sect. 5, a numerical case on the risk assessment of COVID-19 in cities illustrates the effectiveness of the method. Sect. 6 concludes the paper.

2 Preliminaries

This section briefly reviews several existing linguistic representation methods, which provide the theoretical basis for the methods developed in this paper.

2.1 Linguistic term sets

Because language is closer to human cognition, LTSs have advantages in expressing uncertain information and are widely applied.

Definition 1

(Herrera et al. 1995) An additive LTS whose subscripts are all non-negative is expressed as follows:

\(S = \{ {s_i} |i = 0,1, \ldots,\tau \}\)

where \({s_i}\) is a linguistic term, \(\tau\) is an even number, and \(s_0\) and \(s_{\tau }\) are the lower and upper limits of the LTS, respectively. S satisfies the following conditions:

(1) If \(i > j\), then \({s_i} > {s_j}\);

(2) There is a negation operator \(neg({s_i}) = {s_j}\) such that \(i + j = \tau\).
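A minimal Python sketch of Definition 1, assuming integer subscripts and a hypothetical default granularity \(\tau = 6\): ordering follows the subscript (condition 1), and the negation maps \(s_i\) to \(s_{\tau - i}\) (condition 2). The class name `LinguisticTerm` and the word labels are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class LinguisticTerm:
    """Term s_i of an additive LTS S = {s_0, ..., s_tau} (Definition 1)."""
    i: int
    tau: int = 6  # hypothetical default granularity: s_0, ..., s_6

    def __post_init__(self) -> None:
        if self.tau % 2 != 0 or not 0 <= self.i <= self.tau:
            raise ValueError("tau must be even and 0 <= i <= tau")

    def neg(self) -> "LinguisticTerm":
        # Condition (2): neg(s_i) = s_j with i + j = tau.
        return LinguisticTerm(self.tau - self.i, self.tau)


# Hypothetical labels for tau = 6; the paper does not fix these words.
LABELS = ["none", "very low", "low", "medium", "high", "very high", "perfect"]

if __name__ == "__main__":
    s2, s5 = LinguisticTerm(2), LinguisticTerm(5)
    print(LABELS[s2.i], "<", LABELS[s5.i], s2 < s5)  # condition (1)
    print(LABELS[s2.neg().i])                        # neg(s_2) = s_4
```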

To facilitate calculation and avoid losing information, Dai et al. (2008) expanded the discrete additive LTS.

Definition 2

(Dai et al. 2008) Let \(S = \{ {s_i} |i = 0,1, \ldots,\tau \}\) be an additive LTS, then an extended additive LTS is expressed as follows:

2.2 Intuitionistic linguistic sets

To overcome the limitations of linguistic and fuzzy linguistic information and to better characterize non-membership and the hesitation of DMs, Wang and Li (2010) proposed intuitionistic linguistic sets on the basis of IFSs.

Definition 3

(Wang and Li 2010) Let \(\bar{S}\) be an extended additive LTS, \({s_{\theta (x)}} \in \bar{S}\), and X be the given universe of discourse. Then the intuitionistic linguistic set (ILS) is expressed as follows:

\(A = \{ < x,[{s_{\theta (x)}},{\mu }(x),{\nu }(x)] > |x \in X\}\)

where \({\mu }(x)\) and \({\nu }(x):X \rightarrow [0,1]\) denote the degrees to which x belongs and does not belong to \({s_{\theta (x)}}\), respectively, with \({\mu }(x) + {\nu }(x) \le 1\). When \({\mu }(x) = 1\) and \({\nu }(x) = 0\), the ILS degenerates into the LTS.

Definition 4

(Wang and Li 2010) Let \(A = \{ < x,[{s_{\theta (x)}},{\mu }(x),{\nu }(x)] > |x \in X\}\) be an ILS, then the triplet \(< {s_{\theta (x)}},{\mu }(x),{\nu }(x) >\) is an intuitionistic linguistic number (ILN).

Definition 5

(Wang and Li 2010) Let \({a_1} = < [{s_{\theta ({a_1})}},\mu ({a_1}),\nu ({a_1})] >\) and \({a_2} = < [{s_{\theta ({a_2})}},\mu ({a_2}),\nu ({a_2})]>\) be two ILNs, and \(\lambda \ge 0\), then

(1) \({a_1} + {a_2} = < {s_{\theta ({a_1}) + \theta ({a_2})}},\frac{{\theta ({a_1})\mu ({a_1}) + \theta ({a_2})\mu ({a_2})}}{{\theta ({a_1}) + \theta ({a_2})}},\frac{{\theta ({a_1})\nu ({a_1}) + \theta ({a_2})\nu ({a_2})}}{{\theta ({a_1}) + \theta ({a_2})}} >\);

(2) \({a_1} \cdot {a_2} = < {s_{\theta ({a_1})\theta ({a_2})}},\mu ({a_1})\mu ({a_2}),\nu ({a_1}) + \nu ({a_2})>\);

(3) \(\lambda {a_1} = < {s_{\lambda \theta ({a_1})}},\mu ({a_1}),\nu ({a_1}) >\);

(4) \({a_1}^\lambda = < {s_{\theta {{({a_1})}^\lambda }}},\mu {({a_1})^\lambda },1 - {(1 - \nu ({a_1}))^\lambda } >\).
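The four rules of Definition 5 can be sketched in Python as follows, implemented exactly as stated above. Subscripts are stored as floats so that virtual terms of the extended LTS (for example \(s_{5.3}\)) are allowed; the class name `ILN` and the method names `scale` and `power` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ILN:
    """Intuitionistic linguistic number <s_theta, mu, nu> (Definition 4)."""
    theta: float  # subscript of the linguistic term s_theta
    mu: float     # membership degree
    nu: float     # non-membership degree

    def __add__(self, other: "ILN") -> "ILN":
        # Rule (1): add subscripts; average mu and nu weighted by the subscripts.
        total = self.theta + other.theta
        if total == 0:
            raise ZeroDivisionError("rule (1) is undefined when both subscripts are 0")
        return ILN(total,
                   (self.theta * self.mu + other.theta * other.mu) / total,
                   (self.theta * self.nu + other.theta * other.nu) / total)

    def __mul__(self, other: "ILN") -> "ILN":
        # Rule (2), as stated in Definition 5.
        return ILN(self.theta * other.theta, self.mu * other.mu, self.nu + other.nu)

    def scale(self, lam: float) -> "ILN":
        # Rule (3): only the linguistic subscript is scaled.
        return ILN(lam * self.theta, self.mu, self.nu)

    def power(self, lam: float) -> "ILN":
        # Rule (4).
        return ILN(self.theta ** lam, self.mu ** lam, 1 - (1 - self.nu) ** lam)


if __name__ == "__main__":
    a1, a2 = ILN(3, 0.7, 0.2), ILN(4, 0.65, 0.1)
    print(a1 + a2, a1 * a2, a1.scale(2), a1.power(2))
```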

Definition 6

(Wang and Li 2010) Let \(a = < [{s_{\theta (a)}},\mu (a),\nu (a)] >\) be an ILN, then its compromise expectation is:

2.3 Hesitant fuzzy linguistic term sets

To address experts’ hesitation among several possible values when evaluating indicators, alternatives, or variables, Rodriguez et al. (2012) proposed the HFLTS, which contains several possible linguistic terms that indicate the hesitation of DMs.

Definition 7

(Rodriguez et al. 2012) Let \(S = \{ {s_i} |i = 0,1, \ldots,\tau \}\) be an LTS. Then an HFLTS \({b_S}\) is an ordered finite subset of consecutive linguistic terms of S.

Definition 8

(Zhu and Xu 2013) Let \({b_1} = \{ {b_1}^k |k = 1,2, \ldots,\# {b_1}\}\) and \({b_2} = \{ {b_2}^k |k = 1,2, \ldots,\# {b_2}\}\) be two HFLTSs, where \(\# {b_1}\) and \(\# {b_2}\) are the numbers of linguistic terms in \({b_1}\) and \({b_2}\) with \(\# {b_1} = \# {b_2}\), and let \(\lambda \ge 0\). Then the operational rules are as follows:

(1) \({b_1} \oplus {b_2} = \mathop \cup \limits _{{b_1}^{\delta (k)} \in {b_1},{b_2}^{\delta (k)} \in {b_2}} \{ {b_1}^{\delta (k)} \oplus {b_2}^{\delta (k)}\}\);

(2) \(\lambda {b_1} = \mathop \cup \limits _{{b_1}^{\delta (k)} \in {b_1}} \{ \lambda {b_1}^{\delta (k)}\}\),

where \({b_1}^{\delta (k)}\) and \({b_2}^{\delta (k)}\) are the kth linguistic terms in \({b_1}\) and \({b_2}\), respectively.
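A minimal Python sketch of Definition 8, representing each HFLTS by the subscripts of its terms and assuming \(s_a \oplus s_b = s_{a+b}\) and \(\lambda s_a = s_{\lambda a}\) in the extended LTS. How the ordering \(\delta(k)\) is chosen is an assumption here: both sets are sorted in ascending order before their kth terms are paired. The function names are hypothetical.

```python
from typing import List


def hflts_add(b1: List[float], b2: List[float]) -> List[float]:
    """Rule (1) of Definition 8 on subscript lists of equal length.

    Assumption: the k-th terms are paired after sorting both HFLTSs in
    ascending order, and s_a (+) s_b = s_(a+b).
    """
    if len(b1) != len(b2):
        raise ValueError("Definition 8 requires #b1 == #b2")
    pairs = zip(sorted(b1), sorted(b2))
    # The union removes duplicate terms; the result is returned in order.
    return sorted({x + y for x, y in pairs})


def hflts_scale(b: List[float], lam: float) -> List[float]:
    """Rule (2): lambda * s_a = s_(lambda * a) for every term, lambda >= 0."""
    if lam < 0:
        raise ValueError("lambda must be non-negative")
    return sorted({lam * x for x in b})


if __name__ == "__main__":
    b1 = [2, 3]  # {s_2, s_3} (hypothetical evaluation)
    b2 = [3, 4]  # {s_3, s_4}
    print(hflts_add(b1, b2), hflts_scale(b1, 0.5))
```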

2.4 Z-linguistic sets

Because the aforementioned linguistic representations do not comprehensively consider fuzziness, hesitation, and randomness, Xian et al. (2019) proposed Z-linguistic sets on the basis of Z-numbers; these can simultaneously represent linguistic information and its credibility.

Definition 9

(Xian et al. 2019) Let X be a non-empty set, then a Z-linguistic set is defined as follows:

where \({s_\theta }:X \rightarrow S,x \mapsto {s_{\theta (x)}} \in S\), \({f_\sigma }:X \rightarrow F,x \mapsto {f_{\sigma (x)}} \in F\), and \({r_\rho }:X \rightarrow R,x \mapsto {r_{\rho (x)}} \in R\). S, F, and R are three linguistic scales. \({f_{\sigma (x)}}\) is the membership of \({s_{\theta (x)}}\), and \({r_{\rho (x)}}\) is the credibility of \(< {s_{\theta (x)}},{f_{\sigma (x)}}>\).

2.5 Fuzzy rough sets

To handle incomplete and uncertain information, Dubois and Prade (1990) proposed the concept of fuzzy rough sets.

Definition 10

(Dubois and Prade 1990) Let (X, R) be a Pawlak approximation space and A be a fuzzy set on X. Then the lower approximation \(\underline{A}\) and the upper approximation \(\bar{A}\) of A on (X, R) are fuzzy sets whose membership functions are:

\(\underline{A}(x) = \inf \{ A(y) \mid y \in {[x]_R}\} ,\quad \bar{A}(x) = \sup \{ A(y) \mid y \in {[x]_R}\}\)

where \({[x]_R}\) is the equivalence class of x under R.
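In the standard formulation of Dubois and Prade, for a crisp equivalence relation R the lower and upper approximations take the infimum and supremum of \(A(y)\) over \([x]_R\); on a finite universe these are simply the minimum and maximum membership inside each equivalence class. The Python sketch below assumes the partition of X is given explicitly as a list of classes; the function name and the data are hypothetical.

```python
from typing import Dict, Hashable, List, Tuple


def fuzzy_rough_approximations(
        membership: Dict[Hashable, float],
        classes: List[List[Hashable]]) -> Tuple[Dict[Hashable, float], Dict[Hashable, float]]:
    """Lower/upper approximation memberships of a fuzzy set A on a finite X.

    membership: A(x) for every x in X.
    classes:    the partition of X into equivalence classes [x]_R.
    """
    lower: Dict[Hashable, float] = {}
    upper: Dict[Hashable, float] = {}
    for block in classes:
        values = [membership[y] for y in block]
        # On a finite class, inf and sup are simply min and max.
        lo, hi = min(values), max(values)
        for x in block:
            lower[x] = lo
            upper[x] = hi
    return lower, upper


if __name__ == "__main__":
    # Hypothetical data: X = {a, b, c}, with a and b indiscernible under R.
    A = {"a": 0.2, "b": 0.8, "c": 0.5}
    print(fuzzy_rough_approximations(A, [["a", "b"], ["c"]]))
```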

3 \(\chi\)-Linguistic sets

In this section, we comprehensively consider several characteristics of information, including membership degree, credibility, and rough-set-based approximation, and propose the concept of \(\chi\)-linguistic sets (\(\chi\)LSs) and their related theory.

3.1 The concept of \(\chi\)LSs

Definition 11

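Let \(\bar{S}\) be an extended additive LTS and X be a non-empty set. Then a \(\chi\)-linguistic set (\(\chi\)LS) is defined as follows:

\(L\chi (x) = \{ < x,\{ [(s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n];g_{\varsigma (x)}^n\} > |x \in X\}\)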

where \(\widetilde{s} = s_\theta ^n:X \rightarrow {\bar{S}^n},x \mapsto s_{\theta (x)}^n \in {\bar{S}^n}\), \(\widetilde{f} = f_\sigma ^n:X \rightarrow {F^n},x \mapsto f_{\sigma (x)}^n \in {F^n}\), \(\widetilde{p} = p_\rho ^n:X \rightarrow {P^n},x \mapsto p_{\rho (x)}^n \in {P^n}\), and \(\widetilde{g} = g_\varsigma ^n:X \rightarrow {G^n},x \mapsto g_{\varsigma (x)}^n \in {G^n}\). Here \({\bar{S}^n} = \bar{S} \times \bar{S} \times \cdots \times \bar{S}\) is an n-dimensional extended additive LTS, \({F^n} = F \times F \times \cdots \times F\) is an n-dimensional set of linguistic ambiguity vectors, \({P^n} = P \times P \times \cdots \times P\) is an n-dimensional set of possibility (credibility) vectors of the ambiguity, and \({G^n} = G \times G \times \cdots \times G\) is an n-dimensional set of approximation vectors of the linguistic ambiguity and its possibility. \(f_{\sigma (x)}^n\) is the linguistic ambiguity vector of \(s_{\theta (x)}^n\), consisting of the membership degree \(\mu _{\sigma (x)}^n\) and the non-membership degree \(\nu _{\sigma (x)}^n\); \(p_{\rho (x)}^n\) is the probability (credibility) vector of the fuzzy linguistic information \((s_{\theta (x)}^n,f_{\sigma (x)}^n)\); and \(g_{\varsigma (x)}^n\) is the approximation vector of the fuzzy linguistic information \([(s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n]\), consisting of the lower and upper approximations introduced in detail in Definitions 13 and 14. Moreover, \(\mu _{\sigma (x)}^n,\nu _{\sigma (x)}^n,p_{\rho (x)}^n \in [0,1]\) and \(\mu _{\sigma (x)}^n + \nu _{\sigma (x)}^n \le 1\).

Definition 12

Let \(L\chi (x) = \{ < x,\{ [(s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n];g_{\varsigma (x)}^n\} > |x \in X\}\) be a \(\chi\)LS. Then the quaternion \(< [(s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n];g_{\varsigma (x)}^n >\) is a \(\chi\)-linguistic variable (\(\chi\)LV), denoted as \(l\chi (x)\).

In this way, the \(\chi\)LS can also be represented as \(L\chi (x) = \{ < x,l\chi (x) > |x \in X\}\).
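One way to hold a \(\chi\)LV in code is a list with one record per dimension, each storing the evaluation \([(s_{\theta},\mu,\nu);p]\) together with the lower and upper limits of its approximation \(g\). The Python sketch below is a hypothetical data structure (the names `Info`, `ChiDimension`, and `ChiLV` are illustrative) that checks the constraints \(\mu,\nu,p \in [0,1]\) and \(\mu + \nu \le 1\) of Definition 11 and encodes the values of \({l_1}\chi (x)\) from Example 3.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (theta, mu, nu, p): the triple (s_theta, mu, nu) with credibility p.
Info = Tuple[float, float, float, float]


def _valid(info: Info) -> bool:
    _, mu, nu, p = info
    return 0 <= mu <= 1 and 0 <= nu <= 1 and mu + nu <= 1 and 0 <= p <= 1


@dataclass(frozen=True)
class ChiDimension:
    """One dimension of a chi-LV: [(s, f); p] followed by its approximation g."""
    info: Info   # [(s_theta, mu, nu); p] given by the DM
    lower: Info  # lower limit of g (Definition 15)
    upper: Info  # upper limit of g (Definition 16)

    def __post_init__(self) -> None:
        for part in (self.info, self.lower, self.upper):
            if not _valid(part):
                raise ValueError(f"invalid component {part}")


ChiLV = List[ChiDimension]  # one entry per dimension, n = len(chi_lv)

# l_1 chi(x) from Example 3 (two dimensions).
l1: ChiLV = [
    ChiDimension((6, 0.8, 0.1, 0.9), (4, 0.73, 0.13, 0.7), (6, 0.8, 0.1, 0.9)),
    ChiDimension((5, 0.6, 0.2, 0.7), (5, 0.55, 0.25, 0.7), (5.3, 0.75, 0.15, 0.8)),
]
```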

The method for obtaining the approximation \(g_{\varsigma (x)}^n\) (including the lower and upper approximations) is introduced below.

Definition 13

Let \(l'\chi (x) = < (s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n >\) be an IPLV, and \(L'\chi (x) = \{ < x,l'\chi (x) > |x \in X\}\) be an IPLS, then the lower approximation of the \(\chi\)LV \(l\chi (x)\) is defined as:

where \(L'\chi (x)({\dot{s}^n})\), \(L'\chi (x)({{\dot{f}}^n})\), and \(L'\chi (x)({\dot{p}^n})\) are \(\dot{s}^n\), \({\dot{f}}^n\), and \(\dot{p}^n\) in \(L'\chi (x)\), respectively.

Definition 14

Let \(l'\chi (x) = < (s_{\theta (x)}^n,f_{\sigma (x)}^n);p_{\rho (x)}^n >\) be an IPLV, and \(L'\chi (x) = \{ < x,l'\chi (x) > |x \in X\}\) be an IPLS, then the upper approximation of the \(\chi\)LV \(l\chi (x)\) is defined as:

where \(L'\chi (x)({\dot{s}^n})\), \(L'\chi (x)({{\dot{f}}^n})\), and \(L'\chi (x)({\dot{p}^n})\) are \(\dot{s}^n\), \({\dot{f}}^n\), and \(\dot{p}^n\) in \(L'\chi (x)\), respectively.

In order to facilitate calculation, we use the lower and the upper approximation to find the lower and upper limits of \(l\chi (x)\).

Definition 15

Let \(< \left( {\underline{apr} (s_{\theta (x)}^n),\underline{apr} (f_{\sigma (x)}^n)} \right) ;\,\underline{apr} (p_{\rho (x)}^n) >\) be the lower approximation of \(l\chi (x)\), then the lower limit of \(l\chi (x)\) is defined as:

where \({{N_{ls}}}\), \({{N_{lf}}}\), and \({{N_{lp}}}\) are the numbers of elements in \({\underline{apr} (s_{\theta (x)}^n)}\), \({\underline{apr} (f_{\sigma (x)}^n)}\), and \({\underline{apr} (p_{\rho (x)}^n)}\), respectively.

Definition 16

Let \(< \left( {\overline{apr} (s_{\theta (x)}^n),\,\overline{apr} (f_{\sigma (x)}^n)} \right) ;\,\,\overline{apr} (p_{\rho (x)}^n) >\) be the upper approximation of \(l\chi (x)\), then the upper limit of \(l\chi (x)\) is defined as:

where \({{N_{us}}}\), \({{N_{uf}}}\), and \({{N_{up}}}\) are the numbers of elements in \({\overline{apr} (s_{\theta (x)}^n)}\), \({\overline{apr} (f_{\sigma (x)}^n)}\), and \({\overline{apr} (p_{\rho (x)}^n)}\), respectively.

In this way, \(g_{\varsigma (x)}^n\) can also be expressed in the form of limits:

The boundary of \(l\chi (x)\) is:

Example 3

Using the linguistic terms in Example 2 to express the linguistic information in Example 1, we obtain the IPLV \({l_1}'\chi (x) = < [({s_6},0.8,0.1);0.9],[({s_5},0.6,0.2);0.7] >\).

A total of three DMs participated in the evaluation. The evaluations of the other two DMs are \({l_2}'\chi (x) = < [({s_4},0.7,0.1);0.6],[({s_6},0.5,0.3);0.8] >\) and \({l_3}'\chi (x) = < [({s_2},0.7,0.2);0.6],[({s_5},0.9,0.1);0.9] >\), and the three evaluations are aggregated into an IPLS, denoted as

(1) From Definitions 13 and 15, we use the lower approximation method to find the lower limit:

From Eq. (15), we can obtain the lower approximation of linguistic variables:

\(\underline{\lim } (s_{\theta ({x_1})}^1) = \frac{1}{3} \times ({s_6} \oplus {s_4} \oplus {s_2}) = {s_4}\)

From Eq. (16), we can obtain the lower approximation of linguistic membership degree:

\(\underline{\lim } (\mu _{\sigma ({x_1})}^1) = \frac{1}{3} \times (0.8 + 0.7 + 0.7) \approx 0.73\)

\(\underline{\lim } (\nu _{\sigma ({x_1})}^1) = \frac{1}{3} \times (0.1 + 0.1 + 0.2) \approx 0.13\)

From Eq. (17), we can obtain a lower approximation of the credibility of uncertain linguistic information:

\(\underline{\lim } (p_{\rho ({x_1})}^1) = \frac{1}{3} \times (0.9 + 0.6 + 0.6) = 0.7\)

(2) From Definitions 14 and 16, we use the upper approximation method to find the upper limit:

From Eq. (18), we can obtain the upper approximation of linguistic variables:

\(\overline{\lim } (s_{\theta ({x_1})}^1) = \frac{1}{1} \times {s_6} = {s_6}\)

From Eq. (19), we can obtain the upper approximation of linguistic membership:

\(\overline{\lim } (\mu _{\sigma ({x_1})}^1) = \frac{1}{1} \times 0.8 = 0.8\)

\(\overline{\lim } (\nu _{\sigma ({x_1})}^1) = \frac{1}{1} \times 0.1 = 0.1\)

From Eq. (20), we can obtain an upper approximation of the credibility of uncertain linguistic information:

\(\overline{\lim } (p_{\rho ({x_1})}^1) = \frac{1}{1} \times 0.9 = 0.9\)

(3) From Eq. (21) and the above results, we obtain the approximation in the first dimension:

\(g_{\varsigma ({x_1})}^1 = \left( {< ({s_4},0.73,0.13);0.7>, < ({s_6},0.8,0.1);0.9 > } \right)\).

Similarly, we can obtain the approximation in the second dimension:

\(g_{\varsigma ({x_1})}^2 = \left( {< ({s_5},0.55,0.25);0.7>, < ({s_{5.3}},0.75,0.15);0.8 > } \right)\).

(4) Appending \(g_{\varsigma ({x_1})}^1\) and \(g_{\varsigma ({x_1})}^2\) to the corresponding dimensions of \({l_1}'\chi (x)\) yields the \(\chi\)LV:

\(\begin{array}{l} \;\;\;{l_1}\chi (x) = \left\langle \begin{array}{l} \{ [({s_6},0.8,0.1);0.9];\left( \begin{array}{l}< ({s_4},0.73,0.13);0.7> ,\\< ({s_6},0.8,0.1);0.9> \end{array} \right) \},\\ \{ [({s_5},0.6,0.2);0.7];\left( \begin{array}{l}< ({s_5},0.55,0.25);0.7> ,\\ < ({s_{5.3}},0.75,0.15);0.8 > \end{array} \right) \} \end{array} \right\rangle \end{array}.\)

Similarly, we obtain \({l_2}\chi (x)\) and \({l_3}\chi (x)\).

Then, a \(\chi\)LS is aggregated as follows: \(\begin{array}{l} L\chi (x) = \left\{ \begin{array}{l} \left\langle \begin{array}{l} \{ [({s_6},0.8,0.1);0.9];\left( \begin{array}{l}< ({s_4},0.73,0.13);0.7> ,\\< ({s_6},0.8,0.1);0.9> \end{array} \right) \},\\ \{ [({s_5},0.6,0.2);0.7];\left( \begin{array}{l}< ({s_5},0.55,0.25);0.7> ,\\< ({s_{5.3}},0.75,0.15);0.8> \end{array} \right) \} \end{array} \right\rangle ,\\ \left\langle \begin{array}{l} \{ [({s_4},0.7,0.1);0.6];\left( \begin{array}{l}< ({s_3},0.7,0.15);0.6> ,\\< ({s_5},0.75,0.1);0.7> \end{array} \right) \},\\ \{ [({s_6},0.5,0.3);0.8];\left( \begin{array}{l}< ({s_{5.3}},0.5,0.3);0.75> ,\\< ({s_6},0.67,0.2);0.85> \end{array} \right) \} \end{array} \right\rangle ,\\ \left\langle \begin{array}{l} \{ [({s_2},0.7,0.2);0.6];\left( \begin{array}{l}< ({s_2},0.7,0.2);0.6> ,\\< ({s_3},0.73,0.2);0.7> \end{array} \right) \},\\ \{ [({s_5},0.9,0.1);0.9];\left( \begin{array}{l}< ({s_5},0.67,0.2);0.8> ,\\ < ({s_{5.3}},0.9,0.1);0.9 > \end{array} \right) \} \end{array} \right\rangle \end{array} \right\} \end{array}\).
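Eqs. (15) to (20) are not reproduced in this section, so the following Python sketch is only one reading of the approximation step, chosen because it reproduces the approximations of \({l_1}\chi (x)\) computed in steps (1) to (3) above. Under this assumption, each component is treated separately, in the spirit of rough numbers: the lower limit averages the DMs' values that are no better than the reference value, and the upper limit averages those that are no worse, where larger is better for \(\theta\), \(\mu\), and p, and smaller is better for \(\nu\). It is not guaranteed to reproduce every entry of the aggregated \(\chi\)LS, and the helper names are hypothetical.

```python
from statistics import mean
from typing import List, Sequence, Tuple

Info = Tuple[float, float, float, float]  # (theta, mu, nu, p) for one dimension


def _limits(values: Sequence[float], ref: float,
            smaller_is_better: bool = False) -> Tuple[float, float]:
    """Rough-number style (lower, upper) limits of `ref` within `values`."""
    not_better = [v for v in values if (v >= ref if smaller_is_better else v <= ref)]
    not_worse = [v for v in values if (v <= ref if smaller_is_better else v >= ref)]
    # ref itself is in `values`, so neither list is empty.
    return mean(not_better), mean(not_worse)


def approximate(ipls: List[List[Info]], k: int, d: int) -> Tuple[Info, Info]:
    """Lower and upper limits for dimension d of DM k's evaluation (hypothetical)."""
    column = [evaluation[d] for evaluation in ipls]
    ref = ipls[k][d]
    lower, upper = [], []
    for c in range(4):
        # Component 2 is nu, for which smaller is assumed to be better.
        lo, hi = _limits([info[c] for info in column], ref[c], smaller_is_better=(c == 2))
        lower.append(lo)
        upper.append(hi)
    return tuple(lower), tuple(upper)


# The IPLS of Example 3: three DMs, two dimensions each.
IPLS = [
    [(6, 0.8, 0.1, 0.9), (5, 0.6, 0.2, 0.7)],
    [(4, 0.7, 0.1, 0.6), (6, 0.5, 0.3, 0.8)],
    [(2, 0.7, 0.2, 0.6), (5, 0.9, 0.1, 0.9)],
]

if __name__ == "__main__":
    for d in range(2):
        lo, hi = approximate(IPLS, 0, d)
        print([round(x, 2) for x in lo], [round(x, 2) for x in hi])
```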

Next, the operational rules of \(g_{\varsigma (x)}^n\) in \(\chi\)LVs are introduced.

Definition 17

Let \(g_{\varsigma ({x_1})}^{{n_1}} = (< ( {\underline{\lim } (s_{\theta ({x_1})}^{{n_1}}),\underline{\lim } (f_{\sigma ({x_1})}^{{n_1}})} ); \underline{\lim } (p_{\rho ({x_1})}^{{n_1}}) >, <( {\overline{\lim } (s_{\theta ({x_1})}^{{n_1}}),\,\overline{\lim } (f_{\sigma ({x_1})}^{{n_1}})} );\) \(\overline{\lim } (p_{\rho ({x_1})}^{{n_1}}) >)\) and \(g_{\varsigma ({x_2})}^{{n_2}} = (< \left( {\underline{\lim } (s_{\theta ({x_2})}^{{n_2}}),\underline{\lim } (f_{\sigma ({x_2})}^{{n_2}})} \right) ;\,\underline{\lim } (p_{\rho ({x_2})}^{{n_2}})>, < \left( {\overline{\lim } (s_{\theta ({x_2})}^{{n_2}}),\,\overline{\lim } (f_{\sigma ({x_2})}^{{n_2}})} \right) ; \overline{\lim } (p_{\rho ({x_2})}^{{n_2}}) >)\) be two approximations in \({l_1}\chi (x)\) and \({l_2}\chi (x)\), respectively. The subscript of \(s_{\theta (x)}^n\) is represented as \({\theta ^n}(x)\), \({n_1} = {n_2} = n\), and \(\lambda \ge 0\). Then, the operational rules are as follows:

(1) \(g_{\varsigma ({x_1})}^n \oplus g_{\varsigma ({x_2})}^n\) \(\begin{array}{l} = (< \left( \begin{array}{l} {s_{\underline{\lim } ({\theta ^n}({x_1})) + \underline{\lim } ({\theta ^n}({x_2}))}}, \underline{\lim } (f_{\sigma ({x_1})}^{{n_1}}) \oplus \underline{\lim } (f_{\sigma ({x_2})}^{{n_2}}) \end{array} \right) ;\frac{{\underline{\lim } (p_{\rho ({x_1})}^n) + \underline{\lim } (p_{\rho ({x_2})}^n)}}{2}> \;,\\ \;\;\;\;\; < \left( \begin{array}{l} {s_{\overline{\lim } ({\theta ^n}({x_1})) + \overline{\lim } ({\theta ^n}({x_2}))}}, \overline{\lim } (f_{\sigma ({x_1})}^n) \oplus \overline{\lim } (f_{\sigma ({x_2})}^n) \end{array} \right) ;\frac{{\overline{\lim } (p_{\rho ({x_1})}^n) + \overline{\lim } (p_{\rho ({x_2})}^n)}}{2} > ), \end{array}\) where \(\underline{\lim } (f_{\sigma ({x_1})}^n) \oplus \underline{\lim } (f_{\sigma ({x_2})}^n)\) \(= \left( \begin{array}{l} \frac{{\underline{\lim } ({\theta ^n}({x_1}))\underline{\lim } (\mu _{\sigma ({x_1})}^n) + \underline{\lim } ({\theta ^n}({x_2}))\underline{\lim } (\mu _{\sigma ({x_2})}^n)}}{{\underline{\lim } ({\theta ^n}({x_1})) + \underline{\lim } ({\theta ^n}({x_2}))}},\\ \frac{{\underline{\lim } ({\theta ^n}({x_1}))\underline{\lim } (\nu _{\sigma ({x_1})}^n) + \underline{\lim } ({\theta ^n}({x_2}))\underline{\lim } (\nu _{\sigma ({x_2})}^n)}}{{\underline{\lim } ({\theta ^n}({x_1})) + \underline{\lim } ({\theta ^n}({x_2}))}} \end{array} \right)\),

\(\overline{\lim } (f_{\sigma ({x_1})}^n) \oplus \overline{\lim } (f_{\sigma ({x_2})}^n)\) \(\;\;\; = \left( \begin{array}{l} \frac{{\overline{\lim } ({\theta ^n}({x_1}))\overline{\lim } (\mu _{\sigma ({x_1})}^n) + \overline{\lim } ({\theta ^n}({x_2}))\overline{\lim } (\mu _{\sigma ({x_2})}^n)}}{{\overline{\lim } ({\theta ^n}({x_1})) + \overline{\lim } ({\theta ^n}({x_2}))}},\\ \frac{{\overline{\lim } ({\theta ^n}({x_1}))\overline{\lim } (\nu _{\sigma ({x_1})}^n) + \overline{\lim } ({\theta ^n}({x_2}))\overline{\lim } (\nu _{\sigma ({x_2})}^n)}}{{\overline{\lim } ({\theta ^n}({x_1})) + \overline{\lim } ({\theta ^n}({x_2}))}} \end{array} \right)\), \({\underline{\lim } ({\theta ^{{n}}}({x_1}))}\), \({\underline{\lim } ({\theta ^{{n}}}({x_2}))}\), \({\overline{\lim } ({\theta ^{{n}}}({x_1}))}\), and \({\overline{\lim } ({\theta ^{{n}}}({x_2}))}\) are the subscripts of \({\underline{\lim } (s_{\theta ({x_1})}^{{n}})}\), \({\underline{\lim } (s_{\theta ({x_2})}^{{n}})}\), \({\overline{\lim } (s_{\theta ({x_1})}^{{n}})}\), and \({\overline{\lim } (s_{\theta ({x_2})}^{{n}})}\), respectively.

(2) \(g_{\varsigma ({x_1})}^n \otimes g_{\varsigma ({x_2})}^n\) \(\begin{array}{l} = (< \left( \begin{array}{l} {s_{\underline{\lim } ({\theta ^n}({x_1})) \times \underline{\lim } ({\theta ^n}({x_2}))}},\\ \underline{\lim } (f_{\sigma ({x_1})}^n) \otimes \underline{\lim } (f_{\sigma ({x_2})}^n) \end{array} \right) ;\,\underline{\lim } (p_{\rho ({x_1})}^n) \times \underline{\lim } (p_{\rho ({x_2})}^n)> \;,\\ \;\;\;\;\; < \left( \begin{array}{l} {s_{\overline{\lim } ({\theta ^n}({x_1})) \times \overline{\lim } ({\theta ^n}({x_2}))}},\\ \overline{\lim } (f_{\sigma ({x_1})}^n) \otimes \overline{\lim } (f_{\sigma ({x_2})}^n) \end{array} \right) ;\,\,\overline{\lim } (p_{\rho ({x_1})}^n) \times \overline{\lim } (p_{\rho ({x_2})}^n) > ), \end{array}\) where \(\underline{\lim } (f_{\sigma ({x_1})}^n) \otimes \underline{\lim } (f_{\sigma ({x_2})}^n)\) \(= \left( {\underline{\lim } (\mu _{\sigma ({x_1})}^n) \times \underline{\lim } (\mu _{\sigma ({x_2})}^n),\underline{\lim } (\nu _{\sigma ({x_1})}^n) + \underline{\lim } (\nu _{\sigma ({x_2})}^n)} \right) ,\;\;\;\) and \(\overline{\lim } (f_{\sigma ({x_1})}^n) \otimes \overline{\lim } (f_{\sigma ({x_2})}^n)\) \(= \left( {\overline{\lim } (\mu _{\sigma ({x_1})}^n) \times \overline{\lim } (\mu _{\sigma ({x_2})}^n),\,\overline{\lim } (\nu _{\sigma ({x_1})}^n) + \overline{\lim } (\nu _{\sigma ({x_2})}^n)} \right)\).

(3) \(\lambda g_{\varsigma ({x_1})}^n\) \(\begin{array}{l} = (< \left( {\lambda \underline{\lim } (s_{\theta ({x_1})}^n),\lambda \underline{\lim } (f_{\sigma ({x_1})}^n)} \right) ;\,\underline{\lim } (p_{\rho ({x_1})}^n)> \;< \left( {\lambda \overline{\lim } (s_{\theta ({x_1})}^n),\lambda \overline{\lim } (f_{\sigma ({x_1})}^n)} \right) ;\,\,\overline{\lim } (p_{\rho ({x_1})}^n)> )\\ = (< \left( {{s_{\lambda \underline{\lim } ({\theta ^n}({x_1}))}},\underline{\lim } (\mu _{\sigma ({x_1})}^n),\underline{\lim } (\nu _{\sigma ({x_1})}^n)} \right) ;\,\underline{\lim } (p_{\rho ({x_1})}^n)> \;< \left( {{s_{\lambda \overline{\lim } ({\theta ^n}({x_1}))}},\,\overline{\lim } (\mu _{\sigma ({x_1})}^n),\,\overline{\lim } (\nu _{\sigma ({x_1})}^n)} \right) ; \overline{\lim } (p_{\rho ({x_1})}^n) > ). \end{array}\)

(4) \({(g_{\varsigma ({x_1})}^n)^\lambda }\) \(\begin{array}{l} = (< \left( {{s_{\underline{\lim } {{({\theta ^n}({x_1}))}^\lambda }}},{{\left( {\underline{\lim } (f_{\sigma ({x_1})}^n)} \right) }^\lambda }} \right) ;{\left( {\underline{\lim } (p_{\rho ({x_1})}^n)} \right) ^\lambda }> \;< \left( {{s_{\overline{\lim } {{({\theta ^n}({x_1}))}^\lambda }}},{{\left( {\overline{\lim } (f_{\sigma ({x_1})}^n)} \right) }^\lambda }} \right) ;{\left( {\overline{\lim } (p_{\rho ({x_1})}^n)} \right) ^\lambda } > ), \end{array}\)

where \({\left( {\underline{\lim } (f_{\sigma ({x_1})}^n)} \right) ^\lambda } = \left( {{{\left( {\underline{\lim } (\mu _{\sigma ({x_1})}^n)} \right) }^\lambda },1 - {{\left( {1 - \underline{\lim } (\nu _{\sigma ({x_1})}^n)} \right) }^\lambda }} \right)\),

and \({\left( {\overline{\lim } (f_{\sigma ({x_1})}^n)} \right) ^\lambda } = \left( {{{\left( {\overline{\lim } (\mu _{\sigma ({x_1})}^n)} \right) }^\lambda },1 - {{\left( {1 - \overline{\lim } (\nu _{\sigma ({x_1})}^n)} \right) }^\lambda }} \right)\).

Example 4

Assume \(g_{\varsigma ({x_1})} = (< ({s_3},0.7,0.2);0.6>, < ({s_4},0.8,0.2);0.65 > )\) and \(g_{\varsigma ({x_2})} = (< ({s_4},0.65,0.1);0.5>, < ({s_5},0.8,0.1);0.55 > )\), then

(1) \(g_{\varsigma ({x_1})} \oplus g_{\varsigma ({x_2})} = (< ({s_7},0.67,0.14);0.55>, < ({s_9},0.8,0.14);0.6 > )\);

(2) \(g_{\varsigma ({x_1})} \otimes g_{\varsigma ({x_2})} = (< ({s_{12}},0.46,0.3);0.3>, < ({s_{20}},0.64,0.3);0.36 > )\);

(3) \(2g_{\varsigma ({x_1})} = (< ({s_6},0.7,0.2);0.6>, < ({s_8},0.8,0.2);0.65 > )\);

(4) \({(g_{\varsigma ({x_1})})^2} = (< ({s_9},0.49,0.36);0.36>, < ({s_{16}},0.64,0.36);0.42 > )\).

Remark 1

(1) When the upper approximation is equal to the lower approximation, the \(\chi\)LS degenerates into the intuitionistic probabilistic linguistic set (IPLS), and the \(\chi\)LV degenerates into the intuitionistic probabilistic linguistic variable (IPLV), which is \(l'\chi (x) = < (s_{\theta (x)}^n,f_{\sigma (x)}^n);\,p_{\rho (x)}^n >\).

(2) When the upper approximation is equal to the lower approximation, and \(p_{\rho (x)}^1 = p_{\rho (x)}^2 = \cdots = p_{\rho (x)}^n\), the \(\chi\)LS degenerates into the intuitionistic hesitant linguistic set (IHLS), and the \(\chi\)LV degenerates into the intuitionistic hesitant linguistic variable (IHLV), which is

(3) When the upper approximation is equal to the lower approximation and \(n = 1\), the \(\chi\)LS degenerates into the intuitionistic Z-linguistic set (IZLS) (Xian et al. 2019, 2022), and the \(\chi\)LV degenerates into the intuitionistic Z-linguistic variable (IZLV), which is \(< (s_{\theta (x)},f_{\sigma (x)});\,p_{\rho (x)} >\).

(4) When the upper approximation is equal to the lower approximation, \(n = 1\), and \(\mu + \nu = 1\), the \(\chi\)LS degenerates into the Z-linguistic set (ZLS) (Xian et al. 2019), and the \(\chi\)LV degenerates into the Z-linguistic variable (ZLV), which is

(5) When the upper approximation is equal to the lower approximation, \(n = 1\), \(\mu = 1,\nu = 0\), and \(p = 1\), the \(\chi\)LS degenerates into the LTS (Zadeh 1975), and the \(\chi\)LV degenerates into the linguistic term \(s_{\theta (x)}\).

The approximation of the \(\chi\)LS is analogous to that of an RS: it comprises the lower approximation, the upper approximation, and the boundary, and it is computed from the known fuzzy information in the \(\chi\)LS. The evaluation of a DM is expressed by the IPLV \(l'\chi (x) = < (s_{\theta (x)}^n,f_{\sigma (x)}^n);\,p_{\rho (x)}^n >\). The evaluations of multiple DMs are aggregated to obtain an IPLS \(L'\chi (x) = \{ < x,l'\chi (x) > |x \in X\}\). After the approximation \(g_{\varsigma (x)}^n\) is computed and added to \(l'\chi (x)\), the IPLV becomes the \(\chi\)LV \(l\chi (x) = < [(s_{\theta (x)}^n,f_{\sigma (x)}^n);\,p_{\rho (x)}^n];\,g_{\varsigma (x)}^n >\), and the IPLS becomes the \(\chi\)LS \(L\chi (x) = \{ < x,l\chi (x)> |x \in X\}\).

3.2 Comparison method of \(\chi\)LSs

In this section, we propose the comparison methods of \(\chi\)LVs and \(\chi\)LSs, respectively. First, the concepts of the ambiguity expectation, the possibility expectation, and the approximation expectation of the \(\chi\)LV are introduced.

Definition 18

Let \({l_k}\chi (x) = < [(s_{\theta ({x_k})}^{{n_k}},\mu _{\sigma ({x_k})}^{{n_k}},\nu _{\sigma ({x_k})}^{{n_k}});\) \(p_{\rho ({x_k})}^{{n_k}}];\,g_{\varsigma ({x_k})}^{{n_k}} >\) be a \(\chi\)LV, then the ambiguity expectation of \({l_k}\chi (x)\) is

where \(\Delta\) is an equivalent transformation function of linguistic terms, \(\Delta :[0,\tau ] \rightarrow [0,1]\), and \(\Delta ({s_{\theta (x)}}) = \frac{{\theta (x)}}{\tau }\).

Definition 19

Let \({l_k}\chi (x) = < [(s_{\theta ({x_k})}^{{n_k}},\mu _{\sigma ({x_k})}^{{n_k}},\nu _{\sigma ({x_k})}^{{n_k}});\) \(p_{\rho ({x_k})}^{{n_k}}];\,g_{\varsigma ({x_k})}^{{n_k}} >\) be a \(\chi\)LV, then the possibility expectation of \({l_k}\chi (x)\) is:

Definition 20

Let \({l_k}\chi (x) = < [(s_{\theta ({x_k})}^{{n_k}},\mu _{\sigma ({x_k})}^{{n_k}},\nu _{\sigma ({x_k})}^{{n_k}});\) \(p_{\rho ({x_k})}^{{n_k}}];\,g_{\varsigma ({x_k})}^{{n_k}} >\) be a \(\chi\)LV, then the approximation expectation of \({l_k}\chi (x)\) is:

where \(E(\underline{\lim } {f^k}) = \frac{{\sum \nolimits _{n = 1}^{{n_k}} {\Delta \left( {\frac{{\underline{\lim } (s_{\theta (x)}^{n})\left( {\underline{\lim } (\mu _{\sigma (x)}^{n}) + 1 - \underline{\lim } (\nu _{\sigma (x)}^{n})} \right) }}{2}} \right) } }}{{{n_k}}}\), \(E(\overline{\lim } {f^k}) = \frac{{\sum \nolimits _{n = 1}^{{n_k}} {\Delta \left( {\frac{{\overline{\lim } (s_{\theta (x)}^{n})\left( {\overline{\lim } (\mu _{\sigma (x)}^{n}) + 1 - \overline{\lim } (\nu _{\sigma (x)}^{n})} \right) }}{2}} \right) } }}{{{n_k}}}\), \(E(\underline{\lim } {p^k}) = \frac{{\sum \nolimits _{n = 1}^{{n_k}} {\underline{\lim } (p_{\rho ({x_k})}^{n})} }}{{{n_k}}}\), \(E(\overline{\lim } {p^k}) = \frac{{\sum \nolimits _{n = 1}^{{n_k}} {\overline{\lim } (p_{\rho ({x_k})}^{n})} }}{{{n_k}}}\), and \(0 \le A,B \le 1\). \(\Delta\) is the equivalent transformation function of linguistic terms, \(\Delta :[0,\tau ] \rightarrow [0,1]\), and \(\Delta ({s_{\theta (x)}}) = \frac{{\theta (x)}}{\tau }\).
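The four partial expectations in the where-clause are plain averages, which are easy to miscompute by hand. The Python sketch below evaluates them for one approximation, assuming each element is stored as a hypothetical `Element(theta, mu, nu, p)` record and that \(\tau\) is the largest subscript of the LTS (\(\tau = 6\) for \(S = \{ s_0, \ldots, s_6 \}\)). How \(A\) and \(B\) combine these values into \(E({g^k})\) is left out of the sketch.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Element:
    """One element <(s_theta, mu, nu); p> of a lower or upper approximation."""
    theta: float  # subscript of the linguistic term s_theta
    mu: float     # membership degree
    nu: float     # non-membership degree
    p: float      # probability


def delta(theta: float, tau: float) -> float:
    """Equivalent transformation Delta(s_theta) = theta / tau."""
    return theta / tau


def f_expectation(elements: List[Element], tau: float) -> float:
    """Mean over n of Delta(s_theta^n * (mu^n + 1 - nu^n) / 2)."""
    total = sum(delta(e.theta * (e.mu + 1 - e.nu) / 2, tau) for e in elements)
    return total / len(elements)


def p_expectation(elements: List[Element]) -> float:
    """Mean over n of the probabilities p^n."""
    return sum(e.p for e in elements) / len(elements)


# Approximations of l_1 chi(x) in Example 5 (tau = 6 for S = {s_0, ..., s_6}).
lower = [Element(3, 0.7, 0.2, 0.6)]
upper = [Element(4, 0.8, 0.2, 0.65)]
print(f_expectation(lower, 6), f_expectation(upper, 6))
print(p_expectation(lower), p_expectation(upper))
```

For the lower approximation \(< ({s_3},0.7,0.2);0.6>\) of \({l_1}\chi (x)\) in Example 5 this gives \(\Delta (3 \times 1.5/2) = 2.25/6 = 0.375\).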

Since \(E({f^k}), E({p^k}), E({g^k}) \in [0,1]\), the three expectations of \({{l_k}\chi (x)}\) can be mapped to a point in a three-dimensional coordinate system whose admissible region is the unit cube in the first octant, as shown in Fig. 1. We also denote this point by \({{l_k}\chi (x)}\). Inspired by the spherical coordinate system, we describe the point by its distance from the origin O and two angles.

Definition 21

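Let \({E({f^k})}\), \({E({p^k})}\), and \({E({g^k})}\) be the ambiguity expectation, the possibility expectation, and the approximation expectation of the \(\chi\)LV \({{l_k}\chi (x)}\), then the distance between \({{l_k}\chi (x)}\) and the origin O is

$$\begin{aligned} r\left( {{l_k}\chi (x)} \right) = {\left( {A{{\left( {E({f^k})} \right) }^2} + B{{\left( {E({p^k})} \right) }^2} + C{{\left( {E({g^k})} \right) }^2}} \right) ^{1/2}}, \end{aligned}$$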

where \(0 \le A,B,C \le 1\) and \(A + B + C = 1\).

Definition 22

Let \({E({f^k})}\) and \({E({p^k})}\) be the ambiguity expectation and the possibility expectation of the \(\chi\)LV \({{l_k}\chi (x)}\), then the angle between the positive \({E({f^k})}\)-axis and the projection onto the \({E({f^k})}{E({p^k})}\)-plane of the segment from the origin O to the point \({{l_k}\chi (x)}\) is

Definition 23

Let \({E({g^k})}\) be the approximation expectation of the \(\chi\)LV \({{l_k}\chi (x)}\), \(r\left( {{l_k}\chi (x)} \right)\) be the distance between \({{l_k}\chi (x)}\) and the origin O, then the angle between the line from the origin O to the point \({{l_k}\chi (x)}\) and the positive \({E({g^k})}\)-axis is

In information evaluation, the importance of ambiguity, possibility, and approximation decreases in that order (Fig. 2). Therefore, \(\chi\)LVs are compared as follows:

Definition 24

Let \({l_1}\chi (x)\) and \({l_2}\chi (x)\) be two \(\chi\)LVs, then:

-

(I)

If \(r\left( {{l_1}\chi (x)} \right) > r\left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \succ {l_2}\chi (x)\);

-

(II)

If \(r\left( {{l_1}\chi (x)} \right) < r\left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \prec {l_2}\chi (x)\);

-

(III)

If \(r\left( {{l_1}\chi (x)} \right) = r\left( {{l_2}\chi (x)} \right)\), then

-

(i)

If \(\vartheta \left( {{l_1}\chi (x)} \right) < \vartheta \left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \succ {l_2}\chi (x)\);

-

(ii)

If \(\vartheta \left( {{l_1}\chi (x)} \right) > \vartheta \left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \prec {l_2}\chi (x)\);

-

(iii)

If \(\vartheta \left( {{l_1}\chi (x)} \right) = \vartheta \left( {{l_2}\chi (x)} \right)\), then

-

(1)

If \(\varphi \left( {{l_1}\chi (x)} \right) < \varphi \left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \succ {l_2}\chi (x)\);

-

(2)

If \(\varphi \left( {{l_1}\chi (x)} \right) > \varphi \left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \prec {l_2}\chi (x)\);

-

(3)

If \(\varphi \left( {{l_1}\chi (x)} \right) = \varphi \left( {{l_2}\chi (x)} \right)\), then \({l_1}\chi (x) \sim {l_2}\chi (x)\).
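Definitions 21 and 24 can be sketched in Python as follows: `radius` evaluates the weighted distance from O, and `compare` applies the lexicographic rule (larger \(r\) first, then smaller \(\vartheta\), then smaller \(\varphi\)). The angles are passed in as precomputed numbers rather than derived, and the tolerance `tol` used to decide floating-point equality is an assumption of this sketch, not part of the definition.

```python
import math
from typing import Tuple

Key = Tuple[float, float, float]  # (r, vartheta, phi) of one chi-LV


def radius(ef: float, ep: float, eg: float, A: float, B: float, C: float) -> float:
    """Definition 21: r = (A*E(f)^2 + B*E(p)^2 + C*E(g)^2)^(1/2)."""
    return math.sqrt(A * ef ** 2 + B * ep ** 2 + C * eg ** 2)


def compare(k1: Key, k2: Key, tol: float = 1e-12) -> int:
    """Definition 24: 1 if l1 is preferred, -1 if l2 is preferred, 0 if indifferent.

    A larger r wins; on a tie in r, the smaller vartheta wins;
    on a further tie, the smaller phi wins.
    """
    r1, t1, p1 = k1
    r2, t2, p2 = k2
    if abs(r1 - r2) > tol:
        return 1 if r1 > r2 else -1
    if abs(t1 - t2) > tol:
        return 1 if t1 < t2 else -1
    if abs(p1 - p2) > tol:
        return 1 if p1 < p2 else -1
    return 0


r1 = radius(0.4, 0.6, 0.5, A=0.5, B=0.3, C=0.2)
print(compare((r1, 0.9, 0.8), (r1, 1.1, 0.2)))  # equal r, so vartheta decides
```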


Example 5

Let \(S = \{ {s_i} |i = 0,1, \ldots,6\}\) be an additive LTS, \({l_1}\chi (x) = \{ [({s_3},0.8,0.2);\,0.6];\,\, \left( \begin{array}{l}< ({s_3},0.7,0.2);\,\,0.6> ,\\ < ({s_4},0.8,0.2);\,0.65 > \end{array} \right) \}\) and \({l_2}\chi (x) = \{ [({s_5},0.8,0.1);\,0.5];\,\left( \begin{array}{l}< ({s_4},0.65,0.1);\,0.5> ,\\ < ({s_5},0.8,0.1);\,0.55 > \end{array} \right) \}\) be two \(\chi\)LVs, and \(A = 0.5,B = 0.3,C = 0.2\).

(1) From Definitions 18-20, we obtain:

(2) From Definitions 21 and 24, based on the above calculation results, we obtain:

(3) From

it can be concluded that

The concept of the possibility degree is introduced before comparing \(\chi\)LSs.

Definition 25

Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\) and \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\) be two \(\chi\)LSs, \(l_1^k\chi (x)\) and \(l_2^k\chi (x)\) are the \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\), \(\# {L_1}\chi (x)\) and \(\# {L_2}\chi (x)\) are the numbers of \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\), then the possibility degree of \({L_1}\chi (x) \ge {L_2}\chi (x)\) is

where \({L_t}{\chi (x)^ + } = \mathop {\max }\limits _k \{ l_t^k\chi (x)\}\) and \({L_t}{\chi (x)^ - } = \mathop {\min }\limits _k \{ l_t^k\chi (x)\} \;(t = 1,2)\); \(r(l_t^k\chi (x))\), \(\vartheta (l_t^k\chi (x))\), and \(\varphi (l_t^k\chi (x))\) are the distance between \(l_t^k\chi (x)\) and the origin O and the two angles of \(l_t^k\chi (x)\) (Definitions 21-23), respectively. The value \(E(l_t^k\chi (x))\) is determined by the following three cases:

-

(1)

If all \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\) have the same \(r(l_t^k\chi (x))\) but not all have the same \(\vartheta (l_t^k\chi (x))\), then \(E(l_t^k\chi (x)) = \vartheta (l_t^k\chi (x))\);

-

(2)

If all \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\) have the same \(r(l_t^k\chi (x))\) and \(\vartheta (l_t^k\chi (x))\), then \(E(l_t^k\chi (x)) = \varphi (l_t^k\chi (x))\);

-

(3)

If neither of the above two cases is satisfied, then \(E(l_t^k\chi (x)) = r(l_t^k\chi (x))\).
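The three cases act as a precedence rule: \(\vartheta\) is used only when every \(r\) coincides, and \(\varphi\) only when both \(r\) and \(\vartheta\) coincide. A small Python sketch of that selection, with each \(\chi\)LV summarized by a hypothetical `(r, theta, phi)` tuple; the tolerance is an assumption, and the possibility-degree formula itself is not implemented here.

```python
from typing import List, Sequence, Tuple

Key = Tuple[float, float, float]  # (r, vartheta, phi) of one chi-LV


def _all_equal(values: Sequence[float], tol: float) -> bool:
    return max(values) - min(values) <= tol


def expectation_values(keys1: List[Key], keys2: List[Key], tol: float = 1e-12):
    """Return the E(l) values of both chi-LSs following the three cases."""
    keys = keys1 + keys2
    same_r = _all_equal([k[0] for k in keys], tol)
    same_theta = _all_equal([k[1] for k in keys], tol)
    if same_r and same_theta:
        idx = 2   # case (2): r and vartheta coincide, use phi
    elif same_r:
        idx = 1   # case (1): only r coincides, use vartheta
    else:
        idx = 0   # case (3): use r
    return [k[idx] for k in keys1], [k[idx] for k in keys2]
```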

Therefore, the comparison method of \(\chi\)LSs is as follows:

Definition 26

Let \({L_1}\chi (x)\) and \({L_2}\chi (x)\) be two \(\chi\)LSs, then:

-

(I)

If \(p({L_1}\chi (x) \ge {L_2}\chi (x)) > p({L_2}\chi (x) \ge {L_1}\chi (x))\), then \({L_1}\chi (x) \succ {L_2}\chi (x)\);

-

(II)

If \(p({L_1}\chi (x) \ge {L_2}\chi (x)) = 1\), then \({L_1}\chi (x) > {L_2}\chi (x)\);

-

(III)

If \(p({L_1}\chi (x) \ge {L_2}\chi (x)) = 0.5\), then \({L_1}\chi (x) \sim {L_2}\chi (x)\).

Theorem 1

(Boundedness) \(0 \le p({L_1}\chi (x) \ge {L_2}\chi (x)) \le 1\).

Proof

This follows directly from Eq. (34).

Theorem 2

(Complementarity) \(p({L_1}\chi (x) \ge {L_2}\chi (x)) + p({L_2}\chi (x) \ge {L_1}\chi (x)) = 1\); especially, if \({L_1}\chi (x) = {L_2}\chi (x)\), then \(p({L_1}\chi (x) \ge {L_2}\chi (x)) = p({L_2}\chi (x) \ge {L_1}\chi (x)) = 0.5\).

The proof of Theorem 2 is shown in Appendix 1.

Example 6

Let \(S = \{ {s_i} |i = 0,1, \ldots,6\}\) be an additive LTS,

\({L_1}\chi (x) = \left\{ \begin{array}{l} \{ [({s_3},0.8,0.2);\,0.6];\,\left( \begin{array}{l}< ({s_3},0.7,0.2);\,0.6> ,\\< ({s_4},0.8,0.2);\,0.65> \end{array} \right) \},\\ \{ [({s_5},0.6,0.2);\,0.7];\,\left( \begin{array}{l}< ({s_4},0.6,0.2);\,0.65> ,\\ < ({s_5},0.7,0.2);\,0.7 > \end{array} \right) \} \end{array} \right\}\),

\({L_2}\chi (x) = \left\{ \begin{array}{l} \{ [({s_5},0.8,0.1);\,0.5];\,\left( \begin{array}{l}< ({s_4},0.65,0.1);\,0.5> ,\\< ({s_5},0.8,0.1);\,0.55> \end{array} \right) \},\\ \{ [({s_3},0.5,0.1);\,0.6];\,\left( \begin{array}{l}< ({s_3},0.5,0.1);\,0.55> ,\\ < ({s_4},0.65,0.1);\,0.6 > \end{array} \right) \} \end{array} \right\}\)

be two \(\chi\)LSs, and \(A = 0.5,B = 0.3,C = 0.2\). From Definition 25, we obtain:

3.3 The normalization of \(\chi\)LSs

In decision making, evaluations may differ in the dimensions of their \(\chi\)LVs and in the number of \(\chi\)LVs they contain. To facilitate operations on \(\chi\)LVs and \(\chi\)LSs, we first normalize them. For \(\chi\)LVs, normalization unifies the dimensions of the \(\chi\)LVs.

Definition 27

Let \({l_1}\chi (x) = < [(s_{\theta ({x_1})}^{{n_1}},\mu _{\sigma ({x_1})}^{{n_1}},\nu _{\sigma ({x_1})}^{{n_1}});\) \(p_{\rho ({x_1})}^{{n_1}}];\,g_{\varsigma ({x_1})}^{{n_1}} >\) and \({l_2}\chi (x) = < [(s_{\theta ({x_2})}^{{n_2}},\mu _{\sigma ({x_2})}^{{n_2}},\nu _{\sigma ({x_2})}^{{n_2}});\) \(p_{\rho ({x_2})}^{{n_2}}];\,g_{\varsigma ({x_2})}^{{n_2}} >\) be two \(\chi\)LVs, and let \({n_1}\) and \({n_2}\) be the dimensions of \({l_1}\chi (x)\) and \({l_2}\chi (x)\), respectively. If \({n_1} > {n_2}\), then \({n_1} - {n_2}\) zero elements are appended to \({l_2}\chi (x)\) so that the two \(\chi\)LVs have the same dimension, where each added element is \({\{ [({s_0},0,0);\,0];\,\left( \begin{array}{l}< ({s_0},0,0);\,0> ,\\ < ({s_0},0,0);\,0 > \end{array} \right) \} }\).

For \(\chi\)LSs, normalization unifies both the dimensions of the \(\chi\)LVs and the number of \(\chi\)LVs that each \(\chi\)LS contains.

Definition 28

Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\) and \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\) be two \(\chi\)LSs, where \(l_1^k\chi (x)\) and \(l_2^k\chi (x)\) are normalized \(\chi\)LVs, and \(\# {L_1}\chi (x)\) and \(\# {L_2}\chi (x)\) are the numbers of \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\), respectively. If \(\# {L_1}\chi (x) > \# {L_2}\chi (x)\), then \(\# {L_1}\chi (x) - \# {L_2}\chi (x)\) \(\chi\)LVs are added to \({L_2}\chi (x)\) so that both \(\chi\)LSs contain the same number of \(\chi\)LVs. The added \(\chi\)LVs depend on the attitude of the DMs. There are two cases:

-

(1)

If DMs take a positive attitude, then the added \(\chi\)LVs are \({L_2}{\chi (x)^ + } = \mathop {\max }\limits _k \{ l_2^k\chi (x)\}\);

-

(2)

If DMs take a negative attitude, then the added \(\chi\)LVs are \({L_2}{\chi (x)^ - } = \mathop {\min }\limits _k \{ l_2^k\chi (x)\}\).

The normalization process of \(\chi\)LSs can be summarized as follows. Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x)> |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\) and \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\) be two \(\chi\)LSs, and let \({n_{1k}}(k = 1,2, \ldots,\# {L_1}\chi (x))\) and \({n_{2k}}(k = 1,2, \ldots,\# {L_2}\chi (x))\) be the dimensions of the \(\chi\)LVs \(l_1^k\chi (x)\) and \(l_2^k\chi (x)\), respectively. Then:

Step 1. If \({n_{1k}} \ne {n_{2k}}\), then, according to Definition 27, zero elements are appended to the \(\chi\)LV with the lower dimension.

Step 2. If \(\# {L_1}\chi (x) \ne \# {L_2}\chi (x)\), then, according to Definition 28, \(\chi\)LVs are added to the \(\chi\)LS that contains fewer \(\chi\)LVs.

The normalized \(\chi\)LVs and \(\chi\)LSs are still denoted as \(l_1^k\chi (x)\), \(l_2^k\chi (x)\), \({L_1}\chi (x)\), and \({L_2}\chi (x)\).
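Steps 1 and 2 can be sketched in Python as follows. Each \(\chi\)LV is stored as a list of hypothetical `(main, lower, upper)` components whose elements are `(theta, mu, nu, p)` tuples. Two simplifications are assumptions of this sketch: every \(\chi\)LV in both sets is padded to the largest dimension found in either set (Step 1 as stated pads pairwise), and `score` is a caller-supplied stand-in for the ordering used to pick the maximum or minimum \(\chi\)LV (for example \(r\) from Definition 21).

```python
from typing import Callable, List, Tuple

Element = Tuple[float, float, float, float]   # (theta, mu, nu, p)
Component = Tuple[Element, Element, Element]  # (main part, lower approx., upper approx.)
ChiLV = List[Component]                       # an n-dimensional chi-LV

# Zero component of Definition 27: [(s0,0,0);0]; (<(s0,0,0);0>, <(s0,0,0);0>)
ZERO: Component = ((0, 0, 0, 0),) * 3


def pad_lv(lv: ChiLV, n: int) -> ChiLV:
    """Definition 27: append zero components until lv has dimension n."""
    return lv + [ZERO] * (n - len(lv))


def normalize_sets(L1: List[ChiLV], L2: List[ChiLV],
                   score: Callable[[ChiLV], float], positive: bool = True):
    """Step 1 (dimensions), then Step 2 (number of chi-LVs, Definition 28)."""
    n = max(len(lv) for lv in L1 + L2)
    L1 = [pad_lv(lv, n) for lv in L1]
    L2 = [pad_lv(lv, n) for lv in L2]
    pick = max if positive else min  # positive vs. negative attitude of the DMs
    if len(L1) < len(L2):
        L1 = L1 + [pick(L1, key=score)] * (len(L2) - len(L1))
    elif len(L2) < len(L1):
        L2 = L2 + [pick(L2, key=score)] * (len(L1) - len(L2))
    return L1, L2


def c(t: float) -> Component:
    """Hypothetical component whose three parts share the subscript t."""
    return ((t, 0.5, 0.2, 0.5),) * 3


def main_sum(lv: ChiLV) -> float:
    """Hypothetical score: sum of the main-part subscripts."""
    return sum(comp[0][0] for comp in lv)


N1, N2 = normalize_sets([[c(3)], [c(5), c(2)]], [[c(4)]], score=main_sum)
```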

3.4 Some operational rules of \(\chi\)LSs

In this section, we introduce some basic operational rules and related theorems of \(\chi\)LVs and \(\chi\)LSs. We assume all \(\chi\)LVs and \(\chi\)LSs have been normalized.

Definition 29

Let \({l_1}\chi (x) = < [(s_{\theta ({x_1})}^{{n_1}},\mu _{\sigma ({x_1})}^{{n_1}},\nu _{\sigma ({x_1})}^{{n_1}});\) \(p_{\rho ({x_1})}^{{n_1}}];\, g_{\varsigma ({x_1})}^{{n_1}} >\) and \({l_2}\chi (x) = < [(s_{\theta ({x_2})}^{{n_2}},\mu _{\sigma ({x_2})}^{{n_2}},\nu _{\sigma ({x_2})}^{{n_2}});\) \(p_{\rho ({x_2})}^{{n_2}}];\,g_{\varsigma ({x_2})}^{{n_2}} >\) be two \(\chi\)LVs, \({n_1} = {n_2}\), the subscript of \(s_{\theta (x)}^n\) is represented as \({\theta ^n}(x)\), and \(\lambda \ge 0\), then the operational rules of \(\chi\)LVs are as follows:

-

(1)

\({l_1}\chi (x) \oplus {l_2}\chi (x) = < \mathop \cup \limits _{n = 1,2, \ldots,{n_1}} \{ [({s_{{\theta ^n}({x_1}) + {\theta ^n}({x_2})}},\frac{{{\theta ^n}({x_1})\mu _{\sigma ({x_1})}^n + {\theta ^n}({x_2})\mu _{\sigma ({x_2})}^n}}{{{\theta ^n}({x_1}) + {\theta ^n}({x_2})}}, \frac{{{\theta ^n}({x_1})\nu _{\sigma ({x_1})}^n + {\theta ^n}({x_2})\nu _{\sigma ({x_2})}^n}}{{{\theta ^n}({x_1}) + {\theta ^n}({x_2})}});\,\frac{{p_{\rho ({x_1})}^n + p_{\rho ({x_2})}^n}}{2}];\,g_{\varsigma ({x_1})}^n \oplus g_{\varsigma ({x_2})}^n\} >\).

-

(2)

\({l_1}\chi (x) \otimes {l_2}\chi (x) = < \mathop \cup \limits _{n = 1,2, \ldots,{n_1}} \{ [({s_{{\theta ^n}({x_1}) \times {\theta ^n}({x_2})}},\mu _{\sigma ({x_1})}^n\mu _{\sigma ({x_2})}^n,\nu _{\sigma ({x_1})}^n + \nu _{\sigma ({x_2})}^n);\, p_{\rho ({x_1})}^n \times p_{\rho ({x_2})}^n];\,g_{\varsigma ({x_1})}^n \otimes g_{\varsigma ({x_2})}^n\} >\).

-

(3)

\(\lambda {l_1}\chi (x) = < \mathop \cup \limits _{n = 1,2, \ldots,{n_1}} \{ [({s_{\lambda {\theta ^n}({x_1})}},\mu _{\sigma ({x_1})}^n,\nu _{\sigma ({x_1})}^n);\,p_{\rho ({x_1})}^n];\,\lambda g_{\varsigma ({x_1})}^n\} >\).

-

(4)

\({\left( {{l_1}\chi (x)} \right) ^\lambda } = < \mathop \cup \limits _{n = 1,2, \ldots,{n_1}} \{ [({s_{{{\left( {{\theta ^n}({x_1})} \right) }^\lambda }}},{\left( {\mu _{\sigma ({x_1})}^n} \right) ^\lambda },1 - {\left( {1 - \nu _{\sigma ({x_1})}^n} \right) ^\lambda });\, {\left( {p_{\rho ({x_1})}^n} \right) ^\lambda }];\,{\left( {g_{\varsigma ({x_1})}^n} \right) ^\lambda }\} >\).

Theorem 3

Let \({l_1}\chi (x)= < [(s_{\theta ({x_1})}^{{n_1}},\mu _{\sigma ({x_1})}^{{n_1}},\nu _{\sigma ({x_1})}^{{n_1}});\) \(p_{\rho ({x_1})}^{{n_1}}];\,g_{\varsigma ({x_1})}^{{n_1}} >\) and \({l_2}\chi (x) = < [(s_{\theta ({x_2})}^{{n_2}},\mu _{\sigma ({x_2})}^{{n_2}},\nu _{\sigma ({x_2})}^{{n_2}});\) \(p_{\rho ({x_2})}^{{n_2}}];\,g_{\varsigma ({x_2})}^{{n_2}} >\) be two \(\chi\)LVs, and \(\lambda ,{\lambda _1},{\lambda _2} \ge 0\), then

-

(1)

\({l_1}\chi (x) \oplus {l_2}\chi (x) = {l_2}\chi (x) \oplus {l_1}\chi (x)\);

-

(2)

\({l_1}\chi (x) \otimes {l_2}\chi (x) = {l_2}\chi (x) \otimes {l_1}\chi (x)\);

-

(3)

\({\lambda _1}{l_1}\chi (x) \oplus {\lambda _2}{l_1}\chi (x) = ({\lambda _1} + {\lambda _2}){l_1}\chi (x)\).

The proof of Theorem 3 is shown in Appendix 2.

Example 7

Use the \(\chi\)LVs \({l_1}\chi (x)\) and \({l_2}\chi (x)\) in Example 5, then

-

(1)

\({l_1}\chi (x) \oplus {l_2}\chi (x)\) \(= \{ [({s_8},0.8,0.14);\,0.55];\,\left( \begin{array}{l}< ({s_7},0.67,0.14);\,0.55> ,\\ < ({s_9},0.8,0.14);\,0.6 > \end{array} \right) \}\).

-

(2)

\({l_1}\chi (x) \otimes {l_2}\chi (x)\) \(= \{ [({s_{15}},0.64,0.3);\,0.3];\,\left( \begin{array}{l}< ({s_{12}},0.46,0.3);\,0.3> ,\\ < ({s_{20}},0.64,0.3);\,0.36 > \end{array} \right) \}\).

-

(3)

\(2{l_1}\chi (x)\) \(= \{ [({s_6},0.8,0.2);\,0.6];\,\left( \begin{array}{l}< ({s_6},0.7,0.2);\,0.6> ,\\ < ({s_8},0.8,0.2);\,0.65 > \end{array} \right) \}\).

-

(4)

\({({l_1}\chi (x))^2}\) \(= \{ [({s_9},0.64,0.36);\,0.36];\,\left( \begin{array}{l}< ({s_9},0.49,0.36);\,0.36> ,\\ < ({s_{16}},0.64,0.36);\,0.42 > \end{array} \right) \}\).
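Because the rules of Definition 29 act element by element, they are easy to check mechanically. The Python sketch below implements them for one-dimensional \(\chi\)LVs stored as a hypothetical triple `(main, lower, upper)` of `Elem(theta, mu, nu, p)` records. It assumes that \(\oplus\) and \(\otimes\) on the approximations \(g\) use the same element rules as the main part, which matches the rules for \(\lambda g\) and \(g^\lambda\) given earlier, and that \(\theta_1 + \theta_2 > 0\) in rule (1). The usage lines apply it to \({l_1}\chi (x)\) and \({l_2}\chi (x)\) from Example 5; for instance, the lower approximation of the product is \(< ({s_{12}},0.455,0.3);0.3>\), i.e. \(0.46\) after rounding.

```python
from typing import NamedTuple, Tuple


class Elem(NamedTuple):
    theta: float  # subscript of s_theta
    mu: float     # membership degree
    nu: float     # non-membership degree
    p: float      # probability


ChiLV = Tuple[Elem, Elem, Elem]  # (main part, lower approx., upper approx.), n = 1


def add(a: Elem, b: Elem) -> Elem:
    """Rule (1): theta-weighted mu and nu, mean of p (assumes a.theta + b.theta > 0)."""
    t = a.theta + b.theta
    return Elem(t, (a.theta * a.mu + b.theta * b.mu) / t,
                (a.theta * a.nu + b.theta * b.nu) / t, (a.p + b.p) / 2)


def mul(a: Elem, b: Elem) -> Elem:
    """Rule (2): product of subscripts, mu and p; sum of nu."""
    return Elem(a.theta * b.theta, a.mu * b.mu, a.nu + b.nu, a.p * b.p)


def scale(lam: float, a: Elem) -> Elem:
    """Rule (3): multiply the subscript by lambda."""
    return Elem(lam * a.theta, a.mu, a.nu, a.p)


def power(a: Elem, lam: float) -> Elem:
    """Rule (4): raise subscript, mu and p to lambda; nu -> 1 - (1 - nu)^lambda."""
    return Elem(a.theta ** lam, a.mu ** lam, 1 - (1 - a.nu) ** lam, a.p ** lam)


def chi_add(x: ChiLV, y: ChiLV) -> ChiLV:
    return tuple(add(a, b) for a, b in zip(x, y))


def chi_mul(x: ChiLV, y: ChiLV) -> ChiLV:
    return tuple(mul(a, b) for a, b in zip(x, y))


def chi_scale(lam: float, x: ChiLV) -> ChiLV:
    return tuple(scale(lam, a) for a in x)


def chi_power(x: ChiLV, lam: float) -> ChiLV:
    return tuple(power(a, lam) for a in x)


# l_1 chi(x) and l_2 chi(x) from Example 5
l1 = (Elem(3, 0.8, 0.2, 0.6), Elem(3, 0.7, 0.2, 0.6), Elem(4, 0.8, 0.2, 0.65))
l2 = (Elem(5, 0.8, 0.1, 0.5), Elem(4, 0.65, 0.1, 0.5), Elem(5, 0.8, 0.1, 0.55))
for name, value in [("sum", chi_add(l1, l2)), ("product", chi_mul(l1, l2)),
                    ("2*l1", chi_scale(2, l1)), ("l1^2", chi_power(l1, 2))]:
    print(name, [tuple(round(v, 2) for v in e) for e in value])
```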

Next, some basic operational rules of \(\chi\)LSs are introduced.

Definition 30

Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\) and \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\) be two \(\chi\)LSs, \(\# {L_1}\chi (x) = \# {L_2}\chi (x)\), and \(\lambda ,{\lambda _1},{\lambda _2} \ge 0\), then the operational rules of \(\chi\)LSs are as follows:

-

(1)

\({L_1}\chi (x) \oplus {L_2}\chi (x)\) \(= \mathop \cup \limits _{l_1^{\delta (k)}\chi (x) \in {L_1}\chi (x),\;\,l_2^{\delta (k)}\chi (x) \in {L_2}\chi (x)} \{ l_1^{\delta (k)}\chi (x) \oplus l_2^{\delta (k)}\chi (x)\}\);

-

(2)

\({L_1}\chi (x) \otimes {L_2}\chi (x)\) \(= \mathop \cup \limits _{l_1^{\delta (k)}\chi (x) \in {L_1}\chi (x),\;\,l_2^{\delta (k)}\chi (x) \in {L_2}\chi (x)} \{ l_1^{\delta (k)}\chi (x) \otimes l_2^{\delta (k)}\chi (x)\}\);

-

(3)

\(\lambda {L_1}\chi (x) = \mathop \cup \limits _{l_1^{\delta (k)}\chi (x) \in {L_1}\chi (x)} \{ \lambda l_1^{\delta (k)}\chi (x)\}\);

-

(4)

\({\left( {{L_1}\chi (x)} \right) ^\lambda } = \mathop \cup \limits _{l_1^{\delta (k)}\chi (x) \in {L_1}\chi (x)} \{ {\left( {l_1^{\delta (k)}\chi (x)} \right) ^\lambda }\}\), where \(l_1^{\delta (k)}\chi (x)\) and \(l_2^{\delta (k)}\chi (x)\) are the kth largest \(\chi\)LVs in \({L_1}\chi (x)\) and \({L_2}\chi (x)\), respectively.
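Rules (1) and (2) of Definition 30 pair \(\chi\)LVs by rank. A generic Python sketch of that pairing: both \(\chi\)LSs are sorted in descending order of a caller-supplied `key`, and the kth largest elements are combined with a binary operation such as the \(\chi\)LV addition of Definition 29. The `key` is an assumption; one choice consistent with Definition 24 is the tuple \((r, -\vartheta, -\varphi)\), since Python compares tuples lexicographically.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def pair_by_rank(L1: List[T], L2: List[T], key: Callable[[T], object],
                 op: Callable[[T, T], T]) -> List[T]:
    """Combine the kth largest chi-LV of L1 with the kth largest chi-LV of L2."""
    if len(L1) != len(L2):
        raise ValueError("normalize the chi-LSs to the same size first")
    ranked1 = sorted(L1, key=key, reverse=True)
    ranked2 = sorted(L2, key=key, reverse=True)
    return [op(a, b) for a, b in zip(ranked1, ranked2)]


def map_set(L: List[T], f: Callable[[T], T]) -> List[T]:
    """Rules (3) and (4): apply a unary operation to every chi-LV."""
    return [f(x) for x in L]
```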

Theorem 4

Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\), \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\), and \({L_3}\chi (x) = \{ < x,l_3^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_3}\chi (x)\}\) be three \(\chi\)LSs, \(\# {L_1}\chi (x) = \# {L_2}\chi (x) = \# {L_3}\chi (x)\), and \(\lambda ,{\lambda _1},{\lambda _2} \ge 0\), then

-

(1)

\({L_1}\chi (x) \oplus {L_2}\chi (x) = {L_2}\chi (x) \oplus {L_1}\chi (x)\);

-

(2)

\(({L_1}\chi (x) \oplus {L_2}\chi (x)) \oplus {L_3}\chi (x)= {L_1}\chi (x) \oplus ({L_2}\chi (x) \oplus {L_3}\chi (x))\);

-

(3)

\({L_1}\chi (x) \otimes {L_2}\chi (x) = {L_2}\chi (x) \otimes {L_1}\chi (x)\);

Proof

The above theorem is easy to prove, so we omit the proof here.

3.5 The distance measure of \(\chi\)LSs

In this section, the distance between \(\chi\)LVs and the distance between \(\chi\)LSs are introduced. We assume all \(\chi\)LVs and \(\chi\)LSs have been normalized.

Definition 31

Let \({l_1}\chi (x)\) and \({l_2}\chi (x)\) be two \(\chi\)LVs. The ambiguity expectation, the possibility expectation, and the approximation expectation of \({l_1}\chi (x)\) are \(E({f^1})\), \(E({p^1})\), and \(E({g^1})\), respectively, and those of \({l_2}\chi (x)\) are \(E({f^2})\), \(E({p^2})\), and \(E({g^2})\), respectively. Then, the generalized distance between \({l_1}\chi (x)\) and \({l_2}\chi (x)\) is:

$$\begin{aligned} d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) = {\left( {A{{\left|{E({f^1}) - E({f^2})} \right|}^\lambda } + B{{\left|{E({p^1}) - E({p^2})} \right|}^\lambda } + C{{\left|{E({g^1}) - E({g^2})} \right|}^\lambda }} \right) ^{1/\lambda }}, \end{aligned}$$

where \(0 \le A,B,C \le 1\), \(A + B + C = 1\), and \(\lambda > 0\).

Remark 2

-

(1)

If \(\lambda = 1\), then the generalized distance degenerates into the Hamming distance:

$$\begin{aligned} \begin{array}{l} \;\;\;\;\;d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) \\ = A\left|{E({f^1}) - E({f^2})} \right|+ B\left|{E({p^1}) - E({p^2})} \right|+ C\left|{E({g^1}) - E({g^2})} \right|. \end{array} \end{aligned}$$(36)

-

(2)

If \(\lambda = 2\), then the generalized distance degenerates into the Euclidean distance:

$$\begin{aligned} \begin{array}{l} \;\;\;\;\;d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) \\ = {\left( \begin{array}{l} A{\left|{E({f^1}) - E({f^2})} \right|^2} + B{\left|{E({p^1}) - E({p^2})} \right|^2} + C{\left|{E({g^1}) - E({g^2})} \right|^2} \end{array} \right) ^{1/2}}. \end{array} \end{aligned}$$(37)

-

(3)

If \(E({f^2}) = E({p^2}) = E({g^2}) = 0\), and \(\lambda = 2\), then the generalized distance is equivalent to the distance between \({{l_1}\chi (x)}\) and the origin O proposed in Definition 21:

$$\begin{aligned} \begin{array}{l} \;\;\;\;\;d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) \\ = {\left( {A{{\left|{E({f^1})} \right|}^2} + B{{\left|{E({p^1})} \right|}^2} + C{{\left|{E({g^1})} \right|}^2}} \right) ^{1/2}} = r\left( {{l_1}\chi (x)} \right) . \end{array} \end{aligned}$$(38)
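A Python sketch of the generalized distance, with each \(\chi\)LV summarized by its triple of expectations \((E(f), E(p), E(g))\) computed elsewhere. Setting \(\lambda = 1\) or \(\lambda = 2\) gives the Hamming and Euclidean cases of Eqs. (36) and (37), and a zero second argument with \(\lambda = 2\) gives \(r\) as in Eq. (38). The input validation is an addition of this sketch, and the expectation values in the usage lines are illustrative, not taken from the examples.

```python
import math
from typing import Tuple

Expect = Tuple[float, float, float]  # (E(f), E(p), E(g)) of one chi-LV


def generalized_distance(e1: Expect, e2: Expect, A: float, B: float, C: float,
                         lam: float) -> float:
    """(A|dE(f)|^lam + B|dE(p)|^lam + C|dE(g)|^lam)^(1/lam)."""
    if lam <= 0:
        raise ValueError("lambda must be positive")
    if not math.isclose(A + B + C, 1.0):
        raise ValueError("weights must sum to 1")
    total = sum(w * abs(x - y) ** lam for w, x, y in zip((A, B, C), e1, e2))
    return total ** (1 / lam)


e1, e2 = (0.5, 0.6, 0.4), (0.3, 0.5, 0.1)
hamming = generalized_distance(e1, e2, 0.5, 0.3, 0.2, lam=1)    # Eq. (36)
euclid = generalized_distance(e1, e2, 0.5, 0.3, 0.2, lam=2)     # Eq. (37)
r = generalized_distance(e1, (0, 0, 0), 0.5, 0.3, 0.2, lam=2)  # Eq. (38)
print(hamming, euclid, r)
```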

Theorem 5

Let \({l_1}\chi (x)\) and \({l_2}\chi (x)\) be two \(\chi\)LVs, then:

-

(1)

\(0 \le d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) \le 1\).

-

(2)

\(d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) = 0 \Rightarrow {l_1}\chi (x) \sim {l_2}\chi (x)\).

-

(3)

\(d\left( {{l_1}\chi (x),{l_2}\chi (x)} \right) = d\left( {{l_2}\chi (x),{l_1}\chi (x)} \right)\).

The proof of Theorem 5 is shown in Appendix 3.

Next, we introduce the distance between \(\chi\)LSs.

Definition 32

Let \({L_1}\chi (x) = \{ < x,l_1^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_1}\chi (x)\}\) and \({L_2}\chi (x) = \{ < x,l_2^k\chi (x) > |x \in X,k = 1,2, \ldots,\# {L_2}\chi (x)\}\) be two \(\chi\)LSs, and \(\# {L_1}\chi (x) = \# {L_2}\chi (x)\), then the distance between \({L_1}\chi (x)\) and \({L_2}\chi (x)\) is:

where \({d(l_1^k\chi (x),l_2^k\chi (x))}\) is the distance between \({l_1^k\chi (x)}\) and \({l_2^k\chi (x)}\).

Remark 3

Let \({f_1^k}\), \({f_2^k}\), \({p_1^k}\), \({p_2^k}\), \({g_1^k}\) and \({g_2^k}\) be the ambiguity expectations, the possibility expectations, and the approximation expectations of \({l_1^k\chi (x)}\) and \({l_2^k\chi (x)}\), respectively. \(0 \le A,B,C \le 1\), \(A + B + C = 1\), and \(\lambda > 0\). Then:

(1) If \(d(l_1^k\chi (x),l_2^k\chi (x))\) is the generalized distance, then \(d({L_1}\chi (x),{L_2}\chi (x))\) is the generalized distance between \({L_1}\chi (x)\) and \({L_2}\chi (x)\):

(2) If \(d(l_1^k\chi (x),l_2^k\chi (x))\) is the Hamming distance, then \(d({L_1}\chi (x),{L_2}\chi (x))\) is the Hamming distance between \({L_1}\chi (x)\) and \({L_2}\chi (x)\):

(3) If \(d(l_1^k\chi (x),l_2^k\chi (x))\) is the Euclidean distance, then \(d({L_1}\chi (x),{L_2}\chi (x))\) is the Euclidean distance between \({L_1}\chi (x)\) and \({L_2}\chi (x)\):

Theorem 6

Let \({L_1}\chi (x)\) and \({L_2}\chi (x)\) be two \(\chi\)LSs, then:

-

(1)

\(0 \le d\left( {{L_1}\chi (x),{L_2}\chi (x)} \right) \le 1\);

-

(2)

\(d\left( {{L_1}\chi (x),{L_2}\chi (x)} \right) = 0 \Rightarrow {L_1}\chi (x) = {L_2}\chi (x)\);

-

(3)

\(d\left( {{L_1}\chi (x),{L_2}\chi (x)} \right) = d\left( {{L_2}\chi (x),{L_1}\chi (x)} \right)\).

Proof

The above theorem is easy to prove, so we omit the proof here.

4 A new \(\chi\)LVIKOR method based on \(\chi\)-linguistic sets for MAGDM

\(\chi\)-linguistic information can be applied to MAGDM problems. Assume \(X = \{ {x_1},{x_2}, \ldots,{x_t}\}\) is a set of t alternatives, \(A = \{ {a_1},{a_2}, \ldots,{a_q}\}\) is a set of q attributes, \(\omega = {({\omega _1},{\omega _2}, \ldots,{\omega _q})^T}\) is the known attribute weight vector, \({\omega _\beta } \in [0,1](\beta = 1,2, \ldots,q)\), and \(\sum \limits _{\beta = 1}^q {{\omega _\beta }} = 1\). The evaluations of multiple DMs can be represented by a \(\chi\)LS \({L_{\alpha \beta }}\chi (x) = \{ l_{\alpha \beta }^k\chi (x) |k = 1,2, \ldots,\# {L_{\alpha \beta }}\chi (x)\}\), which represents the decision information of the alternative \({x_\alpha }(\alpha = 1,2, \ldots,t)\) under the attribute \({a_\beta }(\beta = 1,2, \ldots,q)\). All the \(\chi\)LSs constitute a \(\chi\)-linguistic decision matrix \(R = {[{L_{\alpha \beta }}\chi (x)]_{t \times q}}\),

where \({L_{\alpha \beta }}\chi (x) = \{ l_{\alpha \beta }^k\chi (x) |k = 1,2, \ldots,\# {L_{\alpha \beta }}\chi (x)\} (\alpha = 1,2, \ldots,t,\beta = 1,2, \ldots,q)\), and \(l_{\alpha \beta }^k\chi (x)\) is the kth \(\chi\)LV in \({L_{\alpha \beta }}\chi (x)\). We assume all \(\chi\)LSs and \(\chi\)LVs in this section are normalized.

4.1 Some basic operators for \(\chi\)LSs

To better aggregate the decision information, we propose some basic operators for \(\chi\)LSs.

Definition 33

Let \({L_\beta }\chi (x) = \{ l_\beta ^k\chi (x) |k = 1,2, \ldots,\# {L_\beta }\chi (x)\}\) \((\beta = 1,2, \ldots,q)\) be q \(\chi\)LSs, then the \(\chi\)-linguistic weighted averaging (\(\chi\)LWA) operator is defined as follows:

where \(l_\beta ^{\delta (k)}\chi (x)(\beta = 1,2, \ldots,q)\) is the kth largest \(\chi\)LV in \({L_\beta }\chi (x)\), \(\omega = {({\omega _1},{\omega _2}, \ldots,{\omega _q})^T}\) is the weight vector, \({\omega _\beta } \in [0,1]\), and \(\sum \limits _{\beta = 1}^q {{\omega _\beta }} = 1\).

Remark 4

When \(\omega = {(\frac{1}{q},\frac{1}{q}, \ldots,\frac{1}{q})^T}\), the \(\chi\)LWA operator degenerates into the \(\chi\)-linguistic averaging (\(\chi\)LA) operator:

where \(l_\beta ^{\delta (k)}\chi (x)(\beta = 1,2, \ldots,q)\) is the kth largest \(\chi\)LV in \({L_\beta }\chi (x)\).

Definition 34

Let \({L_\beta }\chi (x) = \{ l_\beta ^k\chi (x) |k = 1,2, \ldots,\# {L_\beta }\chi (x)\}\) \((\beta = 1,2, \ldots,q)\) be q \(\chi\)LSs, then the \(\chi\)-linguistic weighted geometric (\(\chi\)LWG) operator is defined as follows:

where \(l_\beta ^{\delta (k)}\chi (x)(\beta = 1,2, \ldots,q)\) is the kth largest \(\chi\)LV in \({L_\beta }\chi (x)\), \(\omega = {({\omega _1},{\omega _2}, \ldots,{\omega _q})^T}\) is the weight vector, \({\omega _\beta } \in [0,1]\), and \(\sum \limits _{\beta = 1}^q {{\omega _\beta }} = 1\).

Remark 5

When \(\omega = {(\frac{1}{q},\frac{1}{q}, \ldots,\frac{1}{q})^T}\), the \(\chi\)LWG operator degenerates into the \(\chi\)-linguistic geometric (\(\chi\)LG) operator:

where \(l_\beta ^{\delta (k)}\chi (x)(\beta = 1,2, \ldots,q)\) is the kth largest \(\chi\)LV in \({L_\beta }\chi (x)\).

4.2 The \(\chi\)LVIKOR method

The VIKOR method was proposed by Opricovic (1998). It determines a compromise solution that provides the maximum group utility for the majority and the minimum individual regret for the opponent. It also takes into account the relative importance of the distances of the compromise solution from the ideal solution and from the negative ideal solution. VIKOR is widely used in MAGDM problems. In this section, we propose the \(\chi\)LVIKOR method for \(\chi\)LSs.

Definition 35

Let \(R = {[{L_{\alpha \beta }}\chi (x)]_{t \times q}}\) be a \(\chi\)-linguistic decision matrix with \({L_{\alpha \beta }}\chi (x) = \{ l_{\alpha \beta }^k\chi (x) |k = 1,2, \ldots,\# {L_{\alpha \beta }}\chi (x)\} (\alpha = 1,2, \ldots,t,\beta = 1,2, \ldots,q)\). Then, the best of the \(\chi\)LSs \({L_{\alpha \beta }}\chi (x)\) \((\alpha = 1,2, \ldots,t)\) under the attribute \({a_\beta }\) is denoted as \({L_\beta }{\chi (x)^*}\):

where \({l_\beta ^k{\chi (x)}^*}=< [({{s{_{\theta ({x_\beta })}^{{n_k}}}}^*},{{\mu {_{\sigma ({x_\beta })}^{{n_k}}}}^*},{{\nu {_{\sigma ({x_\beta })}^{{n_k}}}}^*});{{p{_{\rho ({x_\beta })}^{{n_k}}}}^*}];\) \({{g{_{\varsigma ({x_\beta })}^{{n_k}}}}^*} >\), \({s{_{\theta ({x_\beta })}^{{n_k}}}^*} = \mathop {\max }\limits _\alpha \{ s_{\theta ({x_{\alpha \beta }})}^{{n_k}}\}\), \({\mu {_{\sigma ({x_\beta })}^{{n_k}}}^*},{\nu {_{\sigma ({x_\beta })}^{{n_k}}}^*} = \{ \mu _{\sigma ({x_{\alpha \beta }})}^{{n_k}},\nu _{\sigma ({x_{\alpha \beta }})}^{{n_k}} |\mathop {\max }\limits _\alpha (\mu _{\sigma ({x_{\alpha \beta }})}^{{n_k}} - \nu _{\sigma ({x_{\alpha \beta }})}^{{n_k}})\}\), \({p{_{\rho ({x_\beta })}^{{n_k}}}^*} = \mathop {\max }\limits _\alpha \{ p_{\rho ({x_{\alpha \beta }})}^{{n_k}}\}\) and \({g{_{\varsigma ({x_\beta })}^{{n_k}}}^*} = \{ g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}} |\mathop {\max }\limits _\alpha E(g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}})\}\). \(E(g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}})\) is the approximation expectation of \(l_{\alpha \beta }^k\chi (x)\).

Definition 36

Let \(R = {[{L_{\alpha \beta }}\chi (x)]_{t \times q}}\) be a \(\chi\)-linguistic decision matrix with \({L_{\alpha \beta }}\chi (x) = \{ l_{\alpha \beta }^k\chi (x) |k = 1,2, \ldots,\# {L_{\alpha \beta }}\chi (x)\} (\alpha = 1,2, \ldots,t,\beta = 1,2, \ldots,q)\). Then, the worst of the \(\chi\)LSs \({L_{\alpha \beta }}\chi (x)\) \((\alpha = 1,2, \ldots,t)\) under the attribute \({a_\beta }\) is denoted as \({L_\beta }{\chi (x)^\# }\):

where \({l_\beta ^k{\chi (x)}^\#}=< [({{s{_{\theta ({x_\beta })}^{{n_k}}}}^\#},{{\mu {_{\sigma ({x_\beta })}^{{n_k}}}}^\#},{{\nu {_{\sigma ({x_\beta })}^{{n_k}}}}^\#});{{p{_{\rho ({x_\beta })}^{{n_k}}}}^\#}];{{g{_{\varsigma ({x_\beta })}^{{n_k}}}}^\#} >\), \({s{_{\theta ({x_\beta })}^{{n_k}}}^\#} = \mathop {\min }\limits _\alpha \{ s_{\theta ({x_{\alpha \beta }})}^{{n_k}}\}\), \({\{\mu {_{\sigma ({x_\beta })}^{{n_k}}}^\#},{\nu {_{\sigma ({x_\beta })}^{{n_k}}}^\#\}} = \{ \mu _{\sigma ({x_{\alpha \beta }})}^{{n_k}},\nu _{\sigma ({x_{\alpha \beta }})}^{{n_k}} |\mathop {\min }\limits _\alpha (\mu _{\sigma ({x_{\alpha \beta }})}^{{n_k}} - \nu _{\sigma ({x_{\alpha \beta }})}^{{n_k}})\}\), \({p{_{\rho ({x_\beta })}^{{n_k}}}^\#} = \mathop {\min }\limits _\alpha \{ p_{\rho ({x_{\alpha \beta }})}^{{n_k}}\}\) and \({g{_{\varsigma ({x_\beta })}^{{n_k}}}^\#} = \{ g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}} |\mathop {\min }\limits _\alpha E(g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}})\}\). \(E(g_{\varsigma ({x_{\alpha \beta }})}^{{n_k}})\) is the approximation expectation of \(l_{\alpha \beta }^k\chi (x)\).
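The component-wise construction in Definitions 35 and 36 can be sketched in Python for one attribute \(a_\beta\) and one position. Each alternative contributes a hypothetical `Entry` record holding the main part, the approximation `g`, and its precomputed approximation expectation `eg`; computing `eg` is outside the sketch. The \((\mu, \nu)\) pair is taken together from the alternative with the largest (or smallest) \(\mu - \nu\), as the definitions require; the sample entries are illustrative.

```python
from typing import Any, NamedTuple, Sequence


class Entry(NamedTuple):
    """One position of L_{alpha beta} chi(x) for a single alternative alpha."""
    theta: float  # subscript of s_theta
    mu: float     # membership degree
    nu: float     # non-membership degree
    p: float      # probability
    g: Any        # approximation pair (lower, upper), kept as-is
    eg: float     # approximation expectation E(g), computed elsewhere


def reference(entries: Sequence[Entry], best: bool = True) -> Entry:
    """Definition 35 (best=True) or Definition 36 (best=False), component-wise."""
    pick = max if best else min
    mu_nu = pick(entries, key=lambda e: e.mu - e.nu)  # (mu, nu) kept as a pair
    g_src = pick(entries, key=lambda e: e.eg)
    return Entry(theta=pick(e.theta for e in entries), mu=mu_nu.mu, nu=mu_nu.nu,
                 p=pick(e.p for e in entries), g=g_src.g, eg=g_src.eg)


column = [Entry(3, 0.8, 0.2, 0.6, "g1", 0.45),
          Entry(5, 0.6, 0.3, 0.7, "g2", 0.5),
          Entry(4, 0.7, 0.05, 0.5, "g3", 0.4)]
best, worst = reference(column), reference(column, best=False)
```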

After determining the best solution \(L{\chi (x)^*} = \{ {L_1}{\chi (x)^*},{L_2}{\chi (x)^*}, \ldots,{L_q}{\chi (x)^*}\}\) and the worst solution \(L{\chi (x)^\# } = \{ {L_1}{\chi (x)^\# },{L_2}{\chi (x)^\# }, \ldots,{L_q}{\chi (x)^\# }\}\) with Definitions 35 and 36, we calculate values \({{\mathcal {{S}}}_\alpha }\) and \({{\mathcal {{R}}}_\alpha } (\alpha = 1,2, \ldots,t)\):

where \({d({L_\beta }{{\chi (x)}^*},{L_{\alpha \beta }}\chi (x))}\) is the distance between \({{L_\beta }{{\chi (x)}^*}}\) and \({{L_{\alpha \beta }}\chi (x)}\), and \({d({L_\beta }{{\chi (x)}^\# },{L_{\alpha \beta }}\chi (x))}\) is the distance between \({{L_\beta }{{\chi (x)}^\# }}\) and \({{L_{\alpha \beta }}\chi (x)}\).
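For orientation, the following Python sketch computes \({\mathcal {{S}}}_\alpha\) and \({\mathcal {{R}}}_\alpha\) in the usual VIKOR way, as the weighted sum and the maximum of per-attribute regrets, from precomputed distances \(d({L_\beta }{\chi (x)^*},{L_{\alpha \beta }}\chi (x))\) and \(d({L_\beta }{\chi (x)^\# },{L_{\alpha \beta }}\chi (x))\). The per-attribute normalization \(d^*/(d^*+d^\#)\) is our assumption and may differ from the one used in Eqs. (50) and (51); for scalar values on a line it reduces to the classical VIKOR ratio \((f^* - f)/(f^* - f^-)\). The function name is hypothetical.

```python
def group_utility_and_regret(weights, d_best, d_worst):
    """Sketch of S_alpha (group utility) and R_alpha (individual regret).

    weights: attribute weights omega_beta (length q)
    d_best[alpha][beta]:  distance to the best solution L_beta chi(x)^*
    d_worst[alpha][beta]: distance to the worst solution L_beta chi(x)^#
    Assumption: each attribute's regret is normalized as d* / (d* + d#).
    """
    S, R = [], []
    for db_row, dw_row in zip(d_best, d_worst):
        terms = []
        for w, db, dw in zip(weights, db_row, dw_row):
            total = db + dw
            # If the value coincides with both ideals (no spread), the regret is 0
            terms.append(w * db / total if total > 0 else 0.0)
        S.append(sum(terms))   # weighted sum of regrets
        R.append(max(terms))   # largest single weighted regret
    return S, R
```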

Then, the value \({{\mathcal {{Q}}}_\alpha }(\alpha = 1,2, \ldots,t)\) is calculated:

where \({{\mathcal {{S}}}^\# } = \mathop {\min }\limits _\alpha \{ {{\mathcal {{S}}}_\alpha }\}\), \({{\mathcal {{S}}}^*} = \mathop {\max }\limits _\alpha \{ {{\mathcal {{S}}}_\alpha }\}\), \({{\mathcal {{R}}}^\# } = \mathop {\min }\limits _\alpha \{ {{\mathcal {{R}}}_\alpha }\}\), and \({{\mathcal {{R}}}^*} = \mathop {\max }\limits _\alpha \{ {{\mathcal {{R}}}_\alpha }\}\). \(v\) is the weight of the strategy of “the majority of attributes”; here we set \(v=0.5\).
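The quantities defined above can be combined as in standard VIKOR. Assuming Eq. (52) takes the standard form \({\mathcal {{Q}}}_\alpha = v\frac{{\mathcal {{S}}}_\alpha - {\mathcal {{S}}}^\#}{{\mathcal {{S}}}^* - {\mathcal {{S}}}^\#} + (1-v)\frac{{\mathcal {{R}}}_\alpha - {\mathcal {{R}}}^\#}{{\mathcal {{R}}}^* - {\mathcal {{R}}}^\#}\), which agrees with these definitions and with the rule that smaller values rank higher, a minimal Python sketch is given below. The function names and the guard for a zero spread are our own choices.

```python
def compromise_index(S, R, v=0.5):
    """Q_alpha in the standard VIKOR form (assumed).

    Notation follows the text: S^# = min S, S^* = max S, and likewise for R.
    """
    s_min, s_max = min(S), max(S)
    r_min, r_max = min(R), max(R)

    def ratio(x, lo, hi):
        # If all alternatives share the same value, this term contributes 0
        return (x - lo) / (hi - lo) if hi > lo else 0.0

    return [v * ratio(s, s_min, s_max) + (1 - v) * ratio(r, r_min, r_max)
            for s, r in zip(S, R)]


def rank(values):
    """0-based alternative indices ordered from best to worst (smaller is better)."""
    return sorted(range(len(values)), key=lambda i: values[i])
```

Applying `rank` separately to S, R, and Q gives the three rankings used in the next step.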

Rank the alternatives by the values of \({\mathcal {{S}}}\), \({\mathcal {{R}}}\), and \({\mathcal {{Q}}}\) to obtain three rankings. The smaller the value, the higher the rank.

Next, determine a compromise solution. If both of the following conditions are satisfied, the alternative \({x^1}\) ranked first by \({\mathcal {{Q}}}\) (i.e., with the minimum \({\mathcal {{Q}}}\)) is the compromise solution. The conditions are as follows:

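Condition 1. Acceptable advantage.

$$\begin{aligned} {\mathcal {{Q}}}({x^2}) - {\mathcal {{Q}}}({x^1}) \ge \frac{1}{{t - 1}}, \end{aligned}$$

where \({x^2}\) is the second-ranked alternative obtained by \({\mathcal {{Q}}}\), and t is the number of alternatives.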

Condition 2. Acceptable stability in decision making.

The alternative \({x^1}\) must also be ranked best by \({\mathcal {{S}}}\) or \({\mathcal {{R}}}\) (or both).

If one of the above conditions is not satisfied, a compromise solution set is proposed:

(1) If condition 2 is not satisfied, then both \({x^1}\) and \({x^2}\) are compromise solutions.

(2) If condition 1 is not satisfied, then determine the maximum T satisfying

$$\begin{aligned} {\mathcal {{Q}}}({x^T}) - {\mathcal {{Q}}}({x^1}) < \frac{1}{{t - 1}}. \end{aligned}$$(54)

Alternatives \({x^1},{x^2}, \ldots,{x^T}\) are all close to the compromise solution and together form the compromise solution set.
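The decision rules above (conditions 1 and 2 and the two fallback cases) can be sketched in Python as follows. Alternatives are 0-based indices and `compromise_solution` is a hypothetical name. When both conditions fail, the sketch follows case (2), whose set already contains \(x^1\) and \(x^2\); this tie-breaking choice is our assumption, since the text does not address that case explicitly.

```python
def compromise_solution(Q, S, R):
    """Return the compromise solution (set) as 0-based alternative indices.

    Condition 1 (acceptable advantage): Q(x^2) - Q(x^1) >= 1/(t - 1).
    Condition 2 (acceptable stability): x^1 is also ranked best by S or R.
    Requires at least two alternatives.
    """
    t = len(Q)
    order = sorted(range(t), key=lambda i: Q[i])  # ascending Q, best first
    dq = 1 / (t - 1)
    x1, x2 = order[0], order[1]
    cond1 = Q[x2] - Q[x1] >= dq
    cond2 = S[x1] == min(S) or R[x1] == min(R)
    if cond1 and cond2:
        return [x1]
    if not cond1:
        # Case (2): x^1, ..., x^T with Q(x^T) - Q(x^1) < 1/(t - 1), T maximal
        return [i for i in order if Q[i] - Q[x1] < dq]
    # Case (1): only condition 2 fails
    return [x1, x2]
```

Because `order` is sorted by Q, the filtered list in case (2) is exactly the prefix \(x^1, \ldots, x^T\) with the largest admissible T.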

4.3 A new \(\chi\)LVIKOR approach to decision making with \(\chi\)-linguistic information

In this section, we develop a new \(\chi\)LVIKOR method for MAGDM problems in the \(\chi\)-linguistic environment and compare it with the methods based on the \(\phi _{\chi LWA}\) and \(\phi _{\chi LWG}\) operators.

Step 1. Analyze the MAGDM problem and determine t alternatives \(X = \{ {x_1},{x_2}, \ldots,{x_t}\}\), q attributes \(A = \{ {a_1},{a_2}, \ldots,{a_q}\}\), and the attribute weight vector \(\omega = {({\omega _1},{\omega _2}, \ldots,{\omega _q})^T}\). For attribute \(\beta (\beta = 1,2, \ldots,q)\) of alternative \(\alpha (\alpha = 1,2, \ldots,t)\), DMs use the linguistic term set \(S = \{ {s_i} |i = 0,1, \ldots,\tau \}\) to express their evaluations and give each evaluation a membership degree, a non-membership degree, and a credibility, all in \([0,1]\). The evaluations of multiple DMs are assembled into an IPLS, and a \(\chi\)LS \({L_{\alpha \beta }}\chi (x) = \{ l_{\alpha \beta }^k\chi (x) |k = 1,2, \ldots,\# {L_{\alpha \beta }}\chi (x)\}\) is obtained by adding the approximation from Definitions 13-16. The \(\chi\)LSs are then arranged into an intelligent linguistic decision matrix \(R = {[{L_{\alpha \beta }}\chi (x)]_{t \times q}}\). If DMs choose the \(\phi _{\chi LWA}\) or \(\phi _{\chi LWG}\) operator, go to Step 2; if DMs choose the \(\chi\)LVIKOR method, go to Step 5.

Step 2. Use the \(\phi _{\chi LWA}\) operator (Eq. (44)) or the \(\phi _{\chi LWG}\) operator (Eq. (46)) to aggregate the attribute values of the alternatives \({x_\alpha }(\alpha = 1,2, \ldots,t)\) to obtain \({L_\alpha }\chi (x)(\alpha = 1,2, \ldots,t)\).

Step 3. Construct a possibility degree matrix \(P = {({p_{\alpha k }})_{t \times t}}\), where \({p_{\alpha k }} = p({L_\alpha }\chi (x) \ge {L_k }\chi (x) )\) is calculated by Eq. (34), \({p_{\alpha k }} + {p_{k \alpha }} = 1\), \({p_{\alpha \alpha }} = 0.5\), and \(\alpha ,k = 1,2, \ldots,t\).

Step 4. Rank the alternatives \({x_\alpha }(\alpha = 1,2, \ldots,t)\) with the values of \({p_\alpha } = \sum \limits _{k = 1}^t {{p_{\alpha k}}},(\alpha = 1,2, \ldots,t)\) in descending order and select the optimal alternative. Then, go to Step 10.
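Steps 3 and 4 reduce to row sums of the possibility degree matrix. The Python sketch below assumes P has already been computed (Eq. (34) is not re-implemented here), checks the properties \(p_{\alpha k} + p_{k\alpha} = 1\) and \(p_{\alpha\alpha} = 0.5\) stated in Step 3, and ranks the alternatives by \(p_\alpha\) in descending order. The function name `rank_by_possibility` is hypothetical.

```python
def rank_by_possibility(P, tol=1e-9):
    """Return (p_alpha scores, 0-based alternative indices ranked best first)."""
    t = len(P)
    for a in range(t):
        if abs(P[a][a] - 0.5) > tol:
            raise ValueError("diagonal entries p_aa must equal 0.5")
        for k in range(t):
            if abs(P[a][k] + P[k][a] - 1.0) > tol:
                raise ValueError("P must satisfy p_ak + p_ka = 1")
    # p_alpha = sum over k of p_{alpha k}
    scores = [sum(row) for row in P]
    order = sorted(range(t), key=lambda a: scores[a], reverse=True)
    return scores, order
```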

Step 5. Determine the best solution \(L{\chi (x)^*}\) and the worst solution \(L{\chi (x)^\# }\) with Definitions 35 and 36.

Step 6. Calculate the values \({{\mathcal {{S}}}_\alpha }\), \({{\mathcal {{R}}}_\alpha }\), and \({{\mathcal {{Q}}}_\alpha }(\alpha = 1,2, \ldots,t)\) with Eqs. (50)-(52).

Step 7. Obtain three ranking results by values \({{\mathcal {{S}}}_\alpha }\), \({{\mathcal {{R}}}_\alpha }\), and \({{\mathcal {{Q}}}_\alpha }(\alpha = 1,2, \ldots,t)\).

Step 8. If both conditions 1 and 2 are satisfied, the alternative with the minimum \({\mathcal {{Q}}}\) is the compromise solution; go to Step 10. If either condition is not satisfied, go to Step 9.

Step 9. Obtain the compromise solution set.

Step 10. End.

The methods based on the \(\phi _{\chi LWA}\) and \(\phi _{\chi LWG}\) operators are easy to compute. By comparison, the \(\chi\)LVIKOR method is more complex but reduces the loss of information.

5 A case study

5.1 A numerical example

More than one year has passed since COVID-19 first broke out. However, the epidemic has not been completely eliminated in cities around the world and has even rebounded in some of them. Therefore, an effective epidemic risk assessment is necessary. Three DMs formed an expert group to assess the risk that cities face from a second COVID-19 wave. Through research, we obtained data on COVID-19 prevention and control in three cities. The experts assess the risks of the three cities (\(x_1,x_2,x_3\)), rank the cities, select the most risky city, and issue early warnings to high-risk cities, so as to help local governments adjust their prevention and control mechanisms and prepare response plans in advance. The evaluation considers the following three attributes: (1) the risk of inflow of confirmed cases (domestic and overseas); (2) the risk related to medical resources; (3) the risk related to accumulated experience with the epidemic. The attribute weight vector is \(\omega = {(0.4,0.3,0.3)^T}\). We assume \(A = 0.33\), \(B = 0.33\), \(C = 0.33\), and \(\lambda = 2\).

5.1.1 The \(\phi _{\chi LWA}\) and \(\phi _{\chi LWG}\) operator method

We take the \(\phi _{\chi LWA}\) operator as an example to solve this problem.

Step 1. Three DMs give their evaluations based on the LTS:

The corresponding ambiguity and credibility of each evaluation are also given, and the evaluation information is expressed as IPLVs. The intuitionistic probabilistic linguistic decision matrices of the three DMs are shown in Table 1. By integrating the evaluations of the three DMs and computing the approximation degrees, we obtain a group decision matrix in the form of \(\chi\)LSs. After normalizing the group decision matrix and ranking the \(\chi\)LVs within each \(\chi\)LS in descending order, we obtain Table 2.

Step 2. Use the \(\phi _{\chi LWA}\) operator (Eq. (44)) to aggregate the attribute values of the alternatives \({x_\alpha }(\alpha = 1,2,3)\) to obtain \({L_\alpha }\chi (x)(\alpha = 1,2,3)\).

Step 3. Construct a possibility degree matrix \(P = {({p_{\alpha k }})_{3 \times 3}}\).

Step 4. Rank the cities \({x_\alpha }(\alpha = 1,2,3)\) with the values of \({p_\alpha }(\alpha = 1,2,3)\) in descending order and select the most risky city.

Therefore, the most risky city is \({x_2}\).

5.1.2 The \(\chi\)LVIKOR method

Next, we solve the same numerical example again with the \(\chi\)LVIKOR method.

Step 1. Construct the normalized \(\chi\)-linguistic decision matrix with all \(\chi\)LVs arranged in descending order, as shown in Table 2.

Step 2. Determine the best solution \(L{\chi (x)^*} = \{ {L_1}{\chi (x)^*},{L_2}{\chi (x)^*},{L_3}{\chi (x)^*}\}\) and the worst solution \(L{\chi (x)^\# } = \{ {L_1}{\chi (x)^\# },{L_2}{\chi (x)^\# },{L_3}{\chi (x)^\# }\}\) with Definitions 35 and 36.

Step 3. Calculate the values \({{\mathcal {{S}}}_\alpha }\), \({{\mathcal {{R}}}_\alpha }\), and \({{\mathcal {{Q}}}_\alpha }(\alpha = 1,2,3)\) with Eqs. (50)-(52).

Step 4. Obtain three ranking results by the values \({{\mathcal {{S}}}_\alpha }\), \({{\mathcal {{R}}}_\alpha }\), and \({{\mathcal {{Q}}}_\alpha }(\alpha = 1,2,3)\), and show them in Table 3.

Step 5. Determine the compromise solution (set) by conditions 1 and 2.

In the ranking obtained by \({{\mathcal {{Q}}}}\), \({x_3}\) and \({x_2}\) are ranked first and second. Since \(\frac{1}{{t - 1}} = 0.5\) and

condition 1 is not satisfied. \({x_3}\) is also the best alternative according to \({\mathcal {{S}}}\) and \({\mathcal {{R}}}\), so condition 2 is satisfied.

Therefore, \(\{{x_3},{x_2}\}\) is the compromise solution set: \({x_3}\) and \({x_2}\) are the most risky cities, and \({x_3}\) is riskier than \({x_2}\).

5.2 Analysis and discussion

In the previous section, the \(\phi _{\chi LWA}\) (or \(\phi _{\chi LWG}\)) operator and the \(\chi\)LVIKOR method are used to solve the numerical example, and they identify different most risky cities. This is caused by the different characteristics of the two methods: the former is easy to compute but loses information, whereas the latter effectively reduces information loss at the cost of more complicated calculation. DMs can choose the method and result according to the specific problem and their needs.

Next, we discuss three aspects: the influence of the values of A, B, and C; the influence of the value of \(\lambda\); and a comparison with other existing methods.

5.2.1 The influence of the values A, B, and C on the ranking results

In different decision making problems, the relative importance of the ambiguity, possibility, and approximation of \(\chi\)LSs differs. To distinguish their importance, the variable parameters A, B, and C are introduced when defining \(r\left( {{l^k}\chi (x)} \right)\), \(\vartheta \left( {{l^k}\chi (x)} \right)\), \(\varphi \left( {{l^k}\chi (x)} \right)\), and the distance between two \(\chi\)LSs or \(\chi\)LVs.

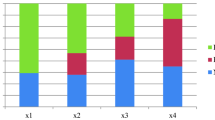

To explore the influence of the values A, B, and C on the decision results, we take different values and calculate the results with the methods based on the \(\chi\)LWA operator and the \(\chi\)LWG operator, respectively. The ranking results are shown in Table 4. With the \(\chi\)LWA operator, when \({(A,B,C)^T}\) takes \({(0.5,0.5,0)^T}\), the ranking result is \({x_2} \succ {x_1} \succ {x_3}\), which differs from the ranking result \({x_2} \succ {x_3} \succ {x_1}\) obtained with the other values. With the \(\chi\)LWG operator, when \({(A,B,C)^T}\) takes \({(1/3,1/3,1/3)^T}\), the ranking result is \({x_3} \succ {x_2} \succ {x_1}\), which differs from the ranking result \({x_2} \succ {x_1} \succ {x_3}\) obtained with the other values. Table 5 shows the ranking results obtained by the \(\chi\)LVIKOR method under different values of A, B, and C; the resulting compromise solutions (sets) are not all the same.

These results show that the values A, B, and C affect the ranking results. They can be assigned different values according to the needs of the decision problem, which makes the decision process more flexible.

5.2.2 The influence of the value \(\lambda\) on the ranking results

The parameter \(\lambda\) appears in the definition of the distance measure; it can express the risk attitude of DMs and gives DMs more choices.
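To illustrate how weights A, B, C and an exponent \(\lambda\) can shape a distance, the Python sketch below uses a weighted generalized (Minkowski-type) distance over three precomputed features of a \(\chi\)LV, standing in for \(r\), \(\vartheta\), and \(\varphi\). This form and the name `weighted_generalized_distance` are assumptions for illustration only, not the distance defined in this paper. In this form, a larger \(\lambda\) places more emphasis on the largest single deviation, so the closer of two candidates can change with \(\lambda\).

```python
def weighted_generalized_distance(f1, f2, weights=(1/3, 1/3, 1/3), lam=2.0):
    """Hypothetical distance between two chi-LVs described by three features.

    f1, f2: tuples of three numbers in [0, 1] (stand-ins for r, vartheta, varphi)
    weights: (A, B, C), assumed to sum to 1
    lam: exponent lambda, must be positive
    """
    if lam <= 0:
        raise ValueError("lambda must be positive")
    total = sum(w * abs(a - b) ** lam for w, a, b in zip(weights, f1, f2))
    return total ** (1.0 / lam)


# Illustrative features: candidate a deviates strongly in one feature,
# candidate b deviates moderately in all three.
ref, a, b = (0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.3, 0.3, 0.3)
for lam in (1.0, 10.0):
    # at lam = 1 candidate a is closer to ref; at lam = 10 candidate b is closer
    print(lam,
          weighted_generalized_distance(ref, a, lam=lam),
          weighted_generalized_distance(ref, b, lam=lam))
```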

Next, we explore the influence of \(\lambda\) on the ranking results. We use different \(\lambda\) to calculate the numerical example with the \(\chi\)LVIKOR method. The ranking results are shown in Table 6. As shown in the table, when \(\lambda\) takes 0.1, 0.5, 1, and 5, the compromise solution set is \(\{ {x_2},{x_3}\}\); when \(\lambda\) takes 2 and 10, the compromise solution changes to \({x_3}\).

Therefore, the variable parameter \(\lambda\) influences the ranking results, and its value can be determined according to the risk attitude of DMs.

5.2.3 Comparative analysis with existing methods

In this section, we compare the \(\chi\)LS proposed in this paper with other similar methods in terms of concept and ranking results. The comparison methods include HFLTS, PLTS, ILFS, Z-linguistic set, intuitionistic Z-linguistic set, and fuzzy rough set. For each method, we adapt the data of the numerical example to its representation; taking the HFLTS as an example, we consider only one dimension and ignore ambiguity, credibility, and approximation. All calculations use the operator-based method. We also compare the results with the PDHL-VIKOR method [58]. Table 7 shows the comparison results.