Abstract

Cervical cancer is one of the most common cancers among women worldwide. Early detection and diagnosis facilitate subsequent clinical treatment and management. With the rapid advancement of artificial intelligence (AI) and deep learning (DL) techniques, a growing number of DL-based computer-aided diagnosis (CAD) methods have been applied to cervical cytology screening. In this paper, we survey more than 80 publications since 2016 to provide a systematic and comprehensive review of DL-based cervical cytology screening. First, we provide a concise summary of the medical and biological knowledge pertaining to cervical cytology, since we firmly believe that a sound biomedical understanding contributes significantly to the development of CAD systems. Then, we compile a wide range of public cervical cytology datasets. Next, we summarize image analysis approaches and applications, including cervical cell identification, abnormal cell or area detection, cell region segmentation, and cervical whole slide image diagnosis. Finally, we discuss present obstacles and promising directions for future research in automated cervical cytology screening.

1 Introduction

1.1 Introduction of cervical cancer

Cervical cancer is a common malignancy that poses a serious threat to women's health. It is the fourth most common cancer among women in terms of both incidence and mortality. In 2020, approximately 600,000 new cases of cervical cancer were diagnosed and more than 340,000 women died of the disease globally (Sung et al. 2021). Incidence and mortality vary among countries and regions, reflecting differences in the level of health services, the implementation of screening and prevention measures, lifestyle, and environmental factors (Cramer 1974).

Cervical cancer is a malignant tumor arising from the cervix. There are two main types: (1) squamous cell carcinoma (SCC) and (2) adenocarcinoma. About 90% of cervical cancer cases are SCC, most of which begin in the transformation zone and develop from cells in the outer part of the cervix (Waggoner 2003). Cervical cancer is by far the most common human papillomavirus (HPV)-related disease, and almost all cervical cancers (more than 95%) are caused by persistent infection with certain types of HPV. At least 13 HPV types, termed high-risk HPV, can persist and progress to cancer; the most common are HPV 16 and HPV 18. Cervical cancer has a long precancerous stage and develops as a continuum, as shown in Fig. 1. The main characteristics of precancerous cells concern the nucleus. For example, nuclear enlargement increases the nuclear-to-cytoplasmic ratio (N/C ratio); binucleation and multinucleation are common; nucleoli are generally absent or, if present, inconspicuous; and the contour of the nuclear membrane is often irregular.

Early-stage cervical cancer may have no symptoms or signs, but as the disease progresses, symptoms such as abnormal vaginal bleeding, vaginal discharge, and pelvic pain may appear. Early diagnosis is therefore crucial for the treatment and prognosis of cervical cancer (Cohen et al. 2019). There is compelling evidence that cervical cancer is one of the most preventable and treatable cancers if it is detected early and managed effectively through regular screening programs. The World Health Organization (WHO) currently recommends three screening tests for cervical cancer: (1) HPV testing for high-risk HPV types; (2) cervical cytology screening; and (3) visual inspection with acetic acid (VIA). Cervical cytology screening remains the basic screening method worldwide because cytological features are significant indicators of cervical cancer.

1.2 Motivation of this review

Traditionally, cervical cytology screening programs require the manual identification of abnormal cells under a microscope, which is time-consuming, tedious, and error-prone (Elsheikh et al. 2013). In this context, a growing number of automatic screening systems have been proposed to reduce the burden on cytopathologists and improve diagnostic efficiency (Koss et al. 1994; Biscotti et al. 2005; Kardos 2004). With the advancement of AI and digital image processing, machine learning (ML) has been widely applied to analyze cytological images in cervical cytology screening, owing to its strong performance (Marinakis et al. 2009; Chen et al. 2013; William et al. 2018). Nevertheless, traditional ML approaches rely on complex image preprocessing and hand-crafted feature selection steps, which limit further progress in human–machine collaboration.

In the past few years, deep learning (DL), a branch of machine learning, has advanced rapidly in computer vision (LeCun et al. 2015; Krizhevsky et al. 2017; Simonyan and Zisserman 2015; He et al. 2016; Ren et al. 2015). Its end-to-end feature extraction and learning eliminates the need for manual feature design and selection. DL has achieved breakthroughs in many areas of image processing, and medical image analysis is no exception (Litjens et al. 2017; Liu et al. 2019; Rajpurkar et al. 2022). DL solutions have been successfully applied to many medical imaging tasks, such as thoracic imaging, neuroimaging, cardiovascular imaging, abdominal imaging, and microscopy imaging (Zhou et al. 2021). The development of DL has also greatly accelerated automatic image analysis in cervical cytology screening. To understand the popularity and development trend of DL in cervical cytology, we searched multiple literature databases (PubMed, Scopus, IEEE Xplore, ACM Digital Library, and Web of Science) using keywords related to cervical cytology screening (cervical cytology, cervical cancer diagnosis, deep learning, Pap smear, etc.). Fig. 2 illustrates the number of related publications from 2016 to 2022. Since 2016, the use of DL for cervical cytology screening has grown notably. Moreover, work on object detection has grown significantly since 2018, while whole slide image (WSI) analysis emerged in 2021 and has expanded rapidly since.

Several surveys already exist in the field of automated cervical cytology (William et al. 2018; Rahaman et al. 2020; Conceição et al. 2019; Chitra and Kumar 2022; Hou et al. 2022; Shanthi et al. 2022). Although these reviews provide valuable insights, they are not exhaustive, and some areas remain unexplored, which calls for a more comprehensive investigation. First, they focus on cell-level classification and segmentation tasks, and none of them investigates the application of object detection algorithms in automated cervical cytology screening. Second, most of them concentrate on conventional machine learning approaches, with comparatively limited coverage of DL-based methods. Third, few of them provide the biomedical context of cervical cytology, which is relevant for understanding the applicability of DL-based methods in this field. Finally, there is currently no review dedicated to automatic WSI analysis of cervical cytology, as related works only began to emerge in 2021. Automatic WSI analysis of cervical cytology holds great promise for improving the efficiency and accuracy of cervical cancer screening, and staying abreast of developments in this area is important for researchers and practitioners.

1.3 Contribution and organization of paper

To address these issues, this survey presents a comprehensive overview of over 80 publications on automated cervical cytology since 2016. For researchers new to this field, it provides background knowledge on cervical cytology, including a brief introduction to cervical cancer, common cervical cytology screening procedures, and the cell categories defined in the Bethesda system (TBS). It also compares the different reporting terminologies in detail, because confusion among them can hinder the construction of a correct and reasonable DL model. In addition, the historical development of automated screening systems and the specific tasks in cervical cytology screening are introduced in detail. This survey also compiles the most extensive collection of publicly available cervical cytology image datasets, and it summarizes the latest DL-based classification, detection, segmentation, and WSI analysis methods in automated cervical cytology screening. Finally, several challenges and opportunities (stain normalization, image super-resolution, incorporating medical domain knowledge, annotation-efficient learning, internet of medical things, etc.) are presented as promising research directions in cervical cytology screening.

The paper is organized as follows. Sect. 1 introduces the background and objective of this survey. In Sect. 2, an overview of biomedical knowledge related to cervical cytology is provided. Section 3 describes the research methodology used to construct this systematic review. Section 4 lists the public datasets for cervical cytology screening and summarizes progress in DL-based automated cervical cytology, from cell identification to WSI analysis. In Sect. 5, existing challenges and potential opportunities in automated cervical cytology screening are discussed. Finally, Sect. 6 concludes this review.

2 Overview of cervical cytology

Before reviewing DL-based methods for cervical cytology screening, this section presents a preliminary overview of cervical cytology. We believe that medical and biological domain knowledge has a critical impact on the construction of computational models and the design of CAD systems. In Sect. 2.1, the procedure of cervical cytology screening is first described. In Sect. 2.2, we introduce the history of reporting terminology for cervical cytology and explain the correspondences and differences among four reporting systems. Next, we elaborate on the cell categories in TBS in Sect. 2.3. Finally, the historical development of automated screening systems is briefly introduced in Sect. 2.4.

2.1 Procedure of cervical cytology screening

Cervical cytology screening is the most effective and widely used screening program for discovering cancerous or precancerous lesions. Its primary goal is to identify abnormal cervical cells with significant cellular changes so that the underlying lesions can be monitored or treated in time to prevent the development of invasive cancer (Sankaranarayanan et al. 2001). Many medical organizations recommend routine cervical cytology screening every few years. Currently, the conventional Papanicolaou smear (CPS) test and liquid-based cytology (LBC) are used for cervical cytology screening worldwide (Siebers et al. 2009). In CPS, cervical cells are scraped from the cervix and observed under a microscope. Figure 3a illustrates the whole CPS process. Under the guidance of a vaginal speculum, a soft brush is inserted into the vagina to collect cells from the cervix. A Pap smear is then prepared by spreading the cells from the brush evenly onto a glass slide. After staining, cytologists observe the sample under a microscope and make a diagnosis.

Because of blood, mucus, inflammation, and other factors, CPS often yields blurred or obscured samples, resulting in poor image quality and detection errors. In recent years, with improvements in sample preparation, LBC has significantly improved the imaging quality of cervical cell samples and has gradually become the mainstream method for cervical cytology screening. As shown in Fig. 3b, the collected cells are placed in a preservative solution for further processing. After oscillation and centrifugation, a liquid-based slide is obtained by natural sedimentation, and sample preparation is completed by staining and air drying. With the development of imaging equipment and digital processing techniques, CPS and LBC slides are now usually digitized with pathology scanners to facilitate retrospective examination. Digital pathology, which digitizes glass slides into whole slide images (WSIs), has had a profound positive impact on traditional pathological diagnosis: compared with microscope-based visual observation, it greatly reduces the workload of pathologists and improves diagnostic efficiency (Al-Janabi et al. 2012; Niazi et al. 2019). Liquid-based preparation combined with digital slides is a satisfactory alternative to conventional smears and holds great promise for today's large-scale cervical cancer screening programs.

2.2 History of reporting terminology

A standard cervical cytology reporting system plays a vital role in the universality of diagnostic methods and the acceptance of diagnostic results. In practice, a standard reporting system can bridge the gap between regions and countries, strengthen the exchange of research results, and greatly improve the efficiency of cervical cancer diagnosis (St Clair and Wright 2009). The earliest reporting system for cervical cytological diagnosis was the Papanicolaou classification system, which used a numeric terminology to grade cervical cells into five classes (Traut and Papanicolaou 1943). Classes I to V respectively indicated: absence of atypical or abnormal cells; atypical cells, but no evidence of malignancy; cytology suggestive of, but not conclusive for, malignancy; cytology strongly suggestive of malignancy; and cytology conclusive for malignancy. However, many critics pointed out that the Papanicolaou classification system was highly subjective, with no strict objective standard for distinguishing Classes II, III, and IV. In addition, it had no clear definition of precancerous lesions and did not correspond to histopathological diagnostic terms.

With the development and refinement of both cytological and histological diagnosis of cervical cancer, understanding of the natural history of cervical intraepithelial neoplasia (CIN) developed progressively. The term dysplasia was introduced to refer to precancerous abnormalities of squamous cells, and the three-tier dysplasia system (mild, moderate, or severe dysplasia, plus carcinoma in situ) was proposed (Reagan et al. 1953). Recognizing the difficulty of differentiating severe dysplasia from carcinoma in situ (CIS), Richart developed the CIN classification system in 1966 (Richart 1967). It describes CIN as a continuum of neoplastic change with a progressively increasing risk of invasion, subdivided into grades I, II, and III. An advantage of both the three-tier dysplasia system and the CIN classification was that they could be applied to cytological as well as histological samples.

In the 1970s and 1980s, as HPV testing became more available, extensive epidemiological and biochemical evidence established the link between HPV and cervical dysplasia, supporting the role of high-risk HPV as a necessary factor in the development of cervical cancer (Hausen 1977; Crum et al. 1985). As a result, the first edition of the Bethesda system (TBS) for reporting cervical cytology was promulgated in 1988 (Workshop 1989). TBS aims to provide a uniform interpretation of cervical cytology, thereby facilitating communication between clinicians and laboratories. As practice changed over the following decades, with further insights into HPV biology and the development of liquid-based preparations, TBS was updated three times to keep pace with evolving cervical cytology. The newest guideline, TBS 2014 (Nayar and Wilbur 2015), offers comprehensive terminology for reporting cervical cytology.

Because TBS was established to unify reporting, it is now the standard terminology for reporting cervical cytology, and the three-tier dysplasia and CIN systems are no longer used for this purpose. However, when cytology detects abnormalities, a histological biopsy is performed, and the CIN system remains the standard reporting terminology for cervical histopathology. It is used for the final diagnosis of cervical cancer because histopathology is the 'gold standard' for determining cancer. Following Herbert et al. (2007) and Kedra et al. (2012), Table 1 shows the classification criteria of these four systems and the correspondences among them.
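Because public datasets may be labeled in different terminologies, DL pipelines often need to harmonize labels before training. The following is a minimal sketch, assuming labels arrive as free-text strings. It encodes only the widely cited squamous correspondence (mild dysplasia/CIN 1 ≈ LSIL; moderate and severe dysplasia, CIS, CIN 2 and CIN 3 ≈ HSIL). ASC-US, ASC-H and the Papanicolaou classes are deliberately left unmapped because they have no one-to-one counterpart. The names `LEGACY_TO_TBS` and `harmonize_label` are hypothetical, and Table 1 remains the reference for the full correspondence.

```python
from typing import Optional

# Approximate correspondence between legacy squamous terminology (three-tier
# dysplasia and CIN) and the two-tier TBS SIL categories. Illustrative only;
# Table 1 is the reference. ASC-US, ASC-H and Papanicolaou classes are
# deliberately left out because they have no one-to-one counterpart.
LEGACY_TO_TBS = {
    "mild dysplasia": "LSIL",
    "CIN 1": "LSIL",
    "moderate dysplasia": "HSIL",
    "CIN 2": "HSIL",
    "severe dysplasia": "HSIL",
    "carcinoma in situ": "HSIL",
    "CIN 3": "HSIL",
}

# Case-insensitive lookup table built once from the mapping above.
_LOOKUP = {key.lower(): value for key, value in LEGACY_TO_TBS.items()}


def harmonize_label(label: str) -> Optional[str]:
    """Return the TBS category for a legacy label, or None if it is unmapped."""
    # Collapse repeated whitespace and ignore case, so "cin  2" matches "CIN 2".
    key = " ".join(label.split()).lower()
    return _LOOKUP.get(key)


print(harmonize_label("Severe dysplasia"))  # HSIL
print(harmonize_label("cin  1"))            # LSIL
print(harmonize_label("ASC-US"))            # None
```

Returning `None` rather than guessing keeps ambiguous labels visible, so they can be reviewed manually instead of being silently merged into a class.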

2.3 Cell categories in TBS

TBS lays the foundation for further understanding of HPV biology and provides the framework needed to develop systematic, evidence-based guidelines for cervical cancer screening and management. Since TBS is the widely recognized standard for cervical cytology reporting, this section introduces the cell categories in its latest version, TBS 2014 (Nayar and Wilbur 2015), to support a better understanding of cervical cytology.

A specimen is reported as negative for intraepithelial lesion or malignancy (NILM) when there is no cellular evidence of neoplasia or epithelial abnormality. Normal cellular elements include squamous cells and glandular cells. Squamous cells at different depths of the cervical epithelium have different characteristics; from the surface inward, they are divided into superficial, intermediate, parabasal, and basal cells. In a stained sample, the cytoplasm of superficial cells is pink or orange, while that of all the less mature cells is light green or cyan. Superficial and intermediate cells are large and polygonal with a very low nuclear-to-cytoplasmic (N/C) ratio, whereas parabasal and basal cells are generally round or oval with a relatively high N/C ratio. Basal cells are small, undifferentiated cells that are rarely seen in a Pap smear unless there is severe atrophy. Glandular cells consist of endocervical cells and endometrial cells. Viewed from above, sheets of endocervical cells have a honeycomb appearance, whereas viewed from the side they line up like "picket-fence" palisades. Endocervical glandular cells exhibit polarity, with the nucleus at one end of the cell and mucus at the other. Spontaneously shed endometrial cells are of epithelial or stromal origin and often appear as three-dimensional clusters referred to as "exodus" balls, which are generally present at the end of menstrual flow. Figure 4 shows various normal cervical cells.
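The N/C ratio is one of the most direct quantitative cues separating mature from immature squamous cells, and it is easy to derive once a cell has been segmented. Here is a minimal sketch, assuming binary nucleus and whole-cell masks for one cell are already available from some segmentation step. Definitions of the N/C ratio vary in the literature (some divide by whole-cell area instead). This sketch divides nuclear area by cytoplasmic area. The function name `nc_ratio` and the toy masks are hypothetical.

```python
import numpy as np


def nc_ratio(nucleus_mask: np.ndarray, cell_mask: np.ndarray) -> float:
    """Nuclear-to-cytoplasmic area ratio for a single segmented cell.

    The cytoplasm is taken as the cell region minus the nucleus; pixels of
    the nucleus mask that fall outside the cell mask are ignored.
    """
    nucleus = nucleus_mask.astype(bool)
    cell = cell_mask.astype(bool)
    nucleus_area = np.count_nonzero(nucleus & cell)
    cytoplasm_area = np.count_nonzero(cell & ~nucleus)
    if cytoplasm_area == 0:
        raise ValueError("cell mask contains no cytoplasm pixels")
    return nucleus_area / cytoplasm_area


# Toy square masks: a large cell with a small nucleus (superficial-like)
# and a small cell with a large nucleus (parabasal-like).
big_cell = np.zeros((120, 120), dtype=bool)
big_cell[10:110, 10:110] = True
big_nucleus = np.zeros_like(big_cell)
big_nucleus[55:65, 55:65] = True

small_cell = np.zeros((120, 120), dtype=bool)
small_cell[40:80, 40:80] = True
small_nucleus = np.zeros_like(small_cell)
small_nucleus[50:70, 50:70] = True

print(round(nc_ratio(big_nucleus, big_cell), 4))      # 0.0101
print(round(nc_ratio(small_nucleus, small_cell), 4))  # 0.3333
```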

Abnormal squamous or glandular cells may be found during cervical cytology screening. According to TBS reporting terminology, they can be categorized into the following types:

- Atypical squamous cells of undetermined significance (ASC-US): changes suggestive of a low-grade squamous intraepithelial lesion (LSIL). ASC-US nuclei are about 2.5 to 3 times the area of a normal intermediate squamous cell nucleus (approximately 35 µm²), and the N/C ratio is slightly increased.

- Atypical squamous cells, cannot exclude a high-grade squamous intraepithelial lesion (ASC-H): ASC-H primarily affects squamous metaplastic cells, whose nuclei are usually about 1.5 to 2.5 times larger than normal metaplastic nuclei. The cytological changes are suggestive of a high-grade squamous intraepithelial lesion (HSIL) but insufficient for a definitive diagnosis of HSIL.

- Low-grade squamous intraepithelial lesion (LSIL): an LSIL diagnosis requires definite abnormal changes in squamous cells. These changes usually occur in mature intermediate or superficial squamous cells, with nuclear enlargement of more than three times the area of normal intermediate nuclei. Additional features of LSIL include hyperchromatic nuclei, absent or inconspicuous nucleoli, binucleation or multinucleation, and koilocytosis.

- High-grade squamous intraepithelial lesion (HSIL): the affected cells are generally immature parabasal or basal cells. HSIL cells can appear singly, in sheets, or in syncytial clusters, which may form hyperchromatic crowded groups (HCGs). Nuclear enlargement combined with small cell size leads to a marked increase in the N/C ratio. Nucleoli are generally absent, and the contour of the nuclear membrane is quite irregular.

- Squamous cell carcinoma (SCC): defined in the 2014 WHO terminology as "an invasive epithelial tumor composed of squamous cells of varying degrees of differentiation" (Young 2014), SCC is the most common malignant tumor of the cervix. Its cytological features usually include pleomorphic hyperchromatic nuclei, irregularly distributed chromatin with nuclear clearing, prominent, irregular, and often multiple nucleoli, keratinized cells, and keratinous debris.

- Atypical glandular cells (AGC): a generic term for atypical endocervical or atypical endometrial cells, used when the origin of the cells is difficult to determine. Atypical endocervical cells may be further qualified as "NOS" (not otherwise specified) or "favor neoplastic", whereas atypical endometrial cells are not further qualified. Cytological features of AGC may include nuclear enlargement, crowding, variation in nuclear size, hyperchromasia, chromatin heterogeneity, and evidence of proliferation.

- Endocervical adenocarcinoma in situ (AIS): AIS is considered the glandular counterpart of HSIL and the precursor of invasive endocervical adenocarcinoma. Its criteria include: cells arranged in sheets, pseudostratified strips, or clusters, with loss of the well-defined honeycomb pattern; enlarged, variably sized, oval or elongated nuclei, with cells at the edges of groups tending to be tapered and spread out ("feathering"); small, inconspicuous, or absent nucleoli; diminished cytoplasm with an increased N/C ratio; and nuclear hyperchromasia with evenly distributed, coarsely granular chromatin. Mitoses are common.

- Adenocarcinoma: the cytological criteria may overlap with those of AIS. Abnormal cells are abundant, typically with a columnar configuration. Nuclei tend to be enlarged and pleomorphic with nuclear membrane irregularities, and may be hypochromatic with irregularly distributed chromatin or chromatin clearing. Multinucleation and macronucleoli are common. Adenocarcinoma may coexist with squamous lesions.

Figure 5 illustrates various abnormal cervical cells. In large-scale cervical screening of the general population, abnormal squamous cases far outnumber abnormal glandular cases, and ASC-US, LSIL, ASC-H, and HSIL are the four most common abnormal types. ASC-US and LSIL changes usually occur in superficial or intermediate cells, whereas ASC-H and HSIL changes usually occur in parabasal and basal cells.
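The size criteria for ASC-US and LSIL above reduce to simple arithmetic against the reference nucleus of about 35 µm². A 2.5 to 3-fold enlargement corresponds to roughly 87.5 to 105 µm², and LSIL exceeds about 105 µm². The following sketch makes that arithmetic explicit for a nucleus measured in pixels. It assumes a known scan resolution in micrometres per pixel; the value 0.5 µm/pixel below is hypothetical. The function names are also hypothetical, and the thresholds alone are not a diagnostic rule, since TBS weighs many other features.

```python
# Reference area of a normal intermediate squamous nucleus, using the
# approximate TBS figure quoted in the ASC-US criterion (about 35 µm^2).
INTERMEDIATE_NUCLEUS_UM2 = 35.0


def pixels_to_um2(area_px: int, microns_per_pixel: float) -> float:
    """Convert a pixel count to square micrometres at a given scan resolution."""
    # Each pixel covers microns_per_pixel x microns_per_pixel micrometres.
    return area_px * microns_per_pixel ** 2


def nuclear_size_band(nucleus_area_um2: float,
                      reference_um2: float = INTERMEDIATE_NUCLEUS_UM2) -> str:
    """Bucket a nucleus by its area relative to a normal intermediate nucleus.

    Encodes only the size thresholds quoted in the text (ASC-US: about 2.5-3x;
    LSIL: more than 3x). This is NOT a diagnostic rule: TBS also weighs
    chromatin, nuclear contour, N/C ratio and other criteria.
    """
    fold = nucleus_area_um2 / reference_um2
    if fold > 3.0:
        return "LSIL-range enlargement"
    if fold >= 2.5:
        return "ASC-US-range enlargement"
    return "below ASC-US size range"


# Hypothetical scan resolution of 0.5 µm per pixel: 400 px -> 100 µm^2 (~2.9x).
print(nuclear_size_band(pixels_to_um2(400, 0.5)))  # ASC-US-range enlargement
print(nuclear_size_band(120.0))                    # LSIL-range enlargement
```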

2.4 Automation in cervical cytology screening

This subsection reviews the development and evolutionary history of automated screening systems for cervical cytology. The timeline of some major events is shown in Fig. 6.

2.4.1 Origin of Pap smear

Cervical cytology screening originated in the late 1920s, when Papanicolaou first described malignant cells in vaginal smears and proposed the Pap smear test, which was poorly received by his contemporaries (Papanicolaou 1928). With the widespread adoption of Pap smear tests in the 1940s, the incidence of cervical cancer was remarkably reduced (Papanicolaou and Traut 1943). Depending on the difficulty of the smear and the expertise of the cytopathologist, screening a sample takes about 5–10 min on average (Traut and Papanicolaou 1943). Given the shortage of cytotechnologists and the increasing demand for Pap tests, manual screening of Pap smears is clearly tedious and error-prone. In this context, the development of automated screening systems gained momentum.

2.4.2 Early-stage screening systems

The first attempt at an automated Pap smear analyzer was the Cytoanalyzer (Tolles and Bostrom 1956), which used nuclear size and optical density to distinguish cancer cells from normal cells. The first-generation systems that followed, such as the TICAS device (Wied et al. 1975) and CYBEST (Watanabe and Group 1974), were all built on the same premise: that cancer cells can be differentiated from normal cells by morphometric features. These systems generated two-dimensional histograms showing morphometric differences between normal and abnormal cells using hard-wired analogue video processing circuits. However, they lacked interactive computers or display units capable of showing digital images, and they often produced too many false results. A series of second-generation screening systems therefore emerged by the 1980s, such as BioPEPR, FAZYTAN, Cerviscan, LEYTAS, and Discanner (Bengtsson and Malm 2014). These systems offered simple user interaction and were used to explore new image segmentation, feature extraction, and classification methods for cervical cytology. However, they still faced several problems. First, computers were too slow to process an entire Pap smear, which could contain up to 300,000 cells. Second, it was difficult to handle three-dimensional (3D) cell clumps and to detect cell boundaries.
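To make the "nuclear size and optical density" idea concrete, the following is a modern software sketch of the two features and of a two-dimensional feature histogram. It assumes a grey-level transmitted-light image, a nucleus mask, and a blank-glass background intensity I0. Optical density follows the Beer-Lambert form OD = -log10(I / I0). This illustrates the concept only; it is not how the historical analogue systems were implemented. The function names and the bin count are hypothetical.

```python
import numpy as np
from typing import List, Tuple


def optical_density(intensity: np.ndarray, background: float) -> np.ndarray:
    """Per-pixel optical density OD = -log10(I / I0) (Beer-Lambert law).

    `intensity` holds transmitted-light grey values; `background` is the
    blank-glass intensity I0. Ratios are clipped so log10 never sees zero.
    """
    ratio = np.clip(intensity.astype(float) / background, 1e-6, 1.0)
    return -np.log10(ratio)


def nucleus_features(gray: np.ndarray, nucleus_mask: np.ndarray,
                     background: float) -> Tuple[float, float]:
    """Return (nuclear area in pixels, mean optical density) for one nucleus."""
    mask = nucleus_mask.astype(bool)
    od = optical_density(gray, background)
    return float(mask.sum()), float(od[mask].mean())


def feature_histogram(features: List[Tuple[float, float]], bins: int = 8):
    """2D histogram over (area, mean OD) pairs, one pair per nucleus."""
    areas, ods = zip(*features)
    hist, area_edges, od_edges = np.histogram2d(areas, ods, bins=bins)
    return hist, area_edges, od_edges
```

For example, a nucleus that transmits 10% of the background light has an optical density of 1.0. Plotting `hist` for normal and abnormal populations would show the kind of separation (or overlap) those early systems relied on.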

2.4.3 First-generation commercial systems

In the late 1980s, the "Pap mills" problem was reported: some laboratories screened as many slides as possible, often at the expense of quality. The public became aware of "false-negative" slides and the potential risks of Pap tests (Watanabe and Group 1974; Boronow 1998). Consequently, relevant laws and guidelines were established, such as the Clinical Laboratory Improvement Amendments of 1988 (CLIA 1988) and the first edition of the TBS criteria (Nayar and Wilbur 2017). During the same period, computers advanced significantly in processing speed, memory capacity, and image display. These improvements revived hopes for automation in cervical cytology screening, and many new projects were started.

AutoPap 300QC (Neopath, USA) and PAPNET (Neuromedical Systems, USA) were the first commercially available screening systems to receive Food and Drug Administration (FDA) approval, in 1995, for rescreening manually screened conventional cervical smears (Lew et al. 2021). PAPNET (Koss et al. 1994) used an artificial neural network to select up to 128 images of potentially abnormal cells for display and further diagnosis by cytopathologists. AutoPap 300QC (Patten et al. 1996) was a computerized image processor whose algorithms assigned each slide a cumulative score indicating the likelihood that the slide as a whole was abnormal. Both PAPNET and AutoPap were considered capable of primary screening in their initial configurations, but the data needed to evaluate the practicality of these technologies were insufficient. They therefore initially focused on quality control (QC) applications and obtained approval for rescreening conventional Pap smears to reduce false-negative rates.
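The two ideas above, a review gallery of the most suspicious cells and a single slide-level score, remain common building blocks in current screening software. This is a minimal sketch, assuming some classifier has already assigned each detected cell an abnormality score in [0, 1]. It uses generic top-k selection. For the slide score, it uses a hypothetical aggregation, the mean of the top-k cell scores. Neither is the actual PAPNET or AutoPap algorithm, which is not described here. The default `k=128` merely echoes PAPNET's display limit. The class and function names are hypothetical.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class CellCandidate:
    cell_id: str   # hypothetical identifier of a detected cell image
    score: float   # abnormality score from some classifier, assumed in [0, 1]


def review_gallery(cells: List[CellCandidate], k: int = 128) -> List[CellCandidate]:
    """Return the k highest-scoring cells, most suspicious first."""
    return heapq.nlargest(k, cells, key=lambda c: c.score)


def slide_score(cells: List[CellCandidate], k: int = 128) -> float:
    """Hypothetical slide-level score: mean abnormality of the top-k cells."""
    top = review_gallery(cells, k)
    if not top:
        return 0.0
    return sum(c.score for c in top) / len(top)


cells = [CellCandidate(f"cell-{i}", i / 10) for i in range(10)]
print([c.cell_id for c in review_gallery(cells, k=3)])  # ['cell-9', 'cell-8', 'cell-7']
print(round(slide_score(cells, k=3), 2))                 # 0.8
```

`heapq.nlargest` avoids sorting every cell on a slide, which matters when a slide yields tens of thousands of candidates but only a small gallery is shown.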

2.4.4 Currently available screening systems

In parallel with these screening systems, sample preparation techniques were also evolving and being commercialized. The Cytyc Corporation developed ThinPrep, a new sample preparation method for liquid-based cytology (LBC) (Hutchinson et al. 1991). Later, TriPath Imaging developed a similar preparation method, AutoCyte Prep (later SurePath) (Howell et al. 1998). The FDA approved ThinPrep and AutoCyte Prep for manual screening in 1996 and 1999, respectively. By creating a uniform spread of cells, LBC made it easier for computers to locate and visualize individual cells, further facilitating the development of automated cervical cytology screening tools. The FDA subsequently approved two commercial products for automated primary screening, the ThinPrep Imaging System (Biscotti et al. 2005) and the FocalPoint GS (Kardos 2004), which use ThinPrep and SurePath slides, respectively, as their main specimen types. These approvals were granted in 2004 and 2008, respectively. Both are semi-automated slide scanning systems composed of a highly automated microscope and a processor that interprets the image of each field of view (FoV). Using a motorized microscope stage, the ThinPrep Imaging System detects and displays the 22 FoVs containing the most suspicious cells on the slide. The FocalPoint GS operates similarly, but it also categorizes slides into quantiles according to the likelihood that they contain abnormalities.
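Sorting slides into likelihood quantiles, as described for the FocalPoint GS, can be sketched in a few lines. It is a minimal sketch, assuming each slide already has a scalar abnormality score. It derives cut points from the batch's own score distribution with the standard-library `statistics.quantiles`. The choice of n=5 (quintiles), the batch-relative cut points, and the function name `quantile_ranks` are all hypothetical and are not taken from the commercial system.

```python
import bisect
import statistics
from typing import Dict


def quantile_ranks(slide_scores: Dict[str, float], n: int = 5) -> Dict[str, int]:
    """Rank each slide into one of n quantile groups (1 = lowest risk, n = highest).

    Cut points come from this batch's own score distribution; a deployed
    system would more plausibly use fixed, clinically validated thresholds.
    """
    cuts = statistics.quantiles(list(slide_scores.values()), n=n)
    return {slide: bisect.bisect_right(cuts, score) + 1
            for slide, score in slide_scores.items()}


scores = {f"slide-{i}": float(i) for i in range(1, 11)}
ranks = quantile_ranks(scores)
print(ranks["slide-1"], ranks["slide-5"], ranks["slide-10"])  # 1 3 5
```

Ranking rather than thresholding lets a laboratory prioritize review of the highest-risk group first, which is one plausible use of such quantiles.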

2.4.5 Emerging cervical cytology screening systems

Recently, a new generation of analysis systems for automated cervical cytology screening has emerged, such as BestCyte (CellSolutions, USA) (Delga et al. 2014; Chantziantoniou 2022), CytoProcessor (DATEXIM, France) (Crowell et al. 2019), and the Genius Digital Diagnostics System (Hologic, USA) (Ikenberg et al. 2023). BestCyte consists of a digital scanner, networked storage, and a WSI analysis algorithm, and it enables remote access through web-based software. CytoProcessor is a full web application that gives users a natural working environment similar to virtual microscopy. It uses machine learning methods to select all suspicious abnormal cells and display them in a gallery for further review by cytopathologists. The Genius Digital Diagnostics System is a digital cytology cloud platform that enables seamless, dynamic collaboration among laboratories within a network. Consisting of a digital imager, an image management server (IMS), and a review station, it uses a new artificial intelligence (AI) algorithm and advanced volumetric imaging technology to detect precancerous and cancerous cells.

All three systems support web connectivity and exploit AI algorithms for primary diagnosis. Future cervical cytology screening systems are likely to combine high-quality imaging devices, convenient viewing software or websites, and powerful AI (ML- or DL-based) analysis algorithms.

3 Research methodology

3.1 Research questions

In this systematic literature review, following the PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) guidelines (Liberati et al. 2009), we searched for studies that applied or validated a DL-based method for automatic screening of cervical cytology. Overall, we assessed the trends and main problems in this research field, highlighting the biomedical background of cervical cytology, the availability of the datasets used, DL-based approaches, and evaluation metrics. We also aimed to identify research gaps based on existing findings and to discuss feasibility and future research directions. To obtain a detailed and comprehensive view of the subject, the overall objective is structured around the following research questions (RQs).

- RQ 1: What DL-based techniques are used in automated cervical cytology screening?

- RQ 2: What tasks are involved in automated cervical cytology screening?

- RQ 3: How are DL-based approaches helping doctors screen for lesions?

- RQ 4: Which data sources are available?

- RQ 5: What is the best performance achieved in each study?

- RQ 6: What challenges do researchers face when using DL models in cervical cytology?

3.2 Data source and search strategy

In this study, we searched PubMed, Scopus, IEEE Xplore, ACM Digital Library, and Web of Science for publications from 2016 to 2022. Because this review focuses on DL-based methods applied to cervical cytology, the search was not limited to medical databases such as Medline or PubMed, which are oriented toward biomedical topics and health informatics; several computer science (CS) databases were also searched. Articles were retrieved using keywords related to cervical cytology (e.g. 'Cervical cell' or 'Liquid-based cytology'), deep learning (e.g. 'Deep Learning' or 'Convolutional Neural Networks'), and screening tasks (e.g. 'Classification' or 'WSI Analysis'). The full search strategy for each database can be found in Table S1 of the supplementary material.

3.3 Inclusion and exclusion criteria

An article was included if: (a) it is written in English; (b) it was published in 2016 or later; (c) it was published as an original journal article or conference paper; and (d) it adopts or proposes DL methods, systems, or applications for tasks related to automated cervical cytology screening. A study was excluded if: (a) it was published as a book, book chapter, book review, executive summary, scientific report, conference abstract, newspaper article, social media content, workshop report, or research protocol; (b) it is a duplicate retrieved from several scholarly databases; (c) it studies animals or non-human samples; or (d) it does not address any of the research questions.

3.4 Study selection

The Covidence software (www.covidence.org) was used for screening and study selection. Eligibility assessment was conducted by two investigators, who screened titles and abstracts of retrieved articles independently, and selected all relevant citations for full-text review. Disagreements were resolved through discussion with a third reviewer.

The search initially identified 1364 records from these databases; after removing 565 duplicates, 799 records were screened. According to the predetermined inclusion criteria, 661 of these were excluded, leaving 138 articles for the second round of selection. After full-text assessment, 89 papers were included in this systematic review (see Fig. 7).
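The reported counts can be checked with simple arithmetic. The minimal Python sketch below derives each stage of the PRISMA flow from the figures stated above; the number of articles removed during full-text assessment (49) is derived here rather than reported in the text, and `prisma_flow` is a hypothetical helper.

```python
def prisma_flow(identified, duplicates, excluded_at_screening, included_final):
    """Derive the record count at each PRISMA stage from the reported figures."""
    screened = identified - duplicates                # records left after de-duplication
    full_text = screened - excluded_at_screening      # records sent to full-text review
    excluded_full_text = full_text - included_final   # records dropped at full-text review
    # A negative count at any stage would mean the reported numbers disagree.
    if min(screened, full_text, excluded_full_text) < 0:
        raise ValueError("reported counts are inconsistent")
    return {"screened": screened, "full_text": full_text,
            "excluded_full_text": excluded_full_text, "included": included_final}

flow = prisma_flow(identified=1364, duplicates=565,
                   excluded_at_screening=661, included_final=89)
print(flow)
# {'screened': 799, 'full_text': 138, 'excluded_full_text': 49, 'included': 89}
```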

3.5 Data extraction

Data extraction was conducted to explore the different DL-based methods proposed or applied in cervical cytology screening programs. To ensure the reliability and quality of this review, two reviewers with expertise in deep learning and biomedical engineering extracted study characteristics and diagnostic performance data. For each selected article, we extracted the following data: authors, publication time, research objectives, study context, specific task, methodology, dataset used, and study outcomes/findings. These items were extracted to enable researchers to find and compare current DL-based studies in their research fields or tasks. The extracted data were also synthesized and analyzed to summarize existing research and identify potential directions for future research.

4 Deep learning in cervical cytology

In this section, we first give an overall introduction to, and a structured analysis of, the automation of cervical cytology screening (Sect. 4.1). Next, we survey publicly available cervical cytology datasets and describe them in detail (Sect. 4.2). We then comprehensively review the literature on deep learning methods applied in cervical cytology, covering several representative clinical tasks: cell-level identification (Sect. 4.3), detection (Sect. 4.4), segmentation (Sect. 4.5), and slide-level diagnosis (Sect. 4.6).

4.1 Overall introduction and problem analysis

The ultimate goal of automated cervical cytology screening is to improve the overall effectiveness of cervical cancer screening programs, reduce the incidence and mortality of cervical cancer, and ultimately improve women’s health outcomes. Automated systems can also potentially reduce the healthcare costs of cervical cancer screening and treatment, making screening more accessible to women in resource-limited settings. As the history of automated screening systems in Sect. 2.4 shows, the systems under development all combine digital scanners with AI technology to assist doctors in making cytological diagnoses. Future automated screening systems are expected to enable fully autonomous diagnosis and reporting of results through techniques such as medical imaging, computer vision, and machine learning. Analyzing a whole specimen involves searching for regions of interest (RoIs), segmenting cells, and classifying precancerous or cancerous cells. The specific process is as follows (Fig. 8):

- Image acquisition: The first step in automating cervical cytology screening is to acquire high-quality images of cervical cells, using imaging techniques such as optical microscopy, digital imaging, or automated slide scanning.

- Image preprocessing: The acquired images are preprocessed to enhance image quality and remove noise or artifacts that may interfere with the analysis. This step includes image filtering, noise reduction, stain normalization, and contrast enhancement.

- RoI detection: Regions of interest (suspicious cells or areas) are then detected and classified for further analysis. Object detection algorithms such as Faster R-CNN or YOLO can be employed in this step.

- Cell segmentation: Once RoIs are detected, they can be segmented into individual cells, and the parts of each cell (e.g. nucleus and cytoplasm) can be delineated. This step is not strictly necessary for recent WSI diagnosis methods, since DL-based models can extract features directly from RoIs without precise segmentation. However, it is critical for obtaining fine-grained characteristics of (pre-)cancerous cells for quantitative cytology: quantitative measurements derived from segmentation results provide morphological features with clear diagnostic significance, which can further improve classification accuracy and the reliability of the results.

- Feature extraction: After segmentation, features such as size, shape, texture, and color can be extracted from each cell. Discriminative features of biomedical significance include the brightness, elongation, roundness, perimeter, and area of the nucleus and cytoplasm, the N/C ratio, and the relative position of the nucleus. These features are then used for cell identification.

- Cell identification: The detected RoIs or the extracted features are used to classify cells into categories defined by the TBS criteria, such as normal, LSIL, or HSIL. Various machine learning algorithms can perform this step, including decision trees, support vector machines, and deep learning models.

- WSI diagnosis: All cell-level or patch-level classification results are then integrated into a slide-level prediction. There are two prevailing integration strategies: (1) fusing cell-level or patch-level features into a slide-level feature for WSI diagnosis, and (2) directly combining all instance-level prediction probabilities into a final slide-level classification probability.

- Result reporting: The final step is to generate a report that summarizes the whole WSI analysis, covering information such as the location and characteristics of the identified cells or areas, the slide-level diagnosis, and the level of suspicion. A recommended prognosis scheme may also be included.
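The two slide-level integration strategies described above can be illustrated with a minimal Python sketch. It assumes instance-level feature vectors and abnormal-cell probabilities are already available; mean pooling, the top-k rule, and the logistic slide classifier with hand-set weights are illustrative choices, not the method of any particular study.

```python
import math
from statistics import mean

def fuse_features(instance_features):
    """Strategy 1: mean-pool cell/patch feature vectors into one slide-level feature."""
    dim = len(instance_features[0])
    return [mean(f[d] for f in instance_features) for d in range(dim)]

def slide_classifier(slide_feature, weights, bias):
    """Logistic classifier on the fused slide feature (weights are hypothetical)."""
    z = sum(w * x for w, x in zip(weights, slide_feature)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def aggregate_probabilities(instance_probs, top_k=3):
    """Strategy 2: combine instance-level abnormal probabilities directly.

    The slide score is the mean of the top-k most suspicious instances, a
    hypothetical rule; top_k=1 reduces it to max pooling.
    """
    ranked = sorted(instance_probs, reverse=True)
    return mean(ranked[:top_k])

# Toy slide with five patches: 2-D features and per-patch abnormal probabilities.
features = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.1], [0.8, 0.9], [0.0, 0.0]]
probs = [0.05, 0.92, 0.10, 0.85, 0.02]

slide_feature = fuse_features(features)                  # [0.4, 0.4]
p_strategy1 = slide_classifier(slide_feature, [2.0, 2.0], -1.0)
p_strategy2 = aggregate_probabilities(probs, top_k=2)    # mean of 0.92 and 0.85
print(p_strategy1, p_strategy2)
```

Strategy 1 needs a trained slide-level model on top of the pooled feature, whereas strategy 2 only needs a fixed aggregation rule over instance predictions.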

The goal of image acquisition and preprocessing is to provide high-quality data for the subsequent image analysis steps, and deep learning can empower both steps. First, pathological staining is a time-consuming and labor-intensive process that requires specialized laboratory infrastructure, chemical reagents, and trained technicians. Virtual staining models based on deep learning can omit conventional staining steps and free up medical workers (Bai et al. 2023). Additionally, physics-guided deep learning methods can help improve the quality of optical imaging (Li et al. 2022). Moreover, differences in staining procedures, staining materials, imaging settings, and scanning devices often cause variations in the style of the collected cytological images. Generative adversarial networks (GANs) (Creswell et al. 2018), such as CycleGAN, can be used for stain normalization to unify image styles; for example, Kang et al. realized stain normalization of cervical cytology images via StainNet (Kang et al. 2021). Furthermore, deep generative models based on GANs and diffusion models (Croitoru et al. 2023) can enhance the resolution of out-of-focus and low-resolution images, allowing more detailed visualization of small structures.
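GAN-based models such as CycleGAN or StainNet require trained networks, so they are not reproduced here. As a much simpler, classical stand-in that conveys the same goal of unifying image styles, the sketch below matches each color channel's mean and standard deviation to a reference image, in the spirit of Reinhard-style color transfer. Reinhard's method operates in the lαβ color space; working directly on RGB pixel tuples, and the function name `match_channel_stats`, are simplifying assumptions made here.

```python
from statistics import mean, pstdev

def match_channel_stats(source, reference):
    """Shift and scale each RGB channel of `source` to the reference mean and std.

    source, reference: lists of (r, g, b) pixel tuples with values in [0, 255].
    """
    channels = []
    for c in range(3):
        s = [p[c] for p in source]
        r = [p[c] for p in reference]
        s_mu, s_sd = mean(s), pstdev(s)
        r_mu, r_sd = mean(r), pstdev(r)
        scale = r_sd / s_sd if s_sd > 0 else 1.0   # avoid dividing by zero on flat channels
        channels.append([(v - s_mu) * scale + r_mu for v in s])
    # Reassemble pixels and clip to the valid intensity range.
    return [tuple(min(255.0, max(0.0, channels[c][i])) for c in range(3))
            for i in range(len(source))]

source = [(0, 10, 20), (10, 20, 30), (20, 30, 40)]
reference = [(100, 50, 60), (120, 60, 70), (140, 70, 80)]
print(match_channel_stats(source, reference))  # approximately the reference pixels
```

Unlike a learned model, this global statistic matching cannot separate stain components, which is one motivation for the GAN-based approaches cited above.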

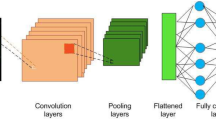

Achieving better feature representations has always been a central pursuit in automatic image analysis. Initially, simple neural networks with only a few layers were used to recognize cervical cytology images. Later, deeper and wider networks became the consensus approach for learning better representations and achieving better performance (Simonyan and Zisserman 2015; He et al. 2016; Szegedy et al. 2016; Xie et al. 2017), and various deep CNN models have been used for cervical cell identification (Rahaman et al. 2020). However, very deep networks are prone to vanishing gradients and require more data to fit. In recent years, attention mechanisms have been proposed to mimic the operation of the human visual system (Niu et al. 2021; Guo et al. 2022). Human visual perception varies across the visual field, with the highest sensitivity and strongest information processing ability at the center of the retina. When receiving external information, people first quickly scan the global scene and then fix their gaze on a specific field of view to gather local information. The brain analyzes this local information more carefully, while other information is filtered out. Introducing attention mechanisms into deep learning models allows them to selectively focus on the most important features of the input image while ignoring irrelevant or noisy information. Most recently, self-attention and the vision transformer (ViT) architecture have further expanded visual attention capabilities and have been used for a wide range of computer vision tasks (Dosovitskiy et al. 2021; Liu et al. 2021; Touvron et al. 2021; Khan et al. 2022). In automated cervical cytology, CVM-Cervix was the first to employ ViT to identify abnormal cervical cells, demonstrating its strong feature extraction ability (Liu et al. 2022).
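The core computation behind self-attention is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The minimal Python sketch below computes it for a handful of token vectors (for example, patch embeddings of a cell image). As a simplifying assumption, the tokens serve directly as queries, keys, and values; a real ViT first applies learned projections and uses multiple heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention without learned projections.

    tokens: list of equal-length vectors; each one acts as query, key and value.
    Returns (outputs, attention_weights).
    """
    d_k = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(w[j] * tokens[j][d] for j in range(len(tokens)))
                        for d in range(d_k)])
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, attn = self_attention(tokens)
```

Each row of `attn` shows how strongly one token attends to every other token; here the first token attends more to the similar third token than to the orthogonal second one.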

To further improve performance for practical deployment and broad application, a promising approach is to introduce additional information into deep learning models beyond existing cervical cytology datasets. Pretraining deep learning models on natural image datasets such as ImageNet (Russakovsky et al. 2015) and then fine-tuning them on the target cervical cytology dataset implicitly introduces information learned from natural images. Other medical datasets with tasks similar to cervical cytology screening can also be used to introduce more relevant medical features. In addition to these transfer learning approaches, incorporating medical domain knowledge is also helpful (Xie et al. 2021). Because experienced cytopathologists provide relatively accurate diagnoses, their knowledge can help deep learning models with cervical cytology screening tasks. Medical knowledge related to cervical cytology screening includes the structure and function of cervical cells, the process of cell division and differentiation, how pathologists view cytology images, the specific areas they typically focus on, and the features they pay particular attention to. This knowledge has been accumulated, summarized, and validated by numerous cytopathologists over many years and across a large number of cases. By incorporating it into model design or training, DL-based models can better capture the underlying cytopathology of the images they analyze, which can lead to more accurate and reliable diagnoses. For instance, handcrafted features related to cell morphology provide guidance for cervical cell identification (Dong et al. 2020), and shape priors are valuable in helping deep learning models segment overlapping cells (Xu et al. 2018).

Compared with natural image datasets, large-scale, high-quality cervical cytology datasets are currently lacking. This shortage manifests in three aspects. First, the number of images in a dataset is usually limited by the high cost of data collection: acquiring cervical cytology images requires slide preparation, staining, scanning, and stitching, all of which are expensive in terms of equipment and labor. Second, only a small portion of the samples are annotated. Annotations include overall classification labels for the samples as well as the locations and categories of abnormal cells, and producing them requires substantial experience from professional cytologists. Third, cervical cytology screening is a preliminary screening for cervical cancer aimed at a broad population of women, so it is difficult to collect enough positive cases of rare and severe conditions to build a balanced dataset. To alleviate these issues, annotation-efficient learning methods have been proposed to make full use of limited annotations and to exploit latent discriminative information in unlabeled samples, including semi-supervised learning (Van Engelen and Hoos 2020), multiple instance learning (MIL) (Carbonneau et al. 2018), etc.
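Semi-supervised learning covers many algorithms. One common and simple ingredient is confidence-thresholded pseudo-labeling: a model trained on the labeled subset predicts on unlabeled cells, and only highly confident predictions are kept as additional training labels. The sketch below shows just this selection step; the threshold of 0.95, the class indices, and the helper name are hypothetical, and it is not presented as the method of any study cited above.

```python
def select_pseudo_labels(unlabeled_probs, threshold=0.95):
    """Keep unlabeled samples whose most likely class is predicted confidently.

    unlabeled_probs: dict mapping a sample id to a list of class probabilities
    produced by a model trained on the labeled subset.
    Returns a dict mapping sample id -> pseudo-label (class index).
    """
    pseudo = {}
    for sample_id, probs in unlabeled_probs.items():
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold:
            pseudo[sample_id] = best
    return pseudo

# Hypothetical class indices: 0 = NILM, 1 = LSIL, 2 = HSIL.
predictions = {
    "cell_a": [0.97, 0.02, 0.01],
    "cell_b": [0.40, 0.35, 0.25],  # ambiguous: left unlabeled
    "cell_c": [0.01, 0.03, 0.96],
}
print(select_pseudo_labels(predictions))  # {'cell_a': 0, 'cell_c': 2}
```

The selected samples would then be added to the labeled set for another round of training.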

In addition to the above issues, the speed and convenience of automated screening systems also require consideration. Some researchers have therefore studied lightweight modules (Wang et al. 2019; Zhao et al. 2022) and Internet of Medical Things (IoMT) architectures (Jiang et al. 2022) to improve efficiency in processing cervical cytology images and operating the system, thereby reducing its overall cost. Furthermore, DL-based models lack clinical interpretability and transparency, which limits their practicability and generality. Developing explainable methods for cervical cytology can therefore foster trust between AI technologies and cytopathologists (Li et al. 2022). Visualization techniques are often considered the primary means of interpreting DL-based models. In the analysis of cervical cytology images, a number of studies have used class activation mapping (CAM) (Zhou et al. 2016) and its variants, such as Grad-CAM (Selvaraju et al. 2017), Score-CAM (Wang et al. 2020), and Relevance-CAM (Lee et al. 2021), to generate heatmaps or attention scores for investigating the decision-making process. Further visualization techniques are being explored to improve interpretability.
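CAM itself is a weighted sum: for a target class c, the map is M_c(i, j) = Σ_k w_k^c A_k(i, j), where A_k are the feature maps of the last convolutional layer and w_k^c are the weights connecting their global-average-pooled values to the output for class c. The minimal Python sketch below computes this on tiny hand-made arrays and min-max normalizes the result for display; the input values are illustrative. Grad-CAM replaces w_k^c with spatially averaged gradients and applies a ReLU, which this sketch does not do.

```python
def class_activation_map(feature_maps, class_weights):
    """CAM for one class: M_c(i, j) = sum_k w_k^c * A_k(i, j), min-max normalized.

    feature_maps: K maps A_k from the last conv layer, each H x W (lists of lists).
    class_weights: K weights w_k^c from the global-average-pooled features to class c.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[sum(wk * fmap[i][j] for wk, fmap in zip(class_weights, feature_maps))
            for j in range(w)] for i in range(h)]
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = hi - lo if hi > lo else 1.0   # a constant map normalizes to all zeros
    return [[(v - lo) / span for v in row] for row in cam]

feature_maps = [[[0, 1], [0, 0]],   # A_1 fires at the top-right position
                [[0, 0], [2, 0]]]   # A_2 fires at the bottom-left position
print(class_activation_map(feature_maps, [2.0, 0.5]))  # [[0.0, 1.0], [0.5, 0.0]]
```

In practice the low-resolution map is upsampled to the input size and overlaid on the cell image as a heatmap.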

4.2 Public datasets of cervical cytology

At the beginning of developing automatic methods for cervical cytology screening, many human and material resources were devoted to the collection of cervical cytological images because automatic analysis methods rely on large amounts of labeled data and there are few public datasets available. We summarize publicly available datasets for cervical cytology screening, as listed in Table 2. These public cervical cytology datasets can be utilized to develop automatic analysis algorithms for multiple tasks, including image classification, object detection, semantic segmentation, etc.

Herlev (Jantzen et al. 2005). Herlev is the most widely used dataset for cervical cytology analysis. It consists of 917 Papanicolaou (Pap) smear cervical cell images in 7 classes (3 normal and 4 abnormal), based on the three-tier dysplasia classification system. All images were collected at Herlev University Hospital (Denmark) using a microscope connected to a frame grabber, with a resolution of 0.201 μm/pixel. Each cell image is manually segmented into background, cytoplasm, and nucleus for further feature extraction.

ISBI 2014 (Lu et al. 2015). This dataset was released for the first Overlapping Cervical Cytology Image Segmentation Challenge, held at the IEEE International Symposium on Biomedical Imaging (ISBI 2014). The main goal of the challenge is to extract the boundaries of individual cytoplasm and nuclei from overlapping cervical cytology images. The dataset consists of 16 Extended Depth of Field (EDF) cervical cytology images and 945 synthetic images. Each image contains 20 to 60 Papanicolaou-stained cervical cells with different degrees of overlap. The dataset was built by the University of Adelaide in Australia, and the images were captured with an Olympus BX40 microscope with a 40× objective and a four-megapixel SPOT Insight camera. The image resolution is about 0.185 μm/pixel.

ISBI 2015 (Lu et al. 2016). This dataset was used for the second cervical cell segmentation challenge at ISBI 2015 and consists of 17 multi-layer cervical cell volumes, of which 8 are used for training and 9 for testing. The main difference from ISBI 2014 is that each input is a multi-layer cytology volume, i.e. a set of multi-focal images acquired from the same specimen. This richer input may provide more information for detecting and segmenting cervical cells, thus enabling more accurate detection and segmentation of the cytoplasm and nuclei.

SIPaKMeD (Plissiti et al. 2018). This database consists of 4049 images of isolated cervical cells acquired at the University of Ioannina, Greece, through a CCD camera adapted to an optical microscope (OLYMPUS BX53F). Experienced cytopathologists annotated the cells into five classes (superficial-intermediate, parabasal, koilocytotic, dyskeratotic, and metaplastic) according to their cytological appearance and morphology. Superficial-intermediate and parabasal cells are normal, koilocytotic and dyskeratotic cells are abnormal but not malignant, and metaplastic cells are benign. In each image of the SIPaKMeD database, the areas of the cytoplasm and the nucleus are manually delineated.

CERVIX93 (Phoulady and Mouton 2018). This dataset consists of 93 stacks (frames) of images provided by Moffitt Cancer Center (Tampa, FL). All images were acquired with an integrated microscope system (Stereologer, SRC Biosciences, Tampa, FL) at 40× magnification. Each stack contains 10 to 20 images, all of size 1280 × 960 pixels. All frames were examined by cytologists and graded into three TBS-based categories (Negative, LSIL, HSIL). A total of 2705 nuclei are manually annotated with bounding boxes across all grade categories.

BHS (Araújo et al. 2019). This database contains 194 conventional Pap smear images from the Brazilian Health System (BHS). The glass slides were digitized with a Zeiss AxioCam MRc camera at 40× magnification to construct the training set (26 images) and the test set (168 images). Each image has a resolution of 0.255 μm/pixel and a size of 1392 × 1040 pixels. The images are labeled as normal or abnormal, and the abnormal images contain 5 types of (pre-)cancerous cells (carcinoma, HSIL, LSIL, ASC-US, and ASC-H).

BTTFA (Zhang et al. 2019). This real-world clinical dataset with well-annotated nuclei was released by South China University of Technology. It contains 104 cervical LBC images of size 1024 × 768 pixels, scanned with an Olympus BX51 microscope at 200× magnification with a resolution of 0.32 μm/pixel. All images were manually segmented by a professional pathologist to obtain pixel-level segmentation labels.

Mendeley LBC (Hussain et al. 2020). This dataset collects 460 specimens from three medical diagnostic centers in India: Babina Diagnostic Pvt. Ltd in Imphal, Gauhati Medical College and Hospital in Guwahati, and Dr. B. Barooah Cancer Institute in Guwahati. It consists of 963 liquid-based cytology (LBC) images captured with a Leica ICC50 HD microscope at 400× magnification (40× objective lens and 10× eyepiece). Each image is 2048 × 1536 pixels. The images are divided into four categories: NILM (613), LSIL (163), HSIL (113), and SCC (74).

CRIC (Rezende et al. 2021). The CRIC collection contains 400 images of conventional cervical Pap smears and 11,534 classified cells. The Pap smears were collected from 118 female patients in the Southeast region of Brazil and were prepared and analyzed in the Cytology Laboratory of the Pharmacy School, Federal University of Ouro Preto, Minas Gerais, Brazil. All images were captured by conventional bright-field microscopy with a 40× objective and a 10× eyepiece, using a Zeiss AxioCam MRc digital camera coupled to a Zeiss AxioImager. The image size is 1376 × 1020 pixels and the resolution is 0.228 μm/pixel. The CRIC collection covers six TBS categories: NILM (6779), ASC-US (606), LSIL (1360), ASC-H (925), HSIL (1703), and SCC (161).
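The per-category counts and imaging parameters listed for CRIC allow a quick consistency check. The short Python sketch below confirms that the six category counts add up to the reported 11,534 cells and converts the image size and pixel resolution into a physical field of view; treating every non-NILM category as abnormal is an assumption made here for the class-share calculation, and `field_of_view_um` is a hypothetical helper.

```python
# Per-category cell counts reported for CRIC.
CRIC_COUNTS = {"NILM": 6779, "ASC-US": 606, "LSIL": 1360,
               "ASC-H": 925, "HSIL": 1703, "SCC": 161}

def field_of_view_um(width_px, height_px, um_per_px):
    """Physical width and height of one image, in micrometres."""
    return width_px * um_per_px, height_px * um_per_px

total = sum(CRIC_COUNTS.values())
abnormal_share = (total - CRIC_COUNTS["NILM"]) / total   # all non-NILM cells
fov_w, fov_h = field_of_view_um(1376, 1020, 0.228)

print(total)                              # 11534
print(round(abnormal_share, 3))           # 0.412
print(round(fov_w, 1), round(fov_h, 1))   # 313.7 232.6
```

The same conversion makes resolutions comparable across datasets acquired at different magnifications.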

Comparison Detector (Liang et al. 2021). This database was collected by Central South University in China, with samples scanned by a Pannoramic MIDI II digital slide scanner. It consists of 7410 cervical images cropped from WSIs, with a total of 48,587 object-instance bounding boxes labeled by experienced cytopathologists. Following TBS categories, the annotated objects belong to 11 categories: ASC-US, ASC-H, LSIL, HSIL, SCC, AGC, trichomonas (TRICH), candida (CAND), flora, herpes, and actinomyces (ACTIN).

RepoMedUNM (Riana et al. 2021). This database, released by Universitas Nusa Mandiri in Indonesia, comprises 6168 Pap smear cell images collected from 24 slides, including both non-ThinPrep and ThinPrep Pap test images. Images were obtained with an OLYMPUS CX33RTFS2 optical microscope and an X52-107BN microscope with a Logitech camera. The 3083 non-ThinPrep images fall into two categories, normal and LSIL, while the ThinPrep images are divided into three categories: normal cells (1513), koilocyt cells (434), and HSIL (410).

CCEDD (Liu et al. 2022). This dataset contains 686 cervical images of size 2048 × 1536 pixels from Liaoning Cancer Hospital & Institute. All samples were scanned with a Nikon ECLIPSE Ci slide scanner, a SmartV350D lens, and a 3-megapixel digital camera, at 100× magnification for negative patients and 400× for positive patients. The captured images contain overlapping cervical cell masses in various complex backgrounds and were labeled by 6 experienced cytologists, who outlined the contours of the cytoplasm and nuclei. The original images are divided into training, validation, and test sets at a ratio of 6:1:3, and all raw images are cut into 512 × 384-pixel patches, yielding 33,614 patches.

Cx22 (Liu et al. 2022). This dataset, released by the Key Laboratory of Opto-Electronic Information Processing, Chinese Academy of Sciences, Shenyang, extends CCEDD with more precisely annotated instances (cytoplasm and nucleus). A total of 14,946 cell instances in 1320 images of size 512 × 512 pixels are collected and divided into two subsets: Cx22-Multi (containing multiple instances) and Cx22-Pair (containing only a pair of instances).

4.3 Cervical cell identification

Cell-level identification is one of the most successful applications of deep learning in cervical cytology screening. Traditional machine learning methods must accurately segment the cell outline, and often the nucleus, and then rely on manually designed features (nucleus area, cytoplasm area, nucleus perimeter, cytoplasm perimeter, N/C ratio, etc.). These hand-crafted features are fused and used for the final classification of cervical cells. Most traditional machine learning-based methods therefore depend on the accuracy of cell segmentation, which is key to feature extraction. However, in actual clinical practice, complex backgrounds and blurry overlapping cells make accurate segmentation of cervical cells very difficult. In contrast, DL-based identification schemes built on convolutional neural networks (CNNs) avoid complex image preprocessing steps such as pixel-level cell segmentation, feature selection, and feature extraction. By learning from abundant training data, DL-based approaches can realize end-to-end, high-performance identification of cervical cells and have gradually become a promising research direction. The most straightforward approach is to feed the cell image directly into a deep CNN to extract feature maps, and then use the output layer and a classifier to obtain the predicted category. Bora et al. (2016) used AlexNet (Krizhevsky et al. 2017) and an unsupervised feature selection technique (Mitra et al. 2002) together with two classifiers, a Least Squares Support Vector Machine (LSSVM) and Softmax Regression, to classify cervical dysplasia in Pap smear images. Shanthi et al. (2019) designed a CNN architecture composed of three convolutional layers, three max-pooling layers, and one fully connected layer. They evaluated the network on four different datasets under different settings (2-class, 3-class, 4-class, and 5-class), demonstrating its ability to identify cervical cells. Chen et al. (2021) proposed CompactVGG, a network adapted from VGGNet, for high-performance classification of cervical cells. On the public Herlev and SIPaKMeD datasets and on their own private dataset, CompactVGG outperformed several classical CNN models. Similarly, DCAVN was proposed to classify cervical cells as normal or abnormal using a deep convolutional and variational autoencoder network (Khamparia et al. 2021).
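For contrast with the end-to-end CNN approach, the hand-crafted morphological features that traditional pipelines compute from segmentation masks can be sketched in a few lines of Python. The sketch assumes binary nucleus and cytoplasm masks given as lists of lists, approximates the perimeter by counting foreground pixel edges that touch background (which overestimates the length of smooth contours), measures roundness as 4πA/P², and defines the N/C ratio as nucleus area divided by cytoplasm area excluding the nucleus; other N/C definitions exist, and all helper names are hypothetical.

```python
import math

def area(mask):
    """Number of foreground pixels in a binary mask (list of lists of 0/1)."""
    return sum(sum(row) for row in mask)

def perimeter(mask):
    """Count foreground pixel edges that border background or the image border."""
    h, w = len(mask), len(mask[0])
    edges = 0
    for i in range(h):
        for j in range(w):
            if not mask[i][j]:
                continue
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if not (0 <= ni < h and 0 <= nj < w) or not mask[ni][nj]:
                    edges += 1
    return edges

def morphology(nucleus_mask, cytoplasm_mask):
    """Hand-crafted features; cytoplasm_mask is assumed to exclude the nucleus."""
    a_n, a_c = area(nucleus_mask), area(cytoplasm_mask)
    if a_n == 0 or a_c == 0:
        raise ValueError("both masks must be non-empty")
    p_n = perimeter(nucleus_mask)
    return {
        "nucleus_area": a_n,
        "cytoplasm_area": a_c,
        "nucleus_perimeter": p_n,
        "nucleus_roundness": 4 * math.pi * a_n / p_n ** 2,  # 1 for an ideal continuous circle
        "nc_ratio": a_n / a_c,
    }

# Toy 6 x 6 cell: a 2 x 2 nucleus inside a 4 x 4 cell body.
nucleus = [[1 if 2 <= i <= 3 and 2 <= j <= 3 else 0 for j in range(6)] for i in range(6)]
cytoplasm = [[1 if 1 <= i <= 4 and 1 <= j <= 4 and not nucleus[i][j] else 0
              for j in range(6)] for i in range(6)]
features = morphology(nucleus, cytoplasm)
# nucleus_area 4, cytoplasm_area 12, perimeter 8, roundness pi/4, nc_ratio 1/3
```

Every value depends on how well the masks follow the true contours, which is why segmentation errors propagate directly into these pipelines.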

In addition to using the classical CNN architectures or self-designed models, there are three commonly used approaches for cervical cell identification: transfer learning, multi-model ensemble, and hybrid feature fusion, as shown in Fig. 9. A summary of the deep learning-based methods for cervical cell classification is exhibited in Table 3.

4.3.1 Transfer learning based identification

The success of deep learning is closely tied to large amounts of data, so insufficient training data can seriously degrade the performance of deep learning models. A major obstacle to applying deep learning to medical image analysis is the lack of effective annotations. Limited labels mean limited usable data, which makes deep learning models hard to train well and prone to overfitting. Transfer learning is therefore an effective alternative in this case (Pan and Yang 2009). Unlike conventional deep learning algorithms that solve isolated tasks, transfer learning transfers knowledge learned in a source task to improve learning in a target task, for example from a large public dataset (e.g. ImageNet) to a domain-specific task (e.g. cervical cell identification), as shown in Fig. 9a. Applying transfer learning to cervical cell identification can save considerable labeling effort, reduce overfitting, and improve the generalization ability of deep learning models. Fine-tuning and feature extraction are two common strategies for transferring deep learning models (Yu et al. 2022). Fine-tuning takes the model pre-trained on the source dataset and continues training it on the target dataset, updating the parameters of all learnable layers. Feature extraction keeps the parameters of all layers fixed except the top layer, which connects to the classifier and is specific to the classification task.
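The difference between the two transfer strategies comes down to which parameters receive gradient updates. The toy Python sketch below uses a two-weight scalar model, prediction = w_head · (w_backbone · x), where w_backbone stands in for the pre-trained layers and w_head for the task-specific top layer; the data, learning rate, initial values, and the function name `train` are hypothetical. Under feature extraction only w_head is updated, while fine-tuning also adapts w_backbone.

```python
def train(strategy, data, w_backbone=0.5, w_head=1.0, lr=0.01, epochs=500):
    """Toy model: prediction = w_head * (w_backbone * x), loss = 0.5 * (prediction - y) ** 2.

    w_backbone plays the role of the pre-trained layers, w_head the task-specific top layer.
    "fine_tune" updates both weights; "feature_extraction" freezes w_backbone.
    """
    if strategy not in ("fine_tune", "feature_extraction"):
        raise ValueError(f"unknown strategy: {strategy}")
    for _ in range(epochs):
        for x, y in data:
            h = w_backbone * x               # feature produced by the "backbone"
            err = w_head * h - y             # dLoss/dPrediction
            grad_head = err * h
            grad_backbone = err * w_head * x
            w_head -= lr * grad_head
            if strategy == "fine_tune":      # a frozen backbone is simply never updated
                w_backbone -= lr * grad_backbone
    return w_backbone, w_head

data = [(1.0, 2.0), (2.0, 4.0)]              # hypothetical target mapping y = 2x
print(train("feature_extraction", data))     # backbone stays 0.5, head approaches 4
print(train("fine_tune", data))              # both weights adapt; product approaches 2
```

In a real network, the same distinction is implemented by excluding the frozen layers' parameters from the optimizer or disabling their gradients.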

Zhang et al. (2017) first introduced transfer learning to cervical cytology screening for both conventional Pap smear and liquid-based cytology datasets. They proposed a simple ConvNet, DeepPap, to classify cervical cells as healthy or abnormal. DeepPap was first pre-trained on the natural image dataset ImageNet and then fine-tuned on cervical cytology datasets. On both the conventional Pap smear (CPS) dataset Herlev and the LBC dataset HEMLBC, it achieved high classification performance.

Hyeon et al. (2017) used VGGNet-16 pre-trained on ImageNet to extract features of cervical cells and then trained an SVM classifier for prediction. They collected 71,344 Pap smear microscopic images classified into six categories according to TBS criteria. To mitigate the imbalanced distribution, they downsampled and regrouped all images into two classes: normal (8373) and abnormal (8373). Using 80% of the images for training and the rest for testing, the SVM classifier achieved the best F1 score (0.7817) compared with logistic regression, random forest, and AdaBoost.
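The class-balancing procedure described for Hyeon et al. (2017) can be sketched as follows: regroup TBS categories into two classes, randomly undersample the larger class to the size of the smaller one, and hold out 20% for testing. The text does not specify which categories count as normal, so mapping only NILM to "normal" is an assumption, as are the helper names; the split here is a simple shuffled split rather than a stratified one.

```python
import random

# Assumed regrouping rule: only NILM counts as "normal".
NORMAL_CATEGORIES = {"NILM"}

def regroup(samples):
    """Map (image_id, tbs_category) pairs to (image_id, binary_label) pairs."""
    return [(img, "normal" if cat in NORMAL_CATEGORIES else "abnormal") for img, cat in samples]

def undersample(samples, seed=0):
    """Randomly drop samples from larger classes until every class has the minority size."""
    rng = random.Random(seed)
    by_class = {}
    for img, label in samples:
        by_class.setdefault(label, []).append((img, label))
    n_min = min(len(items) for items in by_class.values())
    balanced = []
    for items in by_class.values():
        balanced.extend(rng.sample(items, n_min))
    rng.shuffle(balanced)
    return balanced

def split(samples, train_frac=0.8):
    """Simple (non-stratified) split of an already shuffled list."""
    cut = int(len(samples) * train_frac)
    return samples[:cut], samples[cut:]

samples = ([(f"n{i}", "NILM") for i in range(10)]
           + [(f"l{i}", "LSIL") for i in range(3)]
           + [(f"h{i}", "HSIL") for i in range(2)])
balanced = undersample(regroup(samples))
train_set, test_set = split(balanced)
print(len(balanced), len(train_set), len(test_set))  # 10 8 2
```

Undersampling discards majority-class images; the trade-off is a balanced training signal at the cost of less data overall.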

Nguyen et al. (2018) proposed a DL-based approach to microscopic image classification based on transfer learning and feature concatenation. They used three deep CNN models pre-trained on ImageNet, namely Inception-v3, ResNet152, and Inception-ResNet-v2, to extract initial features of cervical cells. They then concatenated the extracted features and used two additional fully connected layers to fuse and compress them for final classification. The method achieved an average accuracy of 92.63% on the Herlev dataset, demonstrating strong performance for cervical cell classification.
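Feature concatenation followed by fully connected fusion layers can be expressed compactly. In the minimal Python sketch below, each backbone's pooled feature vector is simply a list of floats and the two fully connected layers use hand-set toy weights. In Nguyen et al. (2018) the features come from pre-trained CNNs and the fusion weights are learned; the ReLU between the two layers and the helper names are assumptions.

```python
def linear(x, weights, bias):
    """Fully connected layer: `weights` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, x) for x in v]

def fuse_backbone_features(feature_vectors, fc1, fc2):
    """Concatenate per-backbone features, then fuse and compress with two FC layers."""
    concatenated = [x for vec in feature_vectors for x in vec]
    hidden = relu(linear(concatenated, *fc1))   # compressed joint representation
    return linear(hidden, *fc2)                 # class scores (logits)

# Toy example: three "backbones" each output a 1-D feature.
backbone_features = [[1.0], [2.0], [3.0]]
fc1 = ([[1, 0, 0], [0, 1, -1]], [0, 0])   # 3 -> 2
fc2 = ([[2, 0], [0, 1]], [0.5, 0])        # 2 -> 2 classes
print(fuse_backbone_features(backbone_features, fc1, fc2))  # [2.5, 0.0]
```

Because the first fusion layer sees all backbones at once, it can learn cross-model feature interactions that simple output averaging cannot.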

Ghoneim et al. (2020) combined CNNs with an extreme learning machine (ELM)-based classifier for cervical cell classification. They compared a shallow CNN model with two deep CNN models, VGG-16 and CaffeNet. All three models were fine-tuned on the Herlev dataset, and the proposed CNN-ELM-based system achieved 99.5% accuracy in 2-class classification and 91.2% in 7-class classification.

Khamparia et al. (2020) proposed a novel Internet of Health Things (IoHT)-driven diagnostic system for cervical cancer. To classify abnormal cervical cells, they used several classical CNN models (InceptionV3, VGG19, SqueezeNet, and ResNet50) as feature extractors in conjunction with multiple machine learning classifiers (k-nearest neighbors, naive Bayes, logistic regression, random forest, and support vector machines) for final prediction. ResNet50 combined with the random forest classifier achieved the highest classification accuracy of 97.89%. They also developed a web application for predicting uploaded test images, and the proposed IoHT system can improve the diagnostic efficiency of cytologists.

Wang et al. (2020) presented an adaptive pruning deep transfer learning model (PsiNet-TAP) to classify Pap smear images. PsiNet-TAP consists of 10 convolutional layers and is first pre-trained on the ImageNet dataset. Transfer learning is then applied by using the pre-trained weights as initial weights to fine-tune the model on Pap smear images. Furthermore, to discard unimportant convolution kernels, they designed an adaptive pruning method based on the product of each kernel's l1-norm and its output excitation mean. On their own collection of 389 cervical Pap smear images, PsiNet-TAP achieved more than 98% accuracy.
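
The pruning criterion can be sketched as follows: each kernel is scored by the L1 norm of its weights multiplied by the mean value of its (post-activation) output map, and low-scoring kernels are dropped. The threshold rule used here (a fraction of the mean score) and the helper names are our assumptions; the adaptive rule in PsiNet-TAP may differ.

```python
def kernel_importance(kernel_weights, output_map):
    """Score = L1 norm of the kernel's weights times the mean of its output activation."""
    l1 = sum(abs(w) for w in kernel_weights)
    flat = [v for row in output_map for v in row]
    excitation = sum(flat) / len(flat)
    return l1 * excitation


def select_kernels(kernels, outputs, keep_ratio_of_mean=0.5):
    """Keep kernels whose score is at least `keep_ratio_of_mean` times the mean score (hypothetical rule)."""
    scores = [kernel_importance(k, o) for k, o in zip(kernels, outputs)]
    threshold = keep_ratio_of_mean * sum(scores) / len(scores)
    kept = [i for i, s in enumerate(scores) if s >= threshold]
    return kept, scores


# Flattened toy kernels and their 2x2 output maps.
kernels = [[1.0, -1.0, 1.0], [0.01, 0.01, 0.01], [2.0, 0.0, -2.0]]
outputs = [[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.5, 0.5], [0.5, 0.5]]]
kept, scores = select_kernels(kernels, outputs)
```

The second kernel has tiny weights, so its score is far below the threshold and it is pruned even though its output is active.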

Bhatt et al. (2021) used progressive resizing together with transfer learning to train several generic CNN models for the identification of cervical cells. They performed binary and multiclass experiments on the Herlev and SIPaKMeD datasets. The experimental results demonstrated the high performance of the proposed method, and Grad-CAM activation maps highlighted the pre-malignant or malignant lesions localized by the model.
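
Progressive resizing trains on small images first and enlarges the input in later stages, reusing the weights learned so far. A minimal schedule generator, with hypothetical sizes and stage lengths, might look like this:

```python
def progressive_sizes(start=64, final=224, stages=3, epochs_per_stage=5):
    """Input image size for each epoch, growing linearly from `start` to `final` over `stages`."""
    sizes = []
    for stage in range(stages):
        if stages == 1:
            size = final
        else:
            size = start + (final - start) * stage // (stages - 1)
        sizes.extend([size] * epochs_per_stage)
    return sizes


schedule = progressive_sizes()
# 5 epochs at 64 px, then 5 at 144 px, then 5 at 224 px.
```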

4.3.2 Multi-model ensemble based identification

Ensemble learning is a machine learning technique that combines the predictions of multiple base learners, fusing them with various voting mechanisms, to achieve better performance than a single learner (Yang et al. 2022). Its basic guiding principle is ‘many heads are better than one’. In recent years, with the rapid development of deep learning, ensemble deep learning has been widely applied in biomedical and bioinformatics fields (Cao et al. 2020; Ganaie et al. 2022). A multi-model ensemble is the most straightforward way to realize ensemble deep learning. The diversity of the individual networks is the essential characteristic of multi-model ensemble learning, and various integration strategies can help the base models achieve better performance. Ensembling multiple models has become a promising direction for improving accuracy in cervical cell identification, as illustrated in Fig. 9b.
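
One common integration strategy is soft voting, which averages the class-probability vectors of the base models and predicts the class with the highest average. This is a generic illustration rather than a method from the cited works, and the optional per-model weights are an assumption.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of per-model class probabilities; returns (argmax class, fused probabilities)."""
    weights = weights or [1.0] * len(prob_lists)
    total = sum(weights)
    n_classes = len(prob_lists[0])
    fused = [sum(w * p[c] for w, p in zip(weights, prob_lists)) / total for c in range(n_classes)]
    return max(range(n_classes), key=fused.__getitem__), fused


# Three hypothetical models disagree; the average favors class 1.
label, fused = soft_vote([[0.6, 0.4], [0.3, 0.7], [0.4, 0.6]])
```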

Rahaman et al. (2021) proposed a hybrid deep feature fusion (HDFF) approach, DeepCervix, for the multiclass classification of cervical cells. Four deep learning networks (VGG16, VGG19, XceptionNet, and ResNet50) were used to extract features, and a subsequent feature fusion network concatenated the extracted features to perform the final prediction. The HDFF network achieved accuracies of 99.85% for 2-class, 99.38% for 3-class, and 99.14% for 5-class classification on the SIPaKMeD dataset. On the Herlev dataset, it achieved 98.32% and 90.32% for 2-class and 7-class classification, respectively.

Manna et al. (2021) developed an ensemble-based model for cervical cell classification using three general CNN models: Inception v3, Xception, and DenseNet-169. They presented a novel ensemble technique in which the prediction scores of all three CNN models were taken into account to make the final decision. The method used a fuzzy ranking-based approach, in which two non-linear functions were applied to the probability scores of each base learner to determine the fuzzy ranks of the classes. For each base learner, the ranks from the two functions were multiplied; these products were then summed over the three base learners, and the class with the lowest total rank was taken as the prediction. Extensive experiments on two public datasets, SIPaKMeD and Mendeley LBC, demonstrated the high classification accuracy and sensitivity of the proposed method.
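
The rank-fusion procedure can be sketched as below. The two non-linear functions are illustrative choices of our own (both equal 0 when a class has probability 1 and grow as confidence drops), so the exact functions, and any additional penalty terms used by Manna et al., may differ.

```python
import math


def rank_exp(p):
    # Illustrative rank function 1 (assumption): 0 at p = 1, grows as confidence p drops.
    return 1.0 - math.exp(-((1.0 - p) ** 2) / 2.0)


def rank_tanh(p):
    # Illustrative rank function 2 (assumption): also 0 at p = 1, grows as p drops.
    return math.tanh(((1.0 - p) ** 2) / 2.0)


def fuzzy_rank_ensemble(prob_lists):
    """Multiply the two ranks per learner, sum over learners, and pick the class with the lowest total."""
    n_classes = len(prob_lists[0])
    totals = [sum(rank_exp(p[c]) * rank_tanh(p[c]) for p in prob_lists) for c in range(n_classes)]
    return min(range(n_classes), key=totals.__getitem__), totals


# Three hypothetical base learners, three classes.
label, totals = fuzzy_rank_ensemble([[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
```

Because the ranks are non-linear in p, a single very confident learner lowers a class's total more than several lukewarm votes would, which is the intended difference from plain averaging.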

Diniz et al. (2021) proposed a simple but effective ensemble method to classify cervical cells. After selecting the three best-performing models among all trained models, they generated the final prediction by voting over these three models’ predictions. On the public CRIC dataset, the proposed ensemble outperformed EfficientNet, MobileNet, InceptionNetV3, and XceptionNet, showing its effectiveness in cervical cell classification.
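
The select-then-vote scheme can be sketched as picking the top three models by a validation score and taking a per-sample majority vote. The model names and scores are hypothetical; ties go to the higher-ranked model because `Counter.most_common(1)` returns the first-inserted label among equal counts, a tie-breaking choice of ours.

```python
from collections import Counter


def top_k_majority_vote(val_scores, predictions, k=3):
    """Pick the k models with the highest validation score, then majority-vote their per-sample predictions."""
    ranked = sorted(val_scores, key=val_scores.get, reverse=True)[:k]
    fused = []
    for i in range(len(predictions[ranked[0]])):
        votes = Counter(predictions[name][i] for name in ranked)
        fused.append(votes.most_common(1)[0][0])
    return ranked, fused


val_scores = {"model_a": 0.90, "model_b": 0.85, "model_c": 0.80, "model_d": 0.50}
predictions = {
    "model_a": [0, 1, 2],
    "model_b": [0, 1, 1],
    "model_c": [1, 1, 2],
    "model_d": [2, 2, 2],  # excluded: not among the top three
}
ranked, fused = top_k_majority_vote(val_scores, predictions)
```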

Madhukar et al. (2022) integrated two classical DL networks, VGG16 and ResNet50, to classify cervical cells. They concatenated the 2048-dimensional feature vectors from the two networks and used the fused features for classification. On the publicly available CRIC dataset, the proposed method achieved test accuracies of 96.07%, 93.30%, and 85.07% for 2-class, 3-class, and 6-class classification, respectively.

Liu et al. (2022) proposed a DL-based framework, CVM-Cervix, for cervical cell classification. CVM-Cervix first combines a CNN module with a vision transformer module to extract local and global features from cervical cell images. The Xception model serves as the CNN module and generates 2048-dimensional local features, and the tiny DeiT model serves as the vision transformer module and generates 192-dimensional global features. A multilayer perceptron module then fuses the local and global features to perform the final identification. CVM-Cervix was evaluated on the combination of the CRIC and SIPaKMeD datasets, which includes 11 categories in total, and the experimental results demonstrated its effectiveness in classifying cervical Pap smear images. To meet the practical needs of clinical work, the authors also introduced a lightweight post-processing step that compresses the model by quantization, reducing the storage of each weight from 32 to 16 bits. This greatly reduced the model size while leaving the classification accuracy almost unchanged.
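
The storage saving from 32-bit to 16-bit weights can be checked with Python's `struct` module, whose `e` format packs IEEE-754 half-precision floats. This sketch only shows the round trip and the error it introduces on a few made-up weights; it is not the authors' quantization code.

```python
import struct


def quantize_fp16(weights):
    """Pack float weights as IEEE-754 half precision (2 bytes each), then unpack them."""
    packed = struct.pack(f"<{len(weights)}e", *weights)
    return packed, list(struct.unpack(f"<{len(weights)}e", packed))


weights = [0.12345678, -1.5, 3.14159265, 1e-3]          # hypothetical weights
fp32_bytes = struct.pack(f"<{len(weights)}f", *weights)  # 4 bytes per weight
fp16_bytes, restored = quantize_fp16(weights)            # 2 bytes per weight
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storage halves exactly, while the rounding error stays around three decimal digits of precision, which is consistent with accuracy remaining almost unchanged.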

Kundu and Chattopadhyay (2022) employed an evolutionary metaheuristic, the genetic algorithm (GA), to select among the features extracted from the GoogLeNet and ResNet-18 models. After feature selection, an SVM served as the classifier for the final prediction. The proposed method achieved 99.07% accuracy and a 98.31% F1-score on the Mendeley LBC dataset. On the SIPaKMeD dataset, it achieved 99.65% and 98.94% for 2-class and 5-class classification, respectively.
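
GA-based feature selection evolves binary masks over the feature vector. The sketch below uses truncation selection, elitism, one-point crossover, and bit-flip mutation; the fitness (nearest-centroid accuracy minus a small penalty per kept feature) is a hypothetical stand-in for the SVM-based evaluation, and all hyperparameters are illustrative.

```python
import random


def nearest_centroid_accuracy(X, y, mask):
    """Hypothetical fitness: training accuracy of a nearest-centroid classifier on the masked features."""
    idx = [i for i, keep in enumerate(mask) if keep]
    if not idx:
        return 0.0
    classes = sorted(set(y))
    centroids = {}
    for c in classes:
        rows = [x for x, label in zip(X, y) if label == c]
        centroids[c] = [sum(r[i] for r in rows) / len(rows) for i in idx]
    correct = 0
    for x, label in zip(X, y):
        sel = [x[i] for i in idx]
        pred = min(classes, key=lambda c: sum((a - b) ** 2 for a, b in zip(sel, centroids[c])))
        correct += pred == label
    return correct / len(y)


def ga_select(X, y, pop_size=20, generations=30, p_mut=0.05, penalty=0.01, seed=0):
    """Genetic algorithm over binary feature masks (True = keep the feature)."""
    rng = random.Random(seed)
    n = len(X[0])

    def fitness(mask):
        # Reward accuracy and lightly penalize the fraction of features kept.
        return nearest_centroid_accuracy(X, y, mask) - penalty * sum(mask) / n

    pop = [[rng.random() < 0.5 for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        parents = sorted(pop, key=fitness, reverse=True)[: pop_size // 2]  # truncation selection
        children = [p[:] for p in parents[:2]]  # elitism: carry the two best over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)  # one-point crossover
            child = a[:cut] + b[cut:]
            child = [(not g) if rng.random() < p_mut else g for g in child]  # bit-flip mutation
            children.append(child)
        pop = children
    return max(pop, key=fitness)


# Toy data: only feature 0 separates the classes.
X = [[0.0, 0.3, 0.9, 0.5], [0.1, 0.8, 0.1, 0.4], [0.05, 0.5, 0.6, 0.9],
     [1.0, 0.4, 0.2, 0.6], [0.9, 0.7, 0.8, 0.3], [0.95, 0.2, 0.5, 0.7]]
y = [0, 0, 0, 1, 1, 1]
best = ga_select(X, y)
```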

4.3.3 Hybrid feature fusion based identification

Although DL-based models have achieved good results in cervical cell classification, there is still considerable room for improvement. Hand-crafted features, especially those related to cell morphology, encode rich medical domain knowledge. Incorporating this knowledge into deep learning networks can guide the network’s attention toward diagnostically relevant information and further improve performance. Figure 9c shows a general example of combining DL-based features with manually derived cytological characteristics.
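
A morphology feature such as the nuclear-to-cytoplasmic (N/C) ratio can be computed from segmentation masks and appended to a deep feature vector. This is a minimal sketch assuming binary nucleus and whole-cell masks are available; the choice of hand-crafted features and the helper names are illustrative, not taken from any specific cited method.

```python
def area(mask):
    """Number of foreground pixels in a binary mask (list of rows of 0/1)."""
    return sum(sum(row) for row in mask)


def nc_ratio(nucleus_mask, cell_mask):
    """Nuclear-to-cytoplasmic area ratio; cytoplasm = whole cell minus nucleus."""
    nucleus = area(nucleus_mask)
    cytoplasm = area(cell_mask) - nucleus
    return nucleus / cytoplasm if cytoplasm > 0 else float("inf")


def hybrid_feature(deep_features, nucleus_mask, cell_mask):
    # Append hand-crafted morphology features to the CNN feature vector.
    handcrafted = [area(nucleus_mask), area(cell_mask), nc_ratio(nucleus_mask, cell_mask)]
    return list(deep_features) + handcrafted


cell = [[1] * 4 for _ in range(4)]
nucleus = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
feature = hybrid_feature([0.2, 0.7], nucleus, cell)  # hypothetical 2-D deep features
```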

Jia et al. (2020) proposed a novel deep learning-based framework called strong feature CNN-SVM. The Gray-Level Co-occurrence Matrix (GLCM) and Gabor filters were used to compute the strong features, which were fused with abstract features extracted by a CNN and then fed into an SVM for the final prediction. Experimental results on two independent datasets indicated the effectiveness of the strong feature CNN-SVM model in cervical cytology screening.
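
GLCM texture features count how often pairs of gray levels co-occur at a fixed pixel offset and summarize the resulting matrix. The sketch computes a symmetric, normalized GLCM for one offset and three standard statistics (contrast, angular second moment, homogeneity); the offset, number of gray levels, and choice of statistics are assumptions, and the Gabor features are not shown.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    h, w = len(image), len(image[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < h and 0 <= c2 < w:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count both directions to make the matrix symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """Contrast, angular second moment (ASM) and homogeneity of a normalized GLCM."""
    n = len(p)
    pairs = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in pairs),
        "asm": sum(p[i][j] ** 2 for i, j in pairs),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in pairs),
    }


checkerboard = glcm_features(glcm([[0, 1], [1, 0]], levels=2))
```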

Dong et al. (2020) proposed an innovative cell recognition algorithm that combines hand-crafted features with features extracted automatically by the Inception v3 network. To address the limited generality of manual feature extraction while retaining cervical cell domain knowledge, they extracted both deep and hand-crafted features and used a fully connected layer to fuse them. The authors also applied an image enhancement algorithm to reduce noise introduced during image acquisition and conversion, which improved overall performance. On the public Herlev dataset, the proposed method achieved accuracies of 98.23% for 2-class classification and 94.68% for 7-class classification.

Zhang et al. (2022) proposed a novel multi-domain hybrid deep learning framework (MDHDN) to classify cervical cells. It was the first work to apply cell spectra to cervical cell classification. MDHDN was a three-path cooperative framework: two subpaths used the VGG-19 network to extract deep features from the time and frequency domains, respectively, and the third subpath extracted and selected hand-crafted features. The final classification results were obtained through correlation analysis of the predictions of the three paths. On the Herlev dataset, MDHDN achieved accuracies of 98.7% for 2-class classification and 94.8% for 7-class classification. The framework also performed well on the public SIPaKMeD dataset and on the authors' in-house dataset, BJTU.
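
To make the time/frequency distinction concrete, the sketch converts a small grayscale image into its 2D discrete Fourier transform magnitude spectrum, one plausible input for a frequency-domain path. We do not know the exact spectral transform MDHDN uses, so treat this as an assumption; the direct O(N²M²) DFT is only suitable for tiny images, and a real pipeline would use an FFT.

```python
import cmath


def dft2_magnitude(image):
    """Magnitude spectrum |F(u, v)| of a small grayscale image via the direct 2D DFT."""
    h, w = len(image), len(image[0])
    spectrum = []
    for u in range(h):
        row = []
        for v in range(w):
            s = sum(
                image[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
                for y in range(h)
                for x in range(w)
            )
            row.append(abs(s))
        spectrum.append(row)
    return spectrum


spec = dft2_magnitude([[1, 2], [3, 4]])
# spec[0][0] is the DC term (sum of all pixels); higher (u, v) capture faster intensity changes.
```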

Yaman and Tuncer (2022) designed an exemplar pyramid deep feature extraction model for the classification of cervical cells. They fed Pap smear images at different resolutions into DarkNet19/DarkNet53 to obtain pyramid features. A Neighborhood Component Analysis (NCA) algorithm then selected the most discriminative features, and an SVM classifier performed the final classification. The method was validated on the SIPaKMeD and Mendeley LBC datasets, where it outperformed mainstream classification models including ResNet, DenseNet, InceptionV3, and Xception.
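
Multi-resolution inputs can be produced with a simple image pyramid, repeatedly halving resolution by 2×2 average pooling. This is a minimal sketch; the downsampling method is our assumption, the exemplar (patch) division is not modeled, and in the described model each level would be passed to a DarkNet backbone.

```python
def downsample2x(image):
    """Halve resolution by averaging non-overlapping 2x2 blocks (an odd trailing row/column is dropped)."""
    h, w = len(image) // 2 * 2, len(image[0]) // 2 * 2
    return [
        [(image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
         for c in range(0, w, 2)]
        for r in range(0, h, 2)
    ]


def image_pyramid(image, levels=3):
    """List of progressively coarser versions of `image`, starting with the original."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


img = [[r * 4 + c for c in range(4)] for r in range(4)]
pyr = image_pyramid(img, levels=3)  # 4x4 -> 2x2 -> 1x1
```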

Fig. 10 Multi-task feature fusion model for cervical cell classification (Qin et al. 2022)

Qin et al. (2022) presented a multi-task feature fusion model that performs binary and 5-class classification of cervical cells (see Fig. 10). The model consisted of a manual-feature fitting branch and a multi-task classification branch. They used CE-Net (Gu et al. 2019) to segment cervical cells so that hand-crafted features could be computed. Multiple discriminative hand-crafted features, including morphological features, integrated optical density, and texture features, were extracted and used in the manual-feature fitting branch to supply prior knowledge for more precise classification. They also employed noisy-label smoothing regularization and a supervised contrastive learning strategy for model training. On the SIPaKMeD dataset, the proposed method achieved accuracies of 98.96% and 98.67% for 2-class and 5-class classification, respectively, surpassing other state-of-the-art (SOTA) methods. It also achieved the best performance on the authors' self-built dataset.
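
The multi-task objective can be sketched as the sum of two cross-entropy terms, one for the binary head and one for the 5-class head, each computed against label-smoothed targets. Standard label smoothing is used here as a stand-in for the paper's noisy-label smoothing regularization; the smoothing factor and task weight are assumptions, and the supervised contrastive term and manual-feature branch are omitted.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def smoothed_cross_entropy(logits, target, epsilon=0.1):
    """Cross-entropy against a smoothed target: 1 - eps + eps/K on the true class, eps/K elsewhere."""
    k = len(logits)
    log_p = log_softmax(logits)
    q = [epsilon / k + (1 - epsilon if c == target else 0.0) for c in range(k)]
    return -sum(qc * lp for qc, lp in zip(q, log_p))


def multitask_loss(binary_logits, five_class_logits, binary_target, five_class_target,
                   weight=1.0, epsilon=0.1):
    """Binary-head loss plus a (hypothetically) weighted 5-class-head loss."""
    return (smoothed_cross_entropy(binary_logits, binary_target, epsilon)
            + weight * smoothed_cross_entropy(five_class_logits, five_class_target, epsilon))
```

With uninformative (all-zero) logits, the loss equals log 2 + log 5 regardless of ε, since the smoothed target still sums to one.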

4.3.4 Method analysis and summary

In this section, we have surveyed in detail the application of deep learning to cervical cell identification. As deep learning models continue to make breakthroughs in computer vision, many researchers have attempted to apply them to cervical cytology identification. In the early period (2016–2017), researchers used simple models with a few convolutional layers or classical networks such as AlexNet, VGG, and ResNet. Because cervical cytology datasets contain far fewer images than natural image datasets such as ImageNet, transfer learning provides a good parameter initialization for the cell identification task, which eases model training. Transfer learning reduced overfitting and improved the generalization ability of DL-based models for cervical cell identification. DeepPap (Zhang et al. 2017) is a typical successful example, achieving excellent performance on images from both sample preparation schemes, CPS and LBC. After the public SIPaKMeD dataset (Plissiti et al. 2018) was released in 2018, more options became available for experimentation. At present, Herlev and SIPaKMeD remain the two most widely used public datasets in the automated analysis of cervical cytology. Later work adapted the networks to better suit cervical cell identification instead of simply applying classical models (Chen et al. 2021; Khamparia et al. 2021). For instance, ensemble learning has proven effective in improving models, and multi-model ensemble based identification of cervical cells has been widely used (Rahaman et al. 2021; Manna et al. 2021; Diniz et al. 2021; Madhukar et al. 2022; Liu et al. 2022; Kundu and Chattopadhyay 2022). With the growing popularity of the self-attention mechanism and the Vision Transformer (ViT), CVM-Cervix (Liu et al. 2022) effectively integrates CNN and ViT modules to build a more powerful classification system.
CVM-Cervix has been extensively validated on multiple cervical cell datasets and even on a peripheral blood cell dataset for a similar task. In addition to model improvements, data augmentation and pre-processing methods can also enhance a model’s accuracy and generalization performance (Martinez-Mas et al. 2020; Yu et al. 2021).

To further improve performance for practical deployment, a more promising approach is to introduce new information into deep learning models beyond existing medical datasets (Xie et al. 2021). Experienced cytopathologists can usually provide fairly accurate diagnoses, so their knowledge can help deep learning models classify cervical cells. The most straightforward solution is to concatenate hand-crafted features with the features extracted by deep learning models, as hand-crafted features contain rich biomedical knowledge, especially regarding cell morphology (Jia et al. 2020; Dong et al. 2020; Zhang et al. 2022; Yaman and Tuncer 2022; Qin et al. 2022). Visual attention can also be used to simulate the regions doctors focus on during diagnosis (Yu et al. 2022; Su et al. 2021; Jiang et al. 2022). When deploying a model for large-scale screening programs, execution speed should also be a priority; accordingly, some researchers have used knowledge distillation (Gao et al. 2022; Chen et al. 2022) or designed lightweight modules (Wang et al. 2019) to improve the model's real-time performance. Future DL-based methods for cervical cell identification should be both accurate and efficient, and methods that combine stronger feature extractors, lightweight designs, and biomedical domain knowledge deserve further exploration.
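
Knowledge distillation trains a small student network to match a large teacher's softened predictions in addition to the ground-truth labels. The sketch shows the generic formulation of Hinton et al.; the temperature and mixing weight are assumptions, and the cited cervical cytology works may use different distillation objectives.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, target, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, target)."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    ce = -math.log(softmax(student_logits)[target])
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

The T² factor keeps the gradient scale of the soft-target term roughly comparable to the hard-label term as the temperature changes.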

4.4 Abnormal cell detection

Identifying thousands of cells in a specimen with a classification network alone is time-consuming and inefficient. Fast search and localization of suspicious abnormal cervical cells are therefore essential for cervical image analysis, and they in turn affect slide-level diagnosis in cervical cytology screening. Object detection models from computer vision, which simultaneously locate objects and predict their categories, have been well studied and applied to abnormal cervical cell detection.