Abstract

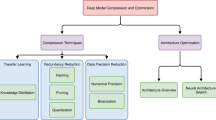

This paper analyzes in detail recent research advances in typical convolutional neural network (CNN) architectures and their optimization. It proposes a module-based approach to classifying CNN architectures, in order to accommodate newer networks whose multiple characteristics make them difficult to place under the original classification method. Six types of typical CNN architectures are analyzed and explained in detail through an analysis of the pros and cons of diverse network architectures and comparisons of their performance. The intrinsic characteristics of CNN architectures are also explored. Moreover, this paper provides a comprehensive classification of network compression and acceleration algorithms for architecture optimization, based on the mathematical principles of the various optimization algorithms. Finally, this paper analyzes the strategies of neural architecture search (NAS) algorithms, discusses the applications of CNNs, and sheds light on the challenges and prospects of current CNN architectures and their optimization. It explains the advantages brought by optimizing different types of network architecture and provides a basis for choosing appropriate CNNs in specific designs and applications.

References

Aghli N, Ribeiro E (2021) Combining weight pruning and knowledge distillation for CN compression. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3191–3198

Alzubaidi L, Al-Shamma O, Fadhel MA, Farhan L, Zhang J, Duan Y (2020) Optimizing the performance of breast cancer classification by employing the same domain transfer learning from hybrid deep convolutional neural network model. Electronics 9(3):445

Alzubaidi L, Zhang J, Humaidi AJ, Al-Dujaili A, Duan Y, Al-Shamma O, Santamaría J, Fadhel MA, Al-Amidie M, Farhan L (2021) Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data 8(1):1–74

Alzubaidi L, Al-Shamma O, Fadhel MA, Farhan L, Zhang J (2018) Classification of red blood cells in sickle cell anemia using deep convolutional neural network. In: International conference on intelligent systems design and applications. Springer, Cham, pp 550–559

Anwar S, Hwang K, Sung W (2017) Structured pruning of deep convolutional neural networks. ACM J Emerg Technol Comput Syst JETC) 13(3):1–18

Astrid M, Lee S-I (2017) Cp-decomposition with tensor power method for convolutional neural networks compression. In: 2017 IEEE international conference on Big Data and Smart Computing (BigComp). IEEE, pp 115–118

Ba LJ, Caruana R (2013) Do deep nets really need to be deep? Adv Neural Inf Process Syst 3:2654–2662

Bengio Y (2013) Deep learning of representations: Looking forward. In: International conference on statistical language and speech processing. Springer, Berlin, pp 1–37

Bochkovskiy A, Wang C-Y, Liao H-YM (2020) Yolov4: optimal speed and accuracy of object detection. arXiv preprint. arXiv:2004.10934

Bucilu C, Caruana R, Niculescu-Mizil A (2006) Model compression. In: Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining, pp 535–541

Chen X, Hsieh C-J (2020) Stabilizing differentiable architecture search via perturbation-based regularization. In: International conference on machine learning (PMLR), pp 1554–1565

Chen T, Goodfellow I, Shlens J (2015a) Net2net: Accelerating learning via knowledge transfer. Computer Science

Chen W, Wilson J, Tyree S, Weinberger K, Chen Y (2015b) Compressing neural networks with the hashing trick. In: International conference on machine learning (PMLR), pp 2285–2294

Chen X, Li Z, Yuan Y, Yu G, Shen J, Qi D (2020) State-aware tracker for real-time video object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision and pattern recognition, pp 9384–9393

Chen B, Li P, Li B, Lin C, Li C, Sun M, Yan J, Ouyang W (2021a) BN-NAS: neural architecture search with batch normalization. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 307–316

Chen M, Peng H, Fu J, Ling H (2021b) Autoformer: searching transformers for visual recognition. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 12270–12280

Chen Y, Dai X, Chen D, Liu M, Dong X, Yuan L, Liu Z (2021c) Mobile-former: bridging mobilenet and transformer. arXiv preprint. arXiv:2108.05895

Cheng A-C, Lin CH, Juan D-C, Wei W, Sun M (2020) InstaNAS: instance-aware neural architecture search. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 3577–3584

Chollet F (2017) Xception: deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1251–1258

Choudhary T, Mishra V, Goswami A, Sarangapani J (2020) A comprehensive survey on model compression and acceleration. Artif Intell Rev 53(7):5113–5155

Chu X, Zhou T, Zhang B, Li J (2020a) Fair DARTS: eliminating unfair advantages in differentiable architecture search. In: European conference on computer vision. Springer, Munich, pp 465–480

Chu X, Zhang B, Xu R (2020b) Multi-objective reinforced evolution in mobile neural architecture search. In: European European conference on computer vision. Springer, Munich, pp 99–113

Clevert D-A, Unterthiner T, Hochreiter S (2015) Fast and accurate deep network learning by exponential linear units (ELUS). arXiv preprint. arXiv:1511.07289

Costa-Pazo A, Bhattacharjee S, Vazquez-Fernandez E, Marcel S (2016) The replay-mobile face presentation-attack database. In: International conference of the Biometrics Special Interest Group (BIOSIG). IEEE, pp 1–7

Courbariaux M, Bengio Y, David J-P (2015) BinaryConnect: training deep neural networks with binary weights during propagations. In: Advances in neural information processing systems, pp 3123–3131

Courbariaux M, Hubara I, Soudry D, El-Yaniv R, Bengio Y (2016) Binarized neural networks: training deep neural networks with weights and activations constrained to +1 or −1. arXiv preprint. arXiv:1602.02830

Csáji BC et al (2001) Approximation with artificial neural networks, vol 24(48). MSc thesis, Faculty of Sciences, Eötvös Loránd University, p 7

Dai Z, Liu H, Le QV, Tan M (2021) Coatnet: marrying convolution and attention for all data sizes. arXiv preprint. arXiv:2106.04803

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: 2005 IEEE computer society conference on computer vision and pattern recognition (CVPR’05), vol 1. IEEE, pp 886–893

de Freitas Pereira T, Anjos A, De Martino JM, Marcel S (2013) Can face anti-spoofing countermeasures work in a real world scenario? In: Proceedings of the 2013 international conference on biometrics (ICB). IEEE, pp 1–8

Denil M, Shakibi B, Dinh L, Ranzato M, Freitas ND (2013) Predicting parameters in deep learning. University of British Columbia, Vancouver

Denton EL, Zaremba W, Bruna J, LeCun Y, Fergus R (2014) Exploiting linear structure within convolutional networks for efficient evaluation. In: Advances in neural information processing systems, pp 1269–1277

Devlin J, Chang M-W, Lee K, Toutanova K (2018) Bert: pre-training of deep bidirectional transformers for language understanding. arXiv preprint. arXiv:1810.04805

Dey N, Ren M, Dalca AV, Gerig G (2021) Generative adversarial registration for improved conditional deformable templates. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 3929–3941

Ding H, Chen K, Huo Q (2019a) Compressing CNN–DBLSTM models for ocr with teacher–student learning and Tucker decomposition. Pattern Recogn 96:106957

Ding R, Chin T-W, Liu Z, Marculescu D (2019b) Regularizing activation distribution for training binarized deep networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 11408–11417

Ding X, Hao T, Tan J, Liu J, Han J, Guo Y, Ding G (2021) ResRep: lossless CNN pruning via decoupling remembering and forgetting. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 4510–4520

Dong J-D, Cheng A-C, Juan D-C, Wei W, Sun M (2018) DPP-Net: deviceevice-aware progressive search for pareto-optimal neural architectures. In: Proceedings of the European conference on computer vision (ECCV), pp 517–531

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, et al (2020) An image is worth 16 × 16 words: transformers for image recognition at scale. arXiv preprint. arXiv:2010.11929

Elsken T, Metzen JH, Hutter F (2019) Neural architecture search: a survey. J Mach Learn Res 20(1):1997–2017

Erdogmus N, Marcel S (2014) Spoofing face recognition with 3d masks. IEEE Trans Inf Forensics Security 9(7):1084–1097

Fang J, Sun Y, Zhang Q, Li Y, Liu W, Wang X (2020) Densely connected search space for more flexible neural architecture search. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10628–10637

Fukushima K, Miyake S (1982) Neocognitron: a self-organizing neural network model for a mechanism of visual pattern recognition. In: Competition and cooperation in neural nets. Springer, Heidelberg, pp 267–285

Ge Z, Liu S, Wang F, Li Z, Sun J (2021) YOLOX: exceeding YOLO Series in 2021. arXiv preprint. arXiv:2107.08430

George A, Marcel S (2021) Cross modal focal loss for rgbd face anti-spoofing. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7882–7891

Fukushima K (1989) Neocognitron: a hierarchical neural network capable of visual pattern recognition. Neural Netw 1:119–130

Girshick R (2015) Fast R-CNN. In: Proceedings of the IEEE international conference on computer vision, pp 1440–1448

Girshick R, Donahue J, Darrell T, Malik J (2014) Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 580–587

Gong Y, Liu L, Ming Y, Bourdev L (2014) Compressing deep convolutional networks using vector quantization. Comput Sci+++

Graham B, El-Nouby A, Touvron H, Stock P, Joulin A, Jégou H, Douze M (2021) Levit: a vision transformer in convnet’s clothing for faster inference. arXiv preprint. arXiv:2104.01136

Gu J, Wang Z, Kuen J, Ma L, Shahroudy A, Shuai B, Liu T, Wang X, Wang G, Cai J et al (2018) Recent advances in convolutional neural networks. Pattern Recogn 77:354–377

Gulcehre C, Cho K, Pascanu R, Bengio Y (2014) Learned-norm pooling for deep feedforward and recurrent neural networks. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Springer, pp. 530–546

Guo Y, Yao A, Chen Y (2016) Dynamic network surgery for efficient DNNs. arXiv preprint. arXiv:1608.04493

Guo Z, Zhang X, Mu H, Heng W, Liu Z, Wei Y, Sun J (2020) Single path one-shot neural architecture search with uniform sampling. In: European conference on computer vision. Springer, Munich, pp 544–560

Guo J, Han K, Wang Y, Wu H, Chen X, Xu C, Xu C (2021) Distilling object detectors via decoupled features. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2154–2164

Han S, Mao H, Dally WJ (2015a) Deep compression: compressing deep neural networks with pruning, trained quantization and Huffman coding. Fiber 56(4):3–7

Han S, Pool J, Tran J, Dally WJ (2015b) Learning both weights and connections for efficient neural networks. MIT, Cambridge

Han D, Kim J, Kim J (2017) Deep pyramidal residual networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5927–5935

Hanson S, Pratt L (1988) Comparing biases for minimal network construction with back-propagation. Adv Neural Inf Process Syst 1:177–185

Hassibi B, Stork DG, Wolff G, Watanabe T (1994) Optimal brain surgeon: extensions and performance comparison. In: Cowan JD, Tesauro G, Alspector J (eds) Advances in neural information processing systems, vol 6. Morgan Kaufmann, San Mateo, pp 263–270

Håstad J, Goldmann M (1991) On the power of small-depth threshold circuits. Comput Complex 1(2):113–129

He K, Sun J (2015) Convolutional neural networks at constrained time cost. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5353–5360

He K, Zhang X, Ren S, Sun J (2015a) Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans Pattern Anal Mach Intell 37(9):1904–1916

He K, Zhang X, Ren S, Sun J (2015b) Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the IEEE international conference on computer vision, pp 1026–1034

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

He Y, Zhang X, Sun J (2017) Channel pruning for accelerating very deep neural networks. In: Proceedings of the IEEE international conference on computer vision, pp 1389–1397

Hinton GE, Salakhutdinov RR (2006) Reducing the dimensionality of data with neural networks. Science 313(5786):504–507

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. Comput Sci 14(7):38–39

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv preprint. arXiv:1704.04861

Howard A, Sandler M, Chu G, Chen L-C, Chen B, Tan M, Wang W, Zhu Y, Pang R, Vasudevan V et al (2019) Searching for mobilenetv3. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 1314–1324

Hua W, Zhou Y, De Sa C, Zhang Z, Suh GE (2019) Boosting the performance of cnn accelerators with dynamic fine-grained channel gating. In: Proceedings of the 52nd Annual IEEE/ACM international symposium on microarchitecture, pp 139–150

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4700–4708

Huang G, Liu S, Van der Maaten L, Weinberger KQ (2018) Condensenet: an efficient densenet using learned group convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2752–2761

Huang X, Xu J, Tai Y-W, Tang C-K (2020) Fast video object segmentation with temporal aggregation network and dynamic template matching. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8879–8889

Huang H, Zhang J, Shan H (2021) When age-invariant face recognition meets face age synthesis: a multi-task learning framework. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7282–7291

Hubel DH, Wiesel TN (1962) Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J Physiol 160(1):106–154

Hubel DH, Wiesel TN (2009) Republication of The Journal of Physiology (1959) 148, 574-591: Receptive fields of single neurones in the cat's striate cortex. 1959. J Physiol 587(Pt 12):2721–2732

Hu H, Peng R, Tai YW, Tang CK (2016) Network trimming: a data-driven neuron pruning approach towards efficient deep architectures. arXiv preprint. arXiv:1607.03250

Hu Q, Wang P, Cheng J (2018) From hashing to CNNs: training binary weight networks via hashing. In: 32nd AAAI conference on artificial intelligence, vol 32

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning (PMLR), pp 448–456

Jaderberg M, Vedaldi A, Zisserman A (2014) Speeding up convolutional neural networks with low rank expansions. Comput Sci 4(4):XIII

Jegou H, Douze M, Schmid C (2010) Product quantization for nearest neighbor search. IEEE Trans Pattern Anal Mach Intell 33(1):117–128

Ji G-P, Fu K, Wu Z, Fan D-P, Shen J, Shao L (2021) Full-duplex strategy for video object segmentation. In Proceedings of the IEEE/CVF international conference on computer vision, pp 4922–4933

Ji M, Shin S, Hwang S, Park G, Moon I-C (2021) Refine myself by teaching myself: Feature refinement via self-knowledge distillation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10664–10673

Jia Y et al (2013) An open source convolutional architecture for fast feature embedding. In: Proceedings of the 22nd ACM international conference on multimedia, Orlando, FL, pp 675–678

Jo G, Lee G, Shin D (2020) Exploring group sparsity using dynamic sparse training. In: 2020 IEEE international conference on consumer electronics-Asia (ICCE-Asia). IEEE, pp 1–2

Jocher G (2020) Yolo v5. https://github.com/ultralytics/yolov5 Accessed July 2020

Khan A, Sohail A, Zahoora U, Qureshi AS (2020) A survey of the recent architectures of deep convolutional neural networks. Artif Intell Rev 53(8):5455–5516

Kim YD, Park E, Yoo S, Choi T, Yang L, Shin D (2015) Compression of deep convolutional neural networks for fast and low power mobile applications. Comput Sci 71(2):576–584

Kim S-W, Kook H-K, Sun J-Y, Kang M-C, Ko S-J (2018) Parallel feature pyramid network for object detection. In: Proceedings of the European conference on computer vision (ECCV), pp 234–250

Kong T, Sun F, Tan C, Liu H, Huang W (2018) Deep feature pyramid reconfiguration for object detection. In: Proceedings of the European conference on computer vision (ECCV), pp 169–185

Kozyrskiy N, Phan A-H (2020) Cnn acceleration by low-rank approximation with quantized factors. arXiv preprint. arXiv:2006.08878

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

Lai Z, Lu E, Xie W (2020) Mast: a memory-augmented self-supervised tracker. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6479–6488

Lebedev V, Lempitsky V (2016) Fast convnets using group-wise brain damage. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2554–2564

Lebedev V, Ganin Y, Rakhuba M, Oseledets I, Lempitsky V (2014) Speeding-up convolutional neural networks using fine-tuned cp-decomposition. In: International conference on learning representations (ICLR Poster)

LeCun Y, Denker JS, Solla SA (1990) Optimal brain damage. In: Touretzky DS (ed) Advances in neural information processing systems. Morgan Kaufmann, San Francisco, pp 598–605

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

LeCun Y, Kavukcuoglu K, Farabet C (2010) Convolutional networks and applications in vision. In: Proceedings of 2010 IEEE international symposium on circuits and systems. IEEE, pp 253–256

Lee KH, Verma N (2013) A low-power processor with configurable embedded machine-learning accelerators for high-order and adaptive analysis of medical-sensor signals. IEEE J Solid-State Circuits 48(7):1625–1637

Lee H, Wu Y-H, Lin Y-S, Chien S-Y (2019) Convolutional neural network accelerator with vector quantization. In: 2019 IEEE international symposium on circuits and systems (ISCAS). IEEE, pp 1–5

Lee K, Kim H, Lee H, Shin D (2020) Flexible group-level pruning of deep neural networks for on-device machine learning. In: 2020 Design, automation & test in Europe cnference & exhibition (DATE). IEEE, pp 79–84

Lee D, Wang D, Yang Y, Deng L, Zhao G, Li G (2021) QTTNet: quantized tensor train neural networks for 3D object and video recognition. Neural Netw 141:420–432

Leng C, Dou Z, Li H, Zhu S, Jin R (2018) Extremely low bit neural network: Squeeze the last bit out with ADMM. In: Thirty-Second AAAI Conference on Artificial Intelligence

Li F, Zhang B, Liu B (2016) Ternary weight networks. arXiv preprint. arXiv:1605.04711

Li H, Kadav A, Durdanovic I, Samet H, Graf HP (2016) Pruning filters for efficient convnets. arXiv preprint. arXiv:1608.08710

Li L, Zhu J, Sun M-T (2019) A spectral clustering based filter-level pruning method for convolutional neural networks. IEICE Trans Inf Syst 102(12):2624–2627

Li C, Wang G, Wang B, Liang X, Li Z, Chang X (2021a) Dynamic slimmable network. In: Proceedings of the IEEE/CVF Conference on computer vision and pattern recognition, pp 8607–8617

Li Y, Ding W, Liu C, Zhang B, Guo G (2021b) TRQ: Ternary neural networks with residual quantization. In: Proceedings of the AAAI conference on artificial intelligence

Li Y, Gu S, Mayer C, Gool LV, Timofte R (2020) Group sparsity: The hinge between filter pruning and decomposition for network compression. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8018–8027

Lin M, Chen Q, Yan S (2013) Network in network. arXiv preprint. arXiv:1312.4400

Lin D, Talathi S, Annapureddy S (2016) Fixed point quantization of deep convolutional networks. In: International conference on machine learning (PMLR), pp 2849–2858

Lin X, Zhao C, Pan W (2017a) Towards accurate binary convolutional neural network. In: Advances in neural information processing systems

Lin T-Y, Dollár P, Girshick R, He K, Hariharan B, Belongie S (2017b) Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2117–2125

Lin R, Ko C-Y, He Z, Chen C, Cheng Y, Yu H, Chesi G, Wong N (2020) Hotcake: higher order tucker articulated kernels for deeper CNN compression. In: 2020 IEEE 15th international conference on solid-state & integrated circuit technology (ICSICT). IEEE, pp 1–4

Liu B, Wang M, Foroosh H, Tappen M, Pensky M (2015) Sparse convolutional neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 806–814

Liu W, Anguelov D, Erhan D, Szegedy C, Reed S, Fu C-Y, Berg AC (2016) SSD: single shot multibox detector. In: European conference on computer vision. Springer, Cham, pp 21–37

Liu Z, Li J, Shen Z, Huang G, Yan S, Zhang C (2017) Learning efficient convolutional networks through network slimming. In: Proceedings of the IEEE international conference on computer vision, pp 2736–2744

Liu C, Zoph B, Neumann M, Shlens J, Hua W, Li L-J, Fei-Fei L, Yuille A, Huang J, Murphy K (2018a) Progressive neural architecture search. In: Proceedings of the European conference on computer vision (ECCV), pp 19–34

Liu X, Pool J, Han S, Dally WJ (2018b) Efficient sparse-Winograd convolutional neural networks. arXiv preprint. arXiv:1802.06367

Liu C, Chen L-C, Schroff F, Adam H, Hua W, Yuille AL, Fei-Fei L (2019) Auto-deeplab: hierarchical neural architecture search for semantic image segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 82–92

Liu Z, Shen Z, Savvides M, Cheng K-T (2020a) Reactnet: towards precise binary neural network with generalized activation functions. In: European conference on computer vision. Springer, Berlin, pp 143–159

Liu J, Xu Z, Shi R, Cheung RC, So HK (2020b) Dynamic sparse training: Find efficient sparse network from scratch with trainable masked layers. arXiv preprint. arXiv:2005.06870

Liu D, Chen X, Fu J, Liu X (2021a) Pruning ternary quantization. arXiv preprint. arXiv:2107.10998

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021b) Swin transformer: hierarchical vision transformer using shifted windows. arXiv preprint. arXiv:2103.14030

Liu L, Zhang S, Kuang Z, Zhou A, Xue J-H, Wang X, Chen Y, Yang W, Liao Q, Zhang W (2021c) Group fisher pruning for practical network compression. In: International conference on machine learning (PMLR), pp 7021–7032

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Lu Z, Whalen I, Boddeti V, Dhebar Y, Deb K, Goodman E, Banzhaf W (2019) NSGA-Net: neural architecture search using multi-objective genetic algorithm. In: Proceedings of the genetic and evolutionary computation conference, pp 419–427

Luo P, Zhu Z, Liu Z, Wang X, Tang X (2016) Face model compression by distilling knowledge from neurons. In: Proceedings of the 30th AAAI conference on artificial intelligence. AAAI, Phoenix

Luo J-H, Wu J, Lin W (2017) Thinet: a filter level pruning method for deep neural network compression. In: Proceedings of the IEEE international conference on computer vision, pp 5058–5066

Ma N, Zhang X, Zheng H-T, Sun J (2018) Shufflenet v2: Practical guidelines for efficient cnn architecture design. In: Proceedings of the European conference on computer vision (ECCV), pp 116–131

Ma X, Guo F-M, Niu W, Lin X, Tang J, Ma K, Ren B, Wang Y (2020) PCONV: the missing but desirable sparsity in DNN weight pruning for real-time execution on mobile devices. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 5117–5124

Maas AL, Hannun AY, Ng AY, et al (2013) Rectifier nonlinearities improve neural network acoustic models. Proc ICML 30:3. Citeseer

Mao H, Han S, Pool J, Li W, Liu X, Wang Y, Dally WJ (2017) Exploring the regularity of sparse structure in convolutional neural networks. arXiv preprint. arXiv:1705.08922

Mao Y, Wang N, Zhou W, Li H (2021) Joint inductive and transductive learning for video object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 9670–9679

Maziarz K, Tan M, Khorlin A, Chang K-YS, Gesmundo A (2019) Evo-nas: Evolutionary-neural hybrid agent for architecture search

Mehta S, Rastegari M (2021) MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv preprint. arXiv:2110.02178

Michel G, Alaoui MA, Lebois A, Feriani A, Felhi M (2019) Dvolver: Efficient pareto-optimal neural network architecture search. arXiv preprint. arXiv:1902.01654

Mikolov T, Karafiát M, Burget L, Černocky J, Khudanpur S (2010) Eleventh annual conference of the international speech communication association

Montremerlo M, Beeker J, Bhat S, Dahlkamp H (2008) The stanford entry in the urban challenge. J Field Robot 7(9):468–492

Nair V, Hinton GE (2010) Rectified linear units improve restricted Boltzmann machines. In: International conference on international conference on machine learning (ICML)

Nguyen DT, Nguyen TN, Kim H, Lee H-J (2019) A high-throughput and power-efficient FPGA implementation of YOLO CNN for object detection. IEEE Trans Very Large Scale Integr (VLSI) Syst 27(8):1861–1873

Niu W, Ma X, Lin S, Wang S, Qian X, Lin X, Wang Y, Ren B (2020) PatDNN: achieving real-time DNN execution on mobile devices with pattern-based weight pruning. In: Proceedings of the 25th international conference on architectural support for programming languages and operating systems, pp 907–922

Novikov A, Podoprikhin D, Osokin A, Vetrov D (2015) Tensorizing neural networks. arXiv preprint. arXiv:1509.06569

Oseledets IV (2011) Tensor-train decomposition. SIAM J Sci Comput 33(5):2295–2317

Peng Z, Huang W, Gu S, Xie L, Wang Y, Jiao J, Ye Q (2021) Conformer: local features coupling global representations for visual recognition. arXiv preprint. arXiv:2105.03889

Perez-Rua J-M, Baccouche M, Pateux S (2018) Efficient progressive neural architecture search. arXiv preprint. arXiv:1808.00391

Punyani P, Gupta R, Kumar A (2020) Neural networks for facial age estimation: a survey on recent advances. Artif Intell Rev 53(5):3299–3347

Qin Z, Li Z, Zhang Z, Bao Y, Yu G, Peng Y, Sun J (2019) Thundernet: towards real-time generic object detection on mobile devices. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6718–6727

Radford A, Narasimhan K, Salimans T, Sutskever I (2018) Improving language understanding by generative pre-training

Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I et al (2019) Language models are unsupervised multitask learners. OpenAI Blog 1(8):9

Rastegari M, Ordonez V, Redmon J, Farhadi A (2016) XNOR-Net: Imagenet classification using binary convolutional neural networks. In: European conference on computer vision. Springer, Cham, pp 525–542

Razani R, Morin G, Sari E, Nia VP (2021) Adaptive binary-ternary quantization. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4613–4618

Real E, Aggarwal A, Huang Y, Le QV (2019) Regularized evolution for image classifier architecture search. In: Proceedings of the aaai conference on artificial intelligence, vol 33, pp 4780–4789

Redfern AJ, Zhu L, Newquist MK (2021) BCNN: a binary CNN with all matrix OPS quantized to 1 bit precision. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4604–4612

Redmon J, Farhadi A (2017) Yolo9000: better, faster, stronger. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 7263–7271

Redmon J, Farhadi A (2018) Yolov3: an incremental improvement. arXiv preprint. arXiv:1804.02767

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 779–788

Ren S, He K, Girshick R, Sun J (2015) Faster R-CNN: towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst 28:91–99

Romero A, Ballas N, Kahou SE, Chassang A, Gatta C, Bengio Y (2014) Fitnets: Hints for thin deep nets. rXiv preprint. arXiv:1412.6550

Roy AG, Siddiqui S, Pölsterl S, Navab N, Wachinger C (2020) squeeze & exciteguided few-shot segmentation of volumetric images. Med Image Anal 59:101587

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L-C (2018) Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4510–4520

Seong H, Hyun J, Kim E (2020) Kernelized memory network for video object segmentation. In: European conference on computer vision. Springer, Cham, pp 629–645

Sharma M, Markopoulos PP, Saber E, Asif MS, Prater-Bennette A (2021) Convolutional auto-encoder with tensor-train factorization. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 198–206

Simard PY, Steinkraus D, Platt JC et al (2003) Best practices for convolutional neural networks applied to visual document analysis. In: ICDAR, vol 3

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arxiv preprint. arXiv:1409.1556

Srinivas S, Babu RV (2015) Data-free parameter pruning for deep neural networks. Comput Sci. https://doi.org/10.5244/C.29.31

Srinivas S, Sarvadevabhatla RK, Mopuri KR, Prabhu N, Kruthiventi SS, Babu RV (2016) A taxonomy of deep convolutional neural nets for computer vision. Front Robotics AI 2:36

Srinivas A, Lin T-Y, Parmar N, Shlens J, Abbeel P, Vaswani A (2021) Bottleneck transformers for visual recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 16519–16529

Srivastava RK, Greff K, Schmidhuber J (2015) Training very deep networks. In: Advances in neural information processing systems

Sun W, Zhou A, Stuijk S, Wijnhoven R, Nelson AO, Corporaal H et al (2021) DominoSearch: find layer-wise fine-grained N:M sparse schemes from dense neural networks. In: Advances in neural information processing systems 34 (NeurIPS 2021)

Sundermeyer M, Schlüter R, Ney H (2012) Lstm neural networks for language modeling. In: 13th annual conference of the international speech communication association

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Szegedy C, Ioffe S, Vanhoucke V, Alemi AA (2017) Inception-v4, inception-resnet and the impact of residual connections on learning. In: 31st AAAI conference on artificial intelligence

Takahashi N, Mitsufuji Y (2021) Densely connected multi-dilated convolutional networks for dense prediction tasks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 993–1002

Tan M, Chen B, Pang R, Vasudevan V, Sandler M, Howard A, Le QV (2019) MnasNet: platform-aware neural architecture search for mobile. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2820–2828

Tang W, Hua G, Wang L (2017) How to train a compact binary neural network with high accuracy? In: 31st AAAI conference on artificial intelligence

Tang H, Liu X, Sun S, Yan X, Xie X (2021a) Recurrent mask refinement for few-shot medical image segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 3918–3928

Tang Y, Wang Y, Xu Y, Deng Y, Xu C, Tao D, Xu C (2021b) Manifold regularized dynamic network pruning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5018–5028

Theis L, Korshunova I, Tejani A, Huszár F (2018) Faster gaze prediction with dense networks and Fisher pruning. arXiv preprint. arXiv:1801.05787

Touvron H, Cord M, Douze M, Massa F, Sablayrolles A, Jégou H (2021) Training data-efficient image transformers and distillation through attention. In: International conference on machine learning (PMLR), pp 10347–10357

Uijlings JR, Van De Sande KE, Gevers T, Smeulders AW (2013) Selective search for object recognition. Int J Comput Vis 104(2):154–171

Vanhoucke V, Senior A, Mao MZ (2011) Improving the speed of neural networks on CPUs. In: Deep learning and unsupervised feature learning workshop

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. In: Advances in neural information processing systems, pp 5998–6008

Wang P, Cheng J (2016) Accelerating convolutional neural networks for mobile applications. In: Proceedings of the 24th ACM international conference on Multimedia, pp 541–545

Wang P, Cheng J (2017) Fixed-point factorized networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4012–4020

Wang P, Hu Q, Zhang Y, Zhang C, Liu Y, Cheng J (2018) Two-step quantization for low-bit neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4376–4384

Wang Z, Lin S, Xie J, Lin Y (2019a) Pruning blocks for CNN compression and acceleration via online ensemble distillation. IEEE Access 7:175703–175716

Wang W, Fu C, Guo J, Cai D, He X (2019b) COP: customized deep model compression via regularized correlation-based filter-level pruning. Neurocomputing 464:533–545

Wang Z, Lu J, Tao C, Zhou J, Tian Q (2019c) Learning channel-wise interactions for binary convolutional neural networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 568–577

Wang C-Y, Liao H-YM, Wu Y-H, Chen P-Y, Hsieh J-W, Yeh I-H (2020a) CSPNet: a new backbone that can enhance learning capability of CNN. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pp 390–391

Wang N, Zhou W, Li H (2020b) Contrastive transformation for self-supervised correspondence learning. arXiv preprint. arXiv:2012.05057

Wang D, Li M, Gong C, Chandra V (2021a) AttentiveNAS: improving neural architecture search via attentive sampling. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6418–6427

Wang Y, Xu Z, Wang X, Shen C, Cheng B, Shen H, Xia H (2021b) End-to-end video instance segmentation with transformers. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8741–8750

Wang D, Zhao G, Chen H, Liu Z, Deng L, Li G (2021c) Nonlinear tensor train format for deep neural network compression. Neural Netw 144:320–333

Wang C, Liu B, Liu L, Zhu Y, Hou J, Liu P, Li X (2021d) A review of deep learning used in the hyperspectral image analysis for agriculture. Artif Intell Rev 54:5205–5253

Wang Z, Xiao H, Lu J, Zhou J (2021e) Generalizable mixed-precision quantization via attribution rank preservation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 5291–5300

Wang W, Xie E, Li X, Fan D-P, Song K, Liang D, Lu T, Luo P, Shao L (2021f) Pyramid vision transformer: a versatile backbone for dense prediction without convolutions. arXiv preprint. arXiv:2102.12122

Wen W, Wu C, Wang Y, Chen Y, Li H (2016) Learning structured sparsity in deep neural networks. Adv Neural Inf Process Syst 29:2074–2082

Wen W, Xu C, Yan F, Wu C, Wang Y, Chen Y, Li H (2017) TernGrad: ternary gradients to reduce communication in distributed deep learning. arXiv preprint. arXiv:1705.07878

Wu J, Leng C, Wang Y, Hu Q, Cheng J (2016) Quantized convolutional neural networks for mobile devices. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4820–4828

Wu Y, Wu Y, Gong R, Lv Y, Chen K, Liang D, Hu X, Liu X, Yan J (2020a) Rotation consistent margin loss for efficient low-bit face recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6866–6876

Wu B, Xu C, Dai X, Wan A, Zhang P, Yan Z, Tomizuka M, Gonzalez J, Keutzer K, Vajda P (2020b) Visual transformers: token-based image representation and processing for computer vision. arXiv preprint. arXiv:2006.03677

Xie L, Yuille A (2017) Genetic CNN. In: Proceedings of the IEEE international conference on computer vision, pp 1379–1388

Xie S, Girshick R, Dollár P, Tu Z, He K (2017) Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1492–1500

Xu H (2020) PNFM: a filter level pruning method for CNN compression. In: Proceedings of the 3rd international conference on information technologies and electrical engineering, pp 49–54

Xu B, Wang N, Chen T, Li M (2015) Empirical evaluation of rectified activations in convolutional network. arXiv preprint. arXiv:1505.00853

Xu Y, Xie L, Zhang X, Chen X, Qi G-J, Tian Q, Xiong H (2019) PC-DARTS: partial channel connections for memory-efficient architecture search. arXiv preprint. arXiv:1907.05737

Xu Y, Wang Y, Han K, Tang Y, Jui S, Xu C, Xu C (2021) ReNAS: relativistic evaluation of neural architecture search. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4411–4420

Yamamoto K (2021) Learnable companding quantization for accurate low-bit neural networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5029–5038

Yang Z, Wang Y, Chen X, Shi B, Xu C, Xu C, Tian Q, Xu C (2020a) CARS: continuous evolution for efficient neural architecture search. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1829–1838

Yang Z, Wang Y, Han K, Xu C, Xu C, Tao D, Xu C (2020b) Searching for low-bit weights in quantized neural networks. arXiv preprint. arXiv:2009.08695

Yang Z, Wang Y, Chen X, Guo J, Zhang W, Xu C, Xu C, Tao D, Xu C (2021) HourNAS: extremely fast neural architecture search through an hourglass lens. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10896–10906

Yao L, Pi R, Xu H, Zhang W, Li Z, Zhang T (2021) G-DetKD: towards general distillation framework for object detectors via contrastive and semantic-guided feature imitation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 3591–3600

Ye J, Li G, Chen D, Yang H, Zhe S, Xu Z (2020) Block-term tensor neural networks. Neural Netw 130:11–21

Ye M, Kanski M, Yang D, Chang Q, Yan Z, Huang Q, Axel L, Metaxas D (2021) DeepTag: an unsupervised deep learning method for motion tracking on cardiac tagging magnetic resonance images. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7261–7271

Yuan L, Wang T, Zhang X, Tay FE, Jie Z, Liu W, Feng J (2020) Central similarity quantization for efficient image and video retrieval. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3083–3092

Yuan L, Chen Y, Wang T, Yu W, Shi Y, Jiang Z, Tay FE, Feng J, Yan S (2021) Tokens-to-token ViT: training vision transformers from scratch on ImageNet. arXiv preprint. arXiv:2101.11986

Zagoruyko S, Komodakis N (2016a) Paying more attention to attention: improving the performance of convolutional neural networks via attention transfer. arXiv preprint. arXiv:1612.03928

Zagoruyko S, Komodakis N (2016b) Wide residual networks. In: British machine vision conference

Zeiler MD, Fergus R (2014) Visualizing and understanding convolutional networks. In: European conference on computer vision. Springer, Cham, pp 818–833

Zhang X, Zou J, He K, Sun J (2015) Accelerating very deep convolutional networks for classification and detection. IEEE Trans Pattern Anal Mach Intell 38(10):1943–1955

Zhang Q, Zhang M, Chen T, Sun Z, Ma Y, Yu B (2019) Recent advances in convolutional neural network acceleration. Neurocomputing 323:37–51

Zhang T, Cheng H-P, Li Z, Yan F, Huang C, Li H, Chen Y (2020) AutoShrink: a topology-aware NAS for discovering efficient neural architecture. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 6829–6836

Zhang Z, Lu X, Cao G, Yang Y, Jiao L, Liu F (2021a) ViT-YOLO: transformer-based YOLO for object detection. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 2799–2808

Zhang C, Yuan G, Niu W, Tian J, Jin S, Zhuang D, Jiang Z, Wang Y, Ren B, Song SL et al (2021b) ClickTrain: efficient and accurate end-to-end deep learning training via fine-grained architecture-preserving pruning. In: Proceedings of the ACM international conference on supercomputing, pp 266–278

Zhao Q, Sheng T, Wang Y, Tang Z, Chen Y, Cai L, Ling H (2019) M2Det: a single-shot object detector based on multi-level feature pyramid network. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 9259–9266

Zhao T, Cao K, Yao J, Nogues I, Lu L, Huang L, Xiao J, Yin Z, Zhang L (2021a) 3D graph anatomy geometry-integrated network for pancreatic mass segmentation, diagnosis, and quantitative patient management. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 13743–13752

Zhao H, Zhou W, Chen D, Wei T, Zhang W, Yu N (2021b) Multi-attentional deepfake detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2185–2194

Zhong Z, Yang Z, Deng B, Yan J, Wu W, Shao J, Liu C-L (2020) BlockQNN: efficient block-wise neural network architecture generation. IEEE Trans Pattern Anal Mach Intell 43(7):2314–2328

Zhou H, Alvarez JM, Porikli F (2016) Less is more: towards compact CNNs. In: European conference on computer vision. Springer, Berlin, pp 662–677

Zhou M, Liu Y, Long Z, Chen L, Zhu C (2019) Tensor rank learning in CP decomposition via convolutional neural network. Signal Process Image Commun 73:12–21

Zhou S, Wang Y, Chen D, Chen J, Wang X, Wang C, Bu J (2021) Distilling holistic knowledge with graph neural networks. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 10387–10396

Zhou S, Wu Y, Ni Z, Zhou X, Wen H, Zou Y (2016) DoReFa-Net: training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv preprint. arXiv:1606.06160

Zhu C, Han S, Mao H, Dally WJ (2016) Trained ternary quantization. arXiv preprint. arXiv:1612.01064

Zhu X, Lyu S, Wang X, Zhao Q (2021a) TPH-YOLOv5: improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 2778–2788

Zhu J, Tang S, Chen D, Yu S, Liu Y, Rong M, Yang A, Wang X (2021b) Complementary relation contrastive distillation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9260–9269

Zoph B, Le QV (2016) Neural architecture search with reinforcement learning. arXiv preprint. arXiv:1611.01578

Zoph B, Vasudevan V, Shlens J, Le QV (2018) Learning transferable architectures for scalable image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8697–8710

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants No. 61573330 and 61720106009.

Cite this article

Cong, S., Zhou, Y. A review of convolutional neural network architectures and their optimizations. Artif Intell Rev 56, 1905–1969 (2023). https://doi.org/10.1007/s10462-022-10213-5

DOI: https://doi.org/10.1007/s10462-022-10213-5