Abstract

In recent years, deep neural networks have proved to be an essential element for developing intelligent solutions. They have achieved remarkable performance at the cost of deeper architectures and millions of parameters. Deploying these networks on resource-limited platforms such as smart cameras is therefore a challenging task. In this context, models need to be (i) accelerated and (ii) memory efficient without significantly compromising performance. Numerous works have sought to obtain smaller, faster, and still accurate models. This paper presents a survey of methods suitable for porting deep neural networks to resource-limited devices, especially smart cameras. These methods can be roughly divided into two main groups. In the first part, we present compression techniques, which are categorized into knowledge distillation, pruning, quantization, hashing, reduction of numerical precision, and binarization. In the second part, we focus on architecture optimization, introducing methods that enhance network structures as well as neural architecture search techniques. In each part, we describe the different methods and analyse them. Finally, we conclude the paper with a discussion of these methods.

References

Krizhevsky, A., Sutskever, I., Hinton, G.E. (2012). Imagenet classification with deep convolutional neural networks, NIPS.

LeCun, Y., Bengio, Y., Hinton, G. (2015). Deep learning. Nature, 521, 436–444.

Simonyan, K., & Zisserman, A. (2015). Very deep convolutional networks for large-scale Image recognition. arXiv:1409.1556v6.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A. (2015). Going deeper with convolutions. In IEEE conference on computer vision and pattern recognition (pp. 1–9).

He, K., & Sun, J. (2015). Convolutional neural networks at constrained time cost, 5353–5360.

Chuangxia, H., Hanfeng, K., Xiaohong, C., Fenghua, W. (2013). An lmi approach for dynamics of switched cellular neural networks with mixed delays, Abstract and Applied Analysis.

Chuangxia, H., Jie, C., Peng, W. (2016). Attractor and boundedness of switched stochastic cohen-grossberg neural networks, Discrete Dynamics in Nature and Society.

Cheng, Y., Wang, D., Zhou, P., Zhang, T., Member, S. (2018). A Survey of Model Compression and Acceleration for Deep Neural Networks, IEEE Signal Processing Magazine.

Cheng, J., Wang, P., Li, G., Hu, Q., Lu, H. (2018). Recent Advances in Efficient Computation of Deep Convolutional Neural Networks. Frontiers of Information Technology & Electronic Engineering, 19(1), 64–77.

Dauphin, Y.N., & Bengio, Y. (2013). Big neural networks waste capacity.

Ba, J., & Caruana, R. (2014). Do deep nets really need to be deep?, NIPS, 2654–2662.

BuciluÇž, C., Caruana, R., Niculescu-Mizil, A. (2006). Model compression, ACM, 535–541.

Hinton, G., Vinyals, O., Dean, J. (2014). Distilling the Knowledge in a Neural Network. NIPS 2014 Deep Learning Workshop, 14, 1–9.

Chen, Y., Wang, N., Zhang, Z. (2017). Darkrank: Accelerating deep metric learning via cross sample similarities transfer.

Huang, Z., & Wang, N. (2017). Like what you like: Knowledge distill via neuron selectivity transfer.

Aguilar, G., Ling, Y., Zhang, Y., Yao, B., Fan, X., Guo, C. (2020). Knowledge distillation from internal representations.

Lee, H., Hwang, S.J., Shin, J. (2020). Self-supervised label augmentation via input transformations, ICML.

Müller, R., Kornblith, S., Hinton, G. (2019). When does label smoothing help?. In Advances in Neural Information Processing Systems.

Weinberger, K., Dasgupta, A., Langford, J., Smola, A., Attenberg, J. (2009). Feature hashing for large scale multitask learning. In Proceedings of the 26th annual international conference on machine learning (pp. 1113–1120).

Chen, W., Wilson, J., Tyree, S., Weinberger, K., Chen, Y. (2015). Compressing neural networks with the hashing trick, 2285–2294.

Spring, R., & Shrivastava, A. (2017). Scalable and sustainable deep learning via randomized hashing, ACM, 445–454.

Ba, J., & Frey, B. (2013). Adaptive dropout for training deep neural networks, 3084–3092.

Gionis, A., Indyk, P., Motwani, R. (1999). Similarity Search in High Dimensions via Hashing (pp. 518–529).

Shinde, R., Goel, A., Gupta, P., Dutta, D. (2010). Similarity search and locality sensitive hashing using ternary content addressable memories. In Proceedings of the 2010 ACM SIGMOD International Conference on Management of data (pp. 375–386).

Sundaram, N., Turmukhametova, A., Satish, N., Mostak, T., Indyk, P., Madden, S., Dubey, P. (2013). Streaming similarity search over one billion tweets using parallel locality-sensitive hashing. In Proceedings of the VLDB Endowment (pp. 1930–1941).

Huang, Q., Feng, J., Zhang, Y., Fang, Q., Ng, W. (2015). Query-aware locality-sensitive hashing for approximate nearest neighbor search. In Proceedings of the VLDB Endowment (pp. 1–12).

Shrivastava, A., & Li, P. (2014). Asymmetric lsh (alsh) for sublinear time maximum inner product search (mips).

Cun, Y.L., Denker, J.S., Solla, S. (1990). Optimal Brain Damage, Advances in Neural Information Processing Systems. arXiv:1011.1669v3.

Hassibi, B., & Stork, D.G. (1993). Second order derivatives for network pruning: Optimal brain surgeon.

Han, S., Pool, J., Tran, J., Dally, W. (2015). Learning both weights and connections for efficient neural network. In Advances in neural information processing systems (pp. 1135–1143).

Molchanov, P., Tyree, S., Karras, T., Aila, T., Kautz, J. (2017). Pruning convolutional neural networks for resource efficient transfer learning, ICLR.

Anwar, S., Hwang, K., Sung, W. (2017). Structured pruning of deep convolutional neural networks. ACM Journal on Emerging Technologies in Computing Systems, 13(3), 1–18.

Zhou, H., Alvarez, J.M., Porikli, F. (2016). Less Is More: Towards Compact CNNs. In Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part IV Springer International Publishing Cham (pp. 662–677).

Alvarez, J.M., & Salzmann, M. (2016). Learning the number of neurons in deep networks, 2270–2278.

Lebedev, V., & Lempitsky, V. (2016). Fast convnets using group-wise brain damage.

Wen, W., Wu, C., Wang, Y., Chen, Y., Li, H. (2016). Learning structured sparsity in deep neural networks. NIPS, 2082–2090.

Li, H., Kadav, A., Durdanovic, I., Samet, H., Graf, H.P. (2017). Pruning filters for efficient ConvNets. arXiv:1608.08710.

Luo, J.-H., Wu, J., Lin, W. (2017). Thinet: A filter level pruning method for deep neural network compression, ICCV.

Liu, Z., Li, J., Shen, Z., Huang, G., Yan, S., Zhang, C. (2017). Learning Efficient Convolutional Networks through Network Slimming. arXiv:1708.06519.

Han, S., Mao, H., Dally, W.J. (2016). Deep Compression - Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv:1510.00149.

Yu, R., Li, A., Chen, C.-F., Lai, J.-H., Morariu, V.I., Han, X., Gao, M., Lin, C.-Y., Davis, L.S. (2018). NISP: pruning networks using neuron importance score propagation, CVPR.

Zhuang, Z., Tan, M., Zhuang, B., Liu, J., Guo, Y., Wu, Q., Huang, J., Zhu, J. (2018). Discrimination-aware channel pruning for deep neural networks. In Advances in Neural Information Processing Systems 31 (pp. 875–886).

He, Y., Liu, P., Wang, Z., Hu, Z., Yang, Y. (2019). Filter pruning via geometric median for deep convolutional neural networks acceleration, CVPR.

Lin, S., Ji, R., Yan, C., Zhang, B., Cao, L., Ye, Q., Huang, F., Doermann, D.S. (2019). Towards optimal structured CNN pruning via generative adversarial learning.

Sainath, T.N., Kingsbury, B., Sindhwani, V., Arisoy, E., Ramabhadran, B. (2013). Low-rank matrix factorization for Deep Neural Network training with high-dimensional output targets. In IEEE International Conference on Acoustics, Speech and Signal Processing (pp. 6655–6659).

Kolda, T.G, & Bader, B.W. (2009). Tensor decompositions and applications. SIAM Review, 51 (3), 455–500.

Cheng, Y., Wang, D., Zhou, P., Zhang, T. (2017). A survey of model compression and acceleration for deep neural networks, arXiv:1710.09282.

Lin, J., Rao, Y., Lu, J., Zhou, J. (2017). Runtime neural pruning.

Gong, Y., Liu, L., Yang, M., Bourdev, L. (2014). Compressing deep convolutional networks using vector quantization.

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P. (1998). Gradient-based learning applied to document recognition. In Proceedings of the IEEE (pp. 2278–2324).

Choi, Y., El-Khamy, M., Lee, J. (2017). Towards the limit of network quantization, ICLR.

Kingma, D., & Ba, J. (2014). Adam: A method for stochastic optimization.

Duchi, J., Hazan, E., Singer, Y. (2011). Adaptive subgradient methods for online learning and stochastic optimization. Journal of Machine Learning Research, 12(7), 2121–2159.

Zeiler, M.D. (2012). ADADELTA: An Adaptive Learning Rate Method 6. arXiv:1212.5701.

Hinton, G.E., Srivastava, N., Swersky, K. (2012). Lecture 6a- overview of mini-batch gradient descent.

Abadi, M. (2015). TensorFlow: Large-scale machine learning on heterogeneous systems. Software available from tensorflow.org.

Ahmad, J., Beers, J., Ciurus, M., Critz, R., Katz, M., Pereira, A., Pringle, M., Rames, J. (2017). ios 11 by tutorials: Learning the new ios apis with swift 4 1 Razeware LLC.

Farabet, C., Martini, B., Corda, B., Akselrod, P., Culurciello, E., Lecun, Y. (2011). NeuFlow: A runtime reconfigurable dataflow processor for vision IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops.

Gokhale, V., Jin, J., Dundar, A., Martini, B., Culurciello, E. (2014). A 240 G-ops/s mobile coprocessor for deep neural networks. IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, 696–701.

Vanhoucke, V., Senior, A., Mao, M.Z. (2011). Improving the speed of neural networks on cpus. In Deep Learning and Unsupervised Feature Learning Workshop, NIPS 2011.

Iwata, A., Yoshida, Y., Matsuda, S., Sato, Y., Suzumura, Y. (1989). An artificial neural network accelerator using general purpose 24 bit floating point digital signal processors. Proc. IJCNN, 18, 171–175.

Hammerstrom, D. (1990). A VLSI architecture for high-performance, low-cost, on-chip learning. In IJCNN International Joint Conference on Neural Networks (pp. 537–544).

Holt, J.L., & Hwang, J.N. (1993). Finite precision error analysis of neural network hardware implementations. IEEE Transactions on Computers, 42, 281–290.

Gupta, S., Agrawal, A., Gopalakrishnan, K., Narayanan, P. (2015). Deep Learning with Limited Numerical Precision. In International Conference on Machine Learning. arXiv:1502.02551(pp. 1737–1746).

Courbariaux, M., Bengio, Y., David, J.-P. (2014). Training deep neural networks with low precision multiplications, ICLR.

Williamson, D. (1991). Dynamically scaled fixed point arithmetic. In IEEE Pacific Rim Conference on Communications, Computers and Signal Processing Conference Proceedings (pp. 315–318).

Mamalet, F., Roux, S., Garcia, C. (2007). Real-time video convolutional face finder on embedded platforms, Eurasip Journal on Embedded Systems.

Roux, S., Mamalet, F., Garcia, C., Duffner, S. (2007). An embedded robust facial feature detector. In Proceedings of the 2007 IEEE Signal Processing Society Workshop, MLSP (pp. 170–175).

Courbariaux, M., Hubara, I., Soudry, D., El-Yaniv, R., Bengio, Y. (2016). Binarized neural networks: Training deep neural networks with weights and activations constrained to+ 1 or-1.

Lin, X., Zhao, C., Pan, W. (2017). Towards accurate binary convolutional neural network.

Srivastava, N. (2013). Improving Neural Networks with Dropout.

Srivastava, N., Hinton, G.E., Krizhevsky, A., Sutskever, I., Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting.

Courbariaux, M., Bengio, Y., David, J.-P. (2015). Binaryconnect: Training deep neural networks with binary weights during propagations.

Rastegari, M., Ordonez, V., Redmon, J., Farhadi, A. (2016). Xnor-net: Imagenet classification using binary convolutional neural networks Springer.

Newell, A., Yang, K., Deng, J. (2016). Stacked hourglass networks for human pose estimation Springer.

Bulat, A., & Tzimiropoulos, G. (2017). Binarized convolutional landmark localizers for human pose estimation and face alignment with limited resources.

Deng, L., Jiao, P., Pei, J., Wu, Z., Li, G. (2017). Gated xnor networks: Deep neural networks with ternary weights and activations under a unified discretization framework.

LeCun, Y. (1989). Generalization and network design strategies.

He, K., Zhang, X., Ren, S., Sun, J. (2016). Deep residual learning for image recognition.

Huang, G., Liu, Z., Weinberger, K.Q., Maaten, L.VD. (2017). Densely connected convolutional networks.

Iandola, F.N, Han, S., Moskewicz, M.W., Ashraf, K., Dally, W.J., Keutzer, K. (2016). Squeezenet: Alexnet-level accuracy with 50x fewer parameters and< 0.5 mb model size.

Nanfack, G., Elhassouny, A., Thami, R. O.H. (2017). Squeeze-segnet: A new fast deep convolutional neural network for semantic segmentation.

Badrinarayanan, V., Kendall, A., Cipolla, R. (2017). SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation, IEEE Transactions on Pattern Analysis and Machine Intelligence.

Brostow, G., Shotton, J., Fauqueur, J., Cipolla, R. (2008). Segmentation and recognition using structure from motion point clouds.

Mamalet, F., & Garcia, C. (2012). Simplifying convnets for fast learning.

Howard, A.G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., Andreetto, M., Adam, H. (2017). Mobilenets: Efficient convolutional neural networks for mobile vision applications.

Chollet, F. (2016). Xception: Deep learning with depthwise separable convolutions. arXiv:1610.02357.

Sifre, L., & Stephane, M. (2014). Rigid-Motion Scattering For Image Classification.

Zhang, X., Zhou, X., Lin, M., Sun, J. (2017). Shufflenet: An extremely efficient convolutional neural network for mobile devices.

Rosenblatt, F. (1962). Perceptrons and the Theory of Brain Mechanics.

Kohonen, T. (1982). Self-organized formation of topologically correct feature maps. Biological Cybernetics, 43(1), 59–69.

Willshaw, D.J., & Von Der Malsburg, C. (1976). How patterned neural connections can be set up by self-organization. Proceedings of the Royal Society of London. Series B, Biological Sciences, 194(1117), 431–445. http://www.jstor.org/stable/77138 .

Martinetz, T.M., Berkovich, S.G., Schulten, K.J. (1993). Neural-Gas Network for Vector Quantization and its Application to Time-Series Prediction, 4, 4.

Fritzke, B. (1995). A Growing Neural Gas Learns Topologies. Advances in Neural Information Processing Systems, 7, 625–632.

Fritzke, B. (1994). Supervised Learning with Growing Cell Structures.

Fritzke, B., & Bochum, R-. (1994). Fast learning with incremental RBF Networks 1 Introduction 2 Model description. Processing, 1(1), 2–5.

Fritzke, B. (1994). Growing cell structures-A self-organizing network for unsupervised and supervised learning. Neural Networks, 7(9), 1441–1460.

Montana, D.J., & Davis, L. (1989). Training feedforward neural networks using genetic algorithms. In Proceedings of the International Joint Conference on Artificial Intelligence (pp. 762–767).

Floreano, D., Dürr, P., Mattiussi, C. (2008). Neuroevolution: from architectures to learning. Evolutionary Intelligence, 1(1), 47–62.

Stanley, K.O., & Miikkulainen, R. (2002). Evolving neural networks through augmenting topologies, Evolutionary Computation.

Radcliffe, N.J. (1993). Genetic set recombination and its application to neural network topology optimisation. Neural Computing & Applications, 1(1), 67–90.

Thierens, D. (1996). Non-redundant genetic coding of neural networks.

Miikkulainen, R., Liang, J., Meyerson, E., Rawal, A., Fink, D., Francon, O., Raju, B., Shahrzad, H., Navruzyan, A., Duffy, N., Hodjat, B. (2017). Evolving Deep Neural Networks.

Elsken, T., Metzen, J.H., Hutter, F. (2018). Simple and efficient architecture search for convolutional neural networks. https://openreview.net/forum?id=SySaJ0xCZ,.

Cai, H., Chen, T., Zhang, W., Yu, Y., Wang, J. (2018). Efficient Architecture Search by Network Transformation.

Jin, H., Song, Q., & Hu, X. (2018). Efficient Neural Architecture Search with Network Morphism.

Cai, H., Yang, J., Zhang, W., Han, S., Yu, Y. (2018). Path-level network transformation for efficient architecture search. In Proceedings of the 35th International Conference on Machine Learning (pp. 678–687).

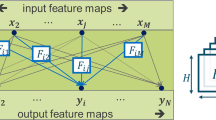

Saxena, S., & Verbeek, J. (2016). Convolutional Neural Fabrics.

Pham, H., Guan, M., Zoph, B., Le, Q., Dean, J. (2018). Efficient neural architecture search via parameters sharing. In Proceedings of the 35th International Conference on Machine Learning, Vol. 80.

Veniat, T., & Denoyer, L. (2018). Learning time/memory-efficient deep architectures with budgeted super networks. In Conference on Computer Vision and Pattern Recognition (pp. 3492–3500).

Zoph, B., Yuret, D., May, J., Knight, K. (2016). Transfer Learning for Low-Resource Neural Machine Translation.

Tan, M., Chen, B., Pang, R., Vasudevan, V., Le, Q.V. (2019). MnasNet: Platform-Aware Neural Architecture Search for Mobile.

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L.-C. (2018). Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation.

Frankle, J., & Carbin, M. (2019). The lottery ticket hypothesis: Finding sparse, trainable neural networks. In ICLR.

Acknowledgments

This work has been sponsored by the Auvergne Regional Council and the European funds of regional development (FEDER).

Cite this article

Berthelier, A., Chateau, T., Duffner, S. et al. Deep Model Compression and Architecture Optimization for Embedded Systems: A Survey. J Sign Process Syst 93, 863–878 (2021). https://doi.org/10.1007/s11265-020-01596-1
