Abstract

Persistent homology is a rigorous mathematical theory that provides a robust descriptor of data in the form of persistence diagrams (PDs) which are 2D multisets of points. Their variable size makes them, however, difficult to combine with typical machine learning workflows. In this paper we introduce persistence codebooks, a novel expressive and discriminative fixed-size vectorized representation of PDs that adapts to the inherent sparsity of persistence diagrams. To this end, we adapt bag-of-words, vectors of locally aggregated descriptors and Fischer vectors for the quantization of PDs. Persistence codebooks represent PDs in a convenient way for machine learning and statistical analysis and have a number of favorable practical and theoretical properties including 1-Wasserstein stability. We evaluate the presented representations on several heterogeneous datasets and show their (high) discriminative power. Our approach yields comparable—and partly even higher—performance in much less time than alternative approaches.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Topological data analysis (TDA) provides a powerful framework for the structural analysis of high-dimensional data. An important tool in TDA is persistent homology, PH (Edelsbrunner et al. 2002). It provides a comprehensive, multiscale summary of the underlying data’s shape and currently gains an increasing importance in data science (Ferri 2017). Recently, it has been successfully applied to computer vision problems, such as shape and texture analysis (Li et al. 2014; Reininghaus et al. 2015), 3D surface analysis (Adams et al. 2017; Zeppelzauer et al. 2017), 3D shape matching (Carrière et al. 2015), mesh segmentation (Skraba et al. 2010), and motion analysis (Vejdemo-Johansson et al. 2015). Further application areas include time series analysis (Seversky et al. 2016), music tagging (Liu et al. 2016) and social-network analysis (Hofer et al. 2017) as well as applications from the bio-medical domain, e.g. biomolecular analysis (Cang and Wei 2017), brain network analysis (Lee et al. 2012), protein investigation (Gameiro et al. 2015) and material science (Nakamura et al. 2015).

Persistent homology can be efficiently computed using various currently available tools (Bauer et al. 2017; Chen and Kerber 2011; De Silva et al. 2011; Dey et al. 2016; Edelsbrunner and Harer 2010; Maria et al. 2014). A basic introduction to PH is given in Sect. 2 and the more detailed one in the “Appendix”. The common representation of PH are persistence diagrams (PDs) which are multisets of points in \(\mathbb {R}^2\). Due to their variable size, which varies depending on the input data, PDs are not easy to integrate within common data analysis, statistics and machine learning workflows. To alleviate this problem, a number of kernel functions defined on PDs and vectorization methods for PDs have been introduced.

Kernel-based approaches have a strong theoretical background but in practice they often become inefficient when the number of training samples is large. As typically the entire kernel matrix must be computed explicitly (like in case of SVMs), this leads to roughly quadratic complexity in computation time and memory with respect to the size of the training set. Furthermore, vector-based approaches are limited to kernelized methods, such as SVM and kernel PCA. Vectorized representations, in contrast, are compatible with a much wider range of methods and do not suffer from complexity constraints of kernels. They, however, often lack in representational power, as they require the spatial quantization of the PDs, which is unsually non-adaptive and thus does not cope well with the sparseness of PDs.

In this work we present a novel adaptive representation of PDs which aims at combining the large representational power of kernel-based approaches with the general applicability of vectorized representations. To this end, we adapt the popular bag-of-words (BoW) encoding (McCallum et al. 1998; Sivic and Zisserman 2003), as well as its more comprehensive extensions, such as VLAD (Jégou et al. 2010) and Fisher vectors (Perronnin and Dance 2007) to cope with the inherent sparsity of PDs. The proposed persistent codebooks provide universally applicable fixed-sized feature vectors. They are, under mild assumptions, stable with respect to a standard metric in PDs and thus, also theoretically, built upon a solid basis. The presented method is to some extend a generalization of persistence images, PI (Adams et al. 2017), which adapts to the underlying data distribution. In contrast, PI samples the distribution in a regular grid (corresponding to an image), what often results in unnecessary codewords. Experiments show that the new representations achieve peak performance and even outperform numerous competitive methods while being more compact and requiring orders of magnitude less time.

This paper builds upon previous work of Zieliński et al. (2019). The additional contribution includes: (1) two new persistence codebook representations (PVLAD and PFV) building upon vectors of locally aggregated descriptors (VLAD) and Fisher vectors (FV); (2) the investigation of their stability; (3) the introduction of stable variant of PVLAD algorithm together with the proofs of its stability; (4) a significant number of additional experiments on an extended collection of datasets; and (5) an extended discussion of results.

The paper is structured as follows. Section 2 gives a basic introduction to PH and reviews related approaches. In Sect. 3 we introduce persistence codebooks and investigate their stability. Sections 4 and 5 present the experimental setup and results. We conclude the work in Sect. 6.

2 Background and related work

2.1 Background on persistent homology

In this section, we first introduce persistent homology, and then describe related state-of-the-art approaches, both kernel- and vectorization-based, that aim at making PH compatible with machine learning methods.

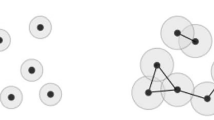

Under mild assumptions, persistent homology (PH) can be defined for a continuous function \(f :X \rightarrow \mathbb {R}\), where \(X \subset R^n\). Typically f is a distance function from a collection of points, or a scalar value function defined on a grid of points, but in principle it can be an arbitrary function that satisfies a tameness assumption specified below. Focusing on sub-level sets \(L_x = f^{-1}( (-\infty ,x] )\), we let x grow from \(-\infty\) to \(+\infty\). While this happens, we can observe a whole hierarchy of events. In dimension zero, connected components of \(L_x\) will be created and merged together. One dimensional cycles that are not bounded, or higher dimensional voids, will appear in \(L_x\) at critical points of f. The value of x on which a connected component, cycle or a higher dimensional void appears is refereed to as birth time. They will subsequently either become identical (up to a deformation) to other cycles and voids (created earlier), or they will be glued-in and become trivial. The value of x on which that happens is refereed to as death time. Every connected component, a cycle, or a higher dimensional void can, therefore, be characterized by a pair of numbers, b and d, its birth and death time. The difference between the death and the birth, \(p = d-b\), is the so-called persistence value. In this paper, we will use the birth-persistence pair [b, p] to encode the feature. The multi-set of birth-persistence pairs makes up a persistence diagram (PD). The set of all persistence diagrams will be denoted as \(\mathcal {D}\). Example PDs for three different input point clouds are shown in Fig. 1.

The persistence coordinate is often an indicator of whether a cycle is structurally relevant or more likely to be related to noise. This observation is justified by many stability theorems for persistence (Cohen-Steiner et al. 2007), which state that a small change in the space X, or in a function f, implies only a small change in the resulting persistence diagram. Consequently, points in the PD with low persistence can be removed by a small perturbation of the data; and therefore, are not considered stable features. Those stability results make PDs a robust tool in data analysis.

Throughout this paper we assume that the given function f is tame, i.e. it induces a finite number of birth-persistence points. There are various metrics on finite PDs. To define them, the finite diagrams have to be enriched with an infinite collection of points (b, 0), which represent features that are born and immediately die. Having the enriched PDs B and \(B'\) let us consider all possible matchings \(\eta : B \rightarrow B'\). The 1-Wasserstein distance is defined as:

In this paper, when considering stability of the representations, we will consider the stability with respect to 1-Wasserstein distance. A more in-depth introduction to PH is provided in “Appendix”.

The principle behind persistent codebooks on the example of the computational workflow of the persistence bag-of-words representation (PBoW): From the input data we compute PDs in dimension 1 in birth-persistence coordinates and combine them into one consolidated diagram (for the entire dataset). Next, a subset of points is obtained from this diagram by either a weighted or unweighted sub-sampling. Subsequently, we cluster the sub-sampled consolidated diagram to retrieve the codewords which will form our codebook. Finally, the points for each input PD are encoded by the codewords (BoW quantization). In this illustration a hard assignment of points to words (PBoW) is performed. The result is a codeword histogram for each input PD that represents how many points fall into which cluster of the codebook, i.e. codeword cardinalities. These codeword histograms are a compact and fixed-size vectorial representation. It is worth mentioning that while the hard assignment presented here gives the idea of the procedure, in practice we often employ soft assignment for stability reasons. Please, note further that the workflows for other persistent codebook encodings (e.g. based on VLAD or Fisher Vectors) are structurally similar, but partly use different codeword generation, quantization, and assignment schemes

2.2 Kernels and vectorized representations of PDs

Numerous kernel-based and vectorized approaches have been introduced to make PDs compatible with statistical analysis and machine learning methods. The goal of kernel-based approaches is to define dissimilarity measures (also known as kernel functions) on PDs to compare them, and thereby make them compatible with kernel-based machine learning methods, such as Support Vector Machines (SVMs), and kernel Principal Component Analysis (kPCA).

Li et al. (2014) combine the traditional bag-of-features (BoF) approach with PDs by using various distance functions between 0-dimensional PDs (bottleneck and Wasserstein distances for PDs, \(L^p\) distance functions for persistence landscapes of PDs) to generate kernels. On different datasets (SHREC 2010, TOSCA, hand gestures, Outex) they show that topological information is complementary to the information of traditional BoF. Reininghaus et al. (2015) propose a kernel for persistence diagrams by turning PDs into a continuous distribution by appropriate placement of Gaussian distributions in \(\mathbb {R}^2\). Subsequently, they define a kernel as a scalar product of the two corresponding distributions. They apply topological descriptors together with the novel kernel to shape retrieval and texture classification. Kusano et al. (2016) propose a persistence weighted Gaussian kernel, which employs the framework of kernel embedding of measures into reproducing kernel Hilbert spaces. Carrière et al. (2017) propose another kernel based on sliced approximation of the Wasserstein distance. The authors show that the kernel is not only stable, but also mimics bottleneck distances between PDs. They subsequently develop an approximation technique to reduce the kernel computation time. They apply it to 3D shape segmentation, texture classification, and orbit recognition in dynamical systems. Another approach for the representation of PDs are persistence landscapes, PL (Bubenik 2015). PL is a stable functional representation of a PD obtained from transforming it into a sequence of real-valued piece-wise linear functions. To compare two landscapes, the authors use standard \(L^p\) distance. This distance can be used to define a kernel function. Note that PL can also be transformed into a fixed-length vectorized representation by sampling the values of the landscape function. The authors, however, are not reporting results for vectorized PLs; therefore, we compare to kernels derived from PLs in our experiments. More recently, Le and Yamada (2018) proposed persistence Fisher kernel. It is based on a Fisher information distance between persistence diagrams and preserves some of the geometrical properties of the persistence diagrams space. Another approach, proposed by Som et al. (2018), embeds persistence diagrams into a Grassmann manifold, where PDs are compared using a geodesic distance.

Vectorized representations aim at deriving fixed-size encodings of PDs that can be used directly as input to current machine learning methods. One of the first attempt to vectorize PDs was presented by Aadcock et al. (2014). Given a collection of PDs \(D_1,\ldots ,D_n\), a vector characterizing \(D_i\) is obtained by taking the vector of matching distances between \(D_i\) and \(D_j\), for every \(j \in \{1,\ldots ,n\}\). A more recent approach, called persistence image, PI (Adams et al. 2017) is built upon earlier work on size functions (Donatini et al. 1998; Ferri et al. 1998). It maps a PD to a space of functions from \(\mathbb {R}^2\) to \(\mathbb {R}\) by taking a weighted sum of two dimensional Gaussian kernels placed in the points of PD. Subsequently, a discretization of the obtained function on a fixed grid of points provides the vectorization of PDs. Anirudh et al. (2016) propose an alternative approach based on the reconstruction of a certain Riemannian manifold (RM) based on PDs and its subsequent representation by a fixed-size vector. In Di Fabio and Ferri (2015), PDs are represented as the coefficients of a complex polynomial having points of PD as roots. Similarly, using a sequence of weighting functions, Wang et al. (2019) transform PDs \(D_1,\ldots ,D_n\) into vectors \(V_1,\ldots ,V_n\) and obtain a polynomial representation of \(D_i\) by taking a polynomial system having roots in the corresponding \(V_i\). Recently, as a continuation of this idea, the tropical algebra was used to construct a polynomial representation of diagrams (Kališnik 2019; Monod et al. 2019).

Recently, a third type of approach has been introduced, which aims at learning which points in the PD are of particular importance for the given task in a supervised manner by end-to-end learning (Hofer et al. 2017).

Overall, we can distinguish between approaches that learn the representation in a supervised or unsupervised manner. While supervised learned representations may better adapt to a specific task, the representations or kernels constructed in an unsupervised fashion bear less risk for overfitting because their construction is task agnostic. The proposed approach falls in the category of task agnostic representations and can be applied in supervised and unsupervised problem settings.

3 Persistence codebooks

In this section, we adapt the bag-of-words (BoW) model (McCallum et al. 1998; Sivic and Zisserman 2003) as well as its more comprehensive extensions, such as VLAD (Jégou et al. 2010) and Fisher vector (Perronnin and Dance 2007), introduced originally in text and image retrieval, for adaptive quantization of PDs into a fixed length vectorial representation. The idea behind BoW is to quantize variable length input data into a fixed-size representation by using a common dictionary, also called codebook of constant size. The codebook is generated from the input data in an unsupervised manner by extracting centers of clusters obtained from data clustering. The basic assumption behind BoW is that the clusters (i.e. codewords) capture the intrinsic structure of the data and, thereby represent an efficient vocabulary for the quantization of the data.

The overall approach of bag-of-words for persistence diagrams is visualized in Fig. 1. The input is a set of PDs extracted from all instances of a given dataset. First, all PDs are merged into one diagram. This consolidated diagram is then sub-sampled to reduce the influence of noise. In this paper, we consider two types of sub-sampling. A standard one which does not consider the persistence of the points, and one where points of higher persistence are more likely to be sampled, see Sect. 3.2 (we refer to those two types of sub-sampling as without and with weighting, respectively). From the (sub-sampled) consolidated diagram, the codebook C is generated using clustering. Given a codebook C, every input point P is encoded by assigning it to the nearest codeword from C. In traditional BoW this encoding leads to a codeword histogram, i.e. a histogram for which each codeword from C counts how many points from P are closest to this codeword. Further encodings investigated include vector of locally aggregated descriptors (VLAD) and Fisher vector (FV), see below.

For the proposed approaches, three important hyperparameters need to be identified: (1) the clustering algorithm used to generate the codebook, (2) the size of the codebook, i.e., the number of clusters, and (3) the type of proximity encoding which is used to obtain the final descriptors, i.e. hard and soft assignment. In this paper, we use k-means and Gaussian mixture models (GMM) for clustering. The codebook size is investigated empirically. In the following sections, we introduce persistent codebook approaches based on different quantizations and encodings, such as standard BoW, VLAD and FV. Consult Table 1 for an overview of representations introduced and evaluated in this paper.

For all approaches presented in these sections, we show if they are stable with respect to 1-Wasserstein distance. We would like to indicate that since the representations presented here are additive (consider the definition of additivity from Reininghaus et al. 2015), they are not stable for a p-Wasserstein distance for any \(p > 1\) as indicated in Theorem 3 in Reininghaus et al. (2015).

3.1 Persistence bag of words (PBoW)

Let us first consider a direct adaptation of BoW (Baeza-Yates et al. 1999; Sivic and Zisserman 2003) to PDs. Given a collection of persistence diagrams \(B_1 , B_2, \ldots , B_n\), they are consolidated into \(D = B_1 \cup B_2 \cup \ldots \cup B_n\) and a codebook of size N is obtained by using k-means clustering on D. Let \(\{\mu _i \in \mathbb {R}^2, i=1,\ldots ,N\}\) denote the centers of obtained clusters (the codewords). Moreover, for a PD \(B=\{x_t \in \mathbb {R}^2\}_{t=1}^T\), let us denote \(NN(x_t)\) as the index of the codeword nearest to \(x_t\), \(NN(x_t) = i |\ d(x_t,\mu _i) \le d(x_t,\mu _j)\) for all \(j \in \{1,\ldots ,N\}\). For every codeword \(\mu _i\), \(v^{PBoW}_i(B)=card\{x_t\in B\ |\ NN(x_t)=i\}\) captures the number of points from B, which are closer to \(\mu _i\) than to any other \(\mu _j\). Then the persistence bag of words (PBoW) is defined as a vector:

Subsequently, \(\mathbf{v} ^\mathbf{PBoW }(B)\) is normalized by taking the square root of each component (preserving the initial sign) and dividing it by the norm of the whole vector:

This is a standard normalization for BoW (Perronnin et al. 2010), which reduces the influence of outliers.

Remark

Let \(B,B'\in \mathcal {D}\) be persistence diagrams containing only finitely many off-diagonal points. The persistence bag of words, PBoW with N words is not stable with respect to 1-Wasserstein distance.

Proof

Let us assume that we have two clusters with centers \(\mu _1=(0,0),\mu _2 = (1,0)\in \mathbb {R}^2\), and PD B containing only one point \(x_1=(\frac{1}{2}+\epsilon ,0)\), for some small \(\epsilon >0\). Then, \(\mathbf{v} ^\mathbf{PBoW }(B)=[0, 1]\), because \(x_1\) is closer to \(\mu _2\) than \(\mu _1\). However, a small perturbation in B, e.g. by \(-2\epsilon\), changes the assignment of \(x_1\) from \(\mu _2\) to \(\mu _1\). In this case \(B'=\{ y_1=(\frac{1}{2}-\epsilon ,0) \}\) and \(\mathbf{v} ^\mathbf{PBoW }(B')=[1, 0]\). In order to be stable in 1-Wasserstein sense, PBoW should fulfill the following condition:

therefore \(C>1/\epsilon\). As \(\epsilon >0\) can be arbitrarily small, there does not exist a constant C that meets this condition. Hence, the direct adaption of BoW to PDs (PBoW) is not stable. \(\square\)

3.2 Weighted subsampling for codebook generation

Aside from being unstable, the straight-forward application of BoW to PD would neglect an important property of persistence diagrams, i.e. that points in a PD with higher persistence are typically considered more important than points with lower persistence. It is a consequence of a stability theorem, Edelsbrunner and Harer (2010) indicating that points with low persistence are more likely to originate from a noise than the points of high persistence.

In order to integrate this property into the codebook generation procedure, we perform k-means clustering on a subset of points obtained by a weighted sampling described below. This results in extended procedure of codebook generation which is as follows:

-

1.

Place all the persistence diagrams (or all diagrams of a certain dimension) on to a single consolidated persistence diagram D.

-

2.

Subsample n points from D in a way that points of higher persistence are more likely to be sampled. In the experiments presented in this paper we set it to \(n=10{,}000\).Footnote 1

-

3.

Perform k-means clustering on the obtained subset of n points to extract the centers of the clusters (the codewords).

For the weighted sampling of points from a persistence diagram we define a piecewise linear weighting function \(w_{a,b}: \mathbb {R}\rightarrow \mathbb {R}\) as:

and use it to weight second coordinates (persistence) of points in PD. In our experiments we set a and b to the persistence values corresponding to 0.05 and 0.95 quantiles of the persistence coordinate of the points in D. In the performed sub-sampling persistence points having longer values of the function w are more likely to be sampled.

We want to highlight that in this case we have selected a linear weighting with respect to persistence, i.e. the probability of sampling of a point is proportional to its persistence. It works well in the cases considered in this paper, however in the case of very noisy data with just a few dominant persistent points, the points of high persistence may not be sampled at all. In such case, we suggest to consider the weighting to be a higher degree polynomial or an exponential function to boost the probability of capturing the high persistence points.

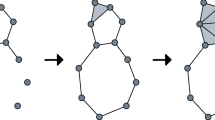

Please, note further that the sub-sampling does not directly enforce the points of the highest persistence to be automatically selected as the centers of clusters, but it makes the probability of such an event considerably larger. Examples of birth-persistence distributions with standard (unweighted) and weighted codebooks obtained with k-means and GMM are presented in Fig. 2. The unweighted clustering produces larger clusters, which are less adaptive to the strongest topological structures. At the same time, the weighted clustering yields a more adaptive codebook with a more uniform sampling of the space.

Codebook generation based on the consolidated PD with \(N=7\) codewords (top: k-means, bottom: GMM, left: no weighting, right: weighting). Using the language of computational geometry; one may tell that the cells in the top diagrams form a Voronoi diagram of the codewords. Equivalently all points in the same Voronoi cell have the same closest codeword. Weighting allows to sample more points of higher persistent from the diagram, representing more stable topological structures and yields more balanced cluster weights (even though there are many more points on the bottom of consolidated PD). Note that a cluster can (but does not have to) be created for a single high persistence point which is well separated from the others, as is the case here with the most persistent point (top-left quadrant)

3.3 Weighted codeword assignment for persistence bag of words (wPBoW)

The weighting function from Sect. 3.2 can be similarly used to weight the histogram assignments to give points with higher persistence more influence in the final representation. For this purpose, instead of counting the number of points, we sum up the weights of their persistent coordinates.

where \(B \in \mathcal {D}\). We will refer to this representation as weighted persistence bag of words (wPBoW) in the following. Similary to standard PBoW, wPBoW is not stable with respect to 1-Wasserstein distance. The couterexample is identical with the one in Sect. 3.1, when we assume that function \(w_{a,b}\) is identity.

3.4 Stable persistence bag of words (sPBoW)

After having integrated persistence-based weighting into codebook generation and also into histogram assignment, we aim at making the representation stable. To this end we adapt soft assignment of points to clusters and prove that such an approach guarantees stability of the resulting representation. Stable persistence bag of words (sPBoW) similarly to PBoW (and wPBoW) first consolidates PDs in the initial step of construction, and then generates a GMM based on the sub-sampled points (e.g. by expectation maximization algorithm Nasrabadi 2007). This approach was originally introduced by Van Gemert et al. (2008).

Let the parameters of the fitted GMM be \(\lambda =\{w_i,\mu _i,\Sigma _i, i=1,\ldots ,N\}\), where \(w_i\), \(\mu _i\) and \(\Sigma _i\) denote the weight, mean vector and covariance matrix of i-th Gaussian, and N denotes the number of Gaussians. Given a PD B, the stable PBoW is defined as:

where \(w_i>0\), \(\sum _{i=1}^N w_i=1\), and \(p_i(x_t|\lambda )\) is the likelihood that observation \(x_t\) was generated by Gaussian i:

The intuition behind this approach is to assign each point to all codewords, but with weight inversely proportional to the distance to the codewords.

Theorem

Let B and \(B'\) be persistence diagrams with a finite number of non-diagonal points. Stable persistence bag of words, sPBoW with N words is stable with respect to 1-Wasserstein distance between the diagrams, that is

where C is a constant.

Proof

Let \(\eta : B \rightarrow B'\) be the optimal matching in the definition of 1-Wasserstein distance. For a fixed \(i\in \{1,\ldots ,N\}\) we have:

As \(p_i: \mathbb {R}^2 \rightarrow \mathbb {R}\) are Lipschitz continuous with the Lipschitz constants \(L_i\), we get

\(\square\)

3.5 Persistence VLAD

Persistence VLAD (PVLAD) is based on vector of locally aggregated descriptors (VLAD) by Jégou et al. (2010), an extension of the bag-of-words concept, which accumulates the residual of each descriptor with respect to its assigned cluster. The first computation step is similar to PBoW: a codebook \(\{\mu _i \in \mathbb {R}^2, i=1..N\}\) is obtained from a training set using k-means clustering. Given a new PD B, each point \(x_t \in B\) is associated with its nearest codeword \(NN(x_t)\). In the second step, for each codeword \(\mu _i\), we compute a sum of differences between \(\mu _i\) and all \(x_t \in B\) for which \(NN(x_t) = i\). This results in:

The dimension of \(v^{PVLAD}_i\) equals 2 (differences on two coordinates), therefore \(\mathbf{v} ^{\mathbf{PVLAD }}\) is of size 2N. Intuitively, this vector should capture more information than PBoW alone, because it encodes the first order moments of the points assigned to a codeword instead of simply counting those points.

Similarly to PBoW, PVLAD is not stable with respect to 1-Wasserstein distance. Therefore, in Sect. 3.6, we propose to adapt a stable variant of VLAD, called soft VLAD.

Remark

Let \(B,B'\in \mathcal {D}\) be persistence diagrams containing only finite off-diagonal points. The persistence vector of locally aggregated descriptors, PVLAD with N words is not stable with respect to 1-Wasserstein distance.

Proof

Starting from two clusters with centers \(\mu _1=(0,0),\mu _2 = (1,0)\in \mathbb {R}^2\) and a persistence diagram \(B = \{ (\frac{1}{2}+\epsilon ,0) \}\), for small \(\epsilon >0\), we get \(v^{PVLAD}_1=[0,0]\) and \(v^{PVLAD}_2=[\epsilon -\frac{1}{2},0]\). However, similarly to the case of PBoW, a small perturbation of B, e.g. by \([-2\epsilon ,0]\) will change B to \(B' = \{ (\frac{1}{2}-\epsilon ,0) \}\) and the corresponding components of PVLADs to \([\frac{1}{2}-\epsilon ,0]\) and [0, 0]. Calculating the difference between \(\mathbf{v} ^{\mathbf{PVLAD }}(B)\) and \(\mathbf{v} ^{\mathbf{PVLAD }}(B')\):

In order to be stable in 1-Wasserstein sense, PVLAD should fulfill the following condition: \(1=|\mathbf{v} ^{\mathbf{PVLAD }}(B)-\mathbf{v} ^{\mathbf{PVLAD }}(B'))|<C|x_1-y_1|<2C\epsilon\), therefore \(C>\frac{1}{2\epsilon }\). As \(\epsilon >0\) can be arbitrarily small, and there does not exist a constant C that meets this condition. Therefore, PVLAD is not stable. \(\square\)

3.6 Stable persistence VLAD

Similarly to PBoW, the hard association with codewords can be replaced by soft association in VLAD (Jégou et al. 2012), to account for instability. To this end, we define stable persistence VLAD (sPVLAD) as follows:

where \(\gamma _i(x_t)\) is the soft assignment of descriptor \(x_t\) to ith Gaussian:

In the stability theorem for stable persistence VLAD (presented below) we assume that coordinates of the points in the considered persistence diagrams are limited to a certain compact subset of \(\mathbb {R}^2\). This limitation is crucial to prove the stability and it is a reasonable assumption in case of TDA. Moreover, this theorem is true for any \(\mathbb {R}^n\) (not only for \(\mathbb {R}^2\)).

Theorem

Let \(B,B'\in \mathcal {D}\) be persistence diagrams, such that \(B, B'\subset [a,b]\times [a,b]\) and let \(\lambda =\{w_i,\mu _i,\Sigma _i, i=1,\ldots ,N\}\) be a GMM with \(w_i\ne 0\). The stable persistence VLAD with N words is stable with respect to 1-Wasserstein distance, that is:

where C is a constant depending on \([a,b]\times [a,b]\).

Proof

Let us first consider the following difference:

where:

while \(M_i\) and \(L_i\) are the maximal value and Lipschitz constant of i-th Gaussian \(p_i\). Note that the constant \(C_1\) exists because diagrams are supported in a compact subset of \(\mathbb {R}^2\). Therefore, the Gaussians achieve a minimum value, which is bounded away from zero. When it comes to assignment (i), we simply put \(p_j(y_t)= p_j(y_t) - p_j(x_t) + p_j(x_t)\).

For a fixed i we can estimate:

where:

In (ii) we used the estimation (7) and the fact that \(\sup _y{\gamma _i(y)}=1\). The boundaries of \(\mathbb {R}^2\) compact subset allow to determine the maximal distance between diagram points and the Gaussian centers, which is used in (iii) to estimate \(C_3\). Note that \(C_4\) is independent of i, hence:

and

\(\square\)

We would like to point out that in the estimation of \(C_1\) and (iii) we use an assumption that diagrams are supported in the compact \(\mathbb {R}^2\) subset \([a,b] \times [a,b]\). As a result, if the support of a persistence diagram sequence diverges to \(\infty\), then the corresponding sequence of \(C_1\) also diverges to infinity. Therefore \(C_4\) is not a global constant and the persistence VLAD is not globally stable. We want to indicate, however, that for any practical case, the assumption about compact support of diagrams is always satisfied.

3.7 Persistence fisher vector

The idea of persistence Fisher vector (PFV) is based on Fisher vectors introduced by Perronnin and Dance (2007) and relies on the gradient of the log-likelihood with respect to the parameters of a Gaussian mixture model. Compared to the traditional BoW model, it captures first and second order moments. It can be extended to PDs as follows. Given a PD \(B\in \mathcal {D}\) we aim a characterizing it with a gradient vector derived from a generative probability model (obtained for all PDs used for codebook generation). This is similar to sPVLAD, however in case of PFV we compute not only the first order, but also the second order moments of the points assigned to a codeword (i.e. not only the gradient for \(\mu _i\) but also for \(\Sigma _i\)).

Let \(\mathcal {L}(B|\lambda )=log\,p(B|\lambda )\), where under the independence assumption:

where:

is the likelihood that point \(x_t\) was generated by the GMM.

Assuming that the covariance matrices are diagonal (for ease of calculation), the derivations \(\frac{\partial \mathcal {L}(B|\lambda )}{\partial \mu _i^d}\) and \(\frac{\partial \mathcal {L}(B|\lambda )}{\partial \sigma _i^d}\) (where \(\sigma _i^d = diag(\Sigma _i)\) and superscript d denotes the d-th dimension of a vector) can be effectively computed as (Perronnin and Dance 2007):

The gradient vector is just a concatenation of the partial derivatives with respect to all the parameters.

To normalize the dynamic range of the different dimensions of the gradient vectors, the diagonal of the Fisher information matrix \(F_\lambda\) is computed as:

applied to partial derivatives, resulting in the final definition of Fisher vector:

where \(f_{\mu _i^d}\) and \(f_{\sigma _i^d}\) are the corresponding terms on the diagonal of \(F_\lambda\). Vector \(\mathbf{v} ^{\mathbf{PFV }}\) is the concatenation of N pairs of components containing \(D=2\) values for every Gaussian component, therefore it is of size 4N.

Theorem

Let \(B,B'\in \mathcal {D}\) be persistence diagrams, such that \(B, B'\subset [a,b]\times [a,b]\). The persistence Fisher vector with N words is stable with respect to 1-Wasserstein distance, that is:

where C is a constant depending on \([a,b]\times [a,b]\).

Proof

Persistence Fisher Vector is a concatenation of the two components presented in Eqs. (9) and (10). In order to be stable, both components have to be stable with respect to 1-Wasserstein distance; therefore, we estimate them separately (we skip d superscript from the original notation for clarity).

The first FV component (10) can be estimated using the theorem about sPVLAD stability (8):

The second component (10) can be estimated as follows:

where \(D_1\) is an upper bound for \(\frac{(x_t-\mu _i)^2}{(\sigma _i)^3}-\frac{1}{\sigma _i}\) (iv), \(D_2\) is a bound for \(\frac{\gamma _i(y_t)}{(\sigma _i)^3}\) (v), both on the domain \([a,b] \times [a,b]\), and \(D_3 = D_1\ max\{L_i\} + D_2\). \(\square\)

Summing up the two estimates above, we conclude that persistence Fisher vector is stable with respect to 1-Wasserstein distance with a constant \(D_3+\frac{C_4}{(\sigma _i)^2}\), where \(D_3\) is defined above and \(C_4\) is defined in Sect. 3.6.

4 Experimental setup

To evaluate the proposed persistence BoW representations (PBoW, sPBoW, wPBoW, PVLAD, sPVLAD and PFV), we compare them with a number of state-of-the-art approaches including kernel-based methods and vectorized PD representations. The evaluation is performed on classification tasks involving different datasets representing heterogeneous data including, among others, 3D shapes, textures, and social media graphs. In the following, we describe the datasets used in our experiments, list the state-of-the-art approaches we compare with, and discuss the setup of the experiments.

4.1 Datasets

For the evaluation we incorporate various datasets which cover a wide range of different data types. Firstly, to provide a proof-of-concept, we evaluate all the approaches on a synthetically generated shape classes from Adams et al. (2017). Next, the approaches are evaluated on real-world datasets for 3D shape segmentation (Carrière et al. 2017), activity recognition in 3D motion capture data (Ali et al. 2007), geometry-informed material recognition (DeGol et al. 2016), classification of social network graphs (Hofer et al. 2017) and analysis of 3D surface texture (Zeppelzauer et al. 2017). The datasets are described in detail in the following sections. Where possible, we have used pre-computed PDs available with the datasets to foster reproducibility and comparability. As the computation times for some of the considered methods, especially for kernel-based approaches, do not scale well with the sizes of datasets, we have decided to randomly sub-sample some of the datasets (see details below).

4.1.1 Synthetic dataset

The first dataset is a synthetic dataset introduced by Adams et al. (2017). It consists of seven shape classes represented by point clouds in \(\mathbb {R}^3\) of the following geometrical objects: unit cube, circle of diameter one, sphere of diameter one, three clusters with centers randomly chosen from unit cube, hierarchical structure of three minor clusters within three major clusters (where the centers of the minor clusters are chosen as small perturbations from the major cluster centers), and a torus (see Fig. 3 for example shapes). Each point cloud is randomly perturbed by positioning a Gaussian distribution of standard deviation 0.1 at this point and sampling novel points from the distribution. Overall, this dataset contains 50 point clouds for each of the six classes, each containing 500 3D points. This gives 300 point clouds in total.

From each point cloud, we compute the PDs in dimension 1 for a Vietoris-Rips filtration for a radius parameter equal to the maximal distance between points in the point cloud.Footnote 2 We employ the approximation method proposed by Dey et al. (2016) and the SimBa implementation based on the work of Dayu Shi.Footnote 3

4.1.2 Geometry-informed material recognition dataset (GeoMat)

The GeoMat dataset provides geometry information (point clouds) as well as visual images of 19 different materials, such as “brick”, “grass” and “gravel” (DeGol et al. 2016). The GeoMat dataset contains patches sampled from larger photographs of surfaces from buildings and grounds. Each patch predominantly represents only one material, while each class consists of 600 images, each of size \(100\times 100\) pixels. Among them, there are pictures of different scales, i.e. \(100\times 100\), \(200\times 200\), \(400\times 400\) and \(800\times 800\).

For each patch, the dataset provides a depth image,Footnote 4 containing the local (fine-grained) surface texture and the global surface curvature. To filter out the global curvature, we transform each depth image into a point cloud in 3D space, consisting of 10, 000 points (every point represents one of the \(100\times 100\) pixels). Then, the resulting point cloud is rotated in a way that the Z axis represents depth and the global surface curvature is removed by fitting a second degree function (paraboloid) to the point cloud and subtracting the approximated values from the Z coordinates of the original points. The values of the Z-coordinates are then centered at 0 and the point cloud is projected back into a bitmap (depth map). Ultimately, PDs are computed by gray-scale filtration.

4.1.3 Social network graph datasets (Reddit)

To extend the range of different data types in our evaluation, we further incorporate graph-based datasets. To this end we employ the reddit-5k and reddit-12k datasets from Yanardag and Vishwanathan (2015), which contain discussion graphs from the reddit platform.Footnote 5 Nodes in the graphs correspond to users, and edges between users exist if one user has commented a posting of the other user. Different graphs are labeled by subreddits, which refer to different topics. The dataset reddit-5k contains overall 4999 graphs for 5 popular subreddits. The larger dataset, reddit-12k, contains 11929 graphs for 11 subreddits including topics like, e.g. “worldnews”, “videos” and “atheism”. The task for both datasets is to predict the subreddit (topic) from the input graph. For both datasets we use the pre-computed PDs available online.Footnote 6 They are obtained using filtration based on the vertex degree, that is, the number of edges incident to a vertex. More precisely, concerning vertices V, edges E, and vertex \(v \in V\), \(\deg (v)\) denote the number of edges in E that contain v. The filtration \(f(v)=\deg (v)\) on vertices can be then extended to edges.That yields nontrivial persistent homology in dimension zero and one, where all one-dimensional classes are essential.

4.1.4 3D surface texture dataset (PetroSurf3D)

A further dataset in our experiments is the recently released PetroSurf3D dataset, which contains high-resolution 3D surface reconstructions from the archaeological domain with a resolution of approximately 0.1 mm (Poier et al. 2017). The reconstructions represent 26 natural rock surfaces that exhibit human-made engravings (so-called rock art), and thereby exhibit complex 3D surface textures. The classification task for PetroSurf3D is to automatically predict which areas of the surface have been manipulated by tools (engravings) and which have not, i.e. there are two classes of surface topographies: engraved areas and the natural rock surface. Engraved areas represent approximately 19% of the data. For each surface, a precise pixel-accurate ground truth exists together with a depth map of the surface. The depth maps are analyzed in a patch-wise manner. Overall, there are 754.386 square patches to classify from 26 surfaces. In order to keep the number of training samples in a practical range, we randomly subsampled each surface. Overall, a balanced set (equal class cardinalities) of 600 patches per surface (\(26*600=15{,}600\) samples) is used in each repetition of an experiment.

For each patch, a PD is computed by grayscale filtration over the surface depth ranges (depth maps) as a basis for our experiments. To normalize the values for different shaped surfaces, the depth value range is z-standardized before filtration.

4.1.5 3D shape segmentation dataset

We further employ the 3D shape dataset from Chen et al. (2009) which was preprocessed by Carrière et al. (2015) for topological data analysis. The preprocessed dataset contains PDs for 5700 3D points from airplane models. Each point is assigned to one sub-part (segment) of an airplane, e.g., ’wing’, ’vertical stabilizer’ and ’horizontal stabilizer’. For our experiments we use the PDs computed by Carrière et al. (2015), available in their repository.Footnote 7 The PDs were generated by tracking topology evolution of a geodesic ball centered at the individual points of the input 3D model. Thereby, the radius grows from 0 to infinity. We focus on PDs of dimension 1 as the considered 3D shapes are connected. The task is to classify each point according to the segment it belongs to.

4.1.6 Motion capture dataset

Another real-world dataset represents 3-dimensional motion capture sequences of body joints (Ali et al. 2007). The dataset describes the following five activities: dancing, jumping, running, sitting and walking with 31, 14, 30, 35 and 48 instances, respectively. For each activity, a set of 19 3D motion trajectories (each corresponding to the motion of one tracked joint) is extracted. This corresponds to \(3 \cdot 19=57\) curves of individual (x, y, and z) components for which 57 separate PDs are computed by Ali et al. (2007). For our experiments we employ the original pre-computed PDs.Footnote 8

Experiments with this dataset are performed only on the vectorized representations. For kernel-based approaches we would have to compute 57 full kernel matrices, which is computationally expensive and would further require an adequate method for the combination of the kernels. For vectorized representations, the proceedure is much more efficient and straight-forward. We simply compute one vector per PD and concatenate them into a final feature vector for classification. For the BoW approaches, we compute 57 codebooks, one for each 3D motion component, and concatenate the corresponding codeword histograms. In case of the Riemannian manifold representation, RM Anirudh et al. (2016), we generate vectorial features by PCA and concatenate them as proposed by the authors. We also use original procedure for PI (Adams et al. 2017).

4.2 Compared approaches

We compare our bag-of-word approaches with both kernel-based techniques and vectorized representations. Kernel-based approaches include: 2-Wasserstein distance,Footnote 9 2Wd (Kerber et al. 2017.), the multi-scale kernel,Footnote 10 MK (Reininghaus et al. 2015), and sliced Wasserstein kernel,Footnote 11 SWK (Carrière et al. 2017). Furthermore, we employ the persistence landscapeFootnote 12\(^,\)Footnote 13 (PL) representation and generate a kernel matrix by the distance metric defined in Bubenik (2015). Vectorized PD representations include: persistence imageFootnote 14, PI Adams et al. (2017) and the Riemannian manifold approach,Footnote 15 RM Anirudh et al. (2016). The original PI implementation is rather inefficient since it takes into account the exact location of all birth-persistence points when calculating the values of PI. Therefore, we additionally perform experiments with an approximated unstable version of PI (referred to as approxPI in the following), which applies the Gaussian filter to 2D histogram of birth-persistence points.Footnote 16

We refrained from incorporating descriptors composed of simple topological statistics (such as minimum, maximum, and average of birth, death, and persistence) because, according to our previous research (Zeppelzauer et al. 2017), they lack sensitivity and are easily outperformed by more sophisticated approaches like persistence images.

4.3 Setup

For all datasets, except Reddit, in Sect. 4.1 we consider the PDs of dimension 1 as a common input (cycles) since they best express the internal structure in the data and yielded the most promising results in related works (Adams et al. 2017; Carrière et al. 2015). In case of Reddit database we use PDs of dimension 0 (connected components), since graphs are considered as 1-complex; thus, first dimensional homology generators never die. In the considered datasets no infinite intervals of dimension 1 occur. In cases where infinite intervals are present, there are different ways to proceed: (1) ignoring them, (2) substituting infinity with some (large) number or (3) building separate representations for finite and infinite intervals. In the general case, we recommend to compute persistence codebooks for PDs of all available dimensions separately and to combine them before classification.

The classification pipeline is as follows. For the kernel-based approaches, we take the PDs as input and compute the explicit kernel matrices for the training and test samples. Next, we train an SVM from the explicitly computed kernel-matrix and evaluate it on the test set. For the vectorized representations we compute the respective feature vectors from the PDs and feed them into a linear SVM for training. This procedure allows direct comparison between kernel-based approaches and vectorized representations.

For all datasets, we aim at solving a supervised classification task. In order to enhance the comparability with results obtained in original experiments, if available, we employ train/test divisions of samples based on the original procedures. To find optimal parameters for each evaluated approach, we run a grid search over their respective hyperparameters. The hyperparameters and their evaluated values for each approach are listed in Table 4 (for the kernel-based approaches) and in Table 5 (for the vectorized representations). The optimal parameters are highlighted in bold. For each parameter combination, we run a complete experiment including cross-validation on the training set to evaluate its performance. The number of repetitions for each parameter combination in the grid search depends on the dataset and is provided in Tables 2 and 3.

Our evaluation is partitioned into two sets of experiments. EXP-A uses all related approaches on a sub-sampled version of the datasets, while EXP-B operates only on the vectorized representations and uses larger datasets. The reason for this is that for the larger datasets in our study it is inconvenient to compute the explicit kernel matrices for the kernel-based approaches for computational reasons. Nevertheless, to still enable a fair comparison of all approaches, we sub-sample the datasets in EXP-A to reduce their size, i.e. by randomly selecting 30 (GeoMat), 100 (reddit-5k), 50 (reddit-12k) and 390 (PetroSurf3D) samples for each class. In EXP-B we solely evaluate the vectorized representations on the datasets as described in Sect. 4.1.

The evaluation procedure for each dataset is as follows. For the synthetic dataset, we subsample 80% of the samples as training data and use the remaining samples for testing. For GeoMat dataset, we use the original train/test partition with 400/200 samples per class. For Reddit experiments, we employ the original ratio of 90% graphs in the training set and the remaining 10% in the test set. For the 3D shape segmentation dataset, we employ the original 50/50 split. The train/test split ratio of the motion capture dataset is 80/20. For all datasets, we average the achieved performance of grid search over 5 repetitions of random selected training and test partitions. The only exception is PetroSurf3D, where we divided the set of all surfaces into 4 folds (resulting in four repetitions) according to original work of Poier et al. (2017).

Ultimately, we run a Wilcoxon signed-rank test (with p value of 0.1) on the results to identify which results significantly differ from the best obtained result, and which ones do not, and can thus be considered equally good. The comparison is performed between the best method (the one with the best mean accuracy) and all the other methods, for each experiment separately. The mean accuracy is obtained as an average over 5 runs with the same train/test divisions used by all compared methods. The number of repetitions is relatively small for statistical tests, therefore we set p value to 0.1.

The entire code of all experiments (implemented in Matlab) is available at https://github.com/bziiuj/pcodebooks. For external approaches, we use the publicly available implementations of the original authors. For clustering and bag-of-words encoding, we employ the VLFeat library (Vedaldi and Fulkerson 2008).

5 Results

Table 2 summarizes the results obtained in our experiments for EXP-A and EXP-B. For each combination of dataset and approach, we provide the obtained classification accuracy (including the standard deviation) and the processing time needed to construct the representations (excluding the time for classification). Note that for the synthetic dataset and the 3D shape segmentation dataset, results of EXP-A and EXP-B are equal, as no sub-sampling was needed to perform EXP-A.

In all experiments, codebook representations of persistence diagrams can compete with or even outperform the compared approaches. From EXP-A we further observe that vectorized representations (including the proposed ones), in general, perform better than kernel-based approaches. In case of the PetroSurf3D dataset, it is impossible to unambiguously determine the best method, since all approaches work equally well. For all other datasets, only the 2-Wasserstein distance and the sliced Wasserstein kernel attain accuracy comparable to vectorized approaches. Among the compared vectorized representations, PI in most cases outperforms RM and will thus serve as the primary approach for comparison with our approaches in subsequent sections. When comparing the stable vs. unstable variants of PBoW and PVLAD, we observe that PBoW in most cases outperforms its stable equivalent, especially for motion capture database in EXP-B, where sPBoW is \(\sim\)5% worse than the other methods. Opposite is the case for PVLAD, where sPVLAD in most cases yields a higher performance. Nevertheless, sPVLAD, for almost all datasets, is still significantly worse than any other codebook variant. The wPBoW works almost as well as non-weighted PBoW. Only in GeoMat and Reddit12K in EXP-B there exist visible differences in performance; however, even these results are very close (\(\sim\)1% and \(\sim\)1.5%). PFV variant seems to be the best approach, since in all experiments we can find it among the best performing methods. Overall, however, PBoW versus PFV, there is no clear winner.

Large differences exist in the processing times of the different approaches. The highest runtimes are obtained for the kernel-based approaches going up to 63k seconds for the PetroSurf3D dataset. The slowest kernel is 2Wd followed by MK, and approximately one order of magnitude faster PL and SWK. Note that computation complexity depends not only on a number of persistence diagrams, but is also highly affected by the average number of points per diagram. For the vectorized approaches, PI takes longest to compute. The runtimes, however, vary strongly, depending on the resolution of the employed PI (note that we have estimated the optimal parameters for each dataset by a grid search over all hyperparameters, see Tables 4 and 5). The RM representation is one to two magnitudes faster than PI.Footnote 17 The proposed approaches outperform almost all evaluated approaches in runtime for all datasets, both for EXP-A and EXP-B. The gain in runtime efficiency ranges from one to up to four orders of magnitude. For the largest dataset in the experiments (PetrSurf3D in EXP-B), the fastest (PBoW) and the slowest (sPBoW) codebook approaches are still 3 and 2 magnitudes faster than PI, while reaching comparable accuracy. Competitive runtimes can be achieved by the approximated version of PI (approxPI). However, this leads to a slight drop in performance for most datasets compared to the exact (and stable) implementation of PI. Moreover, we observed that approxPI performs much worse than our approaches in the case of 3D Shape Segm., the largest database of EXP-A. Detailed investigation on this performance revealed that it is primarily dominated by kernel computations (reported in EXP-A due to comparison with kernel methods). It is expected, as the size of approxPI in the experiment mentioned above is 4900, while the size of sPBoW is only 70. Concerning EXP-A, the codebook approaches are comparable in computing time, which is due to the small size of the datasets. EXP-B demonstrates well how the different approaches scale to larger data. It shows that PBoW scales best (is fastest) and still obtains optimal results in all but one case. It thus represents the best tradeoff between time efficiency and classification accuracy. See Sect. 5.2 for further discussion.

From our experiments we conclude that persistence codebooks are significantly faster than most approaches while achieving similar or even better performance level. This shows that the codebooks capture well the essential information contained in the PDs and important for the respective classification tasks. The variablity of runtimes between the different codebook variants is low compared to the other approaches. Thus, for the selection of the appropriate codebook approach for a given problem in practice, the runtime plays a secondary role.

In the following sections, we analyze selected aspects of the novel representations in greater detail, such as runtime, dependency on parameters and the scalability of the approach to large number of input PDs.

5.1 Accuracy versus codebook size and weighted sub-sampling

The most important parameter for codebook-based representations is the codebook size N, i.e. the number of clusters. There is no commonly agreed analytic method to estimate the optimal codebook size; thus, the estimation is usually performed empirically. To investigate the sensitivity of codebook approaches and their performance on the codebook size, each approach was evaluated for a sequence of N values (see Tables 4, 5). The results are presented in Fig. 4, both without (left column) and with weighted sub-sampling (right column) of the consolidated PD.

We can observe that all three variants of PBoW (PBoW, wPBoW and sPBoW) reach optimal performance at the level of about 50 words in a codebook (or earlier), a further increase of codebook size does not necessarily further improve its efficiency. In some cases, there is a slight improvement (synthetic data), in other cases performance goes down slightly (GeoMat) or remains a the same level. This shows that the codebook size is not rather insensitive parameter, once a certain minimum size is surpassed.

The remaining approaches (PVLAD, sPVLAD and PFV) show a general tendency to achieve their best performance early, with codebooks containing less than 40 words, and after that the accuracy drops substantially. The most prominent example of this behavior is depicted by the PVLAD method. It is caused by the fact that in cases where there is just a few clusters, it is simpler to capture well both the zeroth and the first moments, because clusters occupy large regions. However, once the number of clusters gets larger, cluster size shrinks and the assignment gets unstable.

Figure 4 further shows the effect of weighting during sub-sampling for codebook generation. This can be best observed from the performance curves of sPBoW, where the effect is largest. For 3D shapes, weighting leads to a dramatic improvement in accuracy. For for synthetic data, GeoMat and PetroSurf3D, there is also a moderate improvement in performance. Only for Reddit5K, weighted subsampling degrades performance. For other methods, however, the introduction of weighted subsampling does yield an improvement on the Reddit5K experiment (see e.g. PBoW, wPBoW and sPVLAD). In the majority of cases, weighted subsampling has a positive impact on performance. For PVLAD, weighted subsampling even seems to compensate for weaknesses of the representation in situations where codebook sizes are large.

Overall, we conclude, that optimal and universal choice for codebook size is about 50 in case of PBoW, wPBoW and sPBoW; while for the remaining methods, 20 words seems to be sufficient. These values are thus good starting points for hyperparameter optimization on other datasets. The choice of weighted vs. non-weighted subsampling seems to be dataset dependent. For 3D shapes, for example, strong performance gains are achieved. For the other datasets the trend is not so clear.

5.2 Accuracy versus time

Tables 2 and 3 show that our approaches achieve comparable performance on almost all of the evaluated datasets and partly even outperform the compared approaches. Additionally, they beat all methods in speed. While the above tables show results for the optimal parameters (from the classification accuracy point of view), we decided to analyze the relationship between accuracy and computation time. For this purpose, we use PI and approxPI as references for comparison, because they represent the strongest competitors (in the sense of accuracy) of the proposed representations.

Accuracy vs. time for codebook approaches compared with PI and approxPI (the strongest related representations) applied to all datasets from EXP-B. The size of colored points represents the size N of codebooks or the resolution r of PI and approxPI (evaluated values for N and r are those listed in Table 5). Note that the actual times of computation for the construction of the representations are presented. Codebooks and approxPI were computed on 1 CPU, while PI was constructed by using 8 CPUs in parallel. Moreover, SVM training and prediction time was not taken into consideration. This would further increase computational times, especially for PI and approxPI due to their larger dimension. The bottom-right plot shows results for codebook approaches and approxPI (but skipping PI) in the case of GeoMat dataset for a narrower range of x-axis (for better visibility)

In Fig. 5, we plot accuracy vs. time for the proposed approaches and PI for all datasets from EXP-B. We decided to focus on EXP-B here, because it operates on larger datasets than EXP-A and is thus better suited to study runtime efficienty. We vary the parameters with most influence placed on runtime (codebook size for persistent codebooks as well as the resolution of PI and approxPI) according to the values provided in Table 5. This directly influences the output dimension of the representation and is reflected by the area of the circles in Fig. 5, i.e. larger diameter means higher dimension. Note that experiments with codebooks and approxPI were performed on 1 CPU, while experiments on PI were performed in parallel on 8 CPUs. Therefore, the total runtime differences are in fact even larger than depicted. For more compact visualization (and avoiding a logarithmic scale which would compress too much), we decided not to take the number of CPUs into account for plotting. We can see clearly that the runtime of PI is always significally larger than any codebook representation. The accuracy obtained varies. For all datasets the performance level of PI is reached (or even superseeded) much quicker. In the case of GeoMat dataset, codebooks clearly outperform PI (while consuming much less time); and in case of the other experiments, they quickly achieve a similar performance level. The computational cost of achieving a higher performance with PI is over-proportionally high, while the performance gain is actually rather limited (approx. \(+1\%\)). Interestingly, approxPI strongly outperforms PI and can compete well with our representations in terms of runtime. The computation time of approxPI is in the same order of magnitude as that of the persistence codebook representation. However, the performance is in most cases lower as can be seen in Fig. 5 where pink dots represent approxPI. Note that for better visibility, we provide a wider and a narrower range of x-axis for the performance comparison of GeoMat dataset (last row of Fig. 5) because the latter allows easier comparison between the codebook approaches. Among our methods, PBoW is the fastest. We observe that runtimes of PBoW, wPBoW and PVLAD are almost not affected by codebook size. The other approaches, i.e. sPBoW, sPVLAD and PFV, that involve the computation of Gaussians, clearly require much more time when codebook size is increased. However, as observed before, larger codebook sizes are not necessarily required to obtain good accuracy, which mitigates the situation. approxPI is equally fast as PBoW but is not able to achieve the same level of performance.

5.3 Time versus dataset size

To investigate the runtime behavior of the proposed approaches in more detail, we evaluate how they scale to increasing dataset sizes (i.e. increasing numbers of input PDs). To this end, we employ the largest dataset in our experiments (PetroSurf3D) and randomly sample different numbers of PDs, starting from 1000 to 10, 000 in steps of 1000. To get a detailed breakdown of computation time, we separately measure the time needed for codebook generation, histogram assignment, and classification. The computation of the PDs is the same for all approaches, and thus is not included in this breakdown.

From the results presented in Fig. 6, we conclude that runtime grows almost linearly with dataset size. For the approaches without weighted subsampling (upper part in Fig. 6), most of the computation time is spent on histogram assignment and classification. Histogram assignment takes more time for more complex encoding methods, such as PVLAD and PFV. In case of PBoW, histogram assignment is particularly fast because of k-d trees being used (Bentley 1975). For sPBoW, Gaussian likelihood has to be computed, which slows down the computation. Assignment time, however, grows linearly with dataset size. Classification time takes the major part for PVLAD and PFV. This is due to the fact that the computational complexity of both, primal and dual SVM optimization, depends on dimensionality (Chapelle 2007), which is higher in case of PVLAD and PFV. The distribution of computation times is similar for the persistence codebook approaches with weighted subsampling (lower part in Fig. 6).

Time versus dataset size for all proposed persistent bag-of-words approaches (all with codebook size \(N=50\)). We measure the time needed for codebook generation (blue, bottom), histogram assignment (orange, middle), and classification (green, top), separately. Every consecutive bar represents an increasingly growing number of samples, from 1000, 2000, ..., 10000. Upper figure shows results for unweighted methods, while those for the weighted versions are presented in the bottom. (Color figure online)

5.4 Qualitative analysis

In this section, we investigate PBoW with a special focus on its discriminative abilities. For this purpose, we employ the synthetic dataset as a proof-of-concept and GeoMat (for which we outperform other representations by a large margin) to investigate how this performance increase is achieved by PBoW compared to related approaches.

5.4.1 Synthetic dataset

We compute PBoW with \(N=20\) clusters for the synthetic dataset and visually analyze the codeword histograms obtained by (hard) assignment. To this end, for each of the six shape classes, we compute the average codebook histogram (over all samples of each class) to obtain one representative PBoW vector per class. The averaged PBoW histograms for all classes are presented in Fig. 7. Instead of only providing the histograms themselves, for each codeword of the histogram we plot the corresponding cluster center as a circle in the original birth-persistence domain and encode the number of assigned codeworks (the actual values of the histograms) in the area of the circles, i.e. the larger the count for a cluster, the larger the circle. The advantage of this representation is that the spatial distribution of the codewords in the PD is preserved.

Average codebook histograms computed for each of the six shape classes of the synthetic dataset. The cluster center of each codeword is presented as a circle in the birth-persistence domain. The area of the circles reflects the histogram values of the specific class. For all classes, the same codebook (same clustering) is employed; thus, dot locations are the same on all plots. The differences between the circles reflect the class differences

From Fig. 7 we can see that, except for the classes “random cloud” and “sphere” (which are difficult to differentiate), all classes generate strongly different cluster distributions. Class “circle”, for example, uniquely activates four clusters with strong persistence (top-left corner) and the “torus” class distributes its corresponding code words across a large number of clusters representing less persistent components.

Figure 7 further illustrates an important property of persistence bag-of-words, namely its sparse nature. More specifically, areas with no points in the consolidated persistence diagram will contain no codewords (clusters). In Fig. 7, for example, no codeword is obtained in the upper-right quadrant of the diagram, since no components are located there for the underlying data. Therefore, these unimportant areas are neglected and not encoded into the final representation. This not only reduces the dimension of the final representation, but further makes the representation adaptive to the underlying data. This in turn increases the information density in the obtained representation.

5.4.2 GeoMat dataset

We further investigate the performance on the GeoMat dataset to explain why (s)PBoW outperforms PI, approxPI and RM by such a large margin (see Table 2). To this end, we generate confusion matrices for PI and PBoW (see Fig. 8) to investigate their discriminative abilities. The matrices show that PBoW, for example, achieves better discrimination between classes “cement smooth” and “concrete cast-in-place” (i.e. classes 4 and 5). Average PBoW histograms for those classes are shown in Fig. 9. The histograms are on the first sight similar (upper row in Fig. 9). However, by zooming-in towards the birth-persistence plane in Fig. 9 (bottom row), differences become better visible. The plots in the center illustrate the difference between the class distributions (red color means left class is stronger, blue means right class is stronger for this cluster). The classes differ by fine-grained spatial differences. The set of three blue points around birth time of 0 (which are characteristic for class “concrete cast-in-place”) surrounded by red points (which are characteristic for class “cement smooth”) illustrates this well (see lower central plot). For the discrimination of these two classes, a particularly fine-grained codebook with many clusters is needed. PI (and approxPI) have problems with such fine-grained structures, because due to its limited resolution, all topological components in the most discriminative area would most likely fall into one PI-pixel. Therefore, an extraordinary high resolution would be necessary to capture the discriminative patterns between those two classes. The bag-of-words model makes our approaches independent of the resolution and enables to capture even fine differences adaptively and in an unsupervised way. In Fig. 10 we show a similar comparison for classes “brick” and “concrete cast-in-place” (i.e. classes 2 and 5).

Comparison of averaged PBoW histograms for class “cement smooth” (left, red) and “concrete cast-in-place” (right, blue) from GeoMat dataset (top row: total view; bottom row is zoomed in). The plot in the center shows the difference between the classes, where red color means that the left class has stronger support for this cluster and blue means that the right class has stronger support. The classes differ by fine-grained spatial differences, which are not distinguishable in other vectorized representations. (Color figure online)

Comparison of averaged PBoW histograms for classes “brick” (left, red) and “concrete cast-in-place” (right, blue) from GeoMat dataset (top row: total view; 2nd row: zoomed in view; 3rd row: even further zoomed in view). The plot in the center shows the difference between the classes, where red color means that the left class has stronger support for this cluster and blue means that the right class has stronger support. The classes differ by fine-grained spatial differences, which are not distinguishable in other vectorized representations. (Color figure online)

6 Conclusion

We have introduced the concept of persistence codebooks, a novel fixed-length vectorial representation for persistence diagrams. Persistence codebooks employ bag-of-words encodings to quantize the persistence diagram into a vectorized representation. We propose different types of encodings (based on traditional bag-of-words, VLAD and Fisher Vectors), investigate their theoretic properties, such as their stability with respect to 1-Wasserstein, and introduce robust variants of the representations. Experiments on seven heterogeneous datasets show that they consistently achieve comparable performance to related methods, and partly even outperform them, with significantly shorter computation time. Though there is no overall winner among the introduced representations, we conclude that PFV is a powerful representation, as it achieves peak performance over all evaluated datasets. It is followed by the PBoW variants, which also consistently achieve peak performance (but not for all datasets). PVLAD cannot compete with the other representations in our experiments and is thus less recommended. Moreover, we observe a certain tradeoff between computation time and accuracy when comparing stable and non-stable representations. Unstable representations like approxPI and PBoW are particularly fast. However, in general, they cannot compete with stable descriptors like PFV in terms of accuracy.

The novel representations have both attractive theoretic properties as well as practical properties, i.e. compactness, expressiveness, as well as the ability to adapt to the inherent sparsity of persistence diagrams. They can be constructed in a completely unsupervised fashion and achieve a high discriminativity compared to related approaches. The high computational efficiency of persistence codebooks could in future facilitate the application of TDA to larger datasets than possible today and enable real-time applications.

Notes

Preliminary experiments have shown that this number is insensitive and has little influence on the results (evaluated value range: 1000–100,000).

We use Vietoris-Rips complexes to be consistent with the previous works. However, it should be noted that the alpha complex is a more optimal choice to compute persistent homology for a collection of points in low dimensional Euclidean space.

http://web.cse.ohio-state.edu/~dey.8/SimBa/Simba.html, last visited September, 2019.

Source: http://web.engr.illinois.edu/~degol2/pages/MatRec_CVPR16.html, last visited September, 2019.

Reddit is a content-aggregation website: http://reddit.com.

Source: https://github.com/c-hofer/nips2017, last visited September, 2019.

Source: https://github.com/MathieuCarriere/sklearn_tda, last visited September, 2019.

Source: https://github.com/rushilanirudh/pdsphere, last visited September, 2019.

Source: https://bitbucket.org/grey_narn/hera, last visited September, 2019

Source: https://github.com/rkwitt/persistence-learning, last visited September, 2019.

Code obtained from Mathieu Carrière.

Source: https://www.math.upenn.edu/~dlotko/persistenceLandscape.html, last visited September, 2019.

Source: https://github.com/queenBNE/Persistent-Landscape-Wrapper, last visited September, 2019.

Source: https://github.com/CSU-TDA/PersistenceImages, last visited September, 2019.

Source: https://github.com/rushilanirudh/pdsphere, last visited September, 2019.

Code available at: https://github.com/bziiuj/pcodebooks.

Note that for both representations we use the implementations provided by the original authors.

A number of points involved in the simplex minus one is a dimension of the simplex.

References

Aadcock ADRGC (2014) Classification of hepatic lesions using the matching metric. Comput Vis Image Underst 121:36–42

Adams H, Emerson T, Kirby M, Neville R, Peterson C, Shipman P, Chepushtanova S, Hanson E, Motta F, Ziegelmeier L (2017) Persistence images: a stable vector representation of persistent homology. J Mach Learn Res 18(8):1–35

Ali S, Basharat A, Shah M (2007) Chaotic invariants for human action recognition. In: ICCV, IEEE Computer Society, pp 1–8. http://dblp.uni-trier.de/db/conf/iccv/iccv2007.html#AliBS07

Anirudh R, Venkataraman V, Natesan Ramamurthy K, Turaga P (2016) A Riemannian framework for statistical analysis of topological persistence diagrams. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp 68–76

Baeza-Yates R, Ribeiro-Neto B et al (1999) Modern information retrieval, vol 463. ACM press, New York

Bauer U, Kerber M, Reininghaus J, Wagner H (2017) Phat-persistent homology algorithms toolbox. J Symb Comput 78:76–90

Bentley JL (1975) Multidimensional binary search trees used for associative searching. Commun ACM 18(9):509–517

Bubenik P (2015) Statistical topological data analysis using persistence landscapes. J Mach Learn Res 16(1):77–102

Cang Z, Wei G (2017) Topologynet: topology based deep convolutional and multi-task neural networks for biomolecular property predictions. PLoS Comput Biol 13(7):e1005690

Carrière M, Oudot S, Ovsjanikov M (2015) Stable topological signatures for points on 3d shapes. In: Computer graphics forum, vol 34, Wiley Online Library, pp 1–12

Carrière M, Cuturi M, Oudot S (2017) Sliced Wasserstein kernel for persistence diagrams. In: International conference on machine learning (ICML)

Chapelle O (2007) Training a support vector machine in the primal. Neural Comput 19(5):1155–1178

Chen C, Kerber M (2011) Persistent homology computation with a twist. In: Proceedings 27th European workshop on computational geometry, vol 11

Chen X, Golovinskiy A, Funkhouser T (2009) A benchmark for 3d mesh segmentation. In: ACM SIGGRAPH 2009 papers, SIGGRAPH ’09, ACM, New York, NY, USA, pp 73:1–73:12. https://doi.org/10.1145/1576246.1531379

Cohen-Steiner D, Edelsbrunner H, Harer J (2007) Discrete Comput Geom. https://doi.org/10.1007/s00454-006-1276-5

DeGol J, Golparvar-Fard M, Hoiem D (2016) Geometry-informed material recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1554–1562

De Silva V, Morozov D, Vejdemo-Johansson M (2011) Dualities in persistent (co) homology. Inverse Probl 27(12):124003

Dey T, Shi D, Wang Y (2016) Simba: an efficient tool for approximating rips-filtration persistence via simplicial batch-collapse. In: Sankowski P, Zaroliagis C (eds) 24th annual European symposium on algorithms, ESA 2016, August 22–24, 2016, Aarhus, Denmark, LIPIcs, vol 57, Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, pp 35:1–35:16. https://doi.org/10.4230/LIPIcs.ESA.2016.35

Di Fabio B, Ferri M (2015) Comparing persistence diagrams through complex vectors. In: International conference on image analysis and processing, Springer, pp 294–305

Donatini P, Frosini P, Lovato A (1998) Size functions for signature recognition. Proc SPIE 3454:178–183. https://doi.org/10.1117/12.323253

Edelsbrunner H, Letscher D, Zomorodian A (2002) Topological persistence and simplification. Discrete Comput Geom 28:511–533

Edelsbrunner H, Harer J (2010) Computational topology: an introduction. American Mathematical Soc

Ferri M (2017) Persistent topology for natural data analysis: a survey. In: Holzinger A, Goebel R, Palade V (eds) Towards integrative machine learning and knowledge extraction. Springer, Cham, pp 117–133

Ferri M, Frosini P, Lovato A, Zambelli C (1998) Point selection: A new comparison scheme for size functions (with an application to monogram recognition). In: Computer vision—ACCV’98: third Asian conference on computer vision Hong Kong, China, January 8–10, 1998 proceedings, vol I, Springer, pp 329–337

Gameiro M, Hiraoka Y, Izumi S, Kramar M, Mischaikow K, Nanda V (2015) A topological measurement of protein compressibility. Jpn J Ind Appl Math 32(1):1–17

Hofer C, Kwitt R, Niethammer M, Uhl A (2017) Deep learning with topological signatures. In: Advances in neural information processing systems, pp 1633–1643