Abstract

Persistent homology is a methodology central to topological data analysis that extracts and summarizes the topological features within a dataset as a persistence diagram. It has recently gained much popularity from its myriad successful applications to many domains; however, its algebraic construction induces a metric space of persistence diagrams with a highly complex geometry. In this paper, we prove convergence of the k-means clustering algorithm on persistence diagram space and establish theoretical properties of the solution to the optimization problem in the Karush–Kuhn–Tucker framework. Additionally, we perform numerical experiments on both simulated and real data using various representations of persistent homology, including embeddings of persistence diagrams as well as the diagrams themselves and their generalizations as persistence measures. We find that k-means clustering performed directly on persistence diagrams and persistence measures outperforms clustering on their vectorized representations.


1 Introduction

Topological data analysis (TDA) is a recently emerged field of data science that lies at the intersection of pure mathematics and statistics. The core approach of the field is to adapt classical algebraic topology to the computational setting for data analysis. The roots of this approach first appeared in the early 1990s in work by Frosini (1992) on image analysis and pattern recognition in computer vision (Verri et al. 1993); soon afterwards, these earlier ideas by Frosini and Landi (2001) were reinterpreted, developed, and extended concurrently by Edelsbrunner and Zomorodian (2002) and Zomorodian and Carlsson (2005). These developments laid the foundations for a comprehensive set of computational topological tools, which have enjoyed great success in applications to a wide range of data settings in recent decades. Some examples include sensor network coverage problems (de Silva and Ghrist 2007); biological and biomedical applications, including understanding the structure of protein data (Gameiro et al. 2014; Kovacev-Nikolic et al. 2016; Xia et al. 2016) and the 3D structure of DNA (Emmett et al. 2015), and predicting disease outcome in cancer (Crawford et al. 2020); robotics, including goal-directed path planning (Bhattacharya et al. 2015; Pokorny et al. 2016) and other planning and motion problems, such as determining the cost of bipedal walking (Vasudevan et al. 2013); materials science, to understand the structure of amorphous solids (Hiraoka et al. 2016); characterizing chemical reactions and chemical structure (Murayama et al. 2023); financial market prediction (Ismail et al. 2020); and quantifying the structure of music (Bergomi and Baratè 2020), among many others (e.g., Otter et al. 2017).

Persistent homology is the most popular methodology from TDA due to its intuitive construction and interpretability in applications; it extracts the topological features of data and computes a robust statistical representation of the data under study. A particular advantage of persistent homology is that it is applicable to very general data structures, such as point clouds, functions, and networks. Notice that among these examples, the data may be endowed with only a notion of relatedness (point clouds) or may not even be a metric space at all (networks).

A major limitation of persistent homology, however, is that its output takes a somewhat unusual form of a collection of intervals; more specifically, it is a multiset of half-open intervals together with the set of zero-length intervals with infinite multiplicity, known as a persistence diagram. Statistical methods for interval-valued data (e.g., Billard and Diday 2000) cannot be used to analyze persistence diagrams because their algebraic topological construction gives rise to a complex geometry, which hinders their direct applicability to classical statistical and machine learning methods. The development of techniques to use persistent homology in statistics and machine learning has been an active area of research in the TDA community. Largely, the techniques entail either modifying the structure of persistence diagrams to take the form of vectors (referred to as embedding or vectorization) so that existing statistical and machine learning methodology may be applied directly (e.g., Bubenik 2015a; Adams et al. 2017); or developing new statistical and machine learning methods to accommodate the algebraic structure of persistence diagrams (e.g., Reininghaus et al. 2015). The former approach is more widely used in applications, while the latter approach tends to be more technically challenging and requires taking into account the intricate geometry of persistence diagrams and the space of all persistence diagrams. Our work studies both approaches experimentally and contributes a new theoretical result in the latter setting.

In machine learning, a fundamental task is clustering, which groups observations of a similar nature to determine structure within the data. The k-means clustering algorithm is one of the most well-known clustering methods. For structured data in linear spaces, the k-means algorithm is widely used, with well-established theory on stability and convergence. However, for unstructured data in nonlinear spaces, such as persistence diagrams, it is a nontrivial task to apply the k-means framework. The key challenges are twofold: (i) how to define the mean of data in a nonlinear space, and (ii) how to compute the distance between data points. These challenges are especially significant when seeking convergence guarantees for the algorithm. For persistence diagrams specifically, the cost function is neither differentiable nor subdifferentiable in the traditional sense because of the complicated metric geometry of persistence diagram space.

In this paper, we study the k-means algorithm on the space of persistence diagrams both theoretically and experimentally. Theoretically, we establish convergence of the k-means algorithm. In particular, we provide a characterization of the solution to the optimization problem in the Karush–Kuhn–Tucker (KKT) framework and show that the solution is a partial optimal point, a KKT point, and a local minimum. Experimentally, we apply the k-means algorithm to various representations of persistent homology: embeddings of persistence diagrams, persistence diagrams themselves, and their generalizations as persistence measures. To implement k-means clustering on persistence diagram and persistence measure space, we reinterpret the algorithm taking into account the appropriate metrics on these spaces as well as constructions of their respective means. Broadly, our work contributes to the growing body of increasingly important and relevant non-Euclidean statistics (e.g., Blanchard and Jaffe 2022), which deals with, for example, spaces of matrices under nonlinear constraints (Dryden et al. 2009); quotients of Euclidean spaces defined by group actions (Le and Kume 2000); and Riemannian manifolds (Miolane et al. 2020).

The remainder of this paper is organized as follows. We end this introductory section with an overview of existing literature relevant to our study. Section 2 provides the background on both persistent homology and k-means clustering, including details on the components of persistent homology needed to implement the k-means clustering algorithm. In Sect. 3, we provide our main result on the convergence of the k-means algorithm in persistence diagram space in the KKT framework. Section 4 presents numerical experiments comparing the performance of k-means clustering on embedded persistence diagrams versus persistence diagrams and persistence measures. We close with a discussion of our contributions and ideas for future research in Sect. 5.

1.1 Related work

A previous study of k-means clustering in persistence diagram space compares its performance to that of other classification algorithms; it also establishes local minimality of the solution to the optimization problem in the convergence of the algorithm (Marchese et al. 2017). Our convergence study extends this previous study to the more general KKT framework, which provides a more detailed convergence analysis. Experimentally, our work differs by studying the performance of k-means clustering specifically in the context of persistent homology on simulated data as well as a benchmark dataset.

The connection between clustering and TDA more generally has also been surveyed recently, explaining how topological concepts may apply to the problem of clustering (Panagopoulos 2022). Other work studies topological data analytic clustering for time series data as well as space-time data (Islambekov and Gel 2019; Majumdar and Laha 2020).

2 Background and preliminaries

In this section, we provide details on persistent homology as the context in which our study takes place; we also outline the k-means clustering algorithm. We then provide more technical details on the specifics of persistent homology that are needed to implement the k-means clustering algorithm, as well as of Karush–Kuhn–Tucker optimization.

2.1 Persistent homology

Algebraic topology is a field in pure mathematics that uses abstract algebra to study general topological spaces. A primary goal of the field is to characterize topological spaces and classify them according to properties that do not change when the space is subjected to continuous deformations, such as stretching, compressing, rotating, and reflecting; these properties are referred to as invariants. Invariants and their associated theory are relevant to complex and general real-world data settings in the sense that they provide a way to quantify and rigorously describe the “shape” and “size” of data; they can then serve as summaries of complex, non-Euclidean data structures and settings, which are becoming increasingly important and challenging to study. A particular advantage of TDA, given its algebraic topological roots, is its flexibility and applicability both when there is a clear formalism to the data (e.g., rigorous metric space structure) and when there is a less obvious structure (e.g., networks and text).

Persistent homology adapts the theory of homology from classical algebraic topology to data settings in a dynamic manner. Homology algebraically identifies and counts invariants as a way to distinguish topological spaces from one another; various homology theories exist depending on the underlying topological space of study. When considering the homology of a dataset as a finite topological space X, it is common to use simplicial homology over a field. A simplicial complex is a reduction of a general topological space to a discrete version, as a union of simpler components glued together in a combinatorial fashion. An advantage of considering simplicial complexes and studying simplicial homology is that there are efficient algorithms to compute homology. More formally, these basic components are k-simplices, where each k-simplex is the convex hull of \(k+1\) affinely independent points \(x_0, x_1, \ldots , x_k\), denoted by \([x_0,\, x_1,\, \ldots ,\, x_k]\). A simplicial complex K is a collection of simplices that is closed under taking faces and in which any two simplices intersect in a common face (possibly empty).
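As a hedged illustration of how simplicial homology reduces to linear algebra, the sketch below computes Betti numbers \(\beta_k = n_k - \textrm{rank}\,\partial_k - \textrm{rank}\,\partial_{k+1}\), where \(n_k\) is the number of k-simplices and \(\partial_k\) is the boundary map from k-simplices to \((k-1)\)-simplices. It assumes coefficients in the field \({\mathbb {Z}}/2\) (so each boundary row can be stored as an integer bitmask) and a complex given by its maximal simplices; the names `closure`, `gf2_rank`, and `betti_numbers` are hypothetical and not part of the paper's implementation.

```python
from itertools import combinations

def closure(maximal_simplices):
    """All faces of the given simplices, each stored as a sorted tuple of vertex labels."""
    faces = set()
    for simplex in maximal_simplices:
        s = tuple(sorted(simplex))
        for r in range(1, len(s) + 1):
            faces.update(combinations(s, r))
    return faces

def gf2_rank(rows):
    """Rank over Z/2 of a matrix whose rows are integer bitmasks (Gaussian elimination)."""
    pivots = {}  # leading bit -> reduced row with that leading bit
    for row in rows:
        while row:
            lead = row.bit_length() - 1
            if lead not in pivots:
                pivots[lead] = row
                break
            row ^= pivots[lead]  # eliminate the leading bit
    return len(pivots)

def betti_numbers(maximal_simplices):
    """Betti numbers [b_0, b_1, ...] of a simplicial complex with Z/2 coefficients."""
    faces = closure(maximal_simplices)
    top = max(len(f) for f in faces) - 1
    by_dim = {k: sorted(f for f in faces if len(f) == k + 1) for k in range(top + 1)}
    index = {k: {s: i for i, s in enumerate(by_dim[k])} for k in by_dim}

    def boundary_rank(k):
        # rank of the boundary map from k-simplices to (k-1)-simplices
        if k <= 0 or k > top:
            return 0
        rows = []
        for s in by_dim[k]:
            mask = 0
            for face in combinations(s, k):  # the codimension-1 faces of s
                mask |= 1 << index[k - 1][face]
            rows.append(mask)
        return gf2_rank(rows)

    return [len(by_dim[k]) - boundary_rank(k) - boundary_rank(k + 1) for k in range(top + 1)]
```

For example, the boundary of a triangle, `[(0, 1), (1, 2), (0, 2)]`, yields `[1, 1]` (one component, one loop), while the filled triangle `[(0, 1, 2)]` yields `[1, 0, 0]`.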

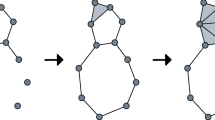

Persistent homology is a dynamic version of homology, which is built on studying homology over a filtration. A filtration is a nested sequence of topological spaces, \(X_0 \subseteq X_1 \subseteq \cdots \subseteq X_n = X\); there exist different kinds of filtrations, which are defined by their nesting rule. In this paper, we study Vietoris–Rips (VR) filtrations for finite metric spaces \((X, d_X)\), which are commonly used in applications and real data settings in TDA. Let \(\epsilon _1 \le \epsilon _2 \le \cdots \le \epsilon _n\) be an increasing sequence of parameters where each \(\epsilon _i \in {\mathbb {R}}_{\ge 0}\). The Vietoris–Rips complex of X at scale \(\epsilon _i\), denoted \(\textrm{VR}(X, \epsilon _i)\), is constructed by adding a node for each \(x_j \in X\) and a k-simplex for each set \(\{x_{j_1}, x_{j_2}, \ldots , x_{j_{k+1}}\}\) whose diameter is smaller than \(\epsilon _i\), i.e., \(d_X(x_{j_a}, x_{j_b}) < \epsilon _i\) for all \(1 \le a, b \le k+1\). We then obtain a Vietoris–Rips filtration

\[
\textrm{VR}(X, \epsilon _1) \hookrightarrow \textrm{VR}(X, \epsilon _2) \hookrightarrow \cdots \hookrightarrow \textrm{VR}(X, \epsilon _n),
\]

which is a sequence of inclusions of simplicial complexes (Fig. 1).
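The construction of \(\textrm{VR}(X,\epsilon _i)\) can be sketched in a few lines. The snippet below is a minimal, unoptimized illustration that assumes X is a list of points in Euclidean space (distances via `math.dist`), simplices up to dimension 2, and the strict "smaller than \(\epsilon _i\)" convention above; `vietoris_rips` and `is_filtration` are hypothetical names. Each simplex is stored together with its diameter, so it is present at every scale larger than that value.

```python
import math
from itertools import combinations

def vietoris_rips(points, eps, max_dim=2):
    """Simplices of VR(X, eps) up to dimension max_dim, mapped to their diameters.

    Every point is a vertex; k + 1 points span a k-simplex when all of their pairwise
    Euclidean distances are smaller than eps, the convention used in the text.
    """
    n = len(points)
    dist = [[math.dist(points[a], points[b]) for b in range(n)] for a in range(n)]
    simplices = {(v,): 0.0 for v in range(n)}
    for k in range(1, max_dim + 1):
        for simplex in combinations(range(n), k + 1):
            diameter = max(dist[a][b] for a, b in combinations(simplex, 2))
            if diameter < eps:
                simplices[simplex] = diameter
    return simplices

def is_filtration(points, scales, max_dim=2):
    """Check that VR(X, e_1), VR(X, e_2), ... are nested for increasing scales e_i."""
    complexes = [set(vietoris_rips(points, e, max_dim)) for e in sorted(scales)]
    return all(small <= large for small, large in zip(complexes, complexes[1:]))
```

For the four corners of the unit square, the complex at scale 1.2 contains the four vertices and the four unit-length edges (a loop), while at scale 1.5 the two diagonals and all four triangles have entered, for 14 simplices in total.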

The sequence of inclusions given by the filtration induces maps in homology for any fixed dimension \(\bullet\). Let \(H_\bullet (X,\epsilon _i)\) be the homology group of \(\textrm{VR}(X,\epsilon _i)\) with coefficients in a field. This gives rise to the following sequence of vector spaces:

\[
H_\bullet (X,\epsilon _1) \rightarrow H_\bullet (X,\epsilon _2) \rightarrow \cdots \rightarrow H_\bullet (X,\epsilon _n).
\]

The collection of vector spaces \(H_\bullet (X,\epsilon _i)\), together with their maps (vector space homomorphisms), \(H_\bullet (X,\epsilon _i)\rightarrow H_\bullet (X,\epsilon _j)\), is called a persistence module.

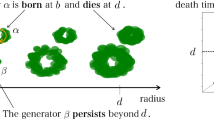

The algebraic interpretation of a persistence module, and how it corresponds to topological (more specifically, homological) information of data, is formally given as follows. When each \(H_\bullet (X,\epsilon _i)\) is finite dimensional, the persistence module can be decomposed into rank one summands corresponding to birth and death times of homology classes (Chazal et al. 2016). Let \(\alpha \in H_\bullet (X,\epsilon _i)\) be a nontrivial homology class. The class \(\alpha\) is born at \(\epsilon _i\) if it is not in the image of \(H_\bullet (X,\epsilon _{i-1})\rightarrow H_\bullet (X,\epsilon _i)\); similarly, it dies entering \(\epsilon _j\) if the image of \(\alpha\) via \(H_\bullet (X,\epsilon _i)\rightarrow H_\bullet (X,\epsilon _{j-1})\) is not in the image of \(H_\bullet (X,\epsilon _{i-1})\rightarrow H_\bullet (X,\epsilon _{j-1})\), but the image of \(\alpha\) via \(H_\bullet (X,\epsilon _i)\rightarrow H_\bullet (X,\epsilon _{j})\) is in the image of \(H_\bullet (X,\epsilon _{i-1})\rightarrow H_\bullet (X,\epsilon _{j})\). The collection of these birth–death intervals \([\epsilon _i,\epsilon _j)\) represents the persistent homology of the Vietoris–Rips filtration of X. Taking each interval as an ordered pair of birth–death coordinates and plotting each in the plane \({\mathbb {R}}^2\) yields a persistence diagram; see Fig. 3a. This algebraic structure and its construction generate the complex geometry of the space of all persistence diagrams, which must be taken into account when performing statistical analyses on persistence diagrams representing the topological information of data.

More intuitively, homology studies the holes of a topological space as a kind of invariant, since the occurrence of holes and their number do not change under continuous deformations of the space. Dimension 0 homology corresponds to connected components; dimension 1 homology corresponds to loops; and dimension 2 homology corresponds to voids or bubbles. These ideas generalize to higher dimensions, and such holes are the topological features of the space (or dataset). Persistent homology tracks each feature with an interval, where the left endpoint signifies the first appearance of the feature within the filtration and the right endpoint signifies its disappearance. The length of the interval can therefore be thought of as the lifetime of the topological feature. The collection of intervals is known as a barcode and summarizes the topological information of the dataset (according to dimension). A barcode can be equivalently represented as a persistence diagram, which is a scatterplot of the birth and death points of each interval. See Fig. 2 for a visual illustration.

Example illustrating the procedure of persistent homology (Ghrist 2008). The panel on the left shows an annulus, which is the underlying space of interest; the topology of an annulus is characterized by 1 connected component (corresponding to \(H_0\)), 1 loop (corresponding to \(H_1\)), and 0 voids (corresponding to \(H_2\)). Seventeen points are sampled from the annulus, and a simplicial complex (in order to be able to use simplicial homology) is constructed from these samples in the following way: the sampled points are taken to be centers of balls with radius \(\epsilon\), which grows from 0 to \(\infty\). At each value of \(\epsilon\), whenever two balls intersect, they are connected by an edge; when three balls mutually intersect, they are connected by a triangle (face); this construction continues for higher dimensions. The simplicial complex resulting from this overlapping of balls as the radius \(\epsilon\) grows is shown to the right of each snapshot at different values of \(\epsilon\). As \(\epsilon\) grows, connected components merge, and cycles form and are later filled in. These events are tracked by a bar for each dimension of homology, shown on the right; the lengths of the bars correspond to the lifetimes of the topological features as \(\epsilon\) grows. Notice that as \(\epsilon\) tends to infinity, a single connected component remains, tracked by the longest bar for \(H_0\). For \(H_1\), there are bars of varying length, including several longer bars, which suggests irregular sampling of the annulus; the longest bar corresponds to the single loop of the annulus. Notice also that there is one short \(H_2\) bar, which is likely to be a spurious topological feature corresponding to noisy sampling.
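The merging of connected components described above can be made concrete for dimension 0, where the persistence pairing of a Vietoris–Rips filtration reduces to Kruskal-style union–find: every point is born at scale 0, and each edge that joins two distinct components ends one bar at the edge's length. The sketch below assumes a Euclidean point cloud; `h0_barcode` is a hypothetical name, and bars in dimensions 1 and higher require the general boundary-matrix reduction, which is not shown here.

```python
import math
from itertools import combinations

def h0_barcode(points):
    """Dimension-0 persistence intervals (birth, death) of the Vietoris–Rips filtration."""
    if not points:
        return []
    parent = list(range(len(points)))

    def find(v):
        # root of v's component, with path halving to keep the trees shallow
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    # edges enter the filtration in order of increasing length
    edges = sorted((math.dist(points[a], points[b]), a, b)
                   for a, b in combinations(range(len(points)), 2))
    bars = []
    for length, a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:          # the edge merges two components,
            parent[root_b] = root_a   # so one of them dies at this scale
            bars.append((0.0, length))
    bars.append((0.0, math.inf))      # one component survives forever
    return bars
```

For the points 0, 1, and 3 on a line, the sketch returns the bars \([0,1)\), \([0,2)\), and \([0,\infty )\).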

Definition 1

A persistence diagram D is a locally finite multiset of points in the half-plane \(\Omega = \{(x,y)\in {\mathbb {R}}^2 \mid x<y\}\) together with points on the diagonal \(\partial \Omega =\{(x,x)\in {\mathbb {R}}^2\}\) counted with infinite multiplicity. Points in \(\Omega\) are called off-diagonal points. The persistence diagram with no off-diagonal points is called the empty persistence diagram, denoted by \(D_\emptyset\).

Intuitively, points that are far away from the diagonal are the long intervals corresponding to topological features that have a long lifetime in the filtration. These points are said to have high persistence and are generally considered to be topological signal, while points closer to the diagonal are considered to be topological noise.

2.1.1 Persistence measures

A more general representation of persistence diagrams that aims to facilitate statistical analyses and computations is the persistence measure. The construction of persistence measures arises from the equivalent representation of persistence diagrams as measures on \(\Omega\) of the form \(\sum _{x\in D\cap \Omega }n_x\delta _x\), where x ranges over the off-diagonal points in a persistence diagram D, \(n_x\) is the multiplicity of x, and \(\delta _x\) is the Dirac measure at x (Divol and Chazal 2019). Considering then all Radon measures supported on \(\Omega\) gives the following definition.

Definition 2

(Divol and Lacombe 2021b) Let \(\mu\) be a Radon measure supported on \(\Omega\). For \(1\le p<\infty\), the p-total persistence in this measure setting is defined as

\[
\textrm{Pers}_p(\mu ) = \int _{\Omega } \Vert x - x^\top \Vert _q^p \, d\mu (x),
\]

where \(x^\top\) is the projection of x to the diagonal \(\partial \Omega\) and \(\Vert \cdot \Vert _q\) denotes the q-norm, \(1 \le q \le \infty\). Any Radon measure with finite p-total persistence is called a persistence measure.
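For a discrete measure \(\mu = \sum _x m_x \delta _x\), the integral in Definition 2 is the finite sum \(\sum _x m_x \Vert x - x^\top \Vert _q^p\). The sketch below evaluates it, using the fact that for any q-norm the closest diagonal point to \(x = (b, d)\) is the midpoint \(((b+d)/2, (b+d)/2)\), by symmetry and convexity of the norm. The list-of-(point, mass) input format and the function names are assumptions for illustration. When every mass is an integer multiplicity \(n_x\), the result is the p-th power of the p-total persistence \(\textrm{W}_{p,q}(D, D_\emptyset )\) of the corresponding persistence diagram.

```python
import math

def diagonal_projection(x):
    """Closest diagonal point to x = (b, d) in any q-norm: ((b + d) / 2, (b + d) / 2)."""
    m = (x[0] + x[1]) / 2.0
    return (m, m)

def qnorm(v, q):
    """q-norm of a vector, 1 <= q <= inf."""
    return max(abs(c) for c in v) if math.isinf(q) else sum(abs(c) ** q for c in v) ** (1.0 / q)

def total_persistence(atoms, p=2, q=2):
    """p-total persistence sum_x m_x ||x - x^T||_q^p of a discrete measure.

    `atoms` is a list of ((birth, death), mass) pairs; for a persistence diagram the
    masses are the multiplicities n_x.
    """
    total = 0.0
    for x, mass in atoms:
        proj = diagonal_projection(x)
        total += mass * qnorm((x[0] - proj[0], x[1] - proj[1]), q) ** p
    return total
```

For the diagram with the point \((0, 2)\) once and \((1, 2)\) twice, \(p = q = 2\) gives \(2 + 2 \times 0.5 = 3\).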

Persistence measures resemble heat map versions of persistence diagrams on the upper half-plane in which individual persistence points are smoothed, so the intuition about birth–death lifetimes described previously and illustrated in Fig. 2 is lost. However, they gain the advantage of a linear structure in which statistical quantities are well defined and much more straightforward to compute (Divol and Lacombe 2021a).

By generalizing persistence diagrams to persistence measures, one obtains a larger space of persistence measures that contains the space of persistence diagrams as a proper subspace (Divol and Lacombe 2021b). In other words, a persistence diagram is nothing but a discrete persistence measure with positive integer masses, written in a different representation. Thus, any distribution on the space of persistence diagrams is naturally defined on the space of persistence measures. The mean of a distribution is also well defined for persistence measures if it is well defined for persistence diagrams (see Sect. 2.3). Note that on the space of persistence measures, the mean of a distribution is a persistence measure, which can be an abstract Radon measure that is not representable as a persistence diagram. In fact, in general, the empirical mean on the space of persistence measures does not lie in the subspace of persistence diagrams. However, it is far simpler to compute than the means defined on the space of persistence diagrams.

2.1.2 Metrics for persistent homology

There exist several metrics for persistence diagrams; we focus on the following for its computational feasibility and the known geometric properties of the set of all persistence diagrams (persistence diagram space) induced by this metric.

Definition 3

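For \(p > 0\), the p-Wasserstein distance between two persistence diagrams \(D_1\) and \(D_2\) is defined as

\[
\textrm{W}_{p,q}(D_1, D_2) = \left( \inf _{\gamma : D_1 \rightarrow D_2} \sum _{x \in D_1} \Vert x - \gamma (x) \Vert _q^p \right)^{1/p},
\]

where \(\Vert \cdot \Vert _q\) denotes the q-norm, \(1\le q \le \infty\), and \(\gamma\) ranges over all bijections between \(D_1\) and \(D_2\).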

For a persistence diagram D, its p-total persistence is \(\textrm{W}_{p,q}(D, D_\emptyset )\). The intuition is similar to that given in Definition 2, but the notion is technically different from the Radon measure setting. We refer to the set of all persistence diagrams with finite p-total persistence as the space of persistence diagrams \({\mathcal {D}}_p\). Equipped with the p-Wasserstein distance, \(({\mathcal {D}}_p, \textrm{W}_{p,q})\) is a well-defined metric space.
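To make Definition 3 concrete, the sketch below computes \(\textrm{W}_{p,q}\) between two diagrams given as lists of off-diagonal (birth, death) points. It uses the standard augmentation in which each diagram is padded with diagonal slots, so that a bijection may send any off-diagonal point to its diagonal projection, and then searches all bijections exhaustively. This is only feasible for a handful of points; in practice the same assignment problem would be solved with the Hungarian algorithm. The function names are hypothetical.

```python
import math
from itertools import permutations

def _qnorm(v, q):
    """q-norm of a 2-vector, 1 <= q <= inf."""
    return max(abs(c) for c in v) if math.isinf(q) else sum(abs(c) ** q for c in v) ** (1.0 / q)

def _to_diagonal(x, q):
    """Distance from x = (b, d) to its diagonal projection; x - x^T = (-h, h), h = (d - b) / 2."""
    h = (x[1] - x[0]) / 2.0
    return _qnorm((h, h), q)

def wasserstein(D1, D2, p=2, q=2):
    """Exact W_{p,q} between two small persistence diagrams by exhaustive search."""
    m, n = len(D1), len(D2)
    size = m + n  # rows: D1 points, then n diagonal slots; columns: D2 points, then m slots

    def cost(i, j):
        if i < m and j < n:   # off-diagonal point matched to off-diagonal point
            return _qnorm((D1[i][0] - D2[j][0], D1[i][1] - D2[j][1]), q) ** p
        if i < m:             # point of D1 sent to the diagonal
            return _to_diagonal(D1[i], q) ** p
        if j < n:             # point of D2 taken from the diagonal
            return _to_diagonal(D2[j], q) ** p
        return 0.0            # diagonal slot matched to diagonal slot

    best = min(sum(cost(i, perm[i]) for i in range(size)) for perm in permutations(range(size)))
    return best ** (1.0 / p)
```

With \(p = q = 2\), the distance between \(\{(0, 2)\}\) and \(\{(0, 2.2)\}\) is 0.2 (a direct match), whereas the distance from \(\{(0, 2)\}\) to the empty diagram is \(\sqrt{2}\).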

The space of persistence measures is equipped with the following metric.

Definition 4

The optimal partial transport distance between persistence measures \(\mu\) and \(\nu\) is

\[
\textrm{OT}_{p,q}(\mu , \nu ) = \left( \inf _{\Pi } \iint _{{\bar{\Omega }} \times {\bar{\Omega }}} \Vert x - y \Vert _q^p \, d\Pi (x, y) \right)^{1/p},
\]

with \({\bar{\Omega }} = \Omega \cup \partial \Omega\). Here, \(\Pi\) ranges over all Radon measures on \({\bar{\Omega }} \times {\bar{\Omega }}\) such that, for all Borel sets \(A, B \subseteq \Omega\), \(\Pi (A \times {\bar{\Omega }}) = \mu (A)\) and \(\Pi ({\bar{\Omega }} \times B) = \nu (B)\).

The space of persistence measures \({\mathcal {M}}_p\), together with \(\textrm{OT}_{p, q}\), is an extension and generalization of \(({\mathcal {D}}_p, \textrm{W}_{p,q})\). In particular, \(({\mathcal {D}}_p, \textrm{W}_{p,q})\) is a proper subset of \(({\mathcal {M}}_p, \textrm{OT}_{p,q})\) and \(\textrm{OT}_{p,q}\) coincides with \(\textrm{W}_{p,q}\) on the subset \({\mathcal {D}}_p\) (Divol and Lacombe 2021a).

2.2 k-means clustering

The k-means clustering algorithm is one of the most widely known unsupervised learning algorithms. It groups data points into k distinct clusters based on their similarities and without the need to provide any labels for the data (Hartigan and Wong 1979). The process begins with a random sample of k points from the set of data points. Each data point is assigned to one of the k points, called centroids, based on minimum distance. Data points assigned to the same centroid form a cluster. The cluster centroid is updated to be the mean of the cluster. Cluster assignment and centroid update steps are repeated until convergence.

A significant limitation of the k-means algorithm is that the final clustering output is highly dependent on the initial choice of k centroids. If the initial centroids are close to one another, the algorithm may produce a suboptimal partition of the data. Thus, when using the k-means algorithm, it is preferable to begin with centroids that are as far from each other as possible, so that they already lie in different clusters; this makes convergence to the global optimum, rather than a local solution, more likely. k-means\(++\) (Arthur and Vassilvitskii 2006) is an initialization algorithm that addresses this issue; we adapt it in our implementation of the k-means algorithm in place of the original random initialization step.
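The k-means\(++\) seeding rule only needs pairwise distances, which is what makes it usable with \(\textrm{W}_2\) or \(\textrm{OT}_{p,q}\) in place of the Euclidean distance. A minimal sketch, assuming `dist` is any metric supplied by the caller; the function name and signature are hypothetical and not taken from the authors' code:

```python
import random

def kmeans_pp_init(data, k, dist, rng=None):
    """k-means++ seeding for an arbitrary metric `dist`.

    The first centroid is drawn uniformly; each later centroid is drawn with probability
    proportional to the squared distance from a datum to its nearest chosen centroid.
    """
    rng = rng or random.Random()
    centroids = [rng.choice(data)]
    while len(centroids) < k:
        weights = [min(dist(x, c) for c in centroids) ** 2 for x in data]
        if sum(weights) == 0:  # every datum already coincides with some centroid
            centroids.append(rng.choice(data))
        else:
            centroids.append(rng.choices(data, weights=weights, k=1)[0])
    return centroids
```

Passing a Wasserstein distance between persistence diagrams as `dist` gives a seeding on persistence diagram space; with real numbers and `abs(a - b)` it reduces to the usual one-dimensional rule.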

Finding the true number of clusters k is difficult in theory. Practically, however, there are several heuristic ways to determine the near optimal number of clusters. For example, the elbow method seeks the cutoff point of the loss curve (Thorndike 1953); the information criterion method constructs likelihood functions for the clustering model (Goutte et al. 2001); the silhouette method tries to optimize the silhouette function of data with respect to the number of clusters (De Amorim and Hennig 2015). An experimental comparison of different determination rules can be found in Pham et al. (2005).
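Of these heuristics, the silhouette method transfers most directly to persistence diagram space, since it only requires a matrix of pairwise distances (for instance, \(\textrm{W}_2\) between diagrams). A minimal sketch of the mean silhouette width, with the usual convention that points in singleton clusters score 0; the function name and the nested-list input format are assumptions for illustration:

```python
def silhouette(dist_matrix, labels):
    """Mean silhouette width from a precomputed distance matrix (any metric, e.g. W_2).

    s(i) = (b_i - a_i) / max(a_i, b_i), where a_i is the mean distance from i to the
    rest of its cluster and b_i the smallest mean distance from i to another cluster.
    """
    n = len(labels)
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for i in range(n):
        own = [dist_matrix[i][j] for j in range(n) if labels[j] == labels[i] and j != i]
        if not own:  # singleton cluster
            scores.append(0.0)
            continue
        a = sum(own) / len(own)
        b = min(
            sum(dist_matrix[i][j] for j in range(n) if labels[j] == c)
            / sum(1 for j in range(n) if labels[j] == c)
            for c in clusters if c != labels[i]
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / n
```

One would compute this value for each candidate k and keep the k with the largest mean silhouette.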

The original k-means algorithm (Hartigan and Wong 1979) takes vectors as input and uses the squared Euclidean distance in the cluster assignment step. In this work, we adapt this algorithm to take representations of persistent homology as input (persistence diagrams, persistence measures, and embedded persistence diagrams), using the Wasserstein distance (Definition 3) between persistence diagrams and the optimal partial transport distance (Definition 4) between persistence measures as the respective metrics.

2.3 Means for persistent homology

The existence of a mean and the ability to compute it are fundamental to the k-means algorithm. This quantity and its computation are nontrivial in persistence diagram space. A previously proposed notion of means for sets of persistence diagrams is based on Fréchet means (generalizations of means to arbitrary metric spaces), where the metric is the p-Wasserstein distance (Turner et al. 2014).

Specifically, given a set of persistence diagrams \({\textbf{D}} = \{D_1, \ldots , D_N\}\), define an empirical probability measure \({\hat{\rho }} = \frac{1}{N} \sum _{i=1}^N \delta _{D_i}\). The Fréchet function for any persistence diagram \(D \in {\mathcal {D}}_p\) is then

\[
F_{{\hat{\rho }}}(D) = \int _{{\mathcal {D}}_p} \textrm{W}_{p,q}(D, D')^2 \, d{\hat{\rho }}(D') = \frac{1}{N} \sum _{i=1}^N \textrm{W}_{p,q}(D, D_i)^2.
\]

Any persistence diagram D that minimizes the Fréchet function is a Fréchet mean of the set \({\textbf{D}}\); in general, the Fréchet mean need not be unique. This is the case in \(({\mathcal {D}}_2, W_{2,2})\), which is known to be an Alexandrov space with curvature bounded from below, meaning that geodesics, and therefore Fréchet means, are not even locally unique (Turner et al. 2014). Nevertheless, Fréchet means are computable as local minima using a greedy algorithm that is a variant of the Hungarian algorithm. This approach has caveats: the Fréchet function is not convex on \({\mathcal {D}}_2\), so the algorithm often finds only local minima; additionally, there is no initialization rule and arbitrary persistence diagrams are chosen as starting points, so different initializations lead to unstable performance and potentially different local minima. In our work, we focus on the 2-Wasserstein distance and use the notation \(\textrm{W}_2\) for short.
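The greedy procedure can be sketched as follows, in the spirit of Turner et al. (2014): match the current estimate to every diagram, then move each of its points to the average of its partners, where a partner on the diagonal is replaced by the point's own diagonal projection. For self-containment, this sketch finds optimal matchings by exhaustive search rather than with the Hungarian algorithm, fixes \(q = 2\), and keeps the number of points of the starting diagram; all names are hypothetical. Its output is a fixed point that depends on `start`, consistent with the caveats above.

```python
import math
from itertools import permutations

def _sq(u, v):
    """Squared Euclidean distance in the plane (q = 2)."""
    return (u[0] - v[0]) ** 2 + (u[1] - v[1]) ** 2

def _proj(x):
    """Diagonal projection of x = (b, d)."""
    m = (x[0] + x[1]) / 2.0
    return (m, m)

def optimal_matching(Y, X):
    """Optimal W_2 matching of diagram Y to diagram X by exhaustive search (tiny inputs only).

    Returns, for each point of Y, its partner in X, or None if it is sent to the diagonal.
    """
    m, n = len(Y), len(X)

    def cost(i, j):
        if i < m and j < n:
            return _sq(Y[i], X[j])
        if i < m:
            return _sq(Y[i], _proj(Y[i]))
        if j < n:
            return _sq(X[j], _proj(X[j]))
        return 0.0

    best = min(permutations(range(m + n)),
               key=lambda perm: sum(cost(i, perm[i]) for i in range(m + n)))
    return [X[best[i]] if best[i] < n else None for i in range(m)]

def frechet_mean(diagrams, start, max_iter=50):
    """Greedy local search for a Fréchet mean of `diagrams`, starting from diagram `start`."""
    if not diagrams:
        raise ValueError("need at least one diagram")
    Y = [tuple(map(float, y)) for y in start]
    for _ in range(max_iter):
        partners = [optimal_matching(Y, D) for D in diagrams]
        new_Y = []
        for j, y in enumerate(Y):
            # partners of y across all diagrams; a diagonal match contributes y's projection
            matched = [match[j] if match[j] is not None else _proj(y) for match in partners]
            new_Y.append((sum(b for b, _ in matched) / len(matched),
                          sum(d for _, d in matched) / len(matched)))
        if all(math.isclose(a, b, abs_tol=1e-12)
               for old, new in zip(Y, new_Y) for a, b in zip(old, new)):
            break  # the estimate no longer moves: a fixed point of the greedy update
        Y = new_Y
    return Y
```

For the diagrams \(\{(0, 2)\}\) and \(\{(0, 4)\}\) started at \(\{(0, 2)\}\), the sketch returns \(\{(0, 3)\}\); for \(\{(0, 2)\}\) and the empty diagram, it returns \(\{(0.5, 1.5)\}\), halfway between \((0, 2)\) and its diagonal projection.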

For a set of persistence measures \(\{\mu _1, \ldots , \mu _N\}\), their mean is the mean persistence measure, which is simply the empirical mean of the set: \({\bar{\mu }} = \frac{1}{N}\sum _{i=1}^N\mu _i\).
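Because the mean persistence measure is a plain average, it is straightforward to compute. The sketch below assumes each discrete persistence measure is stored as a dictionary mapping (birth, death) points to masses, a hypothetical format chosen for illustration; a persistence diagram is the special case with positive integer multiplicities. The example also shows why the mean generally leaves the subspace of persistence diagrams: a point present in only one of two diagrams receives mass 1/2.

```python
from collections import defaultdict

def mean_persistence_measure(measures):
    """Empirical mean (1/N) * sum_i mu_i of discrete persistence measures.

    Each measure is a dict {(birth, death): mass}.
    """
    if not measures:
        raise ValueError("need at least one measure")
    mean = defaultdict(float)
    for mu in measures:
        for point, mass in mu.items():
            mean[point] += mass / len(measures)
    return dict(mean)
```

For instance, averaging \(\{(0,2)\}\) and \(\{(0,2), (1,3)\}\) gives mass 1 at \((0, 2)\) and mass 0.5 at \((1, 3)\).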

We outline the k-means algorithm for persistence diagrams and persistence measures in Algorithm 1.
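Algorithm 1 itself is not reproduced here, but its two alternating steps can be sketched generically. In the snippet below, `dist` and `mean` are caller-supplied assumptions: for persistence diagrams they would be \(\textrm{W}_2\) and a Fréchet mean routine, for persistence measures \(\textrm{OT}_{p,q}\) and the empirical mean, and for embedded diagrams the Euclidean distance and the arithmetic mean. It uses plain random initialization for brevity, whereas the text above adapts k-means\(++\); the name `kmeans` and its signature are hypothetical. The recorded objective is the within-cluster sum of squared distances.

```python
import random

def kmeans(data, k, dist, mean, max_iter=100, rng=None):
    """Alternate assignment and centroid-update steps until the assignment is stable.

    `dist(x, z)` is a metric and `mean(cluster)` returns a centroid for a list of data.
    Returns (labels, centroids, history), where history[t] is the within-cluster sum of
    squared distances after round t.
    """
    rng = rng or random.Random()
    centroids = rng.sample(data, k)  # plain random initialization, for brevity
    labels, history = None, []
    for _ in range(max_iter):
        # assignment step: send each datum to a closest centroid
        new_labels = [min(range(k), key=lambda i: dist(x, centroids[i])) for x in data]
        # update step: replace each centroid by a mean of its cluster (empty clusters keep theirs)
        for i in range(k):
            members = [x for x, lab in zip(data, new_labels) if lab == i]
            if members:
                centroids[i] = mean(members)
        history.append(sum(dist(x, centroids[lab]) ** 2 for x, lab in zip(data, new_labels)))
        if new_labels == labels:
            break  # assignments unchanged, so further rounds cannot change anything
        labels = new_labels
    return labels, centroids, history
```

With real numbers, `abs(a - b)` and the arithmetic mean, the recorded history is nonincreasing, in line with the monotonicity argument of Sect. 3.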

2.4 The Karush–Kuhn–Tucker conditions

The Karush–Kuhn–Tucker (KKT) conditions are first order necessary conditions for a solution in a nonlinear optimization problem to be optimal.

Definition 5

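Consider the following minimization problem,

\[
\min _{x \in {\mathbb {R}}^d} f(x) \quad \text {subject to} \quad g_i(x) \le 0,\ i = 1, \ldots , m; \quad h_j(x) = 0,\ j = 1, \ldots , l,
\]

where f, \(g_i\), and \(h_j\) are differentiable functions on \({\mathbb {R}}^d\). The Lagrange dual function is given by

\[
g(u, v) = \inf _{x \in {\mathbb {R}}^d} \Big ( f(x) + \sum _{i=1}^m u_i\, g_i(x) + \sum _{j=1}^l v_j\, h_j(x) \Big ).
\]

The dual problem is defined as

\[
\max _{u \in {\mathbb {R}}^m,\, v \in {\mathbb {R}}^l} g(u, v) \quad \text {subject to} \quad u \ge 0.
\]

The Karush–Kuhn–Tucker (KKT) conditions are given by

\[
\begin{aligned}
& 0 = \nabla f(x) + \sum _{i=1}^m u_i \nabla g_i(x) + \sum _{j=1}^l v_j \nabla h_j(x) && \text {(stationarity)},\\
& u_i\, g_i(x) = 0 \ \text {for all } i && \text {(complementary slackness)},\\
& g_i(x) \le 0,\ h_j(x) = 0 \ \text {for all } i, j && \text {(primal feasibility)},\\
& u_i \ge 0 \ \text {for all } i && \text {(dual feasibility)}.
\end{aligned}
\]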

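Given any feasible x and any (u, v) with \(u \ge 0\), the difference \(f(x)-g(u,v)\) is called the duality gap. The KKT conditions are sufficient for optimality when the problem is convex (f and \(g_i\) convex, \(h_j\) affine); for problems with differentiable objective and constraint functions, they are necessary conditions for a pair of primal and dual optimal points whenever strong duality holds, i.e., when the optimal duality gap is zero (Boyd and Vandenberghe 2004).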
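A one-dimensional worked example may help fix the notation. The toy problem below is chosen purely for illustration and does not appear in the paper: minimize \(f(x) = x^2\) subject to the single inequality constraint \(g_1(x) = 1 - x \le 0\), with no equality constraints.

```latex
% Toy problem (illustrative only): minimize f(x) = x^2 subject to g_1(x) = 1 - x <= 0.
\begin{align*}
  L(x,u) &= x^2 + u\,(1-x), \qquad u \ge 0,\\
  g(u) &= \inf_{x\in\mathbb{R}} L(x,u) = L(u/2,\,u) = u - \tfrac{u^2}{4},\\
  \max_{u\ge 0} g(u) &= g(2) = 1 = f(1) = \min_{x \ge 1} x^2 \quad \text{(zero duality gap)},\\
  \text{KKT at } (x,u) = (1,2):&\quad 2x - u = 0,\quad u\,(1-x) = 0,\quad 1-x \le 0,\quad u \ge 0.
\end{align*}
```

Here the primal and dual optimal values coincide, and the pair \((x, u) = (1, 2)\) satisfies all four KKT conditions.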

The KKT conditions are applicable if all functions are differentiable over Euclidean spaces. The setup can be generalized for non-differentiable functions over Euclidean spaces but with well-defined subdifferentials (Boyd and Vandenberghe 2004). In the case of persistent homology, the objective function is defined on the space of persistence diagrams, which is a metric space without linear structure and therefore requires special treatment. Specifically, we follow Selim and Ismail (1984) and define the KKT point for our optimization problem and show that the partial optimal points are always KKT points. These notions are formally defined and full technical details of our contributions are given in the next section.

3 Convergence of the k-means clustering algorithm

Local minimality of the solution to the optimization problem has been previously established by studying the convergence of the k-means algorithm for persistence diagrams (Marchese et al. 2017). Here, we further investigate the optimization problem in a KKT framework and derive properties of its solution, taking into account the subtleties of the KKT setting discussed in Sect. 2.4. We focus on the 2-Wasserstein distance \(\textrm{W}_2\) as discussed in Sect. 2.3.

Problem setup

Let \({\textbf{D}} = \{D_1,\ldots ,D_n\}\) be a set of n persistence diagrams and let \({\textbf{G}}=\{G_1,\ldots ,G_k\}\) be a partition of \({\textbf{D}}\), i.e., \(G_i\cap G_j=\emptyset\) for \(i\ne j\) and \(\cup _{i=1}^kG_i={\textbf{D}}\). Let \({\textbf{Z}}=\{Z_1,\ldots ,Z_k\}\) be k persistence diagrams representing the centroids of a clustering. The within-cluster sum of squared distances is defined as

\[
F({\textbf{G}}, {\textbf{Z}}) = \sum _{i=1}^k \sum _{D_j \in G_i} \textrm{W}_2(D_j, Z_i)^2. \tag{2}
\]

Define a \(k\times n\) matrix \(\omega =[\omega _{ij}]\) by setting \(\omega _{ij} = 1\) if \(D_j \in G_i\) and 0 otherwise. Then (2) can be equivalently expressed as

\[
{\textbf{F}}(\omega , {\textbf{Z}}) = \sum _{i=1}^k \sum _{j=1}^n \omega _{ij}\, \textrm{W}_2(D_j, Z_i)^2.
\]

We first define a domain for each centroid \(Z_i\): Let \(\ell _i\) be the number of off-diagonal points of \(D_i\); let V be the convex hull of all off-diagonal points in \(\cup _{i=1}^n D_i\). Define \({\textbf{S}}\) to be the set of persistence diagrams with at most \(\sum _{i=1}^n\ell _i\) off-diagonal points in V; the set \({\textbf{S}}\) is relatively compact (Mileyko et al. 2011, Theorem 21).

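Our primal optimization problem is

\[
({\textbf{P}}_{0}) \qquad \min _{\omega ,\, {\textbf{Z}}}\ {\textbf{F}}(\omega , {\textbf{Z}}) \quad \text {subject to} \quad \sum _{i=1}^k \omega _{ij} = 1,\ \ \omega _{ij} \in \{0, 1\} \ \text {for all } i, j; \quad {\textbf{Z}} \in {\textbf{S}}^k.
\]

We relax the integer constraints in optimization (\({\textbf{P}}_{0}\)) to continuous constraints by setting \(\omega _{ij}\in [0,1]\). Let \(\Theta\) be the set of all \(k\times n\) column stochastic matrices, i.e.,

\[
\Theta = \Big \{ \omega \in {\mathbb {R}}^{k \times n} \ \Big |\ \sum _{i=1}^k \omega _{ij} = 1 \text { for all } j,\ \ \omega _{ij} \in [0, 1] \text { for all } i, j \Big \}.
\]

The relaxed optimization problem is then

\[
({\textbf{P}}) \qquad \min _{\omega ,\, {\textbf{Z}}}\ {\textbf{F}}(\omega , {\textbf{Z}}) \quad \text {subject to} \quad \omega \in \Theta ,\ \ {\textbf{Z}} \in {\textbf{S}}^k.
\]

Finally, we reduce \({\textbf{F}}(\omega ,{\textbf{Z}})\) to a univariate function \(f(\omega )=\min \{{\textbf{F}}(\omega ,{\textbf{Z}}) : {\textbf{Z}}\in {\textbf{S}}^k\}\) by minimizing over the second variable. The reduced optimization problem is

\[
({\textbf{RP}}) \qquad \min _{\omega }\ f(\omega ) \quad \text {subject to} \quad \omega \in \Theta .
\]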

Lemma 1

The optimization problems (\({\textbf{P}}_{0}\)), (\({\textbf{P}}\)), and (\({\textbf{RP}}\)) are equivalent in the sense that the minimum values of the cost functions are equal.

Proof

Problems (\({\textbf{P}}\)) and (\({\textbf{RP}}\)) are equivalent as they have the same constraints and equal cost functions. It suffices to prove that the reduced problem (\({\textbf{RP}}\)) and the primal problem (\({\textbf{P}}_{0}\)) are equivalent.

Claim 1: \(f(\omega )\) is a concave function on the convex set \(\Theta\).

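Let \(\omega _1,\omega _2\in \Theta\), and \(\lambda \in [0,1]\). Set \(\omega _\lambda =\lambda \omega _1+(1-\lambda )\omega _2\). Note that \(\sum _{i}(\omega _\lambda )_{ij} = \lambda \sum _{i}(\omega _1)_{ij}+(1-\lambda )\sum _i(\omega _2)_{ij}=1\). Thus \(\omega _\lambda \in \Theta\). Furthermore, since \({\textbf{F}}(\omega ,{\textbf{Z}})\) is linear in \(\omega\),

\[
\begin{aligned}
f(\omega _\lambda ) &= \min _{{\textbf{Z}} \in {\textbf{S}}^k} \big ( \lambda {\textbf{F}}(\omega _1, {\textbf{Z}}) + (1-\lambda ) {\textbf{F}}(\omega _2, {\textbf{Z}}) \big ) \\
&\ge \lambda \min _{{\textbf{Z}} \in {\textbf{S}}^k} {\textbf{F}}(\omega _1, {\textbf{Z}}) + (1-\lambda ) \min _{{\textbf{Z}} \in {\textbf{S}}^k} {\textbf{F}}(\omega _2, {\textbf{Z}}) = \lambda f(\omega _1) + (1-\lambda ) f(\omega _2).
\end{aligned}
\]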

As a consequence, \(f(\omega )\) attains its minimum over \(\Theta\) at an extreme point of \(\Theta\). Recall that an extreme point of a convex set is a point that does not lie in the open segment between any two distinct points of the set.

Claim 2: The extreme points of \(\Theta\) satisfy the constraints in (\({\textbf{P}}_{0}\)).

The extreme points of \(\Theta\) are the 0–1 matrices with exactly one 1 in each column (Cao et al. 2022); these are precisely the matrices satisfying the constraints in (\({\textbf{P}}_{0}\)).

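In summary, the minimum of (\({\textbf{RP}}\)) is attained at an extreme point of \(\Theta\), which is feasible for (\({\textbf{P}}_{0}\)); conversely, every feasible \(\omega\) of (\({\textbf{P}}_{0}\)) lies in \(\Theta\). Hence (\({\textbf{P}}_{0}\)) and (\({\textbf{RP}}\)) have the same minimum value, and all three optimization problems are equivalent. \(\square\)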

3.1 Convergence to partial optimal points

We prove that Algorithm 1 for persistence diagrams always converges to a partial optimal point in a finite number of steps. The proof extends that of Theorem 3.2 in Marchese et al. (2017).

Definition 6

(Selim and Ismail 1984) A point \((\omega _\star ,{\textbf{Z}}_\star )\) is a partial optimal point of (\({\textbf{P}}\)) if

1. \({\textbf{F}}(\omega _\star ,{\textbf{Z}}_\star )\le {\textbf{F}}(\omega ,{\textbf{Z}}_\star )\) for all \(\omega \in \Theta\), i.e., \(\omega _\star\) is a minimizer of the function \({\textbf{F}}(\cdot ,{\textbf{Z}}_\star )\); and

2. \({\textbf{F}}(\omega _\star ,{\textbf{Z}}_\star )\le {\textbf{F}}(\omega _\star ,{\textbf{Z}})\) for all \({\textbf{Z}}\in {\textbf{S}}^k\), i.e., \({\textbf{Z}}_\star\) is a minimizer of the function \({\textbf{F}}(\omega _\star ,\cdot )\).

Theorem 1

(Marchese et al. 2017, Theorem 3.2) The k-means algorithm over \(({\mathcal {D}}_2, \textrm{W}_{2})\) converges to a partial optimal point in finitely many steps.

Proof

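The value of \({\textbf{F}}\) changes at only two steps during each iteration. Fix an iteration t and let \({\textbf{Z}}^{(t)} = \{Z_1^{(t)},\ldots ,Z_k^{(t)}\}\) be the k centroids from iteration t. At the first step of the \((t+1)\)st iteration, since each persistence diagram is assigned to a closest centroid, for any datum \(D_j\),

\[\sum _{i=1}^{k}\omega ^{(t+1)}_{ij}\,\textrm{W}_2^2\big (Z^{(t)}_i,D_j\big )=\min _{1\le i\le k}\textrm{W}_2^2\big (Z^{(t)}_i,D_j\big )\le \sum _{i=1}^{k}\omega ^{(t)}_{ij}\,\textrm{W}_2^2\big (Z^{(t)}_i,D_j\big ).\]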

Summing over \(j=1,\ldots ,n\), we have \({\textbf{F}}(\omega ^{(t+1)},{\textbf{Z}}^{(t)})\le {\textbf{F}}(\omega ^{(t)},{\textbf{Z}}^{(t)})\).

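At the second step of the \((t+1)\)st iteration, each centroid is updated to a Fréchet mean of the diagrams assigned to it, so by the definition of Fréchet means we have

\[Z^{(t+1)}_i\in \mathop {\textrm{arg}\,\textrm{min}}_{Z\in {\mathcal {D}}_2}\ \sum _{j=1}^{n}\omega ^{(t+1)}_{ij}\,\textrm{W}_2^2(Z,D_j),\qquad i=1,\ldots ,k.\]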

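Thus

\[\sum _{j=1}^{n}\omega ^{(t+1)}_{ij}\,\textrm{W}_2^2\big (Z^{(t+1)}_i,D_j\big )\le \sum _{j=1}^{n}\omega ^{(t+1)}_{ij}\,\textrm{W}_2^2\big (Z^{(t)}_i,D_j\big ).\]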

Summing over \(i=1,\ldots ,k\), we have \({\textbf{F}}(\omega ^{(t+1)},{\textbf{Z}}^{(t+1)})\le {\textbf{F}}(\omega ^{(t+1)},{\textbf{Z}}^{(t)})\).

In summary, \({\textbf{F}}(\omega ,{\textbf{Z}})\) is nonincreasing along the iterations. Every assignment \(\omega ^{(t)}\) is an extreme point of \(\Theta\), and there are only finitely many extreme points (Cao et al. 2022), so the assignments stabilize at some \(\omega _\star\) after finitely many steps. If we fix one Fréchet mean \({\textbf{Z}}_\star\) for \({\textbf{F}}(\omega _\star ,\cdot )\), then \((\omega _\star ,{\textbf{Z}}_\star )\) is a partial optimal point of (\({\textbf{P}}\)) by construction. \(\square\)
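The monotonicity argument in the proof of Theorem 1 can be illustrated with a minimal Lloyd-style sketch. This is not the authors' implementation: as a simplifying assumption, it works in \({\mathbb {R}}^d\) with the squared Euclidean distance in place of \(\textrm{W}_2^2\) and the arithmetic mean in place of the Fréchet mean, so each update step is exact. The function name `kmeans_lloyd` is hypothetical. It records \({\textbf{F}}\) after every assignment step and every centroid update, so the two inequalities of the proof can be checked directly.

```python
import numpy as np


def kmeans_lloyd(X, k, max_iter=100, seed=0):
    """Lloyd-style k-means in R^d that records the cost F after every step.

    Euclidean stand-in (an assumption for illustration): squared Euclidean
    distance replaces W_2^2 and the arithmetic mean replaces the Frechet mean.
    """
    rng = np.random.default_rng(seed)
    Z = X[rng.choice(len(X), size=k, replace=False)].astype(float)  # initial centroids
    labels = None
    costs = []
    for _ in range(max_iter):
        # Step 1: assign every datum to a closest centroid (a 0-1 column of omega).
        d2 = ((X[:, None, :] - Z[None, :, :]) ** 2).sum(axis=2)   # n x k
        new_labels = d2.argmin(axis=1)
        costs.append(float(d2[np.arange(len(X)), new_labels].sum()))
        if labels is not None and np.array_equal(new_labels, labels):
            break                                  # assignment is stable: stop
        labels = new_labels
        # Step 2: move each centroid to the mean of its cluster.
        for i in range(k):
            members = X[labels == i]
            if len(members) > 0:                   # an empty cluster keeps its centroid
                Z[i] = members.mean(axis=0)
        costs.append(float(((X - Z[labels]) ** 2).sum()))
    return labels, Z, costs


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.1, (20, 2)), rng.normal(5.0, 0.1, (20, 2))])
labels, Z, costs = kmeans_lloyd(X, k=2)
# F never increases, matching the two inequalities in the proof of Theorem 1.
print(all(b <= a + 1e-9 for a, b in zip(costs, costs[1:])))
```

The stopping rule mirrors the end of the proof: the loop terminates once the assignment \(\omega\) stops changing, and the returned centroids are then minimizers of \({\textbf{F}}(\omega _\star ,\cdot )\) in this Euclidean setting.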

Theorem 1 revises the original statement in Marchese et al. (2017): the limit is a partial optimal point, and the algorithm need not converge to a local minimum of (\({\textbf{P}}\)).

Note that \({\textbf{F}}(\omega ,{\textbf{Z}})\) is differentiable as a function of \(\omega\) but is not differentiable in the classical sense as a function of \({\textbf{Z}}\), since \({\mathcal {D}}_2\) is not a linear space. However, since we restrict to \(({\mathcal {D}}_2, \textrm{W}_2)\), there is a differential structure; in particular, the squared distance function admits gradients in the Alexandrov sense (Turner et al. 2014).

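Given a point \(D\in {\mathcal {D}}_2\), let \(\Sigma _D\) be the set of all nontrivial unit-speed geodesics emanating from D. The angle between two geodesics \(\gamma _1,\gamma _2\in \Sigma _D\) is defined as

\[\angle _D(\gamma _1,\gamma _2)=\arccos \Big (\lim _{s,t\rightarrow 0^+}\frac{s^2+t^2-\textrm{W}_2^2(\gamma _1(s),\gamma _2(t))}{2st}\Big ).\]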

By identifying geodesics \(\gamma _1\sim \gamma _2\) that form a zero angle, we define the space of directions \(({\widehat{\Sigma }}_D,\angle _D)\) as the completion of \(\Sigma _D/\sim\) under the angular metric \(\angle _D\). The tangent cone \(T_D={\widehat{\Sigma }}_D\times [0,\infty )/{\widehat{\Sigma }}_D\times \{0\}\) is the Euclidean cone over \({\widehat{\Sigma }}_D\) with the cone metric defined as

\[d_{T_D}\big ([\gamma _1,t],[\gamma _2,s]\big )=\sqrt{t^2+s^2-2st\cos \angle _D(\gamma _1,\gamma _2)}.\]

The inner product is defined as \(\langle [\gamma _1,t],\, [\gamma _2,s] \rangle _D = st\cos \angle _D(\gamma _1,\gamma _2).\) Let \(v=[\gamma ,t]\in T_D\). For any function \(h:{\mathcal {D}}_2\rightarrow {\mathbb {R}}\), the differential of h at a point D is the map \(T_D\rightarrow {\mathbb {R}}\) defined by

\[\textrm{d}_Dh(v)=\lim _{s\rightarrow 0^+}\frac{h(\gamma _v(s))-h(D)}{s},\qquad \gamma _v(s)=\gamma (ts),\]

if the limit exists and is independent of the choice of \(\gamma _v\). The gradient of h at D is a tangent vector \(\nabla _Dh\in T_D\) such that (i) \(\textrm{d}_Dh(u)\le \langle \nabla _Dh,u \rangle _D\) for all \(u\in T_D\); and (ii) \(\textrm{d}_Dh(\nabla _Dh) = \langle \nabla _Dh,\nabla _Dh\rangle _D\). Let \(Q_D(\cdot )=\textrm{W}_2^2(\cdot ,D)\) be the squared distance to D.
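In flat Euclidean space the definitions above reduce to familiar objects, which gives a quick sanity check. The sketch below assumes \({\mathbb {R}}^3\) with straight-line geodesics \(\gamma _i(r)=D+ru_i\) for unit vectors \(u_i\) (an illustrative stand-in; \({\mathcal {D}}_2\) itself is not a Euclidean space). There the ratio inside the arccos equals \(\langle u_1,u_2\rangle\) for every s and t, and the cone metric equals the distance between the vectors \(tu_1\) and \(su_2\). The function names are hypothetical.

```python
import numpy as np


def angle_ratio(u1, u2, s, t):
    """Quantity inside arccos in the angle definition, for the straight-line
    geodesics gamma_i(r) = D + r * u_i in R^d (u_i unit vectors).
    The base point D cancels in gamma_1(s) - gamma_2(t), so it is omitted."""
    diff = s * u1 - t * u2
    return (s**2 + t**2 - diff @ diff) / (2 * s * t)


def cone_distance(u1, t, u2, s):
    """Cone metric between [gamma_1, t] and [gamma_2, s] for Euclidean directions."""
    return np.sqrt(t**2 + s**2 - 2 * s * t * (u1 @ u2))


rng = np.random.default_rng(0)
u1, u2 = rng.normal(size=(2, 3))
u1, u2 = u1 / np.linalg.norm(u1), u2 / np.linalg.norm(u2)
for s, t in [(1.0, 1.0), (1e-3, 2e-3), (1e-6, 5e-7)]:
    # In flat space the ratio does not depend on s, t: it equals cos of the usual angle.
    print(np.isclose(angle_ratio(u1, u2, s, t), u1 @ u2))
# The tangent cone at D is R^d itself: [gamma_i, t] corresponds to the vector t * u_i.
print(np.isclose(cone_distance(u1, 0.7, u2, 1.3), np.linalg.norm(0.7 * u1 - 1.3 * u2)))
```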

Lemma 2

(Turner et al. 2014)

1. \(Q_D\) is Lipschitz continuous on any bounded set;

2. The differential and gradient are well-defined for \(Q_D\);

3. If \(D'\) is a local minimum of \(Q_D\), then \(\nabla _{D'}Q_D=0\).

Since \({\textbf{F}}\) is a linear combination of squared distance functions, by Lemma 2, the gradient exists for any variable \(Z_i\). Thus, we formally write the gradient of \({\textbf{F}}\) as \(\nabla _{{\textbf{Z}}}{\textbf{F}} = (\nabla _{Z_1}{\textbf{F}},\ldots ,\nabla _{Z_k}{\textbf{F}}).\)

Following Selim and Ismail (1984), we define the KKT points for (\({\textbf{P}}\)) as follows.

Definition 7

A point \((\omega _\bullet ,{\textbf{Z}}_\bullet )\) is a Karush–Kuhn–Tucker (KKT) point of (\({\textbf{P}}\)) if there exist \(\mu _1,\ldots ,\mu _n\in {\mathbb {R}}\) such that

where \(\varvec{1}\) is the all-1 vector.

Theorem 2

The partial optimal points of (\({\textbf{P}}\)) are KKT points of (\({\textbf{P}}\)).

Proof

Suppose \((\omega _\star ,{\textbf{Z}}_\star )\) is a partial optimal point. Since \(\omega _\star\) is a minimizer of the function \({\textbf{F}}(\cdot ,{\textbf{Z}}_\star )\), it satisfies the KKT conditions of the constrained optimization problem

\[\min _{\omega }\ {\textbf{F}}(\omega ,{\textbf{Z}}_\star )\quad \text{subject to}\quad \omega \in \Theta ,\]

which are exactly the first two conditions of being a KKT point. Similarly, since \({\textbf{Z}}_\star\) is a minimizer of the function \({\textbf{F}}(\omega _\star ,\cdot )\), by Lemma 2, the gradient vector is zero, which is the third condition of a KKT point. Thus, \((\omega _\star ,{\textbf{Z}}_\star )\) is a KKT point of (\({\textbf{P}}\)). \(\square\)

Conversely, suppose \((\omega _\star ,{\textbf{Z}}_\star )\) is a KKT point. Since \({\textbf{F}}(\cdot ,{\textbf{Z}}_\star )\) is a linear function of \(\omega\) and \(\Theta\) is defined by linear constraints, the first two conditions are sufficient for \(\omega _\star\) to be a minimizer of \({\textbf{F}}(\cdot ,{\textbf{Z}}_\star )\) over \(\Theta\). Thus \(\omega _\star\) satisfies the first condition of a partial optimal point. However, the condition \(\nabla _{{\textbf{Z}}}{\textbf{F}}(\omega _\star ,{\textbf{Z}}_\star )=0\) does not guarantee that \({\textbf{Z}}_\star\) satisfies the second condition of a partial optimal point. Note that the original proof of Theorem 4 in Selim and Ismail (1984) is also incomplete.

Moreover, being a partial optimal point or KKT point is not sufficient for being a local minimizer of the original optimization problem. A counterexample can be found in Selim and Ismail (1984).

3.2 Convergence to local minima

We now characterize the local minimality of \(\omega\) using directional derivatives of the objective function \(f(\omega )\) in (\({\textbf{RP}}\)). For any fixed \(\omega _0\in \Theta\), let \(v\in {\mathbb {R}}^{kn}\) be a feasible direction, i.e., \(\omega _0+tv\in \Theta\) for all sufficiently small \(t>0\). The directional derivative of \(f(\omega )\) along v is defined as

\[Df(\omega _0;v)=\lim _{t\rightarrow 0^+}\frac{f(\omega _0+tv)-f(\omega _0)}{t},\]

if the limit exists. Let \({\mathcal {Z}}(\omega _0)\) be the set of all \({\textbf{Z}}\) minimizing the function \({\textbf{F}}(\omega _0,\cdot )\). We have the following explicit formula for the directional derivatives of \(f(\omega )\).

Lemma 3

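For any \(\omega _0\in \Theta\) and any feasible direction v, the directional derivative \(Df(\omega _0;v)\) exists, and

\[Df(\omega _0;v)=\min _{{\textbf{Z}}\in {\mathcal {Z}}(\omega _0)}\ \sum _{i=1}^{k}\sum _{j=1}^{n}v_{ij}\,\textrm{W}_2^2(Z_i,D_j).\]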

Proof

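Denote

\[L(\omega _0;v)=\min _{{\textbf{Z}}\in {\mathcal {Z}}(\omega _0)}{\textbf{F}}(v,{\textbf{Z}}),\qquad \text{where } {\textbf{F}}(v,{\textbf{Z}})=\sum _{i=1}^{k}\sum _{j=1}^{n}v_{ij}\,\textrm{W}_2^2(Z_i,D_j).\]

Since \({\textbf{F}}\) is linear in its first argument, \({\textbf{F}}(\omega _0+tv,{\textbf{Z}})={\textbf{F}}(\omega _0,{\textbf{Z}})+t\,{\textbf{F}}(v,{\textbf{Z}})\). For any \({\textbf{Z}}_\star \in {\mathcal {Z}}(\omega _0)\) and \(t>0\), we have \(f(\omega _0)={\textbf{F}}(\omega _0,{\textbf{Z}}_\star )\) and \(f(\omega _0+tv)\le {\textbf{F}}(\omega _0+tv,{\textbf{Z}}_\star )\), so

\[f(\omega _0+tv)-f(\omega _0)\le t\,{\textbf{F}}(v,{\textbf{Z}}_\star ).\]

Dividing by t and minimizing over \({\textbf{Z}}_\star \in {\mathcal {Z}}(\omega _0)\), we have

\[\limsup _{t\rightarrow 0^+}\frac{f(\omega _0+tv)-f(\omega _0)}{t}\le L(\omega _0;v). \tag{3}\]

It suffices to prove

\[\liminf _{t\rightarrow 0^+}\frac{f(\omega _0+tv)-f(\omega _0)}{t}\ge L(\omega _0;v).\]

To do this, let \(\{t_j\}\) be a sequence of positive numbers tending to 0 such that

\[\frac{f(\omega _0+t_jv)-f(\omega _0)}{t_j}\rightarrow \liminf _{t\rightarrow 0^+}\frac{f(\omega _0+tv)-f(\omega _0)}{t}\]

as \(j\rightarrow \infty\). Choose \({\textbf{Z}}_j\in {\mathcal {Z}}(\omega _0+t_jv)\) for each j. Since \({\textbf{S}}^k\) is relatively compact, there is a subsequence of \(\{{\textbf{Z}}_j\}\) converging to some point \({\textbf{Z}}_\star\); for convenience, we still denote the subsequence by \(\{{\textbf{Z}}_j\}\). By Lemma 2, \({\textbf{F}}(\omega ,{\textbf{Z}})\) is continuous. Thus, for any \({\textbf{Z}}\in {\textbf{S}}^k\),

\[{\textbf{F}}(\omega _0,{\textbf{Z}}_\star )=\lim _{j\rightarrow \infty }{\textbf{F}}(\omega _0+t_jv,{\textbf{Z}}_j)\le \lim _{j\rightarrow \infty }{\textbf{F}}(\omega _0+t_jv,{\textbf{Z}})={\textbf{F}}(\omega _0,{\textbf{Z}}).\]

That is, \({\textbf{Z}}_\star \in {\mathcal {Z}}(\omega _0)\). Therefore, using \(f(\omega _0)\le {\textbf{F}}(\omega _0,{\textbf{Z}}_j)\), we have

\[\liminf _{t\rightarrow 0^+}\frac{f(\omega _0+tv)-f(\omega _0)}{t}=\lim _{j\rightarrow \infty }\frac{f(\omega _0+t_jv)-f(\omega _0)}{t_j}\ge \lim _{j\rightarrow \infty }\frac{{\textbf{F}}(\omega _0+t_jv,{\textbf{Z}}_j)-{\textbf{F}}(\omega _0,{\textbf{Z}}_j)}{t_j}=\lim _{j\rightarrow \infty }{\textbf{F}}(v,{\textbf{Z}}_j)={\textbf{F}}(v,{\textbf{Z}}_\star )\ge L(\omega _0;v).\]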

Combining with (3), we showed that the limit exists and is equal to \(L(\omega _0;v)\). \(\square\)
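Lemma 3 can be checked against a finite difference in the same Euclidean stand-in used for illustration elsewhere (squared Euclidean distance in place of \(\textrm{W}_2^2\); an assumption, not the persistence diagram setting). When every row of \(\omega _0\) has positive weight, \({\mathcal {Z}}(\omega _0)\) consists of a single point (the weighted means), so the formula in Lemma 3 reduces to \({\textbf{F}}(v,{\textbf{Z}}_\star )\). The names `optimal_centroids`, `F` and `f` are hypothetical helpers.

```python
import numpy as np


def optimal_centroids(omega, X):
    """Minimizer Z of F(omega, .) in the Euclidean stand-in: omega-weighted means
    (unique when every row of omega has positive total weight)."""
    return (omega @ X) / omega.sum(axis=1, keepdims=True)


def F(omega, Z, X):
    """F(omega, Z) = sum_ij omega_ij * ||Z_i - x_j||^2; linear in omega, so it
    also makes sense for a direction v that is not in Theta."""
    d2 = ((Z[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)   # k x n
    return float((omega * d2).sum())


def f(omega, X):
    return F(omega, optimal_centroids(omega, X), X)


rng = np.random.default_rng(0)
k, n = 3, 8
X = rng.normal(size=(n, 2))
omega0 = rng.random((k, n))
omega0 /= omega0.sum(axis=0)
omega1 = rng.random((k, n))
omega1 /= omega1.sum(axis=0)
v = omega1 - omega0                     # feasible: omega0 + t v lies in Theta for t in [0, 1]
Z_star = optimal_centroids(omega0, X)   # the unique element of Z(omega0) here
formula = F(v, Z_star, X)               # right-hand side of Lemma 3
t = 1e-6
finite_diff = (f(omega0 + t * v, X) - f(omega0, X)) / t
print(formula, finite_diff)
```

The two printed numbers should agree up to the \(O(t)\) error of a forward difference.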

Since, by Lemma 3, \(f(\omega )\) is directionally differentiable on the convex set \(\Theta\), the following first-order necessary condition for local minima is a standard result in optimization.

Theorem 3

If \(\omega _\star\) is a local minimizer of \(f(\omega )\) over \(\Theta\), then for any feasible direction \(v\in {\mathbb {R}}^{kn}\) at \(\omega _\star\),

\[Df(\omega _\star ;v)\ge 0.\]
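The first-order condition of Theorem 3 can be observed on a toy problem. The sketch below again assumes the Euclidean stand-in (squared Euclidean distance, arithmetic means) and finds a global minimizer by brute force over all \(k^n\) hard assignments. At an extreme point \(\omega _\star\) with nonempty clusters, the feasible directions are nonnegative combinations of \(v=e_{i'j}-e_{i(j)j}\) (move datum j from its cluster \(i(j)\) to \(i'\)), and \(Df(\omega _\star ;\cdot )\) is linear on them because \({\mathcal {Z}}(\omega _\star )\) is a single point here, so checking these generators suffices. All function names are hypothetical.

```python
import itertools

import numpy as np


def partition_cost(labels, X, k):
    """Cost F and centroids of a hard partition (Euclidean stand-in for W_2^2)."""
    Z = np.array([X[labels == i].mean(axis=0) for i in range(k)])
    return float(((X - Z[labels]) ** 2).sum()), Z


def best_partition(X, k):
    """Exhaustive search over all k**n assignments with no empty cluster."""
    best = None
    for combo in itertools.product(range(k), repeat=len(X)):
        labels = np.array(combo)
        if len(np.unique(labels)) < k:
            continue
        cost, Z = partition_cost(labels, X, k)
        if best is None or cost < best[0]:
            best = (cost, labels, Z)
    return best


def vertex_directional_derivatives(labels, Z, X):
    """Df(omega*; v) for v = e_{i'j} - e_{i(j)j} (move datum j to cluster i')."""
    own = ((X - Z[labels]) ** 2).sum(axis=1)
    return [float(((Z[i] - x) ** 2).sum() - own[j])
            for j, x in enumerate(X) for i in range(len(Z)) if i != labels[j]]


rng = np.random.default_rng(0)
X = rng.normal(size=(6, 2))
_, labels_star, Z_star = best_partition(X, k=2)
derivs = vertex_directional_derivatives(labels_star, Z_star, X)
print(min(derivs) >= -1e-12)   # first-order condition of Theorem 3 along these directions
```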

4 Numerical experiments

We experimentally evaluate and compare the performance of the various persistence diagram representations in the k-means algorithm on simulated data as well as on a benchmark shape dataset. Our experimental setup differs from that of the previous study by Marchese et al. (2017), which compares persistence-based clustering to other, non-persistence-based machine learning clustering algorithms. Here, we are interested in the performance of the k-means algorithm in the context of persistent homology. The aim of our study is not to establish the superiority of persistence-based k-means clustering over other existing statistical or machine learning clustering methods, but rather to understand the behavior of the k-means clustering algorithm in settings of persistent homology. The practical benefit of such a study is that it makes k-means clustering feasible in certain settings where it otherwise would not be. A notable example, which we study in Sect. 4.2, is data comprising sets of point clouds: the natural Gromov–Hausdorff metric between point clouds is difficult to compute, and moreover, the Fréchet mean for sets of point clouds, which is crucial for implementing k-means, has not yet been defined or studied, to the best of our knowledge.

Specifically, in our numerical experiments, we implement the k-means clustering algorithm on vectorized persistence diagrams, persistence diagrams, and persistence measures. In the spirit of machine learning, many embedding methods have been proposed for persistence diagrams so that existing statistical methods and machine learning algorithms can be applied to these vectorized representations, as discussed earlier. We systematically compare the performance of k-means on three of the most popular embeddings. We also implement k-means on persistence diagrams themselves and on persistence measures, which requires adapting the algorithm to use the respective metrics and the appropriate means. In total, we study five representations of persistent homology in the k-means algorithm: three embeddings of persistence diagrams, persistence diagrams themselves, and their generalization to persistence measures.

4.1 Embedding persistence diagrams

The algebraic topological construction of persistence diagrams outlined in Sect. 2.1 results in a highly nonlinear data structure to which existing statistical and machine learning methods cannot be directly applied. The problem of embedding persistence diagrams has been researched extensively in TDA, with many proposed vectorizations. In this paper, we study three of the most commonly used persistence diagram embeddings and their performance in the k-means clustering algorithm: Betti curves; persistence landscapes (Bubenik 2015b); and persistence images (Adams et al. 2017). These vectorized persistence diagrams can be used directly as input to the original, non-persistence-based k-means algorithm. In this sense, we compare k-means clustering adapted to persistent homology with classical k-means clustering on vectors computed from the output of persistent homology (persistence diagrams). The experiment remains restricted to the k-means algorithm as a clustering method and to persistent homology, but it nevertheless compares the algorithm in two distinct metric spaces.

The Betti curve of a persistence diagram D is the function that maps \(z \in {\mathbb {R}}\) to the number of points (x, y) in D, counted with multiplicity, such that \(z \in [x, y)\). Figure 3a shows an example of a persistence diagram, and Fig. 3b shows its Betti curve. The Betti curve is a simplification of the persistence diagram: it records only how many features are alive at each value z, so the pairing of births with deaths, and hence the persistence of individual points, is lost. The Betti curve is turned into a vector by sampling the function at regular intervals.
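A minimal sketch of the Betti curve vectorization follows, assuming a diagram is stored as an (m, 2) NumPy array of (birth, death) pairs and that the sampling grid is supplied by the user (both are assumptions; the grid used in the experiments is not specified here). The function name `betti_curve` is hypothetical.

```python
import numpy as np


def betti_curve(diagram, grid):
    """Sample the Betti curve of a persistence diagram on a grid.

    diagram: array-like of shape (m, 2) with rows (birth x, death y).
    Returns, for each z in grid, the number of points (x, y) with x <= z < y,
    counted with multiplicity (repeated rows count separately).
    """
    D = np.asarray(diagram, dtype=float).reshape(-1, 2)
    z = np.asarray(grid, dtype=float)[:, None]                # shape (len(grid), 1)
    return ((D[:, 0] <= z) & (z < D[:, 1])).sum(axis=1)        # half-open [x, y)


D = np.array([[0.0, 2.0], [1.0, 3.0]])
print(betti_curve(D, [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]))   # [1 1 2 2 1 1 0]
```

The example also shows the information loss: the diagram \(\{(0,3),(1,2)\}\) produces exactly the same vector. In practice the grid would be a regular one such as `np.linspace(0.0, t_max, 100)`, with `t_max` a user-chosen upper bound (hypothetical).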

Persistence landscapes (Bubenik 2015b) were introduced as functional summaries of persistence diagrams that integrate readily with statistical methodology. The persistence landscape is a set of functions \(\{\lambda _k\}_{k \in {\mathbb {N}}}\) where \(\lambda _k(t)\) is the kth largest value of

\[\max \{0,\ \min (t-x,\ y-t)\}\quad \text{over all points } (x,y)\in D\]

(with \(\lambda _k(t)=0\) if D has fewer than k points).

Figure 3c continues the example of Fig. 3a and shows the corresponding persistence landscape. The persistence landscape vector is constructed in the same way as the Betti curve vector, except that several functions \(\lambda _k\) are sampled for a single persistence diagram.
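The landscape definition can be sketched directly with NumPy. This is a hypothetical helper, assuming the same (m, 2) array format for diagrams, a user-chosen grid, and a user-chosen number of landscape functions; it evaluates the "tent" function \(\max \{0,\min (t-x,y-t)\}\) of every point on the grid and sorts the tents in each column in decreasing order.

```python
import numpy as np


def persistence_landscape(diagram, grid, num_landscapes=3):
    """Sample lambda_1, ..., lambda_K of a persistence diagram on a grid.

    lambda_k(t) is the k-th largest value of max(0, min(t - x, y - t)) over
    the points (x, y) of the diagram (zero if there are fewer than k points).
    Returns an array of shape (num_landscapes, len(grid)).
    """
    diagram = np.asarray(diagram, dtype=float).reshape(-1, 2)
    t = np.asarray(grid, dtype=float)[None, :]             # shape (1, len(grid))
    x, y = diagram[:, :1], diagram[:, 1:]                   # shapes (m, 1)
    tents = np.maximum(0.0, np.minimum(t - x, y - t))       # shape (m, len(grid))
    tents = -np.sort(-tents, axis=0)                        # sort each column descending
    out = np.zeros((num_landscapes, t.shape[1]))
    m = min(num_landscapes, tents.shape[0])
    out[:m] = tents[:m]
    return out


L = persistence_landscape([[0.0, 2.0], [1.0, 3.0]], [0.0, 1.0, 1.5, 2.0, 3.0], num_landscapes=2)
# lambda_1 = [0, 1, 0.5, 1, 0] and lambda_2 = [0, 0, 0.5, 0, 0] on this grid
vector = L.ravel()   # one possible way to combine the sampled functions into a vector
```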

The persistence image (Adams et al. 2017) is a 2-dimensional representation of a persistence diagram as a collection of pixel values, shown in Fig. 3d. The persistence image is obtained from a persistence diagram by discretizing its persistence surface, which is a weighted sum of probability distributions. The original construction in Adams et al. (2017) uses Gaussians, which we also use in this paper. The pixel values are concatenated row by row to form a single vector representing one persistence diagram.
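A minimal persistence image sketch follows. Several choices here are assumptions rather than the settings used in the experiments: points are mapped to (birth, persistence) coordinates, weighted linearly by their persistence, smoothed by isotropic Gaussians with a user-chosen `sigma`, and integrated exactly over each pixel of a user-chosen grid using the error function (`math.erf`). The function names are hypothetical.

```python
import math

import numpy as np

_erf = np.vectorize(math.erf, otypes=[float])   # elementwise error function


def _interval_masses(edges, centers, sigma):
    """Mass of N(c, sigma^2) in each interval [edges[a], edges[a+1]], per center c."""
    e = np.asarray(edges, dtype=float)[None, :]
    cdf = 0.5 * (1.0 + _erf((e - centers[:, None]) / (sigma * math.sqrt(2.0))))
    return np.diff(cdf, axis=1)                  # shape (m, len(edges) - 1)


def persistence_image(diagram, birth_edges, pers_edges, sigma=0.1):
    """Persistence image with Gaussian kernels on a user-chosen pixel grid.

    Each point (x, y) is mapped to (x, y - x) and weighted by its persistence
    y - x (an assumed linear weighting); each pixel value is the exact integral
    of the resulting persistence surface over that pixel.
    Returns an array with one row per persistence bin and one column per birth bin.
    """
    D = np.asarray(diagram, dtype=float).reshape(-1, 2)
    birth, pers = D[:, 0], D[:, 1] - D[:, 0]
    gb = _interval_masses(birth_edges, birth, sigma)   # (m, number of birth bins)
    gp = _interval_masses(pers_edges, pers, sigma)     # (m, number of persistence bins)
    return np.einsum("m,mb,mp->pb", pers, gb, gp)


img = persistence_image([[0.0, 1.0], [0.5, 0.8]],
                        np.linspace(-1.0, 2.0, 31), np.linspace(-1.0, 2.0, 31))
vector = img.ravel()   # concatenate pixel rows into one feature vector
```

Flattening with `ravel()` in NumPy's default row-major order matches the row-by-row concatenation described above.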

The persistence image is the only representation we study that lies in Euclidean space; Betti curves and persistence landscapes are functions and therefore lie in function space.

We remark that k-means is notably more time-consuming on raw persistence diagrams than on embedded persistence diagrams. This is because updating centroids of persistence diagrams (i.e., computing mean persistence measures and Fréchet means) involves expensive algorithms and techniques to approximate the Wasserstein distance and to search for local minima of the Fréchet function (1) (Turner et al. 2014; Flamary et al. 2021; Lacombe et al. 2018).

4.2 Simulated data

We generated datasets equipped with known labels, so any clustering output can be compared to these labels using the Adjusted Rand Index (ARI), a performance metric for which a score of 1 indicates perfect agreement between the clustering output and the true labels, and a score near 0 indicates agreement no better than random clustering (Hubert and Arabie 1985).
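For reference, the ARI can be computed from the contingency table of the two labelings. In practice one would typically call scikit-learn's `sklearn.metrics.adjusted_rand_score`; the standard-library sketch below only makes the formula explicit, and the function name `adjusted_rand_index` is hypothetical.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand Index (Hubert and Arabie 1985) computed from the
    contingency table of two labelings of the same n >= 2 items."""
    n = len(labels_true)
    if n != len(labels_pred) or n < 2:
        raise ValueError("need two labelings of the same n >= 2 items")
    pair_sum = sum(comb(c, 2) for c in Counter(zip(labels_true, labels_pred)).values())
    true_sum = sum(comb(c, 2) for c in Counter(labels_true).values())
    pred_sum = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = true_sum * pred_sum / comb(n, 2)      # expected index under random labeling
    max_index = (true_sum + pred_sum) / 2
    if max_index == expected:                        # degenerate case, e.g. one cluster each
        return 1.0
    return (pair_sum - expected) / (max_index - expected)


print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))   # 1.0: same partition, relabeled
print(adjusted_rand_index([0, 0, 1, 1], [0, 0, 1, 2]))   # 4/7, about 0.571
```

The first example illustrates that the ARI ignores the names of the clusters, which is essential because k-means outputs arbitrary cluster labels.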

We simulated point clouds sampled from the surfaces of 3 classes of common topological shapes: the circle \(S^1\), the sphere \(S^2\), and the torus \(T^2\). In terms of homology, a circle is characterized by one connected component and one loop, so \(H_0(S^1) \cong {\mathbb {Z}}\) and \(H_1(S^1) \cong {\mathbb {Z}}\). Similarly, a sphere is characterized by one connected component and one void (bubble), so \(H_0(S^2) \cong {\mathbb {Z}}\) and \(H_2(S^2) \cong {\mathbb {Z}}\); a sphere has no nontrivial loops, since every loop traced on its surface can be continuously deformed to a single point, so \(H_1(S^2) \cong 0\). Finally, the torus has one connected component, so \(H_0(T^2) \cong {\mathbb {Z}}\), and one void, so \(H_2(T^2) \cong {\mathbb {Z}}\); moreover, there are two independent loops on the torus that cannot be continuously deformed to a point, one that encircles the “donut hole” and a smaller one that encircles the “thickness of the donut”, so \(H_1(T^2) \cong {\mathbb {Z}} \times {\mathbb {Z}}\).

We add noise from the uniform distribution on \([-s,s]\) coordinate-wise to each data point of the point clouds, where s stands for the noise scale. Figure 4 shows noisy point cloud data from the torus with different noise scales.
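The noise model can be sketched for the torus as follows. The radii `R` and `r` and the sampling of the two angles uniformly in parameter space (which is not uniform with respect to surface area) are assumptions for illustration; the exact sampling scheme of the experiments is not specified here. The function name `sample_torus` is hypothetical, and circles and spheres would be handled analogously.

```python
import numpy as np


def sample_torus(n, R=2.0, r=1.0, s=0.0, rng=None):
    """Sample n points on a torus in R^3 and add Uniform[-s, s] noise per coordinate.

    R: distance from the center of the tube to the center of the torus.
    r: radius of the tube. Angles are drawn uniformly in [0, 2*pi).
    """
    rng = np.random.default_rng(rng)
    theta, phi = rng.uniform(0.0, 2 * np.pi, size=(2, n))
    pts = np.column_stack([
        (R + r * np.cos(phi)) * np.cos(theta),
        (R + r * np.cos(phi)) * np.sin(theta),
        r * np.sin(phi),
    ])
    return pts + rng.uniform(-s, s, size=pts.shape)   # coordinate-wise uniform noise


clean = sample_torus(500, s=0.0, rng=0)
noisy = sample_torus(500, s=0.1, rng=0)   # same angles, noise scale s = 0.1
```

With the same seed, the angles are drawn before the noise, so `noisy - clean` isolates the added noise, whose entries lie in \([-s,s]\).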

Table 1a shows the results of k-means clustering with 3 clusters on the five persistent homology representations of the simulated dataset. We fix the number of clusters to 3, given our prior knowledge of the data and because our focus is to compare the accuracy of the k-means algorithms consistently. Since circles, spheres, and tori differ substantially in topology, as described previously, the results of the k-means algorithm are consistent with our knowledge of the topology of these classes of shapes. Figure 5 presents the persistence diagrams of the three shapes we study.

Table 1a and c show the average results from 100 repetitions comparing the persistence landscapes and persistence images, and the persistence diagrams and persistence measures, respectively. We see that persistence measures outperform persistence diagrams with consistently higher scores on average. Among the embedded persistence diagrams, the persistence landscapes produced the best clustering results, followed by the persistence images, and finally the Betti curves, which were not able to cluster accurately for any level of noise.

4.3 3D shape matching data

We now demonstrate our framework on a benchmark dataset from 3D shape matching (Sumner and Popović 2004). This dataset contains 8 classes: Horse, Camel, Cat, Lion, Face, Head, Flamingo, Elephant. Each class contains triangle meshes representing different poses. See Fig. 6 for a visual illustration.

For each triangle mesh, we extract the coordinates of its vertices to obtain a point cloud and then compute the persistent homology of the point cloud. We apply the k-means clustering algorithm to raw and embedded persistence diagrams. Table 2 shows that the best ARI score is attained at \(k=3\). Moreover, we find that most point clouds in Face and Head form one cluster, Horse and Camel form a second, and Cat and Lion form a third, with the remaining classes scattered across all these clusters. Our results are consistent with a similar clustering experiment previously performed by Lacombe et al. (2018), who find two main clusters on a smaller database with 6 classes. We also find that persistence diagrams perform slightly better than persistence measures on this dataset. Among embedded persistence diagrams, persistence landscapes give the best clustering results, followed by persistence images and Betti curves, which is consistent with the performance on the simulated datasets in the previous section.

4.4 Software and data availability

The code used to perform all experiments is publicly available at the GitHub repository: https://github.com/pruskileung/PH-kmeans.

5 Discussion

In this paper, we studied the k-means clustering algorithm in the context of persistent homology. We studied the subtleties of the convergence of the k-means algorithm in persistence diagram space and in the KKT framework, which is a nontrivial problem given the highly nonlinear geometry of persistence diagram space. Specifically, we showed that the algorithm converges to a partial optimal point, that partial optimal points are KKT points, and we gave a first-order necessary condition for local minima in terms of directional derivatives. These results refine, generalize, and extend the existing study by Marchese et al. (2017), which shows convergence to a partial optimal point that need not be a local minimum. Experimentally, we studied and compared the performance of the algorithm on three embeddings of persistence diagrams, and we modified the algorithm to take as input persistence diagrams themselves as well as their generalizations to persistence measures. We found empirically that clustering directly on persistence diagrams and persistence measures gave better results than clustering on vectorized persistence diagrams, suggesting a loss of structural information in the most popular persistence diagram embeddings.

Our results inspire new directions for future studies, such as other theoretical aspects of k-means clustering in the persistence diagram and persistence measure setting, for example, convergence to the “correct” clustering as the number of input persistence diagrams and persistence measures grows; and other properties of the algorithm such as convexity, other local or global optimizers, and analysis of the cost function. The problem of information preservation in persistence embedding has been studied previously: statistically, an embedding based on tropical geometry results in no loss of statistical information via the concept of sufficiency (Monod et al. 2019). Additional studies from the perspective of entropy would facilitate a better understanding and quantification of the information lost in embedding persistence diagrams that negatively affects the k-means algorithm. This in turn would inspire more accurate persistence diagram embeddings for machine learning. Another possible future direction for research is to adapt other clustering methods, including deep learning-based methods, to the setting of persistent homology. This would then allow for a comprehensive study of a wide variety of statistical and machine learning approaches for clustering in the context of persistent homology.

References

Adams H, Emerson T, Kirby M, Neville R, Peterson C, Shipman P, Chepushtanova S, Hanson E, Motta F, Ziegelmeier L (2017) Persistence images: a stable vector representation of persistent homology. J Mach Learn Res 18(8):1–35

Arthur D, Vassilvitskii S (2006) k-means++: The advantages of careful seeding. Technical Report 2006-13, Stanford InfoLab

Bergomi MG, Baratè A (2020) Homological persistence in time series: an application to music classification. J Math Music 14(2):204–221

Bhattacharya S, Ghrist R, Kumar V (2015) Persistent homology for path planning in uncertain environments. IEEE Transact Robot 31(3):578–590. https://doi.org/10.1109/TRO.2015.2412051

Billard L, Diday E (2000) Regression analysis for interval-valued data, data analysis, classification, and related methods. Springer, pp 369–374

Blanchard M, Jaffe AQ (2022) Fréchet mean set estimation in the Hausdorff metric, via relaxation. arXiv preprint arXiv:2212.12057

Boyd SP, Vandenberghe L (2004) Convex optimization. Cambridge University Press

Bubenik P (2015) Statistical topological data analysis using persistence landscapes. J Mach Learn Res 16(3):77–102


Cao L, McLaren D, Plosker S (2022) Centrosymmetric stochastic matrices. Linear Multilinear Algebr 70(3):449–464

Chazal F, De Silva V, Glisse M, Oudot S (2016) The structure and stability of persistence modules. Springer

Crawford L, Monod A, Chen AX, Mukherjee S, Rabadán R (2020) Predicting clinical outcomes in glioblastoma: an application of topological and functional data analysis. J Am Stat Assoc 115(531):1139–1150. https://doi.org/10.1080/01621459.2019.1671198

De Amorim RC, Hennig C (2015) Recovering the number of clusters in data sets with noise features using feature rescaling factors. Inf Sci 324:126–145

de Silva V, Ghrist R (2007) Coverage in sensor networks via persistent homology. Algeb Geom Topol 7(1):339–358. https://doi.org/10.2140/agt.2007.7.339

Divol V, Chazal F (2019) The density of expected persistence diagrams and its kernel based estimation. J Comput Geom 10(2):127–153

Divol V, Lacombe T (2021) Estimation and quantization of expected persistence diagrams. International conference on machine learning, pp 2760–2770

Divol V, Lacombe T (2021) Understanding the topology and the geometry of the space of persistence diagrams via optimal partial transport. J Appl Comput Topol 5(1):1–53

Dryden IL, Koloydenko A, Zhou D (2009) Non-Euclidean statistics for covariance matrices, with applications to diffusion tensor imaging. Ann Appl Stat 3(3):1102–1123. https://doi.org/10.1214/09-AOAS249

Edelsbrunner H, Letscher D, Zomorodian A (2002) Topological persistence and simplification. Discr Comput Geom 28(4):511–533. https://doi.org/10.1007/s00454-002-2885-2

Emmett K, Schweinhart B, Rabadan R (2015) Multiscale topology of chromatin folding

Flamary R, Courty N, Gramfort A, Alaya MZ, Boisbunon A, Chambon S, Chapel L, Corenflos A, Fatras K, Fournier N, Gautheron L, Gayraud NT, Janati H, Rakotomamonjy A, Redko I, Rolet A, Schutz A, Seguy V, Sutherland DJ, Tavenard R, Tong A, Vayer T (2021) POT: Python optimal transport. J Mach Learn Res 22(78):1–8

Frosini P (1992) Measuring shapes by size functions. In: Intelligent robots and computer vision X: algorithms and techniques, vol 1607, pp 122–134. International society for optics and photonics

Frosini P, Landi C (2001) Size functions and formal series. Appl Algebr Eng Commun Comput 12(4):327–349. https://doi.org/10.1007/s002000100078

Gameiro M, Hiraoka Y, Izumi S, Kramár M, Mischaikow K, Nanda V (2014) A topological measurement of protein compressibility. Japan J Ind Appl Math 32:1–17. https://doi.org/10.1007/s13160-014-0153-5

Ghrist R (2008) Barcodes: the persistent topology of data. Bull Am Math Soc 45(1):61–75

Goutte C, Hansen LK, Liptrot MG, Rostrup E (2001) Feature-space clustering for FMRI meta-analysis. Human Brain Mapp 13(3):165–183

Hartigan JA, Wong MA (1979) Algorithm AS 136: a \(k\)-means clustering algorithm. Appl Stat 28(1):100. https://doi.org/10.2307/2346830

Hiraoka Y, Nakamura T, Hirata A, Escolar EG, Matsue K, Nishiura Y (2016) Hierarchical structures of amorphous solids characterized by persistent homology. Proc Natl Acad Sci 113(26):7035–7040

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1):193–218. https://doi.org/10.1007/BF01908075

Islambekov U, Gel YR (2019) Unsupervised space-time clustering using persistent homology. Environmetrics 30(4):e2539. https://doi.org/10.1002/env.2539

Ismail MS, Hussain SI, Noorani MSM (2020) Detecting early warning signals of major financial crashes in bitcoin using persistent homology. IEEE Access 8:202042–202057. https://doi.org/10.1109/ACCESS.2020.3036370

Kovacev-Nikolic V, Bubenik P, Nikolić D, Heo G (2016) Using persistent homology and dynamical distances to analyze protein binding. Stat Appl Genet Mol Biol. https://doi.org/10.1515/sagmb-2015-0057

Lacombe T, Cuturi M, Oudot S (2018) Large scale computation of means and clusters for persistence diagrams using optimal transport. arXiv:1805.08331 [cs, stat]

Le H, Kume A (2000) The Fréchet mean shape and the shape of the means. Adv Appl Probab 32(1):101–113. https://doi.org/10.1239/aap/1013540025

Majumdar S, Laha AK (2020) Clustering and classification of time series using topological data analysis with applications to finance. Expert Syst Appl 162:113868. https://doi.org/10.1016/j.eswa.2020.113868

Marchese A, Maroulas V, Mike J (2017) \(K\)-means clustering on the space of persistence diagrams. Wavel Sparsity XVII 10394:103940W. https://doi.org/10.1117/12.2273067

Mileyko Y, Mukherjee S, Harer J (2011) Probability measures on the space of persistence diagrams. Invers Probl 27(12):124007. https://doi.org/10.1088/0266-5611/27/12/124007

Miolane N, Guigui N, Le Brigant A, Mathe J, Hou B, Thanwerdas Y, Heyder S, Peltre O, Koep N, Zaatiti H, Hajri H, Cabanes Y, Gerald T, Chauchat P, Shewmake C, Brooks D, Kainz B, Donnat C, Holmes S, Pennec X (2020) Geomstats: a Python package for Riemannian geometry in machine learning. J Mach Learn Res 21(1)

Monod A, Kališnik S, Patino-Galindo JA, Crawford L (2019) Tropical sufficient statistics for persistent homology. SIAM J Appl Algebr Geom 3(2):337–371. https://doi.org/10.1137/17M1148037

Murayama B, Kobayashi M, Aoki M, Ishibashi S, Saito T, Nakamura T, Teramoto H, Taketsugu T (2023) Characterizing reaction route map of realistic molecular reactions based on weight rank clique filtration of persistent homology. J Chem Theor Comput. https://doi.org/10.1021/acs.jctc.2c01204

Otter N, Porter MA, Tillmann U, Grindrod P, Harrington HA (2017) A roadmap for the computation of persistent homology. EPJ Data Sci 6(1):17. https://doi.org/10.1140/epjds/s13688-017-0109-5

Panagopoulos D (2022) Topological data analysis and clustering. arXiv preprint arXiv:2201.09054

Pham DT, Dimov SS, Nguyen CD (2005) Selection of k in k-means clustering. Proc Inst Mech Eng Part C J Mech Eng Sci 219(1):103–119

Pokorny FT, Hawasly M, Ramamoorthy S (2016) Topological trajectory classification with filtrations of simplicial complexes and persistent homology. Int J Robot Res 35(1–3):204–223. https://doi.org/10.1177/0278364915586713

Reininghaus J, Huber S, Bauer U, Kwitt R (2015) A stable multi-scale kernel for topological machine learning. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR), Boston, MA, USA, pp 4741–4748. IEEE

Selim SZ, Ismail MA (1984) K-means-type algorithms: a generalized convergence theorem and characterization of local optimality. IEEE Transact Pattern Anal Mach Intell 1:81–87

Sumner RW, Popović J (2004) Deformation transfer for triangle meshes. ACM Transact Graph (TOG) 23(3):399–405

Thorndike RL (1953) Who belongs in the family? Psychometrika 18(4):267–276

Turner K, Mileyko Y, Mukherjee S, Harer J (2014) Fréchet means for distributions of persistence diagrams. Discr Comput Geom 52(1):44–70

Vasudevan R, Ames A, Bajcsy R (2013) Persistent homology for automatic determination of human-data based cost of bipedal walking. Nonlinear Anal Hybrid Syst 7(1):101–115. https://doi.org/10.1016/j.nahs.2012.07.006

Verri A, Uras C, Frosini P, Ferri M (1993) On the use of size functions for shape analysis. Biol Cybern 70(2):99–107. https://doi.org/10.1007/BF00200823

Xia K, Li Z, Mu L (2016) Multiscale persistent functions for biomolecular structure characterization

Zomorodian A, Carlsson G (2005) Computing persistent homology. Discr Comput Geom 33(2):249–274. https://doi.org/10.1007/s00454-004-1146-y

Acknowledgements

We wish to thank Antonio Rieser for helpful discussions. We also wish to acknowledge the Information and Communication Technologies resources at Imperial College London for their computing resources which were used to implement the experiments in this paper.

Funding

Y.C. is funded by a President’s PhD Scholarship at Imperial College London.



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cao, Y., Leung, P. & Monod, A. k-means clustering for persistent homology. Adv Data Anal Classif (2024). https://doi.org/10.1007/s11634-023-00578-y


DOI: https://doi.org/10.1007/s11634-023-00578-y

Keywords

- Alexandrov geometry

- Karush–Kuhn–Tucker optimization

- k-means clustering

- Persistence diagrams

- Persistent homology