Abstract

We study local, analytic solutions for a class of initial value problems for singular ODEs. We prove existence and uniqueness of such solutions under a certain non-resonance condition. Our proof translates the singular initial value problem to an equilibrium problem of a regular ODE. Then, we apply classical invariant manifold theory. We demonstrate that the class of ODEs under consideration captures models which describe the shape of axially symmetric surfaces which are closed on one side. Our main result guarantees smoothness at the tip of the surface.


1 Introduction

The problem in this paper is motivated by a specific application in which the shape of an axially symmetric surface with a smooth tip is sought. We postpone the details of this model to Sect. 4 and focus here on a more general setting.

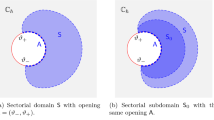

Suppose we model an axially symmetric surface with a smooth tip in cylindrical co-ordinates as the solution of an ordinary differential equation. We parametrize the axial co-ordinate \(z\) by the radial distance co-ordinate \(r\); see Fig. 1. Hence, \(r\) is the independent variable in the ODE and \(z\) is the dependent variable. We are interested in deriving conditions under which solutions \(z(r)\) are unique. The requirement that the tip of the surface is smooth translates to the requirement that \(z\) is even and smooth in a neighborhood of 0. For convenience, we further assume that \(z\) is locally analytic. Then, the requirements on \(z\) can be reformulated as the requirement that there exist \(\varepsilon > 0\) and \(g \in C^{\omega}((-\varepsilon ,\varepsilon ), \mathbb{R})\) with \(g(0)=0\) such that

$$z(r) = g(r^{2}) \qquad \text{for all } r \in (0,\sqrt{\varepsilon}). \tag{1}$$

We assume that the governing equations are of the form

$$z'(r) = \frac{1}{r}\, V\big(z(r), r^{2}\big), \quad 0<r<r_{0}, \qquad z(0) = 0, \tag{2}$$

where \(0< r < r_{0}\) and \(V \in C^{\omega}(\mathbb{R}^{2}, \mathbb{R})\) with \(V(0) = 0\). The singularity \(1/r\) arises from the expression of the gradient in cylindrical coordinates. The argument \(r^{2}\) in \(V\) forces evenness of the solution. In applications, the argument \(r^{2}\) arises naturally from surface force terms or from a cumulative flux. We are interested in finding sufficient conditions on \(V\) under which solutions of (2) satisfying (1) are unique.

Example 1.1

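A simple but insightful example of (2) is when \(V\) is linear. Then, (2) reads as

$$z'(r) = \frac{\lambda z(r) + b r^{2}}{r},$$

with \(\lambda ,b \in \mathbb{R}\). The general solution is given by

$$z(r) = C_{1} r^{\lambda} + z_{p}(r),$$

where \(C_{1}\) is a free constant and

$$z_{p}(r) = \begin{cases} \dfrac{b}{2-\lambda}\, r^{2} & \text{if } \lambda \neq 2, \\[1ex] b\, r^{2} \ln r & \text{if } \lambda = 2. \end{cases}$$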

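If \(\lambda \neq 2\), then the solution \(z\) with \(C_{1} = 0\) satisfies (1). If \(\lambda \notin 2 \mathbb{N}_{+}\), then this is the only solution which satisfies (1), since \(r^{\lambda}\) is an analytic function of \(r^{2}\) vanishing at \(r = 0\) only if \(\lambda \in 2\mathbb{N}_{+}\).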

The condition \(\lambda \notin 2 \mathbb{N}_{+}\) in Example 1.1 is a type of non-resonance condition. It suggests that (2) need not have a unique solution satisfying (1) for every analytic \(V\), and that an additional requirement such as \(\partial _{z} V(0) \notin 2 \mathbb{N}_{+}\) is needed. This additional requirement turns out to be exactly the sufficient condition on \(V\) in the main result of this paper.
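To see where the non-resonance condition comes from at the level of power series, the following Python sketch computes the coefficients of a formal solution \(z(r) = \sum_{k \geq 1} a_{k} r^{2k}\) of (2) for a hypothetical scalar right-hand side \(V(z, r^{2}) = \lambda z + b r^{2} + c z^{2}\), chosen only for illustration. Matching the coefficients of \(r^{2k}\) in \(r z' = V(z, r^{2})\) gives \((2k - \lambda) a_{k} = b\,\delta_{k1} + c \sum_{i+j=k} a_{i} a_{j}\), so every coefficient is determined uniquely exactly when \(\lambda \notin 2\mathbb{N}_{+}\); for \(\lambda = 2k\) the \(k\)-th equation has either no solution or a free coefficient. The function name is an assumption, and convergence of the series is not checked here.

```python
from fractions import Fraction


def even_series_coefficients(lam, b, c, K):
    """Return a_1, ..., a_K with z(r) = sum_k a_k * r**(2k) solving, as a formal series,
        z'(r) = (lam*z + b*r**2 + c*z**2) / r,   z(0) = 0,
    for the hypothetical quadratic choice V(z, r**2) = lam*z + b*r**2 + c*z**2.
    """
    lam, b, c = Fraction(lam), Fraction(b), Fraction(c)
    a = [Fraction(0)] * (K + 1)  # a[0] = 0 encodes z(0) = 0
    for k in range(1, K + 1):
        # coefficient of r**(2k): (2k - lam) * a_k = b*[k == 1] + c * sum_{i+j=k} a_i * a_j
        rhs = (b if k == 1 else Fraction(0)) + c * sum(
            (a[i] * a[k - i] for i in range(1, k)), Fraction(0)
        )
        if 2 * k == lam:
            # 0 * a_k = rhs: no solution if rhs != 0, a free coefficient if rhs == 0
            raise ValueError(f"resonance at k = {k}: lam = {lam} lies in 2N_+")
        a[k] = rhs / (2 * k - lam)
    return a[1:]
```

For example, `even_series_coefficients(1, 1, 1, 3)` returns the fractions 1, 1/3 and 2/15. With `c = 0` the recursion reduces to Example 1.1: \(a_{1} = b/(2-\lambda)\) and \(a_{k} = 0\) for \(k \geq 2\).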

The biological application in Sect. 4 requires the following generalization of (1)–(2) to higher dimensions. The solution concept for the unknown \(x \in \mathbb{R}^{n} \) is that there exist \(\varepsilon > 0\) and \(g \in C^{\omega}((-\varepsilon ,\varepsilon ), \mathbb{R}^{n})\) with \(g(0)=0\) such that

$$x(r) = g(r^{2}) \qquad \text{for all } r \in (0,\sqrt{\varepsilon}). \tag{3}$$

The ODE for \(x\) is

$$x'(r) = \frac{1}{r}\, V\big(x(r), r^{2}\big), \quad 0<r<r_{0}, \qquad x(0) = 0, \tag{4}$$

where \(V \in C^{\omega}(\mathbb{R}^{n+1}, \mathbb{R}^{n})\) with \(V(0) = 0\). To reveal the connection with Example 1.1, we expand

$$V(x, r^{2}) = A x + b r^{2} + f(x, r^{2}), \tag{5}$$

where \(A \in \mathbb{R}^{n \times n}\), \(b \in \mathbb{R}^{n}\) and \(f(y) = O(|y|^{2})\) as \(y \to 0\), with \(y = (x,r^{2})\). Our main result, Corollary 4.1, states that a sufficient condition on \(V\) for the existence and uniqueness of solutions to (4) satisfying (3) is that \(\lambda _{i} \notin 2 \mathbb{N}_{+}\) for all \(1 \leq i \leq n\), where \(\lambda _{i}\) are the eigenvalues of \(A\).
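The hypothesis of Corollary 4.1 is a finite check on the spectrum of \(A\). The Python sketch below assumes \(n = 2\), so that the eigenvalues follow from the characteristic polynomial \(\lambda^{2} - (\operatorname{tr} A)\lambda + \det A\), and tests whether any eigenvalue lies in \(\sigma\mathbb{N}_{+}\) (with \(\sigma = 2\) for Corollary 4.1) up to a floating-point tolerance. The function names and the tolerance are illustrative choices, not part of the paper.

```python
import cmath
import math


def eigenvalues_2x2(a11, a12, a21, a22):
    """Eigenvalues of [[a11, a12], [a21, a22]] from the characteristic polynomial."""
    tr = a11 + a22
    det = a11 * a22 - a12 * a21
    disc = cmath.sqrt(tr * tr - 4 * det)
    return ((tr + disc) / 2, (tr - disc) / 2)


def is_non_resonant(eigs, sigma=2.0, tol=1e-9):
    """True if no eigenvalue lies (within tol) in sigma * N_+ = {sigma, 2*sigma, ...}."""
    for lam in eigs:
        lam = complex(lam)
        if abs(lam.imag) > tol:
            continue  # a non-real eigenvalue can never equal a positive multiple of sigma
        k = round(lam.real / sigma)
        if k >= 1 and math.isclose(lam.real, k * sigma, abs_tol=tol):
            return False
    return True
```

For instance, \(A = \operatorname{diag}(1, 3)\) passes the test, whereas \(A = \operatorname{diag}(2, -1)\) fails it because of the eigenvalue \(2 \in 2\mathbb{N}_{+}\); rotation-type matrices with eigenvalues \(\pm i\) always pass.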

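To prove Corollary 4.1, we transform (4) into an autonomous system without singularity, in which the question of uniqueness of analytic solutions becomes the study of an equilibrium. To remove the singularity, we introduce the independent variable \(t\) given by \(r={\mathrm{e}}^{t}\), and obtain from (4) that \(\hat{x}(t) := x({\mathrm{e}}^{t})\) satisfies

$$\frac{d\hat{x}}{dt}(t) = V\big(\hat{x}(t), {\mathrm{e}}^{2t}\big) = A\hat{x}(t) + b\,{\mathrm{e}}^{2t} + f\big(\hat{x}(t), {\mathrm{e}}^{2t}\big), \qquad t < \ln r_{0}. \tag{6}$$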

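Note that the initial condition in (4) at \(r = 0\) is transformed to the equilibrium point 0 at \(t = -\infty \). To make this system autonomous, we introduce the dependent variable \(\rho = {\mathrm{e}}^{2t}\) and consider, writing again \(x\) for \(\hat{x}\),

$$\dot{x} = A x + b\rho + f(x,\rho ), \qquad \dot{\rho} = 2\rho . \tag{7}$$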

Along this transformation, (3) implies that

$$x(t) = g(\rho (t)) \qquad \text{for all } t < \tfrac{1}{2}\ln \varepsilon . \tag{8}$$

Hence, Corollary 4.1 can be formulated in terms of local properties of the equilibrium of (7). Theorem 2.3 provides the precise statement. We consider Theorem 2.3 as our main mathematical result, and Corollary 4.1 as the main statement regarding its application. We prove Theorem 2.3 by using classical invariant manifold theory [2, 5, 10–14].
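The chain-rule step behind the substitution \(r = {\mathrm{e}}^{t}\), \(\rho = {\mathrm{e}}^{2t}\) can be spelled out as follows (a worked computation, assuming only that \(x\) is differentiable on \((0, r_{0})\)). It shows how the factor \({\mathrm{e}}^{t}\) produced by the chain rule cancels the singular factor \(1/r\):

$$\begin{aligned} \frac{d}{dt}\, x({\mathrm{e}}^{t}) &= {\mathrm{e}}^{t}\, x'({\mathrm{e}}^{t}) = {\mathrm{e}}^{t} \cdot \frac{1}{{\mathrm{e}}^{t}}\, V\big(x({\mathrm{e}}^{t}), {\mathrm{e}}^{2t}\big) = V\big(\hat{x}(t), \rho (t)\big), \\ \dot{\rho}(t) &= \frac{d}{dt}\, {\mathrm{e}}^{2t} = 2\rho (t), \qquad r \downarrow 0 \iff t \to -\infty \iff \rho \downarrow 0 . \end{aligned}$$

In particular, the condition \(x(0) = 0\) becomes the requirement that \((\hat{x}, \rho )(t) \to 0\) as \(t \to -\infty \).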

To demonstrate the applicability of Corollary 4.1, we apply it to the Ballistic Ageing Thin viscous Sheet (BATS) model [7]. The BATS model describes tip growth of single fungal cells in terms of a system of ODEs for an axially symmetric surface with a smooth tip. We show that Corollary 4.1 provides sufficient conditions on the parameters of the BATS model under which unique solutions with a smooth tip exist. More specifically, our result implies that an asymptotic expansion of the solution at the tip of sufficiently high order approximates the smooth solution there. This gives a theoretical motivation for the numerical approach in [9], in which approximations to solutions of the BATS model are constructed from asymptotic expansions.

The paper is organized as follows. In Sect. 2 we formulate (7)–(8) in a general dynamical systems framework and present Theorem 2.3. We prove it in Sect. 3. In Sect. 4 we formulate and prove Corollary 4.1 and apply it to the BATS model. In Sect. 5 we give concluding remarks and suggest future research.

2 Main Mathematical Result

In this section we present Theorem 2.3, which is our main mathematical result.

We define the phase space

$$M := \mathbb{R}^{n} \times \mathbb{R} \cong \mathbb{R}^{n+1}.$$

On \(M\) we consider the generalization of (7) given by

$$\dot{x} = A x + b\rho + f(x,\rho ), \qquad \dot{\rho} = \sigma \rho , \tag{9}$$

where \(b \in \mathbb{R}^{n}\), \(\sigma > 0\), \(A \in \mathbb{R}^{n \times n}\) and \(f \in C^{\omega}(\mathbb{R}^{n+1}, \mathbb{R}^{n})\) with \(f(y) = O(|y|^{2})\) as \(y \to 0\). The vector field corresponding to (9) has an equilibrium at 0 with linearization

$$\begin{pmatrix} A & b \\ 0 & \sigma \end{pmatrix} \in \mathbb{R}^{(n+1)\times (n+1)}. \tag{10}$$

Definition 2.1 (\(\rho \)-analytic)

A solution \((x,\rho )\) of (9) is called \(\rho \)-analytic if there exist \(R, \varepsilon >0\) and \(g \in C^{\omega}((-\varepsilon , \varepsilon ), \mathbb{R}^{n})\) with \(g(0)=0\) such that \(x(t)= g(\rho (t))\) for all \(t \in (-\infty , -R)\).

We make three preliminary observations. First, the equilibrium \((x, \rho ) = 0\) is a \(\rho \)-analytic solution. Second, since the equation for \(\rho \) in (9) can be solved explicitly, \(\rho (t) = c_{0} {\mathrm{e}}^{\sigma t}\) for some \(c_{0} \in \mathbb{R}\), the \(x\)-component of a \(\rho \)-analytic solution can be expressed as an analytic function of \({\mathrm{e}}^{\sigma t}\). Third, the freedom in the choice of \(c_{0}\) corresponds to a translation in time. Hence, the set of \(\rho \)-analytic solutions is invariant under translation in time, and it suffices to consider \(c_{0} \in \{-1,0,1\}\).
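The third observation can be made concrete: for \(c_{0} \neq 0\) one has \(c_{0}{\mathrm{e}}^{\sigma t} = \operatorname{sign}(c_{0})\,{\mathrm{e}}^{\sigma (t+\tau )}\) with \(\tau = \ln |c_{0}|/\sigma \), so a time shift by \(\tau \) reduces \(c_{0}\) to \(\pm 1\). A small Python check of this identity follows; the helper name is hypothetical.

```python
import math


def normalize_rho_constant(c0, sigma):
    """Write rho(t) = c0*exp(sigma*t), c0 != 0, as s*exp(sigma*(t + tau)) with s = +1 or -1."""
    if c0 == 0:
        raise ValueError("c0 = 0 is the stationary orbit rho = 0; no shift is needed")
    s = 1 if c0 > 0 else -1
    tau = math.log(abs(c0)) / sigma  # time shift that absorbs |c0|
    return s, tau
```

For example, `normalize_rho_constant(-5.0, 2.0)` returns the sign \(-1\) and \(\tau = \ln 5 / 2\).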

We want to express Definition 2.1 in the language of invariant manifolds. We introduce \(W^{\rho}(0) \subset M\) as the union of all orbits of \(\rho \)-analytic solutions. Denote by \(W^{u}(0)\) the unstable manifold of the equilibrium 0 of (9).

Proposition 2.2

\(W^{\rho}(0) \subset W^{u}(0)\).

Proof

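Let \((x,\rho )\) be a \(\rho \)-analytic solution on \(W^{\rho}(0)\). By Definition 2.1, \(\rho (t) = c_{0}{\mathrm{e}}^{\sigma t}\) and \(x(t) = g(\rho (t))\) for \(t < -R\), where \(g(0) = 0\) and \(g\) is Lipschitz near 0. Hence \(|x(t)| \leq C|\rho (t)|\) for \(t\) negative enough, and \((x,\rho )(t)\) decays exponentially as \(t \rightarrow -\infty \). Consequently, \((x,\rho )\) is contained in \(W^{u}(0)\) (see e.g. [6, Problem 11, page 347]). □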

Theorem 2.3

Let \(\lambda _{1}, \ldots , \lambda _{n}\) be the eigenvalues of \(A\). If \(\lambda _{i} \notin \sigma \mathbb{N}_{+}\) for all \(1 \leq i \leq n\), then \(W^{\rho}(0)\) is a one-dimensional smooth manifold and there exist \(\varepsilon > 0\) and \(g \in C^{\omega}( (-\varepsilon , \varepsilon ) , \mathbb{R}^{n})\) such that

Equation (11) implies that \(W^{\rho}(0)\) is the union of three \(\rho \)-analytic solution orbits: the equilibrium 0, the part of \(W^{\rho}(0)\) with \(\rho >0\) and the part of \(W^{\rho}(0)\) with \(\rho <0\). In particular, \(\rho \)-analytic solutions exist, and they are unique modulo translation in time once we impose one of the constraints \(\rho =0\), \(\rho >0\) or \(\rho <0\). Note that orbits on \(W^{\rho}(0)\) cannot have a complicated geometry in \(M\): since the equation for \(\rho \) in (9) is linear, \(\rho \) is strictly monotone along every orbit with \(\rho \neq 0\).

3 Proof of the Main Theorem

The proof of the main theorem, Theorem 2.3, is given at the end of this section. It relies on Lemmas 3.1 and 3.2. Lemma 3.1 states that Theorem 2.3 holds under the additional assumption that \({\mathrm{Re}}(\lambda _{i})<0\) for all \(1 \leq i \leq n\). The proof of Lemma 3.1 relies on the analytic version of the unstable manifold theorem, which gives analyticity of the solution without the need to prove convergence of power series. Lemma 3.2 introduces a recursive transformation under which the eigenvalues can be shifted into the left half-plane of ℂ, so that Lemma 3.1 can be applied. At each iteration of this transformation we linearize around the next coefficient in the power series of the analytic solution.

Lemma 3.1

If \({\mathrm{Re}}(\lambda _{i})<0\) for all \(1 \leq i \leq n\), then \(W^{\rho}(0)\) is a one-dimensional smooth manifold satisfying (11).

Proof

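As preparation, we denote by \(E^{s}\) and \(E^{u}\) the stable and unstable subspaces of the linearization (10), respectively. Since the matrix in (10) is block upper triangular, its eigenvalues are \(\lambda _{1}, \ldots , \lambda _{n}\) and \(\sigma \). Since \({\mathrm{Re}}(\lambda _{i})<0\) and \(\sigma > 0\), we observe that \({\mathrm{dim}}(E^{s})= n\), \({\mathrm{dim}}(E^{u})= 1\) and that the eigenvalue corresponding to \(E^{u}\) is \(\sigma \). Moreover, \(E^{u} = \langle \overline{v} \rangle \) with \(\overline{v} := (v,1) \in M\), where \(v = -(A-\sigma I)^{-1}b\); the inverse exists since \(\sigma \) is not an eigenvalue of \(A\).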

First, we prove Lemma 3.1 for \(W^{u}(0)\) instead of \(W^{\rho}(0)\). We start with property (11). With this aim, we prepare for applying the local unstable manifold theorem [1]. We use the corresponding notation. Let \(E^{u}(\varepsilon ) := E^{u} \cap B_{\varepsilon}(0)\) and \(E^{s}(\varepsilon ) := E^{s} \cap B_{\varepsilon}(0)\), where \(B_{\varepsilon}(0)\) is the ball in \(M\) centred at 0 with radius \(\varepsilon > 0\). Denote by \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) the local unstable manifold induced by \(B_{\varepsilon}(0)\). Then, the local unstable manifold theorem states that \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) is the graph of some \(\hat{g} \in C^{\omega}(E^{u}(\varepsilon ),E^{s}(\varepsilon ) )\) with \(\hat{g}(0)=0\) and \(D\hat{g}(0)=0\). Using this and recalling \(E^{u} = \langle \overline{v} \rangle \), we parametrize \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) by \(\overline{g} \in C^{\omega}((-\varepsilon , \varepsilon ), \mathbb{R}^{n+1})\) given by \(\overline{g}(\rho ) = \hat{g}(\rho \overline{v}) + \rho \overline{v}\). Restricting \(\overline{g}\) to the \(x\)-component we obtain that \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) satisfies (11).

Next we extend \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) to the global manifold

$$W^{u}(0) := \bigcup_{t \geq 0} \phi _{t}\big(W^{u}_{\mathrm{loc},\varepsilon}(0)\big),$$

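with \(\phi \) denoting the flow of (9). By this construction, \(W^{u}(0)\) is a one-dimensional smooth manifold. Moreover, \(W^{u}(0) \subset W^{\rho} (0)\), since every orbit in \(W^{u}(0)\) lies in \(W^{u}_{\mathrm{loc},\varepsilon}(0)\) for all sufficiently negative times, where its \(x\)-component is an analytic function of \(\rho \). Hence, \(W^{u}(0)\) satisfies the assertions of Lemma 3.1, and, by Proposition 2.2, \(W^{\rho}(0) = W^{u}(0)\). This completes the proof. □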
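For concrete data, the vector \(v\) spanning \(E^{u} = \langle (v,1) \rangle \) in the proof above can be computed from the first \(n\) rows of the eigenvalue equation for (10), which read \(Av + b = \sigma v\), that is \((A - \sigma I)v = -b\). The Python sketch below assumes \(n = 2\) and uses exact rational arithmetic; the function name is hypothetical. Note that \(v\) coincides with the vector \(\tilde{c}\) chosen in Lemma 3.2.

```python
from fractions import Fraction


def unstable_direction(A, b, sigma):
    """Solve (A - sigma*I) v = -b for a 2x2 matrix A, so that (v, 1) spans E^u.

    Uses the explicit inverse of a 2x2 matrix; fails if sigma is an eigenvalue of A.
    """
    (a11, a12), (a21, a22) = [[Fraction(x) for x in row] for row in A]
    b0, b1 = Fraction(b[0]), Fraction(b[1])
    sigma = Fraction(sigma)
    m11, m12, m21, m22 = a11 - sigma, a12, a21, a22 - sigma
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("sigma is an eigenvalue of A")
    # (A - sigma I)^{-1} = [[m22, -m12], [-m21, m11]] / det, and v = -(A - sigma I)^{-1} b
    v1 = -(m22 * b0 - m12 * b1) / det
    v2 = -(-m21 * b0 + m11 * b1) / det
    return v1, v2
```

For example, \(A = \operatorname{diag}(-1,-3)\), \(b = (1,1)\) and \(\sigma = 2\) give \(v = (1/3, 1/5)\), and one checks directly that \(Av + b = 2v\).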

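Next we define the recursive transformation mentioned at the start of Sect. 3. Given some \(\tilde{c} \in \mathbb{R}^{n}\), let

$$\psi _{\tilde{c}}(x,\rho ) := \Big(\frac{x}{\rho } - \tilde{c},\ \rho \Big), \qquad \rho \neq 0. \tag{12}$$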

Lemma 3.2 shows that under this transformation for a specific \(\tilde{c}\) the transformed orbit \(\psi _{\tilde{c}}(x,\rho )\) satisfies a system similar to (9) in which the eigenvalues of the corresponding \(A\) are shifted by distance \(\sigma \) to the left. Moreover, if the set of solutions of the resulting system satisfies the properties stated in Theorem 2.3, then \(W^{\rho}(0)\) satisfies these properties too.

Lemma 3.2

Let \(\lambda _{i} \neq \sigma \) for all \(1 \leq i \leq n\). Take \(\tilde{c} = -(A-\sigma I)^{-1}b\) and let \((x, \rho )\) be a \(\rho \)-analytic solution on \(W^{\rho}(0)\). Then, \((\tilde{x} ,\rho ) := \psi _{\tilde{c}}(x,\rho )\) is a \(\rho \)-analytic solution of

$$\dot{\tilde{x}} = (A - \sigma I)\tilde{x} + \tilde{b}\rho + \tilde{f}(\tilde{x},\rho ), \qquad \dot{\rho} = \sigma \rho , \tag{13}$$

for some \(\tilde{b} \in \mathbb{R}^{n}\) and some \(\tilde{f} \in C^{\omega}(\mathbb{R}^{n+1}, \mathbb{R}^{n})\) with \(\tilde{f}(y) = O(|y|^{2})\) as \(y \to 0\). Moreover, \(\psi _{\tilde{c}} : {W}^{\rho}(0) \to \tilde{W}^{\rho}(0)\) is invertible, where \(\tilde{W}^{\rho}(0)\) is the union of the orbits of \(\rho \)-analytic solutions of (13). Finally, if \(\tilde{W}^{\rho}(0)\) is a smooth 1-dimensional manifold which satisfies (11), then \({W}^{\rho}(0)\) is also a smooth 1-dimensional manifold which satisfies (11).

We note that at this stage it is not clear yet whether \(\psi _{\tilde{c}} : {W}^{\rho}(0) \to \tilde{W}^{\rho}(0)\) is a diffeomorphism because a priori \({W}^{\rho}(0)\) need not be a smooth manifold.

Proof of Lemma 3.2

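First we show that \((\tilde{x}, \rho )\) satisfies (13). Using (9) and \(\tilde{x} = x/\rho - \tilde{c}\) we compute

$$\dot{\tilde{x}} = \frac{\dot{x}}{\rho } - \frac{x\,\dot{\rho}}{\rho ^{2}} = \frac{Ax + b\rho + f(x,\rho )}{\rho } - \sigma \frac{x}{\rho } = (A-\sigma I)(\tilde{x} + \tilde{c}) + b + \frac{f(x,\rho )}{\rho } = (A-\sigma I)\tilde{x} + \frac{f\big(\rho (\tilde{x} + \tilde{c}),\rho \big)}{\rho }, \tag{14}$$

where we used \((A-\sigma I)\tilde{c} = -b\) in the last step.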

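Since \(f\) is analytic with \(f(y) = O(|y|^{2})\) as \(y \to 0\), we can write it as \(f(y) = B(y,y) + \hat{f}(y)\) for some bilinear map \(B : (\mathbb{R}^{n+1})^{2} \to \mathbb{R}^{n}\) and some analytic \(\hat{f}\) with \(\hat{f}(y) = O(|y|^{3})\) as \(y\to 0\). Then, since \((x,\rho ) = \rho \big((\tilde{c},1) + (\tilde{x},0)\big)\),

$$\frac{f(x,\rho )}{\rho } = \rho \, B\big((\tilde{c},1),(\tilde{c},1)\big) + \rho \Big[B\big((\tilde{c},1),(\tilde{x},0)\big) + B\big((\tilde{x},0),(\tilde{c},1)\big) + B\big((\tilde{x},0),(\tilde{x},0)\big)\Big] + \frac{\hat{f}\big(\rho (\tilde{x}+\tilde{c}),\rho \big)}{\rho }. \tag{15}$$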

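Thus, taking

$$\tilde{b} := B\big((\tilde{c},1),(\tilde{c},1)\big), \qquad \tilde{f}(\tilde{x},\rho ) := \rho \Big[B\big((\tilde{c},1),(\tilde{x},0)\big) + B\big((\tilde{x},0),(\tilde{c},1)\big) + B\big((\tilde{x},0),(\tilde{x},0)\big)\Big] + \frac{\hat{f}\big(\rho (\tilde{x}+\tilde{c}),\rho \big)}{\rho }, \tag{16}$$

equation (13) follows. Furthermore, as required, \(\tilde{f}\) is analytic and \(\tilde{f} (\tilde{x},\rho ) = O(\rho ^{2} + |\rho \tilde{x}|) = O(| \tilde{x}|^{2} + \rho ^{2})\) as \((\tilde{x},\rho ) \to 0\).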

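Next we show that \((\tilde{x},\rho )\) is \(\rho \)-analytic. This is obvious for the stationary orbit \((x,\rho ) = 0\); we assume in the following that \((x,\rho ) \neq 0\). With \(g\) as in Definition 2.1, we have

$$\tilde{x}(t) = \frac{g(\rho (t))}{\rho (t)} - \tilde{c} =: \tilde{g}(\rho (t)) \qquad \text{for all } t < -R.$$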

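Since \(g(0) = 0\), the function \(\tilde{g}(\rho ) = g(\rho )/\rho - \tilde{c}\) extends analytically to a neighborhood of 0. Moreover, \(\tilde{x}(t) \to \tilde{g} (0)\) and \(\rho (t) \to 0\) as \(t \to -\infty \), so \(\tilde{g}(0)\) is an equilibrium of (13) restricted to \(\rho = 0\). Since \(\tilde{f}(\tilde{x}, \rho ) = O(\rho (\rho + | \tilde{x}|))\), this restricted equation reads \(\dot{\tilde{x}} = (A - \sigma I)\tilde{x}\), and since \(A - \sigma I\) is regular, \(\tilde{g} (0) = 0\). Hence, \((\tilde{x},\rho )\) is \(\rho \)-analytic.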

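Next we show that \(\psi _{\tilde{c}} : {W}^{\rho}(0) \to \tilde{W}^{\rho}(0)\) is invertible. Injectivity is easy to verify; we omit the details. To show that \(\psi _{\tilde{c}}\) is surjective, let \((\tilde{x},\rho ) \in \tilde{W}^{\rho}(0)\). It is easy to see that with \(x = (\tilde{x} + \tilde{c})\rho \) it holds that \(\psi _{\tilde{c}}(x, \rho ) = (\tilde{x},\rho )\) and that \((x,\rho )\) is \(\rho \)-analytic. To conclude that \((x, \rho ) \in W^{\rho}(0)\), it is left to show that \(x\) satisfies the ODE in (9). We obtain this as follows by reversing (14) and recalling the construction of (15) and (16):

$$\dot{x} = \dot{\rho}(\tilde{x} + \tilde{c}) + \rho \dot{\tilde{x}} = \sigma \rho (\tilde{x} + \tilde{c}) + \rho (A-\sigma I)\tilde{x} + \rho \big(\tilde{b}\rho + \tilde{f}(\tilde{x},\rho )\big) = \rho A\tilde{x} + \sigma \rho \tilde{c} + f(x,\rho ) = Ax + b\rho + f(x,\rho ),$$

where we used \((A-\sigma I)\tilde{c} = -b\) in the last step.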

Finally, we prove the last statement in Lemma 3.2. Note from (11) that \(\tilde{W}^{\rho}(0)\) is the union of three orbits \((\tilde{x}, \rho )\): 0, the orbit where \(\rho > 0\) and the orbit where \(\rho < 0\). Then, the last statement in Lemma 3.2 follows from the construction of \(\psi _{\tilde{c}}^{-1}\) in the proof of the surjectivity above. □
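The recursion behind Lemma 3.2 can be traced explicitly in the scalar case. The Python sketch below assumes \(n = 1\) and a polynomial right-hand side \(\dot{x} = \sum_{i,j} F_{ij} x^{i}\rho ^{j}\) with \(F_{10} = \lambda \), \(F_{01} = b\) and no constant term, stored as a dictionary; these restrictions and all names are assumptions made for illustration. One step performs the substitution \(x = \rho (\tilde{x} + \tilde{c})\) with \(\tilde{c} = -(\lambda - \sigma )^{-1} b\), using \(\dot{\tilde{x}} = \dot{x}/\rho - \sigma (\tilde{x} + \tilde{c})\), and returns \(\tilde{c}\) together with the coefficients of the transformed system, whose linear coefficient is \(\lambda - \sigma \). Since \(x = \rho (\tilde{c}_{1} + \rho (\tilde{c}_{2} + \dots ))\), the successive values \(\tilde{c}_{k}\) are the Taylor coefficients of \(g\) in \(x = g(\rho )\).

```python
from fractions import Fraction
from math import comb


def shift_step(F, sigma):
    """One step of the substitution x = rho * (x_new + c) for the scalar system
        x' = sum F[(i, j)] * x**i * rho**j,   rho' = sigma * rho,
    where F[(1, 0)] = lam, F[(0, 1)] = b and every key satisfies i + j >= 1.
    Returns (c, G): c = -b / (lam - sigma) and G, the coefficients of
        x_new' = sum G[(m, k)] * x_new**m * rho**k.
    """
    F = {key: Fraction(val) for key, val in F.items()}
    sigma = Fraction(sigma)
    if any(i + j == 0 for (i, j) in F):
        raise ValueError("V(0) = 0 is required: no constant term allowed")
    lam = F.get((1, 0), Fraction(0))
    b = F.get((0, 1), Fraction(0))
    if lam == sigma:
        raise ValueError("resonance: lam == sigma, so lam - sigma is not invertible")
    c = -b / (lam - sigma)
    G = {}
    # x_new' = x'/rho - sigma*(x_new + c); each term of x'/rho is
    # F[(i, j)] * rho**(i + j - 1) * (x_new + c)**i, expanded binomially.
    for (i, j), coeff in F.items():
        for m in range(i + 1):
            key = (m, i + j - 1)
            G[key] = G.get(key, Fraction(0)) + coeff * comb(i, m) * c ** (i - m)
    G[(1, 0)] = G.get((1, 0), Fraction(0)) - sigma
    G[(0, 0)] = G.get((0, 0), Fraction(0)) - sigma * c
    constant = G.pop((0, 0))
    assert constant == 0  # the choice of c cancels the constant term
    return c, {key: val for key, val in G.items() if val != 0}


def coefficients_by_shifting(F, sigma, K):
    """Apply shift_step K times and collect the successive values of c."""
    cs = []
    for _ in range(K):
        c, F = shift_step(F, sigma)
        cs.append(c)
    return cs
```

For \(\dot{x} = x + \rho + x^{2}\) (that is, `F = {(1, 0): 1, (0, 1): 1, (2, 0): 1}`) and \(\sigma = 2\), three steps give \(\tilde{c} = 1, 1/3, 2/15\), and the linear coefficient drops from 1 to \(-1\) after the first step, as Lemma 3.2 predicts. With \(\lambda = 4\) instead, the second step meets \(\lambda - \sigma = \sigma \) and the sketch raises an error, mirroring the resonance \(\lambda \in \sigma \mathbb{N}_{+}\).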

Proof of Theorem 2.3

Let \(\Lambda := \max _{1 \leq i \leq n} {\mathrm{Re}}(\lambda _{i})\) and set \(K := \max \{0, \lfloor \Lambda /\sigma \rfloor + 1\}\). Applying the transformation in (12) \(K\) times recursively, we obtain that the eigenvalues of the matrix in the resulting system (13) are \(\lambda _{i} - K\sigma \), which lie in the open left half-plane. Lemma 3.2 may be applied at every step: after \(k\) steps the eigenvalues are \(\lambda _{i} - k\sigma \), and \(\lambda _{i} - k\sigma \neq \sigma \) because \(\lambda _{i} \notin \sigma \mathbb{N}_{+}\). Consequently, Lemma 3.1 guarantees that Theorem 2.3 holds for the resulting system (13). Then, reversing the transformation in (12) \(K\) times, it follows from Lemma 3.2 that Theorem 2.3 also holds for the original system (9). □
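The number of shifts in this proof depends only on the spectrum of \(A\) and on \(\sigma \). The Python sketch below computes \(K = \max \{0, \lfloor \Lambda /\sigma \rfloor + 1\}\) for a list of (possibly complex) eigenvalues, so that \(K\) is non-negative when all eigenvalues already lie in the open left half-plane, and it rejects eigenvalues in \(\sigma \mathbb{N}_{+}\), for which Lemma 3.2 fails at some step. The exact equality test is only meaningful for exactly representable inputs; the function name is hypothetical.

```python
import math


def number_of_shifts(eigs, sigma):
    """Return K = max(0, floor(Lambda/sigma) + 1) with Lambda = max Re(lam), after
    rejecting eigenvalues in sigma*N_+ (exact comparison, so pass exact values)."""
    eigs = [complex(lam) for lam in eigs]
    for lam in eigs:
        q = lam.real / sigma
        if lam.imag == 0 and q >= 1 and q == int(q):
            raise ValueError(f"resonant eigenvalue {lam.real} = {int(q)} * sigma")
    Lam = max(lam.real for lam in eigs)
    K = max(0, math.floor(Lam / sigma) + 1)
    # after K shifts every eigenvalue lam - K*sigma has negative real part
    assert all((lam - K * sigma).real < 0 for lam in eigs)
    return K
```

For instance, eigenvalues \(3.5\) and \(-1\) with \(\sigma = 2\) give \(K = 2\), since \(3.5 - 2\cdot 2 = -0.5 < 0\) while \(3.5 - 2 > 0\); the eigenvalue \(2\) with \(\sigma = 2\) is rejected.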

4 Application

We first reformulate Theorem 2.3 in the setting of the singular ODE (3)–(4) and then apply it to the Ballistic Ageing Thin viscous Sheet (BATS) model for fungal tip growth [7].

4.1 Uniqueness of Solutions of Singular ODEs

Recall (3), (4), (5). We obtain the following corollary from Theorem 2.3.

Corollary 4.1

Let \(\lambda _{1}, \ldots , \lambda _{n}\) be the eigenvalues of \(A\). If \(\lambda _{i} \notin 2 \mathbb{N}_{+}\) for all \(1 \leq i \leq n\), then there exists a unique solution \(x\) of (4) satisfying (3).

Proof

Take \(\sigma =2\) in (9), so that the hypothesis \(\lambda _{i} \notin 2 \mathbb{N}_{+}\) is precisely the hypothesis of Theorem 2.3. Theorem 2.3 implies that there exists a solution \((x, \rho )\) of (9) with \(\rho > 0\), unique up to translation in time, such that \(x(t) = g(\rho (t))\) for some \(g \in C^{\omega}((-\varepsilon ,\varepsilon ), \mathbb{R}^{n})\) and all sufficiently negative \(t\). Since the transformation \(r = {\mathrm{e}}^{t}\), \(\rho = r^{2}\) introduced in Sect. 1 to transform (3)–(4) into (9) is invertible, Corollary 4.1 follows. □

4.2 The BATS Model

The BATS model [7–9] describes the shape of a single axially symmetric fungal cell wall during growth. It assumes a constant growth speed and an equilibrium shape of the cell tip in the co-ordinate frame that moves with the cell tip. The independent variable describing the cell wall is the arclength \(s\), where \(s \rightarrow 0\) corresponds to the tip of the cell; see Fig. 2.

In [9] the shape of the cell tip is computed numerically with asymptotic expansions. The authors observed that for certain special choices of the parameters in the BATS model the coefficients in these expansions blow up and the expansions fail to capture the shape of the cell tip. Yet, no theoretical explanation was found for this observation.

Our aim is to provide such a theoretical explanation. We will cast the system of ODEs of the BATS model in a form to which Corollary 4.1 can be applied. The non-resonance condition on the eigenvalues then translates into a condition on the parameters of the BATS model. If the BATS model has a unique, local, analytic solution, then we expect the coefficients of the asymptotic expansions constructed numerically in [9] to approximate the corresponding coefficients in the power series of the exact solution. Furthermore, it will turn out that the non-resonance condition in Corollary 4.1 precisely characterizes all cases in [9] in which the coefficients blow up. This demonstrates, in a specific setting, the necessity of the non-resonance condition in Corollary 4.1 and Theorem 2.3.

First, we introduce the BATS model. We consider the phase space given by

The \(h\) variable represents the cell wall thickness, \(\Psi \) is the age of the cell wall material, \(z\) is the axial co-ordinate variable, \(r\) is the radial distance variable and \(\varsigma = dr/ds\). We note that \(\varsigma \in (-1,1)\), since the arclength parametrization of \((z,r)\) by \(s\) gives \((dr/ds)^{2} + (dz/ds)^{2}=1\). The governing equations are given by [7]:

where

and \(\mu \in C^{\omega}(\mathbb{R}_{+}, \mathbb{R}_{+})\) satisfies

The function \(\mu \) models the viscosity of the cell wall. The viscosity increases with the age of the cell wall material, which corresponds to hardening of the cell wall.

For the tip shape to be smooth we require two conditions on \((\varsigma , h,\Psi ,z,r)\) as \(s \to 0\):

- T1 (Tip limits): there exist \(h_{0} >0\) and \(z_{0}<0\) such that

$$\lim _{s \rightarrow 0} (\varsigma ,h,\Psi ,z,r)(s) = (1, h_{0}, h_{0} z_{0}^{2}, z_{0}, 0); \tag{18}$$

- T2 (Analyticity): there exist \(s_{1}>0\) and \(g \in C^{\omega}\left ( (-\varepsilon ,\varepsilon ), \mathbb{R}^{4} \right )\) with \(\varepsilon =r(s_{1})^{2}\) such that

$$(\varsigma ,h,\Psi ,z)(s)= g(r(s)^{2}) \qquad \forall s \in (0,s_{1}).$$

Condition T1 follows from local analysis of solutions with a tip [7]. Specifically, \(z_{0}\) corresponds to the distance of the tip to the cell-wall-producing organelle. Condition T2 is a result of requiring a smooth shape at the tip as in Fig. 2. It allows us to express \((\varsigma , h,\Psi ,z)\) as an even analytic function of \(r\) in a neighborhood of 0.

Next we write the BATS model and its desired solution in the form (3)–(4). Since \(r\) is one of the dependent variables, we can use it instead of \(s\) as the independent variable, which reduces the number of equations from five to four. Simultaneously, we change the unknown \(\varsigma \) to

to avoid a removable singularity. Denoting by ′ the derivative with respect to \(r\), we obtain

with

Next we compute the initial condition. From (18) this is trivial for \(h, \Psi , z\). The initial condition for \(\eta \) requires some computation. Indeed, while

the numerator and denominator vanish as \(s \to 0\). Using l'Hôpital's rule and noting from \(z' = \sqrt{1 - \varsigma ^{2}} = \sqrt{1 - (r')^{2}}\) (here ′ denotes the derivative with respect to \(s\)) that \(z'' = -r'' r' / z' = - \varsigma ' r' / z'\), we obtain

Solving for \(\eta (0)\) yields \(\eta (0) = 0\) or \(\eta (0) = 2 z_{0}^{2} / (3 \mu (h_{0} z_{0}^{2}))\). If \(\eta (0) = 0\), then (19) and T1 imply \(\lim _{r \to 0}r h'(r) = - \infty \), which contradicts T2. Therefore, we only consider \(\eta (0) = 2 z_{0}^{2} / (3 \mu (h_{0} z_{0}^{2}))\). In conclusion, we obtain

Finally, T2 directly implies local analyticity of \(h, \Psi , z\) and ensures that the odd coefficients of their expansions in \(r\) vanish. Then, writing \(\eta (r) = \frac{ z'(r) \varsigma (r)}{r}\) and using that all even coefficients of \(z'(r)\) vanish while \(\varsigma \) is even, we conclude that the odd coefficients of \(\eta \) vanish as well. In conclusion, there exist \(\varepsilon >0\) and \(\tilde{g} \in C^{\omega}\left ( (-\varepsilon ,\varepsilon ), \mathbb{R}^{4} \right )\) such that

Next we cast (19) in the form (4). Suppose there exists a local solution \(\overline{x} := ( \eta , h , \Psi , z)\) to (19). Let \(r_{0}\) be small enough such that \(\overline{x}\) exists on \([0, r_{0}]\), \(\sup _{(0, r_{0})} z < 0\) and \(\inf _{(0, r_{0})} \min \{ \Psi , \eta \} > 0\). Then, the right-hand side in (19) can be written as \(\overline{V} (\overline{x}, r^{2})/r\), where \(\overline{V} : \mathbb{R}^{5} \to \mathbb{R}^{4}\) is analytic in a neighborhood of \((p_{0}(h_{0},z_{0}), 0)\). To obtain the initial condition in (4), we shift variables to \({x} := \overline{x} - p_{0}(h_{0},z_{0})\) and set \(V(x, r^{2}) := \overline{V} (x + p_{0}, r^{2})\). We observe from (21) that \(x\) satisfies (3).

Finally, we note that this transformation can easily be inverted, i.e. if (19)–(20) has a solution satisfying (21), then (17)–(18) has a solution satisfying T2. Indeed, \(\varsigma (r) = \sqrt{1- \eta (r)^{2} r^{2}}\) satisfies the condition in T2. Introducing \(s(r)\) as the solution of \(\frac{ds}{dr}(r) = 1/\varsigma (r), s(0) = 0 \), we apply the inverse function theorem (relying on \(\frac{ds}{dr}(0) =1 \neq 0\)) to parametrize \(\varsigma , h, \Psi , z, r\) in \(s\) around \(s=0\). Then, (17)–(18) follows.
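The inverse transformation only requires the quadrature \(s(r) = \int _{0}^{r} d\tilde{r}/\varsigma (\tilde{r})\) with \(\varsigma (\tilde{r}) = \sqrt{1 - \eta (\tilde{r})^{2}\tilde{r}^{2}}\). A minimal Python sketch using the composite Simpson rule follows; the function name, the number of subintervals and the choice of quadrature rule are assumptions. For a constant \(\eta \) the curve is a circular arc of radius \(1/\eta \) with \(r(s) = \sin (\eta s)/\eta \), so \(s(r) = \arcsin (\eta r)/\eta \) provides an exact value to compare with.

```python
import math


def arclength_from_eta(eta, r, n=1000):
    """s(r) = integral_0^r dx / sqrt(1 - eta(x)**2 * x**2) by the composite Simpson rule.

    Assumes eta(x) * x < 1 on [0, r], i.e. varsigma = dr/ds stays positive.
    """
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals
    h = r / n

    def integrand(x):
        return 1.0 / math.sqrt(1.0 - (eta(x) * x) ** 2)  # 1 / varsigma(x)

    total = integrand(0.0) + integrand(r)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * integrand(k * h)
    return total * h / 3
```

The constant-\(\eta \) case serves only as a consistency test: with \(\eta \equiv 2\) and \(r = 0.3\), the quadrature agrees with \(\arcsin (0.6)/2\) to high accuracy.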

To summarize the above, (17)–(18) has a solution satisfying T2 if and only if (4) has a solution satisfying (3), where \(V\) is as constructed above. Hence, we may work with (3)–(4) in the remainder. Corollary 4.1 provides a sufficient condition for the existence and uniqueness of solutions to (4) which satisfy (3). To make this condition explicit, we need to compute the eigenvalues of \(A := \nabla _{x} V(0)\) (see (5)). From (19) we compute

The eigenvalues of \(A\) are given by

Since \(\lambda _{3}(h_{0},z_{0}) < 0\) for all \(h_{0} > 0 > z_{0}\), the condition in Corollary 4.1 translates to

In conclusion, (23) gives a sufficient condition on the parameters \(h_{0} > 0 > z_{0}\) of the BATS model (see (18)) under which the BATS model describes a unique shape for the cell tip in the class of local analytic functions. Since this condition is a new result, we compare it with the findings in [9] mentioned at the start of Sect. 4.2. In the Appendix we show that there is a one-to-one connection between (23) and the values of \(h_{0}, z_{0}\) for which the asymptotic expansions for the solution in [9] fail. This demonstrates that non-resonance conditions are indeed required in practice, and that the condition in Corollary 4.1 is in fact minimal, at least in the particular case of the BATS model investigated in [9].

5 Concluding Remarks and Future Work

Corollary 4.1 provides a new tool for obtaining existence and uniqueness of solutions in the sense of (3) to singular ODEs of type (4). Such ODEs appear, for instance, in models for the shape of axially symmetric surfaces. In Sect. 4.2 we have demonstrated that Corollary 4.1 provides new properties for the BATS model, and that the sufficient conditions in Corollary 4.1 can be minimal in practice. The tangent space at the equilibrium uniquely determines the one-dimensional unstable manifold in Lemma 3.1. Then, it follows from Lemma 3.2 that an expansion of sufficiently high order approximates the desired analytic solution.

Our results open up four interesting problems. First, we expect that Theorem 2.3 also applies if a nonlinear term is included in the equation for \(\rho \) in (9). Indeed, such a term does not alter the linearized equation, and thus the proof of Lemma 3.1 remains identical. Lemma 3.2 requires modifications, since additional nonlinear terms appear when transforming the ODE, but these terms can be absorbed in the nonlinearities corresponding to the \(x\)-component. We left out this generalization because the applications which we have in mind are captured by the linear setting.

Second, from a proof perspective we expect that a more direct approach, relying only on a contraction-type argument, would work. Specifically, we could rewrite (9) as the non-autonomous ODE (6). For (6) we can write a Duhamel formula and proceed with an application of Banach's fixed point theorem. Such a proof would be somewhat technical. In our approach the technicalities of a fixed point argument are hidden in the application of the unstable manifold theorem. We note that an approach applying Poincaré-Dulac normal form theory to (9) does not work if we only assume that \(\lambda _{i} \notin \sigma \mathbb{N}_{+}\), because there might exist a resonance \(\lambda _{i} = (\lambda , \sigma ) \cdot k \) with \(k \in \mathbb{N}^{n+1}_{+}\), \(|k| \geq 2\) and \(k_{n+1} \geq 1\) [3, 4]. Furthermore, Poincaré-Dulac would only yield a formal transformation which is not necessarily analytic.

Third, one can try to generalize Theorem 2.3 to system (9) in which the nonlinear term \(f\) is merely Lipschitz. Then, our argument of applying Lemma 3.2 recursively does not work, since the proofs of Lemmas 3.1 and 3.2 use the analyticity of \(f\). Instead, it seems that one is forced to use a fixed point argument based on Banach's fixed point theorem.

Fourth, in the setting of the BATS application in Sect. 4.2 it is desirable to know whether solutions depend continuously on the parameters \(h_{0},z_{0}\). This translates to the question whether solutions to (4) of type (3) depend continuously on perturbations of \(V\). To answer this question, the procedure of Sect. 3 can be repeated. However, in addition it needs to be shown that \(W^{\rho}(0)\) and the coefficients obtained in Lemma 3.2 are continuous with respect to the perturbation of \(V\). We expect that center manifold theory [5] may provide tools to prove this.

References

[1] Abbondandolo, A., Majer, P.: Lectures on the Morse complex for infinite-dimensional manifolds. In: Morse Theoretic Methods in Nonlinear Analysis and in Symplectic Topology, pp. 1–74. Springer, Berlin (2006)

[2] Abbondandolo, A., Majer, P.: On the global stable manifold. Stud. Math. 177, 2 (2006)

[3] Anosov, D.V., Aranson, S.Kh., Arnold, V.I., Bronshtein, I.U., Il'yashenko, Yu.S., Grines, V.Z.: Ordinary Differential Equations and Smooth Dynamical Systems. Springer, Berlin (1997)

[4] Broer, H.W.: Normal forms in perturbation theory. In: Encyclopedia of Complexity and System Science, pp. 6310–6329. Springer, Berlin (2009)

[5] Carr, J.: Applications of Centre Manifold Theory, vol. 35. Springer, Berlin (2012)

[6] Coddington, E.A., Levinson, N.: Theory of Ordinary Differential Equations. Tata McGraw-Hill Education, New York (1955)

[7] de Jong, T.G., Hulshof, J., Prokert, G.: Modelling fungal hypha tip growth via viscous sheet approximation. J. Theor. Biol. 492, 110189 (2020)

[8] de Jong, T.G., Sterk, A.E., Broer, H.W.: Fungal tip growth arising through a codimension-1 global bifurcation. Int. J. Bifurc. Chaos 30(07), 2050107 (2020)

[9] de Jong, T.G., Sterk, A.E., Guo, F.: Numerical method to compute hypha tip growth for data driven validation. IEEE Access 7, 53766–53776 (2019)

[10] Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields, vol. 42. Springer, Berlin (2013)

[11] Irwin, M.C.: On the stable manifold theorem. Bull. Lond. Math. Soc. 2(2), 196–198 (1970)

[12] Irwin, M.C.: Smooth Dynamical Systems, vol. 17. World Scientific, Singapore (2001)

[13] Kelley, A.: The stable, center-stable, center, center-unstable, unstable manifolds. J. Differ. Equ. (1966)

[14] Shub, M.: Stabilité globale des systèmes dynamiques. Asterisque 58 (1978)

Acknowledgements

PvM gratefully acknowledges support from JSPS KAKENHI Grant Number 20K14358.


Appendix: The BATS Model for Specific \(\mu \)


In [9] the BATS model from Sect. 4.2 is considered for the following choices of the viscosity function:

The authors of [9] construct expansions for the solutions to the BATS model. They observed that for \(m=2,3\) the coefficients in their expansions were well-defined for any choice of the parameters \(h_{0} > 0 > z_{0}\), but that for \(m =4,5\) the coefficients were singular if and only if

Here we investigate to what extent these observations match condition (23). From (22) we observe that

where we have made the dependence on \(\mu _{m}\) explicit in the arguments of \(\lambda _{4}\). In particular,

Consequently, for \(\mu _{2},\mu _{3}\) the condition in (23) imposes no restrictions on \((h_{0},z_{0})\), and for \(\mu _{4},\mu _{5}\) this condition translates to \(\lambda _{4}(h_{0},z_{0}; \mu _{m}) \neq 2\). Since \(\lambda _{4}\) is increasing in \(h_{0} z_{0}^{2}\) (see the display above), this corresponds to a single value for \(h_{0} z_{0}^{2}\), and it is readily verified that this value is given by (24). Hence, for each case examined in [9], condition (23) characterizes precisely those values of \(h_{0}, z_{0}\) for which the expansions in [9] are not singular.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Jong, T.G., van Meurs, P. Uniqueness of Local, Analytic Solutions to Singular ODEs. Acta Appl Math 180, 14 (2022). https://doi.org/10.1007/s10440-022-00517-7


DOI: https://doi.org/10.1007/s10440-022-00517-7