Abstract

Accurate detection of fibrotic interstitial lung disease (f-ILD) is conducive to early intervention. Our aim was to develop a lung graph-based machine learning model to identify f-ILD. A total of 417 HRCTs from 279 patients with confirmed ILD (156 f-ILD and 123 non-f-ILD) were included in this study. A lung graph-based machine learning model based on HRCT was developed to aid clinicians in diagnosing f-ILD. In this approach, local radiomics features were extracted from an automatically generated geometric atlas of the lung and used to build a series of specific lung graph models. By encoding these lung graphs, a lung descriptor was obtained that characterizes the global distribution of radiomics features and was used to diagnose f-ILD. The Weighted Ensemble model showed the best predictive performance in cross-validation. The classification accuracy of the model was significantly higher than that of the three radiologists at both the CT sequence level and the patient level. At the patient level, the diagnostic accuracy of the model and of radiologists A, B, and C was 0.986 (95% CI 0.959 to 1.000), 0.918 (95% CI 0.849 to 0.973), 0.822 (95% CI 0.726 to 0.904), and 0.904 (95% CI 0.836 to 0.973), respectively. There was a statistically significant difference in AUC values between the model and the three radiologists (p < 0.05). The lung graph-based machine learning model could identify f-ILD, and its diagnostic performance exceeded that of the radiologists, which could help clinicians assess ILD objectively.

Graphical Abstract

Given a sequence of HRCT slices from a patient, the lung field is first automatically extracted. Next, this lung region is divided into 36 sub-regions using geometric rules, yielding a lung atlas. The lung graph is then built from the 3D radiomics features of each sub-region of the lung atlas. Finally, the model's predictions are compared with the physicians' assessments.

Introduction

Interstitial lung diseases (ILDs) are a heterogeneous group of diseases of various causes characterized by alveolar inflammation and/or fibrosis [1, 2]. The degree and distribution of inflammation and fibrosis vary among different types of ILDs and at different stages of development. Fibrotic interstitial lung diseases (f-ILDs) are the end stage of ILD [3]. Idiopathic pulmonary fibrosis (IPF) is the classic form of f-ILD, with a sustained progressive phenotype that manifests as fibrosis at an early stage [4,5,6,7,8,9]. As the most common form of f-ILD, IPF presents as usual interstitial pneumonia (UIP) on imaging and histopathology [2, 10,11,12]. In contrast, hypersensitivity pneumonitis (HP), nonspecific interstitial pneumonia (NSIP), and connective tissue disease–associated interstitial lung disease (CTD-ILD) present predominantly with inflammation in the early stages; fibrosis develops and gradually worsens as the disease progresses to f-ILD [13,14,15,16]. Fibrotic ILD is often accompanied by progressive destruction of lung structure and decreased lung function [17, 18].

Despite the diversity of f-ILD classes and the difficulty of clinical management, these patients share similarities in clinical course, imaging, treatment, and prognosis, and the disease continues to progress [19,20,21,22]. Early and rapid identification of f-ILD is particularly important for improving long-term survival and prognosis. HRCT is a key method for demonstrating the characteristics of ILD, both non-f-ILD and f-ILD [19]; f-ILD mainly presents as a reticular pattern and traction bronchiectasis with or without honeycombing on HRCT. However, HRCT evaluation depends heavily on the experience of the radiologist and is time-consuming because of the large amount of data in each examination. The graph model is a complete framework that was first proposed for brain connectivity analysis; it divides the brain into a fixed number of anatomical regions and compares the neural activity in different regions. The method was subsequently extended by Dicente Cid et al., who proposed a more complex graph model for lung diseases. This 3D lung texture–based structural analysis has achieved better results in the classification of tuberculosis, early identification of multi-drug-resistant tuberculosis, and identification of pulmonary hypertension and pulmonary embolism [23, 24]. Our objective is therefore to apply this 3D lung texture–based structural analysis to assist in the identification of f-ILD.

Materials and Methods

Study Cohort and Design

This lung graph–based machine learning study of an ILD cohort was performed in accordance with the Declaration of Helsinki and was approved by the ethics committee of our hospital (NO. 2017–25). Written consent was obtained from all individuals. We retrospectively extracted 417 HRCTs of 279 patients with ILD at our hospital from January 2018 to December 2021. The diagnosis of ILD was made by a multidisciplinary team (MDT) that included at least one attending physician in Pulmonary and Critical Care Medicine (specifically, a specialist in ILD), two specialists with more than 10 years of experience in chest radiology, and one lung pathologist with 10 years of experience in lung pathology, according to the diagnostic guidelines [1, 6]. All patients suspected of having ILD underwent the standard diagnostic procedures for ILD in our center, including detailed investigation of medical history, clinical symptoms and physical examination, routine laboratory tests and tests for connective tissue diseases, pulmonary function tests (PFTs), HRCT, bronchoalveolar lavage, and/or transbronchial lung biopsy or transbronchial lung cryobiopsy, and sometimes video-assisted thoracoscopic surgery or surgical lung biopsy, depending on the clinical requirements and multidisciplinary discussion [25]. The patients were treated according to guideline or consensus recommendations and were followed up with a clinic visit every 3 to 6 months or as clinically required. Each patient completed 1 to 5 HRCT scans. Cases diagnosed from January 2018 to December 2019 were used to train and test the model. Cases from January 2020 to December 2021 were used for independent validation and for visual assessment by radiologists. The detailed screening flow chart is shown in Fig. 1. The inclusion criteria were as follows: (1) a diagnosis of ILD during the study period and (2) at least one supine HRCT sequence.
The exclusion criteria were as follows: (1) significant respiratory motion artifacts on HRCT, (2) comorbid malignancy or other lung diseases, such as emphysema, and (3) concomitant heart failure.

Patients with radiological and/or pathological evidence of fibrosis and a restrictive pattern of physiologic impairment (FVC < 80% of the predicted value) were defined as having f-ILD. The fibrotic patterns on HRCT were defined as reticulations, interlobular septal thickening, lung architectural distortion, honeycombing, and traction bronchiectasis. The fibrotic histologic findings included alveolar and interlobular septal thickening, fibroblast proliferation with collagen deposition, and architectural distortion. The flow chart of ILD diagnosis and study design is shown in Fig. 1.

Pulmonary Function Tests

All patients underwent PFTs (MasterScreen, Vyaire Medical GmbH, Hochberg, Germany). PFT measurements included the percentage of predicted forced vital capacity (FVC%), percentage of predicted forced expiratory volume in one second (FEV1%), FEV1/FVC%, percentage of predicted total lung capacity (TLC%), and percentage of predicted DLco corrected for the measured hemoglobin (DLco%).

CT Protocol

All patients were scanned in the supine position on a multislice spiral CT scanner (Lightspeed VCT/64, GE Healthcare; Toshiba Aquilion ONE TSX-301C/320; Philips iCT/256; Siemens FLASH Dual Source CT) at end-inspiration, from the lung apex to the lung base. Acquisition and reconstruction parameters for HRCT sequences included tube voltage of 100–120 kV, tube current of 100–300 mAs, slice thickness of 0.625–1 mm, reconstruction increments of 1–1.25 mm, table speed of 39.37 mm/s, and gantry rotation time of 0.8 s.

Lung Atlas Segmentation

All CT scans were first resampled into isotropic voxels with a voxel size of 1 mm in all three dimensions. After this preprocessing step, a two-step pipeline was performed to obtain a patient-specific division of the lungs for each CT scan, referred to as the lung atlas. In the first step, the lung fields were automatically extracted by a deep learning–based segmentation method (i.e., U-Net) provided by a medical imaging software package (InferRead™ CT Lung, version R3.12.3; Infervision Medical Technology Co., Ltd., Beijing, China). In the second step, each lung mask was divided into 36 sub-regions via several geometric rules, yielding a lung atlas.
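The resampling step can be illustrated with a minimal sketch that only computes the target grid for 1 mm isotropic voxels. The function name `isotropic_grid` and the example scan geometry are hypothetical and are not taken from the study; the returned zoom factors are the kind of per-axis scale one might pass to an interpolation routine such as `scipy.ndimage.zoom`, which is an assumption about tooling rather than the authors' stated method.

```python
def isotropic_grid(shape, spacing_mm, target_mm=1.0):
    """Return (new_shape, zoom_factors) for resampling to isotropic voxels.

    shape      -- voxel counts along (z, y, x)
    spacing_mm -- physical voxel size in mm along (z, y, x)
    target_mm  -- desired isotropic voxel size (1 mm in the text above)
    """
    # A voxel that is 1.25 mm thick must be split into 1.25 output voxels,
    # so the zoom factor per axis is spacing / target.
    zoom = tuple(s / target_mm for s in spacing_mm)
    new_shape = tuple(max(1, round(n * z)) for n, z in zip(shape, zoom))
    return new_shape, zoom


# Hypothetical scan: 320 slices of 1.25 mm, 512 x 512 pixels of 0.7 mm.
new_shape, zoom = isotropic_grid((320, 512, 512), (1.25, 0.7, 0.7))
print(new_shape, zoom)
```

The physical extent of the scan is preserved: only the sampling grid changes, which makes texture features comparable across scanners with different slice thicknesses.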

Radiomics-based Lung Graph Model Construction

Given a division of the lung with N regions r = {r1, r2, …, rN}, for a radiomics feature f, we define a graph model GF of the lung based on that feature as the set of N regional feature nodes F = {f1, f2, …, fN}.

For each sub-region ri of a given lung atlas, 1004 radiomics features were extracted: 187 first-order statistical features, 14 three-dimensional shape features, 253 GLCM features, 176 GLRLM features, 165 GLSZM features, 55 NGTDM features, and 154 GLDM features. Feature extraction was performed with the open-source PyRadiomics software package (version 3.0.1; https://pyradiomics.readthedocs.io) in the Python environment (version 3.7.3; https://www.python.org/).

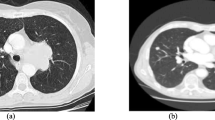

Using the obtained lung atlas and the extracted features, 1004 radiomics-based lung graph models were established, and each graph model contained 36 feature nodes. (Figs. 2 and 3: The construction flow of the lung graph).
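As a rough illustration of this construction, the sketch below turns per-region feature values into one node list per feature, so that each feature yields its own lung graph. The function name `build_lung_graphs`, the feature names, and the toy values are illustrative assumptions; the study uses up to 36 sub-regions and 1004 features.

```python
def build_lung_graphs(region_features):
    """Transpose per-region feature dicts into per-feature node lists.

    region_features -- list with one dict per sub-region (feature name -> value)
    Returns a dict mapping each feature name to its list of node values,
    ordered by sub-region.
    """
    graphs = {}
    for feats in region_features:
        for name, value in feats.items():
            graphs.setdefault(name, []).append(value)
    return graphs


# Toy input: 3 sub-regions and 2 hypothetical features.
regions = [
    {"firstorder_Mean": -820.0, "glcm_Contrast": 1.2},
    {"firstorder_Mean": -790.0, "glcm_Contrast": 1.9},
    {"firstorder_Mean": -650.0, "glcm_Contrast": 3.4},
]
graphs = build_lung_graphs(regions)
print(graphs["glcm_Contrast"])
```

Keeping one graph per feature means that each graph can later be summarized independently of how many sub-regions a given patient has.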

The construction flow of the lung graph. Given a sequence of HRCT slices from a patient, the lung field is first automatically extracted. This lung region is then divided into 36 sub-regions using geometric rules, yielding a lung atlas. Finally, the lung graph is built from the 3D radiomics features of each sub-region of the lung atlas.

Two examples of lung graph construction. A A patient (male, 78 years old) with non-fibrotic interstitial lung disease (NSIP). B A patient (male, 70 years old) with fibrotic interstitial lung disease (idiopathic pulmonary fibrosis). From left to right: cropped CT slices of the two patients, the automatically generated lung atlas slices, and the corresponding lung subgraph. This subgraph was obtained from the radiomics feature log_sigma-1–0-mm-3D_glszm_SizeZoneNonUniformity. Each dot represents the strength of that feature in the subgraph. For better visualization, the values of each sub-region were scaled to (0,1).

Graph-based Lung Descriptor Generation

Because some patients had undergone lung resection or had lung shrinkage, a lung graph could contain fewer than the full 36 nodes. Hence, a fixed number of statistics was used to describe the distribution of node values in each graph, so that graphs from different patients could be compared.

Ten statistics were calculated for each lung graph GF in this study: maximum value (s1(GF) = max(GF)), minimum value (s2(GF) = min(GF)), median value (s3(GF) = median(GF)), the 10th percentile value (s4(GF) = percentile(GF,10)), the 90th percentile value (s5(GF) = percentile(GF,90)), mean value (s6(GF) = mean(GF)), standard deviation (s7(GF) = std(GF)), interquartile range (s8(GF) = percentile(GF,75) − percentile(GF,25)), skewness (s9(GF) = skew(GF)), and kurtosis (s10(GF) = kurt(GF)). The graph-based lung descriptor was then defined as the following vector:

$$s(G_F) = \bigl(s_1(G_F),\, s_2(G_F),\, \ldots,\, s_{10}(G_F)\bigr) \in \mathbb{R}^{10}$$

As mentioned above, 1004 radiomics-based lung graphs were generated, so the final lung descriptor in our study was defined as the concatenation of the 1004 graph-based lung descriptors:

$$S = \bigl(s(G_{F_1}) \,\|\, s(G_{F_2}) \,\|\, \cdots \,\|\, s(G_{F_{1004}})\bigr) \in \mathbb{R}^{10040}$$
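A minimal sketch of the descriptor computation follows, using only the Python standard library. Several details are assumptions, since the text does not specify them: percentiles use linear interpolation, the standard deviation is the population form, skewness and kurtosis are the population (biased) estimators with kurtosis reported as excess kurtosis, and the interquartile range is taken in its conventional sense (75th minus 25th percentile). The function names and toy graphs are hypothetical.

```python
import math
import statistics


def percentile(values, q):
    """q-th percentile with linear interpolation between order statistics."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def central_moment(values, k):
    """k-th central moment (population form)."""
    mu = statistics.fmean(values)
    return sum((v - mu) ** k for v in values) / len(values)


def graph_descriptor(nodes):
    """Ten summary statistics of one lung graph's node values."""
    m2 = central_moment(nodes, 2)
    # Assumption: a constant graph gets skewness and kurtosis of 0.
    skew = central_moment(nodes, 3) / m2 ** 1.5 if m2 > 0 else 0.0
    kurt = central_moment(nodes, 4) / m2 ** 2 - 3.0 if m2 > 0 else 0.0
    return [
        max(nodes), min(nodes), statistics.median(nodes),
        percentile(nodes, 10), percentile(nodes, 90),
        statistics.fmean(nodes), math.sqrt(m2),
        percentile(nodes, 75) - percentile(nodes, 25),
        skew, kurt,
    ]


def lung_descriptor(graphs):
    """Concatenate per-graph descriptors in a fixed (sorted) feature order."""
    out = []
    for name in sorted(graphs):
        out.extend(graph_descriptor(graphs[name]))
    return out


# Two toy graphs with different node counts still give 10 values each.
toy_graphs = {"feature_a": [1.0, 2.0, 3.0, 4.0, 5.0], "feature_b": [0.2, 0.2, 0.9]}
S = lung_descriptor(toy_graphs)
print(len(S))
```

Because every graph is reduced to the same ten numbers, graphs with fewer nodes remain directly comparable; with 1004 graphs the concatenated vector has 1004 × 10 = 10,040 elements.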

Dimensionality Reduction on Lung Descriptor

The dimension of the lung descriptor is very large, which can easily lead to overfitting. To avoid this problem, a two-step dimensionality reduction procedure was applied before model training. First, the Mann–Whitney U test was performed between fibrotic and non-fibrotic ILD patients for every element of the graph-based lung descriptor. The elements were ranked by p-value in ascending order, and the top 1% were retained and passed to the next step. Second, the Pearson correlation coefficient (r) was calculated between each pair of retained features. If the absolute value of r for a pair of features was greater than 0.85, the feature with the larger p-value in the Mann–Whitney U test was removed from the feature set.
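The two-step reduction can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the Mann–Whitney p-value uses a normal approximation without tie correction (in practice a library routine such as `scipy.stats.mannwhitneyu` would be a natural choice), the correlation pruning is done greedily in ascending p-value order, and all function names and toy data are hypothetical. With 10,040 elements, the top 1% corresponds to roughly 100 elements.

```python
import math
from statistics import NormalDist, fmean


def mann_whitney_p(a, b):
    """Two-sided Mann-Whitney U p-value (normal approximation, no tie correction)."""
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    rank_sum_a, i = 0.0, 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the tied ranks i+1 .. j
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n1, n2 = len(a), len(b)
    u = rank_sum_a - n1 * (n1 + 1) / 2.0
    z = (u - n1 * n2 / 2.0) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def pearson_r(x, y):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((p - mx) * (q - my) for p, q in zip(x, y))
    sxx = sum((p - mx) ** 2 for p in x)
    syy = sum((q - my) ** 2 for q in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def reduce_descriptor(columns, labels, keep_fraction=0.01, r_max=0.85):
    """columns: name -> values (one per scan); labels: 1 = f-ILD, 0 = non-f-ILD."""
    pvals = {}
    for name, col in columns.items():
        pos = [v for v, y in zip(col, labels) if y == 1]
        neg = [v for v, y in zip(col, labels) if y == 0]
        pvals[name] = mann_whitney_p(pos, neg)
    ranked = sorted(pvals, key=pvals.get)
    top = ranked[:max(1, math.ceil(keep_fraction * len(ranked)))]
    kept = []
    for name in top:  # ascending p: any already-kept feature has the smaller p
        if all(abs(pearson_r(columns[name], columns[k])) <= r_max for k in kept):
            kept.append(name)
    return kept


# Toy data: f2 is almost a multiple of f1, f3 is unrelated to both.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
columns = {
    "f1": [9.0, 8.0, 7.5, 9.5, 1.0, 2.0, 1.5, 2.5],
    "f2": [18.1, 16.0, 15.2, 19.0, 2.0, 4.1, 3.0, 5.2],
    "f3": [5.0, 1.0, 4.0, 2.0, 3.0, 6.0, 0.5, 2.5],
}
selected = reduce_descriptor(columns, labels, keep_fraction=1.0)
print(selected)
```

In the toy run `keep_fraction` is set to 1.0 only so that the correlation step has something to prune; the study's setting corresponds to the default of 0.01.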

Machine Learning Model Development and Validation

Using the dimensionality-reduced lung descriptor as input, 14 machine learning (ML) methods were used to build models for pulmonary fibrosis prediction on the training set. These methods were provided by the AutoGluon framework (version 0.3.1; https://auto.gluon.ai/stable/index.html): CatBoost, ExtraTreesEntr, ExtraTreesGini, KNeighborsDist, KNeighborsUnif, LightGBM, LightGBMLarge, LightGBMXT, NeuralNetFastAI, NeuralNetMXNet, RandomForestEntr, RandomForestGini, XGBoost, and Weighted Ensemble.

Fivefold cross-validation was used as the training strategy to select the best model and the optimal hyper-parameters for each model. The area under the curve (AUC) was selected as the criterion for model evaluation. For a model with given hyper-parameters, the average of the AUC values across the cross-validation folds was taken as its predictive power. The ML model that obtained the best results on the training cohort was chosen to be applied to the testing set. CT scans from patients diagnosed between May 2021 and March 2022 were used for model validation. All models were implemented in the Python environment (version 3.7.3; https://www.python.org/).
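The selection criterion can be made concrete with a small sketch that computes the AUC of each validation fold and averages across folds. This does not reproduce the AutoGluon internals; the function names, model names, and toy fold data are hypothetical, and only two folds per model are shown for brevity.

```python
from statistics import fmean


def roc_auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_cv_auc(folds):
    """folds: list of (labels, scores) pairs, one per validation fold."""
    return fmean([roc_auc(y, s) for y, s in folds])


# Toy validation folds for two hypothetical models.
folds_a = [([1, 1, 0, 0], [0.9, 0.6, 0.7, 0.1]),
           ([1, 0, 1, 0], [0.8, 0.2, 0.4, 0.4])]
folds_b = [([1, 0], [0.3, 0.6]),
           ([1, 0], [0.9, 0.1])]

cv_results = {"model_a": mean_cv_auc(folds_a), "model_b": mean_cv_auc(folds_b)}
best_model = max(cv_results, key=cv_results.get)
print(cv_results, best_model)
```

Averaging over folds rewards models that rank cases well on every held-out portion of the training set, rather than on a single favorable split.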

Diagnostic Performance of Radiologists

In the external validation set, each case was classified visually by three chest radiologists with 20, 5, and 10 years of experience, respectively. The three radiologists independently evaluated the HRCT scans of patients in the external validation set, blinded to clinical information and diagnostic classification.

Experimental Setting and Statistical Analysis

In our study, all HRCT images were considered for this binary classification task. Five random splits (80% training, 20% testing) were generated, ensuring that all CT scans from the same patient were assigned to either the training set or the testing set, to obtain unbiased estimates of model performance. In each split, dimensionality reduction and model building with fivefold cross-validation were carried out on the training set; the best model was selected from the cross-validation results and then validated on the testing set.
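A patient-grouped split of this kind can be sketched as below. The 80/20 ratio is applied to patients here, which is an assumption (the text does not say whether the ratio refers to patients or scans), and the function name, seeds, and toy identifiers are hypothetical.

```python
import random


def patient_level_split(scan_to_patient, test_fraction=0.2, seed=0):
    """Split scan IDs so that every patient's scans land on one side only."""
    patients = sorted(set(scan_to_patient.values()))
    rng = random.Random(seed)
    rng.shuffle(patients)
    n_test = max(1, round(test_fraction * len(patients)))
    test_patients = set(patients[:n_test])
    train = [s for s, p in scan_to_patient.items() if p not in test_patients]
    test = [s for s, p in scan_to_patient.items() if p in test_patients]
    return train, test


# Toy cohort: 10 patients with 2 scans each.
scan_to_patient = {f"scan{i}": f"P{i // 2}" for i in range(20)}

# Five random splits, one per seed.
splits = [patient_level_split(scan_to_patient, seed=s) for s in range(5)]
for train, test in splits:
    print(len(train), len(test))
```

Splitting on patient identifiers rather than scans prevents follow-up scans of a training patient from leaking into the testing set, which would otherwise inflate the estimated performance.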

Because one or more CT scans were collected for each patient, model performance was validated at both the scan level and the patient level. The patient-level result was calculated by averaging the model's predictions over all CT scans from the same patient. Continuous variables were expressed as means ± standard deviations. The identification ability of the lung graph–based approach was assessed using AUC, accuracy, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). The performance of the lung graph–based ML model and that of the chest radiologists in identifying f-ILD were compared using ROC analysis. AUCs in the external validation set were compared using DeLong's test, and bootstrapping (1000 resamples) was used to estimate the 95% confidence intervals (CIs) of the above evaluation metrics.
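The patient-level aggregation, the confusion-matrix metrics, and the bootstrap interval can be sketched together as follows. Several choices are assumptions not stated in the text: a 0.5 threshold on the averaged score, a percentile bootstrap, and resampling at the case level. DeLong's test is not shown. All names and the toy data are hypothetical.

```python
import math
import random
from collections import defaultdict
from statistics import fmean


def patient_level_scores(scan_scores, scan_to_patient):
    """Average the predicted scores of all scans belonging to each patient."""
    grouped = defaultdict(list)
    for scan, score in scan_scores.items():
        grouped[scan_to_patient[scan]].append(score)
    return {p: fmean(v) for p, v in grouped.items()}


def confusion_metrics(labels, preds):
    """Accuracy, sensitivity, specificity, PPV and NPV from binary labels."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    ratio = lambda a, b: a / b if b else float("nan")
    return {
        "accuracy": ratio(tp + tn, len(labels)),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


def bootstrap_ci(labels, preds, metric="accuracy", n_boot=1000, seed=0):
    """Percentile 95% CI from n_boot resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(labels)
    values = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        m = confusion_metrics([labels[i] for i in idx], [preds[i] for i in idx])[metric]
        if not math.isnan(m):  # skip resamples where the metric is undefined
            values.append(m)
    values.sort()
    lo = values[int(0.025 * len(values))]
    hi = values[min(len(values) - 1, int(0.975 * len(values)))]
    return lo, hi


# Toy data: patients A and C have two scans each, B has one.
scan_to_patient = {"s1": "A", "s2": "A", "s3": "B", "s4": "C", "s5": "C"}
scan_scores = {"s1": 0.9, "s2": 0.7, "s3": 0.2, "s4": 0.6, "s5": 0.1}
truth = {"A": 1, "B": 0, "C": 1}

scores = patient_level_scores(scan_scores, scan_to_patient)
patients = sorted(scores)
labels = [truth[p] for p in patients]
preds = [1 if scores[p] >= 0.5 else 0 for p in patients]  # assumed threshold
metrics = confusion_metrics(labels, preds)
ci_low, ci_high = bootstrap_ci(labels, preds)
print(metrics, ci_low, ci_high)
```

Averaging before thresholding lets a confident scan outweigh an uncertain one for the same patient, whereas majority voting over scans would treat them equally.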

Results

Population Characteristics

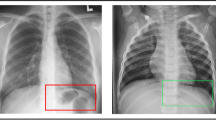

From January 2018 to December 2021, 279 patients with ILD diagnosed by MDT at our hospital were included (156 f-ILD, 123 non-f-ILD). The median age of all included patients was 65 years (IQR, 59 to 71 years), and 160 (57.3%) were male. The median age was 67 years (IQR, 62 to 73 years) for f-ILD and 61 years (IQR, 55 to 68 years) for non-f-ILD. All included patients underwent at least one HRCT. A total of 417 HRCT images of 279 patients were included in the analysis. Figure 4 shows HRCT and corresponding pulmonary pathology of non-f-ILD and f-ILD. According to PFTs, lung function impairment was classified as mild restrictive (70–80% predicted) in 270, moderate restrictive (60–70% predicted) in 111, moderately severe restrictive (50–60% predicted) in 28, and severe restrictive (< 50% predicted) in 8.

A A patient with a definite diagnosis of nonspecific interstitial pneumonia. CT showed non-fibrotic presentation of large bilateral pulmonary ground glass opacity with bilateral lower lung basal reticular pattern. Medium magnification of the lung showed lung tissue focal alveolar septum widening with lymphocyte and plasma cell infiltration, focal multinucleated giant cells, and alveolar epithelial hyperplasia, and no granulomas were seen. B A patient with a definite diagnosis of idiopathic pulmonary fibrosis. CT demonstrated fibrotic presentation of subpleural reticular pattern and ground glass opacity with honeycombing changes at the base of both lungs. Low magnification of the lung showed some of the alveolar septa widened with a little lymphocyte and plasma cell infiltration and alveolar epithelial hyperplasia; macrophages were seen in the alveolar lumen

Identification of f-ILD on the Testing Set

After dimensionality reduction, the final lung descriptor varied between data splits and contained 10 elements on average. Table 1 shows the predictive ability of the 14 ML models on the training sets of the five data splits. Among these models, the Weighted Ensemble obtained the best classification performance on the training set of every split. Hence, the trained Weighted Ensemble models were applied to the corresponding testing sets of the five data splits to validate model robustness.

Table 2 presents the mean and standard deviation of the performance evaluation metrics of the Weighted Ensemble models across the five random splits. At the CT sequence level, the lung graph–based machine learning model obtained good classification performance, with an AUC value of 0.971 ± 0.032. The accuracy, sensitivity, and specificity of the model were 0.930 ± 0.057, 0.942 ± 0.040, and 0.921 ± 0.094, respectively. Similarly, the lung graph–based machine learning model showed good performance at the patient level, with an AUC of 0.973 ± 0.019 and an accuracy of 0.918 ± 0.059. The ROC curves for the five random splits at the sequence level and patient level are shown in Fig. 5.

External Validation of the Model and Comparison with Radiologists

Model performance was further evaluated in the independent validation set. At the CT sequence level, the diagnostic accuracy of the model, radiologist A, radiologist B, and radiologist C was 0.968 (95% CI 0.926 to 1.000), 0.936 (95% CI 0.883 to 0.979), 0.830 (95% CI 0.755 to 0.904), and 0.894 (95% CI 0.830 to 0.947), respectively. The corresponding AUC values were 0.999 (95% CI 0.994 to 1.000), 0.933 (95% CI 0.879 to 0.979), 0.842 (95% CI 0.769 to 0.909), and 0.904 (95% CI 0.846 to 0.953). At the patient level, the diagnostic accuracy of the model, radiologist A, radiologist B, and radiologist C was 0.986 (95% CI 0.959 to 1.000), 0.918 (95% CI 0.849 to 0.973), 0.822 (95% CI 0.726 to 0.904), and 0.904 (95% CI 0.836 to 0.973), respectively. The corresponding AUC values were 1.000 (95% CI 1.000 to 1.000), 0.917 (95% CI 0.855 to 0.973), 0.828 (95% CI 0.742 to 0.903), and 0.908 (95% CI 0.844 to 0.969). The sensitivity of the model was 0.971 (95% CI 0.912 to 1.000), and the specificity was 1.000 (95% CI 1.000 to 1.000) (Table 3).

The diagnostic performance of the model was superior to that of the radiologists at both the CT sequence level and the patient level, and there was a statistically significant difference in AUC values between the model and each of radiologists A, B, and C (p < 0.05) (Table 4). The corresponding ROC curves are shown in Fig. 6.

Discussion

To our knowledge, this is the first study to develop and validate a lung graph–based machine learning model for identification of f-ILD, and the lung graph-based Weighted Ensemble model exhibited excellent classification performance in the validation set.

ILD is characterized by varying degrees of inflammation and fibrosis in the lung interstitium [1]. Compared with patients with non-f-ILD, patients with f-ILD have a poorer quality of life and prognosis. Imaging characteristics of f-ILD tend to be dominated by a reticular pattern and traction bronchiectasis with or without honeycombing [3], whereas non-f-ILD mainly presents as ground glass opacity or consolidation. However, a definite diagnosis depends on tissue pathology. The treatment and prognosis of f-ILD and non-f-ILD differ significantly, so early and accurate identification is essential to improve prognosis [26,27,28].

Refaee et al. [29] were the first to identify UIP in patients with IPF based on handcrafted radiomics, with an AUC of 0.66. However, the manual labeling of regions of interest was time- and effort-consuming, and how the diagnostic performance of the handcrafted radiomics-based model compares with that of physicians remains unknown. The underlying structure of the lung graph model used in this study is based on the 3D morphology of the lungs: the lung is divided into different regions, features are extracted and encoded for each node, features are filtered according to their importance, and the correlation between different features is analyzed. Unlike previous studies that selected only a few image slices and relied on regions of interest [30,31,32], the 3D lung graph, combined with deep learning algorithms, extends the analysis from local regions to the whole 3D image. The potential of graph model–based disease classification has been confirmed in various diseases, such as chronic thromboembolic pulmonary hypertension, multidrug resistance prediction, and pulmonary tuberculosis type [23, 24, 33]. In our research, the classification method divided the lung into sub-regions and performed a global analysis that encodes the subtle differences between types of ILD by considering the feature nodes of the associated sub-regions and analyzing the global distribution of radiomics features. The performance of the model was considered at both the patient level and the CT sequence level. Our lung graph–based method achieved good diagnostic accuracy at both levels in the testing set. Further, the external validation cohort demonstrated the excellent performance of the model, which achieved higher diagnostic accuracy than the radiologists.

There are several limitations in this study. First, more studies on more diverse data are needed to verify this result and to support clinical interpretation. Second, although this classification model achieved f-ILD screening, it could not perform segmentation or quantitative analysis of specific lesions. Third, the performance of the model was compared with that of only three chest radiologists with different levels of experience, which may not represent the full range of physician capabilities; moreover, radiologists at a national respiratory medicine center are likely to be more experienced than those in most hospitals, so the comparison may overestimate the diagnostic capabilities of physicians in general. Finally, the model only dichotomizes f-ILD and non-f-ILD without classifying the different types of ILD, which is the next major step of our further study. Moreover, the correlation between quantitative fibrosis and restrictive lung dysfunction needs further research.

Conclusions

The lung graph-based machine learning model achieved high accuracy in identifying f-ILD. This model improves the efficiency of f-ILD diagnosis and could help clinicians assess ILD accurately.

Data Availability

The original contributions presented in the study are included in the article/Supplementary Materials; further inquiries can be directed to the corresponding author.

Abbreviations

- ILD: Interstitial lung disease
- f-ILD: Fibrotic interstitial lung disease
- Non-f-ILD: Non-fibrotic interstitial lung disease
- IPF: Idiopathic pulmonary fibrosis
- NSIP: Nonspecific interstitial pneumonia
- CTD-ILD: Connective tissue disease-associated interstitial lung disease
- COP: Cryptogenic organizing pneumonia
- HRCT: High-resolution computed tomography
- ML: Machine learning
- AUC: Area under the curve
- PPV: Positive predictive value
- NPV: Negative predictive value

References

Travis W D, Costabel U, Hansell D M, et al. An official American Thoracic Society/European Respiratory Society statement: Update of the international multidisciplinary classification of the idiopathic interstitial pneumonias[J]. Am J Respir Crit Care Med, 2013, 188(6):733-48.

Travis W, Costabel U, Hansell D, et al. An official American Thoracic Society/European Respiratory Society statement: Update of the international multidisciplinary classification of the idiopathic interstitial pneumonias[J], 2013, 188(6):733–48.

Hobbs S, Chung J H, Leb J, et al. Practical Imaging Interpretation in Patients Suspected of Having Idiopathic Pulmonary Fibrosis: Official Recommendations from the Radiology Working Group of the Pulmonary Fibrosis Foundation[J]. Radiol Cardiothorac Imaging, 2021, 3(1):e200279.

Pitre T, Mah J, Helmeczi W, et al. Medical treatments for idiopathic pulmonary fibrosis: a systematic review and network meta-analysis[J]. Thorax, 2022, 77(12):1243-1250.

Raghu G, Remy-Jardin M, Myers J L, et al. Diagnosis of Idiopathic Pulmonary Fibrosis. An Official ATS/ERS/JRS/ALAT Clinical Practice Guideline[J]. Am J Respir Crit Care Med, 2018, 198(5):e44-e68.

Raghu G, Collard H R, Egan J J, et al. An official ATS/ERS/JRS/ALAT statement: idiopathic pulmonary fibrosis: evidence-based guidelines for diagnosis and management[J]. Am J Respir Crit Care Med, 2011, 183(6):788-824.

Pitre T, Mah J, Helmeczi W, et al. Medical treatments for idiopathic pulmonary fibrosis: a systematic review and network meta-analysis[J], 2022.

Raghu G, Remy-Jardin M, Myers J, et al. Diagnosis of Idiopathic Pulmonary Fibrosis. An Official ATS/ERS/JRS/ALAT Clinical Practice Guideline[J], 2018, 198(5):e44-e68.

Raghu G, Collard H, Egan J, et al. An official ATS/ERS/JRS/ALAT statement: idiopathic pulmonary fibrosis: evidence-based guidelines for diagnosis and management[J], 2011, 183(6):788–824.

Fischer A, Du Bois R. Interstitial lung disease in connective tissue disorders[J]. Lancet, 2012, 380(9842):689-98.

Romagnoli M, Nannini C, Piciucchi S, et al. Idiopathic nonspecific interstitial pneumonia: an interstitial lung disease associated with autoimmune disorders?[J]. Eur Respir J, 2011, 38(2):384-91.

Hobbs S, Chung J, Leb J, et al. Practical Imaging Interpretation in Patients Suspected of Having Idiopathic Pulmonary Fibrosis: Official Recommendations from the Radiology Working Group of the Pulmonary Fibrosis Foundation[J], 2021, 3(1):e200279.

Kwon B S, Choe J, Chae E J, et al. Progressive fibrosing interstitial lung disease: prevalence and clinical outcome[J]. Respir Res, 2021, 22(1):282.

Khor Y H, Gutman L, Abu Hussein N, et al. Incidence and Prognostic Significance of Hypoxemia in Fibrotic Interstitial Lung Disease: An International Cohort Study[J]. Chest, 2021, 160(3):994-1005.

Fischer A, Du Bois R J L. Interstitial lung disease in connective tissue disorders[J], 2012, 380(9842):689–98.

Romagnoli M, Nannini C, Piciucchi S, et al. Idiopathic nonspecific interstitial pneumonia: an interstitial lung disease associated with autoimmune disorders?[J], 2011, 38(2):384–91.

Guler S A, Hur S A, Stickland M K, et al. Survival after inpatient or outpatient pulmonary rehabilitation in patients with fibrotic interstitial lung disease: a multicentre retrospective cohort study[J]. Thorax, 2022, 77(6):589-595.

Kwon B, Choe J, Chae E, et al. Progressive fibrosing interstitial lung disease: prevalence and clinical outcome[J], 2021, 22(1):282.

Nathan S D, Pastre J, Ksovreli I, et al. HRCT evaluation of patients with interstitial lung disease: comparison of the 2018 and 2011 diagnostic guidelines[J]. Ther Adv Respir Dis, 2020, 14:1753466620968496.

Richiardi J, Eryilmaz H, Schwartz S, et al. Decoding brain states from fMRI connectivity graphs[J]. Neuroimage, 2011, 56(2):616-26.

Khor Y, Gutman L, Abu Hussein N, et al. Incidence and Prognostic Significance of Hypoxemia in Fibrotic Interstitial Lung Disease: An International Cohort Study[J], 2021, 160(3):994–1005.

Guler S, Hur S, Stickland M, et al. Survival after inpatient or outpatient pulmonary rehabilitation in patients with fibrotic interstitial lung disease: a multicentre retrospective cohort study[J], 2021.

Dicente Cid Y, Jimenez-Del-Toro O, Platon A, Müller H, Poletti P A. From Local to Global: A Holistic Lung Graph[J]. In: Medical Image Computing and Computer–Assisted Intervention – MICCAI 2018 (2018).

Dicente Cid Y, Batmanghelich K, Müller H. Textured graph–based model of the lungs: Application on tuberculosis type classification and multi–drug resistance prediction[J]. In: CLEF 2018. Springer LNCS (2018).

Wahidi M M, Argento A C, Mahmood K, et al. Comparison of Forceps, Cryoprobe, and Thoracoscopic Lung Biopsy for the Diagnosis of Interstitial Lung Disease - The CHILL Study[J]. Respiration, 2022, 101(4):394-400.

Raghu G, Anstrom K J, King T E, Jr., et al. Prednisone, azathioprine, and N-acetylcysteine for pulmonary fibrosis[J]. N Engl J Med, 2012, 366(21):1968-77.

Tashkin D P, Roth M D, Clements P J, et al. Mycophenolate mofetil versus oral cyclophosphamide in scleroderma-related interstitial lung disease (SLS II): a randomised controlled, double-blind, parallel group trial[J]. Lancet Respir Med, 2016, 4(9):708-719.

Solomon J J, Ryu J H, Tazelaar H D, et al. Fibrosing interstitial pneumonia predicts survival in patients with rheumatoid arthritis-associated interstitial lung disease (RA-ILD)[J]. Respir Med, 2013, 107(8):1247-52.

Refaee T, Bondue B, Van Simaeys G et al. A Handcrafted Radiomics-Based Model for the Diagnosis of Usual Interstitial Pneumonia in Patients with Idiopathic Pulmonary Fibrosis[J]. J Pers Med, 2022, 12(3).

Walsh S L F, Calandriello L, Silva M, et al. Deep learning for classifying fibrotic lung disease on high-resolution computed tomography: a case-cohort study[J]. Lancet Respir Med, 2018, 6(11):837-845.

Anthimopoulos M, Christodoulidis S, Ebner L, et al. Semantic Segmentation of Pathological Lung Tissue With Dilated Fully Convolutional Networks[J]. IEEE J Biomed Health Inform, 2019, 23(2):714-722.

Anthimopoulos M, Christodoulidis S, Ebner L, et al. Lung Pattern Classification for Interstitial Lung Diseases Using a Deep Convolutional Neural Network[J]. IEEE Trans Med Imaging, 2016, 35(5):1207-1216.

Jimenez-Del-Toro O, Dicente Cid Y, Platon A, et al. A lung graph model for the radiological assessment of chronic thromboembolic pulmonary hypertension in CT[J]. Comput Biol Med, 2020, 125:103962.

Funding

This work was supported by National Key R & D Program of China (Nos. 2021YFC2500700 and 2016YFC0901101) and the National Natural Science Foundation of China (No. 81870056).

Author information

Contributions

All authors attest that they meet the current International Committee of Medical Journal Editors (ICMJE) criteria for authorship. All authors drafted the manuscript and approved the final version of the manuscript. All authors had access to all of the data in the study and had final responsibility for the decision to submit for publication.

Ethics declarations

Ethical Approval

This study was approved by the China-Japan Friendship Hospital Committee (2022-KY-031).

Consent to Participate

The authors declare that this report does not contain any personal information that could lead to the identification of the patient(s). The authors declare that they obtained a written informed consent from the patients and/or volunteers included in the article. The authors also confirm that the personal details of the patients and/or volunteers have been removed.

Conflict of Interest

The authors declare no competing interests.

Highlights

• Lung graph-based machine learning model achieved high accuracy in identifying f-ILD.

• The diagnostic performance of the model exceeded that of radiological experts.

• Model improved the efficiency of f-ILD diagnosis and could aid clinicians to assess ILD objectively.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, H., Liu, M., Liu, A. et al. Developing the Lung Graph-Based Machine Learning Model for Identification of Fibrotic Interstitial Lung Diseases. J Digit Imaging. Inform. med. 37, 268–279 (2024). https://doi.org/10.1007/s10278-023-00909-7

DOI: https://doi.org/10.1007/s10278-023-00909-7