Abstract

Optimization problems in software engineering typically deal with structures as they occur in the design and maintenance of software systems. In model-driven optimization (MDO), domain-specific models are used to represent these structures while evolutionary algorithms are often used to solve optimization problems. However, designing appropriate models and evolutionary algorithms to represent and evolve structures is not always straightforward. Domain experts often need deep knowledge of how to configure an evolutionary algorithm. This makes the use of model-driven meta-heuristic search difficult and expensive. We present a graph-based framework for MDO that identifies and clarifies core concepts and relies on mutation operators to specify evolutionary change. This framework is intended to help domain experts develop and study evolutionary algorithms based on domain-specific models and operators. In addition, it can help in clarifying the critical factors for conducting reproducible experiments in MDO. Based on the framework, we are able to take a first step toward identifying and studying important properties of evolutionary operators in the context of MDO. As a showcase, we investigate the impact of soundness and completeness at the level of mutation operator sets on the effectiveness and efficiency of evolutionary algorithms.

1 Introduction

Various software engineering problems, such as software modularization [13], software testing [62], and release planning [8], can be viewed as optimization problems. Search-based software engineering (SBSE) [34] explores the application of meta-heuristic techniques to such problems. One widely used approach to efficiently exploring a search space is the application of evolutionary algorithms [35]. In this approach, elements of the search space are generated from existing elements using evolutionary operators such as mutation operators. However, applying SBSE techniques properly is often not an easy task. As pointed out in [66], “the problem domains in software engineering are too complex to be effectively captured with traditional representations as they are typically used in search-based systems.” Compared to traditional encodings, e.g., by vectors, domain-specific models make it easier to capture structural information about the problem and solution domains. Thus, their use can facilitate the exploratory search for solutions using evolutionary operators, especially for structural software engineering problems.

Model-driven engineering (MDE) [58] aims to represent domain knowledge in models and solve problems through model transformations. MDE can be used in the context of SBSE to minimize the expertise required of users of SBSE techniques. This combination of SBSE and MDE is referred to in the literature as model-based or model-driven optimization (MDO) [38, 66]. Two main approaches have emerged in MDO: The model-based approach [18, 66] performs optimization directly on the models, while the rule-based approach [2, 11, 31] searches for optimized model transformation sequences. In this paper, we focus on the model-based approach to MDO and refer to it as MDO for short. Problem instances and solutions are represented as models and the search space is explored by model transformations.

With reference to [30, 66], the definition of an evolutionary algorithm requires a representation of problem instances and search space elements (i.e., solutions). It also includes a formulated optimization problem that clarifies which of the solutions are feasible (i.e., satisfy all constraints of the optimization problem) and best satisfy the objectives. The key ingredients of an evolutionary algorithm are a procedure for generating an initial population of solutions, a mechanism for generating new solutions from existing ones (in this case, by mutation), a selection mechanism that typically implements the evolutionary concept of survival of the fittest, and a condition for stopping evolutionary computations. Selecting these ingredients so that an evolutionary algorithm is effective and efficient is a challenge.

Using MDO, the application of search-based techniques in software engineering can be simplified, since the search space consists of models that are evolved by model transformations. However, this does not prevent us from creating suboptimal specifications of evolutionary operators. For example, a particular set of mutation operators may not be complete, i.e., it may not reach all regions of the search space, so that an optimum or a good enough solution may be missed. For optimization, it can therefore be quite advantageous if the entire search space is reachable with a given set of evolutionary operators. Furthermore, too many of the possible mutations can lead to infeasible solutions, so it may be advantageous if the given set of operators is sound in the sense that mutating a feasible solution yields a feasible solution again. In [15, 38, 59], several sets of mutation operators were evaluated for their effectiveness, i.e., ability to produce good results, and for their efficiency, i.e., low computational cost. In particular, the results in [15] suggest that feasibility-preserving (i.e., sound) mutation operators can be advantageous. To clarify what soundness and completeness can mean in the first place, and what implications they can have for evolutionary computation, we need a formal basis.
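To make the informal reading of soundness and completeness in this paragraph concrete, the following Python sketch checks both properties by brute force on a deliberately tiny, hypothetical search space: solutions are sets of (feature, class) assignment pairs over two fixed features and two fixed classes, and a solution is feasible if every feature is assigned to exactly one class. The operator names (`add_assignment`, `remove_assignment`, `move_feature`) and this encoding are illustrative assumptions, not the operators or the formal definitions used later in the paper; exhaustive enumeration is only viable for toy spaces like this one.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable, List, Set, Tuple

# Hypothetical toy encoding: a solution is a set of (feature, class) pairs.
Pair = Tuple[str, str]
Solution = FrozenSet[Pair]
Operator = Callable[[Solution], Iterable[Solution]]

FEATURES = ("f1", "f2")
CLASSES = ("c1", "c2")
ALL_PAIRS = [(f, c) for f in FEATURES for c in CLASSES]


def feasible(s: Solution) -> bool:
    """Feasibility constraint: every feature is assigned to exactly one class."""
    return all(sum(1 for (f, _) in s if f == feat) == 1 for feat in FEATURES)


def add_assignment(s: Solution) -> Iterable[Solution]:
    """Assign some feature to some class (may create double assignments)."""
    for p in ALL_PAIRS:
        if p not in s:
            yield s | {p}


def remove_assignment(s: Solution) -> Iterable[Solution]:
    """Drop one existing assignment."""
    for p in s:
        yield s - {p}


def move_feature(s: Solution) -> Iterable[Solution]:
    """Move an assigned feature to a different class."""
    for (f, c) in s:
        for c2 in CLASSES:
            if c2 != c and (f, c2) not in s:
                yield (s - {(f, c)}) | {(f, c2)}


def search_space() -> Set[Solution]:
    """All subsets of ALL_PAIRS, i.e., every possible (partial) assignment."""
    return {frozenset(sub) for r in range(len(ALL_PAIRS) + 1)
            for sub in combinations(ALL_PAIRS, r)}


def is_sound(ops: List[Operator], space: Set[Solution]) -> bool:
    """Every mutation of a feasible solution is again feasible."""
    return all(feasible(t) for s in space if feasible(s) for op in ops for t in op(s))


def is_complete(ops: List[Operator], start: Solution, targets: Set[Solution]) -> bool:
    """Every target solution is reachable from `start` by repeated mutation."""
    seen, frontier = {start}, [start]
    while frontier:
        s = frontier.pop()
        for op in ops:
            for t in op(s):
                if t not in seen:
                    seen.add(t)
                    frontier.append(t)
    return targets <= seen
```

With these toy definitions, `[move_feature]` is sound and reaches all four feasible solutions from a feasible start, but it cannot leave the empty assignment; `[add_assignment, remove_assignment]` reaches the whole space from the empty assignment but is not sound.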

According to Harman et al. [35], the initial excitement about SBSE is over; it is now time for consolidation, i.e., “to develop a deeper understanding and scientific basis for the results obtained so far.” This statement has motivated us to develop a formal framework for MDO that will hopefully lead to a deeper understanding of MDO, which combines SBSE with MDE. Our contributions are as follows:

(1) We present a graph-based framework for (the model-based approach to) MDO using mutation operators and evolutionary algorithms, and exemplify an instantiation based on the well-known NSGA-II algorithm [24]. We use the theory of graph transformation [26] to define model-driven optimizations since graphs are a natural means to encode models of different types. Mutations of models can be formally defined as graph transformations. Our framework precisely defines all the relevant components of MDO and is intended to assist the developer in using MDO to solve optimization problems.

(2) We identify and define soundness and completeness as interesting properties of mutation operator sets. We select these properties because previous evaluations suggest that they may play a role and because these properties can be analyzed statically for certain types of mutation operator sets.

(3) In an evaluation, we investigate the impact of soundness and completeness on the effectiveness and efficiency of evolutionary algorithms. We use the framework to clarify all critical factors for conducting a reproducible experiment. In the experiment conducted, we stick to three state-of-the-art evolutionary algorithms (NSGA-II [24], PESA-II [21], and SPEA2 [64]) and we study different sets of mutation operators for three optimization problems: the Class Responsibility Assignment problem (CRA case) [13, 15, 32, 38, 47], the problem of Scrum Planning [15], and the Next Release Problem [8, 15]. The experiment is based on the tools MDEOptimiser [15] and Henshin [4].

In the next section, the model-based approach to MDO is presented using an example. Section 3 considers the state of the art of MDO and other work related to our framework. Then, in Sect. 4, we present our graph-based framework for MDO. Soundness and completeness of evolutionary operators are defined in Sect. 5. To enable comparison of evolutionary algorithms, we define their effectiveness and efficiency with respect to our framework in Sect. 6. The evaluation is presented in Sect. 7. We conclude in Sect. 8. All proofs and additional material for the evaluation can be found in Appendices A and B.

2 Running example

Since the CRA case [13] is a structural optimization problem in software engineering and has become one of the most well-known cases when considering MDO, we use it to recall the core concepts of MDO, illustrate our formalization, and conduct a set of experiments. The CRA case aims to provide a high-level design for object-oriented systems. Given a class model with features (i.e., attributes and methods) and their usage relationships as the problem instance, each partial assignment of features to classes forms a solution. What is sought is a complete assignment of features to classes such that coupling between classes is low and cohesion within classes is high.

Meta-modeling is used to define what kind of domain models are considered for optimization. A suitable meta-model for the CRA case is presented in [32] and a slightly adapted version is reproduced in Fig. 1. Since the CRA case is a structural problem, we neglect all meta-attributes (which are attributes of meta-model classes) such as class and feature names to keep the running example as simple as possible. The meta-model specifies class models that contain features (i.e., attributes and methods) and prescribes the possible usage relationships. While methods can use attributes and methods, attributes are used only by methods. To represent solutions, a class model may contain classes to encapsulate features. A solution model is considered to be feasible if each feature is assigned to exactly one class (the feasibility constraint).
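As an illustration of the structure described above, the following Python sketch encodes a class model with plain sets: attributes and methods form the problem part, usage edges record which method uses which feature, and a hypothetical `classes` dictionary forms the solution part. The class and method names are our own assumptions and do not mirror the meta-model of Fig. 1 one to one; as in the running example, meta-attributes are ignored.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class ClassModel:
    attributes: Set[str]
    methods: Set[str]
    # Usage edges (user, used): only methods use features; attributes use nothing.
    uses: Set[Tuple[str, str]]
    # Solution part (hypothetical encoding): class name -> encapsulated features.
    classes: Dict[str, Set[str]] = field(default_factory=dict)

    def features(self) -> Set[str]:
        return self.attributes | self.methods

    def usage_well_formed(self) -> bool:
        """Every usage edge starts at a method and ends at a feature."""
        return all(u in self.methods and v in self.features() for u, v in self.uses)

    def is_feasible(self) -> bool:
        """Feasibility constraint: each feature lies in exactly one class."""
        counts = {f: 0 for f in self.features()}
        for members in self.classes.values():
            for f in members:
                if f in counts:
                    counts[f] += 1
        return all(n == 1 for n in counts.values())
```

A model without any classes is a (partial, infeasible) solution; it becomes feasible once every feature is placed in exactly one class.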

To assess the quality of a class design, two quality aspects are important: cohesion and coupling. While cohesion reflects the extent to which dependent features are located within a single class, coupling refers to the dependencies of features across different classes. Good solutions exhibit a class design with high cohesion and low coupling because such a design is considered easy to understand and maintain. Cohesion and coupling are measured by the CohesionRatio and CouplingRatio presented in [32], respectively.
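The exact CohesionRatio and CouplingRatio are defined in [32] and are not reproduced here. As a much simpler, hypothetical stand-in that only conveys the intuition, the Python sketch below counts, for a feasible assignment, the usage edges whose endpoints lie in the same class (a cohesion signal) and those that cross class boundaries (a coupling signal).

```python
from typing import Dict, Set, Tuple


def intra_inter_dependencies(
    uses: Set[Tuple[str, str]], assignment: Dict[str, str]
) -> Tuple[int, int]:
    """Count usage edges inside one class (intra) and across classes (inter).

    `assignment` maps each feature to its single class, i.e., it assumes a
    feasible solution. This is NOT the CohesionRatio/CouplingRatio of [32].
    """
    intra = inter = 0
    for user, used in uses:
        if assignment[user] == assignment[used]:
            intra += 1
        else:
            inter += 1
    return intra, inter
```

Raw counts like these are maximized for cohesion, for instance, by putting all features into a single class, so they are unsuitable as optimization objectives on their own.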

Fig. 1 Meta-model of the CRA case as in [32], slightly adapted. White solid elements are invariant problem parts; the colored, dashed class element and its incoming and outgoing references are solution-related.

3 Related work

Recently, several papers have been published on MDO optimizing models or rule-based model transformation sequences. We consider related work on both approaches below. Since our main contribution is a graph-based framework for MDO, we also consider related work on evolving graphs and other frameworks for (evolutionary) optimization.

3.1 The rule-based approach to MDO

Early approaches combining SBSE with MDE seek optimized model transformation sequences [2, 11, 31]. More precisely, a solution is a sequence of rule calls that is to be applied to a given input model. The successful application of such a sequence then yields a solution model. The sequences are optimized using local search algorithms and evolutionary algorithms. While a mutation operator can change sequence slots, a crossover operator splits sequences into parts and recombines them in a different order. These operators behave much like their traditional counterparts for sequential encodings. As sequences of rule calls do not per se satisfy consistency constraints, they can easily become inapplicable to the input model after mutation or crossover has taken place. Thus, a disruptive repair step (e.g., truncation of a sequence) is typically needed to regain applicable sequences.
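The following Python sketch illustrates, under simplifying assumptions, how a rule-based encoding might be evaluated and varied: a rule is modeled as a function that returns the transformed model or `None` if it is not applicable, a sequence is applied until the first inapplicable call and then truncated (the repair step mentioned above), and mutation and crossover act on the sequence as on any list. The function names are hypothetical, and one-point crossover is only one common choice of recombination.

```python
import random
from typing import Any, Callable, List, Optional, Tuple

# A rule maps a model to a new model, or to None if it is not applicable.
Rule = Callable[[Any], Optional[Any]]


def apply_with_truncation(model: Any, sequence: List[Rule]) -> Tuple[Any, List[Rule]]:
    """Apply rule calls in order; truncate the sequence at the first failure."""
    applied: List[Rule] = []
    for rule in sequence:
        result = rule(model)
        if result is None:
            break  # repair step: drop this call and everything after it
        model = result
        applied.append(rule)
    return model, applied


def mutate_slot(sequence: List[Rule], rules: List[Rule], rng: random.Random) -> List[Rule]:
    """Replace one randomly chosen slot by a randomly chosen rule call."""
    if not sequence:
        return []
    i = rng.randrange(len(sequence))
    return sequence[:i] + [rng.choice(rules)] + sequence[i + 1:]


def one_point_crossover(
    a: List[Rule], b: List[Rule], rng: random.Random
) -> Tuple[List[Rule], List[Rule]]:
    """Split both parents at random points and swap their tails."""
    i, j = rng.randint(0, len(a)), rng.randint(0, len(b))
    return a[:i] + b[j:], b[:j] + a[i:]
```

Note that the offspring of `mutate_slot` and `one_point_crossover` are not guaranteed to be applicable; only `apply_with_truncation` restores applicability, at the price of discarding part of the sequence.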

To our knowledge, soundness and completeness have not yet been formally defined in the rule-based approach. In addition, the effects of soundness and completeness on the effectiveness and efficiency of evolutionary algorithms have not been investigated in the rule-based approach.

A comparison of the rule-based approach with the model-based approach [38] revealed that the model-based approach tends to be more effective than the rule-based approach. For that reason, we decided to first develop a framework for the model-based approach to MDO and plan to extend the framework toward the rule-based approach in future work.

3.2 The model-based approach to MDO

Since our framework follows the model-based approach to MDO, we consider related work in more detail. We selected the following papers on the model-based approach to MDO that have been published in journals, at conferences, and at workshops on modeling and SBSE: [15, 17, 18, 37, 38, 40, 59, 66]. We investigate which core concepts of MDO were considered. In addition, we compare MDO approaches by describing how they account for the soundness and completeness of mutation operators.

An early work proposing an MDE-based framework to facilitate the application of SBSE to MDE problems is given in [40]. A generic meta-model for encoding is presented that can be extended for specific optimization problems. However, only preliminary ideas are presented for how to specify an evolutionary algorithm based on that encoding.

Generally, early papers such as [17, 18, 40] mainly discuss the representation of a problem and the search space. The computation space is specified with meta-models that cover the representation of problems and solutions. A distinction between problem and solution models was introduced in [17, 18]. Feasibility constraints and objectives for comparing search space elements are not explicitly distinguished in the early papers. In [40, 66], the authors consider objectives and fitness functions and suggest that, for example, a Java implementation can be used to measure the quality of solutions.

Almost all papers on model-based MDO propose to define mutations of solution models as model transformations. Later works such as [15, 38, 59, 66] go into more detail and define as well as evaluate concrete mutation operators. Models are selected according to how well they meet the objectives or by giving preference to feasible solutions [15, 38, 59]. To terminate an evolutionary computation, various forms of termination conditions were presented in [15, 37, 38]: Simple conditions limit the number of evolutionary iterations performed in an evolutionary computation or the total runtime of these computations. Alternatively, the computation can be terminated if a certain number of iterations does not yield a sufficient improvement regarding the quality of solutions.
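The three kinds of termination conditions listed above can be combined into a single predicate. The Python sketch below is one hypothetical way to do so; it assumes that the best objective value found so far is recorded after each iteration and that this value is to be maximized.

```python
from typing import Optional, Sequence


def should_terminate(
    iteration: int,
    elapsed_seconds: float,
    best_history: Sequence[float],
    *,
    max_iterations: Optional[int] = None,
    max_seconds: Optional[float] = None,
    stagnation_window: Optional[int] = None,
    min_improvement: float = 0.0,
) -> bool:
    """Stop on an iteration budget, a runtime budget, or stagnation."""
    if max_iterations is not None and iteration >= max_iterations:
        return True
    if max_seconds is not None and elapsed_seconds >= max_seconds:
        return True
    if stagnation_window is not None and len(best_history) > stagnation_window:
        # Improvement of the best value over the last `stagnation_window` iterations.
        gain = best_history[-1] - best_history[-1 - stagnation_window]
        if gain <= min_improvement:
            return True
    return False
```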

In [15, 38, 59], several groups of mutation operators are compared with respect to effectiveness and efficiency. In [59], mutation operators are generated by higher-order transformations and compared to manually constructed operators. Since the higher-order transformations generate larger rules than the manually constructed ones, the evolutions can be shortened, resulting in higher efficiency. In [38], the effects of destructive mutation operators were studied. Evolutionary computations using these rules were faster but resulted in models of lower quality. In [15], the generation of consistency-preserving (i.e., sound) mutation operators with regard to multiplicity constraints is presented. The generated operators were compared to manually designed operators; some effects on effectiveness and efficiency were noted. In [37], the authors use techniques from model-based MDO to tackle the problem of optimal configuration of product lines. Concretely, from a feature model with basic constraints, they derive consistency-preserving configuration operators that transform valid configurations into valid configurations, i.e., they also construct sound operators. These are used as mutation operators in a genetic algorithm to search for optimal configurations. In an evaluation, their approach outperforms other approaches that do not use sound mutation operators but rely on repair techniques instead, in particular with respect to effectiveness. They mention that their technique might not result in a complete set of operators. Generally, the completeness of operator sets has not yet been explicitly investigated.

In summary, related approaches to MDO consider several evolutionary operators and compare them in experiments with respect to their effects on the effectiveness and efficiency of evolutionary algorithms. The understanding of the core concepts of MDO remains implicit or problem-specific. In contrast, we will present a graph-based framework for MDO that concisely defines the core concepts. It will help clarify the critical factors for conducting experiments in MDO and increase the reproducibility of experiments. In particular, the soundness and completeness of mutation operators have only been roughly discussed. We will precisely define these properties with the help of our framework and investigate whether they have an impact on the effectiveness and efficiency of evolutionary algorithms.

3.3 Evolving graphs

Since our framework is based on graphs and graph transformation, a closely related approach is Evolving Graphs by Graph Programming (EGGP) by Atkinson et al. [5,6,7]. The general motivation is to increase the effectiveness of evolutionary algorithms that operate on graph-like structures by operating directly on graphs as genotypes (as opposed to a linear encoding of graphs). In EGGP, so-called function graphs serve as genotypes, and graph programs based on graph transformation rules serve as mutation operators. The mutations are designed to respect certain constraints, namely acyclicity, the arity of nodes (since a node represents a function of a certain arity), and the maximal depth. No other constraints are discussed, and the effects of unsound mutations are not measured. Our framework does not generally restrict the type of constraints used to specify feasibility, but we propose to stick with graph constraints [33] since, for this kind of constraint, the soundness of mutation operators for graphs can be shown statically. In this sense, we consider a more general form of soundness than that shown for EGGP. To our knowledge, the completeness of operator sets has not yet been considered for EGGP.

3.4 Other formal frameworks

MOMoT [11] and MDEOptimiser [16] are tooling frameworks for combining search-based optimization and model transformations, whereas we develop a formal framework for the model-based approach to MDO. Although we are the first to develop a formal framework for this particular area, there are other formal frameworks for evolutionary computation or processes. Two such frameworks are discussed below as examples.

The first approach, which encompasses a large class of evolutionary (and other randomized search) algorithms, regards population-based algorithms as algorithms that generate a new population at each iteration such that each individual is selected from the pool of all possible individuals according to a probability distribution that depends on the current population [22]. This highly abstract view on evolutionary computation allows the development of common formal techniques that can be applied to analyze different kinds of evolutionary algorithms on different problems (see, e.g., the results in [19, 22, 23]). However, this framework is too abstract for our purposes; it does not provide support for determining the ingredients of an evolutionary algorithm for which we need to find model-based implementations. Furthermore, this framework does not capture elitism or methods used to preserve the diversity of a population during evolutionary computation.

The most recent theoretical framework for evolutionary processes that we are aware of is [52]; we also refer the reader to this paper for an overview of attempts to develop such frameworks. In this work, the authors define in modular terms the parts that make up evolutionary processes, namely, selection and variation operators. To show the adequacy of their framework, they demonstrate how various evolutionary models from the domain of population genetics and various evolutionary algorithms can be instantiated within it. While soundness is not discussed in [52], completeness (in our terminology) serves as the defining property that a mutation operator should have. They deal with recombination operators, a topic we leave for future work, and do not yet cover multi-objective problems that MDO regularly addresses.

Because the existing frameworks do not adequately fit our purposes, we develop our own framework in the following instead of presenting our framework as an instantiation of an existing one.

4 A graph-based framework for model-driven optimization

MDO has been used in the literature to solve a variety of optimization problems [15, 18, 32, 37, 38, 66] and the key ideas of the evolutionary algorithms presented are similar. To clarify the design space of MDO problems and evolutionary algorithms that solve them, we present a framework for model-driven optimization below. The definition of the framework is deliberately generic since we want to include the existing variants (of the model-based approach to MDO) into the framework. The framework is also intended to be formal to allow for formal reasoning on MDO. In particular, we want to facilitate impact analysis of important properties of evolutionary operators since domain experts can easily specify suboptimal operators. In Sect. 5, we define two properties of mutation operator sets, soundness and completeness, and in Sect. 7 we study their impact on effectiveness and efficiency of evolutionary algorithms.

We will present the framework in two steps: First, the key concepts of the framework are presented using a meta-model. It represents these key concepts as interface classes and captures their structure and relations.

In a second step, all the key concepts identified in the meta-model are defined. The definitions capture various requirements that have to be met when using the framework to define concrete MDO problems, develop appropriate evolutionary algorithms, and design suitable experiments in MDO. Since models in general have a graph structure and evolutionary operators generate new models, it is natural to define the framework based on graph transformation theory.

The presentation of the formal framework is accompanied by a discussion of the characteristics of each key concept and examples of how the concept can be instantiated. The nature of instantiation varies depending on the concept: while the underlying computation space determines the type of models we work with, an instantiation of the term “optimization problem” is primarily concerned with an appropriate formulation of constraints and objectives. When instantiating the term “evolutionary algorithm,” we must specify how to generate an initial population, which evolutionary operators are to be applied, how they are applied, and when to terminate.

4.1 Meta-model for MDO

Figure 2 presents a meta-model that contains the core concepts of MDO and their interrelationships. We use this meta-model to remind us what evolutionary optimization is and to facilitate the understanding of our framework, which defines each of the concepts appearing in this meta-model.

To formulate an optimization problem, we encode it in terms of a computation space that defines all computation models that can occur in the context of the optimization problem. A problem instance spans a search space that contains only those computation models that represent solutions to this problem instance, the solution models. In addition, there are objective relations (which may be realized by functions) to evaluate how well the solution models satisfy the optimization objectives. Also, there may be feasibility constraints; a solution model that satisfies all feasibility constraints is said to be feasible. A problem instance is itself considered a (potentially infeasible) solution model. A population is a finite multi-set of solution models over a common search space; a sequence of populations is called evolutionary sequence.
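A minimal Python rendering of some of these concepts may help fix the terminology. In the sketch below, which is our own illustration rather than part of the framework, feasibility constraints are Boolean predicates, objective relations are realized by functions that are all assumed to be maximized and compared via Pareto dominance, a population is a multi-set (`collections.Counter`), and an evolutionary sequence is a list of populations.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Generic, Hashable, List, Sequence, TypeVar

S = TypeVar("S", bound=Hashable)  # solution models must be hashable to live in a Counter


@dataclass
class OptimizationProblem(Generic[S]):
    instance: S                                 # the problem instance (itself a solution model)
    constraints: Sequence[Callable[[S], bool]]  # feasibility constraints
    objectives: Sequence[Callable[[S], float]]  # assumption: all objectives are maximized

    def is_feasible(self, s: S) -> bool:
        return all(c(s) for c in self.constraints)

    def dominates(self, s: S, t: S) -> bool:
        """Pareto dominance: s is at least as good in all objectives and better in one."""
        fs = [f(s) for f in self.objectives]
        ft = [f(t) for f in self.objectives]
        return all(a >= b for a, b in zip(fs, ft)) and any(a > b for a, b in zip(fs, ft))


Population = Counter                 # finite multi-set of solution models
EvolutionarySequence = List[Counter]  # sequence of populations
```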

To solve an optimization problem with respect to a particular problem instance, an evolutionary algorithm iteratively evolves a population. A population generator first creates an initial population for a given problem instance. Given a set of evolutionary operators, the evolution is performed following a computation specification that dictates how the evolutionary operators are applied in each iteration. An evolutionary computation of an evolutionary algorithm is represented as an evolutionary sequence which can be generated by that specific algorithm. While element mutation operators are used to perform changes on computation models, a population mutation operator organizes at the population level how a new population is created by applying element mutation operators. Survival selection operators decide which elements from a population survive and are candidates for further evolution. Evolutionary operators usually make decisions based on the given objective relations and constraints. Finally, an evolution is terminated when a given termination condition is met.
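The interplay of these components can be sketched as a generic loop. The Python code below is a simplified illustration under our own assumptions, not the paper's definition: populations are lists (multi-sets), the population mutation operator is fixed to applying one randomly chosen element mutation operator to every element, survival selection receives parents and offspring together, and the termination condition inspects the evolutionary sequence produced so far.

```python
import random
from typing import Callable, List, Sequence, TypeVar

S = TypeVar("S")


def evolve(
    instance: S,
    generate: Callable[[S, random.Random], List[S]],               # population generator
    element_mutations: Sequence[Callable[[S, random.Random], S]],  # element mutation operators
    survive: Callable[[List[S]], List[S]],                         # survival selection
    terminate: Callable[[List[List[S]]], bool],                    # termination condition
    rng: random.Random,
) -> List[List[S]]:
    """Run an evolutionary computation and return its evolutionary sequence."""
    population = generate(instance, rng)
    sequence = [population]
    while not terminate(sequence):
        # Population mutation (one possible choice): mutate every element once.
        offspring = [rng.choice(element_mutations)(s, rng) for s in population]
        population = survive(population + offspring)
        sequence.append(population)
    return sequence
```

For example, with integers as a stand-in for solution models, the element mutations `x + 1` and `x - 1`, and a survival selection that keeps the four largest values, the best value can never decrease from one population to the next, because parents compete with their offspring (an elitist choice).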

4.2 Computation space

A computation space for MDO forms the basis for encoding and solving an optimization problem (see Fig. 2). Basically, it defines a domain-specific modeling language to specify both optimization problems and their solutions. In evolutionary computing, phenotypes, which represent elements externally, are distinguished from genotypes, which represent their internal encodings. In MDO, we do not consider this distinction when formulating an evolutionary algorithm. Problem instances and search space elements are all domain-specific models. It is the task of future work to compare the efficiency of model-based encodings with traditional encodings and to translate models into more efficient encodings as needed. (An example where models are translated to bit strings is given in [37].)

However, to show formal properties and implement evolutionary computation, we choose appropriate formal representations such as typed graphs for formalizing models (see below) and Ecore models for implementing them (in Sect. 7). Since there are several modeling languages used in software engineering, we are looking for a formalization that is generic such that it is not restricted to models of a particular type. Graph-like structures are a natural way to formalize models of different types.

In MDO, computation spaces are defined based on modeling languages, typically specified with meta-models. Several MDO approaches in the literature [15, 38, 66] have chosen to represent problem instances by models and solutions by models with dedicated problem models. This means that each model of a computation space has a particular part, the problem model, which represents important information of the given problem instance and is invariant throughout evolutionary computations. Typical examples of such encoding are the CRA case [15, 38, 59], the NRP case [15], and the SCRUM case [15]. Accordingly, the meta-model for a computation space, called computation meta-model, contains a dedicated problem meta-model. All solution models must conform to the computation meta-model. A problem model is a solution model that is fully typed over the problem meta-model.

A meta-model contains typing information as well as multiplicities and optionally other constraints. We distinguish constraints that restrict the language (called language constraints) from constraints that specify the feasibility of solutions (called feasibility constraints). The problem meta-model induces a subset of the language constraints as problem constraints, namely the constraints that affect only the problem elements. Feasibility constraints specify properties that are expected to be satisfied by reasonable solutions, i.e., they constitute side conditions for the optimization. In contrast, language constraints serve to exclude instances that would not constitute a well-posed optimization problem, or to exclude instances for technical reasons. We distinguish problem constraints because, as will be shown in Proposition 1, they are particularly easy to use in optimization. In the context of graph transformation, constraints are formalized with nested graph constraints [33].
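The distinction between language, problem, and feasibility constraints can be mirrored in code. In the hypothetical Python sketch below, each constraint records the node and edge types it mentions, and the problem constraints are approximated syntactically as those language constraints that mention only types of the problem meta-model. The constraint and type names are assumed for the CRA case.

```python
from dataclasses import dataclass
from typing import Any, Callable, FrozenSet, List


@dataclass(frozen=True)
class Constraint:
    name: str
    types: FrozenSet[str]          # node and edge types the constraint refers to
    check: Callable[[Any], bool]   # evaluation on a computation model (not used below)


def problem_constraints(language_constraints: List[Constraint],
                        problem_types: FrozenSet[str]) -> List[Constraint]:
    """PC: the language constraints that only mention problem meta-model types."""
    return [c for c in language_constraints if c.types <= problem_types]
```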

A computation model is given by an instance model that conforms to a computation meta-model. Its problem model and solution part are fully specified by the types in the computation meta-model. This basic structuring is reflected in the following definitions and is shown in Fig. 3. It is based on graphs typed over type graphs; a type graph contains a node for each node type and an edge for each edge type. The parallels to the structural part of meta-models are therefore striking. Typed graphs are presented in [26] (and recalled in the appendix). For simplicity, we neglect the handling of attributes here.

Appendix A presents a generalized form of computation space based on category theory. A generalized computation space can have various instantiations. For example, it allows models to be defined as typed, attributed graphs using type inheritance, and model changes as typed, attributed graph transformations. All the propositions presented in this section are proven in the appendix based on category theory.

Definition 1

(Computation space). A computation meta-model is a pair \( MM = (\subseteq : TG _{\textrm{P}}\hookrightarrow TG , LC )\) where \(\subseteq \) is an inclusion between type graphs \( TG _{\textrm{P}}\) and \( TG \), and \( LC \) is a set of graph constraints typed over \( TG \), called language constraints. The set \( PC \subseteq LC \), called problem constraints, is the subset of constraints that can be considered as being already typed over \( TG _{\textrm{P}}\). \(( TG _{\textrm{P}}, PC )\) is called the problem meta-model. A computation element or computation model \((E, type _E)\) over \( MM \) is a graph E together with a graph morphism \( type _E: E \rightarrow TG \) such that \(E \models LC \). The computation space over \( MM \) is the set of all computation models over \( MM \).

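Given a computation model \((E, type _E)\) over \( MM \), the model \((E_{\textrm{P}}, type _{E_{\textrm{P}}})\) where \(E_{\textrm{P}} = type _E^{-1}( TG _{\textrm{P}})\) and \( type _{E_{\textrm{P}}} = type _E|_{E_{\textrm{P}}}\) is the problem model and \(E \setminus E_{\textrm{P}}\) is the solution part of \((E, type _E)\).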
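The typing and the problem-model construction of Def. 1 can be prototyped with plain dictionaries. The Python sketch below is an illustration under simplifying assumptions (no attributes, no inheritance, string identifiers, language constraints not checked); it tests whether the typing of a graph is a graph morphism into a type graph and extracts the problem model as the set of all elements typed over \( TG _{\textrm{P}}\). Because \( TG _{\textrm{P}}\) is a subgraph of \( TG \), the result is again a graph.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple


@dataclass
class TypeGraph:
    nodes: Set[str]                         # node types
    edges: Dict[str, Tuple[str, str]]       # edge type -> (source type, target type)


@dataclass
class TypedGraph:
    nodes: Dict[str, str]                   # node id -> node type
    edges: Dict[str, Tuple[str, str, str]]  # edge id -> (source id, target id, edge type)

    def is_typed_over(self, tg: TypeGraph) -> bool:
        """Check that the typing is a graph morphism into tg."""
        if any(t not in tg.nodes for t in self.nodes.values()):
            return False
        for src, tgt, et in self.edges.values():
            if et not in tg.edges:
                return False
            # Source/target of an edge must be typed by source/target of its edge type.
            if (self.nodes[src], self.nodes[tgt]) != tg.edges[et]:
                return False
        return True


def problem_model(e: TypedGraph, tg_p: TypeGraph) -> TypedGraph:
    """E_P: all nodes and edges of e whose types belong to the problem type graph."""
    nodes = {n: t for n, t in e.nodes.items() if t in tg_p.nodes}
    edges = {x: v for x, v in e.edges.items() if v[2] in tg_p.edges}
    return TypedGraph(nodes, edges)
```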

A computation-model morphism, short cm-morphism, m between computation models \((E, type _E)\) and \((F, type _F)\) is a graph morphism \(m: E \rightarrow F\) such that m is compatible with typing, i.e., \( type _F \circ m = type _E\) (Fig. 3). A cm-morphism m is problem-invariant if \(m_{\textrm{P}}\), the restriction of m to the problem model of E, is an isomorphism between \(E_{\textrm{P}}\) and \(F_{\textrm{P}}\).
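The two conditions on cm-morphisms can be checked mechanically for finite models. The self-contained Python sketch below uses an assumed encoding in which a model is a pair of dictionaries (node id to node type; edge id to source, target, and edge type) and a morphism is given by a node map and an edge map. Problem-invariance is tested by checking that the restriction to the problem model is a bijection onto the problem model of the target; for graph morphisms, a bijective morphism is an isomorphism.

```python
from typing import Dict, Set, Tuple

Model = Tuple[Dict[str, str], Dict[str, Tuple[str, str, str]]]  # (node types, edges)


def is_cm_morphism(e: Model, f: Model,
                   node_map: Dict[str, str], edge_map: Dict[str, str]) -> bool:
    """Total, structure-preserving, and type-compatible (type_F o m = type_E)."""
    e_nodes, e_edges = e
    f_nodes, f_edges = f
    if set(node_map) != set(e_nodes) or set(edge_map) != set(e_edges):
        return False
    if any(node_map[n] not in f_nodes or f_nodes[node_map[n]] != t
           for n, t in e_nodes.items()):
        return False
    for x, (src, tgt, et) in e_edges.items():
        y = edge_map[x]
        if y not in f_edges or f_edges[y] != (node_map[src], node_map[tgt], et):
            return False
    return True


def is_problem_invariant(e: Model, f: Model, node_map: Dict[str, str],
                         edge_map: Dict[str, str], problem_types: Set[str]) -> bool:
    """Restriction of m to E_P is a bijection onto F_P (assumes is_cm_morphism holds)."""
    def problem_part(model: Model) -> Tuple[Set[str], Set[str]]:
        nodes, edges = model
        return ({n for n, t in nodes.items() if t in problem_types},
                {x for x, v in edges.items() if v[2] in problem_types})

    (ep_n, ep_e), (fp_n, fp_e) = problem_part(e), problem_part(f)
    img_n = [node_map[n] for n in ep_n]
    img_e = [edge_map[x] for x in ep_e]
    return (len(set(img_n)) == len(img_n) and set(img_n) == fp_n
            and len(set(img_e)) == len(img_e) and set(img_e) == fp_e)
```

An inclusion that covers only part of the problem model, like the one from S into E discussed in Example 1, is a cm-morphism but not problem-invariant.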

Characteristics. The definition of a computation model reflects the core idea that it consists of a problem model (which specifies a problem) and a solution part (which contains information about the solution). The problem model should stay invariant during evolution (which must be ensured); the solution part is developed during an evolutionary computation. A special case is an empty problem model (and problem meta-model). A computation model (meta-model) would be a simple model (meta-model) in this case.

A meta-model may contain constraints to specify the well-formedness of the modeling language it defines. For example, certain elements of the solution part must always occur together or the number of instantiations of a certain problem type must be restricted. The latter would even be a problem constraint since it applies only to the problem model. Language constraints impose hard constraints that any computation model must satisfy at any point in an evolutionary computation.

Example 1

(Computation space). The meta-model underlying the computation space for the CRA case is shown in Fig. 1. We consider the presence of exactly one class model as a problem constraint. Additionally, representing a dependency between two features by multiple edges is not useful. Parallel edges are thus forbidden by further language constraints; avoiding parallel edges between features can actually be realized by problem constraints.

For simplicity, the graph TG in Fig. 4 focuses on the core structural part and shows the computation meta-model for the CRA case without abstract types and names of edge types. Edge types are still distinguishable by the types of their source and target nodes. As there will always be one class model, we also neglect the node type ClassModel and its containment-edges shown in Fig. 1. As for type inheritance, the graph TG shows a flattened version where all inherited edges are shown. (For details on the flattening construction, see [43].) The black part of TG indicates the node and edge types of the problem meta-model TG\(_{P}\), while the red, dashed part with filled node rectangles indicates the components of the solution part of computation models. Note that the solution part itself is usually not a graph.

Figure 4 also shows two computation models E and S. They are both typed over TG and use the same color coding as TG. The type within each node indicates how it maps to the corresponding node in TG. S shows only a part of the problem model of E along with its solution part. It can be included in E; the inclusion morphism from S to E is indicated by numbers. Each node of S is mapped to the node in E with the same number. The mapping of edges is not shown explicitly but can be inferred from the node mapping. All morphisms between the graphs E and S and to the type graph TG are shown by arrows between the graphs in Fig. 4. Note that due to the definition of cm-morphisms, the red and black parts are mapped separately. Problem-invariant morphisms will be used for the definition of element mutation operators in Def. 4.

Remark 1

In the remainder of this paper, we assume that every computation model E and every cm-morphism is typed over a graph \( TG \), even if this is not explicitly stated. For simplicity, we omit the definition of type inheritance in Sect. 4, but use it in the evaluation. The interested reader can find a suitable definition of type graphs with inheritance in [44]. The meta-model in Fig. 1 is an example of a type graph with inheritance: class models and classes can refer to features, which can be attributes or methods. With inheritance, morphisms between computation models are allowed to map objects to objects of compatible types. This means that objects in the image model may have more concrete types than their origins. For example, a Feature object may be mapped to an Attribute object. We explain and prove our formal results for type graphs without inheritance. However, all results can be applied to the case with inheritance as long as inheritance hierarchies are limited to either the problem or the solution part introduced in Def. 1 (i.e., problem elements cannot inherit from solution elements and vice versa). The inheritance hierarchy in Fig. 1, for example, is limited to the problem meta-model. When we prove our results in Appendix A, we also explain why the results are transferable in this way.

4.3 Optimization problem

To formulate an optimization problem, we need a computation space that contains problem instances, solutions, and any other models we need to perform evolutionary computations. As in the literature on evolutionary algorithms, we define an optimization problem in MDO as containing both a set of feasibility constraints and a set of objective relations (see Fig. 2), so that it belongs to the category of Constrained Optimization Problems [30]. Unlike language constraints, feasibility constraints can be violated by a model, and that model remains an element of the modeling language and of the search space. However, a reasonable solution must not violate feasibility constraints. For each optimization objective, there is a corresponding relation that allows comparing solutions in terms of how well they satisfy the respective objective. Ultimately, the extent to which each of the objectives is met determines the perceived quality of a solution. A concrete problem to be optimized is given by a problem instance, which defines its search space within the computation space. This search space includes all models that have the same problem model as the given problem instance.

Definition 2

(Optimization problem. Search space). Let a computation space \(\textit{CS}\) over a meta-model \( MM = (\subseteq : TG _{\textrm{P}}\hookrightarrow TG , LC )\) be given. An optimization problem \(\mathscr {P} = ( FC ,\le _{\textrm{O}})\) over \(\textit{CS}\) consists of

- a set \( FC \) of graph constraints typed over \( TG \), called feasibility constraint set, which defines

  $$\begin{aligned} FE (\textit{CS}, FC ):=\{ E \in \textit{CS}\mid E \models FC \}, \end{aligned}$$

  the set of feasible elements of \(\textit{CS}\), and
- a finite set \(\le _{\textrm{O}}\) of total preorders \(\le _j \, \subseteq \textit{CS}\times \textit{CS}\) for \(j \in J\), where J indexes \(\le _{\textrm{O}}\), which are called objective relations.

A problem instance \( PI \) for \(\mathscr {P}\) is a computation model in \(\textit{CS}\). It defines the search space

$$\begin{aligned} S( PI ) := \{ E \in \textit{CS}\mid E_{\textrm{P}}\cong PI _{\textrm{P}}\}. \end{aligned}$$

Each element of a search space is called a solution model for \( PI \). A solution model E with \(E \models FC \) is called feasible; we also write \(E \in FE (S( PI ), FC )\).

Characteristics. There are two special cases for an optimization problem, both of which are covered by the definition: If \( FC \) is empty, it is an unconstrained optimization problem. All computation models are then automatically feasible, i.e., \( FE (\textit{CS},\emptyset ) = \textit{CS}\). If \(\le _{\textrm{O}}\) is empty (but \( FC \) is not), we get a classical constraint satisfaction problem that only looks for a feasible element in the computation space.

When \(|\le _{\textrm{O}}| = 1\), we have a single-objective problem. An objective is often given as a function and is referred to as an objective function or fitness function in the literature. A metric that measures the ratio of coupling and cohesion can be defined as an objective function in the CRA case. We use objective relations instead of functions because they are more general. We also avoid the term “fitness function” because it is used inconsistently in the literature.

For \(|\le _{\textrm{O}}| > 1\), a multi-objective problem is defined. In this case, the objectives may be conflicting: a solution may be better than another with respect to one objective but at the same time worse with respect to another objective. As stated in [65] by Zitzler et al., “we consider the most general case, in which all objectives are considered equally important – no additional knowledge about the problem is available.” Thus, to compare two elements with regard to multiple objectives, we can use the dominance relation. We say that a solution model E dominates a model F if E is at least as good as F with respect to all defined objective relations and, in addition, strictly better with respect to at least one of them. Formally, if \(E \le _j F\) for all \(j \in J\) and there is at least one \(k \in J\) with \(F \not \le _k E\), then E dominates F. A solution model that is not dominated by any other model of the search space is called a Pareto optimum. The set of all Pareto optima of a search space forms the Pareto front. The set of non-dominated solutions of a population is called the approximation set.
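The dominance relation and the approximation set can be sketched in Python as follows. This is a minimal sketch under the assumption that each objective relation \(\le _j\) is encoded by a numeric key function with \(E \le _j F\) iff key_j(E) <= key_j(F), so smaller values are better; a maximized metric such as cohesion would be negated. The names `dominates` and `approximation_set` are hypothetical.

```python
from typing import Any, Callable, List, Sequence

# Hypothetical encoding: objective relation <=_j is given by a key function,
# with E <=_j F  iff  key_j(E) <= key_j(F)  (smaller is better).
Key = Callable[[Any], float]


def dominates(e: Any, f: Any, objectives: Sequence[Key]) -> bool:
    """E dominates F iff E <=_j F for all j and F <=_k E fails for some k."""
    return all(key(e) <= key(f) for key in objectives) and any(
        not key(f) <= key(e) for key in objectives
    )


def approximation_set(population: Sequence[Any], objectives: Sequence[Key]) -> List[Any]:
    """Members of a population (a multiset, here a list) not dominated by any other member."""
    return [e for e in population
            if not any(dominates(f, e, objectives) for f in population)]
```

For two objectives to be minimized, `approximation_set([(1, 3), (2, 2), (2, 3), (3, 1)], [lambda s: s[0], lambda s: s[1]])` keeps `(1, 3)`, `(2, 2)`, and `(3, 1)`, since `(2, 3)` is dominated by `(1, 3)`.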

Example 2

(CRA problem). In the CRA case, each feature must be assigned to exactly one class. This amounts to two feasibility constraints: each feature is assigned to at least one class, and each feature is assigned to at most one class. For each constraint, the extent of its violation in a solution can be determined by counting the features that violate it.

Two computation models are compared using two metrics for coupling and cohesion. Combining both metrics into a single one (which is called CRA Index in [47]), the CRA case can be considered as a single-objective problem. These metrics can also be used to define two objective relations, which would then lead to a multi-objective problem. Class models can then easily become incomparable because one model has better coupling and another model has better cohesion. Model A dominates model B only if A is at least as good as B in both coupling and cohesion and strictly better in at least one of them; in that case, B is likely to be discarded.

A problem instance is given by a class model that contains a set of features that can use each other (and usually no class is given). It conforms to the type graph in Fig. 4. The problem models of the two computation models S and E shown in Fig. 4 can be used as problem instances. All the models in this figure formalize feasible solutions (albeit for different problem instances) because they provide a single class assignment for each of their features, implying that the feasibility constraints are satisfied.
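The two feasibility constraints of this example can be evaluated by simple counting. The following Python sketch assumes a hypothetical, simplified encoding of the solution part in which each feature is mapped to the set of classes containing it; it is not the graph-based representation of Fig. 4, and the function name is illustrative only.

```python
from typing import Dict, Set, Tuple


def constraint_violations(assignment: Dict[str, Set[str]]) -> Tuple[int, int]:
    """Count violations of the two CRA feasibility constraints.

    `assignment` maps each feature to the set of classes that contain it
    (a hypothetical, simplified encoding of the solution part).
    Returns (#features in no class, #features in more than one class).
    """
    unassigned = sum(1 for classes in assignment.values() if not classes)
    multiply_assigned = sum(1 for classes in assignment.values() if len(classes) > 1)
    return unassigned, multiply_assigned
```

A solution is feasible exactly when both counts are zero; summing them gives one possible overall degree of constraint violation.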

The following lemma states that the validity of problem constraints depends only on the problem model of a computation model. For arbitrary constraints (typed over the entire given meta-model), an analogous statement is obviously false; their validity depends on the entire computation model, not just its problem part. In particular, for a problem instance \( PI \), either every element of its search space \(S( PI )\) satisfies the problem constraints or none does, and which case applies depends only on the problem model of \( PI \).

Lemma 1

Given a computation meta-model \( MM = (\subseteq : TG _{\textrm{P}}\hookrightarrow TG , LC )\) with a set of problem constraints \( PC \subseteq LC \), a typed graph \((E, type _E)\) satisfies the problem constraints from \( PC \) if and only if \((E_{\textrm{P}}, type _{E_{\textrm{P}}})\) satisfies them.

Later, to reason about the quality of evolutionary computations, we need the notion of an evolutionary sequence for a given problem instance. Evolutionary sequences are computed by evolutionary algorithms (see Def. 7).

Definition 3

(Population. Evolutionary sequence). Given an optimization problem \(\mathscr {P}\) over \(\textit{CS}\) and a problem instance \( PI \) for \(\mathscr {P}\), a finite multi-set over \(S( PI )\) is called a population for \( PI \). \(\mathscr {Q}( PI )\) denotes the set of all populations for \( PI \). An evolutionary sequence for \( PI \) is a sequence of populations \(Q_0 Q_1Q_2 \ldots \) with \(Q_j \in \mathscr {Q}( PI )\) for \(j = 0, 1, 2, \ldots \). The set \(\mathscr {E}( PI )\) consists of all evolutionary sequences for \( PI \).

4.4 Evolutionary operators

One of the most important configuration parameters of an evolutionary algorithm is its set of evolutionary operators. These can be divided into change (or variation) operators and selection operators. Evolutionary operators control the evolution with the goal of finding solutions of ever better quality. In order not to leave the search space of a problem instance, evolutionary operators need to be problem-invariant, i.e., they must not change the problem model of solutions.

In this paper, we stick to mutation operators as the only kind of change operators; crossover operators will be studied in the future. Mutations are usually considered as local changes of search space elements. Therefore, in the context of MDO, it is natural to define so-called element mutations as model transformations, as done in the literature [15, 38, 66].

We specify an element mutation operator for computation models as a model transformation rule with a pre-condition (L) and a post-condition (R). Nodes and edges of \(L \setminus R\) are deleted, while nodes and edges of \(R \setminus L\) are created. In addition, the application of a rule can be prohibited by negative application conditions (NACs). A NAC N is an extension of L and the pattern \(N \setminus L\) is forbidden to occur. A concrete mutation of a computation model is realized as a rule application.

In the formal specification of element mutations, we follow the algebraic approach to graph transformation, which takes a transformation rule with a match and performs a transformation step on a graph representing a solution model. In the following, we give a set-theoretic definition (omitting many details) and stick to simple application conditions for mutation operators. A generalized form of transformation that allows more complex forms of application conditions is defined in the appendix. A detailed definition of graph transformation based on set and category theory can be found, for example, in [26]. All models and their relations used to define an element mutation are shown in Fig. 5.

Definition 4

(Element mutation operator. Element mutation). Given a computation space \(\textit{CS}\) over a meta-model \( MM = (\subseteq : TG _{\textrm{P}}\hookrightarrow TG , LC )\) including problem constraints \(PC \subseteq LC\), an (element) mutation operator mo is defined by \(mo = (L {\mathop {\hookleftarrow }\limits ^{ le }} I {\mathop {\hookrightarrow }\limits ^{ ri }} R, \mathscr {N})\), where L, I, and R are typed graphs over \( TG \) with \( le \) and \( ri \) being injective, typed morphisms. \(\mathscr {N}\) is a set of negative application conditions defined by injective, typed morphisms \(n_j: L \hookrightarrow N_j\) with \(j \in J\) (where J enumerates the elements of \(\mathscr {N}\)).

Given a computation model E, an element mutation operator mo is applicable at an injective cm-morphism \(m: L \rightarrow E\) if the dangling condition holds: A node \(n \in E\) must not be deleted if there is an edge \(e \in E \setminus m(L)\) which would dangle afterward. In addition, there must not be a cm-morphism \(q_j:N_j \hookrightarrow E\) with \(q_j \circ n_j = m\) for any \(j \in J\); m is then called a match. An element mutation \(E \Longrightarrow _{mo} F\) using mo at match m is defined as follows: If mo is applicable at m, construct graph \(C = E \setminus m(L \setminus le (I))\). Then, \(F = C \dot{\cup }(R \setminus ri (I))\), i.e., a new copy of \(R \setminus ri (I)\) is added disjointly to graph C, so that the dangling edges of \(R \setminus ri (I)\) are connected to the nodes in C as prescribed by their preimages in I (see Fig. 5).

An element mutation \(E \Longrightarrow _{mo} F\) is called pc-preserving if \(E \models PC \) implies \(F \models PC \). An element mutation \(E \Longrightarrow _{mo} F\) is called lc-preserving if \(E \models LC \) implies \(F \models LC \). An element mutation \(E \Longrightarrow _{mo} F\) is called problem-invariant if \(E_{\textrm{P}}\cong F_{\textrm{P}}\). An element mutation operator mo is called pc-preserving (lc-preserving) if every element mutation \(E \Longrightarrow _{mo} F\) with \(E \models PC \) (\(E \models LC \)) is pc-preserving (lc-preserving). An element mutation operator mo is called problem-invariant if every element mutation \(E \Longrightarrow _{mo} F\) is problem-invariant.

A sequence \(E = E_0 \Longrightarrow _{mo_1} E_1 \Longrightarrow _{mo_2} \ldots \Longrightarrow _{mo_n} E_n = F\) of element mutations (where mutation operators \(mo_i\) and \(mo_j\) are allowed to coincide for \(1 \le i \ne j \le n\)) is denoted by \(E \Longrightarrow ^*_{M} F\), where M is a set containing all mutation operators that occur. For \(n = 0\), we have \(E = F\).
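A single element mutation from Def. 4 can be sketched set-theoretically in Python. This is a minimal sketch, not the framework's implementation, under several assumptions: typed graphs are encoded as dicts of node and edge ids, le and ri are inclusions (so I is the set of ids shared by L and R), the match m is given explicitly and is assumed to be type-compatible without inheritance, and NACs are omitted. The names `Graph` and `apply_rule` are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, Optional, Tuple

Edge = Tuple[str, str, str]  # (source node id, target node id, edge type)


@dataclass
class Graph:  # hypothetical encoding of a typed graph
    nodes: Dict[str, str] = field(default_factory=dict)   # node id -> node type
    edges: Dict[str, Edge] = field(default_factory=dict)  # edge id -> (src, tgt, type)


def apply_rule(E: Graph, L: Graph, R: Graph, m: Dict[str, str]) -> Optional[Graph]:
    """Element mutation E => F for the rule L <-le- I -ri-> R at match m.

    Assumptions of this sketch: le and ri are inclusions, so I consists of the ids
    shared by L and R; m maps ids of L's nodes and edges to ids in E and is assumed
    to be type-compatible; NACs are not handled. Returns None if the dangling
    condition fails.
    """
    if len(set(m.values())) != len(m):
        raise ValueError("the match must be injective")
    del_nodes = {m[n] for n in L.nodes if n not in R.nodes}
    del_edges = {m[e] for e in L.edges if e not in R.edges}
    matched_edges = {m[e] for e in L.edges}
    # Dangling condition: no edge of E \ m(L) may be attached to a deleted node.
    for eid, (src, tgt, _) in E.edges.items():
        if eid not in matched_edges and (src in del_nodes or tgt in del_nodes):
            return None
    # C = E \ m(L \ le(I)); E itself is left unchanged.
    F = Graph({n: t for n, t in E.nodes.items() if n not in del_nodes},
              {e: st for e, st in E.edges.items() if e not in del_edges})
    # F = C plus a fresh, disjoint copy of R \ ri(I); preserved nodes are glued via m.
    taken = set(E.nodes) | set(E.edges)
    fresh = (f"_{k}" for k in count() if f"_{k}" not in taken)
    image = {n: m[n] for n in R.nodes if n in L.nodes}
    for n, t in R.nodes.items():
        if n not in L.nodes:
            image[n] = next(fresh)
            F.nodes[image[n]] = t
    for e, (src, tgt, t) in R.edges.items():
        if e not in L.edges:
            F.edges[next(fresh)] = (image[src], image[tgt], t)
    return F
```

For instance, a rule that deletes an edge x from c1 to f and creates an edge y from c2 to f moves a feature between two classes, while a rule whose L consists of a single Class node and whose R is empty is blocked by the dangling condition whenever the matched class still contains a feature.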

Remark 2

Element mutation operators must not change the types of nodes or edges. This also applies to node types in type hierarchies. Any nodes that are newly created must not have abstract types (such as Feature) since these types must not be instantiated. However, abstract types are useful in the pre-condition of a mutation operator, or in its NACs. For example, in the CRA case, the moving of a method or attribute from one class to another can be specified with only one operator if the abstract type Feature is used. Otherwise, two operators would be required, one for moving a method and one for moving an attribute. When applying a mutation operator, a node can be mapped to a node with a more concrete type if they are compatible with related edge types.

Characteristics. In order not to leave the computation space, element mutations must be lc-preserving. In principle, this condition can be satisfied in two ways: Either the system checks after each mutation whether the resulting model still satisfies LC (given that the input model does) and retracts the mutation if it does not, or the modeler designs the underlying element mutation operator to be lc-preserving.
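The first, check-and-retract option can be sketched as a generic Python wrapper. This is a hedged illustration with hypothetical names: it assumes that the input model already satisfies all language constraints, that a mutation returns a new model (or None if it is not applicable) without modifying its input, and that each language constraint is available as a Boolean predicate.

```python
from typing import Callable, Iterable, Optional, TypeVar

Model = TypeVar("Model")


def guarded_mutation(
    model: Model,
    mutate: Callable[[Model], Optional[Model]],
    language_constraints: Iterable[Callable[[Model], bool]],
) -> Model:
    """Apply `mutate` and retract the result if it violates a language constraint.

    Hypothetical helper: `model` is assumed to satisfy all constraints already;
    `mutate` must return a new model (or None if it is not applicable) and must
    not modify `model` in place.
    """
    candidate = mutate(model)
    if candidate is None:
        return model  # operator not applicable: model stays unchanged
    if all(check(candidate) for check in language_constraints):
        return candidate
    return model  # retract: the mutation would leave the computation space
```

With a model encoded as a tuple of (class, feature) edges and a no-parallel-edges predicate, a mutation that duplicates an existing edge is retracted, whereas one that adds a new edge is kept. The check costs one constraint evaluation per mutation, which is what the second option avoids.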

We will see below that preservation of problem constraints PC can easily be ensured by not creating, deleting, or changing elements that are typed over the problem meta-model. In this case, the resulting computation model F has the same problem model as E, and if E satisfies PC, so does F. Proposition 1 states that a mutation operator is problem-invariant if the morphisms \( le \) and \( ri \) in the operator are problem-invariant; this sufficient condition can be checked statically. Hence, Proposition 1 provides a static analysis check that can be easily performed.
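For inclusions, the static check is simple: the restriction of \( le : I \hookrightarrow L\) to problem parts is an isomorphism iff every problem-typed element of L already belongs to I, and analogously for \( ri \). The following Python sketch assumes a hypothetical encoding in which a graph is a dict from element ids (nodes and edges) to type names and I keeps its ids in L and R; the problem type names used in the usage example, such as `uses`, are illustrative assumptions, not the edge type names of Fig. 1.

```python
from typing import Dict, Set

# Hypothetical encoding: a graph is a dict from element ids (nodes and edges) to type names,
# and le: I -> L, ri: I -> R are inclusions (an element of I keeps its id in L and R).
Elements = Dict[str, str]


def inclusion_is_problem_invariant(smaller: Elements, larger: Elements,
                                   problem_types: Set[str]) -> bool:
    """smaller -> larger restricts to an isomorphism on problem parts iff every
    problem-typed element of `larger` already belongs to `smaller`."""
    return all(eid in smaller for eid, ty in larger.items() if ty in problem_types)


def rule_is_problem_invariant(L: Elements, I: Elements, R: Elements,
                              problem_types: Set[str]) -> bool:
    """Sufficient static check in the sense of Proposition 1: le and ri are problem-invariant."""
    return (inclusion_is_problem_invariant(I, L, problem_types)
            and inclusion_is_problem_invariant(I, R, problem_types))
```

A rule that only deletes and creates encapsulation edges passes this check, whereas a rule that deletes a Feature node fails it.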

However, preserving PC still does not ensure that an element mutation remains in the computation space. For this, one must additionally ensure that an element mutation operator cannot introduce violations of the remaining language constraints, i.e., of constraints from \( LC \setminus PC \). There are several approaches to (semi-)automatically check whether a transformation rule preserves a given graph constraint [9, 41, 50, 51, 53]. If a constraint is first-order, it can be expressed as a nested graph constraint and then ensured by integrating it as an application condition into a transformation rule [33, 54]; the resulting rule forms an element mutation operator that preserves the given constraint. This constraint integration was automated in the tool OCL2AC by Nassar et al. [50, 51]. However, the resulting element mutation operator is more restricted than the original one since it is only applicable if the mutations do not violate the integrated constraints. Consequently, it may be applicable to computation models only rarely or not at all. When checking a computed application condition, it may turn out to be subsumed by an already existing application condition of the respective operator, or it may cover a case that is known not to occur in solutions at all (such as multiple class models in the CRA case). In these cases, the integration of the computed application condition is not necessary.

Example 3

(Element mutation). As a concrete example, we consider the element mutation operator moveFeatureToExClass in Fig. 6; it moves a feature from one existing class to another. Given the computation model E in Fig. 4 and applying moveFeatureToExClass at the match consisting of 3:Attribute (an instance of a subtype of the abstract type Feature), 5:Class, 6:Class, and the matched edge between 5:Class and 3:Attribute, we get model F in Fig. 7 as a result. Note that F is a computation model with the same problem model as E. Based on model E, four different element mutations can be performed with moveFeatureToExClass: 1:Method, 2:Method, 3:Attribute, or 4:Attribute can change its encapsulating class.

Operator moveFeatureToExClass is problem-invariant since \(L_{\textrm{P}}\cong R_{\textrm{P}}\) and is therefore pc-preserving (as shown below). However, it can introduce a language constraint violation by creating a parallel edge between the nodes matched by f:Feature and c2:Class. This can happen in infeasible solution models where a feature is contained in more than one class. To preserve the language constraint, the operator can be extended with a negative application condition that checks whether f:Feature is already assigned to c2:Class.
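The effect of the suggested NAC can be illustrated with a small Python sketch that enumerates all applications of a moveFeatureToExClass-like rule. The encoding is a hypothetical simplification: the solution part is a list of (class, feature) encapsulation edges, kept as a list rather than a set so that parallel edges remain representable. The function name is illustrative and not taken from the paper's tooling.

```python
from typing import List, Set, Tuple

# Hypothetical encoding of the solution part: a list of (class, feature) encapsulation
# edges; a list (rather than a set) so that parallel edges are representable.
Edge = Tuple[str, str]


def move_feature_to_ex_class(classes: Set[str], edges: List[Edge]) -> List[List[Edge]]:
    """Return the results of all applications of a moveFeatureToExClass-like rule."""
    results = []
    for i, (c1, f) in enumerate(edges):      # match f, c1, and the edge between them
        for c2 in sorted(classes):           # match c2 (sorted only for determinism)
            if c2 == c1:                     # matches are injective: c1 and c2 differ
                continue
            if (c2, f) in edges:             # NAC: f is already assigned to c2
                continue
            # delete the matched edge and create an edge from c2 to f
            results.append(edges[:i] + edges[i + 1:] + [(c2, f)])
    return results
```

For a model with two classes and four features, each assigned to one class, the sketch yields four mutations, matching the count discussed above for E. In an infeasible model where a feature is in both classes, the NAC blocks every move, so no parallel edge is created.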

Result of an element mutation of solution model E in Fig. 4

The next proposition ensures that an element mutation \(E \Longrightarrow _{mo} F\) returns a computation model F that has the same problem model as E (formally: there exists an isomorphism between \(E_{\textrm{P}}\) and \(F_{\textrm{P}}\)) if operator mo is problem-invariant. This is not obvious in MDO, since unrestricted element mutations can easily change the problem model.

Proposition 1

Let \(mo = (L {\mathop {\hookleftarrow }\limits ^{ le }} I {\mathop {\hookrightarrow }\limits ^{ ri }} R, \mathscr {N})\) be an element mutation operator, and let \(E, F \in \textit{CS}\) be computation models such that there is an element mutation \(E \Longrightarrow _{mo} F\) (compare Fig. 5). Then the operator mo is problem-invariant if the morphisms \( le \) and \( ri \) in mo are problem-invariant.

Together with Lemma 1, the above proposition clarifies that problem constraints are trivial to treat in optimization: If the given problem instance \( PI \) (or equivalently, its problem model \( PI _{\textrm{P}}\)) satisfies the problem constraints, Lemma 1 ensures that each computation model E of the search space \(S( PI )\) does. Proposition 1 then ensures that this also holds for any computation model F obtained by an element mutation \(E \Longrightarrow _{mo} F\). Thus, one only needs to verify (i) that a given problem instance satisfies the problem constraints (otherwise it specifies an ill-posed optimization problem) and (ii) that the morphisms used to specify the element mutation operators are indeed problem-invariant (an easy condition to verify).

Next, we consider mutations of populations. Normally, not the entire population is mutated, but a so-called parent selection decides whether and how often an element is mutated. A parent selection can be considered as the first step of mutating a population. After the selection of elements to be mutated, the actual mutations take place, which are primarily element mutations but can also be sequences of element mutations. Since there are innumerable variations in the literature on how populations can be mutated, especially how parents can be selected, the following definition is deliberately generic.

Definition 5

(Population mutation. Population mutation operator). Let a problem instance \( PI \) for an optimization problem \(\mathscr {P}\) over the computation space \(\textit{CS}\) and a set of element mutation operators M be given. A population mutation operator \( MO \) is a binary relation over \(\mathscr {Q}( PI )\) with \(Q \subseteq Q'\) for each \((Q,Q')\in MO \). For each \(F \in Q'\) there is a (possibly empty) finite sequence of element mutations \(E \Longrightarrow _{M}^* F\) with \(E \in Q\). Each \((Q,Q') \in MO \) is called a population mutation of Q to \(Q^{\prime }\) via \( MO \).

Characteristics. Usually, it is not desired that each element of a population is evolved to an offspring solution. A parent selection decides whether an element is evolved once, several times, or not at all. In addition, population mutations can differ in how many offspring solutions they generate and in which and how many element mutations they apply to evolve an existing solution, i.e., in the finite transformation sequences that produce offspring. The framework leaves open how these sequences are specified. In particular, such a sequence might be a complex programmed graph transformation sequence.

All of these decisions can be based on the fitness of solutions, which is usually determined by the objective relations (e.g., considering the dominance relation) and the satisfaction of the feasibility constraints. However, meta-information about the population can also be taken into account. Due to its generality, our definition supports all these variants.
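One simple instance of Def. 5 can be sketched in Python: a parent selection picks n parents, each parent yields one offspring by at most one element mutation, and the offspring are added to the input population, so that \(Q \subseteq Q'\) holds. The encoding is an assumption of this sketch: an element mutation operator is a function returning all models reachable by one application (empty if it is not applicable), and models are treated as immutable values. All names are hypothetical.

```python
import random
from typing import Any, Callable, List, Sequence

# Hypothetical encoding: an element mutation operator maps a model to the list of
# all models reachable by one application (one entry per match; empty if not applicable).
ElementMutation = Callable[[Any], List[Any]]


def population_mutation(
    population: Sequence[Any],
    operators: Sequence[ElementMutation],
    select_parent: Callable[[Sequence[Any], random.Random], Any],
    n: int,
    rng: random.Random,
) -> List[Any]:
    """Select n parents, derive one offspring from each, and return Q plus the offspring.

    Each offspring results from at most one element mutation, and the input population
    is kept, so the result Q' contains Q as required by Def. 5.
    """
    offspring = []
    for _ in range(n):
        parent = select_parent(population, rng)
        applicable = [op for op in operators if op(parent)]
        if applicable:
            op = rng.choice(applicable)
            offspring.append(rng.choice(op(parent)))
        else:
            offspring.append(parent)  # no operator applicable: the clone stays unchanged
    return list(population) + offspring
```

The parent selection is passed in as a parameter because Def. 5 deliberately leaves it open; a binary tournament is one possible choice.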

Example 4

(Population mutation). In addition to the element mutation operator moveFeatureToExClass shown in Ex. 3, further element mutation operators are addUnassignedFeatureToNewClass, which creates a class and assigns an unassigned feature to it, addUnassignedFeatureToExClass, which assigns an unassigned feature to an existing class, and deleteEmptyClass, which removes an empty class from the model. Apart from their names, which were chosen for clarity, these mutation operators are analogous to those in [14, 15]. All the element mutation operators presented form the set \( M \).

For parent selection, the NSGA-II algorithm [24], for example, uses binary tournament selection, which we reproduce as follows: Let a population mutation operator CraMutation rely on n binary tournament selections (where n is the size of the population) to decide which solutions to evolve. In each tournament, two randomly chosen elements of the input population compete with regard to the following fitness specification: (1) Given two feasible solutions (assigning each feature to exactly one class), a solution that dominates the other in terms of the coupling and cohesion metrics is considered fitter. (2) A feasible solution is always fitter than an infeasible one. (3) If two infeasible solutions are compared, the one with a lower degree of constraint violation is the fitter one. The extent to which a constraint is violated can be determined by the number of its violations. Alternatively, if a constraint is defined by attribute values, the extent to which a desired value is missed can also be considered. The overall degree of constraint violation of a solution can then be calculated by summing up the extents of violation of the individual constraints.
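Rules (1) to (3), together with the tie-break for equally infeasible solutions described in the following paragraph, can be expressed as a single comparison. The Python sketch below assumes that each solution has already been evaluated into a summed violation degree (0 for feasible solutions) and a tuple of objective values where smaller is better, so cohesion would be negated. In this sketch, ties are broken randomly instead of by crowding distance. `Evaluated`, `fitter`, and `binary_tournament` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Evaluated:  # hypothetical summary of an evaluated solution
    violation: float                # summed extent of constraint violations (0 means feasible)
    objectives: Tuple[float, ...]   # objective values; smaller is better (assumption)


def pareto_dominates(x: Sequence[float], y: Sequence[float]) -> bool:
    return all(a <= b for a, b in zip(x, y)) and any(a < b for a, b in zip(x, y))


def fitter(a: Evaluated, b: Evaluated) -> bool:
    """True if a is fitter than b according to rules (1)-(3) and the CRA tie-break."""
    if a.violation != b.violation:
        # Rule (2): feasible (0) beats infeasible; rule (3): lower violation wins.
        return a.violation < b.violation
    # Rule (1) for two feasible solutions; for equally infeasible ones, the CRA tie-break.
    return pareto_dominates(a.objectives, b.objectives)


def binary_tournament(population: Sequence[Evaluated], rng: random.Random) -> Evaluated:
    a, b = rng.sample(list(population), 2)
    if fitter(a, b):
        return a
    if fitter(b, a):
        return b
    return rng.choice([a, b])  # tie: NSGA-II would use the crowding distance instead
```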

For the CRA case, if two infeasible solutions are equal in terms of the degree of constraint violations, the solution that dominates the other in terms of the coupling and cohesion metrics is the fitter solution. Solutions of equal fitness with respect to the aforementioned rules can be further distinguished by their crowding distance [24]. The crowding distance estimates the proximity of a solution to other solutions in a population. To maintain diversity in a population, solutions with a high crowding distance are considered fitter. (For more details, we refer to [24].) The solution with the higher fitness wins the tournament. It is cloned, and the clone is mutated with an arbitrarily chosen applicable element mutation operator of \( M \). If none of the element mutation operators is applicable, the clone remains unchanged. When n tournaments have been run, all clones are merged with the elements of the input population to form the output population.
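The crowding distance used for tie-breaking can be computed per front as in NSGA-II [24]: for each objective, the solutions are sorted, the boundary solutions receive an infinite distance, and every inner solution accumulates the normalized gap between its two neighbors. The following Python sketch follows that common formulation. It assumes that objective vectors are plain tuples of numbers and that the name `crowding_distance` is illustrative.

```python
import math
from typing import List, Sequence


def crowding_distance(front: Sequence[Sequence[float]]) -> List[float]:
    """NSGA-II-style crowding distance for the objective vectors of one front."""
    n = len(front)
    if n == 0:
        return []
    dist = [0.0] * n
    for k in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][k])
        lo, hi = front[order[0]][k], front[order[-1]][k]
        dist[order[0]] = dist[order[-1]] = math.inf  # boundary solutions are always preferred
        if hi == lo:
            continue  # all values equal for this objective: no contribution
        for pos in range(1, n - 1):
            i = order[pos]
            dist[i] += (front[order[pos + 1]][k] - front[order[pos - 1]][k]) / (hi - lo)
    return dist
```

For the front `[(0, 4), (1, 2), (3, 1), (4, 0)]`, the boundary solutions get an infinite distance and the two inner ones get 1.5 and 1.25, so the solution with more room around it is considered fitter.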

In a population, not all its elements are required or desired to form the next generation. A survival selection filters the next generation from a population according to certain criteria. The following definition is also deliberately generic, as we do not want to exclude certain implementations from our framework.

Definition 6

(Survival selection. Survival selection operator). Given a problem instance \( PI \) for an optimization problem \(\mathscr {P}\), a survival selection operator SO is a binary relation over \(\mathscr {Q}( PI )\) such that \(Q^{\prime }{} \subseteq Q\) holds for all \((Q,Q^{\prime }{}) \in SO\). Each \((Q,Q^{\prime }{}) \in SO\) is called a survival selection that selects \(Q^{\prime }{}\) from Q.

Characteristics. Following nature’s example, survival selection is used to realize the concept of survival of the fittest. The concept of fitness often coincides with that used in the parent selection of population mutation; the objective relations and feasibility constraints are the most influential criteria for defining fitness. However, fitness in survival selection can also be viewed from a different perspective. Factors such as the age of solutions, i.e., how many generations they have survived, or the diversity of the resulting next generation can be considered. To avoid losing the most valuable solutions achieved so far, survival selection typically employs elitism, i.e., the fittest solutions are (partially) preserved for the next generation. On the other hand, survival of less optimal or even infeasible solutions may allow broader exploration of the search space. As with population mutation, there are many ways to implement survival selection.

Example 5

(CRA survival selection). A survival selection operator corresponding to the one used in the NSGA-II algorithm can be based on the notion of fitness presented in Ex. 4. First, the three rules that take the constraints and objectives into account are used to create a preorder (rank) of population elements, i.e., each rank contains solutions that have the same overall constraint violation and do not dominate each other. Beginning with the rank of the best solutions, all solutions from subsequent ranks are selected until selecting the entire next rank would exceed the desired number of solutions in the output population. At this point, solutions from the next rank are selected, taking their crowding distance (introduced in Ex. 4) into account, until the desired size of the output population is reached.
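This survival selection can be sketched in Python with a straightforward (not performance-optimized) ranking. The sketch assumes a hypothetical encoding of each evaluated solution as a pair of summed constraint violation and objective values to be minimized. It ranks solutions by the three rules of Ex. 4, which also rank equally infeasible solutions by dominance. It then fills the next generation rank by rank and truncates the last rank by crowding distance. All function names are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical encoding of an evaluated solution:
# (summed constraint violation, objective values where smaller is better).
Sol = Tuple[float, Tuple[float, ...]]


def c_dominates(a: Sol, b: Sol) -> bool:
    """Rules of Ex. 4: less violation wins; equal violation -> Pareto dominance."""
    if a[0] != b[0]:
        return a[0] < b[0]
    x, y = a[1], b[1]
    return all(p <= q for p, q in zip(x, y)) and any(p < q for p, q in zip(x, y))


def rank(pop: Sequence[Sol]) -> List[List[int]]:
    """Partition population indices into ranks of mutually non-dominated solutions."""
    remaining = list(range(len(pop)))
    fronts: List[List[int]] = []
    while remaining:
        front = [i for i in remaining
                 if not any(c_dominates(pop[j], pop[i]) for j in remaining if j != i)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


def crowding(pop: Sequence[Sol], idx: List[int]) -> Dict[int, float]:
    """NSGA-II-style crowding distance of the solutions with indices idx."""
    dist = {i: 0.0 for i in idx}
    for k in range(len(pop[idx[0]][1])):
        order = sorted(idx, key=lambda i: pop[i][1][k])
        lo, hi = pop[order[0]][1][k], pop[order[-1]][1][k]
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi > lo:
            for p in range(1, len(order) - 1):
                gap = pop[order[p + 1]][1][k] - pop[order[p - 1]][1][k]
                dist[order[p]] += gap / (hi - lo)
    return dist


def survival_selection(pop: Sequence[Sol], size: int) -> List[Sol]:
    """Fill the next generation rank by rank; truncate the last rank by crowding distance."""
    selected: List[int] = []
    for front in rank(pop):
        if len(selected) + len(front) <= size:
            selected.extend(front)
            continue
        dist = crowding(pop, front)
        front.sort(key=lambda i: dist[i], reverse=True)
        selected.extend(front[: size - len(selected)])
        break
    return [pop[i] for i in selected]
```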

4.5 Evolutionary algorithm

A given instance of an optimization problem is solved using an evolutionary algorithm. Since MDO has been performed with several evolutionary algorithms and there are many more variants of evolutionary algorithms in the literature, we want to keep the definition of evolutionary algorithm deliberately generic. More precisely, we define a skeleton of an evolutionary algorithm as shown in the pseudo code in Algorithm 1. The minimal set of parameters for an evolutionary algorithm includes a problem instance and a set of evolutionary operators. Concrete evolutionary algorithms may have further parameters, such as an initial population. If the algorithm does not receive the initial population as input, it first generates an initial population for the given problem instance PI. Then it iteratively applies operators from the given set \( OP \) of evolutionary operators to evolve this population. To this end, the computation specification C specifies how the operators of \( OP \) are orchestrated over the course of an iteration. After each iteration, a termination condition t determines whether further iterations are performed. Algorithm 1 leaves G, C, and t completely generic since the framework is intended to support all kinds of evolutionary algorithms. In the following, we define such a generic algorithm and its semantics.
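The skeleton described above can be mirrored by a short Python sketch in which G, C, and t are passed as callables. This is an illustration under stated assumptions, not a transcription of Algorithm 1. Populations are lists, C performs one complete iteration, and t is evaluated on the evolutionary sequence computed so far. `evolutionary_algorithm` and `alternate` are hypothetical names.

```python
import random
from typing import Any, Callable, List

Population = List[Any]  # a finite multiset of models, represented as a list


def evolutionary_algorithm(
    problem_instance: Any,
    generate: Callable[[Any, random.Random], Population],        # G
    iterate: Callable[[Population, random.Random], Population],  # C (one iteration)
    terminate: Callable[[List[Population]], bool],               # t
    rng: random.Random,
) -> List[Population]:
    """Run the generic skeleton and return the evolutionary computation Q0 Q1 ... Qn."""
    sequence = [generate(problem_instance, rng)]
    while not terminate(sequence):
        sequence.append(iterate(sequence[-1], rng))
    return sequence


def alternate(mutation, selection):
    """A computation specification C: apply a population mutation, then a survival selection."""
    return lambda population, rng: selection(mutation(population, rng))
```

In a toy run where models are integers, G returns four copies of the problem instance, the population mutation adds a randomly shifted copy of each model, and the survival selection keeps the four smallest values. The smallest value in the population then never increases from one iteration to the next, which illustrates the elitism mentioned in Sect. 4.4.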

Definition 7

(Evolutionary algorithm and its semantics). Given a problem instance \( PI \) for an optimization problem \(\mathscr {P}\) and a set of evolutionary operators \( OP \) for \(\mathscr {P}\), let \(\rightarrow _{ OP } \subseteq \mathscr {Q}( PI ) \times \mathscr {Q}( PI )\) be defined as

$$\begin{aligned} \rightarrow _{ OP } := \bigcup _{ op \in OP } op. \end{aligned}$$

An evolutionary algorithm \(\mathscr {A}( PI , OP ) = (G,C,t)\) consists of a population generator G to generate a starting population \(Q_0\) based on \( PI \), a computation specification C based on \( OP \), and a termination condition t.

The semantics Sem(C) of C is a subset of the binary relation \(\rightarrow _{ OP }^*\). The termination condition t is a predicate over \(\mathscr {E}( PI )\), the set of evolutionary sequences for \( PI \). An evolutionary computation of \(\mathscr {A}( PI , OP )\) is an evolutionary sequence \(Q_0 Q_1 Q_2 \ldots \) \(\in \mathscr {E}( PI )\) with \((Q_{j},Q_{j+1}) \in Sem(C)\) for \(j = 0,1, \ldots \). Each \((Q_{j},Q_{j+1})\) is called an iteration. The semantics \(Sem(\mathscr {A}( PI , OP ))\) of \(\mathscr {A}( PI , OP )\) is the set of all its evolutionary computations satisfying t. An execution of \(\mathscr {A}( PI , OP )\) results in an evolutionary computation of \(Sem(\mathscr {A}( PI , OP ))\). A set of executions of \(\mathscr {A}( PI , OP )\), called execution batch of \(\mathscr {A}( PI , OP )\), yields a batch result, a multi-set of evolutionary computations of \(Sem(\mathscr {A}( PI , OP ))\).

Characteristics. In general, evolutionary algorithms are non-deterministic since the generator for initial populations, the computation specification, and the evolutionary operators can introduce probabilistic behavior. Therefore, an evolutionary algorithm generally determines a set of possible evolutionary computations. When experimenting with an evolutionary algorithm, it is executed a certain number of times. Each execution of the algorithm results in an evolutionary computation that is part of its semantics. An experiment usually includes a whole batch of executions. It may happen that the same computation is obtained several times in one experiment. Therefore, an execution batch may result in a multi-set of evolutionary computations. As a special case, algorithms with deterministic behavior (leading to a single computation) are also included.

The generator G, the computation specification C and the termination condition t can be instantiated by familiar patterns for existing evolutionary algorithms, adapted to MDO. In most cases, a random initialization procedure is used to generate an initial population from a given problem instance. Properties such as feasibility or diversity of elements in the initial population can affect the efficiency and effectiveness of an evolutionary algorithm. Ideally, the entire search space should be reachable from an initial population. However, depending on the problem, specifying diversity, implementing an efficient generator, and analyzing reachability can be challenges in themselves. Traditionally, the set of operators consists of at least one population change operator and a survival selection; the computation specification combines them by first applying the change operators sequentially, followed by the survival selection to choose the population for the next iteration. More sophisticated concepts (such as self-adaptive evolutionary algorithms [30]) can also be expressed using our framework but may require the implementation of more complex computation specifications.
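The traditional pattern for C (apply the change operators in sequence, then apply survival selection) can be sketched as a higher-order function. This is a minimal sketch under stated assumptions. An element is simply a number x with cost x * x, which is not a real MDO model. The population mutation keeps the parents and adds one mutated copy of each. Survival selection is truncation to the four cheapest elements. All names are hypothetical. As in Example 6, the starting population consists of identical copies of one element.

```python
import random
from typing import Callable, List, Sequence

Population = List[float]   # assumption: an element is a number x with cost x * x
ChangeOperator = Callable[[Population, random.Random], Population]


def traditional_step(change_operators: Sequence[ChangeOperator],
                     survival_selection: Callable[[Population], Population]):
    """Build C: apply the change operators in order, then select the next population."""
    def step(population: Population, rng: random.Random) -> Population:
        for operator in change_operators:
            population = operator(population, rng)
        return survival_selection(population)
    return step


def mutate_all(population: Population, rng: random.Random) -> Population:
    """Population mutation: keep the parents and add one mutated copy of each."""
    return population + [x + rng.uniform(-1.0, 1.0) for x in population]


def keep_best_four(population: Population) -> Population:
    """Survival selection: truncation to the four elements with the lowest cost."""
    return sorted(population, key=lambda x: x * x)[:4]


step = traditional_step([mutate_all], keep_best_four)
rng = random.Random(42)
population = [5.0] * 4                 # copies of the same starting element
best = [min(x * x for x in population)]
for _ in range(20):
    population = step(population, rng)
    best.append(min(x * x for x in population))
print(len(population), best[0], best[-1] <= best[0])   # 4 25.0 True
```

Because parents survive into the selection pool, the best cost can never get worse from one iteration to the next. This elitism is a property of this particular choice of operators, not of the framework.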

Example 6

(CRA evolutionary algorithm). For the CRA case, consider an example evolutionary algorithm with an initial population of 100 models, all equal to the given problem instance. The set \( OP \) contains the population mutation operator CraMutation introduced in Example 4. Also, \( OP \) contains the selection operator presented in Example 5. The computation specification C prescribes that both operators in \( OP \) are applied alternately, starting with the mutation of a population, followed by the selection of a population for the next iteration.

An example termination condition t is the following: It monitors the progress of an evolutionary computation with respect to the improvement of the approximation set. The improvement between the approximation sets \(A_1, A_2\) of the populations of two iterations is measured by the Euclidean distance of the solution vectors of their solutions. For each solution E, its solution vector \(v_{\textrm{S}}(E)\) consists of the values of the cohesion and coupling metrics and two values reflecting the extent to which the two feasibility constraints are violated. For \(E_2 \in A_2\), let \(d^{A_1}_{\textrm{min}}(E_2)\) be the minimum Euclidean distance of \(v_{\textrm{S}}(E_2)\) to the solution vectors of all solutions in \(A_1\). The distance between \(A_1\) and \(A_2\) is then defined as

Let a finite evolutionary computation \(Q_0 Q_1 \ldots Q_k\) and a corresponding sequence of approximation sets \(A_0 A_1 \ldots A_k\) be given. Furthermore, let a sequence of progression indices \(x_1 x_2 \ldots x_i\) be defined with \(1 \le x_j \le k\) for all \(1 \le j \le i\) as follows. The first progression index \(x_1\) represents the iteration at which the approximation set changed for the first time. Further progression indices represent iterations where the approximation set has improved by at least 3 percent compared to the approximation set of the iteration represented by the previous progression index. Thus, for any progression index \(x_j\) with \(1 < j \le i\), it holds that

Let the sequence of progression indices be complete, i.e.,

hold for all m, n with \(x_{j-1}< m < x_j\) and \(x_i < n \le k\). The termination condition t is satisfied if \(k - x_i = 100\).
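Only some parts of the termination condition in Example 6 can be written down directly from the text: the solution vector \(v_{\textrm{S}}(E)\), the minimum Euclidean distance \(d^{A_1}_{\textrm{min}}(E_2)\), and the final check \(k - x_i = 100\). The Python sketch below covers only these parts. It does not reproduce how the \(d_{\textrm{min}}\) values are combined into a distance between approximation sets, nor the 3-percent progression test, and it takes the progression indices as given. The function names are hypothetical. Returning `False` when no progression index exists yet is our assumption.

```python
import math
from typing import Sequence, Tuple

# v_S(E): cohesion, coupling, and the violation degrees of the two feasibility constraints
SolutionVector = Tuple[float, float, float, float]


def d_min(v: SolutionVector, previous: Sequence[SolutionVector]) -> float:
    """d_min^{A_1}(E_2): minimum Euclidean distance of v_S(E_2) to the vectors of A_1."""
    return min(math.dist(v, w) for w in previous)


def terminated(progression_indices: Sequence[int], k: int, patience: int = 100) -> bool:
    """t for Q_0 ... Q_k: satisfied if k - x_i equals patience (100 in Example 6)."""
    if not progression_indices:
        return False   # assumption: the text does not cover the case without any progression index
    return k - progression_indices[-1] == patience


print(d_min((0.0, 0.0, 0.0, 0.0), [(3.0, 4.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)]))  # 1.0
print(terminated([1, 5], k=105), terminated([1, 5], k=104))                       # True False
```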

5 Soundness and completeness

Evolutionary algorithms aim at finding optimal solutions or at least approximating them. The operators chosen determine the effectiveness and efficiency of the search. In this section, we will consider two fundamental properties of sets of element mutation operators that can affect the effectiveness and efficiency of evolutionary algorithms: soundness and completeness. Soundness refers to preserving the feasibility of solutions and completeness refers to preserving the reachability of all feasible solutions. Investigating whether properties such as soundness and completeness actually have an impact on the effectiveness and efficiency of evolutionary search is of particular interest in the context of MDO. One of its promises is that domain knowledge can be integrated into problem-specific search operators, thereby improving evolutionary search. Determining general properties that operator sets should have provides guidelines and limits for constructing problem-specific operators.

While evolutionary algorithms typically operate on populations, in this paper we first introduce our notions at the level of element operators. There are two reasons for this: First, we deliberately introduced the population mutation operators in general terms. At the level of element operators, it is still clear what soundness and completeness should mean. At the level of population operators, it becomes more complex; we think that it might be more promising to look for appropriate definitions for more concrete (classes of) population operators. Second, we also develop our definitions from the point of view of analyzability. The properties we define can be analyzed statically since they are based on element operators. Analyzing comparable properties at the level of population operators would likely be more difficult and costly. The fact that an element operator has a particular property with respect to all or certain classes of solutions needs to be checked only once. At the level of population operators, runtime verification might be required to obtain a particular property. We will outline the possibilities for static analysis after the respective definitions and give some hints on how to define the respective properties for the more general level of population operators.

We consider an element mutation operator sound if it ensures the feasibility of all generated solution models under the assumption that the input models are already feasible.

Definition 8

(Soundness of element mutation (operator sets)). Let \(\mathscr {P} = ( FC ,\le _{\textrm{O}})\) be an optimization problem for a computation space CS and let mo be an element mutation operator. An element mutation \(E \Longrightarrow _{mo} F\) with \(E \in FE (\textit{CS}, FC )\) is sound if \(F \in FE (\textit{CS}, FC )\).

An element mutation operator mo is sound if every element mutation \(E \Longrightarrow _{mo} F\) via mo with \(E \in FE (\textit{CS}, FC )\) is sound. A set of element mutation operators is sound if each of its operators is sound.

Note that a population mutation that starts with feasible solutions can lead to a population with only feasible solutions, even if the element mutation operators are not sound. This is because the soundness of the element mutation operators is a sufficient condition for preserving the feasibility of solutions but not a necessary condition.

Example 7

(CRA soundness). In the CRA case, the element mutation operator moveFeatureToExClass in Fig. 6 is sound because it reassigns a feature to another class, i.e., the feature is neither left without a class assignment nor given a second one. In contrast, an operator that simply removes a feature from a class is obviously unsound.
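Definition 8 and Example 7 can be made concrete with a Python sketch that checks soundness by exhaustive enumeration over a small, bounded set of feasible CRA models. This is a bounded test, not a proof, and it is not one of the static analyses referred to below. Several assumptions apply:

- A model is represented only by the contents of its unnamed classes. Attributes, methods, and dependencies of the real meta-model are ignored.
- The instance has three hypothetical features, f1 to f3.
- Feasibility means that every feature lies in exactly one class, which covers both constraints named in the example.
- `remove_feature_from_class` is a hypothetical stand-in for the unsound operator mentioned in the text.

```python
from itertools import product
from typing import Callable, Iterable, List, Set, Tuple

FEATURES = ("f1", "f2", "f3")          # hypothetical problem instance with three features
Model = Tuple[Tuple[str, ...], ...]    # simplified model: the contents of its unnamed classes


def canon(classes: Iterable[Iterable[str]]) -> Model:
    """Canonical form, since classes carry no identity in this simplification."""
    return tuple(sorted(tuple(sorted(c)) for c in classes))


def is_feasible(m: Model) -> bool:
    """Both constraints: every feature lies in at least one and at most one class."""
    return all(sum(f in c for c in m) == 1 for f in FEATURES)


def move_feature_to_ex_class(m: Model) -> List[Model]:
    """All results of moving one feature from its class to another existing class."""
    results = []
    for i, j in product(range(len(m)), repeat=2):
        if i == j:
            continue
        for f in m[i]:
            classes = [set(c) for c in m]
            classes[i].discard(f)
            classes[j].add(f)
            results.append(canon(classes))
    return results


def remove_feature_from_class(m: Model) -> List[Model]:
    """Hypothetical unsound operator: remove one feature from its class."""
    results = []
    for i, c in enumerate(m):
        for f in c:
            classes = [set(x) for x in m]
            classes[i].discard(f)
            results.append(canon(classes))
    return results


def feasible_models(max_classes: int) -> Set[Model]:
    """All feasible models with 1..max_classes classes (empty classes allowed)."""
    models = set()
    for k in range(1, max_classes + 1):
        for labels in product(range(k), repeat=len(FEATURES)):
            classes = [[f for f, label in zip(FEATURES, labels) if label == idx]
                       for idx in range(k)]
            models.add(canon(classes))
    return models


def is_sound_on(op: Callable[[Model], List[Model]], models: Iterable[Model]) -> bool:
    """Definition 8 restricted to a finite set of feasible input models."""
    return all(is_feasible(f) for e in models for f in op(e))


FE = feasible_models(max_classes=3)
print(is_sound_on(move_feature_to_ex_class, FE))    # True
print(is_sound_on(remove_feature_from_class, FE))   # False
```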

Static analysis. In the formal framework developed so far, element mutation operators are not necessarily sound. It is up to the modeler to prove that concrete element mutation operators are sound. We discussed above (after Def. 4) which approaches exist in the literature to (semi-)automatically check whether a transformation rule preserves a given graph constraint. Since feasibility constraints are also specified as graph constraints, these approaches can also be used to check soundness. In principle, it is also possible to check at runtime whether unsound operators preserve feasibility (and, if not, to undo their application), but this requires additional computational effort at runtime.
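The runtime alternative (check feasibility after applying an unsound operator and undo the application if necessary) can be sketched as a wrapper. This is a minimal sketch under our own assumptions. A model is a dictionary from features to class names, with `None` meaning unassigned, so only the "at least one class" constraint can be violated in this representation. "Undo" is realized by applying the operator to a deep copy and keeping the input if the result is infeasible. All names are hypothetical.

```python
import copy
import random
from typing import Callable, Dict, Optional

Model = Dict[str, Optional[str]]   # assumption: feature -> class name, None = unassigned
Operator = Callable[[Model, random.Random], Model]


def guarded(op: Operator, feasible: Callable[[Model], bool]) -> Operator:
    """Runtime check: apply op to a copy and undo it (keep the input) if feasibility is lost."""
    def apply(model: Model, rng: random.Random) -> Model:
        candidate = op(copy.deepcopy(model), rng)   # the copy is part of the extra runtime cost
        return candidate if feasible(candidate) else model
    return apply


def all_assigned(m: Model) -> bool:
    return all(c is not None for c in m.values())


def unassign_random_feature(m: Model, rng: random.Random) -> Model:
    m[rng.choice(sorted(m))] = None                 # unsound: leaves a feature without a class
    return m


def move_random_feature_to_c2(m: Model, rng: random.Random) -> Model:
    m[rng.choice(sorted(m))] = "C2"                 # keeps every feature assigned
    return m


model = {"f1": "C1", "f2": "C1", "f3": "C2"}
rng = random.Random(0)
undone = guarded(unassign_random_feature, all_assigned)(model, rng)
kept = guarded(move_random_feature_to_c2, all_assigned)(model, rng)
print(undone is model, all_assigned(kept))   # True True
```

The copy and the feasibility check are paid on every application, which is the runtime overhead that a statically proven sound operator avoids.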

Outlook on population operators and runtime verification. Since feasibility is a property of an individual solution, it is not immediately obvious how soundness should be defined for population mutation or survival selection (operators). One might consider requiring that at least the proportion of feasible solutions in a population be preserved. Preserving this proportion (while allowing enough newly computed solutions to be selected for the next population) might require sophisticated control over the selection of solutions and element mutation operators during population mutation to ensure that enough feasible offspring are computed. Note that the probability of this could be increased by the use of sound element mutation operators (without further control): in (most) evolutionary algorithms, feasible solutions are preferred over infeasible ones in both selection for reproduction (here: for mutation) and survival selection.
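The preference for feasible solutions during survival selection, and the proportion of feasible solutions in a population, can be illustrated with a small sketch. It is a single-objective simplification that we assume for illustration. Each toy element is a pair of a cost and a feasibility flag. Selection truncates a list sorted first by feasibility and then by cost. The function names are hypothetical.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def feasibility_first_selection(population: List[T], size: int,
                                feasible: Callable[[T], bool],
                                cost: Callable[[T], float]) -> List[T]:
    """Truncation survival selection: feasible before infeasible, then lower cost first."""
    return sorted(population, key=lambda e: (not feasible(e), cost(e)))[:size]


def feasible_share(population: List[T], feasible: Callable[[T], bool]) -> float:
    """Proportion of feasible solutions in a population."""
    return sum(1 for e in population if feasible(e)) / len(population)


def is_ok(e: Tuple[float, bool]) -> bool:
    return e[1]


def cost(e: Tuple[float, bool]) -> float:
    return e[0]


# Toy elements (cost, is_feasible); infeasible elements may have a lower cost.
population = [(1.0, False), (5.0, True), (3.0, True), (0.5, False)]
survivors = feasibility_first_selection(population, 2, is_ok, cost)
print(survivors)                                                             # [(3.0, True), (5.0, True)]
print(feasible_share(population, is_ok), feasible_share(survivors, is_ok))  # 0.5 1.0
```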

In our evaluation, we investigate whether the use of sound element mutation operators supports the finding of optimal solutions more efficiently and effectively.

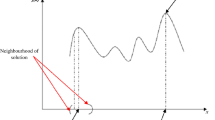

Next, we consider the completeness of a set of element mutation operators. It is satisfied if, for a given problem instance, all feasible solutions of its search space can be generated at each point of an optimization.

Definition 9

(Completeness of element mutation operator sets). Let \(\mathscr {P} = ( FC ,\le _{\textrm{O}})\) be an optimization problem, \( PI \) a problem instance for \(\mathscr {P}\), and M a set of element mutation operators. The set \( M \) is complete if, for every solution model \(E \in S( PI )\) and every feasible solution model \(F \in FE (S( PI ), FC )\), there exists a finite sequence of element mutations \(E \Longrightarrow _{M}^* F\).

When only element mutations via a given set of mutation operators are considered, the completeness of that set is a sufficient (but not a necessary) condition for the reachability of all optimal solutions. Note that an evolutionary algorithm may still miss these optimal solutions since it further constrains which element mutations are actually applied. Therefore, the completeness of a set of element mutation operators does not imply that an evolutionary algorithm using that set definitely reaches all optimal solutions. Conversely, an evolutionary algorithm that uses an incomplete set of element mutation operators can, in principle, still find optimal solutions. However, using an incomplete set of element mutation operators bears the risk of cutting off entire regions of the search space that contain optimal solutions. Knowing whether a set of element mutation operators is complete allows the developer to make an informed decision about using it.

Example 8

(CRA completeness). The set of element mutation operators discussed in Ex. 4 is not complete since no new classes can be created after all features are assigned. To obtain a complete set of element mutation operators, we make a small change: we add an element mutation operator moveFeatureToNewClass that moves an already assigned feature to a new class. The resulting set of element mutation operators is complete. Any computation model (feasible or not) can be converted into a feasible model with one class by using the operator addUnassignedFeatureToNewClass at most once, the operator addUnassignedFeatureToExClass as often as possible, and the operators moveFeatureToExClass and deleteEmptyClass as often as needed. This feasible model with one class can then be transformed into any feasible model using the operators moveFeatureToExClass and moveFeatureToNewClass. In summary, every feasible model is reachable from any model. Note that this argument (implicitly) uses the fact that computation models satisfy the language constraints of the CRA case: general graphs that are typed over the meta-model of the CRA case could contain more than one class model or classes that are not contained in a class model. In such situations, we could not, for example, assign two features that are contained in different class models to the same class. Hence, our (extended) set of operators is not complete for general graphs.
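The completeness argument of Example 8 can be cross-checked mechanically on a tiny instance. The Python sketch below applies Definition 9 literally by breadth-first search. Several assumptions apply:

- It uses the same simplified representation as before: a model is the contents of its unnamed classes, over three hypothetical features.
- The five operators are implemented as we read them from the text; the snake_case names are our renderings.
- Because moveFeatureToNewClass can create arbitrarily many empty classes, a hypothetical bound `MAX_CLASSES` keeps \(S( PI )\) finite, so the result holds only for this bounded space.
- The set of Ex. 4 is taken to be the four operators other than moveFeatureToNewClass.

```python
from collections import deque
from itertools import combinations, combinations_with_replacement, permutations
from typing import Callable, Iterable, List, Sequence, Set, Tuple

FEATURES = ("f1", "f2", "f3")         # hypothetical problem instance
MAX_CLASSES = 3                       # assumption: bound that makes S(PI) finite
Model = Tuple[Tuple[str, ...], ...]   # simplified model: contents of its unnamed classes
Operator = Callable[[Model], List[Model]]


def canon(classes: Iterable[Iterable[str]]) -> Model:
    return tuple(sorted(tuple(sorted(set(c))) for c in classes))


def unassigned(m: Model) -> List[str]:
    return [f for f in FEATURES if not any(f in c for c in m)]


def is_feasible(m: Model) -> bool:
    return all(sum(f in c for c in m) == 1 for f in FEATURES)


def add_unassigned_feature_to_new_class(m: Model) -> List[Model]:
    if len(m) >= MAX_CLASSES:
        return []
    return [canon(list(m) + [(f,)]) for f in unassigned(m)]


def add_unassigned_feature_to_ex_class(m: Model) -> List[Model]:
    return [canon(m[:i] + (c + (f,),) + m[i + 1:])
            for f in unassigned(m) for i, c in enumerate(m)]


def move_feature_to_ex_class(m: Model) -> List[Model]:
    results = []
    for i, j in permutations(range(len(m)), 2):
        for f in m[i]:
            classes = [list(c) for c in m]
            classes[i].remove(f)
            classes[j].append(f)          # a duplicate entry is removed by canon
            results.append(canon(classes))
    return results


def move_feature_to_new_class(m: Model) -> List[Model]:
    if len(m) >= MAX_CLASSES:
        return []
    results = []
    for i, c in enumerate(m):
        for f in c:
            classes = [list(x) for x in m]
            classes[i].remove(f)
            results.append(canon(classes + [[f]]))
    return results


def delete_empty_class(m: Model) -> List[Model]:
    return [canon(m[:i] + m[i + 1:]) for i, c in enumerate(m) if not c]


def all_models() -> Set[Model]:
    """S(PI) under the bound: every multiset of at most MAX_CLASSES feature subsets."""
    subsets = [s for r in range(len(FEATURES) + 1) for s in combinations(FEATURES, r)]
    return {canon(ms) for k in range(MAX_CLASSES + 1)
            for ms in combinations_with_replacement(subsets, k)}


def reachable(start: Model, ops: Sequence[Operator]) -> Set[Model]:
    seen, queue = {start}, deque([start])
    while queue:
        m = queue.popleft()
        for op in ops:
            for n in op(m):
                if n not in seen:
                    seen.add(n)
                    queue.append(n)
    return seen


def is_complete(ops: Sequence[Operator]) -> bool:
    """Definition 9: every feasible model is reachable from every model."""
    models = all_models()
    feasible = {m for m in models if is_feasible(m)}
    return all(feasible <= reachable(e, ops) for e in models)


EX4 = [add_unassigned_feature_to_new_class, add_unassigned_feature_to_ex_class,
       move_feature_to_ex_class, delete_empty_class]
EXTENDED = EX4 + [move_feature_to_new_class]
print(is_complete(EX4), is_complete(EXTENDED))   # False True
```

The search only confirms the argument for this bounded toy space. It does not replace the manual proof for arbitrary problem instances.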

Static analysis. We are not aware of any formal approach that automatically checks the completeness of sets of element mutation operators as defined above. Basically, the problem is a reachability problem that can be analyzed for a single model using model checking for graph transformation [56]. However, we do not ask about the reachability of a single model from a single model but about the reachability of a (possibly infinite) set of models from each possible model. Perhaps surprisingly, in all three example cases of our evaluation, the same simple technique can be used to prove the completeness of a set of element mutation operators manually. As sketched for the CRA case in the example above, one looks for a single model for which one can argue that any model can be transformed into it and that it can be transformed (via the given element mutation operators) into any feasible model. This (manual) analysis is still static in the sense that it only needs to be done once for a given set of element mutation operators. We selected our case studies before developing their operator sets and proving these sets complete, and the same simple proof technique worked in all cases. We are therefore confident that proving completeness manually will also be feasible in other cases.

Outlook on population operators and runtime verification. If a chosen set of element mutation operators is complete, we can be sure that, in principle, all optimal feasible solutions can be found using these operators. However, much weaker forms of completeness suffice to obtain this property. It is sufficient that, during an evolutionary computation, every feasible solution remains reachable from at least one element of the current population; it need not be reachable from all elements. If we call such a population complete, a survival selection operator would be completeness-preserving if it transforms complete populations into complete populations. Note that completeness of a set of element mutation operators, as introduced above, ensures this milder notion and, as mentioned above, can be argued statically.
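The milder notion of a complete population can be checked by a breadth-first search that starts from all population elements at once. The sketch below is hypothetical throughout. The search space consists of the integers 0 to 9, the single operator increments by one, and even numbers count as feasible. This operator set is not complete in the sense of Definition 9, since 0 is unreachable from 7. Nevertheless, a population containing 0 is complete, and a survival selection that discards 0 would not be completeness-preserving.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List, Set


def population_complete(population: Iterable[Hashable],
                        feasible: Set[Hashable],
                        successors: Callable[[Hashable], Iterable[Hashable]]) -> bool:
    """Milder notion: every feasible solution is reachable from SOME population element."""
    seen = set(population)
    queue = deque(seen)
    while queue:
        for n in successors(queue.popleft()):
            if n not in seen:
                seen.add(n)
                queue.append(n)
    return feasible <= seen


# Toy search space (hypothetical): solutions 0..9, one operator x -> x + 1.
def increment(x: int) -> List[int]:
    return [x + 1] if x < 9 else []


EVEN = {0, 2, 4, 6, 8}   # feasible solutions in the toy space
print(population_complete([0, 7], EVEN, increment))   # True: 0 reaches every even number
print(population_complete([7], EVEN, increment))      # False: dropping 0 loses completeness
```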

Note also that the completeness of element mutation operator sets as introduced here allows a freer choice of initial populations. While an initial population can still affect the search, a complete set of element mutation operators ensures that, in principle, every feasible element can be reached, regardless of the initial population chosen.