Abstract

Distributed, software-intensive systems (e.g., in the automotive sector) must fulfill communication requirements under hard real-time constraints. The requirements have to be documented and validated carefully using a systematic requirements engineering (RE) approach, for example, by applying scenario-based requirements notations. The resources of the execution platforms and their properties (e.g., CPU frequency or bus throughput) induce effects on the timing behavior, which may lead to violations of the real-time requirements. Nowadays, the platform properties and their induced timing effects are verified against the real-time requirements by means of timing analysis techniques mostly implemented in commercial-off-the-shelf tools. However, such timing analyses are conducted in late development phases since they rely on artifacts produced during these phases (e.g., the platform-specific code). In order to enable early timing analyses already during RE, we extend a scenario-based requirements notation with allocation means to platform models and define operational semantics for the purpose of simulation-based, platform-aware timing analyses. We illustrate and evaluate the approach with an automotive software-intensive system.


1 Introduction

Distributed, software-intensive systems are becoming more and more complex. For instance, in the automotive domain, the growing number of functionalities has led to thousands of software operations distributed across hundreds of electronic control units (ECUs) that communicate via multiple bus systems [104]. Cyber-physical systems additionally communicate among themselves via wireless ad hoc networks (e.g., automotive vehicle-to-X communication) to provide more advanced functionalities. More generally, these systems increasingly rely on message-based communication. Additionally, the correctness of such systems depends not only on their functional correctness but also on the time at which actions are performed: They are real-time systems. Since a timing error can endanger human lives, these systems have to fulfill hard real-time requirements.

For example, the so-called Emergency Braking & Evasion Assistance System (EBEAS) [64, Chapter 4] is an automotive vehicle-to-vehicle driver assistance system, which coordinates with other vehicles (and other in-vehicle ECUs) to autonomously perform actions like emergency braking or evasion of obstacles. Performing emergency braking or evasion only milliseconds too late can harm the life of the passengers and other lives in the environment. Usually, such functionality is subject to end-to-end real-time requirements (e.g., the EBEAS has to perform emergency braking within 50 time units after it detected an obstacle).

Violations of such real-time requirements can occur for various reasons: The ECUs executing the software have restricted resources (e.g., processing power, memory) that increase execution times; the buses and wireless communication media have restricted resources (e.g., throughput, latency) that increase transmission times; the preemption induced by scheduling policies increases response times, etc. More generally, the various properties of the particular resources of the execution platform (resource properties) impact the timing behavior by inducing timing effects (i.e., delays) during the provision of the actual functionality.

Hence, safety standards for the development of software-intensive systems like the automotive-specific ISO 26262 [67] require the estimation of execution times and needed communication resources. Additionally, to make sure that real-time requirements are fulfilled, timing analyses particularly for the highly safety-critical parts of the system under development shall be performed.

Most of the state-of-the-art approaches for timing analyses taking into account the execution platform apply simulative techniques typically implemented in commercial-off-the-shelf tools (e.g., [102, 113]). However, such approaches are applied late in the development process, mostly because they rely on the existence of the execution platform or the compiled platform-specific code [29, 90, 91] (e.g., to compute or measure a worst-case execution time [107]). The detection and fixing of such problems in later engineering phases causes costly development iterations [13, 103]. Consequently, there is a need to apply platform-aware timing analyses earlier in the development process, ideally in the requirements engineering (RE) phase. Particularly, the timing-relevant platform resource properties are typically known or well estimated in such early engineering phases due to the knowledge from prior development projects [60, 91].

To enable timing analyses already in the early RE phase, related work provides means to specify and analyze timed behavioral models (typically relying on scenario- or automata-based notations), thereby abstracting from the final platform-specific artifacts. However, approaches analyzing timed scenario-based models require pre-calculating the timing effects induced by the resource properties and specifying them as part of the time-constrained scenarios [45, 55, 56, 119] or as part of design models that are verified against the scenarios [77, 78, 81, 82]. Approaches analyzing automata-based models likewise require specifying the pre-calculated timing effects as part of the automata [2, 11, 70, 71, 79, 98], require detailed task models like the state-of-the-art approaches mentioned above [4, 5], or provide neither simulation nor visualization means to reveal the causes of real-time requirement violations [40]. In summary, most of the related work on analyzing timed models requires reenacting, pre-calculating, and explicitly specifying the timing effects induced by the resource properties (e.g., CPU processing power, bus throughput) in a low-level manner as part of the behavioral models. Thus, timing analysts cannot pragmatically (re-)use platform models with specified resource properties stemming from other sources in the development process (e.g., for documentation and design review purposes) and verify them against the real-time requirements, which hinders a broad acceptance of such approaches.

In order to relieve the timing analysts from the burden to pre-calculate and to specify the timing effects induced by the platform resource properties as part of behavioral models, we propose an approach to enable early and platform-aware timing analyses already during the RE phase. Since the targeted real-time software-intensive systems strongly rely on message-based communication, we base the real-time requirements on our timed and component-based dialect [17, 64, 65] of the scenario-based notation of Modal Sequence Diagrams (MSDs) [48]. Like the related work, the modeling and analysis means provided by our dialect enable specifying and validating real-time requirements but incorporate platform-specific aspects only insufficiently. Thus, to provide both an abstract specification of the execution platform with its particular resource properties and the allocation of MSD specifications to the execution platform, we furthermore extend platform modeling concepts of the real-time modeling UML profile Marte [93]. Based on the modeling languages mentioned above, we mainly introduce a new operational semantics for platform-aware MSDs dedicated to timing analyses. This semantics encompasses an extended MSD message event handling semantics as inspired by Tindell et al. [115] and particularly encapsulates the computation of the resource properties into platform-induced timing effects. This enables verifying the timing effects w.r.t. the real-time requirements specified by timed MSDs in timing analyses through applying simulation and model checking in our tool suite TimeSquare [28]. To operationalize the semantics, we apply our Gemoc approach [22, 80] for the specification of executable modeling languages. We illustrate and evaluate the approach with the automotive software-intensive system EBEAS.

We position this article w.r.t. related work, our previous works, and its contributions as follows:

- In contrast to related work, our approach does not require the timing effects to be pre-calculated and explicitly specified as part of the behavioral models, so that scenario- and component-based models with real-time requirements and platform models with resource properties can be independently conceived and (re-)used.

- In terms of our previous works, we employ our timed [17, 64] and component-based [65] dialect of MSDs [48] for the specification of time-constrained scenario requirements and software architectures. Furthermore, we apply different languages and the tooling of our Gemoc approach [22, 80] for the specification of our platform-aware MSD semantics. Based on our semantics and taking the platform models and the allocated time-constrained scenario requirements and software architectures as input, Gemoc generates timed models based on our Clock Constraint Specification Language [24]. These models are executable in our timing analysis tool suite TimeSquare [28].

- As the main contribution, we introduce our new operational semantics for platform-aware MSDs through the application of Gemoc, which computes and thereby separates the timing effects from their inducing platform resource properties, and which extends the abstract MSD message event handling semantics as inspired by Tindell et al. [115]. Furthermore, we introduce a new modeling language for enabling the specification of execution platforms with their resource properties and the allocation of MSD specifications to them through extending the platform modeling concepts of the real-time modeling UML profile Marte [93].

In Sect. 2, we introduce the foundations that our approach relies on. Section 3 outlines an overview of the approach. Subsequently, we present it in more detail by first presenting our modeling language for execution platforms and the allocation from requirement scenarios to them (cf. Sect. 4). In Sect. 5, we provide conceptual extensions and definitions for message event semantics and timing effects to be considered by timing analyses. Based on these ingredients, Sect. 6 presents our operational semantics for timing analyses. Section 7 illustrates the results through an exemplary timing analysis and model checking. We describe the evaluation in Sect. 8 and related work in Sect. 9. Finally, we conclude and sketch future work in Sect. 10.

2 Foundations

In the following, we present the foundations for the comprehension of the particular ingredients that our approach relies on. Section 2.1 introduces general foundations on the kind of timing analysis we focus on. In Sect. 2.2, we present the basics of MSDs. Section 2.3 outlines the Marte profile, and Sect. 2.4 introduces the basics of a language for the specification of executable time models. Finally, Sect. 2.5 outlines the Gemoc approach.

2.1 Timing analysis for hard real-time systems

We focus on hard real-time systems, for which the violation of a hard real-time requirement may cause catastrophic consequences (e.g., people are harmed) [18]. Hard real-time systems must be designed to tolerate worst-case conditions [72]. Typically, a schedulability analysis (e.g., [18]) for hard real-time systems investigates whether jobs, each with an activation time, a processing time, and a deadline w.r.t. the activation time, can be scheduled on resources so that all deadlines are always met. As motivated in the introduction, such schedulability analyses are demanded by standards for the development of safety-critical systems.

Response time analysis [8, 112] is a well-established a priori analysis technique to check the timing properties of hard real-time systems, which is implemented in many commercial-off-the-shelf tools (e.g., [102, 113]). It computes upper bounds on the response times of all jobs and checks whether all response times fulfill the corresponding timing requirements. In simplified terms, the response time of a job is defined as its activation time plus its processing time plus the sum of potential preemption times by other jobs. A job can be a task to be executed on a processing unit or a message to be transmitted via a communication medium. In the case of tasks, the job processing time is the execution time that a processing unit needs to execute the task. The worst-case execution times of the tasks are inputs to task response time analyses [105], and their computation requires the final platform-specific code or a very detailed model of the system [107].

In the case of messages, the job processing time is the transmission time that the communication medium needs to transmit the message. Its computation relies on the properties of the physical medium which influence the transmission time (e.g., technology or protocol). Additionally, the activation time also encompasses a queuing jitter that is inherited from the worst-case response time of the sending task [117]. Thus, the results of message response time analyses also depend on the final platform-specific code.
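To make the simplified response time definition above more tangible, the following minimal sketch iterates the classic textbook fixed-point recurrence for fixed-priority preemptive scheduling. It is an illustration under stated assumptions and not the analysis implemented in the cited tools: all jobs are assumed to be activated at time zero without jitter, deadlines are assumed to equal periods, and the function name `response_times` as well as the task parameters are hypothetical.

```python
import math


def response_times(tasks):
    """Worst-case response times under fixed-priority preemptive scheduling.

    tasks: list of (processing_time, period) tuples, sorted from highest to
    lowest priority (assumption: deadline == period, no jitter, no blocking).
    Returns one entry per task: the response time, or None if it exceeds
    the period (i.e., the deadline is missed).
    """
    results = []
    for i, (processing_time, period) in enumerate(tasks):
        r = processing_time
        while True:
            # Preemption time caused by all higher-priority tasks within r.
            preemption = sum(
                math.ceil(r / t_j) * c_j for c_j, t_j in tasks[:i]
            )
            r_next = processing_time + preemption
            if r_next > period:
                r = None  # deadline (== period) is missed
                break
            if r_next == r:
                break  # fixed point reached
            r = r_next
        results.append(r)
    return results


if __name__ == "__main__":
    # Hypothetical task set: (processing time, period) in time units.
    print(response_times([(1, 4), (2, 6), (3, 12)]))
```

The sketch shows why such analyses need processing times as input: without execution or transmission times for each job, the recurrence cannot be evaluated.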

Beyond the timing properties of individual tasks and messages, determining the overall timing properties of distributed real-time systems requires a more holistic view of the system [75]. These timing properties are usually based on event chains, which start with an initial system stimulus, involve multiple software components that may be deployed on different ECUs, and end with the production of an externally observable response event. The timing behavior of event chains results from the occurrence of task start and completion events as well as of the different events involved in the message transmission. The most commonly used event chain timing property is the end-to-end response time, which is defined as the amount of time elapsed between the arrival of an event at the first task and the production of the response by the last task in the chain [90].

This is of specific importance in our approach, because high-level real-time requirements are usually formulated w.r.t. such end-to-end response times of the event chains. That is, such requirements impose timing constraints between an initial system stimulus and an externally observable response of event chains.

To verify such high-level real-time requirements, existing approaches for end-to-end response time analysis like [29, 36, 88, 89, 90, 116] still rely ultimately on the response times of the individual jobs and consequently require the final platform-specific implementation. Thus, they can be applied only in late development phases, like the techniques and tools for the analysis of the individual response times.

Here, we rely on the fact that coarse-grained information about the timing-relevant resource properties is mostly known in the early RE phase from prior projects or expert knowledge [60, 91]. Also, we propose to express the real-time requirements based on Modal Sequence Diagrams introduced in the next section.

2.2 Modal Sequence Diagrams (MSDs)

Scenario-based notations enable the intuitive specification and comprehension of message-based interaction requirements, and UML Interactions [96, Clause 17] provide such a notation as a visual modeling language by means of sequence diagrams.

To make UML Interactions more suitable regarding universal/existential properties, the Modal profile [48] syntactically extends UML Interactions with modeling constructs as known from Live Sequence Charts (LSCs) [23]. Therefore, this profile introduces a UML-compliant form of LSCs, called Modal Sequence Diagrams (MSDs). In previous works, based on the Play-out algorithm [50], we extended MSDs with modeling constructs and operational semantics for component-based software architectures [65] and for high-level real-time requirements [17, 64]. The resulting Real-time Play-out approach [17] defined the operational semantics of such timed MSD requirements and thereby enables their simulative validation.

In this paper, we focus on providing modeling constructs and an operational semantics for the early consideration of execution platform impacts on the timing requirements. However, in order to ease the reading of the proposition, we introduce MSD constructs and semantics (and later our approach) based on the EBEAS example (see Fig. 1). The proposed excerpts of the EBEAS example in Fig. 1 highlight a real-time requirement on the automatic emergency braking maneuver in the case of an obstacle detection.

Figure 1 represents a component-based MSD specification (in the middle) together with involved types (in the top) and one of the MSDs (in the bottom).

The next sections describe more in detail the structure of component-based MSD specifications (Sect. 2.2.1) as well as their basic and timed semantics (Sect. 2.2.2).

2.2.1 Structure of MSD specifications

A component-based MSD specification is structured by means of MSD use cases. Each MSD use case encapsulates for a specific functionality several interrelated scenarios, which specify requirements on the message-based interaction behavior to be provided by the system under development.

An MSD use case encompasses the participants involved in providing the functionality, as well as a set of MSDs describing the requirements on the interactions between these participants. Such an MSD specification is subdivided into three parts: Types, collaborations, and interaction behaviors, explained in the following.

UML interfaces and components provide reusable types for all MSD use cases of the specification. The interfaces encompass operations that are used as message signatures in the MSDs. For example, the class diagram for the UML package ObstacleDetection::Interfaces in the top of Fig. 1 contains an interface Decisions, which contains an operation enableBraking. This interface is used as the required and as the provided interface of one port each of the components SituationAnalysis and VehicleControl, respectively (see package ObstacleDetection::Types in Fig. 1). For any MSD use case, the actual component-based software architecture is defined by roles of the components. More precisely, we specify component roles and their interconnections by using a UML collaboration (dashed ellipse symbol) [96, Clause 11.7] (ObstacleDetection in Fig. 1). We distinguish between system component roles that are controlled by the system under development (component symbols) and environment component roles that are controlled by the environment (cloud symbols).

For example, the system components sa: SituationAnalysis and vc: VehicleControl communicate with each other via the connector sa2vc. The interface Decisions typing the ports of the corresponding component types determines which messages can be exchanged through this connector. Additionally, both system component roles in turn interact with the environment component role esc: ElectronicStabilityControl via dedicated connectors.

The MSDs define the behavior of the collaboration (cf. abstract syntax links ownedBehavior). We distinguish MSDs into requirement MSDs (no stereotype applied) and assumption MSDs (an MSD with the stereotype «EnvironmentAssumption» applied). The former ones specify requirements on the interaction behavior of the system under development, whereas the latter ones specify assumptions on the behavior of the environment. For example, the MSD EmcyBraking in the bottom of Fig. 1 is a requirement MSD specifying the emergency braking behavior of the EBEAS in the case the adaptive cruise control detects an obstacle, whereas an assumption MSD is only indicated.

An MSD itself encompasses MSD messages, which are associated with a sending and a receiving lifeline, an operation signature, and a connector.

For example, the lifeline vc: VehicleControl (receiving the enableBraking message) represents the equally named role in the collaboration. Consequently, the enableBraking message is associated with the operation signature from the Decisions interface and is specified to be sent via the connector sa2vc in the software architecture.

Based on the kind of the sender role, MSD messages are further distinguished into environment messages and system messages. The former ones are messages sent by the environment to the system (e.g., obstacle and standstill), whereas the latter ones are messages sent by the system internally (e.g., enableBraking) or to the environment (e.g., emcyBraking).

After this short introduction about the structure of component-based MSD specifications, we explain the basic and timed MSD semantics in the following.

2.2.2 MSD semantics

An MSD progresses as message events corresponding to the specified MSD messages occur in the system at runtime (i.e., during Play-out or an actual system execution). Each MSD message is of two different kinds, where both kinds determine for the corresponding message events their safety and liveness properties, respectively. In this article, we focus on MSD messages that allow no occurrences of message events that the scenario specifies to occur earlier or later (safety) and whose corresponding message events must occur eventually (liveness). Message events that do not correspond to any MSD messages are ignored, that is, they do not influence the progress of the MSDs and the MSDs do not impose requirements on them.

As message events occur that can be correlated by the Play-out algorithm with MSD messages, the MSDs progress. This progress is captured by the cut, which marks for every lifeline the locations of the MSD messages that were correlated with the message events.

For example, Fig. 1 shows for the depicted MSD in the bottom its particular cuts c0–c4.
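The progress of a cut can be illustrated with a strongly simplified sketch. It treats an MSD as a strictly ordered list of message names, which is an assumption that ignores lifelines, multiple active copies, and the distinction between message kinds. The function name `advance_cut`, the message order, and the event `speedUpdate` are hypothetical and only serve the illustration; this is not the Play-out algorithm itself.

```python
def advance_cut(msd_messages, events):
    """Advance a cut through a strictly ordered list of MSD messages.

    Returns a (status, cut) pair, where cut is the number of MSD messages
    that were correlated with message events so far.
    """
    cut = 0
    for event in events:
        if event not in msd_messages:
            continue  # events unknown to the MSD are ignored
        if event == msd_messages[cut]:
            cut += 1  # the event is expected: the cut progresses
            if cut == len(msd_messages):
                return "completed", cut
        elif cut > 0:
            # The MSD is active and the event is specified to occur
            # earlier or later: safety violation.
            return "safety violation", cut
    # An active MSD still expects events (liveness obligation pending).
    return ("active" if cut > 0 else "inactive"), cut


if __name__ == "__main__":
    # Assumed message order, for illustration only.
    msd = ["obstacle", "enableBraking", "emcyBraking", "standstill"]
    print(advance_cut(msd, ["obstacle", "speedUpdate", "enableBraking"]))
    print(advance_cut(msd, ["obstacle", "emcyBraking"]))
```

With four messages, the cut counter takes five values, which loosely corresponds to the five cuts c0–c4 mentioned above.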

Timed MSDs allow defining real-time requirements by referring to clock variables, which are adopted from Timed Automata [2] and represent real-valued variables that increase synchronously and linearly with time. We distinguish clock resets and time conditions. Clock resets are visualized as rectangles with an hour-glass icon, containing an expression of the form \(c = 0\) over a clock variable c. Time conditions are visualized as hexagons with an hour-glass icon and define assertions w.r.t. clock variables. To this end, each time condition defines an expression of the form \(c \bowtie value\), with a clock c, an operator \(\bowtie \,\in \lbrace <,\le ,>,\ge \rbrace \), and an Integer value value. We distinguish minimal delays (\(\bowtie \,\in \lbrace >,\ge \rbrace \)) and maximal delays (\(\bowtie \,\in \lbrace <,\le \rbrace \)).

For example, the MSD in Fig. 1 contains a clock reset and a maximal delay defining that the message events corresponding to all enclosed MSD messages must occur within 50 time units after the message event occurrence corresponding to the MSD message obstacle prior to the clock reset. Such a combination of a clock reset and a time condition forms a real-time requirement. More complex real-time requirements can be formed by specifying multiple MSDs with constraints on overlapping message events (see also our MSD requirement pattern catalog [38] for details).
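As a small illustration of how a clock reset combined with a maximal delay constrains a run, the following sketch checks a timestamped event trace. It assumes the operator \(\le\) for the maximal delay, a single clock, and a single activation of the scenario; the function name `check_max_delay`, the trace values, and the set of enclosed messages are hypothetical.

```python
def check_max_delay(trace, reset_after, enclosed, bound):
    """Check a maximal delay c <= bound on a timestamped trace.

    trace: list of (message, timestamp) pairs ordered by time.
    reset_after: message whose event precedes the clock reset c = 0.
    enclosed: messages whose events must occur while c <= bound holds.
    Returns the list of violating (message, clock_value) pairs.
    """
    reset_time = None
    violations = []
    for message, timestamp in trace:
        if message == reset_after and reset_time is None:
            reset_time = timestamp  # clock reset: c = 0
        elif reset_time is not None and message in enclosed:
            clock_value = timestamp - reset_time
            if clock_value > bound:  # time condition c <= bound is violated
                violations.append((message, clock_value))
    return violations


if __name__ == "__main__":
    # Hypothetical run in which emcyBraking occurs 55 time units after obstacle.
    run = [("obstacle", 10), ("enableBraking", 25), ("emcyBraking", 65)]
    print(check_max_delay(run, "obstacle", {"enableBraking", "emcyBraking"}, 50))
```

The check only looks at timestamps of message events; where the delays between these events come from is exactly what the platform-aware semantics is meant to determine.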

We opt for applying these existing timed MSD modeling constructs and semantics, instead of ignoring them and adding real-time requirements to the scenarios by means of the Marte profile (cf. next section). By doing so, we do not introduce a further variant of the MSD language and thereby can use the timed MSDs both in Real-time Play-out and in the approach presented in this article.

2.3 Platform modeling and allocation with MARTE

The UML profile Modeling and Analysis of Real-Time Embedded Systems (Marte) [93, 111] provides modeling means for design and analysis aspects for the embedded software part of software-intensive systems. Marte consists of several subprofiles, and we sought to reuse existing suitable concepts from these subprofiles as much as possible. In the following, we introduce the subprofiles we use and/or extend: non-functional properties, generic resource modeling, generic quantitative analysis, and allocations.

- From the Marte subprofile Non-functional Properties Modeling (NFPs) [93, Chapter 7/Annex F.2] and its model library Marte_Library [93, Annex D], we reuse pre-defined measurement units, mostly those for time. We also reuse intervals for numeric data types, percentages, durations, data sizes, and transmission rates.

- The Marte subprofile Generic Resource Modeling (GRM) [93, Chapter 10/Annex F.4] provides modeling means for the specification of generic resources of execution platforms. From this subprofile, we reuse modeling concepts for memory resources, processing resources (with a relative speed factor), communication media (with a transmission rate and blocking time), schedulers (with a scheduling policy), and resource usages (with operation execution times and message sizes).

- The Marte subprofile Generic Quantitative Analysis Modeling (GQAM) [93, Chapter 15/Annex F.10] provides modeling means for the specification of generic and quantitative aspects relevant to automatic analysis techniques. From this subprofile, we reuse so-called analysis contexts, which encompass workload behaviors based on the distribution of stimulus events.

- The Marte subprofile Allocation Modeling (Alloc) [93, Chapter 11/Annex F.5] provides modeling means for the specification of allocations of logical elements (i.e., application software) to physical and technical elements (i.e., execution platform). From this subprofile, we reuse the «allocate» stereotype, which enables the specification of a directed allocation link between software and execution platform components, which are part of an MSD specification (cf. Sect. 2.2.1).

2.4 Clock Constraint Specification Language (CCSL)

Associated with the Marte profile, we proposed in previous work [24, 28] the Clock Constraint Specification Language (CCSL) dedicated to timing specifications. This language is formally defined and supported by tools that enable the analysis of the resulting specifications. CCSL is a formal declarative language for the modeling and manipulation of time in real-time embedded systems [7], initially and informally introduced in Marte [93, Chapter 9/Annex C.3]. The formalism is based on the notion of logical time [37, 76], which was originally designed for distributed and concurrent systems, but which was also used in synchronous languages. CCSL generalizes different descriptions of time, based on the notion of clocks, a clock being an ordered set of instants (or ticks) named \(\mathcal {I}\). The notion provides a sound way to mix synchronous and asynchronous constraints between clocks. Such a mix enables the symbolic specification of partially ordered sets on the instants of clocks, which are well suited for the description of a large set of model control flows (e.g., [41, 42, 73, 85, 101]).

Solving a CCSL model (i.e., doing a run) results in a schedule. A schedule \(\sigma \) over a set of clocks C is a possibly infinite sequence of steps, where a step is a set of ticking clocks \(\sigma : \mathbb {N}\rightarrow 2^C\). For each step, one or several clock(s) can tick depending on the constraints.

The operational semantics of CCSL models [6] specifies how to construct the acceptable schedules step by step and is given as a mapping to a Boolean expression on \(\mathscr {C}\), where \(\mathscr {C}\) is a set of Boolean variables in bijection with C. For any \(c \in \mathscr {C}\), if c is valued to true, then the corresponding clock ticks; if valued to false, then it does not tick. Note that if no constraints are defined, each Boolean variable can be either true or false and, consequently, there are \(2^n\) possible futures for all steps, where n is the number of clocks.

Each time a constraint is added to the specification, it adds Boolean constraints on \(\mathscr {C}\). The Boolean constraints depend on the definition of the constraint and its internal state. When several constraints are defined, their Boolean expressions are put in conjunction so that each added constraint reduces the set of acceptable schedules. (It is a trace refinement according to [3].)
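The Boolean view of a single step can be sketched as follows. The sketch enumerates all \(2^n\) candidate steps and keeps those that satisfy the conjunction of all constraints. It only covers stateless constraints, and the helper names `acceptable_steps`, `ticks_only_with`, and `ticks_together` are hypothetical; it does not reflect how TimeSquare solves CCSL models internally.

```python
from itertools import combinations


def acceptable_steps(clocks, constraints):
    """Enumerate all steps (sets of ticking clocks) accepted by all constraints.

    Each constraint is a Boolean predicate over a step; the predicates are
    put in conjunction, so every added constraint can only remove steps.
    """
    steps = []
    for size in range(len(clocks) + 1):
        for ticking in combinations(clocks, size):
            step = frozenset(ticking)
            if all(constraint(step) for constraint in constraints):
                steps.append(step)
    return steps


def ticks_only_with(sub, sup):
    """Boolean constraint: sub may tick only if sup ticks (sub => sup)."""
    return lambda step: sub not in step or sup in step


def ticks_together(c1, c2):
    """Boolean constraint: c1 ticks if and only if c2 ticks."""
    return lambda step: (c1 in step) == (c2 in step)


if __name__ == "__main__":
    clocks = ["a", "b", "c"]
    print(len(acceptable_steps(clocks, [])))  # unconstrained: 2**3 steps
    print(len(acceptable_steps(clocks, [ticks_only_with("a", "b")])))
```

Adding the second constraint to the first shrinks the set of acceptable steps further, which mirrors the refinement argument above.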

CCSL models are inputs to our tool suite TimeSquare [28], which supports their simulation with the generation of timing diagrams, the animation of UML models, etc. It also supports the exhaustive simulation (when the state space is bounded), enabling the model checking of CCSL models. TimeSquare has been applied for the timing analysis (cf. Sect. 2.1) of software-intensive systems (e.g., [20, 41, 42, 84, 85, 101]).

For a CCSL model, the actual constraints can be specified in two ways, which are explained in the following sections. On the one hand, CCSL provides pre-defined constraints on language level (metamodel level M2) that the engineer can call and pass arguments to at model level (metamodel level M1) (cf. Sect. 2.4.1). On the other hand, a CCSL extension enables the engineer to specify user-defined constraints that can be used like the pre-defined ones afterward (cf. Sect. 2.4.2). Finally, a CCSL model, either based on pre- or user-defined constraints, can be used to perform an exhaustive state space exploration (if the state space is finite) and model checking (cf. Sect. 2.4.3).

2.4.1 Pre-defined CCSL constraints

CCSL defines the two constraint kinds clock expressions and clock relations. André [6] formalizes a set of pre-defined CCSL constraints, which TimeSquare provides as a model library so that these constraints can be conveniently used during the specification and the TimeSquare-based simulation of CCSL models. In the following, we explain the pre-defined clock expressions and relations that we apply in this article.

Clock relations impose (synchronous or asynchronous) orderings between the instants of participating clocks.

- \(\boxed {\subset }\): SubClock (subClock: Clock, superClock: Clock)

This relation constrains all ticks of subClock to coincide with a tick of superClock but not vice versa. That is, subClock can only tick when superClock ticks, but it does not have to.

\[ \forall i_{sub} \in \mathcal {I}_{subClock},\ \exists i_{super} \in \mathcal {I}_{superClock} : i_{sub} \equiv i_{super} \tag{1} \]

where the coincidence relation \(\equiv \) is an equivalence relation (reflexive, symmetric, and transitive). It reflects the fact that two instants have exactly the same logical time.

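- \(\boxed {=}\): Coincides (clock1: Clock, clock2: Clock)

This relation constrains all instants of clock1 and clock2 to coincide. That is, the events represented by clock1 and clock2 must occur simultaneously.

\[ \left( \forall i_{1} \in \mathcal {I}_{clock1},\ \exists i_{2} \in \mathcal {I}_{clock2} : i_{1} \equiv i_{2} \right) \wedge \left( \forall i_{2} \in \mathcal {I}_{clock2},\ \exists i_{1} \in \mathcal {I}_{clock1} : i_{2} \equiv i_{1} \right) \tag{2} \]

- \(\boxed {\prec }\): Precedes (leftClock: Clock, rightClock: Clock)

This relation constrains the \(k^{th}\) instant of leftClock to precede the \(k^{th}\) instant of rightClock \(\forall k \in \mathbb {N}\). That is, the event represented by leftClock always occurs before rightClock.

\[ \forall i_{l} \in \mathcal {I}_{leftClock},\ \forall i_{r} \in \mathcal {I}_{rightClock} : idx(i_{l}) = idx(i_{r}) \Rightarrow i_{l} \prec i_{r} \tag{3} \]

where \(idx(i)\) is the index of the instant i in the instant set of its clock, and the precedence relation \(\mathrel {\prec }\) is a strict order relation (irreflexive, asymmetric, and transitive) between two instants. Figure 2 depicts an exemplary TimeSquare simulation run of this clock relation. In this example, the relation enforces that clock1 always ticks before clock2 ticks.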

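- \(\boxed {\preccurlyeq }\): NonStrictPrecedes (leftClock: Clock, rightClock: Clock)

This non-strict version of the Precedes relation constrains the \(k^{th}\) instant of leftClock to coincide with or precede the \(k^{th}\) instant of rightClock \(\forall k \in \mathbb {N}\). That is, the events can also occur simultaneously.

\[ \forall i_{l} \in \mathcal {I}_{leftClock},\ \forall i_{r} \in \mathcal {I}_{rightClock} : idx(i_{l}) = idx(i_{r}) \Rightarrow i_{l} \preccurlyeq i_{r} \tag{4} \]

where \(idx(i)\) is the index of the instant i in the instant set of its clock, and the non-strict precedence relation \(\mathrel {\preccurlyeq }\) is defined by \(\mathrel {\prec }\vee \equiv \).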
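The clock relations above can be checked on a finite schedule with a few lines of code. The following sketch represents a schedule as a list of steps, each being the set of names of the ticking clocks. The function names are hypothetical, and the check on a finite prefix is only an illustration of the relations, not a replacement for the formal semantics in [6, 24].

```python
def satisfies_sub_clock(schedule, sub, sup):
    """SubClock: whenever sub ticks, sup ticks in the same step."""
    return all(sup in step for step in schedule if sub in step)


def satisfies_coincides(schedule, clock1, clock2):
    """Coincides: clock1 ticks in a step if and only if clock2 ticks."""
    return all((clock1 in step) == (clock2 in step) for step in schedule)


def satisfies_precedes(schedule, left, right, strict=True):
    """(NonStrict)Precedes: the k-th tick of left occurs before (or, if not
    strict, at the latest together with) the k-th tick of right."""
    left_count = 0
    right_count = 0
    for step in schedule:
        left_ticks = left in step
        right_ticks = right in step
        if right_ticks:
            # Ticks of left that may be matched with this tick of right.
            available = left_count
            if not strict and left_ticks:
                available += 1
            if available < right_count + 1:
                return False
        left_count += int(left_ticks)
        right_count += int(right_ticks)
    return True


if __name__ == "__main__":
    run = [{"clock1"}, {"clock2"}, {"clock1", "clock2"}]
    print(satisfies_precedes(run, "clock1", "clock2"))                # strict
    print(satisfies_precedes(run, "clock1", "clock2", strict=False))  # non-strict
```

In the example run, the third step lets both clocks tick together, which the strict relation rejects and the non-strict relation accepts.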

Clock expressions define a new clock based on other clocks and possibly extra parameters. In the following, we describe the expressions used in this article.

-

\(\mathrel {+}\): Union ( clocks: Set(Clock) ) The clock specified by this expression ticks whenever one of the clocks in its parameter set clocks ticks. Consequently, the instant set of the resulting clock (named c here) is such that:

(5)

where \(\equiv \) means coincides with. Intuitively, for all instants of the clocks a and b, there exists an instant of the clock c and there is no instant of c that does not coincide with an instant of a, or b, or both. Figure 3 depicts an exemplary TimeSquare simulation run of this clock expression. In this example, a new clock unionOfCl1Cl2 is specified that ticks whenever one or both of the argument clocks clock1 and clock2 tick.

-

$: DelayFor (clockForCounting: Clock, clockToDelay: Clock, delay: Integer) This expression delays any tick of the clock clockToDelay by delay ticks w.r.t. a reference clock clockForCounting. Note that in this article we use an always-ticking clock globalTime as the argument for the reference clock parameter clockForCounting, so that the clock defined by this expression simply ticks delay instants after clockToDelay (named a below).

(6)

meaning that there exist X instants of b between each instant of a and the corresponding delayed instant of c, and that each instant of c coincides with some instant of b.

-

\(every ... on\): PeriodicOffsetP (baseClock: Clock, period: Integer) The clock specified by this expression ticks on every period-th tick of the baseClock. Note that in this article we use an always-ticking clock globalTime as the argument for baseClock, so that the clock defined by this expression simply ticks on every period-th instant.

(7)

where \(idx(i_{bc})\) is the index of the \(i_{bc}\) instant in \(\mathcal {I}_{bc}\).

-

\(\mathrel {\vee }\): Sup ( clocks: Set(Clock) ) The clock specified by this expression ticks with a clock in the parameter set that does not precede the other clocks; that is, it specifies the supremum of the particular instant sets.

(8)

meaning that there is no clock d that is slower than a or b or faster than c.

We provide the whole formal semantics definition in [24]. These clock constraints are integrated in our tool suite TimeSquare. Additionally, to ease the application of constraints to specific domains, it is possible to specify user-defined constraints, as introduced in the next subsection.
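As an informal illustration of the clock expressions described in this subsection, the following Python sketch computes the resulting clocks on finite traces. It rests on assumptions that are not taken from the formal semantics in [24]: clocks are modelled as increasing lists of step indices, the reference clock of DelayFor is the always-ticking globalTime, and the periodic clock is assumed to tick first on the period-th tick of its base clock. All function names are hypothetical.

```python
from typing import List, Sequence

Clock = Sequence[int]  # assumption: increasing step indices at which the clock ticks


def union(*clocks: Clock) -> List[int]:
    """Union: ticks whenever at least one of the argument clocks ticks."""
    return sorted(set().union(*clocks))


def delay_for(clock_to_delay: Clock, delay: int) -> List[int]:
    """DelayFor against an always-ticking reference clock: shift every tick by `delay`."""
    return [t + delay for t in clock_to_delay]


def periodic(base_clock: Clock, period: int) -> List[int]:
    """Ticks on every period-th tick of `base_clock` (first tick placement is an assumption)."""
    return list(base_clock[period - 1::period])


def sup(*clocks: Clock) -> List[int]:
    """Sup: the k-th tick coincides with the latest of the k-th ticks of the arguments."""
    return [max(ticks) for ticks in zip(*clocks)]
```

For example, `union([1, 4], [2, 4])` yields `[1, 2, 4]`, and `sup([1, 4, 6], [2, 3, 9])` yields `[2, 4, 9]`, since the supremum follows whichever argument clock is later for each tick index.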

2.4.2 User-defined constraints

To ease the specification of domain-specific CCSL constraints, we extended CCSL in prior work with the Model of Concurrency and Communication Modeling Language (MoCCML) [25, 26]. MoCCML is a specific form of automata that integrates seamlessly with the semantics of CCSL. The automata are a way to specify clock relations, which can be simulated in TimeSquare and stored in user-defined model libraries.

Fig. 5: Exemplary TimeSquare simulation run of the MoCCML relation depicted in Fig. 4

For example, Fig. 4 depicts the MoCCML relation MyUser-definedRelation, which has three clock parameters and a local Integer variable counter initialized with zero. Figure 5 depicts a corresponding exemplary TimeSquare simulation run that initializes three clocks and applies the clock relation myRelation typed by the MoCCML relation on them. The automaton specifies two states A and B with a transition from one state to the other for each of the states. Such transitions specify possibly coincident parameter clock triggers, guards, and effects. For example, the transition from A to B fires when both the clock parameters cl1 and cl2 (i.e., the clock arguments clock1 and clock2 in the simulation run) tick simultaneously and additionally the guard [counter < 1] holds. When the transition fires, its effect counter++ increments the counter. The transition from state B to A fires on the tick of the clock parameter cl3 (i.e., the clock clock3 in the simulation run) and sets the counter to zero.

The triggers of the transitions that leave a state specify which clocks are allowed to tick in this state. For example, the clock parameter cl3 is not allowed to tick in state A, and the clock parameters cl1 and cl2 are not allowed to tick in state B. Furthermore, when in state A, the clocks cl1 and cl2 are forced to tick simultaneously to fire the transition. We provide all the details on the formal semantics in [25].
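The behavior of the example automaton described above can be approximated by the following Python sketch. It is not MoCCML or TimeSquare code. The function names are hypothetical, and the treatment of a step in which none of the three clocks ticks (it is accepted and leaves the state unchanged) is an assumption of this sketch.

```python
from typing import Iterable, Optional, Set, Tuple

State = Tuple[str, int]  # (automaton state "A" or "B", value of the local counter)


def step(state: State, ticks: Set[str]) -> Optional[State]:
    """Return the successor state, or None if this set of ticking clocks is rejected."""
    name, counter = state
    if not ticks:
        return state  # assumption: a step without any tick is allowed
    if name == "A" and ticks == {"cl1", "cl2"} and counter < 1:
        return ("B", counter + 1)  # trigger cl1 and cl2, guard [counter < 1], effect counter++
    if name == "B" and ticks == {"cl3"}:
        return ("A", 0)  # trigger cl3, effect: reset the counter
    return None  # any other combination of ticks is forbidden in this state


def run(trace: Iterable[Set[str]]) -> Optional[State]:
    """Replay a trace from state A with counter 0; None means the trace is rejected."""
    state: Optional[State] = ("A", 0)
    for ticks in trace:
        state = step(state, set(ticks))
        if state is None:
            return None
    return state
```

In this sketch, the trace `[{"cl1", "cl2"}, {"cl3"}]` is accepted and ends in state A again, whereas a trace that starts with `{"cl3"}` or with `{"cl1"}` alone is rejected.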

2.4.3 Model checking CCSL models

TimeSquare is a direct implementation of the formal operational semantics as specified in our previous work [25]. As such, the state of each constraint during the simulation is clearly defined, and so is the state of a whole CCSL model. Consequently, it is possible to exhaustively explore the acceptable simulations of a CCSL model. Each time a new state is reached, it is compared to the already visited states. If it has not been visited yet, it is added to the visited states; otherwise, a new transition to the existing visited state is created, which closes a loop representing an acceptable periodic behavior of the CCSL model. Once the set of possible simulations has been computed completely, the whole state space has been computed as well and can be serialized. In TimeSquare, such state spaces are typically serialized in the dot language [30] as well as in the Aldebaran format [1]. The resulting files (and consequently the CCSL model) can be verified against properties written in the Model Checking Language [86].
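The exploration scheme described above can be sketched generically in Python as follows. This is a plain breadth-first search over an abstract successor function and does not reflect the actual TimeSquare implementation. The names `explore`, `successors`, and `to_dot` are hypothetical, and the dot output is deliberately minimal.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List, Set, Tuple

Transition = Tuple[Hashable, str, Hashable]  # (source state, step label, target state)


def explore(
    initial: Hashable,
    successors: Callable[[Hashable], Iterable[Tuple[str, Hashable]]],
) -> Tuple[Set[Hashable], List[Transition]]:
    """Exhaustively explore all states reachable from `initial`."""
    visited = {initial}
    transitions: List[Transition] = []
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for label, target in successors(state):
            # Every step becomes a transition; a step into a known state closes a loop.
            transitions.append((state, label, target))
            if target not in visited:
                visited.add(target)
                queue.append(target)
    return visited, transitions


def to_dot(transitions: Iterable[Transition]) -> str:
    """Serialize the explored transitions as a minimal dot digraph."""
    lines = ["digraph statespace {"]
    for source, label, target in transitions:
        lines.append(f'  "{source}" -> "{target}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)
```

The search terminates only if the reachable state space is finite, which corresponds to the case in which the set of possible simulations can be computed completely.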

2.5 Specifying operational semantics with GEMOC

According to Harel and Rumpe [51], a modeling language consists of an abstract syntax specifying the language concepts and their relations, a semantic domain describing the language meaning, and a semantic mapping relating the language concepts to the semantic domain elements. Our Gemoc approach [22, 80] enables the flexible specification of operational semantics for a modeling language following these definitions.

Specifically, the semantic domain is specified by means of a Model of Concurrency and Communication (MoCC). This MoCC is defined by semantic constraints in the form of pre-defined CCSL constraints (cf. Sect. 2.4.1) as well as user-defined MoCCML constraints (cf. Sect. 2.4.2). The MoCC defines the concurrency, the synchronizations, and the possibly timed way the elements of a program interact during an execution. The semantic mapping is specified by the declaration of Domain-Specific Events (DSEs), which associate the abstract syntax and the MoCC. The DSEs are specified by means of our declarative Event Constraint Language (ECL) [27]. ECL is an extension of the Object Constraint Language [94], augmented with the notions of DSEs as well as behavioral invariants, which use CCSL and MoCCML constraints.

The approach is implemented in our modeling language workbench Gemoc Studio [16] for building and composing executable modeling languages. Gemoc Studio takes a language metamodel, an ECL mapping specification, and semantic constraints specified through a MoCC as inputs and automatically derives a modeling workbench with simulation and debugging facilities. Specifically, it automatically derives a dedicated QVTo model transformation [95]. This derived model transformation takes an instance of the language metamodel as input and generates a CCSL model that parameterizes an execution engine based on TimeSquare. The model transformation maps the associated DSEs to CCSL clocks based on the ECL mapping specification and applies the semantic constraints from the behavioral invariants on these clocks.

3 Approach overview

As outlined in the introduction, our approach encompasses three main ingredients:

1. We propose a Marte-based (cf. Sect. 2.3) UML profile to augment the timed and component-based version of the scenario notation Modal Sequence Diagrams (MSDs) (cf. Sect. 2.2) with platform aspects. This encompasses specification means for a) an execution platform model together with timing-relevant resource properties, b) the allocation of MSD specifications onto these platform models, and c) analysis contexts to be considered during the timing analyses. We call the resulting models platform-specific MSD specifications and describe them in Sect. 4.

2. We conceptually extend the message event semantics for scenario notations in general and MSDs in particular by introducing additional event kinds that occur during the execution of the software on its target platforms. This enables time to elapse between such events during our end-to-end response time analyses, and consequently to introduce platform-specific delays (cf. Sect. 2.1). We also provide means to compute these different delays based on the resource properties defined in platform-specific MSD specifications, which we describe in Sect. 5.

3. The main contribution is the specification of platform-aware MSD operational semantics dedicated to timing analyses. We apply the Gemoc approach (cf. Sect. 2.5) to declaratively specify these operational semantics, which formalizes and spawns the platform-induced timing behavior of MSD specifications. Based on the semantics specification, Gemoc automatically derives CCSL models that are input to the timing simulation and model checking tool TimeSquare (cf. Sect. 2.4). We present the semantics specification in Sect. 6 and an illustrating timing analysis in Sect. 7.

Figure 6 gives an overview of our application of the Gemoc approach. The Abstract Syntax of conventional (i.e., component-based and timed) MSD specifications is defined at the language level (metamodel level M2) by several parts of the UML metamodel and the Modal profile (cf. Sect. 2.2). We extended this Abstract Syntax by introducing platform and timing analysis aspects through our Marte-based TAM profile. The Mapping Specification declares Domain-Specific Events (DSEs) in the context of Abstract Syntax concepts and constrains their behavior through Semantic Constraints applied by using the Event Constraint Language (ECL) (cf. Sect. 2.5). The set of Semantic Constraints defines the Model of Concurrency and Communication (MoCC) by means of Pre-defined CCSL Constraints (cf. Sect. 2.4.1) as well as User-defined MoCCML Constraints (cf. Sect. 2.4.2).

At the model level (metamodel level M1), we provide a Preprocessing QVTo [95] model transformation that takes a Platform-specific MSD Specification as input and computes derived properties. That is, based on the resource properties, this transformation computes delays, which we conceptually present in Sect. 5. The output is a Platform-specific MSD Specification with Computed Static Delays, which is input to another QVTo model transformation MSD-to-CCSL Transformation. This model transformation is automatically derived by Gemoc Studio from our declared DSEs and their MoCC. It encodes the functional and real-time requirements as well as the pre-computed delays and further timing-relevant resource properties into constrained timing effects as part of a CCSL Model. At runtime level (M0), a timing analyst can simulate such CCSL models in TimeSquare to reveal potential real-time requirement violations.

4 The TAM profile for platform-specific interactions

Conventional component-based and timed MSD specifications as introduced in Sect. 2.2 define platform-independent requirement specifications with real-time constraints; i.e., the software architecture and the MSD specifications have no correlation to any concrete target execution platform. In this paper, we propose to consider the timing behavior emerging from the allocation of component-based MSD specifications to concrete target execution platforms, which we together call platform-specific MSD specifications.

In order to support the modeling of platform-specific MSD specifications, we present in this section the most important concepts of our Timing Analysis Modeling (TAM) UML profile: Execution platforms including the specification of the resource properties that have to be considered in the timing analysis (and consequently in the proposed platform-specific MSD semantics), allocations of logical software components to the platform elements, and analysis contexts. All these concepts extend existing concepts from the Marte UML profile [93] (cf. Sect. 2.3). The overall profile (as fully presented in [61, Section 4.6.1]) encompasses 5 subprofiles containing 29 stereotypes with 54 tagged values (including tagged values inherited from Marte) and stems from a literature review on resource properties that impact the timing behavior [12, Chapter 3]. We do not present the full TAM profile here but illustrate its use through a platform-specific MSD specification for the EBEAS example, which will also be used as basis to explain the extension of the MSD semantics amenable to timing analysis (cf. Sect. 6).

In the following, we explain the most important stereotypes by illustrating their application to an EBEAS execution platform model depicted in Fig. 7. To this end, we add platform-specific information to the platform-independent MSD specification introduced in Fig. 1. In Sect. 4.1, we explain how we specify execution platforms with our TAM profile. Subsequently, we explain the allocation of the software components to the resulting execution platform elements in Sect. 4.2. Finally, we present the specification of application software timing properties in Sect. 4.3 and the definition of analysis contexts in Sect. 4.4.

4.1 Specifying execution platforms

We provide three subprofiles for the specification of concrete execution platforms together with the properties that impact the timing behavior of the system. For instance, the bottom of Fig. 7 (Platform Model package) illustrates the use of the subprofiles to define the EBEAS execution platform model. In the following subsections, we use this package to illustrate the application of the three subprofiles: The hardware execution platform (Sect. 4.1.1), the real-time operating system (Sect. 4.1.2), and the communication infrastructure (Sect. 4.1.3).

4.1.1 Specifying the hardware processing

In this section, we illustrate means for the specification of hardware elements and processing units. The TamECU is an extension of the Marte stereotype GRM::Resource, which serves as a container for other elements of the hardware platform (e.g., the memory units, the communication media, the peripherals, the processing units or the operating system). The stereotype TamProcessingUnit, contained by the TamECU, extends the Marte stereotype GRM::ProcessingResource. It describes the properties of the processing unit of an ECU or of a microcontroller. Among further properties, it inherits the tagged value speedFactor, which describes the relative speed w.r.t. the normalized speed of a reference processing unit [93, Section 10.3.2.10]. Furthermore, it adds the tagged value numCores, which specifies the number of cores that the processing unit provides and consequently the number of tasks that can be handled concurrently.

For example, the EBEAS execution platform (Platform Model package of Fig. 7) contains two microcontrollers («TamECU») :\(\mu \) C1 and :\(\mu \) C2. Each of them specifies one processing unit («TamProcessingUnit»), namely :PU\(\mu \) C1 and :PU\(\mu \) C2, respectively, and both processing units are single-core. However, according to their speed factor values, the :PU\(\mu \) C2 processing unit is two times faster than :PU\(\mu \) C1.

4.1.2 Specifying the real-time operating system

In this section, we illustrate means to specify timing-related properties of real-time operating systems, which run on ECUs or microcontrollers and provide services for the application software. The stereotype TamRTOS describes properties of such real-time operating systems. Among others, it enables the specification of shared resources, operating system services, and communication channels on operating system level for ECU-internal communication. In the following, we focus on the specification of the operating system’s scheduler since the scheduling strategy strongly impacts the timing behavior of the system. For this purpose, we provide the stereotype TamScheduler, which extends the Marte stereotype GRM::Scheduler. Besides means for specifying properties such as the overhead introduced by the scheduler, it inherits two tagged values: the scheduling policy (schedPolicy, e.g., fixed priority or round-robin), and the preemption capability (isPreemptible).

For example, in the execution platform of the EBEAS, the schedulers of both :PU\(\mu \) C1 and :PU\(\mu \) C2 processing units depicted in Fig. 7 implement the most prominent [92, 112] real-time operating system scheduling policy FixedPriority and are specified to be non-preemptible. In this policy, all tasks have fixed priorities, and whenever the currently executing task has finished, the scheduler dispatches the highest-priority task among the ready tasks. This scheduling strategy is supported by the widespread real-time operating systems of AUTOSAR [9] and OSEK/VDX [66], for example.
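To illustrate the effect of a non-preemptible FixedPriority policy on a single-core processing unit, consider the following Python sketch. It is a simplified simulation written for this explanation and is not derived from the TAM profile, AUTOSAR, or OSEK/VDX. The `Task` fields, the convention that a larger number means a higher priority, and the neglect of scheduler overhead are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Task:
    name: str
    release_ms: float  # instant at which the task becomes ready
    exec_ms: float     # execution time on this processing unit
    priority: int      # assumption: larger value = higher priority


def schedule(tasks: List[Task]) -> List[Tuple[str, float, float]]:
    """Return (name, start, end) per task under non-preemptive fixed-priority dispatch."""
    pending = sorted(tasks, key=lambda t: t.release_ms)
    now = 0.0
    timeline = []
    while pending:
        ready = [t for t in pending if t.release_ms <= now]
        if not ready:
            # The processing unit idles until the next task is released.
            now = min(t.release_ms for t in pending)
            continue
        task = max(ready, key=lambda t: t.priority)
        start = now
        now = start + task.exec_ms  # runs to completion, no preemption
        timeline.append((task.name, start, now))
        pending.remove(task)
    return timeline
```

With the hypothetical task set `Task("low", 0, 5, 1)`, `Task("high", 1, 2, 3)`, and `Task("mid", 2, 1, 2)`, the low-priority task occupies the core from 0 to 5, so the high-priority task starts only at 5 although it was released at 1. Such blocking is one source of the dynamic delays that depend on the workload situation.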

4.1.3 Specifying the communication infrastructure

In this section, we illustrate means for the specification of the infrastructure for the communication between distributed components. We provide the stereotype TamComConnection, which extends the Marte stereotype GRM::CommunicationMedia. It provides additional properties compared to the communication media stereotype, but we focus here on the most important ones that are inherited from Marte. The first important property of a communication medium is its latency, which is specified through the inherited blockT tagged value. The second important property of a communication medium is its throughput, specified through the inherited capacity tagged value.

Furthermore, the network interfaces between a TamECU and a TamComConnection need time to encode messages from the software representation to a representation suitable for the transport via a communication medium and vice versa. Such properties are captured as part of the TamComInterface stereotype for ports of TamECUs, inter alia. The TamComInterface stereotype extends the Marte stereotype GQAM::GaExecHost and inherits two tagged values representing the overhead duration implied by the encoding/decoding of the information to and from a communication medium: commTxOvh and commRcvOvh.

For example, in the execution platform of the EBEAS, one bus (Can Bus) is used to communicate between the two ECUs. The throughput of the «TamComConnection» Can Bus connector is set to 100kbit/s and its latency is set to 1ms. Additionally, the communication interface of :\(\mu \) C1’s port («TamComInterface») specifies a message encoding overhead of 1ms (commTxOvh=1ms), and the communication interface of :\(\mu \) C2’s port specifies a message decoding overhead of 1ms (commRcvOvh=1ms).

4.2 Specifying allocations

The Marte subprofile Alloc provides means to allocate software elements to resources of execution platforms (cf. Section 2.3). We reuse the Alloc::Allocate stereotype to specify allocations of MSD application elements onto TAM execution platform elements. This stereotype defines a link that can be used to allocate software components onto processing units, as well as logical connectors onto communication media.

For instance, in the platform-specific EBEAS example, the software components sa: SituationAnalysis and vc: VehicleControl are, respectively, allocated to the :\(\mu \) C1 and :\(\mu \) C2 microcontrollers. This is illustrated in Fig. 7 by the «allocate» links from the logical software components as part of the collaboration to the «TamECU» microcontrollers as part of the execution platform. Analogously, logical connectors between the software components in the collaboration are allocated to «TamComConnection» links in the Platform Model. For instance, the logical connector sa2vc between sa: SituationAnalysis and vc: VehicleControl is allocated to the Can Bus connecting :\(\mu \) C1 and :\(\mu \) C2.

4.3 Defining the software timing properties

In this section, we illustrate means to specify information about the estimated resource consumption of the application software. Its most important element is the TamOperation stereotype, which inherits tagged values from the Marte stereotype GRM::ResourceUsage [93, Section 10.3.2.13]. It is used to specify the platform-specific timing-related aspects of the operations used as MSD message signatures. We consider here only the two most important tagged values: execTime and msgSize. The tagged value execTime specifies the best-/worst-case execution time of an operation with respect to a processing unit with a speed factor of 1. The msgSize specifies the size of the message associated with the operation.

For instance, in the EBEAS example, both the «TamOperation»s obstacle and trajectoryBeacon on the right-hand side of Fig. 7 have a worst-case execution time of 5ms (i.e., the worst-case execution time is specified through one value). In contrast, the operation enableBraking has, specified by the interval value, a best-case execution time of 6ms and a worst-case execution time of 9ms. Note that the enableBraking operation is part of vc: VehicleControl, which is allocated to :\(\mu \) C2. Since the processing unit of :\(\mu \) C2 has a speed factor of 2, the actual best-/worst-case execution time of enableBraking spans an interval of \([3\mathrel {..}4.5]ms\). Additionally, the enableBraking operation is associated with a message whose size is 500bit, and its actual message transmission time has to be calculated based on this size w.r.t. the throughput of the Can Bus. These are concrete examples of timing effects (i.e., concrete delay times in an execution context) that are induced by the specified resource properties.
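The arithmetic behind these two timing effects can be retraced with the following Python sketch. The function names are hypothetical. The second function only relates the message size to the bus throughput and deliberately ignores latency and any protocol overheads, which is an assumption of this sketch.

```python
from typing import Tuple


def scale_exec_time(bounds_ms: Tuple[float, float], speed_factor: float) -> Tuple[float, float]:
    """Scale a best-/worst-case execution time interval by the speed factor."""
    best, worst = bounds_ms
    return (best / speed_factor, worst / speed_factor)


def pure_transmission_time_ms(msg_size_bit: float, throughput_bit_per_s: float) -> float:
    """Time to put the message on the medium, without latency or overheads."""
    return msg_size_bit * 1000.0 / throughput_bit_per_s


# enableBraking: [6..9] ms on a reference unit, executed with speed factor 2
print(scale_exec_time((6.0, 9.0), 2.0))
# 500 bit over a 100 kbit/s bus
print(pure_transmission_time_ms(500, 100_000))
```

Under these assumptions, the execution time interval becomes 3 ms to 4.5 ms, and the 500 bit message occupies the 100 kbit/s bus for 5 ms.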

4.4 Specifying analysis contexts

In this section, we illustrate means to specify the timing behavior of the system environment. More precisely, it defines a set of timed scenarios that make explicit the hypotheses under which the timing behavior of the system is realized. Such simulation scenarios are called analysis contexts [111, Chapter 9] (cf. Section 2.3).

The main stereotype of the corresponding TAM subprofile is the TamAnalysisContext, which extends the Marte stereotype GQAM::GaAnalysisContext. This stereotype references the platform under analysis and the concrete workload that defines the timed scenarios. The workload is specified through the TamWorkloadBehavior stereotype, which extends the GQAM::GaWorkloadBehavior stereotype. From the extension, it inherits the tagged values demand and behavior, which define the (timing) assumptions of the analysis on the environment and the expected (timing) requirements on the system, respectively. In our context, the behaviors are the requirement MSDs as presented earlier while the demands are assumption MSDs (cf. Section 2.2) triggering the system behavior, where the timing of the environment message is constrained by an arrival pattern. For this purpose, we provide the TAM stereotype TamAssumptionMSD that refines the Modal stereotype EnvironmentAssumption and references an arrival pattern.

We support periodic and sporadic arrival patterns. A periodic arrival pattern, specified by the stereotype TamPeriodicPattern, constrains the environment message to occur periodically every period time units. We currently do not support explicit jitter deviations from the periodical occurrences, as this timing information would be very detailed in the early RE phase. However, if a jitter is known and large enough that a requirements engineer wants to specify it, sporadic arrival patterns can be applied. These are specified by the stereotype TamSporadicPattern and constrain an environment message to occur with a uniform distribution bounded by a minArrivalRate and/or a maxArrivalRate.

For example, Fig. 8 depicts an analysis context for the EBEAS. The entry point is the «TamAnalysisContext» Ebeas AnalysisContext. It references the Ebeas Platform container depicted in the bottom of Fig. 7 as well as the «TamWorkloadBehavior» Ebeas Workload. The Ebeas Workload references the behavior MSDs depicted or indicated in Fig. 1. Furthermore, the workload references demand assumption MSDs. These specify that the trajectoryBeacon message occurs periodically every 25ms and that the obstacle message occurs sporadically with a rate ranging from 50ms to 55ms, respectively.
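The two arrival patterns of this example can be mimicked with the following Python sketch, which generates occurrence times of the environment messages up to a time horizon. It is an illustration under assumptions: the first periodic occurrence is placed at time 0, the bounds of the sporadic pattern are interpreted as bounds on the inter-arrival time in milliseconds, and the function names are hypothetical.

```python
import random
from typing import List


def periodic_arrivals(period_ms: float, horizon_ms: float) -> List[float]:
    """Occurrences at 0, period, 2*period, ... up to the horizon (start at 0 is an assumption)."""
    count = int(horizon_ms // period_ms) + 1
    return [k * period_ms for k in range(count)]


def sporadic_arrivals(min_gap_ms: float, max_gap_ms: float, horizon_ms: float,
                      rng: random.Random) -> List[float]:
    """Occurrences whose inter-arrival times are drawn uniformly from [min_gap, max_gap]."""
    arrivals: List[float] = []
    t = 0.0
    while True:
        t += rng.uniform(min_gap_ms, max_gap_ms)
        if t > horizon_ms:
            return arrivals
        arrivals.append(t)


rng = random.Random(42)  # fixed seed so that a run is reproducible
trajectory_beacon = periodic_arrivals(25.0, 100.0)
obstacle = sporadic_arrivals(50.0, 55.0, 500.0, rng)
```

Here, `trajectory_beacon` contains the occurrences 0, 25, 50, 75, and 100 ms, while consecutive entries of `obstacle` are between 50 ms and 55 ms apart.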

5 Definition of interaction events and delays required for timing analyses

The existing operational semantics for platform-independent MSD specifications as defined by Real-time Play-out [17] only considers synchronous messages, where the events of sending and receiving a message at runtime coincide and no notion of tasks exists. This abstraction is well suited to analyze idealized systems but is not adequate for platform-aware analyses. Such analyses require considering different delays introduced by the actual execution on a platform. In order to define these delays, we introduce in this section additional message event kinds, in between which the delays are defined. We associate each message event kind with an equally named lifeline location for MSD messages. To ease readability, we refer to the event kinds and the associated lifeline locations in an undifferentiated way.

According to Tindell et al. [115], four kinds of delays are required for the analysis of distributed real-time systems: the message dispatch delay, the message transmission delay, the message consumption delay and the task execution delay (see Fig. 9). These delays are based on locations allowing a finer-grained cut progression. If the execution platform does not introduce some of the delays, the associated events occur immediately one after the other (i.e., at the next instant). Otherwise, these delays usually consist of two parts: a so-called static part, which can be computed statically according to the properties of the system, and a so-called dynamic part, which emerges dynamically from certain workload situations at runtime due to mutual resource exclusion. The static part of the delays and their computation are defined in the remainder of the section and technically implemented as part of the preprocessing transformation mentioned in Sect. 3, whereas the handling of the dynamic part is presented in Sect. 6.2.2.

Figure 9 illustrates the location kinds, the fine-grained cuts, and the delays for the enableBraking and emcyBraking MSD messages.

In the definitions of the delays, instead of considering only the worst case (execution/transmission) delays, we also consider their best cases, which are likewise of high interest since they potentially modify the access orders to mutually exclusive resources [10, 19, 100]. Thus, we compute both lower and upper bounds for all delays and define them as intervals (cf. the specification of best-/worst-case execution times in Sect. 4.3). For space reasons, we only show hereafter the formulas for each upper bound, where the corresponding lower bounds are computed analogously.

The delay definitions presented in the following encompass derived properties (prefixed with a / as in UML). These derived properties are calculated based on a variety of detailed property values as part of the TAM platform models. Furthermore, we encapsulate behind the derived properties the distinct delay computations regarding message exchange between software components allocated to different ECUs (i.e., distributed communication) or to the same ECU (i.e., ECU-internal communication). We only outline the particular ingredients of the derived properties in the following and present the full computations behind them in detail in [61, Section 4.6.1.2].

5.1 Message transmission delays

The use of communication media and communication protocols (e.g., the properties of the connector Can Bus in Fig. 7) causes a message transmission delay, which must be taken into account by a timing analysis [115]. In order to consider this delay, we encompass the concept of synchronous and asynchronous messages introduced by Harel’s original Play-out semantics [50] for Live Sequence Charts (LSCs) [23]. In other words, we distinguish between message send events and message reception events. In case the platform is not defined, such events coincide and correspond to synchronous messages as in Real-time Play-out [17]. However, once the platform is defined, these events are not synchronously correlated with a whole MSD message. In contrast, a causality is defined between the message send and the message reception location of the corresponding MSD message, respectively. See, for instance, the enableBraking message in Fig. 9, where the cut c1.2 marks that the message is sent but not yet received, whereas the cut c1.3 marks that the message is received but not yet consumed.

The message transmission delays encompass the overall propagation latency (i.e., net latency plus potential overheads) of the communication channel as well as the time to transmit the message. This transmission time depends on the overall message size (i.e., net message size plus control overheads like check sums) in relation to the overall throughput (i.e., media throughput minus potential overhead deductions) of the communication channel. In case of a distributed communication, this overall throughput encompasses its net throughput minus the percentage transmission overhead of the applied transmission protocol and of the applied middleware communication services. Thus, we compute the upper bound of the message transmission delay for an MSD message m as

where m.connector is a UML::Connector associated with m, m.connector.supplier is a TamComConnection (distributed communication) or a TamOSComChannel (ECU-internal communication) that the connector is allocated to, and m.signature is a TamOperation associated with m.
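The ingredients of the message transmission delay named above can be combined as in the following Python sketch. It is a hedged approximation rather than the derived-property computation of the TAM profile, which is presented in [61, Section 4.6.1.2]. The parameter names, the additive size overhead, and the modelling of the throughput overhead as a ratio are assumptions made for illustration.

```python
def transmission_delay_ms(latency_ms: float,
                          msg_size_bit: float,
                          throughput_bit_per_s: float,
                          size_overhead_bit: float = 0.0,
                          throughput_overhead_ratio: float = 0.0) -> float:
    """Upper-bound sketch: overall latency plus overall size over overall throughput."""
    overall_size_bit = msg_size_bit + size_overhead_bit
    # Assumption: protocol and middleware overheads reduce the net throughput by a ratio.
    overall_throughput = throughput_bit_per_s * (1.0 - throughput_overhead_ratio)
    return latency_ms + overall_size_bit * 1000.0 / overall_throughput


# EBEAS values from Sect. 4: 1 ms latency, 500 bit message, 100 kbit/s Can Bus,
# with all overheads set to zero (an assumption of this example).
print(transmission_delay_ms(1.0, 500, 100_000))
```

With all overheads set to zero, the EBEAS values yield 1 ms of latency plus 5 ms of transmission time, i.e., 6 ms.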

5.2 Message dispatch and consumption delays

The notion of message reception in scenario-based formalisms is ambiguous, because it is not clear whether a message reception is the instant when the message arrives at the receiver communication interface or the instant when the receiver application software consumes the message [59]. The distinction between message reception and message consumption is necessary in order to take the message consumption delays into account [115]. Such delays occur due to the decoding of network messages from a representation suitable for the transport via a network to a logical representation suitable to be processed by the application software. Similarly, there is a message dispatch delay between the instant when a message is created by the sending software component and the instant when it is actually dispatched to the network by its network interface [115]. Such delays occur due to the encoding of a logical representation into a representation suitable for the transport via a communication medium.

To distinguish between message creation and message sending as well as between message reception and message consumption, we introduce two additional message event kinds: message creation event and message consumption event. These event kinds capture the instant when a message is created by the sending software component and consumed by the receiving software component, respectively. Figure 9 illustrates these event kinds and locations. We define the message creation location to be positioned on the sending lifeline directly before the message sending location (cf. cut c1.1). Similarly, the message consumption location is positioned on the receiving lifeline directly after the message reception location (cf. cut c1.4).

The message dispatch delays encompass the overhead to gain write access to a communication channel (in case of distributed communication, an arbitration time for gaining access to the overall communication system is added) as well as the time to encode a message from its logical representation to a format suitable for the transfer via the communication channel. This encoding time depends on the overall message size (i.e., net size plus potential overheads) in relation to the encoding rate of the communication channel. In case of distributed communication, this encoding rate further depends on the encoding rate of the applied transmission protocol and of the applied middleware communication services. Thus, we compute the upper bound of the message dispatch delay for an MSD message m as

where m.connector is a UML::Connector associated with m, m.connector.supplier is a TamComConnection (distributed communication) or a TamOSComChannel (ECU-internal communication) that the connector is allocated to, and m.signature is a TamOperation associated with m.

Analogously to message dispatch delays, message consumption delays encompass the time to gain read access to a communication channel as well as the time to decode a message from the communication channel format to its logical representation. The decoding time depends on the overall message size in relation to the decoding rate of the communication channel. Thus, we compute the upper bound of the message consumption delay for an MSD message m as

where m.connector is a UML::Connector associated with m, m.connector.supplier is a TamComConnection (distributed communication) or a TamOSComChannel (ECU-internal communication) that the connector is allocated to, and m.signature is a TamOperation associated with m.
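As a rough illustration of the two delay kinds described above, the following minimal sketch adds up an access overhead, an optional arbitration time, and the message size divided by a coding rate. It is not the formula of the TAM profile: the class `Channel`, its field names, and all numbers are hypothetical, and the protocol and middleware rates mentioned above are collapsed into a single rate for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """Hypothetical stand-in for a TamComConnection or TamOSComChannel."""
    access_overhead_ms: float  # time to gain read or write access to the channel
    arbitration_ms: float      # bus arbitration time; 0.0 for ECU-internal channels
    rate_bytes_per_ms: float   # encoding (or decoding) rate of the channel


def coding_delay_ms(message_size_bytes: float, channel: Channel) -> float:
    """Upper-bound sketch: access overhead + arbitration + size / rate."""
    if channel.rate_bytes_per_ms <= 0:
        raise ValueError("rate must be positive")
    coding_time = message_size_bytes / channel.rate_bytes_per_ms
    return channel.access_overhead_ms + channel.arbitration_ms + coding_time


# Made-up numbers: a 16-byte message on a bus that encodes 8 bytes per ms.
bus = Channel(access_overhead_ms=0.5, arbitration_ms=1.0, rate_bytes_per_ms=8.0)
dispatch_delay = coding_delay_ms(16, bus)
print(dispatch_delay)
```

For a consumption delay, the same function could be called with a channel description whose rate is the decoding rate and whose arbitration time is zero, since the text above mentions arbitration only for dispatching.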

5.3 Task execution delays

The semantics for platform-independent MSD specifications focuses on the message exchange between software components. However, it neglects internal procedures (i.e., tasks) that are executed by the software components to process consumed messages and to create the messages to be sent. This message processing by tasks leads to task execution delays that affect the timing behavior of the system [115] (cf. the execution times of the particular software operations in Fig. 7).

In order to consider such effects, we do not specify explicit task models but rather define that each message is associated with exactly one task that is executed upon the consumption of the message by the receiving software component. To this end, we introduce the two new event kinds task start event and task completion event, which represent the start and the end of a task execution, respectively. Figure 9 illustrates the new event kinds and locations for the enableBraking MSD message. We define the task start to be positioned on the receiving lifeline directly after the message consumption (cf. cut c1.5). Similarly, the task completion is positioned on the receiving lifeline directly after the task start, representing also the cut for the next MSD message (cf. cut c2). The next location is the message creation location (cf. cut c2.1), and so on.

Task execution delays encompass the normalized overall execution time (i.e., net execution time plus potential overheads) required to process a message in relation to the relative speed factor of the executing processing unit (cf. Sect. 4.1) as well as the overall times for accessing memory and resources (i.e., net access times plus overheads). Omitting the access times for comprehensibility reasons, we hence compute the upper bound of the task execution delay for an MSD message m as

where m.signature is a TamOperation associated with m, m.connector[receiver] is the receiving software component, m.connector[receiver].supplier is a TamECU that the receiving software component is allocated to, and m.connector[receiver].supplier.procUnit is the TamProcessingUnit of the ECU.
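The relation between execution time and speed factor can be sketched as follows. The sketch assumes that "in relation to the relative speed factor" means dividing the normalized execution time by the speed factor, so that a factor of 2 halves the delay; like the text above, it omits memory and resource access times. The function names and the example numbers are hypothetical.

```python
def task_execution_delay_ms(exec_time_ms: float, speed_factor: float) -> float:
    """Scale a normalized execution time by the relative speed factor.

    Assumption: a speed factor of 2.0 means the unit is twice as fast as
    the normalized reference, so the delay is exec_time / speed_factor.
    """
    if speed_factor <= 0:
        raise ValueError("speed factor must be positive")
    return exec_time_ms / speed_factor


def task_execution_interval_ms(min_exec_ms: float, max_exec_ms: float,
                               speed_factor: float) -> tuple[float, float]:
    """Return the (lower, upper) bounds of the task execution delay."""
    if min_exec_ms > max_exec_ms:
        raise ValueError("minimum must not exceed maximum")
    return (task_execution_delay_ms(min_exec_ms, speed_factor),
            task_execution_delay_ms(max_exec_ms, speed_factor))


# Made-up numbers: 10..20 ms normalized execution time on a unit with factor 4.
interval = task_execution_interval_ms(10.0, 20.0, 4.0)
print(interval)
```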

We define a task to start immediately (i.e., at the next instant) after it has consumed its corresponding message if the scheduler can dispatch it (cf. cuts c1.4 and c1.5 in Fig. 9). If the scheduler cannot dispatch it immediately, a dynamic delay occurs (cf. Sect. 6.2.2). When a software component has completed a task, it creates a potential subsequent message at the next instant afterward (cf. c2 and c2.1 in Fig. 9).

6 Specifying operational semantics for the timing analysis of platform-specific interaction models

In Sect. 4, we introduced the TAM profile to enable the modeling of platform-specific MSD specifications. Furthermore, we introduced additional message event kinds to support platform-specific timing analyses as well as the computations for the static delays in between these events (see Sect. 5). In this section, we give an overview of the proposed platform-specific MSD operational semantics dedicated to timing analyses.

This section consequently details the semantics-related parts of Fig. 6 from the approach overview section (Sect. 3). The section elaborates on the EBEAS model to illustrate the most important concepts of the semantics, and the reader can refer to [61, Appendix B] for the illustrations of further concepts as well as the complete operational semantics of the TAM profile. Furthermore, our supplementary material [63] and our companion webpage [62] provide the actual technical artifacts as well as additional information.

For each of the following subsections, we start by describing the semantic mapping between the elements of the abstract syntax and the CCSL constraints, realized at the language level (metamodel level M2). At this level, Domain-Specific Events (DSEs) are specified with the Event Constraint Language (ECL) in the context of concepts from the abstract syntax, and constrained by behavioral invariants (cf. Section 2.5). This generates a transformation of TAM models into CCSL specifications. Then, we illustrate the CCSL models generated for some TAM models (metamodel level M1). Finally, at the runtime level (metamodel level M0), we describe the corresponding CCSL simulation runs.

Section 6.1 describes our encoding of the extended message event handling semantics for MSDs in terms of CCSL. Section 6.2 describes how we encode timing effects induced by the resource properties, and Sect. 6.3 describes our encoding of real-time requirements on these effects and of timing analysis contexts.

6.1 Encoding of message event kinds and their order

In order to enable simulative timing analyses of platform-specific MSD requirements, we encoded the semantics of MSDs in terms of CCSL. This encompasses the general occurrences of message events corresponding to the specified MSD messages (cf. Section 2.2.2) as well as the additional message event kinds introduced in Sect. 5.

Language level At the language level, we explicitly define DSEs and constraints that define the acceptable orders between the occurrences (at runtime) of the DSE instances (at the model level). The order of the occurrences represents the fine-grained cut progression w.r.t. the MSD message locations (cf. Fig. 9).

The upper part of Fig. 10 depicts an excerpt from the semantics specification of MSD message event occurrences. It depicts part of the Abstract Syntax, of the Mapping Specification, and of the applied Semantic Constraints.

Applying ECL, the Mapping Specification in the middle upper part of Fig. 10 defines the DSEs identified in Sect. 5 in the context of a TamModalMessage, like msgCreateEvt (1) and msgSendEvt (2). Each instance of a TamModalMessage will then be equipped with an instance of each DSE. The Mapping Specification also specifies an invariant eventOrder in the context of a TamModalMessage. Each instance of the DSEs will then be constrained according to this invariant, which specifies the allowed order of the message event occurrences. This order is specified by the user-defined MoCCML relation EventOrderRelation, whose parameters are DSEs.

Consequently, the DSE definition together with the invariants define the mapping between the concepts from the abstract syntax and the semantic constraints.

The constraint EventOrderRelation is specified by a constraint automaton, which defines the allowed order of the DSE parameters. Here, it specifies that the events shall only occur in the following order: msgCreateEvt, msgSendEvt, msgReceiveEvt, msgConsumeEvt, taskStartEvt, and taskCompleteEvt. This sequence may repeat, possibly infinitely often. Note that there is no notion of time in this constraint, meaning that an arbitrary amount of time can elapse between two occurrences.
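The ordering enforced by such a constraint automaton can be sketched as a small cyclic acceptor. This is a simplified illustration rather than the MoCCML definition: it assumes one event per step, numbers states from 0, and the function name `accepts` is hypothetical.

```python
# The six DSEs in the order required by EventOrderRelation.
EVENT_ORDER = (
    "msgCreateEvt",
    "msgSendEvt",
    "msgReceiveEvt",
    "msgConsumeEvt",
    "taskStartEvt",
    "taskCompleteEvt",
)


def accepts(trace):
    """Return True if the events in `trace` follow the cyclic order.

    The check is untimed: only the sequence of events matters, not how
    much time elapses between two consecutive occurrences.
    """
    state = 0  # index of the event expected next
    for event in trace:
        if event != EVENT_ORDER[state]:
            return False
        state = (state + 1) % len(EVENT_ORDER)  # wrap around after completion
    return True


print(accepts(list(EVENT_ORDER) * 2))             # two full rounds
print(accepts(["msgCreateEvt", "msgReceiveEvt"]))  # send event skipped
```

Any prefix of a valid round is accepted, which reflects that the relation only restricts the order and does not force an event to occur by a certain time.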

From this specification, a transformation is automatically generated, to be used at the model level.

Model level At the model level, a timing analyst creates a platform-specific MSD specification and uses the previously generated transformation to generate a CCSL model, which acts as a symbolic representation of all acceptable schedules of the MSD specification, as defined by the semantics specification. The lower part of Fig. 10 depicts an excerpt from the Platform-specific MSD Specification and the corresponding CCSL Model, generated through the MSD-to-CCSL Transformation.

The MSD in the left lower part specifies the enableBraking MSD message and its event kinds (1–6) as part of the EmcyBraking MSD (cf. Section 5).

As indicated in the generated CCSL Model in the right lower part of Fig. 10, the derived transformation translates any MSD message to six clocks.

For instance, the enableBraking MSD message is translated to the six clocks (1–6) of the CCSL model of Fig. 10.

Furthermore, for any MSD message, the transformation generates a clock relation typed by the MoCCML relation associated by the ECL invariant (defined at the language level).

For example, for the enableBraking MSD message, the transformation generates the enableBraking_eventOrder clock relation typed by the MoCCML relation EventOrderRelation. This relation gets the argument enableBraking_msgCreateEvt for the parameter msgCreateEvt, the argument enableBraking_msgSendEvt for the parameter msgSendEvt, etc.
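The naming scheme of the generated clocks and clock relation can be mimicked in a few lines. The sketch below only reproduces the names described above; it is not the generated MSD-to-CCSL transformation, and the function `message_to_ccsl` as well as the dictionary layout are hypothetical.

```python
# The six DSE names defined in the context of a TamModalMessage.
DSE_NAMES = (
    "msgCreateEvt",
    "msgSendEvt",
    "msgReceiveEvt",
    "msgConsumeEvt",
    "taskStartEvt",
    "taskCompleteEvt",
)


def message_to_ccsl(message_name):
    """Derive six clock names and one clock relation for an MSD message."""
    clocks = [f"{message_name}_{dse}" for dse in DSE_NAMES]
    relation = {
        "name": f"{message_name}_eventOrder",
        "type": "EventOrderRelation",
        # Bind each relation parameter to the clock of this message.
        "arguments": dict(zip(DSE_NAMES, clocks)),
    }
    return clocks, relation


clocks, relation = message_to_ccsl("enableBraking")
print(clocks)
print(relation["name"], relation["arguments"]["msgSendEvt"])
```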

Runtime level The CCSL models generated at the model level are simulated in TimeSquare at the runtime level. Figure 11 depicts a CCSL run resulting from the CCSL model depicted in the right lower part of Fig. 10. This CCSL run represents the occurrence order of the particular message event kinds for the MSD message enableBraking.

Fig. 11 Example: Simulated order of message event occurrences, enforced by the CCSL model clock relation enableBraking_eventOrder (cf. CCSL Model in the lower right of Fig. 10)

The rows depict ticks of the clocks, which themselves represent the particular message event occurrences. They are ordered from the top to the bottom, where the topmost row represents the occurrence of a message creation event and the bottommost row represents the occurrence of a task completion event. The ticks correspond to the transitions in the MoCCML relation EventOrderRelation. We assume that at instant 0 the MoCCML relation is in state 1, so that the tick of the clock \(\textsf {enableBraking}_{\textsf {msgCreateEvt}}\) at instant 1 changes the state to 2. After the subsequent tick of the clock representing the message send event occurrence at instant 2, the relation is in state 3 for the next 3 instants, and so on. The arrows visualize the causal dependencies between the clock ticks.

6.2 Encoding of platform-induced timing effects

In this section, we present how we encoded the semantics of the timing effects that are induced by the properties of the execution platform. We support two general classes of timing behavior effects. The first class encompasses the different kinds of static delays between the particular message event kinds as discussed in Sect. 5. The second class encompasses delays that dynamically emerge from mutual exclusion of resources when different software components try to access the same resource (e.g., the processor for the task execution, peripheral hardware, or an operating system service) at the same time.

6.2.1 Static delays between message event kinds

As discussed in Sect. 5, message-based communication and task processing involve multiple events during the actual execution on a target platform, and static delays occur between such events. In this section, we present how we encoded these static delays, based on the example of task execution time. Besides the different static delay kinds presented in Sect. 5, the complete semantics also distinguishes between distributed and ECU-internal communication.

As outlined in Sect. 3, we apply a preprocessing step for the computation of the static delays. The lower part of Fig. 12 exemplifies this Preprocessing transformation at model level by illustrating how some delays are computed based on the information in the MSD model. The computed lower and upper bound values are then stored in derived properties specified by tagged values defined as part of the TamModalMessage stereotype at the language level. In the following, we present how we specified the operational semantics based on these pre-computed static delays.

Language level In order to consider timing behaviors, we have to keep track of the global time progress. For this purpose, we introduced the globalTime DSE defined in the context of a Model (see Mapping Specification in the middle upper part of Fig. 12). The occurrence of the globalTime instance represents the discretization of the time. This discretization is usually set to the greatest common divisor of all timing requirements; for simplicity, we set it to 1 ms in the remainder of this paper.

Consequently, we used the globalTime DSE as a reference for counting time, that is, to determine the occurrence of the different delayed DSE instances that are introduced in the following.
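Choosing the discretization step as a greatest common divisor can be illustrated with a short sketch. The function name and the timing values are hypothetical, and the values are assumed to be given as integers in milliseconds.

```python
from functools import reduce
from math import gcd


def discretization_step(timing_values_ms):
    """Return the greatest common divisor of integer timing values (in ms)."""
    if not timing_values_ms:
        raise ValueError("at least one timing value is required")
    return reduce(gcd, timing_values_ms)


# Made-up timing requirements of 20 ms, 50 ms, and 30 ms.
step = discretization_step([20, 50, 30])
print(step)
```

With such a step, every timing value is an integer multiple of one globalTime tick, so delays can be expressed by counting ticks.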

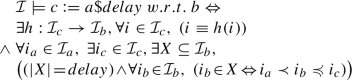

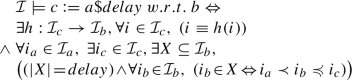

Besides the two taskStartEvt (1) and taskCompleteEvt (2) DSEs defined in the context of a TamModalMessage (cf. Sect. 6.1), the Mapping Specification defines two invariants to encode the task execution delay interval. The minExecutionDelay invariant defines the timing behavior for the lower bound of this interval.

To this end, a new DSE taskStartAfterMinExecDelay (3) is defined through the CCSL expression DelayFor (cf. Equation 6). According to the provided parameter arguments (i.e., globalTime, taskStartEvt, minTaskExecutionDelay), this clock expression delays the DSE taskStartEvt by the minimum task execution delay. Then, we specified that the DSE taskCompleteEvt must not occur earlier than this delayed event through the CCSL relation NonStrictPrecedes (cf. Equation 4). Analogously, the invariant maxExecutionDelay defines the timing behavior for the upper bound of task execution delay intervals and restricts the DSE taskCompleteEvt to occur not later than the maximum execution delay (4).
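The effect of the two invariants can be approximated on a finite trace in which instants are counted in globalTime ticks. The sketch is a simplification under several assumptions: every task start has a matching completion in the trace, DelayFor is modeled as adding a tick count to each start instant, and the upper bound is assumed to mirror the lower bound (the completion must not occur later than the start delayed by the maximum). All function names are hypothetical and do not belong to the CCSL library.

```python
def delay_for(start_instants, delay_ticks):
    """Model DelayFor: shift every start instant by a number of globalTime ticks."""
    return [t + delay_ticks for t in start_instants]


def non_strict_precedes(left, right):
    """The k-th tick of `left` occurs no later than the k-th tick of `right`."""
    if len(right) > len(left):
        return False  # `right` ticked more often than `left`
    return all(l <= r for l, r in zip(left, right))


def respects_execution_interval(task_starts, task_completions,
                                min_delay, max_delay):
    """Check both execution-delay bounds on a complete finite trace."""
    if len(task_starts) != len(task_completions):
        raise ValueError("each task start needs a matching completion")
    earliest = delay_for(task_starts, min_delay)  # taskStartAfterMinExecDelay
    latest = delay_for(task_starts, max_delay)    # assumed counterpart for the maximum
    return (non_strict_precedes(earliest, task_completions)
            and non_strict_precedes(task_completions, latest))


# Made-up trace: two task executions with a delay interval of 3..5 ticks.
print(respects_execution_interval([0, 10], [4, 13], 3, 5))  # within bounds
print(respects_execution_interval([0, 10], [2, 13], 3, 5))  # first task too early
print(respects_execution_interval([0, 10], [6, 13], 3, 5))  # first task too late
```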

Model level The excerpt from the MSD in the left lower part shows the MSD message enableBraking with the focus on its Task Start (1) and Task Completion (2) events. The MSD message references the equally named «TamOperation» with a minimum execution time of 6 ms and a maximum execution time of 9 ms. The lifeline vc: VehicleControl represents the equally named component role allocated to the «TamECU» :µC2. This ECU contains a «TamProcessingUnit» with the speed factor 2.

Our Preprocessing model transformation takes these resource properties as input and computes the static delay intervals from them as defined in Sect. 5, resulting in the Platform-specific MSD Specification with Computed Static Delays. The transformation stores the computed lower and upper bounds in the corresponding tagged values of «TamModalMessage».
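As a worked illustration for the enableBraking example, assume that the task execution delay is obtained by dividing the execution time by the speed factor and that overheads and access times are ignored. The symbols \(d_{\min}\) and \(d_{\max}\) are introduced here only for illustration, and the values actually stored by the transformation may differ, for example due to rounding to the discretization step.

```latex
\[
d_{\min} = \frac{6\,\mathrm{ms}}{2} = 3\,\mathrm{ms},
\qquad
d_{\max} = \frac{9\,\mathrm{ms}}{2} = 4.5\,\mathrm{ms}
\]
```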