Abstract

Domains such as utilities, power generation, manufacturing and transport are increasingly turning to data-driven tools for management and maintenance of key assets. Whole ecosystems of sensors and analytical tools can provide complex, predictive views of network asset performance. Much research in this area has looked at the technology to provide both sensing and analysis tools. The reality in the field, however, is that the deployment of these technologies can be problematic due to user issues, such as interpretation of data or embedding within processes, and organisational issues, such as business change to gain value from asset analysis. Thirteen experts from the field of remote condition monitoring, asset management and predictive analytics across multiple sectors were interviewed to ascertain their experience of supplying data-driven applications. The results of these interviews are summarised as a framework based on a predictive maintenance project lifecycle covering project motivations and conception, design and development, and operation. These results identified critical themes for success around having a target- or decision-led, rather than data-led, approach to design; long-term resourcing of the deployment; the complexity of supply chains to provide data-driven solutions and the need to maintain knowledge across the supply chain; the importance of fostering technical competency in end-user organisations; and the importance of a maintenance-driven strategy in the deployment of data-driven asset management. Emerging from these themes are recommendations related to culture, delivery process, resourcing, supply chain collaboration and industry-wide cooperation.


1 Introduction

Domains such as pharmaceutical production (McDonnell et al. 2015), vehicle operations (Figueredo et al. 2015; Chowdhury and Akram 2013), manufacturing (Lee et al. 2014) and rail (Dadashi et al. 2014) are turning to data-driven tools for management and maintenance of key assets. Typically, asset management and remote condition monitoring technologies report data from assets, often from multiple sensor points, and build a picture, over time, of asset performance. Together, whole ecosystems of sensors and analytical tools can provide complex views of network asset performance (Dadashi et al. 2014). In the ideal situation, this picture can inform not just an awareness of where an asset has failed, but of future states of degradation. The aspiration is to achieve predictive maintenance scheduling (Kajko-Mattsson et al. 2010), with the twin benefits of identifying asset degradation before actual failure and allowing planning of maintenance and renewals to be responsive to the actual state of the asset, rather than driven by a fixed schedule (McDonnell et al. 2015).

These benefits can maximise asset availability, ensuring operational continuity (Ciocoiu et al. 2015), and can provide a substantial advantage in planning cost-effective maintenance and renewal strategies, particularly where assets are geographically remote or difficult to access. Predictive maintenance is also enabling an increasing movement towards the servitisation of maintenance. Original equipment manufacturers (OEMs) no longer just manufacture equipment; they have an ongoing service relationship with their customer, in part enabled through remote monitoring of asset performance (Lee et al. 2014). Hence, predictive maintenance technologies are allowing new forms of e-business to emerge (Smith 2013). Closing the loop, OEMs can use the data generated from assets to design more reliable, higher performing assets in the future (Suh et al. 2008).

Despite the potential applications, the reality is that the deployment of these technologies can be problematic. Much research in the area of predictive asset management has focussed on the technology required to provide both sensing and analysis tools (Koochaki and Bouwhuis 2008; Chowdhury and Akram 2013; Dadashi et al. 2014). In practice, there can be substantial barriers to adoption due to human issues such as adequate knowledge of how to interpret data, interpreting large data sets and adapting data and decision-making for different roles (Dadashi et al. 2014). Equally, there may be organisational challenges in accommodating change to make sure operational strategy makes best use of advanced data-driven analysis tools (Aboelmaged 2014; Ciocoiu et al. 2015). These issues can be most challenging when a new ecosystem of sensors is being embedded within an existing network of assets that follow legacy organisational processes (Kefalidou et al. 2015). This so-called ‘retrofit’ embedding of sensors and asset management is common in domains where infrastructure has a long lifespan, such as railways or highways, and requires adaptation to long-standing maintenance practices.

A key challenge is therefore how to ensure that the technology for intelligent, predictive maintenance can be designed to best reflect user and organisational needs. This includes the presentation of the information (the human–machine interface (HMI)) as well as the design and visibility of the whole of the automated system, including algorithms or measures of uncertainty, to reflect the needs of the decision maker (Morison and Woods 2016). Equally important is the challenge of ensuring technology is deployed to best effect, that is, introduced and used in a manner that will deliver actionable decisions providing benefit to asset owners, operators and maintainers. This is a combination of understanding how technology must fit with processes, and conversely, how processes and strategy will need to adapt in order to benefit from the technology (Jonsson et al. 2010).

The study presented in this paper looks to identify the factors that might support or inhibit the design and deployment of predictive asset management. We focus on user and organisational issues, thereby addressing a gap in the predictive asset management literature that is overwhelmingly orientated towards sensors and/or algorithms. Also, work typically focuses on one domain, e.g. rail (Dadashi et al. 2014), urban transit (Kefalidou et al. 2015; Houghton and Patel 2015) or vehicle engines (Jonsson et al. 2010). The ambition in this paper is to look across a number of sectors to inform human factors models (e.g. Wilson and Sharples 2015) at a level applicable to multiple domains. Through interviews with thirteen industry experts from domains including gearbox monitoring, rail rolling stock, pharmaceuticals, nuclear power and commercial vehicles, we uncover issues across the data-driven technology lifecycle, through conception, development and, critically, long-term deployment. This in turn can be used to inform human factors, which has historically been strong on user-centred design of technology but has said less about the cross-organisational factors that might influence successful deployment.

2 Background

Remote condition monitoring (RCM) provides excellent opportunities for capturing ‘live’ empirical data where it would otherwise be neither physically feasible nor safe under ordinary circumstances (e.g. oil temperatures inside a running engine; data on gearboxes on an offshore windfarm) (Jonsson et al. 2010), offering a great advantage in understanding asset behaviour over time. Sensors can be placed at multiple points on larger assets (e.g. across an escalator [Houghton and Patel 2015]), or sense multiple types of data on a single asset (e.g. temperature, vibration, speed on a gearbox). Tracked over time, coupled with contextual data such as type of asset, history, performance, conditions of use, location or weather conditions (Kefalidou et al. 2015) or compared over sets of assets (e.g. a vehicle fleet (Figueredo et al. 2015)), it is possible to build a rich picture of asset performance. With enough data, patterns of performance behaviour emerge that allow not just the identification of failure states, but of indicators of future failure and/or prognoses of the life span of an asset. This allows organisations to move from regular inspection or replacement of assets, to a condition-based approach where assets are inspected or replaced based on analysis of future degradation (Kajko-Mattsson et al. 2010). For our purposes, we refer to this type of technology as ‘predictive maintenance’.
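To make the contrast between fixed-schedule and condition-based maintenance concrete, the following is a minimal illustrative sketch only; it is not drawn from any of the systems cited above. It assumes a single degradation indicator (for example, a vibration level) that rises roughly linearly, a known failure threshold, and a maintenance lead time. The function names `fit_line`, `estimate_remaining_life` and `maintenance_due` are hypothetical, and real prognostic models are typically far richer than a straight-line fit.

```python
def fit_line(times, readings):
    """Ordinary least-squares fit of readings against time: returns (slope, intercept)."""
    if len(times) < 2 or len(times) != len(readings):
        raise ValueError("need at least two paired observations")
    n = len(times)
    mean_t = sum(times) / n
    mean_r = sum(readings) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (r - mean_r) for t, r in zip(times, readings))
    slope = sxy / sxx
    return slope, mean_r - slope * mean_t


def estimate_remaining_life(times, readings, failure_threshold):
    """Extrapolate the fitted trend to the (assumed) failure threshold.

    Returns the time remaining after the last observation, or None when
    the indicator shows no upward trend and no prognosis can be made.
    """
    slope, intercept = fit_line(times, readings)
    if slope <= 0:
        return None
    time_at_threshold = (failure_threshold - intercept) / slope
    return max(0.0, time_at_threshold - times[-1])


def maintenance_due(times, readings, failure_threshold, lead_time):
    """Condition-based trigger: act when predicted remaining life falls within the lead time."""
    remaining = estimate_remaining_life(times, readings, failure_threshold)
    return remaining is not None and remaining <= lead_time


if __name__ == "__main__":
    # Hypothetical weekly readings of a degradation indicator.
    weeks = [0, 1, 2, 3, 4]
    level = [1.0, 1.5, 2.0, 2.5, 3.0]
    print(estimate_remaining_life(weeks, level, failure_threshold=5.0))
    print(maintenance_due(weeks, level, failure_threshold=5.0, lead_time=5))
```

Under a fixed schedule the asset would be inspected at set intervals regardless of these readings; here the trigger depends only on the predicted state of the asset, which is the shift the condition-based approach describes.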

The introduction of such technology can, however, be problematic and is often unsuccessful (Koochaki and Bouwhuis 2008). The literature highlights that the introduction of predictive maintenance technology needs sensitivity to a number of issues that can affect the users of the technology. This covers the remote technicians using the diagnostic and prognostic information, as well as local maintainers who will execute repairs, and organisational decision makers charged with planning and costing renewal programmes.

Also from a human perspective there are organisational and cross-organisational factors that arise from the collaborative structures that seek to gain value from remote condition monitoring (Kajko-Mattsson et al. 2010).

2.1 User factors

The vision of remote condition monitoring and prognostic systems is to provide information, planning and decision support to people supporting assets and asset maintenance. This requires the integration of information from multiple sources, and presentation of that information in a manner that supports decision making. Getting this representation right, so that patterns in the data are meaningful and clear, is not a trivial matter. The kind of representation used must match the performance needs of the user, and Houghton and Patel (2015) propose an approach based on ecological interface design (Vicente 2002; Burns and Hajdukiewicz 2004) for making salient the behaviours of assets. More generally, decision support is a known human factors/HMI challenge where the automation of cognitive tasks can often neglect critical secondary information sources or constraints that human operators use to arrive at solutions (Golightly and Dadashi 2016). Design also extends beyond the presentation of trends to making salient the manner in which the analysis is achieved, including uncertainties or ambiguities in the data (Morison and Woods 2016).

Predictive maintenance still requires the involvement of people to interpret data, and to discuss implications with local maintenance operators in a position to effect repairs (Jonsson et al. 2010) or to triangulate information and maintenance strategies (Kefalidou et al. 2015). Context is vital to the interpretation of data either because assets within a fleet have their own structural idiosyncrasies (Houghton and Patel 2015; Kefalidou et al. 2015), or because similar assets are used in different ways and face different demands (Lee et al. 2014). Therefore, despite the potential for remote monitoring to reduce the numbers of on-site maintenance teams, a reduced local presence is still vital, not only to carry out repairs but also because data sensing is not complete (Jonsson et al. 2010). It must also be recognised that human interpretation of data regarding an asset state is one of the sources of uncertainty that must be anticipated in the usage of prognostic condition monitoring, another being the variability in human performance of the maintenance task itself (McDonnell et al. 2015).

2.2 Organisational factors

2.2.1 Within an organisation

There is a need to capture current maintenance processes to understand how asset management technology is going to be embedded at both an operational and strategic level. This applies to all forms of asset management or predictive maintenance technology but is most acute when applied within an environment with a high degree of legacy—for example when applying new sensors to existing assets such as mid-life rail rolling stock. Expert elicitation of processes is vital given that documented procedures may be incomplete or erroneous. It is also important to identify who currently has the appropriate knowledge for maintenance processes, and who has the responsibility to act (Kefalidou et al. 2015). Ciocoiu et al. (2015) present a process mapping approach that can be used for asset management technology.

Asset management is supported by technology capabilities: the right IT infrastructure needs to be in place in order to enable the deployment of prognostic condition monitoring. This might be the servers that can store large volumes of data, or telecommunications networks to allow the transfer of information from the asset to the control centre. Having a body of existing IT competence is deemed a critical factor in successful e-maintenance deployment (Aboelmaged 2014). This requires appropriate competency among the staff working the system, in both technical skills and IT management skills (Chowdhury and Akram 2013).

Also, as different technologies may be procured and deployed at different times from different suppliers for different assets there is a risk of siloes developing between different functional units with limited knowledge exchange or organisational crossover (Koochaki and Bouwhuis 2008). This can be exacerbated by a lack of a common ontology or frame of reference (Koochaki and Bouwhuis 2008; Dadashi et al. 2014; Iung et al. 2009) for what remote condition monitoring or predictive maintenance means for different functions. Ultimately, the deployment of predictive maintenance technology may need a significant culture change in the organisation to one that proactively and coherently embraces predictive maintenance (Dadashi et al. 2014) in terms of its resourcing, planning and procurement. As with all forms of culture change, this requires appropriate leadership (Armstrong and Sambamurthy 1999).

2.2.2 Cross-organisational factors

It is in the area of cross-organisation factors that e-maintenance (maintenance enabled by the internet) truly enters the area of e-business (businesses and services that are enabled by the internet). Historically, large infrastructure operators owned, operated, maintained and even manufactured their assets. Through the availability of data and analysis delivered by ICT, these roles are becoming increasingly fragmented across organisations with specific suppliers for a number of aspects of the value chain. While some of these suppliers still produce products (e.g. sensors), connectivity is allowing some aspects of that value chain to migrate from products to services, through the remote analysis of data. This might be the OEM monitoring the product on behalf of the asset owner/operator (Smith 2013; Lee et al. 2014), or could be third parties who take on responsibility for product and customer support (Kajko-Mattsson et al. 2010). Suppliers are therefore looking at new e-business models (Petrovic et al. 2001) to generate revenue through condition monitoring, but understanding client needs can be a complex process (Jonsson et al. 2010). Also, both service provider and service user should acknowledge the need for a higher level of collaboration and human contact in comparison to traditional product sales contracts (Baines et al. 2013). As supply chains for condition monitoring become more distributed there can be challenges in establishing a business case for some of the underpinning technologies, particularly in areas such as the application of standards or common data frameworks to support the exchange of data to enable e-business in remote condition monitoring. There are also concerns around trust and of different stakeholders having access to performance data (Golightly et al. 2013). When predictive maintenance can be deployed effectively, however, there may be multiple gains to be enjoyed as additional, unexpected uses and benefits are found for different stakeholders across the supply chain (Chowdhury and Akram 2013).

2.3 Theoretical frameworks

The work that motivated the following study came from a human factors/ergonomics perspective, that is, to understand the human contribution to safe, effective and productive work, and to understand where limitations may impinge on safety or system performance (Wilson and Sharples 2015). The human factors perspective is useful because it places an emphasis on effective design of technology. This view is rooted in a sociotechnical systems perspective (e.g. Wilson et al. 2007) whereby the unit of analysis (or in the case of error, of attribution) is not restricted to the individual user or, increasingly, to a dyad constructed between the individual and an artefact or technology. Instead, work and performance are emergent (Wilson 2014) from the interactions between people in groups, with technology, and shaped by organisational factors such as team design, communications, culture and priorities.

Wilson and Sharples (2015) offer one variant on a family of frameworks—the ‘onion model’ (Grey et al. 1987)—that place the ‘user’ at the heart of a set of considerations that will impact their effectiveness (including comfort, safety and performance) within a working environment. While the user is immediately impacted by the technology and artefacts they work with, tasks and goals will structure how the work is performed. This performance takes place within a personal and/or virtual workspace, similar to the notion of ‘organisation’, that will shape the effectiveness of work. Impinging on these considerations are external factors including cultural, social, legal and financial considerations that influence work.

This type of layered approach is the mainstay of sociotechnical theory within human factors underpinning analytical approaches in areas such as safety and accident analysis (see Underwood and Waterson 2014 for examples). However, factors from outside of the organisation are typically governmental or regulatory, rather than considering factors from other organisations within a supply chain.

External organisations have not typically been an explicit consideration in previous human factors work for predictive maintenance. For example, Dadashi et al. (2014) proposed a framework for human factors in intelligent infrastructure (i.e. rail predictive maintenance) based on the (primarily) cognitive considerations for users at each level of a transitional process from data through to information to decision making and intelligence. However, the emphasis of the framework is on HMI, and cross-organisational factors were not explicitly identified. Ciocoiu et al. (2015) consider the organisational level, but were primarily focussed on integration of technology into a single organisation’s own maintenance delivery.

In the small body of organisational literature within e-maintenance, however, there is some support for the relevance of cross-organisation factors. In particular, Aboelmaged (2014) demonstrated the relevance of the Technology, Organization and Environment (TOE) framework, where environment includes not only the government, but suppliers, customers and competitors. Similarly, Jonsson et al. (2010) stress the criticality of boundary-spanning information flows, including those beyond any given organisation. Kefalidou et al. (2015) highlight supply chain, knowledge, and obsolescence as factors that can impinge on the meaningfulness of predictive maintenance.

2.4 Research approach

An identified research gap is that pre-existing work is overwhelmingly focussed on technology rather than user issues (Koochaki and Bouwhuis 2008; Chowdhury and Akram 2013; Dadashi et al. 2014). As described above, the small body of human factors work in remote condition monitoring has typically focussed on technology development (Ciocoiu et al. 2015; Houghton and Patel 2015; Dadashi et al. 2016) in specific settings and does not explicitly seek out cross-organisational issues, though there is evidence from both more organisational work (Jonsson et al. 2010; Aboelmaged 2014) and human factors models (e.g. Wilson and Sharples 2015) that cross-organisational issues are relevant. Also, the more human or organisational work that has been conducted tends to look at a single sector (e.g. Dadashi et al. 2014; Chowdhury and Akram 2013) or a small subset (e.g. Jonsson et al. 2010) of industries. Aboelmaged (2014) presented a cross-domain quantitative survey to identify factors relating to technology readiness in e-maintenance, but recommended subsequent qualitative work to further understand readiness for e-maintenance.

To address these gaps, the aim was to collect data from a sample representing multiple domains and with different design and operational perspectives. To achieve this, the approach taken was to elicit multiple case studies through the use of semi-structured interviews (Runeson and Höst 2009). This approach was considered to be flexible enough to capture general beliefs and experiences related to predictive maintenance deployment (Hove and Anda 2005). As the literature had already suggested themes for analysis (e.g. user, organisational, cross-organisation; Dadashi et al.’s (2014) data to intelligence framework) and the nature of the data concerned technical case studies rather than highly experiential or subjective data, a thematic analysis approach was taken in preference to an approach such as grounded theory or interpretative phenomenological analysis (Hignett and McDermott 2015).

3 Method

3.1 Participants

Twenty participants were approached through contacts of authors following a purposive/snowball sampling strategy (Hignett and McDermott 2015). Of these, 13 agreed to be interviewed. Participants were chosen to reflect a wide range of knowledge and experience with remote condition monitoring or predictive data visualisation expertise, and across multiple domains. All participants were either working in industry (7), were currently in research but delivering production-standard remote condition monitoring projects for industry (3), or were now in research but had worked previously in industry providing remote condition monitoring services (3). Domains covered included rail rolling stock (3), rail infrastructure (2), manufacturing process monitoring (2), fleet commercial vehicles (1), vibration monitoring (1), gearbox monitoring (1), nuclear (1) and highways (1). Also, one participant came from the domain of financial services—while not ‘predictive maintenance’, this is a domain with established technology and practices for capturing, analysing and visualising predictions for decision making (e.g. for mortgage underwriting), and thereby had relevant expertise to the topic at hand.

Participants reflected different roles including human–computer interaction design/human factors (4), suppliers of third-party remote condition monitoring and predictive maintenance solutions (4), in-house deployment and operation of remote condition monitoring and predictive maintenance (4), and change management with application to remote condition monitoring and predictive maintenance (1). Of the 8 participants working in supply or in-house deployment, 6 participants had worked on the whole system (i.e. from sensing through to analysis). The remaining 2 participants specialised in the software relating to analysis. Also, potentially reflecting the ambiguity of roles and bespoke nature of development in this field, there was no clear distinction between suppliers and end-users, as those participants in a supplier role had either experience as an end-user, or were tasked at some stage by their end-user organisation with doing the analysis themselves.

3.2 Procedure

Each participant was introduced to the aims of the study both verbally and through a written information/consent sheet. Participants were then asked to introduce their own experience and background in remote condition monitoring and predictive maintenance, using the following question guide.

1. What type of remote condition monitoring/predictive maintenance solutions do you engage with (are you a supplier, user, academic)?
2. What are the set of stakeholders you typically work with?
3. What are your experiences of using/deploying RCM? Can you give a specific example?
4. What are the major barriers to successful organisational change with RCM?
5. What are the major enablers of successful organisational change with RCM?
6. What are the future challenges for RCM deployment?
7. Do you know of any other participants who would be willing to take part in this study?

Question 3 served as the basis for extended discussion of that particular case or cases selected by the participant. Interview duration was typically 60 min.

Data were collected primarily through extensive contemporaneous written notes. This was felt to be more appropriate than audio recording, even with ethics procedures and assurances of confidentiality, as topics covered often related to commercially sensitive projects and specific commercial organisations. This choice was supported by participants occasionally asking for very sensitive points to be left out of the notes.

3.3 Analysis

Analysis proceeded in three phases (Hignett and McDermott 2015). The first phase involved review and familiarisation of the notes through transfer from written to an electronic format. Then all notes were printed, read and annotated to highlight themes. Initially the intention was to code according to Dadashi et al.’s data to intelligence framework (2014). However, the case study nature of the data, which typically involved narratives covering one or two projects from each participant, suggested a coding structure based around three high-level codes.

1. Motivation: Drivers for the projects, or motivations for procurement, of predictive maintenance technology.
2. Development: Factors surrounding the design of hardware, software and HMI.
3. Operations: Deploying the technology into a working environment; user adoption and organisational change.

The second phase involved reviewing all notes with a view to allocating codes to the data. Codes were assigned initially according to the high level themes, and then subthemes were allocated as they emerged. An example of interview notes, with allocated themes is presented in Table 1.
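As an illustration only, the two-level coding structure described above (three high-level codes, with subthemes allocated as they emerged) could be represented and tallied as in the sketch below. The class `CodedSegment`, the function `subthemes_by_code`, the participant labels and the note text are all hypothetical placeholders rather than study data; the analysis reported here was carried out on printed, annotated notes, not in software.

```python
from collections import Counter
from dataclasses import dataclass

# The three high-level codes used in the analysis.
HIGH_LEVEL_CODES = ("Motivation", "Development", "Operations")


@dataclass(frozen=True)
class CodedSegment:
    """One annotated fragment of interview notes (all field values here are hypothetical)."""
    participant: str  # anonymised participant label
    text: str         # the note fragment being coded
    code: str         # one of HIGH_LEVEL_CODES
    subtheme: str     # subtheme allocated as it emerged

    def __post_init__(self):
        # Reject anything outside the agreed high-level coding structure.
        if self.code not in HIGH_LEVEL_CODES:
            raise ValueError(f"unknown high-level code: {self.code!r}")


def subthemes_by_code(segments):
    """Count how often each subtheme appears under each high-level code."""
    grouped = {code: Counter() for code in HIGH_LEVEL_CODES}
    for segment in segments:
        grouped[segment.code][segment.subtheme] += 1
    return grouped


if __name__ == "__main__":
    # Placeholder segments, not real interview data.
    segments = [
        CodedSegment("P01", "placeholder note A", "Motivation", "Use cases"),
        CodedSegment("P02", "placeholder note B", "Motivation", "Strategy"),
        CodedSegment("P02", "placeholder note C", "Development", "Design approach"),
    ]
    for code, counts in subthemes_by_code(segments).items():
        print(code, dict(counts))
```

Validating the high-level code at construction mirrors the procedure of coding first to the fixed high-level themes, while leaving subthemes as free text reflects that they were not defined in advance.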

The third phase involved writing the report, reviewing the grouping of themes and sub-themes as a textual description. The report was reviewed internally before a respondent validation phase (Hignett and McDermott 2015) that involved sending the analysis, in the form of a draft of this paper, to all participants and to two senior project stakeholders who were not interviewed. Six participants and both stakeholders responded, all with positive feedback and, in one case, with additional supporting material regarding HMI design.

4 Results

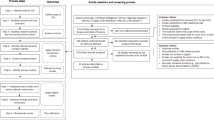

A summary of themes and subthemes is presented in Fig. 1.

4.1 Motivations

4.1.1 Use cases

All participants offered at least one example of current or future application that served as use cases for motivating predictive maintenance solutions. These covered:

1. Optimising maintenance: Assets were already being maintained effectively (i.e. there were no or few failures under the current maintenance regime) but this was achieved through much redundant inspection, presenting opportunities for more cost-effective allocation of maintenance resources. Also, inspection or maintenance had the potential to introduce variability or failure into a system. Therefore, a predictive maintenance system allowed an asset to be maintained only when needed.
2. Extending asset lifespan: Similar to optimising maintenance, asset prognostics allowed better estimates of the remaining lifespan of the asset, allowing assets to be used for longer before requiring renewal.
3. Safer maintenance: Where assets are in remote or unsafe environments (e.g. deserts, offshore, nuclear), assets could be monitored to ensure maintenance was conducted only when necessary.
4. Understanding behaviour: At a more general level, there was a motivation to understand asset behaviour, or asset usage (e.g. how many times a day a set of rail points were being used). Primarily, this allowed decision-makers to move to “evidence-based decisions”, rather than decisions based on received wisdom or common (but potentially inappropriate) practice.
5. Asset failure: This motivation involved ensuring the asset was available and functioning either because it was safety critical (e.g. in rail), or because failure would lead to quality issues (e.g. manufacturing) or unplanned, expensive down time of the asset (e.g. gearboxes). In some manufacturing cases, remote sensing may be the only way of knowing that the asset has failed, and therefore that product quality has been compromised, before large batches are produced at high cost. Also, enhanced data supported failure targeting, that is, cases where a general failure had been identified but not the specific location. For example, a fault with a train door is identified on a train, but not which specific door. The data and analysis from a predictive maintenance solution allow the exact door and type of failure to be identified, allowing local maintenance crews to spend less time finding the fault and to focus instead on getting the asset back into service for the next morning. It is worth noting that the use case around asset failure is not strictly predictive (the asset has already failed). While some examples were given where this was not a priority, and the focus was purely on prediction of future states, there were cases where the technology had to perform functions related to failure as well as prediction.

4.1.2 Strategy

A number of points emerged regarding the nature of strategy when embarking on predictive maintenance projects, and how this was essential for effective adoption at the individual and organisational level. First, leadership was critical: it was essential, particularly for domains that were relatively new to advanced condition monitoring solutions, that a programme of work be pushed at the highest levels of the organisation. The most effective projects were linked to a philosophy of maintenance and service, and sectors such as automotive and aero engines were put forward as examples that had got this right by putting customer service, through predictive maintenance, at the core of their business. In large sectors with multiple inter-connected stakeholders (e.g. rail), leadership may need to be supported at a whole industry or regulatory level, and one of the major differences between domains covered was the influence of regulation, with greater regulation generally leading to slower development and adoption of predictive maintenance. Despite these strong motivations, however, maintenance planning and technology to support maintenance was acknowledged to be only one priority, and often far from the highest priority in an organisation. Also, motivating an active programme of monitoring and predictive maintenance could be challenging in the optimising maintenance situation, where current processes worked well, if inefficiently, as operators were averse to the risk that accompanied change.

A second point was that any development had to be linked to a maintenance strategy. There needed to be a clear connection in terms of how a solution would support organisational targets, such as quality levels for manufacturing, levels of continuity or availability for transport assets, or costs associated with unexpected downtime in power generation. Buy-in from senior management was achieved through demonstrating the financial benefits of adopting predictive maintenance. For operational staff, the benefits were around how they could use their time more effectively. In particular, two participants cited their own projects in industry where they saw an opportunity or need, and voluntarily explored solutions themselves or in small teams, before taking them to senior management for more formal support and deployment.

A third aspect of strategy was an acceptance of project risk. All of the case studies discussed broke new ground both in terms of the technologies they were using to sense data, and in the analyses being performed. This required iteration, learning and some inevitable failure along the way. Therefore, the organisation needed to be positive in accepting and managing risk as part of adopting predictive maintenance. When projects involved third parties and suppliers, it was important that relationships should be collaborative rather than contractual. This was in part due to the uncertain nature of developing projects, or even of effectively deploying existing projects in new settings, making it difficult to specify a purely contractual relationship in advance. Also, as discussed below, the volume of knowledge exchange required meant that collaborators had to work in partnership to freely exchange data and knowledge as much as possible.

4.2 Development

A number of challenges were identified with the process of designing a new predictive maintenance solution, or with effective procurement of a system. While these could be technical issues, they had a user or organisational aspect.

4.2.1 Design approach

Underpinning the development of any project was an emphasis on being decision- or action-driven. There had to be a clear target for what was being achieved with the data. A system design orientated to converting data into information was likely to fail. It was essential to take a ‘top–down’ approach that clearly linked information to the ‘levers’ of action or decision-making, and then identify the appropriate data to support that decision-making. Also, technology that aimed to support actual decisions was anticipated to be of more use and more accepted by operational staff, even in cases of partial data, than one where the design was driven purely by data. This top–down approach warranted close involvement of actual users and decision-makers. Another important factor in design, particularly for retrofit (which was relevant to all but one of the participants), was that the introduction of the sensors themselves should not introduce a safety or failure risk. Ensuring the asset could still be certified could be a major hurdle (e.g. in nuclear or pharmaceuticals).

A major factor was whether the design involved third parties in any part of the solution. A regular theme of discussion was around suppliers who offered a ‘black box’, with the implication that the analysis process was opaque to the user. While some operators and organisations were looking for the simplicity that a black box could offer, the concern was that this often masked important aspects of analytical complexity. For example, if a maintenance environment combined one or more ‘black boxes’ from different suppliers, it was not always possible to tell if different technologies, and their resulting guidance, were comparable. Also, if elements of the solution were to be procured from another sector (the example was given of transferring sensor technology from utilities into the rail environment), it was not clear if thresholds or analytical processes were relevant to the process where they were now being applied. Finally, sometimes the black box masked fundamental issues in the development of the technology (to quote, “it is a black box for a reason”). This had a crucial user implication, as without knowing where the data was coming from or how it would be applied, there would be a lack of trust and eventual rejection of the technology.

4.2.2 Complexity and knowledge

The technical design of the system itself had human implications. Critically, all of the implementations discussed had multiple, interlinked elements. The asset itself may have multiple components and materials, each with different characteristics. One or more aspects of a component (e.g. temperature, vibration) would be monitored which involved a sensor, possibly a means of logging the data or conducting some local analysis, and transmission of a signal (e.g. via a 3G network). Multiple data sources would then have to be combined on a network resource (e.g. a server) and then analysed by at least one stage of algorithms. Every element of this chain would have a bearing on the success of the analysis, with a particular importance placed on understanding the failure modes and the degradation process of a component. Information about how these interconnected links behaved resided as specialised, often undocumented, knowledge across many people, sometimes across organisations. Even academic knowledge was limited, particularly for retrofit, due to the unique combination of assets and technologies.

Where there were gaps in knowledge, cycles of manual data interpretation, testing and experimentation were required, which could constitute a very resource-intensive phase of development. In line with Chowdhury and Akram (2013), this often extended to the IT platform and capabilities, though sophisticated IT knowledge was rarely the core strength of any given industrial sector (e.g. transport, manufacturing). At some point, these interlinked systems would involve suppliers. This might involve the manufacturer of a component or sensor, or access to a data stream. Accessing this data could be a challenge, either because the knowledge of the data did not exist in the supplier (e.g. the behaviour of a material in a component) or because of IP concerns. For example, access to the meaning of automotive fault codes was often proprietary and unavailable, and developers of third-party engine maintenance solutions would need to find alternative sources of data.

Having accessed the data, the interpretation and analysis of data was generally believed to be very challenging in terms of expertise, particularly where analysis or prediction was new to a sector, or where the number of variables was high. Predictive systems (rather than simple failure alarms) would require multiple levels of analysis: not just looking at deviations, but at how deviations diverged from expected norms over time. Analysis would often require triangulation of multiple data streams or, for some assets, collecting emerging pictures over multiple assets and/or over time. The human implication was that this was again dependent on knowledge and took time, particularly for assets such as a highway where degradation might occur over months or years. A second implication was that few systems could deliver complete ‘decisions’. In many situations, at some point a human operator was still expected to perform a level of analysis or interpretation on top of the one provided by the technology.
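The distinction drawn above between a simple failure alarm and an analysis of how deviations develop over time can be illustrated with a minimal sketch. It is not taken from any of the systems discussed by participants: the function names, the rolling least-squares slope, the window size and the temperature values are all assumptions chosen for illustration.

```python
from statistics import mean


def threshold_alarm(readings, limit):
    """Simple failure alarm: flag each reading that exceeds a fixed limit."""
    return [r > limit for r in readings]


def deviation_slopes(readings, baseline, window=3):
    """Least-squares slope of (reading - baseline) over each rolling window.

    Assumes window >= 2; a single point has no slope.
    """
    deviations = [r - baseline for r in readings]
    xs = list(range(window))
    x_bar = mean(xs)
    denom = sum((x - x_bar) ** 2 for x in xs)
    slopes = []
    for end in range(window, len(deviations) + 1):
        ys = deviations[end - window:end]
        y_bar = mean(ys)
        numer = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
        slopes.append(numer / denom)
    return slopes


def is_accelerating(slopes):
    """True when there are at least two slopes, all positive and strictly rising."""
    return (
        len(slopes) > 1
        and all(s > 0 for s in slopes)
        and all(later > earlier for earlier, later in zip(slopes, slopes[1:]))
    )


# Hypothetical component temperatures (degrees C), one reading per inspection.
readings = [50.0, 50.5, 51.0, 52.0, 53.5, 55.5]
alarms = threshold_alarm(readings, limit=60.0)      # hypothetical alarm limit
slopes = deviation_slopes(readings, baseline=50.0)  # hypothetical baseline
print(any(alarms), is_accelerating(slopes))
```

With these illustrative values no reading breaches the alarm limit, yet the deviation from baseline grows faster in each successive window. This is the kind of pattern a threshold alone would miss, and one that an operator would still need to interpret against knowledge of the component's failure modes.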

A final point was that full predictive maintenance solutions were extremely challenging. Such a system would require not only effective analytics, but integration with maintenance scheduling systems and with systems for tracking replacement parts and the availability of maintenance staff. While this remained a future aspiration, the difficulty of integrating such diverse systems meant that the actual planning of maintenance was still, primarily, a human task.

4.2.3 Human–machine interface

As there was nearly always a level of human interpretation in the use of predictive analytics, HMI played an important role in how predictive information was to be presented to the user. Modes of use varied. Where there was a clear set of steps a user had to complete (e.g. a checklist), a structured workflow was most useful. This was most relevant for situations where data and analytical processes were well established and highly reliable (e.g. consumer credit decisioning). Where the situation was more complex, or the domain was newer to analytic technology, there was more emphasis on exploration and interpretation by a user. It was not considered appropriate design to show huge amounts of raw data but rather, relating to the point above, to show the relations between patterns, or highlight deviations from the norm. Libraries of designs exist (e.g. an open source library of visualisation templates at D3js.org), or new designs could be captured through a process such as ecological interface design (Vicente 2002). Techniques like card sorting (Wood and Wood 2008) could also be used to capture workflow, where appropriate. There was, however, a danger of oversimplification. While traffic light displays (red, amber, green) may prove a simple and common form of interface, they had to be used with caution because, like the black box example, the representation masked complexity and/or uncertainty in the underlying data and processes, giving a false sense of trust or limiting access to data for diagnostic purposes. A final point for HMI design was that these systems were not necessarily used all day every day. In some cases, particularly where they were used for maintenance scheduling, they may only be referred to on a regular (e.g. weekly) or as-needed basis. This was particularly the case where the ‘asset failure’ use case, described above, was not a consideration (i.e. the HMI did not need constant monitoring). The implication was that the standard of usability had to be high, as users would be required to reacquaint themselves with the technology each time they returned to it.
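One way to read the caution about traffic light displays is that the colour should travel with the evidence behind it rather than replace it. The following sketch is an illustration only, not a design proposed by participants: the `Indicator` structure, the threshold values and the idea of flagging an uncertainty interval that straddles a threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    status: str            # "green", "amber" or "red", from the point estimate
    estimate: float        # e.g. estimated probability of failure
    low: float             # lower bound of the uncertainty interval
    high: float            # upper bound of the uncertainty interval
    spans_threshold: bool  # True if the interval crosses a colour boundary


def classify(value, amber_at, red_at):
    """Map a single value to a traffic light colour."""
    if value >= red_at:
        return "red"
    if value >= amber_at:
        return "amber"
    return "green"


def summarise(estimate, low, high, amber_at, red_at):
    """Keep the colour, but also keep the numbers and flag ambiguous cases."""
    status = classify(estimate, amber_at, red_at)
    spans = classify(low, amber_at, red_at) != classify(high, amber_at, red_at)
    return Indicator(status, estimate, low, high, spans)


# Hypothetical values: the point estimate is green, but the interval reaches amber.
indicator = summarise(0.28, low=0.15, high=0.45, amber_at=0.30, red_at=0.60)
print(indicator.status, indicator.spans_threshold)
```

Here a display showing only the colour would report green, while the retained interval shows that amber cannot be ruled out. Keeping the underlying values available also supports the diagnostic access that participants felt a bare traffic light could limit.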

4.3 Operation

4.3.1 Deployment

One clear theme, building on the points from above, was that there was not a clear-cut line between ‘development’ and ‘operation’. For newer domains, predictive systems needed to be run in parallel with existing regimes for some time in order to first set baselines, and for the organisation to adapt to the new forms of analysis that were emerging. The trial phase for new solutions could be very long (sometimes several years). Even in more advanced sectors of prediction (e.g. consumer credit scoring) it was acknowledged that development had to be an iterative process. In practice, however, many organisations would treat deployment as simply the end of the process, and not put the appropriate resources on the project. As a result, solutions were often not embedded effectively. It was also highlighted that in domains where patterns change slowly over long periods of time (e.g. for large physical infrastructure such as power generation) it was easy for the technology to fall out of use, and eventually be ignored altogether. This was more of an issue where the ‘asset failure’ use case was not relevant (i.e. there was no need to monitor the technology).

Long-term development required a long-term commitment from a budget holder. This involved funding the project but, more importantly, required that the budget holder for maintenance and renewals match the budget cycle to the timescales that it might take for the predictive maintenance to prove its value. For example, there would be a mismatch where maintenance budgets might be allocated (and therefore at risk) in 12-month cycles but the predictive solution might take 5 years to prove its worth. One suggestion was that research funders should put aside a small budget to support ongoing development of a project, beyond its initial delivery.
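The budget mismatch in the example above can be made concrete with a small piece of arithmetic. The sketch below is illustrative only; the function names are hypothetical, and it assumes the simplest possible model in which funding is decided once per budget cycle.

```python
import math


def allocation_rounds(proof_months, cycle_months):
    """Number of budget cycles that must be funded before value is proven."""
    return math.ceil(proof_months / cycle_months)


def renewals_before_proof(proof_months, cycle_months):
    """Funding decisions, after the first, at which the project is at risk."""
    return max(allocation_rounds(proof_months, cycle_months) - 1, 0)


# The example from the text: 12-month budget cycles, 5 years (60 months) to prove worth.
print(allocation_rounds(60, 12), renewals_before_proof(60, 12))
```

Under this simple model the project in the example needs five consecutive allocations, and so must survive four renewal decisions before it has had the chance to demonstrate its value.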

One further theme was the resourcing required, particularly as technology was being developed. While organisations might see predictive maintenance as a way of reducing resource, the initial period at least would require significant extra resource as people had to learn new technology, conduct manual analysis to interpret new data streams (to achieve an understanding of ‘deviations over time’ discussed above) and keep the new system running alongside existing methods until validation was achieved. As well as being demanding for individuals, this needed to be acknowledged at an organisational and planning level.

4.3.2 Knowledge

The ongoing operation of a predictive maintenance system had implications for knowledge and knowledge management at a number of levels. First, the amount of time it took to run a project meant that staff inevitably left or moved around an organisation. It was therefore critical that knowledge was retained from these people in some form. Second, with projects involving multiple partners, there was a significant risk that after the initial project team dispersed, vital knowledge and understanding of design rationale would be lost. Given the point above about the complexity of solutions, this might apply to sensors, analysis, or the nuances of the underpinning IT. Third, there had to be knowledge of how to get the most out of the analysis being generated. One example was given of training engineers how to design assets in the future to support better monitoring and maintenance. Capturing and maintaining all of these different sources of knowledge had to be an active process, and several examples were given of where the knowledge had not been maintained, resulting in a loss of understanding of how to use or support the predictive maintenance technology, leading to eventual rejection. Conversely, where teams and partnerships had been actively managed through training, handover and relationships, long-term adoption was more likely to succeed.

Finally, in industries and sectors as a whole, there were opportunities to share knowledge and move the state of the art forward. Sectors such as rail would benefit from ‘centres of excellence’ where knowledge could be captured and shared. This would cover both technical knowledge and the process of deployment and adoption.

4.3.3 Benefits

With the right strategy and given sufficient time, not only could the use cases listed earlier be delivered, but additional benefits were often found. This would often include technical discoveries (that parts could be built more effectively in the future, that other unanticipated types of fault could be diagnosed and other unanticipated maintenance savings be made). They often, also, uncovered behavioural factors or human variability issues—for example, monitoring would identify more cost efficient ways of operating machinery or vehicles, or that certain maintenance behaviours were leading to types of fault. Once technology was embedded, there were multiple benefits to be gained and effective organisations were alert to the possibilities that might emerge.

5 Discussion

The work conducted was primarily practical in its aims to support an actual development and deployment project. In this regard, a number of recommendations have been brought to light—summarised in Table 2.

In addition, the analysis has both confirmed existing research, and extended findings in a number of directions. These issues can be reflected upon within the context of human factors models.

Looking first to the relation between users and technology/artefacts (Wilson and Sharples 2015), probing on issues of HMI has highlighted, as with others (Houghton and Patel 2015; Morison and Woods 2016), the importance of balance between overloading the user with all forms of raw data streams, and the risks of hiding too much of the data in the form of a simplified traffic light system. Even in the mature financial decision-making case where decisioning is highly reliable, there was still a need to show the nature of the data that underpins a decision in part for diagnostics, and in part for ensuring user trust in the data. The volume of this data can be managed by showing the salient relationships in the data, rather than just the raw data itself.

These interviews have highlighted, however, not just the importance of representation, but also of the structure of the interaction commensurate with the notion of ‘task’; the use of process analyses, as suggested by Ciocoiu et al. (2015), could be useful here. These interviews emphasise the decision-led nature of successful predictive maintenance development, highlighting the need to work closely with both operational (e.g. maintenance) and strategic (e.g. planning) staff to capture these decisions and embed them within design. Also, the pacing of interaction must reflect the types of task the users engage in. The predictive nature of these systems suggests a move away from reactive/alarm-handling type interfaces (Dadashi et al. 2016) to one that requires only occasional use. Also in terms of users, knowledge, both at the design and operational stage, and both in the specifics of predictive maintenance and in more general ICT, has been found to be a crucial enabler of successful predictive maintenance-type projects (Koochaki and Bouwhuis 2008; Aboelmaged 2014; Chowdhury and Akram 2013). We note that, rather than being a set of purely technical aspects of delivery, as captured within the TOE framework (Aboelmaged 2014), these factors are a combination of design, task and user factors such as knowledge working together to shape successful performance.

In terms of understanding wider organisational factors, as with Dadashi et al. (2014) we found leadership and strategy within the organisation to be vital to the successful implementation and usage of a new technology. Also, the timescales of delivery and of drawing value from the embedding of technology, and the resources involved, are new issues that these data suggest must be taken into account when adopting predictive maintenance. The make-up of virtual teams within organisations, as with Jonsson et al. (2010), highlights the issues with the boundary-spanning nature of predictive maintenance. However, the challenges of boundary-spanning knowledge apply as much at the design phase as operation. We also find organisational factors, such as resourcing in terms of time, people and money, to be critical.

In terms of theoretical contribution, the major outcome of this work has been to define the role of cross-organisational considerations as an external factor when applying human factors models such as Wilson and Sharples (2015) to the context of predictive maintenance. It is important to note these are not just ‘off stage’ factors that impact the design of technology or artefacts. Access to shared knowledge across the supply chain, the understanding of technical capabilities and their bearing on the quality or interpretation of decision-making, and ultimately the acceptance of technology are all dependent on these external parties, and these cross-organisational factors remain live during the lifespan of any system (Baines et al. 2013).

Hierarchical human factors models typically anticipate the influence of standards and regulatory bodies. In the predictive maintenance case they had the potential to be a barrier (e.g. in blocking certification of assets with monitoring technology) but also to act as an enabler, through supporting onward funding of projects beyond deployment, and by encouraging knowledge sharing within sectors (e.g. through centres of excellence). However, like Kajko-Mattsson et al. (2010) we found issues around cross-organisational working, including trust and intellectual property (Golightly et al. 2013). The complexity of the supply chain is far greater than expected at the outset, and relates not just to technical issues but to a ‘knowledge supply chain’ of expertise distributed across organisations. The knowledge within this supply chain must be both visible (i.e. partners need to be open to sharing how their technology works) and managed (i.e. the knowledge must be available not just at the time of launch, but into the future). This is not just for negative reasons, such as understanding why the technology may no longer work. As with Chowdhury and Akram (2013), operators find new ways to gain value from the technology and gather new insights, but the interviews suggest these emergent forms of working (Wilson 2014) must be supported through cooperation between multiple suppliers and customers.

Limitations of the work include the number of participants. While there was considerable thematic consistency in the contribution of participants, it would be useful to expand the participant base further to include domains such as aerospace or automotive (representatives from these sectors were sought at the time of the study). Also, the contribution from financial services which, while not strictly maintenance, covered visualisation of predictive analytics, proved very relevant and valuable in the area of HMI design. Further participants of this type (e.g. from weather prediction) could shed valuable light on visual design practices for predictive maintenance. Second, other frameworks could be applied to the interview data set. For example, comments could have been assessed for their relevance against Dadashi et al.’s (2014) data, information, decision, intelligence framework. This might capture new aspects of the data.

6 Conclusions

Predictive maintenance offers significant advantages in terms of better use of resources, more effective, evidence-based use of assets, and more cost effective renewals planning. The technology is underpinned by a complex network of sensors, data and interpretation—this is complex not only in terms of the sophistication of the analyses, but also through the complexity of the arrangements of people within and across organisations.

The work presented in the paper aimed at confirming and extending prior domain specific work in user and organisational issues associated with the development and adoption of predictive maintenance (e.g. Jonsson et al. 2010; Chowdhury and Akram 2013; Dadashi et al. 2014; Kefalidou et al. 2015), with a view to informing a human factors perspective (e.g. Wilson and Sharples 2015) on predictive maintenance. An analysis of interviews with experts in the domain of predictive maintenance, remote condition monitoring and visualisations for predictive analytics has focussed on user, organisational and cross-organisational issues. Emerging from these themes are recommendations related to culture, delivery process, resourcing, supply chain collaboration and industry-wide cooperation. Most interestingly, cross-organisational issues, which are found in more organisational-orientated studies (Aboelmaged 2014; Jonsson et al. 2010) but to a much lesser extent in the small number of human factors studies of predictive maintenance (Kefalidou et al. 2015), have been identified for adoption into future human factors work. While for now this primarily means design and evaluation of performance, the increasing role predictive maintenance is taking in the fabric of society means that in future the issues identified in this paper may be relevant to resilience modelling or accident investigation frameworks (Underwood and Waterson 2014).

As well as addressing limitations, future work could formalise and validate the recommendations presented above. From here, it would be possible to present these recommendations as a structured benchmarking tool. One path would be to extend the Technology, Organisation, Environment (TOE) framework presented by Aboelmaged (2014), particularly with a view to cross-organisational issues. Such a tool would allow organisations to assess their readiness for adoption of predictive maintenance. Critically, this would apply not just to customers (or end user organisations) but, following the results found in this study, suppliers and whole configurations of supply chains delivering predictive maintenance solutions.

References

Aboelmaged MG (2014) Predicting e-readiness at firm-level: an analysis of technological, organizational and environmental (TOE) effects on e-maintenance readiness in manufacturing firms. Int J Inf Manag 34(5):639–651

Armstrong CP, Sambamurthy V (1999) Information technology assimilation in firms: the influence of senior leadership and IT infrastructures. Inf Syst Res 10(4):304–327

Baines T, Lightfoot H, Smart P, Fletcher S (2013) Servitization of manufacture: exploring the deployment and skills of people critical to the delivery of advanced services. J Manuf Technol Manag 24(4):637–646

Burns CM, Hajdukiewicz JR (2004) Ecological interface design. CRC Press, Boca Raton

Chowdhury S, Akram A (2013) Challenges and opportunities related to remote diagnostics: an IT-based resource perspective. Int J Inf Commun Technol Hum Dev (IJICTHD) 5(3):80–96

Ciocoiu L, Siemieniuch CE, Hubbard EM (2015) The changes from preventative to predictive maintenance: the organisational challenge. In: Proceedings of 5th international rail human factors conference, London, September 2015

Dadashi N, Wilson JR, Golightly D, Sharples S (2014) A framework to support human factors of automation in railway intelligent infrastructure. Ergonomics 57(3):387–402

Dadashi N, Wilson JR, Golightly D, Sharples S (2016) Alarm handling for health monitoring: operator strategies used in an electrical control room of a rail network. Proc Inst Mech Eng, Part F: J Rail Rapid Transit 230(5):1415–1428

Figueredo GP, Quinlan PR, Mesgarpour M, Garibaldi JM, John RI (2015) A data analysis framework to rank HGV drivers. In: 2015 IEEE 18th international conference on intelligent transportation systems (pp 2001–2006). IEEE

Golightly D, Dadashi N (2016) How do principles for human-centred automation apply to Disruption Management Decision Support? In: Proceedings of IEEE ICIRT 2016, Birmingham, UK

Golightly D, Easton JM, Roberts C, Sharples S (2013) Applications, value and barriers of common data frameworks in the rail industry of Great Britain. Proc Inst Mech Eng, Part F: J Rail Rapid Transit 227(6):693–703

Grey SM, Norris BJ, Wilson JR (1987) Ergonomics in the electronic retail environment. ICL, Slough

Hignett S, McDermott H (2015) Qualitative methodology. In: Wilson JR, Sharples S (eds) Evaluation of human work, 4th edn. CRC Press, Boca Raton, pp 119–138

Houghton RJ, Patel H (2015) Interface design for prognostic asset maintenance. In: Proceedings 19th triennial congress of the IEA (Vol. 9, p. 14)

Hove SE, Anda B (2005) Experiences from conducting semi-structured interviews in empirical software engineering research. In: Software metrics, 2005. 11th IEEE international symposium (pp. 10-pp). IEEE

Iung B, Levrat E, Marquez AC, Erbe H (2009) Conceptual framework for e-maintenance: illustration by e-maintenance technologies and platforms. Annu Rev Control 33(2):220–229

Jonsson K, Holmström J, Levén P (2010) Organizational dimensions of e-maintenance: a multi-contextual perspective. Int J Syst Assur Eng Manag 1(3):210–218

Kajko-Mattsson M, Karim R, Mirjamsdotter A (2010) Fundamentals of the e-maintenance concept. In: 1st international workshop and congress on e-maintenance (pp 22–24)

Kefalidou G, Golightly D, Sharples S (2015) Understanding factors for design and deployment of predictive maintenance. In: Proceedings of 5th international rail human factors conference, London, September 2015

Koochaki J, Bouwhuis IM (2008) The role of knowledge sharing and Transactive Memory System on Condition Based Maintenance policy. In: 2008 IEEE international conference on industrial engineering and engineering management (pp 32–36). IEEE

Lee J, Kao HA, Yang S (2014) Service innovation and smart analytics for industry 4.0 and big data environment. Procedia CIRP 16:3–8

McDonnell D, Balfe N, Baraldi P, O’Donnell GE (2015) Reducing uncertainty in PHM by accounting for human factors—a case study in the biopharmaceutical industry. Procedia CIRP 38:84–89

Morison AM, Woods DD (2016) Opening up the black box of sensor processing algorithms through new visualizations. Informatics, vol 3. Multidisciplinary Digital Publishing Institute, Basel, p 16

Petrovic O, Kittl C, Teksten RD (2001) Developing business models for e-business. International Electronic Commerce Conference, Vienna. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1658505. Accessed 18 May 2017

Runeson P, Höst M (2009) Guidelines for conducting and reporting case study research in software engineering. Empir Softw Eng 14(2):131

Smith DJ (2013) Power-by-the-hour: the role of technology in reshaping business strategy at Rolls-Royce. Technol Anal Strateg Manag 25(8):987–1007

Suh SH, Shin SJ, Yoon JS, Um JM (2008) UbiDM: a new paradigm for product design and manufacturing via ubiquitous computing technology. Int J Comput Integr Manuf 21(5):540–549

Underwood P, Waterson P (2014) Systems thinking, the Swiss Cheese Model and accident analysis: a comparative systemic analysis of the Grayrigg train derailment using the ATSB, AcciMap and STAMP models. Accid Anal Prev 68:75–94

Vicente KJ (2002) Ecological interface design: progress and challenges. Hum Factors: J Hum Factors Ergon Soc 44(1):62–78

Wilson JR (2014) Fundamentals of systems ergonomics/human factors. Appl Ergon 45(1):5–13

Wilson JR, Sharples SC (2015) Methods in the understanding of human factors. In: Wilson JR, Sharples S (eds) Evaluation of human work, 4th edn. CRC Press, Boca Raton, pp 1–32

Wilson JR, Farrington-Darby T, Cox G, Bye R, Hockey GRJ (2007) The railway as a socio-technical system: human factors at the heart of successful rail engineering. Proc Inst Mech Eng, Part F: J Rail and Rapid Transit 221(1):101–115

Wood JR, Wood LE (2008) Card sorting: current practices and beyond. J Usability Stud 4(1):1–6

Acknowledgements

This work is funded by the Innovate UK-funded project PCIPP: People-Centred Infrastructure for Intelligent, Proactive and Predictive Assets Maintenance with Condition Monitoring, project number 101698. Many thanks are due to the participants who contributed their time and expertise.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Golightly, D., Kefalidou, G. & Sharples, S. A cross-sector analysis of human and organisational factors in the deployment of data-driven predictive maintenance. Inf Syst E-Bus Manage 16, 627–648 (2018). https://doi.org/10.1007/s10257-017-0343-1

DOI: https://doi.org/10.1007/s10257-017-0343-1