Abstract

The coronavirus disease (COVID-19) is a severe, ongoing, novel pandemic that emerged in Wuhan, China, in December 2019. As of January 21, 2021, the virus had infected approximately 100 million people, causing over 2 million deaths. This article analyzed several time series forecasting methods to predict the spread of COVID-19 during the pandemic’s second wave in Italy (the period after October 13, 2020). The autoregressive integrated moving average (ARIMA) model, innovations state space models for exponential smoothing (ETS), the neural network autoregression (NNAR) model, the trigonometric exponential smoothing state space model with Box–Cox transformation, ARMA errors, and trend and seasonal components (TBATS), and all of their feasible hybrid combinations were employed to forecast the number of patients hospitalized with mild symptoms and the number of patients hospitalized in intensive care units (ICU). The data for the period February 21, 2020–October 13, 2020 were extracted from the website of the Italian Ministry of Health (www.salute.gov.it). The results showed that (i) hybrid models were better at capturing the linear, nonlinear, and seasonal pandemic patterns, significantly outperforming the respective single models for both time series, and (ii) the numbers of COVID-19-related hospitalizations of patients with mild symptoms and in the ICU were projected to increase rapidly from October 2020 to mid-November 2020. According to the estimates, the necessary ordinary and intensive care beds were expected to double in 10 days and to triple in approximately 20 days. These predictions were consistent with the observed trend, suggesting that hybrid models may facilitate public health authorities’ decision-making, especially in the short term.


Introduction

The coronavirus disease (COVID-19) is a severe, ongoing, novel pandemic that officially emerged in Wuhan, China, in December 2019. As of January 21, 2021, it had affected 219 countries and territories with almost 100 million cases and over 2 million deaths [77]. At the time of writing, the countries most significantly affected include both advanced and developing countries, such as Brazil, France, India, Italy, Russia, Spain, the UK, and the US. From October to December 2020, several European countries, including Italy, saw a worrisome surge of COVID-19 infections.

Italy was the first European country to be severely impacted by COVID-19, and it remained one of the main epicenters of the pandemic for approximately 2 months, i.e., from mid-February 2020 to mid-April 2020. After that first peak, the pandemic curve progressively declined until mid-August 2020. However, the spread of infection accelerated again in late summer and early fall of 2020, and this second surge was still ongoing at the time of writing. As of January 21, 2021, Italy had recorded 2,428,221 cases and 84,202 deaths.

The likelihood of new and consecutive COVID-19 waves is real. Studying the pandemic’s trajectory is therefore imperative for planning the purchase of medical devices and healthcare facilities. It is equally important for managing health centers, clinics, hospitals, and ordinary and intensive care beds.

Thus, the first goal of this paper is to provide short-term and mid-term forecasts of the number of patients hospitalized with COVID-19 during the second wave of infections, i.e., during the period after October 13, 2020. COVID-19-related hospitalization trends offer a clear picture of the overall pressure on the national healthcare system. Moreover, models fitted to hospitalized patients are usually more reliable and accurate than models fitted to confirmed cases [30].Footnote 1 The paper’s second goal is to compare the accuracy of several statistical forecasting methods.

In particular, I estimated four time series forecasting techniques and all of their feasible hybrid combinations: the autoregressive integrated moving average (ARIMA) model, innovations state space models for exponential smoothing (ETS), the neural network autoregression (NNAR) model, and the trigonometric exponential smoothing state space model with Box–Cox transformation, ARMA errors, and trend and seasonal components (TBATS).

The rest of this paper is organized as follows. “Related literature” reviews the relevant literature, while “Materials and methods” presents the data used in the analysis and discusses the empirical strategy. “Evaluation metrics” presents the metrics used to measure the performance of the models. “Results and discussion” discusses the main findings and policy implications. Finally, “Conclusions” offers some concluding remarks.

Related literature

Since the beginning of 2020, an increasing body of literature has employed various approaches to forecast the spread of the COVID-19 outbreak [9, 22, 26, 58, 73, 78, 79, 83, 85]. The most frequently used approaches were ARIMA models [3, 8, 14, 62], ETS models [13, 44], artificial neural network (ANN) models [55, 75], TBATS models [68, 71], models derived from the basic susceptible–infected–removed (SIR) approach [22, 26, 58, 78, 85], and hybrid models [15, 29, 68, 69]. The implementation and comparison of these approaches, with the exception of mechanistic–statistical models (such as SIR), are the core of this paper.

Ala’raj et al. [2] utilized a dynamic hybrid model based on a modified susceptible–exposed–infected–recovered–dead (SEIRD) model with ARIMA corrections of the residuals. They provided long-term forecasts for infected, recovered, and deceased people using a US COVID-19 dataset, and their model had a remarkable ability to make accurate predictions. Using a nonseasonal ARIMA model, Ceylan [14] made short-term predictions of cumulative confirmed cases after April 15, 2020, for France, Italy, and Spain. The forecasts showed low mean absolute percentage errors (MAPE) and seemed to be sufficiently reliable and suitable for the short-term epidemiological analysis of COVID-19 trends.

Hasan [29] proposed a hybrid model that incorporates ensemble empirical mode decomposition (EEMD) and neural networks to forecast real-time global COVID-19 cases for the period after May 18, 2020. The analysis showed that the ANN-EEMD approach was quite promising and outperformed traditional statistical methods, such as regression analysis and moving average.

Ribeiro et al. [65] provided short-term estimates of COVID-19 cumulative confirmed cases in Brazil by employing multiple approaches and selecting several models, such as ARIMA, cubist regression (CUBIST), random forest (RF), ridge regression (RIDGE), support vector regression (SVR), and stacking-ensemble learning (SEL). The models’ reliabilities were evaluated based on the improvement index, mean absolute error (MAE), and symmetric MAPE criteria. The analysis demonstrated that SVR and SEL performed best, but all models exhibited good forecasting performances.

Using ARIMA, TBATS, their statistical hybrid, and a mechanistic mathematical model combining the best of the previous models, Sardar et al. [68] forecast daily COVID-19 confirmed cases across India and in five states (Delhi, Gujarat, Maharashtra, Punjab, and Tamil Nadu) from May 17, 2020, to May 31, 2020. The ensemble model showed the best predictive skill. It suggested that daily COVID-19 cases would increase significantly in the forecast window. It also suggested that lockdown measures would be more effective in states with the highest percentages of symptomatic infection.

Wieczorek et al. [75] implemented deep neural network architectures, trained with the Nesterov-accelerated adaptive moment estimation (Nadam) optimizer, to forecast cumulative confirmed COVID-19 cases in several countries and regions. The predictions, which referred to different time windows, were highly accurate (approximately 87.7% for most regions and, in some cases, almost 100%).

Talkhi et al. [71] attempted to forecast the number of COVID-19 confirmed infections and deaths in Iran between August 15, 2020, and September 14, 2020, using several single and hybrid models. The extreme learning machine (ELM) and hybrid ARIMA–NNAR models were the most suitable for forecasting confirmed cases, while the Holt–Winters (HW) approach outperformed the others in predicting death cases.

Finally, Table 1 reports 30 international studies that utilized single or hybrid ARIMA, ETS, neural network, and TBATS models to forecast the transmission patterns of COVID-19 across the world.

Materials and methods

The data used in this article comprise 236 daily observations of the real-time number of COVID-19 patients hospitalized with mild symptoms and of patients assigned to the ICU in Italy, from February 21, 2020, to October 13, 2020. I extracted the data from the official website of the Italian Ministry of Health (www.salute.gov.it). The COVID-19-related hospitalization trends are shown in Fig. 1.

Fig. 1 Patients hospitalized with mild symptoms and in the ICU from February 21, 2020, to October 13, 2020. Source: Italian Ministry of Health [43]

The data show that the numbers of COVID-19 patients hospitalized with mild symptoms and assigned to the ICU reached an initial peak on April 4, 2020. They then declined until mid-August before accelerating again from the end of September 2020 to mid-October 2020. Relying on a single model can lead to unreliable forecasts [68]. I therefore computed the forecasts using different statistical techniques and their combinations. Specifically, I examined linear ARIMA models, ETS models, linear and nonlinear NNAR models, TBATS models, and their feasible hybrid combinations.

ARIMA models, which were first proposed by Box and Jenkins [11], are among the most widely used frameworks for predicting epidemic, pandemic, and disease time series [66, 84]. Although they model only linear relationships, they can capture both the nonseasonal and the seasonal patterns of a series.

The nonseasonal component of an ARIMA model has three parts:

(i) the autoregressive (AR) process forecasts a time series using a linear combination of its past values;

(ii) differencing (I) makes the time series stationary by removing (or mitigating) trend or seasonality, if any; and

(iii) the moving average (MA) process forecasts future values using a linear combination of past forecast errors.

The seasonal component has the same structure but uses backshifts of the seasonal period, i.e., it adds seasonal parameters to the AR, I, and MA components. This allows the model to handle most seasonal patterns in real-world data. The final seasonal model is therefore denoted \(\text{ARIMA}(p,d,q)(P,D,Q)_m\), where m is the seasonal period. The lowercase and uppercase letters indicate the orders of the nonseasonal and seasonal AR, differencing, and MA components, respectively [34, Sect. 8].
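The differencing step (the I in ARIMA) can be illustrated with a short sketch. This Python code is not the forecast package’s implementation, and the series are made up. It applies ordinary differencing d times and seasonal differencing at lag m. With d = 2, the order selected for both series in this paper, a quadratic trend becomes constant. With m = 7, a repeating weekly pattern disappears.

```python
def difference(series, lag=1, times=1):
    """Apply the lag-`lag` difference operator `times` times.

    lag=1 gives ordinary differencing (the d in ARIMA(p,d,q));
    lag=m gives seasonal differencing (the D in (P,D,Q)_m).
    Each pass shortens the series by `lag` observations.
    """
    out = list(series)
    for _ in range(times):
        out = [out[i] - out[i - lag] for i in range(lag, len(out))]
    return out


# Made-up series with a quadratic trend: y_t = t^2.
y = [t * t for t in range(10)]
print(difference(y, times=1))  # [1, 3, 5, 7, 9, 11, 13, 15, 17]: still trending
print(difference(y, times=2))  # [2, 2, 2, 2, 2, 2, 2, 2]: trend removed

# Made-up daily series with a repeating weekly pattern (m = 7).
weekly = [10, 12, 15, 14, 13, 8, 6] * 3
print(difference(weekly, lag=7))  # fourteen zeros: weekly pattern removed
```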

Exponential smoothing methods, on which the ETS class of models is based, were introduced in the late 1950s [12, 31, 76]. They accommodate different combinations of trend and seasonal components. The basic exponential smoothing model consists of two equations: a forecast equation and a smoothing equation. Recasting them as an innovations state space model, with either additive (A) or multiplicative (M) errors, yields an observation (measurement) equation and a transition (state) equation.Footnote 2 The first equation describes the observed data. The second describes the behavior of the unobserved states, namely the level, trend, and seasonality. The trend and seasonal components may be none (N), additive (A), additive damped (Ad),Footnote 3 or multiplicative (M), resulting in a wide range of model combinations. Each model is identified by a three-character string (Z,Z,Z): the first letter gives the error type, the second the trend type, and the third the seasonal type. These models produce forecasts as weighted averages of past observations. The weights decay exponentially, so more recent observations receive more weight [34, Sect. 7; 40].
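The exponentially decaying weights can be made concrete with the simplest case, ETS(A,N,N) (simple exponential smoothing). In that model, the forecast places weight \(\alpha(1-\alpha)^j\) on the observation j periods back. The sketch below uses an arbitrary α = 0.5, which is not a value estimated in this paper. Whatever weight is not assigned to the listed observations falls on the initial level.

```python
def ses_weights(alpha, n):
    """Weights that simple exponential smoothing, i.e. ETS(A,N,N), places on the
    n most recent observations y_T, y_{T-1}, ..., y_{T-n+1}.

    The weight on y_{T-j} is alpha * (1 - alpha)**j, so it decays geometrically
    with the age j of the observation; the leftover weight (1 - alpha)**n falls
    on the initial level.
    """
    if not 0 <= alpha <= 1:
        raise ValueError("alpha must lie in [0, 1]")
    return [alpha * (1 - alpha) ** j for j in range(n)]


w = ses_weights(0.5, 5)
print(w)         # [0.5, 0.25, 0.125, 0.0625, 0.03125]
print(sum(w))    # 0.96875
print(0.5 ** 5)  # 0.03125, the weight left for the initial level
```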

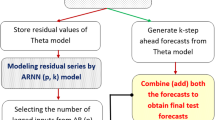

NNAR models can be viewed as networks of neurons (nodes) that can represent complex nonlinear relationships and functional forms. In the most basic neural network, the neurons are organized in two layers: (i) the bottom layer contains the inputs, i.e., lagged values of the original time series, and (ii) the top layer contains the outputs, i.e., the predictions. Such a network is equivalent to a linear regression. It becomes nonlinear only when an intermediate layer of “hidden neurons” is included. For seasonal data, NNAR models are denoted \(\text{NNAR}(p,P,k)_m\). Here, m is the seasonal period, p is the number of nonseasonal lagged inputs, P is the number of seasonal lagged inputs, and k is the number of neurons in the hidden layer [34, Sect. 11.3].
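The following sketch shows how an \(\text{NNAR}(p,P,k)_m\) network turns lagged values into a one-step prediction. The input lags are the nonseasonal lags 1…p plus the seasonal lags m, 2m, …, Pm. In this sketch, a lag that appears in both sets is used once, so NNAR(7,1,4)7 has seven inputs.

The hidden layer applies logistic activations and the output node is linear. The weights are hypothetical random numbers, not a trained network. The sketch also omits input scaling and training: nnetar() fits and averages several networks from different random starting weights, which this sketch does not reproduce.

```python
import math
import random


def nnar_lags(p, P, m):
    """Lags used as inputs by an NNAR(p,P,k)_m model in this sketch:
    nonseasonal lags 1..p plus seasonal lags m, 2m, ..., Pm (duplicates dropped)."""
    return sorted(set(range(1, p + 1)) | {m * j for j in range(1, P + 1)})


def nnar_forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Single hidden layer with logistic (sigmoid) activations and a linear output."""
    hidden = []
    for weights, bias in zip(hidden_w, hidden_b):
        z = sum(w * x for w, x in zip(weights, inputs)) + bias
        hidden.append(1.0 / (1.0 + math.exp(-z)))
    return sum(w * h for w, h in zip(out_w, hidden)) + out_b


p, P, m = 7, 1, 7               # orders of the NNAR(7,1,4)_7 model reported in Table 2
k = round((p + P + 1) / 2)      # 4.5 rounds to 4 (Python rounds half to even)
lags = nnar_lags(p, P, m)       # [1, ..., 7]: lag 7 is both nonseasonal and seasonal

y = [float(v) for v in range(1, 31)]    # made-up series, not the paper's data
x = [y[len(y) - lag] for lag in lags]   # inputs for predicting the next value

random.seed(1)                          # hypothetical, untrained weights
hidden_w = [[random.uniform(-0.1, 0.1) for _ in x] for _ in range(k)]
hidden_b = [0.0] * k
out_w = [random.uniform(-1, 1) for _ in range(k)]
print(k, lags, nnar_forward(x, hidden_w, hidden_b, out_w, out_b=0.0))
```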

Finally, TBATS models combine several approaches:

- trigonometric terms for modeling seasonality;
- a Box–Cox transformation [10] for stabilizing the variance;
- ARMA errors for capturing short-term dynamics;
- damped trends (if any); and
- seasonal components.

TBATS models therefore have several useful properties: (i) they handle very complex seasonal patterns well, for example, daily, weekly, and annual patterns occurring simultaneously; (ii) they can accommodate nonlinear patterns in a time series; and (iii) they can account for autocorrelation in the residuals through the ARMA error process [34, Sect. 11.1; 71].
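The trigonometric seasonality of TBATS can be sketched by its deterministic core. De Livera et al. [17] give the full recursions. Each harmonic j keeps a pair of seasonal states that is rotated by \(\lambda_j = 2\pi j/m\) at every step. The seasonal effect is the sum of the first state of every pair.

The sketch omits the smoothing terms driven by the ARMA errors, and its initial states are made up. With m = 7 and K = 2 harmonics (the \(\{<7,2>\}\) part of the TBATS model reported for patients with mild symptoms), the pattern repeats every 7 days. It needs only 4 seasonal states, instead of the 6 required by an unrestricted weekly pattern.

```python
import math


def trig_seasonal_path(m, s0, s0_star, steps):
    """Deterministic core of TBATS-style trigonometric seasonality.

    Harmonic j (j = 1..K, K = len(s0)) keeps a pair of states (s_j, s*_j) that is
    rotated by the angle lambda_j = 2*pi*j/m at every step. The seasonal effect
    at each time is the sum of the first state of every pair. The stochastic
    updates driven by the ARMA errors d_t are omitted (d_t = 0).
    """
    s, s_star = list(s0), list(s0_star)
    path = []
    for _ in range(steps):
        path.append(sum(s))
        new_s, new_star = [], []
        for j, (a, b) in enumerate(zip(s, s_star), start=1):
            lam = 2 * math.pi * j / m
            new_s.append(a * math.cos(lam) + b * math.sin(lam))
            new_star.append(-a * math.sin(lam) + b * math.cos(lam))
        s, s_star = new_s, new_star
    return path


# Weekly period with two harmonics; the initial states are made up.
path = trig_seasonal_path(m=7, s0=[1.0, 0.3], s0_star=[0.5, -0.2], steps=21)
print([round(v, 3) for v in path[:7]])  # one weekly cycle; it repeats every 7 steps
```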

Combining different time series forecasting methods increases the chance of capturing seasonal, linear, and nonlinear patterns [60, 82]. It is especially useful for predicting real-world phenomena with complex dynamics, such as the COVID-19 pandemic [7]. As has been well established since the seminal work of Bates and Granger [6], combining techniques with distinct properties can improve performance and forecast accuracy.Footnote 4 The models were estimated using the following procedures:

-

ARIMA models were selected by applying the “auto.arima()” function included in the “forecast” package (in the R environment) developed by Hyndman and Khandakar [35]. This function follows sequential steps to identify the best model. It determines the order p of the autoregressive (AR) process, the order d of differencing (I), the order q of the MA process, and the orders P, D, and Q of the seasonal component. It combines unit root testsFootnote 5 with minimization of the bias-corrected Akaike’s information criterion (AICc)Footnote 6 and maximum likelihood estimation (MLE). The unit root tests determined the order of differencing, the AICc determined the orders of the seasonal and nonseasonal AR and MA processes, and MLE estimated the values of their parameters;

-

ETS models were identified by using the “ets()” function included in the “forecast” package (in the R environment) developed by Hyndman et al. [39].Footnote 7 In particular, I applied the Box–Cox [10] transformation to the data before estimating the model and then used the AICc to determine whether the trend was damped. The final three-character model specification (Z,Z,Z) was selected automatically;

-

NNAR models were identified via the “nnetar()” function included in the “forecast” package (in the R environment) written by Hyndman [33].Footnote 8 I proceeded as follows: (i) the Box–Cox transformation [10] was applied to the data before estimating the model; (ii) the optimal number p of nonseasonal lags for the AR(p) process was obtained by using the AICc metric; (iii) the number P of seasonal lags for the AR process was set to 1;Footnote 9 and (iv) the number of hidden neurons was set by the formula \(k=\frac{p+P+1}{2}\), rounded to the nearest integer [34, Sect. 11.3];

-

TBATS models were identified using the “tbats()” function included in the “forecast” package (in the R environment), as described in De Livera et al. [17]. The optimal Box–Cox transformation parameter, ARMA(p,q) order, damping parameter, and number of Fourier terms were selected using Akaike’s information criterion (AIC);Footnote 10

-

Hybrid models were identified via the “hybridModel()” function included in the “forecastHybrid” package (in the R environment) developed by Shaub and Ellis.Footnote 11 The individual time series forecasting methods were combined as follows: (i) the Box–Cox power transformation [10] was applied to the inputs to make the normality assumption more plausible; and (ii) the individual models were combined using both equal weights and weights based on cross-validated errors (“cv.errors”), which give greater weight to the models that perform relatively better. Because no weighting procedure has been established as best, I took a pragmatic approach and chose the one that performed better. Specifically, I assessed the overall goodness-of-fit of all models with four common forecast accuracy measures: MAE, MAPE, mean absolute scaled error (MASE), and root mean square error (RMSE).
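The two weighting options for the hybrid models can be sketched as follows. Equal weights simply average the single-model forecasts. The error-based option shown here weights each model in proportion to the inverse of its error. This rule is an assumed, simplified stand-in: hybridModel() computes its “cv.errors” weights internally, and its exact formula may differ. The model forecasts and error values are hypothetical.

```python
def combine_forecasts(forecasts, errors=None):
    """Combine point forecasts from several single models into one hybrid forecast.

    forecasts: dict mapping model name -> list of point forecasts (same horizon).
    errors:    optional dict mapping model name -> a (cross-validated) error
               measure; if given, each model's weight is proportional to
               1 / error (an assumed scheme), otherwise weights are equal.
    """
    names = list(forecasts)
    if errors is None:
        weights = {name: 1.0 / len(names) for name in names}
    else:
        inverse = {name: 1.0 / errors[name] for name in names}
        total = sum(inverse.values())
        weights = {name: inverse[name] / total for name in names}
    horizon = len(forecasts[names[0]])
    return [
        sum(weights[name] * forecasts[name][h] for name in names)
        for h in range(horizon)
    ]


# Hypothetical two-step forecasts from two single models (not the paper's values).
fc = {"NNAR": [100.0, 120.0], "TBATS": [110.0, 140.0]}
print(combine_forecasts(fc))                                       # equal weights
print(combine_forecasts(fc, errors={"NNAR": 1.0, "TBATS": 3.0}))   # inverse-error weights
```

With equal weights the hybrid is the plain average. With inverse-error weights the model with the smaller error (here, NNAR) pulls the combined forecast toward its own values.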

The estimated basic (nonseasonal) ARIMA equation was the following [16]:

\[ \Delta^{d} y_t = \sum_{i=1}^{p} \phi_i \, \Delta^{d} y_{t-i} + \sum_{j=1}^{q} \gamma_j \, \varepsilon_{t-j} + \varepsilon_t \tag{1} \]

where:

- \(\Delta^{d}\) is the difference operator applied d times (d = 2, i.e., second differences, in the selected models);
- \(y_t\) is the value of the series at time t;
- p is the lag order of the AR process, and \(\phi_i\) are the AR coefficients;
- q is the order of the MA process, and \(\gamma_j\) are the MA coefficients; and
- \(\varepsilon_t\) is the error at time t.
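A one-step-ahead forecast from an ARIMA(p,2,q) model of the form \(\Delta^2 y_t = \sum_i \phi_i \Delta^2 y_{t-i} + \sum_j \gamma_j \varepsilon_{t-j} + \varepsilon_t\) can be computed in two stages. First, forecast the next second difference, setting the unknown future error to its expected value of zero. Second, undo the differencing, using \(y_{T+1} = \Delta^2 y_{T+1} + 2y_T - y_{T-1}\). The series and coefficients below are hypothetical, not the estimates in Table 2.

```python
def arima_d2_one_step(y, eps, phi, gamma):
    """One-step-ahead point forecast from a nonseasonal ARIMA(p,2,q) model of the form
    Delta^2 y_t = sum_i phi_i Delta^2 y_{t-i} + sum_j gamma_j eps_{t-j} + eps_t.

    y:     observed series, oldest first
    eps:   past one-step errors aligned with y (only the last q are used)
    phi:   AR coefficients [phi_1, ..., phi_p]
    gamma: MA coefficients [gamma_1, ..., gamma_q]
    """
    # Second differences: Delta^2 y_t = y_t - 2 y_{t-1} + y_{t-2}
    d2 = [y[t] - 2 * y[t - 1] + y[t - 2] for t in range(2, len(y))]
    ar = sum(phi[i] * d2[-1 - i] for i in range(len(phi)))
    ma = sum(gamma[j] * eps[-1 - j] for j in range(len(gamma)))
    d2_next = ar + ma  # the future error eps_{T+1} has expectation zero
    # Undo the differencing: y_{T+1} = Delta^2 y_{T+1} + 2 y_T - y_{T-1}
    return d2_next + 2 * y[-1] - y[-2]


# Made-up series whose second differences are all 1; hypothetical coefficients.
y = [1.0, 3.0, 6.0, 10.0, 15.0]
print(arima_d2_one_step(y, eps=[0.0] * 5, phi=[0.5], gamma=[]))      # 20.5
print(arima_d2_one_step(y, eps=[0.0, 0.0, 0.0, 0.0, 0.5], phi=[0.5], gamma=[0.4]))
```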

The estimated equations for the basic ETS(A,N,N) model with additive errors were the following [34, Sect. 7]:

\[ \hat{y}_{t+1|t} = l_t \tag{2} \]
\[ l_t = l_{t-1} + \alpha \left( y_t - l_{t-1} \right) \tag{3} \]

where:

- \(l_t\) is the estimated level at time t;
- \(\hat{y}_{t+1|t}\) is the one-step-ahead forecast for time t+1, which is a weighted average of all the data observed up to time t;
- \(0\le \alpha \le 1\) is the smoothing parameter, which controls the rate at which the weights decrease; and
- \(y_t - l_{t-1}\) is the error at time t.

Hence, each observation is the sum of the previous level and an error. Each type of error, additive or multiplicative, implies a specific probability model for the data. For a model with additive errors, as in this case, the errors \(\varepsilon_t = y_t - l_{t-1}\) are assumed to be independent and normally distributed with mean zero and constant variance. Thus, Equations (2) and (3) can be rewritten, respectively, as follows:

\[ y_t = l_{t-1} + \varepsilon_t \tag{4} \]
\[ l_t = l_{t-1} + \alpha \varepsilon_t \tag{5} \]

Equations (4) and (5) form the innovations state space model that underlies the exponential smoothing method.
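To see the state space form at work, the sketch below filters a series through ETS(A,N,N) in innovations form. At each time t it records the fitted value \(l_{t-1}\) and the error \(\varepsilon_t = y_t - l_{t-1}\), then updates the level with \(l_t = l_{t-1} + \alpha\varepsilon_t\). The observations, α, and the initial level are made up. The final level is the point forecast for every future horizon. It also equals the weighted average of past observations with weights \(\alpha(1-\alpha)^j\).

```python
def ets_ann(y, alpha, level0):
    """Run ETS(A,N,N) in innovations (error-correction) form.

    Measurement: y_t = l_{t-1} + e_t
    Transition:  l_t = l_{t-1} + alpha * e_t
    Returns the one-step fitted values l_{t-1}, the errors e_t, and the final
    level, which is the point forecast for every future horizon.
    """
    level = level0
    fitted, errors = [], []
    for obs in y:
        fitted.append(level)
        e = obs - level
        errors.append(e)
        level = level + alpha * e
    return fitted, errors, level


y = [10.0, 12.0, 11.0]  # made-up observations
fitted, errors, forecast = ets_ann(y, alpha=0.5, level0=10.0)
print(fitted, errors, forecast)  # [10.0, 10.0, 11.0] [0.0, 2.0, 0.0] 11.0
```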

The basic form of the neural network autoregression equation was the following [34, Sect. 11.3]:

\[ y_t = f\left(\mathbf{y}_{t-1}\right) + \varepsilon_t \tag{6} \]

where:

- \(y_t\) is the value of the series at time t;
- \(\mathbf{y}_{t-1} = \left(y_{t-1}, y_{t-2}, \dots, y_{t-n}\right)'\) is a vector containing the n lagged values of the observed data used as inputs;
- f is a neural network with k hidden neurons in a single layer; and
- \(\varepsilon_t\) is the error at time t.

A simple graphical example of a nonlinear neural network is shown in Fig. 2.

Finally, the basic (measurement) equation of the TBATS model took the following form [17]:

\[ y_t^{(\omega)} = l_{t-1} + \phi\, b_{t-1} + \sum_{i=1}^{T} s_{t-m_i}^{(i)} + d_t \tag{7} \]

where:

- \(y_t^{(\omega)}\) is the observation \(y_t\) at time t after a Box–Cox transformation with parameter ω;
- \(l_t\) is the local level;
- \(\phi\) is the damping parameter of the trend;
- \(b_t\) is the short-run trend, which the damping pulls toward the long-run trend b;
- T is the number of seasonal patterns;
- \(s_t^{(i)}\) is the ith seasonal component;Footnote 12
- \(m_i\) is the period of the ith seasonal pattern; and
- \(d_t\) is an ARMA(p,q) process for the residuals.
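The Box–Cox transformation \(y^{(\omega)}\), also applied before fitting the ETS, NNAR, and hybrid models, can be sketched as follows. It equals \((y^{\omega}-1)/\omega\) for \(\omega \neq 0\) and \(\log y\) for \(\omega = 0\). Forecasts are mapped back to the original scale with the inverse transformation. The sketch handles positive data only. It uses the parameter 0.428 reported for the TBATS model and a made-up patient count.

```python
import math


def box_cox(y, lam):
    """Box-Cox transform of a positive value y with parameter lam (the omega above)."""
    if y <= 0:
        raise ValueError("this sketch handles positive data only")
    return math.log(y) if lam == 0 else (y ** lam - 1) / lam


def inv_box_cox(z, lam):
    """Inverse transform, used to map forecasts back to the original scale."""
    return math.exp(z) if lam == 0 else (lam * z + 1) ** (1 / lam)


omega = 0.428                    # Box-Cox parameter reported for the TBATS model
z = box_cox(1000.0, omega)       # made-up count of hospitalized patients
print(z, inv_box_cox(z, omega))  # the back-transform recovers approximately 1000.0
```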

Evaluation metrics

The main metrics used to compare the performances of the single and hybrid prediction models were MAE, MAPE, MASE, and RMSE, calculated as follows (Eqs. 8–11):

\[ \text{MAE} = \frac{1}{n}\sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| \tag{8} \]
\[ \text{MAPE} = \frac{100}{n}\sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| \tag{9} \]
\[ \text{MASE} = \frac{\frac{1}{n}\sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|}{\frac{1}{n-1}\sum_{i=2}^{n} \left| y_i - y_{i-1} \right|} \tag{10} \]
\[ \text{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2} \tag{11} \]

where n is the number of observations, \(y_i\) denotes the actual values, and \(\hat{y}_i\) denotes the predicted values.

MAE and RMSE are both scale-dependent measures, but they are based on different errors: MAE on absolute errors and RMSE on squared errors. MAE is easier to interpret. Moreover, minimizing MAE leads to forecasts of the median, whereas minimizing RMSE leads to forecasts of the mean.

MAPE is probably the most widely employed error measure [27, 47]. Unlike MAE and RMSE, it is based on percentage errors and is therefore unit-free. However, it has two well-known drawbacks. First, it is infinite or undefined whenever an actual value equals 0. Second, it puts a heavier penalty on negative errors (i.e., when predicted values are higher than actual values) than on positive errors.

Finally, MASE, which was proposed by Hyndman and Koehler [36], is a scale-free error metric and probably the most versatile and reliable measure of forecast accuracy. Unlike MAPE, it does not give infinite or undefined values (unless all observed values are equal). It can also be used to compare forecast accuracy on a single time series and across multiple time series.Footnote 13

Since each metric has specific strengths and weaknesses, I took the prudent approach of evaluating all four.
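The four metrics (MAE, MAPE, MASE, and RMSE) can be computed with a few lines of Python. In this sketch, MASE is scaled by the mean absolute error of the one-step naive (no-change) forecast on the same series. That is an assumption about the scaling: software defaults may differ, for example for seasonal series. The values are made up, not taken from the paper’s tables.

```python
import math


def accuracy(actual, predicted):
    """MAE, MAPE, MASE and RMSE for paired lists of equal length.

    MASE is scaled by the mean absolute error of the one-step naive (no-change)
    forecast on the same series; MAPE is undefined if any actual value is 0.
    """
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mape = 100 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    naive_mae = sum(abs(actual[i] - actual[i - 1]) for i in range(1, n)) / (n - 1)
    mase = mae / naive_mae
    return {"MAE": mae, "MAPE": mape, "MASE": mase, "RMSE": rmse}


# Made-up values, not taken from the paper's tables.
actual = [100.0, 110.0, 120.0, 130.0]
predicted = [102.0, 108.0, 123.0, 130.0]
print(accuracy(actual, predicted))
```

Here MASE = 1.75 / 10 = 0.175. Values below 1 mean that the model beats the naive benchmark, which is how MASE is read in “Results and discussion.”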

Results and discussion

Table 2 reports the best selected parameters for the single models,Footnote 14 while Tables 3 and 4 report the forecast accuracy measures for the single and hybrid models.Footnote 15

For patients hospitalized with mild symptoms (Table 2), the optimal single models were:

- the seasonal \(\text{ARIMA}(1,2,3)(0,0,1)_7\);
- ETS(A,Ad,N);Footnote 16
- \(\text{NNAR}(7,1,4)_7\); and
- TBATS(0.428, {2,2}, 1, {<7,2>}).Footnote 17

For patients hospitalized in the ICU (Table 2), the optimal single models were:

- the seasonal \(\text{ARIMA}(1,2,2)(0,0,1)_7\);
- ETS(A,A,N);Footnote 18
- \(\text{NNAR}(6,1,4)_7\); and
- (T)BATS(0.427, {0,0}, 1, −).Footnote 19

The hybrid models were obtained by combining the optimal single models with equal weights, which proved more suitable than weights based on error values.Footnote 20

In particular, for patients hospitalized with mild symptoms, the most accurate single model was NNAR, followed by seasonal ARIMA. The best hybrid model was NNAR–TBATS, followed by ARIMA–NNAR and ARIMA–NNAR–TBATS. For patients hospitalized in the ICU, the best single model was NNAR, followed by ARIMA. The best hybrid model was ARIMA–NNAR, followed by ARIMA–ETS–NNAR and ETS–NNAR.Footnote 21

The lag-1 autocorrelation function (ACF1) of the errors indicated that consecutive errors were at most weakly correlated (Tables 2 and 3). The coefficient ranged from −0.07 to 0.19 for patients hospitalized with mild symptoms and from −0.16 to 0.31 for patients in the ICU. The highest values (0.19 and 0.31) were obtained for the TBATS model.Footnote 22
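The lag-1 autocorrelation can be sketched as the standard sample estimate applied to a model’s residuals (one-step errors). The forecast package’s accuracy() function reports this quantity as ACF1. The residual sequences below are made up. Alternating signs give a negative ACF1, while a slow drift gives a positive one. Values near zero, as in the paper’s tables, suggest that little linear structure remains in the errors.

```python
def acf1(x):
    """Sample lag-1 autocorrelation:
    sum_{t>=2} (x_t - mean)(x_{t-1} - mean) / sum_t (x_t - mean)^2."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    numerator = sum(dev[t] * dev[t - 1] for t in range(1, n))
    denominator = sum(d * d for d in dev)
    return numerator / denominator


# Made-up residual sequences.
print(acf1([1.0, -1.0, 1.0, -1.0]))     # -0.75
print(acf1([1.0, 2.0, 3.0, 4.0, 5.0]))  # 0.4
```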

According to Lewis’s [52] interpretation, since MAPE was always well below 10%, all predictive models can be considered highly accurate. Moreover, MASE was much lower than 1 for all models. Therefore, all the proposed forecasting approaches performed considerably better than the (no-change) naïve method, i.e., forecasts with no adjustments for causal factors [36]. This justifies the use of more complex and sophisticated models.

Tables 5 and 6 compare the hybrid models with the respective single models in terms of the MAE, MAPE, MASE, and RMSE metrics. For patients hospitalized with mild symptoms, the hybrid models outperformed the respective single models in 98 out of 112 metric comparisons, i.e., on 87.5% of all forecast accuracy measures. For patients hospitalized in the ICU, they outperformed the respective single models in 81 out of 112 comparisons, i.e., on 72.3% of all measures. In the latter case, however, most losses of efficiency (25 out of 31) were attributable to NNAR.Footnote 23 Thus, the hybrid models generally increased forecast accuracy, but this increase was more evident for patients hospitalized with mild symptoms.
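The text does not spell out how the total of 112 comparisons arises. One reading consistent with it is the following. There are 11 feasible hybrids (6 pairs, 4 triples, and 1 combination of all four single models), which gives 15 models in total. If each hybrid is compared with each of its constituent single models on each of the 4 metrics, the count is (6×2 + 4×3 + 1×4) × 4 = 112. The sketch below makes this assumed scheme explicit and reproduces the reported percentages.

```python
from itertools import combinations

singles = ["ARIMA", "ETS", "NNAR", "TBATS"]
metrics = ["MAE", "MAPE", "MASE", "RMSE"]

# All feasible hybrids: every combination of two, three, or four single models.
hybrids = [c for r in range(2, len(singles) + 1) for c in combinations(singles, r)]

# Assumed comparison scheme: each hybrid vs. each of its constituent single
# models, on each of the four metrics.
comparisons = [(h, s, mt) for h in hybrids for s in h for mt in metrics]

print(len(hybrids), len(singles) + len(hybrids))  # 11 15
print(len(comparisons))                           # 112
print(f"{98 / 112:.1%} {81 / 112:.1%}")           # 87.5% 72.3%
```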

The best hybrid model for patients hospitalized with mild symptoms, NNAR–TBATS, outperformed the single NNAR and TBATS models by 5.63–9.23% on MAE and 5.88–9.95% on RMSE. Meanwhile, the best hybrid model for patients hospitalized in the ICU, ARIMA–NNAR, outperformed the single ARIMA and NNAR models by 1.2–8.67% on MAE and 2.81–11.44% on RMSE. Figures 3 and 4 rank the models for both time series by the MAE and RMSE metrics.

Figures 5 and 6 show the best six models and the remaining nine models, respectively, for patients hospitalized with mild symptoms. Similarly, Figs. 7 and 8 show the best six models and the remaining nine models, respectively, for patients hospitalized in the ICU. In each graph, the light blue area shows the 80% prediction intervals and the dark blue area shows the 95% prediction intervals.Footnote 24 The best single and hybrid models forecast an increase in the number of patients hospitalized with mild symptoms and in the number of patients admitted to the ICU over the next 30 days, i.e., from October 14, 2020, to November 12, 2020. The remaining models predicted the same upward trend.

Specifically, the NNAR–TBATS, ARIMA–NNAR, and ARIMA–NNAR–TBATS models predicted that the number of patients hospitalized with mild symptoms would reach:

(i) 9624, 9397, and 9259, respectively, after 10 days (October 23);

(ii) 18,000, 16,986, and 16,062, respectively, after 20 days (November 2); and

(iii) 25,039, 21,669, and 21,430, respectively, after 30 days (November 12) (Fig. 5).

For patients hospitalized in the ICU, the ARIMA–NNAR, NNAR, and ARIMA–ETS–NNAR models predicted that the required number of intensive care beds would be:

(i) 1175, 972, and 1114, respectively, after 10 days;

(ii) 2164, 1493, and 1915, respectively, after 20 days; and

(iii) 3270, 1985, and 2726, respectively, after 30 days (Fig. 7).Footnote 25

Figures 9, 10, 11, and 12 compare all 15 estimated models with the observed data over the period October 14, 2020, to November 12, 2020. For patients hospitalized with mild symptoms, the NNAR–TBATS model best fit the observed data (Fig. 9). Of the remaining models, predictions from ARIMA–NNAR, ARIMA–NNAR–TBATS, and NNAR most closely approximated the observed data (Figs. 9, 10). For patients hospitalized in the ICU, Fig. 11 shows that the ARIMA–NNAR, NNAR–TBATS, and ARIMA–NNAR–TBATS hybrid models also fit the observed data quite well. In general, the predictions of the estimated models closely matched the observed data, except for ETS, NNAR, and ETS–TBATS, whose trends differed (Figs. 11 and 12). Notably, the models with the lowest loss of efficiency tracked the observed data more closely, which supports the consistency and robustness of the statistical approach.

Thus, a second wave of COVID-19 was predicted for the period following October 13, 2020, with several policy implications for both the national healthcare system and the economy. In particular, the predictions underscored the importance of implementing adequate containment measures and increasing the number of ordinary and intensive care beds. They also pointed to the need to hire additional healthcare personnel and to buy care facilities, protective equipment, and ventilators to fight the infection and reduce deaths.Footnote 26 Meanwhile, the choice of more or less restrictive non-pharmaceutical interventions (NPIs) to tackle the pandemic, such as social distancing, travel bans, the use of face masks, hand hygiene, and bar and restaurant restrictions [20], should be evaluated carefully in light of these measures’ potentially negative economic impacts. Indeed, according to Fitch Ratings’ [24] projections, the first wave of COVID-19 and the consequent massive lockdown measures may already have caused up to a 9.5% contraction in Italy’s 2020 GDP.

While the models with the lowest loss of efficiency fit the observed data well in the forecast window, the predictions should, in general, be treated with caution and used mainly to inform short-term decisions. Pandemic forecasting has raised many doubts over the last year because of several issues that can affect its accuracy and reliability. These include (i) wide prediction intervals and sensitive estimates, especially for long-term forecasts; (ii) inaccurate modeling assumptions; and (iii) the lack of data on, or difficulty in measuring and identifying, the biological features of COVID-19 transmission [30, 42]. These limitations, and the resulting risk of misleading forecasts, may erode public trust in science and thus affect compliance with policies intended to mitigate the spread of COVID-19 [49]. Indeed, the inevitable uncertainty associated with this novel disease and the general failure of long-term and even mid-term forecasts call for a different scientific approach to model predictions. More prudent and balanced communication with the public is crucial if science is to maintain its leading role in human development and policymaking.

Conclusions

This paper attempted to forecast the short-term dynamics of the number of patients hospitalized with COVID-19 in Italy, using real-time data. In particular, it employed both single time series forecasting methods and their feasible hybrid combinations. The results demonstrated that:

(i) the best single models were NNAR and ARIMA for both patients hospitalized with mild symptoms and patients admitted to the ICU;

(ii) the most accurate hybrid models were NNAR–TBATS, ARIMA–NNAR, and ARIMA–NNAR–TBATS for patients hospitalized with mild symptoms, and ARIMA–NNAR, ARIMA–ETS–NNAR, and ETS–NNAR for patients hospitalized in the ICU;

(iii) hybrid models generally outperformed the respective single models by offering more accurate predictions; and

(iv) predictions for the number of patients hospitalized in the ICU generally fit the observed data better than did predictions for patients hospitalized with mild symptoms.

Notably, the best hybrid models always included an NNAR process, consistent with the extensive and successful use of this algorithm in the COVID-19-related literature [56, 57, 71, 72, 81].

Compared to the single models, the hybrid statistical models captured more features of the data structure, and their predictions offered useful policy implications. Consistent with real-time data, the models predicted that the number of patients hospitalized with mild symptoms and admitted to the ICU would grow significantly until mid-November 2020. According to the estimates, the necessary ordinary and intensive care beds were expected to double in 10 days and to triple in approximately 20 days. Thus, since new waves of COVID-19 infections cannot be excluded, it may be necessary to strengthen the national healthcare system by buying protective equipment and hospital beds, managing healthcare facilities, and training healthcare staff.

Although the hybrid models proved sufficiently accurate, statistical methods entail unavoidable uncertainty and bias, which tend to grow over time. One source is public authorities’ progressive implementation of NPIs, such as the closure of public spaces and national or local lockdown measures, which the forecasts cannot adequately incorporate. Combining hybrid models with mechanistic mathematical models may partially overcome these issues by accounting for the effects of lockdowns on epidemiological parameters [68]. Future research could therefore proceed along these lines. Finally, other factors may have affected COVID-19 dynamics, especially as the pandemic progressed. These predictions should therefore be treated with caution and used only to inform short-term decision-making processes.

Availability of data and material

I extracted the data from the official Italian Ministry of Health’s website (www.salute.gov.it).

Code availability

The statistical software and code are described in the manuscript (see “Materials and methods”).

Notes

In fact, the number of infected people is subject to a high degree of uncertainty, especially because the number of infected but asymptomatic people is high [59].

It is valid only for the trend component.

See also, for example, Fallah et al. [21].

A description of the “ets()” function is provided by Hyndman and Athanasopoulos ([34], Sect. 7.6).

A description of the “nnetar()” function is provided by Hyndman and Athanasopoulos ([34], Sect. 11.3).

This was the default value for the seasonal time series in the “nnetar()” function.
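For reference, an NNAR(p,P,k)_m model feeds a single-hidden-layer network with the last p observations plus the values observed at the same point in the previous P seasons. The sketch below only builds that input matrix in plain Python; the values p = 3, P = 1, m = 7 are illustrative, the function names are hypothetical, and the network fitting performed by nnetar() is omitted.

```python
from typing import List, Sequence, Tuple


def nnar_lags(p: int, P: int, m: int) -> List[int]:
    """Input lags of an NNAR(p, P, k)_m model: 1..p plus m, 2m, ..., Pm."""
    lags = set(range(1, p + 1))
    lags.update(m * j for j in range(1, P + 1))
    return sorted(lags)


def lagged_design(y: Sequence[float], lags: List[int]) -> Tuple[List[List[float]], List[float]]:
    """Build one-step-ahead (inputs, target) pairs for training the network."""
    start = max(lags)  # first index at which every required lag is available
    inputs = [[y[t - lag] for lag in lags] for t in range(start, len(y))]
    targets = [y[t] for t in range(start, len(y))]
    return inputs, targets


# Illustrative settings: 3 recent days plus 1 weekly lag (m = 7) on a toy series.
lags = nnar_lags(p=3, P=1, m=7)
X, target = lagged_design(list(range(10)), lags)
print(lags)  # [1, 2, 3, 7]
print(X[0], target[0])  # [6, 5, 4, 0] 7
```

Overlapping lags are counted once, so with p ≥ m the seasonal lag adds no extra input.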

In fact, AICc is not included in the “tbats()” function.
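For reference, the AICc (Sugiura [70]; Hurvich and Tsai [32]) adds a small-sample correction to the AIC. With L the maximized likelihood, k the number of estimated parameters, and n the number of observations:

$$
\mathrm{AIC} = -2\log L + 2k, \qquad \mathrm{AICc} = \mathrm{AIC} + \frac{2k(k+1)}{n-k-1}.
$$

The correction term shrinks toward zero as n grows relative to k, so the two criteria agree in large samples.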

A detailed description of the “forecastHybrid” package is available at https://cran.r-project.org/web/packages/forecastHybrid/forecastHybrid.pdf.

In this regard, it is important to stress that De Livera et al. [17] introduced a specific trigonometric representation of seasonal components based on Fourier series.
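To make the trigonometric representation concrete, the sketch below generates the Fourier pairs sin(2πkt/m) and cos(2πkt/m), k = 1, ..., K, for a seasonal period m. In TBATS the coefficients attached to each pair evolve over time through the model's state space recursions; that part is omitted, so this shows only the deterministic building block. The function name is hypothetical, and m = 7 with K = 2 mirrors the weekly setting reported for the estimated TBATS model.

```python
import math
from typing import List


def fourier_terms(t: int, period: float, K: int) -> List[float]:
    """Return [sin_1, cos_1, ..., sin_K, cos_K] at time index t."""
    terms: List[float] = []
    for k in range(1, K + 1):
        angle = 2.0 * math.pi * k * t / period
        terms.extend([math.sin(angle), math.cos(angle)])
    return terms


# Weekly seasonality in daily data: period 7 with K = 2 Fourier pairs.
rows = [fourier_terms(t, period=7, K=2) for t in range(14)]
print(len(rows[0]))  # 4 regressors per day
```

Using K pairs instead of m − 1 seasonal dummies keeps the number of seasonal parameters small, which is the main appeal of this representation for long or non-integer periods.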

As suggested by Hyndman [38], since the time series covered less than a year and had daily observations, the frequency was set to 7, which allowed for weekly seasonality.

The selected ETS model is also known as the damped trend method with additive errors.
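The recursions behind the additive damped trend method, in the component form given by Hyndman and Athanasopoulos [34], can be sketched as follows. The smoothing parameters and initial states are set by hand for illustration (ets() estimates them from the data), and the function name is hypothetical. Setting phi = 1 removes the damping and gives Holt's linear trend method, which was also selected in this study.

```python
from typing import List, Sequence


def damped_trend_forecast(y: Sequence[float], alpha: float, beta: float,
                          phi: float, level0: float, trend0: float,
                          h: int) -> List[float]:
    """Additive damped trend method; phi = 1 gives Holt's linear trend method.

    beta plays the role of beta* in the component-form equations.
    """
    level, trend = level0, trend0
    for obs in y:
        prev_level = level
        # Level: blend of the new observation and the damped one-step forecast.
        level = alpha * obs + (1 - alpha) * (prev_level + phi * trend)
        # Trend: blend of the latest level change and the damped previous trend.
        trend = beta * (level - prev_level) + (1 - beta) * phi * trend
    forecasts: List[float] = []
    damping = 0.0
    for step in range(1, h + 1):
        damping += phi ** step  # phi + phi^2 + ... + phi^step
        forecasts.append(level + damping * trend)
    return forecasts


y = [10.0, 12.0, 14.0, 16.0]  # hypothetical, perfectly linear series
holt = damped_trend_forecast(y, alpha=0.5, beta=0.3, phi=1.0, level0=8.0, trend0=2.0, h=3)
damped = damped_trend_forecast(y, alpha=0.5, beta=0.3, phi=0.8, level0=8.0, trend0=2.0, h=50)
print(holt)
print(damped[:3], damped[-1])
```

With phi < 1 the forecast increments shrink geometrically, so long-horizon forecasts level off instead of extrapolating the trend indefinitely.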

The estimated TBATS model was derived by applying a Box–Cox transformation with parameter 0.428, an ARMA{2,2} process for modeling errors, a damping parameter of 1 (i.e., no damping), and two Fourier pairs for the weekly seasonal period of 7.
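For reference, the Box–Cox transformation [10] with parameter λ maps a positive value y to (y^λ − 1)/λ, or to log y when λ = 0. The sketch below applies it with the reported λ = 0.428 to a hypothetical count of 1500 patients and inverts it; the function names are illustrative, not taken from any package.

```python
import math


def box_cox(y: float, lam: float) -> float:
    """Box-Cox transform of a positive value."""
    if y <= 0:
        raise ValueError("the Box-Cox transform requires positive values")
    if lam == 0:
        return math.log(y)
    return (y ** lam - 1.0) / lam


def inv_box_cox(z: float, lam: float) -> float:
    """Map a transformed value (e.g. a forecast) back to the original scale."""
    if lam == 0:
        return math.exp(z)
    return (lam * z + 1.0) ** (1.0 / lam)


LAMBDA = 0.428  # Box-Cox parameter reported for the estimated TBATS model
count = 1500.0  # hypothetical number of hospitalized patients
z = box_cox(count, LAMBDA)
print(z, inv_box_cox(z, LAMBDA))
```

A λ between 0 and 1 compresses large values, which helps stabilize variance in a rapidly growing series; note that back-transformed point forecasts generally correspond to medians rather than means on the original scale.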

The selected ETS model is also known as Holt’s linear trend method. In this case, both error and trend were additive.

In this case, the “tbats()” algorithm returned a BATS model, which differs from TBATS only in how it models seasonality: it does not use the Fourier (trigonometric) representation. For simplicity, the notation TBATS was retained in the discussion of hybrid models forecasting patients hospitalized in the ICU. This model employed a Box–Cox transformation with parameter 0.427, no ARMA errors (ARMA{0,0}), and a damping parameter of 1 (i.e., no damping).

The forecast performance measures of the hybrid models combined using the cross-validation procedure are reported in Appendix B (Tables B1 and B2). For patients hospitalized with mild symptoms and patients hospitalized in the ICU, the models derived using equal weights outperformed those obtained with cross-validated errors in 26 and 31 (out of 33) performance accuracy metrics, respectively (Tables B3 and B4).
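The two weighting schemes compared here can be sketched as a weighted average of the component forecasts. Equal weights give each model the same share; for the error-based alternative, this sketch assumes weights proportional to the inverse of each model's cross-validated error, which is one common choice and may not match the forecastHybrid implementation in every detail. Model forecasts and errors below are made up.

```python
from typing import Dict, List


def combine(forecasts: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted average of component forecasts at each horizon (weights normalized)."""
    total = sum(weights[name] for name in forecasts)
    horizon = len(next(iter(forecasts.values())))
    return [sum(weights[name] * forecasts[name][i] for name in forecasts) / total
            for i in range(horizon)]


def equal_weights(names: List[str]) -> Dict[str, float]:
    """Every component model receives the same weight."""
    return {name: 1.0 for name in names}


def inverse_error_weights(errors: Dict[str, float]) -> Dict[str, float]:
    """Assumed scheme: weight proportional to 1 / (cross-validated error)."""
    return {name: 1.0 / err for name, err in errors.items()}


# Hypothetical two-step-ahead forecasts and cross-validated errors.
fc = {"ARIMA": [100.0, 110.0], "NNAR": [120.0, 130.0]}
cv_error = {"ARIMA": 10.0, "NNAR": 30.0}

print(combine(fc, equal_weights(list(fc))))
print(combine(fc, inverse_error_weights(cv_error)))
```

Error-based weights shift the combination toward the model with the smaller past error, whereas equal weights avoid estimating the weights at all, which can be an advantage when the error estimates themselves are noisy.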

In particular, ARIMA–ETS–NNAR and ETS–NNAR models exhibited nearly identical accuracy.

Tables C1 and C2 (Appendix C) also reported the results of the Ljung–Box [54] test for residual autocorrelation using no more than 10 lags, as suggested by Ljung [53] when the sample size is not large. For patients hospitalized with mild symptoms, ARIMA, TBATS, and all hybrid models except ETS–TBATS showed no autocorrelation issues, while single ETS and NNAR exhibited some autocorrelation, especially at lags 8 and 10. For patients hospitalized in the ICU, ARIMA and most of the hybrid models, except ARIMA–TBATS, ETS–TBATS, and ARIMA–ETS–TBATS, showed no particular signs of autocorrelation. In contrast, single ETS, NNAR, and TBATS showed some problems at lags 8 and 10.
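The statistic behind these diagnostics is Q = n(n + 2) Σ r_k²/(n − k), summed over lags k = 1, ..., h, where r_k is the sample autocorrelation of the residuals. A plain-Python sketch with h = 10 follows; the function names are hypothetical, and the residuals are a made-up alternating series. In practice the degrees of freedom of the reference χ² distribution are usually reduced by the number of estimated model parameters (the fitdf argument of R's Box.test()), which this sketch leaves to the caller.

```python
from typing import Sequence


def autocorr(x: Sequence[float], k: int) -> float:
    """Sample autocorrelation of x at lag k."""
    n = len(x)
    mean = sum(x) / n
    denom = sum((v - mean) ** 2 for v in x)
    num = sum((x[t] - mean) * (x[t + k] - mean) for t in range(n - k))
    return num / denom


def ljung_box_q(residuals: Sequence[float], max_lag: int = 10) -> float:
    """Ljung-Box statistic Q = n(n+2) * sum_{k=1}^{h} r_k^2 / (n - k)."""
    n = len(residuals)
    return n * (n + 2) * sum(autocorr(residuals, k) ** 2 / (n - k)
                             for k in range(1, max_lag + 1))


# Hypothetical residuals alternating in sign: strongly autocorrelated.
alternating = [(-1.0) ** t for t in range(20)]
q = ljung_box_q(alternating, max_lag=10)
# 18.31 is the 5% critical value of a chi-squared distribution with 10 df.
print(q, q > 18.31)
```

Large values of Q indicate that the residuals still contain autocorrelation the model has not captured.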

This did not change the meaning of the results because, as confirmed by Table C2 (Appendix C), the residuals of the hybrid models were generally less affected by autocorrelation than were those of NNAR.

The choice of these percentages was consistent with Hyndman and Athanasopoulos ([34], Sect. 3.5).

This is consistent with my recent paper [64], in which I showed that the saturation of the Italian healthcare system played a key role in explaining the variability of COVID-19 mortality. In particular, the saturation of ordinary and intensive care beds explained almost 90% of the variability in COVID-19 mortality across Italian regions at the first peak of the pandemic.

References

Abotaleb, M.S.A.: Predicting COVID-19 cases using some statistical models: an application to the cases reported in China Italy and USA. Acad. J. Appl. Math. Sci. 6(4), 32–40 (2020)

Ala’raj, M., Majdalawieh, M., Nizamuddin, N.: Modeling and forecasting of COVID-19 using a hybrid dynamic model based on SEIRD with ARIMA corrections. Infect. Dis. Model. 6, 98–111 (2021)

Alzahrani, S.I., Aljamaan, I.A., Al-Fakih, E.A.: Forecasting the spread of the COVID-19 pandemic in Saudi Arabia using ARIMA prediction model under current public health interventions. J. Infect. Public Health 13(7), 914–919 (2020)

Aslam, M.: Using the Kalman filter with ARIMA for the COVID-19 pandemic dataset of Pakistan. Data Brief 31, 105854 (2020)

Awan, T.M., Aslam, F.: Prediction of daily COVID-19 cases in European countries using automatic ARIMA model. J. Public Health Res. (2020). https://doi.org/10.4081/jphr.2020.1765

Bates, J.M., Granger, C.W.: The combination of forecasts. J. Oper. Res. Soc. 20(4), 451–468 (1969)

Batista, M.: Estimation of the final size of the COVID-19 epidemic. medRxiv (2020)

Benvenuto, D., Giovanetti, M., Vassallo, L., Angeletti, S., Ciccozzi, M.: Application of the ARIMA model on the COVID-2019 epidemic dataset. Data Brief 29, 105340 (2020)

Bhardwaj, R.: A predictive model for the evolution of COVID-19. Trans. Indian Natl. Acad. Eng. (2020). https://doi.org/10.1007/s41403-020-00130-w

Box, G.E., Cox, D.R.: An analysis of transformations. J. Roy. Stat. Soc.: Ser. B (Methodol.) 26(2), 211–243 (1964)

Box, G., Jenkins, G.: Time series analysis: forecasting and control. Holden-Day, San Francisco (1970)

Brown, R.G.: Statistical forecasting for inventory control. McGraw-Hill, New York (1959)

Cao, J., Jiang, X., Zhao, B.: Mathematical modeling and epidemic prediction of COVID-19 and its significance to epidemic prevention and control measures. J. Biomed. Res. Innov. 1(1), 1–19 (2020)

Ceylan, Z.: Estimation of COVID-19 prevalence in Italy, Spain, and France. Sci. Total Environ. 729, 138817 (2020)

Chakraborty, T., Ghosh, I.: Real-time forecasts and risk assessment of novel coronavirus (COVID-19) cases: a data-driven analysis. Chaos Solitons Fractals 135, 109850 (2020)

Davidson, J.: Econometric theory. Wiley Blackwell, Hoboken (2000)

De Livera, A.M., Hyndman, R.J., Snyder, R.D.: Forecasting time series with complex seasonal patterns using exponential smoothing. J. Am. Stat. Assoc. 106(496), 1513–1527 (2011)

Dhamodharavadhani, S., Rathipriya, R., Chatterjee, J.M.: Covid-19 mortality rate prediction for India using statistical neural network models. Front. Public Health 8, 441 (2020)

Dickey, D.A., Fuller, W.A.: Likelihood ratio statistics for autoregressive time series with a unit root. Econometrica 49(4), 1057–1072 (1981)

ECDC: Guidelines for the implementation of non-pharmaceutical interventions against COVID-19 (2020). Available at: https://www.ecdc.europa.eu/en/publications-data/covid-19-guidelines-non-pharmaceutical-interventions. Accessed 10 Oct 2020

Fallah, S.N., Deo, R.C., Shojafar, M., Conti, M., Shamshirband, S.: Computational intelligence approaches for energy load forecasting in smart energy management grids: state of the art, future challenges, and research directions. Energies 11(3), 596 (2018)

Fanelli, D., Piazza, F.: Analysis and forecast of COVID-19 spreading in China, Italy and France. Chaos Solitons Fractals 134, 109761 (2020)

Fantazzini, D.: Short-term forecasting of the COVID-19 pandemic using Google Trends data: evidence from 158 countries. Appl. Econometr. 59, 33–54 (2020)

Fitch Ratings: Fitch Affirms Italy at 'BBB-'; Outlook Stable (2020). https://www.fitchratings.com/research/sovereigns/fitch-affirms-italy-at-bbb-outlook-stable-10-07-2020. Accessed 17 Oct 2020

Ganiny, S., Nisar, O.: Mathematical modeling and a month ahead forecast of the coronavirus disease 2019 (COVID-19) pandemic: an Indian scenario. Model. Earth Syst. Environ. 7(1), 29–40 (2021)

Giordano, G., Blanchini, F., Bruno, R., Colaneri, P., Di Filippo, A., Di Matteo, A., Colaneri, M.: Modelling the COVID-19 epidemic and implementation of population-wide interventions in Italy. Nat. Med. 26, 855–860 (2020)

Goodwin, P., Lawton, R.: On the asymmetry of the symmetric MAPE. Int. J. Forecast. 15(4), 405–408 (1999)

Gujarati, D.N., Porter, D.C.: Basic econometrics, 5th edn. McGraw Hill Inc., New York (2009)

Hasan, N.: A methodological approach for predicting COVID-19 epidemic using EEMD-ANN Hybrid Model. Intern. Things 11, 100228 (2020)

Holmdahl, I., Buckee, C.: Wrong but useful—what covid-19 epidemiologic models can and cannot tell us. N. Engl. J. Med. 383(4), 303–305 (2020)

Holt, C.E.: Forecasting seasonals and trends by exponentially weighted averages (O.N.R. Memorandum No. 52). Carnegie Institute of Technology, Pittsburgh (1957). Reprint: https://doi.org/10.1016/j.ijforecast.2003.09.015

Hurvich, C.M., Tsai, C.L.: Regression and time series model selection in small samples. Biometrika 76(2), 297–307 (1989)

Hyndman, R.J.: New in forecast 4.0. https://robjhyndman.com/hyndsight/forecast4/ (2012)

Hyndman, R.J., Athanasopoulos, G.: Forecasting: principles and practice, 2nd edn. OTexts, Melbourne (2018). https://otexts.com/fpp2/

Hyndman, R.J., Khandakar, Y.: Automatic time series forecasting: the forecast package for R. J. Stat. Softw. 27(1), 1–22 (2008)

Hyndman, R.J., Koehler, A.B.: Another look at measures of forecast accuracy. Int. J. Forecast. 22(4), 679–688 (2006)

Hyndman, R.J.: Another look at forecast-accuracy metrics for intermittent demand. Foresight 4(4), 43–46 (2006)

Hyndman, R.J.: Forecasting with daily data. https://robjhyndman.com/hyndsight/dailydata/ (13 September 2013)

Hyndman, R.J., Koehler, A.B., Ord, J.K., Snyder, R.D.: Forecasting with exponential smoothing: the state space approach. Springer-Verlag, Berlin (2008)

Hyndman, R.J., Koehler, A.B., Snyder, R.D., Grose, S.: A state space framework for automatic forecasting using exponential smoothing methods. Int. J. Forecast. 18(3), 439–454 (2002)

Ilie, O.D., Cojocariu, R.O., Ciobica, A., Timofte, S.I., Mavroudis, I., Doroftei, B.: Forecasting the spreading of COVID-19 across nine countries from Europe, Asia, and the American continents using the ARIMA models. Microorganisms 8(8), 1158 (2020)

Ioannidis, J.P., Cripps, S., Tanner, M.A.: Forecasting for COVID-19 has failed. Int. J. Forecast. (2020). https://doi.org/10.1016/j.ijforecast.2020.08.004

Italian Ministry of Health (Open Data). http://www.salute.gov.it/ (2020)

Joseph, O., Senyefia, B.A., Cynthia, N.C., Eunice, O.A., Yeboah, B.E.: Covid-19 projections: single forecast model against multi-model ensemble. Int. J. Syst. Sci. Appl. Math. 5(2), 20–26 (2020)

Katoch, R., Sidhu, A.: An application of ARIMA model to forecast the dynamics of COVID-19 epidemic in India. Glob. Bus. Rev. (2021). https://doi.org/10.1177/0972150920988653

Katris, C.: A time series-based statistical approach for outbreak spread forecasting: application of COVID-19 in Greece. Expert Syst. Appl. 166, 114077 (2021)

Kim, S., Kim, H.: A new metric of absolute percentage error for intermittent demand forecasts. Int. J. Forecast. 32(3), 669–679 (2016)

Kırbaş, İ., Sözen, A., Tuncer, A.D., Kazancıoğlu, F.Ş.: Comparative analysis and forecasting of COVID-19 cases in various European countries with ARIMA, NARNN and LSTM approaches. Chaos Solitons Fractals 138, 110015 (2020)

Kreps, S.E., Kriner, D.L.: Model uncertainty, political contestation, and public trust in science: evidence from the COVID-19 pandemic. Sci. Adv. 6(43), eabd4563 (2020)

Kwiatkowski, D., Phillips, P.C.B., Schmidt, P., Shin, Y.: Testing the Null Hypothesis of Stationarity against the Alternative of a Unit Root. J. Econometrics 54(1–3), 159–178 (1992)

Lee, D.H., Kim, Y.S., Koh, Y.Y., Song, K.Y., Chang, I.H.: Forecasting COVID-19 confirmed cases using empirical data analysis in Korea. Healthcare 9(3), 254 (2021)

Lewis, C.D.: Industrial and Business Forecasting Methods: A Practical Guide to Exponential Smoothing and Curve Fitting. Butterworth Scientific, Boston, London (1982)

Ljung, G.M.: Diagnostic testing of univariate time series models. Biometrika 73(3), 725–730 (1986)

Ljung, G.M., Box, G.E.: On a measure of lack of fit in time series models. Biometrika 65(2), 297–303 (1978)

Melin, P., Monica, J.C., Sanchez, D., Castillo, O.: Multiple ensemble neural network models with fuzzy response aggregation for predicting COVID-19 time series: the case of Mexico. Healthcare 8(2), 181 (2020). (Multidisciplinary Digital Publishing Institute)

Moftakhar, L., Seif, M.: The Exponentially Increasing Rate of Patients Infected with COVID-19 in Iran. Arch. Iran. Med. 23(4), 235–238 (2020). https://doi.org/10.34172/aim.2020.03

Namasudra, S., Dhamodharavadhani, S., Rathipriya, R.: Nonlinear neural network based forecasting model for predicting COVID-19 cases. Neural Process. Lett. 1–21 (2021)

Nesteruk, I.: Statistics-based predictions of coronavirus epidemic spreading in Mainland China. Innov. Biosyst. Bioeng. 4(1), 13–18 (2020)

Oran, D.P., Topol, E.J.: Prevalence of asymptomatic SARS-CoV-2 infection: a narrative review. Ann. Intern. Med. 173(5), 362–367 (2020)

Panigrahi, S., Behera, H.S.: A hybrid ETS–ANN model for time series forecasting. Eng. Appl. Artif. Intell. 66, 49–59 (2017)

Papastefanopoulos, V., Linardatos, P., Kotsiantis, S.: Covid-19: A comparison of time series methods to forecast percentage of active cases per population. Appl. Sci. 10(11), 3880 (2020)

Perone, G.: An ARIMA model to forecast the spread and the final size of COVID-2019 epidemic in Italy (No. 20/07). HEDG, c/o Department of Economics, University of York (2020)

Perone, G.: ARIMA forecasting of COVID-19 incidence in Italy, Russia, and the USA. http://arxiv.org/abs/2006.01754 (2020)

Perone, G.: The determinants of COVID-19 case fatality rate (CFR) in the Italian regions and provinces: an analysis of environmental, demographic, and healthcare factors. Sci. Total Environ. 755, 142523 (2021)

Ribeiro, M.H.D.M., da Silva, R.G., Mariani, V.C., dos Santos Coelho, L.: Short-term forecasting COVID-19 cumulative confirmed cases: perspectives for Brazil. Chaos Solitons Fractals 135, 109853 (2020)

Rios, M., Garcia, J.M., Sanchez, J.A., Perez, D.: A statistical analysis of the seasonality in pulmonary tuberculosis. Eur. J. Epidemiol. 16(5), 483–488 (2000)

Sahai, A.K., Rath, N., Sood, V., Singh, M.P.: ARIMA modelling and forecasting of COVID-19 in top five affected countries. Diabetes Metab. Syndr. 14(5), 1419–1427 (2020)

Sardar, T., Nadim, S.S., Rana, S., Chattopadhyay, J.: Assessment of lockdown effect in some states and overall India: A predictive mathematical study on COVID-19 outbreak. Chaos Solitons Fractals 139, 110078 (2020)

Singh, S., Parmar, K.S., Kumar, J., Makkhan, S.J.S.: Development of new hybrid model of discrete wavelet decomposition and autoregressive integrated moving average (ARIMA) models in application to one month forecast the casualties cases of COVID-19. Chaos Solitons Fractals 135, 109866 (2020)

Sugiura, N.: Further analysts of the data by Akaike’s information criterion and the finite corrections. Commun. Stat.-Theory Methods 7(1), 13–26 (1978)

Talkhi, N., Fatemi, N.A., Ataei, Z., Nooghabi, M.J.: Modeling and forecasting number of confirmed and death caused COVID-19 in IRAN: a comparison of time series forecasting methods. Biomed. Signal Process. Control 66, 102494 (2021)

Toğa, G., Atalay, B., Toksari, M.D.: COVID-19 Prevalence Forecasting using Autoregressive Integrated Moving Average (ARIMA) and Artificial Neural Networks (ANN): case of Turkey. J. Infect. Public Health 14(7), 811–816 (2021)

Tuite, A.R., Ng, V., Rees, E., Fisman, D.: Estimation of COVID-19 outbreak size in Italy. Lancet Infect. Dis. 20(5), 537 (2020)

Wang, Y., Xu, C., Yao, S., Zhao, Y., Li, Y., Wang, L., Zhao, X.: Estimating the prevalence and mortality of coronavirus disease 2019 (COVID-19) in the USA, the UK, Russia, and India. Infect. Drug Resist. 13, 3335–3350 (2020)

Wieczorek, M., Siłka, J., Woźniak, M.: Neural network powered COVID-19 spread forecasting model. Chaos Solitons Fractals 140, 110203 (2020)

Winters, P.R.: Forecasting sales by exponentially weighted moving averages. Manage. Sci. 6(3), 324–342 (1960)

Worldometer: https://www.worldometers.info/coronavirus/ (2021)

Wu, J.T., Leung, K., Leung, G.M.: Nowcasting and forecasting the potential domestic and international spread of the 2019-nCoV outbreak originating in Wuhan, China: a modelling study. Lancet 395(10225), 689–697 (2020)

Xu, C., Dong, Y., Yu, X., Wang, H., Cai, Y.: Estimation of reproduction numbers of COVID-19 in typical countries and epidemic trends under different prevention and control scenarios. Front. Med. (2020). https://doi.org/10.1007/s11684-020-0787-4

Yonar, H., Yonar, A., Tekindal, M.A., Tekindal, M.: Modeling and Forecasting for the number of cases of the COVID-19 pandemic with the Curve Estimation Models, the Box–Jenkins and Exponential Smoothing Methods. EJMO 4(2), 160–165 (2020)

Yu, G., Feng, H., Feng, S., Zhao, J., Xu, J.: Forecasting hand-foot-and-mouth disease cases using wavelet-based SARIMA–NNAR hybrid model. PLoS ONE 16(2), e0246673 (2021)

Zhang, G.P.: Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 50, 159–175 (2003)

Zhao, S., Lin, Q., Ran, J., Musa, S.S., Yang, G., Wan, W., Lou, Y., Gao, D., Yang, L., He, D., Wang, M.H.: Preliminary estimation of the basic reproduction number of novel coronavirus (2019-nCoV) in China, from 2019 to 2020: a data-driven analysis in the early phase of the outbreak. Int. J. Infect. Dis. 92, 214–217 (2020)

Zheng, Y.L., Zhang, L.P., Zhang, X.L., Wang, K., Zheng, Y.J.: Forecast model analysis for the morbidity of tuberculosis in Xinjiang, China. PLoS ONE 10(3), e0116832 (2015)

Zhou, T., Liu, Q., Yang, Z., Liao, J., Yang, K., Bai, W., Xin, L., Zhang, W.: Preliminary prediction of the basic reproduction number of the Wuhan novel coronavirus 2019-nCoV. J. Evid. Based Med. 13(1), 2–7 (2020)

Acknowledgements

I thank three anonymous reviewers for their valuable comments and suggestions on earlier drafts of the manuscript.

Funding

Open access funding provided by Università degli studi di Bergamo within the CRUI-CARE Agreement. None.

Ethics declarations

Conflict of interest

The author declares that he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Appendix B

Appendix C

Appendix D

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Perone, G. Comparison of ARIMA, ETS, NNAR, TBATS and hybrid models to forecast the second wave of COVID-19 hospitalizations in Italy. Eur J Health Econ 23, 917–940 (2022). https://doi.org/10.1007/s10198-021-01347-4

DOI: https://doi.org/10.1007/s10198-021-01347-4