Abstract

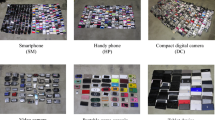

To improve the recycling of waste electrical products, we developed a sensor-based sorting system that uses convolutional neural network (CNN)-based image recognition. We sampled 1571 product models in three categories of waste digital devices (484 smartphones, 580 non-smart mobile phones, and 507 digital cameras) and examined a method that fuses 2D/3D appearance, volume, and mass information into a single input image for VGG16, a well-known CNN. Image preprocessing that placed each device at a fixed orientation and a fixed location improved the identification performance of VGG16. In the three-category classification of 628 untrained devices, accuracy reached 97.7% with the “4ch” image, which is created by inserting a 3D image fused with the device's density information as a fourth channel of the BGR color image. In the individual product model identification of 943 trained devices, which used the output vector from the fully connected layer of VGG16, the correct identification rate was 90.5% with the “BG + 3D” image, which is created by overwriting the third channel of the BGR color image with the density-fused 3D image. This result was obtained by fine-tuning a VGG16 pre-trained on the ImageNet dataset.
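The two composite layouts named in the abstract can be illustrated with a minimal NumPy sketch. It only shows the channel arrangement, under several assumptions that are not taken from the paper: the density-fused 3D image is treated as an already computed single-channel 8-bit array (`fused_3d`), the image size is 224 × 224, the function names are hypothetical, and the input data are random stand-ins for real captures. How the 3D image is derived from shape, volume, and mass is not described in the abstract, so it is not reproduced here.

```python
import numpy as np


def _check_shapes(bgr: np.ndarray, fused_3d: np.ndarray) -> None:
    """Validate that the color image is (H, W, 3) and the 3D image is (H, W)."""
    if bgr.ndim != 3 or bgr.shape[2] != 3:
        raise ValueError("bgr must have shape (H, W, 3)")
    if fused_3d.shape != bgr.shape[:2]:
        raise ValueError("fused_3d must have shape (H, W)")


def make_4ch(bgr: np.ndarray, fused_3d: np.ndarray) -> np.ndarray:
    """'4ch' layout: append the fused 3D image as a fourth channel."""
    _check_shapes(bgr, fused_3d)
    return np.dstack([bgr, fused_3d])  # (H, W, 3) + (H, W) -> (H, W, 4)


def make_bg_plus_3d(bgr: np.ndarray, fused_3d: np.ndarray) -> np.ndarray:
    """'BG + 3D' layout: overwrite the third (R) channel of the BGR image."""
    _check_shapes(bgr, fused_3d)
    out = bgr.copy()          # leave the caller's image untouched
    out[:, :, 2] = fused_3d   # channel order is B=0, G=1, R=2
    return out


# Synthetic stand-ins for a real capture (assumption: 224 x 224, 8-bit).
rng = np.random.default_rng(0)
bgr = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
fused_3d = rng.integers(0, 256, size=(224, 224), dtype=np.uint8)

four_ch = make_4ch(bgr, fused_3d)
bg_3d = make_bg_plus_3d(bgr, fused_3d)
print(four_ch.shape, bg_3d.shape)
```

The “BG + 3D” layout keeps three channels, so it fits the standard three-channel input of an ImageNet-pre-trained VGG16 without changes. A four-channel input would require adapting the network's first convolutional layer; the abstract does not say how this was handled.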

Data availability statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

The present paper is based on results obtained from a project (code: P17001) commissioned by the New Energy and Industrial Technology Development Organization (NEDO), Japan. The test samples were obtained with the cooperation of Daiei Kankyo Co., Ltd., Japan and Re-Tem Co., Ltd., Japan.

About this article

Cite this article

Koyanaka, S., Kobayashi, K. Sensor-based sorting of waste digital devices by CNN-based image recognition using composite images created from mass and 2D/3D appearances. J Mater Cycles Waste Manag 25, 851–862 (2023). https://doi.org/10.1007/s10163-022-01565-9

DOI: https://doi.org/10.1007/s10163-022-01565-9