Abstract

We consider the weighted k-set packing problem, where, given a collection \({\mathcal {S}}\) of sets, each of cardinality at most k, and a positive weight function \(w:{\mathcal {S}}\rightarrow {\mathbb {Q}}_{>0}\), the task is to find a sub-collection of \({\mathcal {S}}\) consisting of pairwise disjoint sets of maximum total weight. As this problem does not permit a polynomial-time \(o(\frac{k}{\log k})\)-approximation unless \(P=NP\) (Hazan et al. in Comput Complex 15:20–39, 2006. https://doi.org/10.1007/s00037-006-0205-6), most previous approaches rely on local search. For twenty years, Berman’s algorithm SquareImp (Berman, in: Scandinavian workshop on algorithm theory, Springer, 2000. https://doi.org/10.1007/3-540-44985-X_19), which yields a polynomial-time \(\frac{k+1}{2}+\epsilon \)-approximation for any fixed \(\epsilon >0\), has remained unchallenged. Only recently, it could be improved to \(\frac{k+1}{2}-\frac{1}{63,700,993}\) by Neuwohner (38th International symposium on theoretical aspects of computer science (STACS 2021), Leibniz international proceedings in informatics (LIPIcs), 2021. https://doi.org/10.4230/LIPIcs.STACS.2021.53). In her paper, she showed that instances for which the analysis of SquareImp is almost tight are “close to unweighted” in a certain sense. But for the unit weight variant, the best known approximation guarantee is \(\frac{k+1}{3}+\epsilon \) (Fürer and Yu in International symposium on combinatorial optimization, Springer, 2014. https://doi.org/10.1007/978-3-319-09174-7_35). Using this observation as a starting point, we conduct a more in-depth analysis of close-to-tight instances of SquareImp. This finally allows us to generalize techniques used in the unweighted case to the weighted setting. In doing so, we obtain approximation guarantees of \(\frac{k+\epsilon _k}{2}\), where \(\lim _{k\rightarrow \infty } \epsilon _k = 0\). 
On the other hand, we prove that this is asymptotically best possible in that local improvements of logarithmically bounded size cannot produce an approximation ratio below \(\frac{k}{2}\).

1 Introduction

For a positive integer k, the weighted k-Set Packing problem is defined as follows: Given a family \({\mathcal {S}}\) of sets each of size at most k together with a positive weight function \(w:{\mathcal {S}}\rightarrow {\mathbb {Q}}_{>0}\), the task is to find a sub-collection A of \({\mathcal {S}}\) of maximum weight such that the sets in A are pairwise disjoint. For \(k\le 2\), the weighted k-Set Packing problem reduces to the Maximum Weight Matching problem by adding dummy elements to sets of cardinality less than two. The Maximum Weight Matching problem is known to be solvable in polynomial time [8].

For \(k\ge 3\), the weighted k-Set Packing problem is NP-hard since it generalizes the optimization variant of the NP-complete 3-Dimensional Matching problem [14]. In addition, Hazan, Safra and Schwartz [12] have shown that there cannot even be a polynomial-time \(o\left( \frac{k}{\log k}\right) \)-approximation for the weighted k-Set Packing problem unless \(P=NP\).

On the positive side, a simple greedy algorithm yields an approximation guarantee of k (see for example [5]). In order to improve on this, the technique that has proven most successful so far is local search. The basic idea is to start with an arbitrary solution (e.g. the empty one) and to iteratively improve the current solution by applying some sort of local modifications until no more of these exist.
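As a point of reference, the greedy baseline can be sketched as follows. We assume here that "greedy" means scanning the sets in order of non-increasing weight and keeping every set that is disjoint from those already chosen; the function name `greedy_packing` and the toy instance are illustrative only.

```python
def greedy_packing(sets, w):
    """Heaviest-first greedy: keep a set if it is disjoint from all kept sets."""
    chosen, used = [], set()
    for s in sorted(sets, key=lambda s: w[s], reverse=True):
        if used.isdisjoint(s):
            chosen.append(s)
            used |= s
    return chosen


# Toy instance with k = 2: greedy keeps the single heavy set of weight 3,
# while the two lighter sets together have weight 4.
a, x1, x2 = frozenset({1, 2}), frozenset({1, 3}), frozenset({2, 4})
w = {a: 3, x1: 2, x2: 2}
print(greedy_packing([x1, a, x2], w))  # only the set {1, 2} is kept
```

On this instance, the solution returned by greedy is not optimal, which is the kind of situation local search is meant to repair.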

More precisely, given a feasible solution A to the weighted k-Set Packing problem, we call a collection \(X\subseteq {\mathcal {S}}\setminus A\) consisting of pairwise disjoint sets a local improvement of A of size |X| if

$$\begin{aligned}w(X)>w(\{a\in A:\exists x\in X: a\cap x\ne \emptyset \}),\end{aligned}$$

that is, if replacing the collection of sets in A that intersect sets in X by the sets in X increases the weight of the solution. Note that whenever A is sub-optimum and \(A^*\) is a solution of maximum weight, then \(A^*\setminus A\) defines a local improvement of A. However, if one aims at designing a polynomial-time algorithm, it is of course infeasible to check subsets of \({\mathcal {S}}\) of arbitrarily large size.
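The local improvement condition can be checked directly. The following is a minimal sketch, assuming sets are represented as `frozenset` objects and weights as a dictionary; the helper names are hypothetical.

```python
from itertools import combinations


def conflicting(A, X):
    """Sets of the current solution A that intersect at least one set of X."""
    return [a for a in A if any(a & x for x in X)]


def is_local_improvement(A, X, w):
    """X must be pairwise disjoint, avoid A, and outweigh the sets of A it hits."""
    X = list(X)
    if any(x in A for x in X):
        return False
    if any(x & y for x, y in combinations(X, 2)):
        return False
    return sum(w[x] for x in X) > sum(w[a] for a in conflicting(A, X))


a, x1, x2 = frozenset({1, 2}), frozenset({1, 3}), frozenset({2, 4})
w = {a: 3, x1: 2, x2: 2}
print(is_local_improvement([a], [x1, x2], w))  # True: 2 + 2 > 3
print(is_local_improvement([a], [x1], w))      # False: 2 < 3
```

The second call illustrates why improvements of size one do not suffice in general: neither light set alone can replace the heavy one.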

1.1 The unit weight case

For the special case of unit weights, Hurkens and Schrijver [13] showed that local improvements of arbitrarily large but constant size result in approximation guarantees arbitrarily close to \(\frac{k}{2}\). Their paper also provides matching lower bound examples proving their result to be tight. Since then, a lot of progress has been made regarding the special case where \(w\equiv 1\), which we will also refer to as the unweighted k-Set Packing problem. In 1995, at the cost of a quasi-polynomial running time, Halldórsson [11] achieved an approximation factor of \(\frac{k+2}{3}\) by applying local improvements of size logarithmic in the total number of sets. Cygan, Grandoni and Mastrolilli [7] managed to get down to an approximation factor of \(\frac{k+1}{3}+\epsilon \), still with a quasi-polynomial running time.

The first polynomial-time algorithm improving on the result by Hurkens and Schrijver [13] was obtained by Sviridenko and Ward [21] in 2013. By combining color coding with the algorithm presented in [11], they achieved an approximation ratio of \(\frac{k+2}{3}\). This result was further improved to \(\frac{k+1}{3}+\epsilon \) for any fixed \(\epsilon >0\) by Cygan [6], obtaining a polynomial running time doubly exponential in \(\frac{1}{\epsilon }\). The best approximation algorithm for the unweighted k-Set Packing problem in terms of performance ratio and running time is due to Fürer and Yu [10] from 2014. They achieve the same approximation guarantee as Cygan [6], but a running time only singly exponential in \(\frac{1}{\epsilon ^2}\). Moreover, they show that their result is best possible in that there exist arbitrarily large instances that feature solutions that do not permit any local improvement of size \(o(|{\mathcal {S}}|^{\frac{1}{5}})\), but that are by a factor of \(\frac{k+1}{3}\) smaller than the optimum.

1.2 General weights and the MWIS in (k+1)-claw free graphs

In the weighted setting, much less is known. Arkin and Hassin [2] have shown that unlike the unit weight case, local improvements of constant size cannot produce an approximation ratio better than \(k-1\) for general weights. Both papers improving on this deal with a more general problem, the Maximum Weight Independent Set problem (MWIS) in \(k+1\)-claw free graphs [3, 5]:

For \(d\ge 1\), a d-claw C [3] is defined to be a star consisting of one center node and a set \(T_C\) of d talons connected to it. An undirected graph is said to be d-claw free if none of its induced subgraphs form a d-claw.

For \(d\le 3\), the MWIS in d-claw free graphs can be solved in polynomial time (see [15, 19] for the unweighted, [16] for the weighted variant), while for \(d\ge 4\), again no \(o(\frac{d}{\log d})\)-approximation algorithm is possible unless \(P=NP\) [12].

Given an undirected graph \(G=(V,E)\) and an independent set \(A\subseteq V\), we call an independent set \(X\subseteq V\setminus A\) a local improvement of A if the weight of X exceeds the weight of its neighborhood in A. Using this definition, most of the previous results for the (weighted or unweighted) k-Set Packing problem also apply to the more general context of the MWIS in \(k+1\)-claw free graphs. However, it is not known how to get down to a polynomial (instead of quasi-polynomial) running time for the algorithms in [6, 21] and [10] since there is no obvious equivalent to applying color coding to the underlying universe.

By considering the conflict graph \(G_{\mathcal {S}}\) associated with an instance of the weighted k-Set Packing problem, we obtain a weight preserving one-to-one correspondence between feasible solutions to the weighted k-Set Packing problem and independent sets in \(G_{\mathcal {S}}\). The vertices of \(G_{\mathcal {S}}\) are given by the sets in \({\mathcal {S}}\) and are equipped with the respective weights. The edges represent non-empty set intersections. To see that \(G_{\mathcal {S}}\) is indeed \(k+1\)-claw free, observe that the set corresponding to the center of a \(k+1\)-claw in \(G_{{\mathcal {S}}}\) would need to intersect each of the \(k+1\) pairwise disjoint sets corresponding to the talons. But this contradicts the fact that its cardinality is bounded by k.
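To make the correspondence concrete, the following sketch builds the conflict graph of a small instance and searches for claws by brute force. The representation (an adjacency dictionary indexed by the position of each set) and the function names are our own assumptions.

```python
from itertools import combinations


def conflict_graph(sets):
    """Adjacency dict on indices 0..len(sets)-1; edges mark non-empty intersections."""
    sets = [frozenset(s) for s in sets]
    adj = {i: set() for i in range(len(sets))}
    for i, j in combinations(range(len(sets)), 2):
        if sets[i] & sets[j]:
            adj[i].add(j)
            adj[j].add(i)
    return adj


def has_claw(adj, d):
    """True if some vertex has d pairwise non-adjacent neighbors (brute force)."""
    for v, nbrs in adj.items():
        for talons in combinations(sorted(nbrs), d):
            if all(b not in adj[a] for a, b in combinations(talons, 2)):
                return True
    return False


# An instance with k = 3: the first set meets three pairwise disjoint sets.
family = [{1, 2, 3}, {1, 4, 5}, {2, 6, 7}, {3, 8, 9}, {4, 6, 8}]
adj = conflict_graph(family)
print(has_claw(adj, 3))  # True: a 3-claw centered at {1, 2, 3}
print(has_claw(adj, 4))  # False: no set of size 3 can meet 4 disjoint sets
```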

The first significant improvement over the approximation guarantee of k achieved by the greedy approach for the MWIS in \(k+1\)-claw free graphs was made by Chandra and Halldórsson [5]. In each iteration, their algorithm BestImp picks a certain type of local improvement that maximizes the ratio between the total weight of the vertices added to and removed from the current solution. By further scaling and truncating the weight function to ensure a polynomial number of iterations, Chandra and Halldórsson [5] obtain a \(\frac{2(k+1)}{3}+\epsilon \)-approximation algorithm for the MWIS in \(k+1\)-claw free graphs.

1.3 Berman’s algorithm SquareImp

For 20 years, Berman’s algorithm SquareImp [3] has been the state-of-the-art for both the MWIS in \(k+1\)-claw free graphs and the weighted k-Set Packing problem. SquareImp proceeds by iteratively applying local improvements of the squared weight function that arise as sets of talons of claws in G, until no more exist. In doing so, SquareImp achieves an approximation ratio of \(\frac{k+1}{2}\), leading to a polynomial-time \(\frac{k+1}{2}+\epsilon \)-approximation algorithm for both the MWIS in \(k+1\)-claw free graphs and the weighted k-Set Packing problem for any fixed \(\epsilon >0\).

Berman [3] also provides an example for \(w\equiv 1\) showing that his analysis of SquareImp is tight. As the example uses unit weights, he concludes that applying the same type of local improvement algorithm for a different power of the weight function does not provide further improvements. However, as also implied by the result in [13], while no small improvements forming the set of talons of a claw in the input graph exist in the tight example given by Berman [3], once this additional condition is dropped, improvements of small constant size can be found quite easily. This observation is the basis of a recent paper by Neuwohner [17], who managed to obtain an approximation guarantee slightly below \(\frac{k+1}{2}\) by taking into account a broader class of local improvements, namely all improvements of the squared weight function of size at most \(k^2+k\).

2 Our contribution

The main result of this paper is the following theorem:

Theorem 1

There exists a polynomial-time algorithm that obtains approximation guarantees of \(\frac{k+\epsilon _{k}}{2}\) for the weighted k-Set Packing problem for \(k\ge 3\), where \(0\le \epsilon _{k}\le 1\) for all \(k\ge 3\) and \(\lim _{k\rightarrow \infty }\epsilon _{k} = 0\).

In order to prove Theorem 1, it will be convenient to phrase our algorithm and most of our results in terms of the more general MWIS in \(k+1\)-claw free graphs instead of the weighted k-Set Packing problem. In fact, the algorithm we propose also yields a quasi-polynomial time \(\frac{k+\epsilon _{k}}{2}\)-approximation for the MWIS in \(k+1\)-claw free graphs, but to get down to a polynomial running time, we exploit the additional structure (the conflict graph of) an instance of the weighted k-Set Packing problem features.

The algorithm LogImp we propose builds on Berman’s algorithm SquareImp in that it also searches for local improvements with respect to the squared weight function that constitute the set of talons of a claw in the input graph, as well as an additional type of improvement of up to logarithmic size (again with respect to the squared weight function). In particular, we can apply Berman’s analysis of SquareImp to our algorithm. Following [17], we show that whenever Berman’s analysis of SquareImp is close to being tight, the instance is locally unweighted in the sense that almost every time that a vertex from the solution chosen by SquareImp and a vertex from any optimum solution share an edge, their weights must be very similar. While [17] merely focuses on one of the two major steps in Berman’s analysis, we consider both of them, allowing us to derive much stronger statements concerning the structure of instances where SquareImp does not do much better than a \(\frac{k+1}{2}\)-approximation. In particular, we are able to transfer techniques that are used in the state-of-the-art works on the unweighted k-Set Packing problem [6, 10] to a setting where weights are locally similar. This idea gives rise to the precise notion of a local improvement of logarithmic size we consider and is the main ingredient towards Theorem 1.

We further prove the approximation guarantees stated in Theorem 1 to be asymptotically best possible by showing that a local improvement algorithm that, for an arbitrarily chosen, but fixed parameter \(\alpha \in {\mathbb {R}}\), starts with the empty solution and iteratively applies local improvements of \(w^\alpha \) of size \({\mathcal {O}}(\log (|{\mathcal {S}}|))\) until no more exist, cannot produce an approximation guarantee better than \(\frac{k}{2}\) for the weighted k-Set Packing problem with \(k\ge 3\).

Theorem 2

Let \(k\ge 3\), \(\alpha \in {\mathbb {R}}\), \(0<\epsilon <1\) and \(C>0\). Then for each \(N_0\in {\mathbb {N}}\), there exists an instance \(({\mathcal {S}}, w)\) of the weighted k-Set Packing problem with \(|{\mathcal {S}}|\ge N_0\) and such that there are feasible solutions \(A,A^*\subseteq {\mathcal {S}}\) with the following two properties:

- There is no local improvement of A of size at most \(C\cdot \log (|{\mathcal {S}}|)\) with respect to \(w^\alpha \), that is, there is no disjoint sub-collection \(X\subseteq {\mathcal {S}}{\setminus } A\) of sets such that

$$\begin{aligned}|X|\le C\cdot \log (|{\mathcal {S}}|)\text { and }w^\alpha (X)> w^\alpha (\{a\in A:\exists x\in X: a\cap x\ne \emptyset \}).\end{aligned}$$

- We have \(w(A^*)\ge \frac{k-\epsilon }{2}\cdot w(A)\).

Theorem 2 significantly extends the state of knowledge in terms of lower bound examples. Even more importantly, we can finally (at least asymptotically) answer the long-standing question of how far one can get by using pure local search in the weighted setting. In doing so, we are also the first ones to port the idea of searching for local improvements of logarithmic size, which has proven very successful for unit weights [6, 7, 10, 11, 21], to the weighted setting.

The rest of this paper is organized as follows: Sect. 3 recaps some of the definitions and main results from [3] that we will employ in the analysis of our local improvement algorithm LogImp in Sect. 4. Section 5 then shows how to make LogImp run in polynomial time by means of color coding. In Sect. 6, we explain our lower bound construction. Finally, Sect. 7 provides a brief conclusion.

3 Preliminaries

Definition 3

(Neighborhood [3]) Given an undirected graph \(G=(V,E)\) and subsets \(U,W\subseteq V\) of its vertices, we define the neighborhood N(U, W) of U in W as \(N(U,W):=\{w\in W:\exists u\in U: \{u,w\}\in E \vee u=w\}.\) For \(u\in V\) and \(W\subseteq V\), we write N(u, W) instead of \(N(\{u\}, W)\).

Notation

Given \(w:V\rightarrow {\mathbb {Q}}\) and \(U\subseteq V\), we write \(w^2(U):=\sum _{u\in U} w^2(u)\).

Definition 4

(Local improvement, claw-shaped improvement [3]) Given an undirected graph \(G=(V,E)\), a weight function \(w:V\rightarrow {\mathbb {Q}}_{> 0}\) and an independent set \(A\subseteq V\), we say that a vertex set \(B\subseteq V\) improves \(w^2(A)\)/ is a local improvement of A if B is independent in G and we have

$$\begin{aligned}w^2((A\setminus N(B,A))\cup B)>w^2(A).\end{aligned}$$

We define a 0-claw to consist of a single talon and an empty center. For a d-claw C in G with \(d\ge 0\), we say that C improves \(w^2(A)\) if \(T_C\) does and call \(T_C\) a claw-shaped improvement in this case.

Note that in contrast to the introduction, we do not require a local improvement B to be disjoint from A anymore. However, it is not hard to see that B defines a local/ claw-shaped improvement of A if and only if \(B\setminus A\) does. Further observe that an independent set B improves A if and only if we have \(w^2(B)>w^2(N(B,A))\).

Using the notation introduced above, Berman’s algorithm SquareImp [3] can now be formulated as in Algorithm 1.

SquareImp [3]

As all weights are positive, every \(v\not \in A\) such that \(A\cup \{v\}\) is independent constitutes the talon of a 0-claw improving \(w^2(A)\), so the algorithm returns a maximal independent set.
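For illustration, the following is a brute-force sketch of SquareImp based on our reading of the description above: start with the empty solution and apply claw-shaped improvements of the squared weight function until none exists. It enumerates talon sets exhaustively, so it is meant for tiny graphs only; the graph representation and helper names are assumptions.

```python
from itertools import combinations


def neighborhood(adj, U, W):
    """N(U, W): vertices of W that equal or are adjacent to some vertex of U."""
    return {x for x in W if any(x == u or x in adj[u] for u in U)}


def w2(w, U):
    """Squared weight w^2(U)."""
    return sum(w[u] ** 2 for u in U)


def talon_sets(adj, k):
    """Talon sets of d-claws for 0 <= d <= k, enumerated by brute force."""
    for v in adj:
        yield (v,)  # 0-claw: a single talon and an empty center
    for v in adj:
        for d in range(1, k + 1):
            for T in combinations(sorted(adj[v]), d):
                if all(b not in adj[a] for a, b in combinations(T, 2)):
                    yield T


def square_imp(adj, w, k):
    A = set()
    improved = True
    while improved:
        improved = False
        for T in talon_sets(adj, k):
            removed = neighborhood(adj, T, A)
            if w2(w, T) > w2(w, removed):
                A = (A - removed) | set(T)  # strictly increases w^2(A)
                improved = True
                break
    return A


# Path a - b - c: the middle vertex wins under squared weights (9 > 4 + 4),
# although the two end vertices have larger total weight (4 > 3).
adj = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
w = {"a": 2, "b": 3, "c": 2}
print(square_imp(adj, w, 3))  # {'b'}
```

Since every applied improvement strictly increases \(w^2(A)\) and there are finitely many independent sets, the loop terminates; the sketch makes no attempt to bound the number of iterations polynomially.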

Theorem 5

([3]) Let \(k\ge 3\), let \(G=(V,E)\) be a \(k+1\)-claw free graph and let \(w:V\rightarrow {\mathbb {Q}}_{>0}\). Let further \(A\subseteq V\) be independent such that no claw improves A, and let \(A^*\) be an independent set of maximum weight in G.

Then \(w(A^*)\le \frac{k+1}{2}\cdot w(A)\).

In particular, Theorem 5 implies that SquareImp obtains an approximation guarantee of \(\frac{k+1}{2}\). The main idea of the proof of Theorem 5 presented in [3] is to charge the vertices in A for preventing adjacent vertices in an optimum solution \(A^*\) from being included into A. The latter is done by spreading the weight of the vertices in \(A^*\) among their neighbors in the maximal independent set A in such a way that no vertex in A receives more than \(\frac{k+1}{2}\) times its own weight. The suggested distribution of weights proceeds in two steps:

First, each vertex \(u\in A^*\) invokes costs of \(\frac{w(v)}{2}\) at each \(v\in N(u,A)\).

In a second step, each \(u\in A^*\) sends the remaining amount of \(w(u)-\frac{w(N(u,A))}{2}\) to a heaviest neighbor it possesses in A, which is captured by the following definition of charges:

Definition 6

(Charges [3]) For each \(u\in A^*\), pick a vertex \(v\in N(u,A)\) of maximum weight and call it n(u) (recall that A is maximal).

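For \(u\in A^*\) and \(v\in A\), define

$$\begin{aligned}\textrm{charge}(u,v):={\left\{ \begin{array}{ll} w(u)-\frac{1}{2}\cdot w(N(u,A)) &{}\text {if } v=n(u),\\ 0 &{}\text {otherwise.} \end{array}\right. }\end{aligned}$$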

Definition 6 directly implies the following statement.

Proposition 7

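In the situation of Theorem 5, we have

$$\begin{aligned}w(A^*)=\sum _{u\in A^*}\frac{w(N(u,A))}{2}+\sum _{u\in A^*}\textrm{charge}(u,n(u)).\end{aligned}$$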

In order to prove Theorem 5, it suffices to see that each vertex \(v\in A\) receives at most \(\frac{k+1}{2}\) times its own weight. To prove this, we analyze the two steps of the weight distribution separately and show that in the first step, each \(v\in A\) has to pay at most \(\frac{k}{2}\cdot w(v)\), while in the second step, the total charges v has to pay can be bounded by \(\frac{w(v)}{2}\). In doing so, we roughly follow [3], but introduce some new notation that we will need later for the analysis of our improved algorithm.
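The two-step weight distribution can be traced on a small example. The sketch below assumes that A is a maximal independent set, so that every \(u\in A^*\) has a neighbor in A, and uses exact rational arithmetic; the function name `distribute` is hypothetical.

```python
from fractions import Fraction


def distribute(adj, w, A, A_star):
    """Amount each v in A receives from the vertices of A_star in both steps."""
    received = {v: Fraction(0) for v in A}
    for u in A_star:
        nbrs = [v for v in A if v == u or v in adj[u]]  # N(u, A), non-empty
        for v in nbrs:
            received[v] += Fraction(w[v]) / 2           # first step
        n_u = max(nbrs, key=lambda v: w[v])             # a heaviest neighbor
        remaining = w[u] - Fraction(sum(w[v] for v in nbrs)) / 2
        received[n_u] += remaining                      # second step
    return received


# Path a - b - c with A = {b} and A* = {a, c}.
adj = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
w = {"a": 2, "b": 3, "c": 2}
received = distribute(adj, w, {"b"}, {"a", "c"})
print(received["b"])  # 4
```

Here b receives \(2\cdot \frac{3}{2}\) in the first step and \(2\cdot \frac{1}{2}\) in the second, so the total amount received equals \(w(A^*)=4\), as it must, since every \(u\in A^*\) distributes exactly its own weight.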

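Looking at the first step of the weight distribution, the total weight a vertex \(v\in A\) receives equals

$$\begin{aligned}\sum _{u\in N(v,A^*)}\frac{w(v)}{2}=|N(v,A^*)|\cdot \frac{w(v)}{2}.\end{aligned}$$

In particular, we can bound the total weight distributed in the first step by

$$\begin{aligned}\sum _{u\in A^*}\frac{w(N(u,A))}{2}=\sum _{v\in A}|N(v,A^*)|\cdot \frac{w(v)}{2}.\end{aligned}$$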

Proposition 8 further bounds the right-hand side by \(\frac{k}{2}\cdot w(A)\) as claimed.

Proposition 8

In the situation of Theorem 5, we have \(|N(v,A^*)|\le k\) for every \(v\in A\).

Proof

As G is \(k+1\)-claw free, no vertex \(v\in A\setminus A^*\) can have more than k neighbors in the independent set \(A^*\). Moreover, if \(v\in A\cap A^*\), then \(N(v,A^*)=\{v\}\) and \(|N(v,A^*)|=1\le k\).\(\square \)

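The weight distributed in the second step equals

$$\begin{aligned}\sum _{u\in A^*}\textrm{charge}(u,n(u))=\sum _{u\in A^*}\left( w(u)-\frac{w(N(u,A))}{2}\right) .\end{aligned}$$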

Our next goal is to show that the total amount of charges a vertex \(v\in A\) has to pay is bounded by \(\frac{w(v)}{2}\). To this end, we need to exploit the fact that when SquareImp terminates, there is no improving claw centered at v. Suppose that we want to construct an improving claw C centered at v and consider adding \(u\in N(v,A^*)\) to its set \(T_C\) of talons. On the one hand, this increases \(w^2(T_C)\) by \(w^2(u)\). On the other hand, \(w^2(N(T_C,A))\) may also increase by up to \(w^2(N(u,A)\setminus \{v\})\) (if our claw is to be centered at v, we have to pay for v anyway). In case \(w^2(u) > w^2(N(u,A){\setminus }\{v\})\), we surely want to add u to our claw; otherwise, we may choose not to. This is captured by the definition of the contribution:

Definition 9

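(Contribution [17]) Define a contribution map \(\textrm{contr}:A^*\times A\rightarrow {\mathbb {Q}}_{\ge 0}\) by setting

$$\begin{aligned}\textrm{contr}(u,v):={\left\{ \begin{array}{ll} \max \left\{ 0,\frac{w^2(u)-w^2(N(u,A)\setminus \{v\})}{w(v)}\right\} &{}\text {if } v\in N(u,A),\\ 0 &{}\text {otherwise.} \end{array}\right. }\end{aligned}$$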

The fact that there is no improving claw directly implies that the total contribution to \(v\in A\) is bounded by w(v), which is the statement of Proposition 10.

Proposition 10

In the situation of Theorem 5, we have

$$\begin{aligned}\sum _{u\in A^*}\textrm{contr}(u,v)\le w(v)\end{aligned}$$

for every \(v\in A\). In particular, \(\sum _{u\in A^*}\textrm{contr}(u,n(u))\le w(A)\).

Proof

For \(v\in A^*\), the independence of both A and \(A^*\) implies

and, hence,

Assume towards a contradiction that there is \(v\in A\setminus A^*\) such that

Then

forms the set of talons of a claw centered at v. As \(w(v)>0\), we obtain

Hence, \(w^2(N(T,A))<w^2(T)\), contradicting the fact that no claw improves \(w^2(A)\).\(\square \)

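We will further show that for all \(u\in A^*\), we have

$$\begin{aligned}\textrm{charge}(u,n(u))\le \frac{1}{2}\cdot \textrm{contr}(u,n(u)).\end{aligned}$$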

This implies that \(\sum _{u\in A^*: v=n(u)}\textrm{charge}(u,n(u))\le \frac{w(v)}{2}\) for every \(v\in A\) as claimed. However, for the analysis of our algorithm, where we are not only interested in the statement of Theorem 5 itself, but would also like to know when this is close to being tight, it is more convenient to write

and show that the total slack \(\sum _{u\in A^*}\left[ \frac{1}{2}\cdot \textrm{contr}(u,n(u))-\textrm{charge}(u,n(u))\right] \) we have in this estimate is non-negative. This is taken care of by Definition 11 and Lemma 12.

Definition 11

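(Slack) In the situation of Theorem 5, for \(u\in A^*\), we define

$$\begin{aligned}\textrm{slack}(u):=\frac{1}{2}\cdot \textrm{contr}(u,n(u))-\textrm{charge}(u,n(u)).\end{aligned}$$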

Lemma 12

In the situation of Theorem 5, we have \(\textrm{slack}(u)\ge 0\) for every \(u\in A^*\).

We prove Lemma 13, a slightly stronger statement which we will need again at a later point. It directly implies Lemma 12 since all weights are strictly positive, meaning that we can divide the inequality \(2\cdot \textrm{slack}(u)\cdot w(v)\ge 0\) by \(2\cdot w(v)\).

Lemma 13

Let \(u\in A^*\) and let \(v:=n(u)\). Then

where \(\max \emptyset := 0\).

Proof

We have

The penultimate inequality follows since \(v = n(u)\in N(u,A)\) is of maximum weight and all weights are positive. \(\square \)

We have now assembled all ingredients to prove Theorem 14, which in particular implies Theorem 5.

Theorem 14

Let \(k\ge 3\), let \(G=(V,E)\) be a \(k+1\)-claw free graph and let \(w:V\rightarrow {\mathbb {Q}}_{>0}\). Let further \(A\subseteq V\) be independent such that no claw improves A, and let \(A^*\) be an independent set of maximum weight in G. Then

Proof

\(\square \)

This concludes the analysis of SquareImp.

4 Our algorithm LogImp

In this section, we introduce our algorithm LogImp for the MWIS in \(k+1\)-claw free graphs and analyze its performance ratio.

LogImp (Algorithm 2) is a local search algorithm that starts with the empty solution, and then iteratively checks for the existence of improving claws as in Berman’s algorithm SquareImp and another type of local improvement, which we call circular. It corresponds to a cycle of logarithmically bounded size in a certain auxiliary graph.

Definition 15

Let \(G=(V,E)\) be a \(k+1\)-claw free graph, let \(w:V\rightarrow {\mathbb {Q}}_{>0}\) and let \(A\subseteq V\) be independent.

Let further two maps \(n:\{u\in V: |N(u,A)|\ge 1\}\rightarrow A\) mapping u to an element of N(u, A) of maximum weight, and \(n_2:\{u\in V: |N(u,A)|\ge 2\}\rightarrow A\) mapping u to an element of \(N(u,A)\setminus \{n(u)\}\) of maximum weight be given. We call an independent set \(X\subseteq \{u\in V{\setminus } A: |N(u,A)|\ge 1\}\) a circular improvement if

(i) \(\exists U\subseteq X\) s.t. \(|U|\le 8\cdot \lceil \log (|V|)\rceil \), each \(u\in U\) has at least two neighbors in A and \(C:=(\bigcup _{u\in U}\{n(u), n_2(u)\}, \{e_u=\{n(u), n_2(u)\}, u\in U\})\) is a cycle.

(ii) If we let \(Y_v:=\{x\in X\setminus U: n(x)=v\}\), then \(X=U\cup \bigcup _{v\in V(C)} Y_v\).

(iii) For every \(u\in U\), we have

$$\begin{aligned}&w^2(u)+\frac{1}{2}\cdot \left( \sum _{z\in Y_{n(u)}} w^2(z)+\sum _{z\in Y_{n_2(u)}} w^2(z)\right) \\ {}&>\frac{w^2(n(u))+w^2(n_2(u))}{2}+w^2(N(u,A)\setminus \{n(u), n_2(u)\})\\ {}&\qquad +\frac{1}{2}\cdot \left( \sum _{z\in Y_{n(u)}} w^2(N(z,A)\setminus \{n(u)\})+\sum _{z\in Y_{n_2(u)}} w^2(N(z,A)\setminus \{n_2(u)\})\right) .\end{aligned}$$

Proposition 16

If X is a circular improvement and U, C and \(Y_v,v\in V(C)\) are as in Definition 15, then we have \(|Y_v|\le k\) for all \(v\in V(C)\). In particular, we obtain \(|X|\le 8\cdot (k+1)\cdot \lceil \log (|V|)\rceil \).

Proof

As X is independent and \(Y_v\subseteq N(v,V\setminus A)\) by definition of \(Y_v\) and the map n, we know that for every \(v\in V(C)\), \(Y_v\) constitutes the set of talons of a claw centered at v, provided it is non-empty. Hence, \(|Y_v|\le k\) for all \(v\in V(C)\). In particular, we obtain

where \(|U|=|V(C)|\) follows by definition of C. \(\square \)

Proposition 17

Every circular improvement X satisfies \(w^2(X)>w^2(N(X,A))\) and, thus, constitutes a local improvement.

Proof

Let X be a circular improvement and let U, C and \(Y_v,v\in V(C)\) be as in Definition 15. We compute

The equations labeled \((*)\) follow from the fact that

forms a cycle, meaning that each \(v\in V(C)\) occurs exactly twice among all of the sets \(\{n(u),n_2(u)\},u\in U\). \(\square \)

The intuition behind Definition 15 is the following: Similar to the state-of-the-art algorithm by Fürer and Yu [10] for the unweighted case, we build up an auxiliary graph H on the vertex set A, where each vertex \(u\in V{\setminus } A\) with at least two neighbors in A induces an edge between its two heaviest ones n(u) and \(n_2(u)\). The backbone of our circular improvement is given by a cycle C of logarithmic size in H. Additionally, for each \(v\in V(C)\), we can add some additional vertices u with \(n(u)=v\) that contribute a positive amount to v. Now, we want to cover for the weight of each \(v\in V(C)\subseteq N(X,A)\) by using the vertices corresponding to the two incident edges in C as well as the contributions from the vertices in \(Y_v\). More precisely, for each edge induced by \(u\in U\), we would like \(w^2(u)\), together with half of the contributions to n(u) and \(n_2(u)\), to be able to pay for all neighbors of u in A other than n(u) and \(n_2(u)\), as well as half of n(u) and \(n_2(u)\), which is precisely the constraint (iii).
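The auxiliary graph H described above can be sketched as follows. We store H as a dictionary mapping each vertex \(u\in V\setminus A\) with at least two neighbors in A to the edge \(\{n(u),n_2(u)\}\) it induces; ties between equally heavy neighbors are broken arbitrarily, and the function names are hypothetical.

```python
def heaviest_two(adj, w, A, u):
    """Up to two heaviest neighbors of u in A, heaviest first: n(u), n_2(u)."""
    nbrs = [v for v in A if v in adj[u]]
    return sorted(nbrs, key=lambda v: w[v], reverse=True)[:2]


def auxiliary_edges(adj, w, A):
    """Edges of H: one edge (n(u), n_2(u)) per u outside A with >= 2 neighbors in A."""
    edges = {}
    for u in adj:
        if u in A:
            continue
        top = heaviest_two(adj, w, A, u)
        if len(top) == 2:
            edges[u] = (top[0], top[1])
    return edges


# Two vertices u1, u2 outside A, both adjacent to a1 and a2 in A.
adj = {"a1": {"u1", "u2"}, "a2": {"u1", "u2"},
       "u1": {"a1", "a2"}, "u2": {"a1", "a2"}}
w = {"a1": 3, "a2": 2, "u1": 2, "u2": 2}
print(auxiliary_edges(adj, w, {"a1", "a2"}))
# {'u1': ('a1', 'a2'), 'u2': ('a1', 'a2')}
```

In this toy example, the two parallel edges induced by u1 and u2 form a cycle of length two in H, so \(U=\{u_1,u_2\}\) is a candidate backbone; whether it yields a circular improvement still depends on constraint (iii).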

When LogImp terminates, there cannot be any further improving claw, so we can apply the analysis of SquareImp presented in Sect. 3 to our algorithm. Similar to [17], the key idea in analyzing LogImp is the following: Either the analysis of SquareImp is far enough from being tight to achieve the desired approximation ratio, or the instance at hand bears a certain structure that allows us to derive the existence of a circular improvement. But this contradicts the termination criterion of LogImp.

The remainder of this section is dedicated to the proof of Theorem 18, which bounds the approximation guarantee obtained by LogImp. In Sect. 5, we then derive our main result, Theorem 1, by showing how to implement each iteration of LogImp in polynomial time, provided the input graph is the conflict graph of an (explicitly given) instance of the weighted k-Set Packing problem, and by explaining how to obtain a polynomial number of iterations by scaling and truncating the weight function.

Theorem 18

For \(k\ge 3\), LogImp yields a \(\frac{k+\delta _k}{2}\)-approximation for the MWIS in \(k+1\)-claw free graphs, where

We have \(\lim _{k\rightarrow \infty }\delta _k=0\) and a simple calculation shows \(\delta _k\in (0,1)\) for all \(k\ge 4\). We further point out that for \(k\ge 4\), we obtain an approximation guarantee strictly better than the ratio of \(\frac{k+1}{2}\) obtained by SquareImp, but also than the improvement to \(\frac{k+1}{2}-\frac{1}{63,700,993}\) (or \(\frac{k+1}{2}-\frac{1}{63,700,992}+\epsilon \), to be precise) by Neuwohner [17].

For \(k=3\), Theorem 18 is implied by Theorem 5. To see why LogImp cannot yield an improvement over the approximation guarantee of \(\frac{k+1}{2}\) for \(k=3\), consider a graph G that consists of a cycle of even length 2m, the vertices of which alternately belong to the solution A that LogImp may find vertex by vertex and an optimum solution \(A^*\). Moreover, each vertex from A has an additional neighbor from \(A^*\) of degree 1. All weights are 1. As the maximum degree in G equals 3, G is clearly 4-claw free. Furthermore, G is (isomorphic to) the conflict graph of the instance of the weighted 3-Set Packing problem we obtain by identifying each vertex with its set of incident edges.

We have \(w(A^*)=|A^*|=2\cdot m = 2\cdot |A|=\frac{3+1}{2}\cdot w(A)\). It is not hard to see that no claw improves A. But for \(m\ge 40\), there also is no circular improvement because the set U from Definition 15 would need to consist of all vertices from \(A^*\) that have two neighbors in A. But there are m such vertices and since \(|V|=3\cdot m \ge 120\), we have \(m=\frac{|V|}{3}>8\cdot (\log (|V|)+1)\). Hence, there exists no circular improvement, either.
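The construction for \(k=3\) can be reproduced for small m. The sketch below builds the graph and checks by brute force, for unit weights, that the maximum degree is 3, that \(|A^*|=2\cdot |A|\), and that no claw improves A. The vertex labels and function names are our own; for very short cycles (for instance \(m=2\)) the brute-force check does find an improving claw, so we use \(m=5\).

```python
from itertools import combinations


def tight_example(m):
    """Cycle of length 2m alternating between A and A*, plus one pendant
    A*-vertex attached to every vertex of A."""
    adj, A, A_star = {}, set(), set()

    def add_edge(x, y):
        adj.setdefault(x, set()).add(y)
        adj.setdefault(y, set()).add(x)

    for i in range(2 * m):
        add_edge(("c", i), ("c", (i + 1) % (2 * m)))
        (A if i % 2 == 0 else A_star).add(("c", i))
    for i in range(0, 2 * m, 2):
        add_edge(("c", i), ("p", i))
        A_star.add(("p", i))
    return adj, A, A_star


def some_claw_improves(adj, A, max_talons):
    """Unit weights: is there a talon set T with |T| > |N(T, A)|?"""
    candidates = [(v,) for v in adj]  # 0-claws
    for v in adj:
        for d in range(1, max_talons + 1):
            candidates += [T for T in combinations(sorted(adj[v]), d)
                           if all(b not in adj[a] for a, b in combinations(T, 2))]
    for T in candidates:
        hit = {a for a in A if any(a == t or a in adj[t] for t in T)}
        if len(T) > len(hit):
            return True
    return False


adj, A, A_star = tight_example(5)
print(max(len(nbrs) for nbrs in adj.values()))  # 3
print(len(A_star), len(A))                      # 10 5
print(some_claw_improves(adj, A, 3))            # False
```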

Now, we deal with the case \(k\ge 4\). We introduce two auxiliary constants \(\epsilon _k'\) and \({\tilde{\epsilon }}_k\) and we consider vertex weights to be “close” if they differ by a factor of at most \(1\pm \sqrt{\epsilon _k'}\). Moreover, for a vertex \(v\in A\), \({\tilde{\epsilon }}_k\cdot w(v)\) constitutes a threshold telling us whether the total contribution to v should be regarded as small or large. We choose our constants to be

Our choices of \(\epsilon _k'\), \({\tilde{\epsilon }}_k\) and \(\delta _k\) satisfy a bunch of inequalities that pop up during our analysis and are listed in Sect. 4.4. However, the main intuition the reader should have about them is that \(0<\epsilon _k'\ll \sqrt{\epsilon _k'}<{\tilde{\epsilon }}_k<\delta _k<1.\)

For the analysis, we fix an instance (G, w) of the MWIS in \(k+1\)-claw free graphs consisting of a \(k+1\)-claw free graph \(G=(V,E)\) and a positive weight function \(w:V\rightarrow {\mathbb {Q}}_{>0}\). Denote the solution returned by LogImp when applied to (G, w) by A, and let \(A^*\) be an optimum solution for this instance. Finally, fix two maps n and \(n_2\) according to Definition 15. We point out that A is maximal when LogImp terminates since there is no claw-shaped improvement. This implies that the domain of n is V.

4.1 Classification of vertices from \(A^*\)

We now provide a classification of the vertices in \(A^*\) that helps us to understand the structural properties of near-tight instances.

Definition 19

Let \(u\in A^*\). We call u contributive if

If u is not contributive, but

we call u single. Finally, if u is neither contributive nor single, and \(|N(u,A)|\ge 2\),

\(w(N(u,A))< (2+\epsilon _k')\cdot w(u)\), we call u double.

For a contributive vertex u, we gain a constant fraction of the weight of its neighborhood in A in our bound on \(w(A^*)\) (cf. Theorem 14). For a single vertex u, the heaviest neighbor n(u) of u in A has almost the same weight as u and makes up almost all of N(u, A) in terms of weight. Single vertices correspond to vertices of degree 1 to A in the unit weight case.

For a double vertex u, its two heaviest neighbors in A, n(u) and \(n_2(u)\), have roughly the same weight as u and make up most of N(u, A). Double vertices can be thought of as having degree 2 to A.

Lemma 20

Each \(u\in A^*\) is single, double, or contributive.

Proof

Pick \(u\in A^*\) and let \(v:=n(u)\).

Case 1: \(w(N(u,A))\ge (2+\epsilon _k')\cdot w(u)\).

By non-negativity of the contribution, we obtain

This implies that u is contributive.

Case 2: \(w(N(u,A))< (2+\epsilon _k')\cdot w(u)\). If u is contributive, we are done, so assume that this is not the case. In particular,

We further claim that \(w(v)\ge \frac{w(u)}{2}\), implying

Indeed, if we had \(w(v)<\frac{w(u)}{2}\), then Lemma 13 would yield

a contradiction. By combining (5), multiplied with \(2\cdot w(v)\), and Lemma 13, we obtain

Note that both summands on the left hand side are non-negative since real squares are non-negative, all weights are positive, and \(v=n(u)\in N(u,A)\) is of maximum weight. In particular, both

need to hold. From (6), we can infer that \(|w(u)-w(v)|\le \sqrt{\epsilon _k'}\cdot w(v)\), which in turn implies that

In addition to that, (7) tells us that at least one of the two inequalities

must hold. If we have (9), then together with (8) and our assumption that u is not contributive, we may conclude that u is single. Thus, we are left with the case where u is neither contributive nor single, and (10) applies. The fact that \(\epsilon _k'<1\) by (19), together with \(w(v)>0\), implies that \(N(u,A)\setminus \{v\}\ne \emptyset \), so let \(v_2:=n_2(u)\). Then \(w(v)-w(v_2)\le \sqrt{\epsilon _k'}\cdot w(v)\) and, hence, \(\left( 1-\sqrt{\epsilon _k'}\right) \cdot w(v)\le w(v_2)\le w(v)\) by maximality of w(v) in N(u, A). Thus, together with (8) and our case assumption \(w(N(u,A))< (2+\epsilon _k')\cdot w(u)\), all conditions for u being double are fulfilled. \(\square \)

4.2 Missing, profitable and helpful vertices

We now discuss the role the different types of vertices in \(A^*\) play in our analysis. We start by recalling that in the first step of the weight distribution in the analysis of SquareImp, each \(v\in A\) pays \(|N(v,A^*)|\cdot \frac{w(v)}{2}\), which we bound by \(k\cdot \frac{w(v)}{2}\). In particular, if the number of neighbors of v in \(A^*\) happens to be less than k (we say that v has \(k-|N(v,A^*)|\) missing neighbors in this case), we gain \(\frac{w(v)}{2}\) for each missing neighbor of v.

We partition the neighbors a vertex \(v\in A\) has in \(A^*\) into those that are helpful to v and those that are profitable for v. Helpful vertices are those that would be considered neighbors of v in an unweighted approximation of our instance; they help us to construct local improvements of logarithmic size. Profitable vertices are the remaining neighbors, which in some sense keep the instance from being close to unweighted and, hence, tight. Therefore, they improve the analysis (i.e. our bound on \(w(A^*)\) profits from them).

Definition 21

Let \(u\in A^*\) and \(v\in N(u,A)\). We say that u is helpful to v if

- u is single and \(v=n(u)\), or if

- u is double and \(v\in \{n(u),n_2(u)\}\).

Otherwise, we call u profitable for v. Moreover, for \(u\in A^*\), we define

and for \(v\in A\), we let \(\textrm{helped}(v):=\{u\in A^*: v\in \textrm{help}(u)\}\).

One can show that for each profitable neighbor that a vertex \(v\in A\) possesses in \(A^*\), we gain a constant fraction of w(v) in bounding \(w(A^*)\). Intuitively, this is because v increases \(\textrm{slack}(u)\) for every profitable neighbor u of v. Lemma 22 formalizes the way missing and profitable neighbors improve our bound on \(w(A^*)\).

Lemma 22

Note that Proposition 10 tells us that \( \sum _{u\in A^*: v= n(u)} \textrm{contr}(u,v)\le w(v)\) for \(v\in A\). To prove Lemma 22, we provide a lower bound on the slack of a vertex in \(A^*\) (see Lemma 23). Then, we distribute the slack among the vertices in \(N(u,A)\setminus \textrm{help}(u)\) proportional to their weight.

Lemma 23

Let \(u\in A^*\). Then \(\textrm{slack}(u)\ge \frac{\epsilon _k'}{10}\cdot w(N(u,A){\setminus }\textrm{help}(u))\).

Proof of Lemma 22, assuming Lemma 23

We calculate

By Theorem 14, we, hence, obtain

\(\square \)

Proof of Lemma 23

By Lemma 20, it suffices to verify the statement for the three cases where u is contributive, double, or single, respectively.

Case 1: u is contributive. Then \(\textrm{slack}(u)\ge \frac{\epsilon _k'}{10}\cdot w(N(u,A))\) by definition.

Case 2: u is double. Let \(v_1:=n(u)\) and \(v_2:=n_2(u)\). Then \(\textrm{help}(u)=\{v_1,v_2\}\). By Lemma 13, we calculate

The second and third inequality follow from the facts that all weights are positive and that \(v_1\) is of maximum weight in N(u, A). As u is double, we further know that

Plugging this into (11) yields

Case 3: u is single.

Let \(v_1:=n(u)\). Then \(\textrm{help}(u)=\{v_1\}\), so \(N(u,A)\setminus \textrm{help}(u)=N(u,A)\setminus \{v_1\}\), and \(w(N(u,A))\le (1+\sqrt{\epsilon _k'})\cdot w(v_1)\) by definition of single. By Lemma 13, we obtain

Division by \(2\cdot w(v_1)>0\) yields

as claimed. \(\square \)

4.3 Local improvements of logarithmic size

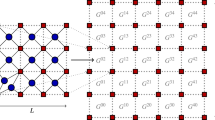

While missing and profitable neighbors improve our bound on \(w(A^*)\), our goal is to use helpful neighbors to construct a local improvement of logarithmic size. To get a better idea of what we are aiming at, we take a brief detour and recapitulate how these vertices are handled in the unit weight case. In [10], an auxiliary graph \(G_A\) is constructed, the vertices of which correspond to the vertices in the current solution A. Each vertex from an optimum solution \(A^*\) with exactly one neighbor in A creates a loop on that neighbor, while a vertex from \(A^*\) with exactly two neighbors in A results in an edge connecting these. It is not hard to see that there is a one-to-one correspondence between local improvements only featuring vertices from \(A^*\) with degree one or two to A, and subgraphs of \(G_A\) with more edges than vertices. A minimal such subgraph is called a binocular [4]. At this point, a result by Berman and Fürer [4] comes into play:

Lemma 24

([4]) Let \(s\in {\mathbb {N}}_{>0}\) and let \(G=(V,E)\) be a graph with \(|E|\ge \frac{s+1}{s}\cdot |V|\). Then G contains a binocular with at most \(4\cdot s\cdot \lceil \log (|V|)\rceil \) vertices.

In particular, Lemma 24 implies the existence of a cycle of logarithmically bounded size. Moreover, if the number of vertices from \(A^*\) of degree 1 or 2 to A exceeds \((1+\epsilon )\cdot |A|\), one can find local improvements of size \({\mathcal {O}}(\frac{1}{\epsilon }\cdot \log (|A|))\), which is one of the key ingredients of the result in [10].
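The construction of \(G_A\) in the unit weight case can be illustrated by a minimal Python sketch. The function names and the input format (a dictionary mapping each vertex of \(A^*\) to its set of neighbors in A) are assumptions made for this illustration and do not appear in [10] or [4].

```python
def build_auxiliary_graph(opt_neighbors):
    """Build the edges of G_A (unit weight case).

    opt_neighbors maps each vertex u of A* to its set of neighbors in A
    (hypothetical input format). Each edge is a triple (a, b, u); a == b
    encodes a loop created by a vertex u with exactly one neighbor in A.
    """
    edges = []
    for u, nbrs in opt_neighbors.items():
        nbrs = sorted(nbrs)
        if len(nbrs) == 1:
            edges.append((nbrs[0], nbrs[0], u))   # loop on the single neighbor
        elif len(nbrs) == 2:
            edges.append((nbrs[0], nbrs[1], u))   # edge between the two neighbors
        # vertices of A* with three or more neighbors in A induce no edge
    return edges


def has_more_edges_than_vertices(edges):
    """Check whether the subgraph spanned by the given edges has |E| > |V|."""
    touched = {a for a, _, _ in edges} | {b for _, b, _ in edges}
    return len(edges) > len(touched)
```

In the unit weight case, the vertices u inducing such an edge set form a set that is larger than its neighborhood in A, which is exactly the condition checked by `has_more_edges_than_vertices`.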

We would like to port this approach to our weighted setting where vertex weights are locally similar. If we could ensure that for each double vertex u, its squared weight is at least as large as the average squared weight of its neighbors n(u) and \(n_2(u)\), plus the squared weight of the neighbors of u in A other than n(u) and \(n_2(u)\), then we would be done since in a binocular, every vertex has degree at least two, and there is at least one vertex of degree more than two. However, this need not be the case in general, and even if we only lose an \(\epsilon _k'\) fraction of the weight of each vertex involved, the total loss might be arbitrarily large if we consider improvements of logarithmic size. In order to overcome this issue, we have to make sure that locally, we have some additional vertices with a positive contribution to the endpoints of the edges that we can add to guarantee that we can make up for the slight inaccuracies caused by the weight differences. This idea is captured by the following definition.

Definition 25

(\(T_v\) and B) For \(v\in A\), let

(Note that \(T_v\subseteq N(v,A^*)\) and that \(n(u)=v\) for all \(u\in T_v\), implying that the sets \((T_v)_{v\in A}\) are pairwise disjoint.) We define

For a vertex \(v\in B\), we can use the contributions of the vertices in \(T_v\) to cover for the inaccuracies caused by local weight differences as discussed before. In order to make sure that the neighborhood of our local improvement in A features sufficiently many vertices from B, we will only allow edges in our auxiliary graph that have at least one endpoint in B. The idea is that then each (sufficiently short) cycle in our auxiliary graph gives rise to a circular improvement by choosing \(Y_v:=T_v{\setminus } U\) for \(v\in B\), and \(Y_v:=\emptyset \) otherwise, where U denotes the set of vertices from \(V\setminus A\) inducing the edges of the cycle. To find such a cycle, we need to see that the weighted sum of the number of incident edges (induced by helpful vertices) of vertices in B is large enough. Lemma 26 tells us that if

then we reach the desired approximation guarantee of \(\frac{k+\delta _k}{2}\) stated in Theorem 18. On the other hand, Lemma 27 shows that if this condition is not fulfilled, we can indeed find a circular improvement, contradicting the termination criterion of LogImp. This then concludes the analysis.

Lemma 26

If

then \(w(A^*)\le \frac{k+\delta _k}{2}\cdot w(A)\) holds.

Proof

For \(v\in A\), we get \(\sum _{u\in A^*: v=n(u)} \textrm{contr}(u,v)=\sum _{u\in T_v}\textrm{contr}(u,v)\) by non-negativity of the contribution. For \(v\in A\setminus B\), we have

while for \(v\in B\), Proposition 10 tells us that

By Lemma 22, we obtain

If \(\left( \frac{1-{\tilde{\epsilon }}_k}{2}-\frac{k\cdot \epsilon _k'}{10}\right) \ge 0\), using \(w(B)\le w(A)\) as \(B\subseteq A\), the latter can be upper bounded by

On the other hand, if \(\left( \frac{1-{\tilde{\epsilon }}_k}{2}-\frac{k\cdot \epsilon _k'}{10}\right) \le 0\), we obtain an upper bound of

since \(w(B)\ge 0\). For \(4\le k\le 2153\), we have

Hence,

For \(2154\le k \le 5007\), we obtain \({\tilde{\epsilon }}_k=18\cdot \sqrt{\epsilon _k'}{\mathop {\le }\limits ^{(19)}} 18\cdot 10^{-\frac{3}{2}}\), which implies

Thus, we may conclude that

Finally, for \(k > 5007\), we have

so

This concludes the proof. \(\square \)

Lemma 27

If

then there exists a circular improvement.

To prove Lemma 27, we first state Lemma 28, which tells us that any graph in which the average degree is by some constant factor larger than 2 contains a cycle of logarithmically bounded size. It is implied by the proof of Lemma 3.1 in [4].

Lemma 29 then provides a generalization to a setting where we are given positive vertex weights and only have a bound on the average weighted degree, but know that the weights of neighboring vertices can only differ by a constant factor.

We employ it in our proof of Lemma 27 to derive the existence of a cycle of logarithmically bounded size in a certain auxiliary graph, which then gives rise to a circular improvement.

Lemma 28

Let \(s\in {\mathbb {N}}_{>0}\) and let \(G=(V,E)\) be an undirected graph with \(|V|\ge 2\) such that \(|E|\ge \frac{s+1}{s}\cdot |V|\). Then G contains a cycle of size at most \(2\cdot s\cdot \lceil \log (|V|)\rceil \).
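To make the statement of Lemma 28 concrete, the following Python sketch computes the length of a shortest cycle by breadth-first search and evaluates the bound \(2\cdot s\cdot \lceil \log (|V|)\rceil \). It is not the argument from [4]; it assumes a simple graph without loops, given as an adjacency dictionary, and it assumes that the logarithm is taken to base 2 (as suggested by the identity \(\log (2)=1\) used in the proof of Lemma 29). All names are chosen for illustration.

```python
import math
from collections import deque


def girth(adj):
    """Length of a shortest cycle in a simple undirected graph (inf if acyclic).

    adj maps each vertex to the set of its neighbors.
    """
    best = math.inf
    for root in adj:
        dist = {root: 0}
        parent = {root: None}
        queue = deque([root])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    parent[y] = x
                    queue.append(y)
                elif parent[x] != y:
                    # non-tree edge: closes a walk through the root
                    best = min(best, dist[x] + dist[y] + 1)
    return best


def lemma28_bound(num_vertices, s):
    """The bound 2 * s * ceil(log2(|V|)) from Lemma 28 (base 2 is assumed)."""
    return 2 * s * math.ceil(math.log2(num_vertices))
```

For the complete graph on four vertices, we have \(|E|=6=\frac{3}{2}\cdot |V|\), so Lemma 28 applies with \(s=2\) and promises a cycle of length at most 8; the shortest cycle is a triangle.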

Lemma 29

Let \(G=(V,E)\) be an undirected graph with \(|V|\ge 2\) equipped with positive vertex weights \(w:V(G)\rightarrow {\mathbb {Q}}_{>0}\).

Let further \(\alpha \ge 1\) such that for every edge \(\{u,v\}\in E(G)\), we have

and let \(s\in {\mathbb {N}}\) such that

where \(\delta (v)\) denotes the set of incident edges of v in G. Then G contains a cycle of length at most \(2\cdot s\cdot \lceil \log (|V|)\rceil \).

Proof

If G contains a loop, we are done since \(\log (|V|)\ge \log (2)=1\), so assume that this is not the case. By Lemma 28, it suffices to show that G contains a non-empty subgraph \(G'\) with \(|E(G')|\ge \frac{s+1}{s}\cdot |V(G')|\). Note that each such subgraph satisfies \(|V(G')|\ge 2\) by our assumption that G does not contain any loop. Let \(w_{max}:=\max _{v\in V} w(v)\). For \(x\in (0,\infty )\), let \(V_{\ge x}:=\{v\in V: w(v)\ge x\}\) be the set of vertices of weight at least x, and let \(G_{\ge x}:=(V_{\ge x}, E_{\ge x}):=G[V_{\ge x}]\) denote the subgraph of G induced by \(V_{\ge x}\). Observe that by definition of \(w_{max}\), \(V_{\ge x}\ne \emptyset \) for \(x\in (0,w_{max})\). We claim that there is \(x\in (0,w_{max})\) such that \(|E_{\ge x}|\ge \frac{s+1}{s}\cdot |V_{\ge x}|\). Assume towards a contradiction that this were not the case, i.e.

By our assumption on \(\alpha \), we know that for every \(x\in (0,\infty )\) and every \(v\in V_{\ge \alpha \cdot x}\), the neighborhood of v in G is contained in \(V_{\ge x}\). Hence, we obtain

This yields

i.e. \(2\cdot \frac{s+1}{s} \cdot \sum _{v\in V} w(v) < 2\cdot \frac{s+1}{s}\cdot \sum _{v\in V} w(v)\), a contradiction. Note that when applying (12) in the fourth line, we indeed inherit the strict inequality because both \(|E_{\ge x}|\) and \(|V_{\ge x}|\) are constant on the interval \((0,\min _{v\in V} w(v))\) of positive length. \(\square \)
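The proof above shows that some threshold graph \(G_{\ge x}\) is dense enough for Lemma 28. A minimal Python sketch of the corresponding search is given below. Since \(V_{\ge x}\) only changes when x passes a vertex weight, the sketch tries the distinct vertex weights as thresholds; this discretization, the function name and the input format are assumptions of the illustration.

```python
def dense_threshold_subgraph(weights, edges, s):
    """Search for a threshold x with |E_{>=x}| >= (s+1)/s * |V_{>=x}|.

    weights maps vertices to positive weights, edges is a list of pairs.
    Returns (x, V_{>=x}) for the smallest suitable threshold, or None.
    """
    for x in sorted(set(weights.values())):
        kept = {v for v, wv in weights.items() if wv >= x}
        num_edges = sum(1 for a, b in edges if a in kept and b in kept)
        # integer form of |E| >= (s+1)/s * |V| to avoid rounding issues
        if s * num_edges >= (s + 1) * len(kept):
            return x, kept
    return None
```

If the hypotheses of Lemma 29 hold and G has no loop, the proof guarantees that such a threshold exists; on other inputs, the function may return `None`.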

Proof of Lemma 27

Let \(v\in B\). We show that there is at most one vertex \(u\in N(v,A^*)\) such that u is single and \(v=n(u)\). Observe that for each such vertex u, we have

so \(\textrm{contr}(u,v)>\frac{w(v)}{2}\). But by Proposition 10, the total contribution to \(v\in B\subseteq A\) is bounded by w(v) since there is no claw-shaped improvement when LogImp terminates. Hence, there can be at most one such vertex u since the contribution is non-negative.

Moreover, recall that \(v\in \textrm{help}(u)\) if and only if u is single and \(v=n(u)\), or u is double and \(v\in \{n(u),n_2(u)\}\). As a consequence, we obtain

Now, we want to apply Lemma 29. We consider the following graph \(G^*\):

- \(V(G^*):=A\)

- \(E(G^*):=\{\{n(u),n_2(u)\}:u\text { is double }\wedge \{n(u),n_2(u)\}\cap B\ne \emptyset \}\)

The vertex weights are given by w. By definition of being double, we can choose \(\alpha :=\left( 1-\sqrt{\epsilon _k'}\right) ^{-1}\). Then

In particular, as \(G^*\) does not contain any loops by definition, we must have \(|V(G^*)|\ge 2\). Hence, we can apply Lemma 29 to obtain a cycle C of size at most \(8\cdot \lceil \log (|A|)\rceil \le 8\cdot \lceil \log (|V(G)|)\rceil \). We need to see how to get a circular local improvement out of C. To this end, denote the set of vertices from \(A^*\) corresponding to the edges of C by \(U_e\), and define

Then \(X\subseteq A^*\) is independent. Let \(e=\{n(u),n_2(u)\}\in E(C)\) and let \(\{v,z\}:=e\) such that \(v\in B\) (and we do not make any assumptions on whether or not \(z\in B\)). This is possible since every edge of \(G^*\) and hence of C must intersect B by definition. We claim that

We have \(\sum _{x\in T_v} w^2(x)-w^2(N(x,A){\setminus }\{v\})\ge {\tilde{\epsilon }}_k\cdot w^2(v)\) by definition of B and \(T_v\) and the contribution. Moreover, we know that each vertex \(x\in T_v\cap U_e\) satisfies \(n(x)=v\) since it is in \(T_v\), and is double since it is in \(U_e\). Hence,

Next, as the edge in E(C) that \(x\in T_v\cap U_e\) induces is \(\{n(x),n_2(x)\}=\{v,n_2(x)\}\), this edge must be incident to v. But in the cycle C, v has exactly two incident edges. Therefore, \(|T_v\cap U_e|\le 2\) and

We have \(e=\{n(u),n_2(u)\}=\{v,z\}\), which implies

As u is double, we either get \(w(n_2(u))\le w(n(u))\le w(u)\) and, hence,

or

and

leading to

Hence, the weaker inequality

holds in either case. Additionally, as

and \(w(N(u,A))\le (2+\epsilon _k')\cdot w(u)\) by definition of double, we get

This gives

where the last strict inequality follows from (23) and \(w(v)>0\) since weights are positive. This proves our claim (14).

Moreover, we have \(|U_e|=|E(C)|\le 8\cdot \lceil \log (|V(G)|)\rceil \). Hence, choosing \(U:=U_e\) and \(Y_v:=T_v{\setminus } U_e\) for \(v\in V(C)\cap B\) and \(Y_v:=\emptyset \) for \(v\in V(C)\setminus B\), we see that our local improvement is circular by (14) and (15), provided it is a subset of \(V{\setminus } A\). To this end, note that \(V(C)\subseteq A{\setminus } A^*\) since vertices in \(A\cap A^*\) are only adjacent to themselves in \(A^*\), and, hence, isolated in \(G^*\). As a consequence, as all vertices in \(X=U_e\cup \bigcup _{v\in V(C)\cap B} T_v\) are adjacent to some \(v\in V(C)\subseteq A{\setminus } A^*\), they cannot be contained in \(A^*\cap A\) either, because in this case, they could only be adjacent to themselves in A and in particular not to a vertex in \(A{\setminus } A^*\). Hence, \(X\subseteq A^*{\setminus } A\subseteq V{\setminus } A\) defines a circular improvement. \(\square \)

4.4 Conditions satisfied by our choices of \({\tilde{\epsilon }}_k\), \(\epsilon _k'\) and \(\delta _k\)

The following constraints can be verified easily by plugging in our choices of \({\tilde{\epsilon }}_k\), \(\epsilon _k'\) and \(\delta _k\). Due to the page limit, we omit the calculations.

5 A polynomial running time

In this section, we complete the proof of Theorem 1 by showing how to obtain a polynomial running time for (conflict graphs of) instances of the weighted k-Set Packing problem. In doing so, Lemma 30, which we prove in Sect. 5.1, guarantees a polynomial number of iterations, while Lemma 31 tells us that we can implement each iteration of LogImp in polynomial time. We prove it in Sect. 5.2.

Lemma 30

Let \(k\ge 3\) and denote the approximation guarantee that LogImp achieves for the MWIS in \(k+1\)-claw free graphs by \(\rho \). Given an instance (G, w) of the MWIS in \(k+1\)-claw free graphs and \(N\in {\mathbb {N}}_{>0}\), we can, in polynomial time, compute an induced subgraph \(G'\) of G and a weight function \(w'\) with the following two properties:

- When applied to \((G',w')\), LogImp terminates after a number of iterations that is polynomial in |V(G)| and N.

- The output of LogImp on \((G',w')\) yields an \(\frac{N}{N-1}\cdot \rho \)-approximation for the MWIS on (G, w).

Lemma 31

There is an algorithm, which, given a family \({\mathcal {S}}\) consisting of sets each of cardinality at most k, a positive weight function \(w:{\mathcal {S}}\rightarrow {\mathbb {Q}}_{>0}\) and \(A\subseteq {\mathcal {S}}\) such that the sets in A are pairwise disjoint, in polynomial time either returns a claw-shaped or circular improvement X of A in \(G_{\mathcal {S}}\), or decides that none exists.

Proof of Theorem 1

For \(k=3\), we can, for example, run the polynomial time \(\frac{k+1}{2}-\frac{1}{63,700,993}\)-approximation algorithm for the MWIS in \(k+1\)-claw free graphs given in [17], yielding \(\epsilon _3=1-\frac{2}{63,700,993}\). Now, let \(k\ge 4\). Theorem 18 tells us that LogImp achieves an approximation guarantee of \(\frac{k+\delta _k}{2}\) for the MWIS in \(k+1\)-claw free graphs, where \(\delta _k\in (0,1)\) and \(\lim _{k\rightarrow \infty }\delta _k=0\). Define

This is a constant that only depends on k, but not on the input instance. We can obtain an \(\frac{N_k}{N_k-1}\cdot \frac{k+\delta _k}{2}\)-approximation for the weighted k-Set Packing problem by

- computing the conflict graph \(G_{{\mathcal {S}}}\) of a given instance \(({\mathcal {S}},w)\),

- applying Lemma 30 to obtain an induced subgraph \(G'\) of \(G_{{\mathcal {S}}}\) (which equals the conflict graph of the corresponding sub-instance of \({\mathcal {S}}\)) and a weight function \(w'\) and

- finally running LogImp on \((G',w')\).

By Lemmas 30 and 31, we can perform each of these steps in polynomial time. Concerning the approximation guarantee, we have

where \(\epsilon _k:=\delta _k+\frac{1}{N_k-1}\cdot (k+\delta _k)\). Then \(\delta _k\in (0,1)\) and our choice of \(N_k\) imply

Moreover,

and \(\lim _{k\rightarrow \infty }\delta _k=0\) implies \(\lim _{k\rightarrow \infty }\epsilon _k=0\). This concludes the proof of Theorem 1. \(\square \)

5.1 A polynomial number of iterations

Proof of Lemma 30

Let \(G=(V,E)\). We first apply the greedy algorithm, which considers the vertices of G by decreasing weight and picks every vertex that is not adjacent to an already chosen one. Denote the solution it returns by \(A'\). It is known that \(A'\) is a k-approximate solution to the MWIS in (G, w) (see for example [5]). Now, we construct a weight function \(w_s\) from w by re-scaling it such that \(w_s(A')=N\cdot |V|\) holds. Then \(A'\) also constitutes a k-approximate solution to the MWIS in \((G,w_s)\). Next, we define \(w'\) by \(w'(v):=\lfloor w_s(v)\rfloor \) and obtain \(G'\) by deleting vertices with \(w'(v)=0\), which does not change the weight of an optimum solution (w.r.t. \(w'\)). Consider a run of LogImp on \((G',w')\) and denote the solution that LogImp maintains by A. As \(w'\) is positive and integral, we know that \({w'}^2(A)\) must increase by at least 1 in every iteration except for the last one. On the other hand, at each point, we must have

which bounds the total number of iterations by \(k^2\cdot N^2\cdot |V|^2+1\). Finally, if A now denotes the solution LogImp returns and \(A^*\) is an independent set in G of maximum weight with respect to w (and \(w_s\)), we know that

so \(w_s(A^*)\le \frac{N}{N-1}\cdot \rho \cdot w_s(A)\) and thus, also \(w(A^*)\le \frac{N}{N-1}\cdot \rho \cdot w(A)\). \(\square \)
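The preprocessing used in this proof (greedy solution, re-scaling, rounding down, deleting vertices of weight zero) can be sketched in Python as follows. This is a minimal illustration under assumptions: the function names and the adjacency-dictionary input are hypothetical, exact rational arithmetic is used for the re-scaling, and ties in the greedy order are broken arbitrarily.

```python
from fractions import Fraction
from math import floor


def greedy_independent_set(weights, adj):
    """Scan vertices by decreasing weight, keep those with no chosen neighbor."""
    chosen = []
    for v in sorted(weights, key=weights.get, reverse=True):
        if all(u not in adj[v] for u in chosen):
            chosen.append(v)
    return chosen


def rescale_and_round(weights, adj, N):
    """Return the rounded weights w' restricted to vertices with w'(v) > 0.

    The weights are scaled such that the greedy solution A' has weight
    N * |V| and then rounded down; G' is the subgraph induced by the keys
    of the returned dictionary.
    """
    greedy = greedy_independent_set(weights, adj)
    greedy_weight = sum(Fraction(weights[v]) for v in greedy)
    scale = Fraction(N * len(weights)) / greedy_weight
    rounded = {v: floor(Fraction(weights[v]) * scale) for v in weights}
    return {v: wv for v, wv in rounded.items() if wv > 0}
```

Rounding down costs less than 1 per vertex, which is where the factor \(\frac{N}{N-1}\) in the guarantee comes from.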

5.2 A polynomial running time per iteration

This section is dedicated to the proof of Lemma 31, which consists of two parts. First, Proposition 32 tells us that we can search for a claw-shaped improvement in polynomial time (for general \(k+1\)-claw free graphs). Then, Lemma 33 makes sure that for conflict graphs of instances of the weighted k-Set Packing problem, we can also find a circular improvement, or decide that none exists, in polynomial time.

Proposition 32

Given a \(k+1\)-claw free graph \(G=(V,E)\), a positive weight function \(w:V\rightarrow {\mathbb {Q}}_{>0}\) and an independent set \(A\subseteq V\), we can, in polynomial time (considering k a constant), either return a claw-shaped improvement, or decide that none exists.

Proof

We can check for the existence of a claw-shaped improvement in polynomial time by looping over all \({\mathcal {O}}((|V|+1)^k)={\mathcal {O}}(|V|^k)\) subsets X of V of size at most k. For each of them, we can check in polynomial time whether it is independent and whether it is claw-shaped (either \(|X|=1\) or all vertices in X have a common neighbor in A). Finally, we can check whether \(w^2(X)>w^2(N(X,A))\) in polynomial time. \(\square \)
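The enumeration described in this proof can be sketched in Python as follows. The sketch is only an illustration: the function name and input format are hypothetical, and it restricts the candidates to \(V\setminus A\), which is an assumption made here to keep the neighborhood computation simple.

```python
from itertools import combinations


def find_claw_shaped_improvement(weights, adj, A, k):
    """Brute-force search over all candidate sets X of size at most k.

    adj maps each vertex to the set of its neighbors, A is the current
    independent set. Returns a set X that is independent, claw-shaped
    (|X| = 1 or a common neighbor in A) and satisfies
    w^2(X) > w^2(N(X, A)), or None if no such set exists.
    """
    A = set(A)
    candidates = [v for v in weights if v not in A]   # assumption: X avoids A
    for size in range(1, k + 1):
        for X in combinations(candidates, size):
            if any(b in adj[a] for a, b in combinations(X, 2)):
                continue                               # X is not independent
            nbr_sets = [adj[x] & A for x in X]
            if size > 1 and not set.intersection(*nbr_sets):
                continue                               # no common neighbor in A
            hit = set().union(*nbr_sets)               # N(X, A)
            if sum(weights[x] ** 2 for x in X) > sum(weights[v] ** 2 for v in hit):
                return set(X)
    return None
```

The number of candidate sets is \({\mathcal {O}}(|V|^k)\), matching the bound in the proof, so the running time is polynomial for constant k.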

It is not hard to see that we can search for a circular improvement in quasi-polynomial time. By Proposition 16, there are

many possible choices for X and we can check in polynomial time whether a given one constitutes a circular improvement. More precisely, we can first check whether X is independent, and then try all of the at most

possible choices for \(U\subseteq X\) with \(|U|\le 8\cdot \lceil \log (|V|)\rceil \). For each choice of U, we can check in polynomial time whether each vertex in U has at least two neighbors in A, and if so, compute V(C) (cf. Def. 15). Then, the partition into the sets \(Y_v\) is uniquely determined by the map n, although it might happen that for some vertex \(x\in X{\setminus } U\), n(x) is not contained in V(C), meaning that our choice of X and U does not meet the requirements of Definition 15. Finally, we can check the condition (iii) from Definition 15 in polynomial time.

For the general case of the MWIS in \(k+1\)-claw free graphs, it is not clear whether and how one can, in polynomial time, either find a circular improvement X, or decide that none exists. However, for the weighted k-Set Packing problem, which is the main application we have in mind, we will show in the remainder of this section how to perform this task in polynomial time. For this purpose, we employ the color coding technique in a similar way as in [10] (but in a much simpler form).

Lemma 33

There is an algorithm, which, given a family \({\mathcal {S}}\) consisting of sets each of cardinality at most k, a positive weight function \(w:{\mathcal {S}}\rightarrow {\mathbb {Q}}_{>0}\) and \(A\subseteq {\mathcal {S}}\) such that the sets in A are pairwise disjoint and there is no claw-shaped improvement of A in \(G_{{\mathcal {S}}}\), in polynomial time (considering k a constant) either returns a circular improvement X of A in \(G_{\mathcal {S}}\), or decides that none exists.

The remainder of this section is dedicated to the proof of Lemma 33. To simplify notation, we identify a set \(S\in {\mathcal {S}}\) and its corresponding vertex in the \(k+1\)-claw free conflict graph \(G_{{\mathcal {S}}}=:G=:(V,E)\).
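For concreteness, the conflict graph of a set packing instance (one vertex per set, an edge between two sets whenever they intersect) can be computed as in the following Python sketch. The function name and the dictionary-based input format are assumptions made for this illustration.

```python
from itertools import combinations


def conflict_graph(sets):
    """Adjacency dictionary of the conflict graph of a set family.

    sets maps a name to a set of elements; two names are adjacent if and
    only if the corresponding sets share an element.
    """
    adj = {name: set() for name in sets}
    for a, b in combinations(sets, 2):
        if sets[a] & sets[b]:
            adj[a].add(b)
            adj[b].add(a)
    return adj
```

Independent sets in this graph correspond to sub-families of pairwise disjoint sets.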

In order to search for a circular improvement, we first compute two maps \(n:V\setminus A\rightarrow A\) mapping u to an element of N(u, A) of maximum weight, and \(n_2:\{u\in V{\setminus } A: |N(u,A)|\ge 2\}\rightarrow A\) mapping u to an element of \(N(u,A)\setminus \{n(u)\}\) of maximum weight. These maps are guaranteed to exist since the assumption that there is no claw-shaped improvement implies that A constitutes a maximal independent set in \(G_{{\mathcal {S}}}\). n and \(n_2\) can be easily computed in polynomial time. We define an auxiliary multi-graph H as follows:
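A minimal Python sketch of how the maps n and \(n_2\) might be computed is given below. The function name and input format are hypothetical, and ties between neighbors of equal weight are broken arbitrarily.

```python
def heaviest_neighbor_maps(weights, adj, A):
    """Compute n(u) and n_2(u) for all u outside A.

    n[u] is a neighbor of u in A of maximum weight; n2[u] is a neighbor
    of maximum weight among the remaining ones and is only defined if u
    has at least two neighbors in A.
    """
    A = set(A)
    n, n2 = {}, {}
    for u in weights:
        if u in A:
            continue
        nbrs = sorted(adj[u] & A, key=weights.get, reverse=True)
        if nbrs:
            n[u] = nbrs[0]
        if len(nbrs) >= 2:
            n2[u] = nbrs[1]
    return n, n2
```

If A is a maximal independent set, every vertex outside A has a neighbor in A, so n is defined on all of \(V\setminus A\).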

The vertices of H are pairs (v, Y), where \(v\in A\) and \(Y\subseteq \{u\in V\setminus A: n(u)=v\}\) is an independent set of cardinality at most k. Then for each \(v\in A\), there are at most \(\sum _{i=0}^{k}|V|^i =\frac{|V|^{k+1}-1}{|V|-1}\in {\mathcal {O}}(|V|^k)\) many possible choices for Y, so we have \(|V(H)| \in {\mathcal {O}}(|V|^{k+1})\).

As far as the set of edges is concerned, for each \(u\in \{x\in V{\setminus } A: |N(x,A)|\ge 2\}\) and each pair of vertices \((n(u), Y_1),(n_2(u), Y_2)\in V(H)\) such that

and \(\{u\}\), \(Y_1\) and \(Y_2\) are pairwise disjoint and their union is independent, we add a copy of the edge \(\{(n(u),Y_1), (n_2(u), Y_2)\}\) to the multi-set E(H). We say that this edge is induced by u. As we have at most |V| many possible choices for u and \({\mathcal {O}}(|V|^{k})\) possibilities to choose each of \(Y_1\) and \(Y_2\), we get \(|E(H)|\in {\mathcal {O}}( |V|^{2k+1})\).

For convenience, let \(\mu :E(H)\rightarrow V\setminus A\) such that \(e\in E(H)\) is induced by the vertex \(\mu (e)\).

We claim that there is a correspondence between circular improvements and cycles \({\bar{C}}\) in H of length at most \(8\cdot \lceil \log (|V|)\rceil \) such that for every \(v\in A\), there is at most one set Y with \((v,Y)\in V({\bar{C}})\) and such that \(\{\mu (e),e\in E({\bar{C}})\}\cup \bigcup _{(v,Y)\in V({\bar{C}})}Y\) defines an independent set a.k.a. a disjoint sub-family of \({\mathcal {S}}\), and the union is disjoint. More precisely, we prove the following statement:

Proposition 34

An independent set \(X\subseteq V\setminus A\) constitutes a circular improvement if and only if there is a cycle \({\bar{C}}\) in H of length at most \(8\cdot \lceil \log (|V|)\rceil \) such that for every \(v\in A\), there is at most one set Y with \((v,Y)\in V({\bar{C}})\) and such that

and the union is disjoint.

Proof

First, assume that X is a circular improvement. Then X constitutes an independent set. Let U, C and \(Y_v,v\in V(C)\) be as in Definition 15. By (ii) and (iii) and since X is independent, we have \({\bar{e}}_u:=\{(n(u), Y_{n(u)}),(n_2(u),Y_{n_2(u)})\}\in E(H)\) for every \(u\in U\), and \(\mu ({\bar{e}}_u)=u\). By (i), \({\bar{C}}:=(\{(v,Y_v),v\in V(C)\},\{{\bar{e}}_u,u\in U\})\) yields a cycle in H of length at most \(8\cdot \lceil \log (|V|)\rceil \). By construction, for every \(v\in A\), there is at most one set Y with \((v,Y)\in V({\bar{C}})\) and we have

By definition of the sets \(Y_v,v\in V(C)\), this union is disjoint.

Now, let \(X\subseteq V\setminus A\) be independent and let \({\bar{C}}\) be a cycle in H as described in the proposition. Define \(U:=\{\mu (e):e\in E({\bar{C}})\}\). Then \(|U|\le 8\cdot \lceil \log (|V|)\rceil \). By definition of H,

is a closed edge sequence because it arises from \({\bar{C}}\) by projecting every vertex \((v,Y)\in V({\bar{C}})\) to its first component. As for every \(v\in A\), there is at most one set Y with \((v,Y)\in V({\bar{C}})\), C is a cycle, so condition (i) from Definition 15 holds. Moreover, as for every \((v,Y)\in V({\bar{C}})\), we have \(Y\subseteq \{u\in V{\setminus } A: n(u)=v\}\), we can infer that for every \((v,Y)\in V({\bar{C}})\), we have \(Y\cap X{\setminus } U=\{z\in X{\setminus } U:n(z)=v\}=Y_v\). In particular, \(X=U\cup \bigcup _{v\in V(C)} Y=U\cup \bigcup _{v\in V(C)} Y_v\), which yields condition (ii). As the first union is disjoint, we can further conclude that \(Y=Y_v\) for all \(v\in V(C)\). Hence, the definition of E(H) implies that condition (iii) from Definition 15 is satisfied. \(\square \)

It remains to see how to, in polynomial time, find a cycle in H with the properties described in Prop. 34, or decide that none exists.

First, for each pair of parallel edges in E(H), we can check in polynomial time whether or not it yields such a cycle, so we can restrict ourselves to cycles of length at least 3 (and at most \(8\cdot \lceil \log (|V|)\rceil \)) in the following.

To find these, we want to apply the color coding technique. For this purpose, we introduce the following terminology:

Definition 35

(t-perfect family of hash functions, [1]) For \(t,m\in {\mathbb {N}}\) with \(t\le m\), a family \({\mathcal {F}}\subseteq {}^{\{1,\dots ,m\}}\{1,\dots ,t\}\) of functions mapping \(\{1,\dots ,m\}\) to \(\{1,\dots ,t\}\) is called a t-perfect family of hash functions if for all \(I\subseteq \{1,\dots ,m\}\) of size at most t, there is \(f\in {\mathcal {F}}\) with \(f\upharpoonright I\) injective.
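The defining property can be checked by brute force on small examples, as in the following Python sketch. It only illustrates the definition and is not the explicit construction from [1] or [20]; the function name and the representation of functions as dictionaries are assumptions.

```python
from itertools import combinations


def is_t_perfect(family, m, t):
    """Check the t-perfect property for functions {1..m} -> {1..t}.

    family is a list of dictionaries. For every subset I of {1..m} of
    size at most t, some function in the family must be injective on I.
    """
    domain = range(1, m + 1)
    for size in range(1, t + 1):
        for I in combinations(domain, size):
            if not any(len({f[i] for i in I}) == len(I) for f in family):
                return False
    return True
```

The check takes exponential time in t and is meant for intuition only.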

Theorem 36

(Stated in [1] referring to [20]) For \(t,m\in {\mathbb {N}}\) with \(t\le m\), a t-perfect family \({\mathcal {F}}\) of hash functions of cardinality \(2^{{\mathcal {O}}(t)}\cdot (\log (m))^2\), where each function is encoded using \({\mathcal {O}}(t)+2\log \log m\) many bits, can be explicitly constructed such that the query time is constant.

In order to find a cycle \({\bar{C}}\) in H of logarithmically bounded size such that each vertex \(v\in A\) occurs at most once as the first entry of a vertex \((z,Y)\in V({\bar{C}})\) and \(X:=\{\mu (e),e\in E({\bar{C}})\}\cup \bigcup _{(v,Y)\in V({\bar{C}})}Y\) defines an independent set in G (i.e. a disjoint family of k-sets) and the union is disjoint, the idea is to color both the vertices in A and the elements of the k-sets corresponding to the vertices in X, and to replace the distinctness/disjointness constraints by the stronger property that for each of the vertices \((v,Y)\in V({\bar{C}})\), v receives a different color, and moreover, the colors of the elements of the k-sets in X are pairwise distinct. We call a cycle with this property colorful (see Definition 37). If we only use a logarithmically bounded number of colors, then we can use dynamic programming to check for the existence of a colorful cycle. Moreover, we can show that if we pick our colorings from appropriately chosen families of perfect hash functions (of polynomial size), we can guarantee that for each cycle \({\bar{C}}\) in H meeting the requirements of Prop. 34, there exist colorings from our families for which \({\bar{C}}\) is colorful. This means that by looping over all colorings from our families and checking for a colorful cycle, we will find a circular improvement, if one exists.

Let \({\mathcal {F}}\) be a \(t_1:=8\cdot \lceil \log (|V|)\rceil \)-perfect family of hash functions with domain \(A\subseteq V\). There exists such a family of size

which is polynomial.

In addition, let \({\mathcal {G}}\) be a \(t_2:=8\cdot (k+1)\cdot k\cdot \lceil \log (|V|)\rceil \)-perfect family of functions with domain \(\bigcup {\mathcal {S}}\), i.e. the underlying universe of the k-Set Packing problem (or, more precisely, its restriction to the set of elements that appear in at least one set). Clearly, \(|\bigcup {\mathcal {S}}|\le k\cdot |{\mathcal {S}}|=k\cdot |V|\), so we obtain such a family \({\mathcal {G}}\) of size

which is polynomial. For each pair of functions \((f,g)\in {\mathcal {F}}\times {\mathcal {G}}\), we do the following:

For every \(v=(z,Y)\in V(H)\), we set

meaning that we color v with the color assigned to the vertex z by f. Additionally, we define

i.e. we color v with all of the colors occurring among the elements of the k-sets represented by the vertices in Y.

Finally, we assign to an edge \(e=\{(v_1,Y_1),(v_2,Y_2)\}\in E(H)\) the set of colors

where we interpret \(\mu (e)\) as the corresponding k-set.

Definition 37

Following [10], we call a path or cycle P in H colorful if

- The colors \(\textrm{col}^f(v),v\in V(P)\) are pairwise distinct.

- The color sets \(\textrm{col}^{g}(e),e\in E(P)\) and \(\textrm{col}^{g}(v),v\in V(P)\) are pairwise disjoint.
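The two conditions of Definition 37 can be checked directly, as in the following Python sketch. It follows the verbal description of the colorings given above (a vertex (z, Y) receives the color f(z) and the colors g assigns to the elements of the k-sets in Y, and an edge e receives the colors of the elements of \(\mu (e)\)). The function name and the input format are assumptions made for this illustration.

```python
def is_colorful(cycle_vertices, cycle_edge_sets, f, g):
    """Check the two conditions of Definition 37 for a path or cycle in H.

    cycle_vertices: list of pairs (z, Y), Y an iterable of k-sets.
    cycle_edge_sets: list of the k-sets mu(e) for the edges e.
    f, g: colorings given as dictionaries.
    """
    # (1) the colors f(z) of the first components are pairwise distinct
    vertex_colors = [f[z] for z, _ in cycle_vertices]
    if len(set(vertex_colors)) != len(vertex_colors):
        return False
    # (2) the color sets of vertices and edges are pairwise disjoint
    color_sets = [{g[x] for S in Y for x in S} for _, Y in cycle_vertices]
    color_sets += [{g[x] for x in S} for S in cycle_edge_sets]
    seen = set()
    for colors in color_sets:
        if seen & colors:
            return False
        seen |= colors
    return True
```

The sketch only verifies a given cycle; finding a colorful cycle is the task of the dynamic program mentioned above.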

Lemma 38

Let \({\bar{C}}\) be a cycle of length at least 3 and at most \(8\cdot \lceil \log (|V|)\rceil \) in H. Then the following are equivalent:

- There exist \(f\in {\mathcal {F}}\) and \(g\in {\mathcal {G}}\) for which \({\bar{C}}\) is colorful.

- \({\bar{C}}\) meets the requirements of Prop. 34.

Proof

For a colorful cycle \({\bar{C}}\), the sets of colors assigned (by g) to the (union of) k-sets in the sets \(Y,(v,Y)\in V({\bar{C}})\) and the sets of colors assigned (by g) to the k-sets \(\mu (e),e\in E({\bar{C}})\) are pairwise disjoint. In particular, the (unions of) k-sets in the list \(\bigcup Y,(v,Y)\in V({\bar{C}})\), \(\mu (e),e\in E({\bar{C}})\) are pairwise disjoint. Moreover, when constructing H, we made sure that for each \((v,Y)\in V(H)\), Y is independent in \(G_{{\mathcal {S}}}\), meaning that the contained k-sets are pairwise disjoint. Hence, the k-sets in \(X:=\bigcup _{(v,Y)\in V({\bar{C}})} Y\cup \{\mu (e), e\in E({\bar{C}})\}\) are pairwise disjoint and the union is disjoint. In particular, X constitutes an independent set in \(G_{{\mathcal {S}}}\). Moreover, the colors \(f(v),(v,Y)\in V({\bar{C}})\) are pairwise distinct, meaning that for each \(v\in A\), there is at most one Y with \((v,Y)\in V({\bar{C}})\). Hence, \({\bar{C}}\) meets the requirements of Prop. 34.

On the other hand, if \({\bar{C}}\) satisfies the conditions from Prop. 34, then we have \(|V({\bar{C}})|\le 8\cdot \lceil \log (|V|)\rceil =t_1\) and for each \(v\in A\), there is at most one Y such that \((v,Y)\in V({\bar{C}})\). Hence, there is \(f\in {\mathcal {F}}\) that assigns a different color to each one of the vertices \(v,(v,Y)\in V({\bar{C}})\). Moreover, the k-sets contained in the sets \(Y,(v,Y)\in V({\bar{C}})\) and the k-sets \(\mu (e),e\in E({\bar{C}})\) are pairwise distinct and pairwise disjoint. In addition, as every set Y with \((v,Y)\in V(H)\) contains at most k k-sets, each with at most k elements, the total number of elements from \(\bigcup {\mathcal {S}}\) contained in the k-sets in the sets \(Y,(v,Y)\in V({\bar{C}})\) or the k-sets \(\mu (e),e\in E({\bar{C}})\) is bounded by

$$\begin{aligned}k\cdot k\cdot |V({\bar{C}})|+k\cdot |E({\bar{C}})|\le (k+1)\cdot k\cdot 8\cdot \lceil \log (|V|)\rceil =t_2.\end{aligned}$$

Hence, there exists \(g\in {\mathcal {G}}\) assigning distinct colors to all of these elements. Thus, \({\bar{C}}\) is colorful for f and g. \(\square \)

As the sizes of \({\mathcal {F}}\) and \({\mathcal {G}}\) are polynomially bounded, Lemma 39 concludes the proof of Lemma 33.

Lemma 39

Let \(f\in {\mathcal {F}}\) and \(g\in {\mathcal {G}}\). We can, in polynomial time, either find a colorful cycle \({\bar{C}}\) of length at least 3 and at most \(8\cdot \lceil \log (|V|)\rceil \) in H, or decide that none exists.

Proof

We observe that there is a colorful cycle \({\bar{C}}\) meeting the above size bounds if and only if there exist an edge \(e_0=\{s,t\}\in E(H)\) and a colorful s-t-path P of length at least 2 and at most \(8\cdot \lceil \log (|V|)\rceil -1\) such that \(\textrm{col}^g(e_0)\) and \(\bigcup _{e\in E(P)}\textrm{col}^g(e)\cup \bigcup _{v\in V(P)}\textrm{col}^g(v)\) are disjoint. For \(s, t\in V(H)\), \(C^f\subseteq \{1,\dots ,t_1\}\), \(C^g\subseteq \{1,\dots ,t_2\}\) and \(i\in \{0,\dots ,8\cdot \lceil \log |V|\rceil -1\}\), we define the Boolean value \(\textrm{Path}(s,t,C^f,C^g,i)\) to be true if and only if there exists a colorful s-t-path P of length i with
$$\begin{aligned}\{\textrm{col}^f(v):v\in V(P)\}=C^f\quad \text {and}\quad \bigcup _{e\in E(P)}\textrm{col}^g(e)\cup \bigcup _{v\in V(P)}\textrm{col}^g(v)=C^g.\end{aligned}$$

We apply dynamic programming to compute these values in order of non-decreasing i, and we use backlinks to be able to retrace a corresponding path for each value that is set to true. The total number of values we have to compute is bounded by

$$\begin{aligned}|V(H)|^2\cdot 2^{t_1}\cdot 2^{t_2}\cdot 8\cdot \lceil \log (|V|)\rceil ,\end{aligned}$$

which is polynomially bounded. Hence, it suffices to show how to, in polynomial time, determine \(\textrm{Path}(s,t,C^f,C^g,i)\), provided that all of the values \(\textrm{Path}(s',t',{C^f}',{C^g}',i')\) with \(i'<i\) are already known to us. We have \(\textrm{Path}(s,t,C^f,C^g,0)=\textrm{true}\) if and only if \(s=t\), \(C^f=\{\textrm{col}^f(s)\}\) and \(C^g=\textrm{col}^g(s)\). For \(i\ge 1\), we observe that \(\textrm{Path}(s,t,C^f,C^g,i)=\textrm{true}\) if and only if \(\textrm{col}^f(s)\in C^f\) and there exists an edge \(e=\{s,v\}\in E(H)\) such that \(\textrm{col}^g(s)\cap \textrm{col}^g(e)=\emptyset \), \(\textrm{col}^g(s)\cup \textrm{col}^g(e)\subseteq C^g\) and \(\textrm{Path}(v,t,C^f\setminus \{\textrm{col}^f(s)\},C^g\setminus (\textrm{col}^g(s)\cup \textrm{col}^g(e)),i-1)=\textrm{true}\). We can check this in polynomial time. \(\square \)
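The recurrence from the proof of Lemma 39 can be sketched as a memoized recursion. This is only an illustration under assumed data structures, not the paper's implementation: `adj[s]` is a list of pairs `(v, edge_colors)` with `edge_colors` the frozenset \(\textrm{col}^g(e)\) of the edge \(\{s,v\}\), `colf[v]` is \(\textrm{col}^f(v)\), and `colg[v]` is the frozenset \(\textrm{col}^g(v)\). Memoization replaces the explicit table filled in order of non-decreasing i, and backlinks are omitted.

```python
from functools import lru_cache


def make_path_oracle(adj, colf, colg):
    """Return a function path(s, t, Cf, Cg, i) following the recurrence of Lemma 39.

    Cf and Cg must be frozensets so that the arguments are hashable.
    """

    @lru_cache(maxsize=None)
    def path(s, t, Cf, Cg, i):
        if i == 0:
            # Base case: the trivial path consisting of the single vertex s = t.
            return s == t and Cf == frozenset({colf[s]}) and Cg == colg[s]
        if colf[s] not in Cf:
            return False
        for v, edge_colors in adj[s]:
            used = colg[s] | edge_colors
            # col^g(s) and col^g(e) must be disjoint and contained in Cg.
            if colg[s] & edge_colors or not used <= Cg:
                continue
            if path(v, t, Cf - {colf[s]}, Cg - used, i - 1):
                return True
        return False

    return path
```

Because the color of s is removed from `Cf` before recursing, no later vertex on the path can reuse it, which is what rules out revisiting vertices with the same f-color.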

6 The lower bound

In this section, we show that our result is asymptotically best possible in the sense of Theorem 2.

Theorem 2

Let \(k\ge 3\), \(\alpha \in {\mathbb {R}}\), \(0<\epsilon <1\) and \(C>0\). Then for each \(N_0\in {\mathbb {N}}\), there exists an instance \(({\mathcal {S}}, w)\) of the weighted k-Set Packing problem with \(|{\mathcal {S}}|\ge N_0\) and such that there are feasible solutions \(A,A^*\subseteq {\mathcal {S}}\) with the following two properties:

- There is no local improvement of A of size at most \(C\cdot \log (|{\mathcal {S}}|)\) with respect to \(w^\alpha \), that is, there is no disjoint sub-collection \(X\subseteq {\mathcal {S}}{\setminus } A\) of sets such that

$$\begin{aligned}|X|\le C\cdot \log (|{\mathcal {S}}|)\text { and }w^\alpha (X)> w^\alpha (\{a\in A:\exists x\in X: a\cap x\ne \emptyset \}).\end{aligned}$$

- We have \(w(A^*)\ge \frac{k-\epsilon }{2}\cdot w(A)\).
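The local improvement condition in the first property can be spelled out as a small check. The sketch below assumes, purely for illustration, that sets are frozensets, that `A` is a Python set of such frozensets, that `w` maps each set to its positive weight, and that the caller supplies the bound \(C\cdot \log (|{\mathcal {S}}|)\) as `size_bound`. The function name is hypothetical.

```python
def is_local_improvement(X, A, w, alpha, size_bound):
    """Return True if X is a local improvement of A with respect to w^alpha.

    X must be a pairwise disjoint collection of sets outside A with
    |X| <= size_bound whose w^alpha-weight exceeds that of the sets in A
    that it intersects.
    """
    X = list(X)
    if len(X) > size_bound or any(x in A for x in X):
        return False
    # X itself has to be a disjoint sub-collection.
    for idx, x in enumerate(X):
        if any(x & y for y in X[idx + 1:]):
            return False
    # Sets of A that intersect at least one set of X.
    hit = {a for a in A if any(a & x for x in X)}
    gain = sum(w[x] ** alpha for x in X)
    loss = sum(w[a] ** alpha for a in hit)
    return gain > loss
```

For example, a single set of weight 3 that intersects two sets of weight 1 is a local improvement for \(\alpha =1\) but not for \(\alpha =\frac{1}{2}\), since \(\sqrt{3}<2\).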

Proof

For \(\alpha \le 0\), we can just choose the weights in A to be arbitrarily small. Hence, we can assume \(\alpha >0\). Now, there is a result by Erdős and Sachs [9] telling us that for every \(N_1\ge N_0\), there is a k-regular graph H on \(|V(H)|\ge N_1\) vertices such that the girth of H, i.e. the minimum length of a cycle in H, is at least \(\frac{\log (|V(H)|)}{\log (k-1)}-1\). Consider the graph G with vertex set \(V(G):=V(H)\cup E(H)\) and edge set \(E(G):=\{\{v,e\}:v\in e\in E(H)\}\), i.e. each edge of H is connected via edges in G to both of its endpoints. We define \({\mathcal {S}}:=\{\delta _G(x), x\in V(G)\}\), where \(\delta _G(x)\) is the set of incident edges of x in G. By k-regularity of H, \(|\delta _G(v)|=k\ge 3\) for \(v\in V(H)\) and \(|\delta _G(e)|=2\) for \(e\in E(H)\), so each element of \({\mathcal {S}}\) has cardinality at most k. By definition, G is simple, so no two vertices share more than one edge. As all degrees are at least two, the sets \(\delta _G(x),x\in V(G)\) are pairwise distinct. Finally, V(H) and E(H) constitute independent sets in G, implying that \(A:=\{\delta _G(v), v\in V(H)\}\) and \(A^*:=\{\delta _G(e),e\in E(H)\}\) each consist of pairwise disjoint sets. We define positive weights on \({\mathcal {S}}\) by setting \(w(\delta _G(v)):=1\) for \(v\in V(H)\) and \(w(\delta _G(e)):=(1-{\bar{\epsilon }})^{\frac{1}{\alpha }}\), where \({\bar{\epsilon }}>0\) is chosen such that \(\frac{1}{{\bar{\epsilon }}}\in {\mathbb {N}}\) and \((1-{\bar{\epsilon }})^{\frac{1}{\alpha }}\ge 1-\frac{\epsilon }{k}\). By k-regularity of H, \(|A^*|=|E(H)|=\frac{k}{2}\cdot |V(H)|=\frac{k}{2}\cdot |A|\), so \(w(A^*)\ge \frac{k-\epsilon }{2}\cdot w(A)\). To see that there is no local improvement \(X\subseteq {\mathcal {S}}\setminus A\) of \(w^\alpha (A)\) with
$$\begin{aligned}|X|\le C\cdot \log (|{\mathcal {S}}|),\end{aligned}$$

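we observe that X being a local improvement would imply

$$\begin{aligned}(1-{\bar{\epsilon }})\cdot |X|>|\{a\in A:\exists x\in X: a\cap x\ne \emptyset \}|\end{aligned}$$

by our choice of weights, since \(X\subseteq {\mathcal {S}}\setminus A=A^*\), every set in \(A^*\) has \(w^\alpha \)-weight \(1-{\bar{\epsilon }}\), and every set in A has \(w^\alpha \)-weight 1. But the sets from A that X intersects are precisely the sets \(\delta _G(v)\) for those vertices \(v\in V(H)\) that are endpoints of edges \(e\in E(H)\) with \(\delta _G(e)\in X\). Hence, we have found a subgraph J of H with

$$\begin{aligned}|V(J)|<(1-{\bar{\epsilon }})\cdot |E(J)|\quad \text {and}\quad |E(J)|=|X|\le C\cdot \log (|{\mathcal {S}}|)=C\cdot \log \left( \frac{k+2}{2}\cdot |V(H)|\right) ,\end{aligned}$$

which implies the existence of a cycle of length at most \(\frac{2}{{\bar{\epsilon }}}\cdot \lceil \log (C\cdot \log (\frac{k+2}{2}\cdot |V(H)|))\rceil \) by Lemma 28. But as a function of |V(H)|, this grows asymptotically slower than our lower bound of \(\frac{\log (|V(H)|)}{\log (k-1)}-1\) on the girth, resulting in a contradiction for \(N_1\) and, hence, |V(H)| chosen large enough. \(\square \)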
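The construction of \({\mathcal {S}}\), A and \(A^*\) from a k-regular graph H can be illustrated on a toy input. The sketch below uses the complete graph \(K_4\) (which is 3-regular) and the parameter values \(\alpha =1\), \(\epsilon =0.5\), \({\bar{\epsilon }}=0.1\); these choices are assumptions made for illustration only. Such a small graph does not satisfy the girth requirement, so the example only checks disjointness, the cardinality relation \(|A^*|=\frac{k}{2}\cdot |A|\) and the weight ratio, not the absence of local improvements. Elements of the universe, i.e. edges \(\{v,e\}\) of G, are encoded as pairs `(v, e)`.

```python
from itertools import combinations


def build_instance(h_edges, alpha, eps_bar):
    """Build A, A_star and the weight function from the edge list of H.

    A      -- the sets delta_G(v) for vertices v of H, each of weight 1
    A_star -- the sets delta_G(e) for edges e of H, each of weight
              (1 - eps_bar) ** (1 / alpha)
    """
    vertices = sorted({v for e in h_edges for v in e})
    A = [frozenset((v, e) for e in h_edges if v in e) for v in vertices]
    A_star = [frozenset((v, e) for v in e) for e in h_edges]
    w = {s: 1.0 for s in A}
    w.update({s: (1 - eps_bar) ** (1 / alpha) for s in A_star})
    return A, A_star, w


def pairwise_disjoint(sets):
    """True if no two sets in the collection share an element."""
    return all(not (s & t) for s, t in combinations(sets, 2))


# Toy parameters (assumptions for illustration): H = K_4, so k = 3.
k, alpha, eps, eps_bar = 3, 1.0, 0.5, 0.1
h_edges = list(combinations(range(k + 1), 2))
A, A_star, w = build_instance(h_edges, alpha, eps_bar)
```

With these values, `A` contains four sets of size 3 and `A_star` contains six sets of size 2, and comparing `sum(w[s] for s in A_star)` with `(k - eps) / 2 * sum(w[s] for s in A)` reproduces the inequality \(w(A^*)\ge \frac{k-\epsilon }{2}\cdot w(A)\) for this instance.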

7 Conclusion

In this paper, we have seen how to use local search to approximate the weighted k-Set Packing problem with an approximation ratio that gets arbitrarily close to \(\frac{k}{2}\) as k approaches infinity. At the cost of a quasi-polynomial running time, this result applies to the more general setting of the Maximum Weight Independent Set problem in \(k+1\)-claw free graphs. Moreover, we have seen that our result is asymptotically best possible in the sense that for every \(\alpha \in {\mathbb {R}}\), a local improvement algorithm for the weighted k-Set Packing problem that considers local improvements of \(w^\alpha \) of logarithmically bounded size cannot produce an approximation guarantee better than \(\frac{k}{2}\).

As a consequence, our paper seems to conclude the story of (pure) local improvement algorithms for both the MWIS in \(k+1\)-claw free graphs and the weighted k-Set Packing problem. Hence, the search for new techniques beating the threshold of \(\frac{k}{2}\) is one of the next goals for research in this area.

Notes

Observe that such an algorithm might just pick the solution A from Theorem 2 set by set.

By symmetry, one can, for example, just pick one vertex from A as a potential center and check all 8 subsets of its neighbors in \(A^*=V\setminus A\).

References

Alon, N., Yuster, R., Zwick, U.: Color-coding. J. ACM 42(4), 844–856 (1995). https://doi.org/10.1145/210332.210337

Arkin, E.M., Hassin, R.: On local search for weighted \(k\)-set packing. Math. Oper. Res. 23(3), 640–648 (1998). https://doi.org/10.1287/moor.23.3.640

Berman, P.: A \(d/2\) approximation for maximum weight independent set in \(d\)-claw free graphs. In: Scandinavian Workshop on Algorithm Theory, pp. 214–219. Springer (2000). https://doi.org/10.1007/3-540-44985-X_19

Berman, P., Fürer, M.: Approximating maximum independent set in bounded degree graphs. In: Proceedings of the Fifth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 365–371 (1994). https://dl.acm.org/doi/pdf/10.5555/314464.314570

Chandra, B., Halldórsson, M.M.: Greedy local improvement and weighted set packing approximation. J. Algorithms 39(2), 223–240 (2001). https://doi.org/10.1006/jagm.2000.1155

Cygan, M.: Improved approximation for 3-dimensional matching via bounded pathwidth local search. In: 54th Annual IEEE Symposium on Foundations of Computer Science, FOCS 2013, 26–29 October, 2013, Berkeley, CA, USA, pp. 509–518. IEEE Computer Society (2013). https://doi.org/10.1109/FOCS.2013.61

Cygan, M., Grandoni, F., Mastrolilli, M.: How to sell hyperedges: the hypermatching assignment problem. In: Proceedings of the 2013 Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 342–351. SIAM (2013). https://doi.org/10.1137/1.9781611973105.25

Edmonds, J.: Maximum matching and a polyhedron with 0,1-vertices. J. Res. Natl. Bureau Stand. Sect. B Math. Math. Phys. 69B, 125–130 (1965). https://doi.org/10.6028/jres.069b.013

Erdős, P., Sachs, H.: Reguläre Graphen gegebener Taillenweite mit minimaler Knotenzahl. Wiss. Z. Martin-Luther-Univ. Halle-Wittenberg Math.-Natur. Reihe 12(3), 251–257 (1963)

Fürer, M., Yu, H.: Approximating the \(k\)-set packing problem by local improvements. In: International Symposium on Combinatorial Optimization, pp. 408–420. Springer (2014). https://doi.org/10.1007/978-3-319-09174-7_35

Halldórsson, M.M.: Approximating discrete collections via local improvements. In: Proceedings of the Sixth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 160–169. Society for Industrial and Applied Mathematics, USA (1995). https://dl.acm.org/doi/10.5555/313651.313687

Hazan, E., Safra, S., Schwartz, O.: On the complexity of approximating \(k\)-Set Packing. Comput. Complex. 15, 20–39 (2006). https://doi.org/10.1007/s00037-006-0205-6

Hurkens, C.A.J., Schrijver, A.: On the size of systems of sets every \(t\) of which have an SDR, with an application to the worst-case ratio of heuristics for packing problems. SIAM J. Discrete Math. 2(1), 68–72 (1989). https://doi.org/10.1137/0402008

Karp, R.M.: Reducibility among combinatorial problems. In: Miller, R.E., Thatcher, J.W., Bohlinger, J.D. (eds.) Complexity of Computer Computations: Proceedings of a symposium on the Complexity of Computer Computations. Plenum Press (1972). https://doi.org/10.1007/978-1-4684-2001-2_9

Minty, G.J.: On maximal independent sets of vertices in claw-free graphs. J. Combin. Theory Ser. B 28(3), 284–304 (1980). https://doi.org/10.1016/0095-8956(80)90074-X

Nakamura, D., Tamura, A.: A revision of Minty’s algorithm for finding a maximum weight stable set of a claw-free graph. J. Oper. Res. Soc. Japan 44(2), 194–204 (2001). https://doi.org/10.15807/jorsj.44.194

Neuwohner, M.: An improved approximation algorithm for the maximum weight independent set problem in d-claw free graphs. In: 38th International Symposium on Theoretical Aspects of Computer Science (STACS 2021), Leibniz International Proceedings in Informatics (LIPIcs), vol. 187, pp. 53:1–53:20 (2021). https://doi.org/10.4230/LIPIcs.STACS.2021.53

Neuwohner, M.: The limits of local search for weighted k-set packing. In: Aardal, K., Sanità, L. (eds.) Integer Programming and Combinatorial Optimization—23rd International Conference, IPCO 2022, Eindhoven, The Netherlands, June 27–29, 2022, Proceedings, Lecture Notes in Computer Science, vol. 13265, pp. 415–428. Springer (2022). https://doi.org/10.1007/978-3-031-06901-7_31

Sbihi, N.: Algorithme de recherche d’un stable de cardinalité maximum dans un graphe sans étoile. Discrete Math. 29(1), 53–76 (1980). https://doi.org/10.1016/0012-365X(90)90287-R

Schmidt, J.P., Siegel, A.: The spatial complexity of oblivious k-probe hash functions. SIAM J. Comput. 19(5), 775–786 (1990). https://doi.org/10.1137/0219054

Sviridenko, M., Ward, J.: Large neighborhood local search for the maximum set packing problem. In: International Colloquium on Automata, Languages, and Programming, pp. 792–803. Springer (2013). https://doi.org/10.1007/978-3-642-39206-1_67

Funding

Open Access funding enabled and organized by Projekt DEAL.

