Abstract

Due to their relation to the linear complementarity problem, absolute value equations have been intensively studied recently. In this paper, we present error bound conditions for absolute value equations. Along with the error bounds, we introduce a condition number. We consider general scaled matrix p-norms, as well as particular p-norms. We discuss basic properties of the condition number, including its computational complexity. We present various bounds on the condition number, and we give exact formulae for special classes of matrices. Moreover, we consider matrices that appear based on the transformation from the linear complementarity problem. Finally, we apply the error bound to convergence analysis of two methods for solving absolute value equations.

1 Introduction

We consider the absolute value equation (AVE) problem of finding an \(x\in \mathbb {R}^n\) such that

$$\begin{aligned} Ax-|x|=b, \end{aligned}$$

where \(A\in \mathbb {R}^{n\times n}\), \(b\in \mathbb {R}^{n}\) and \(|\cdot |\) denotes the componentwise absolute value. A slightly more general form of AVE was introduced by Rohn [43] (see also [41]).
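To make the notation concrete, the following minimal Python sketch evaluates the residual \(Ax-|x|-b\) of AVE at a given point. The helper name `ave_residual` and the sample data are illustrative assumptions and do not come from the paper.

```python
def ave_residual(A, x, b):
    """Return the vector A x - |x| - b, where A is given as a list of rows."""
    n = len(x)
    return [sum(A[i][j] * x[j] for j in range(n)) - abs(x[i]) - b[i]
            for i in range(n)]


# Hypothetical data: pick x_star first, then build b so that x_star solves AVE.
A = [[3.0, 1.0], [0.0, 4.0]]
x_star = [1.0, -2.0]
b = ave_residual(A, x_star, [0.0, 0.0])   # b = A x_star - |x_star|

print(ave_residual(A, x_star, b))         # residual at the solution
print(ave_residual(A, [1.5, -2.0], b))    # residual at a perturbed point
```

The residual vanishes exactly at a solution, which is why it serves as the computable quantity in the error bounds studied later.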

Many methods, including Newton-like methods [12, 30, 53] and concave optimization methods [32, 33, 52], have been developed for solving AVE. An important point concerning numerical methods is the precision of the computed solution. To the best of the authors' knowledge, only a few papers are devoted to this subject for AVE; see, for instance, [1, 49, 50]. Wang et al. [49, 50] use interval methods for numerical validation. Hladík [20] derives various bounds for the solution set of AVE.

Error bounds play a crucial role in theoretical and numerical analysis of linear algebraic and optimization problems [11, 13, 14, 18, 38]. In this paper, we study error bounds for AVE under the assumption that uniqueness of the solution of AVE is guaranteed. Then, we compute upper bounds for \(\Vert x- x^\star \Vert \), the distance to the solution \(x^\star \) of AVE, in terms of a computable residual function.

1.1 Organization and contribution of the paper

The paper is organized as follows. Section 1.2 presents basic definitions and preliminaries needed to state the results. In Sect. 2, we propose error bounds for the absolute value equations. They naturally give rise to a corresponding condition number of AVE. We further investigate properties of the condition number for various norms, including the computational complexity issues. Since the calculation of the condition number can be computationally hard, we present several bounds, and we also inspect special classes of matrices, for which it can be computed efficiently.

It is well known that a linear complementarity problem can be formulated as an absolute value equation [31]. Indeed, it is one of the main applications of absolute value equations. In Sect. 3, we study error bounds for absolute value equations obtained by the reformulation of linear complementarity problems. In addition, thanks to the given results, we provide a new error bound condition for linear complementarity problems.

Section 4 is devoted to a relative condition number of AVE. The motivation stems from the relative error bounds that we propose there.

Error bounds are important in convergence analysis of iterative methods. That is why in Sect. 5, we apply the presented error bounds for AVE to convergence analysis of two prominent methods; we prove their convergence and also address the rate of convergence.

Lastly, Sect. 6 discusses the case when AVE does not have a unique solution. We show a so-called local error bound property under certain assumptions.

1.2 Basic definitions and preliminaries

The n-dimensional Euclidean space is denoted by \(\mathbb {R}^n\). We use e and I to denote the vector of ones and the identity matrix, respectively. We denote an arbitrary scaling p-norm on \(\mathbb {R}^n\) by \(\Vert \cdot \Vert \), that is, \(\Vert x\Vert =\Vert Dx\Vert _p\) for a positive diagonal matrix D and a p-norm. In particular, \(\Vert \cdot \Vert _1\), \(\Vert \cdot \Vert _2\) and \(\Vert \cdot \Vert _\infty \) stand for 1-norm, 2-norm and \(\infty \)-norm, respectively. We use \(\hbox {sgn}(x)\) to denote the componentwise sign of x.

Let A and B be \(n\times n\) matrices. We denote the smallest singular value and the spectral radius of A by \(\sigma _{\min }(A)\) and \(\rho (A)\), respectively. The eigenvalues of a symmetric matrix \(A\in {\mathbb {R}}^{n\times n}\) are denoted and sorted as follows: \(\lambda _{\max }(A)=\lambda _1(A)\ge \dots \ge \lambda _n(A)=\lambda _{\min }(A)\). For a given norm \(\Vert \cdot \Vert \) on \(\mathbb {R}^n\), \(\Vert A\Vert \) denotes the matrix norm induced by \(\Vert \cdot \Vert \), i.e.,

$$\begin{aligned} \Vert A\Vert =\max \{\Vert Ax\Vert : \Vert x\Vert =1\}. \end{aligned}$$

Throughout the paper, we consider only induced matrix norms. The matrix inequality \(A\ge B\), |A| and \(\max (A, B)\) are understood entrywise. For \(d\in \mathbb {R}^n\), \(\hbox {diag}(d)\) stands for the diagonal matrix whose entries on the diagonal are the components of d. In contrast, \(\hbox {Diag}(A)\) denotes the vector of diagonal elements of A. The ith row and jth column of A are denoted by \(A_{i*}\) and \(A_{*j}\), respectively. We denote the comparison matrix of A by \(\langle A\rangle \), which is defined as

$$\begin{aligned} \langle A\rangle _{ii}=|A_{ii}|, \qquad \langle A\rangle _{ij}=-|A_{ij}| \quad (i\ne j). \end{aligned}$$

We recall the following definitions for an \(n\times n\) real matrix A:

- A is a P-matrix if each principal minor of A is positive.

- A is an M-matrix if \(A^{-1}\ge 0\) and \(A_{ij}\le 0\) for \(i, j = 1,2,\dots ,n\) with \(i\ne j\).

- A is an H-matrix if its comparison matrix is an M-matrix.

We will exploit some results from interval linear algebra, so we recall the relevant notions from this discipline. For two \(n\times n\) matrices \(\underline{{A}}\) and \(\overline{{A}}\), \(\underline{{A}}\le \overline{{A}}\), the interval matrix \(\varvec{A} = [\underline{{A}}, \overline{{A}}]\) is defined as \(\varvec{A}=\{A: \underline{{A}}\le A \le \overline{{A}}\}\). An interval matrix \(\varvec{A}\) is called regular if each \(A\in \varvec{A}\) is nonsingular; similarly, we define the H-matrix property of interval matrices. Furthermore, we denote and define the inverse of a regular interval matrix \(\varvec{A}\) as \(\varvec{A}^{-1}:=\{A^{-1}: A\in \varvec{A}\}\). Note that the inverse of an interval matrix is not necessarily an interval matrix.

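In this paper, generalized Jacobian matrices [9] are used in the presence of nonsmooth functions. Let \(f:{\mathbb {R}}^n \rightarrow {\mathbb {R}}^m\) be a locally Lipschitz function. The generalized Jacobian of f at \({\hat{x}}\), denoted by \(\partial f({\hat{x}})\), is defined as

$$\begin{aligned} \partial f({\hat{x}}):=\hbox {co}\left( \left\{ \lim _{k\rightarrow \infty } \nabla f(x_k): x_k\rightarrow {\hat{x}},\ x_k\notin X_f\right\} \right) , \end{aligned}$$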

where \( X_f\) is the set of points at which f is not differentiable and \(\hbox {co}(S)\) denotes the convex hull of a set S.

In what follows, we recall some known theorems that we will need later on.

Theorem 1

(Wu and Li [51, Theorem 3.3]) AVE has a unique solution for each \(b\in \mathbb {R}^n\) if and only if the interval matrix \([A-I, A+I]\) is regular.

Theorem 2

(Rohn et al. [45, Theorem 4]) AVE has a unique solution for each \(b\in \mathbb {R}^n\) if \(\rho (|A^{-1}|)<1\).

Theorem 3

(Kuttler [25]) An interval matrix \(\varvec{A}\) is inverse nonnegative (i.e., \(A^{-1}\ge 0\) for every \(A\in \varvec{A}\)) if and only if \(\underline{{A}}^{-1}\ge 0\) and \(\overline{{A}}^{-1}\ge 0\). In this case, \( \varvec{A}^{-1}\subseteq [\overline{{A}}^{-1},\underline{{A}}^{-1}]. \)

The first item of the following results can be found, e.g., in Berman and Plemmons [3], Theorem 2.3 in Chapter 6. The second item is Proposition 3.6.3(iii) from Neumaier [36] with \(B:=I\).

Theorem 4

If \(A\in {\mathbb {R}}^{n\times n}\) is an M-matrix, then the following properties hold:

- (i) \(A+I\) is an M-matrix and \(\rho ((A+I)^{-1}(A-I))<1\);

- (ii) \(A-I\) is an M-matrix if and only if \(\rho (A^{-1})<1\).

The following result is a special case of Theorem 3.7.5 from Neumaier [36].

Theorem 5

Let \(A\in {\mathbb {R}}^{n\times n}\) be an H-matrix. Then

- (i) \(|A^{-1}| \le \langle A\rangle ^{-1}\);

- (ii) \([A-I,A+I]\) is an H-matrix if and only if \(\rho (\langle A\rangle ^{-1})<1\).

The Sherman-Morrison formula for the inverse of a rank-one update can be found, e.g., in [22].

Theorem 6

(Sherman-Morrison formula) Let \(A\in {\mathbb {R}}^{n\times n}\) be nonsingular and \(u,v\in {\mathbb {R}}^n\). If \(v^TA^{-1}u\not =-1\), then \((A+uv^T)^{-1}=A^{-1}-\frac{1}{1+v^TA^{-1}u}A^{-1}uv^TA^{-1}\).
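As a quick sanity check of Theorem 6, the following Python sketch compares the rank-one update formula with a direct inversion on a \(2\times 2\) example. The helper names (`inv2`, `sherman_morrison_inverse`) and the data are hypothetical and chosen only for illustration.

```python
def inv2(M):
    """Closed-form inverse of a 2x2 matrix given as a list of rows."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def sherman_morrison_inverse(A, u, v):
    """Inverse of A + u v^T computed from A^{-1} (2x2 case only)."""
    Ainv = inv2(A)
    Au = [Ainv[i][0] * u[0] + Ainv[i][1] * u[1] for i in range(2)]   # A^{-1} u
    vA = [v[0] * Ainv[0][j] + v[1] * Ainv[1][j] for j in range(2)]   # v^T A^{-1}
    denom = 1.0 + v[0] * Au[0] + v[1] * Au[1]                        # 1 + v^T A^{-1} u
    if denom == 0:
        raise ValueError("formula not applicable: v^T A^{-1} u = -1")
    return [[Ainv[i][j] - Au[i] * vA[j] / denom for j in range(2)]
            for i in range(2)]


A = [[2.0, 1.0], [1.0, 3.0]]
u = [1.0, 0.0]
v = [0.0, 2.0]

direct = inv2([[A[i][j] + u[i] * v[j] for j in range(2)] for i in range(2)])
updated = sherman_morrison_inverse(A, u, v)
max_gap = max(abs(direct[i][j] - updated[i][j])
              for i in range(2) for j in range(2))
```

Both routes should agree up to rounding, so `max_gap` is expected to be at the level of machine precision.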

2 Error bounds for the absolute value equations

Consider an absolute value equation AVE. We will need the assumption of regularity of \([A-I, A+I]\) throughout this section; by Theorem 1, AVE then has a unique solution, which we denote by \(x^\star \). Even though AVE can possess multiple solutions in practice (we discuss the case of multiple solutions in Sect. 6), there are also important classes of problems leading to a unique solution. Consider, for instance, strictly convex quadratic programs or Rohn’s characterization of extreme points of the solution set of interval equations [41].

Theorem 7

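If the interval matrix \([A-I, A+I]\) is regular, then for each \(x\in \mathbb {R}^n\),

$$\begin{aligned} \Vert x-x^\star \Vert \le \max _{\Vert d\Vert _\infty \le 1}\Vert (A-\hbox {diag}(d))^{-1}\Vert \cdot \Vert Ax-|x|-b\Vert . \end{aligned}$$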

Proof

Note that due to regularity of \([A-I, A+I]\) the right side of the above inequality is finite. Define the residual function \(\phi :{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) by \(\phi (x)=Ax-|x|-b\). By the mean value theorem, see Theorem 8 in [19],

where \({\mathfrak {A}}_i\in \partial \phi (x_i)\), \(x_i\in co(\{x, x^\star \})\), \(\lambda _i\ge 0\), \(i=1, \dots , n\) and \(\sum _{i=1}^n \lambda _i=1\). It is easily seen that \(\partial \phi (y)\subseteq \{A+\hbox {diag}(d): \Vert d\Vert _{\infty }\le 1\}=[A-I, A+I]\) for \(y\in \mathbb {R}^n\). Due to the convexity of \(\{A+\hbox {diag}(d): \Vert d\Vert _{\infty }\le 1\}\), we have

for some \({\hat{A}}\in [A-I, A+I]\). By multiplying \({\hat{A}}^{-1}\) on both sides and using the induced norm property, we obtain

which completes the proof. \(\square \)
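The scalar case gives a small worked illustration of this kind of bound. For \(n=1\) and \(a>1\), the interval \([a-1,a+1]\) does not contain zero, the solution of \(ax-|x|=b\) is available in closed form, and the maximum of \(1/|a-d|\) over \(|d|\le 1\) equals \(1/(a-1)\). The Python sketch below, with hypothetical helper names and data, compares the distance to the solution with that constant times the residual.

```python
def solve_scalar_ave(a, b):
    """Solve a*x - |x| = b for a scalar a > 1, where the solution is unique."""
    if a <= 1.0:
        raise ValueError("this sketch assumes a > 1")
    # x >= 0 gives (a - 1) x = b; x < 0 gives (a + 1) x = b.
    return b / (a - 1.0) if b >= 0.0 else b / (a + 1.0)


def scalar_condition_number(a):
    """Maximum over |d| <= 1 of 1 / |a - d|, i.e. 1 / (a - 1) for a > 1."""
    return 1.0 / (a - 1.0)


a, b = 3.0, 4.0                       # hypothetical data
x_star = solve_scalar_ave(a, b)
c = scalar_condition_number(a)

# Pairs (distance to the solution, constant times residual) at some test points.
checks = [(abs(x - x_star), c * abs(a * x - abs(x) - b))
          for x in (-1.0, 0.0, 1.5, 2.0, 5.0)]
```

For nonnegative test points the two entries of each pair coincide in this example, so the constant cannot be improved here.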

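To take advantage of this formulation, we need to compute the optimal value of the following optimization problem,

$$\begin{aligned} \max \ \Vert (A-\hbox {diag}(d))^{-1}\Vert \quad \hbox {s.t.}\quad \Vert d\Vert _\infty \le 1. \end{aligned}$$(2)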

We call the optimal value of (2) the condition number of the absolute value equation AVE with respect to the norm \(\Vert \cdot \Vert \). In addition, we denote the condition number with respect to the 1-norm, 2-norm and \(\infty \)-norm by \(c_1(A)\), \(c_2(A)\) and \(c_\infty (A)\), respectively. By the properties of matrix norms, we have the following results.

Proposition 1

Let \([A-I, A+I]\) be regular and \(\alpha \) be a scalar with \(|\alpha |\ge 1\). Then c(A) and \(c(\alpha A)\) exist, and

- (i) \(c(-A)=c(A)\);

- (ii) \(c_1(A^T)=c_\infty (A)\);

- (iii) \(c(\alpha A)\le |\alpha ^{-1}| c(A)\).

Proof

Parts (i) and (ii) are straightforward. Part (iii) follows from the fact that

\(\square \)

In the next proposition, we show that optimization problem (2) attains its maximum at some vertices of the box \(\{d:\Vert d\Vert _\infty \le 1\}=[-e,e]\).

Proposition 2

Let the interval matrix \([A-I, A+I]\) be regular. Then, there exists a vertex of the box \([-e,e]\) which is a solution of (2).

Proof

It is enough to show that function \(d\mapsto \Vert ( A-\hbox {diag}(d))^{-1}\Vert \) is convex in each coordinate \(d_i\), \(i=1,\dots ,n\). Then the maximum must be attained in a vertex of \([-e,e]\).

Without loss of generality we show convexity in \(d_1\). Let \(f:[-1, 1]\rightarrow \mathbb {R}\) be given by \(f(t)=\Vert (A-\hbox {diag}((t, \check{d})))^{-1}\Vert \), where \({\check{d}}\) is obtained by removing the first component of \({\hat{d}}\). By the Sherman-Morrison formula (Theorem 6), \(f(t)=\Vert {\hat{A}}^{-1}-\frac{t}{1+t{\hat{A}}^{-1}_{11}}E\Vert \), where \({\hat{A}}=A+\hbox {diag}((0, {\check{d}}))\) and \(E={\hat{A}}^{-1}_{*1}{\hat{A}}^{-1}_{1*}\). Due to regularity of \([A-I, A+I]\), function \(\frac{t}{1+t{\hat{A}}^{-1}_{11}}\) is well-defined for \(t\in [-1, 1]\). Since \(\Vert A+\tau E\Vert \) as a function of \(\tau \) is convex and \(g(t)=\frac{t}{1+t{\hat{A}}^{-1}_{11}}\) is strictly monotone on \([-1, 1]\), f is convex on its domain [4]. \(\square \)

Remark 1

Note that function \(d\mapsto \Vert ( A-\hbox {diag}(d))^{-1}\Vert \) is not necessarily convex or concave; see Example 1. By Proposition 2, to handle problem (2), it suffices to check the vertices of \([-e,e]\). As the number of vertices is \(2^n\), this approach may not be practical for large n. Indeed, problem (2) is NP-hard in general. It is known that for any rational \(p\in [1, \infty )\), except for \(p=1, 2\), computation of the matrix p-norm of a given matrix is NP-hard [17]. Consequently, problem (2) is NP-hard for any rational \(p\in [1, \infty )\) except \(p=1, 2\). We prove intractability for the 1-norm; by Proposition 1(ii), the problem is then NP-hard for the \(\infty \)-norm, too. We conjecture that it is also NP-hard for the 2-norm.
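The vertex enumeration suggested by Proposition 2 can be sketched in a few lines of Python. The sketch below computes \(c_\infty (A)\) by brute force over the \(2^n\) sign vectors; it assumes that \([A-I, A+I]\) is regular (this is not verified), and the function names and the test matrix are illustrative assumptions.

```python
from itertools import product


def inverse(M):
    """Gauss-Jordan inverse with partial pivoting; raises on a singular matrix."""
    n = len(M)
    aug = [[float(v) for v in M[i]] + [1.0 if i == j else 0.0 for j in range(n)]
           for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is numerically singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def norm_inf(M):
    """Induced infinity-norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in M)


def condition_number_inf(A):
    """Maximise ||(A - diag(d))^{-1}||_inf over the vertices d in {-1, 1}^n."""
    n = len(A)
    best = 0.0
    for d in product((-1.0, 1.0), repeat=n):     # 2^n vertices
        shifted = [[A[i][j] - (d[i] if i == j else 0.0) for j in range(n)]
                   for i in range(n)]
        best = max(best, norm_inf(inverse(shifted)))
    return best


A = [[3.0, -1.0], [-1.0, 3.0]]        # hypothetical test matrix
c_inf = condition_number_inf(A)
```

The loop over `product((-1.0, 1.0), repeat=n)` makes the exponential cost explicit, which is why such an enumeration is only usable for small n.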

Proposition 3

Computation of \(c_1(A)\) is an NP-hard problem.

Proof

By [42], solving the problem

is NP-hard. Even more, it is intractable even with accuracy less than \(\frac{1}{2}\) when \(A^{-1}\) is a so called MC-matrix [42]. Recall that \(M\in {\mathbb {R}}^{n\times n}\) is an MC matrix if it is symmetric, \(M_{ii}=n\) and \(M_{ij}\in \{0,-1\}\), \(i\not =j\). For an MC matrix M we have \(\lambda _{\max }(M)\le 2n-1\), from which \(\lambda _{\min }(M^{-1})\ge \frac{1}{2n-1}\). Therefore \(\lambda _{\min }(A)\ge \frac{1}{2n-1}\) and we can achieve \(\lambda _{\min }(A)>1\) by a suitable scaling. As a consequence, \([A-I,A+I]\) is regular.

Feasible solutions to the above optimization problem can be equivalently characterized as

or, substituting \(b=\hbox {diag}(b)e=\hbox {diag}(b)y\) with \(y=e\),

Introducing an auxiliary variable \(z=1\), we get

Rewrite the system as

Let \(\alpha >0\) be sufficiently large. The system equivalently reads

Now, we relax the system by introducing intervals on the remaining diagonal entries

Denote by \(M(D,D',d)\) the constraint matrix. The solution is \(\frac{2}{\alpha }\)-multiple of the last column of the inverse matrix \(M(D,D',d)^{-1}\). That is why we analytically express the inverse matrix (notice that it exists due to regularity of \([\alpha A-I,\alpha A+I]\))

where \(C:=(\alpha A-DD')^{-1}\), \(|D|, |D'|\le I, |d|\le 1\). The idea of the proof is to reduce the above mentioned NP-hard problem to computation of the condition number for matrix M(0, 0, 0). Obviously, 1-norm of \(M(D,D',d)^{-1}\) is attained for the value of \(d=-1\), so we can fix it for the remainder of the proof.

Claim A. There exist \({\bar{D}}\) and \({\bar{D}}'\) such that \(|{\bar{D}}|=|{\bar{D}}'|=I\) and \(c_1(M(0,0,0))=\Vert M({\bar{D}}, \bar{D}', -1)^{-1}_{*(2n+1)}\Vert _1\).

Proof of the Claim A

By Proposition 2, the maximum norm is attained for \(|D|=|D'|=I\). Therefore, we need only to investigate the matrices with \(|D|=|D'|=I\). Let \(c_1(M(0,0,0))=\Vert M( D, D', -1)^{-1}\Vert _1\) with \(|D|=|D'|=I\). If the 1-norm of \(M( D, D', -1)^{-1}\) is attained for the last column, the claim follows. Otherwise, since \(\alpha >0\) is arbitrarily large, the 1-norm is attained for no column of the middle part. Suppose that the norm is attained for the ith column of the first column block. We compare the norms of this column and the last column of \(M(D,D',d)^{-1}\), that is, we compare vectors

We compare their three blocks separately. Obviously, for the last entry the latter is larger. Since \(C\rightarrow 0\) as \(\alpha \rightarrow \infty \), the first block of entries of the former vector is arbitrarily small and negligible. Thus, we focus on the second block. The former vector has entries \(\alpha C_{*i}\). Notice that by the triangle inequality one has either \(\Vert u\Vert \le \Vert u+v\Vert \) or \(\Vert u\Vert \le \Vert u-v\Vert \) for any \(u,v\in {\mathbb {R}}^n\) and any norm. Thus, one can choose a suitable \({\bar{D}}\) such that \(|{\bar{D}}|=I\) and \(\Vert \alpha C_{*i} \Vert _1\le \Vert \alpha C{\bar{D}}e \Vert _1=\Vert \alpha C_{*i}+\alpha \sum _{j\ne i} C_{*j}{\bar{d}}_{jj} \Vert _1\). Furthermore, one can select a matrix \({\bar{D}}'\) with \(|{\bar{D}}'|=I\) and \(\Vert e+D'CDe\Vert _1=\Vert e+\bar{D}'C{\bar{D}}e\Vert _1\). Because \(c_1(M(0,0,0))=\Vert M( D, D', -1)^{-1}\Vert _1\), the given matrices \({\bar{D}}\) and \({\bar{D}}'\) fulfill the claim.

Claim B. The 1-norm of the last column is arbitrarily close to \(1+n+ e^T|A^{-1}De|\).

Proof of the Claim B

The last entry of the column is 1. Since \(C\rightarrow 0\) as \(\alpha \rightarrow \infty \), the first block tends to e as \(\alpha \rightarrow \infty \). The second block reads \(-\alpha CDe=-(A-\frac{1}{\alpha }DD')^{-1}De\), which tends to \(-A^{-1}De\) as \(\alpha \rightarrow \infty \). So its 1-norm tends to \(e^T|A^{-1}De|\).

By Claim B, the 1-norm of the last column is by \(1+n\) larger than the objective value of (3). So by maximizing the 1-norm of \(M(D,D',d)^{-1}\) we can deduce the maximum of (3) with arbitrary precision. Notice that \(e^T|A^{-1}|e\) is an upper bound on (3) and it has polynomial size, so we can find \(\alpha \) of polynomial size, too, by standard means (cf. [46]). \(\square \)

In general, the computation of c(A) is not easy. However, computation of the condition number with respect to some norms or for some classes of matrices is not difficult. In the rest of the section, we study the given condition number from this aspect.

Proposition 4

If \(\max _{D\in [-I, I]}\Vert A^{-1}D\Vert \le \gamma <1\), then

$$\begin{aligned} c(A)\le \frac{\Vert A^{-1}\Vert }{1-\gamma }. \end{aligned}$$

Proof

Let \(D:=\hbox {diag}(d)\) for some d with \(|d|\le e\). By the assumption, \(\rho (A^{-1}D)\le \Vert A^{-1}D\Vert <1\). By using Neumann series [22],

We have

\(\square \)

We say that a matrix norm is monotone if \(|A|\le B\) implies \(\Vert A\Vert \le \Vert B\Vert \). For instance, the scaled matrix p-norms are monotone. It is seen that if \(\Vert ( |A^{-1}| ) \Vert <1\) for a monotone norm \(\Vert \cdot \Vert \), the assumption of Proposition 4 holds. It is worth mentioning that if \(\Vert A^{-1}\Vert <1\) and \(\max _{D\in [-I, I]}\Vert D\Vert \le 1\), then the assumption of Proposition 4 holds as well.

Theorem 8

If \(\rho (|A^{-1}|)<\gamma <1\), then there exists a scaling 1-norm  such that

Proof

By Theorems 1 and 2, AVE has a unique solution and \([A-I, A+I]\) is regular. Due to the continuity of eigenvalues with respect to the matrix elements, there exists an invertible matrix B with  and \(\rho (B)= \gamma \). By the Perron–Frobenius theorem, there exists \(v> 0\) such that \(Bv=\rho (B)v\). We define norm  as  . Note that  . As  , we have

By Neumann series theorem [22], \((I-|A^{-1}|)^{-1}\) and \((I-B)^{-1}\) exist and are non-negative. Hence,

The last inequality follows from \((I-|A^{-1}|)^{-1}=\sum _{i=0}^\infty |A^{-1}|^i\le \sum _{i=0}^\infty B^i=(I-B)^{-1}\). Hence,

Moreover, for d with \(\Vert d\Vert _\infty \le 1\),

Since \(\sum _{i=0}^\infty B^i=-(B^{-1}-I)^{-1}\), the Perron–Frobenius theorem then implies  .\(\square \)

One may wonder why we do not use the well-known result which states the existence of a matrix norm  with  , see Lemma 5.6.10 in [22], to prove the above theorem. The underlying reason is that the matrix norm given by this result is not necessarily a scaled matrix p-norm. It is worth mentioning that, under the assumption of Theorem 8, when  , one obtains

for some scaling 1-norm. Note that a sufficient condition for having  is the existence of a diagonal matrix S with \(|S|=I\) such that \(A^{-1}S\ge 0\) and \((A-S)^{-1}S\ge 0\). In fact, Theorem 5.2 in Chapter 7 of [3] implies that \(\rho (A^{-1}S)<1\) under this condition, which is equivalent to \(\rho (|A^{-1}|)<1\).

Error bounds can be utilized as a tool in stability analysis [10, 14]. As mentioned earlier, AVE has a unique solution for each \(b\in {\mathbb {R}}^n\) if and only if \([A-I, A+I]\) is regular. Denote

It is easily seen that \({\mathcal {A}}\) is an open set. Let function \(X(A, b):{\mathcal {A}}\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) return the solution of AVE. In the following proposition, we list some properties of function X.

Proposition 5

Let \(A\in {\mathcal {A}}\).

- (i) For any \(b_1, b_2\in \mathbb {R}^n\),

$$\begin{aligned} \Vert X(A,b_1)-X(A,b_2)\Vert \le c(A)\Vert b_1-b_2\Vert . \end{aligned}$$

- (ii) Function X is locally Lipschitz with modulus c(A), that is,

$$\begin{aligned} \Vert X(A_1,b_1)-X(A_2,b_2)\Vert \le c(A)(\Vert A_1-A_2\Vert +\Vert b_1-b_2\Vert ) \end{aligned}$$(6)

for any \(A_1, A_2\) and \(b_1, b_2\) in certain neighborhoods of A and b, respectively.

Proof

First, we show the first part. Suppose that \(X(A,b_1)=x_1\) and \(X(A,b_2)=x_2\). Thus,

There exists a matrix \(D\in [-I, I]\) such that \(|x_2|-|x_1|= D(x_1-x_2)\). So the above equality can be written as

which implies that \(\Vert x_1-x_2\Vert \le \Vert (A+D)^{-1}\Vert \cdot \Vert b_1-b_2\Vert \le c(A)\Vert b_1-b_2\Vert \).

Now, we prove the second part. Consider the locally Lipschitz function \(\phi :{\mathcal {A}}\times \mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) given by \(\phi (A, b, x)=Ax-|x|-b\). We have \(\partial _x\phi (A, b, x)\subseteq [A-I, A+I]\). As \([A-I, A+I]\) is regular, the implicit function theorem (see Chapter 7 in [9]) implies that there exists a locally Lipschitz function \(X(A, b):{\mathcal {A}}\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) with \(\phi (A, b, X(A, b))=0\). In addition, (6) holds. \(\square \)

2.1 Condition number of AVE for 2-norm

Since \(\Vert A^{-1}\Vert _2=\frac{1}{\sigma _{\min }(A)}\), the value of \(c_2(A)\) can be computed as the optimal value of the following optimization problem,

In general, the function \(\sigma _{\min }(\cdot )\) is neither convex nor concave; see Remark 5.2 in [39]. In (7), \(\sigma _{\min }(\cdot )\) is a function of the matrix diagonal entries. Nonetheless, \(\sigma _{\min }(\cdot )\) is also neither convex nor concave in this case; the following example clarifies this point. From this perspective, Proposition 2 mentioned above is far from obvious.

Example 1

Let \( A=\left( {\begin{matrix} 2 &{} 1\\ -2 &{} 1 \end{matrix}}\right) \) and \(E=\left( {\begin{matrix} 0 &{} 0\\ 0 &{} 1 \end{matrix}}\right) \). We have

In the next proposition, we give a formula for symmetric matrices. Before we get to the proposition, we present a lemma, which follows directly from [21, Thm. 17].

Lemma 1

Let A be symmetric. The interval matrix \([A-I, A+I]\) is regular if and only if

Note that condition (8) is equivalent to \(\sigma _{\min }(A)>1\).

Proposition 6

Let the interval matrix \([A-I, A+I]\) be regular. If A is symmetric, then \(c_2(A)=\frac{1}{\sigma _{\min }(A)-1}\).

Proof

As \([A-I, A+I]\) is regular, \(\sigma _{\min }(A)>1\). For d with \(\Vert d\Vert _\infty \le 1\), \(\sigma _{\min }(A+\hbox {diag}(d))\ge \sigma _{\min }(A)-1\). By the proof of Lemma 1, it is seen that there exists \({\bar{d}}\) with \(\Vert {\bar{d}}\Vert _\infty = 1\) such that \(\sigma _{\min }(A+\hbox {diag}({\bar{d}}))= \sigma _{\min }(A)-1\). Hence, the proposition follows from formulation (7). \(\square \)
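The formula of Proposition 6 can be checked numerically on a small symmetric matrix. The Python sketch below, restricted to the \(2\times 2\) case with hypothetical helper names and data, compares \(\frac{1}{\sigma _{\min }(A)-1}\) with a brute-force maximum of \(1/\sigma _{\min }(A-\hbox {diag}(d))\) over the vertices \(|d|=e\), relying on Proposition 2 for the restriction to vertices.

```python
import math
from itertools import product


def sym_eigenvalues_2x2(M):
    """Eigenvalues of a symmetric 2x2 matrix in closed form."""
    a, b, d = M[0][0], M[0][1], M[1][1]
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    return mean - radius, mean + radius


def sigma_min_sym(M):
    """Smallest singular value of a symmetric matrix: min |eigenvalue|."""
    return min(abs(lam) for lam in sym_eigenvalues_2x2(M))


A = [[3.0, 1.0], [1.0, 3.0]]          # hypothetical symmetric example

formula = 1.0 / (sigma_min_sym(A) - 1.0)

# A diagonal shift keeps the matrix symmetric, so sigma_min_sym still applies.
brute_force = max(
    1.0 / sigma_min_sym([[A[0][0] - d1, A[0][1]], [A[1][0], A[1][1] - d2]])
    for d1, d2 in product((-1.0, 1.0), repeat=2)
)
```

In this example the maximum is attained at \(d=e\), where the smallest eigenvalue of A is shifted down by exactly one.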

Proposition 7

If \(\sigma _{\min }(A)>1\), then

$$\begin{aligned} c_2(A)\le \frac{1}{\sigma _{\min }(A)-1}. \end{aligned}$$(9)

Proof

Note that under the assumption, AVE has a unique solution for any b, see Proposition 3 in [31], and consequently \([A-I, A+I]\) is regular. Let \({\hat{d}}\in \{d:\Vert d\Vert _\infty \le 1\}\). Consider the formulation (7). Since \(\sigma _{\min }(A+B)\ge \sigma _{\min }(A)-\Vert B\Vert _2\) and \(\max _{\Vert d\Vert _\infty \le 1} \Vert \hbox {diag}(d)\Vert _2=1,\) we obtain the desired inequality. \(\square \)

In the following example, we show that the bound (9) can be arbitrarily large while the condition number with respect to the 2-norm, \(c_2(A)\), remains bounded.

Example 2

Let \(\epsilon >0\),

As \(\sigma _{\min }(A)=1+\epsilon \), we have the assumption of Proposition 7. By Proposition 2,

With a little algebra, it is seen that \( c_2(A)\le 6\), while \(\frac{1}{\sigma _{\min }(A)-1}\) goes to infinity as \(\epsilon \) tends to zero.

For matrix A, let

Proposition 8

Let \({\bar{d}}=\hbox {sgn}(\hbox {Diag}(A))\). If

then \(c_2(A)= \Vert (A-\hbox {diag}({\bar{d}}))^{-1}\Vert _2\).

Proof

Let \(d\in \{d:\Vert d\Vert _\infty \le 1\}\). By Theorem 3 in [23], \(\sigma _{\min }(A-\hbox {diag}(d))\ge \alpha -1\). So, \([A-I, A+I]\) is regular. Since \(\Vert A^{-1}\Vert ^{-2}_2 = \lambda _{\min }(A^TA)\), by Proposition 2, \(c_2(A)^{-2}=\min _{|d|=e}\lambda _{\min }\big ((A-\hbox {diag}(d))^T(A-\hbox {diag}(d))\big )\). Suppose that \(|d|=e\). Consider matrix

It is easily seen that T is diagonally dominant with nonnegative diagonal, so it is positive semi-definite. Consequently, \(\lambda _{\min }\big ((A-\hbox {diag}(d))^T(A-\hbox {diag}(d))\big )\ge \lambda _{\min }\big ((A-\hbox {diag}({\bar{d}}))^T(A-\hbox {diag}({\bar{d}}))\big )\), which implies the desired equality. \(\square \)

Note that under the assumptions of Proposition 8, we also have the following bound

As for a permutation matrix P, \(\Vert AP\Vert _2=\Vert A\Vert _2\) and \([-I, I]P=[-I, I]\), the following corollary gives a more generalized form of Proposition 8.

Corollary 1

Let P be a permutation matrix and \(B=AP\). If

then \(c_2(A)= \Vert (B-\hbox {diag}({\bar{d}}))^{-1}\Vert _2\), where \(\bar{d}=\hbox {sgn}(\hbox {Diag}(B))\).

As mentioned earlier, one class of effective approaches to handle AVE is concave optimization methods. Mangasarian [32] proposed the following concave optimization problem,

He showed that AVE has a solution if and only if the optimal value of (10) is zero. Now, we show that (10) has the weak sharp minima property. Consider an optimization problem \(\min _{x\in X} f(x)\) with the optimal solution set S. The set S is called a set of weak sharp minima if there is an \(\alpha >0\) such that

where \({{\,\mathrm{dist}\,}}_S(x):=\min \{\Vert x -s\Vert _2 : s\in S\}\). The notion of weak sharp minima has wide applications in the convergence analysis of iterative methods and error bounds [5, 6].

Proposition 9

Let \(A\in {\mathcal {A}}\). Then the optimal solution of (10) is a weak sharp minimum.

Proof

Let X and \(x^\star \) denote the feasible set and the unique solution of (10), respectively. By Theorem 7, \(c_2(A)\in \mathbb {R}_+\) and

As \(\Vert Ax-|x|-b \Vert _2\le \Vert Ax-|x|-b \Vert _1\) and \(Ax-|x|-b \ge 0\) for \(x\in X\), we have

which shows that \(x^\star \) is a weak sharp minimum. \(\square \)

2.2 Condition number of AVE for \(\infty \)-norm

Some upper bounds were proposed for \(\Vert A^{-1}\Vert _\infty \) and \(\Vert A^{-1}\Vert _1\); see [24, 26, 35, 48]. As Theorem 7 holds for any scaling p-norm, it would be advantageous to use these norms.

Proposition 10

If \((AP-I)^{-1}\ge 0\) and \((AP+I)^{-1}\ge 0\) for some diagonal matrix P with \(|\hbox {Diag}(P)|=e\), then \(c_\infty (A)=\Vert (AP-I)^{-1}e\Vert _\infty \).

Proof

By Theorem 3, under the assumptions of the proposition, the interval matrix \([AP-I, AP+I]\) is regular and inverse nonnegative. In addition, \([AP-I, AP+I]^{-1}\subseteq [(AP+I)^{-1}, (AP-I)^{-1}]\). Since \([AP-I, AP+I]=[A-I, A+I]P\), the interval matrix \([A-I, A+I]\) is regular. It is easily seen that for any non-negative matrix M we have \(\Vert M\Vert _\infty =\Vert Me\Vert _\infty \). Because \(\Vert PM\Vert _\infty =\Vert M\Vert _\infty \) for any matrix M, we get \(c_\infty (A)=\Vert (AP-I)^{-1}e\Vert _\infty \). \(\square \)

One can establish that the assumption of Proposition 10 is equivalent to the condition that each row of B has a constant pattern of signs for any \(B\in [A-I, A+I]^{-1}\). Moreover, we have \(c_1(A)=\Vert (AP-I)^{-1}\Vert _1\) under the assumptions of Proposition 10.
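Proposition 10 can be illustrated with \(P=I\) on a small example. The Python sketch below checks the two inverse-nonnegativity assumptions and compares \(\Vert (A-I)^{-1}e\Vert _\infty \) with a brute-force maximum over the vertices of \([-e,e]\); the matrix and helper names are hypothetical, and the sketch handles only \(2\times 2\) matrices.

```python
from itertools import product


def inv2(M):
    """Closed-form inverse of a 2x2 matrix given as a list of rows."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def norm_inf(M):
    """Induced infinity-norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in M)


def shifted(A, d):
    """Return A - diag(d)."""
    return [[A[i][j] - (d[i] if i == j else 0.0) for j in range(2)]
            for i in range(2)]


A = [[3.0, -1.0], [-1.0, 3.0]]                  # hypothetical example, P = I

lower_inv = inv2(shifted(A, (1.0, 1.0)))        # (A - I)^{-1}
upper_inv = inv2(shifted(A, (-1.0, -1.0)))      # (A + I)^{-1}
assumption_holds = all(v >= 0.0 for row in lower_inv + upper_inv for v in row)

closed_form = max(abs(sum(row)) for row in lower_inv)   # ||(A - I)^{-1} e||_inf
brute_force = max(norm_inf(inv2(shifted(A, d)))
                  for d in product((-1.0, 1.0), repeat=2))
```

When the assumptions hold, a single matrix inversion replaces the enumeration of all \(2^n\) vertices.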

Proposition 11

If \(\rho (|A^{-1}|)<1\), then

where \(H=(I-|A^{-1}|)^{-1}\), \(T=(2\hbox {diag}(\hbox {Diag}(H))-I)^{-1}\) and

Proof

By Theorem 2.40 in [15], \([A-I, A+I]^{-1}\subseteq [B_1, B_2]\). Thus,

\(\square \)

Proposition 12

Let A be an M-matrix. If \(\rho (A^{-1})<1\), then

$$\begin{aligned} c_\infty (A)=\Vert (A-I)^{-1}e\Vert _\infty . \end{aligned}$$

Proof

By Theorem 4(ii), \(A-I\) is an M-matrix. In addition, as M-matrices are preserved by the addition of positive diagonal matrices [3], \(A+I\) is also an M-matrix. Hence, by Theorem 3, \([A-I, A+I]\) is inverse nonnegative. The statement now follows from Proposition 10 with \(P=I\). \(\square \)

Proposition 13

Let A be an H-matrix. If \(\rho (\langle A\rangle ^{-1})<1\), then

Proof

By Theorem 5, the interval matrix \([A-I, A+I]\) is an H-matrix, and thus it is regular. In addition, \(\langle [A-I, A+I]\rangle =[\langle A\rangle -I, \langle A\rangle +I]\). By Theorem 3, \([\langle A\rangle -I, \langle A\rangle +I]^{-1} \subseteq [(\langle A\rangle +I)^{-1}, (\langle A\rangle -I)^{-1}]\). Because \((\langle A\rangle +I)^{-1}\ge 0\),

where the first inequality follows from Theorem 5. \(\square \)

Proposition 14

Let \(r>0\) and  . If

then  .

Proof

First, we show that for a given d with \(\Vert d \Vert _\infty \le 1\), we have the following inequality

Suppose that  and  . We have

Consequently, the interval matrix \([A-I, A+I]\) is regular. Similarly to the proof of Proposition 8, one can show that

The above equality and (14) imply  , and the proof is complete. \(\square \)

Corollary 2

If \(\alpha :=\min _{i=1, \dots , n}\{|A_{ii}|-r_i(A)\}>1,\) then \(c_{\infty }(A)\le \frac{1}{\alpha -1}\).

Corollary 3

If \(\beta :=\min _{j=1, \dots , n}\{|A_{jj}|-\hbox {cl}_j(A)\}>1,\) then \(c_{1}(A)\le \frac{1}{\beta -1}\).
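The bound of Corollary 3 is cheap to evaluate, and the following Python sketch compares it with a brute-force value of \(c_1(A)\) on a \(2\times 2\) example. The sketch assumes that \(\hbox {cl}_j(A)\) denotes the off-diagonal absolute column sum \(\sum _{i\ne j}|A_{ij}|\); this reading, the helper names and the test matrix are assumptions made for illustration.

```python
from itertools import product


def inv2(M):
    """Closed-form inverse of a 2x2 matrix given as a list of rows."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def norm_1(M):
    """Induced 1-norm: maximum absolute column sum."""
    return max(sum(abs(M[i][j]) for i in range(2)) for j in range(2))


A = [[4.0, 1.0], [-1.0, 5.0]]         # hypothetical column diagonally dominant matrix

# Assumed definition: cl_j(A) = sum of |A_ij| over i != j.
beta = min(abs(A[j][j]) - sum(abs(A[i][j]) for i in range(2) if i != j)
           for j in range(2))
bound = 1.0 / (beta - 1.0)

# Brute-force c_1(A) over the vertices d in {-1, 1}^2 (Proposition 2).
c1 = max(
    norm_1(inv2([[A[i][j] - (d[i] if i == j else 0.0) for j in range(2)]
                 for i in range(2)]))
    for d in product((-1.0, 1.0), repeat=2)
)
```

In this example the bound is valid but not tight, which is typical for estimates based only on diagonal dominance.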

3 Error bounds and a condition number of AVE related to linear complementarity problems

The study of AVE is inspired by the well-known linear complementarity problem (LCP) [31], which provides a unified framework for many mathematical programs [10]. In this section, we study error bounds for AVE obtained by transforming LCPs. Consider a general linear complementarity problem

$$\begin{aligned} Mx+q\ge 0, \quad x\ge 0, \quad x^T(Mx+q)=0, \end{aligned}$$

where \(M\in \mathbb {R}^{n\times n}\) and \(q\in \mathbb {R}^{n}\). Throughout the section, without loss of generality, we may assume that one is not an eigenvalue of M. So matrix \((M-I)\) is non-singular. This assumption is not restrictive, as one can rescale M and q in LCP. Problem LCP can be formulated as the following AVE,

see [29]. The following proposition states the relationship between M and \((M+I)(M-I)^{-1}\); see Theorem 2 in [44].

Proposition 15

Let \(M-I\) be non-singular. Matrix M is a P-matrix if and only if \([(M+I)(M-I)^{-1}-I, (M+I)(M-I)^{-1}+I]\) is regular.

In addition to the error bounds introduced for some classes of matrices in the former section, in the following results, we propose error bounds for absolute value equation (15) according to some properties of M.

Proposition 16

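Let M be an M-matrix with \(\hbox {Diag}(M)\le e\) and \(M-I\) be nonsingular. Then, for \(A=(M+I)(M-I)^{-1}\),

$$\begin{aligned} c(A)=\frac{1}{2}\Vert I-M^{-1}\Vert . \end{aligned}$$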

Proof

Since the off-diagonal elements of M are non-positive and \(M^{-1}\ge 0\), we have \(\hbox {Diag}(M^{-1})\ge e\). Putting \(A=(M+I)(M-I)^{-1}\), we get

$$\begin{aligned} A-I=2(M-I)^{-1}, \qquad A+I=2M(M-I)^{-1}. \end{aligned}$$

Therefore, \((A-I)^{-1}=\frac{1}{2}(M-I)\le 0\) and \((A+I)^{-1}=\frac{1}{2}(I-M^{-1})\le 0\). Theorem 3 implies that \([A-I, A+I]\) is regular and \([A-I, A+I]^{-1}\subseteq \frac{1}{2}[I-M^{-1},M-I]\), and consequently, \(c(A)=\frac{1}{2}\Vert I-M^{-1}\Vert \). \(\square \)
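The identities used in this proof can be verified numerically. The Python sketch below builds \(A=(M+I)(M-I)^{-1}\) for a small M-matrix, checks \((A-I)^{-1}=\frac{1}{2}(M-I)\) and \((A+I)^{-1}=\frac{1}{2}(I-M^{-1})\), and compares \(\frac{1}{2}\Vert I-M^{-1}\Vert _\infty \) with a brute-force value of \(c_\infty (A)\). The choice of the \(\infty \)-norm, the matrix M and the helper names are illustrative assumptions.

```python
from itertools import product


def inv2(M):
    """Closed-form inverse of a 2x2 matrix given as a list of rows."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul2(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def add_scaled_identity(X, t):
    """Return X + t * I."""
    return [[X[i][j] + (t if i == j else 0.0) for j in range(2)] for i in range(2)]


def norm_inf(M):
    return max(sum(abs(v) for v in row) for row in M)


M = [[1.0, -0.5], [-0.5, 1.0]]        # hypothetical M-matrix with Diag(M) <= e
M_inv = inv2(M)
A = matmul2(add_scaled_identity(M, 1.0), inv2(add_scaled_identity(M, -1.0)))

half_M_minus_I = [[0.5 * v for v in row] for row in add_scaled_identity(M, -1.0)]
half_I_minus_Minv = [[0.5 * ((1.0 if i == j else 0.0) - M_inv[i][j])
                      for j in range(2)] for i in range(2)]

gap_lower = norm_inf([[x - y for x, y in zip(r1, r2)] for r1, r2 in
                      zip(inv2(add_scaled_identity(A, -1.0)), half_M_minus_I)])
gap_upper = norm_inf([[x - y for x, y in zip(r1, r2)] for r1, r2 in
                      zip(inv2(add_scaled_identity(A, 1.0)), half_I_minus_Minv)])

closed_form = norm_inf(half_I_minus_Minv)        # (1/2) ||I - M^{-1}||_inf
brute_force = max(
    norm_inf(inv2([[A[i][j] - (d[i] if i == j else 0.0) for j in range(2)]
                   for i in range(2)]))
    for d in product((-1.0, 1.0), repeat=2)
)
```

Both gaps should vanish up to rounding, and the brute-force maximum is attained at \(d=-e\), that is, at \(A+I\).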

It is worth noting that the assumption \(\hbox {Diag}(M)\le e\) is not restrictive since LCP(M, q) is equivalent to LCP\((\lambda M, \lambda q)\) for \(\lambda >0\). In the following, we investigate the case that M is an H-matrix. Before we get to the theorem, which gives a bound in this case, we need to present a lemma first.

Lemma 2

If M is an H-matrix with non-negative diagonal, then \(M+I\) is an H-matrix.

Proof

By the assumption, \(\langle M+I\rangle =\langle M\rangle +I\). By using Theorem 4(i), \(M+I\) is an H-matrix. \(\square \)

Theorem 9

Let \(M-I\) be nonsingular and let M be an H-matrix with \(0\le \hbox {Diag}(M)\le e\). Then

Proof

Consider vector \(d\in \mathbb {R}^n\) with \(\Vert d\Vert _\infty \le 1\). We have

where the last inequality follows from \(|M-I|\le I-\langle M\rangle \), Theorem 5 and Lemma 2. Thus, \(\rho ((M-I)(M+I)^{-1}\hbox {diag}(d))\le \rho ((I-\langle M\rangle )(\langle M\rangle +I)^{-1})\). Since \(\langle M\rangle \) is an M-matrix and \(\rho (BC)=\rho (CB)\), we have \(\rho ((I-\langle M\rangle )(\langle M\rangle +I)^{-1})<1\); see Theorem 4(i). Hence, \(\rho ((M-I)(M+I)^{-1}\hbox {diag}(d))<1\).

Let \({\hat{A}}\in [(M+I)(M-I)^{-1}-I, (M+I)(M-I)^{-1}+I]\). So \({\hat{A}}=(M+I)(M-I)^{-1}-\hbox {diag}(d)\) for some d with \(\Vert d\Vert _\infty \le 1\). Hence,

By applying Neumann series and the obtained results, we have

where the last equality is obtained by using the relations \((I-A)^{-1}A=(A^{-1}-I)^{-1}\) and \(((I+\langle M\rangle )(I-\langle M\rangle )^{-1}-I)^{-1}=(2\langle M\rangle (I-\langle M\rangle )^{-1})^{-1}=\frac{1}{2}(\langle M\rangle ^{-1}-I)\). Therefore, \(\Vert {\hat{A}}^{-1}\Vert \le \frac{1}{2}\Vert \langle M\rangle ^{-1}-I\Vert \), and the proof is complete. \(\square \)
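The final estimate \(\Vert {\hat{A}}^{-1}\Vert \le \frac{1}{2}\Vert \langle M\rangle ^{-1}-I\Vert \) can be illustrated on a small example. This is a sketch under stated assumptions, not a verification of the theorem: the matrix `M` is a hypothetical \(2\times 2\) H-matrix with \(0\le \hbox {Diag}(M)\le e\), the \(\infty \)-norm is used, and \({\hat{A}}=A-\hbox {diag}(d)\) is sampled on a grid of d in \([-1,1]^2\).

```python
from fractions import Fraction as F
from itertools import product

def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def mul2(x, y):
    """Product of two 2x2 matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def norm_inf(m):
    """Induced infinity-norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in m)

I2 = [[F(1), F(0)], [F(0), F(1)]]
# Hypothetical H-matrix (not an M-matrix: one off-diagonal entry is positive).
M = [[F(1), F(1, 2)], [F(-1, 2), F(1)]]

# Comparison matrix <M>: |m_ii| on the diagonal, -|m_ij| off the diagonal.
comp = [[abs(M[i][j]) if i == j else -abs(M[i][j]) for j in range(2)]
        for i in range(2)]
comp_inv = inv2(comp)
bound = norm_inf([[comp_inv[i][j] - I2[i][j] for j in range(2)]
                  for i in range(2)]) / 2

M_minus_I = [[M[i][j] - I2[i][j] for j in range(2)] for i in range(2)]
M_plus_I = [[M[i][j] + I2[i][j] for j in range(2)] for i in range(2)]
A = mul2(M_plus_I, inv2(M_minus_I))  # A = (M+I)(M-I)^{-1}

# Largest sampled norm of (A - diag(d))^{-1} over a grid in [-1, 1]^2.
grid = [F(k, 4) for k in range(-4, 5)]
worst = max(
    norm_inf(inv2([[A[0][0] - d1, A[0][1]], [A[1][0], A[1][1] - d2]]))
    for d1, d2 in product(grid, grid)
)

print(worst, bound)
```

Here the sampled maximum is 3/8 while the bound evaluates to 1/2, so the estimate holds with some slack on this instance.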

In the rest of this section, by using the obtained results, we present new error bounds for linear complementarity problems. Many papers have been devoted to error bounds for LCP(M, q); see [7, 8, 10, 16, 38]. It is easily seen that \({\hat{x}}\) is a solution of LCP if and only if \({\hat{x}}\) solves

\[ \theta (x):=\min (x, Mx+q)=0. \]

The function \(\theta (x)\) is called the natural residual of LCP. As mentioned earlier, LCP has a unique solution for each q if and only if M is a P-matrix. For M being a P-matrix, Chen and Xiang [7] proposed the following error bound

\[ \Vert x-x^\star \Vert \le \max _{D\in [0, I]}\Vert (I-D+DM)^{-1}\Vert \, \Vert \theta (x)\Vert , \]

where \(x^\star \) is the solution of LCP, \(x\in {\mathbb {R}}^n\) is arbitrary, and the maximum is taken over diagonal matrices D with \(0\le D\le I\). By introducing a new variable d with \(\hbox {diag}(d)=2D-I\), we have

Because \(c(A)=c(-A)\), we have

Therefore, the given results in this paper can be exploited for providing an upper bound for this maximization. For instance, Chen and Xiang, see Theorem 2.2 in [7], proved that when M is an M-matrix, then

where \(f(v)=\max _{1\le i \le n}(e+v-M^Tv)_i\) and \(V=\{v: M^Tv\le e, v\ge 0\}\). As seen, f is a piece-wise linear convex function. However, maximization of a convex function is an intractable problem in general. In this case, one needs to solve n linear programs. In the next proposition, we give an explicit formula for the optimal value for \(\infty \)-norm.

Proposition 17

Let M be an M-matrix with \(\hbox {Diag}(M)\le e\). Then

where for \(i, j=1, \dots , n\)

and \(\hat{B}=\max (| \underline{{B}}|, | \overline{{B}}|)\).

Proof

Similarly to the proof of Proposition 16, if \(\hbox {Diag}(M)\le e\), we have \([(I+M)(I-M)^{-1}-I, (I+M)(I-M)^{-1}+I]^{-1}\subseteq \frac{1}{2}[I-M, M^{-1}-I]\). Therefore, by (16)

Furthermore, \(\{ (I-M)^{-1} X: I-M\le X \le M^{-1}-I \}\subseteq [\underline{{B}}, \overline{{B}}]\). Hence,

On the other hand, suppose that \(\Vert \hat{B}\Vert _\infty =\Vert \hat{B}_{i*}\Vert _\infty \). There exists \({\check{B}}\in \{ (I-M)^{-1} X: I-M\le X \le M^{-1}-I \}\) such that \(| {\check{B}}_{i*}|=\hat{B}_{i*}\), which implies that the above inequality holds as an equality, and the proof is complete. \(\square \)

For M being an H-matrix with \(0\le \hbox {Diag}(M)\le e\), similarly to the proof of Theorem 9, one can show that for d with \(\Vert d\Vert _\infty \le 1\),

Therefore, by (16), we get

which is a well-known bound; see Theorem 2.1 in [7]. Here, we obtain inequality (17) with a different method as a by-product of our analysis.
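The change of variables \(\hbox {diag}(d)=2D-I\) used in this section links the constant of Chen and Xiang to the condition number of the transformed AVE. The short derivation below is a sketch under the following assumptions: D is diagonal with entries in \([0,1]\), \(A:=(M+I)(M-I)^{-1}\), and \(c(A)\) denotes \(\max _{\Vert d\Vert _\infty \le 1}\Vert (A-\hbox {diag}(d))^{-1}\Vert \) as in the earlier sections.

```latex
\begin{align*}
D=\tfrac12\bigl(I+\operatorname{diag}(d)\bigr),\ \|d\|_\infty\le 1
\;\Longrightarrow\;
I-D+DM
&=\tfrac12\bigl(I-\operatorname{diag}(d)\bigr)+\tfrac12\bigl(I+\operatorname{diag}(d)\bigr)M\\
&=\tfrac12\bigl[(M+I)+\operatorname{diag}(d)(M-I)\bigr]\\
&=\tfrac12\bigl[A+\operatorname{diag}(d)\bigr](M-I),\\
(I-D+DM)^{-1}
&=2\,(M-I)^{-1}\bigl[A+\operatorname{diag}(d)\bigr]^{-1}.
\end{align*}
```

Since the box \(\Vert d\Vert _\infty \le 1\) is symmetric under \(d\mapsto -d\), the maximum of \(\Vert (A+\hbox {diag}(d))^{-1}\Vert \) over the box equals \(c(A)\). For a submultiplicative norm, one consequence is \(\max _{D}\Vert (I-D+DM)^{-1}\Vert \le 2\Vert (M-I)^{-1}\Vert \, c(A)\).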

4 Relative condition number of AVE

We introduce a relative condition number as follows

which is equal to \(c(A) \max _{\Vert d\Vert _\infty \le 1}\Vert A-\hbox {diag}(d)\Vert \). The meaning of the relative condition number follows from the bounds presented in the proposition below. They extend the bounds known for the error of standard linear systems of equations [18].

Proposition 18

If the interval matrix \([A-I, A+I]\) is regular and \(b\ne 0\), then for each \(x\in \mathbb {R}^n\)

Proof

Since \(b\ne 0\), we have \(x^\star \ne 0\). First, we show the upper bound. Denote \(s^\star :=\hbox {sgn}(x^\star )\). As \(Ax^\star -b=|x^\star |=\hbox {diag}(s^\star )x^\star \), we derive \((A-\hbox {diag}(s^\star ))x^\star =b\), from which \(\Vert A-\hbox {diag}(s^\star )\Vert \cdot \Vert x^\star \Vert \ge \Vert b\Vert \). Now, we have by Theorem 7

from which the bound follows.

Now, we establish the lower bound. From the proof of Theorem 7, we know that there exists some \({\hat{A}}\in [A-I, A+I]\) such that \(Ax-|x|-b=\hat{A}(x-x^\star )\). Hence,

from which the statement follows. \(\square \)

Remark 2

The solutions of AVE lying in the orthant \(\hbox {diag}(d)x\ge 0\), \(d\in \{\pm 1\}^n\), are described by \((A-\hbox {diag}(d))x=b\). This suggests introducing the condition number as

where \(\kappa \) is the classical condition number. This value then reads

The main difference from \(c^*(A)\) is that the definition of \(c^*(A)\) involves two separate maximization problems. The need for that may stem from possible variations of the solution between different orthants (e.g., when it lies on the border between two of them), whereas the above expression handles orthants separately.

In order to compute \(c^*(A)\) we have to determine c(A) and \(\max _{\Vert d\Vert _\infty \le 1}\Vert A-\hbox {diag}(d)\Vert \). The former is discussed in detail in the previous sections, so we focus on the latter now. Recall that a norm is absolute if \(\Vert A\Vert =\Vert |A|\Vert \), and it is monotone if \(|A|\le |B|\) implies \(\Vert A\Vert \le \Vert B\Vert \). For example, 1-norm, \(\infty \)-norm, Frobenius norm or max norm are both absolute and monotone.

Proposition 19

For any absolute and monotone matrix norm

Proof

We have

and equality is attained for a certain d with \(\Vert d\Vert _\infty =1\). \(\square \)
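The argument of the proof can be illustrated numerically. The sketch below assumes that the closed form in question is \(\Vert |A|+I\Vert \), which is what the entrywise estimate \(|A-\hbox {diag}(d)|\le |A|+I\) gives for an absolute and monotone norm. It uses the \(\infty \)-norm and a hypothetical \(3\times 3\) matrix. Because \(d\mapsto \Vert A-\hbox {diag}(d)\Vert \) is convex, its maximum over the box is attained at a vertex, so enumerating \(d\in \{\pm 1\}^n\) suffices.

```python
from itertools import product

def norm_inf(m):
    """Induced infinity-norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in m)

def max_over_box(a):
    """Maximum of ||A - diag(d)||_inf over the vertices d in {-1, +1}^n."""
    n = len(a)
    best = 0.0
    for d in product((-1.0, 1.0), repeat=n):
        shifted = [[a[i][j] - (d[i] if i == j else 0.0) for j in range(n)]
                   for i in range(n)]
        best = max(best, norm_inf(shifted))
    return best

def closed_form(a):
    """|| |A| + I ||_inf, the assumed closed-form value."""
    n = len(a)
    return norm_inf([[abs(a[i][j]) + (1.0 if i == j else 0.0)
                      for j in range(n)] for i in range(n)])

# Hypothetical test matrix with positive, negative and zero diagonal entries.
A = [[2.0, -1.0, 0.5],
     [0.0, -3.0, 1.0],
     [1.5, 2.0, 0.0]]

print(max_over_box(A), closed_form(A))
```

Both values equal 5.0 for this matrix. A maximizing vertex takes \(d_i=-1\) when \(a_{ii}\ge 0\) and \(d_i=1\) otherwise, so that \(|a_{ii}-d_i|=|a_{ii}|+1\).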

Proposition 20

For spectral norm we have

Moreover, it holds as an equality when A is symmetric.

Proof

We have \(\Vert A-\hbox {diag}(d)\Vert _2\le \Vert A\Vert _2+\Vert \hbox {diag}(d)\Vert _2\le \Vert A\Vert _2+1\). \(\square \)

5 Error bounds and convergence analysis

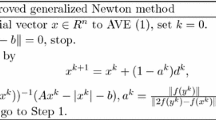

As mentioned earlier, error bounds have been employed as a powerful tool for the analysis of iterative methods. In this section, we study two well-known algorithms, a generalized Newton method [30] and the Picard method [45], for solving AVE. By using error bounds, we provide some sufficient conditions for the convergence. In addition, we establish the linear convergence of the aforementioned methods. Our approach is in the spirit of convergence analysis in [27, 28, 47].

Mangasarian [30] proposed a generalized Newton method for solving AVE. In this method, the starting point \(x^1\) is chosen arbitrarily and the Newton iteration is as follows,

\[ x^{k+1}=(A-D^k)^{-1}b, \tag{18} \]

where \(D^k=\hbox {diag}(\hbox {sgn}(x^k))\). The function \(U(x)=\Vert Ax-|x|-b\Vert _2\) is non-negative and \(U({\bar{x}})=0\) if and only if \({\bar{x}}\) is a solution of AVE. In the literature, function U is called a potential function or a Lyapunov function.

Mangasarian established that the generalized Newton method is convergent when \(\sigma _{\min }(A)>4\); see Proposition 7 in [30]. Cruz et al. proved the convergence under the weaker assumption \(\sigma _{\min }(A)>3\); see Remark 3 in [12]. In the next theorem, we show the convergence of the generalized Newton method with a different method. Indeed, we prove the linear convergence of \(\{U(x^k)\}\) by using error bounds.

Theorem 10

If \(\sigma _{\min }(A)>3\), then the generalized Newton iteration (18) converges linearly from any starting point and

Proof

The assumption implies that the interval matrix \([A-I, A+I]\) is regular, and consequently AVE has a unique solution. Due to the Newton iteration (18), we have

Hence, by Theorem 7, we get

On the other hand, by (20) together with (18), we obtain

where the last inequality follows from \(\sigma _{\min }(A-D^k)\ge \sigma _{\min }(A)-\sigma _{\max }(D^k)\). Inequalities (20) and (22) imply

yielding (19). Since the function U is non-negative, inequality (19) implies that \(U(x^k)\) goes to zero as k tends to infinity. Hence, by (21), \(\Vert x^{k+1}-x^\star \Vert _2\) tends to zero and the algorithm is convergent. Moreover, inequality (19) implies the linear convergence of \(\{U(x^k)\}\). \(\square \)
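To make the iteration concrete, the following sketch runs the generalized Newton method on a small instance. It assumes that iteration (18) is Mangasarian's update \(x^{k+1}=(A-D^k)^{-1}b\) with \(D^k=\hbox {diag}(\hbox {sgn}(x^k))\). The data `A` and `b`, the helper names, the tolerance and the iteration cap are hypothetical choices made for illustration, and \(U(x)=\Vert Ax-|x|-b\Vert _2\) serves as the stopping criterion.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

def sign(t):
    """Sign of a real number, with sign(0) = 0."""
    return (t > 0) - (t < 0)

def residual_norm(a, b, x):
    """Potential function U(x) = ||A x - |x| - b||_2."""
    n = len(b)
    r = [sum(a[i][j] * x[j] for j in range(n)) - abs(x[i]) - b[i]
         for i in range(n)]
    return sum(v * v for v in r) ** 0.5

def generalized_newton(a, b, x, tol=1e-12, max_iter=50):
    """Iterate x <- (A - diag(sgn(x)))^{-1} b until U(x) <= tol."""
    n = len(b)
    for _ in range(max_iter):
        if residual_norm(a, b, x) <= tol:
            break
        shifted = [[a[i][j] - (sign(x[i]) if i == j else 0)
                    for j in range(n)] for i in range(n)]
        x = solve(shifted, b)
    return x

# Hypothetical data: A is symmetric with singular values 4 and 6,
# so sigma_min(A) > 3; b is chosen so that x* = (1, -2) solves AVE.
A = [[5.0, 1.0], [1.0, 5.0]]
b = [2.0, -11.0]
x = generalized_newton(A, b, [0.0, 0.0])
print(x, residual_norm(A, b, x))
```

Starting from the origin, the first step identifies the sign pattern of the solution and the second step lands on \(x^\star =(1,-2)^T\), which is consistent with the finite termination discussed later in this section.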

In the next theorem, we prove that the generalized Newton method is convergent under the assumptions of Proposition 10. Barrios et al. employed similar assumptions to prove the convergence of a semi-smooth Newton method for the piecewise linear system \(\max (x,0)+Tx=b\), where \(T\in \mathbb {R}^{n\times n}\), \(b\in \mathbb {R}^{n}\); see Theorem 3 in [2]. Note that the piecewise linear system \(\max (x,0)+Tx=b\) is equivalent to \(-(I+2T)x-|x|=-2b\). To prove the convergence of the generalized Newton method, we use the potential function \(W(x)=\Vert x-x^\star \Vert _1\).
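The equivalence between the piecewise linear system and AVE stated above follows from the componentwise identity \(\max (x,0)=\frac{1}{2}(x+|x|)\). A short worked derivation:

```latex
\begin{align*}
\max(x,0)+Tx=b
&\;\Longleftrightarrow\;\tfrac12 x+\tfrac12|x|+Tx=b\\
&\;\Longleftrightarrow\;(I+2T)x+|x|=2b\\
&\;\Longleftrightarrow\;-(I+2T)x-|x|=-2b .
\end{align*}
```

In the notation of AVE, this corresponds to the matrix \(-(I+2T)\) and the right-hand side \(-2b\).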

Theorem 11

Let \((AP-I)^{-1}\ge 0\) and \((AP+I)^{-1}\ge 0\) for a diagonal matrix P such that \(|\hbox {Diag}(P)|=e\). Then the generalized Newton iteration (18) converges linearly from any starting point and

where \(\ell =\max _{\Vert d\Vert _\infty \le 1} \Vert A-\hbox {diag}(d)\Vert _1\).

Proof

By the proof of Proposition 10, one can infer that AVE has a unique solution. Without loss of generality, we may assume that \(P=I\). For the residual function \(\phi (x)=Ax-|x|-b\), we have

for \( x, y\in \mathbb {R}^n\). By (24) together with \(\phi (x^{k})=(A-D^k)(x^{k}-x^{k+1})\), we get

By virtue of (24) for \(k\ge 2\), we obtain

where the last inequality follows from \((A-D^k)^{-1}\ge 0\) and (25). Because \(x^{k+1}=x^k-(A-D^k)^{-1}\phi (x^k)\), we get

By the above inequality, we get

By using Theorem 7 and \(\phi (x^{k})=(A-D^k)(x^{k}-x^{k+1})\), we have

We can infer from inequalities (26)–(28),

from which the statement follows. \(\square \)

Note that, under the assumptions of Theorem 11, the unique solution of AVE can be obtained by solving just one linear program; see Proposition 4 in [52]. In the following proposition, we establish the finite convergence of the generalized Newton method under some mild conditions.

Proposition 21

Let \([A-I, A+I]\) be regular. If \(\{x^k\}\) converges to \(x^\star \), then the generalized Newton method is finitely convergent.

Proof

First, we consider the case that all components of \(x^\star \) are non-zero. In this case, the proof follows from the fact that we have \(D^k=\hbox {diag}(\hbox {sgn}(x^\star ))\) for \(x^k\) sufficiently close to \(x^\star \). Now, we investigate the case that some components of \(x^\star \) are zero. Let \({\mathcal {K}}=\{i: x^\star _i=0\}\). Due to the regularity of \([A-I, A+I]\), it is seen that \(x^\star \) is the unique solution of the linear system \((A-D)x=b\) for any diagonal matrix D with

Hence, for \(x^k\) sufficiently close to \(x^\star \), we have \((A-D^k)x^{\star }=b\) and the proof is complete. \(\square \)

In what follows, we investigate the Picard iterative method for solving AVE. We refer the interested reader to Chapter 7 in [37] for more information on this method.

The Picard iterative method was employed by Rohn et al. [45] for tackling AVE. The method can be summarized as follows

\[ x^{k+1}=A^{-1}(|x^k|+b), \tag{29} \]

where \(x^1\in \mathbb {R}^n\) is an arbitrary point. They proved that the Picard method (29) is convergent if \(\rho (|A^{-1}|)<1\); see Theorem 2 in [45]. The next proposition gives a sufficient condition for the convergence by using error bounds.

Proposition 22

If \(\sigma _{\min }(A)>1\), then the Picard method (29) converges from any starting point and

Proof

We follow the analogous arguments used in Theorem 10. By (29),

Due to Theorem 7, we have

By virtue of (29) and (30), we get

The rest of the proof is analogous to that of Theorem 10. \(\square \)
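The behaviour of the Picard method under \(\sigma _{\min }(A)>1\) can be illustrated with a short experiment. The sketch assumes that (29) is the fixed-point update \(x^{k+1}=A^{-1}(|x^k|+b)\). The data are hypothetical, and the contraction factor \(1/\sigma _{\min }(A)\) checked below is the elementary estimate \(\Vert x^{k+1}-x^\star \Vert _2\le \Vert A^{-1}\Vert _2\Vert x^k-x^\star \Vert _2\), which need not coincide with the constant in Proposition 22.

```python
def picard(a_inv, b, x, steps):
    """Iterate x <- A^{-1}(|x| + b) and return the list of all iterates."""
    n = len(b)
    iterates = [x]
    for _ in range(steps):
        rhs = [abs(x[i]) + b[i] for i in range(n)]
        x = [sum(a_inv[i][j] * rhs[j] for j in range(n)) for i in range(n)]
        iterates.append(x)
    return iterates

def dist2(x, y):
    """Euclidean distance between two vectors."""
    return sum((p - q) ** 2 for p, q in zip(x, y)) ** 0.5

# Hypothetical data: A = [[5, 1], [1, 5]] has singular values 4 and 6,
# so sigma_min(A) = 4 > 1; its inverse is written out explicitly.
A_inv = [[5.0 / 24.0, -1.0 / 24.0], [-1.0 / 24.0, 5.0 / 24.0]]
b = [2.0, -11.0]          # chosen so that x* = (1, -2) solves A x - |x| = b
x_star = [1.0, -2.0]

iterates = picard(A_inv, b, [0.0, 0.0], 30)
errors = [dist2(x, x_star) for x in iterates]
print(errors[:4], errors[-1])
```

On this instance every step reduces the error by at least the factor \(1/4\), and after 30 steps the iterate agrees with \(x^\star \) up to rounding.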

It is worth mentioning that the conditions \(\sigma _{\min }(A)>1\) and \(\rho (|A^{-1}|)<1\) do not necessarily imply each other. To prove the convergence under the assumption \(\rho (|A^{-1}|)<1\) by using this framework, one needs to modify the potential function U. Let \(\rho (|A^{-1}|)<\gamma <1\). Similarly to the proof of Theorem 8, there exists an invertible matrix B with \(|A^{-1}|<B\) and \(\rho (B)= \gamma \). In addition, for some \(v>0\),  is a norm with  . We define the potential function  .

Proposition 23

If \(\rho (|A^{-1}|)<\gamma <1\), then the Picard method (29) converges from any starting point and

Proof

By virtue of (29),

where the last inequality follows from  . By Theorem 8, we have

Equations (29) and (31) imply that

The rest of the proof is analogous to the proof of Theorem 10. \(\square \)

6 Error bounds for the absolute value equations with multiple solutions

This section studies error bounds for AVE when the solution set is non-empty and the interval matrix \([A-I, A+I]\) is not necessarily regular. Under this setting, AVE may have multiple or infinitely many solutions. Let \(X^\star \) denote the solution set of AVE. It is easily seen that \(X^\star \) may be written as a finite union of polyhedral sets.

By the locally upper Lipschitzian property of polyhedral set-valued mappings, see Proposition 1 in [40], AVE has the local error bounds property. That is, there exist \(\epsilon >0\) and \(\kappa >0\) such that

when \(\Vert Ax-|x|-b\Vert \le \epsilon \). However, in general, the global error bounds property does not necessarily hold. The following example illustrates this point.

Example 3

Consider the system AVE in the form

One can check that \(X^\star =\left\{ (-2,-2)^T,(-3,5)^T,(10,-6)^T \right\} \). Let \(x(t)=(t,t)^T\), where \(t>0\). It is seen that \(\Vert Ax(t)-|x(t)|-b\Vert =\Vert b\Vert \), while \({{\,\mathrm{dist}\,}}_{X^\star }(x(t))\) tends to infinity as \(t\rightarrow \infty \).
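The claims of this example can be checked numerically. The data `A` and `b` below are an assumption: they are obtained by solving the six linear equations that the three listed solutions impose on the entries of A and b, which determine them uniquely, and their presentation may differ from the system displayed by the authors. The helper names are hypothetical.

```python
# Assumed data, recovered from the three listed solutions (see lead-in).
A = [[0.75, 0.25], [0.5, 0.5]]
b = [-4.0, -4.0]

def residual(x):
    """Residual A x - |x| - b of the absolute value equation."""
    return [sum(A[i][j] * x[j] for j in range(2)) - abs(x[i]) - b[i]
            for i in range(2)]

def norm2(v):
    """Euclidean norm."""
    return sum(t * t for t in v) ** 0.5

solutions = [(-2.0, -2.0), (-3.0, 5.0), (10.0, -6.0)]

def dist_to_solutions(x):
    """Distance from x to the finite solution set X*."""
    return min(norm2([x[0] - s[0], x[1] - s[1]]) for s in solutions)

# Each listed point has zero residual.
for s in solutions:
    print(s, norm2(residual(list(s))))

# Along x(t) = (t, t) the residual norm stays at ||b|| while the
# distance to the solution set grows without bound.
for t in (1.0, 10.0, 100.0, 1000.0):
    x = [t, t]
    print(t, norm2(residual(x)), dist_to_solutions(x))
```

With these data, \(Ax(t)=|x(t)|\) for every \(t>0\), so the residual equals \(-b\) along the whole ray, and no constant \(\kappa \) can bound the distance to \(X^\star \) by a multiple of the residual norm.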

In the next theorem, we give a sufficient condition under which the global error bounds hold.

Theorem 12

Let \(X^\star \) be non-empty. If zero is the unique solution of \(Ax-|x|=0\), then there exists \(\kappa >0\) such that

Proof

The idea of the proof is similar to that of Theorem 2.1 in [34]. Suppose to the contrary that (33) does not hold. Hence, for each \(k\in \mathbb {N}\), there exists \(x^k\) such that

where \({\bar{x}}\in X^\star \). Due to the local error bounds property (32), there exists \(\epsilon >0\) such that \(\Vert Ax^k-|x^k|-b\Vert >\epsilon \) for each \(k\ge k_0\), where \(k_0\) is sufficiently large. Consequently, \(\Vert x^k-{\bar{x}}\Vert \) tends to infinity as \(k\rightarrow \infty \). Choosing subsequences if necessary, we may assume that \(\frac{x^k}{\Vert x^k\Vert }\) goes to a non-zero vector d. By dividing both sides of (34) by \(k\Vert x^k\Vert \) and taking the limit as k goes to infinity, we get

which contradicts the assumptions. \(\square \)

7 Conclusion

In this paper, we studied error bounds for absolute value equations. We suggested formulas for the computation of error bounds for certain classes of matrices. The investigation of other classes of matrices may be of interest for further research. The proposed formulas can be employed not only for the absolute value equations obtained by transforming the linear complementarity problem, but also for the linear complementarity problem itself. In addition, we showed that the computation of error bounds, except for the 2-norm, for a general matrix is an NP-hard problem, and it remains an open problem for the 2-norm. To demonstrate the importance of the error bounds, we applied them in a convergence analysis of two methods used to solve the absolute value equations.

References

Abdallah, L., Haddou, M., Migot, T.: Solving absolute value equation using complementarity and smoothing functions. J. Comput. Appl. Math. 327, 196–207 (2018)

Barrios, J., Cruz, J.B., Ferreira, O.P., Németh, S.Z.: A semi-smooth Newton method for a special piecewise linear system with application to positively constrained convex quadratic programming. J. Comput. Appl. Math. 301, 91–100 (2016)

Berman, A., Plemmons, R.J.: Nonnegative Matrices in the Mathematical Sciences, Classics in Applied Mathematics, vol. 9. SIAM, Philadelphia (1994)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press (2004)

Burke, J.V., Deng, S.: Weak sharp minima revisited, part II: application to linear regularity and error bounds. Math. Program. 104(2–3), 235–261 (2005)

Burke, J.V., Ferris, M.C.: Weak sharp minima in mathematical programming. SIAM J. Control Optim. 31(5), 1340–1359 (1993)

Chen, X., Xiang, S.: Computation of error bounds for P-matrix linear complementarity problems. Math. Program. 106(3), 513–525 (2006)

Chen, X., Xiang, S.: Perturbation bounds of P-matrix linear complementarity problems. SIAM J. Optim. 18(4), 1250–1265 (2007)

Clarke, F.H.: Optimization and Nonsmooth Analysis, Classics in Applied Mathematics, vol. 5. SIAM, Philadelphia (1990)

Cottle, R.W., Pang, J.S., Stone, R.E.: The Linear Complementarity Problem. SIAM (2009)

Coulibaly, A., Crouzeix, J.P.: Condition numbers and error bounds in convex programming. Math. Program. 116(1–2), 79–113 (2009)

Cruz, J.B., Ferreira, O.P., Prudente, L.: On the global convergence of the inexact semi-smooth Newton method for absolute value equation. Comput. Optim. Appl. 65(1), 93–108 (2016)

Fabian, M.J., Henrion, R., Kruger, A.Y., Outrata, J.V.: Error bounds: necessary and sufficient conditions. Set-Valued Var. Anal. 18(2), 121–149 (2010)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer (2007)

Fiedler, M., Nedoma, J., Ramík, J., Rohn, J., Zimmermann, K.: Linear Optimization Problems with Inexact Data. Springer, New York (2006)

García-Esnaola, M., Peña, J.M.: A comparison of error bounds for linear complementarity problems of H-matrices. Linear Algebra Appl. 433(5), 956–964 (2010)

Hendrickx, J.M., Olshevsky, A.: Matrix \(p\)-norms are NP-hard to approximate if \(p\ne 1,2,\infty \). SIAM J. Matrix Anal. Appl. 31(5), 2802–2812 (2010)

Higham, N.J.: Accuracy and Stability of Numerical Algorithms. SIAM, Philadelphia (2002)

Hiriart-Urruty, J.: Mean value theorems in nonsmooth analysis. Numer. Funct. Anal. Optim. 2(1), 1–30 (1980)

Hladík, M.: Bounds for the solutions of absolute value equations. Comput. Optim. Appl. 69(1), 243–266 (2018)

Hladík, M.: An overview of polynomially computable characteristics of special interval matrices. In: Kosheleva, O., et al. (eds.) Beyond Traditional Probabilistic Data Processing Techniques: Interval, Fuzzy etc. Methods and Their Applications, Studies in Computational Intelligence, vol. 835, pp. 295–310. Springer, Cham (2020)

Horn, R.A., Johnson, C.R.: Matrix Analysis, 2nd edn. Cambridge University Press, Cambridge (2013)

Johnson, C.R.: A Gersgorin-type lower bound for the smallest singular value. Linear Algebra Appl. 112, 1–7 (1989)

Kolotilina, L.Y.: Bounds for the infinity norm of the inverse for certain M-and H-matrices. Linear Algebra Appl. 430(2–3), 692–702 (2009)

Kuttler, J.R.: A fourth-order finite-difference approximation for the fixed membrane eigenproblem. Math. Comput. 25(114), 237–256 (1971)

Li, C., Cvetković, L., Wei, Y., Zhao, J.: An infinity norm bound for the inverse of Dashnic-Zusmanovich type matrices with applications. Linear Algebra Appl. 565, 99–122 (2019)

Luo, Z.Q.: New error bounds and their applications to convergence analysis of iterative algorithms. Math. Program. 88(2), 341–355 (2000)

Luo, Z.Q., Tseng, P.: Error bound and convergence analysis of matrix splitting algorithms for the affine variational inequality problem. SIAM J. Optim. 2(1), 43–54 (1992)

Mangasarian, O.: Absolute value programming. Comput. Optim. Appl. 36(1), 43–53 (2007)

Mangasarian, O.: A generalized Newton method for absolute value equations. Optim. Lett. 3(1), 101–108 (2009)

Mangasarian, O., Meyer, R.: Absolute value equations. Linear Algebra Appl. 419(2–3), 359–367 (2006)

Mangasarian, O.L.: Absolute value equation solution via concave minimization. Optim. Lett. 1(1), 3–8 (2007)

Mangasarian, O.L.: A hybrid algorithm for solving the absolute value equation. Optim. Lett. 9(7), 1469–1474 (2015)

Mangasarian, O.L., Ren, J.: New improved error bounds for the linear complementarity problem. Math. Program. 66(1), 241–255 (1994)

Morača, N.: Bounds for norms of the matrix inverse and the smallest singular value. Linear Algebra Appl. 429(10), 2589–2601 (2008)

Neumaier, A.: Interval Methods for Systems of Equations. Cambridge University Press, Cambridge (1990)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. SIAM (2000)

Pang, J.S.: Error bounds in mathematical programming. Math. Program. 79(1–3), 299–332 (1997)

Qi, L., Womersley, R.S.: On extreme singular values of matrix valued functions. J. Convex Anal. 3, 153–166 (1996)

Robinson, S.M.: Some continuity properties of polyhedral multifunctions. In: Mathematical Programming at Oberwolfach, pp. 206–214. Springer (1981)

Rohn, J.: Systems of linear interval equations. Linear Algebra Appl. 126(C), 39–78 (1989)

Rohn, J.: Computing the norm \(\Vert A\Vert _{\infty,1}\) is NP-hard. Linear Multilinear Algebra 47(3), 195–204 (2000)

Rohn, J.: A theorem of the alternatives for the equation \(Ax + B|x| = b\). Linear Multilinear Algebra 52(6), 421–426 (2004)

Rohn, J.: On Rump’s characterization of P-matrices. Optim. Lett. 6(5), 1017–1020 (2012)

Rohn, J., Hooshyarbakhsh, V., Farhadsefat, R.: An iterative method for solving absolute value equations and sufficient conditions for unique solvability. Optim. Lett. 8(1), 35–44 (2014)

Schrijver, A.: Theory of Linear and Integer Programming. Repr. Wiley, Chichester (1998)

Solodov, M.V.: Convergence rate analysis of iterative algorithms for solving variational inequality problems. Math. Program. 96(3), 513–528 (2003)

Varah, J.M.: A lower bound for the smallest singular value of a matrix. Linear Algebra Appl. 11(1), 3–5 (1975)

Wang, H., Cao, D., Liu, H., Qiu, L.: Numerical validation for systems of absolute value equations. Calcolo 54(3), 669–683 (2017)

Wang, H., Liu, H., Cao, S.: A verification method for enclosing solutions of absolute value equations. Collectanea Math. 64(1), 17–38 (2013)

Wu, S.L., Li, C.X.: The unique solution of the absolute value equations. Appl. Math. Lett. 76, 195–200 (2018)

Zamani, M., Hladík, M.: A new concave minimization algorithm for the absolute value equation solution. Optim. Lett. 15(6), 2241–2254 (2021)

Zhang, C., Wei, Q.: Global and finite convergence of a generalized Newton method for absolute value equations. J. Optim. Theory Appl. 143(2), 391–403 (2009)

Acknowledgements

The authors would like to thank two anonymous referees for their valuable comments and suggestions, which helped to improve the paper considerably. The authors were supported by the Czech Science Foundation Grant P403-18-04735S.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zamani, M., Hladík, M. Error bounds and a condition number for the absolute value equations. Math. Program. 198, 85–113 (2023). https://doi.org/10.1007/s10107-021-01756-6

DOI: https://doi.org/10.1007/s10107-021-01756-6

Keywords

- Absolute value equation

- Error bounds

- Condition number

- Linear complementarity problem

- Interval matrix

- Convergence rate