Abstract

Background

Psychometric instruments assessing behavioural and functional outcomes (BFIs) in neurological, geriatric and psychiatric populations are relevant to diagnosis, prognosis and intervention. However, BFIs often fail to meet methodological-statistical standards, which lowers their level of recommendation in clinical practice and research. This work therefore aimed at (1) providing an up-to-date compendium on the psychometrics, diagnostics and usability of available Italian BFIs and (2) delivering evidence-based information on their level of recommendation.

Methods

This review was pre-registered (PROSPERO ID: CRD42021295430) and performed according to PRISMA guidelines. Several psychometric, diagnostic and usability measures were addressed as outcomes. Quality assessment was performed via an ad hoc checklist, the Behavioural and Functional Instrument Quality Assessment.

Results

Out of an initial N = 830 reports, 108 studies were included (N = 102 BFIs). Target constructs included behavioural/psychiatric symptoms, quality of life and physical functioning. BFIs were either self-report or caregiver-/clinician-report. Studies in clinical conditions (including neurological, psychiatric and geriatric ones) were the most represented. Validity was investigated for 85 BFIs and reliability for 80. Criterion and factorial validity testing were infrequent, whereas content validity, ecological validity and parallel forms were almost never addressed. Item response theory analyses were seldom carried out. Diagnostics and norms were lacking for about one-third of BFIs. Information on administration time, ease of use and ceiling/floor effects was often unreported.

Discussion

Several BFIs available for the Italian population do not meet adequate statistical-methodological standards, which calls for greater care from researchers involved in their development.

Introduction

Psychometric instruments assessing behavioural dysfunctions (i.e. neuropsychiatric alterations within the affective, motivational, social and awareness dimensions) and functional outcomes (i.e. quality of life, functional independence and other aspects of physical status, e.g. sleep, pain or fatigue) in neurological, psychiatric and geriatric populations are relevant to clinical phenotyping, prognosis and intervention practice [21]. Indeed, besides aiding clinical diagnosis, behavioural/functional instruments (BFIs) are often used to estimate patients’ prognosis and are also adopted as clinical endpoints in interventional programs [21].

BFIs are often self- or proxy-report (i.e. caregiver or healthcare professional) questionnaires and thus require sound psychometric and diagnostic properties, as well as evidence of clinical usability in target populations [6]. However, it has been highlighted that BFIs often do not meet methodological-statistical requirements, both when developed de novo and when adapted from a different language and culture [60]. Notably, such methodological-statistical shortcomings have been identified as detrimentally influencing the level of recommendation of a given tool in both clinical practice and research [13], [42].

Given these premises, and based on current knowledge on health measurement tools [58], this work aimed at assessing the psychometrics, diagnostics and usability of BFIs currently available in Italy for neurological, geriatric and psychiatric populations, in order to (1) provide an up-to-date compendium of Italian BFIs designed for clinical and research aims in clinical populations and (2) deliver evidence-based information on the level of recommendation of Italian BFIs.

Methods

Search strategy

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were consulted [27]. This review was pre-registered on the International Prospective Register of Systematic Reviews (PROSPERO; ID: CRD42021295430; https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42021295430).

A systematic literature search was performed on December 1, 2021 (no date limit set) by entering the following search string into the Scopus and PubMed databases: ( behavioural OR behavioral OR “quality of life” OR psychiatric OR psychopathological OR apathy OR depression OR anxiety OR qol OR mood OR “activities of daily living” OR “functional independence”) AND (validation OR validity OR standardization OR psychometric AND properties OR reliability OR version ) AND ( italian OR italy ) AND ( neurolog* OR neuropsych* OR cognitive ) AND ( questionnaire OR inventory OR tool OR instrument OR scale OR test OR interview OR checklist ). Searched fields were title, abstract and keywords for Scopus, and title and abstract for PubMed. Only peer-reviewed, full-text contributions written in English or Italian were considered. In addition, the reference lists of all relevant articles were hand-searched to identify further eligible studies.

Study eligibility criteria

Studies were evaluated for eligibility if they focused either on the psychometric/diagnostic/normative study of Italian or Italian-adapted BFIs or on their usability in healthy participants (HPs) and in patients with neurological/geriatric conditions or their proxies (e.g. caregivers). More specifically, eligible studies had to focus on (1) BFI psychometrics (i.e. validity and reliability) and (2) diagnostics, i.e. intrinsic features (sensitivity, specificity) and post-test features (e.g. positive and negative predictive values and likelihood ratios), or (3) norm derivation. Studies that did not aim at providing normative data were included only if at least one property among validity, reliability and sensitivity/specificity (or related metrics) was assessed.
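The intrinsic and post-test properties listed above can all be computed from a single 2×2 table cross-classifying the BFI outcome (positive vs. negative at a given cut-off) against the reference diagnosis. The Python sketch below is purely illustrative: the counts are hypothetical, and predictive values hold only at the prevalence observed in the sample, whereas sensitivity and specificity are usually regarded as prevalence-independent.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Hypothetical counts: BFI classification vs. reference diagnosis."""
    tp: int  # diseased, BFI positive (true positives)
    fp: int  # not diseased, BFI positive (false positives)
    fn: int  # diseased, BFI negative (false negatives)
    tn: int  # not diseased, BFI negative (true negatives)


def diagnostics(t: TwoByTwo) -> dict:
    """Intrinsic (sensitivity, specificity) and post-test (PPV, NPV, LRs) metrics."""
    sens = t.tp / (t.tp + t.fn)
    spec = t.tn / (t.tn + t.fp)
    ppv = t.tp / (t.tp + t.fp)
    npv = t.tn / (t.tn + t.fn)
    # Likelihood ratios become infinite when their denominator is zero.
    lr_pos = sens / (1 - spec) if spec < 1 else math.inf
    lr_neg = (1 - sens) / spec if spec > 0 else math.inf
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": ppv,
        "npv": npv,
        "lr_positive": lr_pos,
        "lr_negative": lr_neg,
    }


if __name__ == "__main__":
    example = TwoByTwo(tp=40, fp=10, fn=10, tn=90)
    for name, value in diagnostics(example).items():
        print(f"{name}: {value:.2f}")
```

With these counts, sensitivity is 0.80 and specificity 0.90, so the positive likelihood ratio is 0.80/0.10 = 8.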

Conference proceedings, letters to the Editor, commentaries, animal studies, single-case studies, reviews/meta-analyses, abstracts, research protocols, qualitative studies, opinion papers and studies on paediatric populations were excluded.

Data collection and quality assessment

The screening stage was performed by two authors (E.N.A. and A.D.) and the eligibility stage by two other authors (G.M. and C.G.) via Rayyan (https://rayyan.qcri.org/welcome); both stages were supervised by another author (V.B.).

Data extraction was performed by four independent authors (S.M., G.S.D.T., V.B. and F.P.), whereas one independent author (E.N.A.) supervised this stage and checked the extracted data.

Extracted outcomes included (1) sample size, (2) sample representativeness (geographic coverage, exclusion criteria), (3) participants’ demographics, (4) instrument adaptation procedures, (5) administration time, (6) validity metrics, (7) reliability metrics (including significant change measures), (8) measures of sensitivity and specificity, (9) metrics derived from sensitivity and specificity, (10) norming methods and (11) other psychometric/diagnostic properties (e.g. accuracy, acceptability rate, assessment of ceiling/floor effects, ease of use).

Formal quality assessment was performed by four authors (S.M., G.S.D.T., V.B. and F.P.) and supervised by a further, independent one (E.N.A.). Quality assessment was performed for each BFI using two ad hoc checklists developed for this purpose, the Behavioural and Functional Instrument Quality Assessment-Normative Sample (BFIQA-NS) and the Behavioural and Functional Instrument Quality Assessment-Clinical Population (BFIQA-CP) (Supplemental Material 1), which were adapted from the Cognitive Screening Standardization Checklist (CSSC) [1]. Scores were assigned “cumulatively” for each BFI by evaluating all included studies on it. Some studies, although meeting the selection criteria, could not address some of the questions included in the CSSC (e.g. diagnostic criteria for quality-of-life tools). In these cases, the relevant group of items was scored as “non-applicable”, and this was accounted for, i.e. weighted, in the final score.

Both BFIQA-NS and BFIQA-CP total scores range from 0 to 50, and a given BFI was considered “statistically/methodologically sound” if it scored ≥25, i.e. 50% of the maximum. When more than one study focused on the same BFI in different populations, BFIQA scores were averaged (which is possible since both the BFIQA-NS and BFIQA-CP range from 0 to 50).
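The scoring rules above can be sketched in Python. The exact weighting of non-applicable items is defined in Supplemental Material 1 and is not reproduced here: this sketch assumes, hypothetically, that non-applicable items are dropped and the remaining points are rescaled proportionally to the 0–50 range, after which scores from different populations are averaged and compared with the cut-off of 25. The item maxima and scores in the example are made up.

```python
from statistics import mean

MAX_TOTAL = 50  # BFIQA-NS and BFIQA-CP both range from 0 to 50
CUTOFF = 25     # "statistically/methodologically sound" if score >= 25


def rescaled_score(item_scores, item_maxima):
    """Hypothetical weighting: drop non-applicable items (None) and rescale
    the points earned on applicable items to the 0-50 range."""
    if len(item_scores) != len(item_maxima):
        raise ValueError("one maximum per item is required")
    applicable = [(s, m) for s, m in zip(item_scores, item_maxima) if s is not None]
    applicable_max = sum(m for _, m in applicable)
    if applicable_max == 0:
        raise ValueError("no applicable items")
    earned = sum(s for s, _ in applicable)
    return MAX_TOTAL * earned / applicable_max


def overall_quality(population_scores):
    """Average BFIQA scores across populations and apply the cut-off."""
    average = mean(population_scores)
    return average, average >= CUTOFF


if __name__ == "__main__":
    maxima = [10, 10, 10, 10, 10]  # hypothetical item maxima (sum = 50)
    clinical = rescaled_score([8, None, 6, 4, 2], maxima)  # one item non-applicable
    normative = rescaled_score([10, 6, 8, 4, 2], maxima)
    print(clinical, normative, overall_quality([clinical, normative]))
```

In this example, the clinical-population score is 20 out of 40 applicable points (25.0 after rescaling), and the average of 25.0 and 30.0 (27.5) clears the cut-off.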

Results

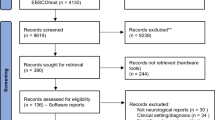

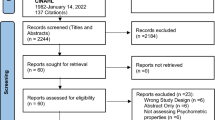

One hundred and eight studies were included; the study selection process according to PRISMA guidelines is shown in Figure 1.

Figure 1. Study selection process according to PRISMA guidelines. Adapted from Moher et al. (2009) (www.prisma-statement.org)

The included BFIs (N=102), along with a summary of their general clinical features and BFIQA scores, are detailed in Table 1, while the most relevant psychometric, diagnostic and usability evidence is described in Tables 2 and 3. The full reference list of included studies is provided in Supplementary File 2.

The most represented constructs assessed by the included BFIs were behavioural/psychiatric symptoms (apathy: N=6; anxiety: N=7; depression: N=11; general: N=13; other: N=11), quality of life (QoL; N=14) and physical functioning (activities of daily living/functional independence: N=16; other: N=10). Multidimensional BFIs (N=11) covered behavioural, QoL and physical constructs. Forty-one BFIs were self-report and 21 caregiver-report, whereas the remaining ones were clinician-report.

The vast majority of studies (N=109) aimed at providing psychometric, diagnostic or normative data in clinical populations, of which 16 also addressed normotypical samples. The most represented neurological conditions were those of degenerative/dysimmune etiology: multiple sclerosis (N=13), amyotrophic lateral sclerosis (N=6) and Parkinson’s disease (N=10). Dementia was addressed in 17 BFIs (Alzheimer’s disease: N=12; vascular dementia: N=3; frontotemporal dementia: N=1; Lewy body disease: N=1). Acute cerebrovascular accidents and traumatic brain injury were addressed in 5 and 3 BFIs, respectively. Nonspecific geriatric populations (see the Notes section for available details on their inclusion criteria) were addressed in 5 BFIs, and mild cognitive impairment in 8. Psychiatric populations were addressed in 4 BFIs (major depressive disorder: N=1; schizophrenia spectrum disorders: N=1; other: N=2). Three BFIs specifically addressed healthy populations.

Validity was investigated for 85 BFIs, mostly through convergent (N=58) and divergent (N=34) validity. Criterion validity was assessed for 31 BFIs and content validity for 3. Ecological validity was assessed in only 3 studies. The factorial structure of BFIs was examined through dimensionality-reduction approaches for 34 BFIs.

Reliability was investigated for 80 BFIs and mostly as internal consistency (N=64), test-retest (N=39) and inter-rater (N=25). Parallel forms were developed for one BFI only.

Item response theory (IRT) analyses were carried out for 9 BFIs only.

Among BFIs for which diagnostic properties could be computed (N=79), sensitivity and specificity were reported for 25 tools, and derived metrics such as predictive values and likelihood ratios for 16. With respect to norming, when applicable (N=79), norms were derived through receiver operating characteristic (ROC) analyses for 21 BFIs, while other methods (e.g. percentiles or z-scores) were used to derive cut-offs in 31 further studies. Diagnostic accuracy was tested in 21 studies.

As to feasibility, back-translation was performed for 57 BFIs; ease of use was assessed for 12 and ceiling/floor effects for 22. Strikingly, administration time was explicitly reported for very few BFIs (N=18).

Discussion

Overview

The present review provides Italian clinicians and researchers with a comprehensive, up-to-date compendium of available BFIs, along with information on their psychometrics, diagnostics and clinical usability. This work was designed to serve as a guide not only for practitioners selecting the appropriate tool for a given clinical question but also for researchers involved in clinical psychometrics applied to neurology and geriatrics. To raise awareness of the statistical-methodological standards that such instruments are expected to meet, the checklists delivered herein (BFIQA) may hopefully help orient both the development and the psychometric/diagnostic/usability study of BFIs. Indeed, unlike the literature on diagnostic test accuracy as applied to performance-based psychometric instruments [26], available guidelines for BFIs mostly focus on psychometrics and lack thorough sections specifically devoted to diagnostics and clinical usability [30]. Although each of the BFIs included in this study can be recognized for its peculiarities and usefulness in research and clinical contexts, it has to be noted that, as to the level of recommendation assessed by the BFIQA, 63.5% of those referred to clinical populations (N=96) fell below the pre-established cut-off of 25 (i.e. half of the full range of the scale).
More specifically, the following BFIs addressed to clinical populations reached a BFIQA score ≥25: ALS Depression Inventory [36], Apathy Evaluation Scale–Self Report version [44, 51], Anosognosia Questionnaire Dementia [17], Bedford Alzheimer Nursing Severity Scale [4], Beaumont Behavioural Inventory [20], Beck Depression Inventory-II [11, 64], Care-Related Quality of Life [63], Coop/Wonca [37], Disability Assessment Dementia Scale [12], Dimensional Apathy Scale [46, 53, 54], Dual-Task Impact in Daily-living Activities Questionnaire [38], Electronic format of the Multiple Sclerosis Quality of Life-29 [48], Epilepsy-Quality of Life [40], Frontal Behavioural Inventory [2, 29], Geriatric Handicap Scale [62], Hospital Anxiety and Depression Scale [31], Hamilton Depression Rating Scale [33, 43, 45], Medical Outcomes Study-Human Immunodeficiency Virus [59], Multiple Sclerosis Quality of Life-29 [47], Multiple Sclerosis Quality of Life-54 [56], Neuropsychiatric Inventory–Nursing Home version [3], Non-Motor Symptoms Scale for Parkinson’s disease [9], Non-Communicative Patient’s Pain Assessment Instrument [15], Observer-Rated version of the Parkinson Anxiety Scale [53], Pain Assessment in Advanced Dementia [8, 32], Progressive Supranuclear Palsy–Quality of Life [41], Quality of Life in Alzheimer’s Disease [5], Quality of Life in the Dysarthric Speaker [39], Quality of Life after Brain Injury [16, 19], Stroke Impact Scale 3.0 [61] and State-Trait Anxiety Inventory [24, 53, 55]. Moreover, of those also or exclusively referred to normative populations (N=22), only 4 scored above the same cut-off (Table 1): Beaumont Behavioural Inventory [20], Barratt Impulsiveness Scale [28], Dimensional Apathy Scale [46, 53, 54] and Starkstein Apathy Scale [18]. Although a specific, and admittedly empirical, methodology was adopted for quality assessment, these findings should warn practitioners about possible statistical and methodological shortcomings of several available BFIs.
In this respect, several issues have been highlighted as to psychometrics, diagnostics and clinical usability of BFIs.

Psychometrics

About two-thirds of all instruments were characterized by basic validity evidence.

However, validity was mostly assessed through convergence/divergence, whereas criterion validity was only seldom examined. In this regard, criterion validity was not uncommonly tested through correlational rather than regression analyses, although the latter are the appropriate ones for testing such a property. Indeed, although the two approaches are mathematically related, correlations are non-directional techniques solely intended to determine whether variables covary, whereas regressions allow testing whether a first variable, treated as a predictor, accounts for the variability of a second variable, treated as a criterion.
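The distinction can be made concrete with a small Python sketch on hypothetical data, using a BFI total score as predictor and an external criterion (e.g. a functional-independence score) as outcome. Pearson’s r is identical whichever variable is listed first, whereas the regression slope changes with the direction of prediction; in simple linear regression R² equals r², which is the sense in which the two approaches are mathematically related.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Symmetric measure of linear covariation: pearson_r(x, y) == pearson_r(y, x)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_ols(predictor, criterion):
    """Directional model: criterion = intercept + slope * predictor.
    Returns (intercept, slope, R²), R² being the share of criterion variance explained."""
    mx, my = mean(predictor), mean(criterion)
    sxy = sum((a - mx) * (b - my) for a, b in zip(predictor, criterion))
    sxx = sum((a - mx) ** 2 for a in predictor)
    slope = sxy / sxx
    intercept = my - slope * mx
    fitted = [intercept + slope * a for a in predictor]
    ss_res = sum((b - f) ** 2 for b, f in zip(criterion, fitted))
    ss_tot = sum((b - my) ** 2 for b in criterion)
    return intercept, slope, 1 - ss_res / ss_tot


# Hypothetical data: BFI total scores and an external criterion in the same patients.
bfi_scores = [4, 7, 9, 12, 15, 18, 21, 25]
criterion_scores = [40, 37, 36, 30, 29, 24, 22, 15]

if __name__ == "__main__":
    r = pearson_r(bfi_scores, criterion_scores)
    _, slope_yx, r2 = simple_ols(bfi_scores, criterion_scores)
    _, slope_xy, _ = simple_ols(criterion_scores, bfi_scores)
    print(f"r = {r:.3f}, R² = {r2:.3f} (r² = {r * r:.3f})")
    print(f"slope criterion~BFI = {slope_yx:.3f}, slope BFI~criterion = {slope_xy:.3f}")
```

Only the regression answers the criterion-validity question directly: how much of the criterion’s variance the BFI accounts for, and what criterion value it predicts for a given BFI score.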

In this respect, ecological validity, which can be tested through correlational analyses and predictive models, was also infrequently investigated, raising the issue of whether certain BFIs effectively reflect functional outcomes in daily life. Moreover, it is striking that the factorial structure was explored in only 34 BFIs, although such analyses are fundamental, especially for questionnaires [58]. Finally, content validity was almost never addressed: although content validity can be difficult to assess for some BFIs (e.g. multi-domain instruments), our results strongly suggest the need to test this property by collecting expert ratings of how well the target construct has been operationalized. We encourage this practice, as it would provide practitioners with useful information about the target construct.

As to reliability, about 80% of BFIs come with such data. However, it is unfortunate that inter-rater agreement measures were lacking for 44 proxy-report BFIs, which are known to be highly susceptible to heterogeneity in score attribution across examiners, also considering their different backgrounds (e.g. neurologists vs. psychologists). In this respect, it should also be noted that assessing inter-rater reliability in self-report BFIs is possible, albeit methodologically complex, as reflected by the fact that this feature was almost never assessed in the included self-report BFIs. This aim could be reached, for instance, by evaluating the rate of agreement between the below- vs. above-cut-off classification delivered by the target BFI and that yielded by another instrument measuring the same construct (e.g. presence vs. absence of apathetic features). Indeed, if a below- vs. above-cut-off classification is regarded as a standardized clinical judgment provided by the instrument, this scenario is comparable to two clinicians (i.e. raters) evaluating a given clinical sign.
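One way to quantify the agreement proposed above is a chance-corrected index such as Cohen’s kappa, computed on the two instruments’ binary classifications. The choice of kappa is an assumption (the text does not prescribe a specific statistic), and the classifications below are hypothetical (1 = above cut-off, e.g. apathetic features present).

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two categorical classifications of the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("classifications must be non-empty and of equal length")
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each instrument's marginal proportions.
    expected = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical below/above cut-off classifications of the same ten patients.
target_bfi = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
reference_bfi = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

if __name__ == "__main__":
    print(f"kappa = {cohens_kappa(target_bfi, reference_bfi):.3f}")
```

Here raw agreement is 80%, but 52% agreement is expected by chance alone, so kappa is (0.80 − 0.52)/(1 − 0.52) ≈ 0.58, a more conservative picture than the raw percentage.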

Moreover, parallel forms of the included BFIs were almost never provided, which somewhat limits their use in longitudinal applications. Although the development of parallel forms appears more relevant to performance-based instruments, practice effects cannot be ruled out in questionnaires either, especially short ones whose items are likely to be remembered by the examinee [58].

Finally, it should be noted that, outside the framework of classical test theory, IRT analyses were almost never performed, even though they may provide relevant insights into the interpretation of BFI scores. In fact, while looking at total scores is crucial for drawing clinical judgments, item-level information would help clinicians orient themselves towards a given diagnostic hypothesis, possibly also providing relevant prognostic information, albeit at a qualitative level. In this respect, data on item discrimination, i.e. an IRT parameter quantifying how well a given item discriminates between different levels of the underlying trait, and thus the extent to which it is informative, would allow examiners to attend more closely to responses to such items. For instance, within a BFI assessing dysexecutive behavioural features, an item on the development of a sweet tooth (e.g. following the onset of a neurodegenerative condition) might prove highly informative towards the diagnosis of a frontal disorder. By contrast, within the same tool, items targeting depressive symptoms might be less informative towards such a behavioural syndrome, as these symptoms are common to different brain disorders.
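The role of item discrimination can be illustrated with the two-parameter logistic (2PL) model, a common IRT model for dichotomous items, in which the probability of endorsing an item is P(θ) = 1/(1 + exp(−a(θ − b))), with discrimination a and location b, and the item information is a²P(θ)(1 − P(θ)). The parameter values below are hypothetical and only mirror the example in the text; many BFIs use polytomous items, which require other IRT models.

```python
import math


def p_endorse(theta, a, b):
    """2PL item characteristic curve: probability of endorsing the item at trait level theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item: a^2 * P * (1 - P); it peaks at theta == b."""
    p = p_endorse(theta, a, b)
    return a * a * p * (1.0 - p)


# Hypothetical parameters for two items of a dysexecutive-behaviour BFI.
ITEMS = {
    "sweet tooth": {"a": 2.5, "b": 1.0},  # highly discriminating
    "low mood": {"a": 0.8, "b": 0.0},     # weakly discriminating
}

if __name__ == "__main__":
    for theta in (-1.0, 0.0, 1.0, 2.0):
        row = ", ".join(
            f"{name}: {item_information(theta, **params):.3f}"
            for name, params in ITEMS.items()
        )
        print(f"theta = {theta:+.1f} -> {row}")
```

The highly discriminating item concentrates a large amount of information around its location (a²/4 = 1.5625 at θ = 1), whereas the weakly discriminating item never exceeds 0.8²/4 = 0.16 anywhere on the trait continuum.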

That said, since practitioners and clinical researchers mostly look at the global score yielded by a given BFI, a further useful output of IRT analyses is the test information function, which describes how informative the BFI is across levels of the target construct. For instance, an apathy BFI that proves most informative for individuals with higher levels of the underlying construct (i.e. marked apathetic features) should be used with caution when assessing patients who do not display overt symptoms (and are thus unlikely to suffer from severe apathy), since it may yield false-negative results.
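Under the same hypothetical 2PL assumption, the test information function is the sum of the item information functions, and its inverse square root approximates the standard error of the trait estimate. The sketch below uses made-up parameters for a four-item apathy scale whose items are all located at high trait levels, reproducing the scenario described above: information is high, and measurement error low, only for markedly apathetic patients.

```python
import math


def item_information(theta, a, b):
    """2PL item information: a^2 * P * (1 - P)."""
    p = 1.0 / (1.0 + math.exp(-a * (theta - b)))
    return a * a * p * (1.0 - p)


def total_information(theta, items):
    """Test information function: sum of item information at trait level theta."""
    return sum(item_information(theta, a, b) for a, b in items)


def standard_error(theta, items):
    """Approximate standard error of the trait estimate at theta."""
    return 1.0 / math.sqrt(total_information(theta, items))


# Hypothetical (a, b) parameters: all items located at high apathy levels.
APATHY_ITEMS = [(1.8, 1.0), (1.5, 1.5), (2.0, 1.2), (1.2, 2.0)]

if __name__ == "__main__":
    for theta in (-2.0, -1.0, 0.0, 1.0, 1.5, 2.0):
        info = total_information(theta, APATHY_ITEMS)
        se = standard_error(theta, APATHY_ITEMS)
        print(f"theta = {theta:+.1f}: information = {info:.2f}, SE = {se:.2f}")
```

With these parameters, information at θ = 1.5 is roughly ten times that at θ = −1, so a low total score from a patient without overt symptoms carries a much larger measurement error.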

Diagnostics

Unfortunately, among BFIs for which diagnostics could be computed and norms derived, such data were lacking for about one-third, and availability was even lower for non-intrinsic diagnostics (i.e. predictive values and likelihood ratios). This represents a major drawback for the clinical usability of certain BFIs as tools intended to convey diagnostic information. Clearly, diagnostic properties and norms should be addressed more thoroughly in future studies aimed at developing and standardizing BFIs. In this respect, researchers pursuing these aims should note that diagnostic and normative investigations do not necessarily overlap. For instance, ROC analyses allow both deriving a cut-off and providing intrinsic/post-test diagnostics, but may also be used for the latter aim only. Moreover, norms can be derived through approaches other than ROC analyses, e.g. z-based, percentile-based or regression-based techniques.
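Two of the non-ROC norming approaches mentioned above can be sketched in a few lines of Python: a z-based approach, which locates an examinee’s score relative to the normative mean and standard deviation, and a percentile-based approach, which reports the examinee’s percentile rank within the normative sample. Regression-based norming, which additionally adjusts for demographic variables, is not shown. The normative sample and the convention of counting ties as half (a mid-rank percentile) are illustrative assumptions.

```python
from statistics import mean, stdev


def z_score(raw, normative):
    """Distance of a raw score from the normative mean, in normative SD units."""
    return (raw - mean(normative)) / stdev(normative)


def percentile_rank(raw, normative):
    """Mid-rank percentile: scores below raw count fully, ties count as half."""
    below = sum(score < raw for score in normative)
    ties = sum(score == raw for score in normative)
    return 100 * (below + 0.5 * ties) / len(normative)


# Hypothetical normative sample for a BFI where higher scores indicate more symptoms.
NORMATIVE = [10, 12, 12, 14, 15, 15, 16, 18, 20, 28]

if __name__ == "__main__":
    for raw in (15, 26):
        print(f"raw = {raw}: z = {z_score(raw, NORMATIVE):.2f}, "
              f"percentile rank = {percentile_rank(raw, NORMATIVE):.1f}")
```

The z-based approach presupposes an approximately normal score distribution, whereas percentile ranks do not; the skewed sample above (one score of 28) is a case where the two can disagree about how unusual a score is.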

As to the derivation of cut-offs via ROC analyses, it is advisable to provide different values reflecting different trade-offs between sensitivity and specificity. This would not only allow clinicians to adaptively select the most suitable cut-off depending on whether they intend to favour the sensitivity or the specificity of a given BFI, but also help clinical researchers identify an adequate threshold for inclusion/exclusion purposes in research settings. Indeed, when a given deficit is used as an exclusion criterion for recruitment, researchers might prefer stricter cut-offs, as these guarantee higher specificity and hence fewer false positives.
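Reporting several cut-offs is straightforward once sensitivity and specificity have been computed for every candidate threshold. The Python sketch below, on hypothetical scores, assumes that higher BFI scores indicate more symptoms (score ≥ cut-off = positive). It returns both the cut-off maximizing Youden’s J (sensitivity + specificity − 1), one common “balanced” choice, and the most sensitive cut-off that still guarantees a minimum specificity, as a researcher defining an exclusion criterion might prefer.

```python
def roc_table(patients, controls):
    """Sensitivity and specificity for every observed score used as cut-off
    (positive = score >= cut-off)."""
    rows = []
    for cutoff in sorted(set(patients) | set(controls)):
        sensitivity = sum(s >= cutoff for s in patients) / len(patients)
        specificity = sum(s < cutoff for s in controls) / len(controls)
        rows.append((cutoff, sensitivity, specificity))
    return rows


def youden_cutoff(rows):
    """Cut-off maximizing Youden's J = sensitivity + specificity - 1 (first one on ties)."""
    return max(rows, key=lambda row: row[1] + row[2] - 1)


def cutoff_for_min_specificity(rows, min_specificity):
    """Most sensitive cut-off whose specificity is at least min_specificity, or None."""
    eligible = [row for row in rows if row[2] >= min_specificity]
    return max(eligible, key=lambda row: row[1]) if eligible else None


# Hypothetical BFI scores (higher = more symptoms).
PATIENTS = [8, 10, 11, 12, 13, 14, 15, 16, 18, 20]
CONTROLS = [2, 3, 4, 5, 6, 7, 8, 9, 11, 12]

if __name__ == "__main__":
    rows = roc_table(PATIENTS, CONTROLS)
    for label, row in (("Youden", youden_cutoff(rows)),
                       ("specificity >= 0.90", cutoff_for_min_specificity(rows, 0.90))):
        print(f"{label}: cut-off {row[0]}, sensitivity {row[1]:.2f}, specificity {row[2]:.2f}")
```

With these data, Youden’s J points to a cut-off of 10 (sensitivity 0.90, specificity 0.80), whereas requiring specificity ≥ 0.90 moves the cut-off to 12 (sensitivity 0.70): the stricter value trades missed cases for fewer false positives.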

Finally, regarding the notion of “disease-specificity”, it would be reasonable not to limit the application of certain disease-specific BFIs to the clinical population(s) for which they were originally developed. For instance, questionnaires designed to assess depression in amyotrophic lateral sclerosis (ALS) while overcoming disability-related confounders [36] might also be applied to other motor conditions (e.g. extrapyramidal disorders, multiple sclerosis). Similarly, tools assessing dysexecutive-like behavioural changes in ALS [20] might prove useful for detecting such disturbances in other neurological conditions known to affect frontal networks (e.g. Huntington’s disease). This proposal arises from the consideration that a common phenotypic manifestation may be underpinned by different pathophysiological processes; therefore, disease-specific BFIs should be extended to other populations only when this assumption holds. Moreover, such “off-label” adoptions would require studies supporting the feasibility of these disease-specific BFIs in the desired populations.

Usability

Although it is widely accepted that back-translation is required when adapting a given BFI to a new (target) language, very few BFIs appeared to undergo such a procedure, and information on BFI adaptation was often lacking. This finding is in line with the notion that statistical and methodological deficiencies of psychometric instruments arise especially within cross-cultural adaptation frameworks [60].

Moreover, data on possible ceiling and/or floor effects were often unreported, preventing clinicians and researchers from evaluating whether a given BFI can be deemed suitable for a target clinical or non-clinical population. For instance, a BFI assessing behavioural disorders that presents a relevant ceiling/floor effect might be scarcely informative if administered with the aim of detecting sub-clinical alterations. However, this issue is even more relevant for BFIs addressed to clinical populations: while ceiling/floor effects might be expected in normotypical individuals if a given BFI is aimed at detecting clearly clinical symptoms (e.g. neuropsychiatric manifestations within the dysexecutive spectrum), the same would not apply to clinical populations known to present with such features (e.g. patients with frontal lobe damage). In other terms, a BFI yielding ceiling/floor effects in diseased populations is likely to be poorly usable at a clinical level. It follows that the assessment of ceiling/floor effects is particularly relevant when exploring the clinical usability of BFIs.
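Checking for ceiling/floor effects only requires the proportion of respondents obtaining the lowest and highest possible scores. In the Python sketch below, the 15% threshold is a convention often used in the measurement literature rather than a rule stated in this review, and the 0–36 score range and the scores themselves are hypothetical.

```python
def floor_ceiling(scores, min_score, max_score, threshold=0.15):
    """Proportion of respondents at the scale minimum/maximum, flagged if above threshold."""
    if not scores:
        raise ValueError("no scores")
    n = len(scores)
    floor = sum(s == min_score for s in scores) / n
    ceiling = sum(s == max_score for s in scores) / n
    return {
        "floor": floor,
        "ceiling": ceiling,
        "floor_effect": floor > threshold,
        "ceiling_effect": ceiling > threshold,
    }


# Hypothetical clinical sample on a 0-36 behavioural scale (higher = more symptoms).
SAMPLE = [0, 0, 0, 0, 0, 3, 5, 7, 8, 10, 12, 13, 15, 18, 20, 22, 25, 28, 30, 36]

if __name__ == "__main__":
    print(floor_ceiling(SAMPLE, min_score=0, max_score=36))
```

Here a quarter of the patients obtain the minimum score, so in this clinical population the scale would be flagged for a floor effect, which is exactly the situation described above as limiting clinical usability.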

As for ease of use, researchers devoted to the development and psychometric/diagnostic/usability study of BFIs are encouraged to assess how easy a questionnaire is to administer, score and interpret from the examiner’s standpoint, as well as to understand and complete from the examinee’s standpoint. The vast majority of tools included in the present review did not come with such information.

Time requirements of BFIs were also frequently missing, although this information is needed to determine whether a given tool suits the target setting. For instance, not all BFIs might be adequate for bedside administration, some being relatively long and thus poorly suited to time-restricted settings. Similarly, time requirements could differ depending on whether a tool is administered in an in-patient or out-patient setting.

Further suggestions for researchers

A number of further elements, not explicitly addressed earlier in this work, are listed here to help researchers involved in BFI development and psychometric/diagnostic/usability study.

First, IRT analyses can also be useful, when developing either a novel BFI or a shortened version of an existing one, to select items that adequately measure the target construct [14]. To this aim, IRT can be complemented with classical test theory approaches in order to identify, through an empirical, data-driven approach, a set of criteria that an item must meet to be included in a BFI under development, as recently proposed within the Italian scenario [28].

Second, an a priori estimation of the adequate sample size for the main target analyses of a psychometric/diagnostic/usability study of a given BFI is advisable. In this respect, several studies propose empirical or simulation-based procedures for estimating optimal sample sizes for, e.g., validity and reliability analyses [23], dimensionality-reduction techniques [22], ROC analyses [35], IRT analyses [50] and regression-based norming [49]. Moreover, the robustness of normative data can be evaluated a posteriori, as suggested by Crawford and Garthwaite [10].
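As an example of a priori estimation for diagnostic studies, one widely used closed-form approach (Buderer’s formula, not necessarily the procedure proposed in [35]) sizes the sample so that sensitivity or specificity is estimated within a chosen margin of error d. The number of diseased participants is z²·Se(1 − Se)/d², which is then divided by the expected prevalence to obtain the total sample; for specificity, Sp and 1 − prevalence are used instead. The planning values below are hypothetical.

```python
import math
from statistics import NormalDist


def n_for_proportion(expected, margin, confidence=0.95):
    """Participants needed to estimate a proportion within +/- margin (normal approximation)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z ** 2 * expected * (1 - expected) / margin ** 2


def n_for_sensitivity(sensitivity, margin, prevalence, confidence=0.95):
    """(diseased participants, total sample) needed to estimate sensitivity."""
    n_cases = n_for_proportion(sensitivity, margin, confidence)
    return math.ceil(n_cases), math.ceil(n_cases / prevalence)


def n_for_specificity(specificity, margin, prevalence, confidence=0.95):
    """(non-diseased participants, total sample) needed to estimate specificity."""
    n_controls = n_for_proportion(specificity, margin, confidence)
    return math.ceil(n_controls), math.ceil(n_controls / (1 - prevalence))


if __name__ == "__main__":
    # Hypothetical planning values: Se = 0.80, Sp = 0.90, margin 0.10, prevalence 30%.
    print(n_for_sensitivity(0.80, 0.10, 0.30))
    print(n_for_specificity(0.90, 0.10, 0.30))
```

With these values the sensitivity requirement (62 patients, 205 participants in total) dominates the specificity requirement (35 controls, 50 in total), so the larger total would be retained.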

Finally, researchers focused on BFI development and psychometric/diagnostic/usability study should be aware of procedures for handling missing data according to their categorization (e.g. missing at random vs. missing not at random) [34]. This is particularly relevant when several tools are administered within the same data collection session, especially to patients: participants might not agree to, or be able to, complete the full range of instruments included in a study protocol, e.g. due to fatigue.

Conclusions

The present work provides practitioners with an up-to-date compendium of BFIs available in Italy, presents possible criticisms of their properties and delivers, hopefully, useful insights into best-practice guidelines. To this last aim, the BFIQA checklists provided herein may serve as a guide for researchers to carefully consider relevant aspects of the development and psychometric/diagnostic/usability study of BFIs, in order to strengthen their level of recommendation for use in clinical practice as applied to diagnostic, prognostic and interventional settings.

Data availability

Data collected and analyzed within the present study are accessible upon reasonable request to the Corresponding Author.

Notes

NPI-NH [3]: patients with a diagnosis of dementia and/or psychiatric disorders, according to DSM-IV-TR; AES-I [7]: patients with either major depressive disorder or dementia and community-dwelling controls with a Mini-Mental State Examination raw score > 18; MDS-HC [25]: all eligible individuals who could benefit from home care services; IPGDepressionS [57]: residents in geriatric institutions; GHS [62]: community-dwelling older adults.

References

Aiello EN, Rimoldi S, Bolognini N, Appollonio I, Arcara G (2022) Psychometrics and diagnostics of Italian cognitive screening tests: a systematic review. Neurol Sci 43:821–845

Alberici A, Geroldi C, Cotelli M, Adorni A, Calabria M, Rossi G … Kertesz A (2007) The Frontal Behavioural Inventory (Italian version) differentiates frontotemporal lobar degeneration variants from Alzheimer’s disease. Neurol Sci 28(2):80–86

Baranzini F, Grecchi A, Berto E, Costantini C, Ceccon F, Cazzamalli S, Callegari C (2013) Factor analysis and psychometric properties of the Italian version of the Neuropsychiatric Inventory-Nursing Home in an institutionalized elderly population with psychiatric comorbidity. Riv Psichiatr 48(4):335–344

Bellelli G, Frisoni GB, Bianchetti A, Trabucchi M (1997) The Bedford Alzheimer Nursing Severity scale for the severely demented: validation study. Alzheimer Dis Assoc Disord 11(2):71–77

Bianchetti A, Cornali C, Ranieri P, Trabucchi M (2017) Quality of life in patients with mild dementia. Validation of the Italian version of the quality of life Alzheimer’s disease (QoL-AD) Scale. Journal of Gerontology and Geriatrics 65:137–143

Blais M, Baer L (2009) Understanding rating scales and assessment instruments. In: Baer L, Blais MA (eds) Handbook of Clinical Rating Scales and Assessment in Psychiatry and Mental Health. Humana Press, Totowa, NJ, pp 1–6

Borgi M, Caccamo F, Giuliani A, Piergentili A, Sessa S, Reda E … Miraglia F (2016) Validation of the Italian version of the Apathy Evaluation Scale (AES-I) in institutionalized geriatric patients. Ann Ist Super Sanita 52(2):249–255

Costardi D, Rozzini L, Costanzi C, Ghianda D, Franzoni S, Padovani A, Trabucchi M (2007) The Italian version of the pain assessment in advanced dementia (PAINAD) scale. Arch Gerontol Geriatr 44(2):175–180

Cova I, Di Battista ME, Vanacore N, Papi CP, Alampi G, Rubino A … Pomati S (2017) Validation of the Italian version of the non motor symptoms scale for Parkinson’s disease. Parkinsonism Relat Disord 34:38–42

Crawford JR, Garthwaite PH (2008) On the “optimal” size for normative samples in neuropsychology: capturing the uncertainty when normative data are used to quantify the standing of a neuropsychological test score. Child Neuropsychol 14:99–117

Cuoco S, Cappiello A, Abate F, Tepedino MF, Erro R, Volpe G … Picillo M (2021) Psychometric properties of the Beck Depression Inventory-II in progressive supranuclear palsy. Brain Behav 11(10):e2344

De Vreese LP, Caffarra P, Savarè R, Cerutti R, Franceschi M, Grossi E (2008) Functional disability in early Alzheimer’s disease–a validation study of the Italian version of the disability assessment for dementia scale. Dement Geriatr Cogn Disord 25(2):186–194

Dichter MN, Schwab CG, Meyer G, Bartholomeyczik S, Halek M (2016) Linguistic validation and reliability properties are weak investigated of most dementia-specific quality of life measurements—a systematic review. J Clin Epidemiol 70:233–245

Edelen MO, Reeve BB (2007) Applying item response theory (IRT) modeling to questionnaire development, evaluation, and refinement. Qual Life Res 16:5–18

Ferrari R, Martini M, Mondini S, Novello C, Palomba D, Scacco C … Visentin M (2009) Pain assessment in non-communicative patients: the Italian version of the Non-Communicative Patient’s Pain Assessment Instrument (NOPPAIN). Aging Clin Exp Res 21(4):298–306

Formisano R, Longo E, Azicnuda E, Silvestro D, D’Ippolito M, Truelle JL … Giustini M (2017) Quality of life in persons after traumatic brain injury as self-perceived and as perceived by the caregivers. Neurol Sci 38(2):279–286

Gambina G, Valbusa V, Corsi N, Ferrari F, Sala F, Broggio E … Moro V (2015) The Italian validation of the Anosognosia Questionnaire for Dementia in Alzheimer’s disease. Am J Alzheimers Dis Other Demen 30(6):635–644

Garofalo E, Iavarone A, Chieffi S, Carpinelli Mazzi M, Gamboz N, Ambra FI … Ilardi CR (2021) Italian version of the Starkstein Apathy Scale (SAS-I) and a shortened version (SAS-6) to assess “pure apathy” symptoms: normative study on 392 individuals. Neurol Sci 42(3):1065–1072

Giustini M, Longo E, Azicnuda E, Silvestro D, D’Ippolito M, Rigon J … Formisano R (2014) Health-related quality of life after traumatic brain injury: Italian validation of the QOLIBRI. Funct Neurol 29(3):167

Hobart JC, Cano SJ, Warner TT, Thompson AJ (2012) What sample sizes for reliability and validity studies in neurology? J Neurol 259:2681–2694

Iazzolino B, Pain D, Laura P, Aiello EN, Gallucci M, Radici A … Chiò A (2022) Italian adaptation of the Beaumont Behavioral Inventory (BBI): psychometric properties and clinical usability. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration 23:81–86

Ilardi CR, Gamboz N, Iavarone A, Chieffi S, Brandimonte MA (2021) Psychometric properties of the STAI-Y scales and normative data in an Italian elderly population. Aging Clin Exp Res 33(10):2759–2766

Kaufer DI (2015) Neurobehavioral assessment. CONTINUUM: Lifelong Learning in Neurology 21:597–612

Kyriazos TA (2018) Applied psychometrics: sample size and sample power considerations in factor analysis (EFA, CFA) and SEM in general. Psychology 9:2207

Landi F, Tua E, Onder G, Carrara B, Sgadari A, Rinaldi C … Bernabei R (2000) Minimum data set for home care: a valid instrument to assess frail older people living in the community. Med Care 38:1184–1190

Larner AJ (2017) Cognitive screening instruments: a practical approach. Springer, Cham

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP … Moher D (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol 62:e1–e34

Maggi G, Altieri M, Ilardi CR, Santangelo G (2022) Validation of a short Italian version of the Barratt Impulsiveness Scale (BIS-15) in non-clinical subjects: psychometric properties and normative data. Neurol Sci 1–9. https://doi.org/10.1007/s10072-022-06047-2

Milan G, Lamenza F, Iavarone A, Galeone F, Lore E, De Falco C … Postiglione A (2008) Frontal Behavioural Inventory in the differential diagnosis of dementia. Acta Neurol Scand 117(4):260–265

Mokkink LB, Terwee CB, Patrick DL, Alonso J, Stratford PW, Knol DL … De Vet HC (2010) The COSMIN checklist for assessing the methodological quality of studies on measurement properties of health status measurement instruments: an international Delphi study. Qual Life Res 19:539–549

Mondolo F, Jahanshahi M, Grana A, Biasutti E, Cacciatori E, Di Benedetto P (2006) The validity of the hospital anxiety and depression scale and the geriatric depression scale in Parkinson’s disease. Behav Neurol 17(2):109–115

Mosele M, Inelmen EM, Toffanello ED, Girardi A, Coin A, Sergi G, Manzato E (2012) Psychometric properties of the pain assessment in advanced dementia scale compared to self assessment of pain in elderly patients. Dement Geriatr Cogn Disord 34(1):38–43

Mula M, Iudice A, La Neve A, Mazza M, Mazza S, Cantello R, Kanner AM (2014) Validation of the Hamilton Rating Scale for Depression in adults with epilepsy. Epilepsy Behav 41:122–125

Newman DA (2014) Missing data: five practical guidelines. Organ Res Methods 17:372–411

Obuchowski NA (2005) ROC analysis. Am J Roentgenol 184:364–372

Pain D, Aiello EN, Gallucci M, Miglioretti M, Mora G (2021) The Italian Version of the ALS Depression Inventory-12. Front Neurol 12. https://doi.org/10.3389/fneur.2021.723776

Pappalardo A, Chisari CG, Montanari E, Pesci I, Borriello G, Pozzilli C … Patti F (2017) The clinical value of Coop/Wonca charts in assessment of HRQoL in a large cohort of relapsing-remitting multiple sclerosis patients: results of a multicenter study. Mult Scler Relat Disord 17:154–171

Pedullà L, Tacchino A, Podda J, Bragadin MM, Bonzano L, Battaglia MA … Ponzio M (2020) The patients’ perspective on the perceived difficulties of dual-tasking: development and validation of the Dual-task Impact on Daily-living Activities Questionnaire (DIDA-Q). Mult Scler Relat Disord 46:102601

Piacentini V, Zuin A, Cattaneo D, Schindler A (2011) Reliability and validity of an instrument to measure quality of life in the dysarthric speaker. Folia Phoniatr Logop 63(6):289–295

Piazzini A, Beghi E, Turner K, Ferraroni M (2008) Health-related quality of life in epilepsy: findings obtained with a new Italian instrument. Epilepsy Behav 13(1):119–126

Picillo M, Cuoco S, Amboni M, Bonifacio FP, Bruschi F, Carotenuto I … Barone P (2019) Validation of the Italian version of the PSP quality of life questionnaire. Neurol Sci 40(12):2587–2594

Pottie K, Rahal R, Jaramillo A, Birtwhistle R, Thombs BD, Singh H … Canadian Task Force on Preventive Health Care (2016) Recommendations on screening for cognitive impairment in older adults. CMAJ 188:37–46

Quaranta D, Marra C, Gainotti G (2008) Mood disorders after stroke: diagnostic validation of the poststroke depression rating scale. Cerebrovasc Dis 26(3):237–243

Raimo S, Trojano L, Spitaleri D, Petretta V, Grossi D, Santangelo G (2014) Apathy in multiple sclerosis: a validation study of the apathy evaluation scale. J Neurol Sci 347(1–2):295–300

Raimo S, Trojano L, Spitaleri D, Petretta V, Grossi D, Santangelo G (2015) Psychometric properties of the Hamilton Depression Rating Scale in multiple sclerosis. Qual Life Res 24(8):1973–1980

Raimo S, Trojano L, Gaita M, Spitaleri D, Santangelo G (2020) Assessing apathy in multiple sclerosis: validation of the dimensional apathy scale and comparison with apathy evaluation scale. Multiple Sclerosis and Related Disorders 38:101870

Rosato R, Testa S, Bertolotto A, Confalonieri P, Patti F, Lugaresi A … Solari A (2016) Development of a short version of MSQOL-54 using factor analysis and item response theory. PLoS One 11(4):e0153466

Rosato R, Testa S, Bertolotto A, Scavelli F, Giovannetti AM, Confalonieri P … Solari A (2019) eMSQOL-29: prospective validation of the abbreviated, electronic version of MSQOL-54. Mult Scler J 25(6):856–866

Rothstein HR, Borenstein M, Cohen J, Pollack S (1990) Statistical power analysis for multiple regression/correlation: a computer program. Educ Psychol Measur 50:819–830

Sacco R, Santangelo G, Stamenova S, et al (2016) Psychometric properties and validity of Beck Depression Inventory II in multiple sclerosis. Eur J Neurol 23:744–750

Şahin A, Anıl D (2017) The effects of test length and sample size on item parameters in item response theory. Educational Sciences: Theory & Practice 17:321–335

Santangelo G, Barone P, Cuoco S, Raimo S, Pezzella D, Picillo M … Vitale C (2014) Apathy in untreated, de novo patients with Parkinson’s disease: validation study of Apathy Evaluation Scale. J Neurol 261(12):2319–2328

Santangelo G, Falco F, D’Iorio A, Cuoco S, Raimo S, Amboni M … Barone P (2016) Anxiety in early Parkinson’s disease: validation of the Italian observer-rated version of the Parkinson Anxiety Scale (OR-PAS). J Neurol Sci 367:158–161

Santangelo G, Sacco R, Siciliano M, Bisecco A, Muzzo G, Docimo R … Gallo A (2016) Anxiety in multiple sclerosis: psychometric properties of the State-Trait Anxiety Inventory. Acta Neurol Scand 134(6):458–466

Santangelo G, Raimo S, Siciliano M, D’Iorio A, Piscopo F, Cuoco S … Trojano L (2017) Assessment of apathy independent of physical disability: validation of the Dimensional Apathy Scale in Italian healthy sample. Neurol Sci 38(2):303–309

Siciliano M, Trojano L, Trojsi F, Monsurrò MR, Tedeschi G, Santangelo G (2019) Assessing anxiety and its correlates in amyotrophic lateral sclerosis: the state-trait anxiety inventory. Muscle Nerve 60(1):47–55

Solari A, Filippini G, Mendozzi L, Ghezzi A, Cifani S, Barbieri E … Mosconi P (1999) Validation of Italian multiple sclerosis quality of life 54 questionnaire. J Neurol Neurosurg Psychiatry 67(2):158–162

Spagnoli A, Foresti G, Macdonald A, Williams P (1987) Italian version of the organic brain syndrome and the depression scales from the CARE: evaluation of their performance in geriatric institutions. Psychol Med 17(2):507–513

Streiner DL, Norman GR, Cairney J (2015) Health measurement scales: a practical guide to their development and use. Oxford University Press

Tozzi V, Balestra P, Murri R, Galgani S, Bellagamba R, Narciso P … Wu AW (2004) Neurocognitive impairment influences quality of life in HIV-infected patients receiving HAART. Int J STD AIDS 15(4):254–259

Uysal-Bozkir Ö, Parlevliet JL, de Rooij SE (2013) Insufficient cross-cultural adaptations and psychometric properties for many translated health assessment scales: a systematic review. J Clin Epidemiol 66:608–618

Vellone E, Savini S, Fida R, Dickson VV, Melkus GDE, Carod-Artal FJ … Alvaro R (2015) Psychometric evaluation of the stroke impact scale 3.0. J Cardiovasc Nurs 30(3):229–241

Verrusio W, Renzi A, Spallacci G, Pecci MT, Pappadà MA, Cacciafesta M (2018) The development of a new tool for the evaluation of handicap in elderly: the Geriatric Handicap Scale (GHS). Aging Clin Exp Res 30(10):1187–1193

Voormolen DC, van Exel J, Brouwer W, Sköldunger A, Gonçalves-Pereira M, Irving K … Handels RL (2021) A validation study of the CarerQol instrument in informal caregivers of people with dementia from eight European countries. Qual Life Res 30(2):577–588

Funding

The project was co-financed by 5 x 1000 funds of the year 2020 from Fondazione di Ricerca in Neuroriabilitazione San Camillo Onlus; it was also partially supported by the Italian Ministry of Health to N.B. Open access funding provided by Università degli Studi di Milano - Bicocca within the CRUI-CARE Agreement.

Ethics declarations

Ethical approval

This study did not require ethical approval or informed consent, as it is a review of published data.

Conflict of interest

None.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available in the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aiello, E.N., D’Iorio, A., Montemurro, S. et al. Psychometrics, diagnostics and usability of Italian tools assessing behavioural and functional outcomes in neurological, geriatric and psychiatric disorders: a systematic review. Neurol Sci 43, 6189–6214 (2022). https://doi.org/10.1007/s10072-022-06300-8

DOI: https://doi.org/10.1007/s10072-022-06300-8