Abstract

Background

Neurological disorders remain a worldwide concern due to their increasing prevalence and mortality, combined with the lack of available treatment in most cases. Exploring protective and risk factors associated with the development of neurological disorders will help improve prevention strategies. However, ascertaining neurological outcomes in population-based studies can be both complex and costly. The application of eHealth tools in research may help lower costs and increase accessibility. The aim of this systematic review is to map existing eHealth tools assessing neurological signs and/or symptoms for epidemiological research.

Methods

Four search engines (PubMed, Web of Science, Scopus & EBSCOHost) were used to retrieve articles on the development, validation, or implementation of eHealth tools to assess neurological signs and/or symptoms. The clinical and technical properties of the software tools were summarised. Owing to the high number of records retrieved, only software tools are presented here.

Findings

A total of 42 tools were retrieved. These captured signs and/or symptoms belonging to four neurological domains: cognitive function, motor function, cranial nerves, and gait and coordination. A fifth category of composite tools was added. Most of the tools were available in English and were developed for smartphones, with the remaining tools available as web-based platforms. Fewer than half of the captured tools were fully validated, and only approximately half were still active at the time of data collection.

Interpretation

The identified tools often presented limitations due to either language barriers or a lack of proper validation. For most tools, maintenance and durability were limited. The present mapping exercise offers a detailed guide for epidemiologists to identify the most appropriate eHealth tool for their research.

Funding

The current study was funded by a PhD position at the University of Groningen. No additional funding was acquired.

Background

Neurological disorders, including, among others, Alzheimer’s disease and other dementias, Parkinson’s disease, Multiple Sclerosis, epilepsy and headache, represent approximately 3% of the global burden of disease [1]. The burden of all neurological disorders combined has increased steadily since the early 1990s. Worldwide, disability-adjusted life-years (DALYs) due to neurological conditions increased by 15% between 1990 and 2016, despite a decline in communicable neurological disorders. Similarly, deaths from neurological disorders increased by 39% over the same period [2]. The highest incidence and mortality of neurological disorders are reported in low- and middle-income countries, where they often coexist with limited clinical and research resources [2]. No curative treatment is currently available for the majority of neurological disorders; prevention is therefore essential to reduce the overall burden [3].

Electronic tools have become widely available, and information technology has played an increasingly prominent role in clinical medicine and research [4]. In this field, such tools are collectively referred to as electronic health (eHealth) tools. In general, eHealth tools contribute to improving assessment and intervention, closing the physical distance between patient and clinician, and assisting research [4, 5]. eHealth tools may involve the presence of a skilled health worker (in person or via video-conferencing) or be available as fully automated tools or devices, such as the eHealth services that screen for disorders often seen in mental health [6]. eHealth tools can be divided into those that rely solely on software and those that require specific hardware. Tools in the first group (e.g., web-based platforms, mobile apps) have wider application, as they require only a suitable device such as a smartphone and/or a laptop. Tools relying on specific equipment (e.g., a handle to measure grip strength), on the other hand, often require additional logistics, such as transportation and trained personnel. The development and use of eHealth tools became more relevant during the recent COVID-19 pandemic, when in-person contact was limited [7].

A large proportion of eHealth is used for diagnosis or disease management [8]. Nonetheless, some of these tools are also highly relevant for research. eHealth tools that collect data outside hospital settings and without relying on specialised personnel are of particular interest for epidemiological studies [9]. Population-based epidemiological studies often require the assessment of clinical outcomes in large cohorts, and eHealth tools can enable data collection on a large scale. This is particularly relevant for studying hard-to-reach populations or large cohorts in low-income settings, where research-related resources can be scarce [10]. Among the eHealth tools available for data collection, those focusing on the assessment of neurological function are particularly valuable. For research purposes only, a comprehensive eHealth assessment of neurological function could potentially replace the neurological examination performed by clinical neurologists, which is a very expensive resource. Capturing the distribution of neurological signs and symptoms at the population level might allow the prevalence of selected neurological disorders to be estimated in epidemiological studies.

Mapping and describing tools that could potentially be used for research provides a basis for the creation and implementation of novel eHealth tools in the field of neuroepidemiology. A comprehensive map can therefore be useful both for guiding epidemiological research and for developing future tools. This systematic review aimed to capture and map eHealth tools currently available in the literature that are capable of identifying any neurological sign and/or symptom in the general population (i.e., that can be used for epidemiological research, as opposed to clinical application) [11]. The intent was therefore to describe and characterise these tools, rather than the studies in which they were used or the underlying populations. Given the large number of records found, only software tools are reported in this paper (i.e., eHealth tools that require no equipment other than a mobile device or computer), while hardware tools will be the focus of a future paper.

Methods

A protocol for this systematic review was registered in the PROSPERO database (ID: 314489) and subsequently published [11].

Search strategy and selection criteria

The search strategy was devised to capture all relevant papers. Four main fields were identified and linked with the Boolean operator AND: electronic tool (mobile app, electronic app, app, device, eHealth, mHealth, wearable), assessment (screening, assessment, measurement), sign and/or symptom (sign, symptom, outcome, disease, disorder), and neurological examination (neuro, brain, speech, tremor, cognitive, gait, motor, cranial, coordination, sensation). Within each field, similar terms were linked with the Boolean operator OR. An additional field containing terms capturing tools used for diagnostic or clinical purposes (i.e., intervention, improvement, rehabilitation, care, treatment) was defined and excluded from the search using the Boolean operator NOT. The search terms for neurological signs and symptoms were based on a conventional neurological examination [12]. A full list of terms by field is reported in the protocol [11].
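The field structure described above can be illustrated with a short sketch that assembles a generic Boolean search string. This is a hypothetical helper, not the query actually submitted: it uses only the example terms quoted in this section (the full list is in the protocol [11]), and it omits the database-specific field tags, truncation symbols and date limits that PubMed, Web of Science, EBSCOHost and Scopus each require.

```python
# Hypothetical sketch: assemble a generic Boolean query from term groups.
# Terms are the examples quoted in the text; database-specific field tags,
# truncation symbols and date limits are deliberately left out.

def or_group(terms: list[str]) -> str:
    """Join similar terms with OR, quoting multi-word phrases."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(fields: dict[str, list[str]], excluded: list[str]) -> str:
    """Link the main fields with AND, then remove the excluded field with NOT."""
    core = " AND ".join(or_group(terms) for terms in fields.values())
    return f"({core}) NOT {or_group(excluded)}"


FIELDS = {
    "electronic tool": ["mobile app", "electronic app", "app", "device",
                        "eHealth", "mHealth", "wearable"],
    "assessment": ["screening", "assessment", "measurement"],
    "sign and/or symptom": ["sign", "symptom", "outcome", "disease", "disorder"],
    "neurological examination": ["neuro", "brain", "speech", "tremor", "cognitive",
                                 "gait", "motor", "cranial", "coordination",
                                 "sensation"],
}
EXCLUDED = ["intervention", "improvement", "rehabilitation", "care", "treatment"]

if __name__ == "__main__":
    print(build_query(FIELDS, EXCLUDED))
```

Keeping each field as its own OR-group makes the logic explicit: a record must match at least one term from every main field and no term from the excluded field.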

Searches were conducted on 11 February 2022 in four electronic databases: PubMed, Web of Science, EBSCOHost and Scopus. The searches were limited to publications from 2008 onwards; 2008 was chosen as the year in which the first modern smartphone was released, so that only tools in line with contemporary technology would be captured.

The inclusion and exclusion criteria were defined according to an adapted version of the Population Intervention Control Outcome (PICO) criteria.

Population – Studies with human participants of every age, sex and gender were included.

Intervention – Tools that could be used outside clinical settings and without the assistance of a clinical neurologist in the process of data collection (i.e. tools to be used in research and not in clinical practice).

Outcome – Studies addressing the development, validation, or implementation of software eHealth interventions that assess a neurological sign, symptom or function.

Only empirical research published in English in peer-reviewed journals was considered. Animal studies and studies using Artificial Intelligence or automated analysis to make a diagnosis were excluded. Likewise, studies that collected data using non-portable equipment (e.g., neuroimaging), lab procedures (e.g., biomarkers), or specialised medical personnel were excluded, so that only tools for epidemiological research, rather than clinical practice, were identified. When more than one paper reported data on the same tool, only the paper reporting data from the largest population was summarised in the tables.
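The rule for tools reported in more than one paper can be written as a simple group-and-select step. The sketch below is illustrative only: the `Paper` record, its field names and the example tool names are hypothetical, and ties in sample size are resolved by keeping the first paper encountered, a choice the text does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    """Hypothetical minimal record of one eligible paper."""
    paper_id: str
    tool_name: str
    sample_size: int


def one_paper_per_tool(papers: list[Paper]) -> dict[str, Paper]:
    """Keep, for each tool, the paper reporting data from the largest population.

    On a tie in sample size, the paper seen first is kept (an assumption).
    """
    chosen: dict[str, Paper] = {}
    for paper in papers:
        current = chosen.get(paper.tool_name)
        if current is None or paper.sample_size > current.sample_size:
            chosen[paper.tool_name] = paper
    return chosen


if __name__ == "__main__":
    papers = [
        Paper("p1", "ToolA", 40),
        Paper("p2", "ToolA", 120),
        Paper("p3", "ToolB", 15),
    ]
    for tool, paper in one_paper_per_tool(papers).items():
        print(tool, paper.paper_id, paper.sample_size)
```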

Further details on eligibility can be found in the protocol [11].

Data analysis

Zotero was used to store references and relevant information on each publication. The reference lists obtained from each search engine were combined, and duplicates were removed. Titles and abstracts were first screened for eligibility. Subsequently, two reviewers independently assessed the identified papers against the inclusion and exclusion criteria. Whenever the two reviewers disagreed on the inclusion or exclusion of a given paper, a third reviewer resolved the disagreement.
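The dual-review step with third-reviewer arbitration amounts to a small decision rule, sketched below for a single paper. The function name and the boolean encoding (True meaning include) are assumptions made for illustration; in the review itself these were human judgements, and the sketch only shows how they combine.

```python
from typing import Optional


def final_decision(reviewer_1: bool, reviewer_2: bool,
                   third_reviewer: Optional[bool] = None) -> bool:
    """Combine two independent include/exclude judgements for one paper.

    True means include. If the two reviewers agree, their shared decision
    stands; otherwise the third reviewer's judgement resolves it.
    """
    if reviewer_1 == reviewer_2:
        return reviewer_1
    if third_reviewer is None:
        raise ValueError("reviewers disagree: a third reviewer's decision is needed")
    return third_reviewer


if __name__ == "__main__":
    print(final_decision(True, True))          # agreement: include
    print(final_decision(True, False, False))  # disagreement resolved: exclude
```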

Data extraction was structured according to the following categories:

- General characteristics of the paper: authors, year of publication, country;
- Type of study: development, validation, or implementation of electronic tools;
- eHealth tool: name, length of assessment, internet connection requirement, self-assessment vs. instructor-mediated assessment, validated vs. non-validated in a population, availability (i.e., platform);
- Participants: sample size, mean age and gender distribution if applicable;
- Context: setting of the research, source of funding;
- Outcome: sign/symptom assessed, type of output variable (e.g., score, measurement on a continuous scale);
- Technical characteristics and availability: licensing status, maintenance strategy, access link.

Corresponding authors were contacted to complement data provided by the published paper, where needed.
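The extraction categories listed above map naturally onto a structured record, which can help keep extraction consistent across reviewers. The sketch below is a hypothetical schema, not the form used in the review: field names, types and enum values are assumptions, items that papers often omitted default to empty, and `is_active` encodes the rule, stated in the Methods, that a tool counts as still active if a URL or another means of access was found.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class StudyType(Enum):
    DEVELOPMENT = "development"
    VALIDATION = "validation"
    IMPLEMENTATION = "implementation"


class ValidationStatus(Enum):
    VALIDATED = "validated in a population"
    PARTIAL = "partially validated against similar measures"
    NOT_VALIDATED = "not validated"


@dataclass
class ExtractionRecord:
    """Hypothetical extraction record mirroring the categories in the text."""
    # General characteristics of the paper
    authors: str
    year: int
    country: str
    # Type of study
    study_type: StudyType
    # eHealth tool
    tool_name: str
    needs_internet: bool
    self_assessment: bool  # False means instructor-mediated assessment
    validation: ValidationStatus
    # Participants
    sample_size: int
    # Outcome
    sign_or_symptom: str
    output_type: str  # e.g. "score" or "continuous measurement"
    # Items that papers often did not report; left empty by default
    assessment_minutes: Optional[float] = None
    platforms: list[str] = field(default_factory=list)  # e.g. ["Android OS", "iOS"]
    mean_age: Optional[float] = None
    gender_distribution: Optional[str] = None
    setting: Optional[str] = None
    funding_source: Optional[str] = None
    licensing_status: Optional[str] = None
    maintenance_strategy: Optional[str] = None
    access_url: Optional[str] = None
    other_access: Optional[str] = None  # any non-URL means of access

    def is_active(self) -> bool:
        """A tool counts as still active if a URL or another access mode was found."""
        return self.access_url is not None or self.other_access is not None
```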

Included papers were not formally assessed for quality, given the very high heterogeneity in how they were reported. However, aspects of the quality of the descriptive papers and their validation studies, such as validation measures and group comparisons, were taken into consideration when summarising the results. eHealth tools were considered still active if a URL or another means of access could be found. All sections of the systematic review were reported following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [13].

The information extracted from the original papers was reported in a series of tables aimed at providing an overview of relevant items at a glance, by technical characteristics and by sign/symptom assessed. In addition, a conceptual graph mapping each tool by the neurological function assessed was drawn using Microsoft Visio [14].

Role of the funding source

The funder of the study had no role in study design, data collection, data analysis, data interpretation, or writing of the report.

Findings

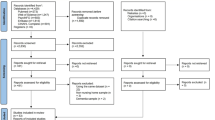

The database searches initially returned 16,404 papers. After duplicate removal, 9,619 papers remained to be screened. After non-relevant items were excluded on the basis of titles and abstracts, 380 reports were considered for inclusion. Of these, full texts were retrieved for the 136 papers reporting on software tools. After the inclusion and exclusion criteria were applied, 94 papers were excluded, leading to a final sample of 42 software eHealth tools included in the present review (Fig. 1). Papers were excluded because they a) did not refer to a neurological sign or symptom (n = 30), b) did not refer to tools suitable for a research setting (n = 34), c) did not refer to a software tool, or required extra equipment (n = 19), d) reported duplicated tools (n = 6), or e) were non-empirical studies (n = 4).
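The counts reported at each stage of selection can be cross-checked by simple subtraction. The sketch below uses only the figures given in this paragraph; the stage labels are paraphrased, and the drop from 380 to 136 corresponds to reports that did not concern software tools, which fall outside this paper.

```python
def stage_drops(stages: list[tuple[str, int]]) -> list[tuple[str, str, int]]:
    """Return (from_stage, to_stage, number_dropped) for consecutive stages."""
    return [
        (from_name, to_name, from_n - to_n)
        for (from_name, from_n), (to_name, to_n) in zip(stages, stages[1:])
    ]


# Record counts as reported in the text.
STAGES = [
    ("identified in database searches", 16_404),
    ("left after duplicate removal", 9_619),
    ("considered after title/abstract screening", 380),
    ("full texts on software tools", 136),
    ("included software tools", 42),
]

if __name__ == "__main__":
    for from_name, to_name, dropped in stage_drops(STAGES):
        print(f"{from_name} -> {to_name}: {dropped:,}")
```

The first difference (6,785) is the number of duplicates removed, and the last (136 - 42 = 94) matches the number of papers excluded at the full-text stage.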

The main clinical characteristics of the tools are reported in Table 1, according to the neurological function assessed: 19 tools assessing cognitive function [15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33]; six tools assessing motor function [34,35,36,37,38,39]; two tools assessing cranial nerve function [42, 43]; and nine tools assessing gait and coordination [44,45,46,47,48,49,50,51,52]. In this table, the tools are organised by the symptom or sign assessed (e.g., hand tremor) and the type of measurement used for assessment (e.g., a measure of tremor intensity). General information on their validation is also reported. An additional section reports six composite tools [53,54,55,56,57,58], i.e. tools screening for a wider set of signs and/or symptoms in patients with a specific neurological condition (e.g., elevateMS in Multiple Sclerosis [53]). The technical properties of all the tools are summarised in Table 2, which collates information such as the need for an internet connection and the platform (Android OS or iOS) on which each tool is available. A conceptual map displaying all the captured eHealth tools organised by neurological function is shown in Fig. 2.

As described in Table 2, 15 tools (36%) required an internet connection [15, 19, 22, 23, 27, 30, 31, 33, 38, 40, 50, 52, 55, 56, 58], mostly for real-time data transfer or data upload. At least 26 tools (62%) collected data through self-assessment [15, 17,18,19,20,21,22,23,24, 26, 30,31,32, 36, 38, 39, 41, 44, 46, 47, 49, 52, 53, 55, 56, 58] without the need for external aid, although some of these mention the presence of an instructor, mostly at the beginning to explain the procedure. Only one of the included tools [43] required a clinician's expertise to interpret the output of the data collected. A total of 26 tools (62%) were available in English [15,16,17,18,19,20,21,22, 25, 27,28,29,30,31, 36, 37, 39,40,41,42,43,44, 53, 56,57,58], with only 4 (10%) available in more than one language [20, 29, 31, 44]. Only 18 tools (43%) were validated in a given population [17, 18, 25, 26, 29,30,31,32, 37, 38, 40, 42,43,44, 51, 52, 54, 56], and another 10 (24%) were partially validated against similar measures [15, 16, 20, 21, 23, 36, 39, 41, 45, 58]. Most tools were available as mobile software (e.g., for a tablet or smartphone), with at least 5 (12%) being smartphone applications compatible with both Android OS and iOS [19, 25, 29, 44, 45]. The remaining tools, which were not available for mobile devices, were developed for a web-based platform and accessible through a browser.
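The percentages in this paragraph are shares of the 42 included tools, rounded to the nearest whole number. A short sketch reproducing them is given below; the dictionary keys are paraphrased labels, and counts given as "at least" are treated as exact for the calculation.

```python
TOTAL_TOOLS = 42

# Number of tools with each property, as reported in the text.
COUNTS = {
    "required an internet connection": 15,
    "self-assessment (at least)": 26,
    "available in English": 26,
    "available in more than one language": 4,
    "validated in a population": 18,
    "partially validated": 10,
    "both Android OS and iOS (at least)": 5,
}


def percent_of_tools(n: int, total: int = TOTAL_TOOLS) -> int:
    """Share of all included tools, rounded to a whole percentage."""
    return round(100 * n / total)


if __name__ == "__main__":
    for label, n in COUNTS.items():
        print(f"{label}: {n}/{TOTAL_TOOLS} = {percent_of_tools(n)}%")
```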

Information on how to access the eHealth tools was often incomplete in the published papers. We contacted all corresponding authors but received responses from only 24%. Furthermore, among the tools for which corresponding authors provided additional information on accessibility, at least one did not yet have a consumer-ready version. Information on tool accessibility can be found in Table 3. By the end of the process, the authors were able to identify Uniform Resource Locators (URLs) for 22 (52%) tools, pointing to an application store, database, or website [15, 16, 19, 23,24,25, 27,28,29, 38, 40,41,42,43,44,45,46, 50, 51, 53,54,55].

Of the 16 studies funded solely from public sources, at least 10 tools were still accessible at the time of the review [19, 21, 23,24,25, 38, 40,41,42,43]. Of the 4 privately funded studies, at least 2 tools were still accessible [50, 53]. Of the 8 studies that received both public and private funding, at least 6 tools were still accessible [15, 16, 28, 29, 44, 55]. The remaining studies disclosed no external funding or had no funding information available; at least 6 of these tools were still accessible [27, 33, 45, 46, 51, 54]. All 17 tools found to have proprietary licensing (i.e., owned by a private entity or corporation) were still accessible at the time of this review [15, 16, 19, 21, 25, 27,28,29, 41, 42, 44,45,46, 50, 51, 54, 55]. One tool had a licence belonging to a non-profit organisation [53], and 3 tools were open source [24, 38, 40]; all were still accessible at the time of data collection.
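Expressing these counts as proportions makes the comparison between funding sources easier to read. In the sketch below, the size of the remaining group (14) is derived by assuming one summarised paper per tool, so that the four groups add up to the 42 included tools; because the text gives lower bounds ("at least"), the resulting shares are lower bounds too.

```python
TOTAL_TOOLS = 42

# (tools found still accessible, tools in group), as reported in the text.
PUBLIC_ONLY = (10, 16)
PRIVATE_ONLY = (2, 4)
PUBLIC_AND_PRIVATE = (6, 8)
# Assumption: one summarised paper per tool, so the remaining group is 42 - 16 - 4 - 8.
REMAINING = (6, TOTAL_TOOLS - 16 - 4 - 8)

GROUPS = {
    "public only": PUBLIC_ONLY,
    "private only": PRIVATE_ONLY,
    "public and private": PUBLIC_AND_PRIVATE,
    "no external funding / not reported": REMAINING,
}


def accessible_share(accessible: int, total: int) -> float:
    """Lower bound on the share of tools still accessible in one funding group."""
    return accessible / total


if __name__ == "__main__":
    for name, (accessible, total) in GROUPS.items():
        share = accessible_share(accessible, total)
        print(f"{name}: at least {accessible}/{total} ({share:.1%})")
```

On these figures, the group with combined public and private funding has the highest lower-bound share (75%).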

Interpretation

This systematic review mapped a total of 42 eHealth software tools that assess one or more neurological signs and/or symptoms and are potentially useful for research purposes. The most targeted neurological domain was cognitive function, followed by gait, balance and coordination. Six tools assessing a combination of signs and symptoms were also identified; these were designed to monitor neurological function in patients affected by specific conditions, namely Parkinson’s disease [56, 58], Multiple Sclerosis [53], stroke [54, 55], or the consequences of concussion [57]. Tools assessing motor function alone or cranial nerves were less frequent.

The disproportionately high number of tools assessing cognitive function might reflect the fact that cognitive impairment is a frequent manifestation of several late-stage neurological conditions [59, 60]. In addition, it may be easier to transpose a pen-and-paper test to a digital format, which in some cases even improves data collection compared with the analogue counterpart [61]. Some neurological domains, such as cranial nerve functions (e.g., facial symmetry, swallowing) and sensation (e.g., pain, deep sensation), appear underrepresented in the reviewed studies. This is an important gap for population-based research, where peripheral neuropathies associated with metabolic syndrome [62] and pre-clinical stages of diabetes [63], particularly in the obese population, might go undetected. A tool aimed at screening neurological symptoms for research purposes in the general population would ideally also cover these domains.

While some tools have been fully or partially validated, facilitating implementation in real-world contexts, the heterogeneity in the description and reporting of the included tools was very high. Some tools were described, but their testing in a population was not reported, limiting their potential applicability. Other studies reported tools used in clinical settings with patients rather than the general population; these were nonetheless included in this systematic review, as they were deemed useful for epidemiological research. In addition, while most of the described tools were available in English, only a very small proportion were available in more than one language, adding to the challenge of performing epidemiological research beyond English-speaking populations.

A notable finding of this systematic review was the scarcity of tools specifically designed for children. Only one tool targeted a young paediatric population [16]. This could partly be attributed to ethical considerations and boundaries that make research on children more complex and challenging [64]. Nevertheless, these hurdles should not deter researchers from developing age-appropriate tools for children. There is a pressing need to bridge this gap and develop more child-focused tools, designed with ethical and developmental aspects in mind, to better serve this population group in research settings. No study specifically assessed the ability of older adults to use eHealth, despite some articles reporting a mean sample age over 65 [26, 30, 31, 36, 41, 58]. Previous studies show that this age group has greater difficulty using digital tools [65, 66]. The expansion of eHealth is often accompanied by a greater emphasis on digital literacy, and eHealth literacy programmes, delivered as either multimedia or paper-based training, have generally been well received by older adults [67].

Only one tool [29] included an attempt at cross-cultural validation, i.e. assessing the acceptability, feasibility and correct interpretation of outcomes in populations with different cultural norms, including beliefs about disease, levels of literacy, or trust in technology, by validating the tool in the different cultural contexts of Central Europe and South Africa [29, 68]. Cross-cultural validation is particularly relevant considering that in some cultures neurological signs and symptoms, such as seizures or tremor, are often attributed to supernatural causes or viewed with prejudice (e.g., demonisation and witchcraft) [69, 70]. With the increasing availability of smartphones, eHealth tools could enable data collection for epidemiological research in previously hard-to-reach environments or populations. However, this will not be problem-free: additional strategies, such as involving relevant stakeholders including policymakers, will likely be needed, as some behavioural and technological barriers still persist in many populations [10, 71,72,73].

During the review process, the authors searched online for the tools, their original authors, and their developers. Access was often a challenge due to missing URLs in papers, missing information on whether the tool was still active or discontinued, and the fact that some tools did not have a specific name, had since been renamed, or had a successor app that was named or looked different. These findings mirror the experience of previous systematic reviews of app-based research in the broader healthcare sector. For example, Montano and collaborators [74] reviewed 26 papers on mobile triage applications, of which only 13 (50%) could be identified on the basis of the paper, and only two were still accessible via the Google Play Store at the time the review was conducted. In addition to the lack of information for finding the tools, the unresponsiveness of authors posed yet another challenge to accessibility. The inaccessibility of many research applications shortly after the related paper is published is especially relevant in light of the so-called replication crisis [75]; here, it highlights the need for accountability and transparency beyond the peer-review process.

The heterogeneity in study design captured by this systematic review suggests that researchers often did not publish the description and validation of the tool they had devised as a separate paper (see, for example, [76] and [77]), but directly within the study they were conducting. This inevitably reduces the space available for describing the technical properties of the eHealth tools (e.g., technical design, functionality, implementation, and maintainability). In such cases, the specific application is treated as a sufficient method rather than a required one: the chosen tool can fulfil the research objective, but could be replaced by another similar application. This considerably reduces consistency across studies and the ability to pool or meta-analyse results. Moreover, comparing functionally similar but independently developed software products with small but important differences in design or engineering may introduce errors that distort data collection and bias comparisons [78]. In general, variations in the technology components that are implemented together, or in the strategy for their implementation, reduce replication fidelity [78]. Most of the tools captured in this systematic review were created as part of a broader research project, or in preparation for one; their development was not a primary research objective or method. Separating app development from the research question, and excluding questions of software engineering from the discussion, compromises replicability, accessibility, and longevity. Unfortunately, it is a common misconception that accessibility and maintenance are solely a matter of software engineering.

When eHealth tools are specifically developed for a study and their use is a crucial part of the study design, information on accessibility and maintenance should not be dismissed as a mere software engineering issue; both must be thoroughly planned and addressed to ensure the replicability of the findings. In this mapping exercise, studies with combined public and private funding were the most likely to have kept their eHealth tool available and accessible up to the time of this review. Licensing models, however, appeared to be key to longevity: when the authors and developers of a tool adopt a strategy of private ownership, either via a company or an individual, the tool is more likely to remain active. This was supported by the fact that all 17 tools with proprietary licensing were still active and accessible at the time of data extraction.

It was not possible to assess the costs of the eHealth tools, either in themselves or in relation to their longevity, given a lack of relevant information. The longevity of tools depends mainly on a maintenance strategy that keeps them compatible with rapidly developing and updating mobile technology. Implementing an adequate and lasting maintenance strategy is key to increasing the longevity of eHealth. The challenges of implementing eHealth in real-life contexts, such as the need for tools to be more interactive and interoperable and designed to fit multiple contexts, consumers, and providers, are well known [79]. However, whether eHealth tools remain preserved and usable after development is often overlooked in the scientific literature. By disregarding proper maintenance strategies for eHealth tools, authors may indirectly be creating further challenges to the advancement of eHealth research, development and implementation, at least in the long term. We foresee two main strategies that could balance costs with longevity. One option would be for future eHealth tools to take accessibility and shareability into account (i.e., making their code open source), so that the scientific and developer community may contribute to keeping them active and usable. Conversely, for proprietary tools, having a designated team that regularly updates the tool and focuses on platform stability appears crucial to preserving it over time. However, sustaining a maintenance strategy may require continuous acquisition and allocation of funds. It is important that strategies to promote longevity are established and clarified from the very beginning of eHealth tool development (i.e., the design phase), to ensure a feasible plan for longevity.

Furthermore, future research should focus on producing a standardised measure to assess eHealth, similar to the existing Mobile App Rating Scale (MARS) [80], that is able to address tool longevity (e.g., accessibility, shareability, costs, ownership, and maintenance strategy).

Given the high number of retrieved papers meeting the inclusion and exclusion criteria, this review included only software tools. Software incorporating Artificial Intelligence was excluded to avoid capturing tools aimed at categorising disease severity or aiding a formal clinical diagnosis. Maintaining the focus on research made it possible to map tools that could potentially be used for field data collection when screening for neurological impairment.

It is important to note that some of these studies and tools focused on collecting signs and symptoms (e.g., tremor) related to one neurological disease in particular (e.g., Parkinson’s disease). This implies that only symptoms frequently reported by patients with that specific condition are assessed. However, this may not limit the ability of a tool to assess the same set of symptoms in patients with other conditions, in different settings, or in the general population, as pointed out by some of the authors [21, 40]. Nonetheless, the lack of validation of the captured tools remains an ongoing challenge within the eHealth field, representing one of the main barriers to their use. The vast number of studies proposing and/or developing such tools is not matched by an equivalent number of reports on their validation and application in real-life contexts, with very few tools being fully validated. Furthermore, the heterogeneity of validation approaches and of methods to measure reliability makes comparisons more difficult. The use of gold standards, combined with appropriate comparison groups (e.g., healthy vs. impaired populations), could be a potential solution to reduce the heterogeneity of validations.
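As one generic illustration of validation against a gold standard with healthy and impaired comparison groups, a tool's binary screening result can be compared with the gold-standard classification to obtain sensitivity and specificity. This is an assumed example of a common validation measure, not a method prescribed by the reviewed studies, and the data below are invented.

```python
def sensitivity_specificity(tool_flags: list[bool],
                            gold_impaired: list[bool]) -> tuple[float, float]:
    """Compare a tool's screening flags with a gold-standard classification.

    Sensitivity = true positives / all gold-standard impaired participants.
    Specificity = true negatives / all gold-standard healthy participants.
    """
    if len(tool_flags) != len(gold_impaired):
        raise ValueError("each participant needs a tool result and a gold-standard label")
    pairs = list(zip(tool_flags, gold_impaired))
    tp = sum(t and g for t, g in pairs)
    fn = sum((not t) and g for t, g in pairs)
    tn = sum((not t) and (not g) for t, g in pairs)
    fp = sum(t and (not g) for t, g in pairs)
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Invented data: four impaired and four healthy participants per the gold standard.
    gold = [True, True, True, True, False, False, False, False]
    tool = [True, True, True, False, False, False, True, False]
    sens, spec = sensitivity_specificity(tool, gold)
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```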

Conclusions

eHealth represents a unique opportunity for researchers to collect data in the field at limited cost. However, eHealth development often appears to neglect the needs of the population it targets, leading to greater heterogeneity and lower validity and reliability. It also appears to disregard the implementation of strategies to keep the tools active over time. Establishing rigorous standards to guide the development of eHealth is increasingly vital to guaranteeing its success. This study mapped existing eHealth software tools aimed at assessing neurological signs and symptoms in populations outside the clinical setting. The mapping and tool descriptions can be used as a guide for neuroepidemiological research. This mapping exercise highlighted the high heterogeneity and low comparability of existing tools, which hamper their use as a basis for a much-needed new eHealth software tool able to screen a wider range of signs and symptoms in population-based studies for research purposes. This review also emphasises the need to produce more replicable and accessible eHealth research.

Data availability

All data are available within the article and its supplementary material.

References

Reddy KS (2016) Global Burden of Disease Study 2015 provides GPS for global health 2030. The Lancet 388(10053):1448–1449

Feigin VL, Nichols E, Alam T, Bannick MS, Beghi E, Blake N et al (2019) Global, regional, and national burden of neurological disorders, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. The Lancet Neurology 18(5):459–480

Feigin VL, Vos T, Nichols E, Owolabi MO, Carroll WM, Dichgans M et al (2020) The global burden of neurological disorders: translating evidence into policy. The Lancet Neurology 19(3):255–265

Eysenbach G (2001) What is e-health? J Med Internet Res 3(2):e20

Peterson CB, Hamilton C, Hasvold P (2016) From innovation to implementation: eHealth in the WHO European region. WHO Regional Office for Europe, Copenhagen, Denmark

Chattopadhyay S (2012) A prototype depression screening tool for rural healthcare: a step towards ehealth informatics. J Med Imaging Hlth Inform 2(3):244–249

Guitton MJ (2021) Something good out of something bad: eHealth and telemedicine in the Post-COVID era. Comput Hum Behav 123:106882

Steele Gray C, Miller D, Kuluski K, Cott C (2014) Tying ehealth tools to patient needs: exploring the use of ehealth for community-dwelling patients with complex chronic disease and Disability. JMIR Res Protoc 3(4):e67

Mentis AFA, Dardiotis E, Efthymiou V, Chrousos GP (2021) Non-genetic risk and protective factors and biomarkers for neurological disorders: a meta-umbrella systematic review of umbrella reviews. BMC Med 19(1):6

Archer N, Lokker C, Ghasemaghaei M, DiLiberto D (2021) eHealth Implementation Issues in Low-Resource Countries: Model, Survey, and Analysis of User Experience. J Med Internet Res 23(6):e23715

Ferreira VR, Seddighi H, Beumeler L, Metting E, Gallo V (2022) eHealth tools to assess neurological function: a systematic review protocol for a mapping exercise. BMJ Open 12(9):e062691

Fuller G (2019) Neurological examination made easy, 6th edn. Elsevier

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA et al (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 6(7):e1000100

Microsoft Corporation (2018) Microsoft Visio. https://products.office.com/en/visio/flowchart-software

Hsu WY, Rowles W, Anguera JA, Anderson A, Younger JW, Friedman S et al (2021) Assessing cognitive function in multiple sclerosis with digital tools: observational study. J Med Internet Res 23(12):e25748

Twomey DM, Wrigley C, Ahearne C, Murphy R, De Haan M, Marlow N et al (2018) Feasibility of using touch screen technology for early cognitive assessment in children. Arch Dis Child 103(9):853–858

Rentz DM, Dekhtyar M, Sherman J, Burnham S, Blacker D, Aghjayan SL et al (2016) The Feasibility of At-Home iPad Cognitive Testing For Use in Clinical Trials. J Prev Alzheimers Dis 3(1):8–12

Middleton RM, Pearson OR, Ingram G, Craig EM, Rodgers WJ, Downing-Wood H et al (2020) A rapid electronic cognitive assessment measure for multiple sclerosis: validation of cognitive reaction, an electronic version of the symbol digit modalities test. J Med Internet Res 22(9):e18234

Thabtah F, Mampusti E, Peebles D, Herradura R, Varghese J (2019) A Mobile-based screening system for data analyses of early dementia traits detection. J Med Syst 44:1

Simfukwe C, Youn Y, Kim S, An S. Digital trail making test-black and white: normal vs MCI. Appl Neuropsychol Adult

Hsu WY, Rowles W, Anguera J, Zhao C, Anderson A, Alexander A et al (2021) Application of an adaptive, digital, game-based approach for cognitive assessment in multiple sclerosis: observational study. J Med Internet Res 23(1):e24356

Newland P, Oliver B, Newland JM, Thomas FP (2019) Testing feasibility of a mobile application to monitor fatigue in people with multiple sclerosis. J Neurosci Nurs 51(6):331–334

Jongstra S, Wijsman L, Cachucho R, Hoevenaar-Blom M, Mooijaart S, Richard E (2017) Cognitive testing in people at increased risk of dementia using a smartphone app: the iVitality proof-of-principle study. JMIR Mhealth Uhealth 5(5):e68

Holding BC, Ingre M, Petrovic P, Sundelin T, Axelsson J (2021) Quantifying cognitive impairment after sleep deprivation at different times of day: a proof of concept using ultra-short smartphone-based tests. Front Behav Neurosci 15

Weizenbaum EL, Fulford D, Torous J, Pinsky E, Kolachalama VB, Cronin-Golomb A (2021) Smartphone-based neuropsychological assessment in Parkinson’s disease: feasibility, validity, and contextually driven variability in cognition. J Int Neuropsychol Soc 17:1–13

Zorluoglu G, Kamasak ME, Tavacioglu L, Ozanar PO (2015) A mobile application for cognitive screening of dementia. Comput Methods Programs Biomed 118(2):252

Clionsky M, Clionsky E (2014) Psychometric equivalence of a paper-based and computerized (iPad) version of the memory orientation screening test (MOST®). Clin Neuropsychol 28(5):747–755

Brearly T, Rowland J, Martindale S, Shura R, Curry D, Taber K (2019) Comparability of iPad and web-based NIH Toolbox cognitive battery administration in veterans. Arch Clin Neuropsychol 34(4):524–530

Demeyere N, Haupt M, Webb S, Strobel L, Milosevich E, Moore M et al (2021) Introducing the tablet-based Oxford cognitive screen-Plus (OCS-Plus) as an assessment tool for subtle cognitive impairments. Sci Rep 12(11):1

Dorociak KE, Mattek N, Lee J, Leese MI, Bouranis N, Imtiaz D et al (2021) The survey for memory, attention, and reaction time (SMART): development and validation of a brief web-based measure of cognition for older adults. Gerontology 67(6):740–752

Lunardini F, Luperto M, Romeo M, Basilico N, Daniele K, Azzolino D et al (2020) Supervised digital neuropsychological tests for cognitive decline in older adults: usability and clinical validity study. JMIR Mhealth Uhealth 8(9):e17963

Kokubo N, Yokoi Y, Saitoh Y, Murata M, Maruo K, Takebayashi Y et al (2018) A new device-aided cognitive function test, User eXperience-trail making test (UX-TMT), sensitively detects neuropsychological performance in patients with dementia and Parkinson’s disease. BMC Psychiatry 18(1):220

Dimauro G, Di Nicola V, Bevilacqua V, Caivano D, Girardi F (2017) Assessment of speech intelligibility in Parkinson’s disease using a speech-to-text system. IEEE Access 5

Wilson P, Leitner C, Moussalli A. Mapping the Potential of eHealth: Empowering the Citizen through eHealth Tools and Services. 53.

Wilson RS, Leurgans SE, Boyle PA, Schneider JA, Bennett DA (2010) Neurodegenerative basis of age-related cognitive decline. Neurology 75(12):1070–1078

Alberts JL, Koop MM, McGinley MP, Penko AL, Fernandez HH, Shook S et al (2021) Use of a smartphone to gather Parkinson’s disease neurological vital signs during the COVID-19 pandemic. Parkinsons Dis 2021:5534282

Rosenthal BD, Jenkins TJ, Ranade A, Bhatt S, Hsu WK, Patel AA (2019) The use of a novel tablet application to quantify dysfunction in cervical spondylotic myelopathy patients. Spine J 19

Kostikis N, Hristu-Varsakelis D, Arnaoutoglou M, Kotsavasiloglou C (2015) A smartphone-based tool for assessing parkinsonian hand tremor. IEEE J Biomed Health Inform 19

Kassavetis P, Saifee TA, Roussos G, Drougkas L, Kojovic M, Rothwell JC, Edwards MJ, Bhatia KP (2016) Developing a tool for remote digital assessment of Parkinson’s disease. Mov Disord Clin Pract 3

Lee CY, Kang SJ, Hong SK, Ma HI, Lee U, Kim YJ (2016) A validation study of a smartphone-based finger tapping application for quantitative assessment of bradykinesia in Parkinson’s disease. PLoS ONE 11(7):e0158852

Kuosmanen E, Wolling F, Vega J, Kan V, Nishiyama Y, Harper S et al (2020) Smartphone-based monitoring of Parkinson disease: quasi-experimental study to quantify hand tremor severity and medication effectiveness. JMIR Mhealth Uhealth 8(11):e21543

Linder S, Koop M, Tucker D, Guzi K, Gray D, Alberts J (2021) Development and validation of a mobile application to detect visual dysfunction following mild traumatic brain injury. Mil Med 186:584–591

Quinn TJ, Livingstone I, Weir A, Shaw R, Breckenridge A, McAlpine C et al (2018) Accuracy and feasibility of an Android-based digital assessment tool for post stroke visual disorders: the StrokeVision app. Front Neurol 9:146

Tosic L, Goldberger E, Maldaner N, Sosnova M, Zeitlberger AM, Staartjes VE et al (2020) Normative data of a smartphone app-based 6-minute walking test, test-retest reliability, and content validity with patient-reported outcome measures. J Neurosurg Spine 29:1–10

Arcuria G, Marcotulli C, Amuso R, Dattilo G, Galasso C, Pierelli F et al (2020) Developing a smartphone application, triaxial accelerometer-based, to quantify static and dynamic balance deficits in patients with cerebellar ataxias. J Neurol 267(3):625–639

Marano M, Motolese F, Rossi M, Magliozzi A, Yekutieli Z, Di Lazzaro V (2021) Remote smartphone gait monitoring and fall prediction in Parkinson’s disease during the COVID-19 lockdown. Neurol Sci 42

Bourke A, Scotland A, Lipsmeier F, Gossens C, Lindemann M (2020) Gait characteristics harvested during a smartphone-based self-administered 2-minute walk test in people with multiple sclerosis: test-retest reliability and minimum detectable change. Sensors

Serra-Añó P, Pedrero-Sánchez JF, Inglés M, Aguilar-Rodríguez M, Vargas-Villanueva I, López-Pascual J (2020) Assessment of Functional activities in individuals with Parkinson’s disease using a simple and Reliable smartphone-based procedure. Int J Environ Res Public Health 17:11

Obuchi SP, Tsuchiya S, Kawai H (2018) Test-retest reliability of daily life gait speed as measured by smartphone global positioning system. Gait Posture 61:282

Ishikawa M, Yamada S, Yamamoto K, Aoyagi Y (2019) Gait analysis in a component timed-up-and-go test using a smartphone application. J Neurol Sci 15(398):45–49

Lee JB, Kim IS, Lee JJ, Park JH, Cho CB, Yang SH et al (2019) Validity of a smartphone application (Sagittalmeter Pro) for the measurement of sagittal balance parameters. World Neurosurg 126:e8-15

Su D, Liu Z, Jiang X, Zhang F, Yu W, Ma H et al (2021) Simple smartphone-based assessment of gait characteristics in Parkinson disease: validation study. JMIR Mhealth Uhealth 9(2):e25451

Pratap A, Grant D, Vegesna A, Tummalacherla M, Cohan S, Deshpande C et al (2020) Evaluating the utility of smartphone-based sensor assessments in persons with multiple sclerosis in the real-world using an app (elevateMS): observational, prospective pilot digital health study. JMIR Mhealth Uhealth 8(10):e22108

Frank B, Fabian F, Brune B, Bozkurt B, Deuschl C, Nogueira RG, Kleinschnitz C, Köhrmann M (2021) Validation of a shortened FAST-ED algorithm for smartphone app guided stroke triage. Ther Adv Neurol Disord 14

Baldereschi M, Di Carlo A, Piccardi B, Inzitari D (2016) The Italian stroke-app: ICTUS3R. Neurol Sci 37(6):991–994

Arora S, Venkataraman V, Zhan A, Donohue S, Biglan KM, Dorsey ER, Little MA (2015) Detecting and monitoring the symptoms of Parkinson’s disease using smartphones: a pilot study. Parkinsonism Relat Disord 21

Park G, Balcer MJ, Hasanaj L, Joseph B, Kenney R, Hudson T et al (2022) The MICK (Mobile integrated cognitive kit) app: Digital rapid automatized naming for visual assessment across the spectrum of neurological disorders. J Neurol Sci 11(434):120150

Pan D, Dhall R, Lieberman A, Petitti DB (2015) A mobile cloud-based Parkinson’s disease assessment system for home-based monitoring. JMIR Mhealth Uhealth 3(1):e29

Karr JE, Graham RB, Hofer SM, Muniz-Terrera G (2018) When does cognitive decline begin? A systematic review of change point studies on accelerated decline in cognitive and neurological outcomes preceding mild cognitive impairment, dementia, and death. Psychol Aging 33(2):195–218

Braak H, Rüb U, Del Tredici K (2006) Cognitive decline correlates with neuropathological stage in Parkinson’s disease. J Neurol Sci 248(1–2):255–258

Dale O, Hagen KB (2007) Despite technical problems personal digital assistants outperform pen and paper when collecting patient diary data. J Clin Epidemiol 60(1):8–17

Kazamel M, Stino AM, Smith AG (2021) Metabolic syndrome and peripheral neuropathy. Muscle Nerve 63(3):285–293

Kirthi V, Perumbalath A, Brown E, Nevitt S, Petropoulos IN, Burgess J et al (2021) Prevalence of peripheral neuropathy in pre-diabetes: a systematic review. BMJ Open Diab Res Care 9(1):e002040

Fernandez C, Canadian Paediatric Society (CPS), Bioethics Committee (2008) Ethical issues in health research in children. Paediatr Child Health 13(8):707–712

Ali MA, Alam K, Taylor B, Ashraf M (2021) Examining the determinants of eHealth usage among elderly people with disability: The moderating role of behavioural aspects. Int J Med Informatics 149:104411

Alam K, Mahumud RA, Alam F, Keramat SA, Erdiaw-Kwasie MO, Sarker AR (2019) Determinants of access to eHealth services in regional Australia. Int J Med Informatics 131:103960

De Main AS, Xie B, Shiroma K, Yeh T, Davis N, Han X (2022) Assessing the Effects of eHealth Tutorials on Older Adults’ eHealth Literacy. J Appl Gerontol 41(7):1675–1685

Humphreys GW, Duta MD, Montana L, Demeyere N, McCrory C, Rohr J et al (2017) Cognitive function in low-income and low-literacy settings: validation of the tablet-based Oxford Cognitive Screen in the Health and Aging in Africa: a Longitudinal Study of an INDEPTH Community in South Africa (HAALSI). J Gerontol B Psychol Sci Soc Sci 72(1):38–50

Raynor G, Baslet G (2021) A historical review of functional neurological disorder and comparison to contemporary models. Epilepsy & Behavior Reports 16:100489

Spittel S, Kraus E, Maier A (2021) Dementia awareness challenges in sub-Saharan Africa: a cross-sectional survey conducted among school students in Ghana. Am J Alzheimers Dis Other Demen 36:153331752110553

Steinman L, van Pelt M, Hen H, Chhorvann C, Lan CS, Te V et al (2020) Can mHealth and eHealth improve management of diabetes and hypertension in a hard-to-reach population? —lessons learned from a process evaluation of digital health to support a peer educator model in Cambodia using the RE-AIM framework. mHealth 6(40):40

Karlyn A, Odindo S, Onyango R, Mbindyo C, Mberi T, Too G et al (2020) Testing mHealth solutions at the last mile: insights from a study of technology-assisted community health referrals in rural Kenya. mHealth 6(43):43

Lewis T, Synowiec C, Lagomarsino G, Schweitzer J (2012) E-health in low- and middle-income countries: findings from the center for health market innovations. Bull World Health Organ 90(5):332–340

Montano IH, de la Torre DI, López-Izquierdo R, Villamor MAC, Martín-Rodríguez F (2021) Mobile triage applications: a systematic review in literature and play store. J Med Syst 45(9):86

Ioannidis JPA (2005) Why most published research findings are false. PLoS Med 2(8):e124

James L, Davies M, Mian S, Seghezzo G, Williamson E, Kemp S et al (2021) The BRAIN-Q, a tool for assessing self-reported sport-related concussions for epidemiological studies. Epidemiol Health 19(43):e2021086

Gallo V, McElvenny DM, Seghezzo G, Kemp S, Williamson E, Lu K et al (2022) Concussion and long-term cognitive function among rugby players: the BRAIN Study. Alzheimers Dement 18(6):1164–1176

Coiera E, Ammenwerth E, Georgiou A, Magrabi F (2018) Does health informatics have a replication crisis? J Am Med Inform Assoc 25(8):963–968

Kreps GL, Neuhauser L (2010) New directions in eHealth communication: Opportunities and challenges. Patient Educ Couns 78(3):329–336

Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, Mani M (2015) Mobile app rating scale: a new tool for assessing the quality of health mobile apps. JMIR Mhealth Uhealth 3(1):e27

Acknowledgements

The authors would like to thank Joost Driesens, librarian at the University of Groningen, for his support during the planning of this review.

Funding

This systematic review stems from a funded PhD position at the University of Groningen. No further funding was acquired.

Author information

Contributions

VRF, together with VG and EM, developed the structure of the systematic review (search strategy, selection criteria, and data analysis). VRF and JS performed the data extraction: VRF focused mainly on study characteristics and JS on tool characteristics. LB double-checked part of the data extraction. VRF wrote the manuscript together with VG and JS. HS and LB provided feedback on and edits to the manuscript.

Ethics declarations

Conflicts of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ferreira, V.R., Metting, E., Schauble, J. et al. eHealth tools to assess the neurological function for research, in absence of the neurologist – a systematic review, part I (software). J Neurol 271, 211–230 (2024). https://doi.org/10.1007/s00415-023-12012-6

DOI: https://doi.org/10.1007/s00415-023-12012-6