Abstract

In this study, the novelty effect, or initial fascination with new technology, is addressed in the context of an immersive Virtual Reality (iVR) experience. The novelty effect is a significant factor contributing to poor learning outcomes during initial VR learning experiences. The aim of this research is to measure the effectiveness of a tutorial at mitigating the novelty effect of iVR learning environments among first-year undergraduate students. The iVR tutorial forms part of an iVR learning experience that involves assembling a personal computer while learning the functions of its main components. A total of 86 students participated in the study, divided into a Control group (without access to the tutorial) and a Treatment group (completing the tutorial). Both groups showed a clear bimodal distribution in previous knowledge, due to previous experience with the learning topics, which gave us an opportunity to compare the effects of the tutorial on students from different backgrounds. Pre- and post-test questionnaires were used to evaluate the experience. The analysis included such factors as previous knowledge, usability, satisfaction, and learning outcomes categorized into remembering, understanding, and evaluation. The results demonstrated that the tutorial significantly increased overall satisfaction, reduced the time required to learn the iVR mechanics, and improved student understanding and evaluation knowledge. Furthermore, the tutorial helped to homogenize group behavior, particularly benefiting students with less previous experience in the learning topic. However, a small number of students still received low marks after the iVR experience, which suggests potential avenues for future research.


1 Introduction

Interest in immersive Virtual Reality (iVR) applications has increased significantly in various fields since high-quality, affordable iVR hardware and software were released onto the market in or around 2015. Even so, iVR has had limited success at improving end-user performance when compared with well-established teaching methods. Positive impacts on learning and training outcomes with iVR applications have been demonstrated in approximately 30% of all education- and training-related studies; conversely, a notable 10% of studies found no clear advantage of iVR over conventional methodologies (Checa and Bustillo 2020a).

Many recent studies have noted high levels of student excitement and engagement during iVR experiences (Di Natale et al. 2020). Increased motivation is often expected to lead to improved learning outcomes, although this correlation is not substantiated in most cases. The question is therefore which factors contribute to the low rates of performance improvement observed during iVR experiences. This limitation appears to have multiple causes, including such issues as misconceived designs, inadequate technical development, and poor user adaptation to the iVR experience (Neumann et al. 2018). One of the issues that contributes to a mismatch between the user and the iVR experience is the novelty effect. The novelty effect in iVR experiences can be defined as the additional cognitive load imposed on users by new, unfamiliar, and unexpected ways of interacting with the iVR environment (Huang 2003). The concept can be extended to the excitement that any new technology awakens in end users, increasing their attention during their first interactions, as with Augmented Reality advertising (Hopp and Gangadharbatla 2016). Novice users tend to focus on controlling the hand-controllers, exploring the iVR environment, and trying out all possible interactions, rather than on the proposed learning tasks. This can result in low engagement with the learning objectives and little knowledge transfer. The novelty effect may also lengthen an iVR experience for novice users and decrease their expected learning gains (Merchant et al. 2014). Likewise, users may be more distracted because of their unfamiliarity with the iVR environment (Huang et al. 2021), and may rate satisfaction and perceived learning more highly, regardless of whether their learning has actually been enhanced (Sung et al. 2021).
All of these factors can negatively affect any evaluation of iVR applications and their suitability for learning (Häkkilä et al. 2018). Another study (Tsay et al. 2019) supported the notion that when the novelty of a new tool wears off, users may experience decreased autonomous motivation and lower academic performance. One possible explanation is that initial curiosity diminishes as users become more familiar with the tool. Building on this idea, Jeno et al. (2019), when evaluating the novelty effect of an m-learning tool on internalization and achievement, suggested that the negative consequences of the novelty effect can be mitigated by incorporating gamification elements.

Recent research has proposed strategies to overcome the novelty effect in iVR learning experiences based on iVR serious games (Miguel-Alonso et al. 2023). One such strategy is the inclusion of an extensive pre-training stage, in which students acquire sufficient skills by interacting with the iVR environment. This strategy is not new, as it has been successfully implemented in video games (Koch et al. 2018). Video games often open with tutorials that counter the novelty effect by familiarizing the user with the handheld controllers, explaining how to use them, and introducing the game mechanics and the interactions with the environment and its objects. These tutorials are designed so that players can acquire skills through practice, without constraints of time, space, or interaction, in a complete environment, in preparation for the real game. If the challenges of the game are to be met, a balance must be struck between the skills that are required and how well each skill needs to be learned. If a player has not developed sufficient skills for a task, the gaming experience suffers, just as it does if the skill is over-developed for the task (Andersen et al. 2012). Different approaches, such as context-sensitive and context-insensitive tutorials (Csikszentmihalyi 1991), should also be carefully considered when designing serious game tutorials.

The design challenges of video game tutorials may differ from those of tutorials for iVR serious games. The unique effects of immersion in the iVR environment, the novel interfaces and handheld controllers, and the potential for cybersickness must all be weighed up (Checa and Bustillo 2020b). When users first enter an iVR environment, they may not know how to use the iVR hand-controllers or how to interact with objects. Additionally, iVR environments can provide a strong sense of immersion, creating a feeling of presence, as if the iVR environment were the real world. This sense of presence, together with the freedom given to users in tutorials, may lead to abrupt movements and interactions that can exacerbate symptoms of cybersickness. Cybersickness can cause disorientation and physical discomfort, thus negatively impacting the iVR experience (Morin et al. 2016). These unique factors should therefore be considered in the design of iVR serious game tutorials to ensure an effective and comfortable learning experience.

Some recent works have investigated how best to design iVR tutorials for learning experiences by comparing different tutorial modalities. One example is the research of Frommel et al. (2017), in which instructions shown on a screen were compared with a context-sensitive tutorial. Those authors concluded that the tutorial should be part of the iVR video game, because it increased engagement and motivation. Another is the research of Zaidi et al. (2019), in which verbal instructions, a developer's tutorial, and a user-centered design tutorial were compared. They concluded that the user-centered design tutorial increased user engagement and understanding of the iVR video game; however, their research was validated with few participants. Tutorials have also been included in training scenarios, for example, by Fussell et al. (2019), who used a tutorial to teach users how to maneuver a plane with successful results: user satisfaction improved, and users found it easier to complete the tasks in the iVR video game. Nevertheless, the efficiency of the tutorial was not measured. Additionally, some users found some tasks difficult, possibly because of the novelty effect, although this was never measured. This research gap on the effects of iVR tutorials on learning rates was therefore the starting point of this study.

Although this research focuses on the use of a VR tutorial to overcome the novelty effect in VR serious games, other approaches may also be effective. While the tutorial-based solution first trains the student to be an expert in VR interaction before the learning task, an alternative solution might be to adapt the learning task and the VR environment to the student's capabilities, moving the student from novice to expert level as learning takes place. The most promising solutions following that strategy, based on AI-driven technologies and models such as agents and expert systems, are discussed in the recent review of Westera et al. (2020). These solutions evaluate user performance and reactions, from human language to facial expressions, to adapt the VR environment to the user's state and capabilities in real time. Another alternative might be to train users by having them play against an AI, so that they learn how to interact with the VR environment. Nebel et al. (2020) tested that solution, comparing 3 forms of social competition: playing against a human competitive agent, playing against an artificial competitive agent, and playing against an artificial leaderboard. The results of the study showed a beneficial effect of adaptive mechanisms on learning performance and efficiency, fewer feelings of shame, and increased empathy and behavioral engagement when facing competitive agents. However, this strategy presents two limitations from a research point of view: (1) it requires extensive modifications to the VR serious game and considerable programming complexity (the studies cited above do not involve VR games), making it difficult to compare new outcomes with those from previous works on this VR learning environment; and (2) it makes it harder to measure the influence of the novelty effect itself, because learning concepts and VR mechanics are acquired simultaneously.

As mentioned, this research therefore began by examining the suitability of tutorials for countering the novelty effect in iVR learning experiences. With that purpose in mind, an iVR learning experience was designed and tested with an extensive sample of students (86), comparing different key performance indicators between a Treatment group, in which an iVR tutorial was used to address the novelty effect, and a Control group, in which that effect was not addressed before the iVR learning experience. The systematic procedure guiding this comparison addresses the main limitations of previous studies on the novelty effect in iVR learning experiences. The procedure included an ad-hoc iVR learning experience design, sizeable Control and Treatment groups, and a wide range of key performance indicators (5 on usability and satisfaction, 2 quantitative performance indicators, and 3 types of knowledge acquisition). Such a systematic methodology has not previously been followed to measure the impact of the novelty effect on student satisfaction and learning performance within iVR learning environments.

The remainder of this paper is organized as follows: Sect. 2 briefly describes the development of the iVR serious game used in this research. Sect. 3 outlines the evaluation process and the methods employed to assess the performance of the learning experience. Sect. 4 presents the results of the learning experiences, comparing the performance of the students who followed the iVR tutorial with that of the students who had no access to it before playing the iVR serious game, and discusses them in relation to recent related works and the limitations of the study. Finally, Sect. 5 presents the conclusions and potential avenues for future research.

2 iVR serious game for PC-assembly

The iVR serious game used in this research was initially developed for an early study on VR-based learning (Checa et al. 2021). In that research, the iVR serious game "PC Virtual LAB" (Checa and Bustillo 2022) was developed to teach computer hardware assembly. The game was systematically validated and can be downloaded from the Steam platform. The aim of the game is to improve learning of basic computer concepts (identification and location of computer components) and to provide students with a tool to deepen their knowledge autonomously in an environment where they can practice and fail. In a first step, this game was used to compare traditional non-immersive learning methodologies with a VR-based learning experience. However, several obstacles identified in iVR educational applications, such as the novelty effect, were not extensively considered in that study. It was thought that incorporating an introductory tutorial into this serious game might overcome those limitations. Both applications, the serious game and the iVR tutorial, are freely available in the public domain. The educational game can be downloaded at: http://3dubu.es/en/virtual-reality-computer-assembly-serious-game; and the tutorial at: http://3dubu.es/virtual-reality-tutorial-learning-experience.

2.1 Tutorial design

The main objective of the tutorial is to let the user practice the game mechanics in a non-paced environment in preparation for the iVR serious game. This objective requires several modules with different types of interaction, each offering tasks that call for specific interactions, so that all the basic iVR interactions are covered. The Cognitive Theory of Multimedia Learning (Mayer 2009) was taken as a foundation to ease understanding of the tutorial tasks and to avoid distractions, since keeping users focused on the tasks improves performance and reinforces learning. The following principles of the Cognitive Theory of Multimedia Learning (adapted for iVR) were considered in the conceptual design phase:

- Multimedia principle: the combined use of graphics and text improves comprehension of the tasks and of how to navigate and control movements. The principle is applied in the tutorial, which shows graphics of the iVR controllers and how to interact with them.

- Signaling principle: learning can be improved when essential information is highlighted. In the tutorial, an assistant robot was programmed to offer visual cues that guide user attention and reduce potential distraction.

- Coherence principle: avoiding irrelevant material improves learning. The tutorial should not overload the environment with distracting information. For this purpose, neutral colors are used and minimal objects are placed on the screen, so that users can concentrate on relevant content.

- Redundancy principle: information is complemented from different sources rather than repeated. In the tutorial, different data sources show complementary, non-duplicated information, which provides users with multiple perspectives and reinforces their understanding.

- Personalization principle: learning is enhanced when an informal rather than a formal narrative style is used. In the tutorial, information is presented to the learner in an informal, conversational style. This more relaxed and relatable narrative style fosters a connection between the learner and the instructional material, increasing engagement and reducing the novelty effect.

In addition, other features of the tutorial, such as context-sensitivity (information is displayed in synchrony with the pace and even the needs of the user), free user movement, and access to additional information, were drawn from the research of Andersen et al. (2012). These elements make the tutorial adaptable to the user's pace and needs, offering a more personalized and immersive learning experience.

Once the conceptual design of the tutorial was complete, its technical development began in Unreal Engine™, a process streamlined by a previously tested and validated framework (Checa et al. 2020). Four sequential modules were designed, as shown in Fig. 1: introduction (Fig. 1A), basic interactions (Fig. 1B), complex interactions (Fig. 1C), and exploration and creative play (Fig. 1D). In the Introduction module, the user gains familiarity with the iVR environment. The second module, Basic interactions, introduces the user to the iVR handheld controllers and headset. The third module, Complex interactions, offers the opportunity to practice and master different interactions. Finally, the last module, Exploration and creative play, consolidates all the previous stages: the user can explore and freely practice all the mechanics introduced before. A more detailed presentation of this tutorial can be found in Miguel-Alonso et al. (2023).

2.2 Serious game design

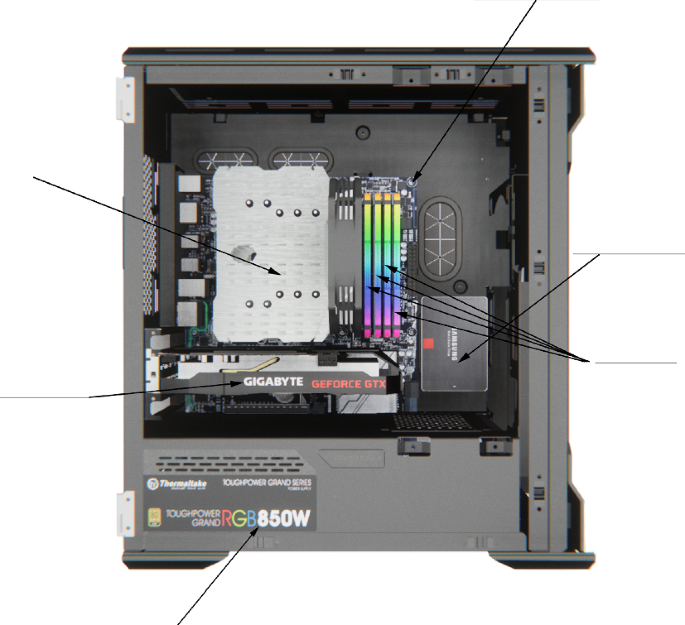

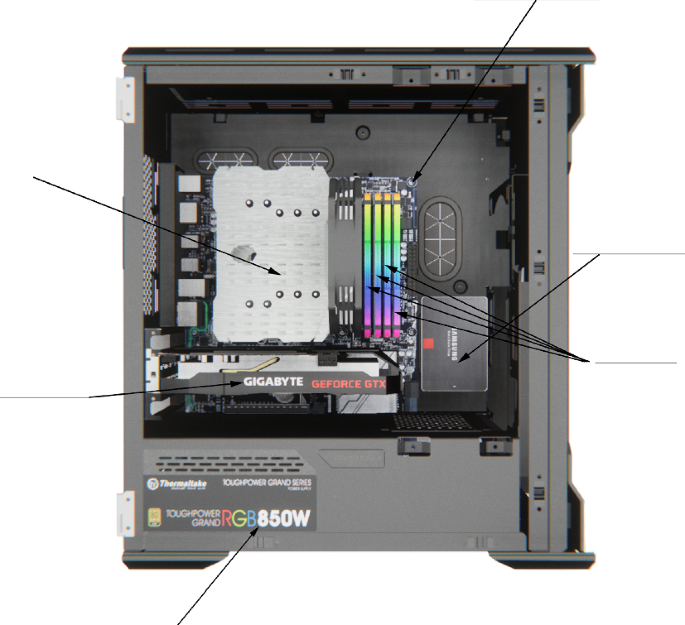

After completing the tutorial, the users move on to the serious game "PC Virtual LAB", as shown in Fig. 2A. This serious game was designed so that students progress through the following stages:

- Introduction: the student selects an age and gender by grabbing the responses and placing them on the wall, as shown in Fig. 2B. This step serves as a brief initial introduction to the iVR environment.

- Guided assembly: the student, under the guidance of the virtual teacher, has to assemble a desktop computer, as shown in Fig. 2C. This is a fixed step-by-step process in which students receive continuous feedback and help from the robot assistant to learn where to place each component: motherboard, CPU and its cooler, power supply, graphics card, hard disk, and RAM modules.

- Placement: the student is shown a motherboard and has to associate each part with its proper name, as shown in Fig. 2D.

- Airflow: the user has to position the desktop computer fans properly for efficient heat removal from the casing, as shown in Fig. 2E.

- Unguided assembly: the student has to assemble a desktop computer, as in the Guided assembly stage. However, the difficulty is increased, as the user has to select compatible components (with more than one model for each component), position them in the proper place, and connect them in a sensible order. There is a wide range of hardware to choose from, and the desktop computer to be built must meet certain requirements (Fig. 2F), which are shown on the whiteboard. By placing a component within an area of the assembly table, the student can check its specifications. In this way, the student can select the correct components and then assemble the hardware in the case.

3 Learning experience

In this section, the design of the learning experience is first explained. A description of the sample of participants with whom the experience was validated then follows.

3.1 Learning experience design

The learning experience was designed with a Treatment group and a Control group for comparison. The Control group tested the iVR serious game without the tutorial, whereas the Treatment group practiced with the iVR tutorial before testing the game. The structure of the experience is shown in Fig. 3. The iVR experience was conducted in three phases: (1) pre-test; (2) iVR learning experience and usability and satisfaction survey; and (3) post-test. An interval of one week separated each phase.

The pre-test included 13 questions, as can be seen in Appendix 1: 2 questions on previous experience of assembling a desktop computer, 1 on previous experience with iVR environments, and 10 on knowledge of computer components. Of the latter, 8 were Multiple Choice Questions (MCQ), one was an open-ended question in which respondents rated their own computer knowledge, and the last consisted of an image in which students had to identify and install 6 desktop computer components. The 8 MCQ and the open-ended question were worth 1 point each, and the image was worth 6 points, for a total of 15 points.
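As an illustration of the scoring scheme described above, the sketch below computes a raw pre-test score out of 15 and rescales it to a 0–10 mark. The function names are hypothetical; the linear rescaling to 10 is an assumption (the text reports marks such as the 6.50 cut-off but does not state the conversion), and scoring the image task as up to 6 points assumes 1 point per component.

```python
from typing import Sequence

# Point values stated in the text: 8 MCQ worth 1 point each, one open-ended
# question worth 1 point, and one image task worth 6 points.
PRETEST_MAX = 8 * 1 + 1 + 6  # 15 points


def pretest_score(mcq_correct: Sequence[bool], open_points: float, image_points: float) -> float:
    """Raw pre-test score on the 0-15 scale (hypothetical helper)."""
    if len(mcq_correct) != 8:
        raise ValueError("expected 8 MCQ answers")
    if not 0 <= open_points <= 1:
        raise ValueError("open-ended question is worth at most 1 point")
    if not 0 <= image_points <= 6:
        raise ValueError("image task is worth at most 6 points")
    # True counts as 1 point and False as 0 when summed.
    return sum(mcq_correct) + open_points + image_points


def to_ten_point_scale(raw: float, max_raw: float = PRETEST_MAX) -> float:
    """Assumed linear rescaling of a raw score to a 0-10 mark."""
    return 10 * raw / max_raw


# Example: 6 correct MCQ, full marks on the open question, 4 of 6 components.
raw = pretest_score([True] * 6 + [False] * 2, 1, 4)
print(raw, round(to_ten_point_scale(raw), 2))  # 11 7.33
```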

A week after the pre-test, the iVR learning experience took place. Participants were randomly assigned to two groups. Those in the Treatment group played the tutorial and then the 5 levels of the 'Assemble a computer' game; those in the Control group played only the 5 levels of the game, in the same order as the Treatment group. Immediately after the experience, participants completed a satisfaction and usability survey, which can be consulted in Appendix 2. In the survey, participants rated their level of cybersickness and 5 aspects of the satisfaction and usability of the iVR tutorial and the serious game through 21 MCQ on a 1-to-5 Likert-type scale. That scale was then converted to a 1-to-10 scale for a more precise analysis of the results. A value of 0 denoted an unanswered question and was therefore excluded from the analysis.
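The text does not state how the 1-to-5 responses were mapped onto the 1-to-10 scale. The sketch below assumes a linear map that sends 1 to 1 and 5 to 10, and treats 0 as "unanswered" so that it is skipped rather than averaged in; the function names are hypothetical.

```python
from typing import Iterable, List, Optional


def likert_to_ten(response: int) -> Optional[float]:
    """Convert a 1-5 Likert answer to the 1-10 scale; 0 (unanswered) gives None."""
    if response == 0:
        return None  # unanswered items are excluded from the analysis
    if not 1 <= response <= 5:
        raise ValueError(f"invalid Likert response: {response}")
    # Assumed linear map: 1 -> 1 and 5 -> 10 (each step adds 2.25).
    return 1 + (response - 1) * 9 / 4


def mean_of_answered(responses: Iterable[int]) -> Optional[float]:
    """Mean of the converted answers, skipping unanswered items."""
    converted: List[float] = []
    for r in responses:
        value = likert_to_ten(r)
        if value is not None:
            converted.append(value)
    return sum(converted) / len(converted) if converted else None


print([likert_to_ten(r) for r in range(1, 6)])  # [1.0, 3.25, 5.5, 7.75, 10.0]
print(mean_of_answered([5, 0, 1]))  # 5.5
```

Skipping the 0 values matters: averaging them in as zeros would pull every component mean downwards for participants who left items blank.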

According to Tcha-Tokey et al. (2016), the 5 aspects to consider in a usability and satisfaction survey of an iVR experience are engagement, presence, flow, immersion, and skill. Shernoff (2012) explained that engagement is concentration and participation in a task or an experience and is related to adaptive learning. Presence is the sense of being in the virtual environment, which is perceived even though the user knows that the environment is unreal, as Slater (2018) also explained. According to Csíkszentmihályi (1996), flow is the optimal psychological state in which the user feels immersed and concentrated and is enjoying the experience. As proposed by Slater and Wilbur (1997), immersion entails the suspension of disbelief and full engagement with the virtual environment. As it is evoked through the arousal of user sensations, full immersion depends on how effectively the stimuli provided by the HMDs simulate real-world events. Finally, skill is the knowledge that users acquire after mastering actions within the virtual environment. The number of questions evaluating each aspect was: 3 for engagement, 5 for presence, 4 for immersion, 3 for flow, and 6 for skill. Some of these questions were extracted and adapted from the System Usability Scale (7 questions), NASA-TLX (8 questions), and the User Experience Questionnaire (6 questions). Furthermore, the usability and satisfaction survey included 3 open questions, in which participants could comment on the positive and negative aspects of the iVR experience and suggest improvements.
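The per-component marks can be obtained by averaging the converted item scores of each component. The sketch below uses the item counts given above (3 + 5 + 4 + 3 + 6 = 21); the assumption that items are ordered component by component, and the helper name, are hypothetical and not taken from the survey in Appendix 2.

```python
from statistics import mean
from typing import Dict, Optional, Sequence

# Items per component as stated in the text (21 in total).
ITEMS_PER_COMPONENT: Dict[str, int] = {
    "engagement": 3,
    "presence": 5,
    "immersion": 4,
    "flow": 3,
    "skill": 6,
}


def component_means(item_scores: Sequence[Optional[float]]) -> Dict[str, Optional[float]]:
    """Average one participant's converted item scores per component.

    Assumes (hypothetically) that the 21 items are ordered component by
    component, in the order of ITEMS_PER_COMPONENT. None marks an
    unanswered item and is skipped.
    """
    expected = sum(ITEMS_PER_COMPONENT.values())
    if len(item_scores) != expected:
        raise ValueError(f"expected {expected} item scores, got {len(item_scores)}")
    result: Dict[str, Optional[float]] = {}
    start = 0
    for name, count in ITEMS_PER_COMPONENT.items():
        answered = [s for s in item_scores[start:start + count] if s is not None]
        result[name] = mean(answered) if answered else None
        start += count
    return result
```

Group-level figures such as those in Table 1 would then be the mean and standard deviation of these per-participant component marks within each group.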

Finally, the third stage of the iVR experience, the post-test, is summarized in Fig. 3 and can be consulted in detail in Appendix 3. The post-test was performed a week after the iVR learning experience. It consisted of 15 MCQ, 3 open questions, and 2 images: a desktop computer and a motherboard. The two image questions referred to two levels of the iVR serious game in which users had performed the same tasks. The 15 MCQ and the 3 open-ended questions were worth 1 point each, and each image question was worth 6 points, for a total of 30 points. The post-test questions were significantly more complex than those in the pre-test and were used to evaluate the knowledge that each participant acquired. The post-test questions also differed from the pre-test questions, to prevent learning from the responses to the pre-test.
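The post-test point scheme (15 + 3 one-point items plus two 6-point image questions, 30 points in total) can be checked with a short sketch like the one below. The helper names, the possibility of partial credit on open questions, and the linear rescaling to a 0–10 mark are assumptions for illustration only.

```python
from typing import Sequence

# Point values stated in the text.
MCQ_ITEMS, OPEN_ITEMS, IMAGE_ITEMS, IMAGE_POINTS = 15, 3, 2, 6
POSTTEST_MAX = MCQ_ITEMS + OPEN_ITEMS + IMAGE_ITEMS * IMAGE_POINTS  # 30 points


def posttest_score(mcq: Sequence[float], open_ended: Sequence[float], images: Sequence[float]) -> float:
    """Raw post-test score (0-30): MCQ and open items up to 1 point, images up to 6."""
    if (len(mcq), len(open_ended), len(images)) != (MCQ_ITEMS, OPEN_ITEMS, IMAGE_ITEMS):
        raise ValueError("unexpected number of items")
    if any(not 0 <= p <= 1 for p in [*mcq, *open_ended]):
        raise ValueError("MCQ and open-ended items are worth at most 1 point")
    if any(not 0 <= p <= IMAGE_POINTS for p in images):
        raise ValueError("image questions are worth at most 6 points")
    return sum(mcq) + sum(open_ended) + sum(images)


def posttest_mark(raw: float) -> float:
    """Assumed linear rescaling of the raw score to a 0-10 mark."""
    return 10 * raw / POSTTEST_MAX


# Hypothetical answers: 12 correct MCQ, partial credit on one open question.
raw = posttest_score([1] * 12 + [0] * 3, [1, 0.5, 0], [6, 3])
print(raw, posttest_mark(raw))  # 22.5 7.5
```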

The setup of the experience consisted of three HTC Vive Pro Eye Head Mounted Displays (HMDs) connected to workstations equipped with an Intel Core i7-10710U processor, 32 GB of RAM, and an NVIDIA RTX 2080 graphics card (see Fig. 4), so that three participants at a time could perform the experience. In addition, the health and safety regulations in force at the time of the iVR experience were closely followed.

3.2 Description of the sample

The participants in this study were students following either a Media Studies Bachelor's Degree or a Video Game Design Bachelor's Degree at the University of Burgos (Spain). The sample was divided into two groups, following the proposals of Mayer (2014), with one acting as the Control group for reference. Fig. 5A shows the sample of 86 participants, of whom 40 were randomly assigned to the Treatment group (N = 40; 40% female, light gray in Fig. 5A) and 46 to the Control group (N = 46; 35% female, dark gray in Fig. 5A). Both groups presented similar features with regard to group size, gender, age, and previous iVR experience, comparable to recent studies on iVR learning experiences (Checa et al. 2021). In the Treatment and Control groups, respectively, participants came from high school studies (73% and 70%) and vocational training (20% and 22%), while a minority (7% and 8%) had not specified their stage of education. Only 40% of the Treatment group and 50% of the Control group had some prior iVR experience. Of these, 31% and 43% had used iVR with a smartphone, 38% and 35% with an HMD, and 19% and 13% with both systems. Most of them had tried it in video games (69% and 65%), 12% and 22% in serious games, and the rest in 360-degree videos and tourism. As regards experience of assembling a desktop computer, 25% and 22% of participants had no prior experience; of the remainder, 37% and 44% had seen it once in class, 6% and 14% had seen friends doing it, 37% and 36% had assembled their own computer, and 20% and 6% had seen it in shops.

In summary, the 86 participants were randomly divided into Treatment and Control groups with similar sample size, gender, age, and previous iVR experience. Most participants came from high school studies, and the majority had some very basic previous experience of assembling a desktop computer.

According to the pre-test results, the distribution of marks in both groups was bimodal: each group included some students with highly positive results and others with significantly worse results. The violin plot in Fig. 5B shows the distribution of the Treatment and Control group pre-test marks. A violin plot combines a box plot and a kernel density plot to show the distribution of a single variable together with its probability density; it was used in this research to visualize the data clearly, as both groups were similar.
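The density outline of a violin plot is a kernel density estimate. The minimal sketch below evaluates a Gaussian kernel density estimate on a grid of marks; the default bandwidth follows Scott's rule of thumb, which is an assumption, since the text does not say which plotting tool or bandwidth was used for Fig. 5B. The example marks are hypothetical.

```python
import math
from statistics import stdev
from typing import List, Optional, Sequence


def gaussian_kde(data: Sequence[float], grid: Sequence[float],
                 bandwidth: Optional[float] = None) -> List[float]:
    """Gaussian kernel density estimate of `data`, evaluated at each grid point.

    If no bandwidth is given, Scott's rule of thumb (h = s * n ** (-1/5)) is used.
    """
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    h = bandwidth if bandwidth is not None else stdev(data) * n ** (-1 / 5)
    if h <= 0:
        raise ValueError("bandwidth must be positive")
    norm = 1.0 / (n * h * math.sqrt(2 * math.pi))
    # Sum one Gaussian bump per observation at every grid point.
    return [norm * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in data) for x in grid]


# Hypothetical marks with two clusters, evaluated at a few points.
marks = [2.0, 2.5, 3.0, 8.0, 8.5, 9.0]
density = gaussian_kde(marks, [2.5, 5.5, 8.5], bandwidth=0.5)
print(density[0] > density[1] < density[2])  # True
```

A violin plot mirrors this curve on both sides of a vertical axis; a dip between two peaks, as in this toy example, is what makes a bimodal distribution visible.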

As the pre-test marks showed a bimodal statistical distribution (Fig. 5B), both the Treatment and the Control groups were divided into two sub-groups using a cut-off mark of 6.50. Three reasons were considered when selecting this threshold: 1) a bottleneck can be identified at around 6.50 in both distributions (Fig. 5B); 2) roughly half of the students in each group fall on either side of this threshold; and 3) as the knowledge questionnaire is a multiple-choice test, lucky guesses must be taken into account, since they can shift the mean value (5) upwards. Fig. 6 shows the Treatment and Control groups with their balanced sub-groups: Group A, with pre-test marks of 6.50 or more, and Group B, with pre-test marks lower than 6.50. After this process, the violin plots of the four new groups presented the unimodal distributions shown in Fig. 6B.
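The sub-group assignment is a simple threshold rule. The sketch below applies the 6.50 cut-off stated above, with marks of exactly 6.50 going to Group A; the participant IDs and the helper name are hypothetical.

```python
from typing import Dict, List, Mapping

CUTOFF = 6.50  # pre-test cut-off mark stated in the text


def split_by_pretest(marks: Mapping[str, float], cutoff: float = CUTOFF) -> Dict[str, List[str]]:
    """Assign participant IDs to sub-group A (mark >= cutoff) or B (mark < cutoff)."""
    groups: Dict[str, List[str]] = {"A": [], "B": []}
    for participant, mark in marks.items():
        groups["A" if mark >= cutoff else "B"].append(participant)
    return groups


# Hypothetical participant IDs and pre-test marks for one group.
treatment = {"t01": 8.2, "t02": 6.5, "t03": 4.1, "t04": 6.49}
print(split_by_pretest(treatment))  # {'A': ['t01', 't02'], 'B': ['t03', 't04']}
```

Applying the same function separately to the Treatment and Control marks yields the four sub-groups; checking `len(groups["A"]) / len(marks)` is one way to verify the second criterion (roughly half on each side).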

4 Results and discussion

In this section, the results of the usability and satisfaction survey, performance, and learning improvement (based on pre-/post-test comparisons) are presented, analyzed, and compared with similar recent studies.

An issue to consider before the analysis is the variability of the results. Such variability is frequently observed in current research on iVR in education and is often attributed to the constraints of small sample groups. A significant effort was made in this research to test the proposed methodology with an extensive sample of students: all groups had 20 or more participants, with the sole exception of one (17). However, even with this sample size, the variability of the results was notable. A minimum of 20 participants per group is recommended in some recent reviews of iVR in education (Checa and Bustillo 2020a, b), although many studies use smaller groups. One example is the study of Parong and Mayer (2021), in which the effects of iVR in learning contexts were analyzed in direct comparison with traditional desktop slideshow presentations. Beyond a mere qualitative examination, their study incorporated biometric measures to assess learner experiences. They proposed a case study with 61 participants divided into 4 groups, none of which reached the minimum of 20 participants. Additionally, their results showed high standard deviations for learning outcomes, in the range of 25–40% and 44–65% of the mean values for retention and transfer scores, respectively. Likewise, the work of Selzer et al. (2019) on spatial cognition and navigation in iVR was tested with 3 groups of 14 participants each, and the standard deviations of learning outcomes were high (28–48% of the mean value). In view of the above, and given the nature of the experiment, in which the only difference between the two iVR-based learning methods was a 5-min tutorial, no statistically significant differences were found with standard tests (Student's t-test or ANOVA). A similar observation was made by Hopp and Gangadharbatla (2016) when evaluating the novelty effect in Augmented Reality advertising environments.
Therefore, alternative statistical procedures, such as the chi-square test, Cronbach's alpha, and the Kuder–Richardson formula, were applied wherever possible.
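For readers unfamiliar with the chi-square test of independence, the sketch below computes Pearson's statistic for a contingency table and, for the 1-degree-of-freedom case, its p-value through the identity P(χ² > x) = erfc(√(x/2)). The counts and helper names are hypothetical and do not come from this study. Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction by default for 2 × 2 tables, so its statistic would differ from this uncorrected version.

```python
import math
from typing import Sequence, Tuple


def chi_square_independence(table: Sequence[Sequence[float]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            if expected == 0:
                raise ValueError("a row or column total is zero")
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def p_value_one_dof(stat: float) -> float:
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    # With 1 dof the statistic is Z**2, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical 2 x 2 counts (e.g. rows: Treatment/Control, columns: pass/fail).
stat, dof = chi_square_independence([[10, 20], [20, 10]])
print(round(stat, 3), dof, round(p_value_one_dof(stat), 4))
```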

4.1 Satisfaction and usability

After the iVR experience, all participants completed the satisfaction and usability survey. Its reliability was assessed with Cronbach's alpha, which yielded a value of 0.84, indicating a high level of internal consistency among the survey items. Table 1 shows the mean and standard deviation of each component (engagement, presence, flow, immersion, and skill) for the Treatment and Control groups, as well as the difference in marks between the two groups. The evaluation was performed on a scale from 1 to 10, and the highest results of both groups are shown in bold. These marks are also shown in the radar plot in Fig. 7.
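Cronbach's alpha compares the sum of the item variances with the variance of the total scores: α = k/(k − 1) · (1 − Σσᵢ²/σ²_total) for k items. The sketch below implements it together with the Kuder–Richardson formula 20, which is the special case for 0/1 items (each item variance becomes p(1 − p)). The example data are hypothetical; the 0.84 reported above comes from the study's own survey responses, which are not reproduced here.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (complete responses only)."""
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least two items")
    item_variances = [pvariance(item) for item in zip(*scores)]
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def kr20(scores: Sequence[Sequence[int]]) -> float:
    """Kuder-Richardson formula 20 for dichotomous (0/1) items."""
    n, k = len(scores), len(scores[0])
    # For a 0/1 item, p * (1 - p) is its population variance.
    pq = sum((sum(item) / n) * (1 - sum(item) / n) for item in zip(*scores))
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - pq / total_variance)


# Hypothetical 0/1 answers of four respondents to three items.
answers = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(cronbach_alpha(answers), kr20(answers))  # 0.75 0.75
```

Population variances are used throughout so that KR-20 and alpha coincide exactly on binary data; using sample variances consistently would give the same alpha, since the n/(n − 1) factors cancel.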

As indicated in Table 1 and Fig. 7, both groups generally reported high levels of satisfaction with the iVR experience (6.50–8.90), although with high standard deviations (1.68–2.33). The Treatment group reported higher satisfaction than the Control group (by 3.4–5.4%) in all components except immersion (1.5% lower). However, these differences between groups were not statistically significant. The high marks indicate that both iVR learning experiences stimulated the students, a general result common to many iVR educational solutions (Checa and Bustillo 2020a). Moreover, the tutorial was associated with higher satisfaction and usability levels, as in the research of Zaidi et al. (2019), who concluded that a tutorial improved usability because it helped users understand in advance what to do. Frommel et al. (2017) also studied the effects of tutorials and pointed out that tutorials improved player satisfaction, regardless of the complexity of the iVR experience. Finally, the high standard deviations of all components underline the need for large samples in iVR experiences, given how differently people perceive these experiences. The standard deviations of the Control group were about 15% higher, which could be attributed to its larger size (the Control group was about 15% larger than the Treatment group).
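To make the reasoning about small percentage differences and large standard deviations concrete, the sketch below computes the relative difference between two group means (assuming, since it is not stated explicitly, that the percentages above are relative to the Control mean) and Cohen's d, a standardized effect size that is not part of the analyses described here. Function names and all numbers are hypothetical.

```python
from math import sqrt


def relative_difference(treatment_mean: float, control_mean: float) -> float:
    """Difference between group means as a percentage of the Control mean."""
    if control_mean == 0:
        raise ValueError("the Control mean must be non-zero")
    return 100.0 * (treatment_mean - control_mean) / control_mean


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Cohen's d using the pooled sample standard deviation of two groups."""
    pooled = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled


# Hypothetical component marks on the 1-10 scale.
print(f"{relative_difference(8.0, 7.6):.1f}%")            # 5.3%
print(f"d = {cohens_d(8.0, 2.0, 40, 7.6, 2.0, 46):.2f}")  # d = 0.20
```

In this hypothetical case, with standard deviations of about 2 points, a 5% difference in means corresponds to d of roughly 0.2, conventionally a small effect, which is consistent with the absence of statistical significance reported above.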

Engagement and skill were the components with the highest marks. High engagement levels are to be expected in current iVR learning experiences because of the novelty effect, as also noted by Allcoat and von Mühlenen (2018). Engagement and skill levels were, respectively, 3.4% and 4.2% higher in the Treatment group than in the Control group. This result is consistent with the research of Miguel-Alonso et al. (2023), in which satisfaction and usability were assessed in three groups with different levels of knowledge of the iVR learning experience. As they explained, the high skill levels could be due to the graded difficulty of the actions during the tutorial, which may help users avoid boredom and master the mechanics of the iVR environment. The tutorial therefore appeared to enhance the skills of the participants, enabling them to overcome the challenges presented by the iVR learning experience. The difference in skill marks between the Treatment and Control groups underlines the relevance of this component to the analysis.

The marks for immersion and presence were slightly lower than those for engagement and skill in both groups. In the Treatment group, the marks and standard deviations of these two components were closely aligned. Compared with the Control group, presence was 5.4% higher in the Treatment group, whereas immersion was 1.5% lower. Presence could have been affected by some unnatural actions, as proposed by Rafiq et al. (2022), since naturalness is a characteristic of presence. Some participants reported problems when trying to grab some objects at the Placement stage, and others said that the difficulty of this action made them feel frustrated. Although both groups had to perform this action, the tutorial prepared students in advance for this specific challenge, which may have reduced their frustration and increased their presence marks. A higher feeling of presence in a treatment group was also reported by Schomaker and Wittmann (2021), who explained that presence was higher among participants who had had similar iVR experiences beforehand (as the Treatment group participants had, through the tutorial). The slightly higher immersion value in the Control group could be explained by the high standard deviation of this component.

Flow was the component with the lowest marks, although the Treatment group rated it more highly (by 4.2%) than the Control group. According to Ruvimova et al. (2020), flow occurs when the user is completely immersed in the iVR experience while meeting its challenges, and distractions may hinder concentration during the experience, affecting flow. In our case, as previously explained, most of the participants reported difficulties when grabbing objects during the Placement stage, which distracted them from the learning task. This was an obstacle to full enjoyment of the experience, even though participants had sufficient skills to complete all the proposed tasks. This frustration appeared to be reduced in the students who completed the tutorial, as they gave higher marks to flow. In future work, some improvements to the iVR learning experience will be needed so as not to hinder participant enjoyment and learning, which would presumably increase the marks for flow.

Finally, 18 of the 86 participants (10 in the Treatment group and 8 in the Control group) reported cybersickness. Most of them reported very mild symptoms, and only 2 (1 in each group) reported moderate symptoms. All participants finished the experience regardless of the intensity of their cybersickness, and no differences in satisfaction, performance, or learning were observed between these students and those without cybersickness symptoms. All of them were therefore included in the research, with no distinction between students with and without symptoms, given the small number of participants with cybersickness and its negligible effect on performance. Other authors, such as Smith and Burd (2019), followed the same procedure when cybersickness symptoms were mild.

4.2 Performance

As students progressed through the Airflow and Unguided assembly stages, presented in Sect. 2.2, the time spent and the failures were recorded for each user. Both variables were used to evaluate user performance and to measure the influence of the iVR tutorial. Table 2 summarizes the mean time spent on each stage of the iVR serious game and the average number of mistakes per student. For a detailed analysis, both the Control and the Treatment groups were split into groups A and B (higher and lower pre-test marks, respectively), as presented in Sect. 3.2. The lowest values are highlighted in bold.

As Table 2 shows, the Treatment group, on average, spent less time (19–37% less) on the first 2 stages (Introduction and Guided assembly), about the same time on the third and fourth stages (Placement and Airflow), and up to 23% (80 s) more on the final stage (Unguided assembly) of the iVR serious game. This suggests that students in both groups, A and B, acquired basic skills within the iVR environment through the tutorial, which enabled them to progress more swiftly through the initial stages of the serious game without encountering knowledge difficulties. However, these skills proved insufficient to tackle the challenges of the last 3 stages, which depend more on knowledge than on skills. During the intermediate stages, Group A of the Control group and Group B of the Treatment group progressed slightly faster than their counterparts, so there were no significant average differences between the Control and Treatment groups. In the last and most complex stage, both Treatment groups, A and B, were noticeably slower than the Control group. This may be due to the lower previous knowledge of the Treatment groups, reported in Sect. 3.2. The students might therefore have felt insecure, possibly prompting them to search for additional information on computer components within the iVR environment and thereby requiring more time to complete that stage. The increase in time observed in the Treatment group could also be explained by the findings of Tsay et al. (2019), who noted that when the novelty effect disappeared, autonomous motivation decreased and academic performance could also decline. This result has also been reported by Rodrigues et al. (2022) among STEM learners in introductory programming courses. Completing the tutorial might have caused the novelty effect to fade, resulting in a slight decrease in motivation and performance and prolonging the duration of the experience.

In the last two stages, the Treatment group made fewer mistakes than the Control group (11% fewer in the last stage). Treatment group B had lower marks than Control group B only in the Airflow stage. It could be inferred that completing the tutorial helped students make fewer mistakes when faced with an autonomous self-learning task, although this conclusion warrants further scrutiny. The most interesting result may arise from comparing the time spent on the last two stages with the errors made. Figure 8 plots the time each student required to perform those last two stages against the number of errors committed. One immediate observation is that time appears to be the main factor affecting student performance: students who spent more time on the iVR game made fewer errors, at least in these brief educational experiences. Dai et al. (2022) reached the same conclusion in their research, finding that long educational iVR experiences are required for high learning rates and complex educational goals. Consistently, the Treatment groups took longer to perform these last two stages and, on average, also made fewer mistakes.
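The time-versus-errors relationship plotted in Fig. 8 can be summarized with Pearson's correlation coefficient. The following Python sketch is illustrative only: no correlation coefficient is reported in this section, and the function name, times, and error counts are invented. A negative r indicates that students who spent longer made fewer errors; it measures association, not causation.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two paired samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Hypothetical time (s) spent on the last two stages and errors committed.
times = [420, 510, 380, 600, 455]
errors = [5, 3, 6, 1, 4]
print(f"r = {pearson_r(times, errors):.2f}")
```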

4.3 Learning improvement

The assessment of learning improvement involved a comprehensive analysis of both pre-test and post-test results, to elucidate the interplay between each student's prior knowledge and the impact of the iVR experience. The internal consistency of the pre-test and the post-test was assessed with the Kuder-Richardson formula (KR-20), giving values of 0.78 and 0.76, respectively. These values indicate acceptable inter-item consistency, supporting the reliability of the assessment.
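For dichotomously scored (right/wrong) items, KR-20 replaces each item variance in Cronbach's alpha with p(1 - p), where p is the proportion of students answering the item correctly. A minimal Python sketch, assuming a students x items matrix of 0/1 scores; the function name and the answers are hypothetical, not the study's tests.

```python
from statistics import pvariance


def kr20(answers: list[list[int]]) -> float:
    """Kuder-Richardson formula 20 for a students x items matrix of 0/1 scores."""
    n, k = len(answers), len(answers[0])
    if k < 2 or any(len(row) != k for row in answers):
        raise ValueError("expected a rectangular matrix with at least two items")
    # Sum of p * q over items, where p is the proportion of correct answers.
    pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in answers) / n
        pq += p * (1 - p)
    # Population variance of total scores, matching the p * q item variances.
    total_var = pvariance([sum(row) for row in answers])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - pq / total_var)


# Hypothetical answers of 4 students to 3 items (1 = correct).
answers = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(f"KR-20 = {kr20(answers):.2f}")  # KR-20 = 0.75
```

With these conventions, KR-20 gives the same value as Cronbach's alpha computed on the same 0/1 matrix with population variances.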

Given the bimodal distribution of the students' prior knowledge, the pre- and post-test marks of the A and B subgroups of both the Treatment and the Control groups were also compared. Table 3 presents the average marks of the 4 groups, the percentage difference between the pre-test and post-test marks of each group, and the variation with respect to the pre-test. The highest results and percentage differences appear in bold.

The marks of Groups A and B in Table 3 appear well separated: each group shows a distinct mean value with a small standard deviation. Post-test marks were noticeably lower than pre-test marks, which is attributed to the greater difficulty of the post-test questions. The experience seems particularly beneficial for students with less previous knowledge (B groups), as it narrows the gap in marks with respect to their peers with greater prior knowledge (A groups). Moreover, a Chi-square test on the distribution of students whose knowledge improved, worsened, or remained unchanged yielded statistically significant differences (p < 0.05). Comparing the groups, the Control group achieved higher marks in all cases, in both the pre-test and the post-test. However, the Control groups fared slightly worse than the Treatment groups (1.30%). Fig. 9 represents these results with violin plots for the four groups and the two tests.
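The Chi-square test of independence compares the observed numbers of students who improved, worsened, or did not change with the numbers expected if the outcome were independent of the group. Below is a minimal, dependency-free Python sketch; the function names and the contingency table are hypothetical (the table is not Table 5), no continuity correction is applied, and the p-value uses the closed-form chi-square survival function for integer degrees of freedom. In practice, `scipy.stats.chi2_contingency` offers the same test (note that it applies Yates' correction to 2 x 2 tables by default).

```python
from math import erfc, exp, gamma, sqrt


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    # Closed forms for df = 1 and df = 2, then the recurrence
    # Q(x; k + 2) = Q(x; k) + (x/2)**(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    k = 1 if df % 2 else 2
    q = erfc(sqrt(x / 2)) if k == 1 else exp(-x / 2)
    while k < df:
        q += (x / 2) ** (k / 2) * exp(-x / 2) / gamma(k / 2 + 1)
        k += 2
    return q


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test of independence (no continuity correction).

    Returns (statistic, degrees of freedom, p-value) for an r x c table.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if n == 0 or 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs a non-zero total")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


# Hypothetical counts: rows = Control, Treatment;
# columns = improved, worsened, unchanged.
table = [[18, 20, 8], [24, 9, 7]]
stat, df, p = chi_square_independence(table)
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.3f}")
```

The approximation behind this test assumes reasonably large expected counts (a common rule of thumb is at least 5 per cell); when that fails, an exact test is the usual fallback.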

Regarding the two B groups, Fig. 9 shows that most of the students in the Treatment group (represented in orange) performed slightly better than those in the Control group, underlining the relevance of the tutorial. However, the long tails of the Control group violin plots for the post-test remained similar to those of the pre-test marks for both groups, A and B. This suggests that some students in the Control group did not fully capitalize on the iVR experience, especially in comparison with the Treatment group students, who had gained previous experience of the iVR environment by completing the iVR tutorial. In Treatment group A, the tutorial seemed to contribute to the homogenization of students' marks: the marks of that group equaled those of the Control group, despite its lower previous knowledge of the learning topic.

Thus, although the complete iVR learning experience improved learning results, this research highlights the significant influence of previous knowledge on them. This finding is in line with the results of Dengel and Magdefrau (2020), who showed that previous knowledge was a predictor of learning outcomes in iVR serious games. Lui et al. (2020) may have reached a similar conclusion when testing an iVR learning experience and comparing various previous knowledge levels and positions. In their research, the control group, which performed the learning experience on a desktop, gained more knowledge than the iVR group; the authors suggested the cognitive overload inherent in iVR as one potential reason. However, more positive emotions were noted in the iVR group, which can be explained by its higher presence. In our study, the Treatment group likewise rated presence higher than the Control group but scored lower in the post-test, which may be explained by its more limited previous knowledge, leading to higher cognitive overload in the tasks where previous knowledge was essential.

Post-test questions were classified according to Bloom's taxonomy, as revised by Anderson and Krathwohl (2001), to draw more precise conclusions on Remembering, Understanding, and Evaluation. Remembering questions referred to the identification and recall of concepts learnt in the Bachelor's course; Understanding questions required interpretation, exemplification, classification, summarization, comparison, and explanation; finally, Evaluation questions focused on making judgements based on the information students had learned and on their own conclusions. Table 4 summarizes the average results for each category. In addition, each category was compared with the pre-test averages (pre-test questions could not be split into these 3 categories). The highest marks, comparing the Control and the Treatment groups, are highlighted in bold.
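The classification described above amounts to mapping each post-test question to one category and averaging scores per category. A minimal Python sketch follows; the question identifiers, the `CATEGORY_OF` mapping, the `category_means` function, and the scores are hypothetical, and unanswered questions are assumed to score 0 (an assumption, since the scoring rule for unanswered questions is not stated here).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping of post-test question IDs to revised-Bloom categories.
CATEGORY_OF = {
    "q1": "Remembering",
    "q2": "Remembering",
    "q3": "Understanding",
    "q4": "Understanding",
    "q5": "Evaluation",
}


def category_means(students: list[dict[str, float]]) -> dict[str, float]:
    """Mean score per category over all students; missing answers count as 0."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for answers in students:
        for question, category in CATEGORY_OF.items():
            buckets[category].append(answers.get(question, 0.0))
    return {category: mean(scores) for category, scores in buckets.items()}


# Hypothetical scores (1 = correct, 0 = wrong); the second student skipped q4 and q5.
students = [
    {"q1": 1, "q2": 0, "q3": 1, "q4": 1, "q5": 0},
    {"q1": 1, "q2": 1, "q3": 0},
]
print(category_means(students))
# {'Remembering': 0.75, 'Understanding': 0.5, 'Evaluation': 0.0}
```

With only a few questions per category (e.g., 3 for Evaluation), each skipped question shifts a student's category score by a large step, which is one reason such categories can show long tails and bimodal distributions.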

As shown in Table 4, the Control groups had better marks for Remembering (shown in green in Fig. 10), by between 2 and 9%. However, Treatment group B (represented in orange in Fig. 10) had the highest standard deviation, possibly because some group B students left a notable proportion of questions unanswered. This high standard deviation is similar to that reported by Selzer et al. (2019) on spatial cognition and navigation in iVR, and in both cases it may be due to the small number of questions in the category (Selzer et al. explained their results in terms of a ceiling effect, as their tests had only 10 questions, each with 4 possible answers). The Treatment group B students who seemed to take little advantage of the iVR experience formed the long tail in the violin plot. Their low marks might conceal the fact that, having less previous knowledge of computer components, they felt insecure when responding to the post-test and preferred not to answer the questions rather than risk a wrong answer. Regardless of the reason, in both groups (Control and Treatment), the iVR learning experience had a low impact on a small number of students, whose marks remained especially low; this should be investigated further. These results are in line with the research of Schomaker and Wittmann (2021) on the influence of the novelty effect and type of exposure on memory, who concluded that the less familiar participants were with the iVR environment, the better their memory marks. This may explain why the Control groups, which were less familiar with the iVR environment, had higher marks for Remembering than the Treatment groups. Furthermore, the previous knowledge of the Treatment groups was lower than that of the Control groups, a situation whose consequences are discussed by Paxinou et al. (2022).
Those authors contended that when students have very low previous knowledge of a topic, a traditional introductory lesson after the pre-test and before the iVR experience is advisable, to boost the autonomous learning capabilities of the students. The absence of this introductory lesson might have contributed to the lower Remembering scores of the Treatment groups, given their lower pre-test marks on computer components.

The Treatment groups obtained higher marks for Understanding (by between 11 and 24%). The tutorial emerged as a significant factor in enhancing performance, particularly for students with lower marks, resulting in more homogeneous group behavior. According to Petersen et al. (2022), learning outcomes are positively related to presence in iVR; in their research, presence was strongly influenced by interactivity, which is a particular focus of the tutorial.

Evaluation scores improved slightly in both Treatment group A (6%) and Control group B (6%). In addition, the tutorial nearly removed the bimodal structure of both Treatment group distributions, although some students still struggled to acquire sufficient knowledge (forming a long tail). In the Control groups, by contrast, the bimodal structure of the distribution of results was strengthened. Therefore, the tutorial seemed to contribute to homogenizing the results. However, the Evaluation results in Fig. 10 show some distinctive features: the longest tails, the strongest bimodal distributions, and the lowest marks. These 3 features might also be a consequence of the structure and complexity of the questions: the Evaluation category had only 3 questions, and their higher complexity meant that students with weaker backgrounds simply avoided answering them. The tutorial cannot counteract this cause, so the long tail and low average marks of group B remain. Besides, the strong bimodal distribution of the Control group could also be due to a number of unmotivated students in this group. According to Rafiq et al. (2022), there is a strong correlation between student interest and engagement in iVR experiences, and Table 6 showed that Control group B had the lowest levels of engagement with the iVR educational experience; their lower motivation might therefore have made them less attuned to the experience and to the complex concepts they might otherwise have learnt. Conversely, the higher engagement levels after the tutorial suggest a willingness to work harder in the autonomous iVR educational experience. Despite their lower previous knowledge, the Treatment group students were nevertheless able to acquire the same level of understanding of computer components as students whose previous knowledge was higher than their own.
Finally, the authors concluded that the tutorial was effective, although less influential than the participants' previous knowledge.

4.4 Limitations

While the present study provides valuable insights into the usability, satisfaction, performance, and learning improvement in iVR-based educational experiences related to the avoidance of the novelty effect, it is essential to acknowledge certain limitations that may impact the generalizability and interpretation of the findings.

One of the primary limitations stems from the inherent challenges associated with sample size and variability in iVR research. Despite efforts to include a relatively large sample of students, the high scatter of results observed in both the Treatment and Control groups may be attributed to the diverse perceptions and experiences of participants. The sample size of 20 per group suggested in recent reviews was met by all groups but one (17 participants); however, the observed variability in outcomes underscores the need for larger samples to capture the complexity of human perception in iVR learning experiences.

In addition, the study faced challenges in achieving statistical significance in certain comparisons, possibly due to the limited sample size and the nature of the experimental design. The differences in satisfaction and usability between the Treatment and Control groups, while notable, did not reach statistical significance. Alternative analyses, namely the Chi-square test, Cronbach's alpha, and the Kuder-Richardson formula, were employed to address this limitation. The Chi-square test showed significant differences in knowledge acquisition: Table 5 shows the number of participants whose results improved, worsened, or remained unchanged between the pre-test and the post-test, and the test yielded p < 0.05, indicating a significant difference. Fisher's exact test yielded the same result. This suggests that the tutorial was effective, although its influence was smaller than that of the participants' previous knowledge before the experience. Future research will study the influence of prior knowledge by dividing the groups according to their pre-test scores instead of randomly.
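Fisher's exact test computes the p-value directly from the hypergeometric distribution, which makes it a useful check when some expected counts are small. The minimal Python sketch below handles only a 2 x 2 table, so applying it to Table 5 would require collapsing the outcomes (for example, improved versus not improved); that collapsing, the function name, and the counts in the example are hypothetical choices rather than the procedure reported here. `scipy.stats.fisher_exact` provides the same two-sided 2 x 2 test.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for the table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    observed = prob(a)
    # Sum the probabilities of all tables with the same margins that are no
    # more likely than the observed one (small tolerance for rounding).
    p_value = 0.0
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        p = prob(x)
        if p <= observed * (1 + 1e-7):
            p_value += p
    return min(1.0, p_value)


# Hypothetical counts: rows = Control, Treatment; columns = improved, not improved.
print(f"p = {fisher_exact_2x2(18, 28, 24, 16):.3f}")
```

For a 2 x 3 table such as Table 5, an r x c generalization (the Freeman-Halton extension) would be needed instead.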

Paired-samples t-tests, ANOVA, and ANCOVA were not included in the discussion because they showed no statistically significant differences between the two groups for any of the variables considered, which might be explained by the short duration of the tutorial, the only difference between the two conditions. Table 6 summarizes the results of the one-way ANOVA on the pre-test and post-test (α = 0.05).
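For completeness, the one-way ANOVA F statistic compares between-group and within-group variability. A minimal Python sketch computing F and its degrees of freedom is shown below; the function name, subgroup labels, and marks are hypothetical, and the p-value is left to a statistics package (e.g., `scipy.stats.f_oneway`) or to an F table at α = 0.05.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    means = [mean(g) for g in groups]
    grand = mean([x for g in groups for x in g])
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variability")
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Hypothetical post-test marks (0-10) of the four subgroups.
marks = {
    "Control A": [7.5, 8.0, 6.5, 7.0],
    "Control B": [4.0, 5.5, 3.5, 5.0],
    "Treatment A": [7.0, 7.5, 8.0, 6.0],
    "Treatment B": [4.5, 5.0, 4.0, 6.0],
}
f, df1, df2 = one_way_anova_f(list(marks.values()))
print(f"F({df1}, {df2}) = {f:.2f}")
```

With only two groups, this F equals the square of the pooled-variance Student's t statistic, so the two tests lead to the same conclusion.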

The limited number and high complexity of the Evaluation questions posed challenges for data interpretation. The avoidance of some questions by a subset of participants, possibly due to their complexity, introduces a potential source of bias. Future studies might consider refining the Evaluation questions to ensure clarity and reduce the likelihood of avoidance. Nevertheless, the internal consistency of the pre-test and post-test, assessed with the Kuder-Richardson formula, showed acceptable reliability among the items of both tests, as Table 7 shows.

Despite the overall positive satisfaction levels, a notable limitation is the occurrence of cybersickness reported by a subset of participants. While the number of participants experiencing cybersickness was relatively small, it is crucial to acknowledge its potential impact on the overall participant experience. Future studies may need to incorporate measures to mitigate cybersickness and explore its potential influence on performance and satisfaction.

Furthermore, the influence of previous knowledge on learning outcomes, particularly in the evaluation category, highlights a limitation. The tutorial's effectiveness in homogenizing results and enhancing understanding was evident, but the challenges faced by participants with lower previous knowledge levels remained. The study did not include a traditional introductory lesson before the iVR experience, and future research could explore the impact of such an introduction on participants with varying levels of prior knowledge.

Engagement levels, while generally high, varied among groups, especially in Control group B. The link between engagement, motivation, and performance suggests a need for further investigation into the motivational aspects influencing participant engagement in iVR educational experiences.

Finally, the observed decrease in performance in the final stage of the iVR serious game for the Treatment group raises questions about the duration of the novelty effect. Future research could explore the temporal aspects of participant motivation and performance in iVR learning experiences. Additionally, while the study design follows a pattern observed in related research, it is essential to note that the design of the iVR learning experience itself could be a limitation. Although this approach is in line with methodologies used by other researchers, variations in iVR content, instructional design, and user interface elements can significantly affect learning outcomes and should be considered in future studies.

5 Conclusions

The novelty effect can lead users to focus more on the technology itself, rather than its practical applications or potential benefits. One consequence is that the technology may be under-utilized or not fully leveraged for its intended purpose. In this research, the impact of the most common solution for overcoming the novelty effect in iVR learning applications, the introduction of an iVR tutorial in the learning experience, has been measured.

The iVR tutorial was first designed and then incorporated into an existing iVR learning experience. The purpose of the learning experience was to introduce first-year undergraduate students to the structure and functions of the main components of a computer. The iVR tutorial was organized into 4 modules: a general module to familiarize the user with the iVR environment, a module of basic interactions to introduce the user to the iVR controllers, a module of complex interactions, and a final module based on exploration and creative play to consolidate all the previous modules. The learning experience also included 4 steps: guided assembly of computer components (to learn the proper place of each component), a step to label each component with its name and function, a step to evaluate the role of airflow within the PC, and unguided assembly of a computer. The last step leaves the student total freedom to select any component from a wide range of possibilities and to assemble them in any order in the PC chassis or cage; it then provides final feedback on the number of errors and gives the student an opportunity to correct these mistakes.

This experience has been tested with a large sample of students (N = 86), split between a Control group (no tutorial) and a Treatment group (with a tutorial). Both groups showed a clear bimodal distribution of previous knowledge, due to the previous experience of some students with the learning topic, which allowed further conclusions to be drawn. Usability, satisfaction, and pre- and post-test knowledge were used to evaluate the experience, and knowledge was divided into Remembering, Understanding, and Evaluation for a finer analysis.

The results showed that the iVR tutorial had the following tangible effects:

- increased user satisfaction (especially engagement, flow, and presence) compared with the standard iVR experience, a relevant result because iVR learning experiences without a tutorial have previously shown higher user satisfaction than traditional learning experiences (Checa et al. 2021).

- reduced the time required to learn the iVR mechanics, so that students were more relaxed and able to study the autonomous learning stages carefully, reducing the number of mistakes.

- significantly improved understanding among students who completed the tutorial.

- improved Evaluation knowledge, although to a lesser extent.

- improved the learning rates of the students with the lowest marks, making group behavior much more homogeneous. These improvements might be due to the suitability of the iVR tutorial for improving user skills within the iVR environment, reducing the cognitive load of the experience, and training students to cope with the frustration caused by the most complex mechanics.

- Finally, in both groups (Control and Treatment), the iVR learning experience had a low impact on a small number of students, whose marks remained especially low even after the iVR experience, which could have been due to their poor previous knowledge or even lack of motivation, as outlined in a previous study (Dengel and Magdefrau 2020). Therefore, previous knowledge may have had more influence on learning improvements than the novelty effect.

From the authors' viewpoint, this study provides insight into the factors that should be considered when managing an iVR learning experience. The novelty effect can increase performance and motivation at an initial stage; however, the novelty effect and its associated benefits disappear over time. The findings also suggest that the previous knowledge of students may have a greater effect on learning outcomes than the novelty effect. This highlights the importance of considering individual knowledge levels and the need for personalized approaches when designing effective iVR learning experiences.

Future research will include the design of learning experiences suited to the type of student whose response to traditional iVR experiences can be problematic, as observed in this research, and will extend the number of questions and participants to avoid the ceiling effect and improve data reliability. A longer duration of both the tutorial and the learning experience is also desirable to improve learning rates. In addition, techniques will be considered that allow the novelty effect to re-emerge in a controlled way, to benefit from its positive effects (increased motivation and performance). Artificial intelligence modules that adapt the difficulty of the tasks to the students' learning pace may also be incorporated into longer iVR experiences, together with flexible gamification elements. Finally, it is important to consider the learning effect, which can influence the results of the experience, by dividing the student groups according to their previous background in the topic.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Notes

* refers to questions based on the User Experience Questionnaire.

** refers to questions based on the System Usability Scale.

*** refers to questions based on the NASA-TLX.

References

Allcoat D, von Mühlenen A (2018) Learning in virtual reality: Effects on performance, emotion and engagement. Res Learn Technol. https://doi.org/10.25304/rlt.v26.2140

Andersen E, O’Rourke E, Liu Y-E, Snider R, Lowdermilk J, Truong D, Cooper S, Popovic Z (2012) The impact of tutorials on games of varying complexity. In: Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/2207676.2207687

Anderson LW, Krathwohl DR (2001) A taxonomy for learning, teaching and assessing: a revision of Bloom's taxonomy of educational objectives. Longman, Harlow

Checa D, Bustillo A (2020a) A review of immersive virtual reality serious games to enhance learning and training. Multimed Tools Appl 79(9–10):5501–5527. https://doi.org/10.1007/s11042-019-08348-9

Checa D, Bustillo A (2020b) Advantages and limits of virtual reality in learning processes: Briviesca in the fifteenth century. Virtual Reality 24(1):151–161. https://doi.org/10.1007/s10055-019-00389-7

Checa D, Gatto C, Cisternino D, de Paolis LT, Bustillo A (2020) A framework for educational and training immersive virtual reality experiences. In: de Paolis LT, Bourdot P (eds) Augmented reality, virtual reality, and computer graphics. Springer International Publishing, Berlin, pp 220–228

Checa D, Miguel-Alonso I, Bustillo A (2021) Immersive virtual-reality computer-assembly serious game to enhance autonomous learning. Virtual Reality. https://doi.org/10.1007/s10055-021-00607-1

Checa D, Bustillo A (2022) PC virtual LAB. https://Store.Steampowered.Com/App/1825460/PC_Virtual_LAB/

Csikszentmihalyi M (1991) Flow: the psychology of optimal experience: steps toward enhancing the quality of life. Des Issues 8(1)

Csikszentmihalyi M (1996) Creativity: flow and the psychology of discovery and invention. Harper Collins, New York

Dai C, Ke F, Dai Z, Pachman M (2022) Improving teaching practices via virtual reality-supported simulation-based learning: scenario design and the duration of implementation. Br J Edu Technol. https://doi.org/10.1111/bjet.13296

Dengel A, Mägdefrau J (2020) Immersive learning predicted: presence, prior knowledge, and school performance influence learning outcomes in immersive educational virtual environments. In: 2020 6th International Conference of the Immersive Learning Research Network (iLRN), pp 163–170. https://doi.org/10.23919/iLRN47897.2020.9155084

Di Natale AF, Repetto C, Riva G, Villani D (2020) Immersive virtual reality in K-12 and higher education: a 10-year systematic review of empirical research. Br J Edu Technol 51(6):2006–2033. https://doi.org/10.1111/bjet.13030

Frommel J, Fahlbusch K, Brich J, Weber M (2017) The effects of context-sensitive tutorials in virtual reality games. In: Proceedings of the annual symposium on computer-human interaction in play. pp 367–375. https://doi.org/10.1145/3116595.3116610

Fussell SG, Derby JL, Smith JK, Shelstad WJ, Benedict JD, Chaparro BS, Thomas R, Dattel AR (2019) Usability testing of a virtual reality tutorial. Proc Hum Factors Ergon Soc Annu Meet 63(1):2303–2307. https://doi.org/10.1177/1071181319631494

Häkkilä J, Colley A, Väyrynen J, Yliharju A-J (2018) Introducing virtual reality technologies to design education. Seminar.net 14(1):1–12. https://doi.org/10.7577/seminar.2584

Hopp T, Gangadharbatla H (2016) Novelty effects in augmented reality advertising environments: the influence of exposure time and self-efficacy. J Curr Issues Res Advert 37(2):113–130. https://doi.org/10.1080/10641734.2016.1171179

Huang M-H (2003) Designing website attributes to induce experiential encounters. Comput Hum Behav 19(4):425–442. https://doi.org/10.1016/S0747-5632(02)00080-8

Huang W, Roscoe RD, Johnson-Glenberg MC, Craig SD (2021) Motivation, engagement, and performance across multiple virtual reality sessions and levels of immersion. J Comput Assist Learn 37(3):745–758. https://doi.org/10.1111/jcal.12520

Jeno LM, Vandvik V, Eliassen S, Grytnes J-A (2019) Testing the novelty effect of an m-learning tool on internalization and achievement: A Self-Determination Theory approach. Comput Educ 128:398–413. https://doi.org/10.1016/j.compedu.2018.10.008

Koch M, von Luck K, Schwarzer J, Draheim S (2018) The novelty effect in large display deployments—experiences and lessons-learned for evaluating prototypes. ECSCW Exploratory Papers

Lui M, McEwen R, Mullally M (2020) Immersive virtual reality for supporting complex scientific knowledge: augmenting our understanding with physiological monitoring. Br J Edu Technol 51(6):2181–2199. https://doi.org/10.1111/bjet.13022

Mayer RE (2009) Multimedia learning, 2nd edn. Cambridge University Press, Cambridge

Mayer RE (2014) Computer games for learning: an evidence-based approach. MIT Press, Cambridge

Merchant Z, Goetz ET, Cifuentes L, Keeney-Kennicutt W, Davis TJ (2014) Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: a meta-analysis. Comput Educ 70:29–40. https://doi.org/10.1016/j.compedu.2013.07.033

Miguel-Alonso I, Rodriguez-Garcia B, Checa D, Bustillo A (2023) Countering the novelty effect: a tutorial for immersive virtual reality learning environments. Appl Sci. https://doi.org/10.3390/app13010593

Morin R, Léger P-M, Senecal S, Bastarache-Roberge M-C, Lefèbrve M, Fredette M (2016) The effect of game tutorial: a comparison between casual and hardcore gamers. In: Proceedings of the 2016 annual symposium on computer-human interaction in play companion extended abstracts, pp 229–237. https://doi.org/10.1145/2968120.2987730

Nebel S, Beege M, Schneider S, Rey GD (2020) Competitive agents and adaptive difficulty within educational video games. Front Educ. https://doi.org/10.3389/feduc.2020.00129

Neumann DL, Moffitt RL, Thomas PR, Loveday K, Watling DP, Lombard CL, Antonova S, Tremeer MA (2018) A systematic review of the application of interactive virtual reality to sport. Virtual Reality 22(3):183–198. https://doi.org/10.1007/s10055-017-0320-5

Parong J, Mayer RE (2021) Cognitive and affective processes for learning science in immersive virtual reality. J Comput Assist Learn 37(1):226–241. https://doi.org/10.1111/jcal.12482

Paxinou E, Georgiou M, Kakkos V, Kalles D, Galani L (2022) Achieving educational goals in microscopy education by adopting virtual reality labs on top of face-to-face tutorials. Res Sci Technol Educ 40(3):320–339. https://doi.org/10.1080/02635143.2020.1790513

Petersen GB, Petkakis G, Makransky G (2022) A study of how immersion and interactivity drive VR learning. Comput Educ 179:104429. https://doi.org/10.1016/j.compedu.2021.104429

Rafiq AA, Triyono MB, Djatmiko IW (2022) Enhancing student engagement in vocational education by using virtual reality. Waikato J Educ 27(3):175–188. https://doi.org/10.15663/wje.v27i3.964

Rodrigues L, Pereira FD, Toda AM, Palomino PT, Pessoa M, Carvalho LSG, Fernandes D, Oliveira EHT, Cristea AI, Isotani S (2022) Gamification suffers from the novelty effect but benefits from the familiarization effect: Findings from a longitudinal study. Int J Educ Technol High Educ 19(1):13. https://doi.org/10.1186/s41239-021-00314-6

Ruvimova A, Kim J, Fritz T, Hancock M, Shepherd DC (2020) Transport me away: fostering flow in open offices through virtual reality. In: Proceedings of the 2020 CHI conference on human factors in computing systems. pp 1–14. https://doi.org/10.1145/3313831.3376724

Schomaker J, Wittmann BC (2021) Effects of active exploration on novelty-related declarative memory enhancement. Neurobiol Learn Mem 179:107403. https://doi.org/10.1016/j.nlm.2021.107403

Selzer MN, Gazcon NF, Larrea ML (2019) Effects of virtual presence and learning outcome using low-end virtual reality systems. Displays 59:9–15. https://doi.org/10.1016/j.displa.2019.04.002

Shernoff DJ (2012) Engagement and positive youth development: Creating optimal learning environments. In: Harris KR, Graham S, Urdan T (eds) The APA educational psychology handbook. American Psychological Association, Washington

Slater M (2018) Immersion and the illusion of presence in virtual reality. Br J Psychol 109(3):431–433. https://doi.org/10.1111/bjop.12305

Slater M, Wilbur S (1997) A framework for immersive virtual environments (FIVE): speculations on the role of presence in virtual environments. Presence Teleoperators Virtual Environ 6(6):603–616. https://doi.org/10.1162/pres.1997.6.6.603

Smith SP, Burd EL (2019) Response activation and inhibition after exposure to virtual reality. Array 3–4:100010. https://doi.org/10.1016/j.array.2019.100010

Sung B, Mergelsberg E, Teah M, D’Silva B, Phau I (2021) The effectiveness of a marketing virtual reality learning simulation: A quantitative survey with psychophysiological measures. Br J Edu Technol 52(1):196–213. https://doi.org/10.1111/bjet.13003

Tcha-Tokey K, Christmann O, Loup-Escande E, Richir S (2016) Proposition and validation of a questionnaire to measure the user experience in immersive virtual environments. Int J Virtual Reality 16(1):33–48. https://doi.org/10.20870/IJVR.2016.16.1.2880

Tsay CH-H, Kofinas AK, Trivedi SK, Yang Y (2019) Overcoming the novelty effect in online gamified learning systems: an empirical evaluation of student engagement and performance. J Comput Assist Learn 36(2):128–146. https://doi.org/10.1111/jcal.12385

Westera W, Prada R, Mascarenhas S, Santos PA, Dias J, Guimarães M, Georgiadis K, Nyamsuren E, Bahreini K, Yumak Z, Christyowidiasmoro C, Dascalu M, Gutu-Robu G, Ruseti S (2020) Artificial intelligence moving serious gaming: presenting reusable game AI components. Educ Inf Technol 25(1):351–380. https://doi.org/10.1007/s10639-019-09968-2

Zaidi SFM, Moore C, Khanna H (2019) Toward integration of user-centered designed tutorials for better virtual reality immersion. In: Proceedings of the 2nd international conference on image and graphics processing (ICIGP ’19), pp 140–144. https://doi.org/10.1145/3313950.3313977

Acknowledgements

Special thanks to Prof. Dr. Juan J. Rodriguez of the University of Burgos for his kind and helpful advice. This work was partially supported by the ACIS project (Reference Number INVESTUN/21/BU/0002) of the Consejería de Empleo e Industria of the Junta de Castilla y León (Spain), the Erasmus+ RISKREAL project (2020-1-ES01-KA204-081847) of the European Commission, and the HumanAid project (TED2021-129485B-C43) of the Proyectos Estratégicos Orientados a la Transición Ecológica y Digital of the Spanish Ministry of Science and Innovation.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

All procedures involving human participants were carried out in accordance with the ethical standards of the Institutional Review Board of the U.S. Army Research Laboratory and with the 1964 Declaration of Helsinki and its later amendments. The study was approved by the Ethics Committee of the University of Burgos (protocol code IR-14/2022, approved on 1 December 2022).

Consent to participate

All participants signed informed consent forms prior to their participation, in accordance with the standards of the relevant ethics committees.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

User code: _______________________________________________________________________

Gender: _____ Age: ____

Have you had any experience with Virtual Reality before? □ Yes* ** □ No

*If you responded “yes”, with which device?

□ Mobile □ HMD VR (Oculus Rift, Oculus Quest, HTC Vive…) □ Don’t know.

**If you responded “yes”, what type of experience did you try in Virtual Reality?

□ Mobile □ HMD VR (Oculus Rift, Oculus Quest, HTC Vive…) □ Don’t know.

Have you ever seen the inside of a desktop computer? If so, describe the situation (e.g., at school, when assembling my own PC, when I saw my friends replacing some components…).

_________________________________________________________________________________

How much time do you think it takes to mount two RAM modules in a computer (in minutes)? _________________________________________________________________________________

Check the correct answer:

1. What is the name of the set of physical parts of a computer?

□ Software

□ Hardware

□ Freeware

□ Peripheral

2. What is the main function of the motherboard?

□ It processes the instructions given to the computer.

□ It links all the functions of the computer.

□ It is used to display videos.

□ It acts as the brain of the computer.

3. The computing speed of a processor is measured in:

□ Watts.

□ Volts.

□ GHz.

□ MB.

4. The RAM memory:

□ permanently stores information on the computer.

□ controls the flow of data from the computer.

□ transforms digital data into analog signals.

□ stores the data that is currently being used.

5. Which of these storage devices can store the most data?

□ Hard disk. □ RAM memory.

□ ROM memory. □ DVD-R.

6. Which are the main manufacturers of video card GPUs?

□ Nvidia & Gigabyte.

□ Nvidia & AMD.

□ MSI & AMD.

□ Asus & Nvidia.

7. The electronic component used to supply electricity to the computer is:

□ Socket.

□ Power supply.

□ Control unit.

□ Chipset.

8. If we play a video with a 21:9 aspect ratio on our 16:9 monitor:

□ We will not be able to because we have a different aspect ratio.

□ It will be seen with two black stripes (top and bottom).

□ It will be seen with two black stripes (left and right).

□ The aspect ratio does not influence viewing.

9. Fill in the number of each component according to the image:

Motherboard____

CPU & heatsink_____

RAM_____

Memory____

Graphics card______

Power supply______

Appendix 2

User code: ___________________________________________________________________

Check the box that best corresponds to your answer:

1. Were you dizzy during the experience?

□ A lot, I had to abandon the experience □ Moderately □ A little □ No

2. Did you enjoy the experience with the iVR application? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

3. Do you find this iVR application useful for learning? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

4. Did your interactions with the iVR environment seem natural? **

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

5. Did the iVR environment respond to the actions you initiated (e.g., when you wanted to pick up an object, did it work correctly)? ***

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

6. Could you examine objects from multiple points of view and up close? **

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

7. Did you get so involved in the iVR environment that you did not notice the things that were happening around you? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

8. Did you feel physically good in the iVR environment? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

9. Did you get so involved in the iVR environment that you lost track of time? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

10. Did you feel that you could perfectly control your actions? ***

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

11. Did you know what to do in each proposed task? **

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

12. Do you think the information provided by the iVR application was clear? **

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

13. Do you think the iVR environment was realistic? *

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes

14. Were the controls of the iVR device easy to use? **

□ Definitely not □ Mostly not □ It is hard to say □ Mostly yes □ Definitely yes