Abstract

Medical images are a rich source of information for clinicians. Recent technologies have introduced many advancements for exploiting this information and using it to produce better analyses. Deep learning (DL) techniques have been widely adopted in computer-assisted medical image analysis, offering many solutions and improvements for radiologists and other specialists who analyze these images. In this paper, we present a survey of DL techniques used for a variety of tasks across different medical imaging modalities, to provide a critical review of recent developments in this direction. We have organized the paper to introduce the main traits and concepts of deep learning, which is helpful for non-experts in the medical community. We then present several applications of deep learning (e.g., segmentation, classification, and detection) that are commonly used for clinical purposes at different anatomical sites, along with the key terms for DL attributes such as basic architectures, data augmentation, transfer learning, and feature selection methods. Medical images as inputs to deep learning architectures will be mainstream in the coming years, and novel DL techniques are predicted to be the core of medical image analysis. We conclude by addressing some research challenges and the solutions suggested for them in the literature, as well as future promises and directions for further development.

1 Introduction

Health is without doubt at the top of the hierarchy of concerns in our lives. Throughout history, humans have struggled with diseases that cause death; today we are still fighting an enormous number of diseases while significantly improving life expectancy and health status. Historically, medicine could not find cures for numerous diseases for many reasons, ranging from clinical equipment and sensors to the analytical tools applied to the collected medical data. The fields of big data, AI, and cloud computing have played a massive role in every aspect of handling these data. Worldwide, Artificial Intelligence (AI) has become widely known because of the rapid progress achieved in almost every domain of our lives. The importance of AI comes from the remarkable progress made within the last two decades alone; the field is still growing, and specialists from different fields are investing in it. The success of AI algorithms is attributed to the availability of big data and the efficiency of modern computing resources.

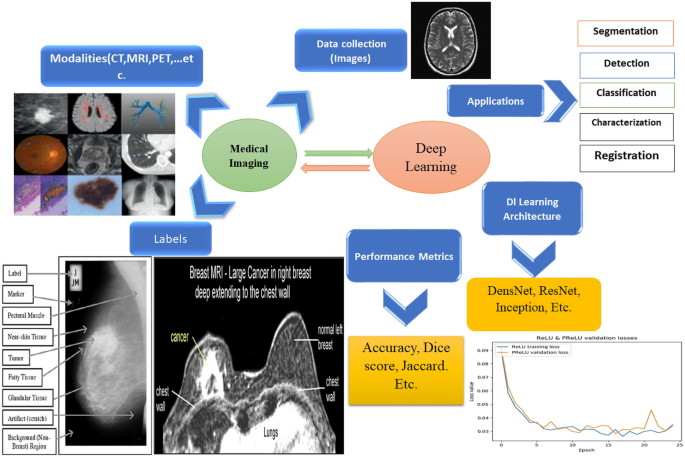

This paper aims to give a holistic overview of healthcare as an application of AI, and of deep learning in particular. The paper starts with an overview of medical imaging as an application of deep learning and then moves to why AI is needed in healthcare; in that section, we give the key terms for how AI is used with the two main medical data types, medical imaging and medical signals. To provide a balanced and rich general perspective, we mention the well-known datasets that are widely used for generalization, as well as the main pathologies, covering classification and detection of a disease, segmentation and treatment, and finally survival rate and prognostics. We discuss each pathology in detail, with the relevant key features and the significant results found in the literature. In the last section, we discuss the challenges of deep learning and the future scope of AI in healthcare. In general, AI has become a fundamental part of modern medicine. In short, it is software that can learn from data as a human does, build up experience systematically, and finally deliver a solution or diagnosis even faster than humans. AI has become an assistive tool in medicine, with benefits such as error reduction, improved accuracy, fast computing, and better diagnosis that help doctors work efficiently. From a clinical perspective, AI is now used to help doctors in decision-making thanks to faster pattern recognition from medical data, which in turn are recorded more precisely by computers than by humans; moreover, AI can manage and monitor patients’ data and create personalized medical plans for future treatments. Ultimately, AI has proved to be helpful in the medical field at different levels, such as telemedicine, disease diagnosis, decision-making assistance, and drug discovery and development.

Machine learning (ML) and deep learning (DL) have many uses in healthcare, such as clinical decision support (CDS) systems, which incorporate human knowledge or large datasets to provide clinical recommendations. Another application is to analyze large historical datasets and extract insights that can predict a patient’s future cases using pattern identification. In this paper, we highlight the top deep learning advancements and applications in medical imaging. Figure 1 shows the workflow chart of the paper’s highlights.

2 Background concepts

2.1 Medical imaging

Deep learning in medical imaging [1] is a contemporary area of AI. Deep learning has produced top breakthroughs in numerous scientific domains, including computer vision [2], Natural Language Processing (NLP) [3], and chemical structure analysis, where it is specialized for highly complicated processes. Because of its robustness when dealing with images, deep learning has recently attracted great interest in medical imaging and holds a promising future for this field. The main reason DL is preferred is that medical data are large and varied, comprising medical images, medical signals, and logs of patient monitoring data sensed from the body. Analyzing these data, especially historical data, by learning very complex mathematical models and extracting meaningful information is the key area where DL schemes have outperformed humans. Nevertheless, a DL framework will not replace doctors; it will assist them in decision-making and enhance the accuracy of the final diagnosis. Our workflow procedure is shown in Fig. 1.

2.1.1 Types of medical imaging

There are many types of medical images, and the choice of type depends on the intended use. A study conducted in the US [4] found that some basic, widely used modalities have seen increased use: Magnetic Resonance Imaging (MRI), Computed Tomography (CT), and Positron Emission Tomography (PET) are at the top, followed by other common modalities such as X-ray, ultrasound, and histology slides. Medical images are known to be complex, and in some cases their acquisition is a long process with specific technical requirements; e.g., an MRI scan may need over 100 megabytes of storage.

Because of a lack of standardization during image acquisition and the diversity of scanning device settings, a phenomenon called “distribution drift” might arise, in which images are acquired in a non-standard way. From a clinical perspective, medical images are a key part of diagnosing a disease and then of treating it. In traditional diagnosis, a radiologist reviews the image and then provides the doctors with a report of the findings. Images are also an important part of the invasive procedures used in further treatment, e.g., surgical operations or radiation therapies [5, 6].

2.2 DL frameworks

Conceptually, Artificial Neural Networks (ANNs) mimic the human nervous system in both structure and operation. Medical imaging [7] is a field that specializes in observing and analyzing the physical status of the human body by generating visual representations, such as images of internal tissues or organs, through either invasive or non-invasive procedures.

2.2.1 Key technologies and deep learning

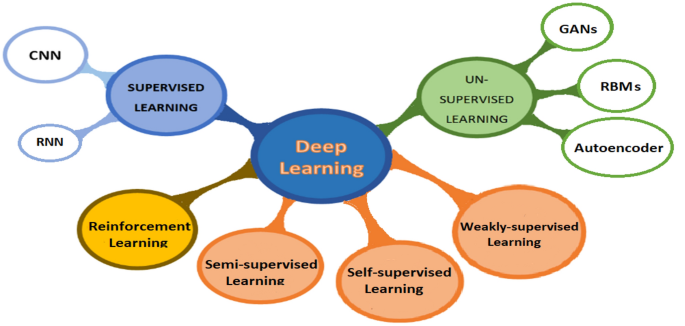

Historically, the AI scheme was proposed in the 1970s, and it has two major subcategories: Machine Learning (ML) and Deep Learning (DL). Early AI used heuristics-based techniques for extracting features from data; further developments moved to handcrafted feature extraction and finally to supervised learning, where Convolutional Neural Networks (CNNs) [8] are the basic tool for images, and for medical images in particular. CNNs are known to be data hungry, and they are the most suitable methodology for images; recent developments in hardware and GPUs have helped a lot in running CNN algorithms for medical image analysis. The general formulation of how CNNs work was proposed by LeCun et al. [9], who used error backpropagation in the first example of handwritten digit recognition. Ultimately, CNNs have become the predominant architecture among AI algorithms; the amount of research on CNNs has increased, especially in medical image analysis, and many new variants have been proposed. In this section, we explain the fundamentals of DL and its algorithmic path in medical imaging. The commonly known categories of deep learning and their subcategories are discussed in this section and shown in Fig. 2.

2.2.2 Supervised learning

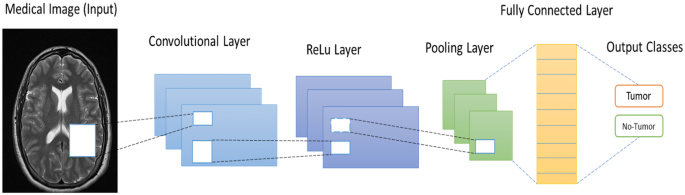

Convolutional neural networks: CNNs [10] have taken the major role in many areas and have led the work in image-based tasks, including image reconstruction, enhancement, classification, segmentation, registration, and localization. CNNs are considered the most prominent deep learning algorithm for image and visual processing because of their robustness in reducing image dimensionality without losing the image’s important features; in this way, a CNN deals with fewer parameters, which increases computational efficiency. Another key point is that this architecture is suitable for hospital use because it can handle both 2D and 3D images: some medical imaging modalities, such as X-ray, produce 2D images, while MRI and CT scans are three-dimensional. In this section, we explain the framework of the CNN architecture as the heart of deep learning in medical imaging.

Convolutional layer: Before deep learning and CNNs, the term convolution was used in image processing for extracting specific features from an image, such as corners, edges (e.g., with a Sobel filter), and noise, by applying particular filters, or kernels, to the image. The operation is performed by sliding the filter over the image in a sliding-window fashion until the whole image is covered. In a CNN, the early layers are usually designed to extract low-level features, such as lines and edges, and the later layers are built to extract higher-level features, such as full objects within an image. An advantage of modern CNNs is that the filters can be 2D or 3D, with multiple filters stacked to form a volume, depending on the application. The main distinguishing feature of a CNN is that the elements of each filter are the network weights. The idea behind the CNN architecture is the convolution operation, denoted by the symbol *. Equation (1) represents the convolution operation:

$$s(t) = (I * K)(t) = \sum_{a} I(t - a)\, K(a) \qquad (1)$$

where s(t) is the output feature map and I(t) is the original image to be convolved with the filter K(a).
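
The sliding-window operation described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not an implementation from any of the cited works: the function name `conv2d_valid`, the toy image, and the choice of no padding and stride 1 are all hypothetical. Note that, like most deep learning libraries, the sketch does not flip the kernel (strictly speaking this is cross-correlation); because the filter weights are learned, the distinction from Eq. (1) does not matter in practice.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    The kernel is not flipped, so this is cross-correlation, which is what
    deep learning libraries usually call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the window whose top-left corner is (i, j).
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Sobel kernel that responds to vertical edges (horizontal intensity changes).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x4 "image" (hypothetical data): dark left half, bright right half.
toy_image = [[0, 0, 1, 1] for _ in range(4)]

edges = conv2d_valid(toy_image, SOBEL_X)
print(edges)  # [[4.0, 4.0], [4.0, 4.0]]
```

Every window straddles the dark-to-bright boundary, so the filter responds strongly everywhere in this small example; on a uniform image the response would be zero.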

Activation function: Activation functions are the “enable button” of a neuron. Several activation functions are widely used in CNNs, such as sigmoid, tanh, ReLU, Leaky ReLU, and Randomized ReLU. In medical imaging in particular, most papers in the literature use the ReLU activation function, which is defined by the formula

$$f(x) = \max(0, x)$$

where x represents the input of a neuron.

Other activation functions used in CNNs include sigmoid, tanh, and Leaky ReLU.
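
To make the differences between these activation functions concrete, the following sketch evaluates them on a few inputs. It is only an illustration: the function names are ours, and the Leaky ReLU slope of 0.01 is an assumed, commonly used default rather than a value taken from the surveyed papers.

```python
import math


def relu(x):
    """ReLU: pass positive inputs through, clamp negative inputs to zero."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Leaky ReLU: like ReLU, but negative inputs keep a small slope.

    The default slope of 0.01 is an assumption made for this sketch.
    """
    return x if x > 0 else negative_slope * x


def sigmoid(x):
    """Sigmoid: squash any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), leaky_relu(x), round(sigmoid(x), 3), round(math.tanh(x), 3))
```

For a negative input such as -2.0, ReLU outputs exactly zero while Leaky ReLU keeps a small negative value, which is the practical difference between the two.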

Pooling layer: This layer is mainly used to reduce the number of parameters to be computed; it reduces the spatial size of the feature map but not the number of channels. There are several pooling layers, such as max-pooling, average-pooling, and L2-norm pooling, of which max-pooling is the most widely used. Max-pooling takes the maximum value within each window of the feature map produced by the convolution operation.
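
As a small illustration of max-pooling, the sketch below reduces a 4x4 feature map to 2x2. The function name `max_pool2d`, the 2x2 window, the stride of 2, and the toy feature map are assumptions chosen for the example.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum of each `size` x `size` window, moving by `stride`."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + u][j + v]
                      for u in range(size) for v in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map produced by a convolutional layer.
fmap = [[1, 3, 2, 0],
        [4, 6, 1, 1],
        [0, 2, 9, 5],
        [1, 1, 3, 7]]

pooled = max_pool2d(fmap)
print(pooled)  # [[6, 2], [2, 9]]
```

The spatial size is halved in each direction while the strongest response in every window is preserved; applied to a multi-channel input, the same operation would be run on each channel separately, leaving the channel count unchanged.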

Fully connected layer: This is the same kind of layer used in a conventional ANN, where each neuron is usually connected to all neurons in both the previous and the next layer, which makes the computation very expensive. A CNN model can use stochastic gradient descent to learn significant associations from the training examples. The benefit of a CNN is that it gradually reduces the feature map size before the result is finally flattened and fed to the fully connected layer, which in turn computes the probability scores of the target classes for classification. The fully connected (FC) layer is the last layer in a CNN model; it processes the strong features extracted from an image by the preceding convolutional and pooling layers and finally indicates which class the image belongs to.
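
The flatten, fully connected, and class-probability steps can be sketched as follows. All names, weights, and the two-class setup are hypothetical values chosen for illustration, and the use of softmax to turn scores into probabilities is an assumption (it is a common choice, but the text does not name it).

```python
import math


def flatten(feature_maps):
    """Flatten a list of 2-D feature maps (one per channel) into one vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def dense(x, weights, bias):
    """Fully connected layer: every output neuron sees every input value."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]


def softmax(logits):
    """Turn raw class scores into probabilities that sum to one."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# One 2x2 channel coming out of the last pooling layer (hypothetical values).
feature_maps = [[[1.0, 0.0],
                 [0.0, 1.0]]]
x = flatten(feature_maps)

# Hypothetical weights for a two-class problem (e.g., normal vs. abnormal).
weights = [[0.5, 0.0, 0.0, 0.5],
           [-0.5, 0.0, 0.0, -0.5]]
bias = [0.0, 0.0]

probs = softmax(dense(x, weights, bias))
print([round(p, 3) for p in probs])
```

The class with the largest probability is reported as the prediction; in a trained network the weights would be learned by gradient descent rather than set by hand.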

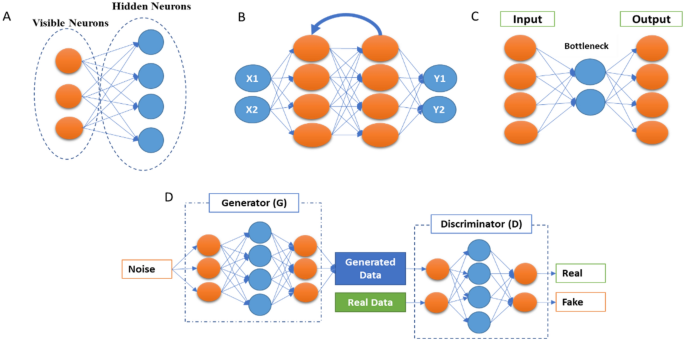

Recurrent neural networks: The RNN is a major family of supervised deep learning models that is specialized for analyzing sequential data and time series. We can imagine an RNN as a conventional neural network in which each layer represents the observations at a particular time (t). In [11], an RNN was used for text generation, which is further connected to speech recognition, text prediction, and other applications. RNNs are called recurrent because the same operation is performed for every element in a sequence, and the output depends on the computation for the previous element in that sequence. In general, the output of a layer is fed back as an input to the same layer, as shown in Fig. 3. Moreover, since backpropagation through time suffers from vanishing gradients, evolved networks such as Long Short-Term Memory (LSTM) networks and bidirectional gated recurrent units (BGRU) are commonly used to help the RNN hold long-term dependencies.
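
The recurrence can be illustrated with a one-unit RNN in plain Python. This is a deliberately tiny sketch: the function name, the scalar weights, and the tanh non-linearity are assumptions for illustration and do not correspond to any specific model in the cited works.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a one-unit recurrent network over a sequence.

    At every step the same weights are reused, and the new hidden state
    depends on the current input and on the previous hidden state.
    """
    h = h0
    states = []
    for x_t in inputs:
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states


# A single impulse followed by silence (hypothetical input sequence).
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 3) for h in states])
```

With these weights the hidden state still carries a trace of the first input at later steps, but that trace shrinks at every step. This fading influence is an informal picture of why plain RNNs struggle with long-term dependencies and why gated units such as LSTM are used.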

Few papers on RNNs in medical imaging, and particularly in segmentation, were found in the literature. In [12], Chen et al. used an RNN along with a CNN for segmenting fungal and neuronal structures from 3D images. Another application of RNNs is image caption generation [13], where these models can be used to annotate medical images such as X-rays with text captions learned from radiologists’ reports [14]. Ruoxuan Cui et al. [15] used a combination of a CNN and an RNN for diagnosing Alzheimer’s disease: their CNN model was used for the classification task, and its output was then fed to an RNN with cascaded bidirectional gated recurrent unit (BGRU) layers to extract the longitudinal features of the disease. In [16], the authors developed a novel RNN for speeding up an iterative MAP estimation algorithm. In summary, RNNs are commonly used together with a CNN model in medical imaging.

2.2.3 Unsupervised deep learning

Besides CNNs as supervised machine learning algorithms in medical imaging, there are a few unsupervised learning algorithms for this purpose as well, such as Deep Belief Networks (DBNs), autoencoders, and Generative Adversarial Networks (GANs); the last has been used not only for image-based tasks but also for data synthesis and augmentation. Unsupervised learning models have been used for different medical imaging applications, such as motion tracking [17], general modeling, classification improvement [18], artifact reduction [19], and medical image registration [20]. In this section, we list the most commonly used unsupervised learning structures.

2.2.3.1 Autoencoders

Autoencoders [21, 22] are unsupervised deep learning models that retain the important features of the input data and discard the rest. These representations of the important features are called ‘codings’, and the process is commonly called representation learning. The basic architecture is shown in Fig. 3. The robustness of autoencoders stems from their ability to reconstruct output data that are similar to the input data, because the cost function penalizes the model when the output and input differ. Moreover, autoencoders are considered automatic feature detectors because, being unsupervised, they do not need labeled data to learn from. The autoencoder architecture is similar to a conventional CNN model, with the constraint that the number of input neurons must equal the number of neurons in the output layer. Reducing the dimensionality of the raw input data is one of the features of autoencoders, and in some cases autoencoders are used for denoising [23], in which case they are called denoising autoencoders. There are several kinds of autoencoders used for different purposes; we mention the common ones here. In sparse autoencoders [24], neurons in the hidden layer are deactivated through a threshold, which limits the number of active neurons while still producing an output similar to the input; to extract the most informative features from the input, most of the hidden-layer neurons should be set to zero. Variational autoencoders (VAEs) [25] are generative models with two networks (an encoder and a decoder): the encoder projects the input into a latent representation using a Gaussian distribution approximation, and the decoder maps the latent representation to the output data. Other variants include contractive autoencoders [26] and adversarial autoencoders, the latter being closely related to Generative Adversarial Networks (GANs).
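
The encode, decode, and reconstruction-penalty cycle can be sketched with a tiny linear autoencoder. Everything here is an assumption made for illustration: the function names, the hand-picked weights, the tied-weight decoder (the decoder reuses the transposed encoder weights), and the mean squared error as the cost function.

```python
def encode(x, w):
    """Project the input onto a shorter code; `w` has one row per code unit."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def decode(code, w):
    """Map the code back to input space with the transposed encoder weights."""
    n_inputs = len(w[0])
    return [sum(w[k][i] * code[k] for k in range(len(code)))
            for i in range(n_inputs)]


def reconstruction_loss(x, x_hat):
    """Mean squared error between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


# Hypothetical weights: a 4-value input is compressed to a 2-value code,
# where each code unit summarizes one pair of neighboring inputs.
s = 2 ** -0.5
w = [[s, s, 0.0, 0.0],
     [0.0, 0.0, s, s]]

x_fit = [2.0, 2.0, 4.0, 4.0]  # matches the structure the code can express
x_off = [2.0, 0.0, 4.0, 4.0]  # does not match it

loss_fit = reconstruction_loss(x_fit, decode(encode(x_fit, w), w))
loss_off = reconstruction_loss(x_off, decode(encode(x_off, w), w))
print(round(loss_fit, 6), round(loss_off, 6))
```

The first input is reconstructed almost perfectly from only two numbers, while the second incurs a clear penalty. During training, this penalty is what drives the weights toward codes that capture the regularities of the data.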

2.2.3.2 Generative Adversarial Networks

GANs [27, 28] were first introduced by Ian Goodfellow in 2014. A GAN basically consists of a combination of two CNN networks: the first is called the generator model and the other is the discriminator model. To explain how GANs work, scientists describe the two networks as two players competing against each other: the generator tries to fool the discriminator by generating near-authentic data (e.g., artificial images), while the discriminator tries to distinguish between the generator’s output and the real data (Fig. 3). The name of the network is inspired by the objective of the generator to overcome the discriminator. During training, both networks get better: the generator produces more realistic data, and the discriminator learns to differentiate better between real and generated data, until the end point of the whole process, where the discriminator is unable to distinguish between real and artificial data (images). Both networks are trained with backpropagation and dropout, without the need for Markov chains. Recently, GANs have seen tremendous use in different medical imaging applications, such as synthesizing new images to enhance the efficiency of deep learning models by increasing the number of training images in the dataset [29], classification [30, 31], detection [32], segmentation [33, 34], image-to-image translation [35], and other applications. In their study, Kazeminia et al. [36] listed the applications of GANs in medical imaging; the two most common applications of these unsupervised models are image synthesis and segmentation.
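
The two-player objective can be made concrete by computing the losses of both networks from the discriminator’s outputs. The sketch below is an assumption-laden illustration: the function names and probability values are hypothetical, and the generator uses the common non-saturating form of the loss rather than a formulation stated in this survey.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated ones 0.

    `d_real` and `d_fake` are the discriminator's probabilities of "real"
    for batches of real and generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: generated samples should score as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training (hypothetical values): fakes are easy to spot.
early_d = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
early_g = generator_loss(d_fake=[0.1, 0.05])

# End point described in the text: the discriminator can only guess (0.5).
final_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
final_g = generator_loss(d_fake=[0.5, 0.5])

print(round(early_d, 3), round(early_g, 3))
print(round(final_d, 3), round(final_g, 3))
```

Early on, the discriminator loss is low and the generator loss is high; at the end point, where the discriminator outputs 0.5 for everything, the discriminator loss equals 2 ln 2 and neither player can improve by itself.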

2.2.3.3 Restricted Boltzmann machines

Ackley et al. were the first to introduce Boltzmann machines, in 1985 [37] (Fig. 3), which are based on the Boltzmann distribution, also known as the Gibbs distribution; Smolensky later modified them into what are known as Restricted Boltzmann Machines (RBMs) [38]. RBMs consist of two layers of neural networks with stochastic, generative, and probabilistic capabilities, and they can learn probability distributions and internal representations from the dataset. RBMs work by passing the input data backward through the network to generate and estimate the probability distribution of the original input, using a gradient descent loss. These unsupervised models are mostly used for dimensionality reduction, filtering, classification, and feature representation learning. In medical imaging, Tulder et al. [39] modified RBMs and introduced novel convolutional RBMs for lung tissue classification using CT scan images; they extracted the features using different learning methods (generative, discriminative, or mixed) to construct the filters, after which a Random Forest (RF) classifier was used for classification. Finally, a stacked version of RBMs is called a Deep Belief Network (DBN) [40]. Each RBM performs a non-linear transformation whose output is the input of the next RBM; performing this process progressively gives the network a lot of flexibility as it expands.

DBNs are generative models, which allows them to be used in supervised or unsupervised settings. Feature learning is done in an unsupervised manner through layer-by-layer pre-training. For the classification task, backpropagation (gradient descent) through the RBM stack is used for fine-tuning on the labeled dataset. DBNs have been widely used in medical imaging applications; for example, Khatami et al. [41] used this model to classify X-ray images by anatomic region and orientation, and in [42], AVN Reddy et al. proposed a hybrid deep belief network (DBN) for glioblastoma tumor classification from MRI images. Another significant application of DBNs was reported in [43], where a novel DBN framework was used for medical image fusion.

2.2.4 Self-supervised learning

Self-supervised learning is basically a subtype of unsupervised learning in which feature representations are learned through a proxy task for which the data themselves contain the supervisory signals. After representation learning, the model is fine-tuned using annotated data. The benefit of self-supervised learning is that it eliminates the need for humans to label the data: the system extracts naturally available, relevant context from the data and assigns it as metadata that serves as the supervisory signal. It resembles unsupervised learning in that both learn representations without explicitly provided labels; the difference is that self-supervised learning does not focus on learning the inherent structure of the data and is not centered around clustering, anomaly detection, dimensionality reduction, or density estimation. The Genesis model of this kind can recover the original image from a distorted one (e.g., after non-linear gray-value transformation, image inpainting, image out-painting, or pixel shuffling) using a proxy task [44]. Zhu et al. [45] used self-supervised learning with a Rubik’s cube proxy task that mainly contains three operations (rotating, masking, and ordering); the strength of this model is that the network is robust to noise and learns features that are invariant to rotation and translation. Shekoofeh et al. [46] exploited the effectiveness of self-supervised learning as a pre-training strategy for classifying medical images in two tasks (dermatology skin condition classification and multi-label chest X-ray classification). Their study improved classification accuracy by using two self-supervised learning stages: the first trained on the ImageNet dataset and the second trained on unlabeled domain-specific medical images.

2.2.5 Semi-supervised learning

Semi-supervised learning stands between supervised and unsupervised learning: it is used, for example, for a classification task (supervised learning) but without all the data being labeled (unsupervised learning). The system is trained on a small labeled dataset and then generates pseudo-labels to obtain a larger labeled dataset; the final model is trained on a mix of the original and the pseudo-labeled images. Nie et al. [47] proposed a semi-supervised deep network for image segmentation; the method adversarially trains a segmentation model, from which a confidence map is computed, and the semi-supervised learning strategy is used to generate labeled data. Semi-supervised learning has also been applied to cardiac MRI segmentation [48]. Liu et al. [49] presented a novel relation-driven semi-supervised model for classifying medical images. They introduced a Sample Relation Consistency (SRC) paradigm that uses unlabeled data by modeling the relationship information between different samples. In their experiments, they applied the method to two benchmark medical image classification tasks, skin lesion diagnosis from the ISIC 2018 challenge and thorax disease classification from the public ChestX-ray14 dataset, and achieved state-of-the-art results.
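
The pseudo-labeling step described at the start of this section can be sketched as a simple confidence filter. This is a generic illustration, not the method of any cited work: the function name, the example probabilities, and the confidence threshold of 0.9 are all assumptions.

```python
def pseudo_label(unlabeled_probs, threshold=0.9):
    """Select confident predictions on unlabeled images as pseudo-labels.

    `unlabeled_probs` holds one list of class probabilities per unlabeled
    image, as predicted by a model trained on the small labeled set.
    Returns (image_index, predicted_class) pairs whose top probability
    reaches the threshold (0.9 is an assumed value for this sketch).
    """
    selected = []
    for idx, probs in enumerate(unlabeled_probs):
        confidence = max(probs)
        if confidence >= threshold:
            selected.append((idx, probs.index(confidence)))
    return selected


# Hypothetical model outputs for three unlabeled images and two classes.
predictions = [[0.97, 0.03],
               [0.55, 0.45],
               [0.08, 0.92]]

pseudo_labels = pseudo_label(predictions)
print(pseudo_labels)  # [(0, 0), (2, 1)]
```

Only the first and third images are confident enough to be added to the training set; the ambiguous second image stays unlabeled. The final model would then be trained on the original labeled images together with these pseudo-labeled ones.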

2.2.6 Weakly (partially) supervised learning

Weak supervision is basically a branch of machine learning that labels unlabeled data by exploiting noisy, limited sources to provide a supervision signal, which is then used to label a large amount of training data in a supervised manner. In general, the newly labeled data in “weakly-supervised learning” are imperfect, but they can be used to create a robust predictive model. Weakly supervised methods use image-level annotations and weak annotations (e.g., dots and scribbles) [50]. A weakly supervised multi-label disease system was used for chest X-ray classification [51]. Weak supervision has also been used for multi-organ segmentation [52] by learning a single multi-class network from a combination of multiple datasets, each of which contains partially labeled organ data and has a low sample size. Roth et al. [53] used weakly supervised learning for medical image segmentation, and their results sped up the generation of new training datasets for developing deep learning in medical image analysis. Schlegl et al. [54] used this type of deep learning approach to detect abnormal regions in test images. Hu et al. [55] proposed an end-to-end CNN approach for displacement field prediction to align multiple labeled corresponding structures; the work was used for registering T2-weighted MRI and 3D transrectal ultrasound images of prostate cancer patients and reached a mean Dice score of 0.87. Another application is diabetic retinopathy detection in a retinal image dataset [56].
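
Since segmentation and registration results in this survey are often reported as Dice scores, a short sketch of how the score is computed may help. The function name and the toy masks are hypothetical, and returning 1.0 when both masks are empty is a convention assumed here.

```python
def dice_score(pred, target):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks.

    Masks are flat lists of 0/1 values of equal length. Two empty masks
    are treated as a perfect match (an assumed convention).
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical predicted and reference masks over six pixels.
pred = [0, 1, 1, 1, 0, 0]
target = [0, 1, 1, 0, 0, 1]

dice = dice_score(pred, target)
print(round(dice, 3))  # 0.667
```

Here two of the three foreground pixels in each mask overlap, giving a score of 2/3; a score of 1.0 means perfect overlap and 0.0 means no overlap at all.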

2.2.7 Reinforcement learning

Reinforcement learning (RL) is a learning paradigm in which an agent takes the actions that maximize the reward in a specific situation. The main difference between supervised learning and reinforcement learning is that in the former the training data contain the answer, whereas in reinforcement learning the agent decides how to act on the task and, in the absence of a training dataset, the model learns from its own experience. Al Walid et al. [57] used reinforcement learning for landmark localization in 3D medical images; they introduced partial policy-based RL, which learns the optimal policy on smaller partial domains. The proposed method was applied to three different localization tasks in 3D CT scans and MR images and showed that learning the optimal behavior requires a significantly smaller number of trials. In [58], RL was used for object detection in PET images. RL was also used for color image classification on a neuromorphic system [59].
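
To illustrate how an agent learns from rewarded experience rather than from labeled answers, the sketch below applies the tabular Q-learning update to a toy localization-style task. Q-learning is our choice for illustration and is not the partial policy-based method of [57]; the state names, rewards, learning rate `alpha`, and discount factor `gamma` are all hypothetical.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update and return the new value.

    q[state][action] moves toward reward + gamma * (best value reachable
    from next_state). Unknown or terminal next states count as 0.
    """
    next_values = q.get(next_state)
    best_next = max(next_values.values()) if next_values else 0.0
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


# Toy task: the agent is "far" from or "near" a landmark and can move
# left or right; reaching the landmark ("goal") yields a reward of 1.
q = {"far": {"left": 0.0, "right": 0.0},
     "near": {"left": 0.0, "right": 0.0}}

v_near = q_update(q, "near", "right", reward=1.0, next_state="goal")
v_far = q_update(q, "far", "right", reward=0.0, next_state="near")
print(v_near, round(v_far, 3))  # 0.5 0.225
```

After only two experiences, moving right is already valued more than moving left in both states: the reward obtained at the goal has propagated one step back to the state that merely leads toward it.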

2.2.7.1 Transfer learning

Transfer learning is one of the powerful enablers of deep learning [60]. It involves training a deep learning model by reusing a model already trained on a large related or unrelated dataset. Medical data are known to be scarce and often insufficient for training deep learning models well, so transfer learning can provide CNN models with features learned from large sets of non-medical images, which in turn can be useful in this case [61]. Furthermore, transfer learning addresses the time-consuming nature of training a deep neural network, because it reuses the frozen weights and hyperparameters of another model. Typically, the weights already trained on different data (images) are frozen and reused in another CNN model; only the last few layers are modified and trained on the target data to tune the hyperparameters and weights. For these reasons, transfer learning has been widely used in medical imaging, for example for classifying interstitial lung disease [61] and detecting thoraco-abdominal lymph nodes in CT scans; it was found to be efficient despite the disparity between medical and natural images. Transfer learning can also be used with different CNN models (e.g., VGG-16, ResNet-50, and Inception-V3). Xue et al. [62] developed transfer learning-based models from these networks and, furthermore, proposed an Ensembled Transfer Learning (ETL) framework for improving the classification of cervical histopathological images.

Overall, in many computer vision tasks, tuning only the last classification layers (the fully connected layers), which is called “shallow tuning”, is probably sufficient, but in medical imaging, deeper tuning of more layers is needed. The authors of [63] studied the benefit of transfer learning in four applications within three imaging modalities (including polyp detection in colonoscopy videos, segmentation of the layers of the carotid artery wall in ultrasound scans, and colonoscopy video frame classification); their results showed that fine-tuning more CNN layers on the medical images is more efficient than training from scratch.
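
The difference between shallow and deep tuning comes down to which layers are frozen. The sketch below models this with a plain Python data structure instead of a real deep learning framework; the `Layer` class, the layer names, and the function `freeze_for_fine_tuning` are hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Stand-in for a network layer; only the trainable flag matters here."""
    name: str
    trainable: bool = True


def freeze_for_fine_tuning(layers, n_trainable):
    """Freeze every layer except the last `n_trainable` ones.

    Returns the names of the layers that will still be updated when the
    pre-trained model is trained on the medical images.
    """
    cutoff = len(layers) - n_trainable
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff
    return [layer.name for layer in layers if layer.trainable]


# Hypothetical pre-trained network: four convolutional and two FC layers.
names = ["conv1", "conv2", "conv3", "conv4", "fc1", "fc2"]

shallow = freeze_for_fine_tuning([Layer(n) for n in names], n_trainable=2)
deep = freeze_for_fine_tuning([Layer(n) for n in names], n_trainable=4)

print(shallow)  # ['fc1', 'fc2']
print(deep)     # ['conv3', 'conv4', 'fc1', 'fc2']
```

Shallow tuning updates only the classification layers, whereas deep tuning also adapts some of the later convolutional layers to the medical images. In a real framework, the same effect is obtained by disabling gradient updates for the frozen layers.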

2.3 Best deep learning models and practices

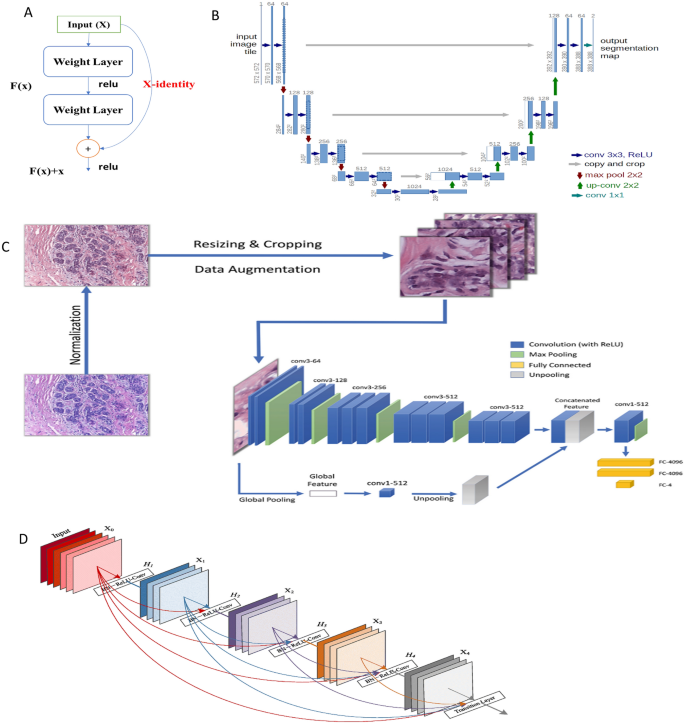

Convolutional neural network (CNN)-based models are used in several ways, bearing in mind that the CNN remains the core of any such model. A CNN can be trained from scratch on the available dataset, when that dataset is very large, to perform a specific task (e.g., segmentation, classification, or detection). Alternatively, a model pre-trained on a large dataset (e.g., ImageNet) can be adapted to a new dataset (e.g., CT scans) by fine-tuning only some of its layers; this approach is called transfer learning (TL) [60]. CNN models can also be used purely for feature extraction, producing representations of the input images with more expressive power before the next processing stage. Several CNN models in the literature have proven their effectiveness and have served as the basis for later developments; here we mention the most efficient and widely used deep learning models in medical image analysis. The first is AlexNet, introduced by Alex Krizhevsky [64]; Siyuan Lu et al. [65] used transfer learning with a pre-trained AlexNet, replacing the parameters of the last three layers with random parameters, for pathological brain detection. Another frequently used model is the Visual Geometry Group network VGG-16 [66], where 16 refers to the number of layers; later variants such as VGG-19 were proposed, and [67] lists medical imaging applications that use different VGGNet architectures. The Inception network [68] is one of the most common CNN architectures and aims to limit resource consumption; further modifications of this basic network were reported as new versions [69]. Gao et al. [70] proposed a Residual Inception Encoder–Decoder Neural Network (RIEDNet) for medical image synthesis. The original Inception network is also known as GoogLeNet [71].
ResNet [72] is a powerful architecture for very deep networks, sometimes with more than 100 layers. It limits the loss of gradient in the deeper layers by adding residual connections between some convolutional layers (Fig. 4). In medical imaging, ResNet models are mostly used for robust classification [73, 74], for example of pulmonary nodules and intracranial hemorrhage.
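The residual connection mentioned above can be illustrated with a minimal sketch. It is not the ResNet implementation: plain linear layers stand in for convolutions, and the names `dense` and `residual_block` are hypothetical. The point is only that the block computes F(x) + x, so the input always has a direct path to the output.

```python
def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def dense(values, weights, bias):
    """Linear layer standing in for a convolution: weights[i][j] maps input j to output i."""
    return [sum(w * v for w, v in zip(row, values)) + b
            for row, b in zip(weights, bias)]


def residual_block(x, w1, b1, w2, b2):
    """Compute relu(x + F(x)), where F is two linear layers with a ReLU in between."""
    hidden = relu(dense(x, w1, b1))
    fx = dense(hidden, w2, b2)
    # The skip connection adds the unchanged input back to the learned residual F(x).
    return relu([xi + fi for xi, fi in zip(x, fx)])


x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3
# With all weights at zero, F(x) = 0 and the block passes x through unchanged.
identity_out = residual_block(x, zero_w, zero_b, zero_w, zero_b)
```

Because the identity path bypasses the learned layers, a block whose weights contribute nothing still forwards its input, which is one intuition for why very deep stacks of such blocks remain trainable.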

DenseNet exploits the same idea as the residual CNN (ResNet), but in a more compact form, to achieve good representations and feature extraction. Each layer of the network receives as input the outputs of all previous layers, so DenseNet contains more connections than a traditional CNN: a traditional CNN with L layers has L connections, whereas DenseNet has L(L + 1)/2 connections. DenseNet is widely used with medical images. Mahmood et al. [78] proposed a multimodal DenseNet for fusing multimodal data, which gives the model the flexibility to combine information from multiple sources, and used it for polyp characterization and landmark identification in endoscopy. Another application used transfer learning with DenseNet for fundus images [79].

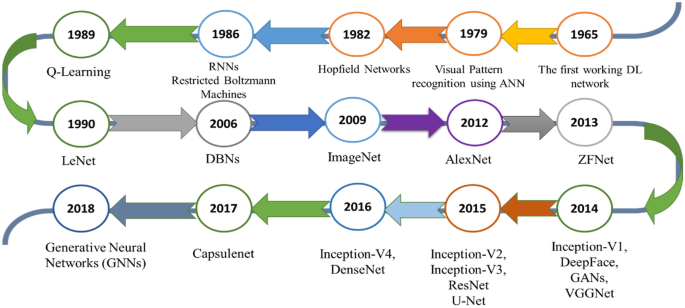

U-Net [80] is one of the most popular network architectures and is used mostly for segmentation (Fig. 4). It is widely used in medical imaging because it can localize and highlight the borders between classes (e.g., normal and malignant brain tissue) by classifying each pixel. It is called U-Net because the architecture is shaped like the letter U, and it contains concatenation connections; Fig. 4 shows the basic structure of U-Net. Among its extensions, U-Net++ [75] was proposed as a new architecture for medical image segmentation; in the authors' experiments, U-Net++ outperformed both U-Net and wide U-Net on multiple medical image segmentation tasks, such as liver segmentation from CT scans, polyp segmentation in colonoscopy videos, and nuclei segmentation from microscopy images. Other models were inspired by these popular, basic DL models, and some of them build on insights from one another (e.g., Inception and ResNet); Fig. 5 shows the timeline of the models mentioned here and of other popular models.

3 Deep learning applications in medical imaging

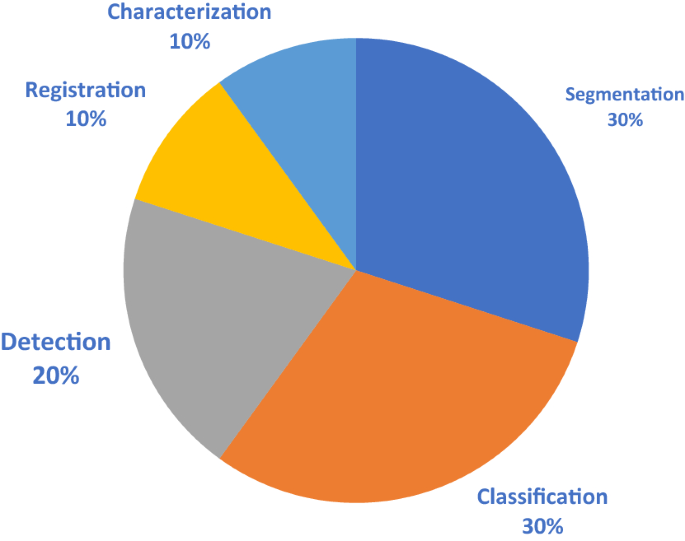

To study the main applications of deep learning in medical imaging, we organized a study based on the most-cited papers in the literature from 2015 to 2021. The numbers of surveyed papers for segmentation, detection, classification, registration, and characterization are 30, 20, 30, 10, and 10, respectively. Figure 6 shows a pie chart of these applications.

3.1 Image segmentation

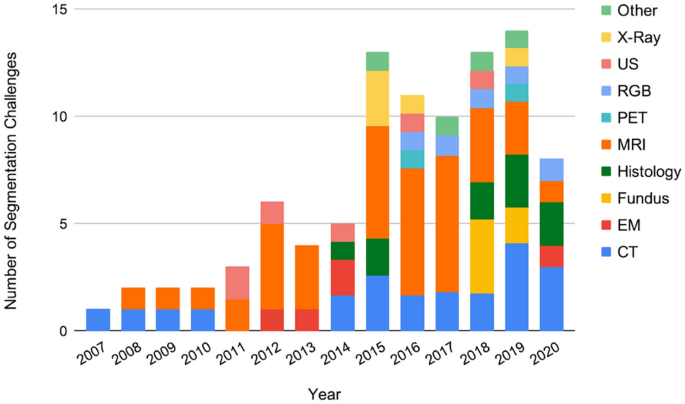

Deep learning is used to segment different body structures from different imaging modalities, such as MRI, CT, PET, and ultrasound. Segmentation means partitioning an image into segments that usually belong to specific classes (tissue classes, organs, or biological structures) [81]. For CNN models, there are two main approaches to segmenting a medical image: the first uses the entire image as input, and the second uses patches from the image. The segmentation of a liver tumor using a CNN architecture, according to Li et al., is shown in Fig. 7; both approaches work well in generating an output map that provides the segmented image. Segmentation is valuable for surgical planning and for determining the exact boundaries of sub-regions (e.g., tumor tissue) for better guidance during surgical resection. Segmentation is most common in neuroimaging, where brain segmentation is studied more than the segmentation of other organs. Akkus et al. [82] reviewed different DL models for the segmentation of different organs, together with their datasets. Since CNN architectures can handle both 2D and 3D images, they are considered suitable for MRI, which is volumetric; Milletari et al. [83] applied a 3D CNN to 3D MRI images to segment the prostate. They proposed a new CNN architecture, V-Net, which builds on the insights of U-Net [80], and their results achieved a Dice similarity coefficient of 0.869; this is considered efficient given the small dataset (50 MRI volumes for training and 30 for testing). Havaei et al. [84] worked on glioma segmentation on BRATS-2013 with a 2D CNN model that took only 3 min to run. From a clinical point of view, organ segmentation is used to calculate clinical parameters (e.g., volume) and to improve the performance of computer-aided detection (CAD) by defining regions accurately.
Taghanaki et al. [85] listed the segmentation challenges from 2007 to 2020 across imaging modalities; Fig. 8 shows the number of these challenges. Table 1 summarizes deep learning models for segmenting different organs, based on highly cited papers and on variations in the deep learning models.
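The Dice similarity coefficient quoted above for segmentation results can be computed as in the following minimal sketch. The masks are toy data, the function name is hypothetical, and returning 1.0 for two empty masks is a convention assumed here, not something stated in the surveyed papers.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two binary masks of equal shape."""
    intersection = 0
    pred_sum = 0
    target_sum = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += p * t
            pred_sum += p
            target_sum += t
    if pred_sum + target_sum == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * intersection / (pred_sum + target_sum)


# Toy 3 x 4 masks: 1 marks a pixel labeled as tumor, 0 marks background.
predicted = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
reference = [[0, 1, 1, 0],
             [0, 1, 0, 0],
             [0, 1, 0, 0]]
score = dice_coefficient(predicted, reference)  # 3 shared pixels, 4 + 4 labeled pixels
```

Here the two masks share 3 foreground pixels out of 4 each, so the score is 2 · 3 / 8 = 0.75; a score of 1 means identical masks and 0 means no overlap.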

Liver tumor segmentation using CNN architecture [86]

3.2 Image detection/localization

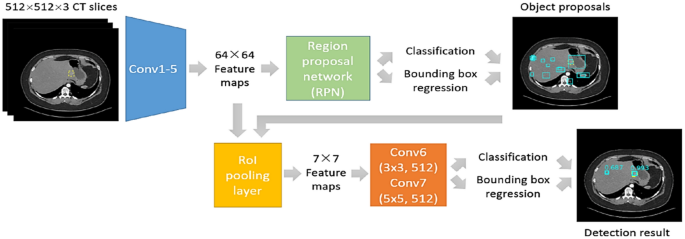

Detection means identifying a specific region of interest in an image and drawing a bounding box around it. Localization is another term for detection and means determining the location of a particular structure in an image. In deep learning for medical image analysis, detection is referred to as computer-aided detection (CAD) (Fig. 9). CAD is commonly divided into anatomical structure detection and lesion (abnormality) detection. Anatomical structure detection is a crucial task in medical image analysis because it determines the locations of organ substructures and landmarks, which in turn guide organ segmentation, radiotherapy planning, and further surgical purposes. Deep learning methods for organ or lesion detection are either classification-based or regression-based; the former are used to discriminate body parts, while the latter are used to determine more detailed location information. In fact, most deep learning tasks are connected; for example, Yang et al. [114] proposed a custom CNN classifier for locating landmarks, which is the initialization step for femur bone segmentation. Lesion detection is clinically time-consuming for radiologists and physicians, and it may lead to errors because of the lack of data needed to find abnormalities and the visual similarity of normal and abnormal tissue in some cases (e.g., low-contrast lesions in mammography). The potential of CAD systems therefore comes from overcoming these drawbacks: they reduce the time and computational cost required, provide an alternative for people who live in areas that lack specialists, and streamline the clinical workflow. Some custom CNN models were developed specifically for lesion detection [115, 116].
Both anatomical structure detection and lesion detection apply to almost all organs (e.g., brain, eye, chest, and abdomen), and CNN architectures are used for both 2D and 3D medical images. For 3D volumes such as MRI, a patch-based approach is preferable because it is more efficient than a sliding-window approach; in this way, the whole CNN architecture is trained on patches before the fully connected layer [117]. Table 2 shows top-cited papers with different deep learning models for both structure and lesion detection in different organs.
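As a rough illustration of the patch-based handling of 3D volumes mentioned above, the sketch below cuts a volume into cubic patches. The function name, the patch size, and the stride are hypothetical choices for illustration; the cited work may sample patches differently.

```python
def extract_patches_3d(volume, size, stride):
    """Return (origin, patch) pairs for cubic patches with edge length `size`.

    `volume` is indexed as volume[z][y][x]; patches that would cross the
    volume border are skipped rather than padded (an assumption of this sketch).
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    patches = []
    for z in range(0, depth - size + 1, stride):
        for y in range(0, height - size + 1, stride):
            for x in range(0, width - size + 1, stride):
                patch = [[row[x:x + size] for row in plane[y:y + size]]
                         for plane in volume[z:z + size]]
                patches.append(((z, y, x), patch))
    return patches


# Toy 4 x 4 x 4 volume whose voxel value encodes its own coordinates.
volume = [[[z * 100 + y * 10 + x for x in range(4)] for y in range(4)] for z in range(4)]
patches = extract_patches_3d(volume, size=2, stride=2)
```

With a stride equal to the patch size the patches tile the volume without overlap (8 patches here); a smaller stride yields overlapping patches and more training samples per volume.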

Lesion detection algorithm flowchart [118]

3.3 Image classification

This task is fundamental to computer-aided diagnosis (CADx), and it aims to discover the presence of disease indicators. In medical imaging, the output of a deep learning classification model is commonly a number that represents the presence or absence of disease. A subtype of classification, lesion classification, is applied to segmented regions of the body [136]. Traditionally, classification relied on color, shape, texture, and similar features, but features in medical images are too complex to be captured by such low-level descriptors, which leads to poor model generalization. Recently, deep learning has provided an efficient way of building end-to-end models that produce classification labels from different medical imaging modalities. The high resolution of medical images leads to expensive computation and limits the number of layers and channels in deep models; Lai Zhifei et al. [137] proposed the Coding Network with Multilayer Perceptron (CNMP) to overcome these problems by combining high-level features extracted by a CNN with other manually selected common features. Xiao et al. [138] used a parallel attention module (PAM-DenseNet) for COVID-19 diagnosis; their model learns strong features automatically, channel-wise and spatial-wise, which helps the network detect infected areas in lung CT scans without manual delineation. As with any deep learning application, classification is performed on different body organs to detect disease patterns.
Back in 1995, a CNN model was developed for detecting lung nodules in chest X-rays [139]. Classifying medical images is an essential part of clinical decision support and further treatment, for example detecting and classifying pneumonia in chest X-ray scans [140]. CNN-based models have introduced various strategies to improve classification performance, especially on small datasets, for example data augmentation [141, 142]. GANs have been widely used for data augmentation and image synthesis [143]. Another robust strategy is transfer learning [61]. Rajpurkar et al. used a custom DenseNet to classify 14 different diseases in chest X-rays from the ChestX-ray14 dataset [129]. Li et al. used a 3D CNN to interpolate missing data between MRI and PET modalities, reconstructing PET images from MRI images in the ADNI dataset for Alzheimer disease, which contains both MRI and PET images [144]. Xiuli Bi et al. [31] also worked on Alzheimer disease diagnosis, using a CNN architecture for feature extraction and an unsupervised predictor for the final diagnosis on the ADNI-1 1.5 T dataset, and achieved an accuracy of 97.01% for AD vs. MCI and 92.6% for MCI vs. NC. Another 3D CNN, employed in an autoencoder architecture, was also used to classify Alzheimer disease using transfer learning from a model pre-trained on the CADDementia dataset; the authors reported an accuracy of 99% on the public ADNI dataset, with fine-tuning done in a supervised manner [145]. Diabetic retinopathy (DR) can be diagnosed from fundus photographs of the eye; Abramoff et al. [146] used a custom CNN inspired by AlexNet and VGGNet to train a device (IDx-DR, version X2.1) on a dataset of 1.2 million DR images and recorded an AUC of 0.98. Figure 10 shows the classification of medical images. A few notable results from the literature are summarized in Table 3.
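Several results above are reported as an AUC score. As a minimal sketch, the AUC can be read as the fraction of (diseased, healthy) pairs in which the diseased case receives the higher model score; the labels and scores below are made-up toy values, and the pairwise loop is chosen for clarity rather than speed.

```python
def auc_score(labels, scores):
    """Area under the ROC curve via pairwise ranking; tied scores count as half a win."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy example: 1 = disease present, 0 = disease absent, with hypothetical model scores.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
auc = auc_score(labels, scores)  # 8 of the 9 positive/negative pairs are ranked correctly
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which is why it is a threshold-independent summary of a classifier's output scores.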

3.4 Image registration

Image registration spatially aligns images to a common anatomical space. Previously, image registration was done manually by clinical experts, but deep learning has changed this [176,177,178]. In practice, registration is a central step in medical imaging; it relies on aligning a source image with a target image and establishing accurate anatomical correspondences between them using transformations. In the main line of image registration work, both handcrafted and selected features are employed in a supervised manner. Wu et al. [179, 180] employed an unsupervised deep learning approach to learn the basis filters that represent image patches and to detect correspondences for image registration. Yang et al. [177] used an autoencoder architecture to predict the deformation in large deformation diffeomorphic metric mapping (LDDMM) for fast deformable image registration, and the results show improvements in computational time. Image registration is commonly employed in spinal surgery and neurosurgery to localize spinal bony landmarks or tumor landmarks, which facilitates spinal screw implantation or tumor removal. Miao et al. [181] trained a customized CNN on X-ray images to register 3D models of a hand implant and a knee implant onto 2D X-ray images for pose estimation. Table 4 gives an overview of medical image registration as an application of deep learning.

3.5 Image characterization

Characterization of a disease with deep learning is a stage of computer-aided diagnosis (CADx) systems. For example, radiomics is an extension of CAD systems to other tasks such as prognosis, staging, and determination of cancer subtypes. Characterization depends first on the disease type and on the clinical questions related to it. There are two ways to extract features: handcrafted feature extraction and deep learned features. In the former, radiomic features resemble the way a radiologist interprets and analyzes medical images; these features might include tumor size, texture, and shape. In the literature, handcrafted features are used for many purposes, such as assessing tumor aggressiveness, the probability of developing cancer in the future, and the probability of malignancy [190, 191]. There are two main categories of characterization: lesion characterization and tissue characterization. In deep learning applications of medical imaging, each computerized medical image requires some normalization and customization to suit the task and image modality. Conventional CAD is used for lesion characterization. For example, tracking the growth of lung nodules requires characterizing the nodules and their change over time, which helps reduce false positives in lung cancer diagnosis. Another example of tumor characterization is found in imaging genomics, where radiomic features are used as phenotypes for association analysis with genomics and histopathology. A good example is the multi-institutional collaborative report of the breast phenotype group through TCGA/TCIA [192,193,194]. Tissue characterization is examined when particular tumor areas are not relevant.
The main focus in this type of characterization is on healthy tissues that are susceptible to future disease, and on diffuse diseases such as interstitial lung disease and liver disease [195]. Deep learning has used conventional texture analysis for lung tissue. The characterization of lung patterns using patches can be informative about the disease, which is commonly interpreted by radiologists. Many researchers have employed DL models with different CNN architectures for interstitial lung disease classification characterized by lung tissue sores [149, 196]. CADx is not only a detection/localization task; it is a classification and characterization task as well. The output of a DL model is the likelihood of a disease subtype, together with a presentation of the characteristic features of the disease. For the characterization task, especially with limited datasets, CNN models are generally not trained from scratch; data augmentation is an essential tool for this application, and applying CNNs to dynamic contrast-enhanced MRI (DCE-MRI) is important too. For example, with VGG-19, researchers have used DCE-MRI temporal images, feeding the pre-contrast, first post-contrast, and second post-contrast MR images into the RGB channels. Antropova et al. [197] used maximum intensity projections (MIP) as input to their CNN model. Table 5 highlights some of the characterization literature, covering diagnosis and prognosis.
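A maximum intensity projection, as mentioned above, collapses a 3D volume into a 2D image by keeping the brightest voxel along one axis. The sketch below is a minimal illustration on toy data; projecting along the slice axis is an assumption made here, since the text does not state which axis the cited work projects along.

```python
def max_intensity_projection(volume):
    """Collapse a volume indexed as volume[slice][row][col] along the slice axis."""
    rows, cols = len(volume[0]), len(volume[0][0])
    return [[max(plane[r][c] for plane in volume) for c in range(cols)]
            for r in range(rows)]


# Toy volume with three 2 x 2 slices.
volume = [
    [[1, 5], [0, 2]],
    [[4, 3], [7, 1]],
    [[2, 6], [3, 9]],
]
mip = max_intensity_projection(volume)  # brightest value at each (row, col) position
```

The result is a single 2D image, which lets a CNN designed for 2D inputs consume information from the whole volume.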

3.6 Prognosis and staging

Prognosis and staging refer to predicting the future status of a disease. For example, after a cancer is identified, further assessment through biopsies tracks the stage, molecular type, and genomics, which finally provides information about prognosis and about further treatment options. Since most cancers are spatially heterogeneous, specialists and radiologists are interested in the information on spatial variation that medical imaging can provide. Many imaging biomarkers include only size and other simple enhancement measures; current investigators are therefore more interested in including radiomic features and extending the knowledge gained from medical images. Some deep learning analyses of cancerous tumors have been investigated for prognosis and staging [192, 206]. The goal of prognosis is to analyze medical images (MRI or ultrasound) of a cancer and obtain a better representation of it by deriving prognostic biomarkers from image phenotypes (e.g., size, margin morphology, texture, shape, kinetics, and variance kinetics). For example, Li et al. [192] found that texture phenotype enhancement can characterize the tumor pattern in MRI, which leads to prediction of the molecular classification of breast cancers; in other words, computer-extracted phenotypes show promise for discriminating breast cancer subtypes, which leads to distinct quantitative predictions in terms of precision medicine. Moreover, with the enhancement of the texture entropy, the vascular uptake pattern of the tumor became heterogeneous, which in turn reflects the heterogeneous nature of angiogenesis and the applicability of the treatment process; this is termed location-based virtual digital biopsy. Gonzalez et al. [204] applied DL to thoracic CT scans to predict the staging of chronic obstructive pulmonary disease (COPD). Hidenori et al. [207] used a CNN model for grading diabetic retinopathy and determining treatment and prognosis, which involves a retinal area that is not typically visualized on fundoscopy; their AI system suggests treatment and determines prognoses.

Another term related to staging and prognosis is survival and disease outcome prediction. Skrede et al. [208] applied DL to a large dataset of over 12 million pathology images to predict survival outcome for early-stage colorectal cancer. A common evaluation metric is the hazard function, which measures the risk to a patient after treatment; their results yield a hazard ratio of 3.84 for poor against good prognosis in a validation cohort of 1122 patients, and a hazard ratio of 3.04 after adjusting for prognostic markers that include the T and N stages. Saillard et al. [209] used deep learning to predict survival outcomes after hepatocellular carcinoma resection.

3.7 Medical imaging in COVID-19

COVID-19 was identified on 31 December 2019 [210], and its diagnosis is based on the polymerase chain reaction (PCR) test. However, it was found that the disease can also be analyzed and diagnosed through medical imaging, even though most radiology societies do not recommend this, because its imaging features are similar to those of various pneumonia diseases. Simpson et al. [211] examined the potential use of CT scans for clinical management and proposed four standard categories of COVID-19 reporting language. Mahmood et al. [212] studied 12,270 patients and recommended CT screening for early detection of COVID-19 to limit the rapid spread of the disease. Another approach to COVID-19 classification is portable chest X-ray (PCXR), which uses chest X-ray scans instead of expensive CT scans and has the potential to minimize the chances of spreading the virus. For the identification of COVID-19, Pereira et al. [152] followed this approach, using portable chest X-ray scans. To compare different screening methods, Soldati et al. [213] suggested that lung ultrasound (LUS) needs to be compared with chest X-ray and CT scans to help design a diagnostic system suited to the available technological resources. COVID-19 has attracted the attention of deep learning researchers, who have employed different DL models for the main tasks in diagnosing this disease, using different medical imaging modalities from different datasets. Starting with segmentation, Butt et al. [214] proposed a system for screening coronavirus disease that employs a 3D CNN architecture to segment multiple volumes of CT scans; a classification step categorizes patches into COVID-19 and other pneumonia diseases, such as influenza and viral pneumonia. A Bayesian function is then used to calculate the final analysis report. Wang et al. [215] applied their CNN model to chest X-ray images for extracting the feature map, classification, regression, and finally the mask needed for segmentation. Another DL model using chest X-ray scans was introduced by Murphy et al. [108], who used a U-Net architecture for detecting tuberculosis and finally classifying images, with an AUC of 0.81. For the detection of COVID-19, Li et al. [124] developed a deep learning tool to detect COVID-19 from CT scans; the work consists of a few steps, starting with extracting the lungs as the ROI using U-Net, then generating features using ResNet-50, and finally using a fully connected layer to generate the COVID-19 probability score; the final results reported an AUC of 0.96. Another system for detecting COVID-19 from X-rays and CT scans was proposed by Kassani et al. [126], who used multiple models: DenseNet-121 achieved an accuracy of 99% and ResNet achieved an accuracy of 98% after being trained by LightGBM, and they also used other backbone models such as MobileNet, Xception, Inception-ResNet-V2, NASNet, and VGGNet. For the classification of COVID-19, Wu et al. [150] used a fusion of DL networks, starting with segmenting lung regions in CT scans using a threshold-based method, then using ResNet-50 to extract the feature map, which is fed to a fully connected layer, recording an AUC of 0.732 and 70% accuracy. Ardakani et al. [216] compared ten DL models for the classification of COVID-19: AlexNet, VGG-16, VGG-19, GoogLeNet, MobileNet, Xception, ResNet-101, ResNet-18, ResNet-50, and SqueezeNet; ResNet-101 recorded the best results in terms of sensitivity. A few deep learning approaches that have been used for different applications of COVID-19 are listed in Tables 1, 2, 3.

4 Deep learning schemes

4.1 Data augmentation

Deep learning clearly performs better than traditional machine learning, shallow learning methods, and handcrafted feature extraction from images, because deep learning models learn image descriptors automatically. It is often possible to combine the deep learning approach with knowledge learned from handcrafted features when analyzing medical images [153, 200, 217]. The key requirement of deep learning is large-scale datasets that contain images from thousands of patients. Although vast amounts of clinical images, reports, and annotations are recorded and stored digitally in many hospitals, for example in Picture Archiving and Communication Systems (PACS) and Oncology Information Systems (OIS), in practice the availability of such large-scale datasets with semantic labels determines how efficient deep learning models are in medical imaging analysis. Because medical imaging suffers from a lack of data, data augmentation has been used to create new samples, either derived from the existing samples or produced by generative models. The augmented samples are merged with the original samples, so the size of the dataset increases along with the variation in the data points. Data augmentation is used by default with deep learning because it increases the number of training samples, which reduces the chance of overfitting, mitigates class imbalance in multi-class datasets, helps the models generalize, and improves the testing results. The basic data augmentation techniques are simple and widely adopted in medical imaging, such as cropping, rotating, flipping, shearing, scaling, and translating images [80, 218, 219]. Pezeshk et al. [220] proposed a mixing tool that can seamlessly merge a lesion patch into a CT scan or mammogram, so the lesion patches can be augmented using basic transformations of the lesion shape and characteristics before being inserted.
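The basic augmentation operations listed above (flipping, rotating, cropping) can be sketched on a small image stored as nested lists. This is an illustrative sketch only: the function names are hypothetical, and practical pipelines typically use an image library and apply the transformations randomly during training.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Reverse the order of the rows (top to bottom)."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate by 90 degrees: the first column, read bottom to top, becomes the first row."""
    return [list(column) for column in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Cut out a height x width window whose upper-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def augment(image):
    """Return the original image together with three transformed copies."""
    return [image, flip_horizontal(image), flip_vertical(image), rotate_90_clockwise(image)]


image = [[1, 2, 3],
         [4, 5, 6]]
samples = augment(image)  # one original sample becomes four training samples
```

For segmentation tasks the same geometric transformation has to be applied to the label mask as well, so that image and annotation stay aligned.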

Zhang et al. [221] used a DCNN to extract features and to obtain image representations and a similarity matrix; their data augmentation method is called unified learning of feature representation, their model was trained on a seed-labeled dataset, and the authors aimed to classify colonoscopy and upper endoscopy images. The second way to tackle limited datasets is to synthesize medical data, either using an object model or the physical principles of image formation, or using generative models, so that the synthetic data serve as usable medical examples and increase the performance of the deep learning task at hand. The most widely used models for synthesizing medical data are Generative Adversarial Networks (GANs); for example, in [143], GANs were used to generate lesion samples, which increased CNN performance in the classification of liver lesions. Yang et al. [222] used the Radon transform for objects under different modeled conditions, adding noise to the data to synthesize a CT dataset, and the trained CNN model estimates high-dose projections from low-dose ones. Synthesized medical images are used for different purposes; for example, Chen et al. [223] generated training data for noise reduction in reconstructed CT scans by synthesizing noisy projections from patient images and applying a deep learning algorithm. Cui et al. [224] used simulated dynamic PET data and stacked sparse autoencoders in a dynamic PET reconstruction framework.

4.2 Datasets

Deep learning models are known to be data hungry, and dataset quality has always been the key factor for learning computational models that provide trustworthy results. The task is more demanding when handling medical data, because high accuracy is required. Recently, many publicly available datasets have been released online for evaluating newly developed DL models. Several repositories provide useful compilations of public datasets (e.g., GitHub, Kaggle, and other webpages). Compared with the datasets for general computer vision tasks (thousands to millions of annotated images), medical imaging datasets are considered too small. According to the Conference on Machine Intelligence in Medical Imaging (C-MIMI) held in 2016 [225], ML and DL are starving for large-scale annotated datasets, and the white paper describes the most common rules and specifications (e.g., sample size, cataloging and discovery, pixel data, metadata, and post-processing) related to medical imaging datasets. The medical imaging community has therefore started to adopt different approaches for generating samples and increasing their number, such as generative models, data augmentation, and weakly supervised learning, to avoid overfitting on small datasets and to provide reliable end-to-end deep learning models. Martin et al. [226] described the fundamental steps for preparing medical imaging datasets for use in AI applications; Fig. 11 shows the flowchart of the process. They also listed the current limitations and problems of data availability for such datasets. Examples of popular databases for medical image analysis with deep learning are listed in [227].
In Table 6, we list the datasets most commonly used in the medical imaging literature with deep learning approaches.

4.3 Feature extraction and selection

Feature extraction converts the training data into as many informative features as possible to make deep learning algorithms more efficient and adequate. Common feature extractors for medical images include the Gray-Level Run Length Matrix (GLRLM), Local Binary Patterns (LBP), Local Tetra Patterns (LTrP), Completed Local Binary Patterns (CLBP), and the Gray-Level Co-Occurrence Matrix (GLCM); these techniques are applied before the main DL algorithm in different medical imaging tasks.

GLCM: a commonly used feature extractor that searches for textural patterns and their nature within gray-level gradients [234]. The main features extracted with this technique are autocorrelation, contrast, dissimilarity, correlation, cluster prominence, energy, homogeneity, variance, entropy, difference variance, sum variance, cluster shade, sum entropy, and information measure of correlation.
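A minimal sketch of how a GLCM and two of the listed features can be computed is given below. The horizontal offset, the non-symmetric counting, and the definition of energy as the sum of squared entries are assumptions made for this illustration; libraries and papers differ on these conventions.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized co-occurrence matrix for pixel pairs separated by the offset (dy, dx)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs")
    return [[value / total for value in row] for row in counts]


def contrast(p):
    """Weights each co-occurrence by the squared gray-level difference."""
    return sum((i - j) ** 2 * p[i][j] for i in range(len(p)) for j in range(len(p)))


def energy(p):
    """Sum of squared entries (some libraries report the square root of this value)."""
    return sum(value ** 2 for row in p for value in row)


# Toy image with 4 gray levels (0..3).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
p = glcm(image, levels=4)          # 12 horizontal neighbor pairs in total
texture_contrast = contrast(p)     # 7 / 12 for this image
texture_energy = energy(p)         # 24 / 144 for this image
```

Smooth regions concentrate the matrix on its diagonal (low contrast, high energy), whereas busy textures spread it out, which is what makes these scalars usable as texture features.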

LBP: another well-known feature extractor, which uses local regional statistical features [235]. The main idea of this technique is to select a central pixel and binary-encode the pixels along a circle around it: a pixel is encoded as 0 if its value is less than that of the central pixel and as 1 if its value is greater. These binary codes are then converted to decimal numbers and collected in a histogram.
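The LBP encoding described above can be sketched as follows. Two simplifications are assumed here: the 8 immediate neighbors of a 3 x 3 window stand in for the circular neighborhood, and a neighbor equal to the center is encoded as 1 (the text does not specify the tie case). The bit ordering is also an arbitrary choice of this sketch.

```python
# Offsets (row, col) of the 8 neighbors, clockwise starting at the top-left pixel.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, r, c):
    """Binary-encode the neighbors of pixel (r, c) and return the code as a decimal number."""
    center = image[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBORS):
        if image[r + dr][c + dc] >= center:  # ties are encoded as 1 (assumption)
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Histogram of LBP codes over all pixels that have a full 3 x 3 neighborhood."""
    histogram = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            histogram[lbp_code(image, r, c)] += 1
    return histogram


patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
code = lbp_code(patch, 1, 1)       # bits 0, 4, 5, 6, 7 are set: 1 + 16 + 32 + 64 + 128
histogram = lbp_histogram(patch)   # a 3 x 3 patch contributes exactly one code
```

The 256-bin histogram, rather than the individual codes, is what is usually passed on as the texture descriptor of an image or region.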

Gray-Level Run Length Matrix (GLRLM): this method extracts higher-order statistical texture features. For a maximum number of gray levels G, the image is re-quantized before the matrix is accumulated. Each entry of the GLRLM counts the runs of a given gray level and a given run length along a chosen direction, where (u,v) refers to the indices of the array values, Nr refers to the maximum gray-level value, and Kmax is the maximum run length.
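Since a GLRLM counts runs of equal gray levels, a minimal sketch of the counting step may help. It follows the common definition in which entry [g][k - 1] is the number of runs of gray level g with length k; only the horizontal direction is handled, and the function name and toy image are hypothetical.

```python
def glrlm_horizontal(image, levels):
    """Run-length matrix for horizontal runs: matrix[g][k - 1] counts runs of level g, length k."""
    max_run = len(image[0])  # a run cannot be longer than one image row
    matrix = [[0] * max_run for _ in range(levels)]
    for row in image:
        run_value, run_length = row[0], 1
        for value in row[1:]:
            if value == run_value:
                run_length += 1
            else:
                matrix[run_value][run_length - 1] += 1
                run_value, run_length = value, 1
        matrix[run_value][run_length - 1] += 1  # close the last run of the row
    return matrix


# Toy image with 4 gray levels (0..3).
image = [[0, 0, 1, 1],
         [0, 0, 0, 2],
         [1, 2, 2, 2],
         [3, 3, 3, 3]]
runs = glrlm_horizontal(image, levels=4)
```

Long runs indicate coarse texture and many short runs indicate fine texture; scalar features such as short-run or long-run emphasis are then derived from this matrix.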

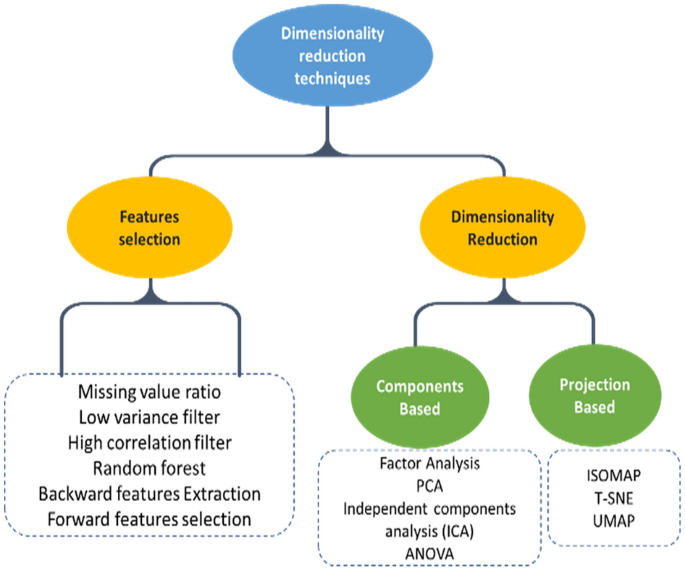

Raj et al. [236] used both GLCM and GLRLM as the main feature extraction techniques for extracting the optimal features from pre-processed medical images; these optimal features improved the final results of the classification task. Figure 12 shows the feature extraction and selection types used for dimensionality reduction.

4.3.1 Feature selection techniques

Analysis of Variance (ANOVA): a statistical model that evaluates and compares the averages of two or more experiments. The idea behind this model is to test whether the differences between means are substantial [237]. Surendiran et al. [238] used stepwise ANOVA discriminant analysis (DA) for classifying mammogram masses.

The basic steps of performing ANOVA on a data distribution are:

1. Defining the hypothesis.

2. Calculating the sum of squares. It is used to determine the dispersion of the datapoints and can be written as

$$\mathrm{Sum\ of\ Squares}=\sum_{i=1}^{n}{({X}_{i}-\overline{X})}^{2}.$$ (5)

ANOVA performs an F test to compare the variance between groups with the variance within groups. This can be done using the total sum of squares, which is defined from the distance of each point from the grand mean $\overline{X}$.

3. Determining the degrees of freedom.

4. Calculating the F value.

5. Accepting or rejecting the null hypothesis.
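The ANOVA steps above can be sketched for the one-way case as follows. The three groups are made-up toy measurements and the function name is hypothetical; the final accept/reject decision is left to a comparison with a critical value from an F table, which is not computed here.

```python
def one_way_anova_f(groups):
    """Return (F value, between-group df, within-group df) for a list of sample groups."""
    all_values = [value for group in groups for value in group]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(group) / len(group) for group in groups]

    # Sum of squares between groups: spread of the group means around the grand mean.
    ss_between = sum(len(group) * (mean - grand_mean) ** 2
                     for group, mean in zip(groups, group_means))
    # Sum of squares within groups: spread of each point around its own group mean.
    ss_within = sum((value - mean) ** 2
                    for group, mean in zip(groups, group_means) for value in group)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Toy feature values measured in three classes.
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_value, df_between, df_within = one_way_anova_f(groups)  # F = (26 / 2) / (6 / 6) = 13
```

For feature selection, an F value is computed per feature in this way, and features with larger F values (class means that differ strongly relative to the within-class spread) are ranked as more discriminative.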

Principal Component Analysis (PCA): considered the most widely used tool for extracting structural features from potentially high-dimensional datasets. It extracts the q eigenvectors associated with the q largest eigenvalues of the input distribution. PCA produces new features that are uncorrelated with one another. The main goal of PCA is to apply a linear transformation that yields a new set of samples y whose components are uncorrelated [239]. The linear transform is given as follows:

where x is the input element vector ∈ RI, after that the PCA algorithm will choose the most significant components (y), and the main steps to do this are summarized as follows:

1. Standardizing and normalizing the datapoints, after calculating the mean and standard deviation of the input distribution:

$${X}_{\mathrm{new}}=\frac{X-\mathrm{mean}(X)}{\mathrm{std}(X)}.$$(7)

2. Calculating the covariance matrix from the input datapoints:

$$C\left[i,j\right]=\mathrm{cov}\left({x}_{i},{x}_{j}\right).$$(8)

3. Extracting the eigenvalues and eigenvectors from the covariance matrix:

$$C=V\Sigma {V}^{-1}.$$(9)

4. Choosing the k eigenvectors with the highest eigenvalues by sorting the eigenvalues and eigenvectors, where k refers to the number of dimensions retained in the reduced dataset:

$${\Sigma }_{\mathrm{sort}}=\mathrm{sort}(\Sigma ),\quad {V}_{\mathrm{sort}}=\mathrm{sort}(V,{\Sigma }_{\mathrm{sort}}).$$(10)
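The PCA steps above can be sketched as follows. To keep the example dependent on the Python standard library only, it finds the leading eigenpairs with power iteration and deflation in place of a full eigendecomposition; this substitution, the function names, and the small two-feature dataset are assumptions made for illustration.

```python
import math


def standardize(rows):
    """Step 1: zero mean and unit (sample) standard deviation per feature."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
            for j in range(d)]
    return [[(r[j] - means[j]) / stds[j] for j in range(d)] for r in rows]


def covariance(rows):
    """Step 2: C[i][j] = cov(x_i, x_j); rows must already be centred."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]


def mat_vec(m, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def top_eigenpairs(c, k, iters=200):
    """Steps 3-4: the k largest eigenpairs, returned in descending order."""
    d = len(c)
    c = [row[:] for row in c]  # work on a copy of the covariance matrix
    pairs = []
    for _ in range(k):
        v = [1.0 / (j + 1) for j in range(d)]  # arbitrary start vector
        for _step in range(iters):
            w = mat_vec(c, v)
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        eigenvalue = sum(a * b for a, b in zip(v, mat_vec(c, v)))
        pairs.append((eigenvalue, v))
        # Deflation: subtract the component just found from the matrix.
        for i in range(d):
            for j in range(d):
                c[i][j] -= eigenvalue * v[i] * v[j]
    return pairs


def project(rows, pairs):
    """Map each standardized sample onto the retained eigenvectors."""
    return [[sum(a * b for a, b in zip(r, v)) for _, v in pairs] for r in rows]


# Hypothetical two-feature dataset, reduced to k = 1 dimension.
data = [[2.5, 2.4], [0.5, 0.7], [2.2, 2.9], [1.9, 2.2], [3.1, 3.0],
        [2.3, 2.7], [2.0, 1.6], [1.0, 1.1], [1.5, 1.6], [1.1, 0.9]]
z = standardize(data)
pairs = top_eigenpairs(covariance(z), k=1)
reduced = project(z, pairs)
print(pairs[0][0], reduced[0])
```

Because the data are standardized, the covariance matrix equals the correlation matrix and its eigenvalues sum to the number of features, so each eigenvalue divided by that number gives the fraction of variance a component explains. Power iteration assumes the start vector is not orthogonal to the leading eigenvector and that k does not exceed the rank of the covariance matrix; a library eigensolver would normally be preferred in practice.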

Another major use of the PCA algorithm is feature dimensionality reduction.

In medical imaging, PCA has mostly been used for dimensionality reduction. Wu et al. [240] used a PCA-based nearest-neighbor method to estimate the local structure distribution and extract the entire connected tree; on retinal fundus data, they achieved state-of-the-art results by producing more information about the tree structure.

PCA has also been used as a data augmentation process before training a discriminative CNN for different medical imaging tasks; different algorithms were compared for performing data augmentation that captures the important characteristics of natural images [241] (Fig. 12).

4.4 Evaluation metrics

To evaluate and measure the performance of deep learning models on medical images, different evaluation metrics are used according to specific rules and criteria. Some metrics are tied to specific tasks: for example, the Dice score and F1-score are mostly used for segmentation, while accuracy and sensitivity are mostly used for classification. Here, we focus on the performance metrics most used in the literature and cover the metrics mentioned in our comparison tables.

1. The Dice coefficient is the most widely used metric for validating medical image segmentation. It is also common to use the Dice score to measure reproducibility [242]. The general formula for calculating the Dice coefficient is

$$\mathrm{Dice}=\frac{2\left|{S}_{g}^{1}\cap {S}_{t}^{1}\right|}{\left|{S}_{g}^{1}\right|+\left|{S}_{t}^{1}\right|}=\frac{2\mathrm{TP}}{2\mathrm{TP}+\mathrm{FP}+\mathrm{FN}}.$$(11)

2. Jaccard index (similarity coefficient) [JAC]:

The Jaccard index is a statistical metric used for finding the similarity between sample sets. It is defined as the ratio between the size of the intersection and the size of the union of the sample sets, and the mathematical formula is given by

$$\mathrm{JAC}=\frac{\left|{S}_{g}^{1}\cap {S}_{t}^{1}\right|}{\left|{S}_{g}^{1}\cup {S}_{t}^{1}\right|}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FP}+\mathrm{FN}}.$$(12)

From the formula above, we note that the JAC index is never greater than the Dice score, and the relation between the two metrics is defined by

$$\mathrm{JAC}=\frac{\left|{S}_{g}^{1}\cap {S}_{t}^{1}\right|}{\left|{S}_{g}^{1}\cup {S}_{t}^{1}\right|}=\frac{2\left|{S}_{g}^{1}\cap {S}_{t}^{1}\right|}{2\left(\left|{S}_{g}^{1}\right|+\left|{S}_{t}^{1}\right|-\left|{S}_{g}^{1}\cap {S}_{t}^{1}\right|\right)}=\frac{\mathrm{DICE}}{2-\mathrm{DICE}}.$$(13)

3. True-Positive Rate (TPR):

Also called sensitivity or recall, the TPR measures the proportion of positive voxels in the ground truth that are also identified as positive by the segmentation process. It is used when the goal is to maximize the detection of a particular class. It is given by the formula

$$\mathrm{TPR}=\mathrm{Sensitivity}=\mathrm{Recall}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}.$$(14)

4. True-Negative Rate (TNR):

Also called specificity, the TNR measures the proportion of negative voxels (background) in the ground truth that are also identified as negative by the segmentation process, and it is given by the formula

$$\mathrm{TNR}=\mathrm{Specificity}=\frac{\mathrm{TN}}{\mathrm{TN}+\mathrm{FP}}.$$(15)

However, neither TNR nor TPR is commonly used for medical image segmentation, due to their sensitivity to segment size.

5. Accuracy [ACC]:

Accuracy measures how good the DL model is at predicting the right labels (ground truth). Accuracy is commonly used to validate the classification task, and it is given by the formula

$$\mathrm{ACC}=\frac{\mathrm{number\ of\ correct\ predictions}}{\mathrm{total\ number\ of\ predictions}}=\frac{\mathrm{TP}+\mathrm{TN}}{\mathrm{TP}+\mathrm{TN}+\mathrm{FP}+\mathrm{FN}}.$$(16)

6. F1-score:

It is used to balance precision and recall; the F1-score is the harmonic mean of the precision and recall values, and it is given by the formula

$${F}_{1}={\left(\frac{{\mathrm{recall}}^{-1}+{\mathrm{precision}}^{-1}}{2}\right)}^{-1}=2\cdot \frac{\mathrm{precision}\cdot \mathrm{recall}}{\mathrm{precision}+\mathrm{recall}}.$$(17)

The predictive accuracy of a classification model is related to the F1-score: a higher F1-score indicates better predictive performance of the classification model.

7. F-beta score:

It combines the advantages of the precision and recall metrics when False Negatives (FN) and False Positives (FP) do not carry equal importance. The F-beta score extends the F1-score formula with an adjustable parameter (beta), where beta = 1 recovers the F1-score, and the formula becomes

$${F}_{\beta }=\left(1+{\beta }^{2}\right)\cdot \frac{\mathrm{precision}\cdot \mathrm{recall}}{\left({\beta }^{2}\cdot \mathrm{precision}\right)+\mathrm{recall}}.$$(18)

This evaluation metric measures the effectiveness of a DL model for a user who attaches beta times as much importance to recall as to precision.

8. AUC-ROC:

The Receiver-Operating Characteristic (ROC) curve is a graph of the True-Positive Rate (TPR) (sensitivity) against the False-Positive Rate (FPR) (1 - specificity) that shows the performance of a classification model; the plot is traced out at different classification thresholds. The biggest advantage of the ROC curve is its independence from changes in the number of responders and the response rate.

AUC is the area under the ROC curve: it measures the two-dimensional area under the curve, that is, the integral of the ROC curve from (0,0) to (1,1). The AUC measures the aggregate performance of classification at all possible thresholds. One way to understand the AUC is as the probability that the model ranks a random positive sample higher than a random negative sample. The ROC curve is shown in Fig. 13.
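The metrics listed above can be computed directly from binary labels, as in the following minimal sketch. The function names, the toy labels, and the scores are assumptions made for illustration; the AUC is computed from its probabilistic interpretation (the fraction of positive-negative pairs ranked correctly, with ties counted as one half), and the code assumes both classes occur and at least one sample is predicted positive.

```python
def confusion_counts(truth, pred):
    """Count TP, FP, FN, TN for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(truth, pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def evaluation_metrics(truth, pred, beta=1.0):
    """Return the metrics of Eqs. (11), (12), and (14)-(18) as a dictionary."""
    tp, fp, fn, tn = confusion_counts(truth, pred)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "tpr": recall,
        "tnr": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "f1": 2 * precision * recall / (precision + recall),
        "f_beta": (1 + beta ** 2) * precision * recall
                  / (beta ** 2 * precision + recall),
    }


def auc(truth, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for t, s in zip(truth, scores) if t == 1]
    neg = [s for t, s in zip(truth, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical ground truth, thresholded predictions, and raw model scores.
truth = [1, 1, 1, 0, 0, 0, 0, 1]
pred = [1, 1, 0, 0, 0, 1, 1, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.7, 0.85]

metrics = evaluation_metrics(truth, pred, beta=2.0)
print(metrics)
print(auc(truth, scores))
```

With TP = 3, FP = 2, FN = 1, and TN = 2, the Dice score is 2/3 and the Jaccard index is 1/2, which agrees with the relation JAC = DICE / (2 - DICE) in Eq. (13). For binary masks the Dice score and the F1-score coincide, as the example also shows.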

5 Discussion and conclusion

5.1 Technical challenges

In this overview paper, we have presented a review of the literature on deep learning applications in medical imaging. The paper comprises three main parts. First, we presented the core deep learning concepts, highlighting the basic frameworks used in medical image analysis. The second part covers the main applications of deep learning in medical imaging (e.g., segmentation, detection, classification, and registration), for which we presented a comprehensive review of the literature. The papers in our overview were selected as the most cited, the most recent (from 2015 to 2021), and those with the best results. The third part focuses on the challenges facing deep learning and the future directions for addressing them. Besides focusing on the quality of the most recent works, we have highlighted suitable solutions for different challenges in this field and the future directions drawn from different scientific perspectives.

Medical imaging can benefit from other fields of deep learning through collaborative research with the computer vision community; this collaboration also helps to overcome the lack of medical datasets by means of transfer learning. Cho et al. [243] addressed the question of how large a medical dataset must be to train a deep learning model. Creating synthetic medical images with Variational Autoencoders (VAEs) and GANs is another solution for tackling the limited availability of labeled medical data. For instance, Guibas et al. [244] successfully used two GANs to segment and then generate new retinal fundus images. Other applications of GANs for segmentation and synthetic data generation are reported in [132, 245].

Data or class imbalance [246] is considered a critical problem in medical imaging. It refers to training sets that are skewed toward non-pathological images: rare diseases have fewer training examples, which causes imbalanced data and leads to incorrect results. Data augmentation is a good solution, because it increases the number of samples in the small classes. Apart from dataset-level strategies, there are algorithm-level modification strategies that improve the performance of DL models under data imbalance [247].
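As one illustration of an algorithm-level strategy, the loss contribution of each class can be weighted by its inverse frequency so that rare classes are not ignored during training. The sketch below is an assumed, commonly used heuristic and is not necessarily the approach of [247]; the function name and the label counts are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * m) for c, m in counts.items()}


# Hypothetical training set skewed toward non-pathological images.
labels = ["normal"] * 90 + ["lesion"] * 10
weights = inverse_frequency_weights(labels)
print(weights)
```

Here each lesion sample receives a weight of 5.0 and each normal sample a weight of about 0.56, so both classes contribute equally to a weighted loss in aggregate.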

Another important, non-technical challenge is the public acceptance of results that are analyzed by DL models rather than by humans. In some papers covered in our review, DL models have outperformed medical specialists (e.g., dermatologists and radiologists), mostly in image recognition tasks. Yet a question of moral culpability may arise whenever a patient is mistakenly diagnosed, and cases of morbidity may also arise from DL-based diagnostics, since the workings of a DL algorithm are considered a black box. However, the continued development and evolution of DL models may come to play a major role in medicine, as they already do in various facets of our lives.

From a clinical perspective, AI systems have started to emerge in hospitals. Bruijne [248] presented five challenges facing machine learning, the broader family to which deep learning belongs, in the medical imaging field, including the preprocessing of data from different modalities, the translation of results to clinical practice, improving access to medical data, and training models with little training data. These challenges in turn point to future directions for improving DL models. Other solutions for small datasets were reported in [8, 249].

The architecture of a DL model was found not to be the only factor that determines the quality of results; data augmentation and preprocessing techniques are also substantial tools for a robust and efficient system. The big question is how to make the best use of the results of DL models for medical image analysis in the community.

Considering the historical development of ML techniques in medical imaging gives us a perspective on how DL models will continue to improve in the same field. Accordingly, the quality of medical images and of data annotations is crucial for proper analysis. A significant concept is the relation between statistical sense and clinical sense: although statistical analysis is quite important in research, researchers in this field should not lose sight of the clinical perspective. In other words, even when a good CNN model provides good answers from the statistical perspective, this does not mean that it will replace a radiologist, even after using all the supporting techniques such as data augmentation and adding more layers to obtain better accuracy.

5.2 Future promises

After reviewing the literature and the most pressing challenges facing deep learning in medical imaging, we conclude that, according to most researchers, three aspects will probably carry the DL revolution: the availability of large-scale datasets, advances in deep learning algorithms, and the computational power for data processing and evaluation of DL models. Thus, most DL techniques are directed at these aspects to improve DL performance further. Moreover, investigations are needed to improve data harmonization, to develop the standards required for reporting and evaluation, and to increase the accessibility of larger annotated data such as public datasets, which lead to better independent benchmarks. One interesting application in medical imaging was proposed by Nie et al. [250], who used GANs to generate brain CT scans from MRI images; such work reduces the risk of exposing patients to ionizing radiation from CT scanners and thus preserves patients' safety. Another significant perspective is increasing the resolution and quality of medical images and reducing the blurriness of CT scans and MRI images, which means obtaining higher resolution and better results at lower cost, for example from scanners with lower field strength [251].

The new technology trends in deep learning are concerned with medical data collection. Wearable technologies are attracting new research interest, as they offer flexibility, real-time monitoring of patients, and immediate communication of the collected data. Once such data become available, deep learning and AI will begin to apply unsupervised data exploration, which in turn will provide better analytical power and suggest better treatment methodologies in healthcare. In summary, the new trends of AI in healthcare pass through stages: the quality of performance (QoP) of deep learning will lead to standardization in wearable technology, which represents the next stage of healthcare applications and personalized treatment. Diagnosis and treatment depend on specialists, but with deep learning, small changes and signs in the human body can be seen and early detection becomes possible, which in turn allows treatment to begin at the pre-stage of a disease. DL model optimization mainly focuses on the network architecture, whereas optimization in the broader sense also covers the other parts of DL (e.g., optimizers, loss functions, preprocessing, and post-processing) and their standardization. In many cases, medical images alone are not sufficient for a better diagnosis, and other data must be combined with them (e.g., historical medical reports, genetic information, lab values, and other non-image data); linking and normalizing these data with medical images will lead to better and more accurate diagnosis of diseases through analysis of the data in higher dimensions.

References

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015). https://doi.org/10.1038/nature14539