Abstract

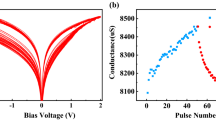

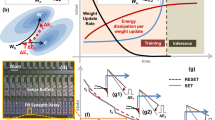

Traditional (von Neumann) computing architectures, which must transfer data between off-chip memory and the processor, consume a large amount of energy when running machine learning (ML) models. Memristive synaptic devices are employed to eliminate this energy inefficiency when solving cognitive tasks. However, the performance of energy-efficient neuromorphic systems, though promising, still needs to be improved in terms of accuracy and test error rate for classification applications. Improving the accuracy of such ML models depends on optimising learning parameters at every level, from the device to the algorithm. To this end, this paper considers Adadelta, an adaptive learning rate technique, to achieve more accurate results by reducing the loss, and compares the accuracy, test error rates, and energy consumption of the stochastic gradient descent (SGD), Adagrad and Adadelta optimisation methods integrated into an Ag:a-Si synaptic device-based neural network model. The experimental results demonstrate that Adadelta improved the accuracy of the hardware-based neural network model by up to 4.32% compared with the Adagrad method. Adadelta achieved the best accuracy of 94%, while default gradient descent (DGD) and SGD achieved 68.11% and 75.37%, respectively. These results show that selecting a proper optimisation method is vital for improving the performance, particularly the accuracy and test error rate, of neural network models based on neuro-inspired nano-synaptic devices.
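
The abstract contrasts the SGD, Adagrad and Adadelta update rules. The sketch below writes out the three textbook rules and applies them to a hypothetical two-parameter quadratic loss; it is not the paper's implementation and is not coupled to any model of the Ag:a-Si device. The curvature values, starting point, learning rates and step count are illustrative assumptions, and ρ = 0.95, ε = 1e-6 are commonly used Adadelta settings. Because the toy uses exact gradients rather than sampled mini-batches, the `SGD` class here reduces to plain fixed-learning-rate gradient descent.

```python
"""Toy comparison of the SGD, Adagrad and Adadelta update rules.

Illustrative sketch only: the quadratic loss, starting point, learning
rates and step count are hypothetical and unrelated to the Ag:a-Si model.
"""
import math
from typing import List, Optional

Vector = List[float]

# Hypothetical ill-conditioned loss f(w) = 0.5 * sum(a_i * w_i**2).
CURVATURE: Vector = [1.0, 10.0]


def loss(w: Vector) -> float:
    return 0.5 * sum(a * x * x for a, x in zip(CURVATURE, w))


def grad(w: Vector) -> Vector:
    return [a * x for a, x in zip(CURVATURE, w)]


class SGD:
    """Fixed global step: w <- w - lr * g."""

    def __init__(self, lr: float = 0.05) -> None:
        self.lr = lr

    def step(self, w: Vector, g: Vector) -> Vector:
        return [x - self.lr * gi for x, gi in zip(w, g)]


class Adagrad:
    """Per-coordinate step lr / sqrt(sum of all past g**2); it can only shrink."""

    def __init__(self, lr: float = 0.5, eps: float = 1e-8) -> None:
        self.lr, self.eps = lr, eps
        self.g2_sum: Optional[Vector] = None  # accumulated squared gradients

    def step(self, w: Vector, g: Vector) -> Vector:
        if self.g2_sum is None:
            self.g2_sum = [0.0] * len(w)
        self.g2_sum = [s + gi * gi for s, gi in zip(self.g2_sum, g)]
        return [x - self.lr * gi / (math.sqrt(s) + self.eps)
                for x, gi, s in zip(w, g, self.g2_sum)]


class Adadelta:
    """dx = -RMS[dx]_{t-1} / RMS[g]_t * g, using decaying averages and no global lr."""

    def __init__(self, rho: float = 0.95, eps: float = 1e-6) -> None:
        self.rho, self.eps = rho, eps
        self.eg2: Optional[Vector] = None   # running average of g**2
        self.edx2: Optional[Vector] = None  # running average of dx**2

    def step(self, w: Vector, g: Vector) -> Vector:
        if self.eg2 is None or self.edx2 is None:
            self.eg2, self.edx2 = [0.0] * len(w), [0.0] * len(w)
        new_w = []
        for i, (x, gi) in enumerate(zip(w, g)):
            self.eg2[i] = self.rho * self.eg2[i] + (1 - self.rho) * gi * gi
            dx = -math.sqrt(self.edx2[i] + self.eps) / math.sqrt(self.eg2[i] + self.eps) * gi
            self.edx2[i] = self.rho * self.edx2[i] + (1 - self.rho) * dx * dx
            new_w.append(x + dx)
        return new_w


def run(optimizer, w0: Vector, steps: int = 200) -> float:
    """Apply `steps` full-gradient updates starting from w0 and return the final loss."""
    w = list(w0)
    for _ in range(steps):
        w = optimizer.step(w, grad(w))
    return loss(w)


if __name__ == "__main__":
    w0 = [3.0, -2.0]
    print(f"initial loss: {loss(w0):.4f}")
    for opt in (SGD(), Adagrad(), Adadelta()):
        print(f"{type(opt).__name__:>8}: loss after 200 steps = {run(opt, w0):.6g}")
```

Adagrad's effective step lr / sqrt(Σg²) can only decrease as squared gradients accumulate; Adadelta replaces that sum with a decaying average and scales each step by the RMS of past updates, so no global learning rate has to be tuned. Which optimiser wins on this toy depends entirely on the assumed hyperparameters, so it says nothing about the accuracy figures reported in the abstract.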

Data Availability

The data that support the findings of this study are not openly available for reasons of sensitivity but are available from the corresponding author upon reasonable request.

Abbreviations

- ACC: Accuracy
- Adadelta: Adaptive delta
- Adagrad: Adaptive gradient
- Ag:a-Si: Silver (Ag) and amorphous silicon (a-Si)
- ANN: Artificial neural network
- BL: Bit line
- CMOS: Complementary metal-oxide-semiconductor
- DGD: Default gradient descent
- eNVM: Embedded non-volatile memory
- FeFET: Ferroelectric field-effect transistor
- FFNN: Feed-forward neural network
- HfZrOx: Hafnium (Hf), zirconium (Zr), and oxygen (O)
- HZO: Hafnium zirconium oxide
- LR: Learning rate
- LTD: Long-term depression
- LTP: Long-term potentiation
- ML: Machine learning
- MNIST: Modified National Institute of Standards and Technology
- NH: Number of hidden neurons
- NVM: Non-volatile memory
- SGD: Stochastic gradient descent
- WL: Word line

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest in this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yilmaz, Y. Accuracy improvement in Ag:a-Si memristive synaptic device-based neural network through Adadelta learning method on handwritten-digit recognition. Neural Comput & Applic 35, 23943–23958 (2023). https://doi.org/10.1007/s00521-023-08995-y

DOI: https://doi.org/10.1007/s00521-023-08995-y