Abstract

The purpose of this review is to present a comprehensive overview of the theory of ensemble Kalman–Bucy filtering for continuous-time, linear-Gaussian signal and observation models. We present a system of equations that describe the flow of individual particles and the flow of the sample covariance and the sample mean in continuous-time ensemble filtering. We consider these equations and their characteristics in a number of popular ensemble Kalman filtering variants. Given these equations, we study their asymptotic convergence to the optimal Bayesian filter. We also study in detail some non-asymptotic time-uniform fluctuation, stability, and contraction results on the sample covariance and sample mean (or sample error track). We focus on testable signal/observation model conditions, and we accommodate fully unstable (latent) signal models. We discuss the relevance and importance of these results in characterising the filter’s behaviour, e.g. it is signal tracking performance, and we contrast these results with those in classical studies of stability in Kalman–Bucy filtering. We also provide a novel (and negative) result proving that the bootstrap particle filter cannot track even the most basic unstable latent signal, in contrast with the ensemble Kalman filter (and the optimal filter). We provide intuition for how the main results extend to nonlinear signal models and comment on their consequence on some typical filter behaviours seen in practice, e.g. catastrophic divergence.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider a time-invariant, continuous-time, signal and observation model of the form,

where \(\mathscr {X}_t\) is the underlying signal (latent) process, \(\mathscr {Y}_t\) is the observation signal, \(a(\cdot )\) and \(h(\cdot )\) are the signal and sensor model functions, and \(\mathscr {V}_t\) and \(\mathscr {W}_t\) are continuous-time Brownian motion (noise) signals. The filtering problem [4, 9] is concerned with estimating some statistic(s) of the signal \(\mathscr {X}_t\) conditioned on the observations \(\mathscr {Y}_s\), \(0\le s\le t\). For example, one may want to characterise fully the distribution of \(\mathscr {X}_t\) given \(\mathscr {Y}_t\), or one may seek some moments of this distribution. The conditional distribution of \(\mathscr {X}_t\) given \(\mathscr {Y}_s\), \(0\le s\le t\) is called the (optimal, Bayesian) filtering distribution. When the model functions \(a(\cdot )\), \(h(\cdot )\) are linear, the exact (optimal, Bayesian) solution to this problem is completely characterised by the first two moments of the filtering distribution and these moments are given by the celebrated Kalman–Bucy filter [4, 16, 77].

Apart from the most special of nonlinear models, there is in general no finite dimensional optimal filter [9, 12]. In practice, some filter approximations are needed. For example, one may consider a type of “extended” Kalman filter [4] based on linearisation of the nonlinear model and application of the classical Kalman–Bucy filter. This method works well in suitably regular, and sufficiently close to linear problems. This method does not handle well multiple modes in the true filtering distribution. So-called Gaussian-sum filters are another Kalman-filter-type/based approximation designed to handle in some sense multiple modes in the filtering distribution [4]. More recently, there has been some focus on Monte Carlo integration methods for approximating the optimal Bayesian filter [9, 47]. Such methods, termed particle filters or sequential Monte Carlo filters/methods [56, 57, 63], have the advantage of not being subject to the assumption of linearity or Gaussianity in the model. These particle filters are consistent in the number of Monte Carlo samples, i.e. with infinite computational power these methods converge to the optimal nonlinear filter. However, typical particle filtering algorithms exhibit high computational costs with approximation errors that grow (with a fixed sample size) with the signal/observation dimensions [47, 118]. These methods are not scalable to the high-dimensional filtering or state estimation problems found in the geosciences and other areas [60, 78, 97, 121].

The ensemble Kalman–Bucy filter (generally abbreviated EnKF) [59, 60] is a type of Monte Carlo sample approximation of a class of linear (in the observations) filter in the spirit of the Kalman filter. The EnKF is a recursive algorithm for propagating and updating the sample mean and sample covariance of an approximated Bayesian filter [60]. The filter works via the evolution of a collection (i.e. an ensemble) of samples (i.e. ensemble members, or particles) that each satisfies a type of Kalman–Bucy update equation, linear in the observations. In classical Kalman–Bucy filtering [4, 16, 77], a gain function, that depends on the filter error covariance, is used to weight a predicted state estimate with the signal observations, see [16, 60]. In the EnKF, the error covariance in the gain function is replaced by a type of sample covariance. The result is a system of interacting particles in the spirit of a mean-field approximation of a certain McKean–Vlasov-type diffusion equation [53, 107]. We may refine this discussion by giving the relevant equations for a most basic form of EnKF. Let \((\mathcal {V}^i_t,\mathcal {W}^i_t,\mathcal {X}_0^i)\) with \({1\le i\le \textsf{N}+1}\) be \((\textsf{N}+1)\) independent copies of \((\mathscr {V}_t,\mathscr {W}_t,\mathscr {X}_0)\). The most basic ensemble Kalman filter, originally due to Evensen [34, 59, 60], is defined by,

with \(1\le i\le \textsf{N}+1\) and the (particle) sample mean and the sample cross-covariance defining the so-called Kalman gain matrix given by,

and we may also write the standard sample covariance,

In this work, we study this most basic ensemble Kalman filter as described above, and also more sophisticated variants, including the method of Sakov and Oke [125], that exhibit less fluctuation due to sampling noise. Readers familiar with the Kalman filter will recognise immediately some structural similarities as discussed above. However, there is no evolution equation given above for the covariance as in the Kalman filter (e.g. no Riccati-type matrix flow equation). Instead, we replace the relevant covariance matrices with their sample-based counterparts.

Importantly, if the underlying model is linear and Gaussian, then the filtering distribution is Gaussian, and the EnKF propagates exactly the sample mean and covariance of the optimal Bayesian filter and is provably consistent. If the model is nonlinear and/or non-Gaussian, then a standard implementation of the EnKF propagates a sample-based estimate of the filtering mean and covariance (but not the true posterior sample mean or covariance and with no results on consistency). In the context of estimation theory, we may contrast the notion of a state estimator (or observer) with the notion of a Bayesian filter. The goal of the former is to design an observer that tracks in some suitable (typically point-wise) sense the underlying signal and perhaps provides some usable measure of uncertainty on this estimate. The goal of the latter is to compute or approximate the true (Bayesian) filtering distribution (or some related statistics). In the nonlinear setting, even with infinite computational power, the EnKF methods do not converge to the optimal nonlinear filter; and indeed their limiting objects are not well understood in this setting. As discussed more technically later, ensemble Kalman filters are probably best viewed in practice as a type of (random) sample-based state estimator for nonlinear signal/observation models. However, in the special case of linear signal and observation models they are indeed provably consistent approximations of the optimal Bayesian filter.

In practice, the ensemble Kalman filtering methodology is applied in high-dimensional, nonlinear state-space models, e.g. see [59, 60] and the application references listed later in this introduction. Empirically, this method has shown good tracking performance in these applications, see [60] and the application references listed later. This tracking behaviour of the EnKF when applied to practical models may be explainable by viewing the EnKF as a dynamic state estimator. The fluctuation, stability, and contraction properties of the EnKF studied in this article (albeit mainly for linear-Gaussian models) may be viewed in this context also and provide some insight into the state estimate tracking behaviour seen in practice.

1.1 Purpose

The purpose of this review is to present a comprehensive overview of the theory of ensemble Kalman–Bucy filtering with an emphasis on rigorous results and behavioural characterisations for linear-Gaussian signal/observation models. We present a system of equations that describe the flow of individual particles, the flow of the sample covariance, and the flow of the sample mean in continuous-time ensemble filtering. We consider these equations and their characteristics in a number of popular EnKF varieties. Given these equations, we study in detail some fluctuation, stability, and error contraction results for the various ensemble Kalman filtering equations. We discuss the relevance and importance of these results in terms of characterising the EnKF behaviour, and we contrast these results with those considered in classical studies of stability in Kalman–Bucy filtering.

Classical studies of stability in (traditional, non-ensemble-type) Kalman–Bucy filtering are important because they rigorously establish the type of “tracking” properties desired in a filtering or estimation problem; and they establish intuitive, testable, model-based conditions (e.g. model observability) for achieving these convergence properties. Classical results in Kalman–Bucy filtering also establish the (exponential) convergence of the error covariance to a fixed steady-state value computable from the model parameters. See the review [16, 18] for detailed results in the classical context and historical remarks. The results in this work seek to characterise in an analogous manner the practical performance and behaviour of ensemble Kalman filtering, and these results then provide guidance and intuition on the tracking, approximation error, and other properties of these practical methods. Notably, the stochastic fluctuation properties of ensemble Kalman methods also need to be established; and counterparts of this latter analysis do not arise at all in classical Kalman filtering analyses.Our results are presented under testable, model-based assumptions. In particular, we rely on the standard controllability assumption from classical Kalman filtering theory; and, typically, a more restrictive (but testable) observability-type assumption (i.e. linear fully observed processes, which imply classical observability).

1.2 Overview of the main topics and literature

In this subsection, we touch on the main topics and related literature as it pertains to the EnKF. These topics include the fluctuation, stability, and contractive properties of the relevant EnKF stochastic equations. Later, toward the end of this article, we discuss some of these topics in the context of filtering and state estimation more broadly, and we touch on other related but somehow distinct results as they pertain to the EnKF more specifically.

The EnKF is a key numerical method for solving high-dimensional forecasting and data assimilation problems; see, e.g. [59, 60]. In particular, applications have been motivated by inference problems in ocean and atmosphere sciences [78, 102, 104, 112], weather forecasting [5, 6, 34, 70], environmental and ecological statistics [1, 75], as well as in oil reservoir simulations [61, 110, 129], and many others. This list is by no means exhaustive, nor the cited articles fully representative of the respective applications. We refer to (some of) the seminal methodology papers in [5,6,7, 26, 34, 58, 65, 70, 71, 120, 125, 136, 142, 144]. This long list is not exhaustive; see also the books [60, 78, 97, 121] for more background, and the detailed chronological list of references in Evensen’s text [60].

In continuous-time, we may broadly break down the class of EnKF methods into three distinct types; distinguished by the level of fluctuation added via sampling noise needed to ensure that the EnKF sample mean and covariance are consistent in the linear-Gaussian setting. The original form of the EnKF is the so-called vanilla EnKF of Evensen [34, 60], see also [94]; and this method exhibits the most fluctuations due to sampling of both signal and observation noises. The next class is the so-called deterministic EnKF of Sakov and Oke [125], see also [13, 120], which exhibits (considerably) less fluctuation. In the continuous-time linear-Gaussian setting, this class is representative of the so-called square-root EnKF methods [95, 136] (which differ somewhat in discrete-time, e.g. contrast [125] with [136], see also [95]). Finally, there has been recent interest in so-called transport-inspired EnKF methods [120, 132], which apart from initialisation noise/randomisation are completely deterministic and whose analysis in the linear model setting follows closely that of the classical Kalman–Bucy filter, cf. [16]. These classes do not distinguish the totality of EnKF methodology (especially in nonlinear or non-Gaussian models); which may further consist of so-called covariance regularisation methods [7, 59, 65, 71, 108], etc. However, in the linear-Gaussian case, these three classes broadly capture the fundamentals.

As discussed later, the fully deterministic, transport-inspired EnKF method, see [120, 132], is a rather special case in the linear-Gaussian setting and is not studied in detail in this article where linear-Gaussian models are the focus. Nevertheless, we point to [43, 44] for certain mean-field consistency results, non-asymptotic fluctuation (e.g. finite sample size) results, and the long-time behaviour of this particular method in the case of a nonlinear signal model and linear observations. We also touch on this method briefly throughout; but when we refer to the general EnKF we typically mean the so-called vanilla [34, 94] or deterministic [13, 125] methods (which will become clear as the article progresses).

Convergence to a mean-field limit, and large-sample asymptotics, of the discrete-time EnKF was studied in [90, 93, 99, 106], in the sense of taking the number of particles to infinity. The discrete-time square root form of the EnKF is accommodated in [90, 93], and nonlinear state-space models are accommodated in [99]. In the continuous-time, linear-Gaussian, setting, the convergence (in sample size) of the three broad classes of EnKF to the true Kalman–Bucy filter is more immediate and follows from the sample mean and sample covariance evolution equations in [19, 53]. In this latter sense, we recover the fact that the EnKF is a consistent approximation of the optimal, Bayesian filter (i.e. the classical Kalman–Bucy filter) in the linear-Gaussian setting as discussed earlier. The mean-field limit of various EnKF methods in the continuous-time, nonlinear model setting is studied in [43, 93, 98].

We remark in the nonlinear model setting (discrete or continuous-time), see [43, 93, 98, 99, 122], the mean-field limiting equations (and distribution) are not easily related to the optimal filter. Moreover, in practice, one is typically interested in the non-asymptotic (in terms of ensemble size) fluctuation properties as well as the long time/stability behaviour of the particle-type filtering approximations.

The fluctuation analysis of the EnKF is studied in detail in the linear-Gaussian setting in [19, 21, 22]. In [22], a complete Taylor-type stochastic expansion of the sample covariance is given at any order with bounded remainder terms and estimates. Both non-asymptotic and asymptotic bias and variance estimates for the EnKF sample covariance and sample mean are given explicitly in [22]. These latter expansions directly imply an almost sure strong form of a central limit-type result on the sample covariance and sample mean at any time. The analysis in [22] is considered over the entire path space of the matrix-valued Riccati stochastic differential equation that describes the flow of the sample covariance. However, most of the non-asymptotic time-uniform results in [22] hold only when the underlying signal is stable. In [19, 21], we consider the case in which the underlying signal may be unstable, and we provide time-uniform, non-asymptotic moment estimates and time-uniform control over the fluctuation of the sample covariance and mean about their limiting Riccati and Kalman–Bucy filtering terms.

The emphasis of time-uniformity on the moment bounds and on the fluctuation bounds on the sample mean and sample covariance (about the true optimal Bayesian filtering mean and covariance) is important. If these bounds are allowed to grow in time, e.g. typically in this analysis one can easily obtain bounds that grow exponentially in time, then these bounds quickly become useless for any practical numerical application; e.g. an exponent \(>200\) may induce an exceedingly pessimistic bound greater than the estimated number of particles of matter in the visible universe. We remark also that our emphasis on accommodating unstable (latent) signal models is important because time-uniform fluctuation results in such cases (which are of real practical importance) are significantly more difficult to obtain under testable and realistic model assumptions (like the classical observability and controllability model assumptions in the control and filtering literature [4, 16]).

In [53], stability of the EnKF in continuous-time linear-Gaussian models is considered under the assumption that the underlying signal model is also stable. This latter assumption is in contrast with classical Kalman–Bucy filter stability results, which hold in the linear-Gaussian setting under the much weaker (and more natural) condition of signal detectability [16, 18, 139]. The classical Kalman–Bucy filter is stable as a result of the closed-loop stabilising properties of the so-called Kalman gain matrix, which is closely connected to the flow of the filter error covariance described by a Riccati differential equation. The EnKF analogue, in linear-Gaussian settings, is the sample covariance, and its random fluctuation properties (noted in the preceding paragraph) are the main source of difficulty in establishing the closed-loop filter stability in those models in which the underlying signal itself is unstable.

In [137], the authors analyse the long-time behaviour of the (discrete-time) EnKF in a class of nonlinear systems, with finite ensemble size, using Foster-Lyapunov techniques. Applying the results of [137] to the basic linear-Gaussian filtering problem, the analysis and assumptions in [137] then also require stability of the underlying signal model. In a traditional sense, the conditions needed in [137] are hard to check, e.g. as compared to the classical observability or controllability-type model conditions in Kalman filtering analysis, but a range of examples are given in [137]. In [81], the long-time behaviour of the EnKF is analysed in both discrete and continuous time settings with similar conditions on the model as in [137], and which again if linearised equates to a form of stability on the signal model.

We emphasise again that the type of analysis in [53, 81, 137] cannot handle unstable, or transient, signal models; i.e. signals with sample paths with at least one coordinate that may grow unbounded. In the context studied in [53, 81, 137] dealing with stable or bounded latent signal processes (e.g. the Lorenz-class of signal models [81, 137]), the important question on the filter stability or filtering error estimates relies on obtaining meaningful quantitative fluctuation constants decreasing with the number of ensemble members to achieve a desired performance. Of course, time uniformity of these bounds follows trivially in this setting from the boundedness properties of the latent signal process.

Covariance inflation is a mechanism used in practical methods to increase the positive-definiteness of the sample covariance matrix and essentially amplify its effect on the stabilisation properties of the Kalman gain matrix. In [81], time-uniform EnKF error boundedness results follow under a true signal stability condition and given a sufficiently large variance inflation regime. See also [105, 138] for related stability analysis in the presence of adaptive covariance inflation and projection techniques. In [19], in the continuous-time linear-Gaussian setting, the mechanism by which covariance inflation acts to stabilise the ensemble filter is exemplified, see also [24]. Covariance localisation is studied rigorously in [44] in the case of the fully deterministic, transport inspired ensemble filter [120, 132].

In the continuous-time, and linear-Gaussian setting, the first work to relax the assumption of underlying signal stability for the EnKF is in [19, 21, 22]. In those articles, latent signals with sample paths that may grow unbounded (to infinity exponentially fast) are accommodated. That work is based on both a fluctuation analysis of the sample covariance and the sample mean [19, 21, 22], followed by studies on the long-time behaviour, e.g. stability properties, of both the sample covariance and mean [19, 21]. Time-uniform fluctuation properties are given under a type of (strong) signal observability condition. In this setting, time-uniformity of these results is non-trivial. This assumption is in keeping with classical Kalman–Bucy filtering and Riccati equation results and does not require any form of underlying signal stability. As the authors of [137] note in their stability analysis, they use “few properties of the forecast [predicted] covariance matrix other than positivity”. As noted in [137], this lends generality to their results, but conversely places the burden back on the signal model assumptions (including those assumptions of true signal stability). Contrast this with the work in [19, 21, 22] where emphasis is placed on the fluctuation analysis of the sample covariance, with a primary aim of removing the stability assumptions needed on the underlying signal model. The time-uniform fluctuation and stochastic perturbation contributions in [19, 21, 22] were discussed earlier. Given this fluctuation analysis, the stability of the filter sample mean and sample covariance and their (time) asymptotic properties are studied in [19, 21, 22] without stability assumptions on the underlying signal model. These results rigorously establish the type of “tracking” properties desired by a filtering or estimation solution.

Although of lesser practical use in applications, strong results in the one-dimensional setting are also derived in [21] that converge, e.g. in the limit with the ensemble size, to those properties of the classical Kalman–Bucy filter. For example, we can recover the optimal exponential contraction and filter stability rates, etc. In the multidimensional setting, the decay rates to equilibrium are not sharp, and the stationary measures are not given in closed form.

1.3 Aims and contributions

The main goal of this article is to: (1) present a novel formulation for ensemble filtering in linear-Gaussian, continuous-time, systems that lends itself naturally to analysis; (2) provide detailed fluctuation analysis of the ensemble Kalman–Bucy flow, the sample mean, and the stochastic Riccati equation describing the sample covariance; (3) study the stability of the resulting stochastic Riccati differential equation that describes the flow of the sample covariance; (4) study the stability of the continuous-time ensemble Kalman–Bucy update equation that is coupled to this stochastic Riccati equation, and which describes the flow of the sample mean (or the sample mean minus the true signal, i.e. the sample error signal). This article is primarily a review of the literature and results in these directions. The prime focal point of this review are the articles [17, 19, 21, 22, 53], which focus heavily on the linear-Gaussian model setting. In this review, an emphasis is placed on deriving time-uniform fluctuation, stability, and contraction results under testable model conditions equivalent and/or closely related to the classical observability and controllability-type model assumptions. Importantly, we do not generally assume the true underlying signal is stable in this review.

Throughout this review, we contrast and discuss the presented results with the broader literature on the rigorous mathematical behaviour of ensemble Kalman-type filtering. For example, we find easily that the sample covariance matrix in the broad class of EnKF methods considered is always under-biased when compared to the true covariance matrix. This may motivate, from a pure uncertainty quantification viewpoint, some form of covariance regularisation [7, 59, 65, 71, 108]. We provide detailed analysis illustrating the effect of inflation regularisation on stability (similarly to [81, 105, 138]). As another example, we provide strong intuition for so-called catastrophic filter divergence (studied previously in [64, 66, 82]) based on rigorous (heavy-tailed) fluctuation properties inherent to the relevant sample covariance matrices and their invariant distributions. We contrast the so-called vanilla EnKF of [34, 60] with the ‘deterministic’ EnKF of Sakov and Oke [125] in terms of their fluctuation and sample noise characteristics, and we show how this affects their respective sample behaviour and stability properties.

As with classical (non-ensemble) Kalman filtering, the importance of the results reviewed is in rigorously establishing the type of tracking and stability behaviour desired in filtering applications [4, 9, 16, 47]. For example, our results imply conditions under which the initial estimation errors are forgotten, and that the flow of the sample mean converges to the true Kalman filtering (conditional mean) state estimate (and thus the signal) in the average. In the case of the EnKF, there must be some emphasis placed on the stochastic behaviour of the ensemble (Monte Carlo) mean and covariance in order to establish filter stability. We also provide the analogue of the error covariance fixed point in classical Kalman filtering [4, 16]; whereby we state results that ensure the sample covariance matrix converges to an invariant, steady-state, distribution. We characterise the properties of this invariant distribution and relate this to the sample behaviour of the ‘vanilla’ EnKF [34, 60] and the ‘deterministic’ EnKF [125].

We focus on the linear, continuous-in-time, Gaussian setting in this review and note that in this case the sample mean and sample covariance are consistent approximations of the optimal Bayesian filtering mean and covariance. We emphasise that even in the linear-Gaussian case, the samples themselves are not in general independent. The analysis even in the linear setting is highly technical [17, 19, 21, 22, 53], and the results presented in this case are aimed as a step in the progression to more applied results and intuition in nonlinear model settings. There is some precedent for studying the relative properties, behaviour, or performance of ensemble Kalman filtering firstly with linear-Gaussian signal models [59]. For example, the seminal article [34] illustrated that a perturbation of the observations in the ensemble Kalman filter was necessary to recover a consistent covariance limit (to the true Kalman filter for linear-Gaussian systems); or to achieve the standard Monte Carlo error rate with a finite set of particles. The analysis (and even derivation) of ensemble square root filters for linear-Gaussian system models is standard [103, 126], etc. Convergence of the ensemble Kalman filter in inverse problems is studied in [127] in the linear setting. We discuss connections and extensions of the results in this article to the nonlinear model setting toward the end.

We also briefly contrast the approximation capabilities of particle filtering (sequential Monte Carlo) methods [57, 63] with the EnKF. We give a revealing, and perhaps surprising, simple result illustrating the complete failure of the bootstrap particle filter [63] to track unstable linear-Gaussian latent signals. Compared to the EnKF, the fluctuation and stability of various particle filtering methods (e.g. see [39, 47,48,49, 55, 113, 141]) is a rather mature topic. Nevertheless, time-uniform particle filtering estimates rely on mixing-type, or certain contractive, conditions on the mutation transition which do not hold in general in the case of unstable linear-Gaussian models. We contrast this new (rather negative) particle filtering result with its (positive) counterpart for the EnKF.

Note that the analysis and proofs in [17, 19, 21, 22, 53], while motivated originally by ensemble Kalman-type filtering methods, are largely presented as independent technical results on certain general classes of matrix-valued Riccati diffusion equations and associated linear stochastic differential equations with random coefficients. In this review, we emphasise the work in [17, 19, 21, 22, 53] via a series of results directly and solely stated in the context of ensemble Kalman-type filtering. Throughout we relate our results to the broader technical literature on ensemble Kalman filtering and we emphasise the practical significance of these results, e.g. via the tracking property of the filter, its stability, or via their error fluctuation or catastrophic divergence behaviour, among other topics. We also contrast the behaviour of the various classes of continuous-time EnKF methods.

1.4 Notation

We remark firstly that some care must be taken throughout to keep track of the font stylings; e.g. upright vs. calligraphic vs. script, etc. There is typically a relationship between like symbols appearing with different stylings.

Hatted terms \({\widehat{\cdot }}\) should be viewed as being indexed to the ensemble size \(\textsf{N}\ge 1\), i.e. \({\widehat{\cdot }}:=\cdot ^\textsf{N}\). Time is indexed variously by \(s,t,u,\tau \in [0,\infty [\). We write \(c,c_{n},c_{\tau },c_{n,\tau },c_{n,\tau }(Q),c_{n,\tau }(z,Q)\ldots \) for some positive constants whose values may vary from result to result, and which only depend on the indexed/referenced parameters \(n,\tau ,z,Q\), etc, as well as implicitly on the model parameters \((A,H,R,R_1)\) introduced later. Importantly, these constants do not depend on the time horizon t, nor on the number of ensemble particles \(\textsf{N}\).

Let \(\mathbb {M}_{d}\) be the set of \((d\times d)\) real matrices with \(d\ge 1\) and \(\mathbb {M}_{d_1,d_2}\) the set of \((d_1\times d_2)\) real matrices. Let \(\mathbb {S}_d\subset \mathbb {M}_{d}\) be the subset of symmetric matrices, and \(\mathbb {S}^0_d\), and \(\mathbb {S}^+_d\) the subsets of positive semi-definite and definite matrices, respectively. We write \(A \ge B\) when \(A-B\in \mathbb {S}^0_d\); and \(A > B\) when \(A-B\in \mathbb {S}^+_d\). We denote by 0 and I the null and identity matrices, for any \(d\ge 1\). Given \(R\in \partial \mathbb {S}_d^+:= \mathbb {S}_d^0-\mathbb {S}_d^+\) we denote by \(R^{1/2}\) a (non-unique) symmetric square root of R. When \(R\in \mathbb {S}_d^+\), we choose the unique symmetric square root. We write \(A^{\prime }\) the transpose of A, and \(A_{\textrm{sym}}=(A+A^{\prime })/2\) its symmetric part. We denote by \(\textrm{Absc}(A):=\max {\left\{ \textrm{Re}(\lambda )\,:\,\lambda \in \textrm{Spec}(A)\right\} } \) its spectral abscissa. We also denote by \(\textrm{Tr}(A)\) the trace. When \(A\in \mathbb {S}_d\), we let \(\lambda _1(A)\ge \ldots \ge \lambda _d(A)\) denote the ordered eigenvalues of A. We equip \(\mathbb {M}_{d}\) with the spectral norm \(\Vert A \Vert =\Vert A \Vert _2=\sqrt{\lambda _{1}(AA^{\prime })}\) or the Frobenius norm \(\Vert A \Vert =\Vert A \Vert _{\textrm{Frob}}=\sqrt{\textrm{Tr}(AA^{\prime })}\).

Let \(\mu (A)\) denote a matrix logarithmic “norm” (which can be \(<0\)), see [131]. The logarithmic norm is a tool to study the growth of solutions to ordinary differential equations and the error growth in approximation methods. For any square matrix \(A\in \mathbb {M}_{d}\), the logarithmic norm is the smallest element in the set \(\{h\in \mathbb {R}\,:\, \Vert \exp (At) \Vert \le \exp (ht),\,t\ge 0\}\) where \(\Vert \cdot \Vert \) is any matrix norm and the value \(\mu (A)\) may be considered to be indexed to the matrix norm employed. For example, the (2-)logarithmic “norm”, or spectral log-norm, is given by \(\mu (A)=\lambda _{1}(A_{\textrm{sym}})\). We have \(\mu (\cdot )\ge \textrm{Absc}(\cdot )\) in general, but importantly we note that if \(\textrm{Absc}(\cdot )<0\), then there is a matrix norm \(\Vert \cdot \Vert \) defining a logarithmic norm such that \(\mu (\cdot )<0\), see [131, Theorem 5].

2 Kalman–Bucy filtering

Consider a time-invariant linear-Gaussian filtering model of the following form,

where \(A\in \mathbb {M}_{d}\) and \(H\in \mathbb {M}_{d_y,d}\) are the signal and sensor model matrices, respectively, and \(R\in \mathbb {S}^0_{d}\) and \(R_1\in \mathbb {S}^+_{d_y}\) are the respective signal and sensor noise covariance matrices. The noise inputs \(\mathscr {V}_t\) and \(\mathscr {W}_t\) are d and \(d_y\)-dimensional Brownian motions, and \(\mathscr {X}_0\) is an d-dimensional Gaussian random variable (independent of \((\mathscr {V}_t,\mathscr {W}_t)\)) with mean \(\mathbb {E}(\mathscr {X}_0)\) and covariance \(P_0\in \mathbb {S}_d^0\).

We let \(\mathscr {Y}_0=0\) and \(\mathcal {Y}_t=\sigma \left( \mathscr {Y}_s,~s\le t\right) \) be the \(\sigma \)-algebra generated by the observations. The conditional distribution \(\eta _t:=\textrm{Law}\left( \mathscr {X}_t~|~\mathcal {Y}_t\right) \) of the signal states \(\mathscr {X}_t\) given \(\mathcal {Y}_t\) is Gaussian with a conditional mean and covariance given by

The mean and the covariance obey the Kalman–Bucy and the Riccati equations

with the Riccati drift function from \(\mathbb {S}^0_{d}\) into \(\mathbb {S}_{r}\) defined for any \(Q\in \mathbb {S}^0_{d}\) by

and with,

Importantly, the covariance of the conditional distribution \(\textrm{Law}(\mathscr {X}_t~|~\mathcal {Y}_t)\) in this case does not depend on the observations \(\mathcal {Y}_t\). The error \(Z_t:= (X_t - \mathscr {X}_t)\) satisfies

where \(\mathscr {B}_t\) is some independent d-dimensional Brownian motion. Here, we make use of a martingale representation theorem, e.g. [79, Theorem 4.2], see also [54].

Let \(\phi _t(Q):=P_t\) denote the flow of the matrix differential equation (2.3) with \(P_0=Q\in \mathbb {S}^0_d\). Let \(\psi _t(z,Q):=Z_t\) denote the flow of the stochastic error (2.6) with \(Z_0=z=(x-\mathscr {X}_0)\in \mathbb {R}^d\) and \(P_t= \phi _t(Q)\). Finally, we denote the flow of the Kalman–Bucy update (2.2) with \(X_0=x\in \mathbb {R}^d\) by \(\chi _t(x,Q):=X_t\). This notation allows us to reference the flows \(\psi _t(z,Q)\), \(\phi _t(Q)\), \(\chi _t(x,Q)\) with respect to their initialisation at \(t=0\) which is useful when we compare flows and study stability.

Throughout this section, we assume that \((A,R^{1/2})\) and (A, H) are controllable and observable pairs in the sense that

Note that if \(R\in \mathbb {S}^+_d\) is positive definite, which is quite common in filtering problems, it follows that controllability holds trivially. We consider the observability and controllability Gramians \((\mathcal {O}_{t},\mathcal {C}_{t}(\mathcal {O}))\) and \((\mathcal {C}_{t},\mathcal {O}_{t}(\mathcal {C}))\) associated with the triplet (A, R, S) and defined by

Given (2.7), for any finite \(\tau >0\), there exists some finite parameters \(\varpi ^{o,c}_{\pm },\varpi ^{c}_{\pm }(\mathcal {O}),\varpi ^{o}_{\pm }(\mathcal {C})>0\) such that

The parameter \(\tau \) is often called the interval of observability-controllability, see [30].

These rank conditions (2.7) ensure the existence and the uniqueness of a positive definite fixed-point matrix \(P_\infty \) solving the algebraic Riccati equation

Indeed, if (2.7) holds, then \(P_\infty \in \mathbb {S}_d^+\) and \(\textrm{Absc}(A-P_\infty S)<0\). We may relax the controllability assumption to just stabilisability, in which case \(P_\infty \in \mathbb {S}_d^0\) and \(\textrm{Absc}(A-P_\infty S)<0\); see [87, 91, 109] and the convergence results in [35, 89]. Under just a detectability condition, it follows that \(P_\infty \in \mathbb {S}_d^0\) and \(\textrm{Absc}(A-P_\infty S)\le 0\), i.e. \((A-P_\infty S)\), is only marginally stable, and convergence to this solution is given under mild additional conditions in [36, 115, 117]. In [139], given only detectability, the time-varying “closed loop” matrix \((A-\phi _t(Q)S)\) is shown to be stabilising, even when \((A-P_\infty S)\) is only marginally stable.

In the context of ensemble Kalman–Bucy filtering considered later, we will require the same controllability assumption as considered above, and a more restrictive observability condition (that implies the classical observability/detectability discussed above).

For any \(s\le t\) and \(Q\in \mathbb {S}_{d}^0\), we define the state-transition matrix,

When \(s=0\), we often write \(\mathcal {E}_{t}(Q)\) instead of \(\mathcal {E}_{0,t}(Q)\). The matrix \(\mathcal {E}_{t}(Q)\) is the fundamental matrix. We have \(\mathcal {E}_{s,t}(Q)=\mathcal {E}_{t}(Q)\mathcal {E}_{s}(Q)^{-1}\). The following convergence estimates follow from [16, 18]: For any \(Q,Q_1,Q_2\in \mathbb {S}^{0}_{d}\) and any \(t\ge 0\), we have the local contraction inequalities

for some finite \(\alpha ,c>0\) and with \({P}_\infty \) solving (2.11) and

for some finite constant \(c( Q_1, Q_2)>0\). In addition, there exists some parameter \(\tau > 0\) such that for any \(s\ge 0\) and any \(t\ge \tau >0\) we have the uniform estimates,

Note it is desirable to relate the decay of \(\mathcal {E}_{s,s+t}(Q)\) to the decay at the fixed point \(\Vert \mathcal {E}_{t}({P}_{\infty })\Vert = \Vert e^{t(A-P_\infty S)} \Vert \le c\,e^{-\alpha \, t}\) (since as \(t\rightarrow \infty \) it is clear that we cannot do better). See [18] for an explicit Floquet-type expression of \(\mathcal {E}_{t}(Q)\) in terms of \(\mathcal {E}_{t}(P_\infty )\).

The convergence and stability properties of the Kalman–Bucy filter and the associated Riccati equation are directly related to the contraction properties of the state-transition matrix \(\mathcal {E}_{s,t}(Q)\). To get some intuition for this we note,

and

for any \(s\le t\).

From [16], for any \(t\ge \tau >0\) and any \(Q\in \mathbb {S}_d^0\) we have the uniform estimates

We also have

The following stability result follows from [16, 18]: For any \(Q_1,Q_2\in \mathbb {S}^{0}_{d}\) and for any \(t\ge 0\),

and recall the exponential contraction estimate on \(\Vert \mathcal {E}_{t}({P}_{\infty })\Vert \) in (2.13). Similarly, using (2.15), for any \(s\ge 0\) and any \(t\ge \tau >0\), we have

Note that both (2.20) and (2.21) imply immediately that \(\phi _{t}(Q)\rightarrow _{t\rightarrow \infty }{P}_\infty \) exponentially fast for any \(Q\in \mathbb {S}^0_d\); e.g. by letting \(Q_2=P_\infty \).

Note that the uniform estimates with constants independent of the initial condition stated throughout, involve some arbitrarily small, positive time parameter \(\tau \), which can be directly related to the notion of a so-called observability/controllability interval introduced earlier; for further details on this topic, we refer to [16, 30]. Contrast, for example, the stability results (2.20) and (2.21). The symbol \(\tau \) is reserved for this arbitrary small time parameter throughout the article.

Results (e.g. bounds and convergence results) on the flow of the inverse of the solution of the Riccati equation are considered in [16] and are relevant for proving results on the flow of the Riccati equation itself; e.g. upper bounds on the flow of the inverse solution help to lower bound solutions of the Riccati flow. The flow of the inverse Riccati solution may also be of interest on its own as it relates to the flow of “information” (as the inverse of covariance).

Given the contraction properties on \(\mathcal {E}_{s,t}(Q)\), it is often said the “deterministic part” of the filter error \(\partial _tZ_t=\left( A-P_t\,S\right) Z_t\) is stable. From [16], we can be more explicit if desired, for example, for any \(t\ge \tau \) we have the uniform estimate,

for some rate \(\alpha >0\) and some finite constant \(c>0\). Moreover, the conditional probability of the following event

given the state variable \(\mathscr {X}_0\) is greater than \(1-e^{-\delta }\), for any \(\delta \ge 0\). And, for any \(t\ge 0\), \(z_1,z_2\in \mathbb {R}^{d}\), \(Q_1,Q_2\in \mathbb {S}^0_{d}\) and any \(n\ge 1\) we have the almost sure local contraction estimate

with some rate \(\alpha >0\) and the finite constants \(c(Q_1,Q_2),c_n(Q_1,Q_2)>0\).

3 Kalman–Bucy diffusion processes

For any probability measure \(\eta \) on \(\mathbb {R}^d\), we let \(\mathcal {P}_{\eta }\) denote the \(\eta \)-covariance

with the identity function \(\iota (x):=x\) and the column vector \(\eta (f):=\int f\, d\eta \) for some measurable function \(f:\mathbb {R}^d\rightarrow \mathbb {R}^d\).

We now consider three different cases of a conditional nonlinear McKean–Vlasov-type diffusion process,

where

and thus, the diffusions in (3.2) depend in some nonlinear fashion on the conditional law of the diffusion process itself. In all three cases, \((\mathcal {V}_t,\mathcal {W}_t,\mathcal {X}_0)\) are independent copies of \((\mathscr {V}_t,\mathscr {W}_t,\mathscr {X}_0)\). These diffusions are time-varying Ornstein–Uhlenbeck processes [53] and consequently \({\overline{\eta }}_t\) is Gaussian; see also [16]. These Gaussian distributions have the same conditional mean \({\overline{\eta }}_t(\iota )\) and conditional covariance \(\mathcal {P}_{{\overline{\eta }}_t}\).

Proposition 3.1

and \({X}_t:={\overline{\eta }}_t(\iota )={\eta }_t(\iota )\) and \(P_t=\mathcal {P}_{{\overline{\eta }}_t}=\mathcal {P}_{\eta _t}\) where \(X_t\) and \(P_t\) correspond to the Kalman–Bucy filter update and Riccati equations in (2.2) and (2.3).

We may refer to this specific class (3.2) of McKean–Vlasov-type diffusion as a Kalman–Bucy diffusion process [16]. The case (F1) corresponds to the limiting object that is sampled in the continuous-time version of the ‘vanilla’ EnKF [60]; while (F2) is the continuous-time limiting object that is sampled in the ‘deterministic’ EnKF of [125], see also [120]; and (F3) is a fully deterministic transport-inspired equation [120, 132]. Note that in this case (F3) the existence of the inverse of \(\mathcal {P}_{{\overline{\eta }}_t}\) is given by the positive-definiteness properties of the solution of the Riccati equation in (2.3). In the next section, we detail the Monte Carlo ensemble filters derived from these Kalman–Bucy diffusion processes.

Note we may define a generalised version of case (F3) by,

for any skew symmetric matrix \(G^\prime _t=-G_t\) that may also depend \({\overline{\eta }}_t\). This added tuning parameter may be related to an optimality metric, when deriving this transport equation from an optimal transport beginning. We may also write similar generalised versions (F1\('\)) and (F2\('\)) by adding \(G_t\,\mathcal {P}_{{\overline{\eta }}_t}^{-1}\left( \mathcal {X}_t-{\overline{\eta }}_t(\iota )\right) \) to (F1) and (F2); though practically it likely makes little sense.

4 Ensemble Kalman–Bucy filtering

Ensemble Kalman–Bucy filters (EnKF) coincide with the mean-field particle interpretation of the nonlinear diffusion processes defined in (3.2).

Let \((\mathcal {V}^i_t,\mathcal {W}^i_t,\mathcal {X}_0^i)\) with \({1\le i\le \textsf{N}+1}\) be \((\textsf{N}+1)\) independent copies of \((\mathcal {V}_t,\mathcal {W}_t,\mathcal {X}_0)\). Again, we consider three different cases of Kalman–Bucy-type interacting diffusion process,

with \(1\le i\le \textsf{N}+1\) and the rescaled (particle) sample mean and covariance

In cases \((\texttt {F1})\) and \((\texttt {F2})\), we have \(\textsf{N}\ge 1\), and in case \((\texttt {F3})\), we require \(\textsf{N}\ge d\) for the almost sure invertibility of \(\widehat{P}_{t}\) (although in case \((\texttt {F3})\) one may substitute a pseudo-inverse of \(\widehat{P}_{t}\) without changing the mathematical analysis). The scaling factor on the sample covariance ensures unbiasedness. A sampled version of case \((\texttt {F3}')\) may also be derived in the same way.

The filters of (4.1) are mean-field approximations of those in (3.2). In (4.1), we see the utility of the Kalman–Bucy filter formulation in (3.2). In particular, in (4.1) we have eliminated the classical Riccati matrix differential equation completely and replaced it with an ensemble of (interacting) particle flows and the computation of a sample covariance matrix from this ensemble. The sample mean and covariance of (4.2) can also be used for inference or decision making, etc.

4.1 Vanilla ensemble Kalman–Bucy filter

The vanilla EnKF, denoted by VEnKF, is associated with the first case \((\texttt {F1})\) of nonlinear process \(\mathcal {X}_t\) in (3.2) and is defined by the Kalman–Bucy-type interacting diffusion process \((\texttt {F1})\) in (4.1). We then have the following key result.

Proposition 4.1

[53] Let \(\textsf{N}\ge 1\). The stochastic flow of the sample mean satisfies,

where \(\mathcal {B}_t\) is an independent d-dimensional Brownian motion.

The sample covariance evolves according to a so-called matrix-valued Riccati diffusion process of the form,

where \(\mathcal {M}_t\) is a \((d\times d)\)-matrix with independent Brownian entries (also independent of \(\mathcal {B}_t\)).

We see that for the vanilla EnKF, the convergence of \(\widehat{X}_{t}\rightarrow X_{t}\) and \(\widehat{P}_{t}\rightarrow P_{t}\) as \(\textsf{N}\rightarrow \infty \) follows immediately. This result follows via the martingale representation theorem, e.g. Theorem 4.2 in [79], see also [54].

4.2 ‘Deterministic’ ensemble Kalman–Bucy filter

The ‘deterministic’ EnKF, denoted DEnKF, is associated with the second case \((\texttt {F2})\) of nonlinear process \(\mathcal {X}_t\) in (3.2) and is defined by the Kalman–Bucy-type interacting diffusion process \((\texttt {F2})\) in (4.1). This ‘deterministic’ epithet in the DEnKF follows because the update ‘part’ of the particle flow is deterministic and does not rely on the stochastic perturbations by \(\mathcal {W}_t^i\) appearing in the VEnKF. This name and idea was taken from [125]; see also [13, 120] and [95, 136]. We have the following key result.

Proposition 4.2

[19, 21] Let \(\textsf{N}\ge 1\). The stochastic flow of the sample mean satisfies,

where \(\mathcal {B}_t\) is an independent d-dimensional Brownian motion.

The sample covariance evolves according to a so-called matrix-valued Riccati diffusion process of the form,

where \(\mathcal {M}_t\) is a \((d\times d)\)-matrix with independent Brownian entries (also independent of \(\mathcal {B}_t\)).

Again, for the DEnKF, the convergence of \(\widehat{X}_{t}\rightarrow X_{t}\) and \(\widehat{P}_{t}\rightarrow P_{t}\) as \(\textsf{N}\rightarrow \infty \) follows immediately. Note the simplified diffusion weighting(s) in the case of the DEnKF, as compared to the VEnKF.

4.3 Transport-inspired ensemble transport filter

The fully deterministic ensemble transport filter DEnTF is associated with the third case \((\texttt {F3})\), defined by the Kalman–Bucy-type interacting diffusion process \((\texttt {F3})\) in (4.1). In this case, we have the special result.

Proposition 4.3

[120, 132] Let \(\textsf{N}\ge 1\). The flow of sample mean is given by,

The sample covariance evolves according to the deterministic Riccati equation,

Note that the particle mean \(\widehat{X}_{t}\) and the particle covariance \(\widehat{P}_{t}\) associated with the particle interpretation \((\texttt {F3})\) discussed in (4.1) satisfy exactly the equations of the Kalman–Bucy filter with the associated deterministic Riccati equation.

The “randomness” in this case only comes from the initial conditions. The stability analysis of this class of DEnTF model resumes to the one of the Kalman–Bucy filter and the associated Riccati equation. Thus, the results, e.g. in (2.20), (2.22), (2.23) and (2.24) hold immediately; see also [16] in the linear-Gaussian setting. In [43, 44], this filter is analysed in the case of a nonlinear signal, but fully observed (linear observation) model. The fluctuation analysis in this case can also be developed easily by combining certain stability results w.r.t. the initial state (see [16]) with conventional sample estimates based on independent copies of the initial states (see, e.g. [23] for estimates associated with classical sample covariance estimates). Consequently, we do not consider this class of model going forward, but recommend [16, 43, 44].

When \(\textsf{N}\) is small compared to d, the inverse of the sample covariance defining the DEnTF is ill-posed and this is likely a limiting factor in the applicability of this method in high-dimensional applications with stochastic state evolutions. With non-Gaussian signal noise, one may also prefer the stochastic perturbation method in the DEnKF.

4.4 Nonlinear ensemble filtering in practice

In practice, the ensemble Kalman filtering methodology is applied in high-dimensional, nonlinear state-space models, e.g. see [59, 60] and the application references listed in the introduction.

It is rather straightforward to extend the algorithmic particle methods in (4.1) to nonlinear systems as we now outline. Consider a time-invariant nonlinear diffusion model of the form,

where \(a:\mathbb {R}^d\rightarrow \mathbb {R}^d\) and \(h:\mathbb {R}^d\rightarrow \mathbb {R}^{d_y}\) are the nonlinear signal and sensor model functions of some sufficient regularity.

Let \((\mathcal {V}^i_t,\mathcal {W}^i_t,\mathcal {X}_0^i)\) with \({1\le i\le \textsf{N}+1}\) be \((\textsf{N}+1)\) independent copies of \((\mathscr {V}_t,\mathscr {W}_t,\mathscr {X}_0)\). We consider the three EnKF variants as before and define the flow of particles by,

with \(1\le i\le \textsf{N}+1\) and the (particle) sample mean \(\widehat{X}_{t}\) and sample covariance \(\widehat{P}_{t}\) defined as usual, e.g. see (4.2), and with the observation function sample mean and sample cross-covariance defined as,

The mean-field limit of these interacting nonlinear conditional particle diffusion systems (4.10) is studied in [43, 93]. The (conditional) law of these mean field McKean–Vlasov diffusions may even be given in terms of a Kushner/Fokker-Planck-type partial differential equation, e.g. see [43, 93]. However, if the mean-field limit in this nonlinear setting is denoted by, say, \(\mathcal {X}_t\), then it is certainly true that,

in the nonlinear model setting. Said differently, even with infinite computational power, the EnKF methods as applied in this nonlinear model setting do not converge to the optimal nonlinear Bayes filter. As noted earlier, and again later, the EnKF in this nonlinear model setting is probably best viewed in practice as a type of (random) sample-based (point-valued) state estimator or a stochastic observer. In general, it should not be seen as an approximation of the optimal Bayesian filter.

We discuss connections and extensions of our results to the nonlinear model setting, including different instances of the EnKF in these settings, in a later section (at the end of this article).

5 Theory in the linear-Gaussian setting

Going forward, we consider only the VEnKF (case (F1)) and DEnKF (case (F2)) since as noted the theory of the DEnTF in the linear-Gaussian setting reverts to that of the standard Kalman–Bucy filter as detailed in [16]. The parameter \(\kappa \in \{0,1\}\) will distinguish the two cases (\(\kappa =1\) in case (F1), and \(\kappa =0\) in case (F2)) throughout.

We may unify the analysis via the following representation,

with the mapping,

Let \(\widehat{Z}_t:=(\widehat{X}_t-\mathscr {X}_t)\) and observe that

for some independent d-dimensional Wiener process \({\widehat{\mathscr {B}}}_t\) and with,

Note we often refer to the flows \(\widehat{Z}_t\) or \({Z}_t\) as error flows.

We also underline that

so that the difference between the noisy error flow \(\widehat{Z}_t\) and the classical Kalman–Bucy error flow \(Z_t\) is equal to the difference between the EnKF (sample mean) state estimate and the classical Kalman–Bucy state estimate.

Let \({\widehat{\phi }}_t(Q):=\widehat{P}_t\) denote the flow of the Riccati diffusion equation in (5.2) with \(\widehat{P}_0=Q\in \mathbb {S}^0_d\). Let \({\widehat{\psi }}_t(z,Q):=\widehat{Z}_t\) denote the flow of the stochastic error (5.4) with \(\widehat{Z}_0=z=(x-\mathscr {X}_0)\in \mathbb {R}^d\) and \(\widehat{P}_t= {\widehat{\phi }}_t(Q)\). Finally, we denote the flow of the sample mean in (5.1) with \(\widehat{X}_0=x\in \mathbb {R}^d\) by \({\widehat{\chi }}_t(x,Q):=\widehat{X}_t\).

We underline further that the difference between two error flows satisfies,

and is thus equal to the difference between the two corresponding sample means (with compatible starting points). Studying the difference between two error flows \(({\widehat{\psi }}_{t}(z_1,Q_1) - {\psi }_{t}(z_2,Q_2))\) subsumes the study of something like \(({\widehat{\chi }}_t(x_1,Q_1) - {\chi }_t(x_2,Q_2))\) which is the difference between the EnKF (sample mean) state estimate and the classical Kalman–Bucy state estimate (with different initial conditions).

For any \(s\le t\) and \(Q\in \mathbb {S}_{d}^0\), we define the stochastic state-transition matrix,

As with the classical Kalman–Bucy filter, e.g. see (2.16) and (2.17), the convergence and stability properties of the ensemble Kalman–Bucy filter and the associated Riccati diffusion equation are directly related to the contraction properties of the stochastic state-transition matrix \({\widehat{\mathcal {E}}}_{s,t}(Q)\). For example, the flow of the stochastic error equation (5.4) is given by,

and the stochastic flow of the matrix Riccati diffusion (5.2) is given implicitly by

for any \(s\le t\). We denote by \({\widehat{\Pi }}_t\) the Markov semigroup of \({\widehat{\phi }}_t(Q)\) defined for any bounded measurable function F on \(\mathbb {S}_d\) and any \(Q\in \mathbb {S}_d^0\) with the property that,

When Q is random with distribution \(\Gamma (dQ)\) on \(\mathbb {S}_d^+\), by Fubini’s theorem we have,

This yields the formula

for the distribution of \({\widehat{\phi }}_t(Q)\) on \(\mathbb {S}_d^+\).

We then have the first result concerning the quadratic, matrix-valued, Riccati diffusion process (5.10).

Theorem 5.1

For any \(\textsf{N}\ge 1\), the Riccati diffusion (5.10) has a unique weak solution on \(\mathbb {S}^0_d\). For \(\textsf{N}\ge d+1\), there exists a unique strong solution on \(\mathbb {S}^+_d\). Moreover, \({\widehat{\Pi }}_t(Q,dP)\) is a strongly Feller and irreducible semigroup with a unique invariant probability measure \({\widehat{\Gamma }}_{\infty }\) on \(\mathbb {S}^+_d\). This measure admits a positive density with respect to the natural Lebesgue measure on \(\mathbb {S}_d\).

Given the existence of a solution to the Riccati diffusion (5.2), it follows a solution for \(\widehat{X}_t\) in (5.1) or a solution \(\widehat{Z}_t\) in (5.4) exists and is unique. This result is proven in [19, Theorem 2.1].

Once the problem of existence and uniqueness is tackled, one major problem in this equation is the behaviour at infinity: existence of a stationary measure and speed of convergence towards this stationary measure or even distance between two solutions starting at different points.

We will make wide use of the following two assumptions in the remainder of this article.

Assumption O

The matrix \(S:=H^{\prime }R_1^{-1}H\) is strictly positive-definite, i.e. \(S\in \mathbb {S}_d^+\). This is a strong form of observability, and it implies classical observability as defined in (2.7).

Assumption C

The pair \((A,R^{1/2})\) is controllable, as defined in (2.7).

Under both Assumptions O and C, it follows that \(P_\infty \in \mathbb {S}_d^+\) and \(\textrm{Absc}(A-P_\infty S)<0\), see the earlier discussion on this topic. We may relax the controllability Assumption C to just stabilisability. We discuss Assumption O more later as it (re-)appears throughout our presentation and is more restrictive than the classical observability/detectability assumptions in classical Kalman filtering (noting again it implies observability/detectability).

We emphasise the following:

Proof of this statement follows from the fact that \(\textrm{Absc}(A-P_\infty S)<0\) under just detectability and stabilisability model conditions, and then, an application of [131, Theorem 5]. The logarithmic norm \({\overline{\mu }}(\cdot )\) is not necessarily unique, but any particular chosen logarithmic norm \({\overline{\mu }}(\cdot )\) is indexed to the model parameters \((A,H,R,R_1)\). We use the notation \({\overline{\mu }}(\cdot )\) to distinguish the log-norms for which \({\overline{\mu }}(A-P_\infty S)<0\) whenever \(\textrm{Absc}(A-P_\infty S)<0\) holds, or more specifically throughout this work whenever Assumptions O and C hold.

In prior work [17, 19, 21, 22, 53] and even the first draft of this article, we state certain results in terms of \({\mu }(A-P_\infty S)\), and under the assumption \({\mu }(A-P_\infty S)<0\); for some, but we do not care which, logarithmic norm \(\mu (\cdot )\). We knew of course that certain observability and controllability model conditions ensured \(\textrm{Absc}(A-P_\infty S)<0\). However, it was unclear that negativity of the spectral abscissa translated in general to \({\mu }(A-P_\infty S)<0\) for some version of the logarithmic norm. Thus, in many results we start with the assumption \({\mu }(A-P_\infty S)<0\) in prior work [17, 19, 21, 22, 53] and claimed somewhat informally that this amounts to asking for a strong form of observability and controllability (given its similarity to \(\textrm{Absc}(A-P_\infty S)<0\), but without actually giving testable model conditions). Owing to [131, Theorem 5], we can begin results simply with some form of observability and controllability assumption (typically we need the stronger observability Assumption O, for different reasons) and state results in terms of the special class of logarithmic norms \({\overline{\mu }}(A-P_\infty S)<0\); which we know is negative because \(\textrm{Absc}(A-P_\infty S)<0\). This is a significant relaxation of the conditions precedent in many of the subsequent results and places these results back in the testable and relatable context of classical controllability and observability assumptions.

In Table 1, we denote the relevant flows and notation of interest going forward. This notation allows us to relate (for example) the flow of the approximation relative to the true object with respect to their initial conditions, e.g. fluctuation-type results: \({\widehat{\chi }}_t(x,Q) - {\chi }_t(x,Q)\); or (for example) the flow of two approximated objects with respect to different initial positions, e.g. stability/contraction-type results: \({\widehat{\psi }}_{t}(z_1,Q_1) - {\widehat{\psi }}_{t}(z_2,Q_2)\).

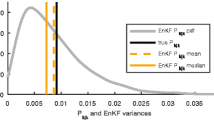

In Fig. 1, we plot the flow of some of the subsequent sections and the main results. The presentation ordering is given mostly in terms of the dependencies and natural progression of the derivations. We discuss briefly the dependencies and reasoning as we progress.

5.1 Fluctuation and contraction results for the Riccati diffusion

5.1.1 Fluctuation properties of the Riccati diffusion

In this section, we consider the fluctuation of \({\widehat{\phi }}_t(Q)\) about \({\phi }_t(Q)\) and of \({\widehat{\psi }}_t(z,Q)\) about \({\psi }_t(z,Q)\).

The fluctuation properties and moment boundedness properties of \({\widehat{\phi }}_t(Q)\) and \({\widehat{\psi }}_t(z,Q)\) depend naturally on the size on the fluctuation as determined by \(\textsf{N}\).

Typically, we will write either of the following expressions in stating our results,

In case (F1) with \(\kappa =1\), there is often a minimum threshold on \(\textsf{N}\) needed to prove the results. In case (F1), this lower threshold on \(\textsf{N}\) may be large. In case (F2) with \(\kappa =0\), these same results typically hold, but moreover, we can often refine the relevant results and at the same time relax the conditions on \(\textsf{N}\), often needing just \(\textsf{N}\ge 1\). This is a significant analytical advantage of the DEnKF over the VEnKF. In some cases, this advantage is practically realised and provable (and not just a by-product of analysis methods). For example, we will show later that some moments of the VEnKF sample covariance in one-dimension provably do not exist in the steady-state without a sufficient number of particles, whereas in the DEnKF these moments always exist with \(\textsf{N}\ge 1\). In some cases, the results stated in this work are only known for the DEnKF. If we do not specify a particular case, or a value for \(\kappa \in \{0,1\}\), then the stated results may be assumed to hold for both the VEnKF and the DEnKF.

We start with the following under-bias estimate on the sample covariance which holds for both the VEnKF and the DEnKF.

Theorem 5.2

For any \(t\ge 0\), any \(Q\in \mathbb {S}_d^0\), and any \(\textsf{N}\ge 1\), we have the uniform under-bias estimate,

for a finite constant \(c>0\) that does not depend on the time horizon.

We may refine this under-bias result as is done in [19]. For example, if we assume further that \(S\in \mathbb {S}_d^+\), i.e. under Assumption O, then for any \(t\ge 0\) we also have the refined bias estimates,

when \(\textsf{N}\) is sufficiently large in case (F1), \(\kappa =1\); or for any \(\textsf{N}\ge 1\) in case (F2), \(\kappa =0\). The proof of this refinement, and details on the constant c(Q), is in [19, Theorem 2.3] and in [22].

We will see subsequently that Assumption O, i.e. the condition \(S\in \mathbb {S}_d^+\), ensures that for any \(n\ge 1\), the n-th moments of the trace of the sample covariance are uniformly bounded w.r.t. the time horizon (with a sufficient number of particles) even when the matrix A is unstable.

The next theorem concerns these time-uniform moment estimates on the stochastic Riccati flow in (4.4), i.e. on the flow of the sample covariance matrix.

Theorem 5.3

Suppose Assumption O holds. For any \(n\ge 1\), \(t\ge 0\), any \(Q\in \mathbb {S}_d^0\), and any \(\textsf{N}\) sufficiently large, we have the uniform estimate,

Furthermore, for any time horizon \(t\ge \tau >0\) we also have the uniform estimates

In addition, in case (F2), for any \(\textsf{N}\ge 1\), any \(n\ge 1\), \(Q\in \mathbb {S}_d^0\), \(t\ge 0\) and any \(s\ge \tau >0\) we have the refined estimates,

The proof of this result is provided in [19, Theorem 2.2] where a precise description of the (finite) parameters \(c_{n},c_{n,\tau },c,c_{\tau }>0\) is also provided. The first estimate in (5.17) also holds without Assumption O, and even if \(S=0\), when \(\textrm{Absc}(A)<0\). The proof of this Theorem is based on a reduction in (4.4) to a scalar Riccati diffusion, a novel representation of its n-th powers, and a comparison of its moments to a judiciously designed deterministic scalar Riccati equation. We discuss this proof later, but this scalar reduction necessitates the condition \(S\in \mathbb {S}_d^+\), i.e. Assumption O. The proof is conservative by nature (due to the scalar reduction and comparison).

Now, we turn to quantifying the fluctuations of the matrix Riccati diffusions around their limiting (deterministic) values as found when \(\textsf{N}\) tends to \(\infty \). That is, we quantify the fluctuation of the EnKF sample covariance about the limiting covariance of the classical Kalman–Bucy filter.

Theorem 5.4

Suppose Assumption O holds. For any \(n\ge 1\), \(t\ge 0\), any \(Q\in \mathbb {S}_d^0\), and any \(\textsf{N}\) sufficiently large we have the uniform estimates,

In case (F2), for any \(\textsf{N}\ge 1\), any \(n\ge 1\), \(t\ge 0\), and any \(Q\in \mathbb {S}_d^0\), we have

The estimates in Theorem 5.4 do not depend on \(Q\in \mathbb {S}_d^0\) when \(t\ge \tau \) for any \(\tau >0\) and with \(c_n,c\) replaced with \(c_{n,\tau },c_\tau \); e.g. similarly to (5.18) in Theorem 5.3.

The proof of the preceding Theorem is provided in [19, Theorem 2.3] and in [22]. The proof follows from a second-order expansion of the stochastic flow \({\widehat{\phi }}_t\) about the deterministic flow \(\phi _t\) and then an appropriate bounding of the first- and second-order stochastic terms. More generally, in [22] we consider a Taylor-type perturbation expansion of the form,

for any \(n\ge 1\), and a stochastic flow \({\varphi }^{(k)}_t\) whose values do not depend on the ensemble size \(\textsf{N}\), and a stochastic remainder term \(\widehat{{\varphi }}^{\,(n)}_t\). Odd order stochastic terms \({\varphi }^{(k)}_t\), with k odd, are zero mean (i.e. centred). This representation allows us in [22] to present sharp and non-asymptotic expansions of the matrix moments of the matrix Riccati diffusion with respect to \(\textsf{N}\).

In [22], we provide uniform estimates of the stochastic flow \({\varphi }^{(k)}_t\) w.r.t. the time horizon even when the matrix A is unstable. These estimates are stronger than the conventional functional central limit theorems for stochastic processes. For example, these results imply the almost sure central limit theorem on the sample covariance,

Bias and variance estimates based on the expansion (5.22) are also given in [22]. See also in particular [22, Section 1.3] for detailed exposition of this functional central limit theorem and the bias and variance estimates. In the scalar case, we explore this expansion (5.22) up to second-order in detail in a later section to illustrate this form.

The under bias result (5.15) holds with any \(\textsf{N}\ge 1\) in both the VEnKF of case (F1), and in the DEnKF of case (F2). This under-bias is a motivation for so-called sample covariance regularisation in practice; e.g. so-called sample covariance inflation or localisation methods [7, 59, 65, 71, 108]. Later, we discuss the effects of inflation in particular.

As with the deterministic Riccati equation, we may bound the moments of the inverse of the stochastic Riccati flow \({\widehat{\phi }}_t(Q)\) under stronger conditions on the number of particles \(\textsf{N}\) required; e.g. see [19]. It follows that with \(Q\in \mathbb {S}_d^+\) and with additional conditions on \(\textsf{N}\), that for \(t\ge \tau >0\) there exists a uniform positive definite lower bound on \(\mathbb {E}[{\widehat{\phi }}_t(Q)]\).

A number of basic corollaries follow the proofs in [19, 22]; for instance, we have the monotone property,

and, for any \(Q\in \mathbb {S}_d^0\), the fixed upper bound,

These estimates hold for any \(\textsf{N}\ge 1\) without any additional assumptions, as in Theorem 5.2.

Several spectral estimates can be deduced from the estimates (5.16), (5.20) and (5.21). For example, in case (F2), with \(\kappa =0\) and \(\textsf{N}\ge 1\) then combining (5.21) with the n-version of the Hoffman-Wielandt inequality we have the uniform estimate,

Finally, it is worth noting briefly that all moment boundedness and fluctuation results stated in this section hold with any \(\textsf{N}\ge 1\) and without further assumptions, if one replaces the constants \(c,c_{n}, c_{\tau },c_{n}(Q),\ldots \) with functions that now depend on (and grow with) the time horizon \(t\ge 0\). However, if these bounds depend exponentially on time (as is quite typical in analysis), an exponent of the form \((\alpha \,t)>200\) induces an exceedingly pessimistic estimate larger than the estimated number of elementary particles of matter in the visible universe. In this sense, non-time-uniform bounds of this form are clearly impractical from a numerical user-case perspective.

5.1.2 Contraction and long time properties of the Riccati diffusion

With \(Q\in \mathbb {S}_d^+\), we set \(\Lambda (Q):=\Vert Q\Vert _2+\Vert Q^{-1}\Vert _2\) and we consider the collection of \(\Lambda \)-norms on the set of probability measures \(\Gamma _1,\Gamma _2\) on \(\mathbb {S}_d^+\), indexed by \(\hbar >0\), and defined by,

In the above display, the supremum is taken over all measurable function F on \(\mathbb {S}_d\) such that

It is known that the deterministic Riccati equation that describes the flow of the covariance matrix in classical Kalman–Bucy filtering tends to a fixed point \(P_\infty \) for any initial point \(Q\in \mathbb {S}_d^0\) when the (time-invariant) model (2.1) is detectable and stabilisable; e.g. see (2.20) and [16]. The next result is the analogue of this idea in the EnKF setting and describes the stability of the flow of the sample covariance.

Theorem 5.5

Assume the fluctuation parameter \(\textsf{N}\) is sufficiently large such that \(\mathbb {E}[\Vert {\widehat{\phi }}_t(Q)\Vert ]\) and \(\mathbb {E}[\Vert {\widehat{\phi }}^{-1}_t(Q)\Vert ]\) are uniformly bounded (e.g. as in Theorem 5.3 for bounds on \(\mathbb {E}[\Vert {\widehat{\phi }}_t(Q)\Vert ]\)). Then, there exists some finite constants \(c, \alpha ,\hbar >0\) such that for any \(t\ge 0\) and probability measures \(\Gamma _1,\Gamma _2\) on \(\mathbb {S}_d^+\), we have the \(\Lambda \)-norm contraction inequality

Of course, setting \(\Gamma _2={\widehat{\Gamma }}_{\infty }\) where \({\widehat{\Gamma }}_{\infty }\) is the unique invariant probability measure described in Theorem 5.1 implies that for any initial probability measure \(Q\sim \Gamma \) on \(\mathbb {S}^+_d\) we have that \({\widehat{\phi }}_t(Q)\) tends to be distributed according to \({\widehat{\Gamma }}_{\infty }\). The proof of the above theorem is provided in [19, Theorem 2.4] and is based on matrix-valued Lyapunov and minorisation conditions (choosing the Lyapunov candidate, \(\Lambda (\cdot )\)).

For one-dimensional models, the article [21] provides explicit analytical expressions for the reversible measure of \(\widehat{P}_t\) in terms of the model parameters. As expected, heavy tailed reversible measures arise when \(\kappa =1\), and weighted Gaussian distributions when \(\kappa =0\). The article [21] also provides sharp exponential decay rates to equilibrium, in the sense that the decay rates tend to those of the limiting deterministic Riccati equation when \(\textsf{N}\) tends to \(\infty \).

In a later section, we explore the one-dimensional case in more detail and explicitly examine the invariant measures in each model \(\kappa \in \{0,1\}\). The contrast between the steady-state invariant measures in each case \(\kappa \in \{0,1\}\) provides some insight into various phenomenon seen in practice we believe, e.g. so-called catastrophic divergence, and fluctuations of the sample covariance, etc. We also state the strong \(\mathbb {L}_n\)-type contraction of \({\widehat{\phi }}_t(Q)\) in both cases (F1) and (F2).

5.2 Contraction properties of exponential semigroups

Recall that the stability properties of the deterministic (\(\textsf{N}=\infty \)) semigroups \(\mathcal {E}_{s,t}(Q)\) associated with the classical Kalman–Bucy filter are rather well understood, e.g. see (2.13), (2.15), and (2.14) and also [16, 18]. We emphasise that in the deterministic case, stability of the matrix-valued Riccati differential equation, e.g. as in (2.20), follows from the contraction properties of \(\mathcal {E}_{s,t}(Q)\) in (2.13); see [16, 18] for the derivation. Some intuition for this follows from the implicit form for the solution in (2.17). Similarly, in classical Kalman–Bucy filter, the stability properties of the error flow (2.6) are related to the contraction properties of the state-transition matrix \(\mathcal {E}_{s,t}(Q)\). Again, the intuition follows from the solution form in (2.16). The stability properties of the classical Kalman–Bucy error flow are given in, e.g. (2.22) and (2.24); see [16].

We come now to the contractive properties of \(\widehat{\mathcal {E}}_{s,t}(Q)\) defined in (5.8). The stability of \(\widehat{\mathcal {E}}_{s,t}(Q)\) will naturally play a role in the derivation of contraction results on, e.g. the sample error flow \({\widehat{\psi }}_t(z,Q)\), see (5.9). Indeed, we also require stability of \(\widehat{\mathcal {E}}_{s,t}(Q)\) to derive fluctuation results on the sample error flow \({\widehat{\psi }}_t(z,Q)\). Note we did not need stability of the exponential semigroup to derive fluctuation results on the sample covariance \({\widehat{\phi }}_t(z,Q)\) earlier.

Firstly, we remark that if \(S\in \mathbb {S}_d^+\), then up to a change of basis we can always assume that \(S=I\). Then, for any \(s,t\in [0,\infty [\) we immediately have the rather crude almost sure estimate

for any logarithmic norm. Note again that if \(\textrm{Absc}(A)<0\), then \(\mu \left( A\right) <0\) for some log-norm. In any case, in general, asking for A to be stable is a very strong and restrictive condition. We typically seek contraction results on \(\widehat{\mathcal {E}}_{s,t}(Q)\) that accommodate arbitrary \(A\in \mathbb {M}_d\) matrices; in particular, we seek to accommodate unstable signal matrices A, i.e. matrices with (some) non-negative eigenvalues. To this end, fix \(Q\in \mathbb {S}^0_d\) and consider the process \({\widehat{\mathcal {A}}}\) defined by

We write \(\mathcal {A}\) for the analogous process driven by \(\phi _t(Q)\), i.e. with \(\textsf{N}=\infty \), which we know under just detectability conditions is a time-varying stabilising matrix process [139].

We seek to characterise, in a useful manner, the fluctuation of the stochastic process \( {\widehat{\mathcal {A}}}\) about \(\mathcal {A}\), with the hope that the contractive properties of \(\widehat{\mathcal {E}}_{s,t}\) can then be in some sense related to the established contractive properties of \({\mathcal {E}}_{s,t}\).

For example, given Assumption O and \(\kappa =0\), combining (5.19) (5.21) and (2.15) with Krause’s inequality [86] for any \(nd\ge 1\), we have the uniform fluctuation estimate,

where we define the optimal matching distance between the spectrum of matrices \(A,B\in \mathbb {M}_d\) by

where the minimum is taken over the set of d! permutations of \(\{1,\ldots ,d\}\). This spectral estimate is of interest on its own, but is not immediately usable for controlling the contraction properties of the exponential semigroups.

By Theorem 5.3 and Theorem 5.4, under Assumption O, the collection of processes \((\mathcal {A},{\widehat{\mathcal {A}}})\) satisfy the following regularity properties:

-

Case \(\kappa \in \{1,0\}\): For any \(n\ge 1\), \(t\ge 0\), \(Q\in \mathbb {S}_d^0\), and any \(\textsf{N}\) sufficiently large, we have the uniform estimates

$$\begin{aligned} \sqrt{\textsf{N}}\, \mathbb {E}\left[ \left\| \mathcal {A}_t-{\widehat{\mathcal {A}}}_t \right\| ^n\right] ^{\frac{1}{n}} \,\le \, c_n\,(1+\Vert Q\Vert ^7)~\quad \textrm{and}\quad ~ \mathbb {E}\left[ \left\| {\widehat{\mathcal {A}}}_t \right\| ^n\right] ^{\frac{1}{n}} \,\le \, c_n\,(1+ \Vert Q\Vert )\nonumber \\ \end{aligned}$$(5.34) -

Case \(\kappa =0\): For any \(n\ge 1\), \(t\ge 0\), \(Q\in \mathbb {S}_d^0\), and any \(\textsf{N}\ge 1\), we have the uniform estimates

$$\begin{aligned} \sqrt{\textsf{N}}\,\mathbb {E}\left[ \left\| \mathcal {A}_t-{\widehat{\mathcal {A}}}_t \right\| ^n\right] ^{\frac{1}{n}} \,&\le \, c\,(1+ \Vert Q\Vert ^5)\,\left( 1+\frac{\sqrt{n}}{\sqrt{\textsf{N}}}\right) ^5, \end{aligned}$$(5.35)and

$$\begin{aligned} \sqrt{\textsf{N}} \,\mathbb {E}\left[ \left\| {\widehat{\mathcal {A}}}_t \right\| ^n\right] ^{\frac{1}{n}} \,&\le \, c\,(1+ \Vert Q\Vert )\,(1+\sqrt{n}) \end{aligned}$$

The stability properties of stochastic semigroups associated with a general collection of stochastic flows \((\mathcal {A},{\widehat{\mathcal {A}}})\) satisfying fluctuation and moment boundedness properties in a general form accommodating both (5.34) and (5.35) have been developed in our prior work [17]. Several local-type contraction estimates can now be derived.

Theorem 5.6

Let \(\kappa \in \{1,0\}\) and suppose Assumptions O and C hold. Then, for any increasing sequence \(0\le s \le t_k\uparrow _{k\rightarrow \infty }\infty \), and for any \(Q\in \mathbb {S}_d^0\), the probability of the following event

for any \(\nu \in ]0,1[\), as soon as \(\textsf{N}\) is sufficiently large (as a function of \(\nu \in ]0,1[\)).

This log-Lyapunov estimate (5.36) immediately implies the semigroup \(\widehat{\mathcal {E}}_{s,t_k}(Q)\) is exponentially contracting with a high probability (in both cases \(\kappa \in \{1,0\}\)); given a sufficient number of particles, and the observability and controllability Assumptions O and C.

A number of reformulations of this result that offer insight individually are worth stating:

-

Let \(\kappa \in \{1,0\}\). For any \(0\le s \le t_{k_1}\uparrow _{{k_1}\rightarrow \infty }\infty \), there exists a sequence \(\textsf{N}:=\textsf{N}_{k_2}\uparrow _{{k_2}\rightarrow \infty } \infty \) such that we have the almost sure Lyapunov estimate

$$\begin{aligned} \limsup _{{k_2}\rightarrow \infty }\limsup _{{k_1}\rightarrow \infty }\frac{1}{t_{k_1}}\,\log {\Vert \widehat{\mathcal {E}}_{s,s+t_{k_1}}(Q))\Vert }\,\le \, \frac{1}{2}\,{\overline{\mu }}(A-{P}_{\infty }S) \end{aligned}$$(5.37) -

Let \(\kappa \in \{1,0\}\). Then, for any increasing sequence of times \(0\le s \le t_k\uparrow _{k\rightarrow \infty }\infty \), the probability of the following event,

$$\begin{aligned} \left\{ \begin{array}{l} \forall 0<\nu _2\le 1~~~ \exists l\ge 1 ~~~\hbox {such~that}~~~ \forall k\ge l~~~\hbox {it~holds~that~} \\ ~ \qquad \qquad \qquad \qquad \qquad \qquad \qquad \displaystyle \frac{1}{t_k}\log {\Vert \widehat{\mathcal {E}}_{s,t_k}(Q)\Vert } \,\le \, \frac{1}{2}\,(1-\nu _2)\,{\overline{\mu }}(A-{P}_{\infty }S) \end{array}\right\} \end{aligned}$$(5.38)is greater than \(1-\nu _1\), for any \(\nu _1\in ]0,1[\), as soon as \(\textsf{N}\) is sufficiently large (as a function of \(n\ge 1\) and \(\nu _1\in ]0,1[\)).

-