Abstract

Consider a finite collection of affine hyperplanes in \(\mathbb R^d\). The hyperplanes dissect \(\mathbb R^d\) into finitely many polyhedral chambers. For a point \(x\in \mathbb R^d\) and a chamber P, the metric projection of x onto P is the unique point \(y\in P\) minimizing the Euclidean distance to x. The metric projection is contained in the relative interior of a uniquely defined face of P whose dimension is denoted by \(\text {dim}(x,P)\). We prove that for every given \(k\in \{0,\ldots , d\}\), the number of chambers P for which \(\text {dim}(x,P) = k\) does not depend on the choice of x, with the exception of a Lebesgue null set. Moreover, this number is equal to the absolute value of the k-th coefficient of the characteristic polynomial of the hyperplane arrangement. In the special case of reflection arrangements, this proves a conjecture of Drton and Klivans [A geometric interpretation of the characteristic polynomial of reflection arrangements. Proc. Amer. Math. Soc. 138(8), 2873–2887 (2010)].


1 Introduction and Statement of Results

1.1 Introduction

The starting point of the present paper is the following conjecture of Drton and Klivans [7, Conjecture 6]. Consider a finite reflection group \(\mathscr {W}\) acting on \(\mathbb {R}^d\). The mirror hyperplanes of the reflecting elements of \(\mathscr {W}\) dissect \(\mathbb {R}^d\) into isometric cones or chambers. Let C be one of these cones. Take some \(k\in \{0,\ldots ,d\}\). A point \(x\in \mathbb {R}^d\) is said to have a k-dimensional projection onto C if the unique element \(y\in C\) minimizing the Euclidean distance to x is contained in a k-dimensional face of C but not in a face of smaller dimension. For example, points in the interior of C have a d-dimensional projection onto C.

Conjecture 1.1

(Drton and Klivans [7]) For a “generic” point \(x\in \mathbb {R}^d\), the number of points in the orbit \(\{gx: g\in \mathscr {W}\}\) having a k-dimensional projection onto C is constant, that is independent of x. Moreover, this number equals the absolute value \(a_k\) of the coefficient of \(t^k\) in the characteristic polynomial of the reflection arrangement.

Drton and Klivans [7] observed that in the case of reflection groups of type A their conjecture follows from the work of Miles [19], proved it for reflection groups of types B and D, and gave further partial results on the conjecture including numerical evidence for its validity in the case of exceptional reflection groups. Somewhat later, Klivans and Swartz [16] proved that if x is chosen at random according to a rotationally invariant distribution on \(\mathbb {R}^d\), then the conjecture of Drton and Klivans is true on average, that is the expected number of points in the orbit \(\{gx: g\in \mathscr {W}\}\) having a k-dimensional projection onto C equals \(a_k\).

The aim of the present paper is to prove Conjecture 1.1 in the much more general setting of arbitrary affine hyperplane arrangements. After collecting the necessary definitions in Sect. 1.2 we shall state our main results in Sect. 1.3.

1.2 Definitions

A polyhedral set in \(\mathbb {R}^d\) is an intersection of finitely many closed half-spaces. A bounded polyhedral set is called a polytope. If the hyperplanes bounding the half-spaces pass through the origin, the intersection of these half-spaces is called a polyhedral cone, or just a cone. We denote by \(\mathscr {F}_k(P)\) the set of all k-dimensional faces of a polyhedral set \(P\subset \mathbb {R}^d\), for all \(k\in \{0,\ldots , d\}\). For example, \(\mathscr {F}_0(P)\) is the set of vertices of P, while \(\mathscr {F}_d(P) = \{P\}\) provided P has non-empty interior. The set of all faces of P of whatever dimension is then denoted by \(\mathscr {F}(P) = \bigcup _{k=0}^d \mathscr {F}_k(P)\). The relative interior of a face F, denoted by \({{\,\textrm{relint}\,}}F\), consists of all points belonging to F but not to a face of strictly smaller dimension. It is known that any polyhedral set is a disjoint union of the relative interiors of its faces:

\[ P = \bigsqcup _{F\in \mathscr {F}(P)} {{\,\textrm{relint}\,}}F. \tag{1} \]

For more information on polyhedral sets and their faces we refer to [20, Sections 7.2 and 7.3], [21] and [26, Chapters 1 and 2]. Polyhedral sets form a subclass of the family of closed convex sets; for the face structure in this more general setting we refer to [22, §2.1 and §2.4].

Given a polyhedral set P and a point \(x\in \mathbb {R}^d\), there is a uniquely defined point minimizing the Euclidean distance \(\Vert x-y\Vert \) among all \(y\in P\). This point, denoted by \(\pi _P(x)\), is called the metric projection of x onto P. For example, if \(x\in P\), then \(\pi _P(x) = x\). By (1), the metric projection \(\pi _P(x)\) is contained in the relative interior of a uniquely defined face F of P. If the dimension of F is k, we say that the point x has a k-dimensional metric projection onto P and write \(\dim (x,P) = k\).

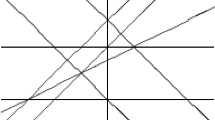

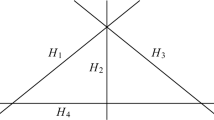

Next we need to recall some basic facts about hyperplane arrangements referring to [25] and [3, Section 1.7] for more information. Let \(\mathscr {A}= \{H_1,\ldots ,H_m\}\) be an affine hyperplane arrangement in \(\mathbb {R}^d\), that is a collection of pairwise distinct affine hyperplanes \(H_1,\ldots , H_m\) in \(\mathbb {R}^d\). In general, the hyperplanes are not required to pass through the origin, but if they all do, the arrangement is called linear (or central). The connected components of the complement \(\mathbb {R}^d\backslash \bigcup _{i=1}^m H_i\) are called open chambers, while their closures are called closed chambers of \(\mathscr {A}\). The closed chambers are polyhedral sets which cover \(\mathbb {R}^d\) and have disjoint interiors. The collection of all closed chambers will be denoted by \(\mathscr {R}(\mathscr {A})\). If not otherwise stated, the word “chamber” always refers to a closed chamber in the sequel. The characteristic polynomial of the affine hyperplane arrangement \(\mathscr {A}\) may be defined by the following Whitney formula [25, Theorem 2.4]:

\[ \chi _\mathscr {A}(t) = \sum _{\substack{\mathscr {B}\subset \mathscr {A}:\\ \bigcap _{H\in \mathscr {B}} H \ne \varnothing }} (-1)^{\# \mathscr {B}}\, t^{\dim \left( \bigcap _{H\in \mathscr {B}} H\right) }. \tag{2} \]

Here, \(\# \mathscr {B}\) denotes the number of elements in \(\mathscr {B}\). The empty set \(\mathscr {B}=\varnothing \), for which the corresponding intersection of hyperplanes is defined to be \(\mathbb {R}^d\), contributes the term \(t^d\) to the above sum. The notation \(\subset \) is also used in the cases of equality in this paper. The classical Zaslavsky formulae [25, Theorem 2.5] state that the total number of chambers is given by \(\#\mathscr {R}(\mathscr {A}) = (-1)^d \chi _\mathscr {A}(-1)\), while the number of bounded chambers is equal to \((-1)^{\mathop {\textrm{rank}}\nolimits \mathscr {A}}\chi _\mathscr {A}(1)\), where \(\mathop {\textrm{rank}}\nolimits \mathscr {A}\) is the dimension of the linear space spanned by the normals to the hyperplanes of \(\mathscr {A}\). For the coefficients of the characteristic polynomial it will be convenient to use the notation

\[ \chi _\mathscr {A}(t) = \sum _{k=0}^d (-1)^{d-k} a_k t^k. \tag{3} \]

1.3 Main Result

We are now ready to state a simplified version of our main result.

Theorem 1.2

Let \(\mathscr {A}\) be an affine hyperplane arrangement in \(\mathbb {R}^d\) whose characteristic polynomial \(\chi _\mathscr {A}(t)\) is written in the form (3). Take some \(k\in \{0,\ldots ,d\}\). Then,

\[ \#\{P\in \mathscr {R}(\mathscr {A}): \dim (x,P) = k\} = a_k \]

for every \(x\in \mathbb {R}^d\) outside a certain exceptional set which is a finite union of affine hyperplanes.

Example 1.3

(Zaslavsky’s first formula) Let us show that Theorem 1.2 generalizes Zaslavsky’s first formula \(\#\mathscr {R}(\mathscr {A}) = (-1)^d \chi _\mathscr {A}(-1)\). Take some point \(x\in \mathbb {R}^d\) outside the exceptional set. On the one hand, for every chamber \(P\in \mathscr {R}(\mathscr {A})\) there is a unique face whose relative interior contains the metric projection \(\pi _P(x)\), hence interchanging the order of summation we get

\[ \sum _{k=0}^d \#\{P\in \mathscr {R}(\mathscr {A}): \dim (x,P) = k\} = \sum _{P\in \mathscr {R}(\mathscr {A})} \sum _{k=0}^d \mathbb {1}_{\{\dim (x,P) = k\}} = \# \mathscr {R}(\mathscr {A}). \]

On the other hand, the sum on the left-hand side equals \(\sum _{k=0}^d a_k\) by Theorem 1.2. Altogether, we arrive at \(\# \mathscr {R}(\mathscr {A}) = \sum _{k=0}^d a_k\), which is Zaslavsky’s first formula.
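
The Whitney formula and Zaslavsky's first formula can be checked numerically on small arrangements. The following is a minimal sketch, not taken from the paper: it assumes that each hyperplane is given as \(\{z: \langle z, y_i\rangle = c_i\}\) by a normal vector and an offset, and the helper names `rank`, `characteristic_polynomial` and `evaluate` are hypothetical. A subcollection of hyperplanes has non-empty intersection exactly when the coefficient matrix and the augmented matrix have the same rank r, and in that case the intersection has dimension d - r.

```python
from fractions import Fraction
from itertools import combinations


def rank(rows):
    """Rank of a matrix given as a list of rows, by exact Gaussian elimination."""
    m = [[Fraction(v) for v in r] for r in rows]
    rk = 0
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        pivot = next((i for i in range(rk, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[rk], m[pivot] = m[pivot], m[rk]
        for i in range(rk + 1, len(m)):
            f = m[i][col] / m[rk][col]
            m[i] = [a - f * b for a, b in zip(m[i], m[rk])]
        rk += 1
    return rk


def characteristic_polynomial(normals, offsets):
    """Coefficients [c_0, ..., c_d] of chi(t), computed from the Whitney formula."""
    d = len(normals[0])
    coeffs = [0] * (d + 1)
    for size in range(len(normals) + 1):
        for subset in combinations(range(len(normals)), size):
            a = [list(normals[i]) for i in subset]
            ab = [list(normals[i]) + [offsets[i]] for i in subset]
            r = rank(a)
            if r == rank(ab):  # the linear system is solvable: non-empty intersection
                coeffs[d - r] += (-1) ** size
    return coeffs


def evaluate(coeffs, t):
    """Value of the polynomial with the given coefficients at t."""
    return sum(c * t ** k for k, c in enumerate(coeffs))


# Three lines in general position in the plane: x1 = 0, x2 = 0, x1 + x2 = 1.
chi = characteristic_polynomial([(1, 0), (0, 1), (1, 1)], [0, 0, 1])
a = [abs(c) for c in chi]
number_of_chambers = (-1) ** 2 * evaluate(chi, -1)
```

For this arrangement the sketch should reproduce the count of regions cut out by three lines in general position, both as \((-1)^d\chi _\mathscr {A}(-1)\) and as the sum of the absolute values of the coefficients. The enumeration over all subcollections is exponential in the number of hyperplanes, so it is meant for illustration only.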

Example 1.4

(Reflection arrangements) Consider a finite reflection group \(\mathscr {W}\) acting on \(\mathbb {R}^d\). This means that \(\mathscr {W}\) is a finite group generated by reflections with respect to linear hyperplanes; see the books [10] and [13] for the necessary background. The associated reflection arrangement consists of all hyperplanes H with the property that the reflection with respect to H belongs to \(\mathscr {W}\). Let \(\chi (t)\) be the characteristic polynomial of this arrangement and C one of its chambers. Drton and Klivans [7, Conjecture 6] conjectured that for a “generic” point \(x\in \mathbb {R}^d\) the number of group elements \(g\in \mathscr {W}\) with \(\dim (gx, C) = k\) is equal to the absolute value of the coefficient of \(t^k\) in \(\chi (t)\), for all \(k\in \{0,\ldots ,d\}\). This conjecture is an easy consequence of Theorem 1.2. Indeed, since every \(g\in \mathscr {W}\) is an isometry, \(\dim (gx, C)\) equals \(\dim (x, g^{-1}C)\). If g runs through all elements of \(\mathscr {W}\), then \(g^{-1}C\) runs through all chambers of the reflection arrangement, and the conjecture follows from Theorem 1.2. Note that the characteristic polynomials of the reflection arrangements are known explicitly; see, e.g., [3, page 124].
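
For reflection groups of type A the statement can be tested by brute force. The sketch below is an illustration under stated assumptions, not the method of the paper. It takes the braid arrangement \(\{x_i = x_j\}\) in \(\mathbb {R}^4\), whose 24 chambers are the cones \(\{y: y_{\sigma (1)}\le \ldots \le y_{\sigma (4)}\}\), and uses two standard facts: the metric projection onto such a cone is the isotonic regression of the permuted coordinates (computed here by the pool-adjacent-violators algorithm), and the characteristic polynomial of this arrangement is \(t(t-1)(t-2)(t-3)\). The dimension of the face containing the projection in its relative interior is the number of constant blocks of the isotonic fit. The function names are hypothetical, and the point x = (0, 1, 3, 7) was chosen so that no two disjoint groups of coordinates have the same average.

```python
from fractions import Fraction
from itertools import permutations


def isotonic_block_count(seq):
    """Number of constant blocks in the least-squares nondecreasing fit of seq."""
    blocks = []  # each block is stored as [sum of values, number of values]
    for v in seq:
        blocks.append([Fraction(v), 1])
        # Pool the last two blocks while their means violate monotonicity.
        while (len(blocks) > 1
               and blocks[-2][0] * blocks[-1][1] >= blocks[-1][0] * blocks[-2][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    return len(blocks)


def projection_dimension_counts(x):
    """counts[k] = number of chambers P of the braid arrangement with dim(x, P) = k."""
    counts = [0] * (len(x) + 1)
    for perm in permutations(x):
        counts[isotonic_block_count(perm)] += 1
    return counts


def rising_factorial_coefficients(n):
    """Coefficients of t(t+1)...(t+n-1), i.e. absolute values of those of t(t-1)...(t-n+1)."""
    coeffs = [1]
    for j in range(n):
        new = [0] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            new[k + 1] += c      # contribution of c * t^k * t
            new[k] += j * c      # contribution of c * t^k * j
        coeffs = new
    return coeffs


counts = projection_dimension_counts((0, 1, 3, 7))
expected = rising_factorial_coefficients(4)
```

Under these assumptions `counts` and `expected` agree entry by entry, which is the type A instance of the conjecture. Exact rational arithmetic is used so that ties between block means are detected reliably.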

Remark 1.5

Theorem 1.2 has been obtained in [17, Corollary 5.13]. Our proof is quite different and more elementary.

Let us now restate Theorem 1.2 in a more explicit form involving a concrete description of the exceptional set. First we need to define the notions of tangent and normal cones. Let

\[ \mathop {\textrm{pos}}\nolimits A = \Big \{ \sum _{i=1}^n \lambda _i a_i:\; n\in \mathbb {N},\; a_1,\ldots ,a_n\in A,\; \lambda _1,\ldots ,\lambda _n\ge 0\Big \} \]

denote the positive hull of a set \(A\subset \mathbb {R}^d\). The tangent cone of a polyhedral set P at its face \(F\in \mathscr {F}(P)\) is defined by

\[ T_F(P) = \{u\in \mathbb {R}^d:\; f_0+\delta u\in P \text { for some } \delta >0\} = \mathop {\textrm{pos}}\nolimits (P-f_0), \tag{4} \]

where \(f_0\) is an arbitrary point in \({{\,\textrm{relint}\,}}F\). It is known that this definition does not depend on the choice of \(f_0\) and that \(T_F(P)\) is a polyhedral cone. Moreover, \(T_F(P)\) contains the linear subspace \(\mathop {{\textrm{aff}}}\nolimits F- f_0\), where \(\mathop {{\textrm{aff}}}\nolimits F\) is the affine hull of F, i.e., the minimal affine subspace containing F. For a polyhedral cone \(C\subset \mathbb {R}^d\), its polar cone is defined by

\[ C^\circ = \{v\in \mathbb {R}^d:\; \langle v, z\rangle \le 0 \text { for all } z\in C\}, \]

where \(\langle \cdot , \cdot \rangle \) denotes the standard Euclidean scalar product on \(\mathbb {R}^d\). The normal cone of a polyhedral set P at its face \(F\in \mathscr {F}(P)\) is defined as the polar cone of the tangent cone:

\[ N_F(P) = (T_F(P))^\circ . \]

By definition, \(N_F(P)\) is a polyhedral cone contained in \((\mathop {{\textrm{aff}}}\nolimits F)^\bot \), the orthogonal complement of \(\mathop {{\textrm{aff}}}\nolimits F\). Here, the orthogonal complement of an affine subspace \(A\subset \mathbb {R}^d\) is the linear subspace

\[ A^\bot = \{z\in \mathbb {R}^d:\; \langle z, a_1-a_2\rangle = 0 \text { for all } a_1,a_2\in A\}. \]

Now, the metric projection of a point \(x\in \mathbb {R}^d\) onto a polyhedral set P satisfies \(\pi _P(x) \in F\) for a face \(F\in \mathscr {F}(P)\) if and only if \(x\in F+N_F(P)\). Here, \(A+B = \{a+b: a\in A, b\in B\}\) is the Minkowski sum of the sets \(A,B\subset \mathbb {R}^d\), which in our special case is even orthogonal meaning that every vector from \(N_F(P)\) is orthogonal to every vector from F. Similarly, we have

\[ \pi _P(x) \in {{\,\textrm{relint}\,}}F \quad \Longleftrightarrow \quad x\in {{\,\textrm{relint}\,}}F + N_F(P). \]

Let \({{\,\textrm{int}\,}}A\) denote the interior of a set A, and let \(\partial A=A\backslash {{\,\textrm{int}\,}}A\) be the boundary of A. We are now ready to restate our main result in a more explicit form.

Theorem 1.6

Let \(\mathscr {A}\) be an affine hyperplane arrangement in \(\mathbb {R}^d\) whose characteristic polynomial \(\chi _\mathscr {A}(t)\) is written in the form (3). Then, for every \(k\in \{0,\ldots ,d\}\) we have

\[ \varphi _k(x):= \sum _{P\in \mathscr {R}(\mathscr {A})} \sum _{F\in \mathscr {F}_k(P)} \mathbb {1}_{F+N_F(P)}(x) = a_k \quad \text {for all } x\in \mathbb {R}^d\backslash E_k, \tag{5} \]

where the exceptional set \(E_k\) is given by

\[ E_k = \bigcup _{P\in \mathscr {R}(\mathscr {A})} \bigcup _{F\in \mathscr {F}_k(P)} \partial (F+N_F(P)). \]

Also, we have \(\varphi _k(x) \ge a_k\) for every \(x\in \mathbb {R}^d\).

An equivalent representation of the set \(E_k\), implying that it is a finite union of affine hyperplanes, will be given below; see (42) and (43).

Example 1.7

Let us consider a simple example showing that the exceptional set cannot be removed from the statement of Theorem 1.6. Consider an arrangement \(\mathscr {A}\) consisting of the coordinate axes \(\{x_1=0\}\) and \(\{x_2=0\}\) in \(\mathbb {R}^2\). There are four chambers and the characteristic polynomial is given by \(\chi _\mathscr {A}(t) = (t-1)^2\). It is easy to check that the functions \(\varphi _0\) and \(\varphi _1\) defined in (5) are given by

These functions are strictly larger than \(a_0=1\) and \(a_1=2\) on the exceptional set \(E_0=E_1 = \{x_1=0\}\cup \{x_2=0\}\).
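
The role of the exceptional set can also be seen by counting chambers according to \(\dim (x,P)\), as in Theorem 1.2. The following sketch is an illustration that is not part of the paper. It assumes the arrangement of the d coordinate hyperplanes in \(\mathbb {R}^d\), whose chambers are the \(2^d\) closed orthants and whose characteristic polynomial is \((t-1)^d\) (a standard fact that reduces to the example above for d = 2). The projection onto an orthant keeps the coordinates with the right sign and sets the others to zero, and the face containing it in its relative interior has dimension equal to the number of non-zero coordinates. The function names are hypothetical.

```python
from itertools import product
from math import comb


def dim_of_projection(x, signs):
    """dim(x, P) for the orthant P = {y : signs[i] * y[i] >= 0 for all i}."""
    # Metric projection onto the orthant: keep coordinates of the right sign.
    y = [xi if s * xi >= 0 else 0 for xi, s in zip(x, signs)]
    # The face containing y in its relative interior is spanned by the
    # coordinate directions in which y does not vanish.
    return sum(1 for yi in y if yi != 0)


def chamber_counts(x):
    """counts[k] = number of orthants P with dim(x, P) = k."""
    d = len(x)
    counts = [0] * (d + 1)
    for signs in product((1, -1), repeat=d):
        counts[dim_of_projection(x, signs)] += 1
    return counts


generic = chamber_counts((0.3, -1.7))   # a point off both coordinate axes
on_axis = chamber_counts((0, 1))        # a point on the line x1 = 0
generic_3d = chamber_counts((2, -1, 5))
binomials_3d = [comb(3, k) for k in range(4)]
```

For points with all coordinates non-zero the counts are the binomial coefficients, that is, the absolute values of the coefficients of \((t-1)^d\). For a point on a coordinate hyperplane the counts change, which is consistent with the need for an exceptional set.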

Remark 1.8

(Similar identities) It is interesting to compare Theorem 1.6 to the following identity: For every polyhedral set \(P\subset \mathbb {R}^d\) we have

for all \(x\in \mathbb {R}^d\), without an exceptional set. Various versions of this formula valid outside certain exceptional sets of Lebesgue measure 0 have been obtained starting with the work of McMullen [18, page 249]; see [24, Proof of Theorem 6.5.5], [8, Hilfssatz 4.3.2], [9, 11, Corollary 2.25 on page 89]. The exceptional set has been removed independently in [23] (for polyhedral cones) and in [12] (for general polyhedral sets). Cowan [4] proved another identity for alternating sums of indicator functions of convex hulls. The exceptional set in Cowan’s identity has been subsequently removed in [14].

2 Implications and Extensions of the Main Result

2.1 Conic Intrinsic Volumes and Characteristic Polynomials

As another consequence of our result we can re-derive a formula due to Klivans and Swartz [16, Theorem 5] which expresses the coefficients of the characteristic polynomial of a linear hyperplane arrangement through the conic intrinsic volumes of its chambers. Let us first define conic intrinsic volumes; see [24, Sect. 6.5] and [1, 2] for more details. Let \(\xi \) be a random vector having an arbitrary rotationally invariant distribution on \(\mathbb {R}^d\). As examples, one can think of the uniform distribution on the unit sphere in \(\mathbb {R}^d\) or the standard normal distribution. The k-th conic intrinsic volume \(\nu _k(C)\) of a polyhedral cone \(C\subset \mathbb {R}^d\) is defined as the probability that the metric projection of \(\xi \) onto C belongs to a relative interior of a k-dimensional face of C, that is

\[ \nu _k(C) = \mathbb {P}\Big [\pi _C(\xi ) \in \bigcup _{F\in \mathscr {F}_k(C)} {{\,\textrm{relint}\,}}F\Big ] = \mathbb {P}[\dim (\xi ,C) = k]. \]

To state the formula of Klivans and Swartz [16, Theorem 5], consider a linear hyperplane arrangement, i.e., a finite collection \(\mathscr {A}=\{H_1,\ldots ,H_m\}\) of hyperplanes in \(\mathbb {R}^d\) passing through the origin. The hyperplanes dissect \(\mathbb {R}^d\) into finitely many polyhedral cones (the chambers of the arrangement). The formula of Klivans and Swartz [16, Theorem 5] states that for every \(k\in \{0,\ldots ,d\}\) the sum of \(\nu _k(C)\) over all chambers is equal to the absolute value of the k-th coefficient of the characteristic polynomial \(\chi _\mathscr {A}(t)\), namely

\[ \sum _{C\in \mathscr {R}(\mathscr {A})} \nu _k(C) = a_k. \tag{8} \]

For proofs and extensions of the Klivans–Swartz formula see [15, Theorem 4.1], [1, Section 6] and [23, Equation (15) and Theorem 1.2]. To see that (8) is a consequence of our results, note that by Theorem 1.2 applied with x replaced by \(\xi \),

\[ \sum _{C\in \mathscr {R}(\mathscr {A})} \mathbb {1}_{\{\dim (\xi ,C) = k\}} = a_k \quad \text {almost surely.} \]

Taking the expectation and interchanging it with the sum yields (8). Thus, in the setting of linear arrangements, our result can be seen as an almost sure version of the Klivans–Swartz formula.

2.2 Extension to j-th Level Characteristic Polynomials

Let us finally mention one simple extension of the above results. Let \(\mathscr {L}(\mathscr {A})\) be the set of all non-empty intersections of hyperplanes from \(\mathscr {A}\). By convention, the whole space \(\mathbb {R}^d\) is also included in \(\mathscr {L}(\mathscr {A})\) as an intersection of the empty collection. Take some \(j\in \{0,\ldots ,d\}\) and let \(\mathscr {L}_j(\mathscr {A})\) denote the set of all j-dimensional affine subspaces in \(\mathscr {L}(\mathscr {A})\). The restriction of the arrangement \(\mathscr {A}\) to the subspace \(L\in \mathscr {L}(\mathscr {A})\) is defined as

\[ \mathscr {A}^L = \{H\cap L:\; H\in \mathscr {A},\; H\cap L\ne \varnothing ,\; H\cap L\ne L\}, \]

which is an affine hyperplane arrangement in the ambient space L. Note that it may happen that \(H_1\cap L = H_2\cap L\) for some different \(H_1,H_2\in \mathscr {A}\), in which case the corresponding hyperplane is listed just once in the arrangement \(\mathscr {A}^L\).

Now, the j-th level characteristic polynomial of \(\mathscr {A}\) may be defined as

\[ \chi _{\mathscr {A},j}(t) = \sum _{L\in \mathscr {L}_j(\mathscr {A})} \chi _{\mathscr {A}^L}(t). \tag{9} \]

We refer to [1, Section 2.4.1] for this and other equivalent definitions. Note that in the case \(j=d\) we recover the usual characteristic polynomial \(\chi _\mathscr {A}(t)\). For the coefficients of the j-th level characteristic polynomial we use the notation

\[ \chi _{\mathscr {A},j}(t) = \sum _{k=0}^j (-1)^{j-k} a_{kj} t^k. \tag{10} \]

Recall that \(\mathscr {R}(\mathscr {A})\) denotes the set of all closed chambers generated by the arrangement \(\mathscr {A}\). For \(j\in \{0,\ldots ,d\}\), let \(\mathscr {R}_j(\mathscr {A})\) be the set of all j-dimensional faces of all chambers, that is

\[ \mathscr {R}_j(\mathscr {A}) = \bigcup _{P\in \mathscr {R}(\mathscr {A})} \mathscr {F}_j(P). \]

The j-th level extension of Theorem 1.6 reads as follows.

Theorem 2.1

Let \(\mathscr {A}\) be an affine hyperplane arrangement in \(\mathbb {R}^d\) whose j-th level characteristic polynomial \(\chi _{\mathscr {A},j}(t)\) is written in the form (10). Then, for every \(j\in \{0,\ldots ,d\}\) and \(k\in \{0,\ldots ,j\}\) we have

where the exceptional set \(E_{kj}\) is given by

Proof of Theorem 2.1 assuming Theorem 1.6

Consider any \(L\in \mathscr {L}_j(\mathscr {A})\) and apply Theorem 1.6 to the hyperplane arrangement \(\mathscr {A}^L\) in the ambient space L. This yields

where \(L_0:=L-\pi _L(0)\) is a shift of the affine subspace L that contains the origin, the \(a_{k,L}\)’s are defined by the formulae

and the exceptional sets \(E_{k,L}\subset L\) are given by

Here, \(\partial _L\) denotes the boundary operator in the ambient space L. Note that in (12) the normal cone of \(F\in \mathscr {F}_k(P)\) in the ambient space L is represented as \(N_F(P)\cap L_0\), where \(N_F(P)\) denotes the normal cone in the ambient space \(\mathbb {R}^d\). Also, we have the orthogonal sum decomposition \(N_F(P) = (N_F(P) \cap L_0) + L^\bot \). Hence, we can rewrite (12) as

Since each j-dimensional face \(P\in \mathscr {R}_j(\mathscr {A})\) is contained in a unique affine subspace \(L\in \mathscr {L}_j(\mathscr {A})\), we can take the sum over all such L arriving at

for all \(x\in \mathbb {R}^d\) outside the following exceptional set:

Here, we used that \(\partial _L(A) + L^\bot = \partial (A+L^\bot )\) for every set \(A\subset L\). It follows from (9), (10), (13) that

which completes the proof. \(\square \)

Remark 2.2

Using almost the same argument as in Sect. 2.1, Theorem 2.1 yields the following j-th level extension of the Klivans–Swartz formula obtained in [1, Theorem 6.1] and [23, Equation (15)]:

3 Proof of Theorem 1.6

The remaining part of this paper is devoted to the proof of Theorem 1.6 which we shall subdivide into several steps. In Step 1 we prove Theorem 1.6 for \(k=0\) and linear arrangements. In Steps 2, 3, 4 we reduce the general case to this special case. Finally, in Step 5 we simplify the representation of the exceptional set.

Step 1. We start with a proposition which, as we shall see in Remark 3.3 below, implies Theorem 1.6 for linear hyperplane arrangements in the special case \(k=0\). Recall that the polar cone of a polyhedral cone \(C\subset \mathbb {R}^d\) is defined by

\[ C^\circ = \{v\in \mathbb {R}^d:\; \langle v, z\rangle \le 0 \text { for all } z\in C\}. \]

It is known that \(C^{\circ \circ } = C\); see [1, Proposition 2.3]. The lineality space of a cone C is the largest linear space contained in C and is explicitly given by \(C\cap (-C)\). It is known that the linear space spanned by the polar cone \(C^\circ \) coincides with the orthogonal complement of the lineality space of C; see, e.g., [1, Proposition 2.5] for a more general statement. In particular, the lineality space of C is trivial (i.e., equal to \(\{0\}\)) if and only if \(C^\circ \) has non-empty interior.

Proposition 3.1

Let \(\mathscr {A}\) be a linear hyperplane arrangement in \(\mathbb {R}^d\). Then,

\[ \sum _{C\in \mathscr {R}(\mathscr {A})} \mathbb {1}_{C^\circ }(x) = a_0 \quad \text {for all } x\in \mathbb {R}^d\backslash E_0^*, \tag{17} \]

where \(a_0\) is defined by (2) and (3) and the exceptional set \(E_0^*\) is given by

Proof

Since for linear arrangements \(C\mapsto -C\) defines a bijective self-map of \(\mathscr {R}(\mathscr {A})\) and since \((-C)^\circ = -(C^\circ )\), we have

Therefore, it suffices to prove that

Since every cone \(C\in \mathscr {R}(\mathscr {A})\) is full-dimensional, implying that the polar cone has trivial lineality space \(C^\circ \cap (-C^\circ ) = \{0\}\), it suffices to prove that

Let \(L(x):= \{\lambda x: \lambda \in \mathbb {R}\}\) be the line generated by \(x\in \mathbb {R}^d\backslash \{0\}\). Then, \(x\in C^\circ \cup (-C^\circ )\) if and only if \(L(x)\cap C^\circ \ne \{0\}\). It therefore suffices to prove that

In a slightly different form, this result is contained in [23, Theorem 1.2, Equation (16)]. For completeness, we provide a proof. By the Farkas lemma [1, Lemma 2.4], \(L(x) \cap C^\circ \ne \{0\}\) is equivalent to \(L(x)^\bot \cap {{\,\textrm{int}\,}}C = \varnothing \). Thus, we need to show that

By Zaslavsky’s first formula, the total number of chambers of \(\mathscr {A}\) is given by \(\# \mathscr {R}(\mathscr {A}) = (-1)^d \chi _\mathscr {A}(-1) = \sum _{k=0}^d a_k\). By Zaslavsky’s second formula, \(\chi _\mathscr {A}(1)=0\) (because there are no bounded chambers in a linear arrangement). Hence, \(\sum _{k=0}^d (-1)^k a_k = 0\) and it follows that \(\# \mathscr {R}(\mathscr {A}) = 2 \sum _{k=0}^{[d/2]}a_{2k}\). In view of this, it suffices to show that

This identity is known [15, Theorem 3.3] provided that the hyperplane \(L(x)^\bot \) is in general position with respect to the arrangement \(\mathscr {A}\). By definition [15, Section 3.1], the general position condition means that for every \(L\in \mathscr {L}(\mathscr {A})\) with \(L\ne \{0\}\), we have \(\dim (L\cap L(x)^\bot ) = \dim L-1\). This is the same as to require that L is not a subset of \(L(x)^\bot \) or, equivalently, that \(x\notin L^\bot \). So, the above identity holds for all \(x\in \mathbb {R}^d \backslash \bigcup _{L\in \mathscr {L}(\mathscr {A})\backslash \{0\}} (L^\bot )\), which completes the proof of (17). The second representation of the exceptional set \(E_0^*\) in (18) was mentioned just for completeness. We shall prove it in Lemma 3.8 without using it before. \(\square \)

Lemma 3.2

Let \(\mathscr {A}\) be a linear hyperplane arrangement in \(\mathbb {R}^d\). Then,

\[ \sum _{C\in \mathscr {R}(\mathscr {A})} \mathbb {1}_{C^\circ }(x) \ge a_0 \quad \text {for all } x\in \mathbb {R}^d. \]

Proof

The proof of Proposition 3.1 applies with minimal modifications. Indeed, by [15, Lemma 3.5, Equation (38)] (note that \(\mathscr {R}(\mathscr {A})\) denotes the collection of open chambers there), the equality in (20) has to be replaced by the inequality \(\le \), which means that the equality in (19) should be replaced by \(\ge \). The rest of the proof applies. \(\square \)

Remark 3.3

With Proposition 3.1 at hand, we can prove Theorem 1.6 for \(k=0\) provided the arrangement \(\mathscr {A}\) is linear. Assume first that \(\mathscr {A}\) is essential, i.e., it has full rank meaning that \(\bigcap _{H\in \mathscr {A}} H = \{0\}\). Then, \(F=\{0\}\) is the only 0-dimensional face of every chamber \(C\in \mathscr {R}(\mathscr {A})\). The normal cone of C at this face is \(N_{\{0\}}(C) = C^\circ \). Hence, the case \(k=0\) of Theorem 1.6 follows from Proposition 3.1 and Lemma 3.2. In the case of a non-essential linear arrangement, that is if \(L_*:= \bigcap _{H\in \mathscr {A}} H \ne \{0\}\), Theorem 1.6 becomes trivial for \(k=0\) as there are no 0-dimensional faces and the zeroth coefficient of \(\chi _\mathscr {A}(t)\) vanishes by its definition (2). Proposition 3.1 also becomes trivial since the polar cone \(C^\circ \) of every chamber C is contained in \(L_*^\bot \), which coincides with the exceptional set \(\bigcup _{L\in \mathscr {L}(\mathscr {A})\backslash \{0\}} (L^\bot )\). Since \(a_0=0\), both sides of (17) vanish for \(x\notin L_*^\bot \).
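
In the plane, the count of chambers whose polar cone contains x can be carried out by hand or with a few lines of code. The sketch below is an illustration under stated assumptions and not part of the paper's argument. It takes \(m\ge 2\) pairwise distinct lines through the origin in \(\mathbb {R}^2\), each given by a direction vector, and uses that the Whitney formula (2) then gives \(\chi _\mathscr {A}(t) = t^2 - mt + (m-1)\), so that \(a_0 = m-1\). The chambers are the 2m sectors between angularly consecutive rays, and x lies in the polar cone of a sector exactly when its scalar products with both bounding rays are non-positive. The function name `count_polar_hits` is hypothetical.

```python
import math


def count_polar_hits(line_directions, x):
    """Number of chambers C (sectors) whose polar cone contains the point x."""
    rays = []
    for a, b in line_directions:
        rays.append((a, b))
        rays.append((-a, -b))
    # Sort the 2m rays by angle; consecutive rays bound one sector each.
    rays.sort(key=lambda r: math.atan2(r[1], r[0]))
    hits = 0
    for i in range(len(rays)):
        r1 = rays[i]
        r2 = rays[(i + 1) % len(rays)]
        dot1 = r1[0] * x[0] + r1[1] * x[1]
        dot2 = r2[0] * x[0] + r2[1] * x[1]
        # The sector is the positive hull of r1 and r2, so x is in its polar
        # cone if and only if both scalar products are non-positive.
        if dot1 <= 0 and dot2 <= 0:
            hits += 1
    return hits


three_lines = [(1, 0), (0, 1), (1, 1)]
generic_count = count_polar_hits(three_lines, (3, 1))      # x orthogonal to no line
exceptional_count = count_polar_hits(three_lines, (0, 1))  # x orthogonal to the line x2 = 0
```

For the generic point the count equals m - 1, while for the point orthogonal to one of the lines it is strictly larger, in line with the inequality stated in Lemma 3.2.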

Remark 3.4

In the special case of reflection arrangements, Proposition 3.1 can be found in the paper of Denham [6, Theorem 2]; see also [5] for a related work.

Step 2. We are interested in the function

\[ \varphi _k(x) = \sum _{P\in \mathscr {R}(\mathscr {A})} \sum _{F\in \mathscr {F}_k(P)} \mathbb {1}_{F+N_F(P)}(x). \]

In this step we shall split \(\varphi _k(x)\) into contributions, denoted by \(\varphi _L(x)\), of faces lying in a common k-dimensional linear space L. First of all note that in the case \(k=d\) we trivially have \(\varphi _d(x) = 1\) for all \(x\in \mathbb {R}^d \backslash \bigcup _{H\in \mathscr {A}} H\). In the following, fix some \(k\in \{0,\ldots ,d-1\}\). Recall that \(\mathscr {R}_j(\mathscr {A})= \bigcup _{P\in \mathscr {R}(\mathscr {A})} \mathscr {F}_j(P)\) is the set of all j-dimensional faces of all chambers of \(\mathscr {A}\) (without repetitions). Interchanging the order of summation, we may write

Recall also that \(\mathscr {L}(\mathscr {A})\) is the set of all non-empty intersections of hyperplanes from \(\mathscr {A}\) and that \(\mathscr {L}_k(\mathscr {A})\) is the set of all k-dimensional affine subspaces in \(\mathscr {L}(\mathscr {A})\). Since each k-dimensional face \(F\in \mathscr {R}_k(\mathscr {A})\) is contained in a unique k-dimensional affine subspace \(L\in \mathscr {L}_k(\mathscr {A})\), we may split the sum in the above formula for \(\varphi _k(x)\) as follows:

where for each \(L\in \mathscr {L}_k(\mathscr {A})\) we define

Step 3. In this step we shall prove that for every \(k\in \{0,\ldots , d-1\}\) and every \(L\in \mathscr {L}_k(\mathscr {A})\) the function \(\varphi _L(x)\) defined in (22) is constant outside the exceptional set

where

For \(k=0\) we put \(E'(L):= \varnothing \). Note that E(L) is a finite union of affine hyperplanes. Moreover, we shall identify the value of the constant in terms of the characteristic polynomial of some hyperplane arrangement in \(L^\bot \), the orthogonal complement of L. The final result will be stated in Proposition 3.5 at the end of this step.

First we need to introduce some notation. Recall that \(\langle \cdot , \cdot \rangle \) denotes the standard Euclidean scalar product on \(\mathbb {R}^d\). Let the affine hyperplanes \(H_1,\ldots ,H_m\) constituting the arrangement \(\mathscr {A}\) be given by the equations

\[ H_i = \{z\in \mathbb {R}^d:\; \langle z, y_i\rangle = c_i\}, \quad i=1,\ldots ,m, \]

for some vectors \(y_1,\ldots ,y_m \in \mathbb {R}^d\backslash \{0\}\) and some scalars \(c_1,\ldots , c_m\in \mathbb {R}\). Every closed chamber of the arrangement \(\mathscr {A}\) can be represented in the form

with a suitable choice of \(\varepsilon _1,\ldots ,\varepsilon _m\in \{-1,+1\}\). Conversely, every set of the above form defines a closed chamber provided its interior is non-empty. Note in passing that the interior of this chamber is represented by the corresponding strict inequalities as follows:

Finally, the chambers determined by two different tuples \((\varepsilon _1,\ldots ,\varepsilon _m)\) and \((\varepsilon _1',\ldots ,\varepsilon _m')\) have disjoint interiors. Indeed, if the tuples differ in the i-th component, then any point z in the relative interior of one chamber satisfies \(\langle z, y_i\rangle < c_i\), whereas the points in the relative interior of the other chamber satisfy the converse inequality.

Fix some k-dimensional affine subspace \(L\in \mathscr {L}_k(\mathscr {A})\), where \(k\in \{0,\ldots ,d-1\}\). It can be written in the form

\[ L = \bigcap _{i\in I} H_i \]

for a suitable subset \(I\subset \{1,\ldots ,m\}\). Without restriction of generality we may assume that L passes through the origin (otherwise we could translate everything). It follows that \(c_i=0\) for \(i\in I\). Moreover, after renumbering (if necessary) the hyperplanes and their defining equations, we may assume that the linear subspace L is given by the equations

\[ L = \{z\in \mathbb {R}^d:\; \langle z, y_1\rangle = \ldots = \langle z, y_\ell \rangle = 0\} \tag{25} \]

for some \(\ell \in \{d-k,\ldots , m\}\). Finally, without loss of generality we may assume that \(H_i\cap L\) is a strict subset of L for all \(i\in \{\ell +1,\ldots ,m\}\) since otherwise we could include the defining equation of \(H_i\) into the list on the right-hand side of (25).

Take any point \(x\in \mathbb {R}^d\backslash E(L)\), where we recall that E(L) is defined by (23) and (24). The orthogonal projection of x onto the linear subspace L, denoted by \(\pi _L(x)\), is contained in the relative interior of some uniquely defined face \(G\in \bigcup _{p=0}^k \mathscr {R}_p (\mathscr {A})\) with \(G\subset L\). In fact, we even have \(G\in \mathscr {R}_k (\mathscr {A})\) because if the dimension of G were strictly smaller than k, we could find some \(L_{k-1}\in \mathscr {L}_{k-1}(\mathscr {A})\) with \(G\subset L_{k-1}\subset L\). This would contradict the assumption \(x\notin E'(L)\). So, we have

Then, the definition of \(\varphi _L(x)\) given in (22) simplifies as follows:

Indeed, for every \(F\in \mathscr {R}_k(\mathscr {A})\) and \(P\in \mathscr {R}(\mathscr {A})\) with \(F\subset L\), \(F\in \mathscr {F}_k(P)\) and \(F\ne G\) we have \(x\notin F + N_F(P)\), which follows from the fact that \(x\in {{\,\textrm{relint}\,}}G + L^\bot \), while \({{\,\textrm{relint}\,}}G \cap F = \varnothing \) and \(N_F(P)\subset L^\bot \). This means that all terms with \(F\ne G\) do not contribute to the right-hand side of (22).

By changing, if necessary, the signs of some \(y_i\)’s and the corresponding \(c_i\)’s, we may assume that the face G is given as follows:

The relative interior of G is given by the following strict inequalities:

Let now \(P\in \mathscr {R}(\mathscr {A})\) be a closed chamber such that \(G\in \mathscr {F}_k(P)\). Then, there exist some \(\varepsilon _1,\ldots ,\varepsilon _\ell \in \{-1,+1\}\) such that P is given by

Conversely, if for some \(\varepsilon _1,\ldots ,\varepsilon _\ell \in \{-1,+1\}\) the interior of the set \(P_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) defined above is non-empty, then \(P_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) is a chamber in \(\mathscr {R}(\mathscr {A})\) and it contains G as a k-dimensional face. Hence, we can rewrite (26) as follows:

Write \(x=\pi _L(x)+\pi _{L^\bot }(x)\) as a sum of its orthogonal projections \(\pi _L(x)\) and \(\pi _{L^\bot }(x)\) onto L and \(L^\bot \), respectively. Since \(\pi _L(x)\in G\subset L\) and \(N_G(P_{\varepsilon _1,\ldots ,\varepsilon _\ell }) \subset L^\bot \), we arrive at

Let us now characterize first the tangent and then the normal cone of the face G in the polyhedral set \(P_{\varepsilon _1,\ldots , \varepsilon _\ell }\). Take some \(z_0 \in {{\,\textrm{relint}\,}}G\). Then, by (28),

By definition, see (4), the tangent cone is given by

It follows from this definition together with (29) and (31) that

Note that the linear span of \(y_1,\ldots ,y_\ell \) is \(L^\bot \) by (25). The tangent cone \(T_G(P_{\varepsilon _1,\ldots , \varepsilon _\ell })\) contains the linear space L. Let us now restrict our attention to the space \(L^\bot \) and define the cone

Then, the tangent cone \(T_G(P_{\varepsilon _1,\ldots , \varepsilon _\ell })\) can be represented as the direct orthogonal sum

Taking the polar cone, we obtain the normal cone of the face G in the polyhedral set \(P_{\varepsilon _1,\ldots ,\varepsilon _\ell }\):

That is, \(N_G(P_{\varepsilon _1,\ldots , \varepsilon _\ell })\) is just the polar cone of \(T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) taken with respect to the ambient space \(L^\bot \). Although we shall not use this fact in the sequel, let us mention that the normal cone can be represented as the positive hull

In the following, we shall argue that those cones of the form \(T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) that have non-empty interior are the chambers of a certain linear hyperplane arrangement \(\mathscr {A}(L)\) in \(L^\bot \). The polar cones of these chambers are the normal cones \(N_G(P_{\varepsilon _1,\ldots , \varepsilon _\ell })\). It is crucial that this arrangement is completely determined by \(y_1,\ldots ,y_\ell \) and does not depend on \(G\subset L\). Applying Proposition 3.1, we shall prove that \(\varphi _L(x)\) is constant outside some explicit exceptional Lebesgue null set.

Let us be more precise. First of all, note that no two of the vectors \(y_1,\ldots ,y_\ell \) are proportional. Indeed, if two of them were proportional, say \(y_1=\lambda y_2\) for some \(\lambda \ne 0\), then (in view of \(c_1=c_2=0\)) the corresponding hyperplanes \(H_1\) and \(H_2\) would coincide, which is prohibited by the definition of the hyperplane arrangement. Therefore, the orthogonal complements of the vectors \(y_1,\ldots , y_\ell \) (taken with respect to the ambient space \(L^\bot \)) are pairwise different and define a linear hyperplane arrangement in \(L^\bot \) which we denote by

Since the linear span of \(y_1,\ldots ,y_\ell \) is \(L^\bot \) by (25), this arrangement is essential, that is the intersection of its hyperplanes is \(\{0\}\). The chambers of the arrangement \(\mathscr {A}(L)\) are those of the cones \(T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\), \((\varepsilon _1,\ldots ,\varepsilon _\ell )\in \{-1,+1\}^\ell \), defined in (32), that have non-empty interior in \(L^\bot \). Note also that \(\mathscr {A}(L)\) is uniquely determined by the choice of \(L\in \mathscr {L}_k(\mathscr {A})\) and does not depend on G.

Now we claim that for \((\varepsilon _1,\ldots ,\varepsilon _\ell )\in \{-1,+1\}^\ell \) the relative interior of the cone \(T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) is non-empty if and only if the interior of the polyhedral set \(P_{\varepsilon _1,\ldots , \varepsilon _\ell }\) is non-empty. If \({{\,\textrm{int}\,}}P_{\varepsilon _1,\ldots , \varepsilon _\ell }\) is non-empty, then it has dimension d, \(G\in \mathscr {F}_k(P)\), and the tangent cone \(T_G(P_{\varepsilon _1,\ldots ,\varepsilon _\ell })\) is strictly larger than the linear space L (because the latter has dimension \(k<d\)). It follows from (32) that \({{\,\textrm{relint}\,}}T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\ne \varnothing \). Conversely, if \({{\,\textrm{relint}\,}}T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\ne \varnothing \), then \(T_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) has the same dimension as \(L^\bot \), while G has the same dimension as L. It follows that the dimension of \(P_{\varepsilon _1,\ldots ,\varepsilon _\ell }\) is d, thus its interior is non-empty.

From the above it follows that the formula for the function \(\varphi _L\) stated in (30) can be written as the following sum over the chambers of the arrangement \(\mathscr {A}(L)\):

We are now going to apply Proposition 3.1 to the hyperplane arrangement \(\mathscr {A}(L)\) in the ambient space \(L^\bot \). This is possible provided \(\pi _{L^\bot }(x)\) does not belong to the exceptional set \(E_0^*\) defined in Proposition 3.1. In our setting of the ambient space \(L^\bot \), the exceptional set is given by

Each linear subspace \(M\in \mathscr {L}(\mathscr {A}(L))\backslash \{0\}\) has the form \(M= (\bigcap _{i\in I} y_i^\bot ) \cap L^\bot \) for some set \(I\subset \{1,\ldots ,\ell \}\). Then, the corresponding orthogonal complement \(M^\bot \cap L^\bot \) has the form \(\mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}\), where \(\mathop {\textrm{lin}}\nolimits A\) denotes the linear subspace spanned by the set A. Moreover, the condition \(M\ne \{0\}\) is equivalent to the condition \(\mathop {\textrm{lin}}\nolimits \{y_i: i\in I\} \ne L^\bot \). Since the linear span of the vectors \(y_1,\ldots ,y_\ell \) is \(L^\bot \) by (25), any linear subspace of the form \(\mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}\ne L^\bot \) is contained in a linear subspace of the form \(\mathop {\textrm{lin}}\nolimits \{y_i: i\in I'\}\), for some \(I'\subset \{1,\ldots ,\ell \}\) satisfying the following condition:

Therefore, we have

Given \(I'\subset \{1,\ldots ,\ell \}\) such that (37) holds, define the linear subspace

Then, \(L_{k+1}\) is non-empty since \(L \subset L_{k+1}\) and, moreover, the dimension of \(L_{k+1}\) equals \(k+1\), that is \(L_{k+1}\in \mathscr {L}_{k+1}(\mathscr {A})\) (recall that the case \(k=d\) has been excluded from the very beginning). Conversely, every \(L_{k+1}\in \mathscr {L}_{k+1}(\mathscr {A})\) containing L can be represented in the form (38) with some \(I'\subset \{1,\ldots ,\ell \}\) satisfying (37). Taking into account that \(\mathop {\textrm{lin}}\nolimits \{y_i: i\in I'\} = L_{k+1}^\bot \), it follows that

Proposition 3.1 applies to all \(x\in \mathbb {R}^d\) such that \(\pi _{L^\bot }(x) \notin E_0^*\). This is equivalent to the condition that x is outside the set

which coincides with the set \(E''(L)\) introduced in (24).

Applying Proposition 3.1 and Lemma 3.2 with the ambient space \(L^\bot \) to the right-hand side of (35), we arrive at the following result.

Proposition 3.5

Let \(\mathscr {A}\) be an affine hyperplane arrangement in \(\mathbb {R}^d\). Fix some \(k\in \{0,\ldots ,d-1\}\) and \(L\in \mathscr {L}_k(\mathscr {A})\). Then, the function \(\varphi _L\) defined in (22) satisfies

where the exceptional set E(L) is given by (23) and (24), and \(a_0(L)\) is \((-1)^{d-k}\) times the zeroth coefficient of the characteristic polynomial of the linear arrangement \(\mathscr {A}(L)\) in \(L^\bot \) defined by (34). Also, for all \(x\in \mathbb {R}^d\) we have \(\varphi _L(x)\ge a_0(L)\).

Step 4. Now we are going to show that \(\varphi _k(x) = a_k\) for all \(x\in \mathbb {R}^d\) outside some exceptional set. Let us first write down a more explicit expression for \(a_0(L)\) appearing in Proposition 3.5. Recalling the definition of the characteristic polynomial, see (2), we can write

where \(\mathop {\textrm{rank}}\nolimits \{y_j: j\in J\}\) denotes the dimension of the linear span of a system of vectors \(\{y_j: j\in J\}\). Taking the zeroth coefficient of this polynomial and multiplying it by \((-1)^{d-k}\), we can write Proposition 3.5 as follows:

Recalling the representation of L stated in (25), we see that a set of vectors \(\{y_j: j\in J\}\) with \(J\subset \{1,\ldots ,\ell \}\) contributes to the above sum if and only if \(L = \bigcap _{j\in J} H_j\). Moreover, a set \(J\subset \{1,\ldots ,m\}\) which is not completely contained in \(\{1,\ldots ,\ell \}\) cannot satisfy \(L = \bigcap _{j\in J} H_j\) since \(H_j\cap L\) is a strict subset of L for all \(j\in \{\ell +1,\ldots ,m\}\); see the discussion after (25). Therefore, we can rewrite the above sum as follows:

for all \(x\in \mathbb {R}^d\backslash E(L)\). Taking the sum over all k-dimensional affine subspaces \(L\in \mathscr {L}_k(\mathscr {A})\) generated by the arrangement \(\mathscr {A}\) and recalling (21), we arrive at

for all \(x\in \mathbb {R}^d\) such that

with

By the definition of the characteristic polynomial \(\chi _\mathscr {A}(t)\), see (2) and (3), the right-hand side of (40) is nothing but \(a_k\). So, \(\varphi _k(x) = a_k\) for all \(x\in \mathbb {R}^d\) satisfying (41). If (41) is not satisfied, we can use the inequality \(\varphi _L(x) \ge a_0(L)\) to prove that \(\varphi _k(x) \ge a_k\).
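As a sanity check of the identity \(\varphi _k(x) = a_k\), the following minimal Python sketch compares both sides on a toy example. It assumes that the definition (2) is the subset-sum formula \(\chi (t) = \sum _J (-1)^{|J|} t^{d - \mathop {\textrm{rank}}\nolimits \{y_j: j\in J\}}\) for a linear arrangement with normals \(y_j\), as the rank expression above suggests. The coordinate arrangement \(\{x_i = 0\}\) and the function names `rank`, `char_poly` and `projection_dim_counts` are illustrative choices, not taken from the paper.

```python
from fractions import Fraction
from itertools import combinations, product

def rank(vectors):
    """Rank of a list of rational vectors, by exact Gaussian elimination."""
    rows = [[Fraction(c) for c in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][col] / rows[r][col]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def char_poly(normals, d):
    """Coefficients [c_0, ..., c_d] of chi(t) = sum_J (-1)^|J| t^(d - rank J).

    Assumption: the arrangement is linear (all hyperplanes pass through 0).
    """
    coeffs = [0] * (d + 1)
    for size in range(len(normals) + 1):
        for J in combinations(normals, size):
            coeffs[d - rank(list(J))] += (-1) ** size
    return coeffs

def projection_dim_counts(x):
    """Coordinate arrangement only: count orthants by the dimension of the
    face whose relative interior contains the metric projection of x.

    Assumes x is generic, i.e. all coordinates of x are non-zero.
    """
    d = len(x)
    counts = [0] * (d + 1)
    for signs in product((-1, 1), repeat=d):
        # Projecting onto the orthant {z : s_i z_i >= 0} keeps x_i when
        # s_i x_i > 0 and replaces it by 0 otherwise, so the face dimension
        # is the number of coordinates whose sign agrees with the orthant.
        k = sum(1 for s, xi in zip(signs, x) if s * xi > 0)
        counts[k] += 1
    return counts

coordinate = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
print(char_poly(coordinate, 3))                  # (t - 1)^3
print(projection_dim_counts((0.3, -1.2, 2.5)))   # binomial coefficients
```

For the coordinate arrangement in \(\mathbb {R}^3\) the characteristic polynomial is \((t-1)^3\), and the absolute values of its coefficients agree with the counts of orthants by projection dimension.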

Step 5. To complete the proof of Theorem 1.6, it remains to check the following equality of the exceptional sets:

for all \(k\in \{0,\ldots ,d-1\}\). We need some preparatory lemmas.

Lemma 3.6

Let \(\mathscr {A}= \{H_1,\ldots ,H_m\}\) be a linear hyperplane arrangement in \(\mathbb {R}^d\). Suppose that \(\mathscr {A}\) is of full rank, meaning that \(\bigcap _{i=1}^m H_i = \{0\}\). Then, \(\bigcup _{C\in \mathscr {R}(\mathscr {A})} (C^\circ ) = \mathbb {R}^d\).

Proof

By Lemma 3.2 it suffices to show that \(a_0>0\). By Proposition 3.1, the function \(\varphi _0(x) = \sum _{C\in \mathscr {R}(\mathscr {A})} \mathbb {1}_{C^\circ }(x)\) is Lebesgue-a.e. equal to \(a_0\), hence \(a_0\ge 0\). Since the arrangement is of full rank, the lineality space of each chamber is trivial, that is \(C\cap (-C) = \{0\}\). This implies that the polar cone \(C^\circ \) has non-empty interior, hence the function \(\varphi _0(x)\) cannot be a.e. equal to 0, implying that \(a_0\ne 0\). \(\square \)
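The covering statement can be illustrated in the plane. The sketch below enumerates the polar cones \(C^\circ \) of the chambers of an arrangement of \(m\ge 2\) pairwise distinct lines through the origin in \(\mathbb {R}^2\) and counts how many of them contain a given point x, which is the value \(\varphi _0(x)\). For such an arrangement \(\chi (t) = t^2 - mt + (m-1)\), so the count should be \(m-1\) for generic x. The sketch assumes integer normal vectors and the convention \(C = \{z: \varepsilon _i\langle z, y_i\rangle \le 0\}\), \(C^\circ = \mathop {\textrm{pos}}\nolimits \{\varepsilon _i y_i\}\); the helper names are hypothetical.

```python
import math

def det(u, w):
    return u[0] * w[1] - u[1] * w[0]

def in_pos_hull(x, gens):
    """Exact test whether the integer vector x lies in pos(gens) in R^2."""
    if x == (0, 0):
        return True
    for u in gens:
        # x is a positive multiple of a single generator
        if det(u, x) == 0 and u[0] * x[0] + u[1] * x[1] > 0:
            return True
    for i, u in enumerate(gens):
        for w in gens[i + 1:]:
            D = det(u, w)
            if D == 0:
                continue
            # Cramer's rule: x = a*u + b*w with a = det(x, w)/D, b = det(u, x)/D
            if det(x, w) * D >= 0 and det(u, x) * D >= 0:
                return True
    return False

def polar_cones(normals):
    """Generators of C° = pos{eps_i y_i} for every chamber C of the lines y_i^⊥.

    Assumes at least two normals and pairwise distinct lines.
    """
    rays = []
    for y in normals:
        rays += [(-y[1], y[0]), (y[1], -y[0])]  # the two rays of the line y^⊥
    rays.sort(key=lambda r: math.atan2(r[1], r[0]))
    cones = []
    for r, s in zip(rays, rays[1:] + rays[:1]):
        z = (r[0] + s[0], r[1] + s[1])  # point inside the sector between r and s
        # The chamber containing z is {w : eps_i <w, y_i> <= 0}.
        eps = [-1 if z[0] * y[0] + z[1] * y[1] > 0 else 1 for y in normals]
        cones.append([(e * y[0], e * y[1]) for e, y in zip(eps, normals)])
    return cones

def phi0(x, normals):
    """Number of chambers whose polar cone contains x."""
    return sum(in_pos_hull(x, gens) for gens in polar_cones(normals))

lines = [(1, 0), (0, 1), (1, 1)]   # normals of x=0, y=0, x+y=0
print(phi0((3, 1), lines))          # generic point, m - 1 = 2 expected
```

Every point is covered at least once, in line with Lemma 3.6, and a generic point is covered exactly \(m-1\) times, in line with Proposition 3.1.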

Lemma 3.7

Let \(C\subset \mathbb {R}^d\) be a polyhedral cone with a trivial lineality space, that is \(C\cap (-C) = \{0\}\). Let \(v\in \mathbb {R}^d\backslash \{0\}\) be a vector. Then, at least one of the cones \(\mathop {\textrm{pos}}\nolimits (C\cup \{+v\})\) or \(\mathop {\textrm{pos}}\nolimits (C\cup \{-v\})\) has a trivial lineality space.

Proof

It follows from \(C\cap (-C)= \{0\}\) that there exists \(\varepsilon \in \{-1,+1\}\) such that \(\varepsilon v\notin -C\). We claim that \(\mathop {\textrm{pos}}\nolimits (C\cup \{\varepsilon v\})\) has a trivial lineality space. To prove this, take some w such that both \(+w\) and \(-w\) are contained in \(\mathop {\textrm{pos}}\nolimits (C \cup \{\varepsilon v\})\). We then have \(w= z_1 + \lambda _1 \varepsilon v = -z_2 - \lambda _2 \varepsilon v\) for some \(z_1,z_2\in C\) and \(\lambda _1,\lambda _2\ge 0\). If \(\lambda _1 = \lambda _2=0\), then \(z_1=-z_2\) implying that \(z_1=z_2=0\) and thus \(w=0\). So, let \(\lambda _1+\lambda _2>0\). Then, we have \( \varepsilon v = - (z_1+z_2)/ (\lambda _1 + \lambda _2) \in -C, \) a contradiction. \(\square \)
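The choice of sign in this proof is constructive. As a small planar illustration, the sketch below picks \(\varepsilon \) with \(\varepsilon v\notin -C\) and then checks that the extended cone is pointed. It relies on the elementary fact that \(\mathop {\textrm{pos}}\nolimits (V)\) has a non-trivial lineality space if and only if \(-g\in \mathop {\textrm{pos}}\nolimits (V)\) for some non-zero generator \(g\in V\). The restriction to integer vectors in \(\mathbb {R}^2\) and all function names are assumptions made for the example.

```python
def det(u, w):
    return u[0] * w[1] - u[1] * w[0]

def in_pos_hull(x, gens):
    """Exact test whether the integer vector x lies in pos(gens) in R^2."""
    if x == (0, 0):
        return True
    for u in gens:
        if det(u, x) == 0 and u[0] * x[0] + u[1] * x[1] > 0:
            return True
    for i, u in enumerate(gens):
        for w in gens[i + 1:]:
            D = det(u, w)
            if D == 0:
                continue
            # x = a*u + b*w with a = det(x, w)/D and b = det(u, x)/D
            if det(x, w) * D >= 0 and det(u, x) * D >= 0:
                return True
    return False

def is_pointed(gens):
    """True iff pos(gens) has a trivial lineality space."""
    return not any(
        g != (0, 0) and in_pos_hull((-g[0], -g[1]), gens) for g in gens
    )

def sign_from_proof(gens, v):
    """Return eps in {+1, -1} with eps*v not in -C, where C = pos(gens)."""
    for eps in (1, -1):
        # eps*v lies in -C exactly when -eps*v lies in C
        if not in_pos_hull((-eps * v[0], -eps * v[1]), gens):
            return eps
    raise ValueError("no admissible sign: C is not pointed or v is zero")

C = [(1, 0), (0, 1)]                 # the first quadrant
v = (-1, -1)                         # v itself lies in -C
eps = sign_from_proof(C, v)
print(eps, is_pointed(C + [(eps * v[0], eps * v[1])]))
```

In this example only \(\varepsilon = -1\) works: adding \(v = (-1,-1)\) to the first quadrant generates the whole plane, whereas adding \(-v\) leaves the quadrant unchanged.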

Lemma 3.8

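Let \(\mathscr {A}= \{H_1,\ldots ,H_m\}\) be a linear hyperplane arrangement in \(\mathbb {R}^d\). Then,

\[ \bigcup _{L\in \mathscr {L}(\mathscr {A})\backslash \{0\}} L^\bot = \bigcup _{C\in \mathscr {R}(\mathscr {A})} \partial (C^\circ ). \tag{44} \]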

Proof

If \(\mathscr {A}\) is not essential, meaning that \(L_*:=\bigcap _{i=1}^m H_i \ne \{0\}\), then the left-hand side of (44) equals \(L_*^\bot \). On the other hand, the cones \(C^\circ \) are contained in \(L_*^\bot \), satisfy \(\partial (C^\circ ) = C^\circ \), and cover the space \(L_*^\bot \) by Lemma 3.6 applied to the ambient space \(L_*^\bot \), thus proving that (44) holds.

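In the following, let \(\mathscr {A}\) be of full rank, meaning that \(\bigcap _{i=1}^m H_i = \{0\}\). Let \(H_1= y_1^{\bot }, \ldots , H_m=y_m^{\bot }\) for some vectors \(y_1,\ldots ,y_m\in \mathbb {R}^d\backslash \{0\}\). The linear span of \(y_1,\ldots ,y_m\) is \(\mathbb {R}^d\) since the arrangement has full rank. Any subspace \(L\in \mathscr {L}(\mathscr {A})\) has the form \(L = \bigcap _{i\in I} H_i = \mathop {\textrm{lin}}\nolimits \{y_i:i\in I\}^\bot \) for some set \(I\subset \{1,\ldots ,m\}\). The corresponding orthogonal complement is \(L^\bot = \mathop {\textrm{lin}}\nolimits \{y_i:i\in I\}\). It follows that

\[ \bigcup _{L\in \mathscr {L}(\mathscr {A})\backslash \{0\}} L^\bot = \bigcup _{\substack{I\subset \{1,\ldots ,m\}:\\ \mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}\ne \mathbb {R}^d}} \mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}. \]

To complete the proof, we need to show that

\[ \bigcup _{\substack{I\subset \{1,\ldots ,m\}:\\ \mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}\ne \mathbb {R}^d}} \mathop {\textrm{lin}}\nolimits \{y_i: i\in I\} = \bigcup _{C\in \mathscr {R}(\mathscr {A})} \partial (C^\circ ). \tag{45} \]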

To prove the inclusion \(\subset \), let \(v\in \mathop {\textrm{lin}}\nolimits \{y_i:i\in I\}\ne \mathbb {R}^d\) for some \(I\subset \{1,\ldots ,m\}\). By first extending I and then excluding the superfluous linearly dependent elements, we may assume that \(M:=\mathop {\textrm{lin}}\nolimits \{y_i: i\in I\}\) has dimension \(d-1\) and that the vectors \(\{y_i:i\in I\}\) are linearly independent. We can find \(\varepsilon _i\in \{-1,+1\}\), for all \(i\in I\), such that \(v\in \mathop {\textrm{pos}}\nolimits \{\varepsilon _i y_i:i\in I\}\). Let \(M_+\) and \(M_-\) be the closed half-spaces into which the hyperplane M dissects \(\mathbb {R}^d\). Let \(J_1\), respectively \(J_2\), be the set of all \(j\in \{1,\ldots ,m\}\backslash I\) such that \(y_j\in M\), respectively \(y_j\in \mathbb {R}^d\backslash M\). The cone \(\mathop {\textrm{pos}}\nolimits \{\varepsilon _i y_i:i\in I\}\subset M\) has a trivial lineality space because \(\{\varepsilon _i y_i:i\in I\}\) is a basis of M. By inductively applying Lemma 3.7 in the ambient space M, we can find \(\varepsilon _j\in \{-1,+1\}\), for all \(j\in J_1\), such that the cone \(D:= \mathop {\textrm{pos}}\nolimits \{\varepsilon _i y_i: i\in I\cup J_1\}\subset M\) has a trivial lineality space. Furthermore, for every \(j\in J_2\) we can find \(\varepsilon _j\in \{-1,+1\}\) such that \(\varepsilon _j y_j \in {{\,\textrm{int}\,}}M_+\). With the signs \(\varepsilon _1,\ldots ,\varepsilon _m\in \{-1,+1\}\) constructed as above, we consider the cone

\[ C:= \{z\in \mathbb {R}^d: \langle z, \varepsilon _i y_i\rangle \le 0 \text { for all } i=1,\ldots ,m\}. \tag{46} \]

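The polar cone is the positive hull

\[ C^\circ = \mathop {\textrm{pos}}\nolimits \{\varepsilon _1 y_1,\ldots ,\varepsilon _m y_m\}. \tag{47} \]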

By construction, \(C^\circ \subset M_+\) and \(C^\circ \cap M = D\). Also, the cone \(C^\circ \) has a trivial lineality space because \(\pm w\in C^\circ \subset M_+\) would imply \(\pm w \in C^\circ \cap M = D\), which implies \(w=0\) because D has a trivial lineality space by construction. By polarity, C has non-empty interior. It follows that C is a chamber of the arrangement \(\mathscr {A}\), that is \(C\in \mathscr {R}(\mathscr {A})\). Since \(C^\circ \subset M_+\) and \(v\in C^\circ \cap M\), we have \(v\in \partial (C^\circ )\), thus completing the proof of the inclusion \(\subset \) in (45).

To prove the inclusion \(\supset \) in (45), take any \(C\in \mathscr {R}(\mathscr {A})\) and any \(v\in \partial (C^\circ )\). Then, C and \(C^\circ \) must be of the same form as in (46) and (47). Moreover, since C has non-empty interior, the lineality space of the cone \(C^\circ \) is trivial. If \(v\in \partial (C^\circ )\), then \(v\in F\) for some face \(F\in \mathscr {F}(C^\circ )\) of dimension \(d-1\). Let I be the set of all \(i\in \{1,\ldots ,m\}\) with \(\varepsilon _i y_i\in F\). Then, we have \(\mathop {\textrm{lin}}\nolimits \{\varepsilon _i y_i: i\in I\} = \mathop {\textrm{lin}}\nolimits F\), which contains v and does not coincide with \(\mathbb {R}^d\). It follows that v belongs to the left-hand side of (45), thus completing the proof. \(\square \)
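Lemma 3.8 can also be checked by brute force in the plane. For \(m\ge 2\) pairwise distinct lines \(y_i^\bot \) through the origin in \(\mathbb {R}^2\), the union of the subspaces \(L^\bot \) reduces to the union of the lines spanned by the \(y_i\), and the lemma states that this union coincides with the union of the boundaries of the polar cones of the chambers. The following sketch compares the two sets on a small integer grid. It assumes integer normals and the convention \(C^\circ = \mathop {\textrm{pos}}\nolimits \{\varepsilon _i y_i\}\); the function names are hypothetical.

```python
import math

def det(u, w):
    return u[0] * w[1] - u[1] * w[0]

def in_cone(x, gens, strict=False):
    """Is x in pos(gens) in R^2? With strict=True, test the interior instead
    (valid for a pointed two-dimensional cone)."""
    if not strict:
        if x == (0, 0):
            return True
        if any(det(u, x) == 0 and u[0] * x[0] + u[1] * x[1] > 0 for u in gens):
            return True
    for i, u in enumerate(gens):
        for w in gens[i + 1:]:
            D = det(u, w)
            if D == 0:
                continue
            a, b = det(x, w) * D, det(u, x) * D  # signs of Cramer coefficients
            if (a > 0 and b > 0) if strict else (a >= 0 and b >= 0):
                return True
    return False

def polar_cones(normals):
    """Generators of C° for each chamber C; assumes >= 2 distinct lines."""
    rays = []
    for y in normals:
        rays += [(-y[1], y[0]), (y[1], -y[0])]  # the two rays of the line y^⊥
    rays.sort(key=lambda r: math.atan2(r[1], r[0]))
    cones = []
    for r, s in zip(rays, rays[1:] + rays[:1]):
        z = (r[0] + s[0], r[1] + s[1])  # point inside the sector between r and s
        eps = [-1 if z[0] * y[0] + z[1] * y[1] > 0 else 1 for y in normals]
        cones.append([(e * y[0], e * y[1]) for e, y in zip(eps, normals)])
    return cones

def on_some_boundary(x, normals):
    """Right-hand side: x lies on the boundary of some polar cone."""
    return any(
        in_cone(x, g) and not in_cone(x, g, strict=True)
        for g in polar_cones(normals)
    )

def in_span_union(x, normals):
    """Left-hand side in the plane: x lies on some line spanned by a normal."""
    return any(det(y, x) == 0 for y in normals)

lines = [(1, 0), (0, 1), (1, 1)]
grid = [(a, b) for a in range(-3, 4) for b in range(-3, 4)]
print(all(on_some_boundary(x, lines) == in_span_union(x, lines) for x in grid))
```

A finite grid is of course no proof; the check only confirms that both descriptions of the exceptional set agree on the sampled points.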

Now we are in a position to prove (43). We have

with

We claim that \(H'(L) = E'(L)\). To prove this it suffices to show that for every \(G\in \mathscr {R}_k(\mathscr {A})\) such that \(G\subset L\) we have \(\bigcup _{P\in \mathscr {R}(\mathscr {A}):G\in \mathscr {F}_k(P)} N_G(P)= L^\bot \). In (33) we characterized the normal cones \(N_G(P)\) as the polar cones of the chambers of some essential (full rank) linear hyperplane arrangement \(\mathscr {A}(L)\) in \(L^\bot \). These polar cones cover \(L^\bot \) by Lemma 3.6, thus proving the claim.

It remains to show that \(H''(L) = E''(L)\). To this end, it suffices to prove that for every \(G\in \mathscr {R}_k(\mathscr {A})\) such that \(G\subset L\) we have

Again, recall from (33) that the normal cones \(N_G(P)\) are the polar cones of the chambers of the linear full-rank hyperplane arrangement \(\mathscr {A}(L)= \{y_1^\bot \cap L^\bot ,\ldots , y_\ell ^{\bot }\cap L^\bot \}\) in \(L^\bot \). Applying Lemma 3.8 to this arrangement, we obtain

The right-hand side coincides with the set \(E_0^*\) defined in (36). Thus, the claim (48) follows from the identity already established in (39). \(\Box \)

Notes

This convention deviates from the standard notation [25], where \(\mathscr {R}(\mathscr {A})\) is the collection of open chambers, but will be convenient for the purposes of the present paper.

References

Amelunxen, D., Lotz, M.: Intrinsic volumes of polyhedral cones: a combinatorial perspective. Discrete Comput. Geom. 58(2), 371–409 (2017)

Amelunxen, D., Lotz, M., McCoy, M., Tropp, J.: Living on the edge: Phase transitions in convex programs with random data. Inform. Inference 3, 224–294 (2014)

Bóna, M.: Handbook of Enumerative Combinatorics. Discrete Mathematics and its Applications (Boca Raton). CRC Press, Boca Raton (2015)

Cowan, R.: Identities linking volumes of convex hulls. Adv. Appl. Probab. 39(3), 630–644 (2007)

De Concini, C., Procesi, C.: A curious identity and the volume of the root spherical simplex. Atti Accad. Naz. Lincei Rend. Lincei Mat. Appl. 17(2), 155–165 (2006)

Denham, G.: A note on De Concini and Procesi’s curious identity. Atti Accad. Naz. Lincei Rend. Lincei Mat. Appl. 19(1), 59–63 (2008)

Drton, M., Klivans, C.J.: A geometric interpretation of the characteristic polynomial of reflection arrangements. Proc. Am. Math. Soc. 138(8), 2873–2887 (2010)

Glasauer, S.: Integralgeometrie konvexer Körper im sphärischen Raum. PhD thesis, University of Freiburg (1995). http://www.hs-augsburg.de/~glasauer/publ/diss.pdf

Glasauer, S.: An Euler-type version of the local Steiner formula for convex bodies. Bull. Lond. Math. Soc. 30(6), 618–622 (1998)

Grove, L.C., Benson, C.T.: Finite Reflection Groups, 2nd edn. Springer, New York (1985)

Hug, D.: Measures, Curvatures and Currents in Convex Geometry. Habilitation thesis, University of Freiburg (1999)

Hug, D., Kabluchko, Z.: An inclusion-exclusion identity for normal cones of polyhedral sets. Mathematika 64(1), 124–136 (2018)

Humphreys, J.E.: Reflection Groups and Coxeter Groups. Cambridge Studies in Advanced Mathematics, vol. 29. Cambridge University Press, Cambridge (1990)

Kabluchko, Z., Last, G., Zaporozhets, D.: Inclusion-exclusion principles for convex hulls and the Euler relation. Discrete Comput. Geom. 58(2), 417–434 (2017)

Kabluchko, Z., Vysotsky, V., Zaporozhets, D.: Convex hulls of random walks, hyperplane arrangements, and Weyl chambers. Geom. Funct. Anal. 27(4), 880–918 (2017)

Klivans, C.J., Swartz, E.: Projection volumes of hyperplane arrangements. Discrete Comput. Geom. 46(3), 417–426 (2011)

Lofano, D., Paolini, G.: Euclidean matchings and minimality of hyperplane arrangements. Discrete Math. 344(3), 112232 (2021)

McMullen, P.: Non-linear angle-sum relations for polyhedral cones and polytopes. Math. Proc. Camb. Philos. Soc. 78(2), 247–261 (1975)

Miles, R.E.: The complete amalgamation into blocks, by weighted means, of a finite set of real numbers. Biometrika 46, 317–327 (1959)

Padberg, M.: Linear Optimization and Extensions. Algorithms and Combinatorics, vol. 12. Springer, Berlin (1999)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, vol. 28. Princeton University Press, Princeton (1970)

Schneider, R.: Convex Bodies: The Brunn–Minkowski Theory. Encyclopedia of Mathematics and Its Applications, vol. 151. Cambridge University Press, Cambridge (2014)

Schneider, R.: Combinatorial identities for polyhedral cones. St. Petersburg Math. J. 29(1), 209–221 (2018)

Schneider, R., Weil, W.: Stochastic and Integral Geometry. Probability and its Applications. Springer, Berlin (2008)

Stanley, R.P.: An introduction to hyperplane arrangements. In: Geometric Combinatorics. IAS/Park City Mathematics Series, vol. 13, pp. 389–496. Amer. Math. Soc., Providence (2007)

Ziegler, G.M.: Lectures on Polytopes. Graduate Texts in Mathematics, vol. 152. Springer, New York (1995)

Acknowledgements

Supported by the German Research Foundation under Germany’s Excellence Strategy EXC 2044 – 390685587, Mathematics Münster: Dynamics - Geometry - Structure.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Editor in Charge: Kenneth Clarkson

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kabluchko, Z. An Identity for the Coefficients of Characteristic Polynomials of Hyperplane Arrangements. Discrete Comput Geom 70, 1476–1498 (2023). https://doi.org/10.1007/s00454-023-00577-y

DOI: https://doi.org/10.1007/s00454-023-00577-y

Keywords

- Hyperplane arrangement

- Metric projection

- Chambers

- Reflection arrangement

- Characteristic polynomial

- Normal cone

- Conic intrinsic volume