Abstract

We study the dimer model on subgraphs of the square lattice in which vertices on a prescribed part of the boundary (the free boundary) are possibly unmatched. Each such unmatched vertex is called a monomer and contributes a fixed multiplicative weight \(z>0\) to the total weight of the configuration. A bijection described by Giuliani et al. (J Stat Phys 163(2):211–238, 2016) relates this model to a standard dimer model but on a non-bipartite graph. The Kasteleyn matrix of this dimer model describes a walk with transition weights that are negative along the free boundary. Yet under certain assumptions, which are in particular satisfied in the infinite volume limit in the upper half-plane, we prove an effective, true random walk representation for the inverse Kasteleyn matrix. In this case we further show that, independently of the value of \(z>0\), the scaling limit of the centered height function is the Gaussian free field with Neumann (or free) boundary conditions. It is the first example of a discrete model where such boundary conditions arise in the continuum scaling limit.


1 Introduction

1.1 Free boundary dimers

Let \(\mathcal {G}=(V,E)\) be a finite, connected, planar bipartite graph (in our analysis we will actually only consider subgraphs of the square lattice \(\mathbb {Z}^2\)). Let \(\partial \mathcal {G}\) be the set of boundary vertices, i.e., vertices adjacent to the unique unbounded external face, and let \(\partial _{\text {free}} \mathcal {G}\subseteq \partial \mathcal {G}\) be a fixed set called the free boundary. A boundary monomer-dimer cover of \(\mathcal {G}\) is a set \(M \subseteq E\) such that

- each vertex in \(V \setminus \partial _{\text {free}} \mathcal {G}\) belongs to exactly one edge in M,
- each vertex in \(\partial _{\text {free}} \mathcal {G}\) belongs to at most one edge in M.

We write \(\text {mon}(M) \subseteq \partial _{\text {free}} \mathcal {G}\) for the set of vertices that do not belong to any edge in M, and call its elements monomers. Let \(\mathcal{M}\mathcal{D}(\mathcal {G})\) be the set of all boundary monomer-dimer covers of \(\mathcal {G}\). We will often call such configurations simply monomer-dimer covers, keeping in mind that monomers are only allowed on the free boundary. Finally let \(\mathcal {D}(\mathcal {G})\) be the set of all dimer covers, i.e. monomer-dimer covers M such that \(\text {mon}(M)=\emptyset \).

We assign to each edge \(e\in E\) a weight \(w_e \ge 0\), and to each vertex \(v\in \partial _{\text {free}}\mathcal {G}\) a weight \(z_v \ge 0\). The dimer model with a free boundary (or free boundary dimer model) is a random choice of a boundary monomer-dimer cover from \(\mathcal{M}\mathcal{D}(\mathcal {G})\) according to the following probability measure:

\[
\textbf{P}(M) = \frac{1}{\mathcal {Z}} \prod _{e \in M} w_e \prod _{v \in \text {mon}(M)} z_v, \qquad M \in \mathcal{M}\mathcal{D}(\mathcal {G}), \tag{1.1}
\]

where \(\mathcal Z\) is the normalizing constant called the partition function. For convenience we will always assume that the partition function \(\mathcal {Z}>0\), i.e., that \(\mathcal {M}\mathcal {D}(\mathcal {G}) \ne \emptyset \). In this work we focus only on the homogeneous case \(w_e= 1\) for all \( e\in E\), and \( z_v=z>0\) for all \( v\in \partial _{\text {free}} \mathcal {G}\), with the exception of the technical assumption on the weight of corner monomers described in the next section. If we did not make the exception for the corner monomers, i.e., if \(z_v=z\) for all \( v\in \partial _{\text {free}} \mathcal {G}\), then

\[
\textbf{P}(M) = \frac{z^{|\text {mon}(M)|}}{\mathcal {Z}}. \tag{1.2}
\]

The dimer model on \(\mathcal {G}\) can now be defined as the free boundary dimer model conditioned on \(\mathcal {D}(\mathcal {G})\), i.e., the event that there are no monomers.
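To make the definitions concrete, the following minimal sketch enumerates all boundary monomer-dimer covers of a small rectangular piece of \(\mathbb {Z}^2\) by brute force and evaluates the homogeneous weight \(z^{|\text {mon}(M)|}\). The function names (`grid_graph`, `boundary_monomer_dimer_covers`, `partition_function`) are hypothetical helpers introduced only for this illustration, the free boundary is taken to be the bottom row, and no special weight is used at the monomer-corners.

```python
from fractions import Fraction


def grid_graph(width, height):
    """Vertices (x, y) with 0 <= x < width, 0 <= y < height and nearest-neighbour edges."""
    vertices = [(x, y) for y in range(height) for x in range(width)]
    edges = []
    for (x, y) in vertices:
        if x + 1 < width:
            edges.append(((x, y), (x + 1, y)))
        if y + 1 < height:
            edges.append(((x, y), (x, y + 1)))
    return vertices, edges


def boundary_monomer_dimer_covers(vertices, edges, free_boundary):
    """Yield (M, mon(M)) for every cover in which only free-boundary vertices may be unmatched."""
    free = set(free_boundary)
    incident = {v: [] for v in vertices}
    for e in edges:
        incident[e[0]].append(e)
        incident[e[1]].append(e)

    def extend(remaining, matching, monomers):
        if not remaining:
            yield frozenset(matching), frozenset(monomers)
            return
        v = min(remaining)  # always decide the fate of the smallest undecided vertex
        if v in free:  # option 1: v is a monomer (free boundary only)
            yield from extend(remaining - {v}, matching, monomers | {v})
        for e in incident[v]:  # option 2: v is matched to an undecided neighbour
            other = e[0] if e[1] == v else e[1]
            if other in remaining:
                yield from extend(remaining - {v, other}, matching | {e}, monomers)

    yield from extend(frozenset(vertices), frozenset(), frozenset())


def partition_function(covers, z):
    """Sum of z^{|mon(M)|} over the given covers (all edge weights equal to 1)."""
    return sum(z ** len(monomers) for _, monomers in covers)


# Illustration on the 2 x 2 square with the bottom row as free boundary.
vertices, edges = grid_graph(2, 2)
free = [v for v in vertices if v[1] == 0]
covers = list(boundary_monomer_dimer_covers(vertices, edges, free))
z = Fraction(3, 2)
Z = partition_function(covers, z)  # equals 2 + z^2 for this graph
probabilities = {cover: z ** len(cover[1]) / Z for cover in covers}
```

On the \(2\times 2\) square there are two dimer covers and one cover with two monomers, so the partition function is \(2+z^2\). Conditioning on the covers with no monomers recovers the uniform dimer model on the same graph.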

The main observable of interest for us will be the height function of a boundary monomer-dimer cover which is an integer-valued function defined (up to a constant) on the bounded faces of \(\mathcal {G}\). Its definition is identical to the one in the dimer model (see [39]). We simply note that the presence of monomers on the boundary does not lead to any topological complication (i.e., the height function is not multivalued): if u and \(u'\) are two faces of the graph, and \(\gamma \) and \(\gamma '\) are two distinct paths in the dual graph connecting u and \(u'\), the loop formed by connecting \(\gamma \) and \(\gamma '\) (in the reverse direction) does not enclose any monomer. More precisely, we view a configuration \(M \in \mathcal {M}\mathcal {D}(\mathcal {G})\) as an antisymmetric flow (in other words a 1-form) \(\omega _M\) on the directed edges of \(\mathcal {G}\) in the following manner: if \(e=\{ w,b\}\in M\), then \(\omega _M(w,b) =1\) and \(\omega _M (b,w) = -1\) where b is the black vertex of e and w its white vertex (since \(\mathcal {G}\) is bipartite, a choice of black and white vertices can be made in advance). Otherwise, we set \(\omega _M(e) = 0\). Equivalently, we may view \(\omega _M\) as an antisymmetric flow on the directed dual edges, where if \(e^\dagger \) is the dual edge of e (obtained by a counterclockwise \(\pi /2\) rotation of e), then \(\omega _M(e^\dagger ) = \omega _M(e)\). To define the height function we still need to fix a reference flow \(\omega _0\) which we define to be \(\omega _0= \textbf{E}[ \omega _M]\), i.e., the expected flow of M under the free boundary dimer measure. Now, if u and \(u'\) are two distinct (bounded) faces of \(\mathcal {G}\), we simply define

\[
h(u') - h(u) = \sum _{e^\dagger \in \gamma } \big ( \omega _M(e^\dagger ) - \omega _0(e^\dagger ) \big ),
\]

where \(\gamma \) is any path (of dual edges) connecting u to \(u'\). This definition does not depend on the choice of the path since the flow \(\omega _M(e^\dagger )-\omega _0(e^\dagger )\) is closed (sums over closed dual paths vanish), and hence yields a function h up to an additive constant, as desired. Note that our choice of the reference flow automatically guarantees that the height function is centered, i.e., \(\textbf{E}(h(u) - h(u'))=0\) for all faces u and \(u'\).

We finish this short introduction to the free boundary dimer model with a few words on its history and the nomenclature. In the original model studied in [18, 19] monomers could occupy any vertex of the graph, and hence the name monomer-dimer model. This generalization poses two major complications from our point of view. First of all, the height function is not well defined, and secondly the model does not admit a Kasteleyn solution, as was shown in [20]. From this point of view, it would therefore be natural to call the version of the model studied here the boundary-monomer-dimer model. However, we choose to use the less cumbersome name of free boundary dimers.

1.2 Boundary conditions

We now state conditions on the graph \(\mathcal {G}=(V,E)\) which will be enforced throughout this paper. First, we assume that \(\mathcal {G}\) is a subgraph of the square lattice \(\mathbb {Z}^2\), and without loss of generality that \(0 \in V\) and is a black vertex. This fixes a unique black/white bipartite partition of V. We also assume that

- V is contained in the upper half plane \(\mathbb {H}= \{ z \in \mathbb {C}: \Im (z) \ge 0\}\).
- \(\partial _{\text {free}} \mathcal {G}= V \cap \mathbb {R} \ne \emptyset \), so the monomers are allowed only on the real line. Furthermore, we assume \(\partial _{\text {free}} \mathcal {G}\) is a connected set (interval) of an even number of vertices. The leftmost and rightmost vertices of \(V \cap \mathbb {R}= \partial _{\text {free}} \mathcal {G}\) will be referred to as the monomer-corners of \(\mathcal {G}\).
- \(\mathcal {G}\) has at least one black dimer-corner and one white dimer-corner (where a dimer-corner is a vertex \(v\in V\) that is not a monomer-corner, is adjacent to the outer face of \(\mathcal {G}\), and has degree either 2 or 4 in \(\mathcal {G}\)).

See Fig. 3 for an example of a domain satisfying these assumptions (ignore the bottom row of triangles for now, which will be described later). We make a few comments on the role of the last assumption that there are corners of both colours. For this it is useful to make a parallel with Kenyon’s definition of a Temperleyan domain [22, 23]. In that case, this condition ensured that the associated random walk on one of the four possible sublattices of \(\mathbb {Z}^2\) (the two types of black and the two types of white vertices) was killed somewhere on the boundary. As we will see, in our case the random walk may change the lattice from black to white when it is near the real line, resulting in only two different types of walks. Then the role of the third assumption (at least one dimer-corner of each type) is to ensure that each of the two walks is killed on at least some portion of the boundary (possibly a single vertex). This follows from an observation that the boundary condition of a walk on a black (resp. white) sublattice changes from Neumann to Dirichlet (and vice-versa) at a white (resp. black) corner. See Fig. 5 for an example of a vertex with Neumann and Dirichlet boundary conditions.

1.3 Statement of main results

The free boundary dimer model as defined above was discussed in a paper of Giuliani et al. [16]. It was shown there that the partition function \(\mathcal Z\) can be computed as a Pfaffian of a certain (signed) adjacency matrix. Furthermore, a bijection was provided to a non-bipartite dimer model (the authors indicate that this bijection was suggested by an anonymous referee). Hence using Kasteleyn theory the correlation functions can be expressed as Pfaffians of the inverse Kasteleyn matrix \(K^{-1}\). The bijection, which is a central tool of our analysis, will be defined in Sect. 2 where we will also recall the precise definition of the Kasteleyn matrix K.

We will now state our first main result which gives a full random walk representation for \(K^{-1}\). Suppose that \(\mathcal {G}\) is a graph satisfying the assumptions from the previous section. Fix \(z>0\) and assign weight z to every monomer on \(\partial _{\text {free}} \mathcal {G}\) except at either monomer-corner, where (for technical reasons which will become clear in the proof) we choose the weight to be

Thus formally the free boundary dimer model we consider is of type (1.1) rather than of type (1.2). For \(k \in \mathbb {N}= \{0, 1, \ldots \}\), let us call \(V_k =V_k(\mathcal {G}) = \{v \in V: \Im (v) = k \}\), so \(\partial _{\text {free}} \mathcal {G}=V_0\), where \(\Im (v)\) denotes the imaginary part of the vertex v seen as a complex number given by the embedding of the graph. Let us call \(V_{\text {even}}=V_{\text {even}}(\mathcal {G}) = V_0 \cup V_2 \cup \ldots \) and \(V_{\text {odd}}=V_{\text {odd}}(\mathcal {G})= V_1 \cup V_3 \cup \ldots \).

Theorem 1.1

(Random walk representation of the inverse Kasteleyn matrix) There exist two random walks \(Z_\text {even}\) and \(Z_{\text {odd}}\) on the state spaces \(V_{\text {even}}(\mathcal {G})\) and \(V_{\text {odd}}(\mathcal {G})\) respectively, whose transition probabilities will be described in Sect. 2.6 (see (2.26) and (2.30)), such that the following holds. Consider the monomer-dimer model on \(\mathcal {G}\) where the monomer weight is \(z>0\) on \(V_0(\mathcal {G})\) except at its monomer-corners where the monomer weight is \(z'\), as defined in (1.3). Let K be the associated Kasteleyn matrix, and \(D = K^* K\), so that \(K^{-1} = D^{-1} K^*\). Then for all \(u,v \in V\), we have

where \(G_{\text {even}}, G_{\text {odd}} \) are the Green’s functions of \(Z_{\text {even}}\) and \(Z_{\text {odd}}\) respectively, normalised by D(v, v).

Here, by normalised Green’s function of a random walk (with at least one absorbing state), we mean

\[
G(u,v) = \frac{1}{D(v,v)}\, \textbf{E}_u \Big ( \sum _{k=0}^{\infty } \textbf{1}_{\{Z_k = v\}} \Big ),
\]

where Z is the corresponding random walk. We now specify a few properties of the random walks \({Z_{\text {even}}}\) and \(Z_{\text {odd}}\) which may be interesting to the reader already, even though the exact definition is postponed until Sect. 2.6. Both \({Z_{\text {even}}}\) and \(Z_{\text {odd}}\) behave like simple random walk away (at distance more than 2) from the boundary vertices, but with jumps of size \(\pm 2\), so the parity of the walk does not change. Both have nontrivial boundary conditions, including some reflecting and absorbing boundary arcs along the non-monomer part of the boundary \(\partial \mathcal {G}\setminus \partial _{\text {free}} \mathcal {G}\). Furthermore, both walks are allowed to make additional jumps (i.e., not necessarily equal to \(\pm 2\)) along their bottommost rows of vertices (\(V_0\) for \(Z_{\text {even}}\) and \(V_1\) for \(Z_{\text {odd}}\)). These jumps are symmetric, bounded in the even case but not in the odd case (although they do have exponentially decaying tails). Hence in the scaling limit, these walks would converge to Brownian motion in the upper half plane \(\mathbb {H}\) with reflecting (or equivalently Neumann) boundary conditions on the real axis, and with whatever boundary conditions are inherited from the Neumann/Dirichlet parts of the other boundary arcs.

An important property of these random walks that highlights the difference with the setup of [22], is that they can change colour of the vertex (in a bipartite coloring of \(\mathbb {H}\cap \mathbb {Z}^2\)). However, this can happen only when the walker visits the real line. This in turn means that the entries of the inverse Kasteleyn matrix indexed by two vertices of the same colour (which automatically vanish in Kenyon’s work) have a natural interpretation in terms of walks that go through the real line (the free boundary). This is a clear analogy with the construction of reflected random walks via the reflection principle for a walk in a reflected domain. Remarkably, this exact correspondence with reflected random walks is present already at the discrete level of the dimer model with free boundary conditions, and is the reason why the reflected Brownian motion appears in the correlation kernel of the scaling limit of the height function.

To illustrate this we explain here briefly a simple computation using Kasteleyn theory (for more details see Sect. 5.2) where this phenomenon is apparent. Let \( e= \{w,b\}\) and \(e' = \{w', b'\}\) be two edges of \(\mathbb Z^2\cap \mathbb H\) with \(w,w'\) white and \(b,b'\) black vertices in a fixed chessboard coloring of the lattice. Then, writing \(\mathcal {M}\) for a random boundary monomer-dimer cover, and using Kasteleyn theory for the dimer representation described in Sect. 2.1, we have

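\[
\textbf{P}( e, e' \in \mathcal {M}) = a \, \text {Pf} \begin{pmatrix} 0 & K^{-1}(w,b) & K^{-1}(w,w') & K^{-1}(w,b') \\ & 0 & K^{-1}(b,w') & K^{-1}(b,b') \\ & & 0 & K^{-1}(w',b') \\ & & & 0 \end{pmatrix},
\]

where the matrix is antisymmetric and \(a=K(w,b)K(w',b')\). We also have \(\textbf{P}( e \in \mathcal {M})=K(w,b)K^{-1} (w, b)\) and \(\textbf{P}( e' \in \mathcal {M})=K(w',b')K^{-1} (w', b')\), which leads to

\[
\text {Cov}\big ( \textbf{1}_{\{e \in \mathcal {M}\}}, \textbf{1}_{\{e' \in \mathcal {M}\}} \big ) = a \big ( K^{-1}(w,b') K^{-1}(b,w') - K^{-1}(w,w') K^{-1}(b,b') \big ).
\]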

Here, the second term is new compared to Kenyon’s computation in [22] (in that case one has \(K^{-1} ( w, w') = K^{-1} (b,b') = 0\)). Furthermore, using our random walk representation, \(K^{-1} ( w, w')\) and \(K^{-1} (b, b')\) can be interpreted as a derivative of the Green’s function of the appropriate walks \(Z_{\text {even}}\) and \(Z_{\text {odd}}\) evaluated at pairs of vertices of different colors. Then by construction the walks which contribute to these Green’s functions must visit the boundary.
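The covariance computation above only uses the expansion of a \(4\times 4\) Pfaffian, \(\text {Pf}(A) = A_{12}A_{34} - A_{13}A_{24} + A_{14}A_{23}\). The following minimal sketch checks this expansion numerically and isolates the two terms that survive after subtracting the product of the single-edge probabilities. The matrix entries are arbitrary integers chosen for illustration (they are not values of an actual inverse Kasteleyn matrix), rows and columns are assumed to be ordered as \(w, b, w', b'\), and the helper names are hypothetical.

```python
from itertools import permutations


def pfaffian4(A):
    """Pfaffian of a 4x4 antisymmetric matrix via its three perfect matchings."""
    return A[0][1] * A[2][3] - A[0][2] * A[1][3] + A[0][3] * A[1][2]


def determinant(A):
    """Determinant by the Leibniz formula (adequate for a 4x4 matrix)."""
    n = len(A)
    total = 0
    for perm in permutations(range(n)):
        sign = 1
        for i in range(n):
            for j in range(i + 1, n):
                if perm[i] > perm[j]:
                    sign = -sign  # one sign flip per inversion
        term = sign
        for i in range(n):
            term *= A[i][perm[i]]
        total += term
    return total


# Arbitrary upper-triangular entries standing in for K^{-1}; index order w, b, w', b'.
upper = {(0, 1): 2, (0, 2): 3, (0, 3): 5, (1, 2): 7, (1, 3): 11, (2, 3): 13}
A = [[0] * 4 for _ in range(4)]
for (i, j), value in upper.items():
    A[i][j] = value
    A[j][i] = -value  # antisymmetry

pf = pfaffian4(A)
# "Connected" part: Pfaffian minus the product of the two single-edge entries.
connected = pf - A[0][1] * A[2][3]
# It consists of the cross term (w,b')(b,w') and the same-colour term (w,w')(b,b').
cross_term = A[0][3] * A[1][2]
same_colour_term = -A[0][2] * A[1][3]
```

Multiplying `connected` by \(a\) gives the covariance. In a bipartite setting the same-colour term vanishes, so only the cross term remains.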

This intuition is what guides us to the next result, which however requires us to first take an infinite volume (thermodynamic) limit where an increasing sequence of graphs eventually covers \(\mathbb {H}\cap \mathbb {Z}^2\). We first show that the monomer-dimer model converges in such a limit. For this we need to specify a topology: we view a monomer-dimer configuration on \(\mathbb {H}\cap \mathbb {Z}^2\) as an element of \(\{0,1\}^{E(\mathbb {H})}\) where \(E(\mathbb {H})\) is the edge set of \(\mathbb {Z}^2 \cap \mathbb {H}\), and equip this space with the product topology (so convergence in this space corresponds to convergence of local observables).

To state the result we will fix a sequence \(\mathcal {G}_n\) of graphs such that \(\mathcal {G}_n\) satisfies the assumptions of Sect. 1.2, and moreover \(\mathcal {G}_n \uparrow \mathbb {Z}^2 \cap \mathbb {H}\). For simplicity of the arguments and ease of presentation, we have chosen \(\mathcal {G}_n\) to be a concrete approximation of rectangles, although the result is in fact true much more generally; we have not tried to find the most general setting in which this applies.

Theorem 1.2

(Infinite volume limit) Let \(\mathcal {G}_n\) be rectangles of diverging even sidelengths (number of vertices on a side) whose ratio remains bounded away from zero and infinity as \(n\rightarrow \infty \), and such that in the top row the right-hand side half of the vertices are removed (if this number is even, otherwise we remove one less vertex to keep the graph dimerable). Let \(\mu _n\) denote the law of the free boundary dimer model on \(\mathcal {G}_n\) with monomer weight \(z>0\) except at the monomer-corners where the weight is \(z'\), as in (1.3). Then \(\mu _n\) converges weakly as \(n \rightarrow \infty \) to a law \(\mu \) which describes a.s. a random boundary monomer-dimer configuration on \(\mathbb {Z}^2 \cap \mathbb {H}\).

We note that the particular type of domains chosen in this statement (see e.g. Fig. 3 for an illustration) guarantees that both the odd and even walks mentioned above are killed on a macroscopic part of the upper rows of \(\mathcal {G}_n\) (the odd walk is killed on the right-hand side half and the even walk on the left-hand side half of its uppermost row); importantly this killing can occur with positive probability without touching the real line starting from any position away from the real line. The other key requirement is that the domain grows to infinity in a “homogeneous” way: for instance, \(\mathcal {G}_n\) both contains a ball of radius \(c_1n\) and is contained in a ball of radius \(c_2n\) for some suitable constants \(c_1, c_2>0\). Subject to these two requirements (both walks may be killed with positive probability without touching the real line once they are far away from it, and the growth is homogeneous in all directions), there should be no difficulty extending the result in Theorem 1.2; see immediately above Proposition 3.7 for the kind of domains which are explicitly allowed in the proof. For concreteness and simplicity we opted to choose the sequence of domains as in the statement: removing (approximately) half of the vertices in the top row makes it possible for walks of all types to be killed without touching the real line.

We stress the fact that the limiting law \(\mu \) depends on the monomer weight \(z>0\). As mentioned before, we can associate to the monomer-dimer configuration in the infinite half-plane a height function which is defined on the faces of \(\mathbb {H}\cap \mathbb {Z}^2\), up to a global additive constant. The last main result of this paper shows that in the scaling limit, this (centered) height function converges to a Gaussian free field with Neumann (or free) boundary conditions, denoted by \(\Phi ^{\text {Neu}}\). We will not define this in complete generality here (see [8] for a comprehensive treatment). We will simply point out what is concretely relevant for the theorem below to make sense. Given a simply connected domain \(\Omega \) with a smooth boundary, \(\Phi ^{\text {Neu}}_{\Omega }\) may be viewed as a stochastic process indexed by the space \(\mathcal {D}_0(\Omega )\) of smooth test functions \(f: \Omega \rightarrow \mathbb {R}\) with compact support and with zero average (meaning \(\int _\mathbb {H}f(z) dz = 0\)). The latter requirement corresponds to the fact that \(\Phi \) is only defined modulo a global additive constant. The law of this stochastic process is characterised by a requirement of linearity (i.e. \((\Phi ^{\text {Neu}}_{\Omega }, a f + b g) = a (\Phi ^{\text {Neu}}_{\Omega }, f) + b (\Phi ^{\text {Neu}}_{\Omega }, g)\) a.s. for any \(f, g \in \mathcal {D}_0(\Omega )\) and \(a, b \in \mathbb {R}\)), and moreover \((\Phi ^{\text {Neu}}_{\Omega }, f)\), \((\Phi ^{\text {Neu}}_{\Omega }, g)\) follow centered Gaussian distributions with covariance

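\[
\textbf{E}\big [ (\Phi ^{\text {Neu}}_{\Omega }, f) (\Phi ^{\text {Neu}}_{\Omega }, g) \big ] = \iint _{\Omega ^2} f(x)\, G^{\text {Neu}}_\Omega (x,y)\, g(y) \, dx\, dy,
\]

where \(G^{\text {Neu}}_\Omega (x,y)\) is a Green’s function in \(\Omega \) with Neumann boundary conditions. (Note that by contrast to the Dirichlet case, such Green’s functions are not unique and are defined only up to a constant.) In the case of the upper-half plane \(\Omega = \mathbb {H}\), the Green’s function is given explicitly by

\[
G^{\text {Neu}}_{\mathbb {H}}(x,y) = - \log |x-y| - \log |x - \bar{y}|.
\]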

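Informally, pointwise differences \(\Phi ^{\text {Neu}}_{\mathbb {H}}(a_i) - \Phi ^{\text {Neu}}_{\mathbb {H}} (b_i)\) for \(a_i,b_i\in \mathbb {H}\) (which do not depend on the choice of the global additive constant) are centered Gaussian random variables with covariances

\[
\textbf{E}\big [ (\Phi ^{\text {Neu}}_{\mathbb {H}}(a_i) - \Phi ^{\text {Neu}}_{\mathbb {H}}(b_i)) (\Phi ^{\text {Neu}}_{\mathbb {H}}(a_j) - \Phi ^{\text {Neu}}_{\mathbb {H}}(b_j)) \big ] = G^{\text {Neu}}_{\mathbb {H}}(a_i,a_j) - G^{\text {Neu}}_{\mathbb {H}}(a_i,b_j) - G^{\text {Neu}}_{\mathbb {H}}(b_i,a_j) + G^{\text {Neu}}_{\mathbb {H}}(b_i,b_j). \tag{1.5}
\]

Note that our Green’s function is normalised so that it behaves like \(1 \times \log (1/ | x-y| )\) as \(y -x \rightarrow 0\). Naturally, (1.5) must be understood in an integrated way since pointwise differences are not actually defined.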
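As a sanity check on the normalisation, the following minimal sketch evaluates a Neumann Green’s function in \(\mathbb {H}\) numerically. It assumes the standard reflection formula \(G(x,y) = -\log |x-y| - \log |x-\bar{y}|\), which has the \(\log (1/|x-y|)\) singularity described above. The function names are hypothetical, and points of \(\mathbb {H}\) are represented as Python complex numbers.

```python
import math


def green_neumann_half_plane(x, y):
    """Assumed Neumann Green's function in the upper half-plane (reflection formula)."""
    return -math.log(abs(x - y)) - math.log(abs(x - y.conjugate()))


def difference_covariance(a, b, c, d, green=green_neumann_half_plane):
    """Formal covariance of Phi(a) - Phi(b) and Phi(c) - Phi(d)."""
    return green(a, c) - green(a, d) - green(b, c) + green(b, d)


x, y = 1 + 2j, -0.5 + 1j

# Symmetry in the two arguments.
symmetric_gap = green_neumann_half_plane(x, y) - green_neumann_half_plane(y, x)

# Evenness under reflection, which forces the normal derivative to vanish on the real axis.
h = 1e-4
normal_derivative = (
    green_neumann_half_plane(0.3 + 1j * h, y) - green_neumann_half_plane(0.3 - 1j * h, y)
) / (2 * h)

# Adding a constant to the Green's function does not change covariances of differences.
shifted = lambda u, v: green_neumann_half_plane(u, v) + 7.0
cov_plain = difference_covariance(x, y, 2 + 1j, 3 + 0.5j)
cov_shifted = difference_covariance(x, y, 2 + 1j, 3 + 0.5j, green=shifted)

# Near the diagonal, G(x, y) + log|x - y| stays bounded and tends to -log(2 Im x).
near = x + 1e-8
regular_part = green_neumann_half_plane(x, near) + math.log(abs(x - near))
```

The last quantity illustrates that the singularity on the diagonal is exactly \(1 \times \log (1/|x-y|)\), with a bounded remainder depending on the distance to the real axis.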

We may now state the announced result. For \(\delta >0\) (the mesh size), let \(h^\delta \) denote the height function (defined up to a constant, and by definition centered) of the free boundary dimer model \(\mu \) with weight z in the infinite half-plane \(\mathbb {H}\cap \delta \mathbb {Z}^2\) (rescaled by \(\delta \)). We identify \(h^\delta \) with a function defined almost everywhere on \(\mathbb {H}\) by taking the value of \(h^\delta \) to be constant on each face, and view \(h^\delta \) as a random distribution (also called a random generalized function) acting on smooth compactly supported functions f on \(\mathbb {H}\) with zero average, i.e., satisfying \(\int _\mathbb {H}f(a)da =0\) (see Sect. 5.5 for details).

Theorem 1.3

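(Scaling limit) Let \(f_{1}, \ldots , f_k \in \mathcal {D}_0(\mathbb {H})\) be arbitrary test functions. Then for all \(z>0\), as \(\delta \rightarrow 0\),

\[
\big ( (h^\delta , f_i) \big )_{i=1}^{k} \rightarrow \Big ( \frac{1}{\sqrt{2}\pi } (\Phi ^{\text {Neu}}_{\mathbb {H}}, f_i) \Big )_{i=1}^{k}
\]

in distribution.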

Note that, maybe surprisingly, the scaling limit does not depend on the value of \(z>0\) (we discuss this in more detail in Sect. 1.4). We also wish to call the attention of the reader to the normalising factor \(1/ (\sqrt{2}\pi )\) in front of \(\Phi \) on the right-hand side of Theorem 1.3. It is equal to the one appearing in the usual dimer model in which the centered height function has zero (Dirichlet) boundary conditions. We note that comparisons with other works such as [6, 23] should be made carefully, since the normalisation of the Green’s function and of the height function may not be the same: for instance, Kenyon takes the Green’s function to be normalised so that \(G(x,y) \sim 1/(2\pi ) \log 1/|x-y|\) as \(y \rightarrow x\), so his GFF is \(1/\sqrt{2\pi }\) ours (ignoring different boundary conditions). Also, in Kenyon’s work [22], the height function is such that the total flow out of a vertex is 4 instead of 1 here (so his height function is 4 times ours), while it is \(2\pi \) in [6] (so their height function is \(2\pi \) times ours). Adjusting for these differences, there is no discrepancy between the constant \(1/(\sqrt{2}\pi )\) on the right-hand side of Theorem 1.3 and the one in [22, 23].

1.4 Heuristics: reflection and even/odd decomposition

As noted before, Theorem 1.3 may be surprising at first sight, when we consider the behaviour of the model in the two extreme cases \(z=0\) and \(z = \infty \). Indeed, when \(z=0\), the free boundary dimer model obviously reduces to the dimer model on \(\mathbb {H}\), in which case the limit is a Dirichlet GFF. When \(z= \infty \), all vertices of \(V_0\) are monomers, so the model reduces to a dimer model on \((V_1 \cup V_2 \cup \ldots )\simeq \mathbb {H}\cap \mathbb {Z}^2\). Hence, the limit is also a Dirichlet GFF in this case. However, the result above says that for any z strictly in between these two extremes, the limit is a Neumann GFF.

The result (and the reason for this arguably surprising behaviour) may be heuristically understood through the following reflection argument. Let \(\mathcal {G}\) be a large finite graph approximating \(\mathbb {H}\) and satisfying the assumptions of Sect. 1.2. Let \(\tilde{\mathcal {G}}\) be a copy of \(\mathcal {G}\) shifted by i/2, so with a small abuse of notation, \(\tilde{\mathcal {G}} = \mathcal {G}+ i /2\) (here \(i = \sqrt{-1}\)), and let \(\bar{\mathcal {G}}\) be the same graph to which we add its conjugate (reflection through the real axis). We also add vertical edges crossing the real axis of the form \((k-i/2, k+i/2)\) for each \(k \in V_0\); note that the resulting graph is then clearly bipartite. Given a monomer-dimer configuration on \(\mathcal {G}\), we can readily associate a monomer-dimer configuration on \(\bar{\mathcal {G}}\) by reflecting it in the same manner. In this way, a monomer in \(k+ i/2\) necessarily sits across another monomer in \(k-i/2\) for any \(k \in V_0\). Such a pair of monomers can be interpreted as a dimer on the edge \((k-i/2, k+i/2)\) and once we have phrased it this way the resulting configuration is just an ordinary dimer configuration on \(\bar{\mathcal {G}}\) (which however has the property that it is reflection symmetric). It follows that its height function (defined on the faces of \(\bar{\mathcal {G}}\)) is even, i.e., \(h(f) = h (\bar{f})\) for every face f (where \(\bar{f}\) is the symmetric image of f about the real axis). Moreover, a moment of thought shows that monomer-dimer configurations on \(\mathcal {G}\) are in bijection in this manner with the set \(\mathcal {E}\mathcal {D}(\bar{\mathcal {G}})\) of even (symmetric) dimer configurations on \(\bar{\mathcal {G}}\), and that under this bijection the image of the law (1.2) is given by

\[
\bar{\textbf{P}}(M) = \frac{z^{|\text {mon}(M)|}}{\bar{\mathcal {Z}}}, \qquad M \in \mathcal {D}(\bar{\mathcal {G}}), \tag{1.6}
\]

(where for a dimer configuration \(M \in \mathcal {D}(\bar{\mathcal {G}})\), \(\text {mon} (M) \) is the set of vertical edges of M crossing the real axis), conditioned on the event \(\mathcal {E}\mathcal {D}(\bar{\mathcal {G}})\) of being even, where \(\bar{\mathcal Z}\) is the partition function of the dimer model on \(\bar{\mathcal {G}}\).
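The reflection map can be sketched in a few lines. In the illustration below, a vertex \((x,y)\) of \(\mathcal {G}\) is shifted to height \(y+1/2\); to stay within integers we store the doubled height \(2y+1\) and its mirror image \(-(2y+1)\). The function names and this encoding are hypothetical choices made for the example only.

```python
def reflect_cover(matching, monomers):
    """Map a boundary monomer-dimer cover on G to an edge set on the doubled graph."""

    def up(v):  # copy of v in the upper half, at doubled height 2y + 1
        return (v[0], 2 * v[1] + 1)

    def down(v):  # mirror image of v through the real axis
        return (v[0], -(2 * v[1] + 1))

    doubled = set()
    for a, b in matching:
        doubled.add(frozenset((up(a), up(b))))      # shifted dimer
        doubled.add(frozenset((down(a), down(b))))  # its reflection
    for v in monomers:
        doubled.add(frozenset((up(v), down(v))))    # monomer pair becomes a crossing dimer
    return doubled


def is_perfect_matching(edge_set, vertices):
    """Every vertex is covered exactly once."""
    covered = [v for e in edge_set for v in e]
    return len(covered) == len(set(covered)) and set(covered) == set(vertices)


def is_reflection_symmetric(edge_set):
    """The edge set is invariant under (x, h) -> (x, -h)."""
    mirror = {frozenset((x, -h) for (x, h) in e) for e in edge_set}
    return mirror == edge_set


# 2 x 2 square: the top edge is a dimer and both bottom vertices are monomers.
matching = [((0, 1), (1, 1))]
monomers = [(0, 0), (1, 0)]
doubled = reflect_cover(matching, monomers)
doubled_vertices = [(x, h) for x in (0, 1) for h in (-3, -1, 1, 3)]
crossing = sum(1 for e in doubled if {h for _, h in e} == {1, -1})
```

In this example the two monomers become two vertical dimers crossing the real axis, so the number of crossing edges equals \(|\text {mon}(M)|\), as used in the weight above.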

Now, suppose e.g. that \(\mathcal {G}\) is such that \(\bar{\mathcal {G}}\) is piecewise Temperleyan [36] (meaning that \(\bar{\mathcal {G}}\) has two more white convex corners than white concave corners, see [36] for precise definitions). This happens for instance if \(\mathcal {G}\) is a large rectangle with appropriate dimensions. By a result of Russkikh [36], in this case and if \(z = 1\), the unconditional (centered) height function associated with the dimer model (1.6) converges to a Gaussian free field with Dirichlet boundary condition in the scaling limit.

It is reasonable to believe that this convergence holds true even when \(z \ne 1\). For instance, when the monomer weights alternate between z and 1 every second vertex, then whatever the value of z, the dimer model has a Temperleyan representation (see [5, 27]): indeed, in that case the weighted graph is obtained as a superposition of a planar graph and its dual for which the dual edges all have weight one.

Then by considerations related to the imaginary geometry approach (see [6]), this convergence to the Dirichlet GFF is universal provided that the underlying random walk converges to Brownian motion (this will be rigorously proved in the forthcoming work [7]). In particular, given these results, we should get convergence to the Dirichlet GFF for the height function even when \(z \ne 1\): indeed, when we modify the weight of all the edges crossing the real line, random walk will still converge to Brownian motion. So far, this discussion concerned the (unconditioned) dimer model on \(\bar{\mathcal {G}}\) defined in (1.6). Once we start conditioning on \(\mathcal {E}\mathcal {D}(\bar{\mathcal {G}})\) it might be natural to expect that the scaling limit should be a “Dirichlet GFF conditioned to be even”, though this is a highly degenerate conditioning. Nevertheless, this conditioning makes sense in the continuum, and in fact its restriction to the upper half plane gives the Neumann GFF, as we are about to argue. Indeed, for a full plane GFF \(\Phi _{\mathbb {C}}\) restricted to \(\mathbb H\), it is easy to check that one has the decomposition

\[
\Phi _{\mathbb {C}} = \frac{1}{\sqrt{2}} \big ( \Phi ^{\text {Neu}}_\mathbb {H}+ \Phi ^{\text {Dir}}_\mathbb {H}\big ),
\]

where \(\Phi ^{\text {Neu}}_\mathbb {H}, \Phi ^{\text {Dir}}_\mathbb {H}\) are independent fields on \(\mathbb {H}\) with Neumann and Dirichlet boundary conditions on \(\mathbb {R}\) respectively. This follows immediately from the fact that any test function can be written as the sum of an even and an odd function, and this decomposition is orthogonal for the Dirichlet inner product \((\cdot , \cdot )_{\nabla }\) on \(\mathcal {D}_0(\mathbb {C})\). Therefore, conditioning \(\Phi _\mathbb {C}\) to be even amounts to conditioning on \(\Phi _\mathbb {H}^{\text {Dir}}\) to vanish everywhere, meaning that \(\Phi _\mathbb {C}\) (restricted to the upper half plane) is exactly equal to \(\Phi ^{\text {Neu}}_\mathbb {H}/\sqrt{2}\). (See Exercise 1 of Chapter 5 in [8] for details.)
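At the level of covariances, the decomposition can be checked directly. The following short computation is a sketch under the assumption that the Green’s functions are given by the standard reflection formulas, with the full plane kernel taken to be \(-\log |x-y|\) (recall that these kernels are only defined up to constants in the full plane and Neumann cases). Since the two half-plane fields are independent, the covariance kernel of \((\Phi ^{\text {Neu}}_\mathbb {H}+ \Phi ^{\text {Dir}}_\mathbb {H})/\sqrt{2}\) is the average of the two half-plane kernels:

```latex
\begin{align*}
G^{\text{Neu}}_{\mathbb{H}}(x,y) &= -\log|x-y| - \log|x-\bar{y}|, \\
G^{\text{Dir}}_{\mathbb{H}}(x,y) &= -\log|x-y| + \log|x-\bar{y}|, \\
\tfrac{1}{2}\Big( G^{\text{Neu}}_{\mathbb{H}}(x,y) + G^{\text{Dir}}_{\mathbb{H}}(x,y) \Big)
  &= -\log|x-y| = G_{\mathbb{C}}(x,y), \qquad x, y \in \mathbb{H}.
\end{align*}
```

The reflected terms \(\pm \log |x-\bar{y}|\) cancel, which is the covariance counterpart of the orthogonality of even and odd test functions.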

We note that while this argument correctly predicts the Neumann GFF as a scaling limit of the height function, it is however also somewhat misleading as it suggests that the limit of \(h^\delta \) is not \(1/(\sqrt{2}\pi )\, \Phi ^{\text {Neu}}_{\mathbb {H}}\) as in Theorem 1.3, but is smaller by a factor \(1/\sqrt{2}\), i.e., \(1/(2\pi )\, \Phi ^{\text {Neu}}_{\mathbb {H}}\).

To understand this discrepancy, we now explain why the additional factor turns out to be an artifact of a Gaussian computation and does not arise in the discrete setup. A convincing one-dimensional parallel is that of Gaussian and simple random walk bridges. Indeed, consider bridges of 2n steps starting and ending at 0, with symmetric Bernoulli and Gaussian jump distributions with variance one. Now condition the walks to be symmetric around time n, i.e. \(X(n\pm k) = X(n\mp k)\). Again, the Gaussian conditioning is singular but can be easily made sense of using Gaussian integrals. Restricted to the time interval [0, n], the conditioned simple random walk bridge is just a simple random walk with the same step distribution as the original bridge. However, the conditioned Gaussian walk has step distribution with variance 1/2 as a result of the conditioning. In particular, in the diffusive scaling limit, the former walk converges to standard Brownian motion whereas the latter converges to \(1/\sqrt{2}\) times the standard Brownian motion. The framework of the current paper is more similar to the simple random walk case, as discrete height functions are its “two-dimensional-time” analogs. This concludes the discussion giving the heuristics for Theorem 1.3.
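The one-dimensional parallel can be verified by hand for small n. The sketch below (with hypothetical function names) enumerates all \(\pm 1\) paths of 2n steps that are symmetric around time n, and separately computes the conditional covariance of i.i.d. standard Gaussian steps given the same symmetry constraints. It uses the observation that symmetry around time n is equivalent to the linear constraints \(\xi _{n-k+1} + \xi _{n+k} = 0\) for \(k=1,\ldots ,n\) on the steps, which in particular force the path to be a bridge.

```python
from fractions import Fraction
from itertools import product


def symmetric_bernoulli_bridges(n):
    """All +-1 step sequences of length 2n whose path satisfies X(n+k) = X(n-k)."""
    result = []
    for steps in product((-1, 1), repeat=2 * n):
        path = [0]
        for s in steps:
            path.append(path[-1] + s)
        if all(path[n + k] == path[n - k] for k in range(n + 1)):
            result.append(steps)
    return result


def conditioned_gaussian_step_covariance(n):
    """Covariance of 2n i.i.d. N(0,1) steps conditioned on the symmetry constraints.

    The constraint rows are mutually orthogonal with squared norm 2, so the
    conditional covariance I - A^T (A A^T)^{-1} A reduces to I - A^T A / 2.
    """
    m = 2 * n
    rows = []
    for k in range(1, n + 1):
        row = [0] * m
        row[n - k] = 1       # step number n-k+1 (0-based index n-k)
        row[n + k - 1] = 1   # step number n+k (0-based index n+k-1)
        rows.append(row)
    cov = [[Fraction(int(i == j)) for j in range(m)] for i in range(m)]
    for row in rows:
        for i in range(m):
            for j in range(m):
                cov[i][j] -= Fraction(row[i] * row[j], 2)
    return cov


n = 3
bridges = symmetric_bernoulli_bridges(n)
first_halves = sorted(steps[:n] for steps in bridges)
all_half_walks = sorted(product((-1, 1), repeat=n))
cov = conditioned_gaussian_step_covariance(n)
```

In the Bernoulli case every \(\pm 1\) sequence of length n appears exactly once as a first half, so the conditioned walk on [0, n] is a simple random walk with unit step variance. In the Gaussian case the first n steps remain independent but each has variance 1/2.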

1.5 A conjecture on the boundary-touching level lines

In the study of the dimer model, a well known conjecture of Kenyon concerns the superposition of two independent dimer configurations. It is easy to check that such a superposition results in a collection of loops (including double edges) covering every vertex. This observation is attributed to Percus [33]. These loops are the level lines of the difference of the two corresponding dimer height functions. Kenyon’s conjecture (stated somewhat informally in [25] for instance) is that the loops converge in the scaling limit to CLE\(_4\), the conformal loop ensemble with parameter \(\kappa = 4\) (defined in [37], see also [38]). This is strongly supported by the fact that in the continuum, CLE\(_4\) can be viewed as the level lines of a (Dirichlet) GFF with a specified variance (a consequence of a well known coupling between the GFF and CLE\(_4\) of Miller and Sheffield, see [1] for a complete statement and proof). Major progress has been made recently on this conjecture through the work of Kenyon [25], Dubédat [12] and Basok and Chelkak [4], and the only remaining ingredient of the full proof is to show precompactness of the family of loops in a suitable metric space. Updated during the revision process: in combination with the work of Bai and Wan [2] the above results now show that the so-called cylindrical probabilities of the double dimer model converge to those of CLE\(_4\). This gives the proof of a weak form of Kenyon’s conjecture.

Fig. 1 Left: A superposition of two monomer-dimer configurations, shown in blue and red respectively. Double edges are in purple. The boundary-touching level lines of the height function are the arcs joining monomers to monomers, marked in bold black. Right: A simulation of ALE by B. Werness (color figure online)

It is natural to ask if any similar phenomenon occurs when we superpose two independent monomer-dimer configurations sampled according to the free boundary dimer model, say in the upper half-plane. For topological reasons, this gives rise to a gas of loops as above but also a collection of curves connecting monomers to monomers (and hence the real line to the real line). See Fig. 1 for an example. In fact, the superposition of two free boundary dimer configurations is related to the superposition of two dimer configurations with different boundary conditions considered in [26], with the difference being that in that model the monomers occur in fixed locations. We note that these authors already establish connections between their results and multiple-strand SLE.
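The topological statement above (loops and double edges, plus arcs joining monomers to monomers) can be illustrated on a toy example. The following is a minimal sketch, not taken from the paper: the function name `superposition_components` and the small 3 x 2 configurations are hypothetical, and the code assumes that both inputs are valid (monomer-)dimer covers, so that every vertex has degree at most two in the superposition.

```python
from collections import defaultdict


def superposition_components(cover_a, cover_b):
    """Classify the connected components of the superposition of two covers.

    Each cover is an iterable of edges (u, v). A vertex that is a monomer in
    exactly one cover has degree 1 in the superposition and is an arc endpoint.
    Returns (number of double edges, number of longer loops, list of arcs),
    where each arc is recorded by its sorted pair of endpoints.
    """
    adjacency = defaultdict(list)
    for u, v in list(cover_a) + list(cover_b):
        adjacency[u].append(v)
        adjacency[v].append(u)

    seen = set()
    double_edges, loops, arcs = 0, 0, []
    for start in list(adjacency):
        if start in seen:
            continue
        # Depth-first search collecting the component that contains `start`.
        component, stack = [], [start]
        seen.add(start)
        while stack:
            vertex = stack.pop()
            component.append(vertex)
            for neighbour in adjacency[vertex]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        endpoints = sorted(v for v in component if len(adjacency[v]) == 1)
        if endpoints:
            arcs.append(tuple(endpoints))
        elif len(component) == 2:
            double_edges += 1
        else:
            loops += 1
    return double_edges, loops, arcs


# Hypothetical 3 x 2 example; the row y = 0 plays the role of the free boundary.
cover_red = [((0, 0), (0, 1)), ((1, 0), (1, 1)), ((2, 0), (2, 1))]  # no monomers
cover_blue = [((0, 1), (1, 1)), ((2, 0), (2, 1))]  # monomers at (0, 0) and (1, 0)
result = superposition_components(cover_red, cover_blue)
```

In this example the superposition consists of one double edge and one arc joining the two monomers of the blue configuration, both of which lie on the free boundary row.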

An obvious question is to describe the law of this collection of curves in the scaling limit. By analogy with the above, and in view of our result (Theorem 1.3), it is natural to expect that these curves converge in the scaling limit to the level lines of a GFF with Neumann boundary conditions on the upper half-plane. The law of these curves was determined by Qian and Werner [35] to be the ALE process. (ALE stands for Arc Loop Ensemble: it is a collection of arcs that can be connected into loops, but here we will not be interested in this aspect and will only see them as arcs.) ALE is one possible name for this set, but more precisely it is equal to the branching SLE\(_4(-1,-1)\) exploration tree targeting all boundary points, and is also equal to the (gasket of) BCLE\(_4(-1)\) in [32] and \(\mathbb {A}_{-\lambda , \lambda }\) in [1].

This leads us to the following conjecture:

Conjecture 1.4

For any \(z>0\), in the scaling limit, the collection of boundary-touching curves resulting from superimposing two independent free boundary dimer models converges to the Arc Loop Ensemble ALE in the upper half-plane.

1.6 Folding the dimer model onto itself

The discussion in Sects. 1.4 and 1.5 leads naturally to another conjecture which we now spell out. In Sect. 1.5 we explained a conjecture pertaining to the superposition of two independent monomer-dimer configurations sampled according to the free boundary dimer model. But there is at least one other natural way to superpose two such configurations that are not independent: namely, when they come from the same full plane dimer model. In fact, there are two ways to do the folding, depending on whether we shift by i/2 or not.

Let us explain this more precisely. Let us define the graph \(\hat{\mathcal {G}}\) which is obtained by adding to \(\mathcal {G}\) its reflection with respect to the real axis. The vertices of \(\mathcal {G}\) on the real axis (i.e., \(V_0\)) are not reflected: we only keep one copy of them in \(\hat{\mathcal {G}}\). (By contrast, in the graph \(\bar{\mathcal {G}}\), where \(\mathcal {G}\) is shifted by i/2 prior to reflection, these vertices are duplicated).

Now, consider an (infinite volume) dimer cover M on \(\hat{\mathcal {G}}\), viewed as a subset of edges where every vertex has degree 1, and consider the superposition \(\hat{\Sigma }\) obtained by superposing M with itself via a reflection through the real line: thus,

Then \(\hat{\Sigma }\) is a subgraph of degree two (including double edges), except for vertices on \(V_0 \subset \mathbb {R}\) which in M are connected to a vertical edge. Thus \(\hat{\Sigma }\) is exactly of the same nature as the graph in Fig. 1. It is not hard to see that the “height function” \(h_{\hat{\Sigma }}\) (really defined only up to a global additive constant) naturally associated with \(\hat{\Sigma }\) converges in the fine mesh size limit to \((1/\pi ) \Phi ^{\text {Neu}}_\mathbb {H}\): this is because at the discrete level, the corresponding height function \(h_{\hat{\Sigma }}(f)\) at a face \(f \subset \mathbb {H}\) can be viewed as \(h_M(f) + h_M( \bar{f})\) (where \(h_M\) is the height function associated with M), and \(h_M\) is known to converge to \((1/\sqrt{2} \pi )\Phi _\mathbb {C}\) [10]. These considerations lead us to the following conjecture:

Conjecture 1.5

In the scaling limit, the collection of boundary-touching curves in \(\hat{\Sigma }\) converges to the Arc Loop Ensemble ALE in the upper half-plane.

We remark that it is also meaningful to fold a dimer configuration on \(\bar{\mathcal {G}}\) (rather than \(\hat{\mathcal {G}}\) above) onto itself via reflection through the real line. In that case, one must erase the vertical edges straddling the real line and view the corresponding dimers as pairs of monomers. The resulting superposition \(\bar{\Sigma }\) is a subgraph of degree two, including multiple edges and double points (on \(V_0 \subset \mathbb {R}+ i /2\)). In particular there are no boundary arcs in \(\bar{\Sigma }\), except for degenerate lines connecting every monomer to itself. For the same reason as above, the height function \(h_{\bar{\Sigma }}\) associated to \(\bar{\Sigma }\) may be viewed as \(h_M(f) - h_M(\bar{f})\) and so converges in the scaling limit towards \((1/\pi ) \Phi ^{\text {Dir}}_\mathbb {H}\). Analogously to Conjecture 1.5, we conjecture that the loops of \(\bar{\Sigma }\) converge to CLE\(_4\).
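The prefactors \(1/\pi \) for the two foldings can be checked by a formal covariance computation. The sketch below assumes the normalisations \(\mathbb {E}[\Phi _\mathbb {C}(x)\Phi _\mathbb {C}(y)] = -\log |x-y|\) (modulo constants), \(G^{\text {Neu}}_\mathbb {H}(x,y) = -\log |x-y| - \log |x-\bar{y}|\) and \(G^{\text {Dir}}_\mathbb {H}(x,y) = -\log |x-y| + \log |x-\bar{y}|\); these conventions are assumptions of the sketch and should be compared with the definitions used elsewhere in the paper.

```latex
\begin{align*}
\mathbb{E}\big[(\Phi_{\mathbb{C}}(x) \pm \Phi_{\mathbb{C}}(\bar{x}))
               (\Phi_{\mathbb{C}}(y) \pm \Phi_{\mathbb{C}}(\bar{y}))\big]
&= -\log|x-y| - \log|\bar{x}-\bar{y}| \mp \log|x-\bar{y}| \mp \log|\bar{x}-y| \\
&= 2\big(-\log|x-y| \mp \log|x-\bar{y}|\big).
\end{align*}
```

With the plus sign the right-hand side is \(2G^{\text {Neu}}_\mathbb {H}(x,y)\), and with the minus sign it is \(2G^{\text {Dir}}_\mathbb {H}(x,y)\). Under these assumptions the folded field has the law of \(\sqrt{2}\) times the Neumann (resp. Dirichlet) field, and multiplying by the prefactor \(1/(\sqrt{2}\pi )\) of \(h_M\) gives \(1/\pi \) in both cases.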

1.7 Connection with isoradial random walk with critical weights

The following remark was suggested by an anonymous referee. There is a special value of the fugacity parameter z, namely

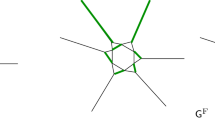

such that the even walk \(Z_{\text {even}}\) coincides (after a small change in the embedding) with the random walk on isoradial graphs with critical weights considered in the work of Kenyon [24]. To see this, one can notice that the even walk \(Z_{\text {even}}\) is equivalent to a random walk on two upper-half planes (or more precisely, square lattices on these half planes) welded together via a row of triangles. See Fig. 2. Such a graph has an isoradial embedding and the corresponding critical weights have weight 1 on the square lattice edges, and weight z given by (1.8) on the remaining triangle edges, as follows from elementary calculations. In that case, convergence of the derivative of the potential kernel (i.e., part of the inverse Kasteleyn matrix) would follow from Theorem 4.3 in [24].

Fig. 2 After reflecting the white sublattice of \(V_{\text {even}}\) (dashed lines), a graph composed of two square lattice half-planes glued together by a row of interlacing isosceles triangles is formed (solid lines). If the angle between the legs of the triangle is equal to \(\pi /4\), the graph is isoradial and the walk \(Z_{\text {even}}\) for \(z^2 = \tan (\pi /8)\) is the same as the walk studied by Kenyon in [24]

Fig. 3 An augmented non-bipartite graph \(\mathcal {G}^{0}\) and its Kasteleyn orientation. The graph is constructed from a piece of the square lattice \(\mathcal {G}\) with \(\partial _m \mathcal {G}= V_0\) by adding the bottom circuit of triangles. The two red edges and vertices in the figure denote a single edge and vertex in \(\mathcal {G}^0\). Diamonds represent vertices of \(\mathcal {G}^0\setminus \mathcal {G}\). We assume that \(|V_0|\) is even (here equal to 6) so that the mapping of [16] can be directly applied. In this case \(\mathcal {G}\) has one black and one white monomer-corner, three black dimer-corners, and one white dimer-corner. The additional circuit of triangles simulates the presence of monomers in the free boundary dimer model by means of a standard dimer model. This is expressed as a measure-preserving two-to-one map between \(\mathcal {D}(\mathcal {G}^0)\) and \(\mathcal{M}\mathcal{D}(\mathcal {G})\) with a proper choice of weights [16]. One of the two dimer covers of \(\mathcal {G}^0\) corresponding to the fixed monomer-dimer cover of \(\mathcal {G}\) is depicted in orange (color figure online)

Fig. 4 Three types of vertices x where the transition weights of \(D=K^*K\) are signed. The arrows indicate the corresponding value of D(x, y). Note the following crucial observations: First, in the rightmost case (when \(x\in V_0\)), the absolute values of the transition weights sum up to the diagonal term. Moreover, the transition weight is negative if and only if the size of the step is odd (more precisely equal to one). A similar observation holds in the central picture (when \(x\in V_{-1}\)) if one ignores the transition weights that lead back to \(V_1\). This is the basis for the construction of Sect. 2.3 and the definition of the auxiliary random walk on \(\mathbb {Z}\) from (2.7). Our approach is to “forget” what the walk does when it stays in \(V_{-1}\) and resum over all trajectories contained in \(V_{-1}\) and with the same endpoints in \(V_1\)

1.8 Outline of the paper and structure of proof

This paper is organized as follows: In Sect. 2 we first describe the measure preserving mapping from [16] between the free boundary dimer model on \(\mathcal {G}\) and the standard dimer model on an augmented (nonbipartite) graph \(\mathcal {G}^0\) (as in Fig. 3), which is the starting point of our paper. We then define a Kasteleyn orientation on \(\mathcal {G}^0\), the associated Kasteleyn matrix \(\tilde{K}\), and finally we choose a convenient complex-valued gauge changed Kasteleyn matrix K (this gauge is closely related to the one of Kenyon [22] and allows one to interpret K as a discrete Dirac operator). Kasteleyn theory (which we recall later on in the paper) says that the correlations of the dimer model on \(\mathcal {G}^0\) (and hence also of the free boundary dimer model on \(\mathcal {G}\)) can be computed from the inverse Kasteleyn matrix \(K^{-1}\).

With the intention of developing its random walk representation, we therefore begin by analysing the inverse Kasteleyn matrix when \(\mathcal {G}\) is a subgraph of the square lattice with appropriate boundary conditions described in Sect. 1.2. To this end we look at the matrix \(D =K^*K\), whose off-diagonal entries we interpret as (signed) transition weights. These weights away from \(\partial _{\text {free}} \mathcal {G}\) (which is a subset of the real line) are positive and hence define proper random walks as in [22]. However, the description of D as a Laplacian matrix associated to a random walk breaks down completely for vertices on the three bottommost rows of \(\mathcal {G}^0\) (as in Fig. 4). We stress the fact that the level of complication is considerably higher for transitions between odd rows (that will lead to the definition of the walk \(Z_{\text {odd}}\)). Indeed, as indicated in Fig. 4, for even rows the arising walk \(Z_{\text {even}}\) can be relatively easily understood as a proper random walk reflected on the real line after taking into account a global sign factor appearing in D (which leads to the formula in the second line of (1.4)).

Therefore the remainder of Sect. 2 is devoted to the random walk representation for \(K^{-1}\), which is one of the main contributions of this paper. The main idea is to “forget” the steps of the signed walk induced by D taken along the row \(V_{-1}\), or more precisely to only specify the trajectory of a path away from \(V_{-1}\) and combine together all paths that agree with this choice. The hope is that the resulting projected signed measure on trajectories contained in \(V_{\text {odd}}=V_1\cup V_3\cup \ldots \) with (unbounded) steps from \(V_1\) to \(V_1\) is actually a true probability measure. Remarkably (in our opinion), we show that this is indeed the case; this phenomenon is what really lies behind the random walk representation of Theorem 1.1. To achieve this, an additional (intermediate) limiting procedure is required. To be precise, we first pretend that the rows \(V_0\) and \(V_{-1}\) of \(\mathcal {G}^0\) are infinite. This is done by defining graphs \(\mathcal {G}^N\), where a circuit of additional triangles is appended to \(\mathcal {G}\), and then taking the limit while the size of the circuit diverges. This allows us to perform exact computations for the transition weights from \(V_1\) to \(V_1\) by analysing the potential kernel of the auxiliary one-dimensional walk on \(\mathbb {Z}\) defined in Sect. 2.5. The required positivity of the combined weights and the identity stating that these weights sum to one as we sum over all possible jump locations, stated in Lemma 2.3, is the result of an exact (and rather long) computation involving the potential kernel of this auxiliary walk.

This intermediary limit is also the technical reason for the introduction of the modified monomer weight \(z'\), which arises as the limiting weight of the peripheral monomers on \(\mathcal {G}\). Finally, in Sect. 2.6 we use the notion of Schur complement of a matrix as a convenient tool to implement the idea of combining all the walks with given excursions away from (the now infinite) row \(V_{-1}\). All in all, at the end of Sect. 2 a random walk representation of \(K^{-1}\) is developed and Theorem 1.1 is proved.

The goal of Sect. 3 is to prove Theorem 1.2, i.e., to establish the infinite volume limit of the model when a sequence of graphs exhausts \(\mathbb {H}\cap \mathbb {Z}^2\). By Kasteleyn theory, it is enough to show that the inverse Kasteleyn matrix has a limit. This will be shown using the random walk representation established in Sect. 2. Essentially, the main goal is to show that in the infinite volume limit, the difference of the Green function associated to the random walk \(Z_{\text {even}}\) or \(Z_{\text {odd}}\) at two fixed vertices x, y converges to the corresponding difference of the potential kernel of the infinite volume walk. In fact, the very definition of this potential kernel is far from clear and occupies us for a sizeable part of that section. For the usual simple random walk on the square lattice, the definition of the potential kernel (see e.g. [30]) relies on precise estimates for the random walk coming from the exact computation of the Fourier transform of the law of the random walk. Such an exact computation is clearly impossible here, since the effective walks cannot be viewed as a sum of i.i.d. random variables. We overcome this obstacle by developing a general method (which we think may be of independent interest) to define the potential kernel of a recurrent random walk and prove convergence of Green function differences towards it. The main idea is to proceed by coupling. We note that a similar idea has also been recently advocated by Popov (see Section 3.2 of [34]); but the approach in [34] also takes advantage of some properties and symmetries which are not available here. Instead, our starting point is the robust estimate of Nash (see e.g. [3]) characterising the heat kernel decay. With our approach, only a weak (polynomial of any order) bound for the probability of non-coupling suffices to show the existence of the potential kernel. An immediate byproduct of our quantitative approach (which is crucial for us) is the proof of the desired convergence of Green function differences towards the differences of the potential kernel, obtained in Proposition 3.7.

In Sect. 4 we move on to describe the scaling limit (now in the limit of fine mesh size) for the potential kernel of the effective walks \(Z_{\text {even}}\) and \(Z_{\text {odd}}\). A key idea is that when such a walk hits the real line, it will hit it many times and therefore has a probability roughly 1/2 to end up at a vertex with even (resp. odd) horizontal coordinate once it is reasonably far away from the real line. This idea eventually leads us to asymptotic formulae for the potential kernel which depend on the parity of the horizontal coordinate of a point (see Theorem 4.1). To achieve this, we introduce an intermediary process which we call coloured random walk, which is a random walk on (twice) the usual square lattice, but which can also carry a colour (representing, roughly speaking, the actual parity of the effective walk). This colour may change only when the walk hits the real line, and then does so with a fixed probability p. The proof of Theorem 4.1 relies on first comparing our effective walk to the coloured random walk (Proposition 4.3) and then comparing the potential kernel of the coloured walk to half of the potential kernel of the usual simple random walk (Proposition 4.4).

We are now finally in a position to start the proof of Theorem 1.3. From Theorem 4.1 we obtain a scaling limit for the inverse Kasteleyn matrix of the (infinite volume) free boundary dimer model. After recalling Kasteleyn theory in the nonbipartite setting, we then compute the scaling limit of the pointwise moments of height function differences on \(\mathbb {H}\) in Sect. 5.4. The argument is based on Kenyon’s original computation [22] but with substantial modifications coming from the fact that we use Pfaffian formulas instead of the determinantal formulas for bipartite graphs. This leads to different expressions which fortunately simplify asymptotically (for reasons that are related to, but distinct from, those in [22]). The outcome is the formula in Proposition 5.6, which is an asymptotic expression for the limiting joint moments of pointwise height differences, with an explicit quantification of the validity of the limiting formula (needed in the following). To finish the proof, we transfer this result in Sect. 5.5 into one about the scaling limit of the height function as a random distribution. This is essentially obtained by integrating the result of Proposition 5.6, but extra arguments are needed for the case when some of the variables of integration are close to one another.

Remark 1.6

An alternative strategy for establishing the scaling limit of the inverse Kasteleyn matrix, suggested by an anonymous referee, would be the following. It would suffice to concentrate on one of the two types of walks (say \(Z_{\text {even}}\), which is simpler to define than its counterpart \(Z_{\text {odd}}\)) and analyse its potential kernel in the manner indicated above in order to derive the scaling limit of \(K^{-1} (u, \cdot )\) where \(u \in V_{\text {even}}\) is a given even vertex. Once this is done, discrete holomorphicity and antisymmetry can be invoked to obtain asymptotics of \(K^{-1}\) on the remaining vertices, and with the same error bounds (using a discrete version of the Poisson formula for the derivative of harmonic functions).

We have chosen not to implement this strategy for the following reasons. On the one hand, the asymptotic analysis of \(Z_{\text {even}}\) (and in particular its potential kernel) is as difficult as it is for \(Z_{\text {odd}}\). As this is probably the most challenging part of the analysis, there would be no real simplification in considering \(Z_{\text {even}}\) only. On the other hand, the exact random walk representation of \(K^{-1}\) seems interesting in its own right, especially since it shows a connection with reflected random walks even at the discrete level.

We end the introduction by mentioning the following problem. The dimer model on special families of bipartite planar graphs is famously related, through various measure preserving maps, to other classical models of statistical mechanics like spanning trees (see e.g. [28]), the double Ising model [9, 11] or the closely related double random current model [13]. This indicates the following direction of study.

Problem 1.7

Analyse the boundary conditions in these classical lattice models induced by the presence of monomers in their dimer model representations.

2 (Inverse) Kasteleyn matrix

2.1 Dimer representation

In [16] a representation of the free boundary dimer model was given in terms of a dimer model on an augmented (nonbipartite) graph where a circuit of triangles is appended to \(\partial _{\text {free}}\mathcal {G}\). By circuit we mean here that the additional triangles form a cycle. See Fig. 3 for a picture.

Here we state a lemma in a form that conveniently fits into our framework, but the result holds in much greater generality.

Lemma 2.1

([16]) Let \(\mathcal {G}\) be a finite subgraph of the upper-half plane square lattice such that \(\partial _m\mathcal {G}\) is contained in the real line and forms an interval of an even number of vertices. Let \(\mathcal {G}^0\) be \(\mathcal {G}\) with an appended bottom circuit of triangles as in Fig. 3. Assign weight \(z_v\) to each edge of the triangle that is incident to a vertex \(v\in \partial _m \mathcal {G}\). Then, for each monomer-dimer cover in \(\mathcal{M}\mathcal{D}(\mathcal {G})\), there exist exactly two corresponding dimer covers in \(\mathcal {D}(\mathcal {G}^0)\). Moreover, this correspondence is weight-preserving.

In other words, there is a measure preserving two-to-one map between \(\mathcal {D}(\mathcal {G}^0)\) and \(\mathcal{M}\mathcal{D}(\mathcal {G})\).

2.2 Kasteleyn orientation, Kasteleyn matrix and gauge change

A Kasteleyn orientation of a planar graph is an assignment of orientations to its edges such that for each face of the graph, as we traverse the edges surrounding this face one by one in a counterclockwise direction, we encounter an odd number of edges in the opposite direction (see e.g. [40]). For graphs as defined in Sect. 1.2 (but with an extra row of triangles, as explained in the previous section) we make the following choice (see Fig. 3): every vertical line is oriented downwards (including the non-horizontal sides of triangular faces at the bottom). The orientation of horizontal edges alternates: in odd rows (starting at row \(-1\)) edges are oriented from left to right, whereas in even rows (starting at row 0) they are oriented from right to left.
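The defining property of this orientation can be checked mechanically on the square faces. The following is a minimal sketch, not part of the paper: the function names are hypothetical, vertices are encoded as integer pairs (column, row), and only unit square faces between rows \(k\ge 0\) are tested (the triangular faces at the bottom are not covered by this check).

```python
def oriented_from_to(a, b):
    """True if the lattice edge {a, b} is oriented from a to b."""
    (xa, ya), (xb, yb) = a, b
    if xa == xb:
        return yb < ya  # vertical edges point downwards
    if ya % 2 == 1:
        return xb > xa  # odd rows: left to right
    return xb < xa  # even rows: right to left


def opposite_edge_count(x, y):
    """Number of edges met against their orientation when the unit square
    with lower-left corner (x, y) is traversed counterclockwise."""
    corners = [(x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)]
    return sum(
        not oriented_from_to(corners[i], corners[(i + 1) % 4])
        for i in range(4)
    )


# Check every square face in a small window of the upper half-plane lattice.
counts = {
    (x, y): opposite_edge_count(x, y)
    for x in range(-3, 3)
    for y in range(0, 6)
}
all_odd = all(count % 2 == 1 for count in counts.values())
```

A face whose bottom edge lies in an even row has three edges against the traversal, and a face whose bottom edge lies in an odd row has one, so the count is odd in both cases.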

Given a Kasteleyn orientation, the standard Kasteleyn matrix \(\tilde{K}(x,y)\) is taken to be the signed, weighted adjacency matrix: that is, \(\tilde{K} (x,y) = \pm 1_{x \sim y} w_{(x,y)}\) where the sign is \(+\) if and only if the edge is oriented from x to y, and the weight \(w_{(x,y)}\) is 1 for horizontal and vertical edges (including on \(V_{-1}\)), and z for the nonhorizontal sides of triangular faces. However, it will be useful to perform a change of gauge, as follows. For every \(k \ge 0\) even, and for every \(x \in V_k\), we multiply by i the weight of every edge adjacent to x. In particular, every horizontal edge in \(V_k\) with k even receives a factor of i twice coming from both of its endpoints, whereas each vertical edge receives a factor of i exactly once. We define the gauge-changed Kasteleyn matrix K(x, y) to be the resulting matrix. Formally,

For instance, if \(x \in V_{0}\) is not on the boundary, then x has five neighbours. Starting from the vertical edge and moving counterclockwise, the weights K(x, y) are given by \(-i, -1, iz, iz, 1\).
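The gauge change described above can be summarised in one line. The indicator \(\epsilon \) below is notation introduced for this sketch and is not taken from the paper; the formula is simply a transcription of the rule "multiply by i once for each endpoint in an even row \(V_k\), \(k\ge 0\)".

```latex
\[
K(x,y) = i^{\,\epsilon(x)+\epsilon(y)}\,\tilde{K}(x,y),
\qquad
\epsilon(x) = \mathbf{1}\{x \in V_k \text{ for some even } k \ge 0\}.
\]
```

With this convention the five weights in the example can be recovered as follows. The vertical edge is oriented towards \(x\in V_0\), so \(\tilde{K}=-1\) and one factor of i gives \(-i\). The left horizontal edge is oriented away from x, so \(\tilde{K}=1\) and two factors of i give \(-1\). The two triangle edges are oriented away from x with weight z and pick up one factor of i, giving \(iz\). The right horizontal edge is oriented towards x, so \(\tilde{K}=-1\) and two factors of i give 1.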

2.3 Towards the inverse Kasteleyn matrix

Let \(D=K^*K\). In this section we explain the key idea involved in computing \(D^{-1}\), and thus ultimately \(K^{-1}\). The matrix D already played a crucial role in [22], where Kenyon observed that it reduced to the Laplacian on the four types of sublattices of the square grid.

We will follow a similar approach but, as we will see, the immediate interpretation of D as a Laplacian breaks down in the rows \(V_{-1}, V_0\) and \(V_1\). Nevertheless, admitting the formal sum-over-all-paths identity (2.2), we will be able to make a guess on the structure of \(D^{-1}\). This will ultimately lead us to the identification of \(D^{-1}\) as the Green’s function of a certain effective random walk (or, in fact, a pair of effective random walks) which appear in the statement of Theorem 1.1.

Therefore, the purpose of this section is mostly to explain the heuristic principles guiding the proof, and to introduce the relevant objects and the notation. Once this framework is defined we will start with the actual proof in Sect. 2.4. We will complete the rigorous computation of \(D^{-1}\) (and therefore the proof of Theorem 1.1) in Sect. 2.6.

We now fix a finite arbitrary graph \(\mathcal {G}\) that satisfies the conditions of Sect. 1.2. We first compute D explicitly. Note that if \(x \in V_k\) with \(k \ge 2\), the entries of D are computed in a way identical to Kenyon [22]. Namely, the diagonal term is

Moreover, the off-diagonal terms are nonzero if and only if y is at distance two from x, but not diagonally (the diagonal cancellation is a consequence of the Kasteleyn orientation), i.e., if y is a neighbour of x on one of the sublattices \(2\mathbb {Z}\times 2 \mathbb {Z}\), \((2\mathbb {Z}+1) \times (2\mathbb {Z}+ 1)\), \(2\mathbb {Z}\times (2\mathbb {Z}+ 1)\) or \((2\mathbb {Z}+1) \times 2\mathbb {Z}\) in which case one can check as above that \(D(x,y) =-1\). Therefore away from the boundary \(\partial _{\text {free}} \mathcal {G}\), in the same way as in [22], D is the Laplace operator associated to a simple random walk on each of the sublattices, up to a multiplicative constant.
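The bulk entries of D can be verified numerically from the orientation and gauge described in Sect. 2.2. The following is a minimal sketch under stated assumptions: vertices are encoded as integer pairs (column, row), the function names are hypothetical, the gauge is implemented as one factor of i per endpoint in an even row, and only vertices in rows \(k\ge 2\) are tested, so that the boundary rows play no role.

```python
def kasteleyn_entry(a, b):
    """Gauge-changed entry K(a, b) for square lattice vertices in rows >= 0."""
    (xa, ya), (xb, yb) = a, b
    if abs(xa - xb) + abs(ya - yb) != 1:
        return 0  # not nearest neighbours
    if xa == xb:  # vertical edge, oriented downwards
        sign = 1 if yb < ya else -1
    elif ya % 2 == 1:  # odd row, oriented left to right
        sign = 1 if xb > xa else -1
    else:  # even row, oriented right to left
        sign = 1 if xb < xa else -1
    # One factor of i for each endpoint lying in an even row.
    gauge = (1j if ya % 2 == 0 else 1) * (1j if yb % 2 == 0 else 1)
    return sign * gauge


def d_entry(x, y):
    """D(x, y) = sum over neighbours w of x of conj(K(w, x)) * K(w, y)."""
    px, py = x
    neighbours = [(px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)]
    total = 0
    for w in neighbours:
        total += complex(kasteleyn_entry(w, x)).conjugate() * kasteleyn_entry(w, y)
    return total


bulk_checks = {
    "diagonal": d_entry((0, 2), (0, 2)),
    "horizontal_even_row": d_entry((0, 2), (2, 2)),
    "horizontal_odd_row": d_entry((0, 3), (2, 3)),
    "vertical": d_entry((0, 2), (0, 4)),
    "diagonal_neighbour": d_entry((0, 2), (1, 3)),
}
```

In this sketch the diagonal entry equals 4, the entries at distance two along an axis equal \(-1\), and the two contributions to a diagonal neighbour cancel, which matches the description of D as a multiple of the Laplacian on each sublattice.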

Complications for such an interpretation arise when \(x \in V_{-1} \cup V_{0} \cup V_1\). See Fig. 4 for the nonzero entries of D in these cases. Notice that now it is not necessarily true that the diagonal term D(x, x) is (up to a sign) the same as the sum of the off-diagonal entries on the row corresponding to x, or in other words, the transition weights \(d_{x,y}\) in (2.3) do not sum up to 1. Moreover, some of them are negative. While this seems like a very serious obstacle for describing the behaviour of the operator \(D^{-1}\) in the scaling limit, we nevertheless show in the next section how we can recover an effective random walk for which D really is the Laplacian.

More precisely, \(D^{-1}\) can be formally viewed as a sum of weights of paths of all possible lengths, where the weight of a path is the product of (signed) transition weights of individual jumps. That is, formally,

where for a path \(\pi :u \rightarrow v\),

For x in the bulk, \(d_{x,y} =1/4\) for each y which is neighbour of x on the sublattice of twice larger mesh size containing x, and is 0 otherwise, which is the same as the transition probability of a simple random walk on that sublattice.
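The sum-over-paths picture can be tested on a small matrix where convergence is not an issue. The sketch below assumes the signed step weights \(d_{x,y} = -D(x,y)/D(x,x)\) (the form suggested by the step weights quoted later in this section) and the normalisation in which the total weight of paths from u to v is divided by D(v, v); both are assumptions of this illustration. The matrix `toy_d` is a hypothetical strictly diagonally dominant example with one positive off-diagonal pair, so that some step weights are negative.

```python
def mat_mul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def inverse_by_path_sum(d_matrix, n_terms=400):
    """Approximate the inverse of d_matrix by summing signed path weights."""
    n = len(d_matrix)
    # Signed one-step weights d_{x,y} = -D(x,y)/D(x,x) for x != y.
    step = [
        [0.0 if i == j else -d_matrix[i][j] / d_matrix[i][i] for j in range(n)]
        for i in range(n)
    ]
    # `power` holds the total weight of paths of the current length.
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    total = [[0.0] * n for _ in range(n)]
    for _ in range(n_terms):
        for i in range(n):
            for j in range(n):
                total[i][j] += power[i][j]
        power = mat_mul(power, step)
    # Divide the total weight of paths u -> v by D(v, v).
    return [[total[i][j] / d_matrix[j][j] for j in range(n)] for i in range(n)]


toy_d = [[4.0, -1.0, 1.0], [-1.0, 4.0, -1.0], [1.0, -1.0, 3.0]]
toy_inverse = inverse_by_path_sum(toy_d)
product = mat_mul(toy_d, toy_inverse)
max_error = max(
    abs(product[i][j] - (1.0 if i == j else 0.0))
    for i in range(3)
    for j in range(3)
)
```

Here the series converges absolutely because the absolute step weights in each row sum to less than one. In the setting of the paper this is not available near the free boundary, which is why the order of summation has to be specified.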

Let us now point out that the transition weights between an even row and an odd row are always 0. Compared to the odd rows, the construction for even rows is much simpler. As seen in Fig. 4, for \(x\in V_0\), D(x, x) is in fact equal to the sum of |D(x, y)| for all \(y\not =x\). We can therefore view \(|d_{x,y}|\) for \(x\in V_{0}\) as the transition weights of a random walk that is reflected on row \(V_0\) (and can make jumps of size one and two on that row). When we take into account the signs of \(d_{x,y}\) in (2.3), this gives rise to a global sign factor which depends only on u and v and can be seen in the second line of (1.4).

The rest of this section is devoted to the more complicated task of giving a random walk representation to \(D^{-1}\) restricted to the vertices in odd rows \(V_{\text {odd}}\). We now describe the main idea. We will manage to give a meaning to the right hand side of (2.2) by fixing a specific order of summation. We will later on prove that this definition really does give us the inverse of D, and we will also find a random walk interpretation of this definition. We emphasise this because the signs are not constant, and hence the order of summation is a priori relevant to the value of the sum. Essentially we will compute the sum in (2.2) by ignoring the details of what the path does when it visits \(V_{-1}\). That is, we will identify two paths if, for each visit to \(V_{-1}\), they enter \(V_{-1}\) from the same vertex of \(V_{1}\) and leave \(V_{-1}\) to the same vertex of \(V_1\), and we will be able to estimate the contributions to (2.2) coming from each such equivalence class.

An important observation (see Fig. 4) here is that for each \(x\in V_{-1}\), the diagonal term D(x, x) is equal to the sum of |D(x, y)| for all \(y\in V_{-1}\) not equal to x. Note that D(x, y) is non zero for \(y=x\pm 1\) or \(y=x \pm 2\) (understood cyclically). This allows us to express the weight of the paths which stay in \(V_{-1}\) as the weight of a random walk with steps \(\pm 1\) and \(\pm 2\) on \(V_{-1}\). One can therefore associate a Green’s function \(g(\cdot , \cdot )\) (or more formally a potential kernel since the walk is recurrent on the cycle \(V_{-1}\)) with the random walk on \(V_{-1}\) with transition probabilities

For \(x\in V_1=V_1(\mathcal {G}^0)= V_1(\mathcal {G})\), let \(x_-\) and \(x_+\) be the left and right vertex in \(V_{-1}=V_{-1}(\mathcal {G}^0)\) two steps away from x (see Fig. 4). We fix \(u,v \in V_1\) and let \(u_\bullet \in \{ u_-, u_+\}\) and \(v_\bullet \in \{ v_-, v_+\}\). We define \(\mathcal {P}^{1}_{u_\bullet , v_\bullet } \) to be the set of paths from \(u_\bullet \) to \(v_\bullet \) which are contained in \(V_{-1}\). Observe that if \(\pi \in \mathcal {P}^{1}_{u_{\bullet },v_{\bullet }}\), then \(\pi \) makes jumps of size \(\pm 1\) or \(\pm 2\), and that each odd jump contributes a negative weight to (2.2) whereas each even jump contributes a positive weight. Since \(\pi \) goes from \(u_{\bullet }\) to \(v_{\bullet }\) the parity of the number of odd jumps is fixed and depends only on the distance between \(u_{\bullet }\) and \(v_{\bullet }\) in \(V_{-1}\). Hence

where \(d_{x,y}\) is defined in (2.3).

Going further: if \(\mathcal {P}^{1}_{u_{\bullet }, v}\) is the set of paths going from \(u_{\bullet }\) to v and staying in \(V_{-1}\) (except for the last step, which must be from \(v_\pm \) to v), then

where the last term accounts for the weight \(- D(v_\pm , v) / D(v_\pm , v_{\pm })\) of the last step from \(V_{-1}\) to \(V_1\). Finally, let \(\mathcal {P}^1_{u,v}\) be the set of paths from u to v which stay in \(V_{-1}\) except for the first and last step (which necessarily are from \(V_1\) to \(V_{-1}\) and vice versa). Using (2.5) we have

where the additional term \(\frac{z}{4} \) compared to (2.5) accounts for the weight \(- D(u, u_{\pm }) / D(u, u) \) of the first step from \(V_1\) to \(V_{-1}\). The factor \(\frac{1}{4}\) in the definition of \(q_{u,v}\) is included for later convenience. As mentioned, the Green’s function for the walk on \(V_{-1}\) is not directly defined, but its gradient (as in the expression above) will be defined with the use of the potential kernel.

Recall that our intention is to interpret the quantities \(q_{u,v}\) as transition probabilities between vertices in \(V_1\). In particular we would wish \(q_{u,v}\) to be positive and sum up to (something less than) one (since the other three transition weights induced by D from a vertex in the bulk of \(V_1\) to \(V_1\) and \(V_3\) are equal to 3/4). Unfortunately, in the setting described so far, we were unable to do so. However, a nice solution to this problem is the following construction. We note that this construction is the reason for the appearance of the special monomer weight \(z'\) at the monomer-corners in the statement of our results.

2.4 An intermediate limit

To overcome the issue raised above, we introduce an intermediate limiting procedure in our model. To this end, let 2m denote the number of triangles in \(\mathcal {G}^0\), and let \(\mathcal {G}^N\) be the graph where we add a circuit of \(2N \vee 2m\) triangles (instead of 2m triangles as in \(\mathcal {G}^0\)), see Fig. 5 for an example. We assign weight 1 to every edge except if it belongs to a triangle and is not horizontal, in which case we assign weight z. Since we assumed that \(\mathcal {G}\) has a dimer cover, it is easy to see that \(\mathcal {G}^N\) also has at least one dimer cover. We can hence talk about the dimer model on \(\mathcal {G}^N\) with the specified weights.

Fig. 5 A graph \(\mathcal {G}\) (drawn with round vertices), and its extension \(\mathcal {G}^8\) (there is a circuit of 16 additional triangles appended to the bottom of \(\mathcal {G}\)). The two red edges and vertices in the figure denote a single edge and vertex in \(\mathcal {G}^8\). The weights of transitions denoted by arrows are \(D(\cdot ,\cdot )=D_N(\cdot ,\cdot )=-1\). The diagonal terms are \(D(z_1,z_1)=3\), \(D(z_2,z_2)=4\). The black vertex \(z_1\) has Neumann boundary conditions for the associated walk, since the total weight of outgoing transitions is also 3. The white vertex \(z_2\) has Dirichlet boundary conditions since the total outgoing weight is \(2<4\) (color figure online)

Let us call \(\tilde{\mathcal {G}}^N\) the graph \(\mathcal {G}^N\) where the bottom row \(V_{-1}(\mathcal {G}^N)\) is removed. In other words, \(\tilde{\mathcal {G}}^N\) is \(\mathcal {G}\) with \(\partial _{\text {free}} \mathcal {G}\) replaced by the N-cycle. Using Lemma 2.1, we can rephrase the dimer model on \(\mathcal {G}^N\) as a free boundary dimer model on \(\tilde{\mathcal {G}}^N\). We claim that the monomer-dimer configuration on \(\tilde{\mathcal {G}}^N\) restricted to \(\mathcal {G}\), in the limit \(N\rightarrow \infty \) has the law of the free boundary dimer model with weight \(z'\) from (1.3) at the monomer-corners. To see this, let \(\mathcal {Z}_k\) be the partition function of the free boundary dimer model on a segment of \(\mathbb {Z}\) of length k with monomer weight z and edge weight 1 (monomers are allowed anywhere on the segment). Moreover, let L be the number of vertices in the row \(V_0(\mathcal {G}^N)\) that are not in \(\mathcal {G}\) (so \(L=(N-m)_+\)). Then, it is not difficult to see that the effective weight for the presence of two (resp. one and zero) corner-monomers in \(\mathcal {G}\) is \(\mathcal {Z}_{L+2}\) (resp. \(\mathcal {Z}_{L+1}\) and \(\mathcal {Z}_{L}\)). The claim is therefore a consequence of the following elementary lemma.

Lemma 2.2

As \(k \rightarrow \infty \),

\[ \frac{\mathcal {Z}_{k+1}}{\mathcal {Z}_k} \rightarrow \frac{z}{2} + \sqrt{1+\frac{z^2}{4}}. \]

Proof

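It is enough to solve the recursion \(\mathcal {Z}_{k+1} = z \mathcal {Z}_k +\mathcal {Z}_{k-1}\) to get that

\[ \mathcal {Z}_k = c_1 \Big (\frac{z}{2}+\beta \Big )^k + c_2 \Big (\frac{z}{2}-\beta \Big )^k \]

for some constants \(c_1>0\) and \(c_2\) depending only on z, where \(\beta = \sqrt{1+\frac{z^2}{4}}\). Since \(|\frac{z}{2}-\beta | < \frac{z}{2}+\beta \), the first term dominates as \(k\rightarrow \infty \). \(\square \)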
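The recursion used in the proof is easy to explore numerically. The following is a minimal sketch, not part of the original argument: the function names are hypothetical, and the initial conditions \(\mathcal {Z}_0=1\) (empty segment) and \(\mathcal {Z}_1=z\) (a single vertex is necessarily a monomer) are our assumption. It compares the ratio of consecutive partition functions with the larger root \(z/2+\beta \) of \(x^2 = zx+1\).

```python
import math


def partition_functions(z, k_max):
    """Return [Z_0, ..., Z_{k_max}] for the monomer-dimer model on a segment.

    Assumed initial conditions: Z_0 = 1 (empty segment) and Z_1 = z
    (a single vertex must be a monomer).
    """
    Z = [1.0, z]
    for _ in range(k_max - 1):
        # The last vertex is either a monomer (weight z) or matched
        # to its neighbour by an edge of weight 1.
        Z.append(z * Z[-1] + Z[-2])
    return Z[: k_max + 1]


def limiting_ratio(z):
    """Larger root z/2 + beta of x^2 = z*x + 1, with beta = sqrt(1 + z^2/4)."""
    return z / 2 + math.sqrt(1 + z * z / 4)


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.0):
        Z = partition_functions(z, 40)
        print(z, Z[40] / Z[39], limiting_ratio(z))
```

The convergence is geometric, because the second root \(z/2-\beta \) has strictly smaller modulus than the first.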

Let \(K_N\) be the Kasteleyn matrix of \(\mathcal {G}^N\) with Kasteleyn weights as discussed above, see e.g. Fig. 5, and let \(D_N=(K_N)^*K_N\). The statement above and Kasteleyn theory imply that the inverse Kasteleyn matrix \(K_N^{-1}\) restricted to \(\mathcal {G}^0\) converges as \(N\rightarrow \infty \) to the inverse Kasteleyn matrix \((K')^{-1}\) for the free boundary dimer model on \(\mathcal {G}^0\) with monomer weights \(z'\) at the monomer-corners.

2.5 An auxiliary walk on \(\mathbb {Z}\)

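It will be convenient to consider a random walk on \(V_{-1}(\mathbb Z^2) \simeq \mathbb {Z}\) with transition probabilities given by

\[ p_{x,x\pm 1} = \frac{1}{2}-p = \frac{z^2}{2+2z^2}, \qquad p_{x,x\pm 2} = p = \frac{1}{2+2z^2}. \tag{2.7} \]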

In other words, this is the infinite volume version of the walk from (2.4). Now, while the Green’s function of this walk is infinite since the walk is recurrent, its differences make sense in the form of the potential kernel (see [30], Section 4.4.3) given by

\[ \alpha _k = \lim _{n\rightarrow \infty } \big (p_n(0)-p_n(k)\big ), \tag{2.8} \]

where \(p_n(k)= \sum _{i=0}^n \mathbb {P}_0( X_i = k )\) with X being the random walk with jump distribution (2.7). Using the potential kernel, for \(u,v \in V_1(\mathbb {Z}^2)\simeq \mathbb {Z}\), we can now define the infinite volume version of the transition weight \(q_{u,v}\) from (2.6) by

where \(k = \Re (v-u)\). Note that the sign is opposite to that in (2.6). This is due to the \(-p_n(k)\) term in the definition of the potential kernel.
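To get a feeling for the potential kernel, one can approximate it directly from its definition as a limit of \(p_n(0)-p_n(k)\). The sketch below is only illustrative: the function names are hypothetical, the limit is truncated at a finite n (so the output carries an error of order \(n^{-1/2}\)), and the jump law is taken to be \(z^2/(2+2z^2)\) for steps \(\pm 1\) and \(1/(2+2z^2)\) for steps \(\pm 2\), as stated later for the walk on the N-cycle.

```python
def step_distribution(z):
    """Jump law of the walk: steps +-2 have probability p, steps +-1 have 1/2 - p."""
    p = 1.0 / (2.0 + 2.0 * z * z)
    return {1: 0.5 - p, -1: 0.5 - p, 2: p, -2: p}


def potential_kernel(z, k, n=300):
    """Approximate alpha_k by p_n(0) - p_n(k), i.e. truncate the limit at n steps."""
    steps = step_distribution(z)
    width = 2 * n  # after n steps the walk is still inside [-2n, 2n]
    if abs(k) > width:
        raise ValueError("k is outside the range reachable in n steps")
    dist = [0.0] * (2 * width + 1)  # dist[x + width] = P_0(X_i = x)
    dist[width] = 1.0
    total = dist[width] - dist[width + k]  # contribution of time i = 0
    for _ in range(n):
        new = [0.0] * len(dist)
        for idx, mass in enumerate(dist):
            if mass == 0.0:
                continue
            for jump, prob in steps.items():
                new[idx + jump] += mass * prob
        dist = new
        total += dist[width] - dist[width + k]
    return total


if __name__ == "__main__":
    for k in range(4):
        print(k, potential_kernel(1.0, k))
```

Increasing n improves the approximation slowly; an exact formula, when available, is preferable for quantitative work.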

The next result is one of the crucial observations in this work.

Lemma 2.3

(Effective transition probabilities) For all \(z>0\), and any pair of vertices \(u,v \in V_1(\mathbb {Z}^2)\), we have

Moreover, \(q^{\infty }_{u,v} \rightarrow 0\) exponentially fast as \(|u-v|\rightarrow \infty \).

Before we give the proof note that neither of these two facts is at all clear from the definitions of \(q^{\infty }_{u,v}\). Together they imply that we can think of \(q^\infty _{u,v}\) as the step distribution of some effective random walk on \(V_1\). Later in Lemma 2.6, we will prove that \(q^{\infty }_{u,v}\) is the limit of \(q_{u,v}\) from (2.6) on \(\mathcal {G}^N\) when \(N\rightarrow \infty \).

Proof of Lemma 2.3

The proof is based on an exact formula for the potential kernel \(\alpha \) of the walk on \(\mathbb {Z}\) defined by (2.7). To start with, by Theorem 4.4.8 from [30] we know that

\[ \alpha _k = \frac{|k|}{\sigma ^2} + A + O(e^{-\beta |k|}) \]

for some constants \(\beta >0\) and \(A\in \mathbb {R}\), and where \(\sigma ^2=1+6p\) is the variance of the walk with p as in (2.7). Moreover, \(\alpha \) is harmonic (except at \(k=0\)) with respect to the Laplacian of the walk (2.7). The recursion for the sequence \(\alpha _k\) has the following characteristic equation

\[ p x^4 + \Big (\frac{1}{2}-p\Big ) x^3 - x^2 + \Big (\frac{1}{2}-p\Big ) x + p = 0. \]

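This equation has one double root at 1, and two other roots

\[ \gamma _1 = \frac{-(1+2p)+\sqrt{(1-2p)(1+6p)}}{4p}, \qquad \gamma _2 = \frac{-(1+2p)-\sqrt{(1-2p)(1+6p)}}{4p}. \]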

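Note that since \(z>0\), we have \(p<1/2\), so the two roots above are distinct. This implies that the \(O(e^{-\beta | k|})\) term is of the form \( B_1\gamma _1^{|k|} + B_2\gamma _2^{|k|}\) for some constants \(B_1\) and \(B_2\). However, we have \(|\gamma _1|<1\) and \(|\gamma _2|>1\), so we must have \(B_2=0\). This implies that

\[ \alpha _k = \frac{|k|}{\sigma ^2} + A + B\gamma ^{|k|} \]

for some constants A and B, and

\[ \gamma = \gamma _1 = \frac{-(1+2p)+\sqrt{(1-2p)(1+6p)}}{4p}. \tag{2.10} \]

Using that \(\alpha _0=0\) by definition, we get \(A=-B\) and hence

\[ \alpha _k = \frac{|k|}{\sigma ^2} + B\big (\gamma ^{|k|}-1\big ). \tag{2.11} \]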
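The root structure used in this step can be sanity-checked numerically. The sketch below assumes that the characteristic polynomial is \(p x^4 + (1/2-p)x^3 - x^2 + (1/2-p)x + p\) (obtained by inserting \(x^k\) into the harmonicity relation for a walk with jumps \(\pm 1\) of probability \(1/2-p\) and \(\pm 2\) of probability p, with \(p = 1/(2+2z^2)\)); the function names are hypothetical.

```python
import math


def jump_prob(z):
    """Probability p of each jump of size 2."""
    return 1.0 / (2.0 + 2.0 * z * z)


def char_poly(x, p):
    """Characteristic polynomial of the harmonicity recursion, evaluated at x."""
    return p * x**4 + (0.5 - p) * x**3 - x**2 + (0.5 - p) * x + p


def nontrivial_roots(p):
    """Roots of p x^2 + (1/2 + p) x + p, the factor left after dividing by (x - 1)^2."""
    disc = math.sqrt((1 - 2 * p) * (1 + 6 * p))
    gamma1 = (-(1 + 2 * p) + disc) / (4 * p)
    gamma2 = (-(1 + 2 * p) - disc) / (4 * p)
    return gamma1, gamma2


if __name__ == "__main__":
    for z in (0.3, 1.0, 3.0):
        p = jump_prob(z)
        g1, g2 = nontrivial_roots(p)
        print(z, g1, g2, char_poly(g1, p), char_poly(g2, p))
```

Both nontrivial roots are negative and their product is 1, so exactly one of them lies inside the unit disc, which is why only one exponential term survives.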

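We still need to compute B which is equivalent to computing \(\alpha _1\). Let X be the walk with transition probabilities (2.7). Let \(\tau =\inf \{n>0: X_n >0 \}\), and

\[ q = \mathbb {P}_0(X_\tau = 1). \]

Then, by considering the four possible first steps (\(+1,-1,+2,-2\)) of X and using translation invariance and the strong Markov property, we get that

\[ q = \Big (\frac{1}{2}-p\Big ) + \Big (\frac{1}{2}-p\Big )(q^2+1-q) + p\cdot 0 + p\big (q(q^2+1-q)+(1-q)q\big ), \]

which simplifies (dividing out the factor \(q-2\), which is nonzero since \(q\le 1\)) to

\[ p q^2 + \Big (\frac{1}{2}-p\Big ) q - \Big (\frac{1}{2}-p\Big ) = 0. \tag{2.12} \]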

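One can check that \(q=\gamma +1\). Moreover, using the symmetry of the jumps of X and the Markov property for the walk, we get the equation (again considering the four possible first steps in the same order)

\[ \alpha _1 = 1 - \Big (\frac{1}{2}-p\Big )\alpha _1 + \Big (\frac{1}{2}-p\Big )(2q-1)\alpha _1 + p(1-2q)\alpha _1 + p(2q^2-2q+1)\alpha _1. \tag{2.13} \]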

To justify (2.13), one starts from the definition of \(\alpha _1\) in (2.8) as the limit as \(N \rightarrow \infty \) of the expected difference of number of visits by time N to the sites 0 and 1. We first apply the simple Markov property at the first step, and depending on the outcome of the first step, apply the strong Markov property at the next time \(\tau \) (after time 1) that the walk returns to 0 or 1, taking care of the contribution coming from the event \(\{\tau >N\}\). We then let \(N \rightarrow \infty \). There is no problem in doing so, first because the sequence \(\{\alpha _n\}_{n \ge 0}\) is bounded, which lets us use the dominated convergence theorem, and second because the contribution coming from the event \(\{\tau >N\}\) to the difference between the number of visits at 0 and 1 by time N is bounded by 1. Details are left to the reader.
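The first-step analysis above can be cross-checked numerically. The sketch below rests on our own reading of the argument, which should be treated as an assumption: q is taken to be \(\mathbb {P}_0(X_\tau =1)\), the root in (0, 1) of \(pq^2+(1/2-p)q-(1/2-p)=0\), and \(\alpha _1\) is taken to solve \(\alpha _1 = 1 + c\,\alpha _1\) with \(c = -(1/2-p)+(1/2-p)(2q-1)+p(1-2q)+p(2q^2-2q+1)\). As an independent comparison we use the standard Fourier representation of the potential kernel, which for this walk gives \(\alpha _1 = \frac{1}{2\pi }\int _{-\pi }^{\pi } \frac{d\theta }{1+2p+4p\cos \theta }\). All function names are hypothetical.

```python
import math


def gamma1(p):
    """Root of p x^2 + (1/2 + p) x + p lying in (-1, 0)."""
    return (-(1 + 2 * p) + math.sqrt((1 - 2 * p) * (1 + 6 * p))) / (4 * p)


def first_step_quantities(z):
    """Return (p, q, alpha_1) computed from the first-step equations."""
    p = 1.0 / (2.0 + 2.0 * z * z)
    # q is the root in (0, 1) of p q^2 + (1/2 - p) q - (1/2 - p) = 0.
    a, b, c = p, 0.5 - p, -(0.5 - p)
    q = (-b + math.sqrt(b * b - 4 * a * c)) / (2 * a)
    # Coefficient of alpha_1 collected over the first steps +1, -1, +2, -2.
    coeff = (
        -(0.5 - p)
        + (0.5 - p) * (2 * q - 1)
        + p * (1 - 2 * q)
        + p * (2 * q * q - 2 * q + 1)
    )
    alpha1 = 1.0 / (1.0 - coeff)
    return p, q, alpha1


def alpha1_fourier(p, m=2000):
    """Midpoint rule for (1/2pi) * integral over [-pi, pi] of 1/(1 + 2p + 4p cos t)."""
    h = 2 * math.pi / m
    return sum(
        1.0 / (1 + 2 * p + 4 * p * math.cos(-math.pi + (j + 0.5) * h))
        for j in range(m)
    ) / m


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.0):
        p, q, a1 = first_step_quantities(z)
        print(z, q, gamma1(p) + 1, a1, alpha1_fourier(p))
```

Agreement of the two values of \(\alpha _1\) is a useful consistency check on the bookkeeping of the four first steps, although it does not replace the justification given in the text.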

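Together with (2.12), (2.13) gives

\[ \alpha _1 = \frac{1}{\sqrt{(1-2p)(1+6p)}}, \tag{2.14} \]

and hence from (2.11) we obtain

\[ B = \frac{\alpha _1 - 1/\sigma ^2}{\gamma -1}. \tag{2.15} \]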
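Once B is known, the resulting closed form for the potential kernel can be tested against its defining properties. The sketch below assumes the form \(\alpha _k = |k|/\sigma ^2 + B(\gamma ^{|k|}-1)\) with \(\sigma ^2 = 1+6p\), \(p = 1/(2+2z^2)\), \(\gamma \) the root of \(px^2+(1/2+p)x+p\) in \((-1,0)\), and \(B = 2\gamma /(\sigma ^2(1-\gamma ^2))\); the expression for B is our own computation and the function names are hypothetical. It checks that \(\alpha \) is harmonic for the walk away from 0, and that the walk's averaging operator applied to \(\alpha \) at 0 gives 1, as follows from the definition of the potential kernel.

```python
import math


def kernel_parameters(z):
    """Return (p, sigma^2, gamma, B) for monomer weight z."""
    p = 1.0 / (2.0 + 2.0 * z * z)
    sigma2 = 1.0 + 6.0 * p
    gamma = (-(1 + 2 * p) + math.sqrt((1 - 2 * p) * (1 + 6 * p))) / (4 * p)
    B = 2 * gamma / (sigma2 * (1 - gamma * gamma))
    return p, sigma2, gamma, B


def alpha(k, z):
    """Closed-form candidate for the potential kernel at integer k."""
    _, sigma2, gamma, B = kernel_parameters(z)
    k = abs(k)
    return k / sigma2 + B * (gamma**k - 1)


def averaged_minus_value(k, z):
    """sum_j p_j alpha(k + j) - alpha(k): expected to be 1 at k = 0 and 0 otherwise."""
    p = kernel_parameters(z)[0]
    avg = (0.5 - p) * (alpha(k + 1, z) + alpha(k - 1, z)) + p * (
        alpha(k + 2, z) + alpha(k - 2, z)
    )
    return avg - alpha(k, z)


if __name__ == "__main__":
    for k in range(-3, 4):
        print(k, alpha(k, 1.0), averaged_minus_value(k, 1.0))
```

Note that the check at \(k=\pm 1\) is not automatic: it involves values of \(\alpha \) on both sides of the origin and is exactly what pins down the constant B.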

We can now define

where \(\Delta \alpha _k = 2\alpha _k - \alpha _{k+1} - \alpha _{k-1} \) is the Laplacian of simple random walk. Then \(q_k=q^\infty _{u,v}\) whenever \(|u-v|=k\). Using (2.11), we have

and hence the total transition weight is

Using (2.10) and (2.15), it can be checked that the last expression is equal to one for all \(0<p<1/2\) (equivalently all \(z>0\)). Exponential decay of \(q_k\) is clear from (2.16).

2.6 Random walk representation of \(D^{-1}\)

Here we finally establish a rigorous version of (2.2) using the ingredients from the previous sections. Recall that \(K_N\) is the Kasteleyn matrix of the graph \(\mathcal {G}^N\) and \(D_N = (K_N)^* K_N \). We will be mostly interested in the restriction of \(D_N^{-1}\) to the vertices of \(\mathcal {G}\). Observe that \(D_N\) can be written as a block-diagonal matrix if we consider vertices respectively in the odd or even rows (this is one advantage of taking periodic boundary conditions in the bottom rows). Hence to invert \(D_N\) it will suffice to invert each of these blocks separately. We call \(D_N^{\text {odd}}\) (resp. \(D_N^{\text {even}}\)) the matrix \(D_N\) restricted to \(V_{\text {odd}}(\mathcal {G}^N) \cup V_{-1}(\mathcal {G}^N)\) (resp. \(V_{\text {even}}(\mathcal {G}^N)\)).

We first focus on the odd case (the even case is much easier as explained before), and for now we will write \(D_N\) for \(D_N^{\text {odd}}\). The key idea will be to use the Schur complement formula. To be more precise, we observe that \(D_N\) has the block structure

\[ D_N = \begin{pmatrix} \mathcal A & \mathcal B \\ \mathcal B^T & \mathcal C \end{pmatrix}, \]

where \(\mathcal A\) is indexed by the special row \(V_{-1}\), and \(\mathcal C\) is indexed by all the other rows \(V_{\text {odd}}\). Hence \(\mathcal B\) and \(\mathcal B^T\) can be thought of as “transition matrices” between \(V_{-1}\) and \(V_{\text {odd}}\). Note that these matrices depend on N but we don’t write this explicitly to lighten the notation. We define the Schur complement of \(\mathcal A\) to be the matrix

\[ D_N/\mathcal A := \mathcal C - \mathcal B^T \mathcal A^{-1} \mathcal B. \tag{2.18} \]

With this definition, the restriction of \(D_N^{-1}\) to \(V_{\text {odd}}\) is simply given by

\[ D_N^{-1}\big |_{V_{\text {odd}}} = (D_N/\mathcal A)^{-1}. \tag{2.19} \]

One issue is that, as we will see, \(\mathcal A\) is not invertible, so the Schur complement formula cannot be applied directly. Instead, we will consider a limiting procedure: we will introduce a modification \(D_{N, \varepsilon }\) of \(D_N\). While \(D_{N, \varepsilon }\) does not have any direct dimer interpretation, we will be able to compute its inverse \(D_{N, \varepsilon }^{-1}\) using the Schur complement formula, and taking a limit as \(\varepsilon \rightarrow 0\), deduce an expression for \(D_N^{-1}\). (The key observation is that, while \(\mathcal A^{-1}\) is not well-defined, \(\mathcal B^T \mathcal A^{-1} \mathcal B\) is.)
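The regularisation idea can be illustrated on a toy example. The sketch below is not derived from any Kasteleyn matrix: the blocks A, B, C are an arbitrary small symmetric example, chosen only so that A is singular while the full matrix is invertible, and the helper names are hypothetical. It adds \(\varepsilon \) to the diagonal of A, inverts the Schur complement \(C - B^T A_\varepsilon ^{-1} B\), and compares the result with the corresponding block of the directly computed inverse.

```python
def invert(M):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [
        [float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(M)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        piv = aug[col][col]
        aug[col] = [x / piv for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def matmul(X, Y):
    return [
        [sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def transpose(X):
    return [list(row) for row in zip(*X)]


# Toy blocks (assumption, for illustration only): A is singular.
A = [[1, -1], [-1, 1]]
B = [[1, 0], [0, 1]]
C = [[2, 0], [0, 3]]


def schur_inverse(eps):
    """Inverse of C - B^T (A + eps I)^{-1} B."""
    A_eps = [[A[i][j] + (eps if i == j else 0.0) for j in range(2)] for i in range(2)]
    correction = matmul(matmul(transpose(B), invert(A_eps)), B)
    S = [[C[i][j] - correction[i][j] for j in range(2)] for i in range(2)]
    return invert(S)


def direct_restricted_inverse():
    """Lower-right 2x2 block of the inverse of the full 4x4 matrix."""
    Bt = transpose(B)
    D = [A[0] + B[0], A[1] + B[1], Bt[0] + C[0], Bt[1] + C[1]]
    D_inv = invert(D)
    return [row[2:] for row in D_inv[2:]]


if __name__ == "__main__":
    print(direct_restricted_inverse())
    for eps in (1e-2, 1e-4, 1e-6):
        print(eps, schur_inverse(eps))
```

In this toy example the Schur complement itself diverges as \(\varepsilon \rightarrow 0\) while its inverse converges; in the setting of the text the situation is better, since \(\mathcal B^T \mathcal A^{-1} \mathcal B\) is well-defined in the limit.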

We now outline how we proceed.

-

We introduce the matrix \(D_{N, \varepsilon }\), which is a version of \(D_N\) but with an additional small killing probability at each vertex of \(V_{-1}\).

-

We then write the inverse \(\mathcal A_{\varepsilon }^{-1}\) appearing in the block decomposition of \(D_{N, \varepsilon }\) in terms of the potential kernel (i.e., difference of Green’s functions) for the random walk on \(V_{-1}(\mathcal {G}^N)\) with transition probabilities as in (2.4) and with killing.

-

As \(\varepsilon \rightarrow 0\), and N is fixed, we show that the potential kernel of the periodic, killed walk converges to the potential kernel of the periodic eternal (unkilled) walk, and give a formula for the latter. From this formula it also follows that as the period size N diverges to \(\infty \), the potential kernel of the periodic eternal walk converges to the potential kernel of the infinite walk.

-

This gives us a formula for the Schur complement \(D_N/\mathcal A\) via (2.18). We then use that for N sufficiently large, this Schur complement can be viewed as a (genuine) Laplacian for a random walk. The proof of this statement is postponed until Sect. 2.7.

-

As a consequence of (2.19), this gives a formula for the inverse of \(D_N\) as a Green’s function of a genuine random walk.

-

Finally, as the number N of triangles appended to \(\mathcal {G}^0\) tends to infinity, on the one hand, the above analysis shows that the inverse Kasteleyn matrix (restricted to \(V_{\text {odd}}\)) can be written in terms of the potential kernel of a random walk with jumps along the boundary. On the other hand as mentioned before, the free boundary dimer model becomes equivalent to the same model on \(\mathcal {G}^0\) with modified monomer weights \(z' \) as in (1.3) at the monomer-corners.

-

The results of this section are summarised below as Corollary 2.8.

Let

\[ D_{N,\varepsilon }(u,v) = D_N(u,v) + \varepsilon \, \textbf{1}_{\{u=v\in V_{-1}\}}. \]

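In words, we have added \(\varepsilon \) to the diagonal values on \(V_{-1}\), which corresponds to killing the walk with probability \(p_\varepsilon = \varepsilon /D_{N, \varepsilon }(z,z) = O(\varepsilon )\) at each step in \(V_{-1}\). The matrix \(D_{N, \varepsilon }\) can still be written in block form as

\[ D_{N,\varepsilon } = \begin{pmatrix} \mathcal A_\varepsilon & \mathcal B \\ \mathcal B^T & \mathcal C \end{pmatrix}, \qquad \mathcal A_\varepsilon = \mathcal A + \varepsilon I. \]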

We now start with the computation of \(\mathcal A^{-1}_{{\varepsilon }}\). To this end let

be the Green’s function of the random walk on \(V_{-1}(\mathcal {G}^N)\) with transition probabilities \(p^{N, \varepsilon }_{x,y}\) defined for \(\mathcal {G}^N\) as in (2.4) with killing probability \(p_\varepsilon \); that is,

\[ p^{N,\varepsilon }_{x,y} = (1-p_\varepsilon )\, p^N_{x,y}, \]

with \(p^N_{x,y}\) as in (2.7) (except here x, y are points on the N-cycle \(\mathbb {Z}/ (N \mathbb {Z})\), identified with \(V_{-1} ( \mathcal {G}^N)\): that is, \(p_{x,y}^N\) is translation invariant on the cycle, \(p^N_{x, x\pm 1} = z^2 / (2+ 2z^2)\), \(p^N_{x, x\pm 2} = 1/ (2+ 2z^2)\), and the addition is mod N). Note that \(g^{N, \varepsilon }(u,v)\) is well defined because of the killing.

Lemma 2.4

Let \(u,v \in V_{-1} = V_{-1} (\mathcal {G}^N)\). Then

Proof

This follows from the fact that \(|\mathcal A_\varepsilon |\) is the Laplacian for the random walk described above, and moreover (as mentioned before) the sign of the transition weights induced by \(\mathcal A_\varepsilon \) is negative if the step is of size \(\pm 1\) and positive otherwise (step size \(\pm 2\)). This follows from the definition of \(D_N\) and the Kasteleyn matrix.

We now explain how this yields an interpretation for the Schur complement \(D_{N,\varepsilon }/\mathcal A_\varepsilon \) as a (genuine) Laplacian for a random walk in the bulk \(V_{\text {odd}}(\mathcal {G})\) with jumps along the boundary \(V_1(\mathcal {G})\). For \(u, v \in V_1 = V_1(\mathcal {G}^N) = V_1(\mathcal {G})\), we define

Recalling that \(D_N(v,v) = \mathcal A(v,v) = 2 + 2z^2\) for \(v \in V_{-1}(\mathcal {G}^0)\) and \(N\ge 1\), a straightforward computation using Lemma 2.4 shows that

where again \(u_\pm , v_\pm \) are the left and right vertices in \(V_{-1}\) at distance two from u and v respectively.

We now show that as \(\varepsilon \rightarrow 0\), the differences of Green’s functions on the right-hand side converge to a quantity, which could be viewed as the potential kernel of the walk on the N-cycle with transition probabilities \(p^N_{x,y}\) from (2.4) (i.e., without killing). We note that the existence of this potential kernel is not obvious, as the walk is recurrent on a finite graph (so we cannot directly apply the results of Lawler and Limic [30]). Nevertheless, since the transition probabilities converge exponentially fast to their equilibrium distribution on this finite graph, it is also not hard to see that the series defining this potential kernel converges. However, we will not make any use of this definition, and will not verify the equality of this series with our limit, although this would not be very hard to establish.
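The convergence of differences of killed Green’s functions on the cycle can be observed numerically using the Fourier basis of \(\mathbb {Z}/(N\mathbb {Z})\). The sketch below is illustrative only and makes several assumptions: the Green’s function is normalised as the expected number of visits before killing, the killing probability is \(p_\varepsilon = \varepsilon /(2+2z^2+\varepsilon )\), the quantity computed is the simple difference \(g(0,0)-g(0,k)\) rather than the specific combination \(F^N\) of the lemma below, and the function names are hypothetical.

```python
import math


def characteristic(theta, p):
    """Fourier transform of the jump law: (1 - 2p) cos(theta) + 2p cos(2 theta)."""
    return (1 - 2 * p) * math.cos(theta) + 2 * p * math.cos(2 * theta)


def killed_green_difference(N, k, z, eps):
    """g(0,0) - g(0,k) for the walk on Z/NZ killed with probability p_eps per step."""
    p = 1.0 / (2.0 + 2.0 * z * z)
    p_eps = eps / (2.0 + 2.0 * z * z + eps)
    total = 0.0
    for j in range(N):
        theta = 2 * math.pi * j / N
        total += (1 - math.cos(k * theta)) / (1 - (1 - p_eps) * characteristic(theta, p))
    return total / N


def cycle_potential_kernel(N, k, z):
    """Formal eps -> 0 limit: the zero mode j = 0 does not contribute to the difference."""
    p = 1.0 / (2.0 + 2.0 * z * z)
    total = 0.0
    for j in range(1, N):
        theta = 2 * math.pi * j / N
        total += (1 - math.cos(k * theta)) / (1 - characteristic(theta, p))
    return total / N


if __name__ == "__main__":
    z, k = 1.0, 1
    for eps in (1e-2, 1e-4, 1e-8):
        print(eps, killed_green_difference(200, k, z, eps))
    for N in (50, 200, 800):
        print(N, cycle_potential_kernel(N, k, z))
```

The denominators in the second function never vanish for \(j\ne 0\), because \(1-\phi (\theta ) = (1-\cos \theta )(1+2p+4p\cos \theta )\) and the second factor is at least \(1-2p>0\). As N grows, the Riemann sums approach the corresponding integral over \([-\pi ,\pi ]\), which is the Fourier representation of the potential kernel of the walk on \(\mathbb {Z}\).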

Lemma 2.5

Fix \(u_\pm ,v_\pm \in V_{-1} ( \mathcal {G}^N)\). Then, as \(\varepsilon \rightarrow 0\),

where, by definition:

\(\alpha (x,o) = \alpha _{x-o}\) is the potential kernel defined in (2.8), and we identify each vertex \(u_\pm , v_\pm \) with a position in \(\mathbb {Z}\) (i.e., with its real part).

The proof of this lemma (including the finiteness of \(F^N\)) will be given in Sect. 2.7.

From the above lemma and (2.22) it follows directly that \(q^{N, \varepsilon }_{u, v}\) has a limit as \(\varepsilon \rightarrow 0\), which we call \(q^N_{u,v}\). The next lemma allows us to take a second limit, now as \(N \rightarrow \infty \): in fact, the results imply that for N sufficiently large, \(q^N_{u,v}\) can really be viewed as transition probabilities, in the sense that they are positive and sum up to a quantity which is less than one. Recall the definition of \(q^\infty _{u,v}\) from (2.9).

Lemma 2.6