Abstract

We prove that the wired uniform spanning forest exhibits mean-field behaviour on a very large class of graphs, including every transitive graph of at least quintic volume growth and every bounded degree nonamenable graph. Several of our results are new even in the case of \(\mathbb {Z}^d\), \(d\ge 5\). In particular, we prove that every tree in the forest has spectral dimension 4/3 and walk dimension 3 almost surely, and that the critical exponents governing the intrinsic diameter and volume of the past of a vertex in the forest are 1 and 1/2 respectively. (The past of a vertex in the uniform spanning forest is the union of the vertex and the finite components that are disconnected from infinity when that vertex is deleted from the forest.) We obtain as a corollary that the critical exponent governing the extrinsic diameter of the past is 2 on any transitive graph of at least five dimensional polynomial growth, and is 1 on any bounded degree nonamenable graph. We deduce that the critical exponents describing the diameter and total number of topplings in an avalanche in the Abelian sandpile model are 2 and 1/2 respectively for any transitive graph with polynomial growth of dimension at least five, and are 1 and 1/2 respectively for any bounded degree nonamenable graph. In the case of \(\mathbb {Z}^d\), \(d\ge 5\), some of our results regarding critical exponents recover earlier results of Bhupatiraju et al. (Electron J Probab 22(85):51, 2017). In this case, we improve upon their results by showing that the tail probabilities in question are described by the appropriate power laws to within constant-order multiplicative errors, rather than the polylogarithmic-order multiplicative errors present in that work.


1 Introduction

The uniform spanning forests (USFs) of an infinite graph G are defined as weak limits of the uniform spanning trees of finite subgraphs of G. These limits can be taken with respect to two extremal boundary conditions, yielding the free uniform spanning forest (FUSF) and wired uniform spanning forest (WUSF). For transitive amenable graphs such as the hypercubic lattice \(\mathbb {Z}^d\), the free and wired forests coincide and we speak simply of the USF. In this paper we shall be concerned exclusively with the wired forest. Uniform spanning forests have played a central role in the development of probability theory over the last twenty years, and are closely related to several other topics in probability and statistical mechanics including electrical networks [20, 23, 44], loop-erased random walk [20, 49, 71], the random cluster model [25, 28], domino tiling [23, 42], random interlacements [34, 67], conformally invariant scaling limits [52, 59, 63], and the Abelian sandpile model [24, 39, 40, 56]. Indeed, our results have important implications for the Abelian sandpile model, which we discuss in Sect. 1.6.

Following the work of many authors, the basic qualitative features of the WUSF are firmly understood on a wide variety of graphs. In particular, it is known that every tree in the WUSF is recurrent almost surely on any graph [60], that the WUSF is connected a.s. if and only if two independent random walks on G intersect almost surely [20, 61], and that every tree in the WUSF is one-ended almost surely whenever G is in one of several large classes of graphs [2, 20, 33, 35, 53, 61] including all transient transitive graphs. (An infinite tree is one-ended if it does not contain a simple bi-infinite path.)

The goal of this paper is to understand the geometry of trees in the WUSF at a more detailed, quantitative level, under the assumption that the underlying graph is high-dimensional in a certain sense. Our results can be summarized informally as follows. Let G be a connected graph with bounded degrees, and suppose that the n-step return probabilities \(p_n(v,v)\) for (discrete-time) random walk on G satisfy \(\sum _{n\ge 1} n \sup _{v\in V} p_n(v,v)<\infty \). In particular, this holds for \(\mathbb {Z}^d\) if and only if \(d \ge 5\), and more generally for any transitive graph of at least quintic volume growth. Let \(\mathfrak {F}\) be the WUSF of G, and let v be a vertex of G. The past of v in \(\mathfrak {F}\) is the union of v with the finite connected components of \(\mathfrak {F}{\setminus }\{v\}\). The following hold:

1. The intrinsic geometry of each tree in \(\mathfrak {F}\) is similar at large scales to that of a critical Galton–Watson tree with finite variance offspring distribution, conditioned to survive forever. In particular, every tree has volume growth dimension 2 (with respect to its intrinsic graph metric), spectral dimension 4/3, and walk dimension 3 almost surely. The latter two statements mean that the n-step return probabilities for simple random walk on the tree decay like \(n^{-2/3+o(1)}\), and that the typical displacement of the walk (as measured by the intrinsic graph distance in the tree) is \(n^{1/3+o(1)}\). These are known as the Alexander–Orbach values of these dimensions [4, 45].

2. The intrinsic geometry of the past of v in \(\mathfrak {F}\) is similar in law to that of an unconditioned critical Galton–Watson tree with finite variance offspring distribution. In particular, the probability that the past contains a path of length at least n is of order \(n^{-1}\), and the probability that the past contains more than n points is of order \(n^{-1/2}\). That is, the intrinsic diameter exponent and volume exponent are 1 and 1/2 respectively.

3. The extrinsic geometry of the past of v in \(\mathfrak {F}\) is similar in law to that of an unconditioned critical branching random walk on G with finite variance offspring distribution. In particular, the probability that the past of v includes a vertex at extrinsic distance at least n from v depends on the rate of escape of the random walk on G. For example, it is of order \(n^{-2}\) for \(G=\mathbb {Z}^d\) for \(d\ge 5\) and is of order \(n^{-1}\) for G a transitive nonamenable graph. This is related to the fact that the random walk on the ambient graph G is diffusive in the former case and ballistic in the latter case.

All of these results apply more generally to networks (a.k.a. weighted graphs); see the remainder of the introduction for details.

In light of the connections between the WUSF and the Abelian sandpile model, these results imply related results for that model, to the effect that an avalanche in the Abelian sandpile model has a similar distribution to a critical branching random walk (see Sect. 1.6). Precise statements of our results and further background are given in the remainder of the introduction.

The fact that our results apply at such a high level of generality is a strong vindication of universality for high-dimensional spanning trees and sandpiles, which predicts that the large-scale behaviour of these models should depend only on the dimension, and in particular should be insensitive to the microscopic structure of the lattice. In particular, our results apply not only to \(\mathbb {Z}^d\) for \(d\ge 5\), but also to non-transitive networks that are similar to \(\mathbb {Z}^d\) such as the half-space \(\mathbb {Z}^{d-1}\times \mathbb {N}\) or, say, \(\mathbb {Z}^d\) with variable edge conductances bounded between two positive constants. Many of our results also apply to long-range spanning forest models on \(\mathbb {Z}^d\) such as those associated with the fractional Laplacian \(-(-\Delta )^\beta \) of \(\mathbb {Z}^d\) for \(d\ge 1\), \(\beta \in (0,d/4 \wedge 1)\). Long-range models such as these are motivated physically as a route towards understanding low-dimensional models via the \(\varepsilon \)-expansion [72], for which it is desirable to think of the dimension as a continuous parameter. (See the introduction of [66] for an account of the \(\varepsilon \)-expansion for mathematicians.)

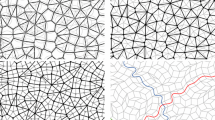

About the proofs. Our proof relies on the interplay between two different ways of sampling the WUSF. The first of these is Wilson’s algorithm, a method of sampling the WUSF by joining together loop-erased random walks which was introduced by David Wilson [71] and extended to infinite transient graphs by Benjamini et al. [20]. The second is the interlacement Aldous–Broder algorithm, a method of sampling the WUSF as the set of first-entry edges of Sznitman’s random interlacement process [67]. This algorithm was introduced in the author’s recent work [34] and extends the classical Aldous–Broder algorithm [3, 22] to infinite transient graphs. Generally speaking, it seems that Wilson’s algorithm is the better tool for estimating the moments of random variables associated with the WUSF, while the interlacement Aldous–Broder algorithm is the better tool for estimating tail probabilities.
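To make the first of these sampling methods concrete, here is a minimal sketch of Wilson's algorithm in the simplest setting only: a finite connected graph with unit conductances and a fixed root. The infinite transient version used in this paper (rooting at infinity) is not covered, and the names `grid_graph` and `wilson_ust` are illustrative choices rather than anything taken from the paper.

```python
import random


def grid_graph(n):
    """Adjacency lists of the n-by-n grid graph (illustrative helper)."""
    adj = {(i, j): [] for i in range(n) for j in range(n)}
    for (i, j) in adj:
        for (a, b) in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if (a, b) in adj:
                adj[(i, j)].append((a, b))
    return adj


def wilson_ust(adj, root, rng=None):
    """Sample a spanning tree of a finite connected graph, oriented
    towards `root`, by joining together loop-erased random walks.

    adj: dict mapping each vertex to a list of its neighbours.
    Returns a dict child -> parent describing the oriented tree.
    """
    rng = rng or random.Random()
    parent = {}
    in_tree = {root}
    for start in adj:
        if start in in_tree:
            continue
        # Run a random walk from `start` until it hits the current tree.
        # Remembering only the most recent exit from each vertex erases
        # loops implicitly, in the order in which they are created.
        last_exit = {}
        v = start
        while v not in in_tree:
            last_exit[v] = rng.choice(adj[v])
            v = last_exit[v]
        # Retrace the loop-erased path and attach it to the tree.
        v = start
        while v not in in_tree:
            parent[v] = last_exit[v]
            in_tree.add(v)
            v = last_exit[v]
    return parent
```

For example, `wilson_ust(grid_graph(3), (0, 0), random.Random(0))` returns eight parent pointers, one for each non-root vertex of the 3-by-3 grid.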

A key feature of the interlacement Aldous–Broder algorithm is that it enables us to think of the WUSF as the stationary measure of a natural continuous-time Markov chain. Moreover, the past of the origin evolves in an easily-understood way under these Markovian dynamics. In particular, as we run time backwards, the past of the origin gets monotonically smaller except possibly for those times at which the origin is visited by an interlacement trajectory. Indeed, the central insight in the proof of our results is that static tail events (on which the past of the origin is large) can be related to dynamic tail events (on which the origin is hit by an interlacement trajectory at a small time). Roughly speaking, we show that these two types of tail event tend to occur together, and consequently have comparable probabilities. We make this intuition precise using inductive inequalities similar to those used to analyze one-arm probabilities in high-dimensional percolation [32, 45, 46].

Once the critical exponent results are in place, the results concerning the simple random walk on the trees can be proven rather straightforwardly using the results and techniques of Barlow et al. [12].

1.1 Relation to other work

- When G is a regular tree of degree \(k\ge 3\), the components of the WUSF are distributed exactly as augmented critical binomial Galton–Watson trees conditioned to survive forever, and in this case all of our results are classical [13, 43, 54].

- In the case of \(\mathbb {Z}^d\) for \(d\ge 5\), Barlow and Járai [11] established that the trees in the WUSF have quadratic volume growth almost surely. Our proof of quadratic volume growth uses similar methods to theirs, which were in turn inspired by related methods in percolation due to Aizenman and Newman [1].

- Also in the case of \(\mathbb {Z}^d\) for \(d\ge 5\), Bhupatiraju et al. [21] followed the strategy of an unpublished proof of Lyons et al. [53] to prove that the probability that the past reaches extrinsic distance n is \(n^{-2} \log ^{O(1)}n\) and that the probability that the past has volume n is \(n^{-1/2} \log ^{O(1)} n\). Our results improve upon theirs in this case by reducing the error from polylogarithmic to constant order. Moreover, their proof relies heavily on transitivity and cannot be used to derive universal results of the kind we prove here.

- Peres and Revelle [62] proved that the USTs of large d-dimensional tori converge under rescaling (with respect to the Gromov-weak topology) to Aldous’s continuum random tree when \(d\ge 5\). They also proved that their result extends to other sequences of finite transitive graphs satisfying a heat-kernel upper bound similar to the one we assume here. Later, Schweinsberg [64] established a similar result for four-dimensional tori. Related results concerning loop-erased random walk on high-dimensional tori had previously been proven by Benjamini and Kozma [19]. While these results are closely related in spirit to those that we prove here, it does not seem that either can be deduced from the other.

- For planar Euclidean lattices such as \(\mathbb {Z}^2\), the UST is very well understood thanks in part to its connections to conformally invariant processes in the continuum [42, 52, 57, 59, 63]. In particular, Barlow and Masson [14, 15] proved that the UST of \(\mathbb {Z}^2\) has volume growth dimension 8/5 and spectral dimension 16/13 almost surely. See also [9] for more refined results.

- In [35], the author and Nachmias established that the WUSF of any transient proper plane graph with bounded degrees and codegrees has mean-field critical exponents provided that measurements are made using the hyperbolic geometry of the graph’s circle packing rather than its usual combinatorial geometry. Our results recover those of [35] in the case that the graph in question is also uniformly transient, in which case it is nonamenable and the graph distances and hyperbolic distances are comparable.

- A consequence of this paper is that several properties of the WUSF are insensitive to the geometry of the graph once the dimension is sufficiently large. In contrast, the theory developed in [18, 36] shows that some other properties describing the adjacency structure of the trees in the forest continue to undergo qualitative changes every time the dimension increases.

- In forthcoming work with Sousi, we build upon the methods of this paper to analyze related problems concerning the uniform spanning tree in \(\mathbb {Z}^3\) and \(\mathbb {Z}^4\).

1.2 Basic definitions

In this paper, a network will be a connected graph \(G=(V,E)\) (possibly containing loops and multiple edges) together with a function \(c:E \rightarrow (0,\infty )\) assigning a positive conductance c(e) to each edge \(e \in E\) such that for each vertex \(v\in V\), the vertex conductance \(c(v):= \sum c(e)\), taken over edges incident to v, is finite. We say that the network G has controlled stationary measure if there exists a positive constant C such that \(C^{-1} \le c(v) \le C\) for every vertex v of G. Locally finite graphs can always be considered as networks by setting \(c(e) \equiv 1\), and in this case have controlled stationary measure if and only if they have bounded degrees. We write \(E^\rightarrow \) for the set of oriented edges of a network. An oriented edge e is oriented from its tail \(e^-\) to its head \(e^+\) and has reversal \(-e\).

The random walk on a network G is the process that, at each time step, chooses an edge emanating from its current position with probability proportional to its conductance, independently of everything it has done so far to reach its current position, and then traverses that edge. We use \(\mathbf {P}_v\) to denote the law of the random walk started at a vertex v, \(\mathbf {E}_v\) to denote the associated expectation operator, and P to denote the Markov operator \(P:\ell ^2(V,c) \rightarrow \ell ^2(V,c)\), defined by

\[ (Pf)(v) = \mathbf {E}_v\bigl [f(X_1)\bigr ], \]

where \(X_1\) is the position of the walk after one step and \(\ell ^2(V,c)\) is the space of functions \(f:V \rightarrow \mathbb {R}\) such that \(\sum _v f(v)^2c(v) <\infty \). Finally, for each two vertices u and v of G and \(n\ge 0\), we write \(p_n(u,v) = \mathbf {P}_u(X_n = v)\) for the probability that a random walk started at u is at v at time n.
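As an illustration of these definitions, the following sketch computes the one-step transition probabilities \(c(e)/c(u)\) and the n-step probabilities \(p_n(u,v)\) exactly on a small finite network. It assumes integer conductances and no self-loops or multiple edges, and the function names and the edge-dictionary representation are hypothetical choices made for this example.

```python
from fractions import Fraction


def transition_probabilities(edges):
    """One-step transition probabilities of the random walk on a network.

    edges: dict mapping an unordered pair (u, v), u != v, to its
           positive integer conductance c(e).
    Returns p with p[u][v] = c(u, v) / c(u), where c(u) is the sum of
    the conductances of the edges incident to u.
    """
    c_vertex = {}
    for (u, v), c in edges.items():
        c_vertex[u] = c_vertex.get(u, 0) + c
        c_vertex[v] = c_vertex.get(v, 0) + c
    p = {u: {} for u in c_vertex}
    for (u, v), c in edges.items():
        p[u][v] = Fraction(c, c_vertex[u])
        p[v][u] = Fraction(c, c_vertex[v])
    return p


def n_step(p, u, n):
    """Return the dict v -> p_n(u, v) by pushing mass forward n times."""
    dist = {u: Fraction(1)}
    for _ in range(n):
        new = {}
        for x, mass in dist.items():
            for y, pxy in p[x].items():
                new[y] = new.get(y, 0) + mass * pxy
        dist = new
    return dist
```

On the triangle with unit conductances, `n_step(p, 0, 2)[0]` evaluates to `Fraction(1, 2)`: the walk steps to either neighbour and returns with probability 1/2.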

Given a graph or network G, a spanning tree of G is a connected subgraph of G that contains every vertex of G and does not contain any cycles. The uniform spanning tree measure \({\mathsf {UST}}_G\) on a finite connected graph \(G=(V,E)\) is the probability measure on subgraphs of G (considered as elements of \(\{0,1\}^E\)) that assigns equal mass to each spanning tree of G. If G is a finite connected network, then the uniform spanning tree measure \(\mathsf {UST}_G\) of G is defined so that the mass of each tree is proportional to the product of the conductances of the edges it contains. That is,

\[ \mathsf {UST}_G(\{\omega \}) = \frac{1}{Z_G}\, \mathbb {1}\bigl (\omega \text { is a spanning tree of } G\bigr ) \prod _{e \in \omega } c(e) \]

for every \(\omega \in \{0,1\}^E\), where \(Z_G\) is a normalizing constant.
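For very small networks the weighted measure can be computed by brute force, which may help to fix ideas. The sketch below enumerates all spanning trees and normalizes the products of conductances. It assumes integer conductances, and the representation of edges as `(u, v, c)` triples and the function names are illustrative only. The enumeration is exponential in the number of edges, so this is a toy and not a practical sampler.

```python
from fractions import Fraction
from itertools import combinations


def spanning_trees(vertices, edges):
    """Yield each spanning tree as a tuple of indices into `edges`.

    edges: list of triples (u, v, c) with c the conductance of the edge.
    A set of |V| - 1 edges containing no cycle is a spanning tree.
    """
    for subset in combinations(range(len(edges)), len(vertices) - 1):
        parent = {v: v for v in vertices}

        def find(x):
            # Union-find lookup with path halving.
            while parent[x] != x:
                parent[x] = parent[parent[x]]
                x = parent[x]
            return x

        acyclic = True
        for i in subset:
            u, v, _ = edges[i]
            ru, rv = find(u), find(v)
            if ru == rv:  # this edge would close a cycle
                acyclic = False
                break
            parent[ru] = rv
        if acyclic:
            yield subset


def ust_measure(vertices, edges):
    """Return a dict tree -> probability, with mass proportional to the
    product of the conductances of the edges in the tree."""
    weights = {}
    for tree in spanning_trees(vertices, edges):
        w = 1
        for i in tree:
            w *= edges[i][2]
        weights[tree] = w
    z = sum(weights.values())  # the normalizing constant Z_G
    return {tree: Fraction(w, z) for tree, w in weights.items()}
```

For the triangle with conductances 1, 2, 3, the three spanning trees have weights 2, 3 and 6, so the normalizing constant is 11.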

Let G be an infinite network and let \(\langle V_n \rangle _{n \ge 1}\) be an exhaustion of G, that is, an increasing sequence of finite sets \(V_n \subset V\) such that \(\bigcup _{n\ge 1} V_n = V\). For each \(n \ge 1\), let \(G^*_n\) be the network obtained from G by contracting every vertex in \(V{\setminus }V_n\) into a single vertex, denoted \(\partial _n\), and deleting all the resulting self-loops from \(\partial _n\). The wired uniform spanning forest measure on G, denoted \(\mathsf {WUSF}_G\), is defined to be the weak limit of the uniform spanning tree measures on the finite networks \(G_n^*\). That is,

\[ \mathsf {WUSF}_G\bigl (\{\omega : S \subseteq \omega \}\bigr ) = \lim _{n\rightarrow \infty } \mathsf {UST}_{G_n^*}\bigl (\{\omega : S \subseteq \omega \}\bigr ) \]

for every finite set \(S \subseteq E\). It follows from the work of Pemantle [61] that this limit exists and does not depend on the choice of exhaustion; see also [55, Chapter 10].

In the limiting construction above, one can also orient the uniform spanning tree of \(G_n^*\) towards the boundary vertex \(\partial _n\), so that every vertex other than \(\partial _n\) has exactly one oriented edge emanating from it in the spanning tree. If G is transient, then the sequence of laws of these random oriented spanning trees converge weakly to the law of a random oriented spanning forest of G, which is known as the oriented wired uniform spanning forest, and from which we can recover the usual (unoriented) WUSF by forgetting the orientation. (This assertion follows from the proof of [20, Theorem 5.1].) It is easily seen that the oriented wired uniform spanning forest of G is almost surely an oriented essential spanning forest of G, that is, an oriented spanning forest of G such that every vertex of G has exactly one oriented edge emanating from it in the forest (from which it follows that every tree is infinite).

1.3 Intrinsic exponents

Let \(\mathfrak {F}\) be an oriented essential spanning forest of an infinite graph G. We define the past of a vertex v in \(\mathfrak {F}\), denoted \(\mathfrak {P}(v)\), to be the subgraph of \(\mathfrak {F}\) induced by the set of vertices u of \(\mathfrak {F}\) such that every edge in the geodesic from u to v in \(\mathfrak {F}\) is oriented in the direction of v, where we also consider v to be included in this set. (By abuse of notation, we will also use \(\mathfrak {P}(v)\) to mean the vertex set of this subgraph.) Thus, a component of \(\mathfrak {F}\) is one-ended if and only if the past of each of its vertices is finite. The future of a vertex v is denoted by \(\Gamma (v,\infty )\) and is defined to be the set of vertices u such that v is in the past of u.
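The definition of the past translates directly into a search along reversed edges. The sketch below assumes the forest is given on a finite window as a dictionary `parent` sending each vertex to the head of its unique outgoing edge (a hypothetical representation chosen for this example). The result is the true past only if the window already contains every vertex whose oriented path leads to v.

```python
from collections import deque


def past(parent, v):
    """Vertices u whose oriented path in the forest passes through v.

    parent: dict mapping a vertex to the head of the unique oriented
            edge emanating from it.
    Returns the vertex set of the past of v, including v itself.
    """
    # Reverse the parent pointers so that we can search away from v.
    children = {}
    for u, w in parent.items():
        children.setdefault(w, []).append(u)
    seen = {v}
    queue = deque([v])
    while queue:
        x = queue.popleft()
        for u in children.get(x, []):
            if u not in seen:
                seen.add(u)
                queue.append(u)
    return seen


def future(parent, v, steps):
    """The first `steps` vertices after v on the oriented path from v."""
    path = []
    for _ in range(steps):
        if v not in parent:
            break
        v = parent[v]
        path.append(v)
    return path
```

With `parent = {1: 0, 2: 1, 3: 1, 4: 0, 0: 5}`, the past of vertex 1 is `{1, 2, 3}` and the past of a leaf such as 2 is just `{2}`.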

In order to quantify the one-endedness of the WUSF, it is interesting to estimate the probability that the past of a vertex is large in various senses. Perhaps the three most natural such measures of largeness are given by the intrinsic diameter, extrinsic diameter, and volume of the past. Here, given a subgraph K of a graph G, we define the extrinsic diameter of K, denoted \(\mathrm {diam}_\mathrm {ext}(K)\), to be the supremal graph distance in G between two points in K, and define the intrinsic diameter of K, denoted \(\mathrm {diam}_\mathrm {int}(K)\), to be the diameter of K. The volume of K, denoted |K|, is defined to be the number of vertices in K.

Generally speaking, for critical statistical mechanics models defined on Euclidean lattices such as \(\mathbb {Z}^d\), many natural random variables arising geometrically from the model are expected to have power law tails. The exponents governing these tails are referred to as critical exponents. For example, if \(\mathfrak {F}\) is the USF of \(\mathbb {Z}^d\), we expect that for each \(d\ge 2\) there exists \(\alpha _d\) such that

\[ \mathbb {P}\bigl (\mathrm {diam}_\mathrm {ext}(\mathfrak {P}(0)) \ge R\bigr ) = R^{-\alpha _d + o(1)} \qquad \text {as } R\rightarrow \infty , \]

in which case we call \(\alpha _d\) the extrinsic diameter exponent for the USF of \(\mathbb {Z}^d\). Calculating and proving the existence of critical exponents is considered a central problem in probability theory and mathematical statistical mechanics.

It is also expected that each model has an upper-critical dimension, denoted \(d_c\), above which the critical exponents of the model stabilize at their so-called mean-field values. For the uniform spanning forest, the upper critical dimension is believed to be four. Intuitively, above the upper critical dimension the lattice is spacious enough that different parts of the model do not interact with each other very much. This causes the model to behave similarly to how it behaves on, say, the 3-regular tree or the complete graph, both of which have a rather trivial geometric structure. Below the upper critical dimension, the geometry of the lattice affects the model in a non-trivial way, and the critical exponents are expected to differ from their mean-field values. The upper critical dimension itself (which need not necessarily be an integer) is often characterised by the mean-field exponents holding up to a polylogarithmic multiplicative correction, which is not expected to be present in other dimensions.

For example, we expect that there exist constants \(\gamma _2,\gamma _3,\gamma _{\mathrm {mf}}\) and \(\delta \) such that

where \(\mathfrak {F}\) is the uniform spanning forest of \(\mathbb {Z}^d\) and \(\asymp \) denotes an equality that holds up to positive multiplicative constants. Moreover, the values of the exponents \(\gamma _2,\gamma _3,\gamma _{\mathrm {mf}}\), and \(\delta \) should depend only on the dimension d, and not on the choice of lattice; this predicted insensitivity to the microscopic structure of the lattice is an instance of the phenomenon of universality.

Our first main result verifies the high-dimensional part of this picture for the intrinsic exponents. The corresponding results for the extrinsic diameter exponent are given in Sect. 1.5. Before stating our results, let us give some further definitions and motivation. For many models, an important signifier of mean-field behaviour is that certain diagrammatic sums associated with the model are convergent. Examples include the bubble diagram for self-avoiding walk and the Ising model, the triangle diagram for percolation, and the square diagram for lattice trees and animals; see e.g. [65] for an overview. For the WUSF, the relevant signifier of mean-field behaviour on a network G is the convergence of the random walk bubble diagram

\[ \sum _{x\in V} \Bigl [\sum _{n\ge 0} p_n(v,x)\Bigr ]^2, \]

where \(p_n(\cdot ,\cdot )\) denotes the n-step transition probabilities for simple random walk on G and v is a fixed root vertex. Note that the value of the bubble diagram is exactly the expected number of times that two independent simple random walks started at the origin intersect. Using time-reversal, the convergence of the bubble diagram of a network with controlled stationary measure is equivalent to the convergence of the sum

\[ \sum _{n\ge 0} (n+1)\, p_n(v,v). \]

It is characteristic of the upper-critical dimension \(d_c\) that the bubble diagram converges for all \(d>d_c\), while at the upper-critical dimension itself we expect that the bubble diagram diverges logarithmically, as indeed is the case in our setting.
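As a sanity check in the most familiar case, one can count powers for simple random walk on \(\mathbb {Z}^d\). The following sketch relies on the standard local limit asymptotics \(p_{2n}(0,0)\asymp n^{-d/2}\) (with odd-time return probabilities equal to zero), which are not stated in the text above and are taken here as an assumption. Under that assumption, the partial sums of the weighted return probabilities behave as follows.

```latex
\[
\sum_{n=1}^{N} n\, p_{2n}(0,0) \;\asymp\; \sum_{n=1}^{N} n^{1-d/2} \;\asymp\;
\begin{cases}
N^{2-d/2} & d \le 3,\\
\log N & d = 4,\\
1 & d \ge 5,
\end{cases}
\qquad N \ge 2.
\]
```

So the sum converges exactly when \(d \ge 5\) and diverges logarithmically at \(d=4\), consistent with the upper critical dimension being four.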

Our condition for mean-field behaviour of the WUSF will be that the bubble diagram converges uniformly in a certain sense. Let \(G=(V,E)\) be a network, let P be the Markov operator of G, and let \(\Vert P^n\Vert _{1\rightarrow \infty }\) be the \(1\rightarrow \infty \) norm of \(P^n\), defined by

We define the bubble norm of P to be

Thus, for transitive networks, \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \) is equivalent to convergence of the random walk bubble diagram. Here, a network is said to be transitive if for every two vertices \(u,v\in V\) there exists a conductance-preserving graph automorphism mapping u to v. Throughout the paper, we use \(\asymp , \preceq \) and \(\succeq \) to denote equalities and inequalities that hold to within multiplication by two positive constants depending only on the choice of network.

Theorem 1.1

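(Mean-field intrinsic exponents) Let G be a transitive network such that \(\Vert {P}\Vert _{\mathrm {bub}} < \infty \), and let \(\mathfrak {F}\) be the wired uniform spanning forest of G. Then

\[ \mathbb {P}\bigl (\mathrm {diam}_\mathrm {int}(\mathfrak {P}(v)) \ge R\bigr ) \asymp R^{-1} \qquad \text {and} \qquad \mathbb {P}\bigl (|\mathfrak {P}(v)| \ge R\bigr ) \asymp R^{-1/2} \]

for every vertex v and every \(R\ge 1\). In particular, the critical exponents governing the intrinsic diameter and volume of the past are 1 and 1/2 respectively.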

For transitive graphs, it follows from work of Hebisch and Saloff-Coste [30] that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \) if and only if the graph has at least quintic volume growth, i.e., if and only if there exists a constant c such that the number of points in every graph distance ball of radius n is at least \(cn^5\) for every \(n\ge 1\). Thus, by Gromov’s theorem [26], Trofimov’s theorem [69], and the Bass-Guivarc’h formula [16, 27], the class of graphs treated by Theorem 1.1 includes all transitive graphs not rough-isometric to \(\mathbb {Z},\mathbb {Z}^2,\mathbb {Z}^3,\mathbb {Z}^4\), or the discrete Heisenberg group. As mentioned above, the theorem also applies for example to long-ranged transitive networks with vertex set \(\mathbb {Z}^d\), a single edge between every two vertices, and with translation-invariant conductances given up to positive multiplicative constants by

provided that either \(1 \le d \le 4\) and \(\alpha \in (0,d/2)\) or \(d\ge 5\) and \(\alpha >0\). The canonical example of such a network is that associated with the fractional Laplacian \(-(-\Delta )^\beta \) of \(\mathbb {Z}^d\) for \(\beta \in (0,d/4 \wedge 1)\). (See [66, Section 2].)

The general form of our result is similar, but has an additional technical complication owing to the need to avoid trivialities that may arise from the local geometry of the network. Let G be a network, let v be a vertex of G, let X and Y be independent random walks started at v, and let q(v) be the probability that X and Y never return to v or intersect each other after time zero.

Theorem 1.2

Let G be a network with controlled stationary measure such that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), and let \(\mathfrak {F}\) be the wired uniform spanning forest of G. Then

and

for all \(v\in V\) and \(R\ge 1\).

The presence of q(v) in the theorem is required, for example, in the case that we attach a vertex by a single edge to the origin of \(\mathbb {Z}^d\), so that the past of this vertex in the USF is necessarily trivial. (The precise nature of the dependence on q(v) has not been optimized.) However, in any network G with controlled stationary measure and with \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), there exist positive constants \(\varepsilon \) and r such that for every vertex v in G, there exists a vertex u within distance r of v such that \(q(u)>\varepsilon \) (Lemma 4.2). In particular, if G is a transitive network with \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \) then q(v) is a positive constant, so that Theorem 1.1 follows from Theorem 1.2.

Let us note that Theorem 1.2 applies in particular to any bounded degree nonamenable graph, or more generally to any network with controlled stationary measure satisfying a d-dimensional isoperimetric inequality for some \(d>4\); see [47, Theorem 3.2.7]. In particular, it applies to \(\mathbb {Z}^d\), \(d\ge 5\), with any specification of edge conductances bounded above and below by two positive constants (in which case it can also be shown that q(v) is bounded below by a positive constant). A further example to which our results are applicable is given by taking \(G=H^d\) where \(d\ge 5\) and H is any infinite, bounded degree graph.

1.4 Volume growth, spectral dimension, and anomalous diffusion

The theorems concerning intrinsic exponents stated in the previous subsection also allow us to determine exponents describing the almost sure asymptotic geometry of the trees in the WUSF, and in particular allow us to compute the almost sure spectral dimension and walk dimension of the trees in the forest. See e.g. [47] for background on these and related concepts. Here, we always consider the trees of the WUSF as graphs. One could instead consider the trees as networks with conductances inherited from G, and the same results would apply with minor modifications to the proofs.

Let G be an infinite, connected network and let v be a vertex of G. We define the volume growth dimension (a.k.a. fractal dimension) of G to be

define the spectral dimension of G to be

and define the walk dimension of G to be

In each case, the limit used to define the dimension does not depend on the choice of root vertex v. Our next theorem establishes the values of \(d_f,d_s\), and \(d_w\) for the trees in the WUSF under the assumption that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \). The results concerning \(d_s\) and \(d_w\) are new even in the case of \(\mathbb {Z}^d\), \(d\ge 5\), while the result concerning the volume growth was established for \(\mathbb {Z}^d\), \(d\ge 5\), by Barlow and Járai [11].

Theorem 1.3

Let G be a network with controlled stationary measure and with \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), and let \(\mathfrak {F}\) be the wired uniform spanning forest of G. Then almost surely, for every component T of \(\mathfrak {F}\), the volume growth dimension, spectral dimension, and walk dimension of T satisfy

\[ d_f(T) = 2, \qquad d_s(T) = \frac{4}{3}, \qquad \text {and} \qquad d_w(T) = 3. \]

In particular, the limits defining these quantities are well-defined almost surely.

The values \(d_f=2,d_s=4/3\), and \(d_w=3\) are known as the Alexander–Orbach values of these exponents, following the conjecture due to Alexander and Orbach [4] that they held for high-dimensional incipient infinite percolation clusters. The first rigorous proof of Alexander–Orbach behaviour was due to Kesten [43], who established it for critical Galton–Watson trees conditioned to survive (see also [13]). The first proof for a model in Euclidean space was due to Barlow et al. [12], who established it for high-dimensional incipient infinite clusters in oriented percolation. Later, Kozma and Nachmias [45] established the Alexander–Orbach conjecture for high-dimensional unoriented percolation. See [31] for an extension to long-range percolation, [47] for an overview, and [17] for results regarding scaling limits of a related model.

As previously mentioned, Barlow and Masson [15] have shown that in the two-dimensional uniform spanning tree, \(d_f=8/5\), \(d_s=16/13\), and \(d_w=13/5\).
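The three exponents in each of these triples are not independent of one another. As a consistency check, the following sketch uses two standard heuristic relations that are not stated in the text above and are assumed here: \(d_s = 2d_f/d_w\), and \(d_w = d_f+1\) for trees whose volume growth and effective resistance are sufficiently regular. Both the Alexander–Orbach values and the two-dimensional values satisfy them.

```latex
\[
\text{WUSF with } \|P\|_{\mathrm{bub}}<\infty:\qquad
d_w = d_f + 1 = 2 + 1 = 3, \qquad
d_s = \frac{2 d_f}{d_w} = \frac{2\cdot 2}{3} = \frac{4}{3};
\]
\[
\text{UST of } \mathbb{Z}^2:\qquad
d_w = d_f + 1 = \frac{8}{5} + 1 = \frac{13}{5}, \qquad
d_s = \frac{2 d_f}{d_w} = \frac{2\cdot 8/5}{13/5} = \frac{16}{13}.
\]
```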

1.5 Extrinsic exponents

We now describe our results concerning the extrinsic diameter of the past. In comparison to the intrinsic diameter, our methods to study the extrinsic diameter are more delicate and require stronger assumptions on the graph in order to derive sharp estimates. Our first result on the extrinsic diameter concerns \(\mathbb {Z}^d\), and improves upon the results of Bhupatiraju et al. [21] by removing the polylogarithmic errors present in their results.

Theorem 1.4

(Mean-field Euclidean extrinsic diameter) Let \(d\ge 5\), and let \(\mathfrak {F}\) be the wired uniform spanning forest of \(\mathbb {Z}^d\). Then

\[ \mathbb {P}\bigl (\mathrm {diam}_\mathrm {ext}(\mathfrak {P}(0)) \ge R\bigr ) \asymp R^{-2} \]

for every \(R\ge 1\).

We expect that it should be possible to generalize the proof of Theorem 1.4 to other similar graphs, and to long-range models, but we do not pursue this here.

On the other hand, if we are unconcerned about polylogarithmic errors, it is rather straightforward to deduce various estimates on the extrinsic diameter from the analogous estimates on the intrinsic diameter, Theorems 1.1 and 1.2. The following is one such estimate of particular interest. For notational simplicity we will always work with the graph metric, although our methods easily adapt to various other metrics. We say that a network G with controlled stationary measure is d-Ahlfors regular if there exist positive constants c and C such that \( cn^d \le |B(v,n)| \le Cn^d\) for every vertex v and \(n\ge 1\). We say that G satisfies Gaussian heat kernel estimates if there exist positive constants \(c,c'\) such that

for every \(n\ge 0\) and every pair of vertices x, y in G with \(d(x,y)\le n\). It follows from the work of Hebisch and Saloff-Coste [30] that every transitive graph of polynomial volume growth satisfies Gaussian heat-kernel estimates, as does every bounded degree network with edge conductances bounded between two positive constants that is rough-isometric to a transitive graph of polynomial growth.

Theorem 1.5

Let G be a network with controlled stationary measure that is d-Ahlfors regular for some \(d>4\) and that satisfies Gaussian heat kernel estimates. Then

for every vertex v and every \(R\ge 1\).

Note that the hypotheses of this theorem imply that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \).

Finally, we consider networks in which the random walk is ballistic rather than diffusive. We say that a network G is uniformly ballistic if there exists a constant C such that

for every \(r\ge 1\). Every nonamenable network with bounded degrees and edge conductances bounded above is uniformly ballistic, as can be seen from the proof of [55, Proposition 6.9].

Theorem 1.6

(Extrinsic diameter in the positive speed case) Let G be a uniformly ballistic network with controlled stationary measure and \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), and let \(\mathfrak {F}\) be the wired uniform spanning forest of G. Then

for every vertex v and every \(R\ge 1\).

Note that the upper bound of Theorem 1.6 is a trivial consequence of Theorem 1.2.

1.6 Applications to the Abelian sandpile model

The Abelian sandpile model was introduced by Dhar [24] as an analytically tractable example of a system exhibiting self-organized criticality. This is the phenomenon by which certain randomized dynamical systems tend to exhibit critical-like behaviour at equilibrium despite being defined without any parameters that can be varied to produce a phase transition in the traditional sense. The concept of self-organized criticality was first posited in the highly influential work of Bak, Tang, and Wiesenfeld [7, 8], who proposed (somewhat controversially [70]) that it may account for the occurrence of complexity, fractals, and power laws in nature. See [38] for a detailed introduction to the Abelian sandpile model, and [41] for a discussion of self-organized criticality in applications.

We now define the Abelian sandpile model. Let \(G=(V,E)\) be a connected, locally finite graph and let \(K \subseteq V\) be a set of vertices. A sandpile on K is a function \(\eta : K \rightarrow \{0,1,\ldots \}\), which we think of as a collection of indistinguishable particles (grains of sand) located on the vertices of K. We say that \(\eta \) is stable at a vertex x if \(\eta (x) < \deg (x)\), and otherwise that \(\eta \) is unstable at x. We say that \(\eta \) is stable if it is stable at every x, and that it is unstable otherwise. If \(\eta \) is unstable at x, we can topple \(\eta \) at x to obtain the sandpile \(\eta '\) defined by

\[ \eta '(y) = {\left\{ \begin{array}{ll} \eta (x) - \deg (x) &{} \text {if } y=x,\\ \eta (y) + \#\{\text {edges between } x \text { and } y\} &{} \text {if } y\ne x \end{array}\right. } \]

for all \(y\in K\). That is, when x topples, \(\deg (x)\) of the grains of sand at x are redistributed to its neighbours, and grains of sand redistributed to neighbours of x in \(V{\setminus }K\) are lost. Dhar [24] observed that if K is finite and not equal to V then carrying out successive topplings will eventually result in a stable configuration and, moreover, that the stable configuration obtained in this manner does not depend on the order in which the topplings are carried out. (This property justifies the model’s description as Abelian.)
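The toppling rule and the order-independence of stabilization can be illustrated with a minimal Python sketch. The function names `topple` and `stabilize`, the adjacency-list encoding of G, and the small path example are assumptions made for illustration only; the sketch assumes K is finite and not equal to V, so that stabilization terminates.

```python
import random

def topple(adj, K, eta, x):
    """Return the sandpile obtained by toppling eta at the unstable vertex x."""
    new = dict(eta)
    new[x] -= len(adj[x])      # x sends one grain along each incident edge
    for y in adj[x]:
        if y in K:             # grains sent to V \ K are lost
            new[y] += 1
    return new

def stabilize(adj, K, eta, rng=None):
    """Topple unstable vertices, in a randomly chosen order, until none remain."""
    if rng is None:
        rng = random.Random(0)
    while True:
        unstable = [x for x in K if eta[x] >= len(adj[x])]
        if not unstable:
            return eta
        eta = topple(adj, K, eta, rng.choice(unstable))

# Hypothetical example: the path 0 - 1 - 2 - 3 - 4 with K = {1, 2, 3}.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
K = {1, 2, 3}
eta = {1: 1, 2: 2, 3: 1}
# Different seeds give different toppling orders but the same stable result.
results = [stabilize(adj, K, eta, random.Random(seed)) for seed in range(20)]
```

Parallel edges can be modelled by repeating a neighbour in its adjacency list, so that `len(adj[x])` plays the role of \(\deg (x)\).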

We define a Markov chain on the set of stable sandpile configurations on K as follows: At each time step, a vertex of K is chosen uniformly at random, an additional grain of sand is placed at that vertex, and the resulting configuration is stabilized. Although this Markov chain is not irreducible, it can be shown that the chain has a unique closed communicating class, consisting of the recurrent configurations, and that the stationary measure of the Markov chain is simply the uniform measure on the set of recurrent configurations. In particular, the stationary measure for the Markov chain is also stationary if we add a grain of sand to a fixed vertex and then stabilize [38, Exercise 2.17].

The connection between sandpiles and spanning trees was first discovered by Majumdar and Dhar [56], who described a bijection, known as the burning bijection, between recurrent sandpile configurations and spanning trees. Using the burning bijection, Athreya and Járai [6] showed that if \(d\ge 2\) and \(\langle V_n\rangle _{n\ge 1}\) is an exhaustion of \(\mathbb {Z}^d\) by finite sets, then the uniform measure on recurrent sandpile configurations on \(V_n\) converges weakly as \(n\rightarrow \infty \) to a limiting measure on sandpile configurations on \(\mathbb {Z}^d\). Járai and Werning [40] later extended this result to any infinite, connected, locally finite graph G for which every component of the WUSF of G is one-ended almost surely. We call a random sandpile configuration on G drawn from this measure a uniform recurrent sandpile on G, and typically denote such a random variable by \(\mathrm {H}\) (capital \(\eta \)).

We are particularly interested in what happens during one step of the dynamics at equilibrium, in which one grain of sand is added to a vertex v in a uniformly random recurrent configuration \(\mathrm {H}\), and then topplings are performed in order to stabilize the resulting configuration. The multi-set of vertices counted according to the number of times they topple is called the avalanche, and is denoted \({\text {Av}}_v(\mathrm {H})\). The set of vertices that topple at all is called the avalanche cluster and is denoted by \({\text {AvC}}_v(\mathrm {H})\).
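As a rough illustration of these two objects, the following Python sketch adds one grain at v to a given stable configuration on a finite set K and records the topplings. The function name `avalanche` and the example graph are hypothetical, and the starting configuration is fixed by hand rather than sampled from the uniform measure on recurrent configurations.

```python
from collections import Counter

def avalanche(adj, K, eta, v):
    """Add one grain at v, stabilize, and record the topplings.

    Returns (av, avc, final): av counts how often each vertex topples (the
    avalanche as a multi-set), avc is the set of vertices that topple at
    least once, and final is the stabilized sandpile.
    """
    final = dict(eta)
    final[v] += 1
    av = Counter()
    stack = [v]
    while stack:
        x = stack.pop()
        if final[x] < len(adj[x]):
            continue               # x is stable at the moment
        final[x] -= len(adj[x])
        av[x] += 1
        stack.append(x)            # x may still be unstable
        for y in adj[x]:
            if y in K:             # grains sent outside K are lost
                final[y] += 1
                stack.append(y)
    return av, set(av), final

# Hypothetical example: the path 0 - 1 - 2 - 3 - 4 with K = {1, 2, 3}.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
K = {1, 2, 3}
av, avc, final = avalanche(adj, K, {1: 1, 2: 1, 3: 1}, 2)
```

In this example the total number of topplings is `sum(av.values())` and the avalanche cluster is `avc`; these are the finite-volume analogues of the quantities whose tails are studied in Theorems 1.7–1.9.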

Járai and Redig [39] showed that the burning bijection allows one to relate avalanches to the past of the WUSF, which allowed them to prove that avalanches in \(\mathbb {Z}^d\) satisfy \(\mathbb {P}( v \in {\text {AvC}}_0(\mathrm {H})) \asymp \Vert v\Vert ^{-d+2}\) for \(d\ge 5\). (The fact that the expected number of times v topples scales this way is an immediate consequence of Dhar’s formula, see [38, Section 3.3.1].) Bhupatiraju et al. [21] built upon these methods to prove that, when \(d\ge 5\), the probability that the diameter of the avalanche is at least n scales as \(n^{-2} \log ^{O(1)} n\) and the probability that the total number of topplings in the avalanche is at least n is between \(c n^{-1/2}\) and \(n^{-2/5+o(1)}\). Using the combinatorial tools that they developed, the following theorem, which improves upon theirs, follows straightforwardly from our results concerning the WUSF. (Strictly speaking, it also requires our results on the v-WUSF, see Sect. 2.2.)

Theorem 1.7

Let \(d\ge 5\) and let \(\mathrm {H}\) be a uniform recurrent sandpile on \(\mathbb {Z}^d\). Then

for all \(n\ge 1\).

As with the WUSF, our methods also yield several variations on this theorem for other classes of graphs, the following of which are particularly notable. See Sect. 1.5 for the relevant definitions. With a little further work, it should be possible to remove the dependency on v in the lower bounds of Theorems 1.8 and 1.9. The upper bounds of Theorem 1.8 only require that G has polynomial growth, see Proposition 7.8.

Theorem 1.8

Let G be a bounded degree graph that is d-Ahlfors regular for some \(d>4\) and that satisfies Gaussian heat kernel estimates, and let \(\mathrm {H}\) be a uniform recurrent sandpile on G. Then

for all \(n\ge 1\).

Similarly, the following theorem concerning uniformly ballistic graphs can be deduced from Theorems 7.4 and 1.6. Again, we stress that this result applies in particular to any bounded degree nonamenable graph.

Theorem 1.9

Let G be a bounded degree, uniformly ballistic graph such that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), and let \(\mathrm {H}\) be a uniform recurrent sandpile on G. Then

for all \(n\ge 1\).

Notation

As previously discussed, we use \(\asymp , \preceq \) and \(\succeq \) to denote equalities and inequalities that hold to within multiplication by two positive constants depending only on the choice of network. Typically, but not always, these constants will only depend on a few important parameters such as \(\inf _{v\in V} c(v)\), \(\sup _{v\in V}c(v)\), and \(\Vert {P}\Vert _{\mathrm {bub}}\).

For the reader’s convenience, we gather here several pieces of notation that will be used throughout the paper. Each such piece of notation is also defined whenever it first appears within the body of the paper. In particular, the v-wired uniform spanning forest is defined in Sect. 2.2 and the interlacement and v-wired interlacement processes are defined in Sect. 3.

\(\mathfrak {F},\mathfrak {F}_v\) A sample of the wired uniform spanning forest and v-wired uniform spanning forest respectively.

\(\mathfrak {T}_v\) The tree containing v in \(\mathfrak {F}_v\).

\(\mathfrak {B}(u,n),\mathfrak {B}_v(u,n)\) The intrinsic ball of radius n around u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\partial \mathfrak {B}(u,n),\partial \mathfrak {B}_v(u,n)\) The set of vertices at distance exactly n from u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\mathfrak {P}(u),\mathfrak {P}_v(u)\) The past of u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\mathrm {past}_{F}(u)\) The past of u in the oriented forest F (which need not be spanning).

\(\mathfrak {P}(u,n),\mathfrak {P}_v(u,n)\) The intrinsic ball of radius n around u in the past of u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\Gamma (u,w), \Gamma _v(u,w)\) The path from u to w in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively, should these vertices be in the same component.

\(\Gamma (u,\infty ), \Gamma _v(u,\infty )\) The future of u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\partial \mathfrak {P}(u,n),\partial \mathfrak {P}_v(u,n)\) The set of vertices with intrinsic distance exactly n from u in the past of u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively.

\(\mathscr {I},\mathscr {I}_v\) The interlacement process and v-wired interlacement process respectively.

\(\mathcal {I}_{[a,b]},\mathcal {I}_{v,[a,b]}\) The set of vertices visited by the interlacement process and the v-wired interlacement process in the time interval [a, b] respectively.

2 Background

2.1 Loop-erased random walk and Wilson’s algorithm

Let G be a network. For each \(-\infty \le n \le m \le \infty \) we define L(n, m) to be the line graph with vertex set \(\{i \in \mathbb {Z}: n \le i\le m\}\) and with edge set \(\{\{i,i+1\}: n\le i \le m-1\}\). A path in G is a multigraph homomorphism from L(n, m) to G for some \(-\infty \le n \le m \le \infty \). We can consider the random walk on G as a path by keeping track of the edges it traverses as well as the vertices it visits. Given a path \(w : L(n,m)\rightarrow G\) we will use w(i) and \(w_i\) interchangeably to denote the vertex visited by w at time i, and use \(w(i,i+1)\) and \(w_{i,i+1}\) interchangeably to denote the oriented edge crossed by w between times i and \(i+1\).

Given a path \(w : L(0,m) \rightarrow G\) for some \(m\in [0,\infty ]\) that is transient in the sense that it visits each vertex at most finitely many times, we define the sequence of times \(\ell _n(w)\) by \(\ell _0(w)=0\) and \(\ell _{n+1}(w) = 1+\max \{ k : w_k = w_{\ell _n}\}\), terminating the sequence when \(\max \{ k : w_k = w_{\ell _n}\}=m\) in the case that \(m<\infty \). The loop-erasure \(\mathsf {LE}(w)\) of w is the path defined by

\[ \mathsf {LE}(w)_i = w_{\ell _i}, \qquad \mathsf {LE}(w)_{i,i+1} = w_{\ell _{i+1}-1,\ell _{i+1}}. \]

In other words, \(\mathsf {LE}(w)\) is the path formed by erasing cycles from w chronologically as they are created. The loop-erasure of simple random walk is known as loop-erased random walk and was first studied by Lawler [49].
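The equivalence between the definition via the times \(\ell _n\) and chronological cycle erasure can be checked on finite paths with a short Python sketch. Both function names are hypothetical, and for simplicity the sketch tracks only the vertices of a non-empty finite path, not the edges (which would matter in a multigraph).

```python
def loop_erase_chronological(w):
    """Erase cycles from the vertex sequence w in the order they are created."""
    path = []
    position = {}                      # vertex -> index in the current path
    for x in w:
        if x in position:
            # x closes a cycle: drop everything after its earlier occurrence
            for y in path[position[x] + 1:]:
                del position[y]
            del path[position[x] + 1:]
        else:
            position[x] = len(path)
            path.append(x)
    return path

def loop_erase_last_exit(w):
    """Loop-erasure via l_0 = 0 and l_{n+1} = 1 + max{k : w_k = w_{l_n}}."""
    last = {x: k for k, x in enumerate(w)}   # last visit time of each vertex
    m = len(w) - 1
    path, l = [], 0
    while True:
        path.append(w[l])
        if last[w[l]] == m:                  # the sequence of times terminates
            return path
        l = last[w[l]] + 1

w = list("abcbdae")
```

On the example path, both functions return the same self-avoiding path from the first vertex of `w` to its last vertex.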

Wilson’s algorithm [71] is a method of sampling the UST of a finite graph by recursively joining together loop-erased random walk paths. It was extended to sample the WUSF of infinite transient graphs by Benjamini et al. [20]. See also [55, Chapters 4 and 10] for an overview of the algorithm and its applications.

Wilson’s algorithm can be described in the infinite transient case as follows. Let G be an infinite transient network, and let \(v_1,v_2,\ldots \) be an enumeration of the vertices of G. Let \(\mathfrak {F}^{0}\) be the empty forest, which has no vertices or edges. Given \(\mathfrak {F}^{n}\) for some \(n\ge 0\), start a random walk at \(v_{n+1}\). Stop the random walk if and when it hits the set of vertices already included in \(\mathfrak {F}^n\), running it forever otherwise. Let \(\mathfrak {F}^{n+1}\) be the union of \(\mathfrak {F}^n\) with the set of edges traversed by the loop-erasure of this stopped path. Let \(\mathfrak {F}=\bigcup _{n\ge 0} \mathfrak {F}^n\). Then the random forest \(\mathfrak {F}\) has the law of the wired uniform spanning forest of G. If we keep track of the direction in which edges are crossed by the loop-erased random walks when performing Wilson’s algorithm, we obtain the oriented wired uniform spanning forest. The algorithm works similarly in the finite and recurrent cases, except that we start by taking \(\mathfrak {F}^{0}\) to contain one vertex and no edges.
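The finite case can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the graph is finite and connected, all conductances are equal (simple random walk), and the names `wilson_ust` and `grid` are hypothetical. The infinite transient version, in which walks may run forever, cannot be simulated exactly in this way.

```python
import random

def wilson_ust(adj, root, rng):
    """Sample a spanning tree of a finite connected graph, oriented towards root.

    Returns a dict mapping each vertex to the next vertex on its path to root.
    """
    parent = {root: None}              # F^0: one vertex, no edges
    for start in adj:
        if start in parent:
            continue
        # Random walk from start until it hits the current tree. Remembering
        # only the most recent exit from each vertex performs the loop-erasure.
        nxt = {}
        x = start
        while x not in parent:
            nxt[x] = rng.choice(adj[x])
            x = nxt[x]
        # Add the loop-erased path to the tree, keeping its orientation.
        x = start
        while x not in parent:
            parent[x] = nxt[x]
            x = nxt[x]
    return parent

def grid(n):
    """Adjacency lists of the n-by-n square grid (a hypothetical test graph)."""
    adj = {(i, j): [] for i in range(n) for j in range(n)}
    for (i, j) in adj:
        for (a, b) in ((i + 1, j), (i, j + 1)):
            if (a, b) in adj:
                adj[(i, j)].append((a, b))
                adj[(a, b)].append((i, j))
    return adj

tree = wilson_ust(grid(3), (0, 0), random.Random(0))
```

The dictionary `tree` records the orientation in which each edge was crossed, so following `tree[x]` repeatedly from any vertex traces the future of that vertex until it reaches the root.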

2.2 The v-wired uniform spanning forest and stochastic domination

In this section we introduce the v-wired uniform spanning forest (v-WUSF), which was originally defined by Járai and Redig [39] in the context of their work on the sandpile model (where it was called the WSF\(_o\)). The v-WUSF is a variation of the WUSF of G in which, roughly speaking, we consider v to be ‘wired to infinity’. The v-WUSF serves two useful purposes in this paper: its stochastic domination properties allow us to ignore interactions between different parts of the WUSF, and the control of the v-WUSF that we obtain will be applied to prove our results concerning the Abelian sandpile model in Sect. 9.

Let G be an infinite network and let v be a vertex of G. Let \(\langle V_n \rangle _{n\ge 1}\) be an exhaustion of G and, for each \(n\ge 1\), let \(G^{*v}_n\) be the graph obtained by identifying v with \(\partial _n\) in the graph \(G^*_n\). The measure \(\mathsf {WUSF}_v\) is defined to be the weak limit

The fact that this limit exists and does not depend on the choice of exhaustion of G is proved similarly to the corresponding statement for the WUSF, see [53]. As with the WUSF, we can also define the oriented v-wired uniform spanning forest by orienting the uniform spanning tree of \(G_n^{*v}\) towards \(\partial _n\) (which is identified with v) at each step of the exhaustion before taking the weak limit. It is possible to sample the v-WUSF by running Wilson’s algorithm rooted at infinity, but starting with \(\mathfrak {F}^{0}_v\) as the forest that has vertex set \(\{v\}\) and no edges (as we usually would in the finite and recurrent cases). Moreover, if we orient each edge in the direction in which it is crossed by the loop-erased random walk when running Wilson’s algorithm, we obtain a sample of the oriented v-WUSF.

The following lemma makes the v-WUSF extremely useful for studying the usual WUSF, particularly in the mean-field setting. It will be the primary means by which we ignore the interactions between different parts of the forest. (Indeed, it plays a role analogous to that played by the BK inequality in Bernoulli percolation.) We denote by \(\mathrm {past}_F(v)\) the past of v in the oriented forest F, which need not be spanning. We write \(\mathfrak {T}_v\) for the tree containing v in \(\mathfrak {F}_v\), and write \(\Gamma (u,\infty )\) and \(\Gamma _v(u,\infty )\) for the future of u in \(\mathfrak {F}\) and \(\mathfrak {F}_v\) respectively, as defined in Sect. 1.3.

Lemma 2.1

(Stochastic domination) Let G be an infinite network, let \(\mathfrak {F}\) be an oriented wired uniform spanning forest of G, and for each vertex v of G let \(\mathfrak {F}_v\) be an oriented v-wired uniform spanning forest of G. Let K be a finite set of vertices of G, and define \(F(K) =\bigcup _{u\in K} \Gamma (u,\infty )\) and \(F_v(K) =\bigcup _{u\in K} \Gamma _v(u,\infty )\). Then for every \(u\in K\) and every increasing event \(\mathscr {A}\subseteq \{0,1\}^E\) we have that

and similarly

Note that when K is a singleton, (2.1) follows implicitly from [53, Lemma 2.3]. The proof in the general case is also very similar to theirs, but we include it for completeness. Given a network G and a finite set of vertices K, we write G/K for the network formed from G by identifying all the vertices of K.

Lemma 2.2

Let G be a finite network, and let \(K_1 \subseteq K_2\) be sets of vertices of G. For each spanning tree T of G, let \(S(T,K_2)\) be the smallest subtree of T containing all of \(K_2\). Then the uniform spanning tree of G/\(K_1\) stochastically dominates \(T{\setminus }S(T,K_2)\), where T is a uniform spanning tree of G.

Proof

It follows from the spatial Markov property of the UST that, conditional on \(S(T,K_2)\), the complement \(T{\setminus }S(T,K_2)\) is distributed as the UST of the network G/\(S(T,K_2)\) constructed from G by identifying all the vertices in the tree \(S(T,K_2)\), see [35, Section 2.2.1]. On the other hand, it follows from the negative association property of the UST [55, Theorem 4.6] that if \(A \subseteq B\) are two sets of vertices, then the UST of G/A stochastically dominates the UST of G/B. This implies that the claim holds when we condition on \(S(T,K_2)\), and we conclude by averaging over the possible choices of \(S(T,K_2)\). \(\square \)

Proof of Lemma 2.1

The claim (2.1) follows from Lemma 2.2 by considering the finite networks \(G_n^*\) used in the definition of the WUSF, taking \(K_1 = \{u,\partial _n\}\) and \(K_2 = K \cup \{\partial _n\}\), and taking the limit as \(n\rightarrow \infty \).

We now prove (2.2). If \(u=v\) then the claim follows by applying Lemma 2.2 to the finite networks \(G_n^{*v}\), taking \(K_1 = \emptyset \) and \(K_2 = K\), and taking the limit as \(n\rightarrow \infty \). Now suppose that \(u\ne v\). Let \(G/\{u,v\}\) be the network obtained from G by identifying u and v into a single vertex x, and let \(\mathfrak {F}'\) be the x-wired uniform spanning forest of \(G/\{u,v\}\). We consider \(\mathfrak {F}'\) as a subgraph of G, and let \(\mathfrak {T}'\) be the component of u in \(\mathfrak {F}'\). It follows from the negative association property of the UST and an obvious limiting argument that \(\mathfrak {F}'\) is stochastically dominated by \(\mathfrak {F}_u\), and hence that \(\mathfrak {T}'\) is stochastically dominated by \(\mathfrak {T}_u\). On the other hand, applying Lemma 2.2 to the finite networks \(G_n^{*v}\), taking \(K_1 = \{u,v\}\) and \(K_2 = K \cup \{v\}\), and taking the limit as \(n\rightarrow \infty \) yields that the conditional distribution of \(\mathrm {past}_{\mathfrak {F}_v{\setminus }F_v(K)}(u)\) given \(F_v(K)\) is stochastically dominated by \(\mathfrak {T}'\) and hence by \(\mathfrak {T}_u\). \(\square \)

3 Interlacements and the Aldous–Broder algorithm

The random interlacement process is a Poissonian soup of doubly-infinite random walks that was introduced by Sznitman [67] and generalized to arbitrary transient graphs by Teixeira [68]. Formally, the interlacement process \(\mathscr {I}\) on the transient graph G is a Poisson point process on \(\mathcal {W}^* \times \mathbb {R}\), where \(\mathcal {W}^*\) is the space of bi-infinite paths in G modulo time-shift, and \(\mathbb {R}\) is thought of as a time coordinate. In [34], we showed that the random interlacement process can be used to generate the WUSF via a generalization of the Aldous–Broder algorithm. By shifting the time coordinate of the interlacement process, this sampling algorithm also allows us to view the WUSF as the stationary measure of a Markov process; this dynamical picture of the WUSF, or more precisely its generalization to the v-WUSF, is of central importance to the proofs of the main theorems of this paper.

We now begin to define these notions formally. We must first define the space of trajectories \(\mathcal {W}^*\). Recall that for each \(-\infty \le n \le m \le \infty \) we define L(n, m) to be the line graph with vertex set \(\{i \in \mathbb {Z}: n \le i\le m\}\) and with edge set \(\{\{i,i+1\}: n\le i \le m-1\}\). Given a graph G, we define \(\mathcal {W}(n,m)\) to be the set of multigraph homomorphisms from L(n, m) to G that are transient in the sense that the preimage of each vertex of G is finite. We define the set \(\mathcal {W}\) to be the union

\[ \mathcal {W}= \bigcup \left\{ \mathcal {W}(n,m) : -\infty \le n \le m \le \infty \right\} . \]

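The set \(\mathcal {W}\) can be made into a Polish space in such a way that local times at vertices and first and last hitting times of finite sets are continuous, see [34, Section 3.2]. We define the time shift \(\theta _k:\mathcal {W}\rightarrow \mathcal {W}\) by \(\theta _k : \mathcal {W}(n,m) \longrightarrow \mathcal {W}(n-k,m-k)\),

\[ \theta _k(w)(i) = w(i+k), \qquad \theta _k(w)(i,i+1) = w(i+k,i+k+1), \]

and define the space \(\mathcal {W}^*\) to be the quotient

\[ \mathcal {W}^* = \mathcal {W}/\sim , \qquad \text {where } w_1 \sim w_2 \text { if and only if } w_1 = \theta _k(w_2) \text { for some } k \in \mathbb {Z}. \]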

Let \(\pi : \mathcal {W}\rightarrow \mathcal {W}^*\) denote the associated quotient function. We equip the set \(\mathcal {W}^*\) with the quotient topology (which is Polish) and associated Borel \(\sigma \)-algebra. An element of \(\mathcal {W}^*\) is called a trajectory.

We now define the intensity measure of the interlacement process. Let G be a transient network. Given \(w \in \mathcal {W}(n,m)\), let \(w^\leftarrow \in \mathcal {W}(-m,-n)\) be the reversal of w, which is defined by setting \(w^\leftarrow (i)=w(-i)\) for all \(-m \le i \le -n\) and setting \(w^\leftarrow (i,i+1)=w(-i,-i-1)\) for all \(-m \le i \le -n-1\). For each subset \(\mathscr {A}\subseteq \mathcal {W}\), let \(\mathscr {A}^\leftarrow \) denote the set

\[ \mathscr {A}^\leftarrow = \left\{ w \in \mathcal {W}: w^\leftarrow \in \mathscr {A}\right\} . \]

For each set \(K \subseteq V\), we let \(\mathcal {W}_K(n,m)\) be the set of \(w\in \mathcal {W}(n,m)\) such that there exists \(n\le i\le m\) such that \(w(i)\in K\), and similarly define \(\mathcal {W}_K\) to be the union \(\mathcal {W}_K=\bigcup \{\mathcal {W}_K(n,m): -\infty \le n \le m \le \infty \}\). Let \(\tau ^+_K\) be the first positive time that the walk visits K, where we set \(\tau ^+_K=\infty \) if the walk does not visit K at any positive time. We define a measure \(Q_K\) on \(\mathcal {W}_K\) by setting

and, for each \(u\in K\) and each two Borel subsets \(\mathscr {A},\mathscr {B}\subseteq \mathcal {W}\),

For each set \(K \subseteq V\), let \(\mathcal {W}^*_K= \pi (\mathcal {W}_K)\) be the set of trajectories that visit K. It follows from the work of Sznitman [67] and Teixeira [68] that there exists a unique \(\sigma \)-finite measure \(Q^*\) on \(\mathcal {W}^*\) such that for every Borel set \(\mathscr {A}\subseteq \mathcal {W}^*\) and every finite \(K\subset V\),

We refer to the unique such measure \(Q^*\) as the interlacement intensity measure, and define the random interlacement process \(\mathscr {I}\) to be the Poisson point process on \(\mathcal {W}^*\times \mathbb {R}\) with intensity measure \(Q^* \otimes \Lambda \), where \(\Lambda \) is the Lebesgue measure on \(\mathbb {R}\). For each \(t\in \mathbb {R}\) and \(A \subseteq \mathbb {R}\), we write \(\mathscr {I}_t\) for the set of \(w\in \mathcal {W}^*\) such that \((w,t)\in \mathscr {I}\), and write \(\mathscr {I}_{A}\) for the intersection of \(\mathscr {I}\) with \(\mathcal {W}^* \times A\).

See [34, Proposition 3.3] for a limiting construction of the interlacement process from the random walk on an exhaustion with wired boundary conditions.

In [34], we proved that the WUSF can be generated from the random interlacement process in the following manner. Let G be a transient network, and let \(t\in \mathbb {R}\). For each vertex v of G, let \(\sigma _t(v)\) be the smallest time greater than t such that there exists a trajectory \(W_{\sigma _t(v)} \in \mathscr {I}_{\sigma _t(v)}\) that hits v, and note that the trajectory \(W_{\sigma _t(v)}\) is unique for every \(t\in \mathbb {R}\) and \(v\in V\) almost surely. We define \(e_t(v)\) to be the oriented edge of G that is traversed by the trajectory \(W_{\sigma _t(v)}\) as it enters v for the first time, and define

[34, Theorem 1.1] states that \(\mathsf {AB}_t(\mathscr {I})\) has the law of the oriented wired uniform spanning forest of G for every \(t\in \mathbb {R}\). Moreover, [34, Proposition 4.2] states that \(\langle \mathsf {AB}_t(\mathscr {I})\rangle _{t\in \mathbb {R}}\) is a stationary, ergodic, mixing, stochastically continuous Markov process.
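For orientation, the classical Aldous–Broder algorithm on a finite connected graph, which the interlacement construction generalizes, can be sketched as follows. This is not the interlacement version: it runs a single simple random walk until it has covered the graph and keeps, for each vertex other than the starting point, the edge along which that vertex was first entered. The function name `aldous_broder` and the cycle example are assumptions made for illustration.

```python
import random

def aldous_broder(adj, start, rng):
    """Walk from start until every vertex is visited; keep first-entry edges.

    Returns a dict mapping each vertex y != start to the vertex x from which
    the walk first entered y, so the kept edges are the pairs (x, y).
    """
    entered_from = {start: None}
    x = start
    while len(entered_from) < len(adj):
        y = rng.choice(adj[x])
        if y not in entered_from:
            entered_from[y] = x    # first entry into y is along the edge (x, y)
        x = y
    return entered_from

# Hypothetical example: the cycle on five vertices.
cycle = {i: [(i - 1) % 5, (i + 1) % 5] for i in range(5)}
tree = aldous_broder(cycle, 0, random.Random(0))
```

The first-entry edges form a spanning tree because each vertex other than `start` contributes exactly one edge leading to a vertex visited earlier. In the interlacement version, the role of the first-entry edge at v is played by \(e_t(v)\), taken from the first trajectory after time t that hits v.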

3.1 v-wired variants

In this section, we introduce a variation on the interlacement process in which a vertex v is wired to infinity, which we call the v-wired interlacement process. We then show how the v-wired interlacement process can be used to generate the v-WUSF in the same way that the usual interlacement process generates the usual WUSF.

Let G be a (not necessarily transient) network and let v be a vertex of G. We denote by \(\tau _v\) the first time that the random walk visits v, and denote by \(\tau ^+_K\) the first positive time that the random walk visits K. We write \(X^T\) for the random walk run up to the (possibly random and/or infinite) time T, which is considered to be an element of \(\mathcal {W}(0,T)\). In particular, if X is started at v then \(X^{\tau _v}\) is the path of length zero at v. For each finite set \(K \subset V\) we define a measure \(Q_{v,K}\) on \(\mathcal {W}\) by \(Q_{v,K}(\{w \in \mathcal {W}: w(0)\notin K\})=0\),

(with the convention that \(\tau ^+_K>\tau _v\) in the case that both hitting times are equal to \(\infty \)) for every \(u\in K{\setminus } \{v\}\) and every two Borel sets \(\mathscr {A},\mathscr {B}\subseteq \mathcal {W}\), and

for every two Borel sets \(\mathscr {A},\mathscr {B}\subseteq \mathcal {W}\) if \(v\in K\), where we write \(w_0\in \mathcal {W}(0,0)\) for the path of length zero at v.

As with the usual interlacement intensity measure, we wish to define a measure \(Q^*_v\) on \(\mathcal {W}^*\) via the consistency condition

for every finite set \(K \subset V\) and every Borel set \(\mathscr {A}\subseteq \mathcal {W}^*\), and define the v-wired interlacement process to be the Poisson point process on \(\mathcal {W}^*\times \mathbb {R}\) with intensity measure \(Q_v^* \otimes \Lambda \), where \(\Lambda \) is the Lebesgue measure on \(\mathbb {R}\).

We will deduce that such a measure exists via the following limiting procedure, which also gives a direct construction of the v-wired interlacement process. Let N be a Poisson point process on \(\mathbb {R}\) with intensity measure \((c(\partial _n)+c(v))\Lambda \). Conditional on N, for each \(t\in N\), let \(W_t\) be a random walk on \(G_n^{*v}\) started at \(\partial _n\) (which is identified with v) and stopped when it first returns to \(\partial _n\), where we consider each \(W_t\) to be an element of \(\mathcal {W}^*\). We define \(\mathscr {I}^n_v\) to be the point process \(\mathscr {I}^n_v:=\left\{ (W_t,t) : t \in N\right\} \).

Proposition 3.1

Let G be an infinite network, let v be a vertex of G, and let \(\langle V_n \rangle _{n\ge 0}\) be an exhaustion of G. Then the Poisson point processes \(\mathscr {I}^n_v\) converge in distribution as \(n\rightarrow \infty \) to a Poisson point process \(\mathscr {I}_v\) on \(\mathcal {W}^* \times \mathbb {R}\) with intensity measure of the form \(Q^*_v \otimes \Lambda \), where \(\Lambda \) is the Lebesgue measure on \(\mathbb {R}\) and \(Q^*_v\) is a \(\sigma \)-finite measure on \(\mathcal {W}^*\) such that (3.2) is satisfied for every finite set \(K \subset V\) and every event \(\mathscr {A}\subseteq \mathcal {W}^*\).

The proof is very similar to that of [34, Proposition 3.3], and is omitted.

Corollary 3.2

Let G be an infinite network and let v be a vertex of G. Then there exists a unique \(\sigma \)-finite measure \(Q^*_v\) on \(\mathcal {W}^*\) such that (3.2) is satisfied for every finite set \(K \subset V\) and every event \(\mathscr {A}\subseteq \mathcal {W}^*\).

Proof

The existence statement follows immediately from Proposition 3.1. The uniqueness statement is immediate since sets of the form \(\mathscr {A}\cap \mathcal {W}^*_K\) are a \(\pi \)-system generating the Borel \(\sigma \)-algebra on \(\mathcal {W}^*\). \(\square \)

We call \(\mathscr {I}_v\) the v-wired interlacement process. Note that it may include trajectories that are either doubly infinite, singly infinite and ending at v, singly infinite and starting at v, or finite and both starting and ending at v.

We have the following v-wired analogue of [34, Theorem 1.1 and Proposition 4.2], whose proof is identical to those in that paper.

Proposition 3.3

Let G be an infinite network, let v be a vertex of G, and let \(\mathscr {I}_v\) be the v-wired interlacement process on G. Then

has the law of the oriented v-wired uniform spanning forest of G for every \(t\in \mathbb {R}\). Moreover, the process \(\langle \mathsf {AB} _{v,t}(\mathscr {I}_v) \rangle _{t\in \mathbb {R}}\) is a stationary, ergodic, stochastically continuous Markov process.

3.2 Relation to capacity

In this section, we record the well-known relationship between the interlacement intensity measure \(Q^*\) and the capacity of a set, and extend this relationship to the v-wired interlacement intensity measure \(Q^*_v\). Recall that the capacity (a.k.a. conductance to infinity [55, Chapter 2]) of a finite set of vertices K in a network G is defined to be

where \(\tau ^+_K\) is the first positive time that the random walk visits K and the second equality follows by definition of \(Q^*\). Similarly, we define the v-wired capacity of a finite set K to be

with the convention that \(\tau ^+_K>\tau _v\) in the case that both hitting times are equal to \(\infty \). Note that if \(v\notin K\) then \(\mathrm {Cap}_v(K)\) is the effective conductance between K and \(\{\infty ,v\}\) (see Sect. 8 or [55, Chapter 2] for background on effective conductances). Thus, the number of trajectories in \(\mathscr {I}_{[a,b]}\) that hit K is a Poisson random variable with parameter \(|a-b| \mathrm {Cap}(K)\), while the number of trajectories in \(\mathscr {I}_{v,[a,b]}\) that hit K is a Poisson random variable with parameter \(|a-b| \mathrm {Cap}_v(K)\).

In our setting the capacity and v-wired capacity of a set will always be of the same order: The inequality \(\mathrm {Cap}(K)\le \mathrm {Cap}_v(K)\) is immediate, while on the other hand we have that

By time-reversal we have that

for each \(u\in K{\setminus }\{v\}\), and summing over \(u\in K{\setminus }\{v\}\) we obtain that

Thus, in networks with bounded vertex conductances, the capacity and v-wired capacity agree to within an additive constant. Furthermore, the assumption that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \) implies that G is uniformly transient and hence that \(\mathrm {Cap}(K)\) is bounded below by a positive constant for every non-empty set K.

3.3 Evolution of the past under the dynamics

The reason that the dynamics induced by the interlacement Aldous–Broder algorithm are so useful for studying the past of the origin in the WUSF is that the past itself evolves in a very natural way under the dynamics. Indeed, if we run time backwards and compare the pasts \(\mathfrak {P}_0(v)\) and \(\mathfrak {P}_{-t}(v)\) of v in \(\mathfrak {F}_0\) and \(\mathfrak {F}_{-t}\), we find that the past can become larger only at those times when a trajectory visits v. At all other times, \(\mathfrak {P}_{-t}(v)\) decreases monotonically in t as it is ‘sliced into pieces’ by newly arriving trajectories. This behaviour is summarised in the following lemma, which is adapted from [34, Lemma 5.1]. Given a set \(A \subseteq \mathbb {R}\), we write \(\mathcal {I}_{A}\) for the set of vertices that are hit by some trajectory in \(\mathscr {I}_{A}\), and write \(\mathfrak {P}_t(v)\) for the past of v in the forest \(\mathfrak {F}_t\).

Lemma 3.4

Let G be a transient network, let \(\mathscr {I}\) be the interlacement process on G, and let \(\langle \mathfrak {F}_t\rangle _{t\in \mathbb {R}}=\langle \mathsf {AB} _t(\mathscr {I}) \rangle _{t\in \mathbb {R}}\). Let v be a vertex of G, and let \(s<t\). If \(v \notin \mathcal {I}_{[s,t)}\), then \(\mathfrak {P}_s(v)\) is equal to the component of v in the subgraph of \(\mathfrak {P}_t(v)\) induced by \(V{\setminus }\mathcal {I}_{[s,t)}\).

Proof

Suppose that u is a vertex of V, and let \(\Gamma _s(u,\infty )\) and \(\Gamma _t(u,\infty )\) be the futures of u in \(\mathfrak {F}_s\) and \(\mathfrak {F}_t\) respectively. Let \(u=u_{0,s},u_{1,s},\ldots \) and \(u=u_{0,t},u_{1,t},\ldots \) be, respectively, the vertices visited by \(\Gamma _s(u,\infty )\) and \(\Gamma _t(u,\infty )\) in order. Let \(i_0\) be the smallest i such that \(\sigma _s(u_{i,s}) <t\). Then it follows from the definitions that \(\Gamma _s(u,\infty )\) and \(\Gamma _t(u,\infty )\) coincide up until step \(i_0\), and that \(\sigma _s(u_{i,s}) <t\) for every \(i\ge i_0\). (Indeed, \(\sigma _s(u_{i,s})\) is decreasing in i.) On the other hand, if \(v\notin \mathcal {I}_{[s,t)}\) then \(\sigma _s(v)>t\), and the claim follows readily. \(\square \)

Similarly, we have the following lemma in the v-wired case, whose proof is identical to that of Lemma 3.4 above. Given \(A \subseteq \mathbb {R}\), we write \(\mathcal {I}_{v,A}\) for the set of vertices that are hit by some trajectory in \(\mathscr {I}_{v,A}\), and write \(\mathfrak {P}_{v,t}(u)\) for the past of u in the forest \(\mathfrak {F}_{v,t}\).

Lemma 3.5

Let G be a network, let v be a vertex of G, let \(\mathscr {I}_v\) be the v-wired interlacement process on G, and let \(\langle \mathfrak {F}_{v,t}\rangle _{t\in \mathbb {R}}=\langle \mathsf {AB} _{v,t}(\mathscr {I}_v) \rangle _{t\in \mathbb {R}}\). Let u be a vertex of G, and let \(s<t\). If \(u \notin \mathcal {I}_{v,[s,t)}\), then \(\mathfrak {P}_{v,s}(u)\) is equal to the component of u in the subgraph of \(\mathfrak {P}_{v,t}(u)\) induced by \(V{\setminus }\mathcal {I}_{v,[s,t)}\).

4 Lower bounds for the diameter

In this section, we use the interlacement Aldous–Broder algorithm to derive the lower bounds on the tail of the intrinsic and extrinsic diameter of Theorems 1.1–1.4.

4.1 Lower bounds for the intrinsic diameter

Recall that \(\mathfrak {P}(v)\) denotes the past of v in the WUSF, that \(\mathfrak {T}_v\) denotes the component of v in the v-WUSF, and that q(v) is the probability that two independent random walks started at v do not return to v or intersect each other at any positive time.

Proposition 4.1

Let G be a transient network. Then for each vertex v of G we have that

and similarly

for every \(r\ge 0\).

Note that (4.2) gives a non-trivial lower bound for every transitive network, and can be thought of as a mean-field lower bound. (For recurrent networks, the tree \(\mathfrak {T}_v\) contains every vertex of the network almost surely, so that the bound also holds degenerately in that case.)

Proof

We prove (4.1), the proof of (4.2) being similar. Let \(\mathscr {I}\) be the interlacement process on G and let \(\mathfrak {F}=\mathsf {AB}_0(\mathscr {I})\). Given a path \(X \in \mathcal {W}(0,\infty )\) and a vertex u of G visited by the path after time zero, we define e(X, u) to be the oriented edge pointing into u that is traversed by X as it enters u for the first time, and define

Note that, by definition of \(\mathcal {W}(0,\infty )\), X visits infinitely many vertices and \(\mathsf {AB}(X)\) is an infinite tree oriented towards \(X_0\). In particular, \(\mathsf {AB}(X)\) contains an infinite path starting at \(X_0\), whose edges are oriented towards \(X_0\). (If X is a random walk then this infinite path is unique and can be interpreted to be the loop-erasure of the time-reversal of X. We will not need to use these properties here.)
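As a rough illustration of the first-entry construction, the following sketch computes the reversed first-entry edges of a finite path. It assumes that \(\mathsf {AB}(X)\) consists of the reversals of the edges e(X, u), which is how the classical Aldous–Broder algorithm works and is consistent with the tree being oriented towards \(X_0\); the function names are hypothetical, and a finite path only stands in for a prefix of an element of \(\mathcal {W}(0,\infty )\).

```python
def aldous_broder_edges(path):
    """Map each vertex u entered after time zero to the vertex from which
    the path first entered u, i.e. the reversal of the first-entry edge."""
    toward_start = {}
    seen = {path[0]}
    for prev, cur in zip(path, path[1:]):
        if cur not in seen:
            seen.add(cur)
            toward_start[cur] = prev
    return toward_start


def path_to_start(toward_start, u):
    """Follow the reversed first-entry edges from u back to the start."""
    route = [u]
    while route[-1] in toward_start:
        route.append(toward_start[route[-1]])
    return route


walk = [0, 1, 2, 1, 3, 0, 4]
tree = aldous_broder_edges(walk)   # {1: 0, 2: 1, 3: 1, 4: 0}
branch = path_to_start(tree, 3)    # [3, 1, 0]
```

Every vertex is entered for the first time from a vertex that was visited strictly earlier, so following the pointers always terminates at the starting vertex and the resulting edge set is a tree.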

For each \(\varepsilon >0\), let \(\mathscr {A}_{\varepsilon }\) be the event that v is hit by exactly one trajectory in \(\mathscr {I}_{[0,\varepsilon ]}\), that this trajectory hits v exactly once, and that the parts of the trajectory before and after hitting v do not intersect each other. (In the v-wired case, in the proof of (4.2), one would instead take \(\mathscr {A}_\varepsilon \) to be the event that v is hit by exactly one trajectory in \(\mathscr {I}_{v,[0,\varepsilon ]}\), and that this trajectory is half-infinite and begins at v.) It follows from the definition of the interlacement intensity measure that

Given \(\mathscr {A}_\varepsilon \), let \(\langle W_n \rangle _{n \in \mathbb {Z}}\) be the unique representative of this trajectory that has \(W_0=v\), let \(X=W|_{[0,\infty )}\), let Z be an infinite path starting at v in \(\mathsf {AB}(X)\) (chosen in some measurable way if there are multiple such paths), and let \(\eta \) be the set of vertices visited by the first r steps of Z, not including v itself. Let \(\mathscr {B}_{r,\varepsilon }\subseteq \mathscr {A}_\varepsilon \) be the event that \(\mathscr {A}_\varepsilon \) occurs and that \(\eta \) is not hit by any trajectories in \(\mathscr {I}_{[0,\varepsilon ]}\) other than W. On the event \(\mathscr {B}_{r,\varepsilon }\) we have by definition of \(\mathsf {AB}_0(\mathscr {I})\) that the reversals of the first r edges traversed by Z are all contained in \(\mathfrak {F}\) and oriented towards v, and it follows that

for every \(r\ge 1\) and \(\varepsilon >0\). On the other hand, by the splitting property of Poisson processes, for every set \(K\subset V\), the number of trajectories of \(\mathscr {I}_{[0,\varepsilon ]}\) that hit K but not v is independent of the set of trajectories of \(\mathscr {I}_{[0,\varepsilon ]}\) that hit v. We deduce that

Let \(M = \sup _{u \in V} \mathrm {Cap}(u)\). We may assume that \(M<\infty \), since the claim is trivial otherwise. By the subadditivity of the capacity we have that \(\mathrm {Cap}(\eta ) \le Mr\), so that \(\mathbb {P}(\mathscr {B}_{r,\varepsilon } \mid \mathscr {A}_{\varepsilon }) \ge e^{-\varepsilon Mr}\) and hence that

The claimed inequality now follows by taking \(\varepsilon = 1/(M(r+1))\). \(\square \)
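The choice of \(\varepsilon \) can be checked by a short optimisation. As a sketch, suppose that the preceding bound has the form \(c\, \varepsilon e^{-\varepsilon M(r+1)}\) for some quantity c that does not depend on \(\varepsilon \); this form is an assumption made here for illustration, suggested by the estimate \(\mathbb {P}(\mathscr {B}_{r,\varepsilon } \mid \mathscr {A}_{\varepsilon }) \ge e^{-\varepsilon Mr}\), and the displayed inequalities remain the authoritative statement. Then

$$\begin{aligned} \frac{d}{d\varepsilon }\left[ \varepsilon e^{-\varepsilon M(r+1)}\right] = \left( 1-\varepsilon M(r+1)\right) e^{-\varepsilon M(r+1)}, \end{aligned}$$

which vanishes only at \(\varepsilon = 1/(M(r+1))\), where

$$\begin{aligned} \varepsilon e^{-\varepsilon M(r+1)} = \frac{1}{e M (r+1)}. \end{aligned}$$

Under this assumption the stated \(\varepsilon \) is the maximiser, and the resulting lower bound decays like \(1/(r+1)\).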

The following lemma shows that the lower bound of (4.1) is always meaningful provided that \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \). We write \(\mathbf {G}\) for the Greens function.

Lemma 4.2

Let G be a network with controlled stationary measure and with \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \). Then there exists a positive constant \(\varepsilon \) such that for every \(v\in V\) there exists \(u\in V\) with \(\mathbf {G}(v,u)\ge \varepsilon \), \(d(v,u) \le \varepsilon ^{-1}\), and \(q(u)>\varepsilon \). In particular, if G is transitive then q(v) is a positive constant.

Note that the statement concerning the graph distance may hold degenerately on networks that are not locally finite, but that the Greens function lower bound remains meaningful in this setting.

Proof

Let X and Y be independent random walks started at v, and observe that

for every \(n,m\ge 0\).

Let \(I=\{(i,j) : i \ge 0, j \ge 0, X_i=Y_j \}\) be the set of intersection times, which is almost surely finite by (4.6), and let \((\tau _1,\tau _2)\) be the unique element of I that is lexicographically maximal in the sense that every \((i,j)\in I\) either has \(i<\tau _1\) or \(i=\tau _1\) and \(j \le \tau _2\). Since \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \) the right hand side of (4.6) tends to zero as \(n+m\rightarrow \infty \), and it follows by Markov’s inequality that there exists \(k_0<\infty \) such that \(\mathbf {P}_v(\tau _1,\tau _2 \le k_0) \ge 1/2\) for every \(v\in V\). Thus, we deduce that

On the other hand, it follows from the definitions that \(\sup _{u,v\in V} \mathbf {G}(u,v) \le \Vert {P}\Vert _{\mathrm {bub}}\), and the claim follows. \(\square \)
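The lexicographically maximal intersection time used in this proof is easy to compute for finite paths. The sketch below is only an illustration on finite lists of vertices; the function name is hypothetical, and for the infinite walks in the proof the set I is finite only almost surely.

```python
def last_intersection(x, y):
    """Return the lexicographically maximal pair (i, j) with x[i] == y[j],
    or None if the two paths never intersect."""
    # Record every time at which y visits each vertex.
    visits = {}
    for j, vertex in enumerate(y):
        visits.setdefault(vertex, []).append(j)
    pairs = [(i, j) for i, vertex in enumerate(x) for j in visits.get(vertex, [])]
    # Python compares tuples lexicographically, matching the order in the text.
    return max(pairs) if pairs else None


x = [0, 1, 2, 1, 5]
y = [0, 3, 1, 4, 1]
tau = last_intersection(x, y)   # (3, 4)
```

Here x visits the vertex 1 for the last time at time 3 and y visits it for the last time at time 4, and neither path meets the other after these times.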

4.2 Lower bounds for the extrinsic diameter

In this section we apply a similar method to that used in the previous subsection to prove a lower bound on the tail of the extrinsic diameter. The method we use is very general and, as well as being used in the proof of Theorems 1.4–1.6 and 7.3, can also be used to deduce similar lower bounds for e.g. long-ranged models.

Let G be a network. For each \(r\ge 0\), we define

to be the maximum expected final visit time to a ball of radius r. It is easily seen that every transitive graph of polynomial growth of dimension \(d>2\) has \(L(r)\asymp r^2\), and the same holds for any Ahlfors regular network with controlled stationary measure satisfying Gaussian heat kernel estimates, see Lemma 4.4 below. On the other hand, uniformly ballistic networks have by definition that \(L(r)\asymp r\).

Proposition 4.3

Let G be a transient network. Then for each vertex v of G we have that

for every \(r\ge 1\), and similarly

for every \(r\ge 1\).

Proof of Proposition 4.3

We prove (4.7), the proof of (4.8) being similar. We continue to use the notation \(\mathscr {A}_{\varepsilon }\), \(\mathscr {B}_{R,\varepsilon }\), W, X, Z, \(\eta \), and M defined in the proof of Proposition 4.1 but with the variable R replacing the variable r there, so that \(\eta \) is the set of vertices visited by the first R steps of Z. We also define \(\mathscr {C}_{r,R,\varepsilon } \subseteq \mathscr {A}_{\varepsilon }\) to be the event in which \(\mathscr {A}_{\varepsilon }\) occurs and the distance in G between v and the endpoint of \(\eta \) is at least r, and define \(\mathscr {D}_{r,R,\varepsilon } = \mathscr {C}_{r,R,\varepsilon } \cap \mathscr {B}_{R,\varepsilon }\). Note that, since the path Z is oriented towards v in \(\mathsf {AB}(X)\), it follows from the definition of \(\mathsf {AB}(X)\) that the Rth point visited by Z is visited by X at a time greater than or equal to R. Thus, we have by the definition of the interlacement intensity measure, the union bound (in the form \(\mathbb {P}(A{\setminus }B) \ge \mathbb {P}(A)-\mathbb {P}(B)\)), and Markov’s inequality that, letting \(Y^1\) and \(Y^2\) be independent random walks started at v,

Thus, it follows by the same reasoning as in the proof of Proposition 4.1 that

for every \(R,r\ge 1\) and \(\varepsilon >0\). We conclude by taking \(\varepsilon =1/(M(R+1))\) and \(R= \lceil 2 L(r)/q(v) \rceil \). \(\square \)

Lemma 4.4

Let \(G=(V,E)\) be a network with controlled stationary measure that is d-Ahlfors regular for some \(d>2\) and satisfies Gaussian heat kernel estimates. Then \(L(r)\preceq r^2\).

Proof

Let v be a vertex of G and let \(T_r= \sup \{n \ge 0: X_n \in B(v,r)\}\). It follows from the definitions that

for every \(n\ge 1\) and \(r\ge 1\), and also that there exists a positive constant c such that

for every \(n\ge 1\) and \(r\ge 1\). We deduce that

for every \(n\ge 1\) and \(r\ge 1\), and hence that, since \(d>2\),

On the other hand, it follows by the strong Markov property that

where the final inequality follows from (4.9), and we deduce that

for every \(n\ge r^2\). The claim follows by summing over n. \(\square \)

5 The length and capacity of the loop-erased random walk

In this section, we study the length and capacity of loop-erased random walk. In particular, we prove that in a network with controlled stationary measure and \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), an n-step loop-erased random walk has capacity of order n with high probability. The estimates we derive are used extensively throughout the remainder of the paper. In the case of \(\mathbb {Z}^d\), these estimates are closely related to classical estimates of Lawler, see [51] and references therein.

5.1 The number of points erased

Recall that when X is a path in a network G, the times \(\langle \ell _n(X) : 0 \le n \le |\mathsf {LE}(X)|\rangle \) are defined to be the times contributing to the loop-erasure of X, defined recursively by \(\ell _0(X)=0\) and \(\ell _{n+1}(X) = 1+ \max \{m : X_m = X_{\ell _n(X)}\}\). We also define

for each \(0 \le n \le |X|\), so that \(\rho _n(X)\) is the number of times between 1 and n that contribute to the loop-erasure of X. The purpose of this section is to study the growth of \(\ell \) and \(\rho \) when X is a random walk on a network with controlled stationary measure satisfying \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \).
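The recursion for \(\ell _n\) and the verbal description of \(\rho _n\) can be illustrated on a finite path. The sketch below is a minimal example with hypothetical function names; it computes the times relative to the finite path it is given, which need not agree with the times of an infinite walk extending that path.

```python
def loop_erasure_times(path):
    """Times l_0 < l_1 < ... contributing to the loop-erasure of a finite
    path, via l_0 = 0 and l_{n+1} = 1 + max{m : path[m] == path[l_n]}."""
    last_visit = {}
    for m, vertex in enumerate(path):
        last_visit[vertex] = m          # later visits overwrite earlier ones
    times = [0]
    while True:
        nxt = last_visit[path[times[-1]]] + 1
        if nxt >= len(path):            # the path has ended
            break
        times.append(nxt)
    return times


def rho(times, n):
    """Number of times between 1 and n that contribute to the loop-erasure."""
    return sum(1 for t in times if 1 <= t <= n)


walk = [0, 1, 2, 1, 3]
times = loop_erasure_times(walk)           # [0, 1, 4]
loop_erased = [walk[t] for t in times]     # [0, 1, 3]
count = rho(times, 3)                      # 1
```

In this example the loop 1, 2, 1 is erased, so the only contributing time in {1, 2, 3} is time 1.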

Recall that we write \(X^T\) for the random walk run up to the (possibly random) time T, and use similar notation for other paths such as \(\mathsf {LE}(X)\).

Lemma 5.1

Let G be a transient network, let v be a vertex of G, and let X be a random walk on G started at v. Then the following hold.

1. The random variables \(\langle \ell _{n+1}(X)-\ell _{n}(X) \rangle _{n\ge 0}\) are independent conditional on \(\mathsf {LE} (X)\), and the estimate

$$\begin{aligned} \mathbf {P}_v\left[ \ell _{n+1}(X)-\ell _n(X) -1 =m \mid \mathsf {LE} (X) \right] \le \Vert P^{m}\Vert _{1\rightarrow \infty } \end{aligned} \tag{5.1}$$

holds for every \(n\ge 0\) and \(m \ge 0\).

2. If \(\Vert {P}\Vert _{\mathrm {bub}}<\infty \), then

$$\begin{aligned} \mathbf {E}_v \left[ \ell _n(X) \mid \mathsf {LE} \left( X\right) \right] \le \Vert {P}\Vert _{\mathrm {bub}} \, n \end{aligned} \tag{5.2}$$

and

$$\begin{aligned} \mathbf {P}_v \left[ \rho _n(X) \le \lambda ^{-1} n \mid \mathsf {LE} (X) \right] \le \Vert {P}\Vert _{\mathrm {bub}} \, \lambda ^{-1} \end{aligned} \tag{5.3}$$

for every \(n\ge 1\) and \(\lambda > 0\).

Note that, in the other direction, we have the trivial inequalities \(\ell _n \ge n\) and \(\rho _n \le n\).

Proof

We first prove item 1. Observe that the conditional distribution of \(\langle X_i \rangle _{i \ge \ell _n}\) given \(X^{\ell _n}\) is equal to the distribution of a random walk started at \(X_{\ell _n}\) and conditioned never to return to the set of vertices visited by \(\mathsf {LE}(X)^{n-1}\). (This is the same observation that is used to derive the Laplacian random walk representation of loop-erased random walk, see [50].) Thus, the conditional distribution of X given \(\mathsf {LE}(X) = \gamma \) can be described as follows. For each finite path \(\eta \) in G, let \(w(\eta )\) be its random walk weight

For each time \(n \ge 0\), let \(L_n=L_n(\mathsf {LE}(X))\) be the set of finite loops in G that start and end at \(\mathsf {LE}(X)_n\) and do not hit the trace of \(\mathsf {LE}(X)^{n-1}\) (which we consider to be the empty set if \(n=0\)). In particular, \(L_n\) includes the loop of length zero at \(\mathsf {LE}(X)_n\) for each \(n \ge 0\). Then the random walk segments \(\langle X_i \rangle _{i=\ell _n}^{\ell _{n+1}-1}\) are conditionally independent given \(\mathsf {LE}(X)\), and have law given by

The contribution of the loop of length zero ensures that the denominator in (5.4) is at least one, so that

and hence, summing over \(\eta \in L_n\) of length m,

for all \(m \ge 0\), establishing item 1.

For item 2, (5.2) follows immediately from (5.1). Furthermore, \(\rho _n \le \lambda ^{-1} n\) if and only if \(\ell _{\lfloor \lambda ^{-1} n \rfloor } \ge n\), so that (5.3) follows from (5.2) and Markov’s inequality. \(\square \)