Abstract

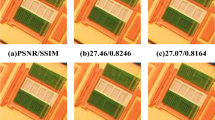

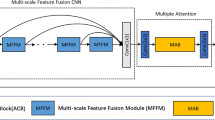

Transformers have recently achieved outstanding performance in computer vision, and their ability to capture global context is crucial for image super-resolution (SR) reconstruction. Unlike convolutional neural networks (CNNs), however, Transformers lack a mechanism for exchanging information within local regions. To address this problem, we propose TCSR, a U-Net-style network based on the interactive fusion of Transformer and CNN features, with skip connections for learning local and global semantic features. TCSR uses Transformer blocks as the basic module of the U-Net architecture and gradually extracts multi-scale feature information while modeling global long-range dependencies. First, we propose an efficient multi-head shift transposed attention that improves the internal structure of the Transformer and thereby recovers sufficient texture detail. Second, a feature enhancement module is inserted at the skip connections to capture local structural information at different levels. Finally, to further exploit the contextual information in the features, we replace the feed-forward network in each Transformer block with a locally enhanced feed-forward layer, which incorporates local feature representations into the global context. With these designs, TCSR captures both local and global dependencies for high-resolution (HR) image reconstruction. Extensive experiments show that, compared with other state-of-the-art SR algorithms, the proposed method effectively recovers image details and yields significant improvements in both visual effect and image quality.
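
To make the idea of transposed attention more concrete, the following is a minimal sketch in plain Python. It assumes that "transposed" is meant in the Restormer sense, where attention is computed between channels rather than between spatial positions; the abstract does not spell this out, so this is an illustration and not the TCSR module itself. The function names `softmax` and `transposed_attention` are hypothetical, and the learned query/key/value projections, the multi-head split, and the shift operation are all omitted.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def transposed_attention(q, k, v, scale=1.0):
    """Channel-wise ("transposed") attention, illustrative only.

    q, k, v: lists of C channels, each a list of N flattened pixel values.
    Returns the C x C attention map and the C x N output. The attention
    map is C x C, so its size does not grow with the number of pixels N.
    """
    # Logits between every pair of channels: (C x N) @ (N x C).
    logits = [[scale * sum(qi * ki for qi, ki in zip(q_row, k_row))
               for k_row in k] for q_row in q]
    attn = [softmax(row) for row in logits]  # each row sums to 1
    n = len(v[0])
    # Mix the value channels with the attention weights: (C x C) @ (C x N).
    out = [[sum(a * v_row[p] for a, v_row in zip(a_row, v)) for p in range(n)]
           for a_row in attn]
    return attn, out

# Toy feature map (assumed values): C = 2 channels, N = 4 pixels.
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]
attn, out = transposed_attention(x, x, x)
```

In this toy input each channel correlates more strongly with itself than with the other channel, so the diagonal attention weights dominate. Standard spatial self-attention on the same input would instead build an N x N map, which is what becomes expensive for high-resolution images.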

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

Acknowledgements

This work was supported by the Scientific Research Fund of the Hunan Provincial Education Department (Grant No. 22C0171), the Traffic Science and Technology Project of Hunan Province (Grant No. 202042), the Research Foundation of the Education Bureau of Hunan Province (Grant No. 21B0287), and the Researchers Support Project (Grant No. RSP2023R102), King Saud University, Riyadh, Saudi Arabia.

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest.

About this article

Cite this article

Wang, J., Zou, Y., Alfarraj, O. et al. Image super-resolution method based on the interactive fusion of transformer and CNN features. Vis Comput (2023). https://doi.org/10.1007/s00371-023-03138-9

DOI: https://doi.org/10.1007/s00371-023-03138-9